Abstract

In recent years, advancements in human–computer interaction (HCI) have enabled the development of versatile immersive devices, including Head-Mounted Displays (HMDs). These devices are typically used for entertainment, such as video gaming, or for augmented/virtual reality applications in tourism and learning. Together with ad-hoc exercises, HMDs can also support rehabilitation tasks, including neurocognitive rehabilitation following strokes, traumatic brain injuries, or brain surgeries. In this paper, a tool for immersive neurocognitive rehabilitation is presented. The tool allows therapists to create and configure 3D rooms that simulate home environments in which patients can perform everyday tasks (e.g., find a key, set a table, do numerical exercises). The exercises are driven by a random mechanism through which different parameters (e.g., object positions, task complexity) change over time, thus stimulating the problem-solving skills of patients, an aspect that plays a key role in neurocognitive rehabilitation. Experiments conducted on 35 real patients, together with comparative evaluations by five therapists of the proposed tool against traditional neurocognitive rehabilitation methods, highlight remarkable results in terms of motivation, acceptance, and usability, as well as recovery of lost skills.

1. Introduction

In recent years, computer vision and graphics supporting complex frameworks for human–computer interaction (HCI) have become increasingly important across a wide range of application areas [1,2]. More and more critical tasks involve advanced interactions between complex systems and users or, more generally, between intelligent environments and users, so that the latter can interact, more or less voluntarily, with smart and autonomous applications. A typical example is the new generation of video surveillance systems [3,4,5,6,7]. In these systems, vehicles and people are detected and tracked over time within video streams while their actions and behaviours are encoded and classified to obtain a smart understanding of what is happening in a scene, thus allowing quick identification of dangerous situations or events of interest. In video surveillance systems or, more generally, in video monitoring systems [8,9], the input captured by the cameras is represented through specific visual features [10,11,12] of moving subjects (e.g., skeleton joints for people or centre of gravity for vehicles) whose changes over time (e.g., position, speed, acceleration) characterize both the subjects themselves [13,14,15] and their interactive behaviour [16,17].
Other scenarios of complex interaction between humans and computers in which systems are driven by users’ input are, for example, the re-identification systems [18,19], in which the matching between a probe person and a set of gallery people is computed on the basis of similarity measures coming from the subjects, including shapes, colours, textures, gaits, and others; the deception detection systems [20,21], in which, among other things, facial expressions, vocal tones, and chosen words are used to determine if a subject is lying or telling the truth; the sketch-based systems [22,23,24], in which freehand gestures forming graphical languages are used to express concepts and commands to drive general-purpose interfaces; and many others.

Building on the complex HCI-based systems reported above, recent years have also seen the introduction of augmented/virtual reality in medical areas, including rehabilitation. In the current state of the art, it is increasingly common to find captivating and stimulating games designed to help patients regain lost skills. A key work is reported in [25], where a system to improve postural balance control by combining virtual reality with a bicycle is presented. In that system, several parameters, including path deviation, path deviation velocity, cycling time, and head movement, can be extracted and evaluated to quantify the extent of control. Another interesting work is given in [26], where a system to recover physical and functional balance is described. The authors show how a rough body reconstruction of a patient, together with ad-hoc virtual exercises, can be a concrete first step towards fully supporting balance rehabilitation. The most recent work is reported in [27], where virtual reality is used in two test applications: one in the context of motor rehabilitation following injury of the lower limbs, and one in the context of real-time functional magnetic resonance imaging neurofeedback to regulate brain function in specific brain regions of interest.

Among the various types of rehabilitation, neurocognitive rehabilitation seems to be the one that could benefit most from virtual environments with a high level of interaction [28,29,30]. For this reason, and on the basis of our previous experience in hand and body motor rehabilitation based on virtual environments [31,32,33,34], in this paper a tool for immersive neurocognitive rehabilitation is presented. Notice that, although the proposed tool inherits technical and theoretical experience from the systems just cited, its architecture and modules have been entirely re-designed and re-developed with respect to both those systems and the current state of the art. The contributions of the proposed tool can be summarized as follows:

- Similarly to the works in [33,34], the proposed tool is based on immersive virtual reality. However, unlike all our previous works [31,32,33,34], it is specifically designed for neurocognitive rehabilitation, thus providing a new set of serious games based on a different paradigm;

- Unlike the current state-of-the-art, the tool allows us to create 3D environments both automatically (i.e., by using a planimetry) and manually (i.e., created ad-hoc by a therapist). In this way, it is possible to recreate an environment similar to the patient’s home, thus decreasing the stress induced by the rehabilitation sessions;

- Another novelty with respect to the literature is that the tool allows us to create serious games with an increasing level of difficulty, so that patients can familiarize themselves with the exercises and therapists can adapt game parameters to the patients’ status;

- A final, uncommon feature of the proposed tool is a random mechanism inside each serious game that, for example, always places the objects in the rooms in different positions, thus increasing the longevity of the rehabilitative exercises and, at the same time, limiting patient habituation, which, as is well known, is a crucial aspect of neurocognitive rehabilitation.

The rest of the paper is structured as follows. In Section 2 a concise overview of immersive and non-immersive frameworks focused on neurocognitive rehabilitation is presented. In Section 3 both the developed tool and the proposed rehabilitative serious games are reported. In Section 4 experimental results and advantages of neurocognitive rehabilitation based on immersive serious games compared to classical techniques are discussed. Finally, Section 5 concludes the paper.

2. Related Work

In the first and second subsections, respectively, selected works on non-immersive and immersive systems for neurocognitive rehabilitation are reported. Notice that, while the first set of systems often uses Graphical User Interfaces (GUIs) and more-or-less large screens to interact with patients, the second set adopts different kinds of Head-Mounted Displays (HMDs) for the interaction, along with tracking mechanisms to detect the patients’ position within the real environment.

2.1. Non-Immersive Neurocognitive Rehabilitation

Since its first applications, even non-immersive virtual reality has proven useful in overcoming some drawbacks of classical rehabilitation techniques, such as lack of motivation, lack of stimuli, and boring repetitiveness. A first interesting work is presented in [35], where the authors propose a system to address brain injuries due to strokes or traumatic events. Their system consists of a web platform that allows therapists to set up stimulating online games, in “arcade” style, to improve attention, memory, and planning skills. Another work is reported in [36], where a cooking plot is employed to assess and stimulate executive functions (e.g., planning abilities) and praxis. The proposed game runs on tablets, so that it can be flexibly used at home and in nursing homes. The authors also report an in-depth study of the game experience of the patients, highlighting remarkable results in terms of acceptability, motivation, and perceived emotions. A last example is shown in [37], where the authors developed a serious game that aids patients in developing compensatory navigation strategies through exercises in 3D virtual environments on their home computers. The objective of their study was to assess the usability of three critical gaming attributes: movement control in 3D virtual environments, instruction modality, and feedback timing. The authors obtained a positive overall appreciation in terms of usability and effectiveness.

2.2. Immersive Neurocognitive Rehabilitation

Immersive rehabilitation systems are reported to be even more advantageous and effective in the recovery of patients’ lost skills. A key example is discussed in [38], where the authors present a study to determine how differences in the virtual reality display type can influence motor behaviour, cognitive load, and participant engagement. The authors conclude that visual display type influences motor behaviour in their game, thus providing important guidelines for the development of virtual reality applications for home and clinic use. Results such as those just reported are also confirmed by other studies, like the one in [39] on a virtual simulated store, in which the advantages of immersive virtual reality over conventional desktop technology are pointed out in terms of interaction, usability, and naturalness. In the latter context, several applications are available to assess, through feasible and valid scenarios, everyday memory in complex conditions that are close to real-life situations; an example is reported in [40].

3. Proposed Tool

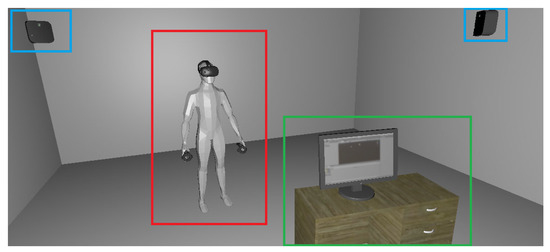

In this section, the proposed tool is discussed together with the architecture of the system, which is shown in Figure 1. Concerning the hardware, the system is composed of an HMD that shows the environment to the patient, two controllers that allow the patient to perform actions, and two base stations that track the patient’s movements. The remainder of this section describes how the proposed tool works.

Figure 1.

The architecture of the proposed tool. The red box highlights the patient wearing the Head-Mounted Display (HMD) and the controllers, the green box highlights the therapist workstation and, finally, the blue boxes highlight the two base stations.

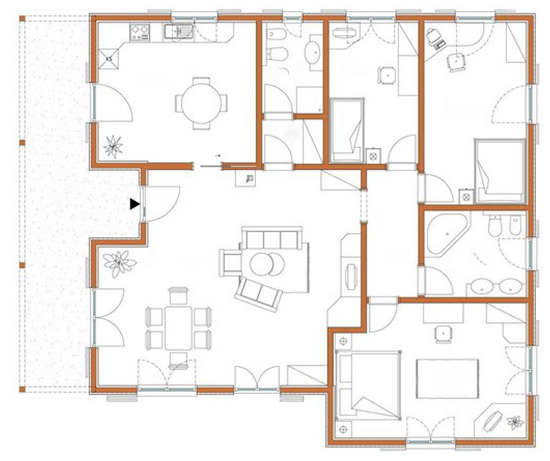

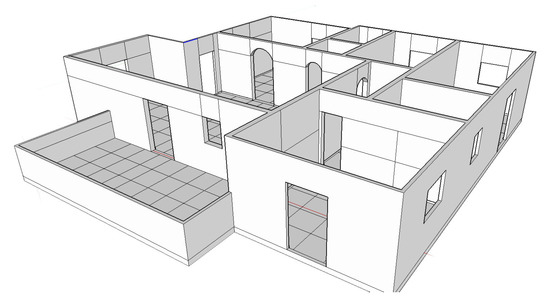

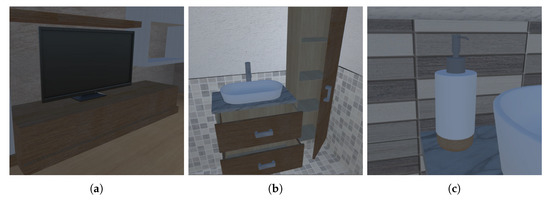

Before starting the rehabilitation session, the therapist must define the virtual environment in which the patient will act. The proposed tool offers two modes to create a 3D environment. In the first mode, the therapist loads an image file containing the planimetry (i.e., the floor plan) of the environment. The tool then performs a 3D extrusion of the walls in the planimetry, thus creating the environment automatically. In Figure 2, an example of planimetry is shown, while in Figure 3 the 3D environment created with the first mode is depicted. In the second mode, instead, the therapist designs the 3D environment from scratch by using the tool’s built-in functionality, which allows the creation of walls, windows, doors, and all the elements needed to build the environment. Both modes allow the therapist to place objects such as vases, tables, bookshelves, and so on, with which the patient will interact during the rehabilitation session. These objects can be of three types: static, static interactive, and dynamic. Static objects merely decorate the environment, but they can also act as a distraction for the patient, e.g., a picture frame. Static interactive objects cannot be moved, but they can be used by the patient, e.g., door handles, desks, and so on. Finally, dynamic objects can be handled by the patient, and they are needed to complete the exercise. Since these objects strictly depend on the exercise, they can be different for each exercise. In Figure 4, examples of the three types of objects are shown.
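The extrusion step of the first mode can be pictured with a minimal Python sketch. It assumes that the walls have already been extracted from the planimetry image as 2D line segments, a step that is not described here; the names `extrude_wall`, `extrude_plan`, and `wall_height`, as well as the choice of y as the vertical axis, are hypothetical and only serve the illustration.

```python
from typing import List, Tuple

Point2D = Tuple[float, float]
Point3D = Tuple[float, float, float]


def extrude_wall(start: Point2D, end: Point2D, wall_height: float) -> List[Point3D]:
    """Turn a 2D wall segment lying on the floor into a vertical quad.

    The corners are returned in the order bottom-start, bottom-end,
    top-end, top-start. The y axis is assumed to point upwards.
    """
    (x0, z0), (x1, z1) = start, end
    return [
        (x0, 0.0, z0),
        (x1, 0.0, z1),
        (x1, wall_height, z1),
        (x0, wall_height, z0),
    ]


def extrude_plan(segments: List[Tuple[Point2D, Point2D]],
                 wall_height: float = 2.7) -> List[List[Point3D]]:
    """Extrude every wall segment of a floor plan to the same height."""
    return [extrude_wall(a, b, wall_height) for a, b in segments]


# A single 4 m wall along the x axis, extruded to 2.5 m.
quad = extrude_wall((0.0, 0.0), (4.0, 0.0), 2.5)
```

Each quad could then be handed to the rendering engine as a wall mesh; thickness, doors, and windows are deliberately left out of this sketch.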

Figure 2.

Example of planimetry that can be imported in the tool for the automatic creation of the 3D environment.

Figure 3.

Example of 3D extrusion created with the automatic 3D environment creation.

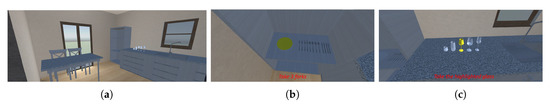

Figure 4.

Example of (a) static object, (b) static interactive object, and (c) dynamic object.

The placement of the dynamic objects is the step that follows the creation of the environment, and it is performed semi-automatically. In detail, the therapist chooses the objects needed to perform the exercise and the room in which to place them. Then, the tool randomly places each object in its corresponding room: the position of each dynamic object t is drawn at random within the i-th room of the environment, among the locations offered by the static interactive objects contained in that room. Usually, the dynamic objects are placed over or inside a static interactive object, meaning that the parts of the latter in which a dynamic object can be placed are well-defined. For example, a desk allows objects to be placed over it and within its drawers, if there are any. These parts are defined by associating an Extensible Markup Language (XML) file with each static interactive object. This XML file contains the coordinates of all the planes within the static interactive object on which a dynamic object can be placed. We chose random placement because it helps to stimulate the patient and prevents memorization of the exercises.
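As an illustration of the XML-driven random placement, the following Python sketch parses the placement planes of one static interactive object and draws a random position on one of them. The XML layout (element and attribute names), the axis-aligned rectangular planes, and the helper names `load_planes` and `place_object` are assumptions made for this example, not the format actually used by the tool.

```python
import random
import xml.etree.ElementTree as ET

# Hypothetical XML file for a desk: each <plane> is an axis-aligned
# rectangle at height y on which a dynamic object may be placed.
DESK_XML = """
<object name="desk">
  <plane name="top" x_min="0.0" x_max="1.2" z_min="0.0" z_max="0.6" y="0.75"/>
  <plane name="drawer" x_min="0.1" x_max="0.5" z_min="0.1" z_max="0.5" y="0.55"/>
</object>
"""


def load_planes(xml_text):
    """Read the placement planes of one static interactive object."""
    root = ET.fromstring(xml_text.strip())
    planes = []
    for node in root.findall("plane"):
        planes.append({
            "name": node.get("name"),
            "x": (float(node.get("x_min")), float(node.get("x_max"))),
            "z": (float(node.get("z_min")), float(node.get("z_max"))),
            "y": float(node.get("y")),
        })
    return planes


def place_object(planes, rng):
    """Pick a plane uniformly at random, then a uniform point on it."""
    plane = rng.choice(planes)
    x = rng.uniform(*plane["x"])
    z = rng.uniform(*plane["z"])
    return plane["name"], (x, plane["y"], z)


planes = load_planes(DESK_XML)
plane_name, position = place_object(planes, random.Random())
```

Passing an explicit `random.Random` instance keeps the sketch reproducible when a seed is supplied, which can be convenient for replaying a session; collision checks between objects are not modelled.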

The entire tool has been developed with the Unity engine (https://unity.com/). This 3D engine was chosen for its ease of integration with VR devices. Regarding the latter, the HTC Vive has been used since it is a complete system, comprising an HMD, hand joypads, and Time of Flight (ToF) base stations for tracking the patient’s movements. Concerning the exercises, we interviewed five therapists to learn the mechanics behind post-stroke rehabilitation. After collecting their experiences, we designed an interactive path composed of six stages ordered by difficulty. Each stage is a different rehabilitation exercise, and the patient must complete a stage in order to move to the next one. The house used in the experiments is the one shown in Figure 2, and the designed path is the following:

- The patient starts the rehabilitation from the bedroom, in which the first exercise is performed;

- From the bedroom, the patient goes to the kitchen, where the second exercise is performed;

- From the kitchen, the patient moves to the living room, in which the third exercise is performed;

- From the living room, the patient goes to the two bathrooms to perform the fourth exercise;

- Subsequently, the patient goes to the small bedroom to perform the fifth exercise;

- Finally, the patient goes to the office to perform the last exercise.

To prevent the patient from wandering around the house and losing focus on the task, all the doors are locked except the door of the room in which the current exercise must be performed. After completing the exercise, the patient is rewarded with the key to open the next door. In addition, the patient is guided through the stages by a help text that pops up at the bottom of the HMD view. If the patient forgets the instructions, he can push a specific button on the joypad (either the left or the right one) and the help text will be shown again. If the patient has a strong impairment and cannot push the buttons, the therapist can display the help text by pressing the assigned key on the keyboard.
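The stage-by-stage unlocking described above can be sketched as a small state machine in Python. The class `RehabPath` and its methods are hypothetical names; the sketch also assumes that rooms opened in earlier stages stay accessible, which is an interpretation rather than something stated explicitly.

```python
# Rooms in the order of the six-stage path.
STAGES = ["bedroom", "kitchen", "living room",
          "bathrooms", "small bedroom", "office"]


class RehabPath:
    """Tracks which exercise is in progress and which doors are open."""

    def __init__(self, stages):
        self.stages = list(stages)
        self.current = 0       # index of the exercise in progress
        self.finished = False  # True once the last exercise is done

    def is_unlocked(self, room):
        # Assumption: rooms already visited remain open, while rooms of
        # later stages stay locked until their key has been earned.
        return self.stages.index(room) <= self.current

    def complete_current(self):
        """Finish the current exercise.

        Returns the room whose key is rewarded, or None after the
        last exercise.
        """
        if self.current == len(self.stages) - 1:
            self.finished = True
            return None
        self.current += 1
        return self.stages[self.current]


path = RehabPath(STAGES)
rewarded_key = path.complete_current()  # key for the kitchen door
```

Keeping the progression in one object makes it straightforward to store, per session, which stage a patient reached.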

In the next subsections, the six exercises are described in detail. Notice that, for each exercise, the therapist can change the number, the type, or both, of the dynamic objects used.

3.1. Exercise 1

In the first exercise, the patient has to find, within the room (i.e., the bedroom), the key to open the door and continue to the next exercise. In this exercise, the dynamic object is the key to be found, and it is randomly placed inside or over the objects present in the room. In Figure 5, the case in which the key is inside the drawer is shown. According to the interviewed pool of therapists, the bedroom is the most reassuring environment, which makes it the best room in which a patient can become familiar with the devices and their behaviour. The benefit of this exercise is the stimulation of the patient’s orientation and neuromotor capabilities. As an example, if the key appears inside the drawer, the patient has:

- To get closer to the drawer;

- To bend down in order to open the drawer;

- To open the drawer by pulling it towards his body;

- To get the key;

- To go to the closed door, and open it as a real door.

Figure 5.

First exercise case in which the key is inside the drawer.

The first exercise has deliberately been designed to be easy, so that the patient can become familiar with the technology used.

3.2. Exercise 2

The second exercise consists in laying the kitchen table with the number of dishes and silverware requested by the system; these are the dynamic objects of this exercise. The requested numbers are random, and they are different for each object to pick. In addition, there are five different types of glasses, with different shapes and grabbing difficulty, and the system also chooses randomly which glass the patient has to grab. In Figure 6, the steps of this exercise are shown. The first benefit of this exercise is the stimulation of short-term memory, since the patient has to remember the different numbers of objects needed to lay the table. Moreover, the recognition of object shapes is also stimulated, thanks to the different shapes of the glasses.
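The random request of the second exercise can be sketched in Python as follows. The item names, the upper bound `max_count`, and the functions `make_table_request` and `check_table` are hypothetical; the sketch also reads "different for each object" as meaning that no two items share the same count, which is one possible interpretation.

```python
import random


def make_table_request(rng, items=("dish", "fork", "knife", "spoon"),
                       max_count=6, glass_types=5):
    """Draw a random request for laying the table.

    Each item gets a distinct count between 1 and max_count, and one
    of the glass types (numbered from 0) is picked uniformly.
    """
    counts = rng.sample(range(1, max_count + 1), k=len(items))
    return {
        "counts": dict(zip(items, counts)),
        "glass": rng.randrange(glass_types),
    }


def check_table(request, placed_counts, placed_glass):
    """Return True when the table matches the request exactly."""
    return (placed_counts == request["counts"]
            and placed_glass == request["glass"])


request = make_table_request(random.Random())
```

Because `random.sample` draws without replacement, `max_count` must be at least the number of items; the therapist-adjustable parameters mentioned earlier would map naturally onto `items` and `max_count`.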

Figure 6.

Example of steps to be performed for the second exercise. The patient enters the exercise room (a), then takes the required number of silverware (b) and the required glass (c).

3.3. Exercise 3

The third exercise consists in remembering the position of the objects in the living room. The steps of this exercise are the following:

- The patient enters the living room and observes all the present objects;

- Subsequently, the patient goes to the garden next to the living room. As soon as the patient reaches the garden, a random object changes its position and is placed in the centre of the table;

- Next, the patient comes back into the living room and has to move the object from the centre of the table to its original position.

After the completion of the exercise, the key for the door of the next one appears on the table. The benefit of this exercise is, as for the previous one, the stimulation of memory. However, this exercise includes some distracting elements, i.e., objects that never change their position, such as the television, which increase the difficulty of the exercise. In Figure 7, an example of this exercise is shown.

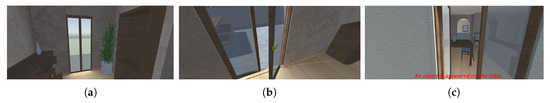

Figure 7.

Example of steps to be performed during the third exercise. The patient moves toward the window (a), then opens it to move outside (b). Finally, the system prompts the instructions for the exercise and the patient moves back to the living room (c).

3.4. Exercise 4

In the fourth exercise, the patient has to take the objects present in the bigger bathroom and bring them into the smaller one (Figure 8). In detail, the objects have to be placed in the same position and orientation in which they were in the bigger bathroom. In our experiments, three objects have been chosen: a hairdryer, a brush, and a soap dispenser. To facilitate the patient, the same furnishings have been placed in both the bathrooms. Due to the nature of this exercise, the benefits are the stimulation of the memory and the orientation of the patient. After the exercise has been completed, the key for the next door will appear beside the sink.

Figure 8.

Figure depicting the final step of the fourth exercise. In detail, the final placement of the objects performed by a patient is shown.

3.5. Exercise 5

The fifth exercise is performed in the small bedroom and consists of sorting nine numbered books. The books must be placed on the two shelves near the window, according to one of two sorting rules. The first rule consists in putting even-numbered books on the lower shelf and odd-numbered books on the higher one. The second rule is based on a pivot number: the books with a number lower than the pivot must be placed on the lower shelf, while the books with a number higher than the pivot must be placed on the higher shelf. Both the sorting rule and the pivot number are randomly chosen by the system. In Figure 9, an example of the second sorting rule is depicted. Unlike the previous exercises, this is the first one that stimulates the arithmetic capabilities of the patient. The key for the last exercise appears on the bed once the books have been placed correctly.
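The two sorting rules can be expressed compactly in Python. The function names and the rule encoding below are hypothetical; since the text does not say where the book carrying the pivot number itself belongs, the sketch accepts either shelf for it.

```python
import random


def make_rule(rng, n_books=9):
    """Randomly choose the sorting rule and, if needed, the pivot."""
    if rng.random() < 0.5:
        return ("parity", None)
    return ("pivot", rng.randint(2, n_books - 1))


def expected_shelf(book, rule):
    """Return 'lower', 'higher', or None when either shelf is accepted."""
    kind, pivot = rule
    if kind == "parity":
        return "lower" if book % 2 == 0 else "higher"
    if book < pivot:
        return "lower"
    if book > pivot:
        return "higher"
    return None  # the pivot book itself: not specified in the text


def is_sorted_correctly(placement, rule):
    """placement maps each book number to 'lower' or 'higher'."""
    for book, shelf in placement.items():
        wanted = expected_shelf(book, rule)
        if wanted is not None and shelf != wanted:
            return False
    return True


rule = make_rule(random.Random())
```

Restricting the pivot to the range 2 to 8 guarantees that both shelves receive at least one book, which is a design choice of this sketch rather than a documented property of the exercise.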

Figure 9.

An example of the pivot number exercise. In (a), the task is presented to the patient, while in (b) the completed exercise is shown.

3.6. Exercise 6

The sixth and last exercise is performed in the office and consists in opening a safe. In particular, the safe is opened with a numeric code that the patient enters on a numeric keypad. This code is computed by the patient as follows:

- The first number is obtained by counting all the chairs in the house;

- The second number is obtained from the difference between the number of chairs in the garden and the number of chairs in the living room. If the result is negative, its absolute value is taken;

- The last two numbers are obtained by dividing the total number of chair legs by two.

For example, let us assume that in the current rehabilitation session we have three chairs in the kitchen, two chairs in the living room and four chairs in the garden. We have that:

- The first number is 9, obtained by summing $3 + 2 + 4$;

- The second number is 2, obtained by the subtraction $4 - 2$;

- The last two numbers are 18, obtained by dividing 36, i.e., the total number of chair legs, by 2.

Hence, the code needed to open the safe is 9218. As for the previous exercise, the benefit of the last exercise is the stimulation of the arithmetic capabilities of the patient. In Figure 10, the execution of the last step of the exercise is shown.
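The computation of the code can be reproduced with a few lines of Python. The function `safe_code` is a hypothetical helper; it assumes four legs per chair, which is consistent with the 36 legs counted for nine chairs in the example, and it pads the last part to two digits.

```python
def safe_code(chairs_per_room, legs_per_chair=4):
    """Compute the safe code from the number of chairs in each room."""
    # First number: all the chairs in the house.
    total_chairs = sum(chairs_per_room.values())
    # Second number: garden minus living room, taken as absolute value.
    difference = abs(chairs_per_room["garden"] - chairs_per_room["living room"])
    # Last two numbers: half the total number of chair legs.
    half_legs = total_chairs * legs_per_chair // 2
    return f"{total_chairs}{difference}{half_legs:02d}"


code = safe_code({"kitchen": 3, "living room": 2, "garden": 4})
print(code)  # prints 9218
```

Under the four-leg assumption, half the number of legs always equals twice the number of chairs, so both ways of computing the last part give the same digits.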

Figure 10.

Image showing the entry of the code needed to open the safe.

4. Experiments and Discussion

In this section, the performed experiments are presented and discussed. In detail, the tests have been conducted on 35 patients aged between 25 and 85. Each patient performed a total of nine rehabilitation sessions, three per week over three weeks. The evaluation metric is the time needed by the patient to perform an exercise, which is automatically stored by the tool for each exercise and each session.

4.1. Healthy Subjects Pre-Test

Before testing the tool with real patients, we produced a baseline performance with a sample of seven healthy subjects. These subjects differ in age, sex, and videogame experience, so as to reflect a real pool of patients as closely as possible. In Table 1, information about the subjects is shown, while in Table 2 their performance is reported.

Table 1.

Information about healthy subjects used for the tool baseline performance.

Table 2.

Performance obtained by healthy subjects measured in minutes.

4.2. Test on Real Patients

This section provides the results obtained in the several exercises with the different patients. For a better understanding of the results, the patients have been divided into five age groups: 25–36, 37–48, 49–60, 61–72, 73–85.
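The grouping by age can be sketched in Python as follows. The helpers `age_group` and `mean_time_by_group` are hypothetical and only illustrate how stored completion times could be aggregated per age group; no measured values are included.

```python
# Age groups used to present the results (bounds are inclusive).
AGE_GROUPS = [(25, 36), (37, 48), (49, 60), (61, 72), (73, 85)]


def age_group(age):
    """Return the label of the age group containing the given age."""
    for low, high in AGE_GROUPS:
        if low <= age <= high:
            return f"{low}-{high}"
    raise ValueError(f"age {age} is outside the studied range")


def mean_time_by_group(records):
    """Average completion time per age group.

    records is an iterable of (age, minutes) pairs for one exercise
    and one session.
    """
    totals = {}
    for age, minutes in records:
        group = age_group(age)
        time_sum, count = totals.get(group, (0.0, 0))
        totals[group] = (time_sum + minutes, count + 1)
    return {group: s / n for group, (s, n) in totals.items()}
```

Calling `mean_time_by_group` once per session would yield one curve per age group over the nine sessions.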

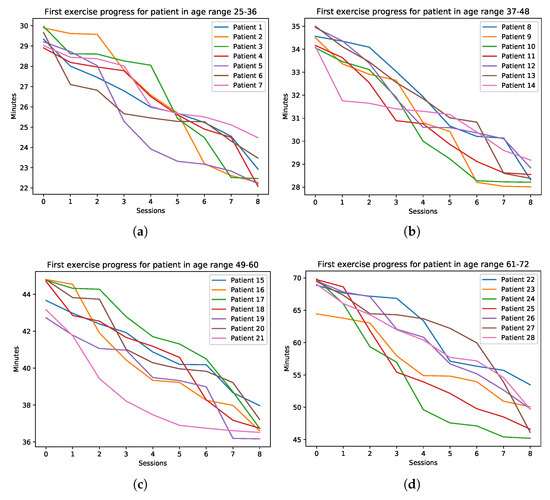

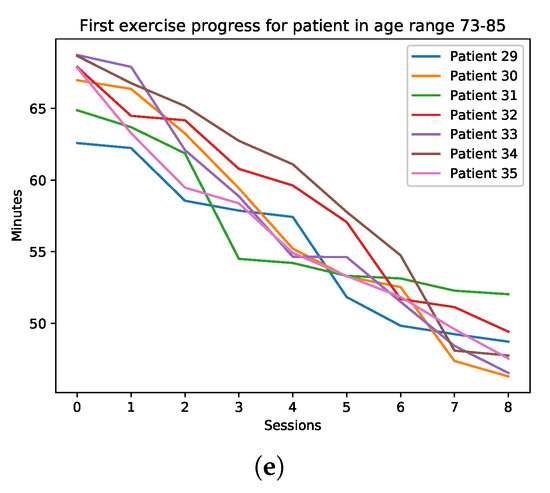

4.2.1. Exercise 1

In Figure 11, the results obtained by the patients in the first exercise are shown. As can be seen, there is a remarkable difference between the performance of the younger patients and that of the older ones. In our opinion, this is influenced not only by age, but also by the degree of impairment and by previous experience with videogames. In fact, in the first exercise, most problems were related to the use of VR technology, as the patients had to become familiar with the devices and with the different type of interaction. However, independently of these factors, the patients’ performance improves over the rehabilitation sessions.

Figure 11.

The performance of the patients for the first exercise. The plots are divided by age: (a) 25–36, (b) 37–48, (c) 49–60, (d) 61–72, (e) 73–85.

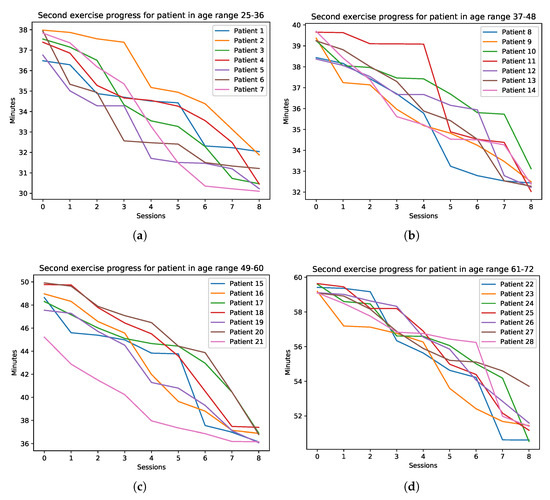

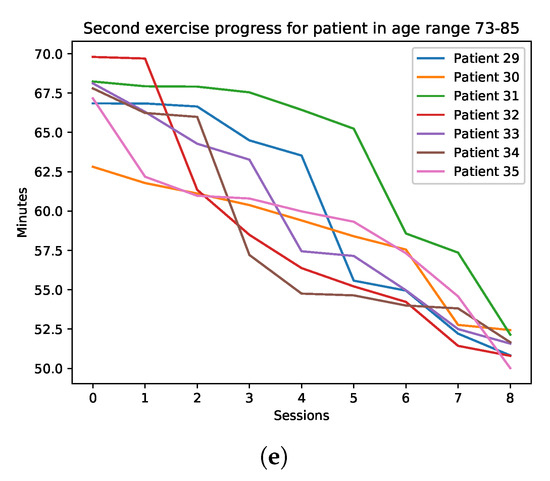

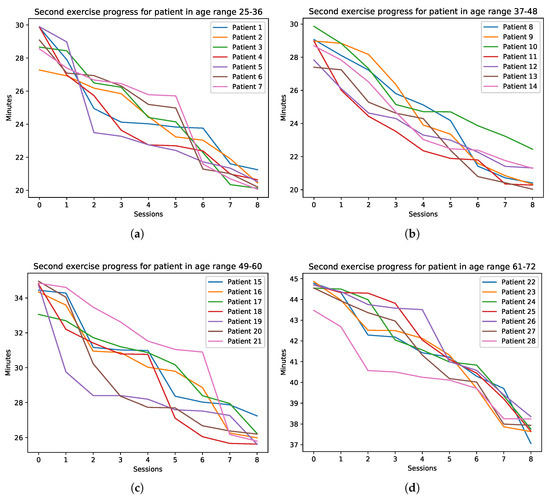

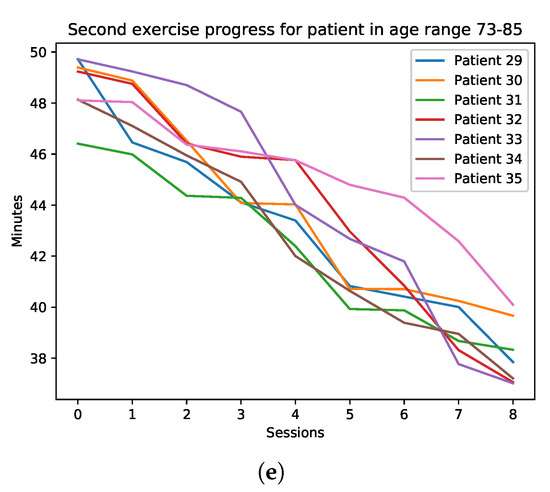

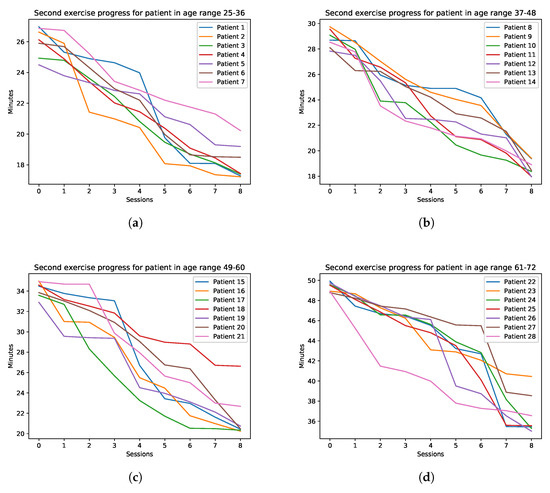

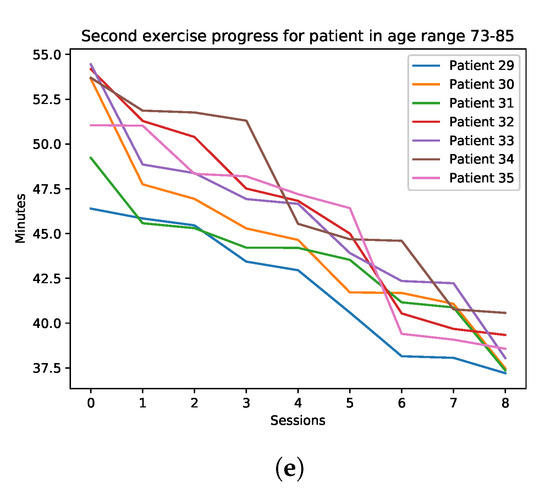

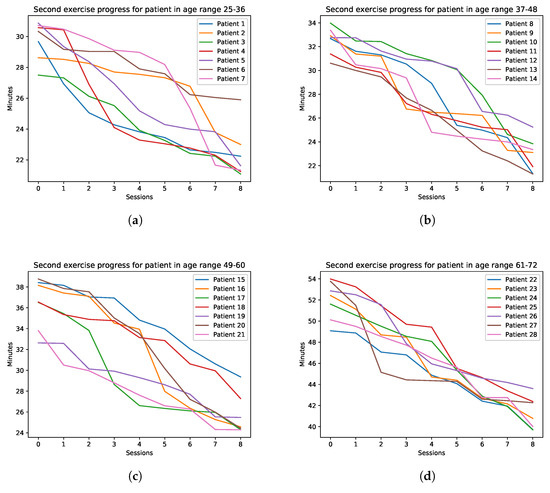

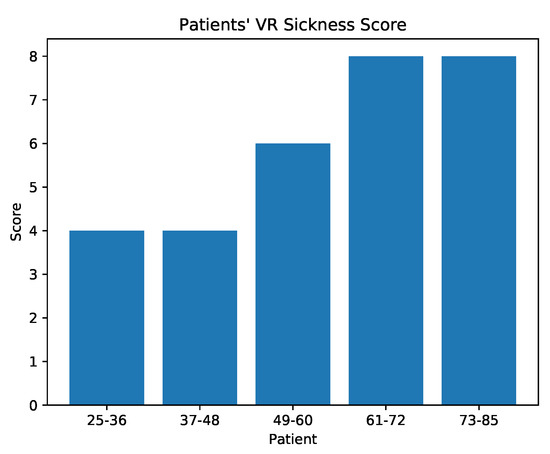

4.2.2. Exercise 2

In Figure 12, the patients’ data obtained in the second exercise are shown. The patients had the greatest difficulty in remembering the required objects during the first week of rehabilitation. More than one patient needed to use the help in order to remember the number of objects to take. This is due to the fact that the patients were still learning how to interact within the 3D environment. In the subsequent weeks, despite some episodes of relapse for a couple of patients, there has been a general improvement.

Figure 12.

The performance of the patients for the second exercise. The plots are divided by age: (a) 25–36, (b) 37–48, (c) 49–60, (d) 61–72, (e) 73–85.

4.2.3. Exercise 3

In this exercise, there has been an improvement since the first week. This can be related to the fact that the third exercise is performed in a room that is bigger than those of the previous exercises. As expected, the bigger the moved object was, the easier it was for the patient to remember its original position. In Figure 13, the trend of the patients over the sessions is shown.

Figure 13.

The performance of the patients for the third exercise. The plots are divided by age: (a) 25–36, (b) 37–48, (c) 49–60, (d) 61–72, (e) 73–85.

4.2.4. Exercise 4

The fourth exercise is the one in which most of the patients performed well from the first rehabilitation session. In detail, most of the patients immediately remembered the correct position of the objects, but not their orientation. As shown in Figure 14, this is the exercise in which the patients obtained the greatest improvement over the sessions.

Figure 14.

The performance of the patients for the fourth exercise. The plots are divided by age: (a) 25–36, (b) 37–48, (c) 49–60, (d) 61–72, (e) 73–85.

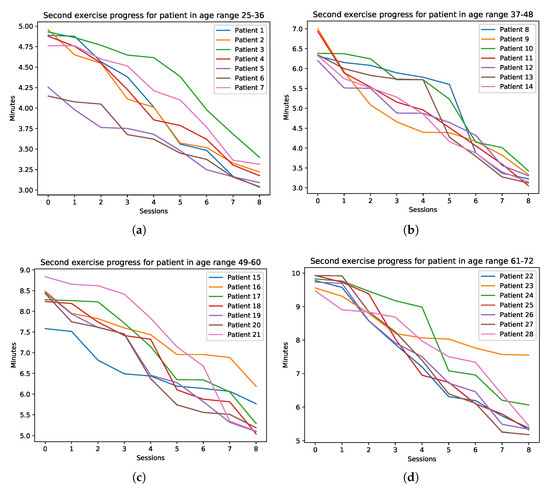

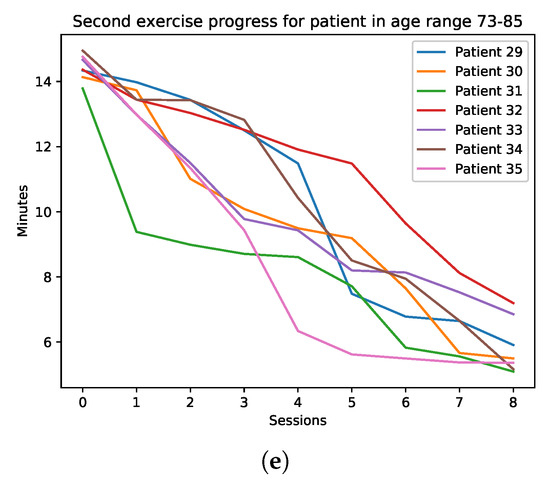

4.2.5. Exercise 5

As with the fourth exercise, this exercise had among the best results for the majority of the patients. The main problem for some of them was understanding on which shelf to place each book. In Figure 15, the trend of the patients for this exercise is shown.

Figure 15.

The performance of the patients for the fifth exercise. The plots are divided by age: (a) 25–36, (b) 37–48, (c) 49–60, (d) 61–72, (e) 73–85.

4.2.6. Exercise 6

The last exercise is the one with the worst patient performance. This is mainly due to the fact that, in order to compute the code for opening the safe, a patient has to walk through the entire house again to count the required chairs and chair legs. Interestingly, some patients, to calculate the last two numbers, multiplied the number of chairs by two instead of dividing the total number of legs by two. In Figure 16, the data concerning the last exercise are reported.

Figure 16.

The performance of the patients for the last exercise. The plots are divided by age: (a) 25–36, (b) 37–48, (c) 49–60, (d) 61–72, (e) 73–85.

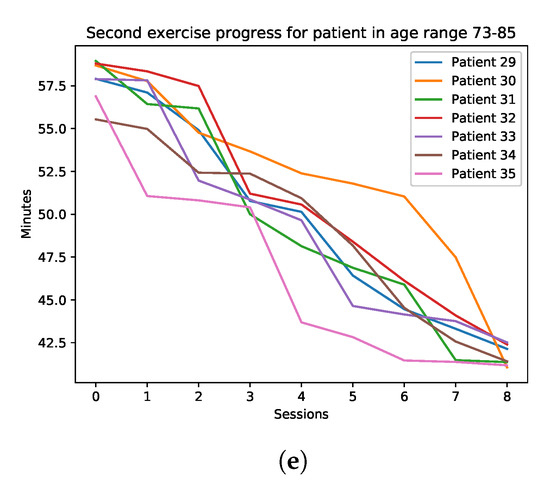

4.3. Discussion

In this section, the opinions of therapists and patients on the proposed tool are discussed. Concerning the patients, there is a general appreciation of the system, as shown in Figure 17. As can be seen, the older the patients are, the lower the appreciation is.

Figure 17.

Appreciation score provided by the patients.

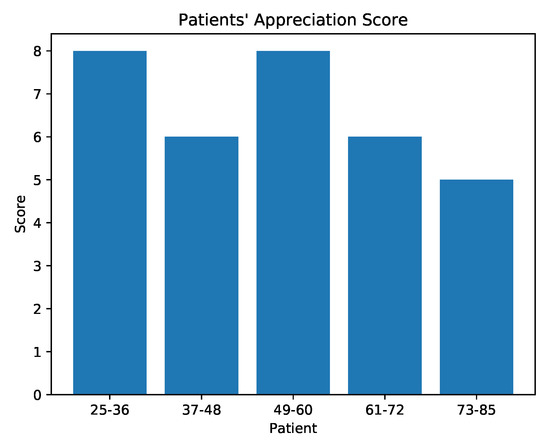

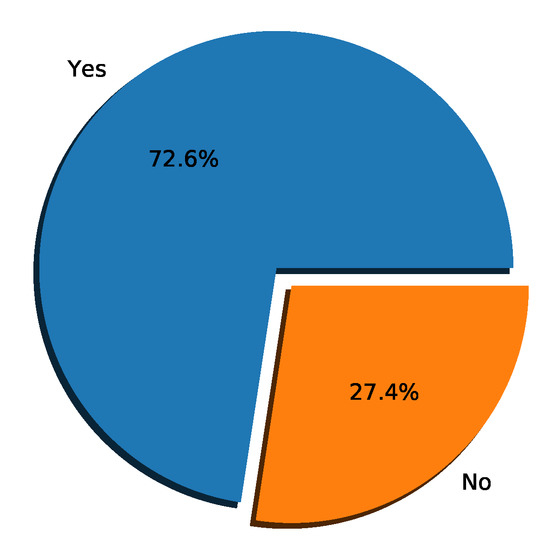

This can be related to the fact that younger patients are more inclined towards this type of technology. In Figure 18, some data about VR sickness are provided. Although only a few patients suffered from sickness, the majority of the episodes concern older patients. Finally, in Figure 19 the answers to the question “would you reuse this system again?” are reported. The high number of patients that would reuse the proposed system is encouraging.

Figure 18.

Sickness score provided by the patients.

Figure 19.

Patients’ opinion about future reuse of the system.

The therapists also showed an overall appreciation of the system. They found the creation of the exercises, together with the placement of the dynamic objects, very easy and intuitive. However, they asked for more control over the creation of the exercises and the dynamic objects to use. In fact, the therapists could use only the dynamic objects we loaded into the tool and the exercises we designed after interviewing them. As a future improvement, we aim to provide a fully customizable tool, which will allow the therapist to load custom 3D models and to choose where the dynamic objects will appear.

5. Conclusions

The recent advancement in HCI technology has allowed the development of immersive devices, including HMDs. In conjunction with entertaining activities, these devices can be used for the design of rehabilitative exercises. In this paper, a framework for designing serious games for neurocognitive rehabilitation is presented. The framework allows therapists to create 3D environments in which patients perform rehabilitation exercises. The latter are in the form of stages of an interactive path, and these stages are ordered by increasing difficulty. Experiments conducted with 35 real patients affected by stroke have highlighted the effectiveness of the proposed framework and the use of this technology in serious games.

Author Contributions

Conceptualization, D.A., L.C., and D.P.; methodology, D.A., L.C., and D.P.; software, D.A. and D.P.; writing—original draft, D.A. and D.P.; supervision, L.C.; writing—review and editing, L.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Acknowledgments

This work was supported in part by the MIUR under grant “Departments of Excellence 2018–2022” of the Department of Computer Science of Sapienza University.

Conflicts of Interest

The authors declare no conflict of interest.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).