Methods and Challenges Using Multispectral and Hyperspectral Images for Practical Change Detection Applications

Abstract

1. Introduction

2. Change Detection Approaches

2.1. Traditional Approaches

2.1.1. Direct Subtraction

- Absolute average difference (AAD) [40], defined as

- Vector angle (VA) [41], also known as spectral angle, and defined as

- Normalized Euclidean distance (NED) [42], defined in terms of the normalized variance of all the pixels in the two images.
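The three per-pixel measures listed above can be sketched with NumPy. This is a minimal illustration under stated assumptions, not the exact formulas of the cited references: images are assumed to be co-registered arrays of shape (rows, cols, bands), the function names are hypothetical, and the NED variant shown here scales each band by the variance pooled over both images, which is only one plausible reading of "normalized variance".

```python
import numpy as np

def absolute_average_difference(ref, test):
    """Per-pixel mean of the absolute band-wise differences."""
    return np.mean(np.abs(test - ref), axis=-1)

def vector_angle(ref, test, eps=1e-12):
    """Per-pixel spectral angle (radians) between the two spectra."""
    dot = np.sum(ref * test, axis=-1)
    norms = np.linalg.norm(ref, axis=-1) * np.linalg.norm(test, axis=-1)
    cos = np.clip(dot / np.maximum(norms, eps), -1.0, 1.0)
    return np.arccos(cos)

def normalized_euclidean_distance(ref, test, eps=1e-12):
    """Per-pixel Euclidean distance with each band scaled by a pooled variance.

    Assumption: the variance is computed per band over all pixels of both images.
    """
    bands = ref.shape[-1]
    pooled = np.concatenate([ref.reshape(-1, bands), test.reshape(-1, bands)])
    var = pooled.var(axis=0) + eps
    return np.sqrt(np.sum((test - ref) ** 2 / var, axis=-1))
```

Each function returns a 2D difference map that can then be thresholded; the threshold itself is application dependent.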

2.1.2. Principal Component Analysis (PCA)

- For each band, a 2D graph is created in which the X axis is the pixel value from image 1 and the Y axis is the pixel value from the same band in image 2. Each point then will correspond to a location on the images, and the value of each axis will correspond to the pixel value on each image.

- PCA is performed for the above 2D data and the distance in the second component is considered as the difference between images 1 and 2.

- The above process is applied to each channel independently. Each change map is thresholded. Change maps from multiple channels are fused by taking the union of all maps.
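The per-band PCA procedure above can be sketched as follows. This is an illustrative sketch only: the function names are hypothetical, the images are assumed to be co-registered arrays of shape (rows, cols, bands), and the mean-plus-k-standard-deviations threshold is an assumption, since the text does not specify how each change map is thresholded.

```python
import numpy as np

def pca_band_difference(ref_band, test_band):
    """Distance of each pixel along the second principal component
    of the 2D scatter (reference value, test value) for one band."""
    pts = np.stack([ref_band.ravel(), test_band.ravel()], axis=1).astype(float)
    pts -= pts.mean(axis=0)
    cov = np.cov(pts, rowvar=False)
    # eigh returns eigenvalues in ascending order, so column 0 is the
    # direction of least variance, i.e., the second principal component.
    _, eigvecs = np.linalg.eigh(cov)
    second = eigvecs[:, 0]
    return np.abs(pts @ second).reshape(ref_band.shape)

def pca_change_map(ref, test, k=3.0):
    """Threshold each band's difference map and fuse the maps by union."""
    rows, cols, bands = ref.shape
    change = np.zeros((rows, cols), dtype=bool)
    for b in range(bands):
        d = pca_band_difference(ref[..., b], test[..., b])
        change |= d > d.mean() + k * d.std()  # assumed threshold rule
    return change
```

Unchanged pixels cluster along the first principal axis of the scatter, so a large second-component distance flags a pixel whose value changed in a way the overall brightness relationship does not explain.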

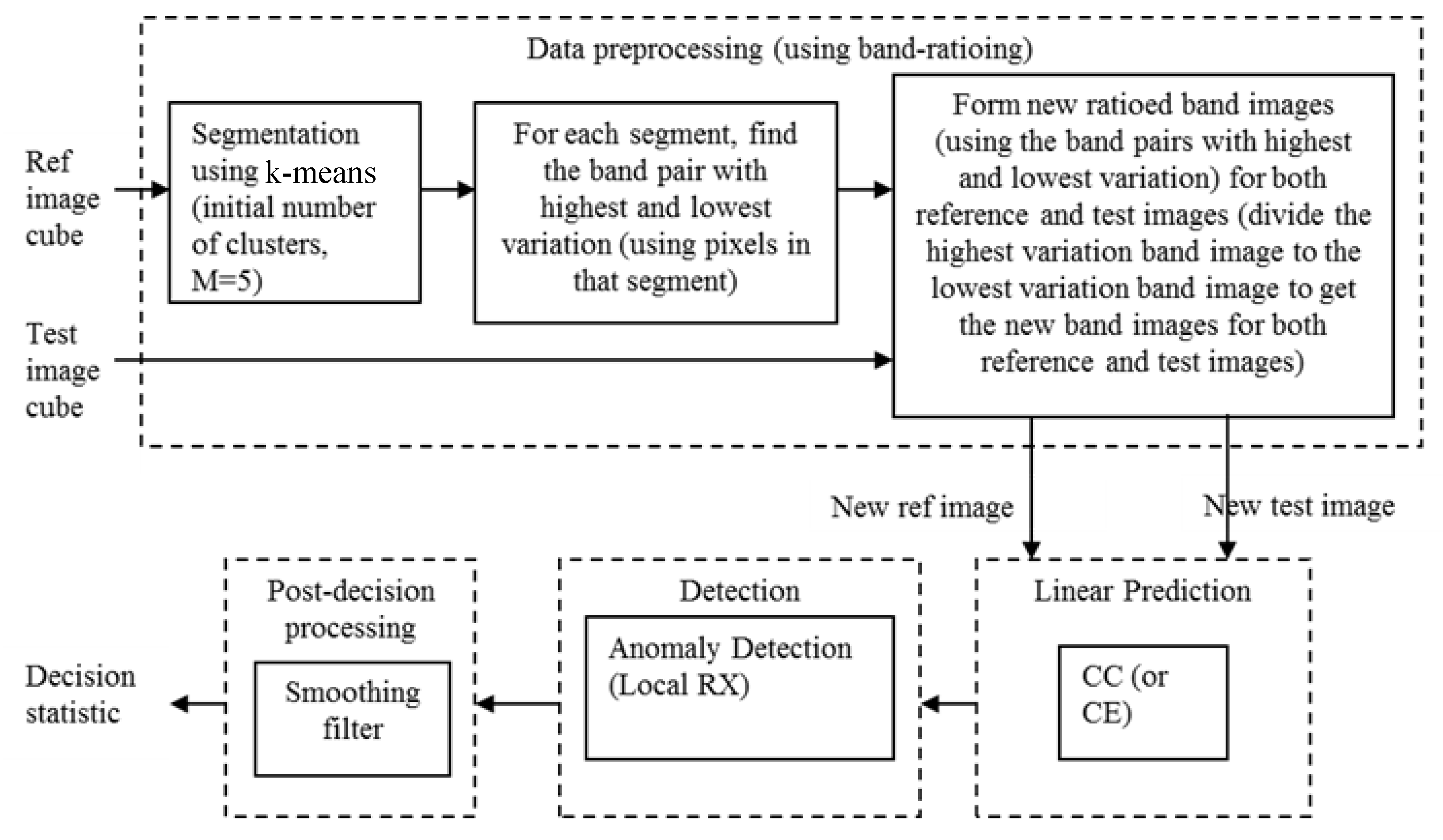

2.1.3. Change Detection Based on Band Ratios

2.2. Prediction Based Approach

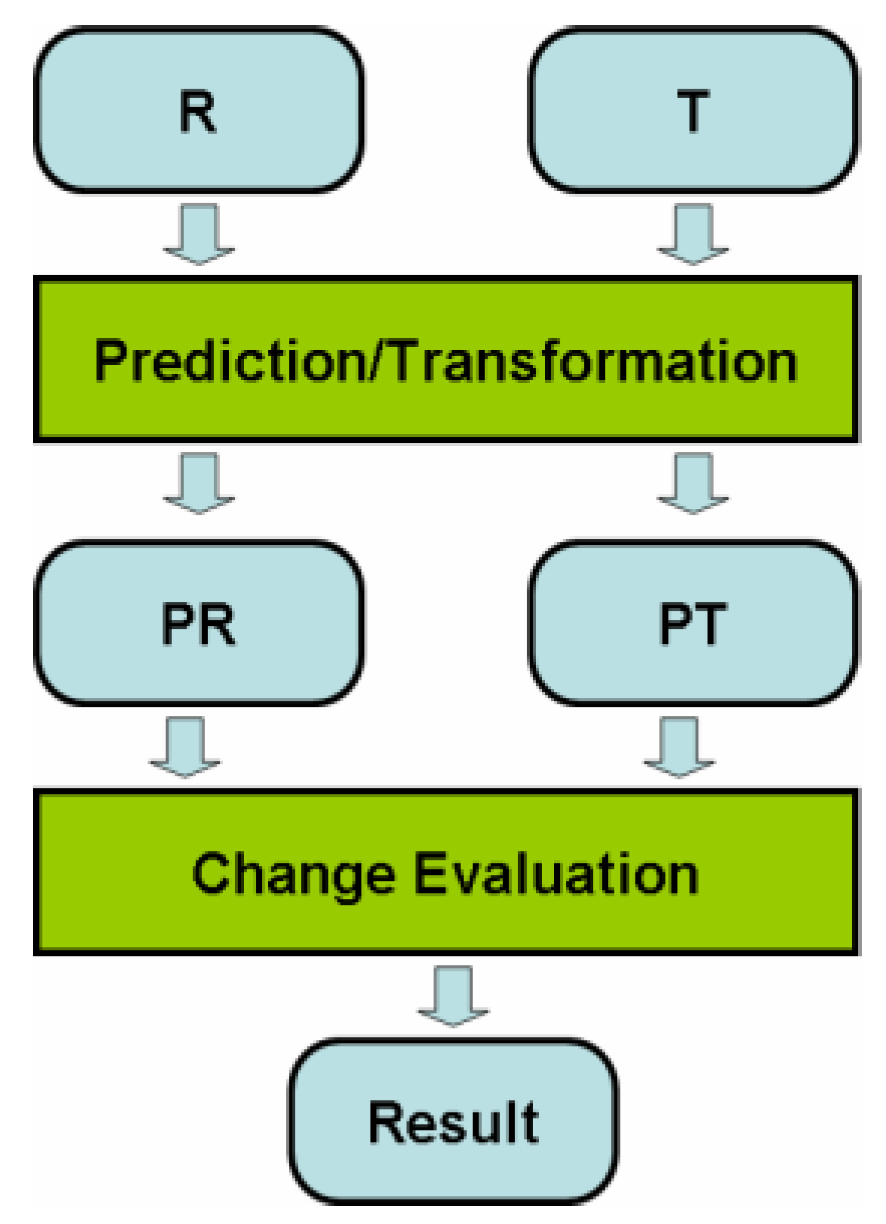

- (1) Prediction/Transformation. The original reference image (R) and test image (T) are transformed to new spaces as PR and PT. In change detection, the reference image normally refers to an image at an earlier date, and the test image is the image at a later date.

- (2) Change Evaluation. The residual between the transformed image pair is generated. If the interest is only in which pixels have changed, then an anomaly detector is usually applied. If one is more interested in the type of changes, then some additional pixel analyses are needed for the changed areas.
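As an illustration of the change evaluation step, the sketch below applies a global RX-style anomaly detector (the Mahalanobis distance of each residual pixel from the residual mean) to the residual image. The choice of a global RX detector, the function name `rx_scores`, and the (rows, cols, bands) array layout are assumptions made for this example; other anomaly detectors could be substituted.

```python
import numpy as np

def rx_scores(residual):
    """Global RX-style score: Mahalanobis distance of each residual
    pixel from the mean residual, using the global covariance."""
    rows, cols, bands = residual.shape
    x = residual.reshape(-1, bands).astype(float)
    xc = x - x.mean(axis=0)
    cov = np.atleast_2d(np.cov(xc, rowvar=False))
    cov_inv = np.linalg.pinv(cov)  # pseudo-inverse guards against singular covariance
    scores = np.einsum("ij,jk,ik->i", xc, cov_inv, xc)
    return scores.reshape(rows, cols)
```

A typical use would be `scores = rx_scores(pt - pr)`, where `pr` and `pt` are the transformed reference and test images, followed by thresholding the score map.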

2.2.1. Prediction

- Compute the mean and covariance of R and T.

- Perform eigen-decomposition (or SVD) of the two covariance matrices, where VR and VT are the orthonormal eigenvectors, and DR and DT are the singular values of R and T.

- Do the transformation.
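The three steps above (mean and covariance, eigen-decomposition, transformation) can be sketched as a whitening of each image. This is one common form of covariance equalization and is an assumption here, because the transformation equations are not reproduced in the text; the function names are hypothetical and images are assumed to have shape (rows, cols, bands).

```python
import numpy as np

def _whiten(pixels, eps=1e-10):
    """Subtract the mean and apply C^(-1/2) built from the eigen-decomposition."""
    mean = pixels.mean(axis=0)
    cov = np.atleast_2d(np.cov(pixels, rowvar=False))
    eigvals, eigvecs = np.linalg.eigh(cov)           # C = V D V^T
    inv_sqrt = eigvecs @ np.diag(1.0 / np.sqrt(np.maximum(eigvals, eps))) @ eigvecs.T
    return (pixels - mean) @ inv_sqrt                # inv_sqrt is symmetric

def covariance_equalization(ref, test):
    """Return whitened versions (PR, PT) of the reference and test images."""
    rows, cols, bands = ref.shape
    pr = _whiten(ref.reshape(-1, bands).astype(float))
    pt = _whiten(test.reshape(-1, bands).astype(float))
    return pr.reshape(rows, cols, bands), pt.reshape(rows, cols, bands)
```

With this form, a global gain and offset between the two acquisitions is removed, so the residual `pt - pr` is close to zero where nothing has changed.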

- Calculate the means and covariances of R and T.

- Generate the cross-covariance between R and T.

- Perform the transformation.
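The cross-covariance steps above match the structure of a chronochrome-style linear predictor. The sketch below assumes the usual least-squares form, in which the reference image is mapped toward the test image and the test image is left unchanged; this form, the function name, and the (rows, cols, bands) layout are assumptions, as the equations are not reproduced in the text.

```python
import numpy as np

def chronochrome(ref, test):
    """Predict the test image from the reference image with a global
    affine map estimated from means, covariance, and cross-covariance."""
    rows, cols, bands = ref.shape
    r = ref.reshape(-1, bands).astype(float)
    t = test.reshape(-1, bands).astype(float)
    m_r, m_t = r.mean(axis=0), t.mean(axis=0)
    rc, tc = r - m_r, t - m_t
    n = r.shape[0]
    c_r = rc.T @ rc / (n - 1)    # covariance of the reference image
    c_rt = rc.T @ tc / (n - 1)   # cross-covariance between reference and test
    predicted = m_t + rc @ np.linalg.pinv(c_r) @ c_rt
    return predicted.reshape(rows, cols, bands), test
```

The residual is then `test - predicted`; pixels that the linear relationship cannot explain show up with large residuals.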

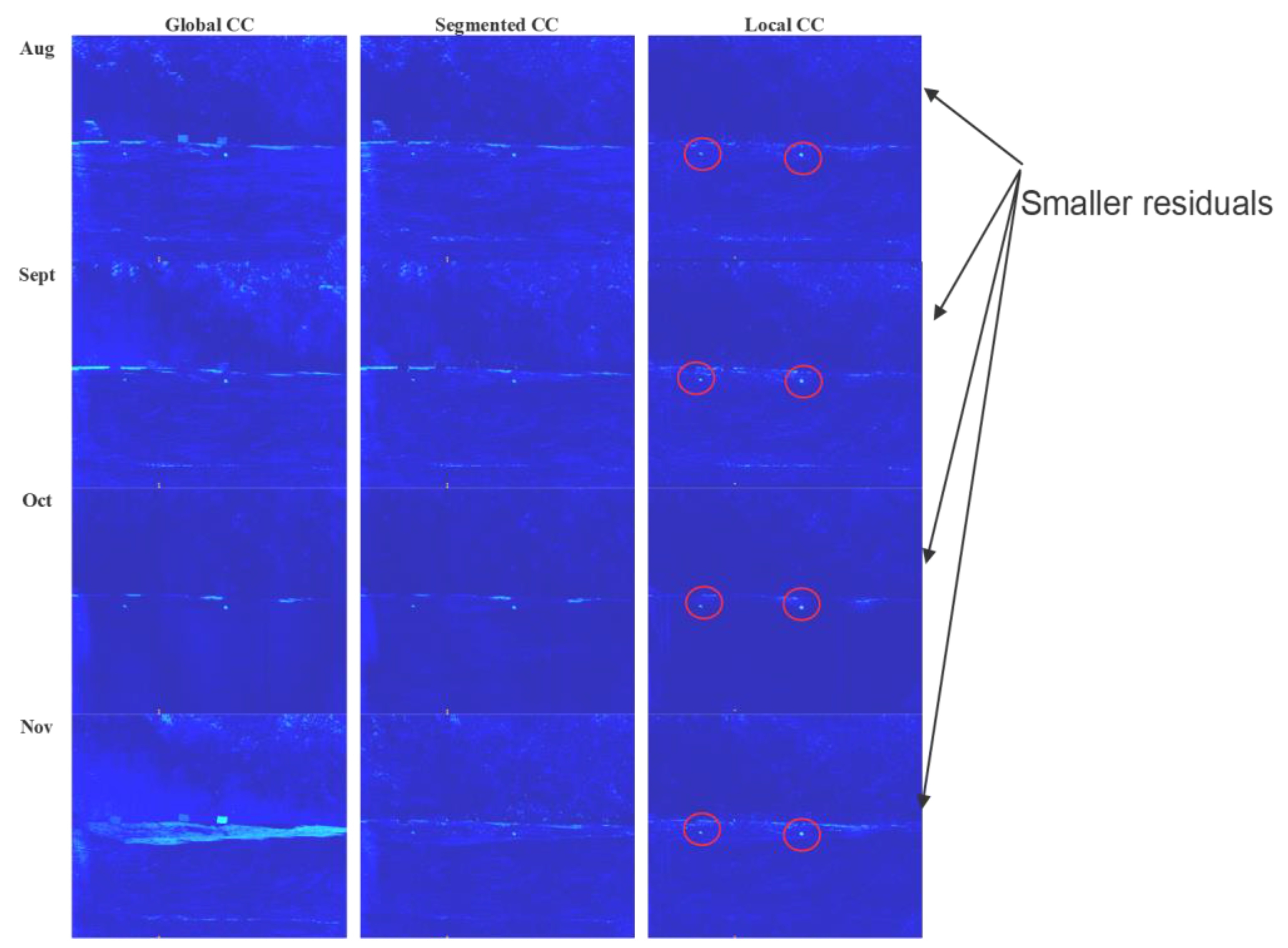

- Divide the images into non-overlapping blocks.

- For each block, select a prediction window in each image. The prediction window is larger than the block.

- Apply CE, CC, or NN within the prediction window.
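The block-wise procedure above can be sketched as follows. For brevity, the predictor fitted inside each window is a least-squares affine map, used here as a stand-in for whichever of CE, CC, or NN is chosen; the function names and the `block` and `margin` parameters are hypothetical, and images are assumed to have shape (rows, cols, bands).

```python
import numpy as np

def fit_linear_predictor(ref_px, test_px):
    """Least-squares affine map from reference spectra to test spectra."""
    m_r, m_t = ref_px.mean(axis=0), test_px.mean(axis=0)
    rc, tc = ref_px - m_r, test_px - m_t
    gain = np.linalg.pinv(rc.T @ rc) @ (rc.T @ tc)
    return lambda px: m_t + (px - m_r) @ gain

def local_prediction(ref, test, block=8, margin=4):
    """Predict the test image block by block, fitting each predictor on a
    window that extends `margin` pixels beyond the block on every side."""
    rows, cols, bands = ref.shape
    predicted = np.empty(ref.shape, dtype=float)
    for r0 in range(0, rows, block):
        for c0 in range(0, cols, block):
            r1, c1 = min(r0 + block, rows), min(c0 + block, cols)
            # Prediction window: the block plus a margin, clipped to the image.
            wr0, wc0 = max(r0 - margin, 0), max(c0 - margin, 0)
            wr1, wc1 = min(r1 + margin, rows), min(c1 + margin, cols)
            predictor = fit_linear_predictor(
                ref[wr0:wr1, wc0:wc1].reshape(-1, bands).astype(float),
                test[wr0:wr1, wc0:wc1].reshape(-1, bands).astype(float),
            )
            block_px = ref[r0:r1, c0:c1].reshape(-1, bands).astype(float)
            predicted[r0:r1, c0:c1] = predictor(block_px).reshape(r1 - r0, c1 - c0, bands)
    return predicted
```

Fitting on a window larger than the block gives each local predictor more samples while still letting the mapping vary across the scene.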

2.2.2. Residual Analysis

2.3. Alternative Approaches

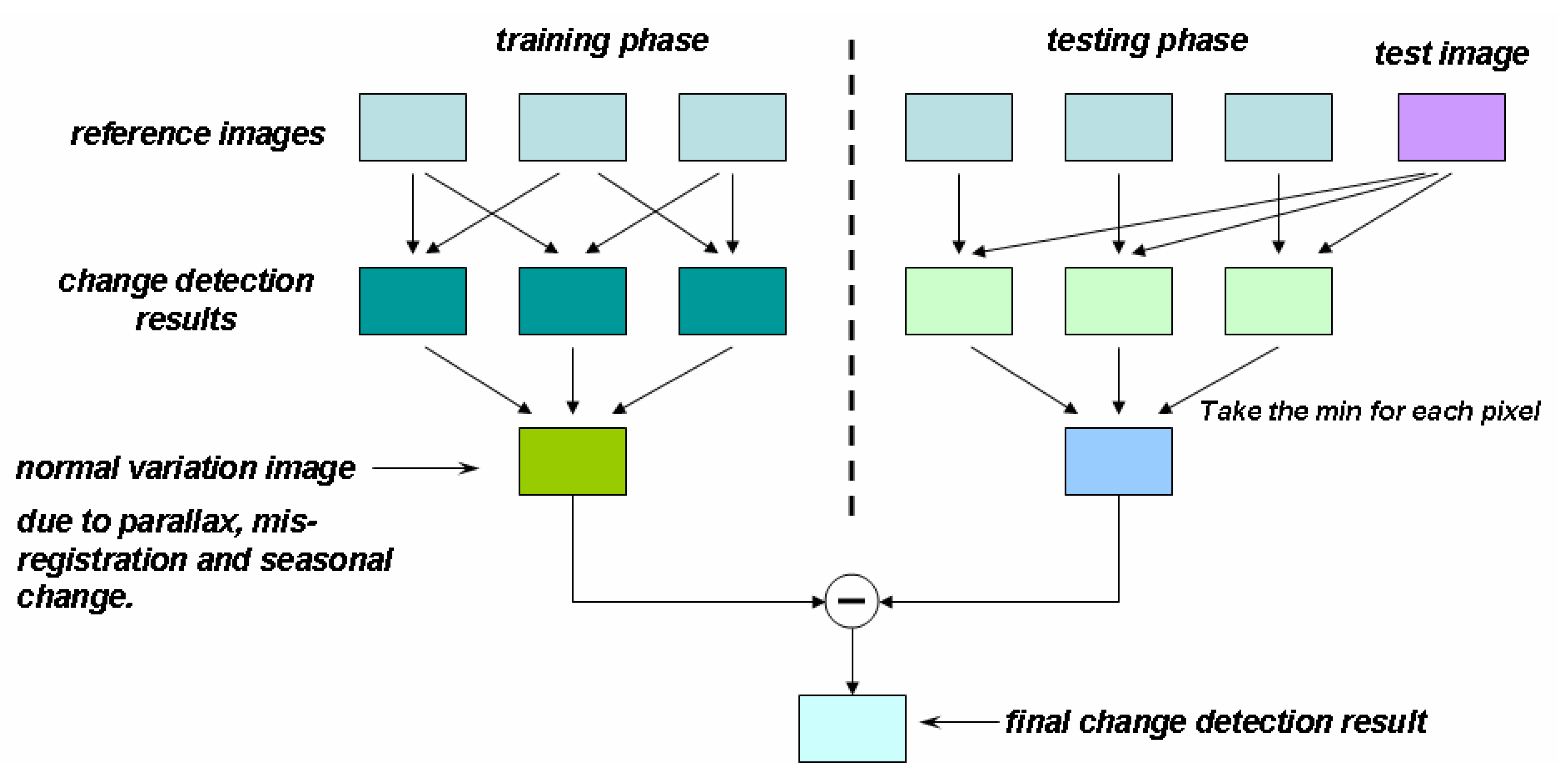

2.3.1. Change Detection Using Multiple References

| Algorithm 1. MRCD (Multiple References Change Detection) [55] |

| Input: A set of reference images, a test image T, and a single-reference change detection function f. Output: Change detection result image O. Algorithm: |
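A minimal sketch of the multiple-reference idea is given below, using the inputs and output named in Algorithm 1. The fusion rule is an assumption: the algorithm steps are not reproduced here, so the sketch applies the single-reference detector to every reference and keeps the pixel-wise minimum response, on the reasoning that a genuine change should appear against all references. The function name `mrcd` and the `fuse` parameter are hypothetical.

```python
import numpy as np

def mrcd(references, test, detect, fuse=np.minimum):
    """Apply a single-reference detector to each reference image and
    fuse the resulting change maps (assumed rule: pixel-wise minimum)."""
    if len(references) == 0:
        raise ValueError("at least one reference image is required")
    results = [detect(ref, test) for ref in references]
    fused = results[0]
    for result in results[1:]:
        fused = fuse(fused, result)
    return fused
```

Here `detect` plays the role of f in Algorithm 1 and can be any function that takes a reference image and a test image and returns a change map.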

2.3.2. Band Ratioing

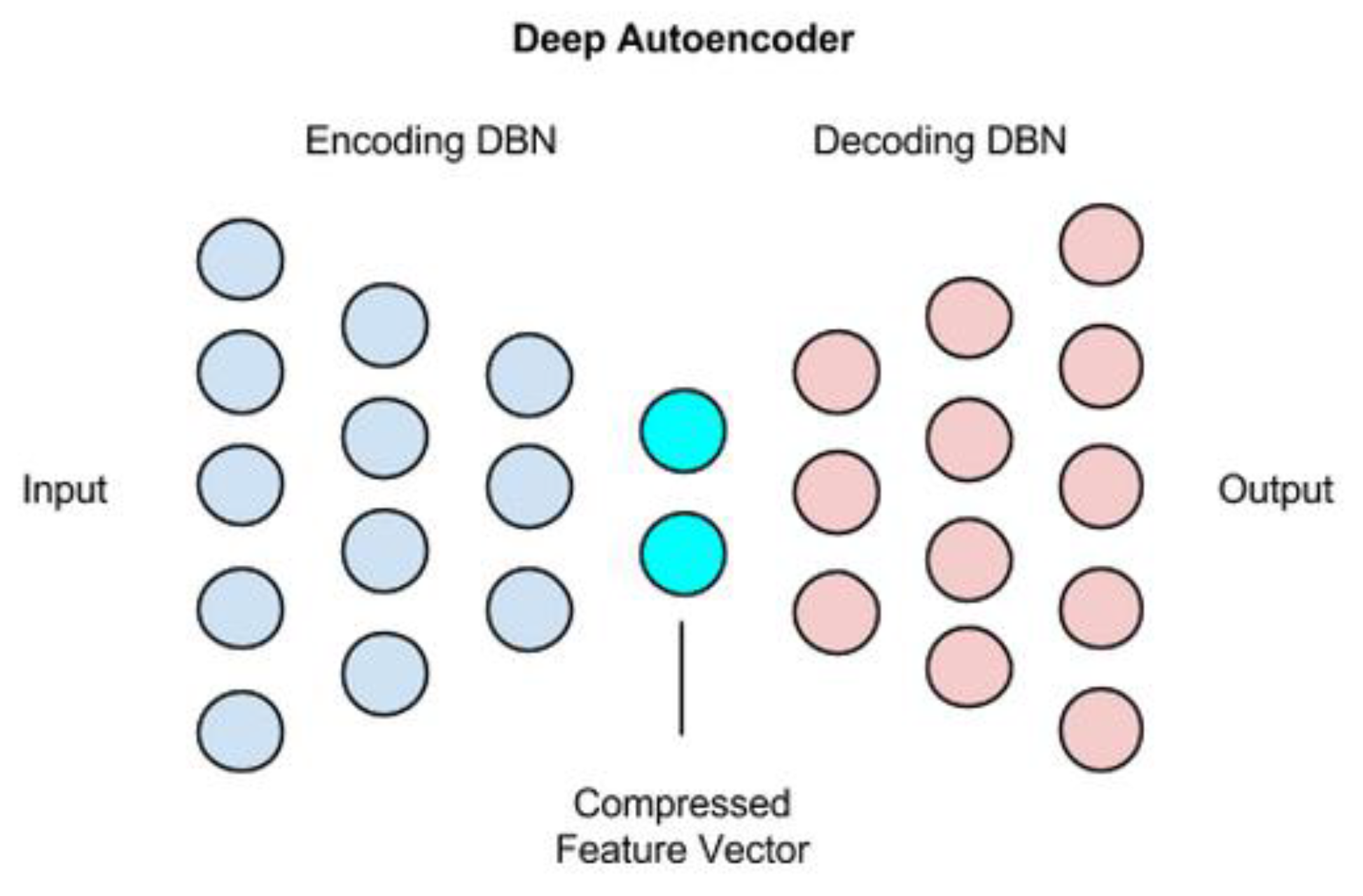

2.3.3. Deep Learning (Autoencoder)

| Algorithm 2. Change Detection Using Auto-encoder: |

| Input: Past images {x1, …, xM}; current image {y}. Output: Change map. Steps: For image pairs {xi, y}, i = 1, …, M |

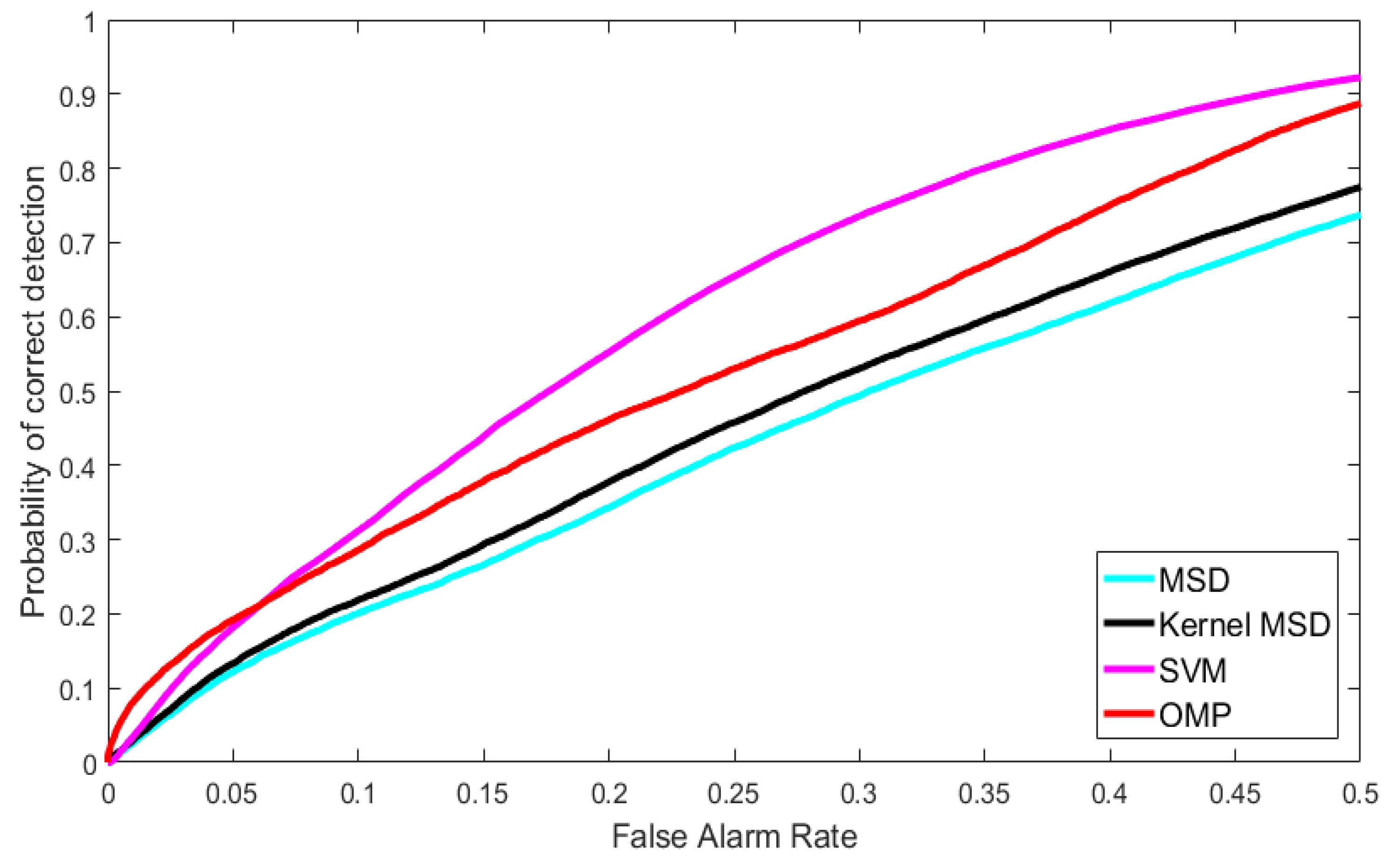

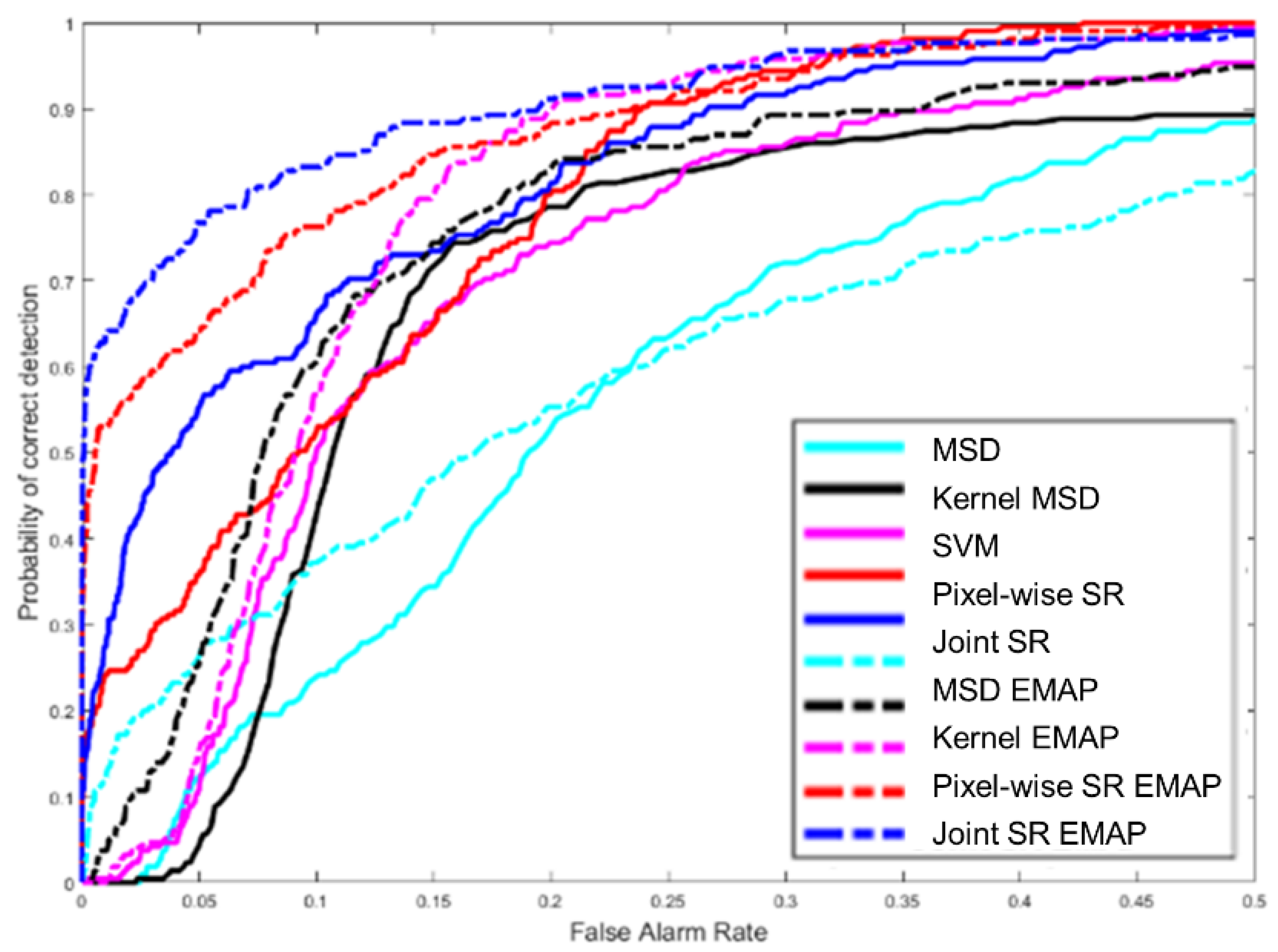

2.3.4. Joint Sparsity Approach

2.4. Other Approaches

2.5. Survey of Approaches After 2015

2.5.1. Supervised

2.5.2. Unsupervised

2.5.3. Applications

3. Challenges in Change Detection from Practitioners’ Viewpoints

3.1. Need to Enhance Registration Performance

3.2. Need to Improve Computational Efficiency

3.3. Possibility of Change Detection Using Enhanced MS and HS Images

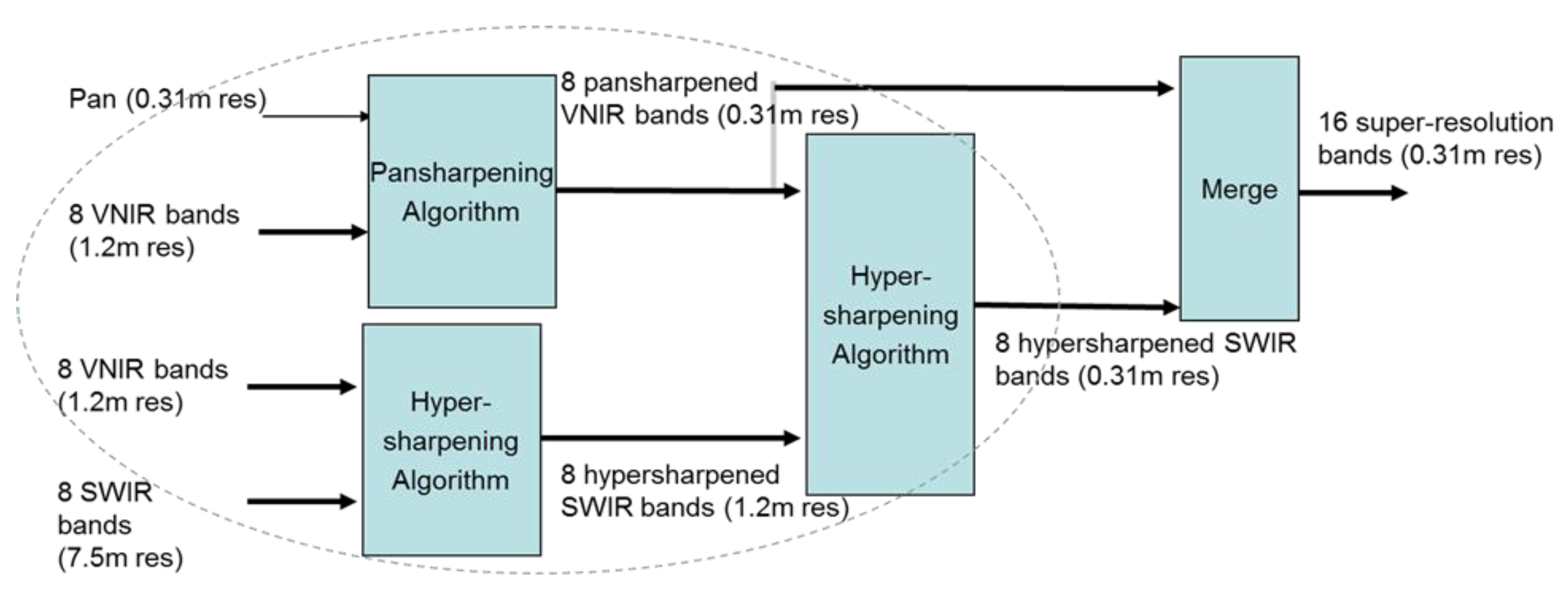

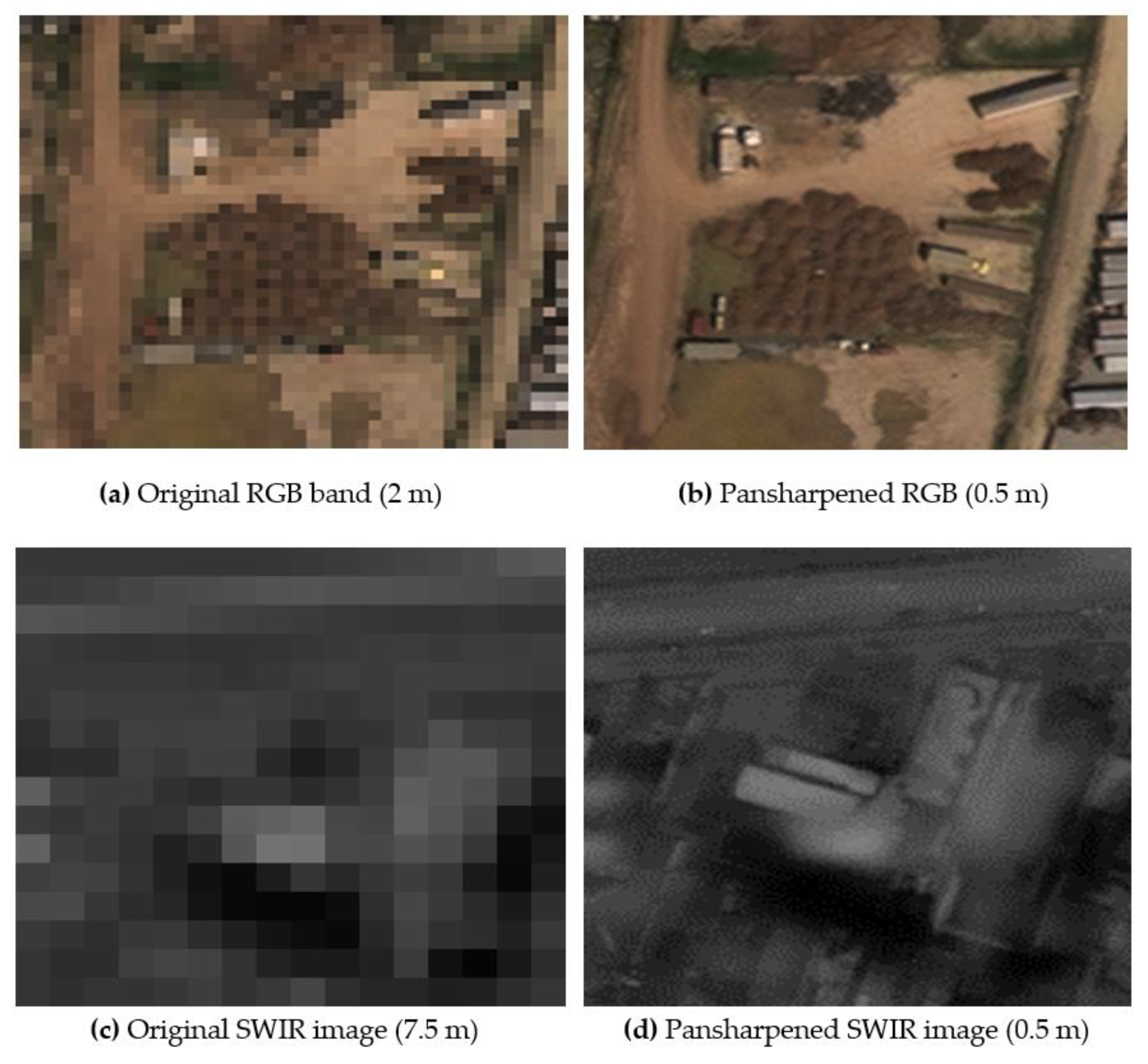

3.3.1. Enhancing the Spatial Resolution of MS Images

3.3.2. Spatial Resolution Enhancement for HS Images

- Lack of data

- Pansharpening performance assessment. In practical applications, no ground truth images are available to assess pansharpening performance, so a full resolution approach [22] is needed. However, existing full resolution assessment methods such as Quality with No Reference (QNR) are still inconsistent: the best performing method according to QNR may not be the best according to the peak signal-to-noise ratio (PSNR). Addressing this critical issue may require an effort from the whole community.

3.4. Possibility of Change Detection Using Synthetic HS Images

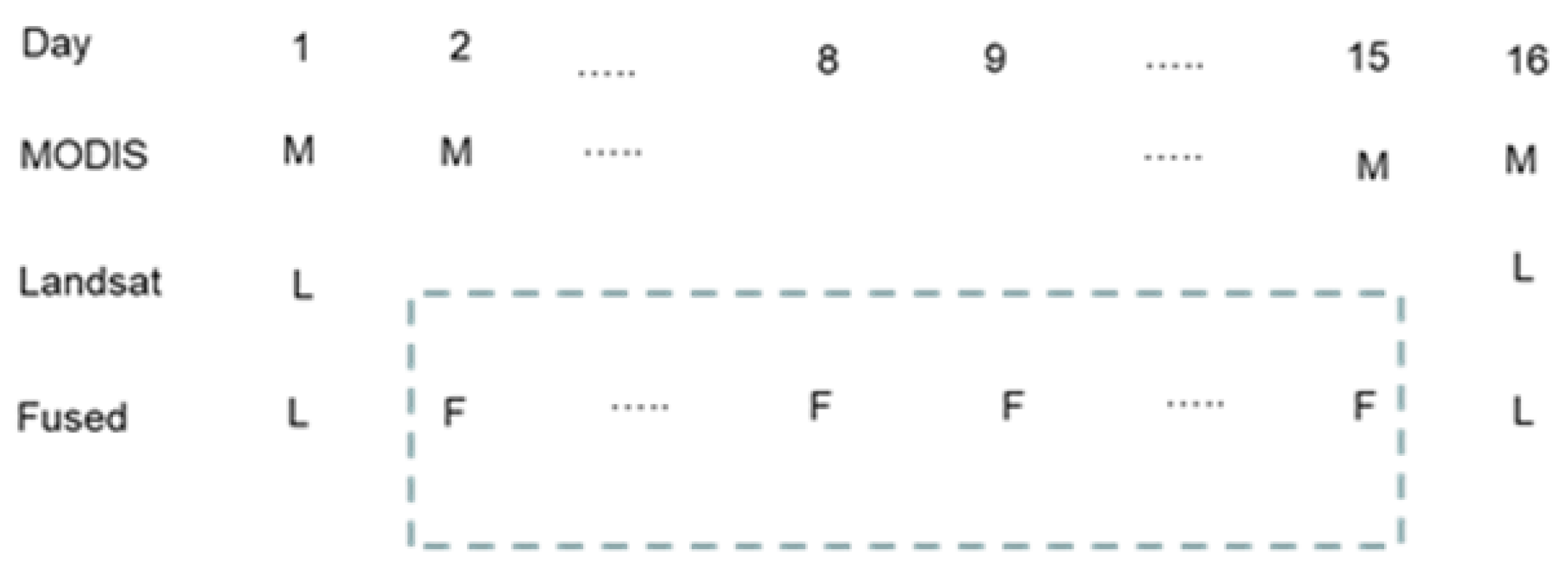

3.5. Possibility of Change Detection Using Temporally-Fused Images

3.6. Possibility of Change Detection Using Multimodal Images

4. Conclusions

Funding

Acknowledgments

Conflicts of Interest

References

- Landsat Bands. Available online: https://www.usgs.gov/media/images/landsat-8-band-designations (accessed on 5 August 2019).

- Kwan, C.; Budavari, B.; Bovik, A.C.; Marchisio, G. Blind Quality Assessment of Fused WorldView-3 Images by Using the Combinations of Pansharpening and Hypersharpening Paradigms. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1835–1839.

- Kwan, C.; Choi, J.H.; Chan, S.H.; Zhou, J.; Budavari, B. A Super-Resolution and Fusion Approach to Enhancing Hyperspectral Images. Remote Sens. 2018, 10, 1416.

- Ayhan, B.; Kwan, C.; Jensen, J.O. Remote vapor detection and classification using hyperspectral images. In Chemical, Biological, Radiological, Nuclear, and Explosives (CBRNE) Sensing XX; SPIE: Bellingham, WA, USA, 2019; Volume 110100U.

- Dao, M.; Kwan, C.; Ayhan, B.; Tran, T. Burn Scar Detection Using Cloudy MODIS Images via Low-rank and Sparsity-based Models. In Proceedings of the IEEE Global Conference on Signal and Information Processing, Washington, DC, USA, 7–9 December 2016; pp. 177–181.

- Wang, W.; Li, S.; Qi, H.; Ayhan, B.; Kwan, C.; Vance, S. Identify Anomaly Component by Sparsity and Low Rank. In Proceedings of the IEEE Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), Tokyo, Japan, 2–5 June 2015.

- Chang, C.I. Hyperspectral Imaging; Springer: Berlin, Germany, 2003.

- Li, S.; Wang, W.; Qi, H.; Ayhan, B.; Kwan, C.; Vance, S. Low-rank Tensor Decomposition based Anomaly Detection for Hyperspectral Imagery. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 4525–4529.

- Qu, Y.; Guo, R.; Wang, W.; Qi, H.; Ayhan, B.; Kwan, C.; Vance, S. Anomaly Detection in Hyperspectral Images Through Spectral Unmixing and Low Rank Decomposition. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 1855–1858.

- Qu, Y.; Qi, H.; Ayhan, B.; Kwan, C.; Kidd, R. Does Multispectral/Hyperspectral Pansharpening Improve the Performance of Anomaly Detection? In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 6130–6133.

- Kwan, C.; Ayhan, B.; Chen, G.; Chang, C.; Wang, J.; Ji, B. A Novel Approach for Spectral Unmixing, Classification, and Concentration Estimation of Chemical and Biological Agents. IEEE Trans. Geosci. Remote Sens. 2006, 44, 409–419.

- Dao, M.; Kwan, C.; Koperski, K.; Marchisio, G. A Joint Sparsity Approach to Tunnel Activity Monitoring Using High Resolution Satellite Images. In Proceedings of the IEEE Ubiquitous Computing, Electronics & Mobile Communication Conference, New York, NY, USA, 19–21 October 2017; pp. 322–328.

- Zhou, J.; Kwan, C.; Ayhan, B. Improved Target Detection for Hyperspectral Images Using Hybrid In-Scene Calibration. J. Appl. Remote Sens. 2017, 11, 035010.

- Ayhan, B.; Kwan, C. Application of Deep Belief Network to Land Classification Using Hyperspectral Images. In Proceedings of the 14th International Symposium on Neural Networks, Hokkaido, Japan, 21–26 June 2017; pp. 269–276.

- Kwan, C.; Ayhan, B. Automatic Target Recognition System with Online Machine Learning Capability. U.S. Patent 9940520, 10 April 2018.

- Zhou, J.; Kwan, C.; Ayhan, B.; Eismann, M. A Novel Cluster Kernel RX Algorithm for Anomaly and Change Detection Using Hyperspectral Images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 6497–6504.

- Kwan, C.; Chou, B.; Hagen, L.; Perez, D.; Li, J.; Shen, Y.; Koperski, K. Change Detection using Landsat and Worldview Images. In Algorithms, Technologies, and Applications for Multispectral and Hyperspectral Imagery XXV (Conference SI109); SPIE: Bellingham, WA, USA, 2019.

- Lee, C.M.; Cable, M.L.; Hook, S.J.; Green, R.O.; Ustin, S.L.; Mandl, D.J.; Middleton, E.M. An introduction to the NASA hyperspectral infrared imager (HyspIRI) mission and preparatory activities. Remote Sens. Environ. 2015, 167, 6–19.

- Kwan, C. Image Resolution Enhancement for Remote Sensing Applications. In Proceedings of the 2nd International Conference on Vision, Image and Signal Processing, Las Vegas, NV, USA, 27–29 August 2018.

- Kwan, C.; Choi, J.H.; Chan, S.; Zhou, J.; Budavari, B. Resolution Enhancement for Hyperspectral Images: A Super-Resolution and Fusion Approach. In Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing, New Orleans, LA, USA, 5–9 March 2017; pp. 6180–6184.

- Loncan, L.; de Almeida, L.B.; Bioucas-Dias, J.M.; Briottet, X.; Chanussot, J.; Dobigeon, N.; Fabre, S.; Liao, W.; Licciardi, G.A.; Simoes, M. Hyperspectral pansharpening: A review. IEEE Geosci. Remote Sens. Mag. 2015, 3, 27–46.

- Vivone, G.; Alparone, L.; Chanussot, J.; Dalla Mura, M.; Garzelli, A.; Licciardi, G.A.; Restaino, R.; Wald, L. A critical comparison among pansharpening algorithms. IEEE Trans. Geosci. Remote Sens. 2015, 53, 2565–2586.

- Radke, R.J.; Andra, S.; Al-Kofahi, O.; Roysam, B. Image change detection algorithms: A systematic survey. IEEE Trans. Image Proc. 2005, 14, 294–307.

- Unsalan, C.; Boyer, K.L. Multispectral Satellite Image Understanding; Springer: Berlin, Germany, 2011.

- İlsever, M.; Unsalan, C. Two-Dimensional Change Detection Methods; Springer: Berlin, Germany, 2012.

- Bovolo, F.; Bruzzone, L. The time variable in data fusion: A change detection perspective. IEEE Geosci. Remote Sens. Mag. 2015, 3, 8–26.

- Perez, D.; Banerjee, D.; Kwan, C.; Dao, M.; Shen, Y.; Koperski, K.; Marchisio, G.; Li, J. Deep Learning for Effective Detection of Excavated Soil Related to Illegal Tunnel Activities. In Proceedings of the IEEE Ubiquitous Computing, Electronics & Mobile Communication Conference, New York, NY, USA, 19–21 October 2017; pp. 626–632.

- Lu, Y.; Perez, D.; Dao, M.; Kwan, C.; Li, J. Deep Learning with Synthetic Hyperspectral Images for Improved Soil Detection in Multispectral Imagery. In Proceedings of the IEEE Ubiquitous Computing, Electronics & Mobile Communication Conference, New York, NY, USA, 8–10 November 2018.

- Kwan, C.; Chou, B.; Hagen, L.; Yang, J.; Ayhan, B.; Koperski, K. Ground Object Detection Using Worldview Images. In Signal Processing, Sensor/Information Fusion, and Target Recognition XXVIII; SPIE: Bellingham, WA, USA, 2019.

- Kwan, C.; Ayhan, B.; Budavari, B. Fusion of THEMIS and TES for Accurate Mars Surface Characterization. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 3381–3384.

- Kwan, C.; Haberle, C.; Ayhan, B.; Chou, B.; Echavarren, A.; Castaneda, G.; Budavari, B.; Dickenshied, S. On the Generation of High-Spatial and High-Spectral Resolution Images Using THEMIS and TES for Mars Exploration. In Algorithms and Technologies for Multispectral, Hyperspectral, and Ultraspectral Imagery XXIV; SPIE: Bellingham, WA, USA, 2018; Volume 106440B.

- Dao, M.; Kwan, C.; Ayhan, B.; Bell, J.F. Enhancing Mastcam Images for Mars Rover Mission. In Proceedings of the 14th International Symposium on Neural Networks, Hokkaido, Japan, 21–26 June 2017; pp. 197–206.

- Kwan, C.; Budavari, B.; Dao, M.; Ayhan, B.; Bell, J.F. Pansharpening of Mastcam images. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 5117–5120.

- Ayhan, B.; Dao, M.; Kwan, C.; Chen, H.; Bell, J.F.; Kidd, R. A Novel Utilization of Image Registration Techniques to Process Mastcam Images in Mars Rover with Applications to Image Fusion, Pixel Clustering, and Anomaly Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 4553–4564.

- Kwan, C.; Chou, B.; Ayhan, B. Enhancing Stereo Image Formation and Depth Map Estimation for Mastcam Images. In Proceedings of the IEEE Ubiquitous Computing, Electronics & Mobile Communication Conference, New York, NY, USA, 8–10 November 2018.

- Kwan, C.; Chou, B.; Ayhan, B. Stereo Image and Depth Map Generation for Images with Different Views and Resolutions. In Proceedings of the IEEE Ubiquitous Computing, Electronics & Mobile Communication Conference, New York, NY, USA, 8–10 November 2018.

- Dao, M.; Kwan, C.; Garcia, S.B.; Plaza, A.; Koperski, K. A New Approach to Soil Detection Using Expanded Spectral Bands. IEEE Geosci. Remote Sens. Lett. 2019.

- Kwan, C. Remote Sensing Performance Enhancement in Hyperspectral Images. Sensors 2018, 18, 3598.

- Zanetti, M.; Bovolo, F.; Bruzzone, L. Rayleigh-Rice mixture parameter estimation via EM algorithm for change detection in multispectral images. IEEE Trans. Image Proc. 2015, 24, 5004–5016.

- Kwan, C.; Zhu, X.; Gao, F.; Chou, B.; Perez, D.; Li, J.; Shen, Y.; Koperski, K.; Marchisio, G. Assessment of Spatiotemporal Fusion Algorithms for Worldview and Planet Images. Sensors 2018, 18, 1051.

- Liu, S.; Bruzzone, L.; Bovolo, F.; Zanetti, M.; Du, P. Sequential Spectral Change Vector Analysis for Change Detection in Multitemporal Hyperspectral Images. IEEE Trans. Geosci. Remote Sens. 2015, 53, 4363–4378.

- Euclidean Distance: Raw, Normalized, and Double-Scaled Coefficients. Available online: https://www.pbarrett.net/techpapers/euclid.pdf (accessed on 25 March 2019).

- Perez, D.; Lu, Y.; Kwan, C.; Shen, Y.; Koperski, K.; Li, J. Combining Satellite Images with Feature Indices for Improved Change Detection. In Proceedings of the IEEE Ubiquitous Computing, Electronics & Mobile Communication Conference, New York, NY, USA, 8–10 November 2018.

- Gao, J. Digital Analysis of Remotely Sensed Imagery; McGraw Hill Company: New York, NY, USA, 2009.

- Ayhan, B.; Kwan, C.; Zhou, J. A New Nonlinear Change Detection Approach Based on Band Ratioing. In Proceedings SPIE 10644, Algorithms and Technologies for Multispectral, Hyperspectral, and Ultraspectral Imagery XXIV; SPIE: Bellingham, WA, USA, 2018; Volume 1064410.

- Eismann, M. Hyperspectral Remote Sensing; SPIE: Bellingham, WA, USA, 2012; Volume PM210.

- Schaum, A. Local Covariance Equalization of Hyperspectral Imagery: Advantages and Limitations for Target Detection. In Proceedings of the IEEE Aerospace Conference, Big Sky, MT, USA, 6–13 March 2004.

- Schaum, A.; Stocker, A. Long-interval chronochrome target detection. In Proceedings of the International Symposium on Spectral Sensing Research, San Diego, CA, USA, 14–19 December 1997.

- Zhou, J.; Ayhan, B.; Kwan, C.; Eismann, M. New and Fast algorithms for Anomaly and Change Detection in Hyperspectral images. In Proceedings of the International Symposium on Spectral Sensing Research, Springfield, MO, USA, 21–24 June 2010.

- Eismann, M.T.; Meola, J.; Hardie, R.C. Hyperspectral change detection in the presence of diurnal and seasonal variations. IEEE Trans. Geosci. Remote Sens. 2008, 46, 237–249.

- Reed, I.S.; Yu, X. Adaptive multiple-band CFAR detection of an optical pattern with unknown spectral distribution. IEEE Trans. Acoust. Speech Signal Process. 1990, 38, 1760–1770.

- Kwon, H.; Nasrabadi, N.M. Kernel RX-algorithm: A nonlinear anomaly detector for hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 2005, 43, 388–397.

- Zhao, C.; Deng, W.; Yan, Y.; Yao, X. Progressive Line Processing of Kernel RX Anomaly Detection Algorithm for Hyperspectral Imagery. Sensors 2017, 17, 1815.

- Qu, Y.; Wang, W.; Guo, R.; Ayhan, B.; Kwan, C.; Vance, S.; Qi, H. Hyperspectral Anomaly Detection Through Spectral Unmixing and Dictionary-Based Low-Rank Decomposition. IEEE Trans. Geosci. Remote Sens. 2018, 56, 4391–4405.

- Zhou, J.; Kwan, C. High Performance Change Detection in Hyperspectral Images Using Multiple References. In Proceedings of the SPIE Defense + Security Conference, Anaheim, CA, USA, 15–19 April 2018.

- Ruff, S.W.; Christensen, P.R. Bright and dark regions on Mars: Particle size and mineralogical characteristics based on Thermal Emission Spectrometer data. J. Geophys. Res. Planets 2002, 107.

- Li, X.; Zhao, S.; Yang, H.; Cong, D.; Zhang, Z. A Bi-Band Binary Mask Based Land-Use Change Detection Using Landsat 8 OLI Imagery. Sustainability 2017, 9, 479.

- Xu, Y.; Xiang, S.; Huo, C.; Pan, C. Change Detection Based on Auto-encoder Model for VHR Images. In SPIE MIPPR (2013): Pattern Recognition and Computer Vision, Proceedings of the 8th International Symposium on Multispectral Image Processing and Pattern Recognition, Wuhan, China, 26–27 October 2013; Cao, Z., Ed.; SPIE: Bellingham, WA, USA, 2013; p. 8919.

- Wu, C.; Du, B.; Zhang, L. Hyperspectral anomalous change detection based on joint sparse representation. ISPRS J. Photogramm. Remote Sens. 2018, 146, 137–150.

- Zanetti, M.; Bruzzone, L. A theoretical framework for change detection based on a compound multiclass statistical model of the difference image. IEEE Trans. Geosci. Remote Sens. 2018, 56, 1129–1143.

- Falco, N.; Mura, M.D.; Bovolo, F.; Benediktsson, J.A.; Bruzzone, L. Change detection in VHR images based on morphological attribute profiles. IEEE Geosci. Remote Sensing Lett. 2013, 10, 636–640.

- Touati, R.; Mignotte, M. An Energy-Based Model Encoding Nonlocal Pairwise Pixel Interactions for Multisensor Change Detection. IEEE Trans. Geosci. Remote Sens. 2018, 56, 1046–1058.

- Gong, M.; Zhang, P.; Su, L.; Liu, J. Coupled dictionary learning for change detection from multisource data. IEEE Trans. Geosci. Remote Sens. 2016, 54, 7077–7091.

- Ziemann, A.K.; Theiler, J. Multi-sensor anomalous change detection at scale. In Proceedings of the SPIE Conference Algorithms, Technologies, and Applications for Multispectral and Hyperspectral Imagery XXV, Baltimore, MD, USA, 26–30 April 2019.

- Liu, Z.; Li, G.; Mercier, G.; He, Y.; Pan, Q. Change Detection in Heterogeneous Remote Sensing Images via Homogeneous Pixel Transformation. IEEE Trans. Image Proc. 2018, 27, 1822–1834.

- Zhan, T.; Gong, M.; Jiang, X.; Li, S. Log-Based Transformation Feature Learning for Change Detection in Heterogeneous Images. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1352–1356.

- Tan, K.; Jin, X.; Plaza, A.; Wang, X.; Xiao, L.; Du, P. Automatic Change Detection in High-Resolution Remote Sensing Images by Using a Multiple Classifier System and Spectral–Spatial Features. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 3439–3451.

- Wang, X.; Liu, S.; Du, P.; Liang, H.; Xia, J.; Li, Y. Object-Based Change Detection in Urban Areas from High Spatial Resolution Images Based on Multiple Features and Ensemble Learning. Remote Sens. 2018, 10, 276.

- Huo, C.; Chen, K.; Ding, K.; Zhou, Z.; Pan, C. Learning relationship for very high resolution image change detection. IEEE J. Sel. Top. App. Earth Obs. Remote Sens. 2016, 9, 3384–3394.

- Wu, C.; Zhang, L.; Du, B. Kernel Slow Feature Analysis for Scene Change Detection. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2367–2384.

- Zhang, W.; Lu, X.; Li, X. A Coarse-to-Fine Semi-Supervised Change Detection for Multispectral Images. IEEE Trans. Geosci. Remote Sens. 2018, 56, 3587–3599.

- Chen, Q.; Chen, Y.; Jiang, W. Genetic Particle Swarm Optimization–Based Feature Selection for Very-High-Resolution Remotely Sensed Imagery Object Change Detection. Sensors 2016, 16, 1204.

- Hou, B.; Wang, Y.; Liu, Q. A Saliency Guided Semi-Supervised Building Change Detection Method for High Resolution Remote Sensing Images. Sensors 2016, 16, 1377.

- Yu, H.; Yang, W.; Hua, G.; Ru, H.; Huang, P. Change Detection Using High Resolution Remote Sensing Images Based on Active Learning and Markov Random Fields. Remote Sens. 2017, 9, 1233.

- Feng, W.; Sui, H.; Tu, J.; Huang, W.; Xu, C.; Sun, K. A Novel Change Detection Approach for Multi-Temporal High-Resolution Remote Sensing Images Based on Rotation Forest and Coarse-to-Fine Uncertainty Analyses. Remote Sens. 2018, 10, 1015.

- Tan, K.; Zhang, Y.; Wang, X.; Chen, Y. Object-Based Change Detection Using Multiple Classifiers and Multi-Scale Uncertainty Analysis. Remote Sens. 2019, 11, 359.

- Chen, Z.; Wang, B. Spectrally-Spatially Regularized Low-Rank and Sparse Decomposition: A Novel Method for Change Detection in Multitemporal Hyperspectral Images. Remote Sens. 2017, 9, 1044.

- Erturk, A.; Iordache, M.-D.; Plaza, A. Sparse Unmixing With Dictionary Pruning for Hyperspectral Change Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 321–330.

- Wu, K.; Du, Q.; Wang, Y.; Yang, Y. Supervised Sub-Pixel Mapping for Change Detection from Remotely Sensed Images with Different Resolutions. Remote Sens. 2017, 9, 284.

- Zhang, W.; Lu, X. The Spectral-Spatial Joint Learning for Change Detection in Multispectral Imagery. Remote Sens. 2019, 11, 240.

- Wang, Q.; Yuan, Z.; Du, Q.; Li, X. GETNET: A General End-to-End 2-D CNN Framework for Hyperspectral Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2019, 57, 3–13.

- Lyu, H.; Lu, H.; Mou, L. Learning a Transferable Change Rule from a Recurrent Neural Network for Land Cover Change Detection. Remote Sens. 2016, 8, 506.

- Song, A.; Choi, J.; Han, Y.; Kim, Y. Change Detection in Hyperspectral Images Using Recurrent 3D Fully Convolutional Networks. Remote Sens. 2018, 10, 1827.

- Ma, W.; Xiong, Y.; Wu, Y.; Yang, H.; Zhang, X.; Jiao, L. Change Detection in Remote Sensing Images Based on Image Mapping and a Deep Capsule Network. Remote Sens. 2019, 11, 626.

- Liu, S.; Bruzzone, L.; Bovolo, F.; Du, P. Hierarchical Unsupervised Change Detection in Multitemporal Hyperspectral Images. IEEE Trans. Geosci. Remote Sens. 2015, 53, 244–260.

- Zhuang, H.; Deng, K.; Fan, H.; Yu, M. Strategies Combining Spectral Angle Mapper and Change Vector Analysis to Unsupervised Change Detection in Multispectral Images. IEEE Geosci. Remote Sens. Lett. 2016, 13, 681–685.

- Liu, S.; Bruzzone, L.; Bovolo, F.; Du, P. Unsupervised Multitemporal Spectral Unmixing for Detecting Multiple Changes in Hyperspectral Images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 2733–2748.

- Shah-Hosseini, R.; Homayouni, S.; Safari, A. A Hybrid Kernel-Based Change Detection Method for Remotely Sensed Data in a Similarity Space. Remote Sens. 2015, 7, 12829–12858.

- Shao, P.; Shi, W.; He, P.; Hao, M.; Zhang, X. Novel Approach to Unsupervised Change Detection Based on a Robust Semi-Supervised FCM Clustering Algorithm. Remote Sens. 2016, 8, 264.

- Ma, L.; Li, M.; Blaschke, T.; Ma, X.; Tiede, D.; Cheng, L.; Chen, Z.; Chen, D. Object-Based Change Detection in Urban Areas: The Effects of Segmentation Strategy, Scale, and Feature Space on Unsupervised Methods. Remote Sens. 2016, 8, 761.

- Liu, Q.; Liu, L.; Wang, Y. Unsupervised Change Detection for Multispectral Remote Sensing Images Using Random Walks. Remote Sens. 2017, 9, 438.

- Wang, B.; Choi, J.; Choi, S.; Lee, S.; Wu, P.; Gao, Y. Image Fusion-Based Land Cover Change Detection Using Multi-Temporal High-Resolution Satellite Images. Remote Sens. 2017, 9, 804.

- Liu, S.; Du, Q.; Tong, X.; Samat, A.; Pan, H.; Ma, X. Band Selection-Based Dimensionality Reduction for Change Detection in Multi-Temporal Hyperspectral Images. Remote Sens. 2017, 9, 1008.

- Lv, Z.; Liu, T.; Wan, Y.; Benediktsson, J.A.; Zhang, X. Post-Processing Approach for Refining Raw Land Cover Change Detection of Very High-Resolution Remote Sensing Images. Remote Sens. 2018, 10, 472.

- Solano-Correa, Y.T.; Bovolo, F.; Bruzzone, L. An Approach for Unsupervised Change Detection in Multitemporal VHR Images Acquired by Different Multispectral Sensors. Remote Sens. 2018, 10, 533.

- Yan, L.; Xia, W.; Zhao, Z.; Wang, Y. A Novel Approach to Unsupervised Change Detection Based on Hybrid Spectral Difference. Remote Sens. 2018, 10, 841.

- Park, H.; Choi, J.; Park, W.; Park, H. Modified S2CVA Algorithm Using Cross-Sharpened Images for Unsupervised Change Detection. Sustainability 2018, 10, 3301.

- Lei, T.; Xue, D.; Lv, Z.; Li, S.; Zhang, Y.; Nandi, A.K. Unsupervised Change Detection Using Fast Fuzzy Clustering for Landslide Mapping from Very High-Resolution Images. Remote Sens. 2018, 10, 1381.

- Li, X.; Yuan, Z.; Wang, Q. Unsupervised Deep Noise Modeling for Hyperspectral Image Change Detection. Remote Sens. 2019, 11, 258.

- Mandanici, E.; Bitelli, G. Multi-Image and Multi-Sensor Change Detection for Long-Term Monitoring of Arid Environments with Landsat Series. Remote Sens. 2015, 7, 14019–14038.

- Misbari, S.; Hashim, M. Change Detection of Submerged Seagrass Biomass in Shallow Coastal Water. Remote Sens. 2016, 8, 200.

- Liu, H.; Meng, X.; Jiang, T.; Liu, X.; Zhang, A. Change Detection of Phragmites Australis Distribution in the Detroit Wildlife Refuge Based on an Iterative Intersection Analysis Algorithm. Sustainability 2016, 8, 264.

- Ballanti, L.; Byrd, K.B.; Woo, I.; Ellings, C. Remote Sensing for Wetland Mapping and Historical Change Detection at the Nisqually River Delta. Sustainability 2017, 9, 1919.

- Lv, Z.; Shi, W.; Zhou, X.; Benediktsson, J.A. Semi-Automatic System for Land Cover Change Detection Using Bi-Temporal Remote Sensing Images. Remote Sens. 2017, 9, 1112.

- Lv, Z.; Liu, T.; Zhang, P.; Benediktsson, J.A.; Chen, Y. Land Cover Change Detection Based on Adaptive Contextual Information Using Bi-Temporal Remote Sensing Images. Remote Sens. 2018, 10, 901.

- Luo, H.; Liu, C.; Wu, C.; Guo, X. Urban Change Detection Based on Dempster–Shafer Theory for Multi-temporal Very High-Resolution Imagery. Remote Sens. 2018, 10, 980.

- Liu, H.; Yang, M.; Chen, J.; Hou, J.; Deng, M. Line-Constrained Shape Feature for Building Change Detection in VHR Remote Sensing Imagery. ISPRS Int. J. Geo-Inf. 2018, 7, 410.

- Zhou, Z.; Ma, L.; Fu, T.; Zhang, G.; Yao, M.; Li, M. Change Detection in Coral Reef Environment Using High-Resolution Images: Comparison of Object-Based and Pixel-Based Paradigms. ISPRS Int. J. Geo-Inf. 2018, 7, 441.

- Gstaiger, V.; Tian, J.; Kiefl, R.; Kurz, F. 2D vs. 3D Change Detection Using Aerial Imagery to Support Crisis Management of Large-Scale Events. Remote Sens. 2018, 10, 2054.

- Gadal, S.; Ouerghemmi, W. Multi-Level Morphometric Characterization of Built-up Areas and Change Detection in Siberian Sub-Arctic Urban Area: Yakutsk. ISPRS Int. J. Geo-Inf. 2019, 8, 129.

- Bueno, I.T.; Acerbi Júnior, F.W.; Silveira, E.M.O.; Mello, J.M.; Carvalho, L.M.T.; Gomide, L.R.; Withey, K.; Scolforo, J.R.S. Object-Based Change Detection in the Cerrado Biome Using Landsat Time Series. Remote Sens. 2019, 11, 570.

- Gong, J.; Hu, X.; Pang, S.; Li, K. Patch Matching and Dense CRF-Based Co-Refinement for Building Change Detection from Bi-Temporal Aerial Images. Sensors 2019, 19, 1557.

- Marchesi, S.; Bovolo, F.; Bruzzone, L. A Context-Sensitive Technique Robust to Registration Noise for Change Detection in VHR Multispectral Images. IEEE Trans. Image Proc. 2010, 19, 1877–1889.

- Vongsy, K.; Eismann, M.T.; Mendenhall, M.J. Extension of the Linear Chromodynamics Model for Spectral Change Detection in the Presence of Residual Spatial Misregistration. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3005–3021.

- Ayhan, B.; Kwan, C. On the use of Radiance Domain for Burn Scar Detection under Varying Atmospheric Illumination Conditions and Viewing Geometry. J. Signal Image Video Proc. 2016, 11, 605–612.

- Zhou, J.; Kwan, C. Fast Anomaly Detection Algorithms for Hyperspectral Images. J. Multidiscip. Eng. Sci. Technol. 2015, 2, 2521–2525.

- Chang, C.-I. Real-Time Recursive Hyperspectral Sample and Band Processing; Springer: Berlin, Germany, 2017.

- Manolakis, D.; Siracusa, C.; Shaw, G. Adaptive Matched Subspace Detectors for Hyperspectral Imaging Applications. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing, Salt Lake City, UT, USA, 7–11 May 2001.

- Kwan, C. Method and System for Generating High Resolution Worldview-3 Images. U.S. Patent 10192288, 28 June 2019.

- Kwon, H.; Nasrabadi, N.M. Kernel matched subspace detectors for hyperspectral target detection. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 178–194.

- Melgani, F.; Bruzzone, L. Classification of hyperspectral remote sensing images with support vector machines. IEEE Trans. Geosci. Remote Sens. 2004, 42, 1778–1790.

- Dao, M.; Nguyen, D.; Tran, T.; Chin, S. Chemical plume detection in hyperspectral imagery via joint sparse representation. Mil. Commun. Conf. (MILCOM) 2012, 1–5.

- Kwan, C.; Dao, M.; Chou, B.; Kwan, L.M.; Ayhan, B. Mastcam Image Enhancement Using Estimated Point Spread Functions. In Proceedings of the IEEE Ubiquitous Computing, Electronics & Mobile Communication Conference, New York, NY, USA, 19–21 October 2017.

- Chan, S.H.; Wang, X.; Elgendy, O.A. Plug-and-play ADMM for image restoration: Fixed point convergence and applications. IEEE Trans. Comput. Imaging 2017, 3, 84–98.

- Yan, Q.; Xu, Y.; Yang, X.; Truong, T.Q. Single image superresolution based on gradient profile sharpness. IEEE Trans. Image Process. 2015, 24, 3187–3202.

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Learning a deep convolutional network for image super-resolution. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 184–199.

- Dong, C.; Loy, C.C.; Tang, X. Accelerating the super-resolution convolutional neural network. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 391–407.

- Timofte, R.; de Smet, V.; Van Gool, L. A+: Adjusted anchored neighborhood regression for fast super-resolution. In Proceedings of the Asian Conference on Computer Vision, Singapore, 1–5 November 2014; pp. 111–126.

- Chang, H.; Yeung, D.; Xiong, Y. Super-resolution through neighbor embedding. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 27 June–2 July 2004.

- Yokoya, N.; Yairi, T.; Iwasaki, A. Coupled nonnegative matrix factorization unmixing for hyperspectral and multispectral data fusion. IEEE Trans. Geosci. Remote Sens. 2012, 50, 528–537.

- Hardie, R.C.; Eismann, M.T.; Wilson, G.L. Map estimation for hyperspectral image resolution enhancement using an auxiliary sensor. IEEE Trans. Image Process. 2004, 13, 1174–1184.

- Wei, Q.; Bioucas-Dias, J.; Dobigeon, N.; Tourneret, J.Y. Hyperspectral and multispectral image fusion based on a sparse representation. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3658–3668.

- Choi, J.; Yu, K.; Kim, Y. A new adaptive component-substitution based satellite image fusion by using partial replacement. IEEE Trans. Geosci. Remote Sens. 2011, 49, 295–309.

- Hook, S.J.; Rast, M. Mineralogic Mapping Using Airborne Visible Infrared Imaging Spectrometer (AVIRIS), Shortwave Infrared (SWIR) Data Acquired over Cuprite, Nevada. In Proceedings of the Second Airborne Visible Infrared Imaging Spectrometer (AVIRIS) Workshop, Pasadena, CA, USA, 4–5 June 1990; pp. 199–207. [Google Scholar]

- Keys, R. Cubic convolution interpolation for digital image processing. IEEE Trans. Acoust. Speech Signal Process. 1981, 29, 1153–1160. [Google Scholar] [CrossRef]

- Chavez, P.S., Jr.; Sides, S.C.; Anderson, J.A. Comparison of three different methods to merge multiresolution and multispectral data: Landsat TM and SPOT panchromatic. Photogramm. Eng. Remote Sens. 1991, 57, 295–303. [Google Scholar]

- Liao, W.; Huang, X.; Coillie, F.V.; Gautama, S.; Pizurica, A.; Philips, W.; Liu, H.; Zhu, T.; Shimoni, M.; Moser, G.; et al. Processing of multiresolution thermal hyperspectral and digital color data: Outcome of the 2014 IEEE GRSS data fusion contest. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 2984–2996. [Google Scholar] [CrossRef]

- Laben, C.; Brower, B. Process for Enhancing the Spatial Resolution of Multispectral Imagery Using Pan-Sharpening. U.S. Patent 6011875, 4 January 2000. [Google Scholar]

- Aiazzi, B.; Baronti, S.; Selva, M. Improving component substitution pansharpening through multivariate regression of ms+pan data. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3230–3239. [Google Scholar] [CrossRef]

- Aiazzi, B.; Alparone, L.; Baronti, S.; Garzelli, A.; Selva, M. MTF-tailored multiscale fusion of high-resolution ms and pan imagery. Photogramm. Eng. Remote Sens. 2006, 72, 591–596. [Google Scholar] [CrossRef]

- Vivone, G.; Restaino, R.; Dalla Mura, M.; Licciardi, G.; Chanussot, J. Contrast and error-based fusion schemes for multispectral image pansharpening. IEEE Geosci. Remote Sens. Lett. 2014, 11, 930–934. [Google Scholar] [CrossRef]

- Simoes, M.; Bioucas-Dias, J.; Almeida, L.B.; Chanussot, J. A convex formulation for hyperspectral image superresolution via subspace-based regularization. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3373–3388. [Google Scholar] [CrossRef]

- Simoes, M.; Bioucas-Dias, J.; Almeida, L.B.; Chanussot, J. Hyperspectral image superresolution: An edge-preserving convex formulation. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; pp. 4166–4170. [Google Scholar]

- Liu, J.G. Smoothing filter based intensity modulation: A spectral preserve image fusion technique for improving spatial details. Int. J. Remote Sens. 2000, 21, 3461–3472. [Google Scholar] [CrossRef]

- Zhou, J.; Kwan, C.; Budavari, B. Hyperspectral image super-resolution: A hybrid color mapping approach. J. Appl. Remote Sens. 2016, 10, 35024. [Google Scholar] [CrossRef]

- Ren, H.; Chang, C.I. A generalized orthogonal subspace projection approach to unsupervised multispectral image classification. IEEE Trans. Geosci. Remote Sens. 2000, 38, 2515–2528. [Google Scholar]

- Bernabé, S.; Marpu, P.R.; Plaza, A.; Mura, M.D.; Benediktsson, J.A. Spectral–Spatial Classification of Multispectral Images Using Kernel Feature Space Representation. IEEE Geosci. Remote Sens. Lett. 2014, 11, 288–292. [Google Scholar] [CrossRef]

- Gao, F.; Masek, J.; Schwaller, M.; Hall, F. On the blending of the Landsat and MODIS surface reflectance: Predicting daily Landsat surface reflectance. IEEE Trans. Geosci. Remote Sens. 2006, 44, 2207–2218. [Google Scholar]

- Kwan, C.; Budavari, B.; Gao, F.; Zhu, X. A Hybrid Color Mapping Approach to Fusing MODIS and Landsat Images for Forward Prediction. Remote Sens. 2018, 10, 520. [Google Scholar] [CrossRef]

- Kwan, C.; Chou, B.; Yang, J.; Perez, D.; Shen, Y.; Li, J.; Koperski, K. Landsat and Worldview Image Fusion. In Proceedings of the Signal Processing, Sensor/Information Fusion, and Target Recognition XXVIII, Baltimore, MD, USA, 15–17 April 2019. [Google Scholar]

- Xu, Y.; Lin, L.; Meng, D. Learning-Based Sub-Pixel Change Detection Using Coarse Resolution Satellite Imagery. Remote Sens. 2017, 9, 709. [Google Scholar] [CrossRef]

- Kwan, C.; Ayhan, B.; Larkin, J.; Kwan, L.; Bernabé, S.; Plaza, A. Performance of change detection using heterogeneous images and Extended Multi-Attribute Profiles (EMAPs). Remote Sens. 2019, 11, 2377. [Google Scholar] [CrossRef]

- Ayhan, B.; Kwan, C. A New Approach to Change Detection Using Heterogeneous Images. In Proceedings of the IEEE 10th Annual Ubiquitous Computing, Electronics & Mobile Communication Conference, New York, NY, USA, 10–12 October 2019. [Google Scholar]

| Supervised Methods | Reference and Notes | Data |
|---|---|---|
| Deep Learning | [82] recurrent neural network (RNN) | Landsat, Hyperion |
| | [83] RNN | Hyperion |
| | [84] Capsule Network | SAR, optical |
| Object based | [68] ensemble learning | Quickbird, Ziyuan-3, Gaofen-1 |
| | [73] Combination of pixel and object based | Quickbird |
| | [75] Rotation forest | Gaofen-2 |
| | [76] Multiple classifiers (SVM, CVA, …) | ZY-3 |
| Sparsity | [77] low rank and sparse representation | Pavia HSI, Hyperion |
| | [78] spectral unmixing | AVIRIS, APEX |
| | [79] Sub-pixel classification | Landsat, MODIS |
| | [80] Joint spectral-spatial learning | Landsat, GF-1 |
| | [81] abundance extraction using unmixing | Hyperion |
| Others | [67] Combination of conventional methods | ZY-3 |
| | [69] Relationship learning | QuickBird 2, Pleiades 1A |
| | [70] Kernel Slow Feature Analysis | IKONOS |
| | [71] Semi-automatic | Landsat |
| | [72] genetic particle swarming | WV-2, WV-3 |
| | [74] Combination of k-means and MRF | WV-2 |

| Unsupervised Methods | Reference and Notes | Data |
|---|---|---|
| Hierarchical | [85] Key algorithm: CVA | Hyperion (hyperspectral data) |
| Hybrid | [86] Combination of CVA and SAM | Quickbird |
| Spectral unmixing | [87] Change detection based on endmembers | Hyperion (hyperspectral data) |
| Kernel based | [88] Utilized many clustering tools | Landsat and Quickbird |
| Feature based | [89] Change detection using segmentation | Landsat and SAR |
| Object based | [90] Segmentation is key to success | WV-2 |
| | [94] Emphasis on post-processing after object detection | Aerial images and SPOT-5 |
| Random walk | [91] Combination of random walk, PCA, and GMM | Landsat, ASTER, Quickbird |
| Image fusion based on cross-pansharpened images | [92] IR-MAD for change detection | IKONOS-2, WV-3, GF-1 |
| | [97] Uses modified CVA | KOMPSAT-2 |
| Band selection | [93] CVA was used after band selection | Hyperion |
| Transformation based | [95] Focus on features from linear transformation | Quickbird, WV-2, Geoeye-1 |
| Hybrid spectral difference | [96] Combines spectral shapes, gradient of spectral shapes, and Euclidean distance | WV-2, WV-3, Landsat-7 |
| Fuzzy clustering | [98] Fuzzy c-means | Aerial images |
| Deep learning | [99] Unsupervised | Hyperion |

| Application | Reference and Notes | Data |
|---|---|---|
| Arid environment monitoring | [100] NDVI for change detection | Landsat |
| Submerged biomass in shallow coastal water | [101] Substrate-leaving radiance estimation | Landsat |
| Phragmites australis distribution | [102] Iterative intersection analysis algorithm based on NDVI | Landsat |
| Wetland mapping and historical change detection | [103] Object-based approach | Aerial images, WV-3 images, lidar |
| Land cover change detection | [104] Region growing algorithm | Aerial images, Landsat |
| | [105] Contextual based | Aerial images, Landsat |
| Urban change detection | [106] Combined object- and pixel-based approaches | GF-2 images |
| Building change detection | [107] Object-based approach | WV-2 images |
| | [112] Patch-based approach | Aerial images |
| Coral reef change detection | [108] Compared object- and pixel-based methods | Quickbird and WV-2 images |
| Large event crisis management | [109] Compared 2D and 3D change detection methods | Aerial images |
| Built-up area monitoring | [110] Supervised classification group and spectral index-based group | Sentinel-2A and SPOT 6 |
| Cerrado (Brazilian savanna) biome monitoring | [111] Object-based approach | Landsat |

© 2019 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kwan, C. Methods and Challenges Using Multispectral and Hyperspectral Images for Practical Change Detection Applications. Information 2019, 10, 353. https://doi.org/10.3390/info10110353
