Abstract

This article presents the data-driven equation-free modeling of the dynamics of a hexafloat floating offshore wind turbine based on the application of dynamic mode decomposition (DMD). The DMD has here been used (i) to extract knowledge from the dynamic system through its modal analysis, (ii) for short-term forecasting (nowcasting) from the knowledge of the immediate past of the system state, and (iii) for system identification and reduced-order modeling. All the analyses are performed on experimental data collected from an operating prototype. The nowcasting method for motions, accelerations, and forces acting on the floating system applies Hankel-DMD, a methodological extension that includes time-delayed copies of the states in an augmented state vector. The system identification task is performed by using Hankel-DMD with a control (Hankel-DMDc), which models the system as externally forced. The influence of the main hyperparameters of the methods is investigated with a full factorial analysis using error metrics analyzing complementary aspects of the prediction. A Bayesian extension of the Hankel-DMD and Hankel-DMDc is introduced by considering the hyperparameters as stochastic variables, enriching the predictions with uncertainty quantification. The results show the capability of the approaches for data-lean nowcasting and system identification, with computational costs being compatible with real-time applications. Accurate predictions are obtained up to 4 wave encounters for nowcasting and 20 wave encounters for system identification, suggesting the potential of the methods for real-time continuous-learning digital twinning and surrogate data-driven reduced-order modeling.

1. Introduction

In the effort to keep the global temperature increase below 2 °C above pre-industrial levels, as set by the Paris Agreement [1], most countries have committed to reaching the goal of net zero emissions by 2050, meaning that all their greenhouse gas emissions must be counterbalanced by an equal amount of removals from the atmosphere. To reach this critical and ambitious task for sustainable growth, the decarbonization of our society is a key aspect that passes through the decarbonization of energy production [2,3]. The shift from fossil fuels to renewable sources for power production is considered the fundamental step in the process. Power generation is, in fact, responsible for 30% of the global carbon dioxide emissions at the moment. In 2018, the European Union (EU) set intermediate targets of 20% of energy obtained from renewable resources by 2020 and 32% by 2030, and the latter has been raised to 42.5% (with the aspiration of reaching 45%) by amending the Renewable Energy Directive in 2021 [4]. Reaching the mentioned targets means almost doubling the existing share of renewable energy in the EU.

Wind energy technology has been identified as one of the most promising, along with photovoltaics, for power production from renewable sources. Several growth scenarios predict a prominent role of wind power, exceeding a 35% share of the total electricity demand by 2050 [5], which represents a nearly nine-fold rise in the wind power share in the total generation mix compared to 2016 levels. Offshore wind energy production has a bigger growth potential compared to its onshore counterpart. The reasons are connected to fewer technical, logistic, and social restrictions of the former. Offshore installed turbines may exploit abundant and more consistent winds, favored by the reduced friction of the sea surface and the absence of surrounding hills and buildings [6]. In addition, offshore wind farms benefit from greater social acceptance, a lower value of the occupied space, and the possibility of installing larger turbines with fewer transportation issues than onshore [7,8,9]. The 2023 Global Offshore Wind Report predicts the installation of more than 380 GW of offshore wind capacity worldwide in the next ten years [10].

The exponential growth of the sector passes through the possibility of realizing floating offshore plants, enabling the exploitation of sea areas with deeper water that make fixed-foundation turbines not a feasible/affordable solution (indicatively deeper than 60 m). The main advantage of exploiting deep offshore sea areas relies on the abundant and steady winds characterizing them. One of the main limiting factors in the reduction of the levelized cost of energy (LCOE) of advanced floating offshore wind turbines (FOWTs) is the current size and cost of their platforms. Its reduction, alongside the development of advanced moorings, improved control systems, and maintenance procedures, is among the most impacting technical goals research activities are focusing on.

The power production by FOWTs presents additional challenges compared to their fixed wind turbine counterpart (on- or offshore) which are inherent to their floating characteristic that adds six degrees of freedom to the structures. Nonlinear hydrodynamic loads, wave–current interactions, aero-hydrodynamics coupling producing negative aerodynamic damping, and wind-induced low-frequency rotations are among the main causes of large amplitude motions of the platform. These are in turn causes of reductions in the average power output of the power plant and increases in the fluctuations of the produced power. Both the quantity and quality of the power production are affected, and the structure and all the components (blades, cables, bearings, etc.) also suffer increased fatigue-induced wear from nonconstant loadings [11] (about 20% of operation and maintenance costs come from blade failures [12], and almost 70% of the gearbox downtime is due to bearing faults [13]). The floating wind turbine operations are considerably altered by the stochastic nature of the wind, waves, and currents in the sea environment, which excite platform motion, leading to uncertainties in structural loads and power extraction capability. As shown first in Jonkman [14], waves are responsible for a large part of the dynamic excitation of an FOWT. Rotor speed fluctuations are 60% larger when the same turbine is operated in a floating environment compared to onshore installation, and the difference has been shown to increase with increasing wave conditions. Therefore, it is essential to develop appropriate strategies to improve FOWTs’ platform stability and maximize their turbines’ energy conversion rate, achieving a better LCOE with higher power production and lower maintenance operation costs.

Both passive and active technologies have been studied and developed within this scope, such as tuned mass dampers [15,16,17] mounted on different floaters, ballasted buoyancy cans [18,19,20], gyro-stabilizers [21], and blade pitch and/or torque controllers [7,8,9,22,23]. Developing advanced control systems is a high-potential cost reduction strategy for offshore wind turbines that impacts multiple levels: effective control strategies may increase the energy production, which has a direct impact on the LCOE; a reduction in platform motions may help in reducing their sizes and costs; reduced vibratory loads on the turbine’s components help increase their lifetime and reduce maintenance costs. A thorough comparison of various controllers designed for managing vibratory loads is provided in Awada et al. [24]. Both feedback [7,8,25,26,27,28,29,30,31] and feedforward [32,33,34,35,36,37,38,39,40,41] control systems have been successfully developed for the effective control of FOWTs. The two philosophies may also be effectively coupled, creating a feedforward control with a feedback loop such as in Al et al. [42], where real-time prediction of the free-surface elevation was exploited to compensate for the wave disturbances on the FOWT. Feedback algorithms may take advantage of accurate models of the controlled systems for improved performance; at the same time, feedforward controllers rely on forecasting techniques to estimate upcoming disturbances or changes in the state to be controlled. Hence, the predictive algorithm plays a primary role in the success of the control strategy.

Although white- and gray-box models for the FOWT dynamics can be obtained [43,44], the modeling process can be extremely complex, and clearly representing the operational status of the turbine and platform under random meteorological conditions is not trivial. Recently, data-driven methods have proven to be a powerful alternative for the identification of dynamic systems and the forecasting of their response. In particular, advanced machine learning and deep learning algorithms have been successfully applied to predict FOWT motions and loads. Several examples can be found in the literature. A multilayer feedforward neural network was used in Wang et al. [45] to predict maximum blade and tower loadings and maximum mooring line tension using the wind speed, turbulence intensity, significant wave height, and spectral peak period as the model’s input parameters. Zhang et al. [46] built a data-driven prediction model for the FOWT output power, the platform pitch angle, and the blades’ flapwise moment at the root using wind speed, wave height, and blade pitch control variables as inputs by training a gated recurrent neural network (GRNN). The study in Barooni and Velioglu Sogut [47] adopted a convolutional neural network merged with a GRNN to forecast the dynamic behavior of FOWTs. Long short-term memory (LSTM) networks are the subject of the study in Gräfe et al. [48], where the fairlead tension, surge, and pitch motions were predicted with a data-driven method, including onboard sensor measurements and lidar inflow data. A self-attention method was integrated with LSTM in Deng et al. [49] to improve the accuracy in predicting the motion response of an FOWT in wind–wave coupled environments. The hybridization of LSTM with empirical mode decomposition (EMD) was studied in Ye et al. [50], where the neural network was used to predict the subcomponents of the EMD process for the short-term prediction of the motions of a semi-submersible platform, and in Song et al. [51], where the authors studied FOWT motion response prediction under different sea states.

Albeit powerful, machine learning and deep learning methods typically require large training datasets, comprising a range of operating conditions needing to be as complete as possible to learn patterns that generalize to new situations. In addition, the training of such algorithms can be computationally expensive and typically not compatible with real-time learning and digital twinning [52], where the system characteristics and responses to external perturbations also change as the system ages.

Dynamic mode decomposition (DMD) offers an interesting alternative for data-driven and equation-free modeling [53,54,55]. The method is based on the Koopman operator theory, an alternative formulation of the dynamical systems theory that provides a versatile framework for the data-driven study of high-dimensional nonlinear systems [56]. DMD builds a reduced-order linear model of a dynamical system, approximating the Koopman operator. The approach requires no specific knowledge or assumption about the system’s dynamics and can be applied to both empirical and simulated data. The model is obtained with a direct procedure from a small set of multidimensional input–output pairs, which constitutes, from a machine learning perspective, the training phase.

The literature features several methodological extensions of the original DMD algorithm aimed at improving the accuracy of the decomposition and, more generally, broadening the method’s capabilities. Particularly relevant to this study are the Hankel-DMD [55,56,57,58,59], the DMD with control (DMDc) [60,61], and their combination in the Hankel-DMD with control (Hankel-DMDc) [62,63]. The Hankel-DMD, also referred to as Augmented-DMD [59], Time Delay Coordinates Extended DMD [62], and Time Delay DMD [64], has proven to be a powerful tool for enhancing the linear model’s ability to capture significant features of nonlinear and chaotic dynamics. This is achieved by extending the system state with time-delayed copies, which, in the limit of infinite-time observations, yields the true Koopman eigenfunctions and eigenvalues [56]. For instance, it has been effectively used in Dylewsky et al. and Mohan et al. [64,65] to predict the short-term evolution of electric loads on the grid. The DMDc extends the DMD framework to handle externally forced systems, allowing for the separation of the system’s free response from the effects of external inputs. Notable applications include Al-Jiboory [66], wherein they developed a novel real-time control technique for unmanned aerial quadrotors using DMDc, enabling the control system to adapt promptly to environmental or system behavior change. Another example is Dawson et al. [67], where DMDc was applied to simulation data to create a reduced-order model (ROM) of the forces acting on a rapidly pitching airfoil. In Brunton et al. [62], the algorithmic variant combining control and state augmentation with time-delayed copies is called Time Delay Coordinates DMDc, and it was introduced using the same number of delayed copies for both the state and the input. Similarly, Zawacki and Abed [63] introduced the DMD with Input-Delayed Control, which, as the name suggests, includes time-delayed copies of the inputs only.

The data-driven nature, noniterative training process, and data efficiency of DMD have contributed to its widespread adoption as a reduced-order modeling technique and real-time forecasting tool in various fields. These include fluid dynamics and aeroacoustics [53,68,69,70,71], epidemiology [72], neuroscience [73], and finance [74], among others. Several studies have demonstrated the effectiveness of DMD in forecasting complex system behaviors in the marine environment, such as Diez et al. [75], where DMD was applied for the forecasting of ship trajectories, motions, and forces. Serani et al. [59] conducted a statistical evaluation of DMD’s predictive performance for naval applications, incorporating state augmentation techniques such as augmenting the system state with its derivatives and time-delayed copies. Diez et al. [76] provide a comparative analysis, for naval applications, of DMD-based prediction algorithms and various neural network architectures, including standard and bidirectional long short-term memory networks, gated recurrent units, and feedforward neural networks. Furthermore, Diez et al. [77] studied the hybridization of DMD with artificial neural networks to enhance prediction accuracy.

The objective of this paper is to propose the use of DMD and its variants to extract knowledge and forecast motions and loads, as well as perform system identification of an FOWT from experimental data. In particular, Hankel-DMD is used as a data-lean forecasting method, producing short-term forecasting (nowcasting) from the immediate past history of the system state, with a continuously learning data-driven reduced-order model suitable for digital twinning and real-time predictions. On the other hand, Hankel-DMDc is applied as an effective and efficient approach to model-free system identification, aiming to create an accurate ROM for the long-term prediction of the platform motions and loads from the knowledge of the wave elevation in the proximity of the platform and the wind speed. In this work, the nowcasting and system identification tasks are performed on experimentally measured data; however, the Hankel-DMD and Hankel-DMDc methods developed here also directly apply to different data sources such as simulations of various fidelity levels. The effect of the main hyperparameters of the methods on the predictions is studied with a full-factorial design of experiments, assessing the performance using three error metrics and identifying the most promising configurations. In addition, novel Bayesian extensions of Hankel-DMD and Hankel-DMDc are introduced to include uncertainty quantification in the methods’ predictions by considering their hyperparameters as stochastic variables. The stochastic hyperparameter variation ranges are identified after deterministic analyses, and the results from the deterministic and Bayesian methods are compared using the same test sequences.

The two approaches, nowcasting and system identification, are of paramount relevance for developing advanced controllers and digital twins for the FOWT, combining accuracy, adaptivity, and reliability (through uncertainty quantification), with computational costs compatible with real-time execution. The efficiency of the DMD-based methods stems from the small dimensionality of the relevant state variables and the low computational cost required for both the model construction (training) and exploitation (prediction), as opposed to more data- and resource-intensive machine learning methods.

The methods are applied to real-life measured data obtained by various sensors mounted on a scale prototype of a 5 MW Hexafloat FOWT, which is the first of its type. The experimental activity has been conducted as part of the National Research Project RdS-Electrical Energy from the Sea funded by the Italian Ministry for the Environment (MaSE) and coordinated by CNR-INM.

The paper is organized as follows. Section 2 presents the wind turbine test case, details the DMD methods applied, and introduces the performance metrics used to assess the predictive performances of the algorithms. The numerical setup and the data preprocessing are described in Section 4. Section 5 collects the results from the modal analysis and the forecasting of the quantities of interest obtained with the deterministic Hankel-DMD and its Bayesian version for nowcasting, and finally, conclusions about the conducted analyses are given in Section 6.

2. Material and Methods

2.1. Wind Turbine Test Case

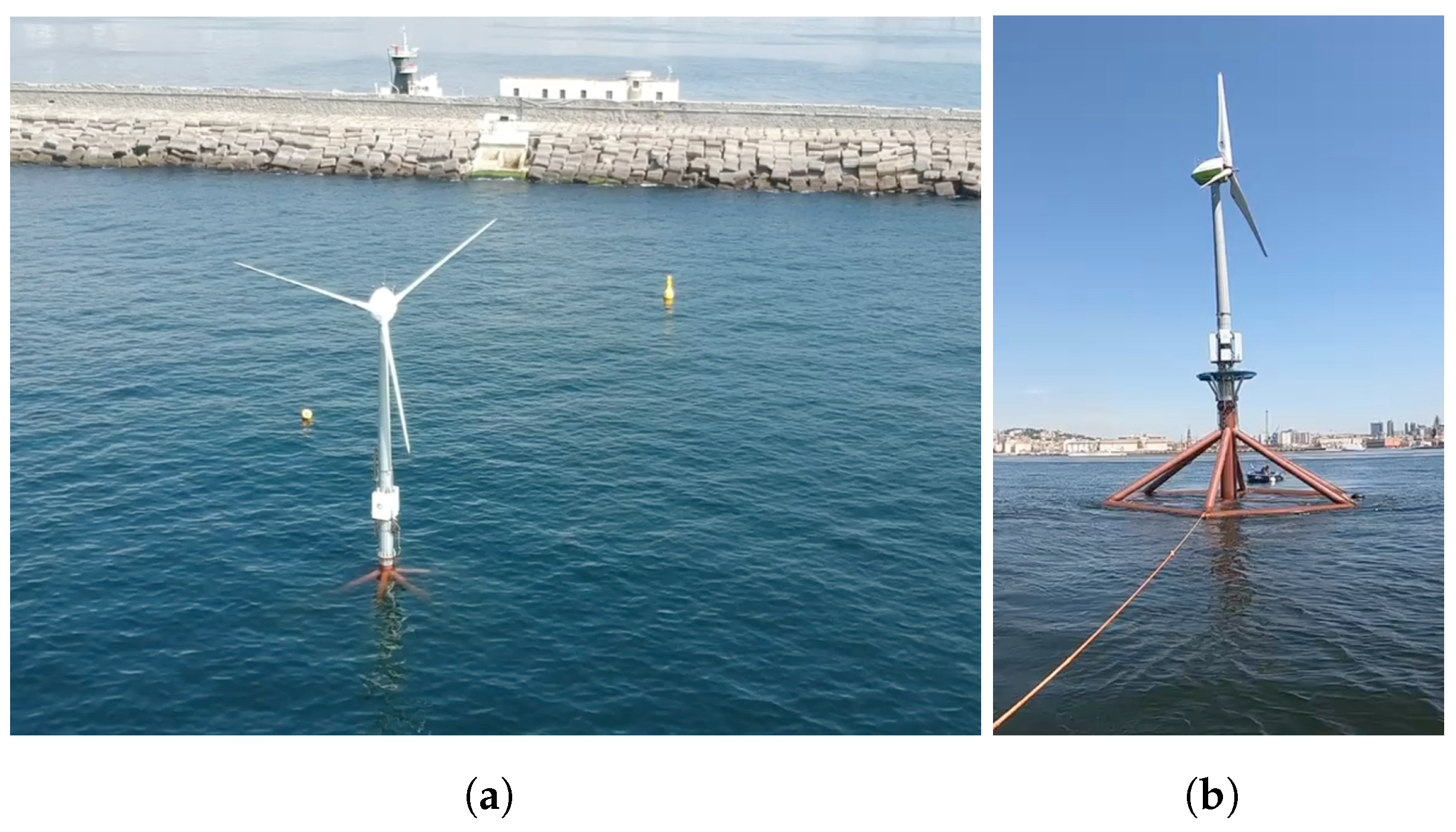

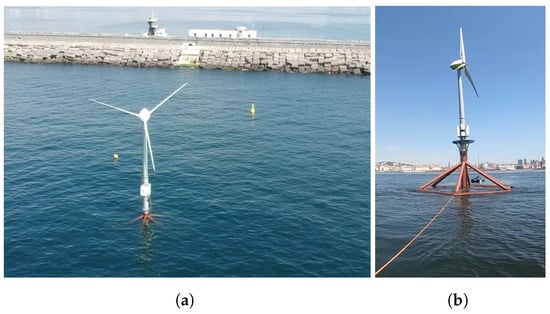

The presented analyses are conducted on a set of experimental data collected on the prototype of an FOWT built and tested at the Interdisciplinary Marine Renewable Energy Sea Lab (In-MaRELab); see Figure 1a.

Figure 1. (a) Aerial view of In-MaRELab with the Hexafloat FOWT during the tests at sea in 2021. (b) View of the Hexafloat FOWT during the towing stage from the shipyard to the test site in 2024.

The tests were conducted offshore the Naples port, right in front of the breakwater Molo San Vincenzo. The prototype and the experimental activity at sea are part of the National Research Project RdS-Electrical Energy from the Sea funded by the MaSE and coordinated by CNR-INM.

The FOWT is a 1:6.8 scale prototype of a 5 MW FOWT and is the first prototype of the Hexafloat concept deployed at sea. The floater is a Saipem patented lightweight semisub platform consisting of a hexagonal tubular steel structure around a central column and a deeper counterweight connected to the floater by six tendons (one for each corner of the hexagon) in synthetic material. The FOWT platform features a maximum outer diameter of 13 m, with a nondimensional draught-to-diameter ratio of 0.37.

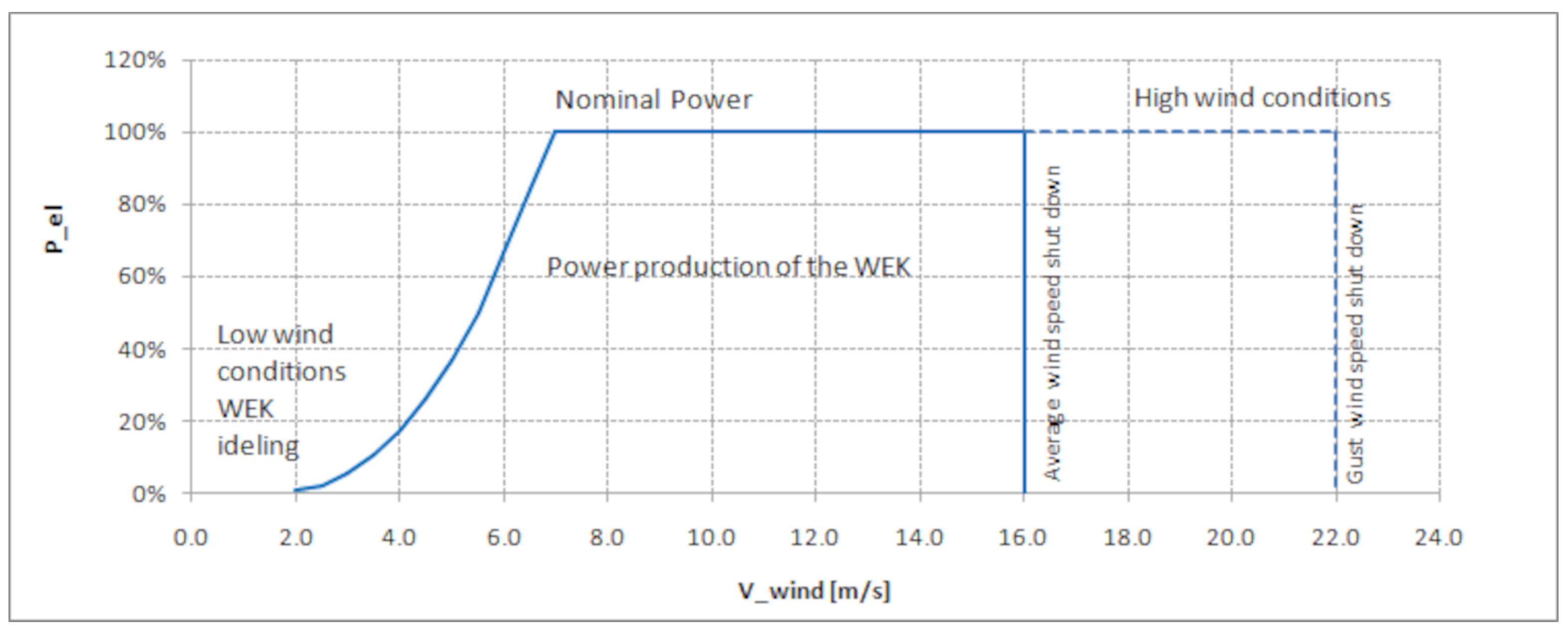

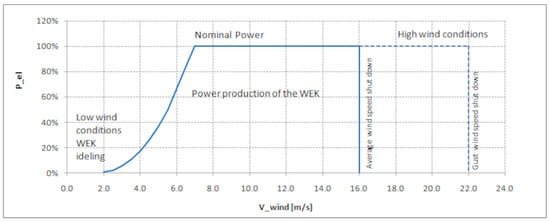

The floater hosts a Tozzi Nord TN535 10-kW wind turbine [78] originally designed for onshore application and, within the present research project, suitably modified in the electrical part for the specific aims of the offshore application (see Figure 1b). The turbine is characterized by a cut-in wind speed m/s, a rated wind speed m/s, and a cut-out wind speed m/s. Figure 2 shows the power curve of the TN535 10 kW wind turbine.

Figure 2. Tozzi Nord TN535 10 kW wind turbine power curve [78].

The FOWT was anchored with three drag anchors located at 30 m of water depth through three mooring lines, with ropes breaking load of about 3.3 tons, in catenary configuration at a relative angle of 120 degrees counterclockwise, with directed toward the breakwater and orthogonal to it. The whole structure, i.e., the floater and the turbine, weighs a total of approximately 11 tons.

The studied dataset includes 12-hour synchronized time histories of the following:

- The loads applied to one of the three moorings of the platform () and three of the six tendons connecting the counterweight to the floater (, , and ), as measured by a system of underwater load cells LCM5404 with work limit load (WLL) of tons and tons.

- The acceleration along three coordinate axes (, , ), the pitch and roll angles (, ), and the respective angular rates (, ) collected by a Norwegian Subsea MRU 3000 inertial motion unit.

- The power extracted by the wind turbine (P) estimated by a programmable logic controller (PLC) through a direct measure of the electrical quantities at the generator on board the nacelle of the wind turbine, the rotor angular velocity () measured by two sensors in continuous cross-check, and the relative wind speed () through two different anemometers positioned on the nacelle, behind the rotor. All signals were collected by the PLC on the nacelle with a variable but well-known sample frequency of approximately 1 Hz.

- The wave elevation () measured by a pressure transducer integrated into the Acoustic Doppler Current Profiler (ADCP) Teledyne Marine Sentinel V20, located at a distance of approximately 50 m from the FOWT in the southeast direction.

The observed state of the system is hence composed as , , , , , , , , , , , P, , , }.

The measured significant wave height in the considered time frame is in the order of m, and the wave peak period is approximately s. The data represent a period of continuous operation for the FOWT in extreme weather conditions for the Mediterranean Sea [79], which the FOWT is designed for: the full-scale significant wave height reaches approximately 8.75 m, and the full-scale wave peak period is about s. In such weather conditions, the system may exhibit strongly nonlinear dynamics. In particular, the intense wind causes a nonlinear behavior in the extracted power and in the blades’ rotating speed, which saturate at the maximum values supported by the machine. This places the DMD-based methods in a challenging condition for modal analysis and prediction.

An average incoming wave period is identified from the peak in the wave elevation spectrum and used in the following as reference period s ( Hz).
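As an illustration of how a reference period can be read from the peak of a wave elevation spectrum, the following is a minimal sketch using a raw periodogram. The function name `peak_period`, the 2 Hz sampling rate, and the synthetic 4 s wave are assumptions made for the example only; they are not the processing chain or the values used in this study.

```python
import numpy as np

def peak_period(eta, fs):
    """Period (s) at the maximum of a raw periodogram of eta, sampled at fs (Hz)."""
    eta = eta - eta.mean()                       # remove the mean level
    spec = np.abs(np.fft.rfft(eta)) ** 2         # one-sided power spectrum (unscaled)
    freqs = np.fft.rfftfreq(eta.size, d=1.0 / fs)
    k = 1 + np.argmax(spec[1:])                  # skip the zero-frequency bin
    return 1.0 / freqs[k]

# Synthetic check: a 4 s wave plus weak noise, sampled at a hypothetical 2 Hz.
rng = np.random.default_rng(0)
fs = 2.0
t = np.arange(2048) / fs
eta = np.sin(2 * np.pi * t / 4.0) + 0.1 * rng.standard_normal(t.size)
T_ref = peak_period(eta, fs)                     # reference period
f_ref = 1.0 / T_ref                              # reference frequency
```

For measured records, a smoothed estimate (for example, averaging periodograms over segments) would usually be preferred to the raw periodogram shown here.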

2.2. Dynamic Mode Decomposition

Dynamic mode decomposition was originally presented in Schmid and Sesterhenn [80] and Schmid [53] to identify spatiotemporal coherent structures from high-dimensional time series data, providing a linear reduced-order representation of possibly nonlinear system dynamics. Given a time series of data, the DMD computes a set of modes with their associated frequencies and decay/growth rates [62]. When the analyzed system is linear, the modes obtained by the DMD correspond to the system’s linear normal modes. The potential of the DMD in the analysis of nonlinear systems comes from its close relation to the spectral analysis of the Koopman operator [68]. The Koopman operator theory is built upon the original work in Koopman [81], defining the possibility of transforming a nonlinear dynamical system into a possibly infinite-dimensional linear system [61,82]. DMD is an equation-free data-driven approach that was shown by Rowley et al. [68] to be a computation of the Koopman operator for linear observables [83].

Its state-of-the-art definition has been given by Tu et al. [82] and is summarized in the following. Consider a dynamical system described by the following:

$$\frac{\mathrm{d}\mathbf{x}}{\mathrm{d}t} = \mathbf{f}(\mathbf{x}, t; \boldsymbol{\mu}), \tag{1}$$

where $\mathbf{x}(t) \in \mathbb{R}^{n}$ represents the system’s state at time $t$, $\boldsymbol{\mu}$ contains the parameters of the system, and $\mathbf{f}(\cdot)$ represents its dynamics, possibly including nonlinearities. Equation (1) can represent various types of systems, including discretized partial differential equations at some discrete spatial points or the evolution of multivariable time series. The DMD analysis approximates the eigenmodes and eigenvalues of the infinite-dimensional linear Koopman operator associated with the system $\mathbf{f}$, providing a locally (in time) linearized finite-dimensional representation of it ($\mathcal{A}$) based on observed data [54]:

$$\frac{\mathrm{d}\mathbf{x}}{\mathrm{d}t} = \mathcal{A}\mathbf{x}. \tag{2}$$

DMD makes no assumption of the underlying physics, i.e., it does not require knowledge of the system governing equations and considers the system as an unknown when extracting its data-driven model $\mathcal{A}$.

Once obtained, the DMD model can be used to forecast the system behavior. The solution of the approximated system can be expressed in terms of the $n$ eigenvalues $\omega_k$ and eigenvectors $\boldsymbol{\varphi}_k$ of the matrix $\mathcal{A}$ [62]:

$$\mathbf{x}(t) = \sum_{k=1}^{n} \boldsymbol{\varphi}_k \exp(\omega_k t)\, b_k, \tag{3}$$

where the coefficients $b_k$ define the coordinates of the initial condition $\mathbf{x}(0)$ in the eigenvector basis, $\mathbf{b} = \boldsymbol{\Phi}^{-1}\mathbf{x}(0)$, and $\boldsymbol{\Phi}$ is a matrix containing the eigenvectors $\boldsymbol{\varphi}_k$ columnwise.

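In practical applications, the state of the system is measured at $m$ discrete time steps and can be expressed as $\mathbf{x}_j = \mathbf{x}(j\Delta t)$, with $j = 1, \dots, m$. Consequently, the approximation can be written as follows:

$$\mathbf{x}_{j+1} = \mathbf{A}\mathbf{x}_j. \tag{4}$$

For each time step $j$, a snapshot of the system is defined as the column vector collecting the measured full state of the system $\mathbf{x}_j$. Two matrices, $\mathbf{X}$ and $\mathbf{X}'$, can be obtained by arranging the available snapshots as follows:

$$\mathbf{X} = \begin{bmatrix} \mathbf{x}_1 & \mathbf{x}_2 & \cdots & \mathbf{x}_{m-1} \end{bmatrix}, \qquad \mathbf{X}' = \begin{bmatrix} \mathbf{x}_2 & \mathbf{x}_3 & \cdots & \mathbf{x}_{m} \end{bmatrix}, \tag{5}$$

such that Equation (4) may be written in terms of these data matrices:

$$\mathbf{X}' \approx \mathbf{A}\mathbf{X}. \tag{6}$$

Hence, the matrix $\mathbf{A}$ can be constructed using the following approximation

$$\mathbf{A} \approx \mathbf{X}'\mathbf{X}^{\dagger}, \tag{7}$$

where $\mathbf{X}^{\dagger}$ is the Moore–Penrose pseudoinverse of $\mathbf{X}$, which minimizes $\|\mathbf{X}' - \mathbf{A}\mathbf{X}\|_F$, where $\|\cdot\|_F$ is the Frobenius norm.

The evaluation of the matrix $\mathbf{A}$, drawing a parallel with other machine learning techniques, practically constitutes the training or learning phase of the method, which is performed in a fast and direct way, i.e., with no need for iterative processes.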

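In order to evaluate the DMD modes $\boldsymbol{\varphi}_k$ and frequencies $\omega_k$, the exact DMD algorithm is applied as described in [82]. The pseudoinverse of $\mathbf{X}$ can be efficiently evaluated using singular value decomposition (SVD) as

$$\mathbf{X}^{\dagger} = \mathbf{V}\boldsymbol{\Sigma}^{-1}\mathbf{U}^{*}, \tag{8}$$

where $^{*}$ denotes the complex conjugate transpose. Due to the low dimensionality of data in the current context, Equation (8) is computed using the full SVD, with no rank truncation. The matrix $\tilde{\mathbf{A}}$ is evaluated by projecting $\mathbf{A}$ onto the proper orthogonal decomposition (POD) modes in $\mathbf{U}$ as

$$\tilde{\mathbf{A}} = \mathbf{U}^{*}\mathbf{A}\mathbf{U} = \mathbf{U}^{*}\mathbf{X}'\mathbf{V}\boldsymbol{\Sigma}^{-1}, \tag{9}$$

and its spectral decomposition can be evaluated as

$$\tilde{\mathbf{A}}\mathbf{W} = \mathbf{W}\boldsymbol{\Lambda}. \tag{10}$$

The diagonal matrix $\boldsymbol{\Lambda}$ contains the DMD eigenvalues $\lambda_k$, while the DMD modes $\boldsymbol{\varphi}_k$ constituting the matrix $\boldsymbol{\Phi}$ are then reconstructed using the eigenvectors $\mathbf{W}$ and the time-shifted data matrix $\mathbf{X}'$:

$$\boldsymbol{\Phi} = \mathbf{X}'\mathbf{V}\boldsymbol{\Sigma}^{-1}\mathbf{W}. \tag{11}$$

The state-variable evolution in time can be approximated by the modal expansion of Equation (3), where $\omega_k = \ln(\lambda_k)/\Delta t$, starting from an initial condition corresponding to the end of the measured data, $\mathbf{b} = \boldsymbol{\Phi}^{-1}\mathbf{x}_m$.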
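The exact DMD steps described in this section (data matrices, SVD-based pseudoinverse, projected matrix, eigendecomposition, mode reconstruction, and modal expansion from the last measured snapshot) can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the function names `exact_dmd` and `dmd_forecast` and the damped-oscillator test signal are assumptions chosen for the example.

```python
import numpy as np

def exact_dmd(snapshots, dt):
    """Exact DMD of a snapshot matrix (n states x m time steps), no rank truncation."""
    X, Xp = snapshots[:, :-1], snapshots[:, 1:]       # X and time-shifted X'
    U, s, Vh = np.linalg.svd(X, full_matrices=False)  # X = U diag(s) V*
    V_Sinv = Vh.conj().T / s                          # V Sigma^{-1}
    A_tilde = U.conj().T @ Xp @ V_Sinv                # A projected onto the POD modes
    lam, W = np.linalg.eig(A_tilde)                   # A_tilde W = W Lambda
    Phi = Xp @ V_Sinv @ W                             # DMD modes
    omega = np.log(lam.astype(complex)) / dt          # continuous-time eigenvalues
    return Phi, lam, omega

def dmd_forecast(Phi, omega, x_last, t):
    """Modal expansion started from the last measured snapshot (t = 0 there)."""
    b = np.linalg.pinv(Phi) @ x_last                  # initial condition in the mode basis
    return (Phi @ (np.exp(np.outer(omega, t)) * b[:, None])).real

# Hypothetical test signal: a damped oscillator, which is exactly linear.
dt = 0.1
t = dt * np.arange(71)
states = np.exp(-0.1 * t) * np.vstack([np.cos(2 * t), np.sin(2 * t)])
train = states[:, :51]                                # observed past
Phi, lam, omega = exact_dmd(train, dt)
t_future = dt * np.arange(1, 21)
pred = dmd_forecast(Phi, omega, train[:, -1], t_future)
truth = states[:, 51:71]
```

Because the test system is linear and noise-free, the forecast reproduces the true continuation to numerical precision; with measured, nonlinear data, the plain DMD model is only a local approximation.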

2.3. Dynamic Mode Decomposition with Control

The original DMD characterizes naturally evolving dynamical systems. The DMDc aims at extending the algorithm to include in the analysis the influence of forcing inputs and disambiguate it from the unforced dynamics of the system [60]. While DMD is considered in this work for the nowcasting task, DMDc is considered more suitable for the system identification task, obtaining a reduced-order model for the prediction of FOWT platform motions and loads forced by two control variables, namely, wind velocity and wave elevation.

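The DMDc formulation can be obtained from a forced dynamic system:

$$\frac{\mathrm{d}\mathbf{x}}{\mathrm{d}t} = \mathbf{f}(\mathbf{x}, \mathbf{u}, t; \boldsymbol{\mu}), \tag{12}$$

similarly to Equation (1) and now including the forcing input $\mathbf{u}$ in the nonlinear mapping describing the evolution of the state. DMDc modeling approximates Equation (12) as

$$\mathbf{x}_{j+1} = \mathbf{A}\mathbf{x}_j + \mathbf{B}\mathbf{u}_j, \tag{13}$$

where $\mathbf{u}_j \in \mathbb{R}^{l}$, and the discrete time matrix $\mathbf{B} \in \mathbb{R}^{n \times l}$.

Introducing the vector $\mathbf{y}_j$ as

$$\mathbf{y}_j = \begin{bmatrix} \mathbf{x}_j \\ \mathbf{u}_j \end{bmatrix}, \tag{14}$$

Equation (13) can be rewritten in a form close to Equation (4):

$$\mathbf{x}_{j+1} = \mathbf{G}\mathbf{y}_j, \qquad \mathbf{G} = \begin{bmatrix} \mathbf{A} & \mathbf{B} \end{bmatrix}. \tag{15}$$

The collected data are arranged in the matrices $\mathbf{Y}$ and $\mathbf{X}'$ as follows:

$$\mathbf{Y} = \begin{bmatrix} \mathbf{X} \\ \boldsymbol{\Upsilon} \end{bmatrix} = \begin{bmatrix} \mathbf{x}_1 & \mathbf{x}_2 & \cdots & \mathbf{x}_{m-1} \\ \mathbf{u}_1 & \mathbf{u}_2 & \cdots & \mathbf{u}_{m-1} \end{bmatrix}, \qquad \mathbf{X}' = \begin{bmatrix} \mathbf{x}_2 & \mathbf{x}_3 & \cdots & \mathbf{x}_{m} \end{bmatrix}, \tag{16}$$

and the DMD approximation of the matrix $\mathbf{G}$ can be obtained from

$$\mathbf{G} \approx \mathbf{X}'\mathbf{Y}^{\dagger}, \tag{17}$$

where $\mathbf{Y}^{\dagger}$ is the Moore–Penrose pseudoinverse of $\mathbf{Y}$, which minimizes $\|\mathbf{X}' - \mathbf{G}\mathbf{Y}\|_F$. In this way, the matrices $\mathbf{A}$ and $\mathbf{B}$ are obtained as the ones providing the best fitting of the sampled data in the least squares sense. The pseudoinverse of $\mathbf{Y}$ can be again efficiently evaluated using the singular value decomposition (SVD)

$$\mathbf{Y}^{\dagger} = \mathbf{V}\boldsymbol{\Sigma}^{-1}\mathbf{U}^{*}. \tag{18}$$

The DMD approximations of the matrices $\mathbf{A}$ and $\mathbf{B}$ are obtained by splitting the operator $\mathbf{U}$ in $\mathbf{U}_1$ and $\mathbf{U}_2$ such that $\mathbf{G}$ becomes

$$\begin{bmatrix} \mathbf{A} & \mathbf{B} \end{bmatrix} \approx \begin{bmatrix} \mathbf{X}'\mathbf{V}\boldsymbol{\Sigma}^{-1}\mathbf{U}_1^{*} & \mathbf{X}'\mathbf{V}\boldsymbol{\Sigma}^{-1}\mathbf{U}_2^{*} \end{bmatrix}. \tag{19}$$

Due to the low dimensionality of the data in the current context, the pseudoinverse was computed using the full SVD with no rank truncation. Otherwise, truncating the SVD to rank $p$, one would have $\mathbf{U}_1 \in \mathbb{R}^{n \times p}$ and $\mathbf{U}_2 \in \mathbb{R}^{l \times p}$.

Once the DMDc approximations of $\mathbf{A}$ and $\mathbf{B}$ are obtained, the system dynamics can be predicted with Equation (13) with the given initial conditions $\mathbf{x}_0$ and the sequence of inputs $\mathbf{u}_j$.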
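The DMDc procedure described in this section can be sketched as a least-squares fit of the stacked state/input operator followed by a time-marching prediction. The sketch below is illustrative only: the function names `dmdc_fit` and `dmdc_predict`, the matrices `A_true` and `B_true`, and the random input are assumptions used to check that a known forced linear system is recovered.

```python
import numpy as np

def dmdc_fit(X, Xp, U_in):
    """Least-squares [A B] from states X, shifted states X', and inputs (column-wise)."""
    n = X.shape[0]
    Y = np.vstack([X, U_in])                  # stacked state/input snapshots
    G = Xp @ np.linalg.pinv(Y)                # pinv is evaluated through the SVD
    return G[:, :n], G[:, n:]                 # split the operator into A and B

def dmdc_predict(A, B, x0, inputs):
    """March x_{j+1} = A x_j + B u_j over the given input sequence."""
    x, out = x0, []
    for u in inputs.T:
        x = A @ x + B @ u
        out.append(x)
    return np.array(out).T

# Hypothetical forced linear system used only to verify the fit.
rng = np.random.default_rng(0)
A_true = np.array([[0.9, 0.2], [-0.2, 0.9]])
B_true = np.array([[0.0], [0.5]])
u = rng.standard_normal((1, 200))
x = np.zeros((2, 201))
x[:, 0] = [1.0, 0.0]
for j in range(200):
    x[:, j + 1] = A_true @ x[:, j] + B_true @ u[:, j]

A, B = dmdc_fit(x[:, :100], x[:, 1:101], u[:, :100])   # train on the first half
pred = dmdc_predict(A, B, x[:, 100], u[:, 100:200])    # predict the second half
truth = x[:, 101:201]
```

In this noise-free linear example the identified matrices coincide with the true ones, so the prediction driven by the known inputs stays accurate over the whole test window.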

2.4. Hankel Extension to DMD and DMDc

The standard DMD and DMDc formulations approximate the Koopman operator based on linear measurements, creating a best-fit linear model linking sequential data snapshots [53,54,84]. In this way, DMD provides a locally linear representation of the dynamics that is unable to capture many essential features of nonlinear systems. The augmentation of the system state is thus the subject of several DMD algorithmic variants [62,85,86,87], aiming to find a coordinate system (or embedding) that spans a Koopman-invariant subspace to search for an approximation of the Koopman operator that is valid also far from fixed points and periodic orbits in a larger space. The need for state augmentation through additional observables is even more critical for applications in which the number of states in the system is small, typically smaller than the number of available snapshots, such as the case at hand. However, there is no general rule for defining these observables and guaranteeing they will form a closed subspace under the Koopman operator [88].

The Hankel-DMD [56] is a specific version of the DMD algorithm that has been developed to deal with the cases of nonlinear systems in which only partial observations are available such that there are latent variables [62]. The state vector is thus augmented, embedding $s$ time-delayed copies of the original variables. The Hankel-DMD with control (Hankel-DMDc) involves, in addition, the augmentation of the input vector with $z$ time-delayed copies of the original forcing inputs. This results in an intrinsic coordinate system that forms an invariant subspace of the Koopman operator (the time delays form a set of observable functions that span a finite-dimensional subspace of Hilbert space, in which the Koopman operator preserves the structure of the system [57,89]). The use of time-delayed copies as additional observables in the DMD has been connected to the Koopman operator as a universal linearizing basis [57], yielding the true Koopman eigenfunctions and eigenvalues in the limit of infinite-time observations [56].

Incorporating time-lagged information in the data used to learn the model, Hankel-DMD and Hankel-DMDc increase the dimensionality of the system, allowing the algorithm to represent a richer phase space of the system, which is essential for capturing nonlinear dynamics of the original variables, even though the underlying DMD algorithm remains linear. In other words, including time-delayed data in the analysis, the Hankel-DMD and Hankel-DMDc can extract linear modes spanning a space of augmented dimensionality that are able to reflect the nonlinearities in the time evolution of the original system through complex relations between present and past states. Hence, the Hankel augmented DMD variants can better represent the underlying dynamics of the systems allowing the capture of their important nonlinear features.

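The formulation of the Hankel-DMD can be obtained starting from the DMD presented in Section 2.2. The dynamical system is approximated by Hankel-DMD as

$$\hat{\mathbf{x}}_{j+1} = \hat{\mathbf{A}}\hat{\mathbf{x}}_j, \tag{20}$$

where the augmented state vector is defined as $\hat{\mathbf{x}}_j = [\mathbf{x}_j^{T}, \mathbf{x}_{j-1}^{T}, \dots, \mathbf{x}_{j-s}^{T}]^{T}$. Two augmented data matrices $\hat{\mathbf{X}}$ and $\hat{\mathbf{X}}'$ are built as follows:

$$\hat{\mathbf{X}} = \begin{bmatrix} \mathbf{X} \\ \mathbf{S} \end{bmatrix}, \qquad \hat{\mathbf{X}}' = \begin{bmatrix} \mathbf{X}' \\ \mathbf{S}' \end{bmatrix}, \tag{21}$$

where the Hankel matrices $\mathbf{S}$ and $\mathbf{S}'$ are

$$\mathbf{S} = \begin{bmatrix} \mathbf{x}_{0} & \mathbf{x}_{1} & \cdots & \mathbf{x}_{m-2} \\ \mathbf{x}_{-1} & \mathbf{x}_{0} & \cdots & \mathbf{x}_{m-3} \\ \vdots & \vdots & \ddots & \vdots \\ \mathbf{x}_{1-s} & \mathbf{x}_{2-s} & \cdots & \mathbf{x}_{m-s-1} \end{bmatrix}, \qquad \mathbf{S}' = \begin{bmatrix} \mathbf{x}_{1} & \mathbf{x}_{2} & \cdots & \mathbf{x}_{m-1} \\ \mathbf{x}_{0} & \mathbf{x}_{1} & \cdots & \mathbf{x}_{m-2} \\ \vdots & \vdots & \ddots & \vdots \\ \mathbf{x}_{2-s} & \mathbf{x}_{3-s} & \cdots & \mathbf{x}_{m-s} \end{bmatrix}, \tag{22}$$

with nonpositive indices denoting the samples that precede the first snapshot. In this way, the augmented system matrix $\hat{\mathbf{A}}$ is approximated with

$$\hat{\mathbf{A}} \approx \hat{\mathbf{X}}'\hat{\mathbf{X}}^{\dagger}. \tag{23}$$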

Following the exact DMD procedure [82] as described in Section 2.2, in order to evaluate the Hankel-DMD modes and frequencies , the pseudoinverse of is obtained with SVD as

and a matrix is calculated as

The spectral decomposition of is evaluated as follows:

The diagonal matrix contains the Hankel-DMD eigenvalues , while the Hankel-DMD eigenvectors constituting the matrix are then reconstructed using the eigenvectors of the matrix and the time-shifted data matrix :

The time evolution of the augmented state variables can be finally evaluated by the following modal expansion:

where , and the coefficients are the coordinates of the augmented initial condition for the prediction in the eigenvector basis .

The prediction of the time evolution of the original state variables is finally extracted from the augmented state vector by isolating its first n components.
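The steps described above (delay embedding, SVD-based pseudoinverse, spectral decomposition, modal expansion, and extraction of the first n components) can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names, the rank-truncation threshold, and the discrete-time form of the modal expansion (powers of the eigenvalues instead of continuous-time frequencies) are assumptions made for illustration.

```python
import numpy as np

def hankel_matrix(X, n_delays):
    """Stack each snapshot with n_delays older copies (newest block first).

    X has shape (n_vars, n_snapshots); the output has shape
    (n_vars * (n_delays + 1), n_snapshots - n_delays).
    """
    cols = X.shape[1] - n_delays
    return np.vstack([X[:, n_delays - d : n_delays - d + cols]
                      for d in range(n_delays + 1)])

def hankel_dmd_fit(X, n_delays, rank=None):
    """Exact-DMD procedure applied to the delay-augmented snapshots."""
    H = hankel_matrix(X, n_delays)
    H0, H1 = H[:, :-1], H[:, 1:]              # augmented data and its time shift
    U, s, Vh = np.linalg.svd(H0, full_matrices=False)
    if rank is None:                          # assumed truncation rule
        rank = int(np.sum(s > 1e-10 * s[0]))
    U, s, Vh = U[:, :rank], s[:rank], Vh[:rank]
    H1_V_Sinv = H1 @ Vh.conj().T / s          # H1 V S^{-1}
    A_tilde = U.conj().T @ H1_V_Sinv          # reduced operator
    eigvals, W = np.linalg.eig(A_tilde)
    modes = H1_V_Sinv @ W                     # modes rebuilt from shifted data
    return eigvals, modes, H[:, -1]           # last augmented state = initial condition

def hankel_dmd_predict(eigvals, modes, z0, n_steps, n_vars):
    """Modal expansion in discrete time; returns the first n_vars components."""
    b = np.linalg.lstsq(modes, z0.astype(complex), rcond=None)[0]
    k = np.arange(1, n_steps + 1)
    Z = (modes @ (b[:, None] * eigvals[:, None] ** k)).real
    return Z[:n_vars]

# Toy example: one observed variable made of two harmonics.
t = np.arange(260)
x = (np.sin(0.3 * t) + 0.5 * np.cos(0.7 * t))[None, :]
eigvals, modes, z0 = hankel_dmd_fit(x[:, :200], n_delays=10)
x_pred = hankel_dmd_predict(eigvals, modes, z0, n_steps=60, n_vars=1)
```

In this toy case a single observed signal cannot be described by a linear model of dimension one, while the delay-augmented state recovers the four eigenvalues associated with the two harmonics.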

The augmentation of the system state with its delayed copies can be similarly applied to DMDc, modifying the formulation presented in Section 2.3. In this case, not only the state but also the input vector is extended with time-shifted copies, leading to the following representation of the dynamic system:

where follows the definition given for the Hankel-DMD, and is the extended input vector, including the z delayed copies, and is the augmented system input matrix. In addition to , the augmented data matrix is defined as

with the matrix showing a Hankel structure defined as

The augmented matrix is approximated in Hankel-DMDc as

The pseudoinverse of is evaluated by SVD as , leading to

The Hankel-DMDc approximations of the matrices and are obtained by splitting the operator into and such that . Equation (29) is hence used to obtain the time evolution of the augmented state vector, and finally, by isolating its first n components, the predicted time evolution of the original state variables is extracted.
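A compact sketch of the Hankel-DMDc identification and prediction is given below, assuming a plain least-squares solution through the pseudoinverse of the stacked state/input data matrix. Function and variable names, the alignment of the two delay embeddings, and the toy system are illustrative assumptions, not the authors' code.

```python
import numpy as np

def delay_embed(X, n_delays, n_cols, last):
    """Columns [x_j; x_{j-1}; ...; x_{j-n_delays}] for the n_cols indices ending at `last`."""
    first = last - n_cols + 1
    return np.vstack([X[:, first - d : last - d + 1] for d in range(n_delays + 1)])

def hankel_dmdc_fit(X, U, s, z):
    """Identify A and B in z_{k+1} = A z_k + B v_k from delay-augmented data."""
    m = X.shape[1]
    n_cols = m - 1 - max(s, z)                 # columns with full delay history
    Z0 = delay_embed(X, s, n_cols, m - 2)      # augmented states
    Z1 = delay_embed(X, s, n_cols, m - 1)      # time-shifted augmented states
    V0 = delay_embed(U, z, n_cols, m - 2)      # augmented inputs
    G = Z1 @ np.linalg.pinv(np.vstack([Z0, V0]))
    return G[:, : Z0.shape[0]], G[:, Z0.shape[0]:]   # split into A and B

def hankel_dmdc_predict(A, B, X_init, U_all, z):
    """X_init: last s+1 states (oldest first); U_all: inputs from z steps
    before the start up to one step before the end of the horizon."""
    n = X_init.shape[0]
    zk = X_init[:, ::-1].T.reshape(-1)         # newest block first
    n_steps = U_all.shape[1] - z
    out = np.empty((n, n_steps))
    for k in range(n_steps):
        vk = U_all[:, k : k + z + 1][:, ::-1].T.reshape(-1)
        zk = A @ zk + B @ vk                   # advance the augmented state
        out[:, k] = zk[:n]                     # keep the original variables
    return out

# Toy forced system with a hidden state: only the first component is observed.
rng = np.random.default_rng(0)
F, g = np.array([[0.9, 0.2], [-0.2, 0.9]]), np.array([0.0, 1.0])
u = rng.standard_normal((1, 400))
h = np.zeros((2, 400))
for k in range(399):
    h[:, k + 1] = F @ h[:, k] + g * u[0, k]
y = h[:1]

A, B = hankel_dmdc_fit(y[:, :300], u[:, :300], s=1, z=1)
y_pred = hankel_dmdc_predict(A, B, y[:, 298:300], u[:, 298:399], z=1)
```

The prediction needs the input at every step of the horizon, whereas the states are only required to initialize the augmented vector.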

2.5. Bayesian Extension to Hankel-DMD and Hankel-DMDc

The Bayesian extension is introduced to incorporate uncertainty quantification in the analyses, adding confidence intervals to the predictions coming from the numerical methods. Its definition starts by noting that the dimensions and the values within matrices and depend on three hyperparameters of the algorithms: the observation time length, and the maximum delay time in the augmented state for Hankel-DMD, with the addition of the maximum delay time in the augmented input for Hankel-DMDc.

These dependencies can be denoted as follows:

In the Bayesian formulations, the hyperparameters are considered stochastic variables with given probability density functions , , and , introducing uncertainty in the process. Through uncertainty propagation, the solution also depends on , , and, in Bayesian Hankel-DMDc, . At a given time t, the expected value of the solution and its standard deviation can be expressed for the Bayesian Hankel-DMD as

and, for the Bayesian Hankel-DMDc, as

where , , and and , , and are lower and upper bounds for , , and .

In practice, a uniform probability density function is assigned to the hyperparameters, and a set of realizations is obtained through a Monte Carlo sampling. Accordingly, for each realization of the hyperparameters, the solution or is computed, and at a given time t, the expected value and standard deviation of the solution are then evaluated.
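The Monte Carlo procedure can be sketched as follows for the Hankel-DMD case. The deterministic forecaster used here is a simplified least-squares stand-in with a truncated pseudoinverse, and the hyperparameter bounds, sample count, and independent sampling of the two hyperparameters are hypothetical choices for illustration only.

```python
import numpy as np

def hankel_dmd_forecast(x, n_train, n_delays, n_steps):
    """Simplified deterministic forecast of a 1-D series from its last n_train samples."""
    x_tr = x[-n_train:]
    cols = n_train - n_delays
    H = np.vstack([x_tr[n_delays - d : n_delays - d + cols]
                   for d in range(n_delays + 1)])
    # Truncated pseudoinverse (assumed tolerance) to discard round-off directions.
    A = H[:, 1:] @ np.linalg.pinv(H[:, :-1], rcond=1e-8)
    z = H[:, -1]
    out = np.empty(n_steps)
    for k in range(n_steps):
        z = A @ z
        out[k] = z[0]                          # current value of the original variable
    return out

def bayesian_forecast(x, n_steps, train_bounds, delay_bounds, n_samples=100, seed=0):
    """Sample uniform hyperparameters and return expected value and standard deviation."""
    rng = np.random.default_rng(seed)
    preds = np.empty((n_samples, n_steps))
    for i in range(n_samples):
        n_train = int(rng.uniform(*train_bounds))    # integer part of the realization
        n_delays = int(rng.uniform(*delay_bounds))
        preds[i] = hankel_dmd_forecast(x, n_train, n_delays, n_steps)
    return preds.mean(axis=0), preds.std(axis=0)

t = np.arange(340)
x = np.sin(0.3 * t) + 0.5 * np.cos(0.7 * t)
mean, std = bayesian_forecast(x[:300], n_steps=40,
                              train_bounds=(80, 160), delay_bounds=(10, 30))
```

Each realization is independent of the others, so the loop over the samples could be distributed over parallel workers without changing the result.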

3. Performance Metrics

To evaluate the predictions made by the models and to compare the effectiveness of different configurations, three error indices are employed: the normalized root mean square error (NRMSE) [76], the normalized average minimum/maximum absolute error (NAMMAE) [76], and the Jensen-Shannon divergence (JSD) [90]. All the metrics are averaged over the variables that constitute the system’s state, providing a holistic assessment of the prediction accuracy. This comprehensive evaluation considers aspects such as overall error, the range, and the statistical similarity of predicted versus measured values.

The NRMSE quantifies the average root mean square error between the predicted values and the measured (test) values at different time steps. It is calculated by taking the square root of the average squared differences, which is normalized by k times the standard deviation of the measured values:

where N is the number of variables in the predicted state, is the number of considered time instants, and is the standard deviation of the measured values in the considered time window for the variable .

The NAMMAE metric, introduced in Diez et al. [76,77], provides an engineering-oriented assessment of the prediction accuracy. It measures the absolute difference between the minimum and maximum values of the predicted and measured time series that is normalized by k times the standard deviation of the measured values as follows:

Lastly, the JSD measures the similarity between the probability distributions of the predicted and reference signal [90]. For each variable, it estimates the entropy of the predicted time series probability density function Q relative to the probability density function of the measured time series R, where M is the average of the two [91].

The JSD is based on the Kullback–Leibler divergence D, given by Equation (43), which is the expectation of the logarithmic difference between the probabilities K and H that are both defined over the domain , where the expectation is taken using the probabilities K [92]. The similarity between the distributions is higher when the Jensen–Shannon divergence is closer to zero. The JSD is upper bounded by ln(2).
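A minimal sketch of the JSD for one variable is reported below. It assumes that the probability distributions are estimated with histograms on a common set of bins; the estimator and the number of bins are illustrative choices, not necessarily those used in this work.

```python
import numpy as np

def kl_divergence(p, q):
    """Kullback-Leibler divergence between discrete distributions (natural log)."""
    mask = p > 0                               # terms with p = 0 contribute zero
    return float(np.sum(p[mask] * np.log(p[mask] / q[mask])))

def jsd(pred, ref, bins=50):
    """Jensen-Shannon divergence between predicted and reference time series."""
    lo = min(pred.min(), ref.min())
    hi = max(pred.max(), ref.max())
    q, _ = np.histogram(pred, bins=bins, range=(lo, hi))
    r, _ = np.histogram(ref, bins=bins, range=(lo, hi))
    q, r = q / q.sum(), r / r.sum()
    m = 0.5 * (q + r)                          # average distribution M
    return 0.5 * kl_divergence(q, m) + 0.5 * kl_divergence(r, m)

t = np.linspace(0.0, 20.0 * np.pi, 20000, endpoint=False)
jsd_same = jsd(np.sin(t), np.sin(t))               # identical signals
jsd_shifted = jsd(np.sin(t), np.cos(t))            # same signal, phase shifted
jsd_disjoint = jsd(np.zeros(100), np.ones(100))    # non-overlapping distributions
```

The phase-shifted pair yields a value close to zero, since the two signals visit each amplitude level a similar number of times, while non-overlapping distributions reach the upper bound ln(2).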

Each of the three indices contributes to the error assessment with its peculiarity, providing a holistic assessment of prediction accuracy:

- The NRMSE evidences phase, frequency, and amplitude errors between the reference and the predicted signal, evaluating a pointwise difference between the two. However, it is not possible to discern between the three types of error and to what extent each type contributes to the overall value.

- The NAMMAE indicates if the prediction varies in the same range of the original signal, but it does not give any hint about the phase or frequency similarity of the two.

- The JSD index is ineffective in detecting phase errors between the predicted and the reference signals and is scarcely able to detect infrequent but large amplitude errors. Instead, it highlights whether the compared time histories assume each value in their range of variation a similar number of times. Hence, it is sensitive to errors in the frequency and trend of the predicted signal.

An example of synergic use of the three is given for the case of a prediction that rapidly goes to zero and evolves with an overly small amplitude. In this case, the NRMSE has a subtle behavior that may mislead the interpretation of the results if used alone: using the definition in Equation (39), the NRMSE would be close to an eighth of the standard deviation of the observed signal. This may be lower than or comparable to the error obtained with a prediction that captures the trend of the observed time history but with a small phase shift, and it may be misleading regarding the real capability of the algorithm at hand. Assessment of the NAMMAE and JSD would help to discriminate the mentioned situation, as those metrics tackle different aspects of the prediction.

An additional time-resolved error index is considered, evaluating the time evolution of the square root of the squared difference between the reference and predicted signals, averaged over the variables and normalized by k times the standard deviation of the measured values:

The index is used to investigate the progression of the prediction error in the test time frame and identify possible trends.

The value of k in Equations (39), (40) and (44) is set to 10, indicating a normalizing interval of ±5σ that corresponds to a coverage of at least 96% according to Chebyshev’s inequality.
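The NRMSE, the NAMMAE, and the time-resolved error can be sketched as follows, together with the Chebyshev coverage implied by k = 10. The formulas are reconstructed from the verbal descriptions of the metrics; in particular, the factor 1/2 that averages the minimum and maximum errors in the NAMMAE is an assumption.

```python
import numpy as np

K = 10  # normalization factor, i.e., an interval of +/- (K / 2) standard deviations

def nrmse(pred, ref, k=K):
    """Root mean square error per variable, normalized by k*sigma, then averaged."""
    sigma = ref.std(axis=1)
    rmse = np.sqrt(np.mean((pred - ref) ** 2, axis=1))
    return float(np.mean(rmse / (k * sigma)))

def nammae(pred, ref, k=K):
    """Average of the absolute errors on minimum and maximum, normalized by k*sigma."""
    sigma = ref.std(axis=1)
    err = (np.abs(pred.min(axis=1) - ref.min(axis=1))
           + np.abs(pred.max(axis=1) - ref.max(axis=1)))
    return float(np.mean(err / (2 * k * sigma)))

def time_resolved_error(pred, ref, k=K):
    """Pointwise absolute error averaged over the variables, normalized by k*sigma."""
    sigma = ref.std(axis=1, keepdims=True)
    return np.mean(np.abs(pred - ref) / (k * sigma), axis=0)

# Chebyshev: at least 1 - 1/c^2 of the samples lie within c standard deviations.
chebyshev_coverage = 1.0 - 1.0 / (K / 2) ** 2

# Arrays have shape (n_variables, n_time_instants).
t = np.linspace(0.0, 4.0 * np.pi, 400, endpoint=False)
ref = np.vstack([np.sin(t), np.cos(t)])
pred = ref + 1.0                               # prediction with a constant offset
```

For a pure offset the two scalar metrics coincide, since the pointwise error and the errors on the extrema are all equal to the offset.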

4. Numerical Setup

This section presents the numerical setup of the DMD for the nowcasting and system identification tasks, along with the preprocessing applied to the data. In this work, the analyses are performed on experimentally measured data, but it is worth noting that the methods also directly apply to other data sources such as simulations of various fidelity levels. All DMD analyses are based on normalized data using the Z-score standardization. Specifically, time histories are shifted and scaled, with the average and variance evaluated on the training set.
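The standardization step can be sketched as below, with the statistics computed on the training portion only and then reused for the test portion. The array layout (variables on rows, time instants on columns) and the function names are assumptions.

```python
import numpy as np

def fit_standardizer(train):
    """Per-variable mean and standard deviation evaluated on the training set."""
    mean = train.mean(axis=1, keepdims=True)
    std = train.std(axis=1, keepdims=True)
    return mean, std

def standardize(data, mean, std):
    """Shift and scale the time histories with the training statistics."""
    return (data - mean) / std

rng = np.random.default_rng(1)
data = 3.0 + 2.0 * rng.standard_normal((4, 500))   # synthetic stand-in for measurements
train, test = data[:, :300], data[:, 300:]
mean, std = fit_standardizer(train)
train_z = standardize(train, mean, std)
test_z = standardize(test, mean, std)              # no test statistics are used
```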

A lexicon borrowed from machine learning can be used to describe the workflow for the DMD analyses due to their data-driven nature, even though the peculiarities of the method will cause some differences in the meaning of some terms. Calling present instant the DMD prediction starting point, the observed data lie in the past. Hence, the Hankel-DMD models the matrices and , which are built using such past time histories that constitute the training data. The test data, conversely, lie in the future, and test sequences are used to assess the predictive performances of the models.

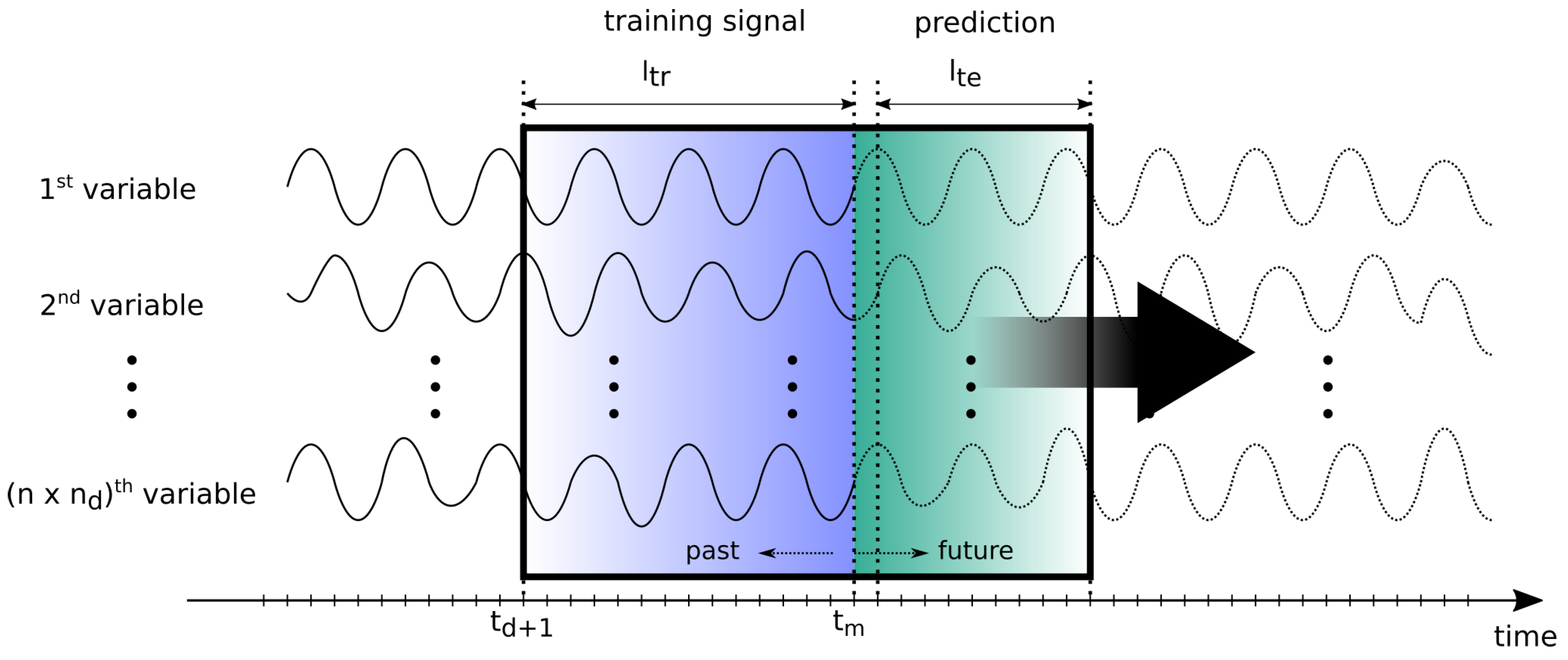

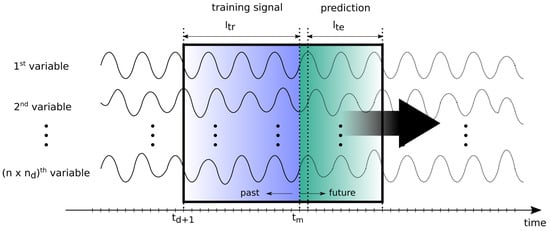

In the nowcasting approach, the (Bayesian) Hankel-DMD models are trained with sequences from the near past, i.e., ending just before the present instant, and used to forecast short sequences in the future on the order of a few wave encounter periods (referring to the time between the passage of two consecutive waves relative to a fixed point on the floating platform). A new training is performed for each test sequence in a sliding window fashion, as sketched in Figure 3.

Figure 3.

Sketch of the Hankel-DMD modeling approach for short-term forecasting (nowcasting).

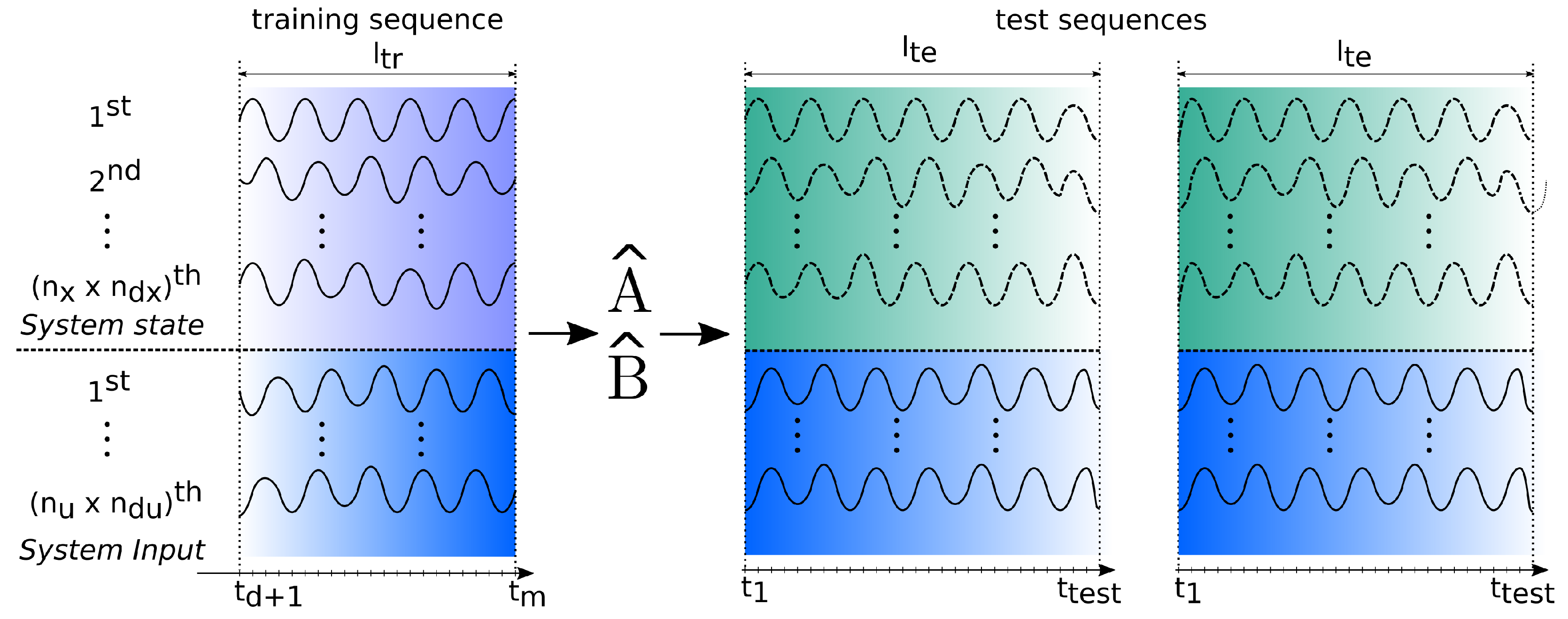

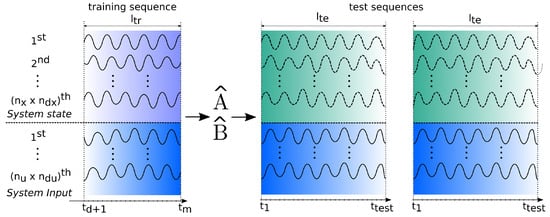

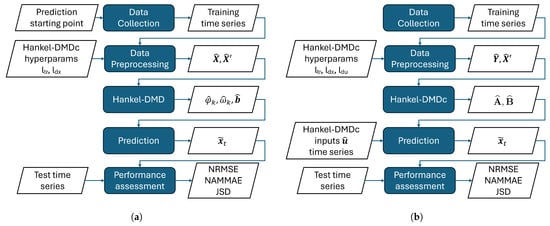

In the system identification task, the training and the test phases are independent such that a (Bayesian) Hankel-DMDc model is trained on a dedicated sequence only once and taken as a representative ROM for the FOWT. Hence, its performances are tested against multiple test sequences with no changes in the and matrices, as suggested by the sketch in Figure 4.

Figure 4.

Sketch of the Hankel-DMD modeling approach for system identification.

It is worth stressing that, differently from most machine learning methods, training a DMD/DMDc model is not an iterative procedure. In fact, the model is built, i.e., trained, with a direct procedure as described in Section 2.4, identifying the Hankel-DMD modes, the matrix and, for DMDc, the matrix .

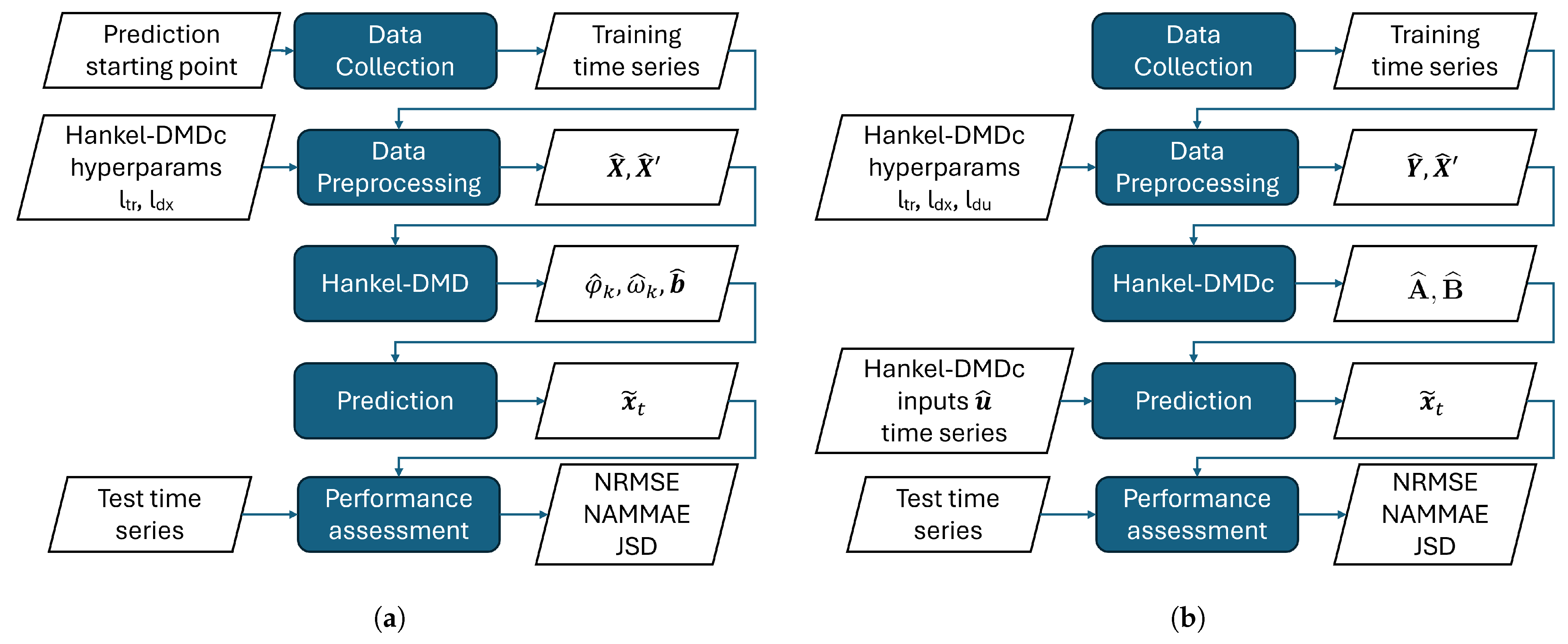

Figure 5 offers a view of the workflow of the Hankel-DMD (Figure 5a) and Hankel-DMDc (Figure 5b) analyses. The first operation is the collection of the training data to be processed, which in this work are extracted from the existing dataset. This is strictly dependent on the prediction time instant only for the nowcasting using Hankel-DMD. Then, data are fed to the preprocessing step: to provide a common baseline for processing by DMD, time sequences are resampled using a sampling rate s. The wave encounter frequency is expected to be relevant in the platform dynamics, which will, however, also have relevant energetic content at higher frequencies. The sampling rate has hence been selected to ensure at least six samples per wavelength up to , avoiding aliasing. Data are then organized into the matrices and based on the hyperparameter values of the DMD method at hand.

Figure 5.

Hankel-DMD for nowcasting (a) and Hankel-DMDc for system identification (b) learning–prediction–assessment flowchart.

The Hankel-DMD/DMDc algorithm is thus applied, obtaining the eigenvalues and eigenvectors of the matrix and the vector of the initial conditions for Hankel-DMD in nowcasting or the matrices and for Hankel-DMDc in system identification.

The output of the Hankel-DMD or Hankel-DMDc is used to calculate the predicted time series of the states, which are then compared with the test signals to evaluate the error metrics for performance assessment.

The nowcasting by Hankel-DMD only requires past histories of the variables under analysis, in contrast to the system identification in which the Hankel-DMDc needs the current value of the input time series at each time step to advance with the prediction. In particular, in this study, the wind speed and the wave elevation as measured by the PLC on the nacelle and the ACDP, respectively, are included in the vector . The mentioned characteristics make the two approaches very different from each other. The nowcasting is more suitable for short-term predictions, in the range of a few characteristic periods, and appropriate for being applied in real-time digital twins or to model predictive controllers, also exploiting the possibility of continuous learning of the method during the system evolution. The system identification approach is more suited to produce a reduced-order model of the system as a surrogate of the original one that produced the data used for training. Once trained, the ROM can be applied for fast and reliable predictions of the system’s response to given operational conditions of possibly undefined time extension at a reduced computational cost, with potentially useful applications in control, design, life-cycle cost assessment, maintenance, and operational planning, as an alternative to high-fidelity simulations or experiments.

A full-factorial design of experiment is conducted to investigate the influence of the two main hyperparameters of the Hankel-DMD on the nowcasting performances. Five levels of variation are used for and six for , as also outlined in Table 1 in terms of training time steps and number of delayed time histories embedded with the current time sampling.

Table 1.

List of the hyperparameter settings tested for Hankel-DMD nowcasting.

The prediction performance of each configuration is assessed through the NRMSE, NAMMAE, and JSD metrics introduced in Section 3 on a statistical basis using 100 random starting instants as validation cases for prediction inside the 12-hour considered time frame. A forecasting horizon of is considered, corresponding to approximately 30 s.

The statistical assessment of the system identification procedure shall also include the choice of the training sequence as an influencing parameter. For this reason, the dataset is subdivided into training, validation, and test portions that are completely separated from each other. Ten different training sets are identified inside the data portion dedicated to training. For each one of them, the effect of the three hyperparameters of Hankel-DMDc on the system identification is analyzed with a full-factorial design of experiment using seven levels for and six levels for and , with the values in Table 2 used.

Table 2.

List of the hyperparameter settings tested for Hankel-DMDc system identification algorithm.

The prediction performance of each of the 252 configurations is assessed through the NRMSE, NAMMAE, and JSD metrics on a statistical basis using 10 random validation signals extracted from the validation set for a total of 100 evaluations of each error metric per hyperparameters configuration. The prediction time window considered has a length of , or approximately 146 s.
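The size of the full-factorial design can be checked with a short enumeration. The level values below are placeholders, since the actual ones are those listed in Table 2; only the number of levels per hyperparameter is taken from the text.

```python
from itertools import product

# Placeholder levels (hypothetical values): seven for the training length,
# six each for the state delay and the input delay hyperparameters.
train_levels = [1, 2, 3, 4, 5, 6, 7]
state_delay_levels = [1, 2, 3, 4, 5, 6]
input_delay_levels = [1, 2, 3, 4, 5, 6]

# Every combination of levels is one Hankel-DMDc configuration.
configurations = list(product(train_levels, state_delay_levels, input_delay_levels))

# Each configuration is trained on 10 sets and validated on 10 signals.
n_training_sets, n_validation_signals = 10, 10
evaluations_per_configuration = n_training_sets * n_validation_signals
```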

The results from the deterministic Hankel-DMD and Hankel-DMDc analyses are used to identify suitable ranges for the hyperparameter values to be used with their Bayesian extensions.

5. Results

This section discusses the DMD analysis of the system dynamics in terms of complex modal frequencies, modal participation, and most energetic modes, and it assesses the results of the DMD-based forecasting method for the prediction of the state evolution.

5.1. Modal Analysis

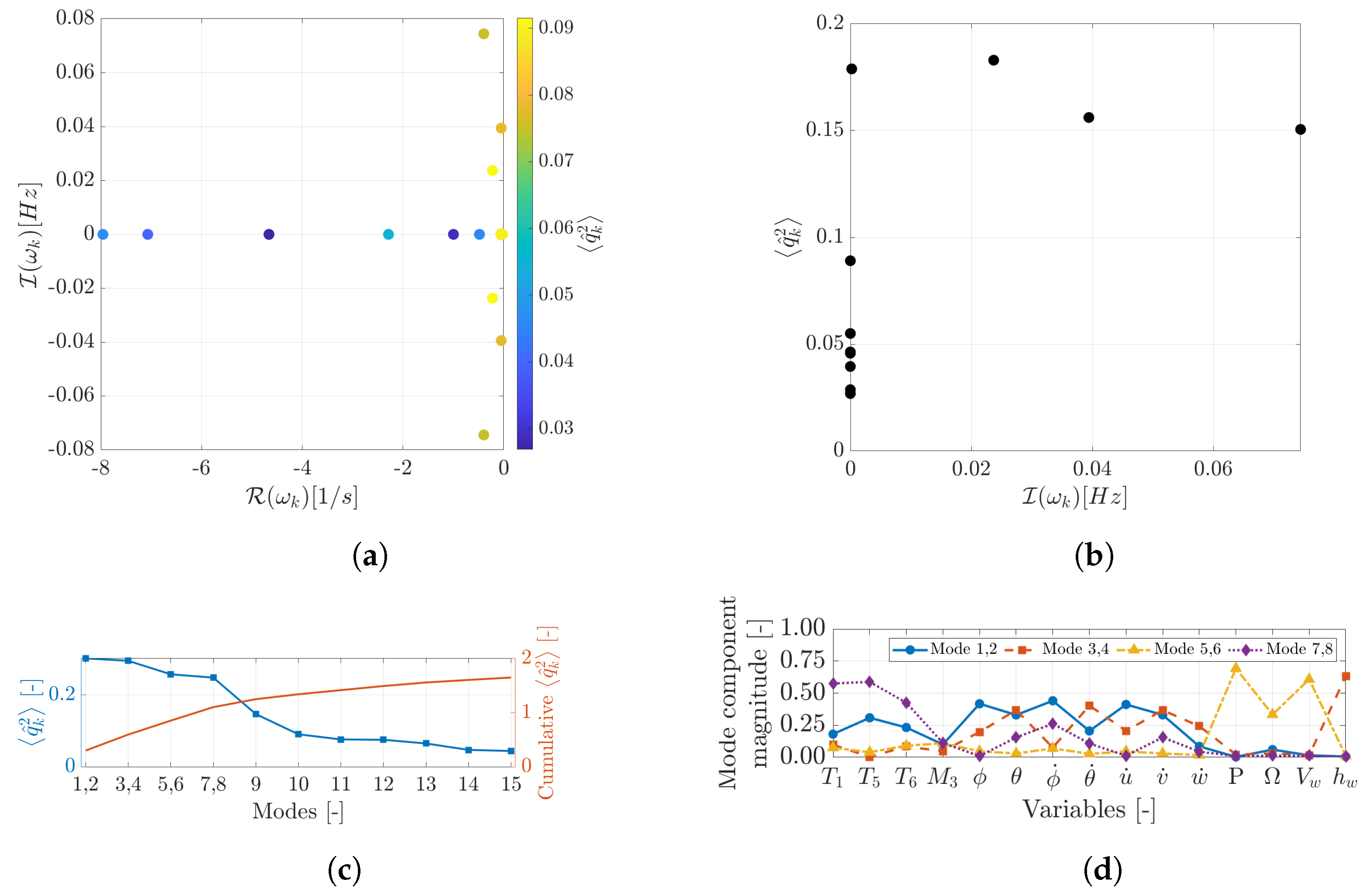

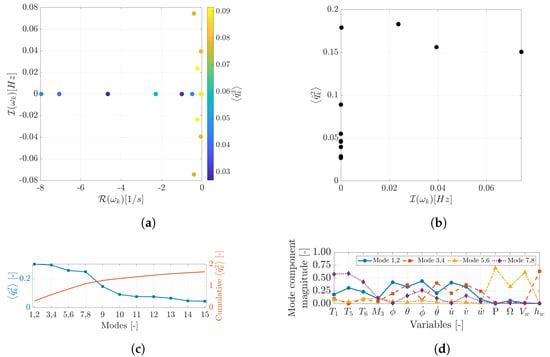

Figure 6 presents the DMD results for the FOWT system dynamics. Specifically, Figure 6a shows the complex modal frequencies identified by DMD with no state augmentation. These were ranked in Figure 6b based on the normalized energy of the respective modal coordinate signal:

As can be seen in Figure 6c, the dynamics are dominated by four pairs of complex conjugate modes. Their components’ magnitudes are presented in Figure 6d. Modes (1,2) and (7,8) show a slow (frequency around 0.0237 Hz, period around 42.3 s) and a faster (frequency around 0.0745 Hz, period around 13.5 s) coupling between the loads on tendons (and weakly on moorings) and the platform motion. Mode (3,4)’s variable participation suggests that the mode describes a slow wave height oscillation (frequency 0.00022 Hz, with a period of almost 1 h and 15 min) influencing the platform motion variables, both angular and linear. Finally, mode (5,6) identifies a subsystem describing the variation in extracted power and turbine rotational speed with wind speed (frequency 0.039 Hz, period around 25.4 s). The power extracted by the wind turbine and the rpm of its blades are almost not involved in the description of the floating motions and are scarcely influenced by them, despite the extreme operating conditions that could have a coupling effect through large motions, as well as hydro- and aerodynamic nonlinearities.

Figure 6.

DMD complex modal frequencies (a), modal participation spectrum (b) and cumulative values (c), and first modes shapes (d).

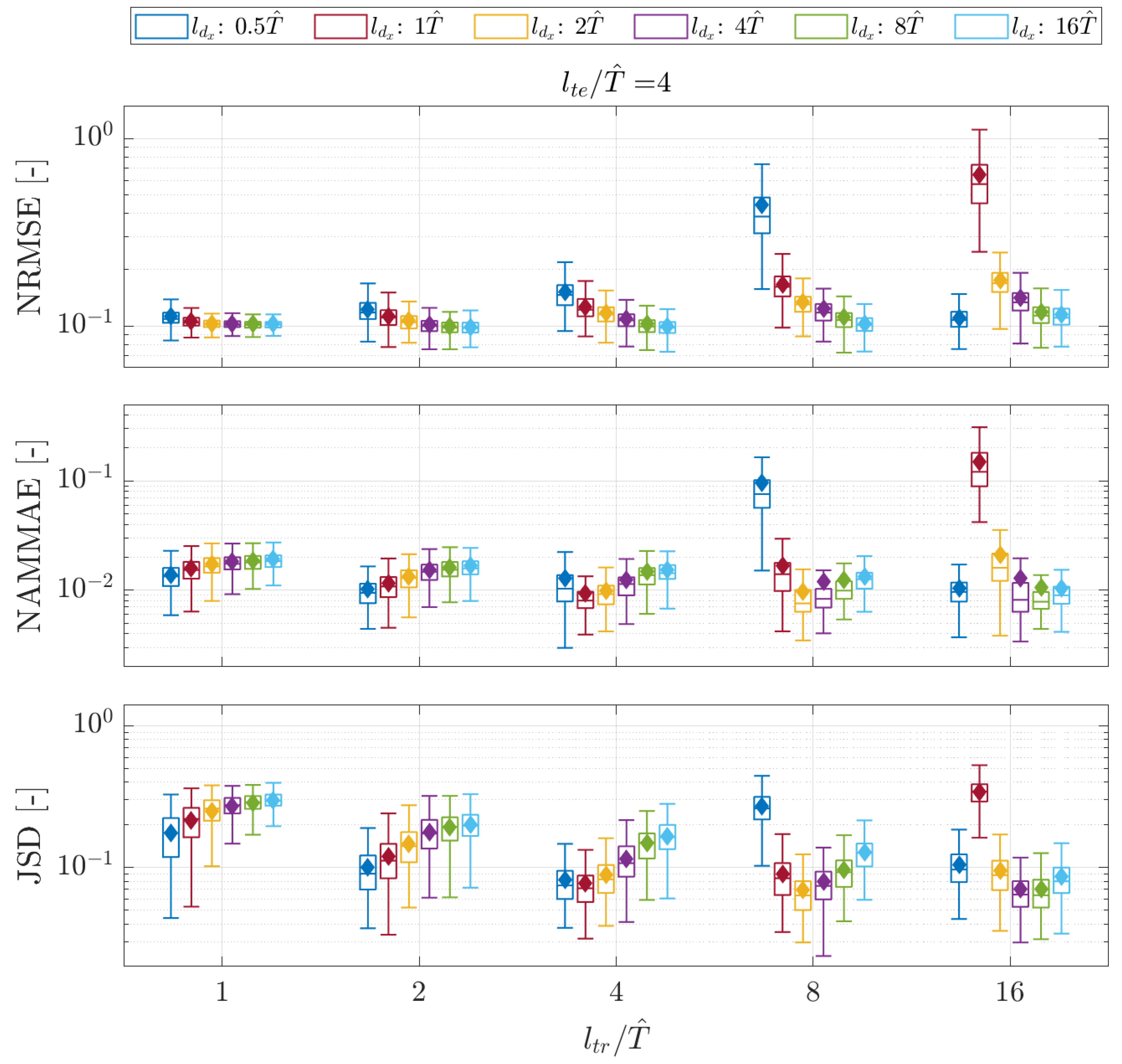

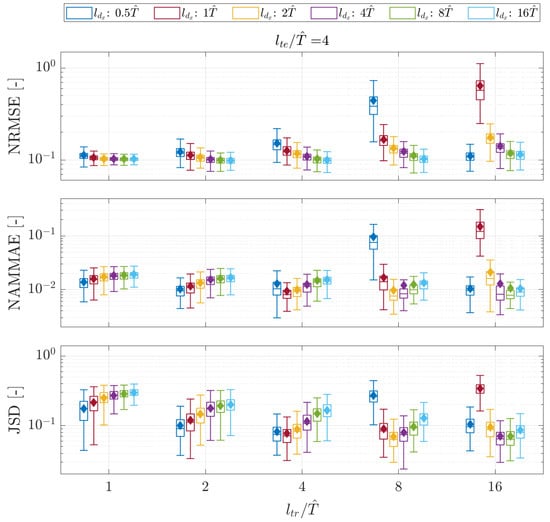

5.2. Nowcasting via Hankel-DMD

As anticipated in Section 4, a full-factorial combination of settings of the Hankel-DMD hyperparameters was tested to investigate their influence on the forecasting capability of the method. The results, gathering the outcomes from all the algorithm setups and test cases, are outlined in Figure 7 as boxplots for the NRMSE, NAMMAE, and JSD, focusing on a length of the test signal . The boxplots show the first, second (equivalent to the median value), and third quartiles, while the whiskers extend from the box to the farthest data point lying within 1.5 times the inter-quartile range, which is defined as the difference between the third and the first quartiles from the box. The diamonds indicate the average of the results for each combination. Outliers are not shown to improve the readability of the plot.
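The quantities displayed by each box can be computed as in the following sketch, which assumes linear interpolation for the quartiles (the NumPy default) and uses made-up values of a metric.

```python
import numpy as np

def boxplot_stats(values):
    """Quartiles, whisker ends within 1.5 IQR of the box, and mean (the diamond)."""
    q1, q2, q3 = np.percentile(values, [25, 50, 75])
    iqr = q3 - q1                                   # inter-quartile range
    inside = values[(values >= q1 - 1.5 * iqr) & (values <= q3 + 1.5 * iqr)]
    return {"q1": q1, "median": q2, "q3": q3,
            "whisker_low": inside.min(), "whisker_high": inside.max(),
            "mean": values.mean()}

# Hypothetical metric values with one outlier, which is excluded from the whiskers.
stats = boxplot_stats(np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 100.0]))
```

The outlier does not extend the whisker but still affects the mean, which is why the diamonds can lie far from the median for configurations with a few very poor predictions.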

Figure 7.

Hankel-DMD boxplot of error metrics over the validation set for tested and , (∼30 s). Diamonds indicate the average value of the respective configuration.

It can be noted that the three metrics indicate different best hyperparameter settings. Their values are reported in Table 3. However, the different types of errors targeted by the three metrics help to interpret the results and gain some insight:

Table 3.

Summary of the best hyperparameter configurations for nowcasting as identified by the deterministic design of experiment and Bayesian setup, where .

- (a) Long training signals with few delayed copies in the augmented state showed poor prediction capabilities, as confirmed by all the metrics for both the short-term and mid-term time windows. The effect is notable for .

- (b) A high number of embedded time-delayed signals combined with insufficiently long training lengths tended to produce NRMSE values whose dispersion progressively shrinks around an eighth of the standard deviation of the observed signals. This happens, with the explored values of , particularly for , , and, to a lesser extent, when exceeded 1. At the same time, the NAMMAE and JSD values for the same settings progressively increase; this indicates that the predicted signals are not able to catch the maximum and minimum values of the reference sequences and that the distribution of the predicted data does not adhere to that of the true data as time advances. The combination of those two behaviors is due to the method generating numerous rapidly decaying predictions, whose signals become highly damped after a short observation time.

A suitable range for the hyperparameter settings to obtain accurate results can be inferred by combining the above considerations. The best deterministic results were obtained when and .

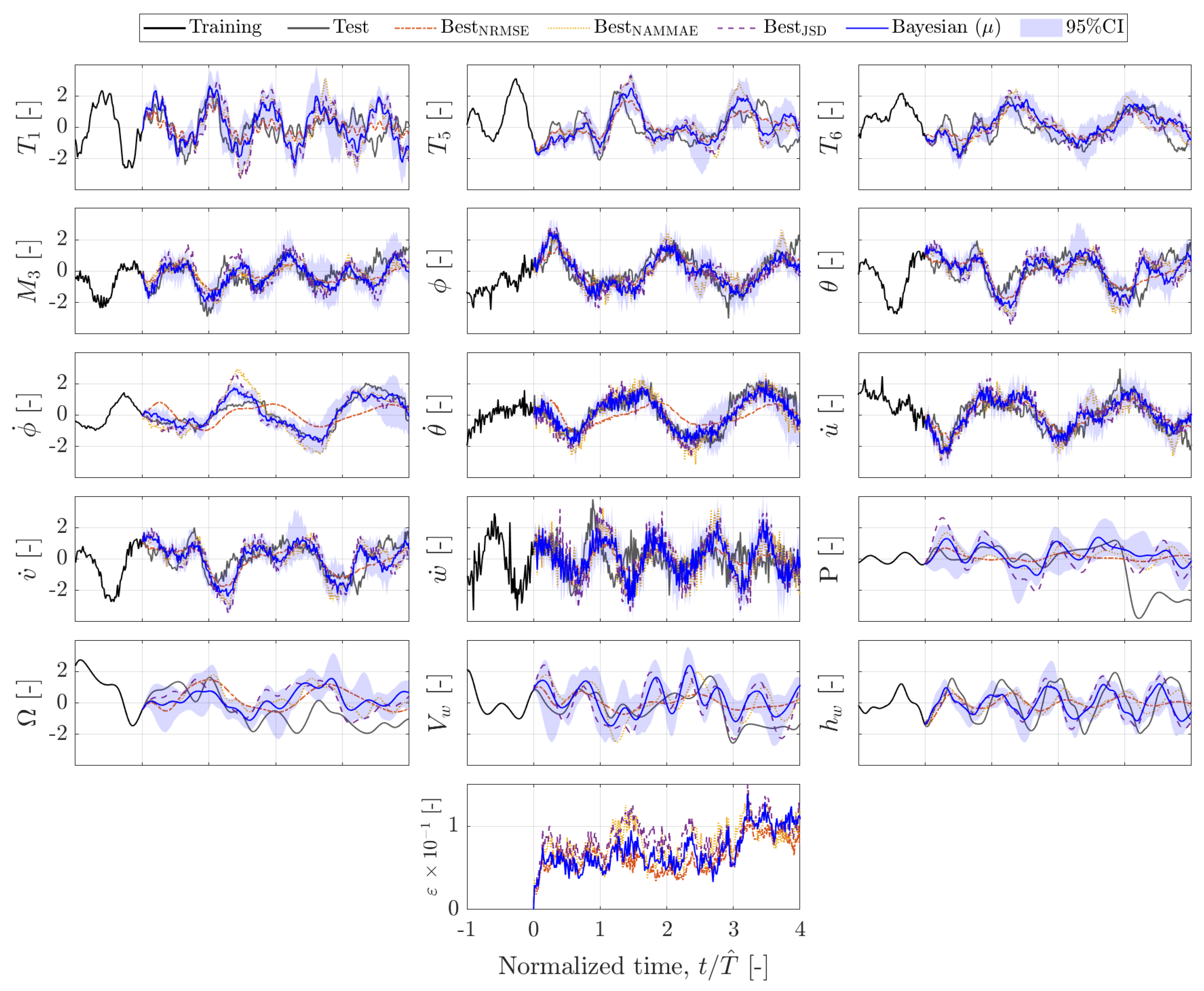

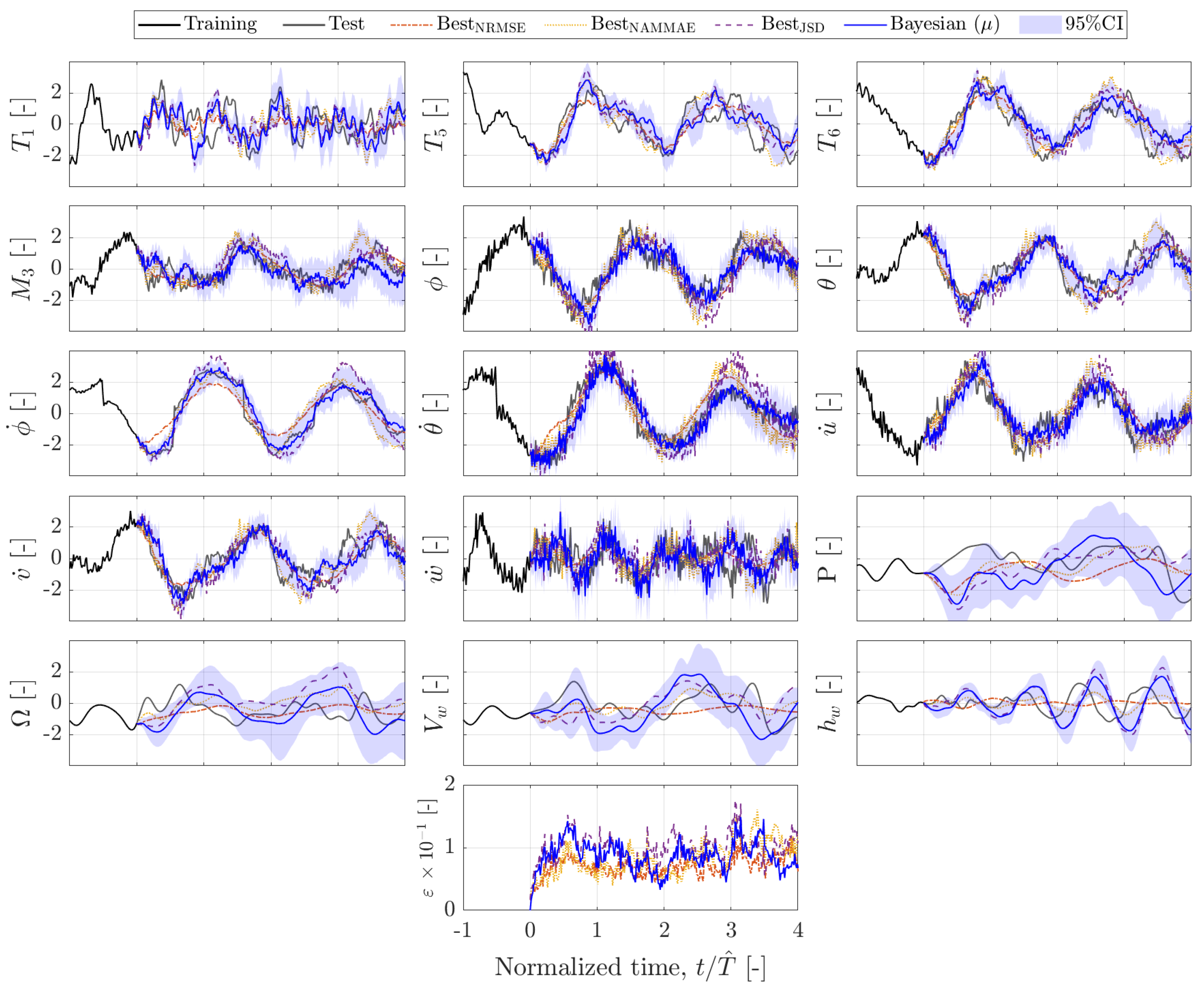

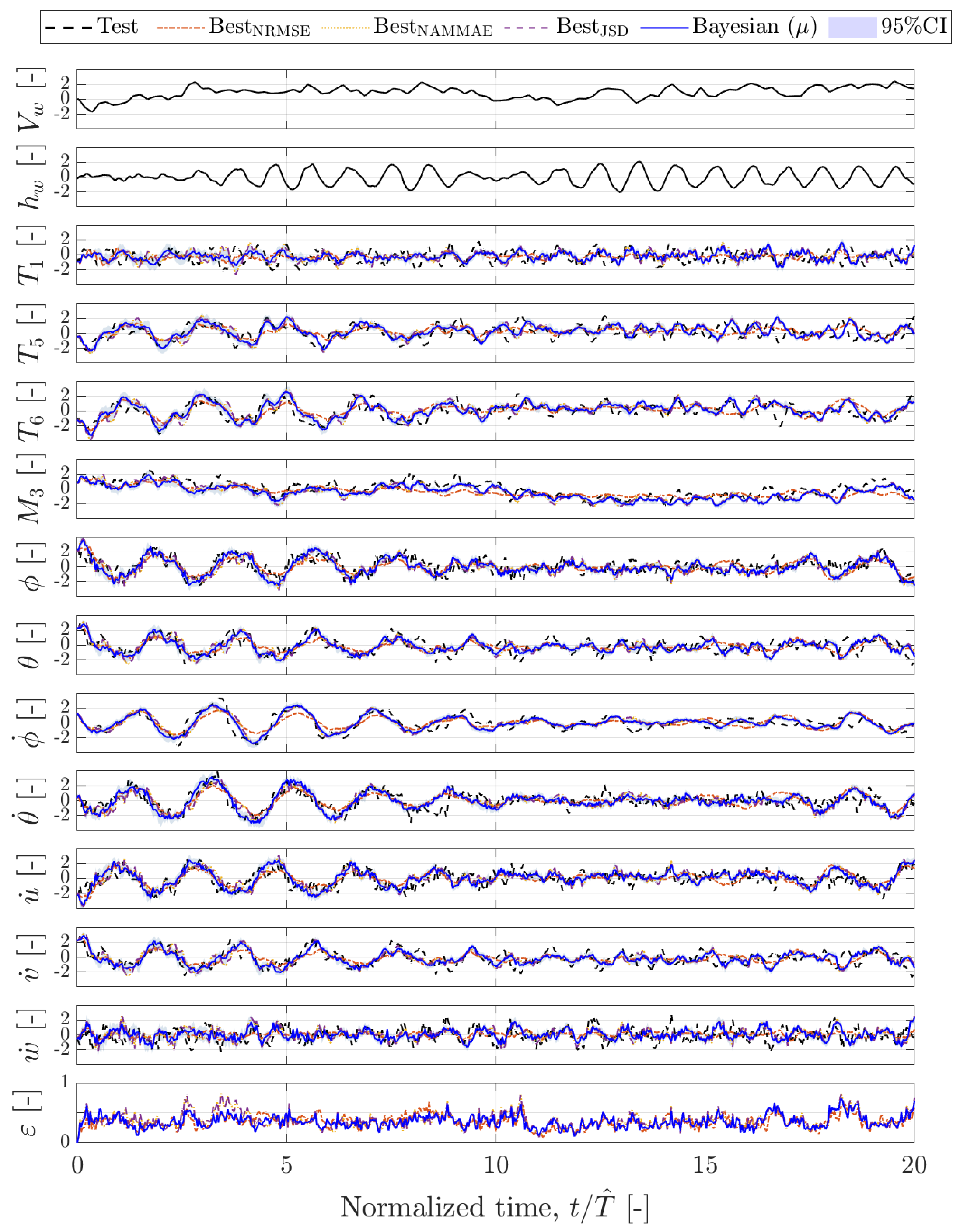

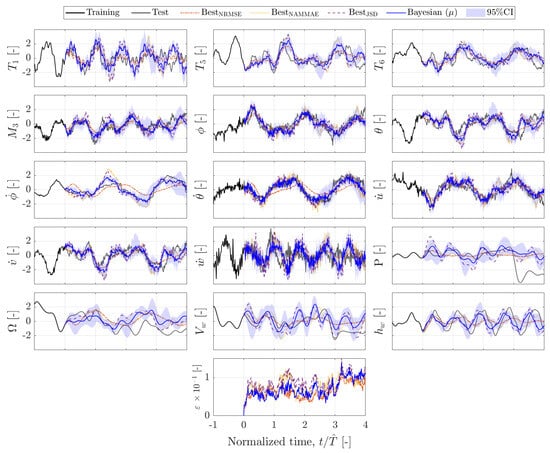

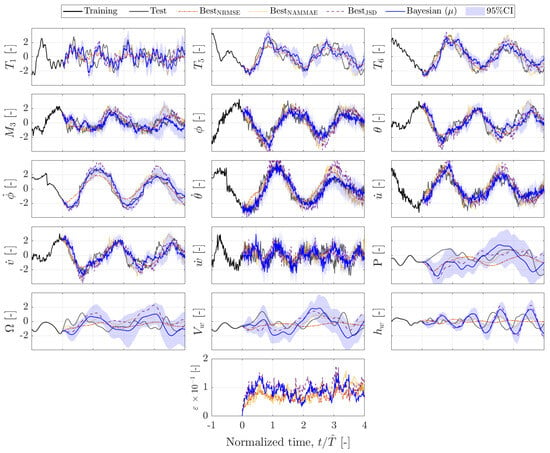

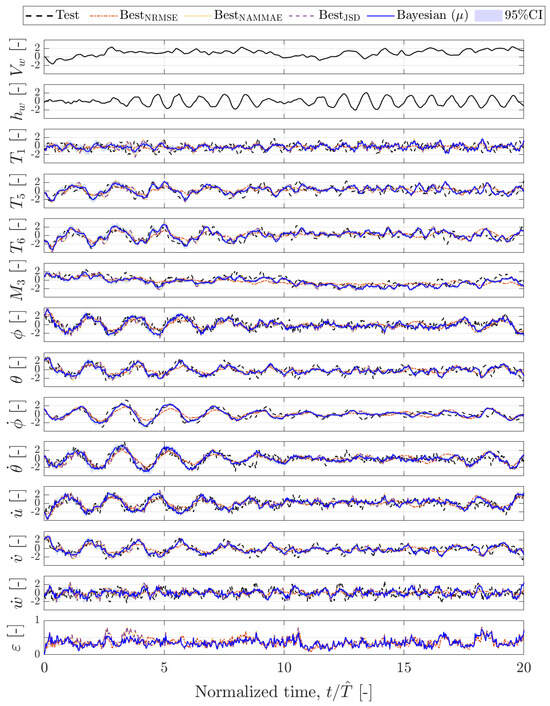

Figure 8, Figure 9, Figure 10 and Figure 11 show the forecast by the Hankel-DMD for random test sequences taken as representative nowcasting examples. The figures show the last part of the training sequence with a solid black line, the test sequence in a dashed black line, and the prediction obtained with the BestNRMSE, BestNAMMAE, and BestJSD hyperparameters, as reported in Table 3, with an orange dash-dotted, yellow dotted, and purple dashed line, respectively.

Figure 8.

Standardized time series nowcasting by deterministic (hyperparameters for best average metrics) and Bayesian Hankel-DMD. Selected sequence 1.

Figure 9.

Standardized time series nowcasting by deterministic (hyperparameters for best average metrics) and Bayesian Hankel-DMD. Selected sequence 2.

Figure 10.

Standardized time series nowcasting by deterministic (hyperparameters for best average metrics) and Bayesian Hankel-DMD. Selected sequence 3.

Figure 11.

Standardized time series nowcasting by deterministic (hyperparameters for best average metrics) and Bayesian Hankel-DMD. Selected sequence 4.

The forecast data are in fairly good agreement with the measurements, particularly for the BestNAMMAE and BestJSD lines. Confirming the previous statement, some predictions of the BestNRMSE show a rapidly decaying amplitude not following the ground truth; see, e.g., , , , , , , and in Figure 10 or and in Figure 8.

As expected from the nowcasting algorithm, the forecasting accuracy is higher at the beginning of the prediction, see the trend in Figure 8, Figure 9, Figure 10 and Figure 11, but in most cases is satisfactory for the entire window. The variables reproduced with the highest errors are the extracted power P, the blade rotational speed , and the wind speed . Their time histories estimated by the PLC on the nacelle do not show strong periodicity in the observed time frame and, moreover, show strong nonlinearities: for example, the turbine control algorithms limit the maximum power extracted by the turbine and its blades’ rotational speed, acting as a saturator when the wind is too strong. In addition, and partially as a consequence of the above, these variables are seen to form an almost separate subsystem in the modal analysis; hence, only a limited portion of the data could actually be used by the DMD to extract related modal content and produce their forecast, putting the method in a very challenging situation.

5.3. Nowcasting via Bayesian Hankel-DMD

As highlighted by the authors in previous works [59,76,77,93], the final prediction from DMD-based models may strongly vary for different hyperparameters settings, and no general rule is given for the determination of their optimal values. Aiming at including uncertainty quantification in the prediction and making the prediction more robust, the Bayesian extension of the Hankel-DMD considers the hyperparameters of the method as stochastic variables with uniform probability density functions.

The insights gained in the deterministic analysis are applied to determine suitable variation ranges for and . In particular, a probabilistic length of the training time history is considered, uniformly distributed between 4 and 16 incoming wave periods as . Moreover, for each realization of , is also taken as a probabilistic variable, being uniformly distributed in the interval (each actual is taken as its respective integer part).

The solid blue lines in Figure 8, Figure 9, Figure 10 and Figure 11 show the expected value of the Bayesian predictions on representative test set sequences obtained using 100 uniformly distributed Monte Carlo realizations; the blue shadowed areas indicate the 95% confidence interval of the uncertain predictions. The Bayesian Hankel-DMD provides fairly good forecasting of the measured quantities and the same considerations made in the deterministic analysis hold. The biggest discrepancies are noted in the prediction of P, , and , which also show the largest uncertainty bands, suggesting poor accuracy.

Figure 12 compares the error metrics from the Bayesian Hankel-DMD and the BestNRMSE, BestNAMMAE, and BestJSD configurations of the deterministic analysis for . The mean values of the metrics are summarized in Table 3. The boxplots indicate that Bayesian nowcasting achieved results comparable to the best deterministic configurations for NAMMAE and JSD, slightly improving their NRMSEs. This appears remarkable considering the poor NAMMAE and JSD performance of the BestNRMSE configuration.

Figure 12.

Error metrics comparison, Hankel-DMD vs. Bayesian Hankel-DMD nowcasting, (∼30 s).

The forecasting time window considered here, i.e., (∼30 s), can be assumed satisfactory from the perspective of the design of a model predictive or feedforward controller according to Ma et al. [39], where a five-second horizon was considered and found to be sufficient. Moreover, the nowcasting algorithm is inherently suitable for continuous learning and digital twinning, adapting the model and predictions along with the incoming data from an evolving system and environment.

It shall be noted that the evaluation time for a single Hankel-DMD training, averaged over 10 realizations using 100 different hyperparameter values in the intervals of the Bayesian analysis, ranged from s, s to s, s on a mid-range laptop with an Intel Core i5-1235U CPU and 16 GB of memory using a non-compiled MATLAB 2023a code, depending on the algorithm setup (longer training signals with more delays require more time). The computational cost for obtaining a Bayesian Hankel-DMD prediction depends linearly on the number of Monte Carlo samples used in the hyperparameter sampling: for each hyperparameter combination, a different Hankel-DMD model has to be evaluated. However, the task is embarrassingly parallel, and, with a sufficient number of computational units, the actual computational time can be kept as low as a single deterministic evaluation. The one-shot and fast training phase, related to the direct linear algebra operations detailed in Section 2.2, makes the Hankel-DMD and Bayesian Hankel-DMD algorithms very promising in the context of real-time forecasting and nowcasting.

5.4. System Identification via Hankel-DMDc

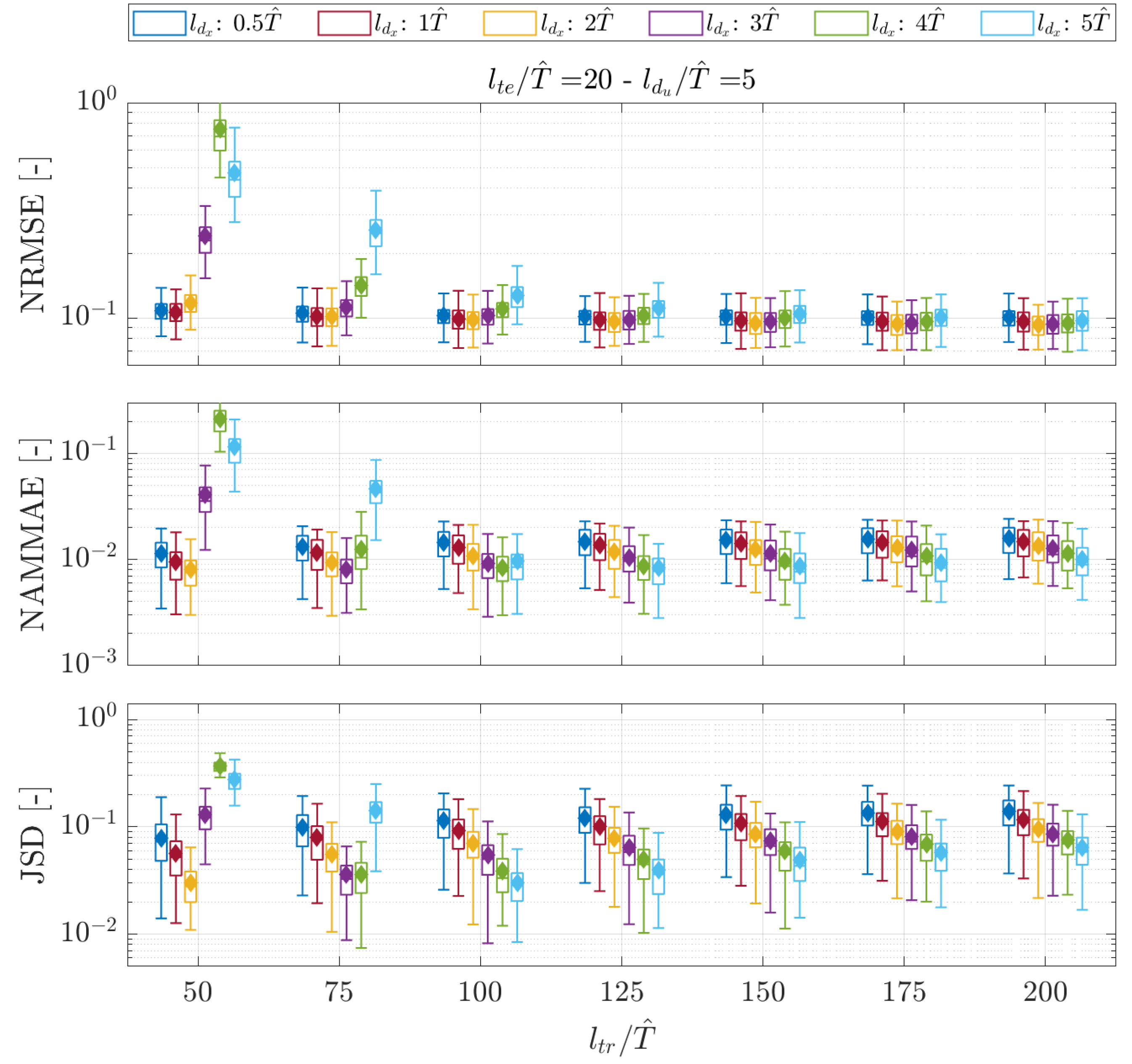

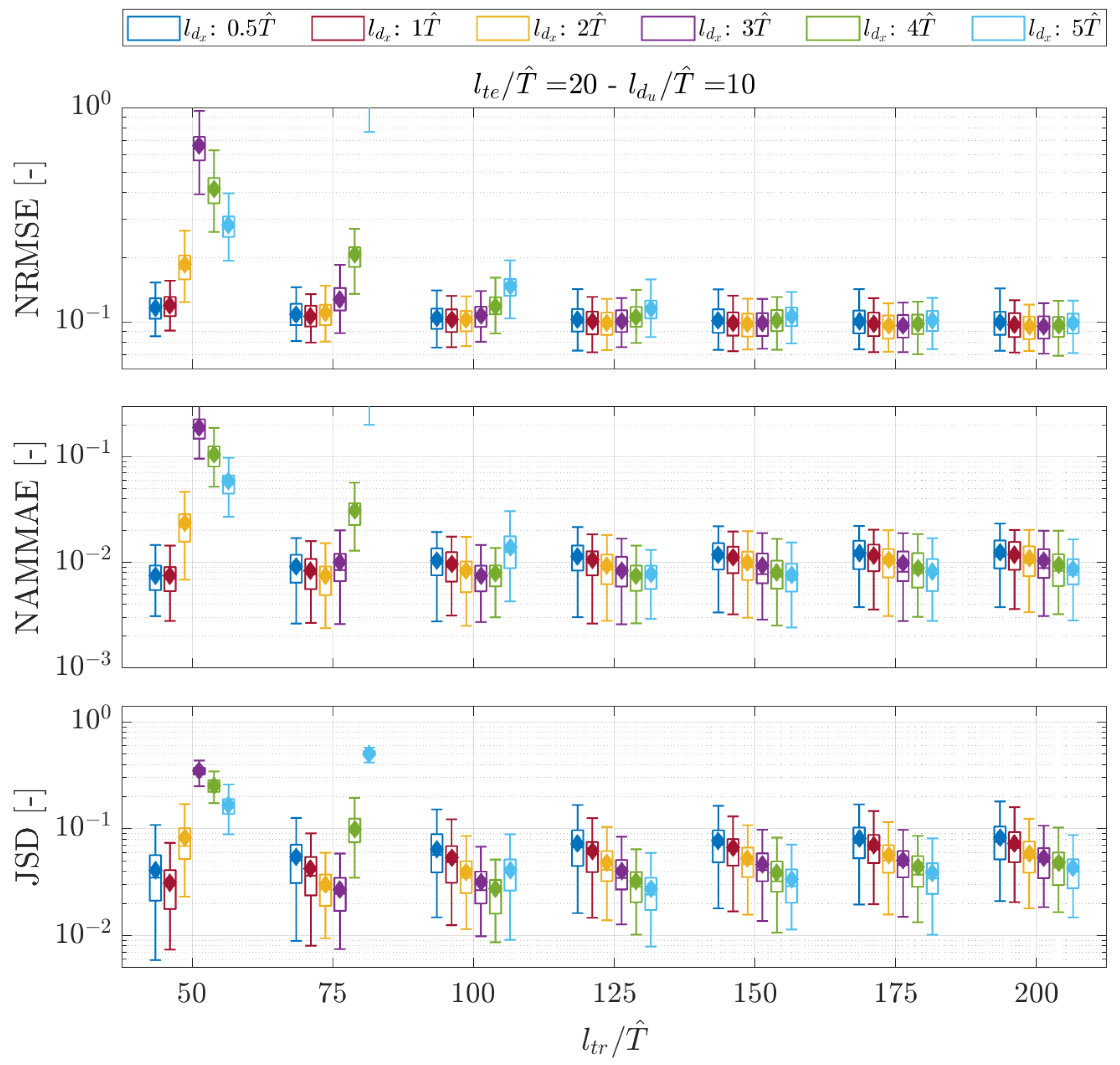

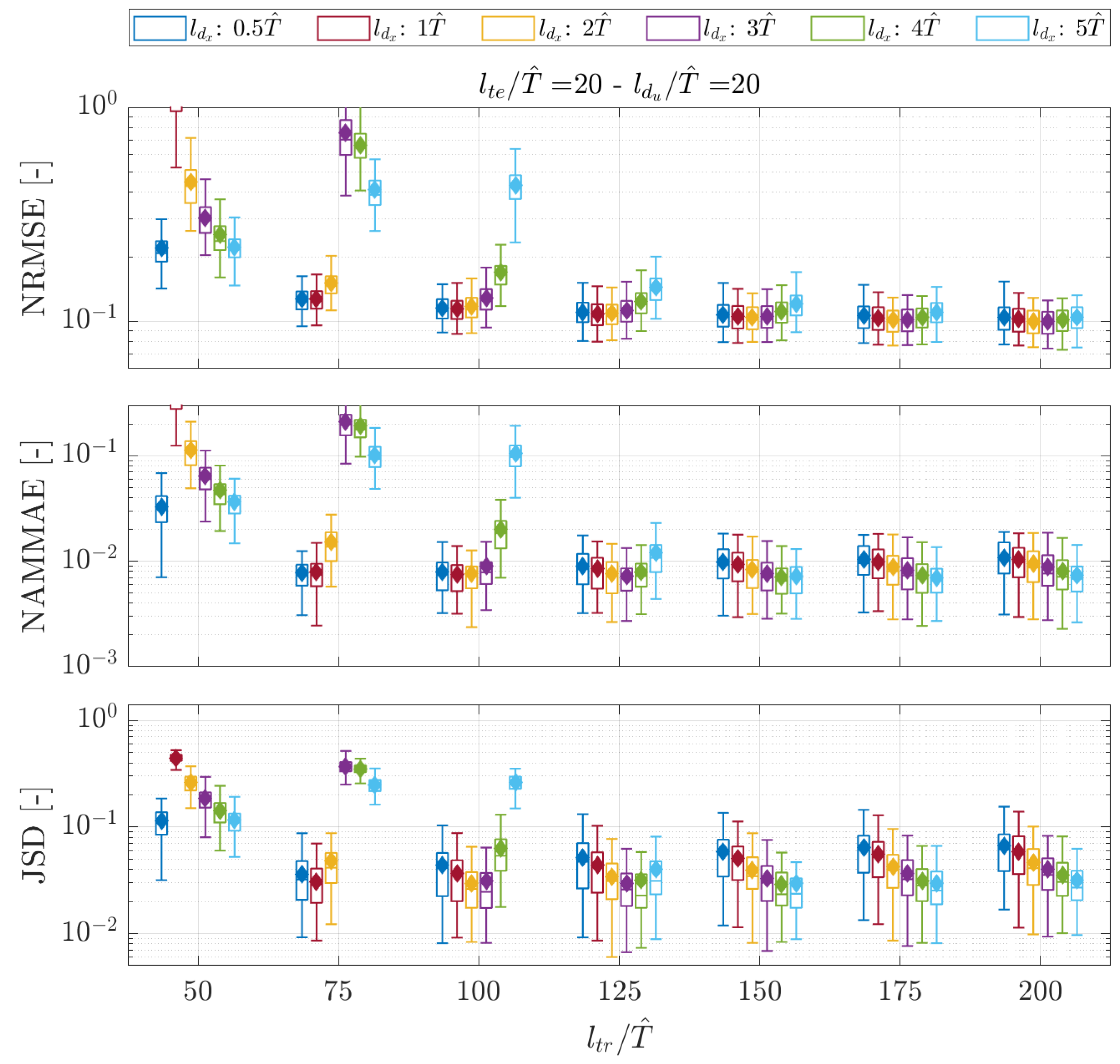

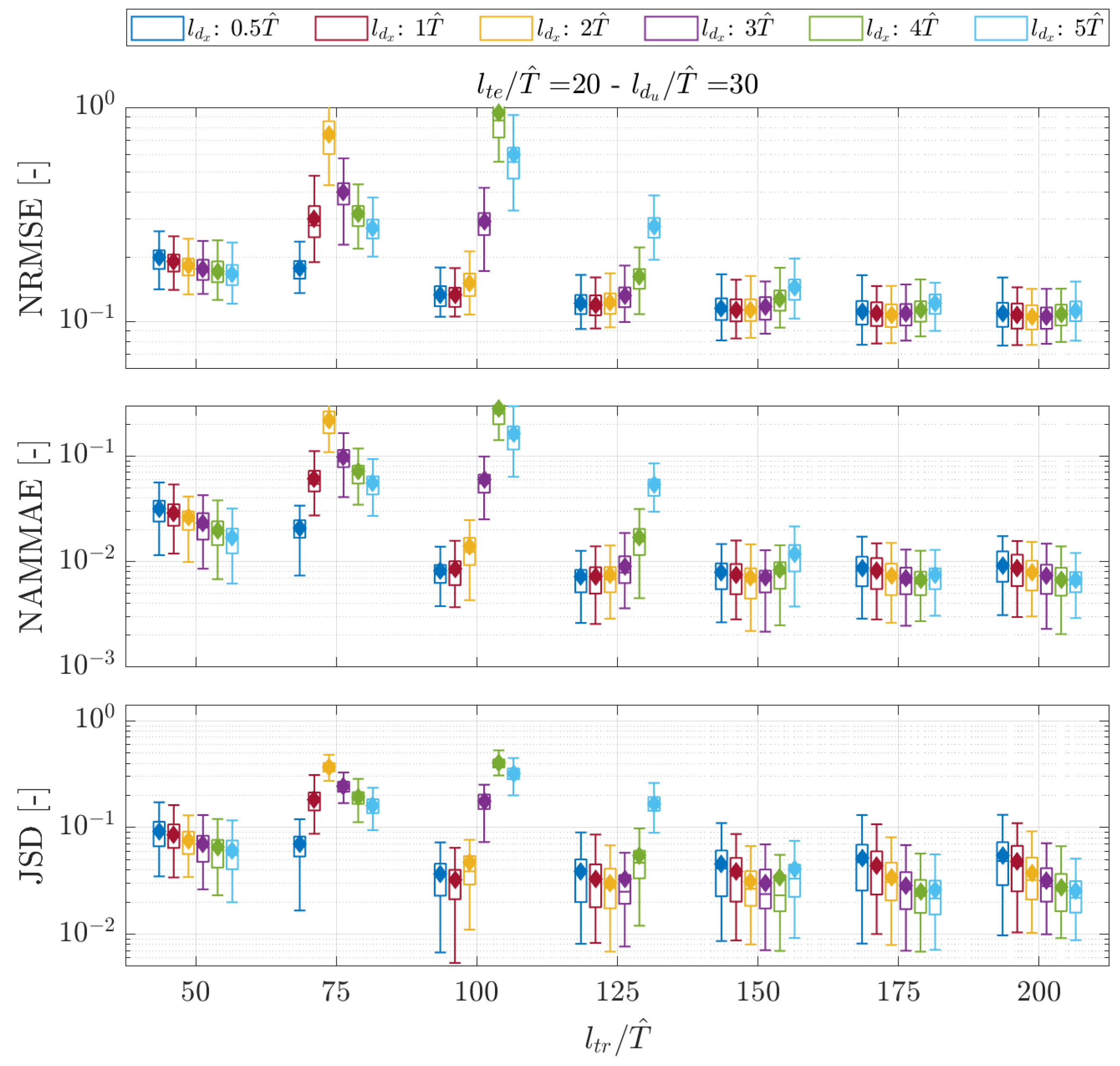

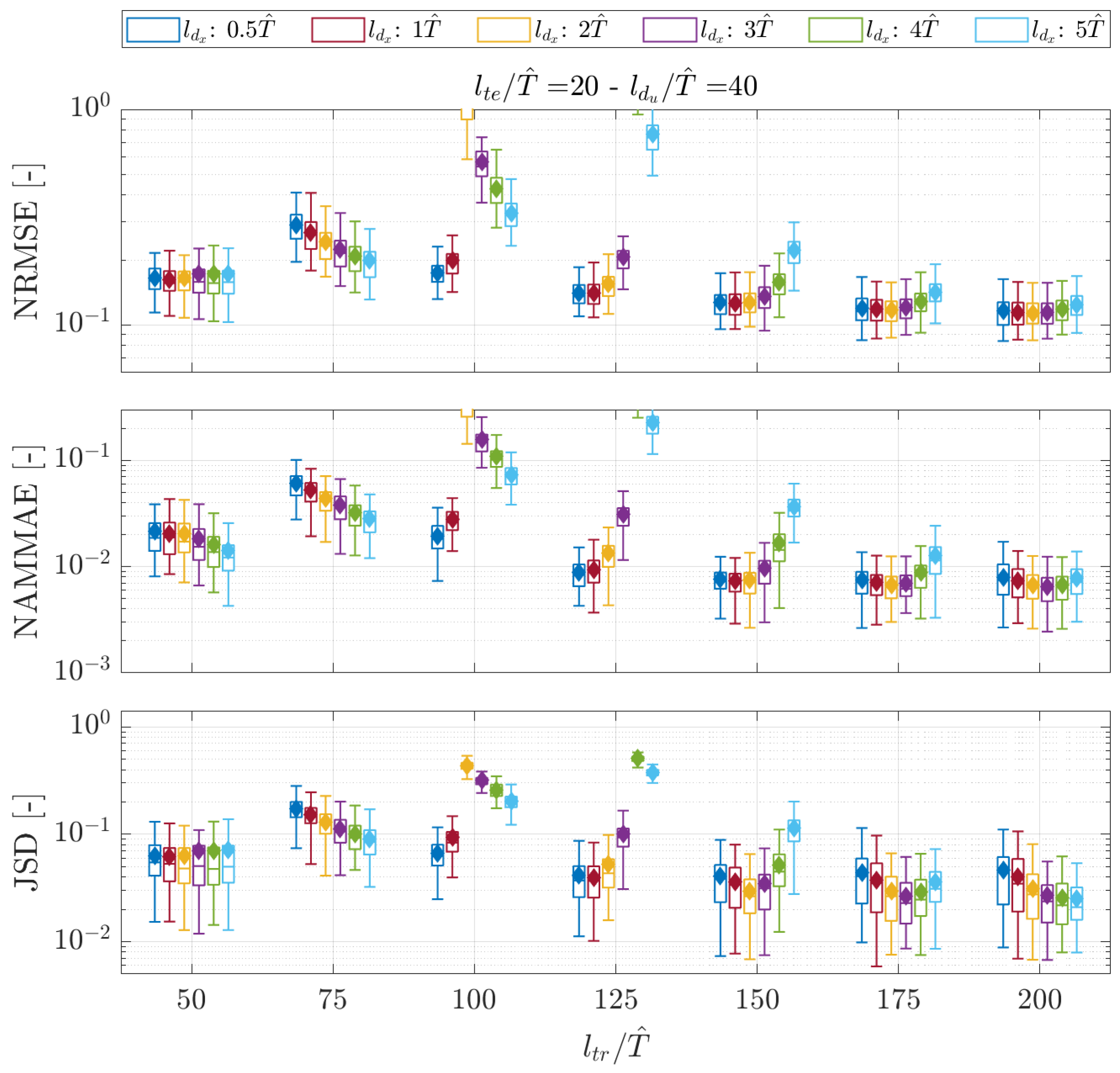

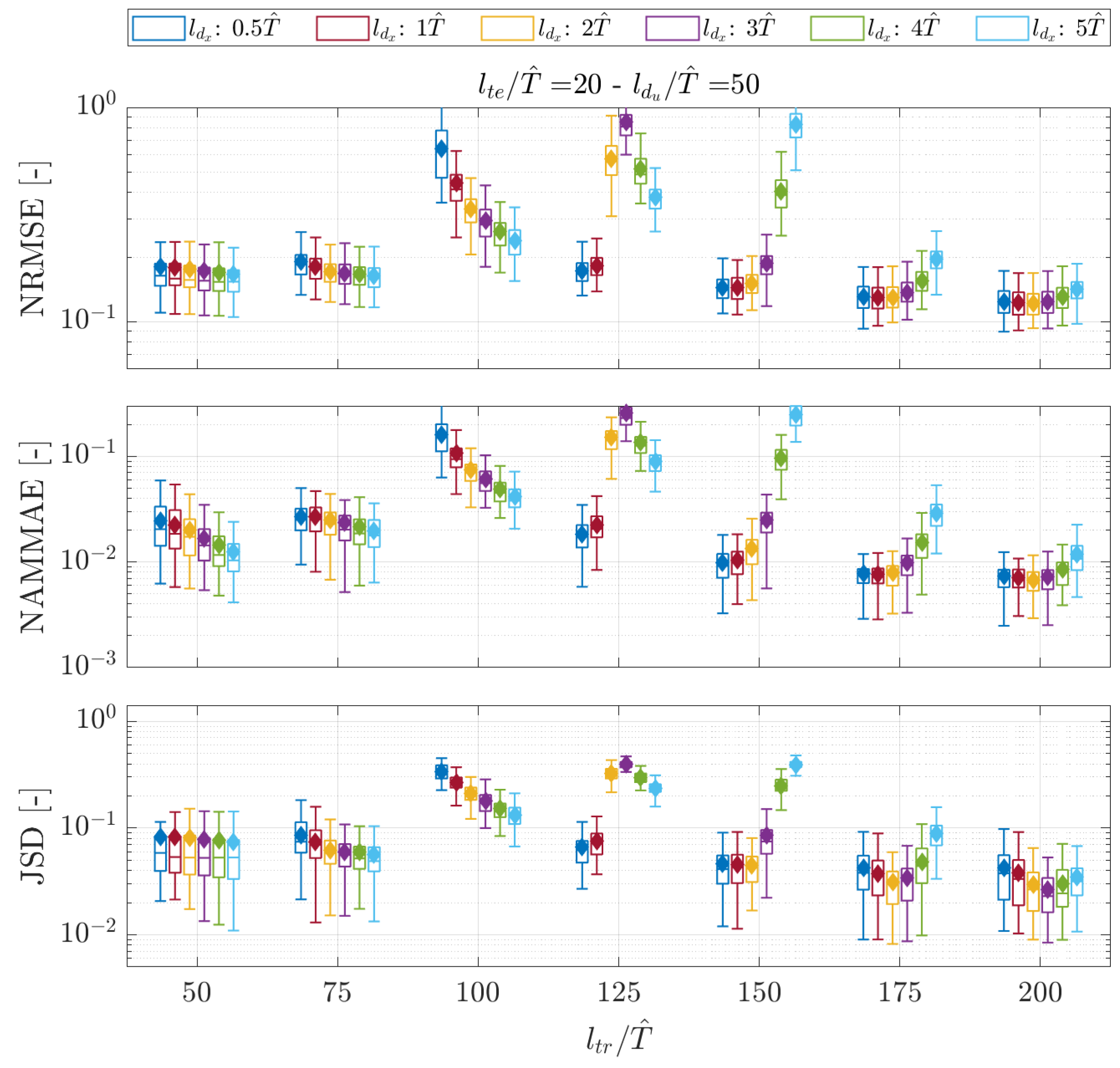

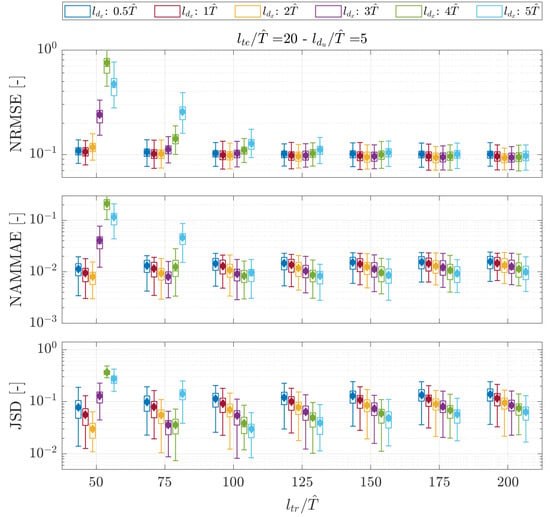

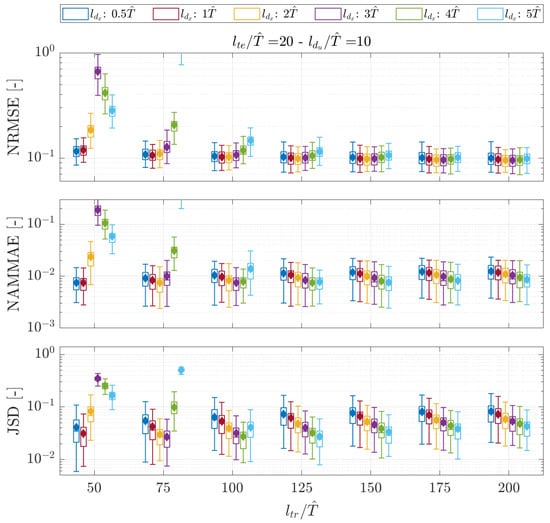

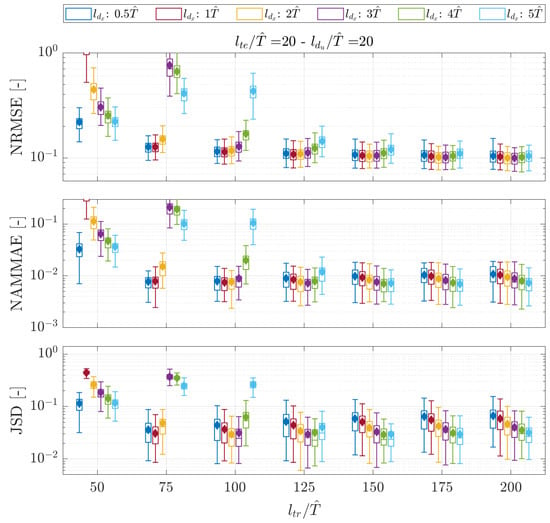

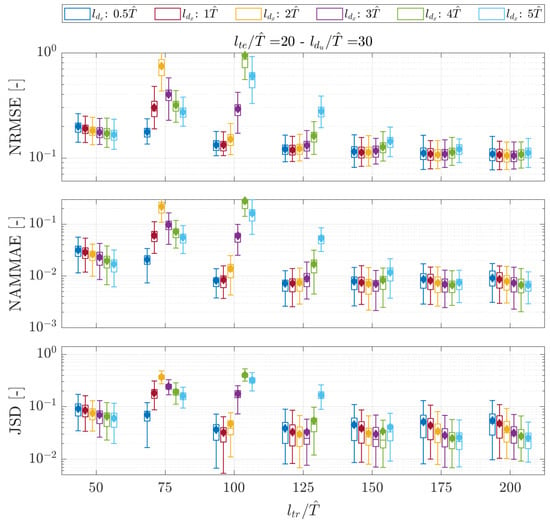

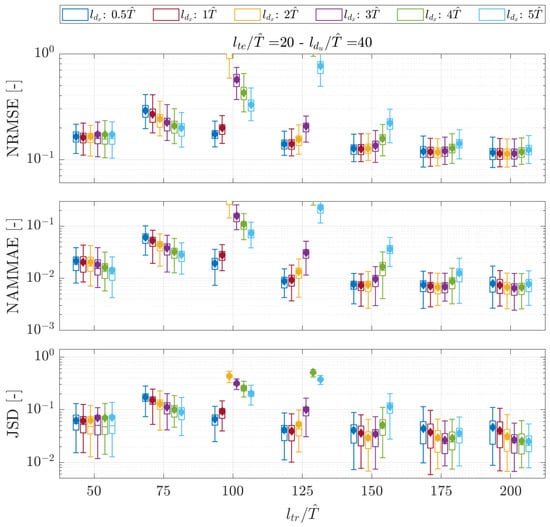

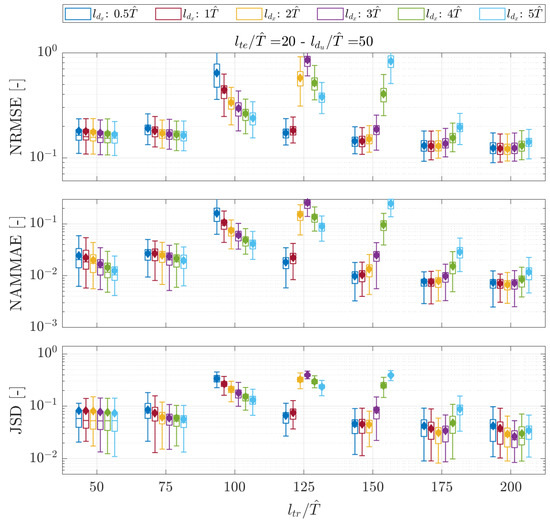

The results from the design of experiment on system identification via the deterministic Hankel-DMDc are reported in terms of boxplots graphs in Figure 13, Figure 14, Figure 15, Figure 16, Figure 17 and Figure 18 combining the outcomes of the 100 training/validation sequences combinations for each hyperparameter configuration. The metrics are evaluated using a prediction timeframe of (∼146 s).

Figure 13.

Hankel-DMDc boxplot of error metrics over the validation set for tested and , with . Diamonds indicate the average value of the respective configuration. (∼146 s).

Figure 14.

Hankel-DMDc boxplot of error metrics over the validation set for tested and , with . Diamonds indicate the average value of the respective configuration. (∼146 s).

Figure 15.

Hankel-DMDc boxplot of error metrics over the validation set for tested and , with . Diamonds indicate the average value of the respective configuration. (∼146 s).

Figure 16.

Hankel-DMDc boxplot of error metrics over the validation set for tested and , with . Diamonds indicate the average value of the respective configuration. (∼146 s).

Figure 17.

Hankel-DMDc boxplot of error metrics over the validation set for tested and , with . Diamonds indicate the average value of the respective configuration. (∼146 s).

Figure 18.

Hankel-DMDc boxplot of error metrics over the validation set for tested and , with . Diamonds indicate the average value of the respective configuration. (∼146 s).

Analyzing the boxplots, it can be noted that some configurations of the hyperparameters are characterized by very high values of all the metrics. The matrices of the pertaining Hankel-DMDc models have been noted to have eigenvalues with positive real parts, causing the predictions to be unstable. As pointed out by Rains et al. [94], DMDc algorithms are particularly susceptible to the choice of the model dimensions and prone to identify spurious unstable eigenvalues. In the nowcasting approach with Hankel-DMD, this phenomenon can be effectively mitigated by projecting the unstable discrete time eigenvalues onto the unit circle, i.e., stabilizing them. However, in system identification with Hankel-DMDc, the matrix is also affected by the identification of unstable eigenvalues, and no simple stabilization procedure is available.
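The stabilization mentioned for the Hankel-DMD nowcasting can be sketched as a radial projection of the discrete-time eigenvalues: an eigenvalue with modulus larger than one (equivalent to a continuous-time eigenvalue with positive real part) is rescaled to unit modulus while its phase, and hence its oscillation frequency, is preserved. The function name and the example eigenvalues are illustrative assumptions.

```python
import numpy as np

def stabilize(eigvals):
    """Project discrete-time eigenvalues lying outside the unit circle onto it."""
    eigvals = np.asarray(eigvals, dtype=complex)
    mag = np.abs(eigvals)
    # Dividing by max(mag, 1) leaves stable eigenvalues untouched.
    return np.where(mag > 1.0, eigvals / np.maximum(mag, 1.0), eigvals)

lam = np.array([0.9 + 0.1j, 1.2 * np.exp(0.4j), -1.5])
lam_stable = stabilize(lam)
```

This operation acts only on the eigenvalues of the system matrix, which is consistent with the remark that no equally simple remedy is available when the input matrix of Hankel-DMDc is also affected.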

Table 4 reports the values of the hyperparameters for the configurations producing the best average value of the NRMSE, NAMMAE, and JSD. It emerges that the most accurate predictions are obtained with high values of training length of . The noisy nature of the experimental measures and the variability of operational conditions encountered by the FOWT during the acquisitions can partially explain this result. A large implies a training signal that extends over a broader set of operative situations, allowing the Hankel-DMDc to capture the system’s relevant features with increased effectiveness and, hence, to obtain good predictions for unseen input sequences. A subdomain of the explored range for the hyperparameter values, defined as , , and , includes configurations with low values of all the metrics. The BestNAMMAE and BestJSD configurations are similar and lie in this range, for which the NRMSE also showed small values (although not the best one). On the contrary, the BestNRMSE setup is characterized by a small value of in a range that showed higher NAMMAE and JSD errors.

Table 4.

Summary of the best hyperparameter configurations for system identification as identified by the deterministic design of experiment, , and Bayesian setup.

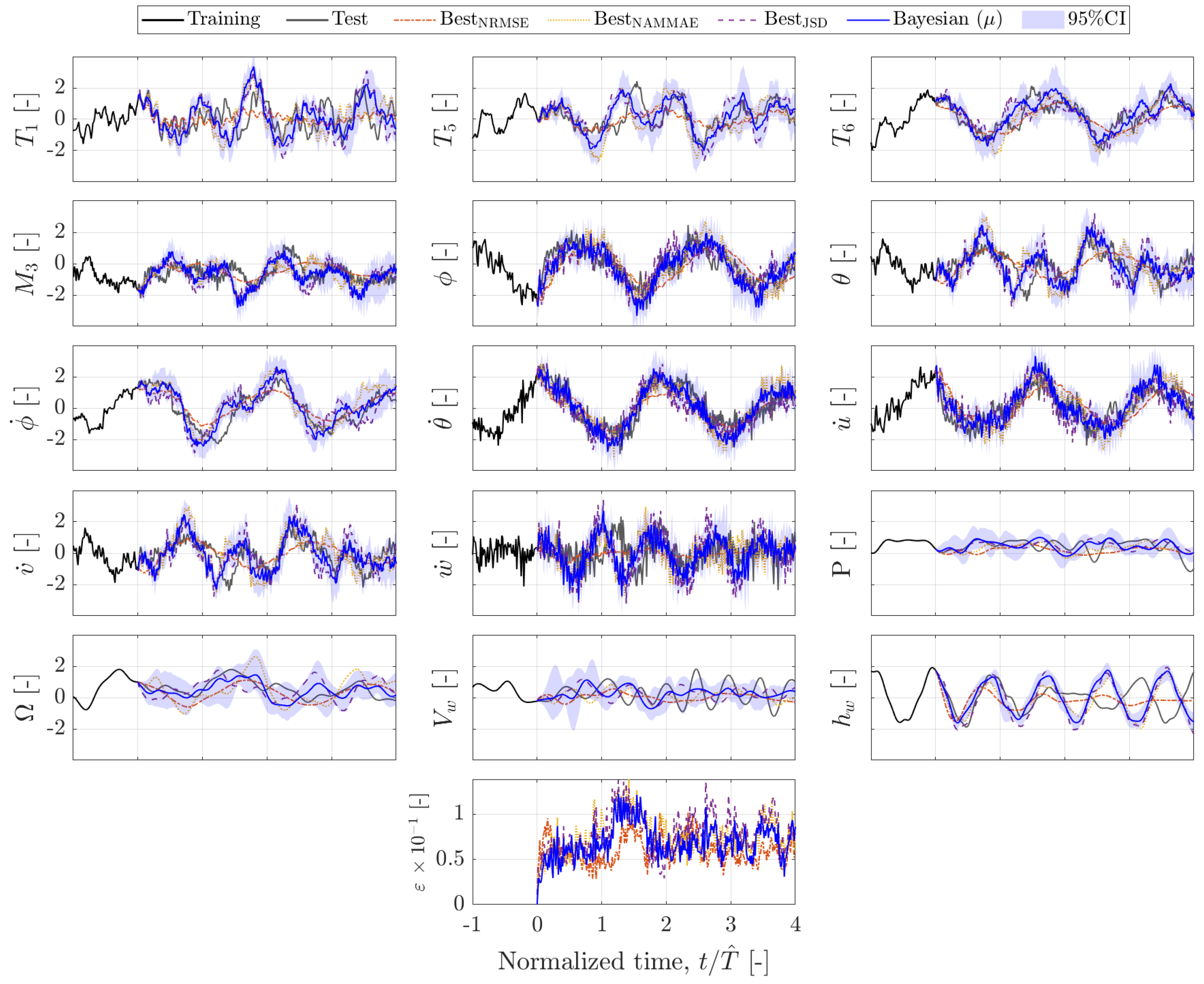

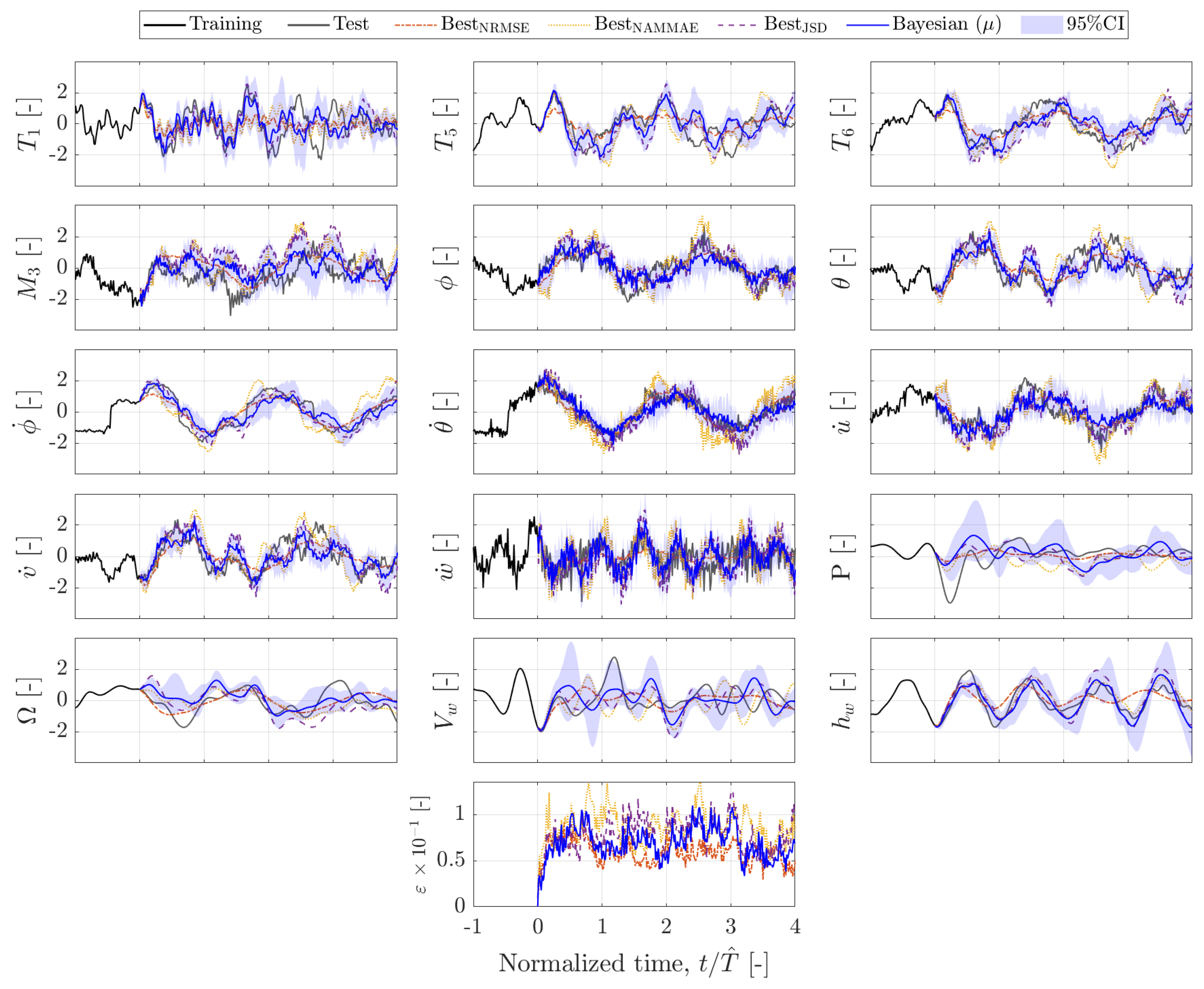

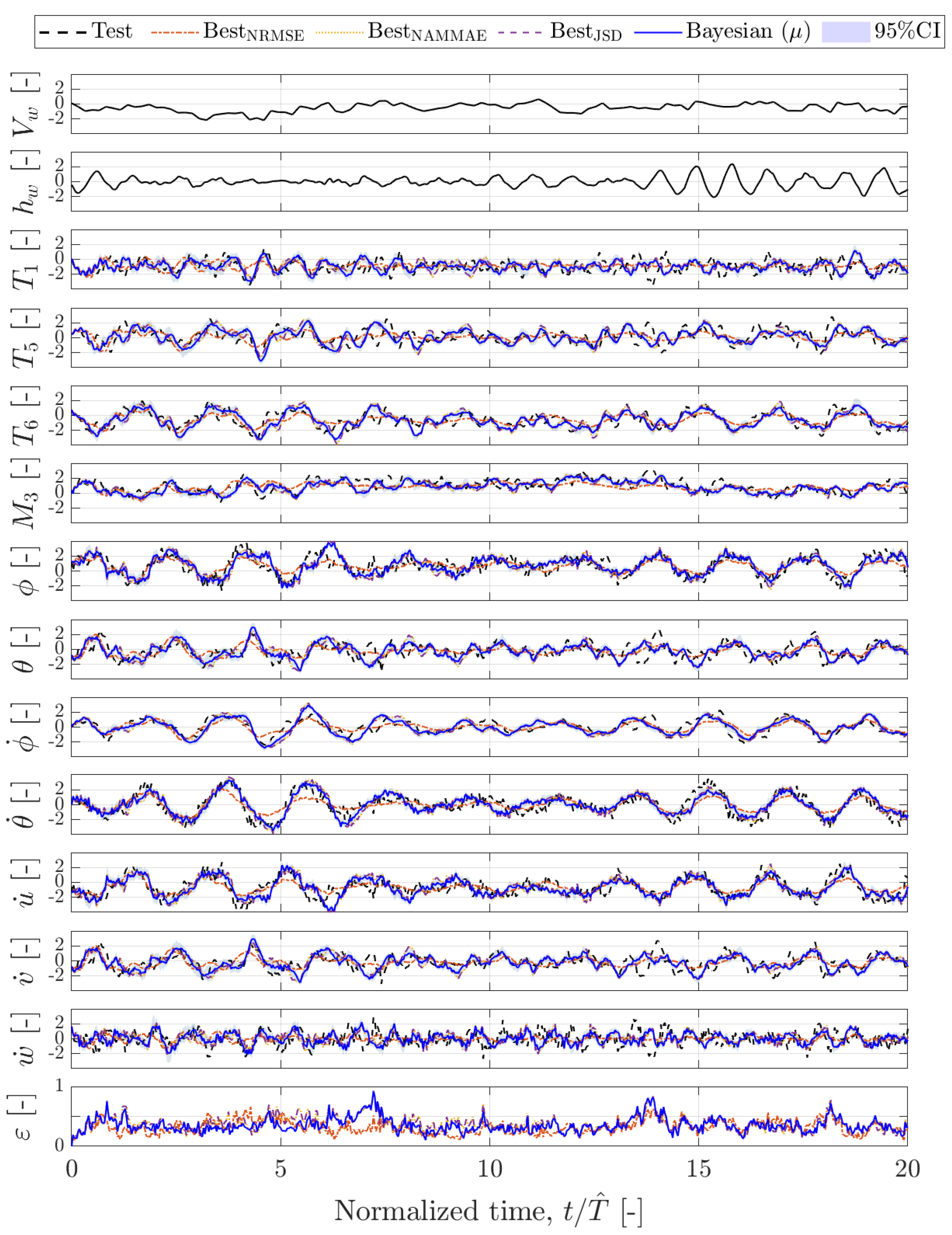

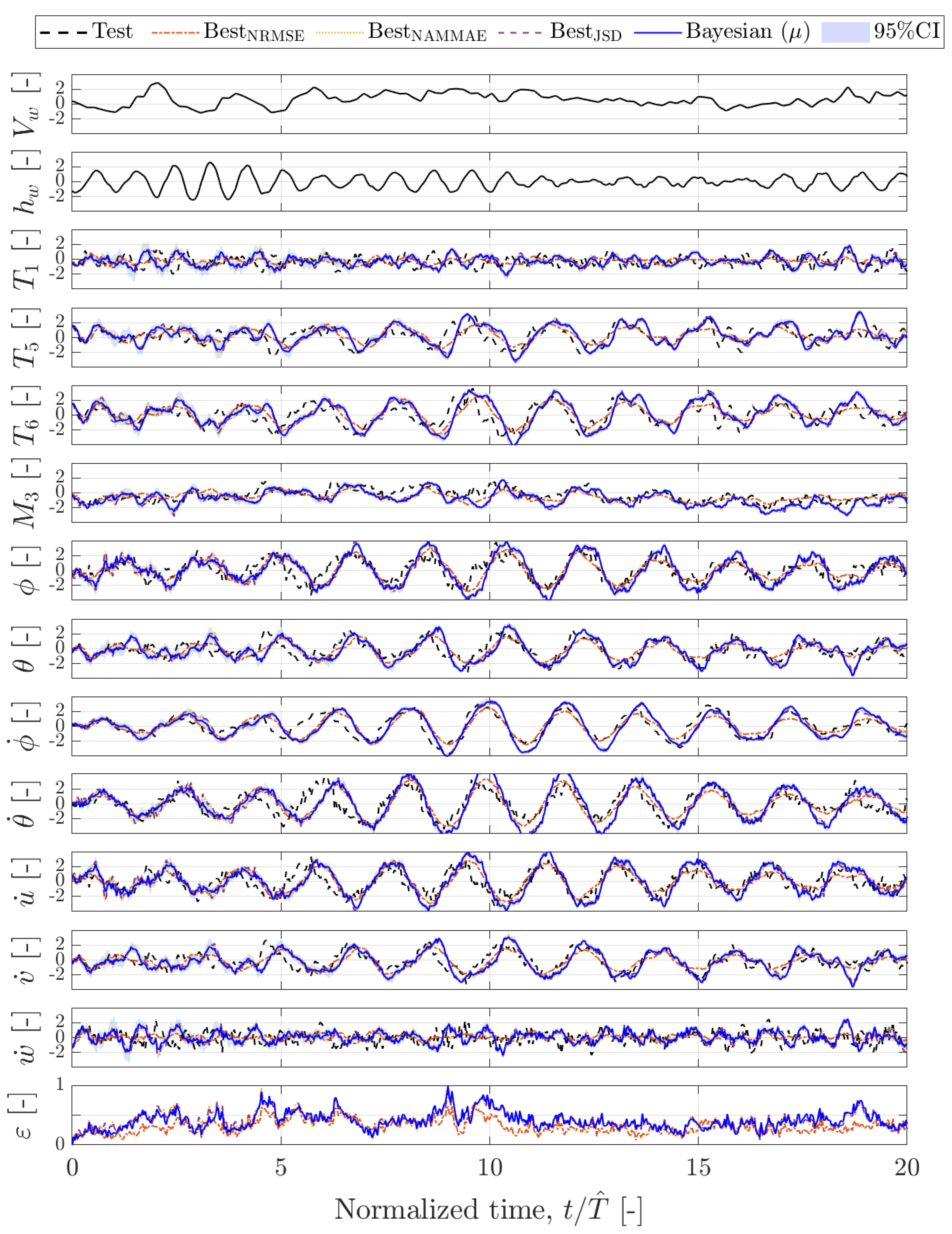

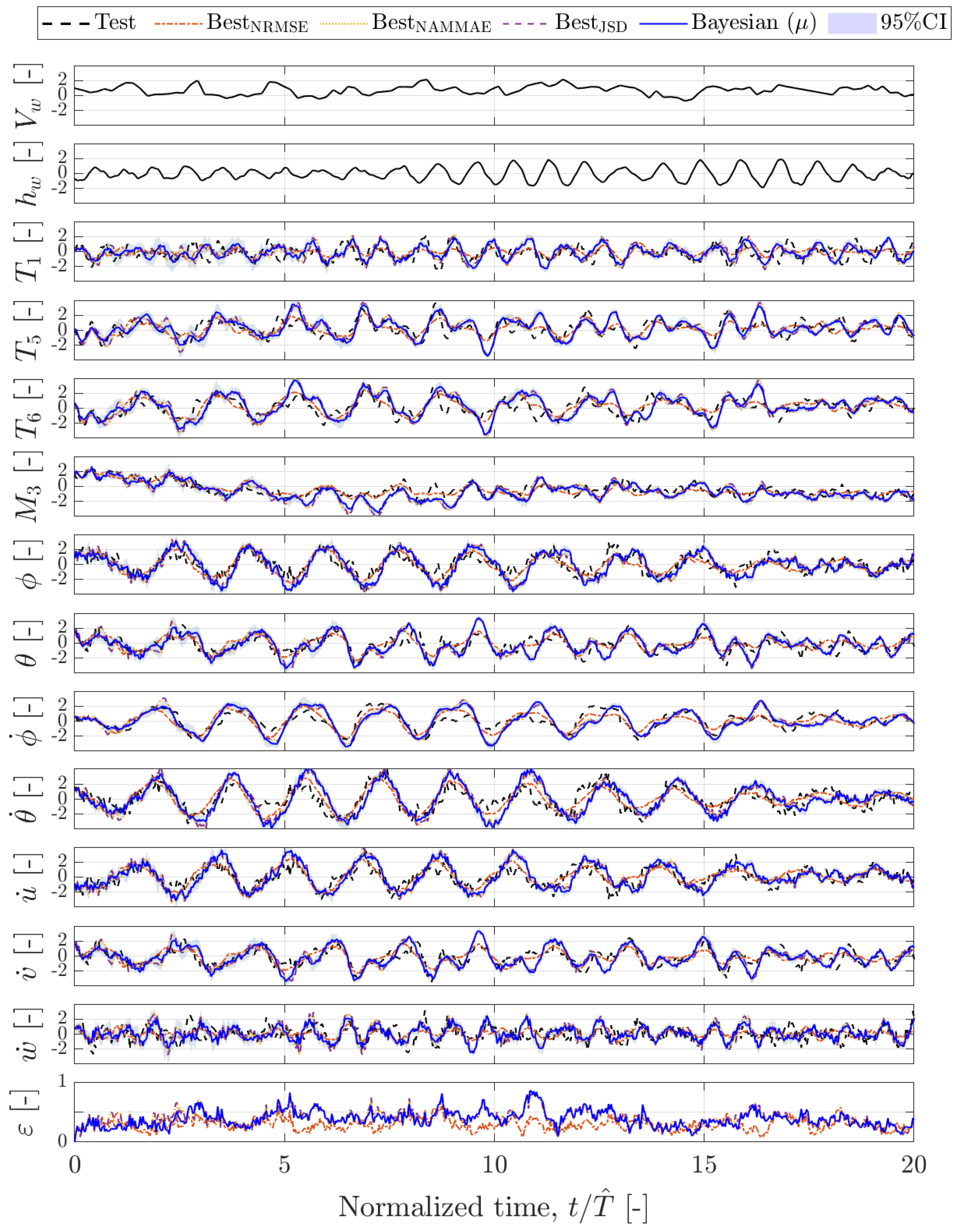

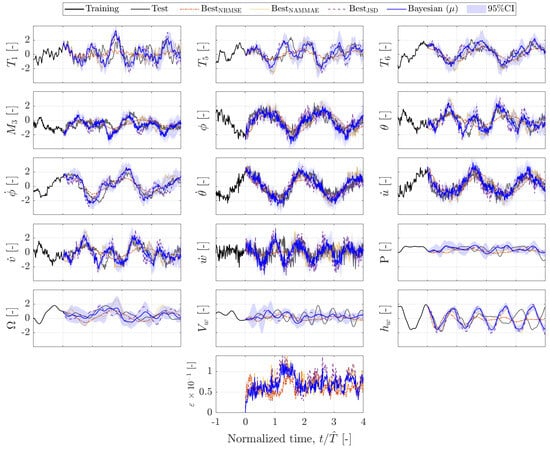

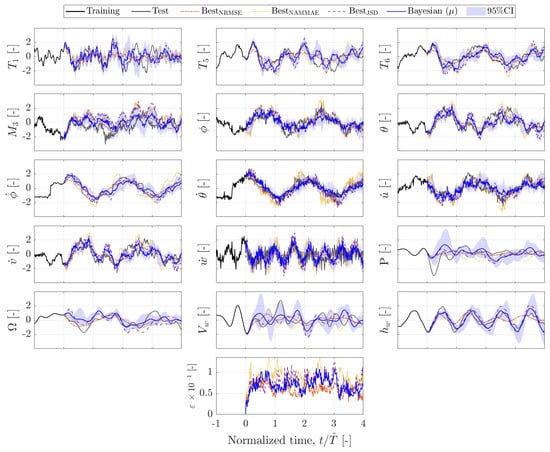

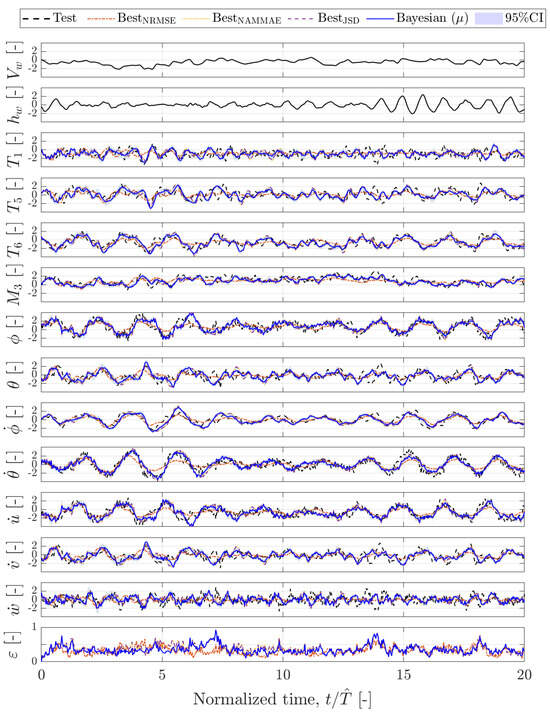

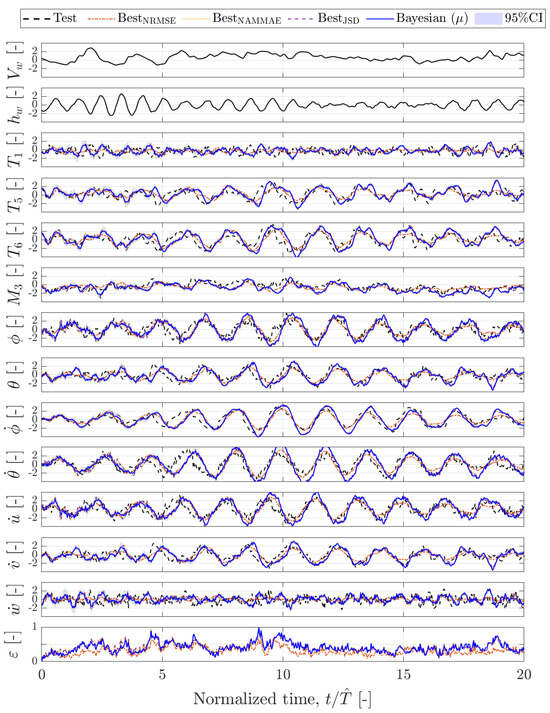

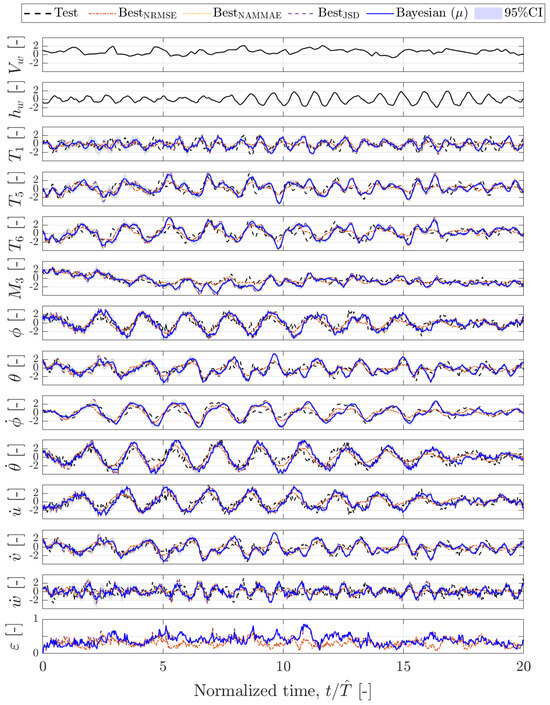

Figure 19, Figure 20, Figure 21 and Figure 22 show the predictions by the Hankel-DMDc for random test sequences taken as representatives. The figures show the input variables with a solid black line, the test sequence in a dashed black line, and the prediction obtained with the BestNRMSE, BestNAMMAE, and BestJSD hyperparameters, as reported in Table 4, with an orange dash-dotted, yellow dotted, and purple dashed line, respectively. BestNAMMAE and BestJSD produce similar predictions, following their similarity in hyperparameter values. The BestNRMSE line behaves slightly differently from the other two and seems to capture fewer high-frequency oscillations of the test sequence.

Figure 19.

Standardized time series prediction by deterministic (hyperparameters for best average metrics) and Bayesian Hankel-DMDc. Selected sequence 1.

Figure 20.

Standardized time series prediction by deterministic (hyperparameters for best average metrics) and Bayesian Hankel-DMDc. Selected sequence 2.

Figure 21.

Standardized time series prediction by deterministic (hyperparameters for best average metrics) and Bayesian Hankel-DMDc. Selected sequence 3.

Figure 22.

Standardized time series prediction by deterministic (hyperparameters for best average metrics) and Bayesian Hankel-DMDc. Selected sequence 4.

As a general consideration, even though a phase-resolved agreement is not always obtained, the predictions show a strong statistical similarity with the test signals, as shown both qualitatively by Figure 19, Figure 20, Figure 21 and Figure 22 and quantitatively by the JSD boxplots. It can be noted that, for all the plotted configurations, the value of does not show a monotonically increasing trend as the prediction time progresses; in other words, the prediction accuracy does not systematically degrade as the prediction horizon grows. This suggests that the systems identified by the Hankel-DMDc may keep the shown level of accuracy indefinitely in time. This characteristic is fundamental for the proposed usages of the method.

5.5. System Identification via Bayesian Hankel-DMDc

As it was done for the nowcasting, the Bayesian extension of the Hankel-DMDc algorithm for system identification is obtained by exploiting the insights on the hyperparameters derived from the deterministic analysis, in particular for the identification of a suitable range of variation of , , and . The three hyperparameters are treated as probabilistic variables uniformly distributed in ∼ , ∼ , and ∼ (the actual and are taken as the nearest integers from the calculated values).
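The sampling procedure described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names, the least-squares formulation with a truncated pseudoinverse, and the `ranges` argument (one uniform interval, in seconds, for the training length and for each of the two maximum delay times) are assumptions introduced here for illustration, and the numerical intervals used in the article are not reproduced.

```python
import numpy as np

def delay_embed(data, n_delays, start):
    """Column j stacks data[:, j], data[:, j-1], ..., data[:, j-n_delays] for j >= start."""
    m = data.shape[1]
    return np.vstack([data[:, start - d : m - d] for d in range(n_delays + 1)])

def fit_hankel_dmdc(x, u, sx, su):
    """Least-squares fit of z[k+1] = A z[k] + B v[k] on delay-augmented states z and inputs v."""
    s = max(sx, su)
    Z, V = delay_embed(x, sx, s), delay_embed(u, su, s)
    # rcond truncates numerically zero singular values (illustrative choice)
    G = Z[:, 1:] @ np.linalg.pinv(np.vstack([Z[:, :-1], V[:, :-1]]), rcond=1e-8)
    return G[:, : Z.shape[0]], G[:, Z.shape[0] :]

def predict(A, B, x, u, sx, su, k0, n_steps):
    """Advance the model from sample k0 using known inputs; returns x at k0+1..k0+n_steps."""
    n = x.shape[0]
    z = np.concatenate([x[:, k0 - d] for d in range(sx + 1)])
    out = np.empty((n, n_steps))
    for i in range(n_steps):
        k = k0 + i
        v = np.concatenate([u[:, k - d] for d in range(su + 1)])
        z = A @ z + B @ v
        out[:, i] = z[:n]          # the first block of z is the current state
    return out

def bayesian_hankel_dmdc(x, u, k0, n_steps, dt, ranges, n_mc=30, seed=0):
    """Monte Carlo over uniformly distributed hyperparameters (hypothetical ranges, in seconds)."""
    rng = np.random.default_rng(seed)
    preds = []
    for _ in range(n_mc):
        # sample training length, state delay, input delay; round to nearest sample count
        n_tr, sx, su = (max(1, int(round(rng.uniform(lo, hi) / dt))) for lo, hi in ranges)
        tr = slice(k0 - n_tr, k0 + 1)      # training window ends at sample k0
        A, B = fit_hankel_dmdc(x[:, tr], u[:, tr], sx, su)
        preds.append(predict(A, B, x, u, sx, su, k0, n_steps))
    preds = np.array(preds)
    lower, upper = np.quantile(preds, [0.025, 0.975], axis=0)   # 95% band
    return preds.mean(axis=0), lower, upper
```

Here `x` and `u` are arrays of shape (variables, samples) holding the measured states and inputs; the returned mean plays the role of the Bayesian expectation, and `lower` and `upper` bound the 95% band across the hyperparameter realizations.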

Figures 19–22 show a comparison between the prediction by the Bayesian system identification and the deterministic best configurations, with the blue shaded area representing the 95% confidence interval of the stochastic prediction. As observed for the deterministic analysis, no trend of accuracy degradation with the prediction time is noted for the Bayesian prediction. The Bayesian expectation is very close to the BestNAMMAE and BestJSD lines, with small uncertainty. This reflects a general robustness of the deterministic predictions in the range of variation of the stochastic parameters, which was already noted in the similarity between the BestNAMMAE and BestJSD solutions.

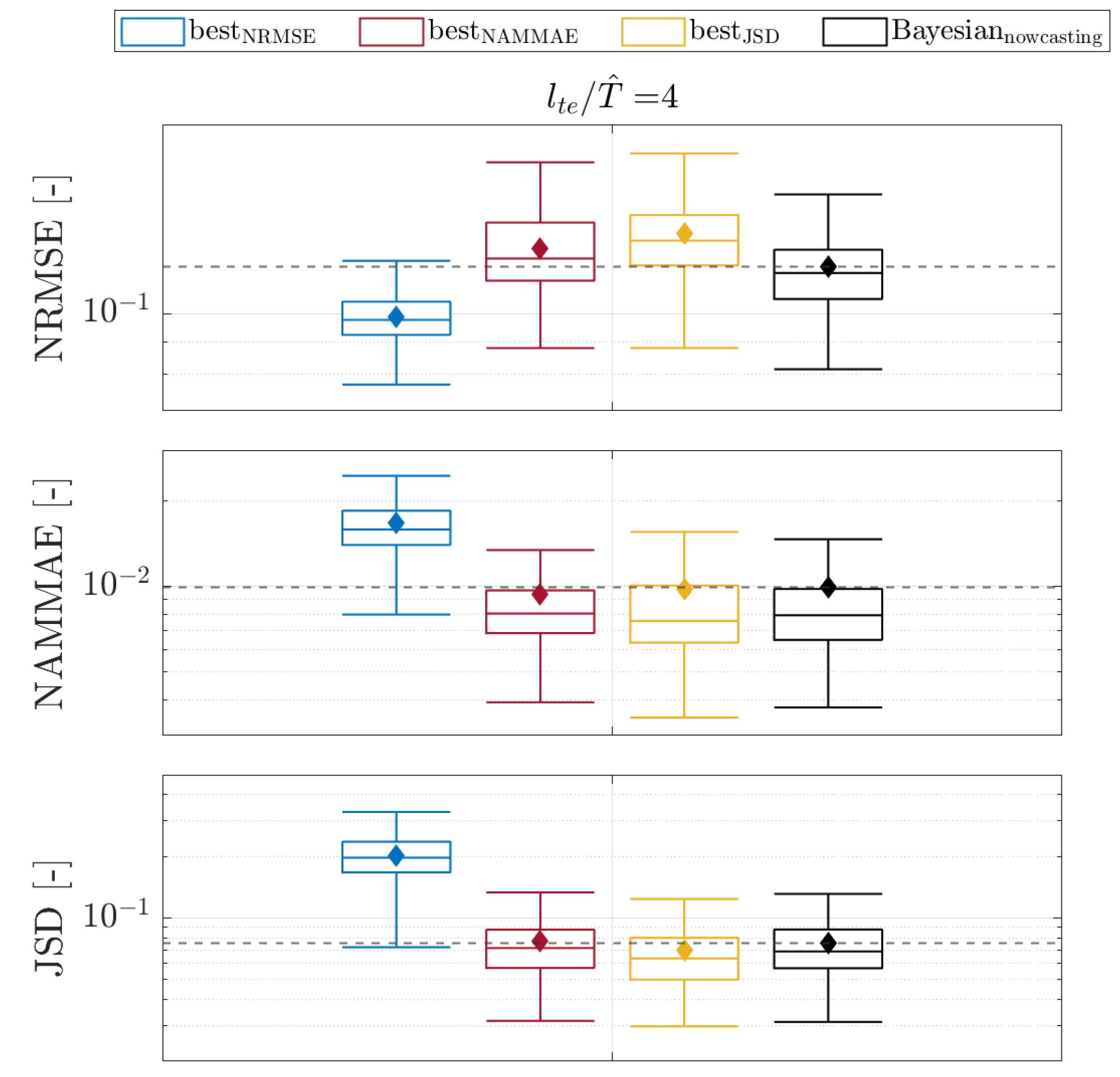

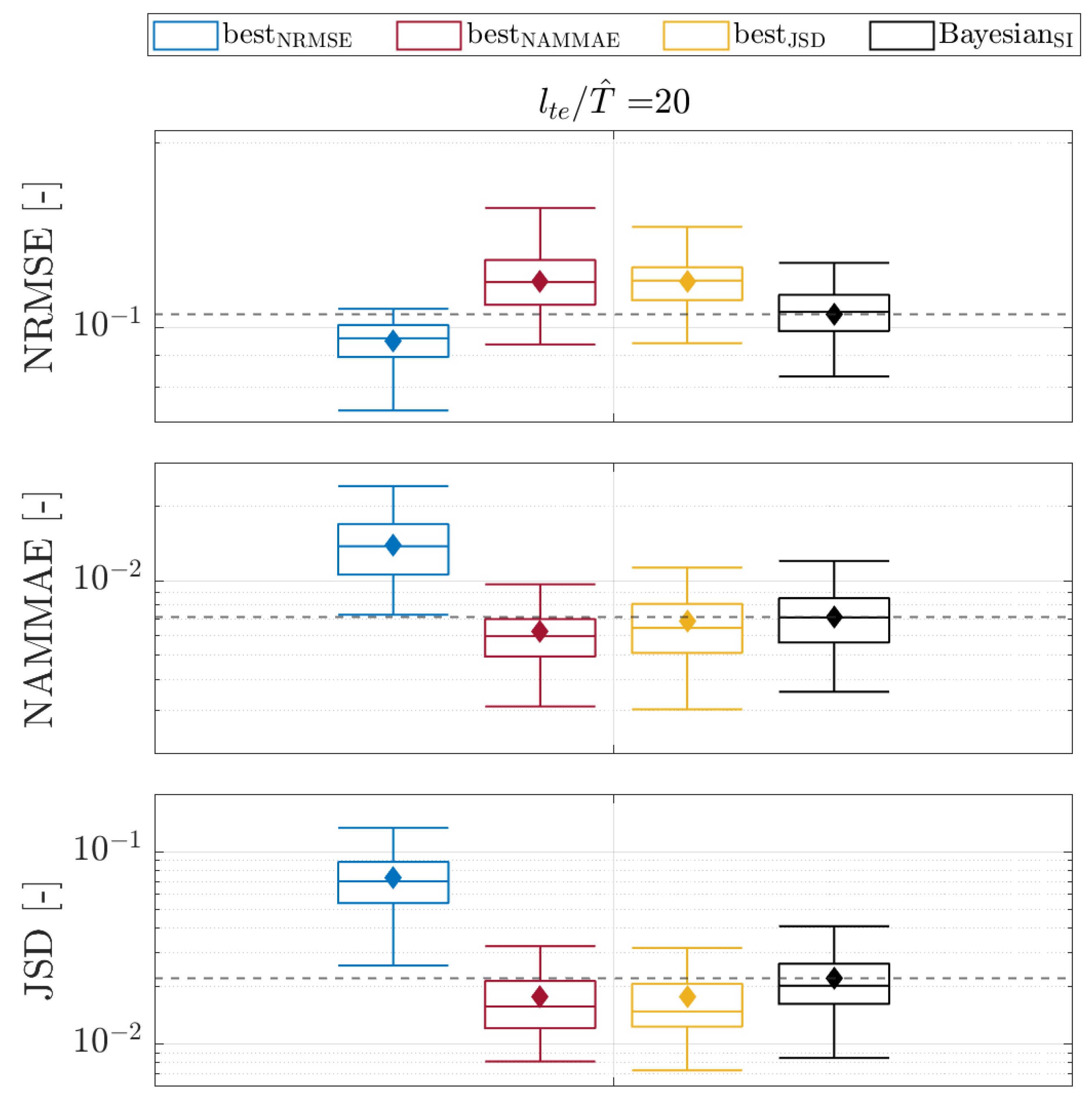

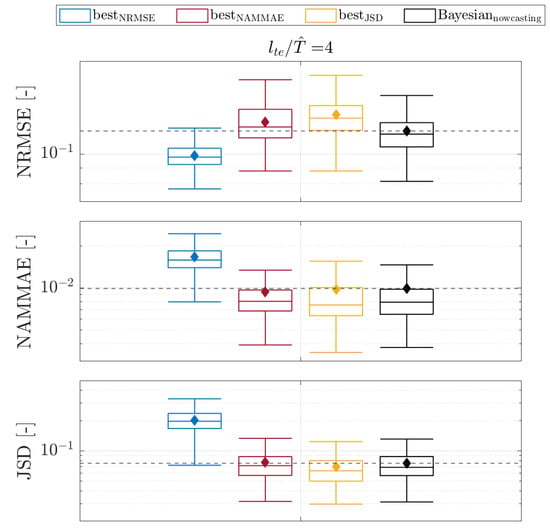

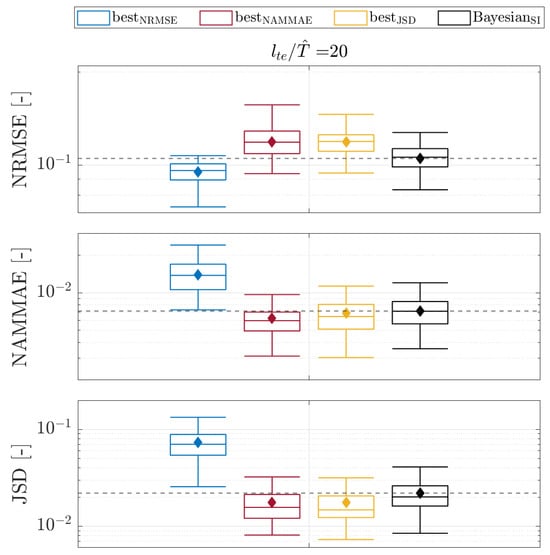

A comparison between the best deterministic configurations and the Bayesian system identification in terms of the NRMSE, NAMMAE, and JSD metrics is presented in Figure 23 for a test signal length of approximately 146 s. The Bayesian approach improves the NRMSE compared to the deterministic solutions, while the NAMMAE and JSD results are only slightly degraded.

Figure 23.

Error metrics comparison, Hankel-DMDc vs. Bayesian Hankel-DMDc system identification (∼146 s).

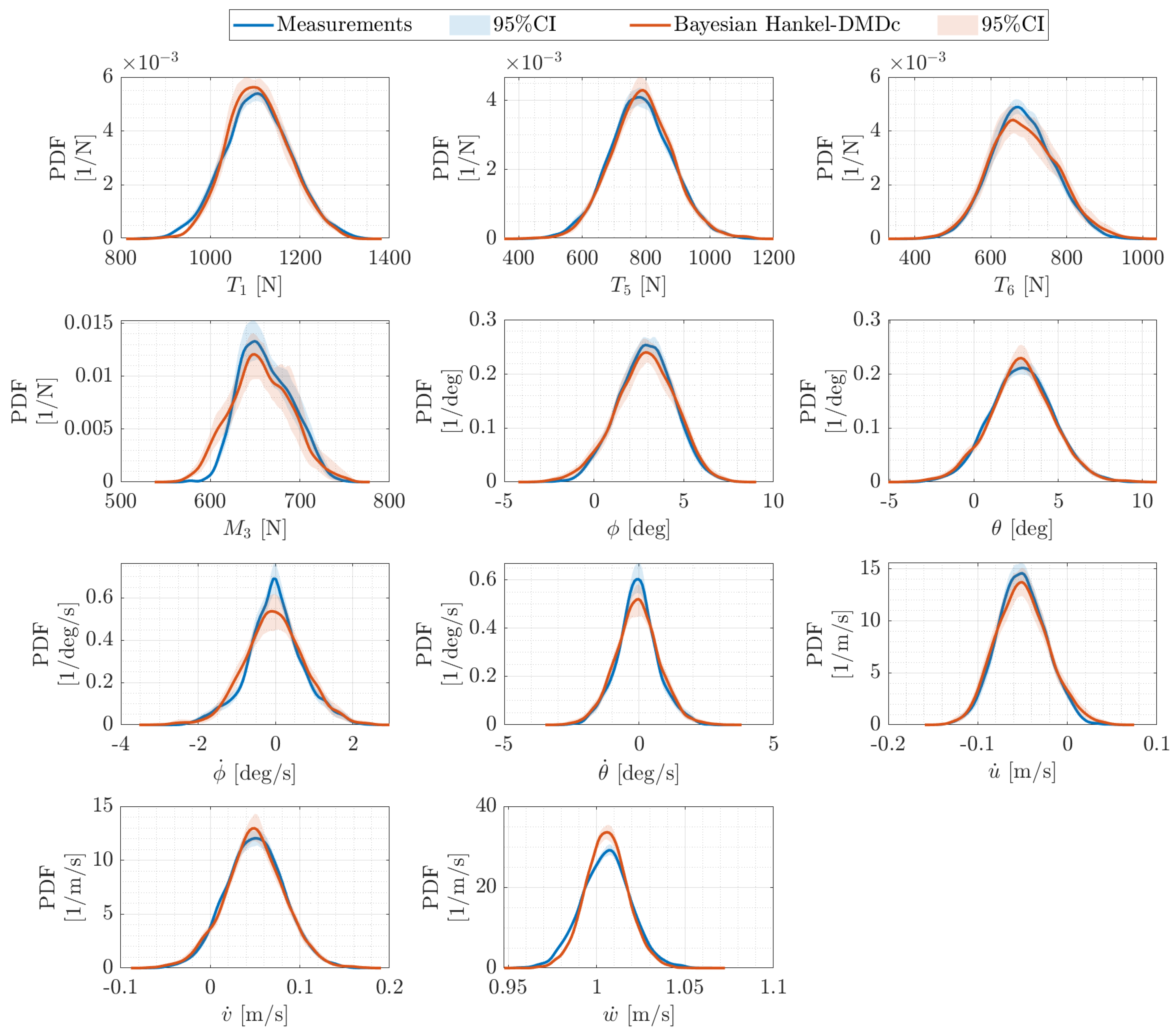

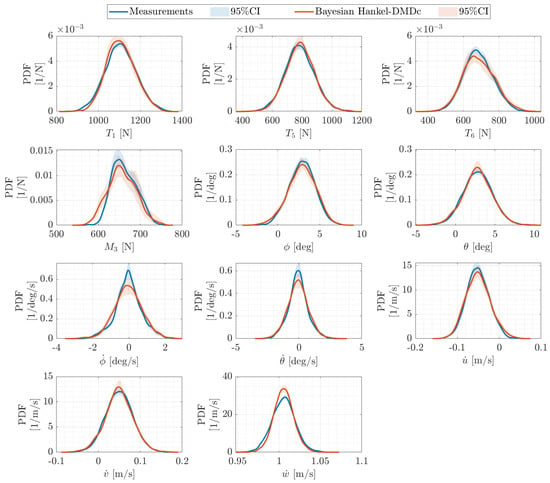

The effectiveness of the Bayesian Hankel-DMDc ROM as a surrogate model is further assessed by statistically comparing the probability density functions (PDFs) of each variable as obtained from the experimental measurements and the Bayesian system identification time series. In particular, a moving block bootstrap (MBB) method is employed to define 100 time series for the analysis, following [95]. The PDFs of each time series, for both the experimental data and the expected value of the ROM predictions, are obtained using kernel density estimation [96] as follows:

$$\hat{f}(x) = \frac{1}{n h} \sum_{i=1}^{n} K\left(\frac{x - x_i}{h}\right)$$

Here, $x_i$, $i = 1, \dots, n$, are the samples of the considered variable in the time series, and $K$ is a normal kernel function defined as

$$K(\xi) = \frac{1}{\sqrt{2\pi}} \exp\left(-\frac{\xi^2}{2}\right)$$

where $h$ is the bandwidth [97]. In this way, a set of 100 PDFs is obtained for each variable of the system state, which provides an estimate of the uncertainty of each PDF. Hence, an expected value and a confidence interval are calculated for the PDF of each variable for both the experimental data and the expected value of the ROM predictions. The quantile function $q$ is evaluated at probabilities $p = 0.025$ and $p = 0.975$, defining the lower and upper bounds of the 95% confidence interval as $[q(0.025), q(0.975)]$. Figure 24 shows, for each variable, the expected value of the PDFs over the bootstrapped series as solid lines, with the 95% confidence interval shown as shaded areas.
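The bootstrap-and-KDE procedure described above can be sketched in a few lines of NumPy. This is an illustrative sketch rather than the authors' code: the block length, the normal-reference rule of thumb used for the bandwidth, and all function names are assumptions made here, since the article defers those choices to [95,97].

```python
import numpy as np

def moving_block_bootstrap(series, block_len, n_series, rng):
    """Concatenate randomly chosen overlapping blocks until the original length is reached."""
    n = len(series)
    n_blocks = -(-n // block_len)                         # ceiling division
    starts = rng.integers(0, n - block_len + 1, size=(n_series, n_blocks))
    idx = (starts[:, :, None] + np.arange(block_len)).reshape(n_series, -1)[:, :n]
    return series[idx]                                    # shape (n_series, n)

def gaussian_kde(samples, grid, h):
    """f(x) = 1/(n h) * sum_i K((x - x_i)/h) with a standard normal kernel K."""
    z = (grid[:, None] - samples[None, :]) / h
    return np.exp(-0.5 * z**2).sum(axis=1) / (len(samples) * h * np.sqrt(2 * np.pi))

def pdf_with_ci(series, grid, block_len=50, n_series=100, seed=0):
    """Expected PDF and 95% confidence bounds over the bootstrapped series."""
    rng = np.random.default_rng(seed)
    boot = moving_block_bootstrap(series, block_len, n_series, rng)
    # normal-reference rule of thumb for the bandwidth (assumption, not from the article)
    h = 1.06 * series.std() * len(series) ** (-0.2)
    pdfs = np.array([gaussian_kde(b, grid, h) for b in boot])
    lower, upper = np.quantile(pdfs, [0.025, 0.975], axis=0)
    return pdfs.mean(axis=0), lower, upper
```

Calling `pdf_with_ci` once on a measured signal and once on the corresponding expected ROM prediction, over the same `grid`, yields the two curves and shaded bands that a comparison such as Figure 24 overlays.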

Figure 24.

Probability density function comparison between measured data and the expected value of the Bayesian Hankel-DMDc prediction on bootstrapped sequences for each variable. Shaded areas indicate the 95% confidence interval of the two PDFs.

The results show a good overall agreement between the distributions obtained from the ROM and the experimental measurements for all the system variables. In other words, the statistics of motions and loads emerging from the real data are effectively captured over the bootstrapped histories by the Bayesian Hankel-DMDc ROM. It may be noted that the tails of the measured PDFs, representing large amplitudes, are particularly well matched by the predictions for the motion variables. The largest differences are observed for the derivatives of the pitch and roll angles in the range of small values. The confidence intervals of the Bayesian Hankel-DMDc predictions adequately cover the PDFs of the measured data, indicating that the predictions are accurate and reliable. The statistics of the JSD metric, in terms of the expected value and confidence interval as evaluated on the bootstrapped time series for the eleven variables, are reported in Table 5 to quantify the differences between the experimental and DMD distributions in Figure 24. The analysis confirms the similarity between the probability distributions of the Bayesian Hankel-DMDc and real data sequences, as the JSD shows small expected values and small uncertainty. The agreement between the experimental and predicted PDFs of the system variables confirms that the ROM identified by the Hankel-DMDc can be effectively applied in unseen scenarios, providing accurate and reliable predictions from the knowledge of the input variables (wave elevation and wind speed). The statistical accuracy of the system identification is of paramount importance for the application of the method in lifecycle assessment and maintenance planning.
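As an illustration of how JSD statistics of this kind can be computed, the sketch below evaluates the Jensen-Shannon divergence between pairs of discretized PDFs and summarizes it over the bootstrapped series. It assumes the standard definition with base-2 logarithms, so that the value lies between 0 and 1, and a one-to-one pairing of measured and predicted bootstrapped PDFs; both are assumptions of this example, as are the function names.

```python
import numpy as np

def jsd(p, q):
    """Jensen-Shannon divergence (base-2 logarithm) between two discretized PDFs."""
    p = np.asarray(p, float) / np.sum(p)       # normalize to unit mass
    q = np.asarray(q, float) / np.sum(q)
    m = 0.5 * (p + q)                          # mixture distribution

    def kl(a, b):
        mask = a > 0                           # 0 * log(0) is taken as 0
        return np.sum(a[mask] * np.log2(a[mask] / b[mask]))

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

def jsd_statistics(pdfs_measured, pdfs_predicted):
    """Expected value, 95% lower bound, upper bound, and interval width of the JSD."""
    values = np.array([jsd(p, q) for p, q in zip(pdfs_measured, pdfs_predicted)])
    lower, upper = np.quantile(values, [0.025, 0.975])
    return values.mean(), lower, upper, upper - lower
```

The four returned quantities correspond to the kind of summary listed in Table 5: values close to zero with a narrow interval indicate that the two sets of distributions are nearly indistinguishable.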

Table 5.

Expected value and 95% confidence lower bound, upper bound, and interval of JSD of bootstrapped time series.

The training time for the system identification is investigated as in Section 5.3 on the same mid-range laptop, averaging over 10 realizations using 100 different hyperparameter values in the intervals of the Bayesian analysis. The larger amount of data handled compared to the nowcasting task results in larger matrices and longer times, ranging from s, s to s, s. These times are nevertheless lower than those of high-fidelity simulations or of the typical training of deep learning methods, and the approach is also more data-lean than the latter. The computational cost of the Bayesian system identification depends linearly on the number of Monte Carlo realizations used to sample the hyperparameter combinations, as a different Hankel-DMDc model is evaluated for each combination. However, as mentioned for nowcasting, the task is embarrassingly parallel, and the actual time required to obtain a Bayesian prediction can be kept close to the single deterministic evaluation time by using multiple computational units.
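Because each Monte Carlo realization is independent of the others, the ensemble can be distributed over workers with very little code. The sketch below uses a thread pool from the Python standard library; `predict_one` is a hypothetical callable that trains and evaluates one model for a given hyperparameter combination, and the choice of threads is an assumption of this example (a process pool or a cluster scheduler could be substituted).

```python
from concurrent.futures import ThreadPoolExecutor
import numpy as np

def ensemble_predict(predict_one, hyperparams, max_workers=4):
    """Evaluate one independent model per hyperparameter sample and gather the results."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map preserves the order of the hyperparameter samples
        preds = np.array(list(pool.map(predict_one, hyperparams)))
    lower, upper = np.quantile(preds, [0.025, 0.975], axis=0)   # 95% band
    return preds.mean(axis=0), lower, upper
```

With as many workers as realizations, the wall-clock time approaches that of a single deterministic evaluation plus the overhead of gathering the predictions.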

6. Conclusions

To the best of the authors’ knowledge, this work represents the first use of DMD and its variants to extract knowledge, as well as forecast and identify the system dynamics of a real-life FOWT using experimental field data. The approach also applies to other data sources such as simulations of various fidelity levels with no modifications.

Modal analysis revealed strong couplings between floater motions, tendon and mooring loads, and wave elevation, demonstrating the method’s ability to capture complex system interactions.

Hankel-DMD successfully demonstrated data-driven, equation-free, and data-lean short-term forecasting (nowcasting) of FOWT motions and loads, achieving accurate predictions up to four wave encounters with a computational cost compatible with real-time execution and the accuracy needed for applications such as model predictive control and digital twinning. By spanning an augmented coordinate system that incorporates time-delayed variables in the system state, Hankel-DMD effectively captures the essential nonlinear features of FOWT dynamics within a linear framework. Uncertainty quantification is introduced through a novel Bayesian extension of the Hankel-DMD that considers the hyperparameters of the method as stochastic variables, with prior distributions identified from a full-factorial deterministic analysis. A systematic difficulty was encountered in predicting the power P extracted by the turbine and the blade RPM. This is partially explained by the very weak coupling observed in the modal analysis between these two variables and the FOWT motions and loads, which dramatically reduces the amount of data explaining the dynamics of the power and RPM that is available to the Hankel-DMD method for extracting a meaningful data-driven model. An additional challenge for the DMD-based method is the strongly nonlinear character of the power and RPM signals due to the intervention of the turbine control system.

The system identification task is performed by applying the Hankel-DMDc method, which models the FOWT as an externally forced dynamical system with wave elevation and wind speed as inputs. This approach yielded data-driven, equation-free reduced-order models capable of extended prediction horizons using small data, provided that the input variables are continuously known. Such models provide statistically accurate predictions with significant computational efficiency, making them valuable for applications in control systems, life-cycle assessments, and maintenance planning. A novel Bayesian extension incorporating uncertainty quantification in the Hankel-DMDc is obtained by following the same rationale as for the Bayesian Hankel-DMD.

Despite the challenges posed by noisy data and strong nonlinearities from extreme conditions, the DMD-based methods showed promising results for both nowcasting and system identification. The complexity and variety of operational conditions in the experimental data were, on the one hand, valuable for testing the DMD-based system identification in a realistic environment; on the other hand, they posed a significant challenge, requiring long training signals and numerous delayed variables.

Future work will explore hybridizing DMD methods with machine learning-based approaches to further improve noise rejection and the capture of nonlinear features while preserving the DMD’s inherent characteristics. In addition, a different approach to the system identification task will be tested for model parametrization and generalization: first applying the Hankel-DMDc to data collected in more specific conditions/excitations (such as single wave headings, specific magnitude ranges, wind direction, etc.), and then using parametric reduced-order model interpolation [98,99]. Enhancements to the experimental setup, such as multiple wave elevation measurements and additional load cells, would also provide more comprehensive data for improved system identification, adding, for example, meaningful information about wave and wind directions.

Author Contributions

G.P.: Methodology, Software, Investigation, Validation, Formal analysis, Writing—Original Draft, Visualization. A.B.: Investigation, Data curation, Resources. A.L.: Investigation, Data curation, Resources. C.P.: Investigation, Data curation, Resources. A.S.: Methodology, Writing—Review and Editing. C.L.: Investigation, Data curation, Resources, Project administration, Funding acquisition. M.D.: Conceptualization, Methodology, Resources, Writing—Review and Editing, Supervision. All authors have read and agreed to the published version of the manuscript.

Funding

This research is supported through the “Ricerca di Sistema” project (RSE, 1.8 “Energia elettrica dal mare”) by the Italian Ministry of the Environment and Energy Safety (Ministero dell’Ambiente e della Sicurezza Energetica, MASE), CUP B53C22008560001, and through “Network 4 Energy Sustainable Transition–NEST”, Project code PE0000021, Concession Decree No. 1561 of 11.10.2022, by the Italian Ministry of University and Research (Ministero dell’Università e della Ricerca, MUR), CUP B53C22004060006.

Data Availability Statement

The original data presented in the study are openly available at https://cnrsc-my.sharepoint.com/:f:/g/personal/giorgio_palma_cnr_it/EvegVNzuvytGpPio8gj8IJwBAdSfNIfYJ7dniapE0G98bA?e=AvrcRj accessed on 21 March 2025.

Conflicts of Interest

The authors declare no conflicts of interest.

List of Symbols and Abbreviations

| Abbreviations | |
| --- | --- |
| ADCP | acoustic Doppler current profiler |
| DMDc | dynamic mode decomposition with control |
| DMD | dynamic mode decomposition |
| EMD | empirical mode decomposition |
| EU | European Union |
| FOWT | floating offshore wind turbine |
| GRNN | gated recurrent neural network |
| JSD | Jensen-Shannon divergence |
| LCOE | levelized cost of energy |
| LSTM | long short-term memory |
| MBB | moving block bootstrap |
| NAMMAE | normalized average minimum/maximum absolute error |
| NRMSE | normalized root mean square error |
| PDF | probability density function |
| PLC | programmable logic controller |
| POD | proper orthogonal decomposition |
| ROM | reduced order model |
| SVD | singular value decomposition |

| Greek symbols | |

| time history of the expected value of the components of the vector | |

| time history of the standard deviation of the components of the vector | |

| time step for discretization | |

| floater roll velocity | |

| floater pitch velocity | |

| expected value of | |

| wind turbine rotor angular velocity | |

| k-th eigenvalue of , | |

| floater roll angle | |

| standard deviation of | |

| floater pitch angle | |

| k-th eigenvector of | |

| Symbols | |

| Hermitian transpose operator on | |

| non conjugate transpose operator on | |

| Moore-Penrose pseudoinverse of | |

| j-th time snapshot of , | |

| floater acceleration along x direction | |

| floater acceleration along y direction | |

| floater acceleration along z direction | |

| augmented system state vector | |

| snapshot of the augmented system state vector | |

| floater wave encounter frequency | |

| floater wave encounter period. reference period for non-dimensional time | |

| inner product | |

| discrete time system state matrix | |

| discrete time system input matrix | |

| matrix collecting delayed system state vector snapshots excluding the first | |

| matrix collecting delayed system state vector snapshots excluding the last | |

| augmented system input vector | |

| system input vector | |

| matrix collecting system state vector snapshots excluding the first | |

| matrix collecting system state vector snapshots excluding the last | |

| system state vector | |

| initial condition of the system state vector | |

| matrix collecting delayed system input vector snapshots excluding the last | |

| continuous time system matrix | |

| augmented discrete time system state matrix | |

| augmented discrete time system input matrix | |

| i-th coordinate of the initial condition in the eigenvector basis | |

| wave elevation | |

| maximum delay time in augmented input | |

| maximum delay time in augmented state | |

| test signal time length | |

| training signal time length | |

| number of maximum delay samples in augmented input | |

| number of maximum delay samples in augmented state | |

| number of samples in training signal | |

| P | power extracted by the wind turbine |

| t | time |

| relative wind speed at the wind turbine rotor | |

| load at the i-th mooring of the FOWT platform | |

| load at the i-th tendon of the floater counterweight |

References

- Falkner, R. The Paris Agreement and the new logic of international climate politics. Int. Aff. 2016, 92, 1107–1125.

- Nastasi, B.; Markovska, N.; Puksec, T.; Duić, N.; Foley, A. Renewable and sustainable energy challenges to face for the achievement of Sustainable Development Goals. Renew. Sustain. Energy Rev. 2022, 157, 112071.