Improved Convolutional Neural Network YOLOv5 for Underwater Target Detection Based on Autonomous Underwater Helicopter

Abstract

1. Introduction

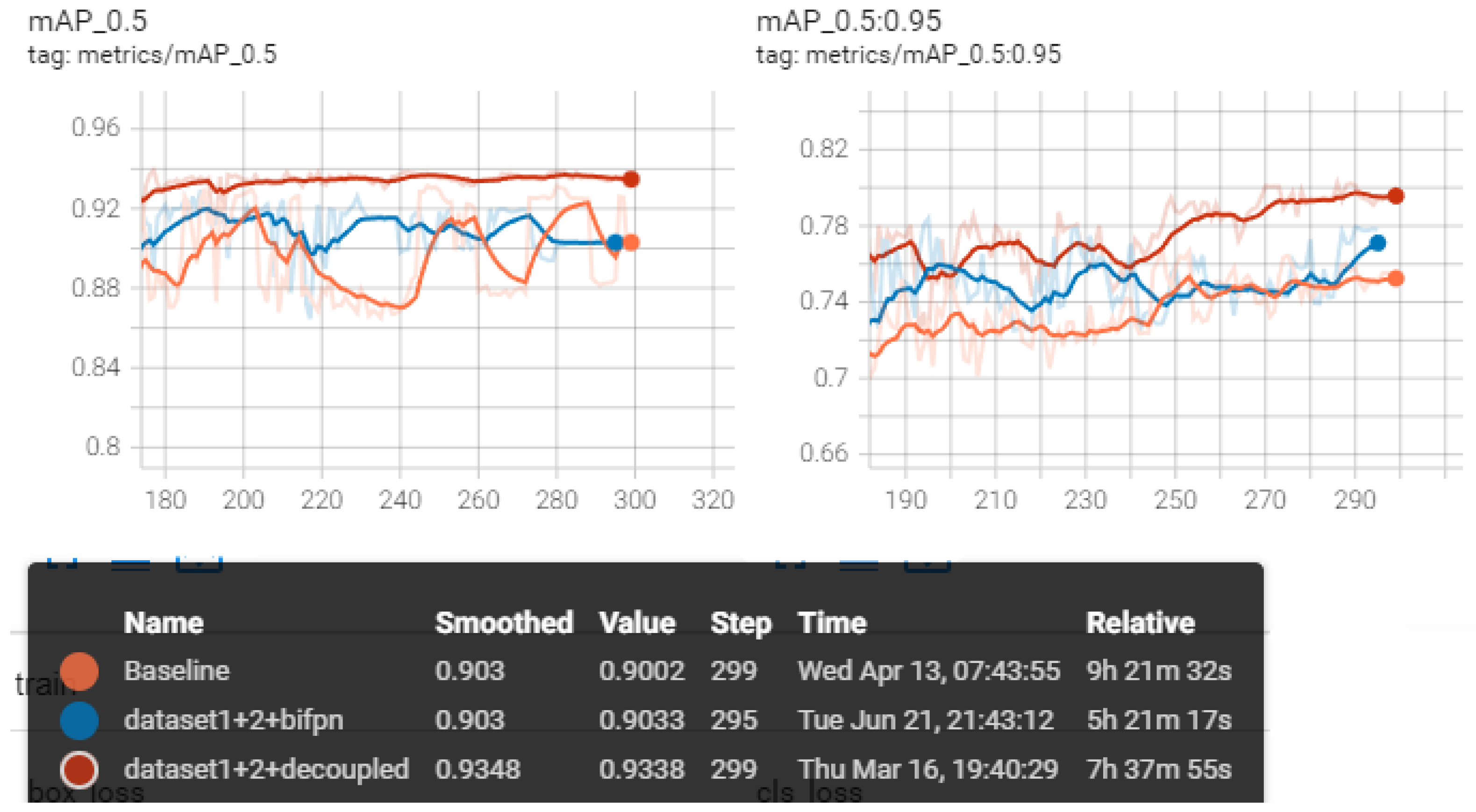

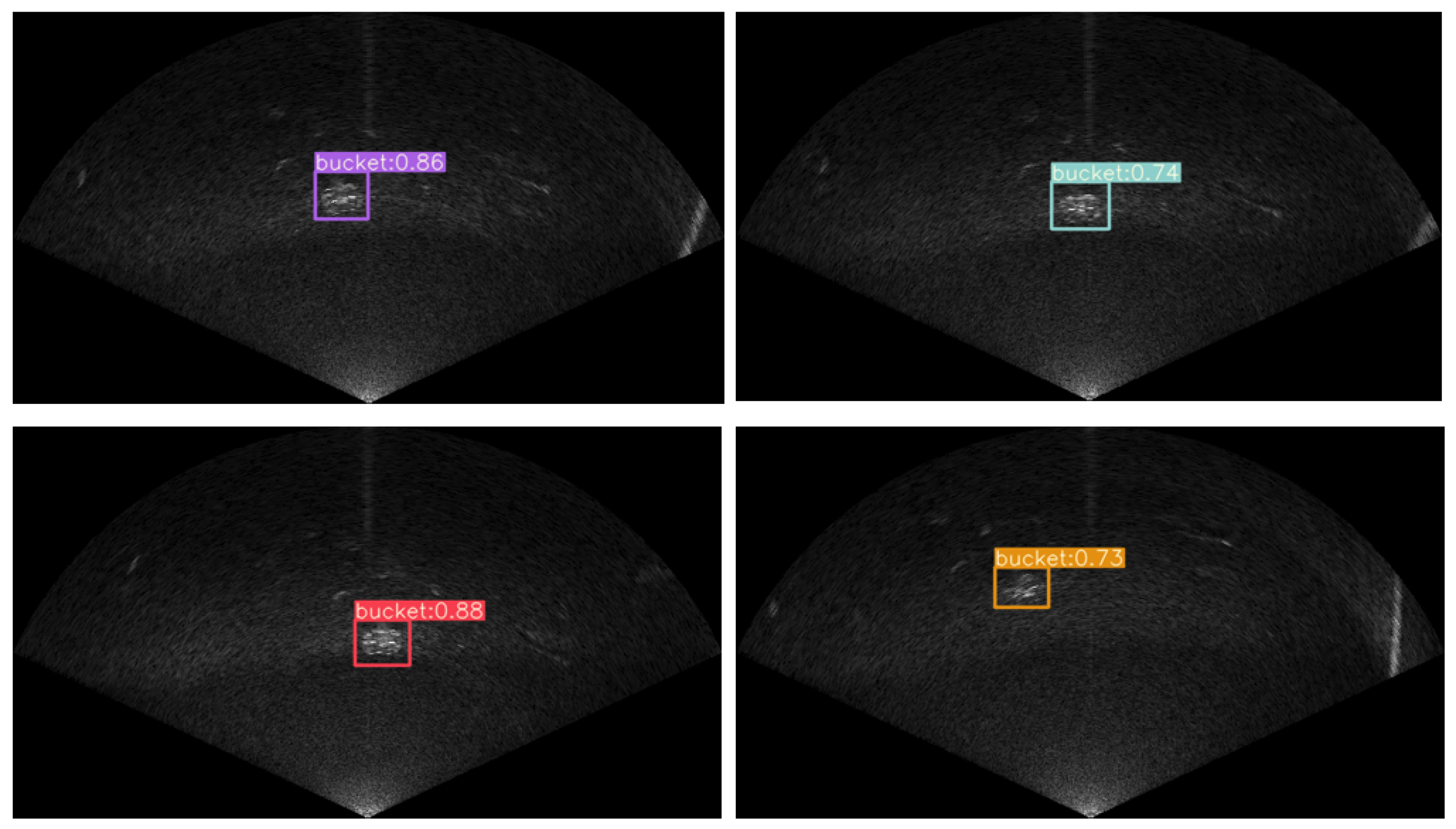

- Some improvements based on YOLOv5 are introduced, including attention mechanisms that add to the backbone, BiFPN that replaces the PANet as the neck, and decoupled heads to separately classify and localize the targets.

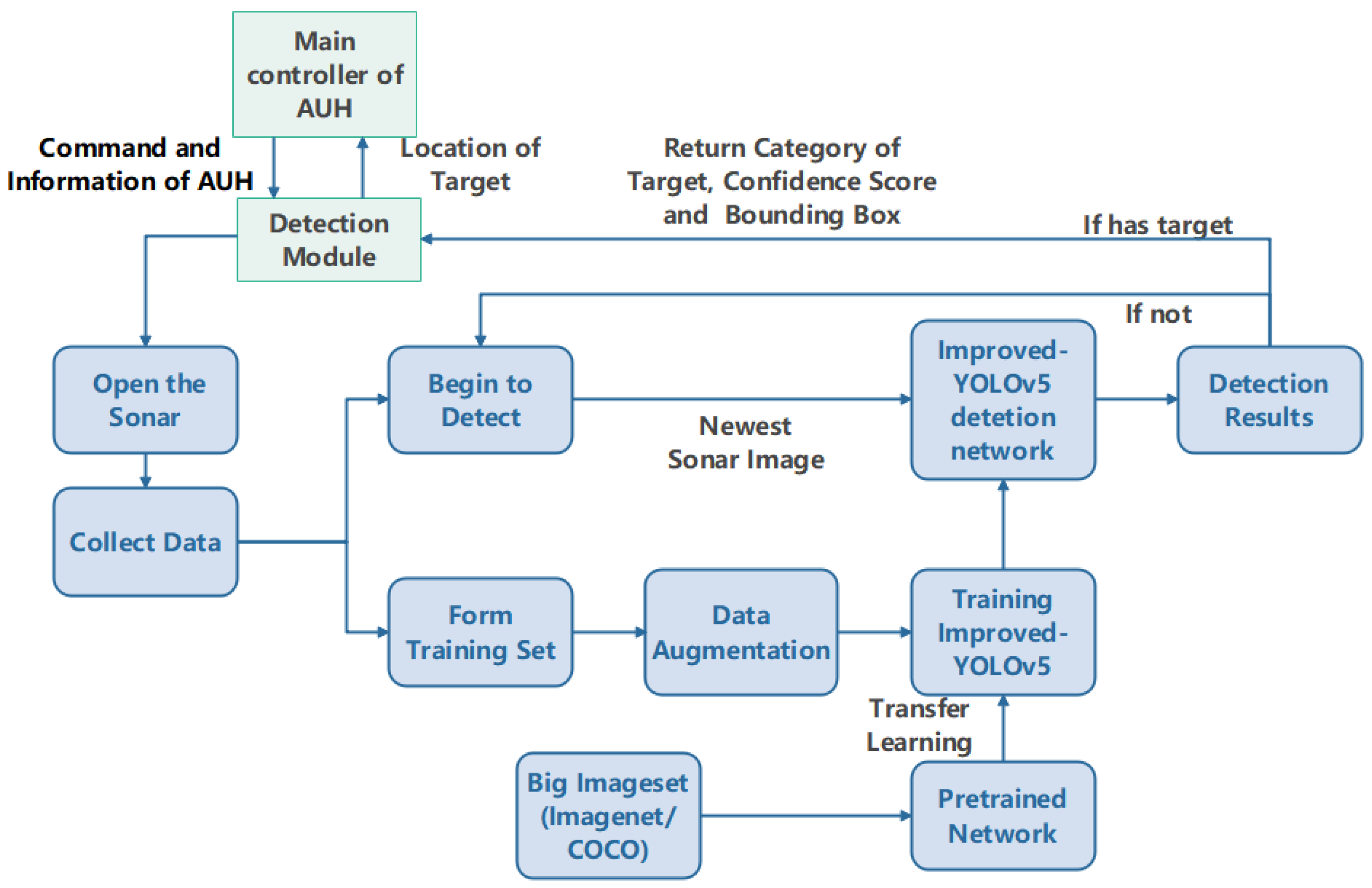

- A system for underwater target detection based on sonar images is presented. From training network improved-YOLOv5 to deploying it, to AUH, and then to obtaining the detection results from the algorithm, the whole system to detect the desired underwater targets has been designed.

- Several tank experiments and outdoor tests are implemented to validate the detection system, and the superiority of the improved-YOLOv5 in target detecting is validated.

2. Methods

2.1. Target Detection System Design

2.2. Network Building

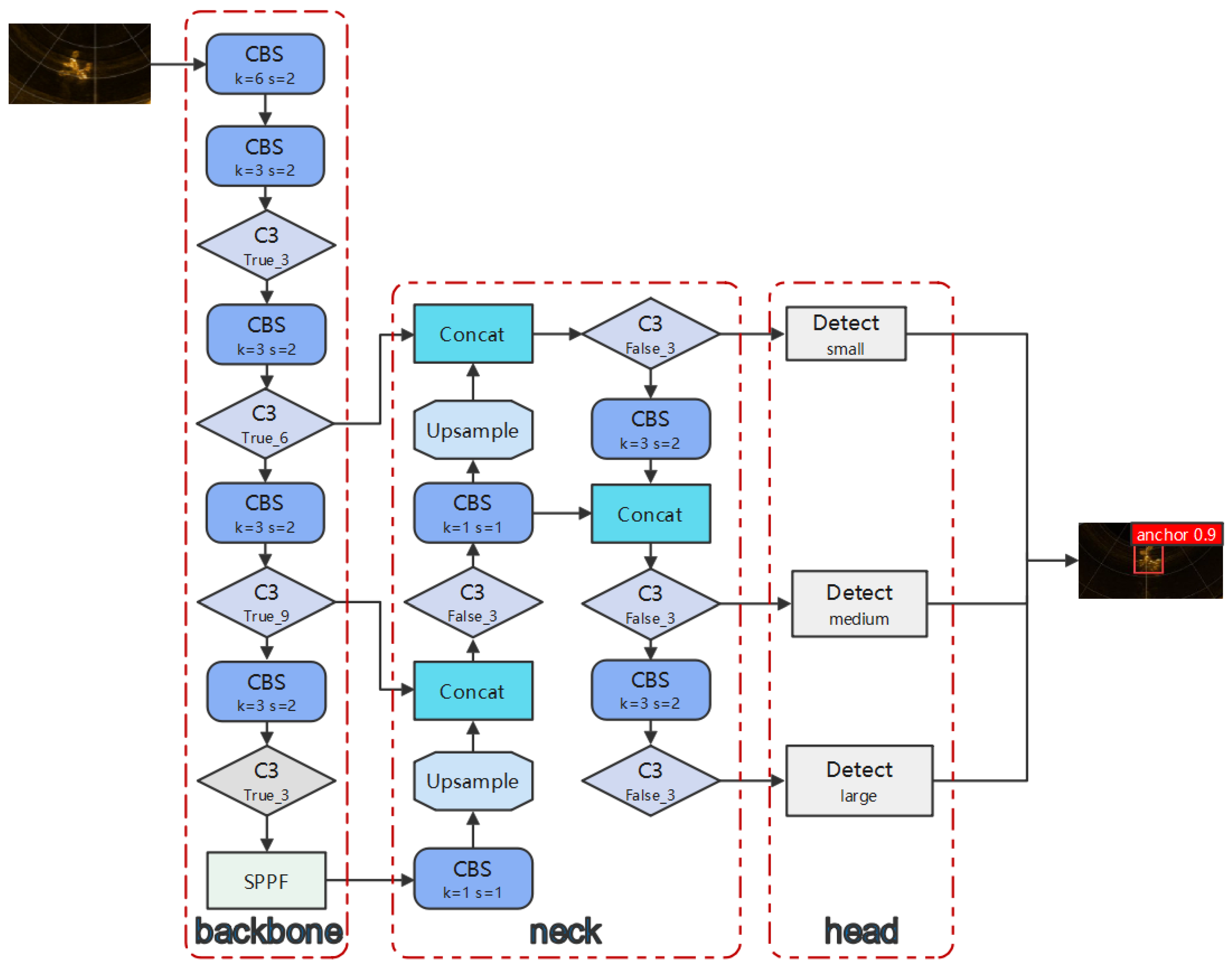

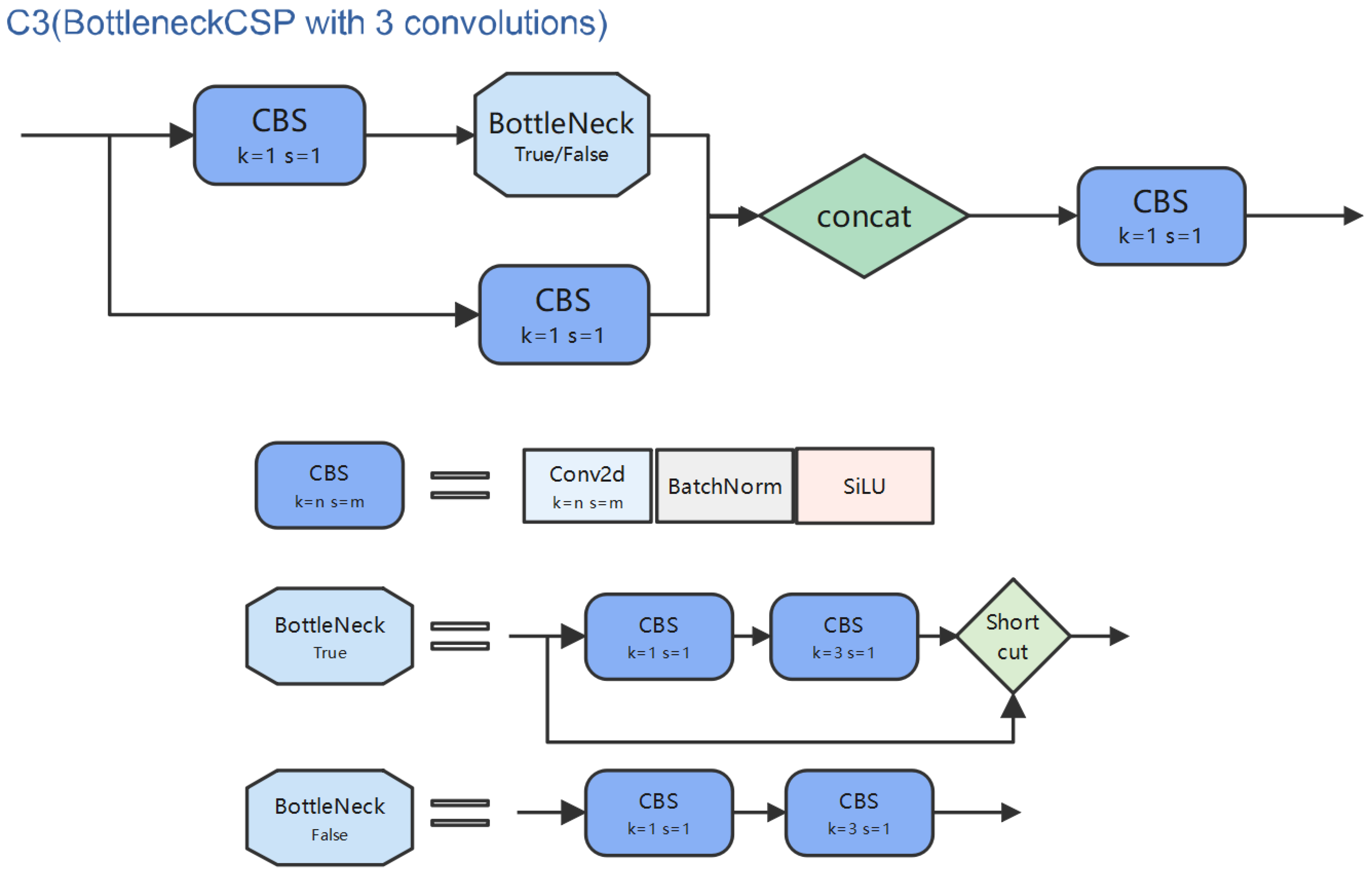

2.2.1. Basic Architecture

Backbone

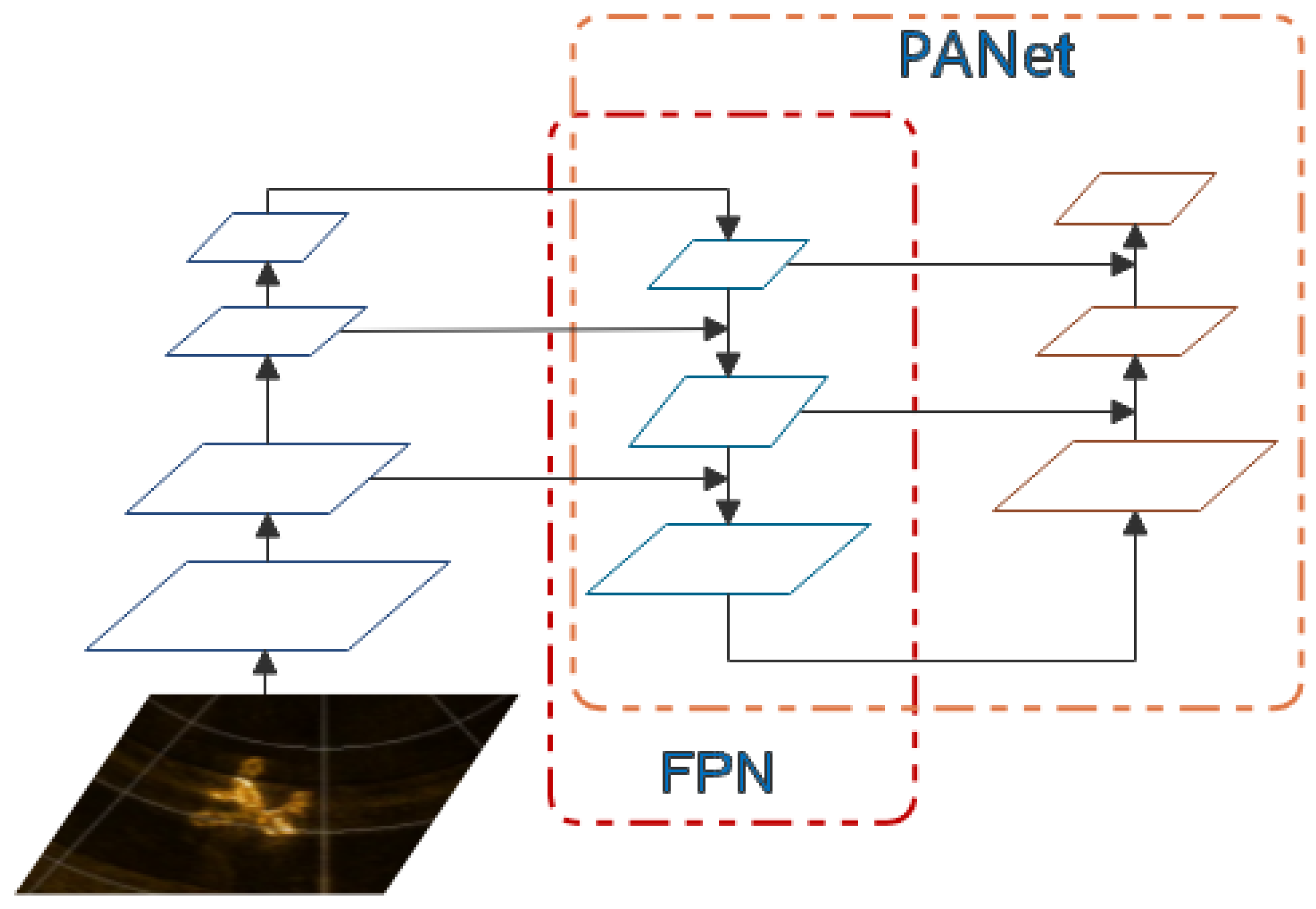

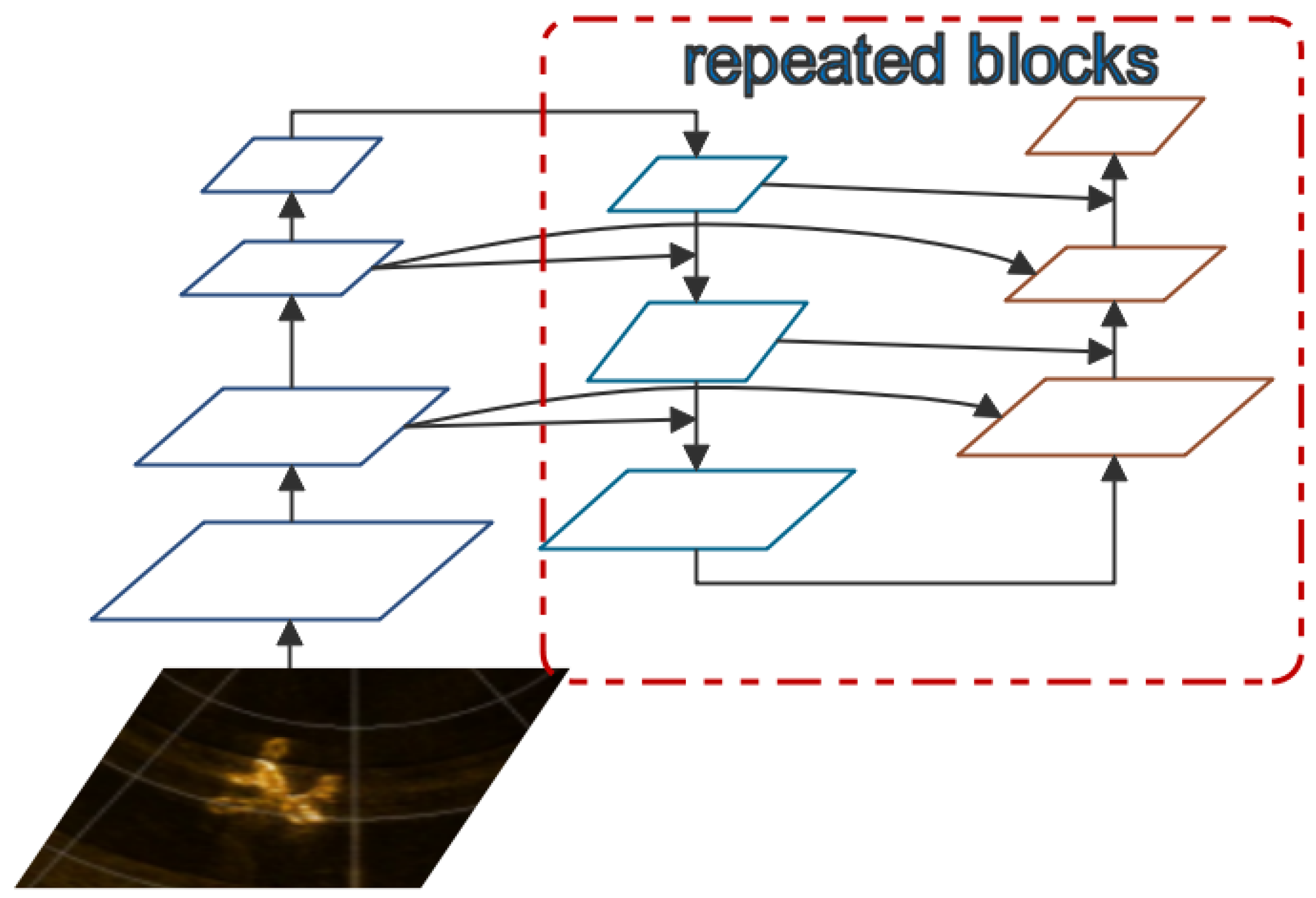

Neck

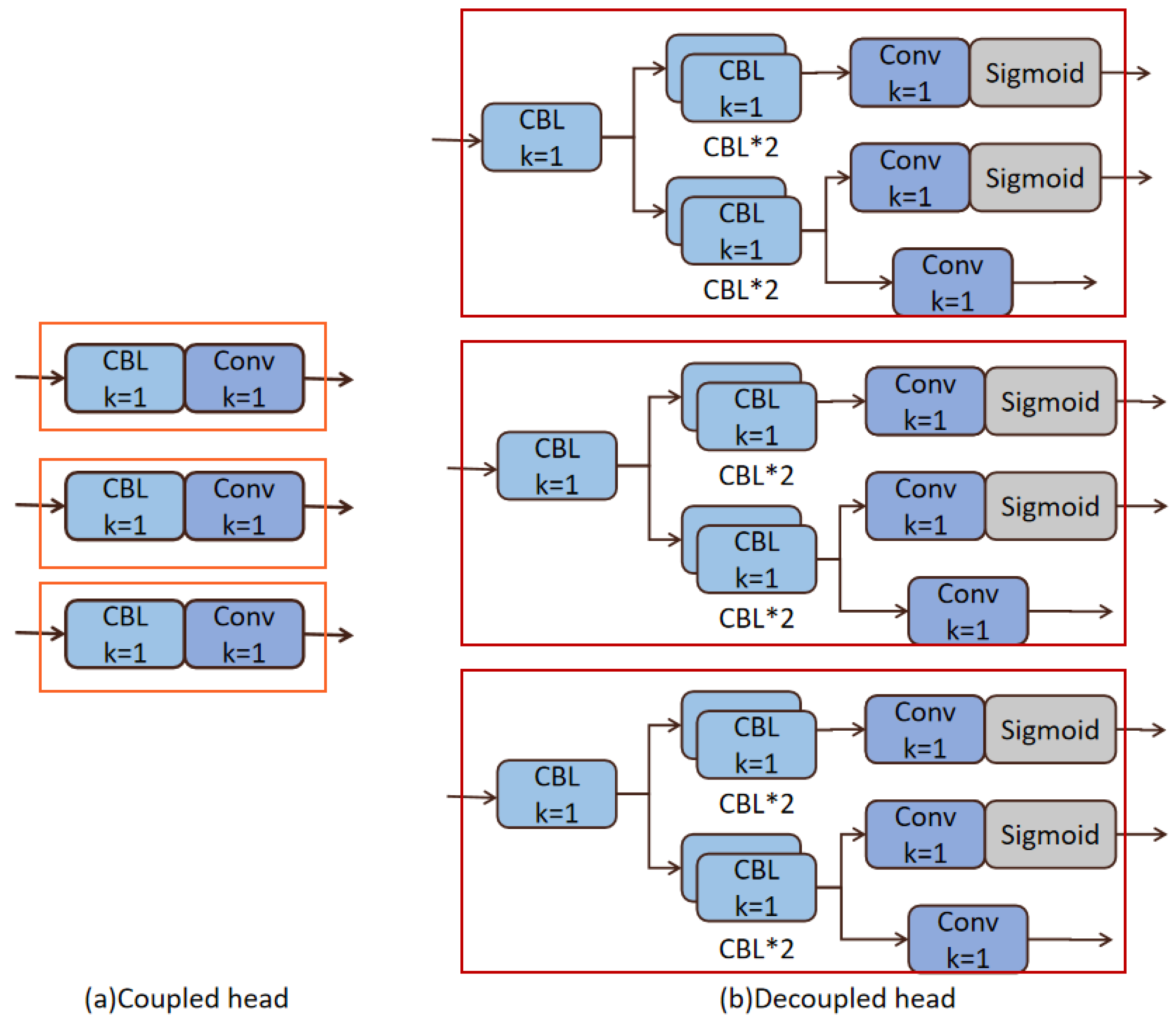

Detect Heads

Attention Mechanisms

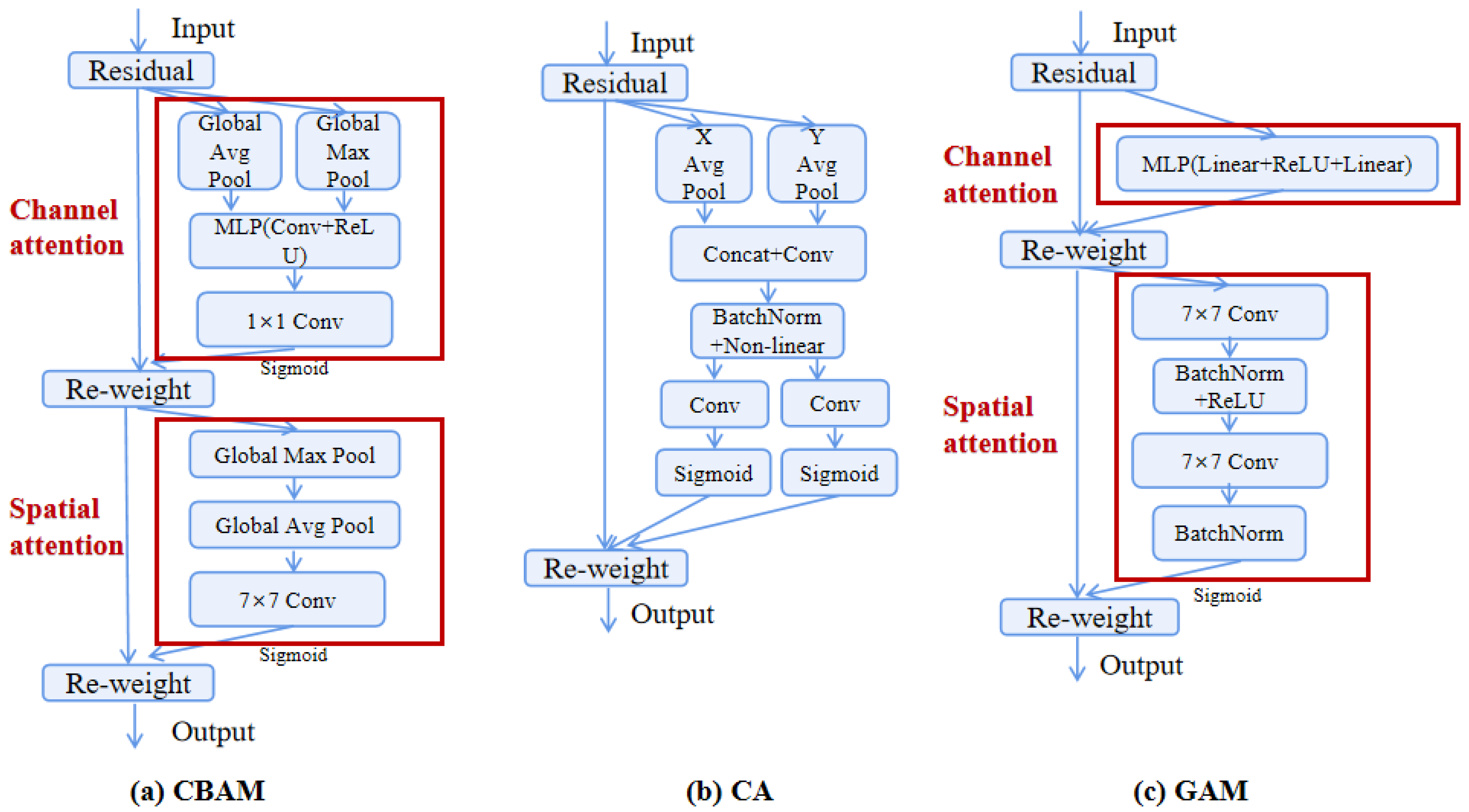

CBAM [36]

CA [37]

GAM [38]

2.2.2. Other Tricks

BiFPN

Decoupled Heads

3. Experiments

3.1. Detector Training

- 1.

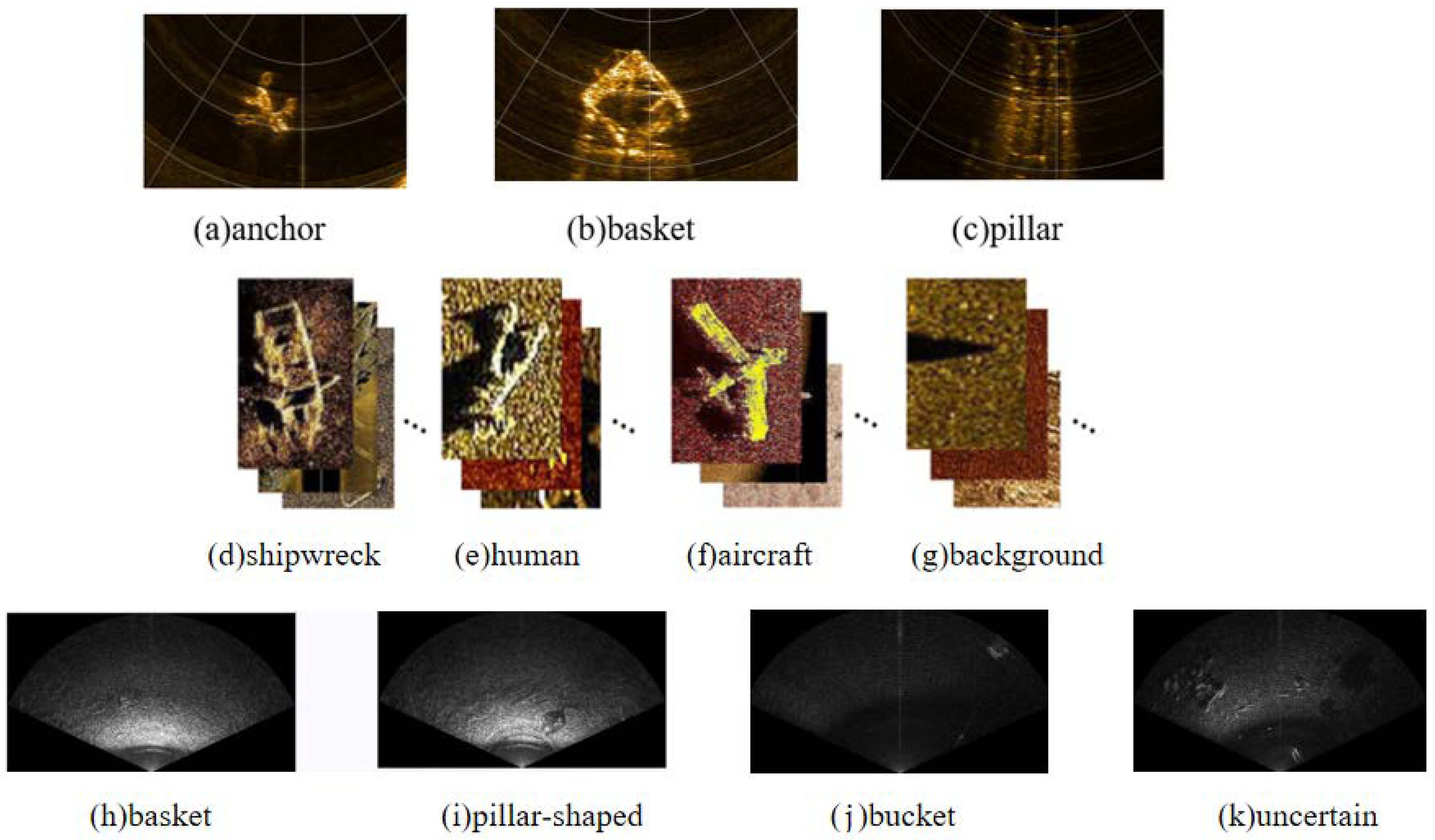

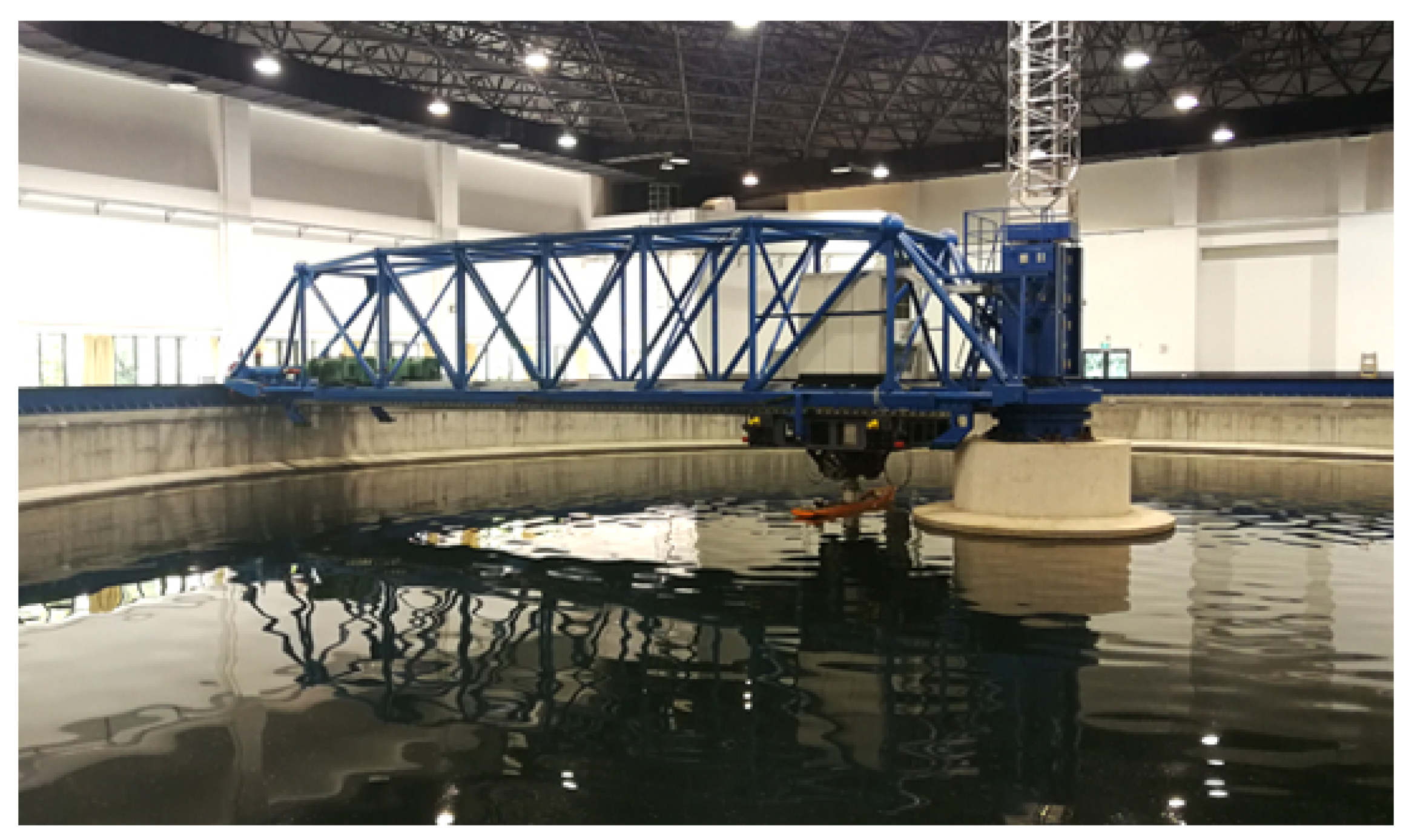

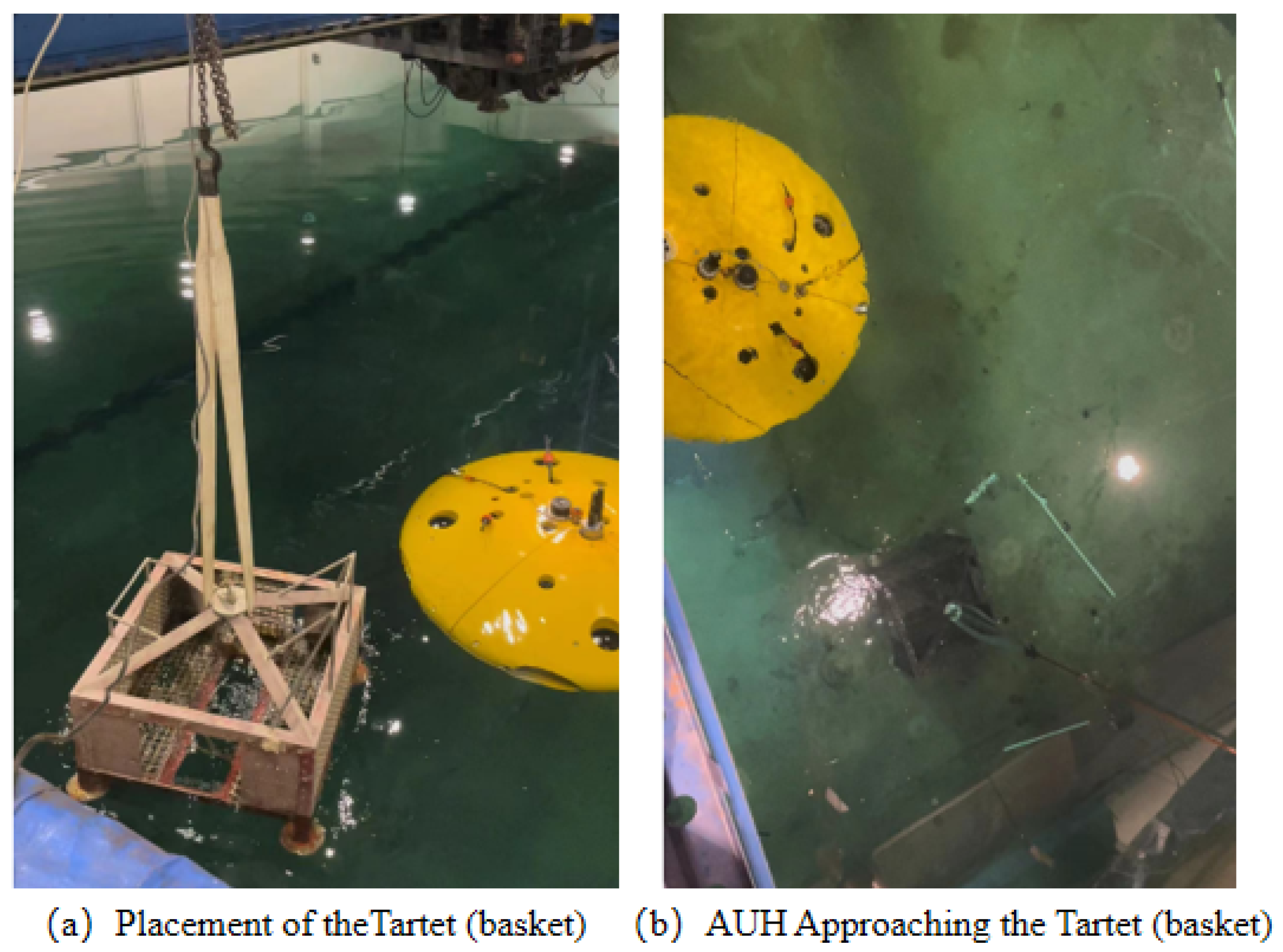

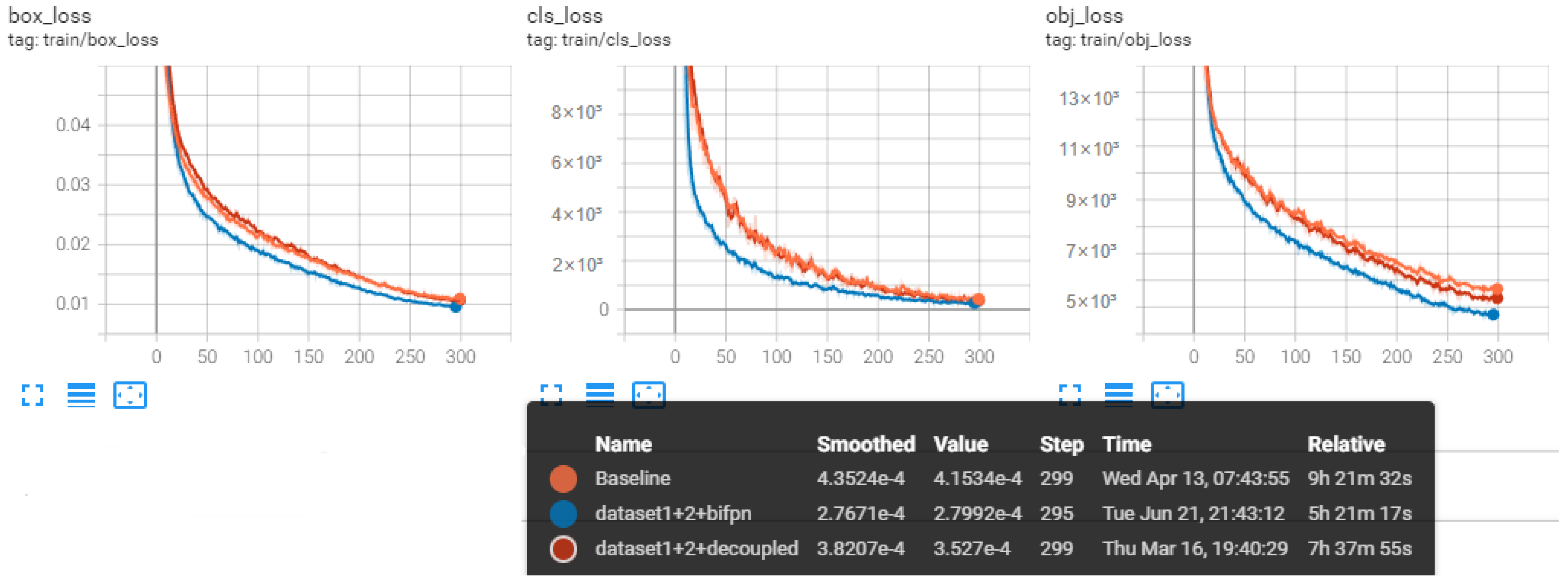

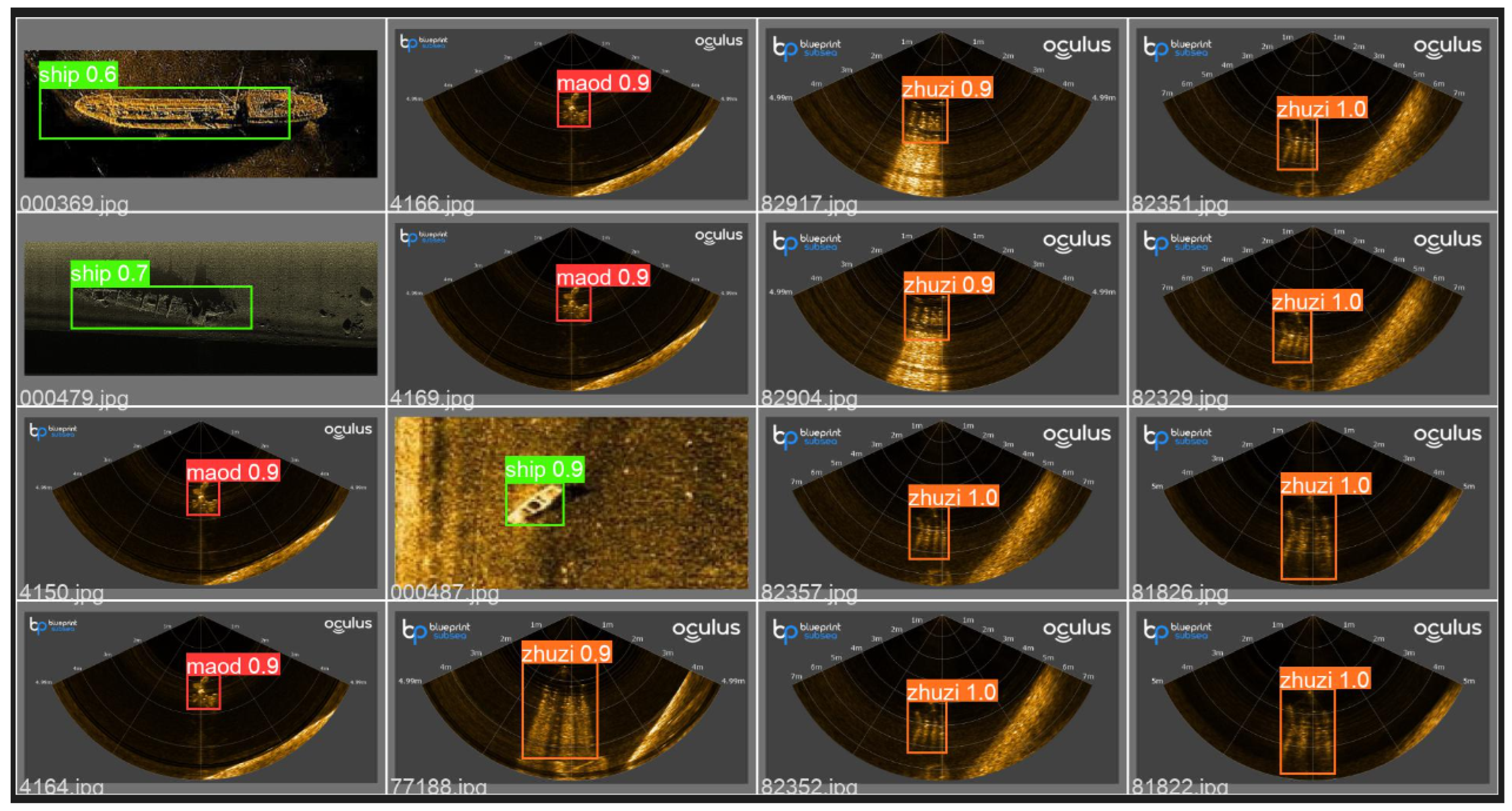

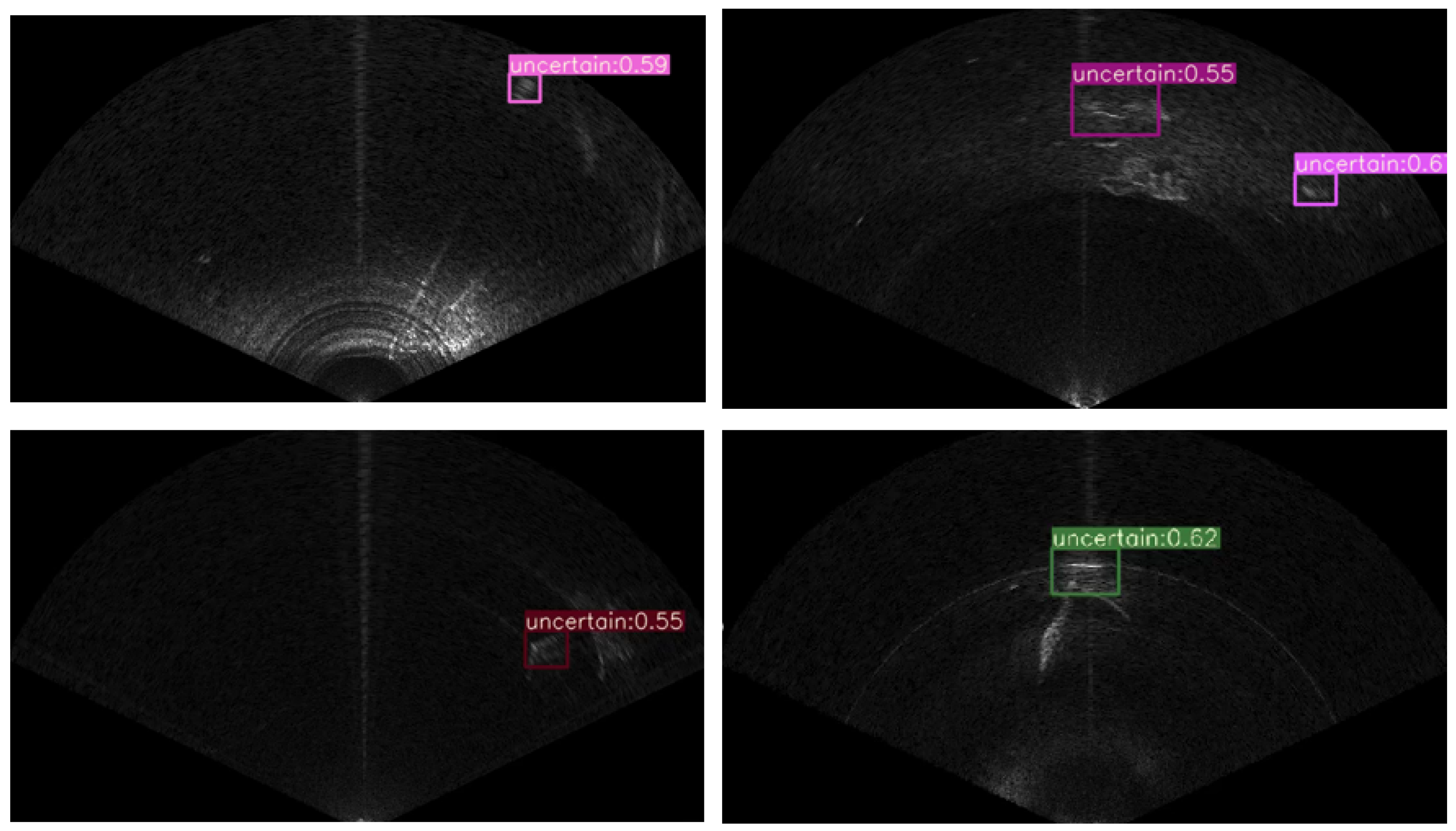

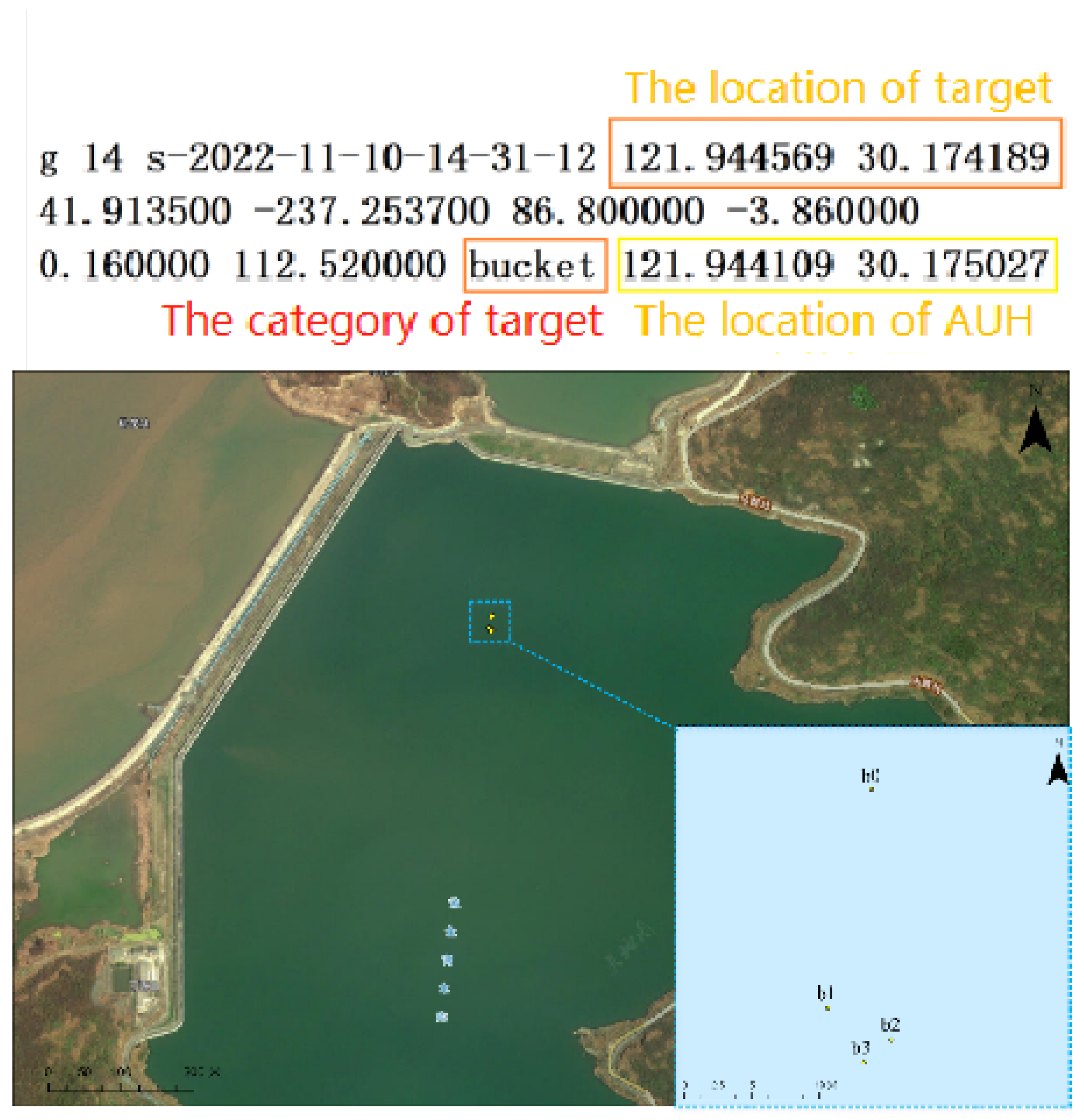

- DatasetsFour different datasets were collected in different water environments, including the tank at Ocean College, Zhejiang University, and the lake in Zhoushan, and they were divided into eight common underwater targets: anchor, basket, pillar, shipwreck, human, aircraft, bucket, and uncertain target.An anchor, a basket, and a pillar-shaped target were placed inside the school’s experimental tank. Oculus BluePrint MD750d forward-looking sonar mounted on a ROV was used for collection, named Dataset 1, and it includes 2239 images formed by the software ViewPoint, which the sonar company provided. Dataset 2 is an open-source dataset called the Sonar Common Target Detection Dataset (SCTD) [41], which has 357 images in total, including shipwrecks, humans, and aircraft. SCTD was composed of forward-looking sonar (FLS) images, side-scan sonar (SSS) images and synthetic aperture sonar (SAS) images. This dataset was used for training with the expectation that the model can determine all the types of sonar images to prevent overfitting. Datasets 3 and 4 were collected using MD750d forward-looking sonar, but the experimental site was moved outside. An anchor, a basket, and a group of buckets were place into the lake, and AUH was used to collect data. The program was written by us to obtain the original gray sonar images.Figure 8 shows some examples of the datasets.

- 2.

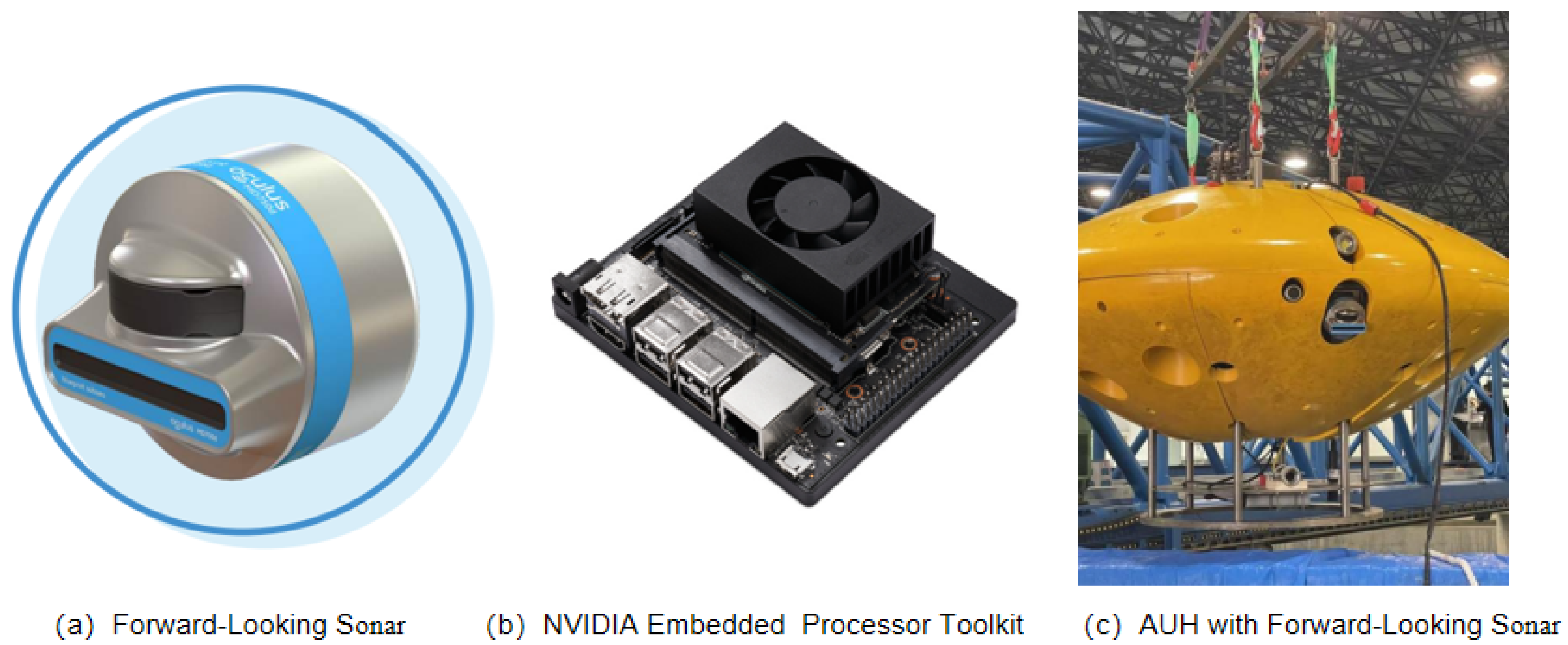

- Hardware PlatformThe autonomous underwater helicopter (AUH) [29] is one of the newly developed autonomous submersibles by Zhejiang University, with a disc-shaped design that enables ultra-mobility movements underwater, including full-circle rotation, stationary hovering, and free take-off and landing. It possesses various features, such as small-scale agile maneuverability, long-distance navigation, closed exterior, low operational resistance, and high structural stability. It can cruise for a long time at a fixed height close to the bottom of the water. Named as an underwater helicopter due to its similarity to a land-based helicopter in terms of characteristics, it can be employed as the ideal equipment for target detection or operation at a specific location by performing flexible and agile movements. These specialties make it highly suitable for solving the underwater target detection problem.Thus, forward-looking sonar was assembled at the front of the AUH at 20 degrees downward, as shown in Figure 9. The data were collected at a height of 5 m away from the bottom of the water.

- 3.

- Software PlatformThe computer configuration and coding environment for training the network are shown in Table 1.

- 4.

- ParametersThe parameter is the important factor influencing the results of the detection network, and a better outcome will be obtained simply by tuning the parameters. Thus, the standard and unified parameters shown in Table 2 are utilized to compare the networks with equity.SGD and Adamw have been separately tried as the optimizer to train the models. The outcome shows that Adamw is preferred over SGD due to its ability to converge faster and to handle sparse gradients better. The learning rate is adjusted automatically based on the historical gradients of the parameters and incorporates momentum to help accelerate convergence when using Adamw; on the other hand, SGD only uses a fixed learning rate for all parameters. Warm-up and cosine annealing are the commonly used strategies that also work for adjusting the learning rate during the training to let the model converge faster and better.

- 5.

- Training strategiesSome powerful training strategies in YOLOv5 are utilized to encourage the model to learn more context, generalize better to unseen data, and improve model robustness that can handle target variations across multiple scenarios.

- AutoAnchor:The k-means clustering algorithm is used to self-adaptively generate prior anchors by using all detection frames in the dataset before each training to enable the detection network to obtain more prior knowledge of the underwater target.

- Multi-scale training and distortion:The input images are randomly resized to different scales during each iteration of the training process. Multi-scale training is commonly used in conjunction with data augmentation techniques such as random cropping, rotating, scaling, flipping, translating, and shearing to geometrically distort the images. The purpose is about exposing the model to objects at different sizes and resolutions and to force it to learn spatial invariance and more robust features that can handle object variations across multiple scales and different water environments.

- Letterbox resize:Before training the images, all images need to be resized to fit into a fixed size without stretching or distorting the shape so that they can be fed into the neural network. Black bars are added to the top and bottom (or left and right) to fill the empty space created by the new size by using the least amount of bars. This strategy ensures that the relative scale and aspect ratio of objects within the images are preserved and that it can improve the accuracy and accelerate the training compared to the old version of YOLO because of the reduction of the filled area, which is redundant information.

3.2. Detector Evaluation

3.3. Results and Analysis

3.3.1. The Tank Experiments Stage

3.3.2. The Lake Test Stage

4. Discussion

4.1. Significance of the Proposed Method

- 1.

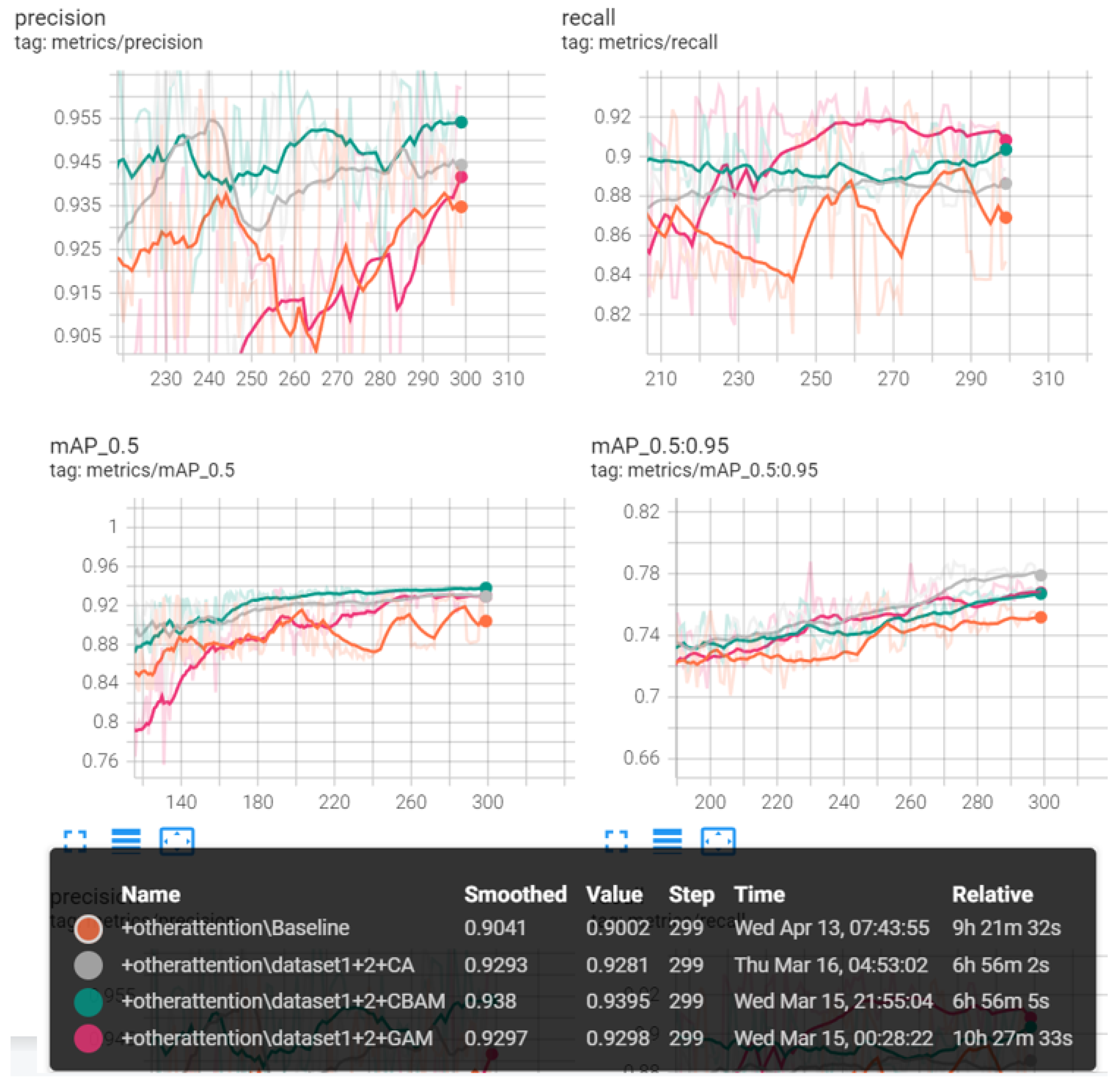

- TheoreticallyThe theory of the proposed improved-YOLOv5 is based on many previous works that have received validation. The principles of the attention mechanism have been carefully studied across the literature and have proven that CBAM, CA and GAM are all applicable to sonar-imagery detection and that they can improve the accuracy of most detection models to an extent of about 1–2% on our datasets. Therefore, attention mechanisms can force the models to focus on specific areas of which we want. Furthermore, BiFPN and decoupled heads can boost the mAP by 2–3% on our dataset in a relatively simple way and can achieve a 80.2% high mAP.In the meantime, we performed some comparison experiments with other SOTA models, including one-stage and two-stage CNN methods, to prove the advancements of the proposed improved-YOLOv5s. We fed dataset 1 + 2 into the different models and kept the training parameters as similar as possible.According to the results shown in Table 8, when attention mechanisms, BiFPN and decoupled heads are added into YOLOv5s, most of them can achieve better results than the other SOTA models—one-stage or two-stage.

- 2.

- SystematicallyFrom the installation of forward-looking sonar to the deployment of the detection model, from the training of an improved network to the validation of efficiency of the detector, and from the communication between the main controller and the detection module to the conversion of the target’s location, the research was covered. This real-time AUH-based underwater target detection system using sonar images was designed and test and achieved some significant achievements.

- 3.

- PracticallyThe whole workflow was validated through tank experiments and outdoor tests in open water. A series of experiments give strong support for the facility and efficiency of the designed detection system. The subsea AUH and some simple sensors can achieve the detection goal and detect the desired targets. Moreover, the system provides some thoughts for overcoming the difficulties of underwater target detection. Even with the low-cost sensors and low-quality images, good results can still be achieved by using the stable mounted platform and improved models.

4.2. Limitations of the Proposed Method

- 1.

- TheoreticallyAlthough attention mechanisms can improve mAP, neither the connection between the datasets nor the efficiency of the methods were found, for example, the reason that CBAM only improves by 0.1% mAP when applied to Datasets 1 and 2 but improves by 2% when joining Dataset 3 and why CA performs best in Datasets 1 and 2 but GAM wins in Dataset 3. This information seems to be random, and the reasons that explain these phenomena still need to be studied.On the other hand, decoupled heads have introduced more parameters and time to inference, which should be considered carefully, even though it is an efficient way to boost the mAP.

- 2.

- SystematicallyBecause of the time and cost restrictions of the outdoor experiments, the ablation tests, in reality used to verify the superiority of the improved-YOLOv5 network compared to the raw one, are not finished. The conclusion is qualitative such that the improved network is useful and efficient, but it is not quantitative. In the future, it needs to be proven that in an open water area, when the AUH is on a fixed track and obtains the same amount of sonar images, improved-YOLOv5 can recognize more targets and detect them faster. How to convert longitude and latitude of the targets more precisely through the coordinates of the bounding boxes is also a direction to revise and improve.

- 3.

- PracticallyCurrently, the proposed method for detecting underwater targets is restricted by the requirements of sonar images. The sonar images of the desired target for detection should be acquired to train the models. However, sometimes it is hard and inconvenient to obtain the desired images; thus, using other techniques such as GAN [42] and diffuse models to generate sonar images with the limited images that already exist without using sonar is a topic that is worthy of discussion. The uncertain targets cannot be clarified. Thus, aligning other methods and devices to detect them together will make the detection system more practical, for example, using cameras in clean water or magnetometers to detect magnetic targets.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324. [Google Scholar] [CrossRef]

- Fukushima, K.; Miyake, S. Neocognitron: A new algorithm for pattern recognition tolerant of deformations and shifts in position. Pattern Recognit. 1982, 15, 455–469. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90. [Google Scholar] [CrossRef]

- Chin-Hsing, C.; Jiann-Der, L.; Ming-Chi, L. Classification of underwater signals using wavelet transforms and neural networks. Math. Comput. Model. 1998, 27, 47–60. [Google Scholar] [CrossRef]

- Cortes, C.; Vapnik, V. Support vector machine. Mach. Learn. 1995, 20, 273–297. [Google Scholar] [CrossRef]

- Wan, A.; Dunlap, L.; Ho, D.; Yin, J.; Lee, S.; Jin, H.; Petryk, S.; Bargal, S.A.; Gonzalez, J.E. NBDT: Neural-backed decision trees. arXiv 2020, arXiv:2004.00221. [Google Scholar]

- LeCun, Y.; Boser, B.; Denker, J.; Henderson, D.; Howard, R.; Hubbard, W.; Jackel, L. Handwritten digit recognition with a back-propagation network. Adv. Neural Inf. Process. Syst. 1989, 2, 396–404. [Google Scholar]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. Ssd: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I 14. Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37. [Google Scholar]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271. [Google Scholar]

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934. [Google Scholar]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587. [Google Scholar]

- Girshick, R. Fast r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 91–99. [Google Scholar] [CrossRef]

- Wang, J.; Shan, T.; Chandrasekaran, M.; Osedach, T.; Englot, B. Deep learning for detection and tracking of underwater pipelines using multibeam imaging sonar. In Proceedings of the IEEE International Conference on Robotics and Automation Workshop, Montreal, QC, Canada, 20–24 May 2019. [Google Scholar]

- Sung, M.; Kim, J.; Lee, M.; Kim, B.; Kim, T.; Kim, J.; Yu, S.C. Realistic sonar image simulation using deep learning for underwater object detection. Int. J. Control. Autom. Syst. 2020, 18, 523–534. [Google Scholar] [CrossRef]

- Lee, S.; Park, B.; Kim, A. Deep learning from shallow dives: Sonar image generation and training for underwater object detection. arXiv 2018, arXiv:1810.07990. [Google Scholar]

- Chen, R.; Zhan, S.; Chen, Y. Underwater Target Detection Algorithm Based on YOLO and Swin Transformer for Sonar Images. In Proceedings of the OCEANS 2022, Hampton Roads, VA, USA, 17–20 October 2022; pp. 1–7. [Google Scholar]

- Dobeck, G.J. Algorithm fusion for the detection and classification of sea mines in the very shallow water region using side-scan sonar imagery. In Detection and Remediation Technologies for Mines and Minelike Targets V; SPIE: Bellingham, WA, USA, 2000; Volume 4038, pp. 348–361. [Google Scholar]

- Jing, Y.; Ren, Y.; Liu, Y.; Wang, D.; Yu, L. Automatic extraction of damaged houses by earthquake based on improved YOLOv5: A case study in Yangbi. Remote Sens. 2022, 14, 382. [Google Scholar] [CrossRef]

- Panboonyuen, T.; Thongbai, S.; Wongweeranimit, W.; Santitamnont, P.; Suphan, K.; Charoenphon, C. Object detection of road assets using transformer-based YOLOX with feature pyramid decoder on thai highway panorama. Information 2022, 13, 5. [Google Scholar] [CrossRef]

- Xu, C.; Wang, X.; Yang, Y. Attention-YOLO: YOLO Object Detection Algorithm with Attention Mechanism. Comput. Eng. Appl. 2019, 55, 12. [Google Scholar]

- Zhang, M.; Xu, S.; Song, W.; He, Q.; Wei, Q. Lightweight underwater object detection based on yolo v4 and multi-scale attentional feature fusion. Remote Sens. 2021, 13, 4706. [Google Scholar] [CrossRef]

- Kong, W.; Hong, J.; Jia, M.; Yao, J.; Cong, W.; Hu, H.; Zhang, H. YOLOv3-DPFIN: A dual-path feature fusion neural network for robust real-time sonar target detection. IEEE Sens. J. 2019, 20, 3745–3756. [Google Scholar] [CrossRef]

- Topple, J.M.; Fawcett, J.A. MiNet: Efficient deep learning automatic target recognition for small autonomous vehicles. IEEE Geosci. Remote Sens. Lett. 2020, 18, 1014–1018. [Google Scholar] [CrossRef]

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255. [Google Scholar]

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part V 13. Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755. [Google Scholar]

- Wang, Z.; Liu, X.; Huang, H.; Chen, Y. Development of an autonomous underwater helicopter with high maneuverability. Appl. Sci. 2019, 9, 4072. [Google Scholar] [CrossRef]

- Weiss, K.; Khoshgoftaar, T.M.; Wang, D. A survey of transfer learning. J. Big Data 2016, 3, 1–40. [Google Scholar] [CrossRef]

- Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A new backbone that can enhance learning capability of CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 390–391. [Google Scholar]

- Elfwing, S.; Uchibe, E.; Doya, K. Sigmoid-weighted linear units for neural network function approximation in reinforcement learning. Neural Netw. 2018, 107, 3–11. [Google Scholar] [CrossRef] [PubMed]

- Xu, B.; Wang, N.; Chen, T.; Li, M. Empirical evaluation of rectified activations in convolutional network. arXiv 2015, arXiv:1505.00853. [Google Scholar]

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125. [Google Scholar]

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768. [Google Scholar]

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19. [Google Scholar]

- Hou, Q.; Zhou, D.; Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13713–13722. [Google Scholar]

- Liu, Y.; Shao, Z.; Hoffmann, N. Global attention mechanism: Retain information to enhance channel-spatial interactions. arXiv 2021, arXiv:2112.05561. [Google Scholar]

- Tan, M.; Pang, R.; Le, Q.V. Efficientdet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790. [Google Scholar]

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. Yolox: Exceeding yolo series in 2021. arXiv 2021, arXiv:2107.08430. [Google Scholar]

- Zhou, Y.; Chen, S.; Wu, K.; Ning, M.; Chen, H.; Zhang, P. SCTD1. 0: Sonar common target detection dataset. Comput. Sci. 2021, 48, 334–339. [Google Scholar]

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144. [Google Scholar] [CrossRef]

- Naseer, M.M.; Ranasinghe, K.; Khan, S.H.; Hayat, M.; Shahbaz Khan, F.; Yang, M.H. Intriguing properties of vision transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 23296–23308. [Google Scholar]

| Items | Version |

|---|---|

| CPU | AMD Ryzen 7 5800 8-Core Processor |

| GPU | NVIDIA GeForce RTX 3070 Ti |

| Video memory | 8 GB |

| RAM | 16 GB |

| CUDA | CUDA v11.0 CuDNN v8.0.4 |

| Python | 3.7.11 |

| Pytorch | 1.7.0 |

| Operating system | Windows11 |

| NVIDIA’s Embedded Processor Toolkit | Jetson Xavier NX |

| Training Parameter | Value |

|---|---|

| train:val:test | 8:1:1 |

| batch_size | 8 for training and 4 for validating |

| epochs | 300 |

| input_size | (640, 640) |

| momentum | 0.937 |

| weight_decay | 0.0005 |

| initial learning rate (lr0) | 0.01 (SGD) 0.001 (Adamw) |

| cyclical learning rate (lrf) | 0.1 (Cosine annealing) |

| warmup_epochs | 3 |

| warmup_momentum | 0.8 |

| warmup_bias_lr | 0.1 |

| default anchor size | [32, 31, 47, 46, 65, 60] [59, 75, 83, 75, 84, 87] [97, 98, 117, 114, 118, 144] |

| Confusion Matrix | Results from Detection Network | ||

|---|---|---|---|

| Positive | Negative | ||

| Ground Truth | Positive | True Positive (TP) | False Negative (FN) |

| Negative | False Positive (FP) | True Negative (TN) | |

| Structure | YOLOv5s | YOLOv5s+ | YOLOv5s+ | YOLOv5s+ |

|---|---|---|---|---|

| (Datasets 1 + 2) | Baseline | CBAM | CA | GAM |

| Backbone | C3 | C3 | C3 | C3 |

| C3 | C3 | C3 + CA | C3 | |

| C3 | C3 | C3 + CA | C3 | |

| C3 | C3 + CBAM | C3 + CA | C3 + GAM | |

| Neck | C3 | C3 | C3 | C3 |

| C3 | C3 + CBAM | C3 | C3 + GAM | |

| C3 | C3 + CBAM | C3 | C3 + GAM | |

| C3 | C3 + CBAM | C3 | C3 + GAM | |

| mAP(0.50:0.95) | 0.769 | 0.77 (+0.1%) | 0.79 (+2.1%) | 0.788 (+1.9%) |

| Layers | 213 | 257 | 237 | 257 |

| Parameter (M) | 7.02 | 7.10 | 7.06 | 11.05 |

| Weights (MB) | 13.7 | 13.9 | 13.8 | 21.4 |

| Structure (Dataset 1 + 2) | Baseline | +BiFPN | +Decoupled Heads |

|---|---|---|---|

| MAP (0.5:0.95) | 0.769 | 0.79 (+2.1%) | 0.802 (+3.3%) |

| Layers | 213 | 229 | 267 |

| Parameter | 7.02 M | 8.09 M | 14.30 M |

| Inference Time (ms) | 3.5 | 4.0 | 5.4 |

| Weights (MB) | 13.7 | 14.8 | 27.7 |

| Structure | YOLOv5s | YOLOv5s+ | YOLOv5s+ | YOLOv5s+ |

|---|---|---|---|---|

| (Datasets 1 + 2 + 3) | Baseline | CBAM | CA | GAM |

| Backbone | C3 | C3 | C3 | C3 |

| C3 | C3 | C3 + CA | C3 | |

| C3 | C3 | C3 + CA | C3 | |

| C3 | C3 + CBAM | C3 + CA | C3 + GAM | |

| Neck | C3 | C3 | C3 | C3 |

| C3 | C3 + CBAM | C3 | C3 + GAM | |

| C3 | C3 + CBAM | C3 | C3 + GAM | |

| C3 | C3 + CBAM | C3 | C3 + GAM | |

| mAP (0.50:0.95) | 0.763 | 0.783 (+2.0%) | 0.773 (+1.0%) | 0.784 (+2.1%) |

| Inference Time (ms) | 3.5 | 3.7 | 4.3 | 6.1 |

| Methods | .pt | .onnx | .engine (with C++) | .engine (with Python) |

|---|---|---|---|---|

| Average Inference Time (ms) | 55 | 1311 | 30 | 21 |

| Methods | mAP (0.5:0.95) | |

|---|---|---|

| YOLOseries | YOLOv3 | 0.605 |

| YOLOv4 | 0.603 | |

| YOLOv5 | 0.769 | |

| Other One-stage Detectors | RetinaNet | 0.753 |

| SSD | 0.780 | |

| Two-Stage Detector | Faster-RCNN | 0.617 |

| Proposed Methods | +CBAM | 0.77 |

| +CA | 0.79 | |

| +GAM | 0.788 | |

| +BiFPN | 0.79 | |

| +decoupled heads | 0.802 | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, R.; Chen, Y. Improved Convolutional Neural Network YOLOv5 for Underwater Target Detection Based on Autonomous Underwater Helicopter. J. Mar. Sci. Eng. 2023, 11, 989. https://doi.org/10.3390/jmse11050989

Chen R, Chen Y. Improved Convolutional Neural Network YOLOv5 for Underwater Target Detection Based on Autonomous Underwater Helicopter. Journal of Marine Science and Engineering. 2023; 11(5):989. https://doi.org/10.3390/jmse11050989

Chicago/Turabian StyleChen, Ruoyu, and Ying Chen. 2023. "Improved Convolutional Neural Network YOLOv5 for Underwater Target Detection Based on Autonomous Underwater Helicopter" Journal of Marine Science and Engineering 11, no. 5: 989. https://doi.org/10.3390/jmse11050989

APA StyleChen, R., & Chen, Y. (2023). Improved Convolutional Neural Network YOLOv5 for Underwater Target Detection Based on Autonomous Underwater Helicopter. Journal of Marine Science and Engineering, 11(5), 989. https://doi.org/10.3390/jmse11050989