Fusion of Acoustic and Vis-NIRS Information for High-Accuracy Online Detection of Moldy Core in Apples

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Sample Preparation

2.1.1. Experimental Apple Samples

2.1.2. Sample Preparation of Moldy Core Apples

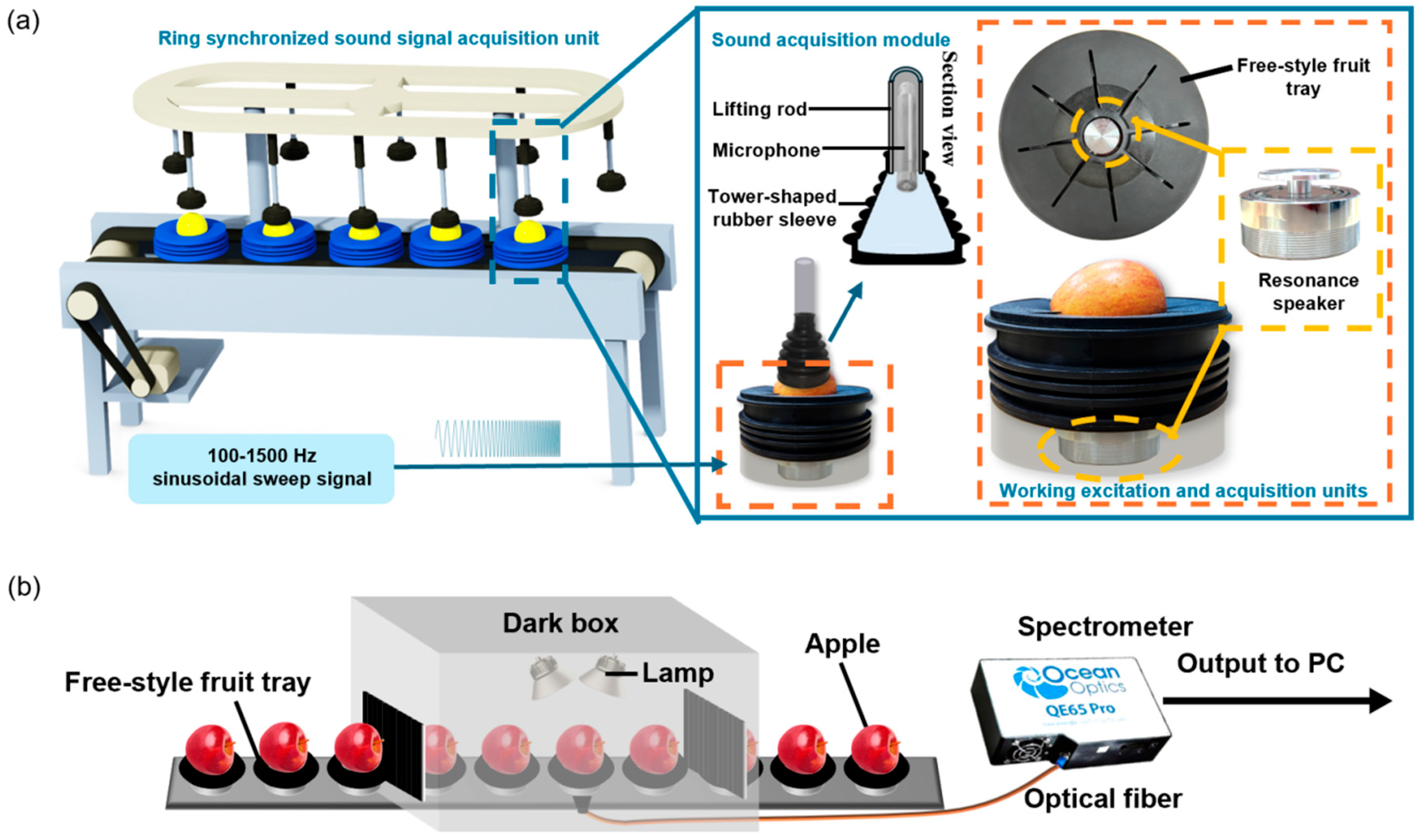

2.2. Collection of Acoustic and Vis–NIRS Signals of Apples

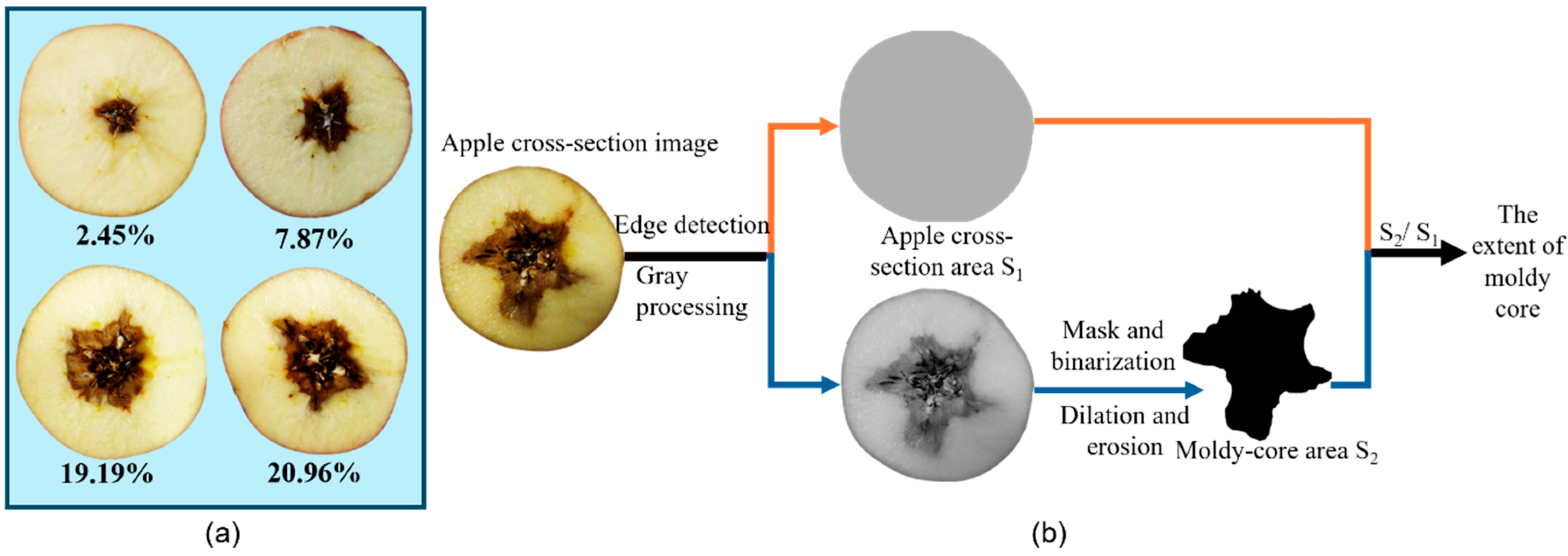

2.3. Classifying the Extent of Apple Moldy Core

2.4. Apple Moldy Core Detection Models

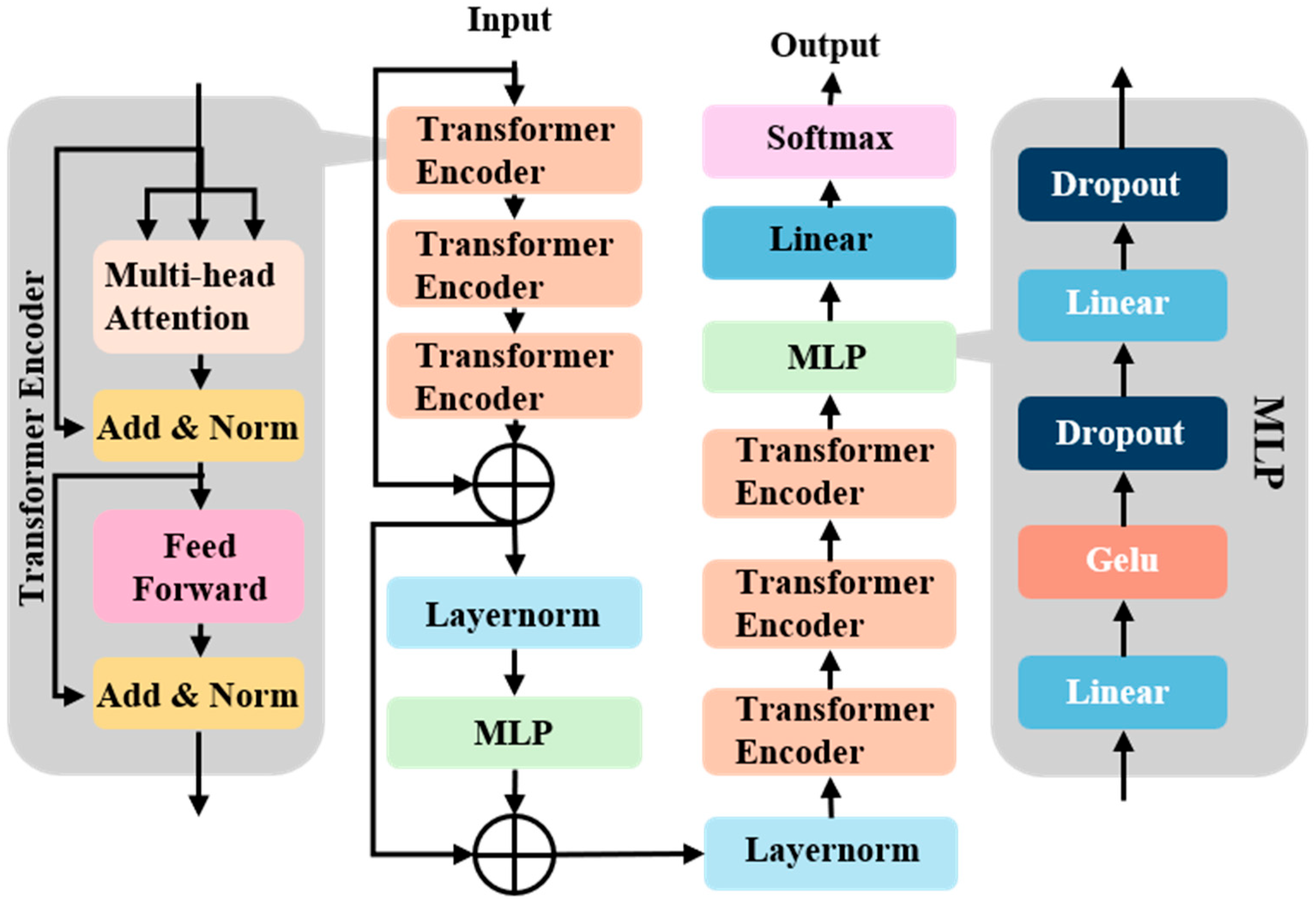

2.4.1. MLP-Transformer Model

2.4.2. PLS-DA and SVM Models

2.5. Performance Parameters of the Models

3. Results and Discussion

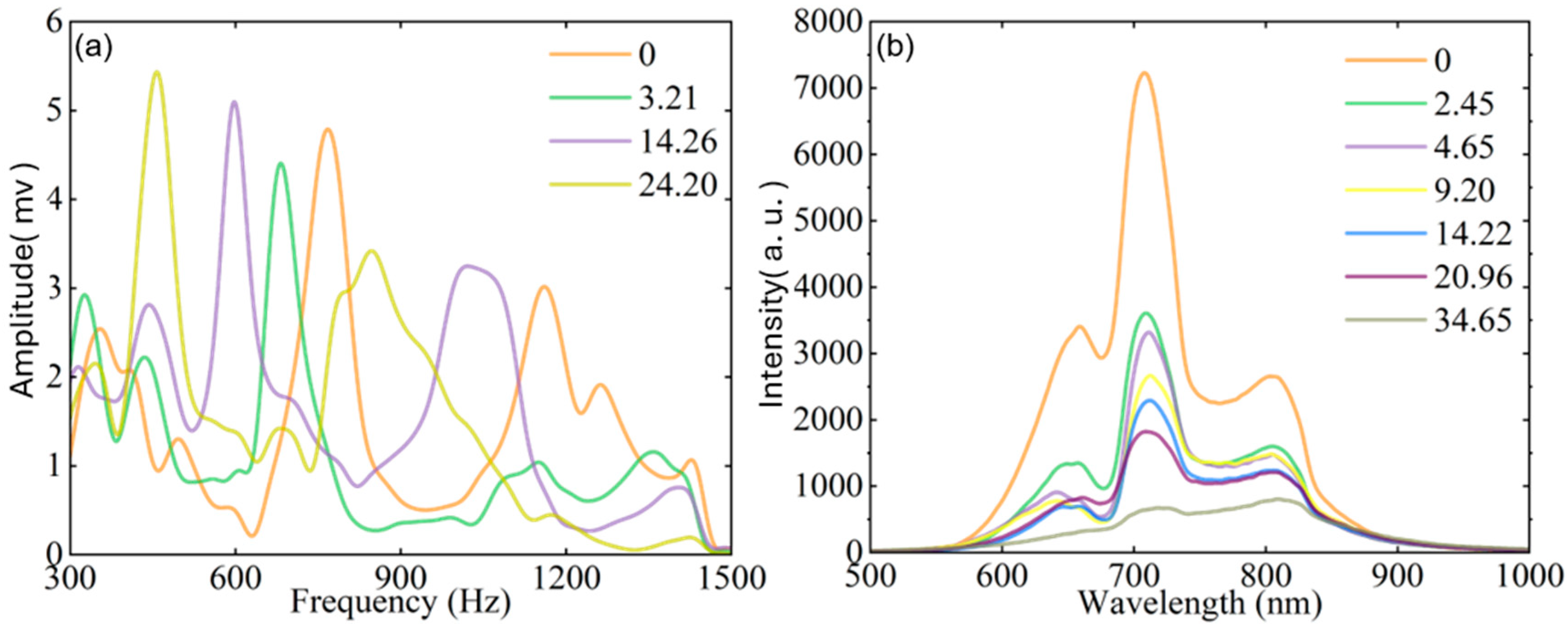

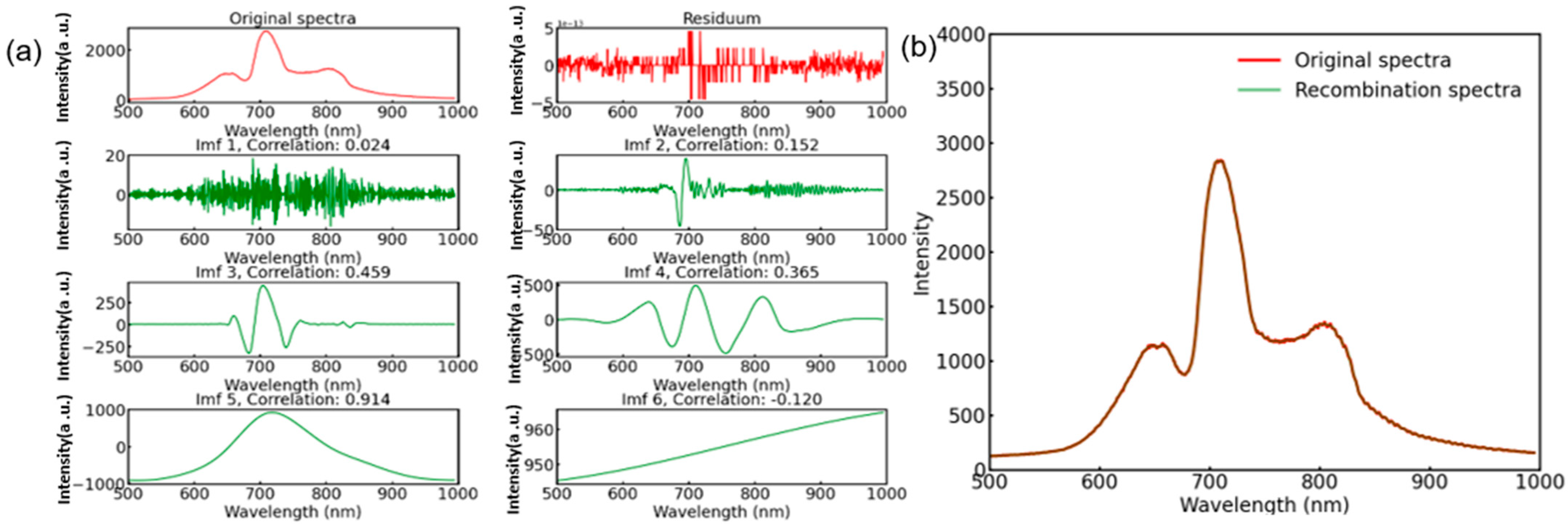

3.1. Sound and Vis–NIRS Data Analysis

3.2. Classification Results from Traditional Machine Learning Algorithms

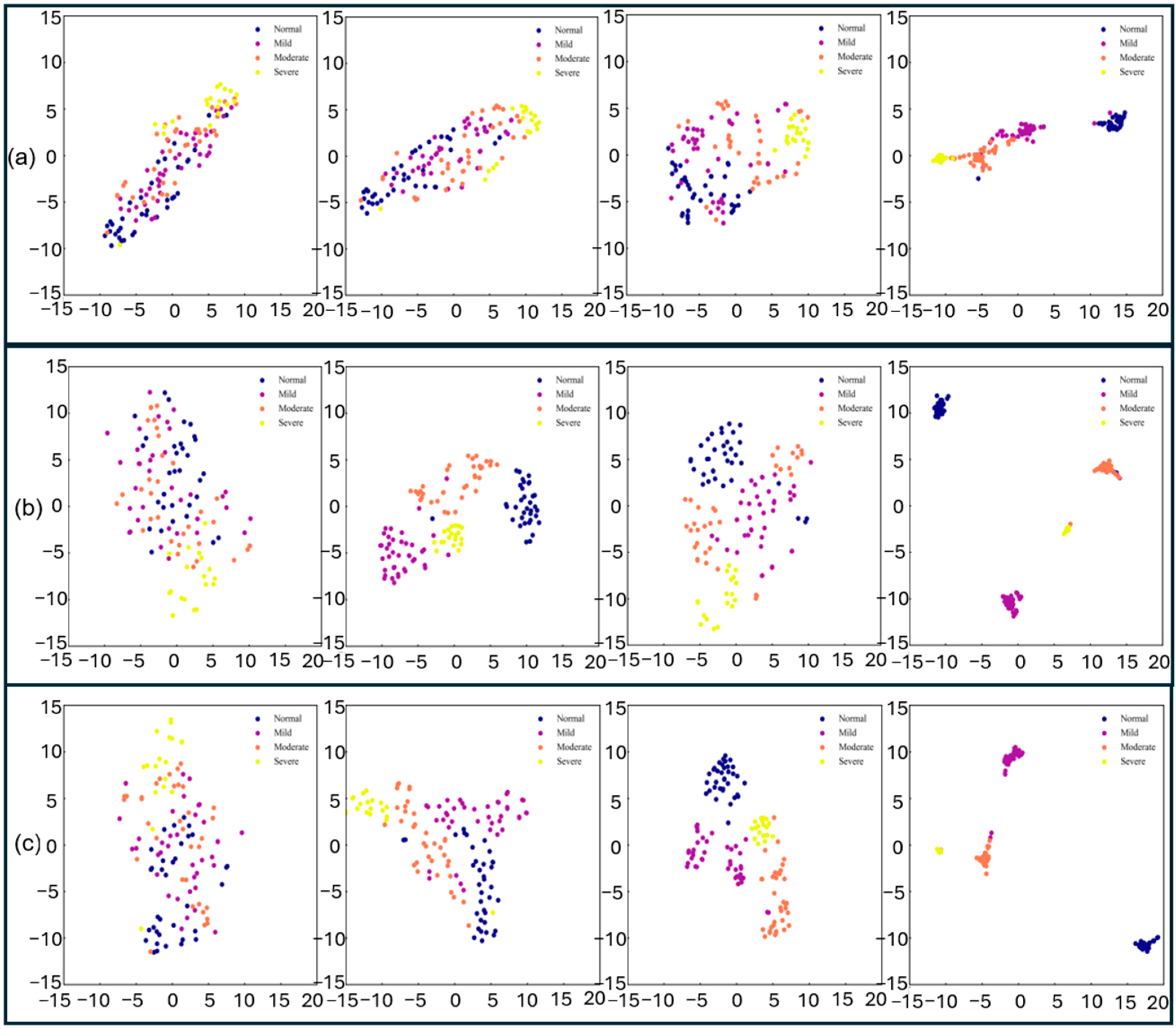

3.3. Visualization of Hidden Layer Features of MLP-Transformer Using t-SNE Algorithm

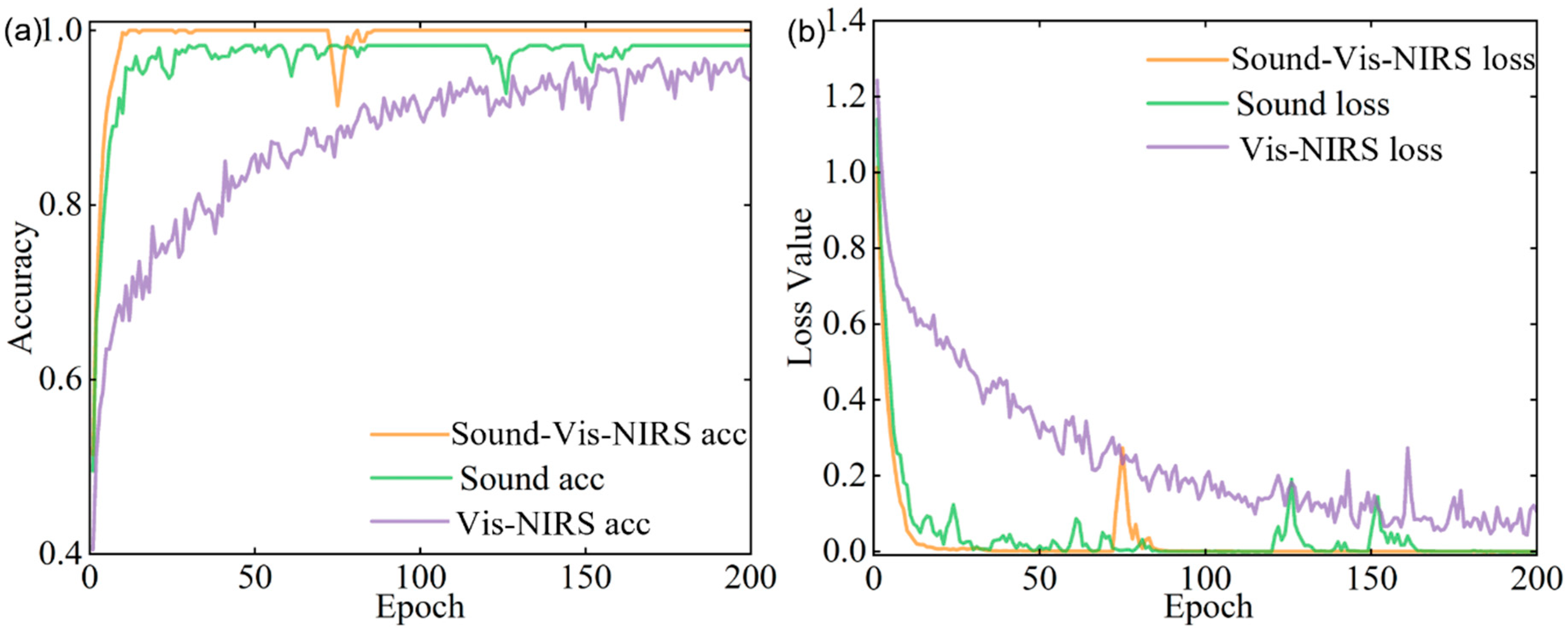

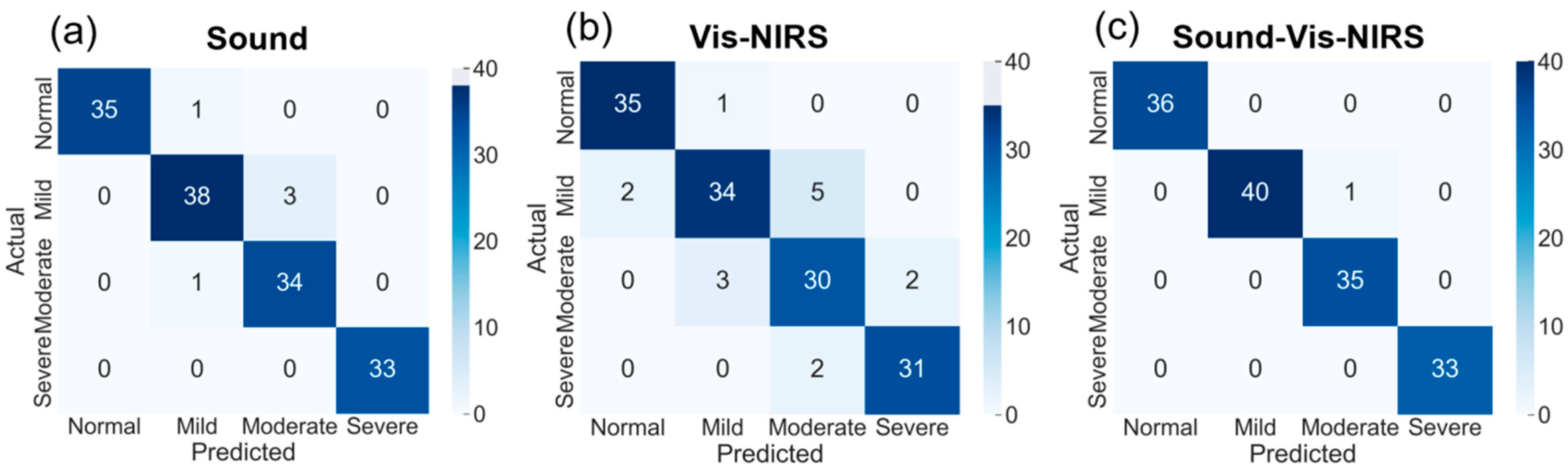

3.4. Classification Results of Deep Learning Models

3.5. Comparative Analysis

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

- Tournas, V.H.; Uppal Memon, S. Internal contamination and spoilage of harvested apples by patulin-producing and other toxigenic fungi. Int. J. Food Microbiol. 2009, 133, 206–209.

- Patriarca, A. Fungi and mycotoxin problems in the apple industry. Curr. Opin. Food Sci. 2019, 29, 42–47.

- Shen, F.; Wu, Q.F.; Liu, P.; Jiang, X.S.; Fang, Y.; Cao, C.J. Detection of Aspergillus spp. contamination levels in peanuts by near infrared spectroscopy and electronic nose. Food Control 2018, 93, 1–8.

- Zhao, K.; Zha, Z.; Li, H.; Wu, J. Early detection of moldy apple core based on time-frequency images of vibro-acoustic signals. Postharvest Biol. Technol. 2021, 179, 111589.

- Liu, Z.; Chen, N.; Le, D.; Lai, Q.; Li, B.; Wu, J.; Song, Y.; Liu, Y. Acoustic vibration multi-domain images vision transformer (AVMDI-ViT) to the detection of moldy apple core: Using a novel device based on micro-LDV and resonance speaker. Postharvest Biol. Technol. 2024, 211, 112838.

- Han, Q.-L.; Long, B.-X.; Yan, X.-J.; Wang, W.; Liu, F.-R.; Chen, X.; Ma, F. Exploration of using acoustic vibration technology to non-destructively detect moldy kernels of in-shell hickory nuts (Carya cathayensis Sarg.). Comput. Electron. Agric. 2023, 212, 108137.

- Srivastava, R.K.; Talluri, S.; Beebi, S.K.; Kumar, B.R. Magnetic Resonance Imaging for Quality Evaluation of Fruits: A Review. Food Anal. Methods 2018, 11, 2943–2960.

- Tian, X.; Wang, Q.; Huang, W.; Fan, S.; Li, J. Online detection of apples with moldy core using the Vis/NIR full-transmittance spectra. Postharvest Biol. Technol. 2020, 168, 111269.

- Zhang, Q.; Huang, W.; Wang, Q.; Wu, J.; Li, J. Detection of pears with moldy core using online full-transmittance spectroscopy combined with supervised classifier comparison and variable optimization. Comput. Electron. Agric. 2022, 200, 107231.

- Tian, S.J.; Zhang, M.S.; Li, B.; Zhang, Z.X.; Zhao, J.; Zhang, Z.J.; Zhang, H.H.; Hu, J. Measurement orientation compensation and comparison of transmission spectroscopy for online detection of moldy apple core. Infrared Phys. Technol. 2020, 111, 103510.

- Li, J.B.; Huang, W.Q.; Zhao, C.J.; Zhang, B.H. A comparative study for the quantitative determination of soluble solids content, pH and firmness of pears by Vis/NIR spectroscopy. J. Food Eng. 2013, 116, 324–332.

- O’Brien, C.; Falagán, N.; Kourmpetli, S.; Landahl, S.; Terry, L.A.; Alamar, M.C. Non-destructive methods for mango ripening prediction: Visible and near-infrared spectroscopy (visNIRS) and laser Doppler vibrometry (LDV). Postharvest Biol. Technol. 2024, 212, 112878.

- Anderson, N.T.; Walsh, K.B. Review: The evolution of chemometrics coupled with near infrared spectroscopy for fruit quality evaluation. J. Near Infrared Spectrosc. 2022, 30, 3–17.

- Cruz, S.; Guerra, R.; Brazio, A.; Cavaco, A.M.; Antunes, D.; Passos, D. Nondestructive simultaneous prediction of internal browning disorder and quality attributes in ‘Rocha’ pear (Pyrus communis L.) using VIS-NIR spectroscopy. Postharvest Biol. Technol. 2021, 179, 111562.

- Tian, S.J.; Zhang, J.H.; Zhang, Z.X.; Zhao, J.; Zhang, Z.J.; Zhang, H.H. Effective modification through transmission Vis/NIR spectra affected by fruit size to improve the prediction of moldy apple core. Infrared Phys. Technol. 2019, 100, 117–124.

- Cortés, V.; Blasco, J.; Aleixos, N.; Cubero, S.; Talens, P. Monitoring strategies for quality control of agricultural products using visible and near-infrared spectroscopy: A review. Trends Food Sci. Technol. 2019, 85, 138–148.

- Ding, C.; Feng, Z.; Wang, D.; Cui, D.; Li, W. Acoustic vibration technology: Toward a promising fruit quality detection method. Compr. Rev. Food Sci. Food Saf. 2021, 20, 1655–1680.

- Zhang, H.; Zha, Z.; Kulasiri, D.; Wu, J. Detection of Early Core Browning in Pears Based on Statistical Features in Vibro-Acoustic Signals. Food Bioprocess Technol. 2021, 14, 887–897.

- Kawai, T.; Matsumori, F.; Akimoto, H.; Sakurai, N.; Hirano, K.; Nakano, R.; Fukuda, F. Nondestructive Detection of Split-pit Peach Fruit on Trees with an Acoustic Vibration Method. Hortic. J. 2018, 87, 499–507.

- Nakano, R.T.; Akimoto, H.; Fukuda, F.; Kawai, T.; Ushijima, K.; Fukamatsu, Y.; Kubo, Y.; Fujii, Y.; Hirano, K.; Morinaga, K.; et al. Nondestructive Detection of Split Pit in Peaches Using an Acoustic Vibration Method. Hortic. J. 2018, 87, 281–287.

- Blanes, C.; Ortiz, C.; Mellado, M.; Beltrán, P. Assessment of eggplant firmness with accelerometers on a pneumatic robot gripper. Comput. Electron. Agric. 2015, 113, 44–50.

- Hosoya, N.; Mishima, M.; Kajiwara, I.; Maeda, S. Non-destructive firmness assessment of apples using a non-contact laser excitation system based on a laser-induced plasma shock wave. Postharvest Biol. Technol. 2017, 128, 11–17.

- Arai, N.; Miyake, M.; Yamamoto, K.; Kajiwara, I.; Hosoya, N. Soft Mango Firmness Assessment Based on Rayleigh Waves Generated by a Laser-Induced Plasma Shock Wave Technique. Foods 2021, 10, 323.

- AWETA. Avocado Sorting Machine. Available online: https://www.aweta.com/en/produce/avocado (accessed on 27 May 2025).

- Wang, D.; Feng, Z.; Ji, S.; Cui, D. Simultaneous prediction of peach firmness and weight using vibration spectra combined with one-dimensional convolutional neural network. Comput. Electron. Agric. 2022, 201, 107341.

- Liu, Z.; Le, D.; Zhang, T.; Lai, Q.; Zhang, J.; Li, B.; Song, Y.; Nan, C. Detection of apple moldy core disease by fusing vibration and Vis/NIR spectroscopy data with dual-input MLP-Transformer. J. Food Eng. 2024, 382, 112219.

- Barbosa, S.; Saurina, J.; Puignou, L.; Núñez, O. Classification and Authentication of Paprika by UHPLC-HRMS Fingerprinting and Multivariate Calibration Methods (PCA and PLS-DA). Foods 2020, 9, 486.

- Chorowski, J.; Wang, J.; Zurada, J.M. Review and performance comparison of SVM- and ELM-based classifiers. Neurocomputing 2014, 128, 507–516.

- Cheng, P.; Yu, H.; Liu, C.; Luo, K.; Akhtar, N.; Chen, X. RID-Net: A Hybrid MLP-Transformer Network for Robust Point Cloud Registration. IEEE Robot. Autom. Lett. 2025, 10, 5066–5073.

- van de Ruit, M.; Billeter, M.; Eisemann, E. An Efficient Dual-Hierarchy t-SNE Minimization. IEEE Trans. Vis. Comput. Graph. 2022, 28, 614–622.

- Thompson, A.K. Recommended CA Storage Conditions for Selected Crops; CABI: Oxon, UK, 2010; pp. 116–191.

- Linke, M.; Praeger, U.; Neuwald, D.A.; Geyer, M. Measurement of Water Vapor Condensation on Apple Surfaces during Controlled Atmosphere Storage. Sensors 2023, 23, 1739.

- Han, Z.; Wang, Z.; Bi, Y.; Zong, Y.; Gong, D.; Wang, B.; Li, B.; Sionov, E.; Prusky, D. The Effect of Environmental pH during Trichothecium roseum (Pers.:Fr.) Link Inoculation of Apple Fruits on the Host Differential Reactive Oxygen Species Metabolism. Antioxidants 2021, 10, 692.

- Gong, D.; Bi, Y.; Jiang, H.; Xue, S.; Wang, Z.; Li, Y.; Zong, Y.; Prusky, D. A comparison of postharvest physiology, quality and volatile compounds of ‘Fuji’ and ‘Delicious’ apples inoculated with Penicillium expansum. Postharvest Biol. Technol. 2019, 150, 95–104.

- Zhao, K.; Li, H.; Zha, Z.; Zhai, M.; Wu, J. Detection of sub-healthy apples with moldy core using deep-shallow learning for vibro-acoustic multi-domain features. Meas. Food 2022, 8, 100068.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2017, arXiv:1706.03762.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

- Tian, S.; Xu, H. Mechanical-based and Optical-based Methods for Nondestructive Evaluation of Fruit Firmness. Food Rev. Int. 2022, 39, 4009–4039.

- Ghooshkhaneh, N.G.; Golzarian, M.R.; Mollazade, K. VIS-NIR spectroscopy for detection of citrus core rot caused by Alternaria alternata. Food Control 2023, 144, 109320.

- Liu, H.; Wei, Z.; Lu, M.; Gao, P.; Li, J.; Zhao, J.; Hu, J. A Vis/NIR device for detecting moldy apple cores using spectral shape features. Comput. Electron. Agric. 2024, 220, 108898.

- Zhang, Z.X.; Pu, Y.G.; Wei, Z.C.; Liu, H.L.; Zhang, D.L.; Zhang, B.; Zhang, Z.J.; Zhao, J.; Hu, J. Combination of interactance and transmittance modes of Vis/NIR spectroscopy improved the performance of PLS-DA model for moldy apple core. Infrared Phys. Technol. 2022, 126, 104366.

| Parameters | Fruit Mass (g) | Fruit Diameter (mm) |
|---|---|---|
| Minimum | 204.59 | 78.48 |
| Maximum | 289.48 | 92.97 |
| Mean | 248.13 | 84.03 |
| SD | 23.17 | 3.59 |

| Training Data | SVM Kernel Function | SVM Penalty Parameter | SVM Poly Order | PLS-DA Components |
|---|---|---|---|---|
| Sound | RBF | 2 | 5 | 58 |
| Vis–NIRS | Poly | 7 | 3 | 24 |
| Sound–Vis–NIRS | Poly | 4 | 6 | 59 |

| Models | Training Data | Training Normal (%) | Training Mild (%) | Training Moderate (%) | Training Severe (%) | Training Overall (%) | Prediction Normal (%) | Prediction Mild (%) | Prediction Moderate (%) | Prediction Severe (%) | Prediction Overall (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| PLS-DA | Sound | 97.27 | 83.19 | 71.17 | 84.91 | 83.97 | 91.67 | 80.49 | 74.29 | 78.79 | 81.38 |
| PLS-DA | Vis–NIRS | 82.73 | 77.31 | 63.96 | 88.68 | 76.59 | 75.00 | 58.54 | 62.86 | 84.85 | 69.66 |
| PLS-DA | Sound–Vis–NIRS | 96.36 | 92.44 | 94.59 | 96.23 | 94.66 | 94.44 | 82.93 | 94.29 | 87.89 | 89.66 |
| SVM | Sound | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 92.68 | 88.57 | 93.94 | 93.79 |
| SVM | Vis–NIRS | 97.27 | 84.87 | 88.29 | 96.23 | 90.84 | 94.44 | 80.49 | 82.86 | 84.85 | 85.52 |
| SVM | Sound–Vis–NIRS | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 97.22 | 90.24 | 94.29 | 100.00 | 95.17 |

| Models | Training Data | Training Normal (%) | Training Mild (%) | Training Moderate (%) | Training Severe (%) | Training Overall (%) | Prediction Normal (%) | Prediction Mild (%) | Prediction Moderate (%) | Prediction Severe (%) | Prediction Overall (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| MLP-Transformer | Sound | 98.15 | 97.27 | 98.18 | 99.07 | 98.16 | 97.22 | 92.68 | 97.14 | 100.00 | 96.55 |
| MLP-Transformer | Vis–NIRS | 98.18 | 94.12 | 98.20 | 98.11 | 96.94 | 97.22 | 82.93 | 85.71 | 93.94 | 89.66 |
| MLP-Transformer | Sound–Vis–NIRS | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 97.56 | 97.14 | 100.00 | 98.62 |
| ResNet | Sound | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 88.89 | 92.68 | 82.86 | 100.00 | 90.08 |
| ResNet | Vis–NIRS | 98.18 | 99.16 | 100.00 | 98.11 | 98.98 | 91.67 | 78.05 | 85.71 | 90.91 | 86.21 |
| ResNet | Sound–Vis–NIRS | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 97.22 | 92.68 | 88.57 | 100.00 | 94.48 |

| Models | Indicators (%) | Normal | Mild | Moderate | Severe | Weighted_P | Weighted_R | Weighted_F1 |
|---|---|---|---|---|---|---|---|---|
| MLP-Transformer | Precision | 100.00 | 100.00 | 97.14 | 97.06 | 98.64 | | |
| MLP-Transformer | Recall | 100.00 | 97.56 | 97.14 | 100.00 | | 98.62 | |
| MLP-Transformer | F1 Score | 100.00 | 98.77 | 97.14 | 98.51 | | | 98.62 |
| ResNet | Precision | 94.59 | 91.57 | 92.54 | 100.00 | 94.59 | | |
| ResNet | Recall | 97.22 | 92.68 | 88.57 | 100.00 | | 94.48 | |
| ResNet | F1 Score | 94.59 | 91.57 | 92.54 | 100.00 | | | 94.47 |
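
The weighted indicators in the table above are consistent with a support-weighted mean of the per-class values. The following minimal sketch illustrates that calculation for the MLP-Transformer rows. The class sizes in `SUPPORT` (36, 41, 35, 33 prediction-set samples for Normal, Mild, Moderate, Severe) are an assumption inferred from the per-class percentages and are not stated in the tables; `weighted_average` is a hypothetical helper name, not the authors' code.

```python
from typing import Sequence


def weighted_average(per_class: Sequence[float], support: Sequence[int]) -> float:
    """Return the support-weighted mean of per-class scores (same unit as inputs)."""
    if len(per_class) != len(support):
        raise ValueError("per_class and support must have the same length")
    total = sum(support)
    if total <= 0:
        raise ValueError("support must sum to a positive number")
    return sum(score * n for score, n in zip(per_class, support)) / total


# ASSUMPTION: prediction-set sizes for Normal, Mild, Moderate, Severe,
# inferred from the per-class percentages; not given in the tables.
SUPPORT = (36, 41, 35, 33)

# Per-class values (%) copied from the MLP-Transformer rows of the table.
MLP_TRANSFORMER = {
    "precision": (100.00, 100.00, 97.14, 97.06),
    "recall": (100.00, 97.56, 97.14, 100.00),
    "f1": (100.00, 98.77, 97.14, 98.51),
}

if __name__ == "__main__":
    for name, values in MLP_TRANSFORMER.items():
        print(f"weighted {name}: {weighted_average(values, SUPPORT):.2f}")
```

Under the assumed class sizes, the three results round to 98.64, 98.62, and 98.62, which agrees with the Weighted_P, Weighted_R, and Weighted_F1 entries reported for MLP-Transformer. The weighted recall also equals the overall prediction-set accuracy, as expected when each class is weighted by its sample count.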

| Objects | Detection Methods | Algorithm | Accuracy | References |
|---|---|---|---|---|
| Moldy apple core | Vis–NIR | BPNN | 93% | [39] |
| Moldy apple core | Vis–NIR | AdaBoost | 97.3% | [40] |
| Moldy apple core | Vis–NIR | PLS-DA | 89.39% | [41] |
| Moldy apple core | Acoustic | ELM | 93.9% | [4] |
| Moldy apple core | Acoustic | IResNet50 | 96.7% | [35] |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, N.; Zhang, X.; Liu, Z.; Zhang, T.; Lai, Q.; Li, B.; Lu, Y.; Hu, B.; Jiang, X.; Liu, Y. Fusion of Acoustic and Vis-NIRS Information for High-Accuracy Online Detection of Moldy Core in Apples. Agriculture 2025, 15, 1202. https://doi.org/10.3390/agriculture15111202
