Research on Recognition of Green Sichuan Pepper Clusters and Cutting-Point Localization in Complex Environments

Abstract

1. Introduction

2. Materials and Methods

2.1. Green Sichuan Pepper Trees and Harvesting Environment

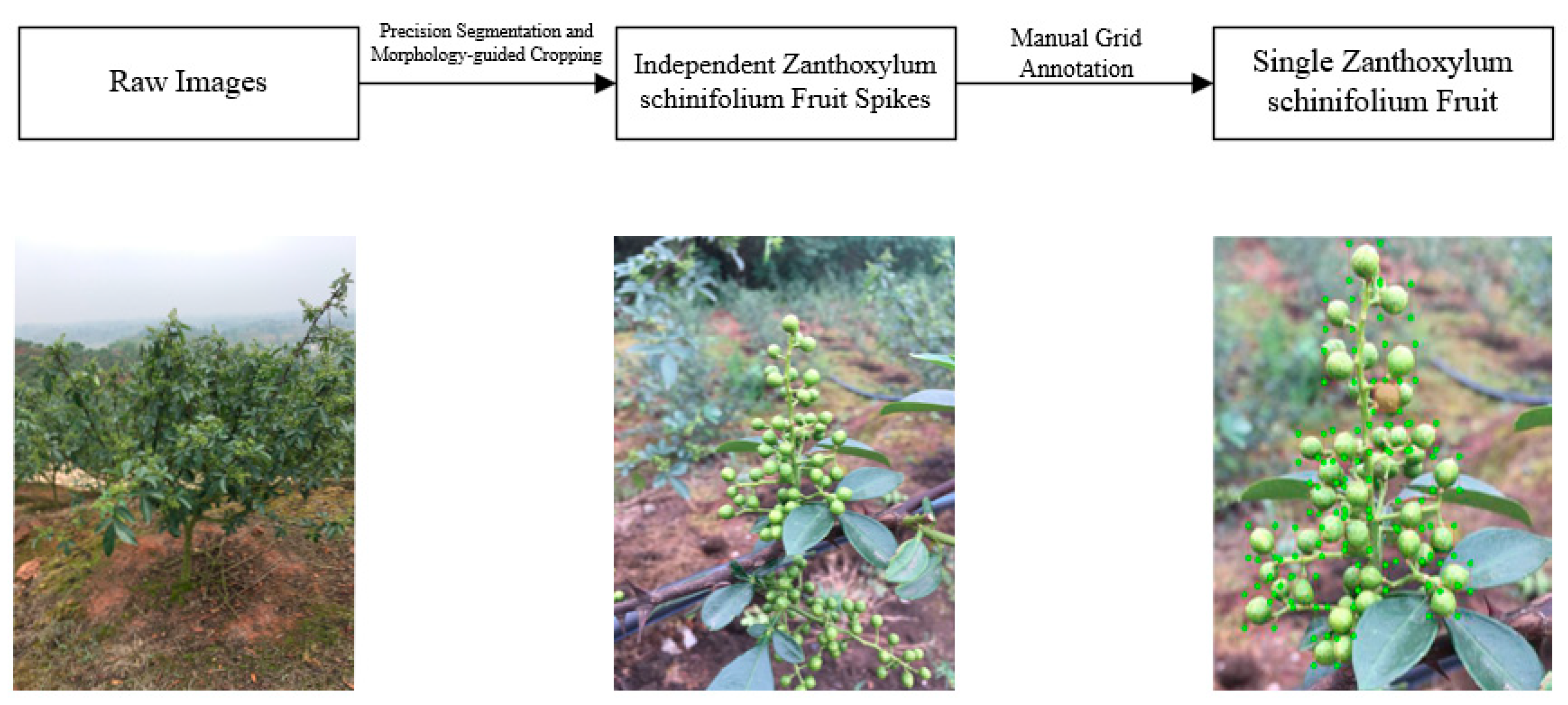

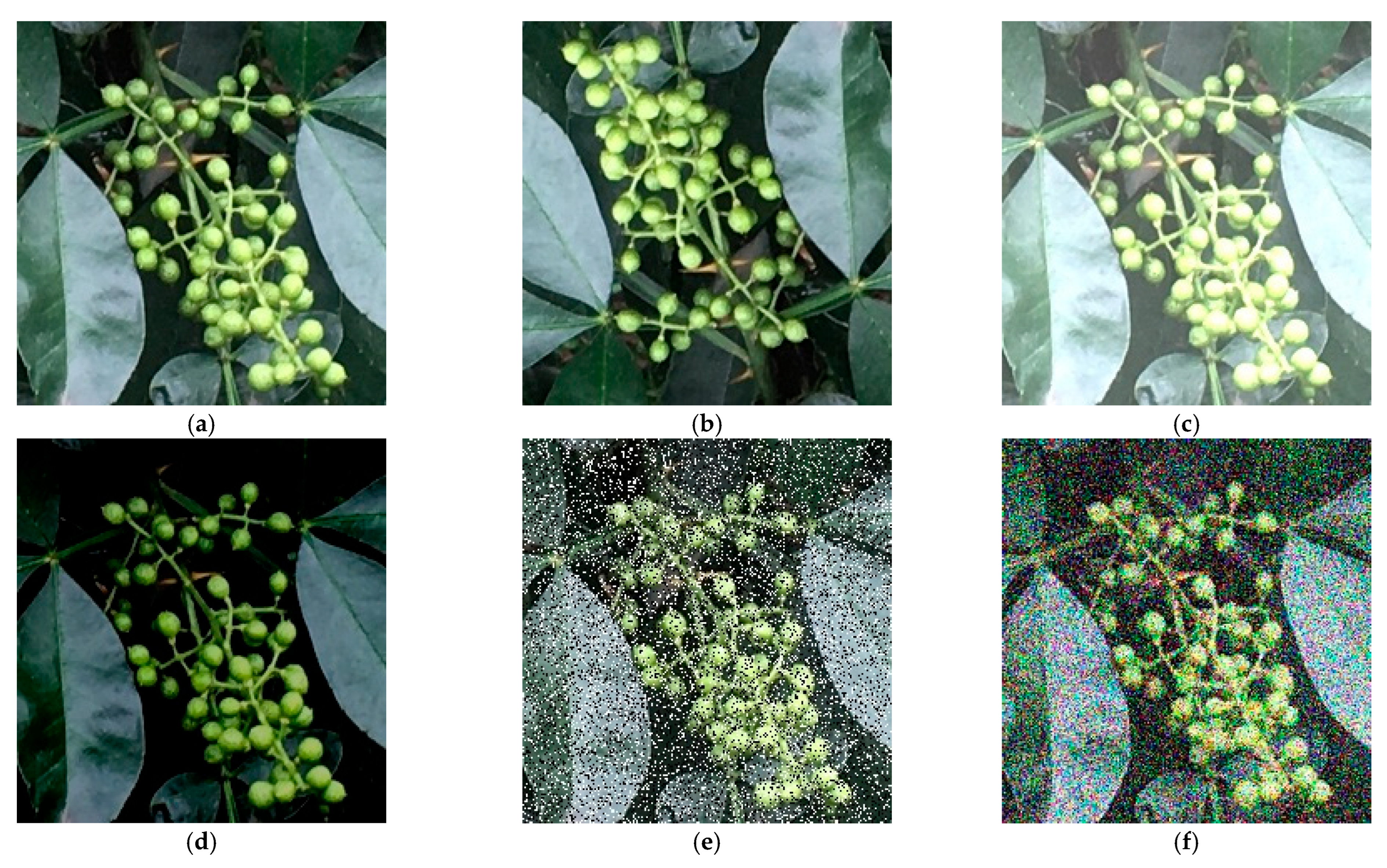

2.2. Green Sichuan Pepper Fruit Dataset

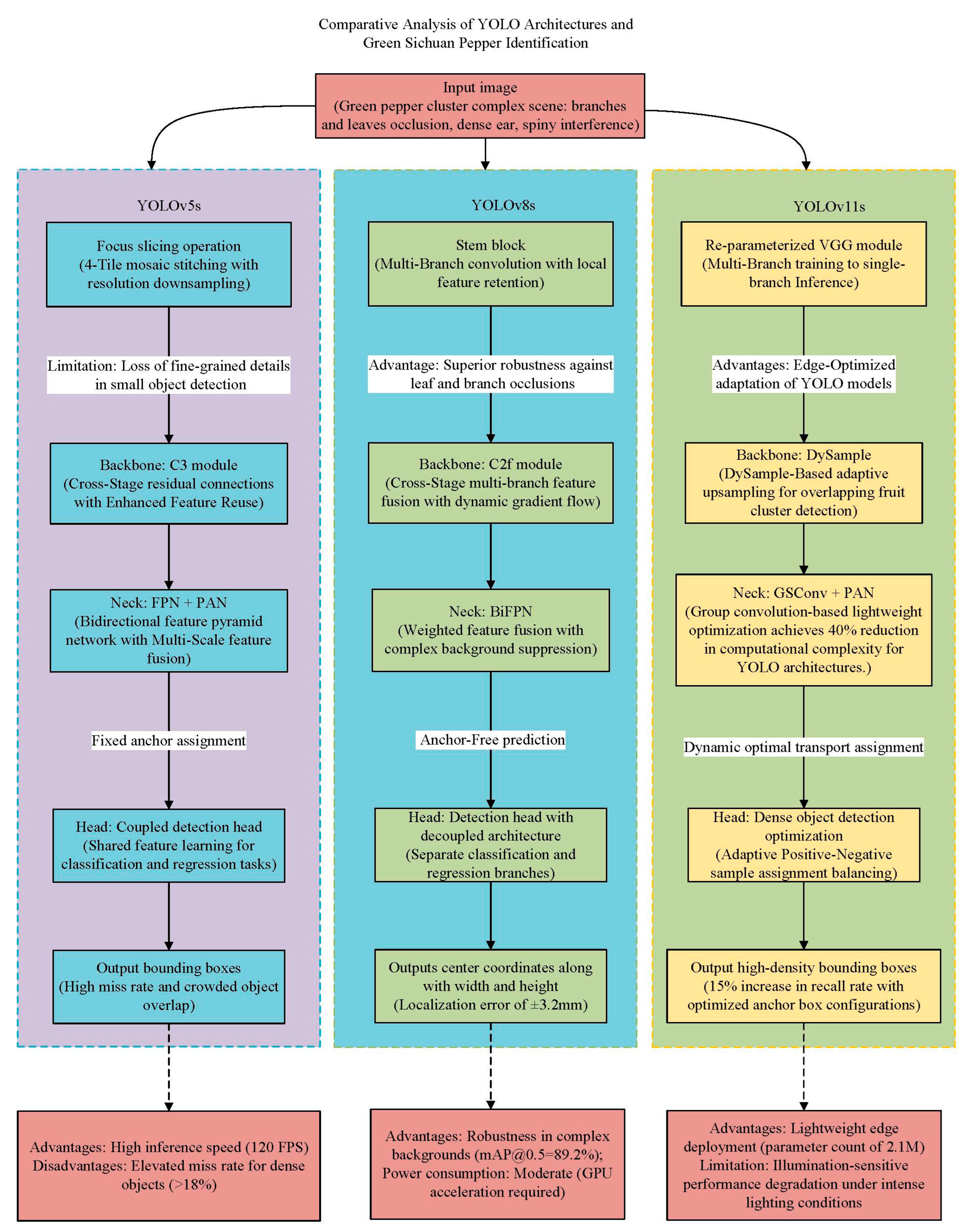

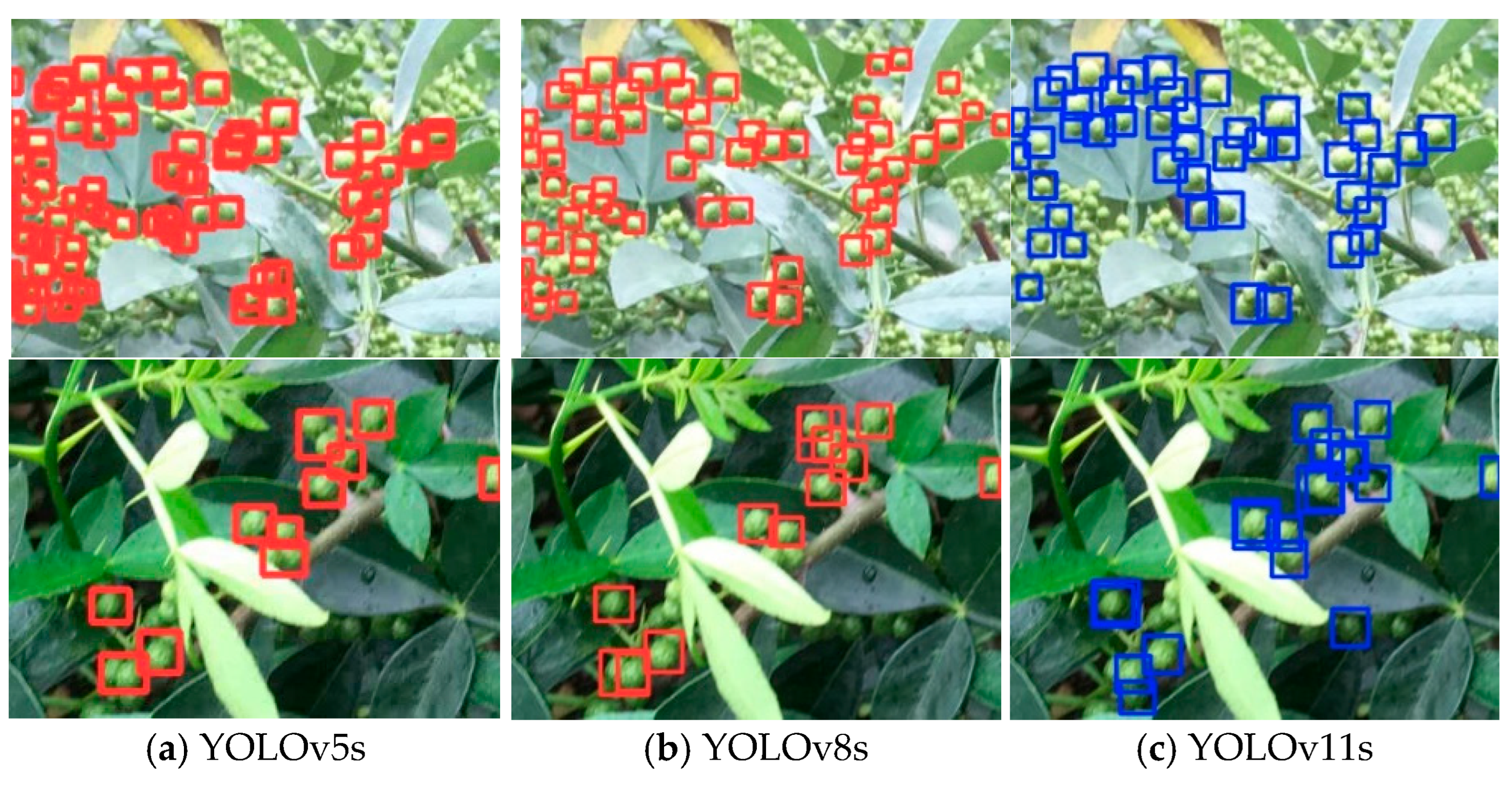

2.3. Comparative Analysis of Green Sichuan Pepper Recognition Algorithms Based on YOLO Architectures

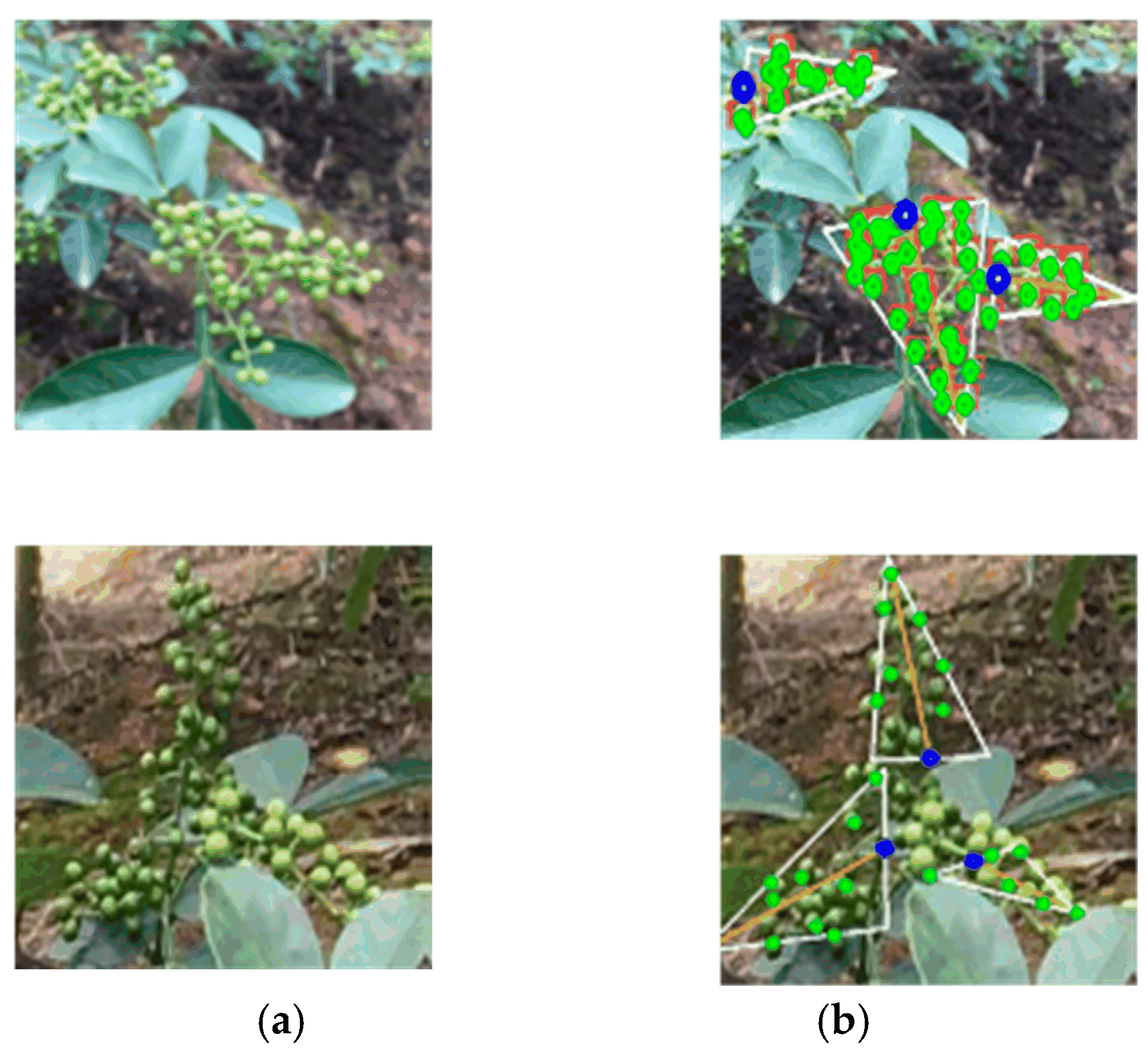

2.4. Algorithm Design for Green Sichuan Pepper Cutting-Point Localization

3. Results and Analysis

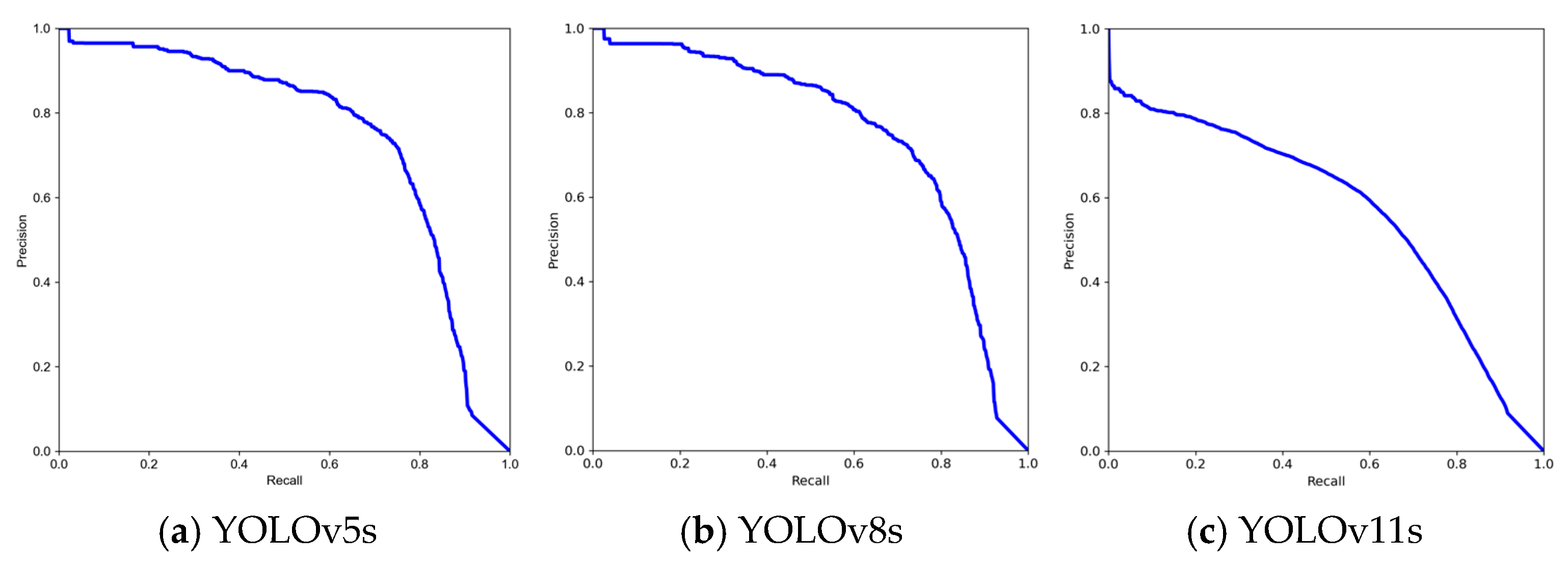

3.1. Comparative Training of Green Pepper Recognition Algorithm Models Based on YOLO Architecture

3.1.1. Model Training Configuration and Evaluation Metrics

3.1.2. Comparative Training Results and Analysis of Models

3.2. Green Pepper Cutting-Point Experiment

3.2.1. Cutting-Point Evaluation Parameters

3.2.2. Experimental Results and Error Analysis of Green Pepper Cutting-Point Program

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


| Model | mAP@0.5 | Precision | Recall | F1 | File Size (MB) |
|---|---|---|---|---|---|
| YOLOv5s | 0.750 | 0.707 | 0.750 | 0.69 | 13.7 |
| YOLOv8s | 0.753 | 0.746 | 0.754 | 0.719 | 5.94 |
| YOLOv11s | 0.567 | 0.730 | 0.910 | 0.754 | 5.19 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Niu, Q.; Ma, W.; Diao, R.; Yu, W.; Wang, C.; Li, H.; Wang, L.; Li, C.; Wang, P. Research on Recognition of Green Sichuan Pepper Clusters and Cutting-Point Localization in Complex Environments. Agriculture 2025, 15, 1079. https://doi.org/10.3390/agriculture15101079
