Abstract

Background: Artificial intelligence (AI) is increasingly shaping the landscape of emergency surgery by offering real-time decision support, enhancing diagnostic accuracy, and optimizing workflows. However, its implementation raises significant ethical concerns, particularly regarding accountability, transparency, patient autonomy, and bias. Objective: This perspective paper, grounded in a narrative review, explores the ethical dilemmas associated with AI in emergency surgery and proposes future directions for its responsible and equitable integration. Methods: A comprehensive narrative review was conducted using PubMed, Scopus, Web of Science, and Google Scholar, covering the literature published from January 2010 to December 2024. We focused on peer-reviewed articles discussing AI in surgical or emergency care and highlighting ethical, legal, or regulatory issues. A thematic analysis was used to synthesize the main ethical challenges. Results: Key ethical concerns identified include issues of accountability in AI-assisted decision-making, the “black box” effect and bias in algorithmic design, data privacy and protection, and the lack of global regulatory coherence. Thematic domains were developed around autonomy, beneficence, justice, transparency, and informed consent. Conclusions: Responsible AI implementation in emergency surgery requires transparent and explainable models, diverse and representative datasets, robust consent frameworks, and clear guidelines for liability and oversight. Interdisciplinary collaboration is essential to align technological innovation with patient-centered and ethically sound clinical practice.

1. Introduction

The rapid advancement of artificial intelligence (AI)-driven technologies is transforming the field of surgery, with emergency surgery emerging as a key area where AI can provide significant clinical benefits. In clinical practice, the Predictive OpTimal Trees in Emergency Surgery Risk (POTTER) calculator, an AI-based tool, has demonstrated superior accuracy in predicting postoperative mortality and complications compared to surgeons’ assessments [1]. Additionally, the development of AI-powered applications like POTTER-Intensive Care Unit (ICU), which predicts the need for ICU admission after emergency surgery, exemplifies how AI can assist in triaging patients and potentially reduce failure-to-rescue rates [2]. AI-based systems are being leveraged to process vast amounts of patient data, assist in decision-making, predict complications, and optimize surgical workflows [3]. However, the unpredictable nature of emergency settings introduces ethical challenges that require careful consideration.

The balance between the autonomy of AI-driven tools and human responsibility in making decisions or performing a surgical procedure may seem unclear. A core issue in AI-assisted emergency surgery is maintaining human oversight while leveraging AI’s capabilities, as shown in Figure 1 [4]. In high-pressure, time-sensitive environments where rapid decision-making is required, AI must function as a supportive tool rather than a replacement for human clinical judgment [4,5]. The question remaining is this: “Can AI improve outcomes without undermining ethical standards and human responsibility in surgical decision-making?”

Figure 1.

Artificial intelligence in emergency surgery: the result of the augmented eye, brain, and hand provided by AI tools, under the supervision of an experienced surgeon.

Despite the growing body of literature on AI in surgery, relatively few studies have focused on the specific ethical, legal, and regulatory concerns associated with AI integration in emergency settings. Issues such as informed consent under urgent conditions, data privacy in perioperative environments, algorithmic bias, and lack of explainability pose complex dilemmas. Moreover, the acceleration of technological development has outpaced the establishment of robust frameworks for ethical governance, transparency, and accountability.

This paper explores these challenges through a narrative review of the key ethical and regulatory issues surrounding the implementation of AI in emergency surgery and offers recommendations for clinical practice. Rather than pursuing a quantitative synthesis, we adopt a thematic and critical lens to identify recurring concerns, unresolved questions, and areas requiring further research, with particular attention to issues specific to the emergency setting.

2. Methods

This paper is based on a narrative review of the literature, with the aim of identifying and analyzing the most relevant ethical concerns and governance challenges related to AI integration in emergency surgery.

We conducted a search of the literature across the PubMed, Scopus, Web of Science, and Google Scholar databases. The search strategy included the following terms, alone or in combination: “artificial intelligence”, “machine learning”, “computer vision”, “digital surgery”, “emergency surgery”, “healthcare”, “ethics”, “bioethics”, “informed consent”, “black box effect”, “data privacy”, and “regulatory frameworks”.

In our analysis, we included peer-reviewed articles published in English between January 2010 and December 2024, focused on AI technologies in surgical or emergency settings, addressing at least one ethical, legal, or governance issue related to AI use.

Commentaries, non-peer-reviewed materials, editorials, and articles lacking focus on ethical or regulatory aspects were excluded.

An initial pool of 387 articles was identified. After removing duplicates and applying inclusion/exclusion criteria, 112 full-text articles were assessed, and 67 were selected for final inclusion.

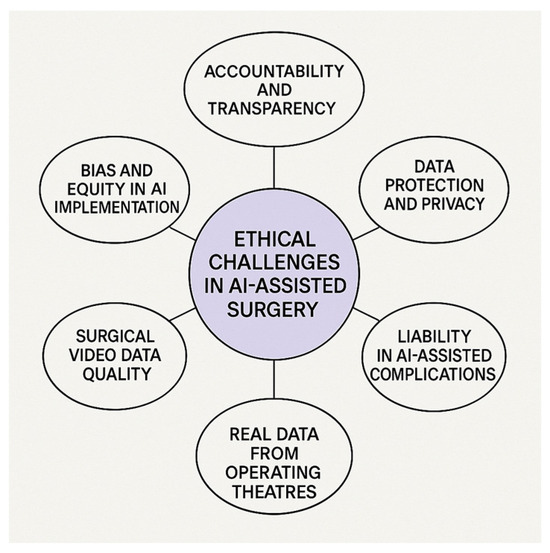

We employed thematic analysis based on Braun and Clarke’s six-phase approach: (1) familiarization with the data; (2) generation of initial codes; (3) searching for themes; (4) reviewing themes; (5) defining and naming themes; and (6) writing up the findings. Key ethical concerns were categorized into six main themes, which structure the core of the discussion presented in this manuscript, as shown in Figure 2 [6].

Figure 2.

Key ethical challenges in AI-assisted emergency surgery. The figure presents six main ethical themes identified through thematic analysis, providing a conceptual framework for the discussion in this review.

This narrative review did not involve quantitative synthesis or formal quality appraisal of studies, in line with its exploratory and integrative nature.

3. Results

A total of 67 sources were deemed eligible for review. The thematic analysis led to the identification of six major ethical domains: accountability, transparency, data quality, autonomy, liability, and privacy and informed consent. These themes emerged consistently across articles addressing AI integration in surgical and emergency settings, and were selected based on frequency of appearance, depth of ethical discussion, and relevance to acute care contexts.

Notably, while most articles highlighted the promise of AI in enhancing clinical decision-making, few offered detailed solutions for managing associated risks. Many sources emphasized the importance of explainability and fairness, but lacked a unified framework or consensus on implementation. Discrepancies were also observed between regions, with European and North American authors focusing more on legal governance, while others prioritized technical development. These inconsistencies reflect the urgent need for harmonized ethical standards and were critically considered in the formulation of our discussion and recommendations.

To provide a clearer overview of the literature analyzed, Table 1 summarizes the key studies included in this narrative review, their characteristics, and the primary ethical themes discussed.

Table 1.

Summary of key studies included in the narrative review.

4. Ethical Considerations in AI-Assisted Emergency Surgery: Summary of Evidence and Discussion of Critical Issues for Implementation

Ethical concerns in AI-assisted surgery primarily revolve around informed consent, privacy protection, trust, and potential legal implications [13].

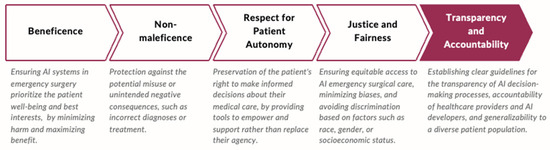

The ethical framework guiding the adoption of new surgical technologies is typically structured around four core principles: beneficence, non-maleficence, autonomy, and justice, as summarized in Figure 3 [14].

Figure 3.

Ethical principles guiding AI integration in surgical care. This framework illustrates how the core bioethical principles—beneficence, non-maleficence, autonomy, and justice—inform patient-centered decision-making when adopting AI technologies in surgery.

However, the ethical landscape of AI in surgery—particularly regarding autonomous and semi-autonomous actions—remains uncertain. This is largely due to the fact that AI-driven procedures could be executed through surgical robots already approved for human use, rather than AI operating independently inside the patient’s body. While robotic arms will deliver AI-powered movements, the AI itself will function externally. Regulatory approval for technologies that do not directly interact with a patient’s internal anatomy is often more straightforward compared to those that do [15].

A current example of an autonomous surgical device already used in surgical practice is the AI-driven automatic stapler for intestinal anastomosis, which adjusts stapling speed according to tissue thickness using built-in sensors [16].

As AI-driven automation advances, the question arises of whether more rigorous regulatory processes will be required for increasingly autonomous surgical systems, even if the AI system remains external to the patient.

From an ethical standpoint, the experiences of surgeons and patient expectations in AI-assisted surgery can be categorized into five key areas: rescue, proximity, ordeal, aftermath, and presence [4]. Additionally, experts identified six fundamental ethical concerns in this field: reliability of robotic and AI systems; respect for patient privacy and data protection; use of comprehensive and unbiased datasets; transparency and recognition of AI limitations; equity in healthcare access—avoiding the exacerbation of disparities; and AI as a tool for enhancing surgical education and training [4].

As AI technologies continue to evolve in surgery, addressing these ethical challenges will be crucial in ensuring safe, equitable, and transparent implementation.

4.1. Accountability and Transparency

One of the primary ethical concerns surrounding AI in surgery is accountability. If an AI-driven system contributes to a medical error or adverse outcome, determining responsibility becomes complex [7,17]. Traditional medical malpractice frameworks rely on the concept of human agency, which AI challenges by introducing automated decision-making elements. The legal and ethical framework must establish clear accountability mechanisms that delineate human versus machine responsibility in clinical decision-making [18].

The potential for AI-driven decisions to contribute to adverse outcomes depends on the quality, completeness, and representativeness of the datasets used to train AI models. Many current algorithms are built on retrospective or non-standardized data, often lacking input from diverse patient populations or reflecting the unique dynamics of emergency care environments. This “data quality gap” can result in biased or non-generalizable predictions, especially when applied to underrepresented clinical scenarios [7,17,18].

Transparency and interpretability of AI-driven decision-making are essential to fostering trust among healthcare providers and patients. The “black box effect” refers to recommendations generated by AI algorithms without clear explanations. It means that although the model may produce accurate predictions, it is often unclear how or why a given output is reached. This opacity poses serious concerns in emergency surgery, where clinical decisions must be rapidly justified and clearly communicated. Explainable AI (XAI) seeks to address this by providing interpretable decision-making models, ensuring that clinicians can understand and validate AI-driven recommendations before acting upon them [19]. XAI principles are particularly relevant for Generative Pretrained Transformers (GPTs) and other deep learning models, which, despite their impressive capabilities, often lack interpretability and transparency [20,21,22].

In emergency settings, where time is limited and decisions carry high stakes, explainability becomes even more critical. Surgeons must be able to interpret and, if needed, challenge AI recommendations. Emerging solutions include visual heatmaps, logical flow diagrams, and probabilistic reasoning layers that clarify the rationale behind predictions [19,20,21,22].

Despite these advances, current explainability tools are often not integrated into clinical interfaces.

4.2. Bias and Equity in AI Algorithms

AI algorithms are trained on historical datasets which may inherently reflect existing biases in healthcare and data quality. If training datasets have an underrepresentation of certain racial, socioeconomic, or gender groups, AI models risk perpetuating these disparities rather than mitigating them [23]. The concept of “health data poverty” highlights how certain populations remain underrepresented in medical research, potentially leading to biased AI recommendations [24]. Addressing these biases requires large, diverse, and inclusive datasets, rigorous validation, and continuous monitoring of AI performance across different patient populations [25,26].
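The continuous monitoring across patient populations described above can be illustrated with a minimal sketch. It assumes a hypothetical list of records, each holding a subgroup label, the model's binary prediction, and the observed outcome. The toy data, the function names, and the choice of sensitivity as the monitored metric are illustrative assumptions, not drawn from any of the cited studies.

```python
from collections import defaultdict

def sensitivity_by_group(records):
    """Return per-subgroup sensitivity (true-positive rate).

    Each record is a (group, predicted, actual) tuple with 0/1 labels.
    Groups with no positive cases are reported as None.
    """
    tp = defaultdict(int)   # true positives per group
    pos = defaultdict(int)  # actual positives per group
    groups = set()
    for group, predicted, actual in records:
        groups.add(group)
        if actual == 1:
            pos[group] += 1
            if predicted == 1:
                tp[group] += 1
    return {g: (tp[g] / pos[g] if pos[g] else None) for g in sorted(groups)}

def max_gap(rates):
    """Largest difference in sensitivity between any two evaluated groups."""
    values = [r for r in rates.values() if r is not None]
    return max(values) - min(values) if values else 0.0

# Hypothetical toy data: (subgroup, model prediction, observed outcome)
toy = [
    ("A", 1, 1), ("A", 1, 1), ("A", 0, 1), ("A", 0, 0),
    ("B", 1, 1), ("B", 0, 1), ("B", 0, 1), ("B", 0, 1),
]
rates = sensitivity_by_group(toy)
print(rates, max_gap(rates))
```

A large gap between subgroups would be a signal for further investigation rather than proof of bias, since small subgroup sizes alone can produce unstable estimates.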

The General Data Protection Regulation (GDPR), adopted in the European Union (EU) in 2016 and applicable since May 2018, primarily addresses data security and patient privacy but does not directly mitigate biases or underrepresentation in AI training datasets [27]. While regulatory measures safeguard patient confidentiality, additional frameworks are needed to promote ethical research practices and ensure AI-driven surgical innovations benefit all patient populations equitably. Ensuring compliance with Health Insurance Portability and Accountability Act (HIPAA) security standards is crucial for the protection of Electronic Health Records (EHRs), requiring AI technologies to align with evolving regulatory frameworks [28].

4.3. Data Protection and Privacy

Given the complexity of multidisciplinary healthcare, curating accurate, representative datasets requires comprehensive efforts.

One significant initiative driving data protection and transparency is the FAIR guiding principles, which emphasize Findability, Accessibility, Interoperability, and Reusability. These principles are essential in medical Big Data, promoting not only security but also data reproducibility, validation, and generalizability [29]. Further challenges include vulnerabilities to cybersecurity threats such as malware and hacking, which pose risks to AI-driven interfaces. One proposed safeguard for these interfaces is an AI “trustworthy architecture that uses decentralized blockchain characteristics such as smart contracts and trust oracles” [30].

In surgery, challenges related to video data storage further complicate AI integration, particularly regarding compliance with privacy regulations. The integration of AI into surgical perioperative decision-making heavily relies on the availability and quality of surgical video data. However, managing and storing video data presents several challenges that can impact the effectiveness of AI applications, such as the following [31]:

- Storage Capacity and Infrastructure:

  - High Data Volume: Surgical procedures, especially those recorded in high-definition formats, generate substantial amounts of data. Continuous recording of all surgeries can quickly exceed existing storage capacities, necessitating significant investments in scalable storage solutions.

  - Cost Implications: Maintaining and upgrading storage infrastructure to accommodate the growing volume of video data can be financially burdensome for healthcare institutions, particularly those with limited resources.

- Data Management and Accessibility:

  - Efficient Retrieval: As the volume of stored video data increases, implementing effective data management systems becomes essential to ensure that relevant videos can be easily retrieved for analysis and review.

  - Standardization Issues: Variations in video formats, annotations, and metadata can complicate data integration and analysis, underscoring the need for standardized protocols in video recording and storage.

- Legal and Ethical Considerations:

  - Patient Privacy: Surgical videos often contain sensitive patient information. Ensuring compliance with data protection regulations, such as GDPR and HIPAA, is crucial to safeguard patient privacy and maintain trust.

  - Consent and Data Ownership: Clarifying issues related to informed consent for recording and using surgical videos, as well as determining data ownership, is essential to address ethical and legal concerns.
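One small part of the patient privacy concern listed above, removing direct identifiers from the metadata stored alongside a surgical video, can be sketched as follows. The field names, the record layout, and the key handling are hypothetical assumptions for illustration. Keyed pseudonymization of this kind does not by itself amount to anonymization under GDPR, and it does nothing about identifying content visible in the video frames themselves.

```python
import hashlib
import hmac

# Fields treated as direct identifiers in this sketch (an assumption).
IDENTIFIER_FIELDS = {"patient_name", "date_of_birth", "medical_record_number"}

def pseudonymize(record, secret_key):
    """Return a copy of `record` without direct identifiers, plus a keyed pseudonym.

    The pseudonym is an HMAC-SHA256 of the medical record number, so the same
    patient maps to the same pseudonym only for holders of `secret_key`.
    """
    mrn = record["medical_record_number"].encode("utf-8")
    pseudonym = hmac.new(secret_key, mrn, hashlib.sha256).hexdigest()[:16]
    cleaned = {k: v for k, v in record.items() if k not in IDENTIFIER_FIELDS}
    cleaned["pseudonym"] = pseudonym
    return cleaned

# Hypothetical metadata record; in practice the key would live in a secrets store.
key = b"replace-with-a-securely-stored-key"
video_meta = {
    "patient_name": "Jane Doe",
    "date_of_birth": "1970-01-01",
    "medical_record_number": "MRN-0001",
    "procedure": "laparoscopic cholecystectomy",
    "video_file": "case_0001.mp4",
}
print(pseudonymize(video_meta, key))
```

Using a keyed hash rather than a plain hash is a deliberate choice here: without the key, an attacker cannot rebuild the mapping simply by hashing candidate record numbers.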

4.4. Surgical Data Quality

Enhancing the quality of surgical video data to feed AI algorithms is essential for effective AI integration in perioperative decision-making. Proper annotation, expert validation, and structured labeling ensure that AI models are trained on clinically relevant data, improving accuracy and reliability. However, challenges persist due to a lack of uniform annotation guidelines and standardization. Differences in terminology and classification methods among surgeons and across institutions create inconsistencies that hinder algorithm development and cross-center generalizability.

To mitigate these issues, the implementation of standardized frameworks—such as the SAGES (Society of American Gastrointestinal and Endoscopic Surgeons) consensus on surgical video structuring—is crucial [31]. Manual annotation is time-consuming and subject to inter-observer variability, even among experienced surgeons. Hybrid models that combine computer vision (CV)-generated pre-annotations with expert review can reduce workload while maintaining high precision [8,9,32].

Several structured approaches can improve annotation quality. Hierarchical annotation models that categorize surgical steps into structured stages (e.g., incision, dissection, hemostasis, closure) facilitate AI learning and interpretation. Segmentation-based labeling helps AI models differentiate critical anatomical landmarks, such as Calot’s Triangle in laparoscopic cholecystectomy [10]. Additionally, adopting common surgical taxonomies as recommended by SAGES and EAES (European Association for Endoscopic Surgery) ensures consistency across multiple institutions [33,34].

Beyond annotation, expert validation and quality control play a crucial role in ensuring an AI system’s reliability. A multi-tiered review process, in which junior surgeons provide initial annotations, senior surgeons refine them, and AI-assisted correction is applied, enhances dataset accuracy. Crowdsourced labeling platforms, where multiple experts collaboratively annotate large datasets, can further improve precision and efficiency [33]. A study by Hong et al. emphasizes the importance of expert-generated annotations in surgical phase recognition. The researchers observed that discrepancies in annotations, even among experts, can affect the generalization performance of Convolutional Neural Networks (CNNs). By implementing a rigorous annotation process involving multiple specialists, they achieved improved performance in surgical phase recognition models [35].
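Disagreement between annotators of the kind discussed above is commonly quantified with a chance-corrected agreement statistic. The sketch below computes Cohen's kappa for two annotators. This is a standard statistic chosen here for illustration, not necessarily the measure used in the cited studies, and the phase labels and annotator names are hypothetical.

```python
from collections import Counter

def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labeling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label sequences must be non-empty and of equal length")
    n = len(labels_a)
    # Observed agreement: fraction of items given identical labels.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected agreement by chance, from each annotator's label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)

# Hypothetical phase labels assigned to six video segments by two surgeons
surgeon_1 = ["incision", "dissection", "dissection", "hemostasis", "closure", "closure"]
surgeon_2 = ["incision", "dissection", "hemostasis", "hemostasis", "closure", "closure"]
print(round(cohen_kappa(surgeon_1, surgeon_2), 3))
```

In this toy example the two surgeons agree on five of six segments, but kappa is lower than the raw 83% agreement because part of that agreement would be expected by chance.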

Emerging collaborative models, such as multi-tiered expert review and crowdsourced labeling platforms, have demonstrated potential. For example, the Annotated Videos of Open Surgery (AVOS) dataset, created through crowd-annotated surgical videos, enabled the development of AI systems capable of interpreting complex intraoperative behavior in real time [35,36].

Advancements in deep learning, including the use of architectures like You Only Look Once (YOLO) v3 and Mask Region-based Convolutional Neural Network (R-CNN), have enabled automated detection of anatomical structures and surgical instruments, accelerating annotation processes [37,38]. Natural Language Processing (NLP) techniques can further enhance data structuring by linking narrative operative reports to corresponding video events [11,39].

Refining classification systems through contextual metadata tagging—including surgeon expertise, intraoperative complications, and patient comorbidities—can improve model generalizability and clinical relevance. By integrating structured annotation, validated expert review, and AI-assisted tools, healthcare systems can build reproducible, unbiased datasets to support the safe and effective use of AI in emergency surgery.

4.5. Real-Time Data and Workflow in the Operating Room

The integration of AI into the surgical environment is reshaping operating room (OR) workflows—particularly in emergency surgery, where efficiency, precision, and adaptability are critical. Effective OR management involves balancing multiple factors such as estimating case duration, coordinating staff, prioritizing patients based on urgency, and allocating resources efficiently [40]. Delays or inefficiencies in these processes can increase complications and worsen outcomes, highlighting the need for intelligent workflow optimization tools [41].

AI-driven systems are addressing these challenges by leveraging machine learning (ML), real-time data analytics, and CV to enhance intraoperative monitoring, resource utilization, and patient safety. In unpredictable emergency settings, these tools can dynamically adjust schedules and prioritize cases using classification systems like the New Timing in Acute Care Surgery (New TACS), which stratify patients by disease severity and timing needs [42].
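The idea of prioritizing cases by timing needs can be sketched with a simple priority queue. The timing classes and target windows below are hypothetical placeholders and are not the actual New TACS categories. A real scheduling system would also have to account for staffing, theater availability, and clinical reassessment.

```python
import heapq

# Hypothetical timing classes (target hours to surgery); NOT the New TACS categories.
TARGET_HOURS = {"immediate": 0, "urgent": 6, "expedited": 24}

def schedule(cases):
    """Order cases by latest acceptable start time (arrival + target window).

    Each case is a (case_id, arrival_hour, timing_class) tuple.
    """
    heap = []
    for order, (case_id, arrival, timing_class) in enumerate(cases):
        deadline = arrival + TARGET_HOURS[timing_class]
        # `order` breaks ties so that earlier-listed cases come first.
        heapq.heappush(heap, (deadline, order, case_id))
    return [heapq.heappop(heap)[2] for _ in range(len(heap))]

# Hypothetical waiting list: (case id, arrival time in hours, timing class)
waiting = [
    ("c1", 0.0, "expedited"),
    ("c2", 1.0, "urgent"),
    ("c3", 2.0, "immediate"),
    ("c4", 0.5, "urgent"),
]
print(schedule(waiting))
```

Because a new arrival is simply pushed onto the heap, the same structure can be used to re-sort the queue whenever an additional emergency case is admitted.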

A notable innovation is the OR Black Box System—an AI-powered platform inspired by aviation’s black box concept. It records synchronized data streams, including audio, video, vitals, and equipment metrics, to identify risks and promote quality improvement. The system de-identifies visual and audio data to preserve team privacy while enabling constructive feedback on technical and non-technical performance [43,44].

Real-world adoption has demonstrated its effectiveness. Stanford Hospital reported improved safety protocols and workflow efficiency, while Toronto General Hospital observed fewer non-technical errors, especially those linked to miscommunication and stress [45,46]. These insights foster continuous professional development and help identify systemic issues, such as equipment failures or protocol deviations, allowing for targeted interventions [47,48].

In parallel, CV-based AI tools are being used to support surgical phase recognition and anatomical landmark identification, enhancing precision and reducing operative times. For instance, AI models trained to detect the “Critical View of Safety” in laparoscopic cholecystectomy have helped reduce bile duct injuries and standardize procedural safety [49]. Instrument tracking systems further assist OR teams by anticipating surgical needs, minimizing delays, and optimizing intraoperative efficiency [50].

While the benefits of AI-enhanced OR workflows are substantial, caution is warranted. Excessive reliance on automation risks undermining clinical autonomy. AI should function as an augmentation—not a replacement—of surgical judgment, maintaining the surgeon’s central role in intraoperative decision-making.

Equity must also be considered. Many AI tools are trained on data from well-resourced centers, limiting their generalizability to underfunded or rural hospitals. Ensuring dataset diversity is essential to avoid amplifying healthcare disparities and to guarantee the safe implementation of AI across various surgical contexts.

Finally, widespread use of AI-based OR monitoring raises ethical concerns around data privacy and ownership. Even with de-identification protocols, unresolved questions persist regarding the long-term storage and use of this data for research or quality improvement. Transparent governance frameworks will be crucial to protect patient and clinician rights while fostering trust in AI-driven innovation.

4.6. Informed Consent in Emergency AI-Assisted Surgery

Unlike elective surgeries, emergency procedures often occur under circumstances where obtaining informed consent is challenging. AI integration adds another layer of complexity, as patients may not fully understand its role in their treatment. Studies have indicated that patients prefer transparency regarding AI involvement in their care and show increased trust when AI systems are disclosed and explained [51,52]. Furthermore, evidence suggests that when patients are adequately educated about AI’s role, their acceptance and confidence in AI-assisted procedures significantly improve, highlighting the necessity for transparency in AI deployment [53]. Beyond informed consent, AI integration in surgery must align with core ethical principles, particularly the respect for patient autonomy. Ethical concerns arise when AI-driven decisions conflict with individual patient values, potentially leading to recommendations that may not fully reflect personal preferences. This underscores the critical need for AI systems that are not only explainable but also adaptable to patient-centered care. Ensuring that AI remains a supportive tool rather than a substitute for human judgment is essential to maintain ethical integrity in emergency surgical settings [54].

Developing standardized protocols to communicate AI usage to patients or their families is essential for maintaining ethical standards in emergency surgery. As AI continues to evolve in emergency surgical practice, healthcare professionals must advocate for ethical frameworks that prioritize patient engagement, informed consent, and shared decision-making.

4.7. Regulatory and Legal Frameworks

Regulatory frameworks for AI in healthcare vary widely across jurisdictions, as shown in Table 2.

Table 2.

Key global regulatory frameworks related to AI in emergency surgery. FDA: U.S. Food and Drug Administration; GDPR: General Data Protection Regulation; EU: European Union; MHRA: Medicines and Healthcare products Regulatory Agency; NMPA: National Medical Products Administration.

The European Union’s General Data Protection Regulation (GDPR) sets robust standards for data protection and consent, yet it does not directly address key issues such as algorithmic bias or dataset representativeness [27]. In parallel, the U.S. Food and Drug Administration (FDA) has authorized several AI-based surgical tools and continues to update its regulatory pathways for autonomous systems, focusing on safety and performance [55].

In recognition of the need for global alignment, the World Health Organization (WHO) has proposed six core principles for ethical AI governance in health: protecting human autonomy; promoting human well-being, safety, and the public interest; ensuring transparency, explainability, and intelligibility; fostering responsibility and accountability; ensuring inclusiveness and equity; and promoting AI that is responsive and sustainable. The WHO also underscores AI’s potential to improve diagnosis, treatment, self-care, and professional training, provided that implementation adheres to rigorous ethical standards [56].

At the legislative level, the European Commission proposed the first comprehensive AI regulation in 2021. This framework adopts a risk-based approach, requiring that high-risk AI systems in healthcare demonstrate transparency, human oversight, non-discrimination, and traceability [57]. Importantly, it reinforces the principle that automation should support—not replace—human judgment to prevent harm.

However, legal requirements and enforcement mechanisms vary widely by region. As a result, the integration of AI into clinical practice remains uneven, with national policies often lacking cohesion. To ethically advance the field, consistent global standards are needed—particularly in emergency surgery, where real-time, cross-institutional collaboration is common.

The issue of explainability is increasingly central to regulatory efforts. EU legislation, including the GDPR and amended Directive 2011/83 on Consumer Rights, outlines obligations for explainable AI in automated decision-making (e.g., GDPR Articles 13.2(f) and 14.2(g)) [55]. In practice, explainability entails providing clinicians with access to key information such as the following:

- The main features driving the model’s decision;

- All contributing data points;

- How features interact in the model’s logic;

- In some cases, the architecture of the model itself [58].
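The first item in the list above, the main features driving a decision, can be illustrated for the simplest case of a linear (logistic) risk model, where each feature's contribution to the log-odds is its coefficient times its value. The coefficients, feature names, and patient values below are hypothetical and are not taken from POTTER or any validated tool. Non-linear models require dedicated attribution methods that this sketch does not cover.

```python
import math

# Hypothetical coefficients of a logistic risk model (illustrative only).
INTERCEPT = -4.0
COEFFICIENTS = {"age_decades": 0.30, "lactate_mmol_l": 0.45, "on_vasopressors": 1.20}

def explain(patient):
    """Return the predicted risk and each feature's contribution to the log-odds.

    Contributions are sorted by absolute size, largest first.
    """
    contributions = {name: coef * patient[name] for name, coef in COEFFICIENTS.items()}
    log_odds = INTERCEPT + sum(contributions.values())
    risk = 1.0 / (1.0 + math.exp(-log_odds))
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return risk, ranked

# Hypothetical patient
risk, ranked = explain({"age_decades": 7, "lactate_mmol_l": 4.0, "on_vasopressors": 1})
print(f"predicted risk: {risk:.2f}")
for name, value in ranked:
    print(f"{name}: {value:+.2f} log-odds")
```

A linear model has no interaction terms, so this sketch cannot show how features interact. It only shows the kind of ranked, per-feature summary a clinician could review before acting on a recommendation.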

The FDA first approved surgical robots in 2000, and since then, the number of AI/ML-enabled devices has grown dramatically. In 2020, the use of these tools increased by 39% compared to the previous year, with 2023 volumes projected to exceed 30% of all new digital devices [55,59,60,61]. These systems are now used not only to assist surgical procedures but also to support diagnostic accuracy, with the potential to reduce treatment costs by up to 50% and improve health outcomes by 40%.

Nevertheless, regulatory coverage remains limited. Although many AI tools function as decision-support systems, they still pose significant ethical and safety risks—especially when used in high-stakes, autonomous roles. Regulations vary across manufacturers, and existing standards do not yet fully address the complexities of moral accountability, real-world variability, or the diverse patient populations served by AI.

To ensure ethical implementation, regulators must prioritize comprehensive documentation, risk assessment, data validation, and transparency. Privacy protections and equitable data quality standards must also be enforced. Given the complexity and sensitivity of surgical AI systems—especially in emergency care—a unified, globally harmonized regulatory framework is essential for ensuring both innovation and patient safety.

4.8. Liability in AI-Assisted Complications: Who Is Responsible?

The introduction of AI in emergency surgery brings complex medico-legal challenges regarding who is liable when AI-driven decisions contribute to a complication or negative outcome. It is not clear whether the surgeon, the hospital, the AI developer, or the manufacturer is accountable when an AI-assisted (ML-based) system makes an incorrect recommendation or an AI-automated tool malfunctions [62,63].

AI’s role in emergency decision-making must be contextualized according to its intended function and can be defined as follows (Table 3):

- Supportive AI, which supports clinical decision-making;

- AI-assisted decision-making, which provides semi-autonomous guidance;

- Autonomous AI in predefined tasks, which automates specific surgical steps.

Table 3.

Levels of AI integration in emergency surgical decision-making and associated ethical considerations. This table outlines the progressive levels of AI integration in emergency surgery, from assistive tools to experimental full automation, with examples and ethical implications for each stage.

Liability in AI-based tool complications can be categorized into three main scenarios according to the degree of autonomy of the AI device implemented, as summarized in Table 4 [63]:

Table 4.

Legal liability scenarios in AI-assisted emergency surgical practices. This table presents illustrative liability scenarios based on different degrees of AI involvement in emergency surgery, emphasizing shared responsibility between clinicians, developers, and manufacturers.

Current FDA and EU regulations do not define liability for AI-assisted errors in medicine, leading to grey areas in litigation.

In clinical practice, patients may need to consent explicitly to AI-assisted decisions and to be made aware that AI is involved in the management of their surgical disease.

Furthermore, AI systems should be able to explain why a given decision was made, and black box systems require clearer validation frameworks.

Future policies may introduce hybrid liability models, where hospitals, surgeons, and AI vendors share responsibility based on case specifics.

5. Call to Action and Clinical Recommendations for Ethical AI Implementation in Emergency Surgery

Significant concerns remain regarding the opacity of AI algorithms and the risk of automation bias, where surgeons may overly rely on AI recommendations without independent clinical assessment. This raises critical ethical and practical questions: “Should AI be permitted to override human judgment in high-stakes surgical emergencies? How can we ensure that AI serves as an augmentation of clinical expertise rather than a replacement?”

To address these concerns, it is essential to establish clear lines of responsibility and liability when AI systems contribute to emergency medical decisions. Defining accountability ensures both patient safety and legal protection for healthcare providers. Additionally, caution must be exercised to prevent over-reliance on AI, particularly when it challenges human autonomy in critical decision-making.

To optimize the responsible and effective integration of AI in emergency surgery, the following actions are required:

- Enhancing AI Transparency—Prioritizing the development of explainable AI (XAI) models to improve interpretability, ensuring that healthcare providers can critically assess and validate AI-generated recommendations.

- Developing Clear Communication Protocols—Standardizing the disclosure of AI involvement in patient care to maintain trust and uphold patient autonomy.

- Mitigating Bias in AI Training Data—Ensuring that AI training datasets are diverse and representative of all patient populations to prevent the exacerbation of health disparities.

- Aligning AI with Patient-Centered Care—Designing AI systems that integrate ethical considerations and patient values into their decision-making frameworks.

- Strengthening Regulatory Oversight—Establishing comprehensive legal frameworks to define AI accountability, enhance data protection, and uphold ethical standards in emergency surgical applications.

While XAI techniques hold great promise, they are not yet widely integrated into clinical user interfaces. Future AI systems must prioritize interpretability by design to foster trust, support adoption, and meet evolving transparency and accountability standards [64].

At present, no single global framework fully harmonizes AI regulation and data protection. However, foundational efforts are underway. In the European Union, the General Data Protection Regulation (GDPR) establishes strong rules for data privacy and consent. The EU AI Act, which entered into force in August 2024, complements this with a risk-based approach, mandating transparency, human oversight, and reliability for high-risk AI systems.

In the United States, the FDA offers evolving guidance on AI/ML-enabled medical devices, with a focus on safety and effectiveness, though a unified legal structure equivalent to the EU AI Act is still lacking.

Globally, the WHO has outlined six pillars for ethical AI in health, emphasizing human-centered design, accountability, and fairness. Other efforts, such as the OECD AI Principles and the Global Partnership on AI (GPAI), aim to promote regulatory alignment, though practical implementation—particularly around cross-border data use—remains a major hurdle [65,66].

These legal and institutional differences impact consent processes, algorithm transparency, and equitable access to AI technologies. These challenges are particularly pressing in emergency surgery, where timely decision-making often depends on rapid data exchange among professionals across institutions and international borders. In low- and middle-income countries, limited regulatory infrastructure and technological capacity may hinder the ethical deployment of AI, potentially widening global health disparities.

To enable responsible innovation, it is essential to develop interoperable legal and technical standards that ensure strong data protection without impeding progress.

By addressing these issues, AI can fulfill its potential to enhance surgical decision-making, improve workflows, and deliver safer, more equitable care in emergency settings [12,67,68,69,70,71,72,73].

Table 5 summarizes the core ethical and regulatory concerns identified in this review and proposes actionable recommendations to guide the safe and effective implementation of AI in emergency surgery.

Table 5.

Key clinical recommendations for ethical AI implementation in emergency surgery.

6. Conclusions

The adoption of AI in emergency surgery continues to face significant ethical challenges, including issues of transparency, accountability, data quality and security, and bias mitigation. Although AI is increasingly recognized as a transformative tool in healthcare, regulatory frameworks remain fragmented, and comprehensive ethical guidelines are still evolving.

Responsible integration of AI into emergency surgical practice demands multidisciplinary collaboration among surgeons, clinicians, data scientists, ethicists, and policymakers. Key priorities include mitigating bias in training datasets, strengthening informed consent processes, and establishing robust governance frameworks to uphold trust in AI-assisted decision-making. Additionally, ensuring equitable access to technology, providing clinicians with appropriate training, and fostering active stakeholder engagement will be critical for the safe adoption of AI in surgical workflows.

Future research should focus on implementation science to ensure that AI technologies are generalizable, representative, and adaptable across diverse patient populations and healthcare settings. Notably, most of the current literature and datasets originate from high-income countries, creating a significant gap in understanding how AI can be safely and ethically deployed in low- and middle-income contexts, where resource limitations pose unique challenges.

We strongly advocate for more inclusive, globally coordinated research efforts to ensure that AI tools are equitable, scalable, and responsive to varied healthcare environments. Moreover, empirical validation studies—such as clinical trials and real-world implementation research—should be planned to assess the safety, effectiveness, and ethical implications of AI tools in emergency surgical settings.

Ultimately, AI should not replace human expertise but rather serve as a complementary tool that enhances clinical decision-making while preserving professional judgment—especially in high-stakes emergency scenarios. With ethical oversight, responsible governance, and ongoing refinement, AI has the potential to meaningfully transform emergency surgical care.

Author Contributions

Conceptualization, B.D.S.; methodology, B.D.S.; writing—original draft preparation, B.D.S.; writing—review and editing, B.D.S., G.D. and F.C.; visualization, B.D.S.; supervision, B.D.S.; project administration, B.D.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| AI | Artificial Intelligence |
| ML | Machine Learning |
| DL | Deep Learning |
| CV | Computer Vision |
| CNNs | Convolutional Neural Networks |
| R-CNN | Region-based Convolutional Neural Network |
| FDA | U.S. Food and Drug Administration |
| GDPR | General Data Protection Regulation |
| EU | European Union |
| MHRA | Medicines and Healthcare products Regulatory Agency |
| NMPA | National Medical Products Administration |

References

- Panossian, V.S.; Argandykov, D.; Arnold, S.C.; Gebran, A.; Paranjape, C.N.; Hwabejire, J.O.; DeWane, M.P.; Velmahos, G.C.; Kaafarani, H.M.; POTTER Validation Group. Validation of Artificial Intelligence-Based POTTER Calculator in Emergency General Surgery Patients Undergoing Laparotomy: Prospective, Bi-Institutional Study. J. Am. Coll. Surg. 2025, 240, 254–262.

- Gebran, A.; Vapsi, A.; Maurer, L.R.; El Moheb, M.; Naar, L.; Thakur, S.S.; Sinyard, R.; Daye, D.; Velmahos, G.C.; Bertsimas, D.; et al. POTTER-ICU: An artificial intelligence smartphone-accessible tool to predict the need for intensive care after emergency surgery. Surgery 2022, 172, 470–475.

- Elhaddad, M.; Hamam, S. AI-Driven Clinical Decision Support Systems: An Ongoing Pursuit of Potential. Cureus 2024, 16, e57728.

- Capelli, G.; Verdi, D.; Frigerio, I.; Rashidian, N.; Ficorilli, A.; Grasso, V.; Majidi, D.; Gumbs, A.A.; Spolverato, G.; Artificial Intelligence Surgery Editorial Board Study Group on Ethics. White paper: Ethics and trustworthiness of artificial intelligence in clinical surgery. Artif. Intell. Surg. 2023, 3, 111–122.

- Cobianchi, L.; Verde, J.M.; Loftus, T.J.; Piccolo, D.; Dal Mas, F.; Mascagni, P.; Garcia Vazquez, A.; Ansaloni, L.; Marseglia, G.R.; Massaro, M.; et al. Artificial Intelligence and Surgery: Ethical Dilemmas and Open Issues. J. Am. Coll. Surg. 2022, 235, 268–275.

- Braun, V.; Clarke, V. Reflecting on reflexive thematic analysis. Qual. Res. Sport Exerc. Health 2019, 11, 589–597.

- Hashimoto, D.A.; Rosman, G.; Rus, D.; Meireles, O.R. Artificial Intelligence in Surgery: Promises and Perils. Ann. Surg. 2018, 268, 70–76.

- Mascagni, P.; Vardazaryan, A.; Alapatt, D.; Urade, T.; Emre, T.; Fiorillo, C.; Pessaux, P.; Mutter, D.; Marescaux, J.; Costamagna, G.; et al. Artificial Intelligence for Surgical Safety: Automatic Assessment of the Critical View of Safety in Laparoscopic Cholecystectomy Using Deep Learning. Ann. Surg. 2022, 275, 955–961.

- Mascagni, P.; Alapatt, D.; Urade, T.; Vardazaryan, A.; Mutter, D.; Marescaux, J.; Costamagna, G.; Dallemagne, B.; Padoy, N. A Computer Vision Platform to Automatically Locate Critical Events in Surgical Videos: Documenting Safety in Laparoscopic Cholecystectomy. Ann. Surg. 2021, 274, e93–e95.

- Shinozuka, K.; Turuda, S.; Fujinaga, A.; Nakanuma, H.; Kawamura, M.; Matsunobu, Y.; Tanaka, Y.; Kamiyama, T.; Ebe, K.; Endo, Y.; et al. Artificial intelligence software available for medical devices: Surgical phase recognition in laparoscopic cholecystectomy. Surg. Endosc. 2022, 36, 7444–7452.

- Madani, A.; Namazi, B.; Altieri, M.S.; Hashimoto, D.A.; Rivera, A.M.; Pucher, P.H.; Navarrete-Welton, A.; Sankaranarayanan, G.; Brunt, L.M.; Okrainec, A.; et al. Artificial Intelligence for Intraoperative Guidance: Using Semantic Segmentation to Identify Surgical Anatomy During Laparoscopic Cholecystectomy. Ann. Surg. 2022, 276, 363–369.

- De Simone, B.; Abu-Zidan, F.M.; Gumbs, A.A.; Chouillard, E.; Di Saverio, S.; Sartelli, M.; Coccolini, F.; Ansaloni, L.; Collins, T.; Kluger, Y.; et al. Knowledge, attitude, and practice of artificial intelligence in emergency and trauma surgery, the ARIES project: An international web-based survey. World J. Emerg. Surg. 2022, 17, 10.

- Rodgers, C.M.; Ellingson, S.R.; Chatterjee, P. Open Data and transparency in artificial intelligence and machine learning: A new era of research. F1000Research 2023, 12, 387.

- Angelos, P. Complications, Errors, and Surgical Ethics. World J. Surg. 2009, 33, 609–611.

- Fosch-Villaronga, E.; Khanna, P.; Drukarch, H.; Custers, B. The Role of Humans in Surgery Automation. Int. J. Soc. Robot. 2023, 15, 563–580.

- Kim, Y.S.; Park, S.H.; Lee, I.Y.; Son, G.M.; Baek, K.R. AI-driven automatic compression system for colorectal anastomosis. J. Robot. Surg. 2024, 18, 290.

- Habli, I.; Lawton, T.; Porter, Z. Artificial intelligence in health care: Accountability and safety. Bull. World Health Organ. 2020, 98, 251–256.

- Adegbesan, A.; Akingbola, A.; Aremu, O.; Adewole, O.; Amamdikwa, J.C.; Shagaya, U. From Scalpels to Algorithms: The Risk of Dependence on Artificial Intelligence in Surgery. J. Med. Surg. Public Health 2024, 3, 100140.

- Shahbazi, Z.; Byun, Y.C. Analysis of the Security and Reliability of Cryptocurrency Systems Using Knowledge Discovery and Machine Learning Methods. Sensors 2022, 22, 9083.

- Brożek, B.; Furman, M.; Jakubiec, M.; Kucharzyk, B. The black box problem revisited. Real and imaginary challenges for automated legal decision making. Artif. Intell. Law 2023, 32, 427–440.

- Iserson, K.; Baker, E.; Bissmeyer, P.; Derse, A. Artificial Intelligence in the ED: Ethical Issues. ACEP Now 2024, 43, 6.

- Hassija, V.; Chamola, V.; Mahapatra, A.; Singal, A.; Goel, D.; Huang, K.; Scardapane, S.; Spinelli, I.; Mahmud, M.; Hussain, A. Interpreting Black-Box Models: A Review on Explainable Artificial Intelligence. Cogn. Comput. 2024, 16, 45–74.

- Agarwal, R.; Bjarnadottir, M.; Rhue, L.; Dugas, M.; Crowley, K.; Clark, J.; Gao, G. Addressing algorithmic bias and the perpetuation of health inequities: An AI bias aware framework. Health Policy Technol. 2023, 12, 100702.

- Prien, C.; Lincango, E.P.; Holubar, S.D. Big Data in Surgery. Surg. Clin. N. Am. 2023, 103, 219–232.

- Cross, J.L.; Choma, M.A.; Onofrey, J.A. Bias in medical AI: Implications for clinical decision-making. PLoS Digit. Health 2024, 3, e0000651.

- Ueda, D.; Kakinuma, T.; Fujita, S.; Kamagata, K.; Fushimi, Y.; Ito, R.; Matsui, Y.; Nozaki, T.; Nakaura, T.; Fujima, N.; et al. Fairness of artificial intelligence in healthcare: Review and recommendations. Jpn. J. Radiol. 2024, 42, 3–15.

- The Impact of the General Data Protection Regulation (GDPR) on Artificial Intelligence. Available online: https://www.europarl.europa.eu/RegData/etudes/STUD/2020/641530/EPRS_STU(2020)641530_EN.pdf (accessed on 20 March 2025).

- World Health Organization. WHO Calls for Safe and Ethical AI for Health. 2023. Available online: https://www.who.int/news/item/16-05-2023-who-calls-for-safe-and-ethical-ai-for-health (accessed on 20 March 2025).

- Wilkinson, M.D.; Dumontier, M.; Aalbersberg, I.J.; Appleton, G.; Axton, M.; Baak, A.; Blomberg, N.; Boiten, J.W.; da Silva Santos, L.B.; Bourne, P.E.; et al. The FAIR Guiding Principles for scientific data management and stewardship. Sci. Data 2016, 3, 160018, Erratum in Sci. Data 2019, 6, 6.

- van Grinsven, M.J.J.P.; van Ginneken, B.; Hoyng, C.B.; Theelen, T.; Sanchez, C.I. Fast Convolutional Neural Network Training Using Selective Data Sampling: Application to Hemorrhage Detection in Color Fundus Images. IEEE Trans. Med. Imaging 2016, 35, 1273–1284.

- Eckhoff, J.A.; Rosman, G.; Altieri, M.S.; Speidel, S.; Stoyanov, D.; Anvari, M.; Meier-Hein, L.; März, K.; Jannin, P.; Pugh, C.; et al. SAGES consensus recommendations on surgical video data use, structure, and exploration (for research in artificial intelligence, clinical quality improvement, and surgical education). Surg. Endosc. 2023, 37, 8690–8707.

- Nyangoh Timoh, K.; Huaulme, A.; Cleary, K.; Zaheer, M.A.; Lavoué, V.; Donoho, D.; Jannin, P. A systematic review of annotation for surgical process model analysis in minimally invasive surgery based on video. Surg. Endosc. 2023, 37, 4298–4314.

- Meireles, O.R.; Rosman, G.; Altieri, M.S.; Carin, L.; Hager, G.; Madani, A.; Padoy, N.; Pugh, C.M.; Sylla, P.; Ward, T.M.; et al. SAGES consensus recommendations on an annotation framework for surgical video. Surg. Endosc. 2021, 35, 4918–4929.

- Neugebauer, E.A.; Becker, M.; Buess, G.F.; Cuschieri, A.; Dauben, H.P.; Fingerhut, A.; Fuchs, K.H.; Habermalz, B.; Lantsberg, L.; Morino, M.; et al. EAES recommendations on methodology of innovation management in endoscopic surgery. Surg. Endosc. 2010, 24, 1594–1615.

- Hong, S.; Lee, J.; Park, B.; Alwusaibie, A.A.; Alfadhel, A.H.; Park, S.; Hyung, W.J.; Choi, M.-K. Rethinking Generalization Performance of Surgical Phase Recognition with Expert-Generated Annotations. arXiv 2021, arXiv:2110.11626.

- Goodman, E.D.; Patel, K.K.; Zhang, Y.; Locke, W.; Kennedy, C.J.; Mehrotra, R.; Ren, S.; Guan, M.; Zohar, O.; Downing, M.; et al. Analyzing Surgical Technique in Diverse Open Surgical Videos With Multitask Machine Learning. JAMA Surg. 2024, 159, 185–192.

- Lee, J.-D.; Chien, J.-C.; Hsu, Y.-T.; Wu, C.-T. Automatic Surgical Instrument Recognition—A Case of Comparison Study between the Faster R-CNN, Mask R-CNN, and Single-Shot Multi-Box Detectors. Appl. Sci. 2021, 11, 8097.

- Jiang, K.; Pan, S.; Yang, L.; Yu, J.; Lin, Y.; Wang, H. Surgical Instrument Recognition Based on Improved YOLOv5. Appl. Sci. 2023, 13, 11709.

- Sagheb, E.; Ramazanian, T.; Tafti, A.P.; Fu, S.; Kremers, W.K.; Berry, D.J.; Lewallen, D.G.; Sohn, S.; Maradit Kremers, H. Use of Natural Language Processing Algorithms to Identify Common Data Elements in Operative Notes for Knee Arthroplasty. J. Arthroplast. 2021, 36, 922–926.

- Birkhoff, D.C.; van Dalen, A.S.H.M.; Schijven, M.P. A Review on the Current Applications of Artificial Intelligence in the Operating Room. Surg. Innov. 2021, 28, 611–619.

- De Simone, B.; Agnoletti, V.; Abu-Zidan, F.M.; Biffl, W.L.; Moore, E.E.; Chouillard, E.; Coccolini, F.; Sartelli, M.; Podda, M.; Di Saverio, S.; et al. The Operating Room management for emergency Surgical Activity (ORSA) study: A WSES international survey. Updates Surg. 2024, 76, 687–698.

- De Simone, B.; Kluger, Y.; Moore, E.E.; Sartelli, M.; Abu-Zidan, F.M.; Coccolini, F.; Ansaloni, L.; Tebala, G.D.; Di Saverio, S.; Di Carlo, I.; et al. The new timing in acute care surgery (new TACS) classification: A WSES Delphi consensus study. World J. Emerg. Surg. 2023, 18, 32.

- Peregrin, T. Black Box Technology Shines Light on Improving OR Safety, Efficiency. 2023. Available online: https://www.facs.org/for-medical-professionals/news-publications/news-and-articles/bulletin/2023/july-2023-volume-108-issue-7/black-box-technology-shines-light-on-improving-or-safety-efficiency/ (accessed on 20 March 2025).

- Mascagni, P.; Padoy, N. OR black box and surgical control tower: Recording and streaming data and analytics to improve surgical care. J. Visc. Surg. 2021, 158, S18–S25.

- Bai, N. ‘Black Boxes’ in Stanford Hospital Operating Rooms aid Training and Safety. Stanford Medicine News Center, 28 September 2022. Available online: https://med.stanford.edu/news/all-news/2022/09/black-box-surgery.html (accessed on 24 May 2023).

- Jung, J.J.; Jüni, P.; Lebovic, G.; Grantcharov, T. First-year Analysis of the Operating Room Black Box Study. Ann. Surg. 2020, 271, 122–127.

- Campbell, K.K.; Abreu, A.A.; Zeh, H.J.; Daniel, W.C.; Palter, V.N.; Bishop, S.J.; Sims, S.; Odeh, J.M.; Evans, K.; Dandekar, P.; et al. Using OR Black Box Technology to Determine Quality Improvement Outcomes for In-situ Timeout and Debrief Simulation. Ann. Surg. 2024. Epub ahead of print.

- Rai, A.; Beland, L.; Aro, T.; Jarrett, M.; Kavoussi, L. Patient Safety in the Operating Room During Urologic Surgery: The OR Black Box Experience. World J. Surg. 2021, 45, 3306–3312.

- Golany, T.; Aides, A.; Freedman, D.; Rabani, N.; Liu, Y.; Rivlin, E.; Corrado, G.S.; Matias, Y.; Khoury, W.; Kashtan, H.; et al. Artificial intelligence for phase recognition in complex laparoscopic cholecystectomy. Surg. Endosc. 2022, 36, 9215–9223.

- Deol, E.S.; Henning, G.; Basourakos, S.; Vasdev, R.M.S.; Sharma, V.; Kavoussi, N.L.; Karnes, R.J.; Leibovich, B.C.; Boorjian, S.A.; Khanna, A. Artificial intelligence model for automated surgical instrument detection and counting: An experimental proof-of-concept study. Patient Saf. Surg. 2024, 18, 24.

- Park, H.J. Patient perspectives on informed consent for medical AI: A web-based experiment. Digit Health 2024, 10, 20552076241247938.

- Kituuka, O.; Munabi, I.G.; Mwaka, E.S.; Galukande, M.; Harris, M.; Sewankambo, N. Informed consent process for emergency surgery: A scoping review of stakeholders’ perspectives, challenges, ethical concepts, and policies. SAGE Open Med. 2023, 11, 20503121231176666.

- Teasdale, A.; Mills, L.; Costello, R. Artificial Intelligence-Powered Surgical Consent: Patient Insights. Cureus 2024, 16, e68134.

- Harishbhai Tilala, M.; Kumar Chenchala, P.; Choppadandi, A.; Kaur, J.; Naguri, S.; Saoji, R.; Devaguptapu, B. Ethical Considerations in the Use of Artificial Intelligence and Machine Learning in Health Care: A Comprehensive Review. Cureus 2024, 16, e62443.

- Artificial Intelligence and Machine Learning (AI/ML)-Enabled Medical Devices. Available online: https://www.fda.gov/medical-devices/software-medical-device-samd/artificial-intelligence-and-machine-learning-aiml-enabled-medical-devices (accessed on 20 March 2025).

- World Health Organization. WHO Outlines Considerations for Regulation of Artificial Intelligence for Health. 2023. Available online: https://www.who.int/news/item/19-10-2023-who-outlines-considerations-for-regulation-of-artificial-intelligence-for-health (accessed on 20 March 2025).

- EU AI Act: First Regulation on Artificial Intelligence. Topics European Parliament. 2023. Available online: https://www.europarl.europa.eu/topics/en/article/20230601STO93804/eu-ai-act-first-regulation-on-artificial-intelligence (accessed on 20 March 2025).

- Directive 2011/83/EU of the European Parliament and of the Council of 25 October 2011 on Consumer Rights, Amending Council Directive 93/13/EEC and Directive 1999/44/EC of the European Parliament and of the Council and Repealing Council Directive 85/577/EEC and Directive 97/7/EC of the European Parliament and of the Council Text with EEA Relevance. Available online: https://eur-lex.europa.eu/eli/dir/2011/83/oj/eng (accessed on 20 March 2025).

- Bibal, A.; Lognoul, M.; De Streel, A.; Frénay, B. Legal requirements on explainability in machine learning. Artif. Intell. Law 2021, 29, 149–169.

- Ahuja, A.S. The impact of artificial intelligence in medicine on the future role of the physician. PeerJ 2019, 7, e7702.

- Maleki Varnosfaderani, S.; Forouzanfar, M. The Role of AI in Hospitals and Clinics: Transforming Healthcare in the 21st Century. Bioengineering 2024, 11, 337.

- Cestonaro, C.; Delicati, A.; Marcante, B.; Caenazzo, L.; Tozzo, P. Defining medical liability when artificial intelligence is applied on diagnostic algorithms: A systematic review. Front. Med. 2023, 10, 1305756.

- Eldakak, A.; Alremeithi, A.; Dahiyat, E.; El-Gheriani, M.; Mohamed, H.; Abdulrahim Abdulla, M.I. Civil liability for the actions of autonomous AI in healthcare: An invitation to further contemplation. Humanit. Soc. Sci. Commun. 2024, 11, 305.

- Brandenburg, J.M.; Müller-Stich, B.P.; Wagner, M.; van der Schaar, M. Can surgeons trust AI? Perspectives on machine learning in surgery and the importance of eXplainable Artificial Intelligence (XAI). Langenbeck’s Arch. Surg. 2025, 410, 53.

- OECD AI Principles Overview. Available online: https://oecd.ai/en/ai-principles (accessed on 20 March 2025).

- The GPAI Initiative and OECD Work on AI Have Joined Forces Under the GPAI Brand to Create an Integrated Partnership. GPAI Work Is Now Available on the OECD AI Policy Observatory. Available online: https://gpai.ai/ (accessed on 20 March 2025).

- De Simone, B.; Chouillard, E.; Gumbs, A.A.; Loftus, T.J.; Kaafarani, H.; Catena, F. Artificial intelligence in surgery: The emergency surgeon’s perspective (the ARIES project). Discov. Health Syst. 2022, 1, 9.

- De Simone, B.; Di Saverio, S. Invited Commentary: Artificial Intelligence in Surgical Care: We Must Overcome Ethical Boundaries. J. Am. Coll. Surg. 2022, 235, 275–277.

- De Simone, B.; Kluger, Y.; Moore, E.E.; Di Saverio, S.; Sartelli, M.; Ansaloni, L.; Coccolini, F.; Biffl, W.L.; Catena, F. The WSES: What do we see in the future? World J. Emerg. Surg. 2021, 16, 13.

- De Simone, B.; Abu-Zidan, F.M.; Saeidi, S.; Deeken, G.; Biffl, W.L.; Moore, E.E.; Sartelli, M.; Coccolini, F.; Ansaloni, L.; Di Saverio, S.; et al. Knowledge, attitudes and practices of using Indocyanine Green (ICG) fluorescence in emergency surgery: An international web-based survey in the ARtificial Intelligence in Emergency and trauma Surgery (ARIES)-WSES project. Updates Surg. 2024, 76, 1969–1981.

- De Simone, B.; Abu-Zidan, F.M.; Boni, L.; Castillo, A.M.G.; Cassinotti, E.; Corradi, F.; Di Maggio, F.; Ashraf, H.; Baiocchi, G.L.; Tarasconi, A.; et al. Indocyanine green fluorescence-guided surgery in the emergency setting: The WSES international consensus position paper. World J. Emerg. Surg. 2025, 20, 13.

- Saeidi, H.; Opfermann, J.D.; Kam, M.; Wei, S.; Léonard, S.; Hsieh, M.H. Autonomous robotic laparoscopic surgery for intestinal anastomosis. Sci. Robot. 2022, 7, eabj2908.

- Shademan, A.; Decker, R.S.; Opfermann, J.D.; Leonard, S.; Krieger, A.; Kim, P.C. Supervised autonomous robotic soft tissue surgery. Sci. Transl. Med. 2016, 8, 337ra64.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).