Interpretable Machine Learning Model Integrating Electrocardiographic and Acute Physiology Metrics for Mortality Prediction in Critically Ill Patients

Abstract

1. Introduction

2. Materials and Methods

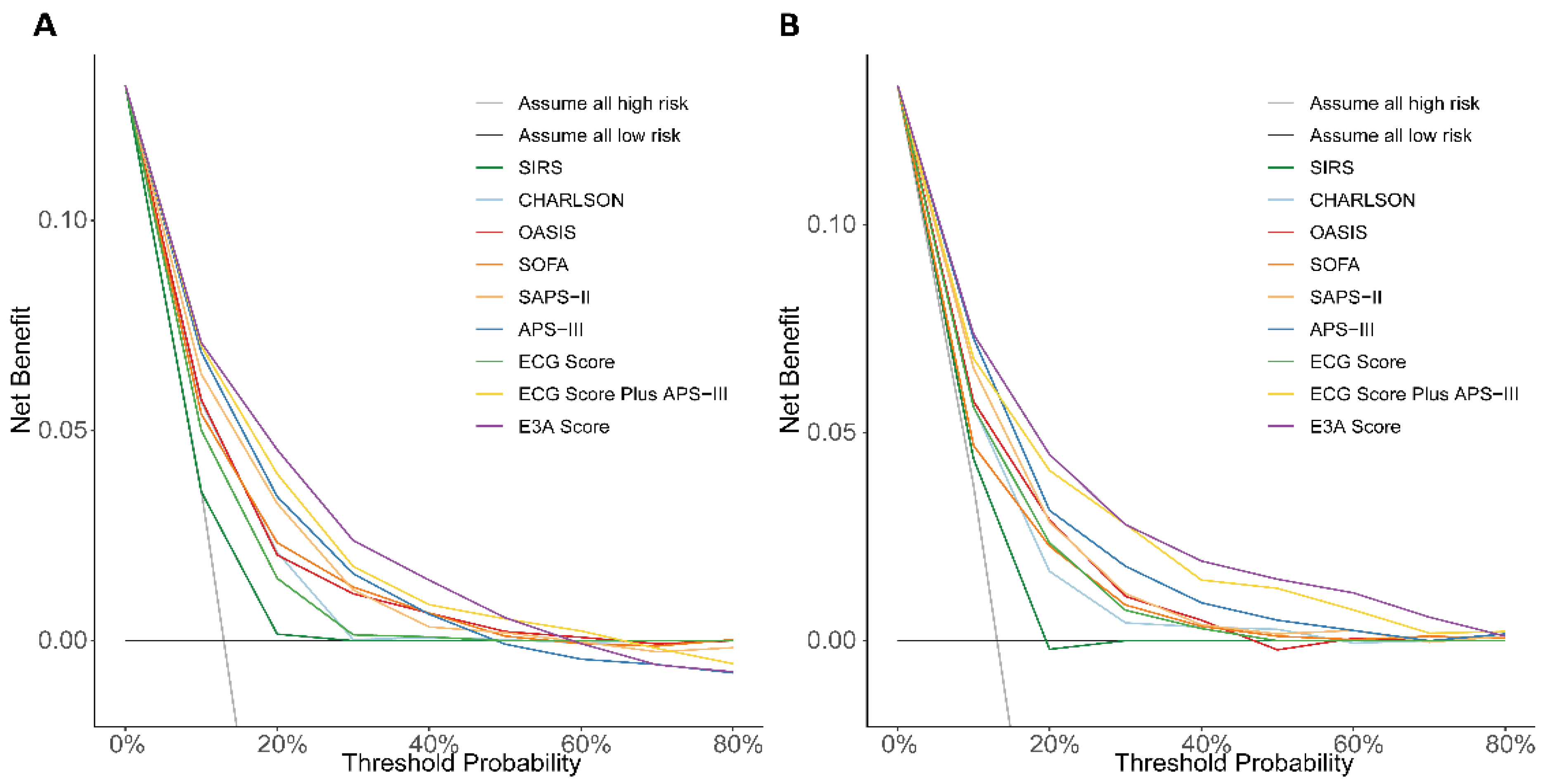

2.1. Study Design and Data Source

2.2. Study Population and Endpoints

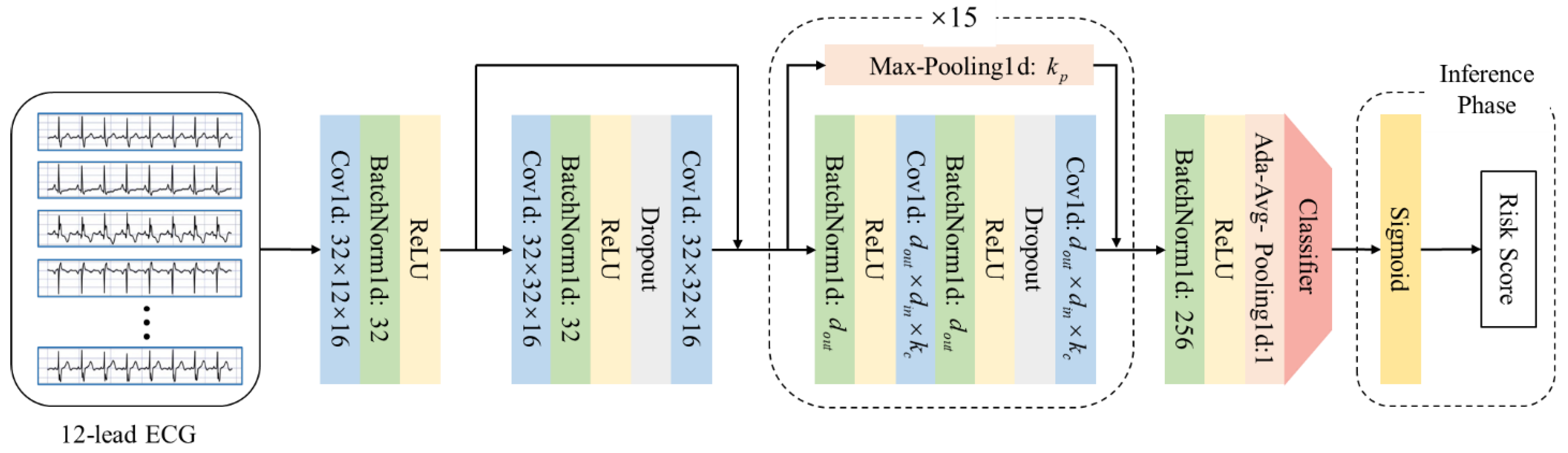

2.3. Clinical Feature Extraction and ECG-Based Risk Score Generation

2.4. Machine Learning Model Development and Validation

2.5. Development of the Overall Scoring System

2.6. Feature Ablation for Variable Selection

2.7. Data Analysis

3. Results

3.1. Baseline Characteristics

3.2. Calculation of ECG-Based Risk Score

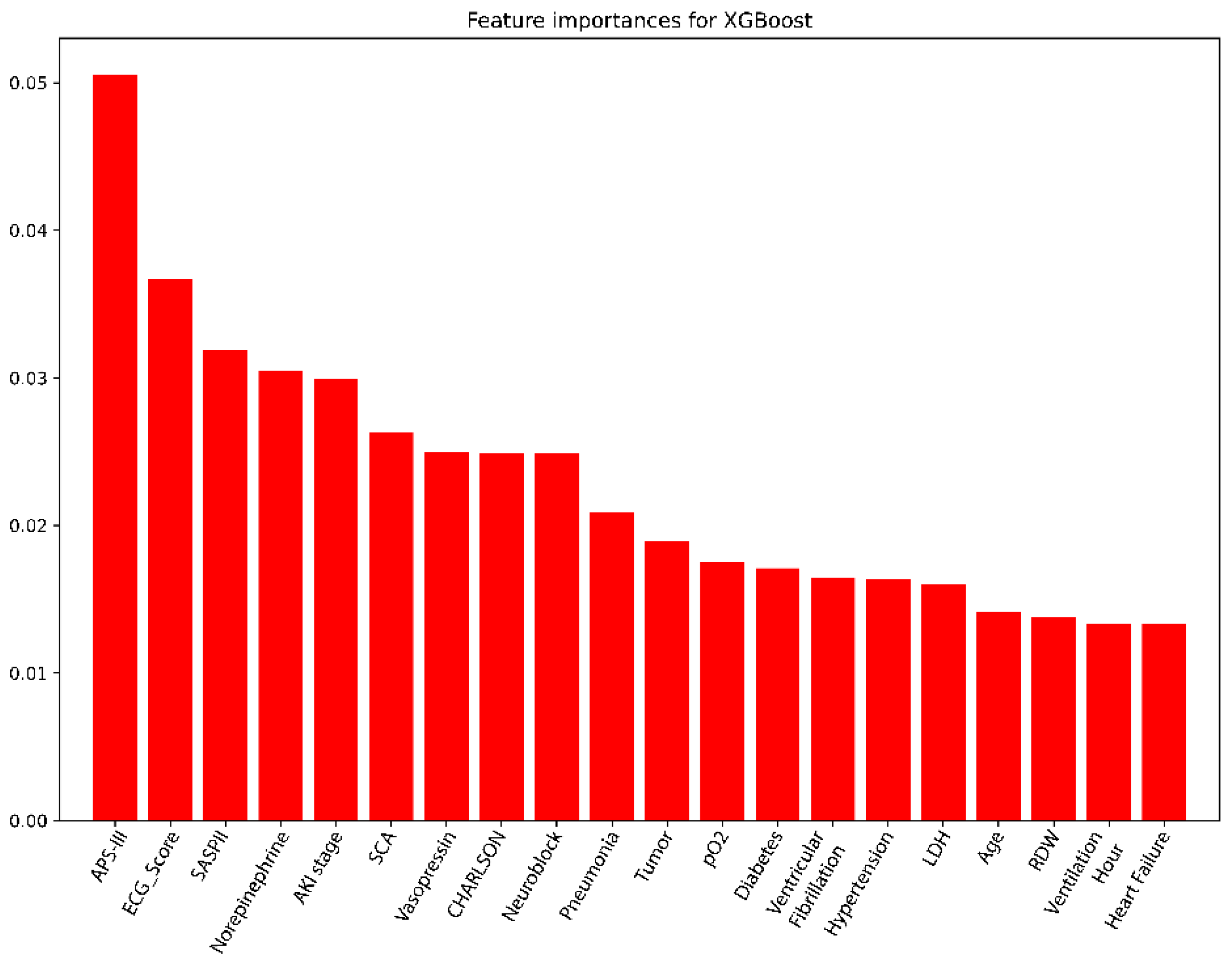

3.3. Screening Variables Using the XGBoost Model

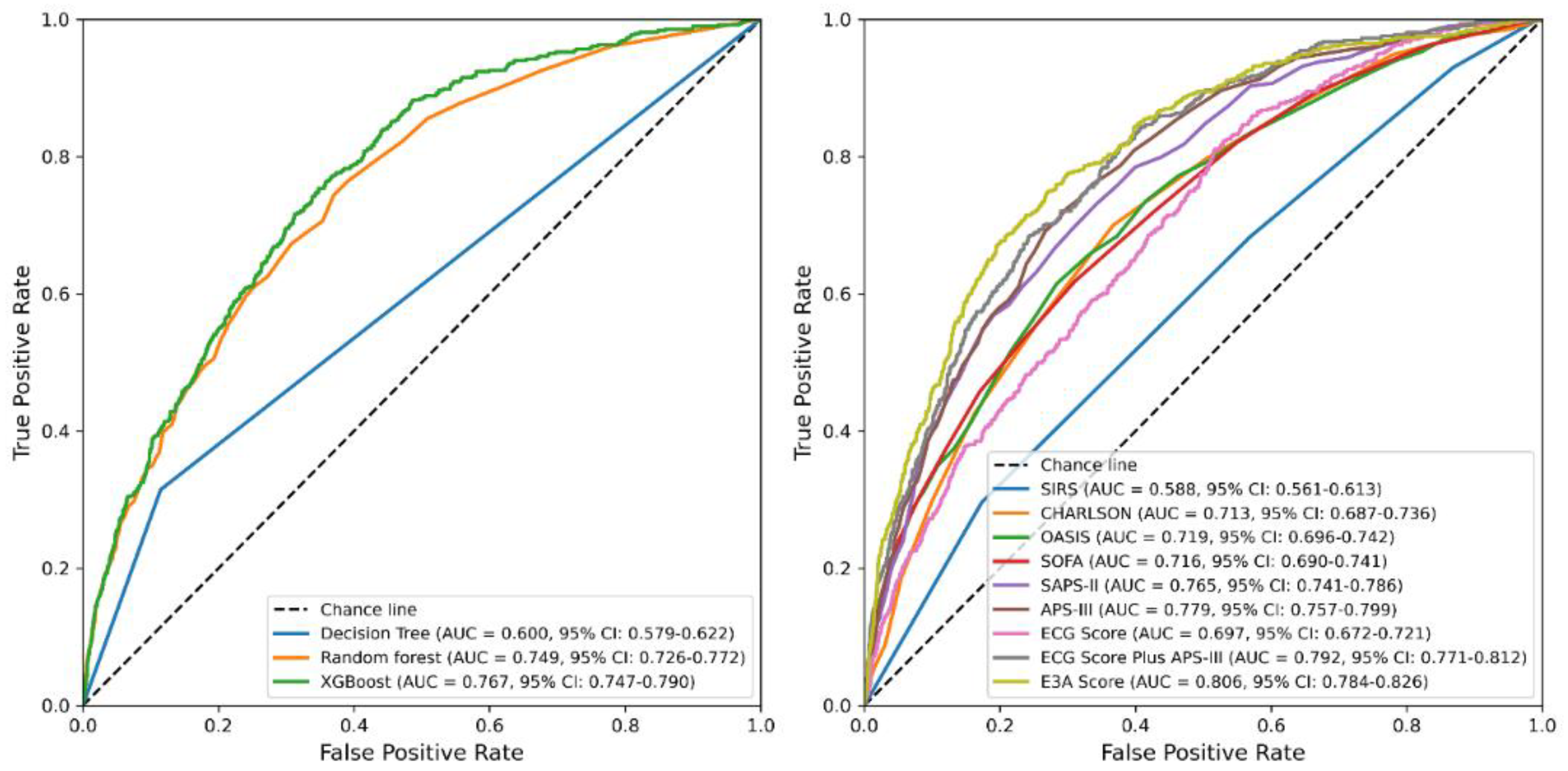

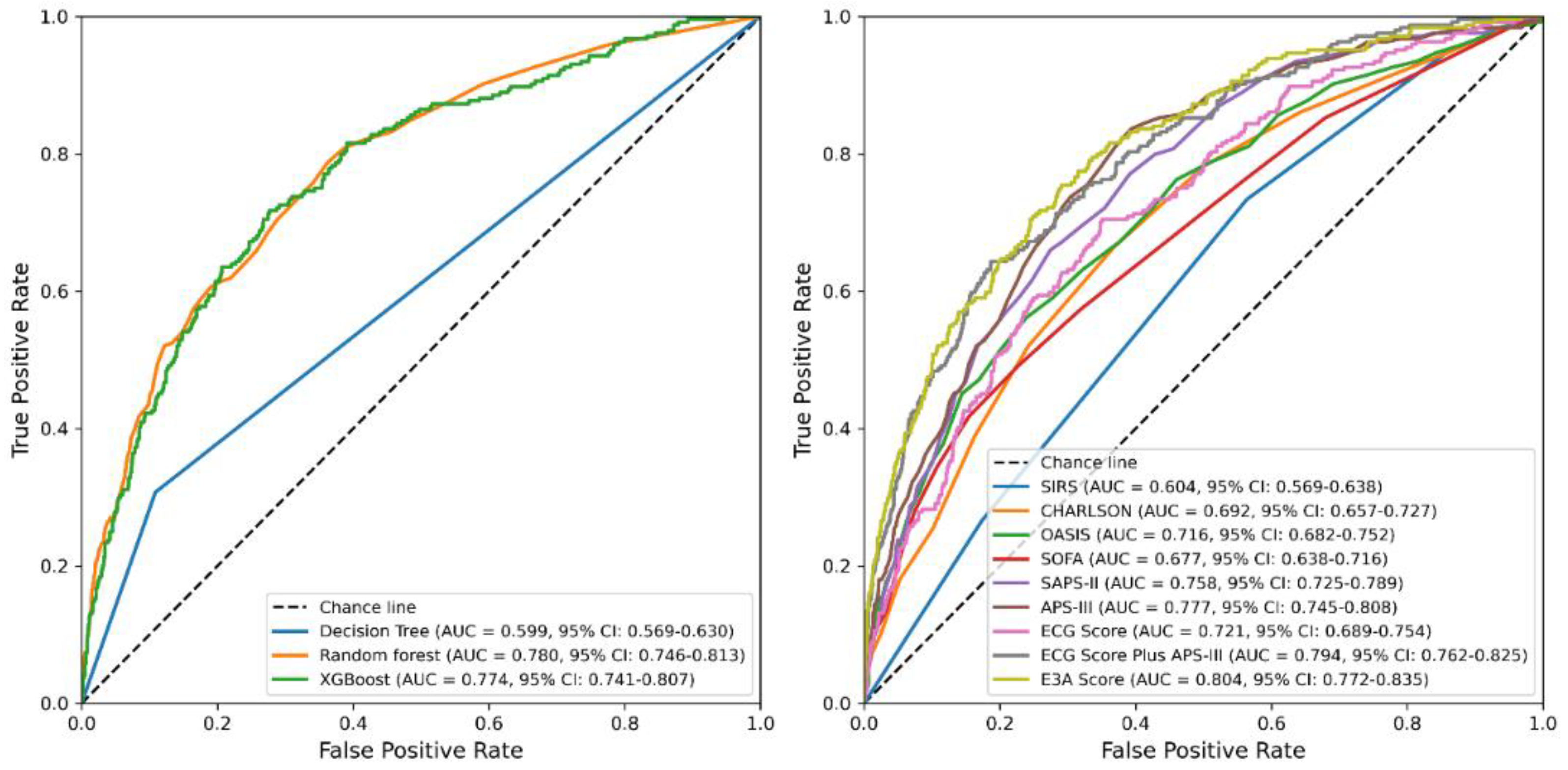

3.4. Derivation and Evaluation of the 28-Day Mortality Score

3.5. Multivariable Logistic Regression Analysis

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| APS-III | Acute Physiology Score III |
| AUC | Area Under the Curve |
| DCA | Decision Curve Analysis |
| ECG | Electrocardiogram |
| GCS | Glasgow Coma Scale |
| ICU | Intensive Care Unit |
| ML | Machine Learning |
| OASIS | Oxford Acute Severity of Illness Score |
| SAPS-II | Simplified Acute Physiology Score II |
| SHAP | SHapley Additive exPlanations |
| SIRS | Systemic Inflammatory Response Syndrome |
| SOFA | Sequential Organ Failure Assessment |


| Characteristic | Overall (n = 18,256) | Non-Survivors (n = 2412) | Survivors (n = 15,844) | p Value |
|---|---|---|---|---|
| Age, median [Q1, Q3] | 68.0 [57.0, 79.0] | 75.0 [63.0, 84.0] | 67.0 [56.0, 78.0] | <0.001 |
| Gender, n (%) | | | | <0.001 |
| Male | 10,753 (58.9) | 1313 (54.4) | 9440 (59.6) | |
| Female | 7503 (41.1) | 1099 (45.6) | 6404 (40.4) | |
| ECG Score, median [Q1, Q3] | 14.7 [7.9, 24.2] | 24.4 [15.6, 33.4] | 13.5 [7.2, 22.1] | <0.001 |
| SIRS, median [Q1, Q3] | 3.0 [2.0, 3.0] | 3.0 [2.0, 4.0] | 3.0 [2.0, 3.0] | <0.001 |
| CHARLSON, median [Q1, Q3] | 5.0 [3.0, 7.0] | 7.0 [5.0, 9.0] | 5.0 [3.0, 7.0] | <0.001 |
| OASIS, median [Q1, Q3] | 32.0 [26.0, 37.0] | 38.0 [32.0, 44.0] | 31.0 [25.0, 36.0] | <0.001 |
| SOFA, median [Q1, Q3] | 4.0 [2.0, 7.0] | 7.0 [4.0, 10.0] | 4.0 [2.0, 6.0] | <0.001 |
| SAPS-II, median [Q1, Q3] | 36.0 [28.0, 45.0] | 47.0 [38.0, 58.0] | 34.0 [27.0, 42.0] | <0.001 |
| APS-III, median [Q1, Q3] | 41.0 [30.0, 55.0] | 59.0 [45.0, 79.0] | 38.0 [29.0, 51.0] | <0.001 |

| Model | Accuracy | PPV | Sensitivity | Specificity | AUC | AUPRC | F1 Score | Brier Score |
|---|---|---|---|---|---|---|---|---|
| Machine learning models (ECG Score + APS-III + Age) | | | | | | | | |
| Decision tree | 0.81 | 0.295 | 0.315 | 0.885 | 0.6 | 0.35 | 0.304 | 0.190 |
| Random forest | 0.862 | 0.452 | 0.197 | 0.964 | 0.749 | 0.333 | 0.274 | 0.107 |
| XGBoost | 0.867 | 0.497 | 0.159 | 0.975 | 0.767 | 0.342 | 0.241 | 0.104 |
| Logistic regression models | | | | | | | | |
| SIRS | 0.868 | 0 | 0 | 1 | 0.588 | 0.295 | 0 | 0.113 |
| CHARLSON | 0.868 | 0.533 | 0.017 | 0.998 | 0.713 | 0.265 | 0.032 | 0.107 |
| OASIS | 0.87 | 0.577 | 0.062 | 0.993 | 0.719 | 0.311 | 0.112 | 0.104 |
| SOFA | 0.869 | 0.530 | 0.072 | 0.99 | 0.716 | 0.308 | 0.128 | 0.105 |
| SAPS-II | 0.87 | 0.534 | 0.114 | 0.985 | 0.765 | 0.343 | 0.188 | 0.102 |
| APS-III | 0.867 | 0.484 | 0.095 | 0.985 | 0.779 | 0.344 | 0.159 | 0.102 |
| ECG Score | 0.868 | 0 | 0 | 1 | 0.697 | 0.258 | 0 | 0.109 |
| ECG Score Plus APS-III | 0.873 | 0.576 | 0.149 | 0.983 | 0.792 | 0.377 | 0.237 | 0.098 |
| E3A Score | 0.873 | 0.578 | 0.153 | 0.983 | 0.806 | 0.399 | 0.242 | 0.096 |
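
The threshold-dependent columns (accuracy, PPV, sensitivity, specificity, F1 score) all follow from one confusion matrix, and the Brier score is the mean squared error of the predicted probabilities. The minimal Python sketch below is an illustration, not the authors' code. It shows why the SIRS and ECG Score rows can reach an accuracy of about 0.868 with zero sensitivity: a model that never predicts death scores the survivor fraction as its accuracy. It assumes that 28-day death is the positive class and that undefined 0/0 ratios are reported as 0, and it uses the full-cohort counts from the baseline table only for illustration, since the evaluation-set sizes are not stated here. The names `Confusion`, `threshold_metrics` and `brier_score` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    """Counts at a fixed decision threshold (assumed positive class: 28-day death)."""
    tp: int
    fp: int
    tn: int
    fn: int


def _ratio(num: float, den: float) -> float:
    # Assumed convention: an undefined 0/0 ratio is reported as 0.0.
    return num / den if den else 0.0


def threshold_metrics(c: Confusion) -> dict:
    ppv = _ratio(c.tp, c.tp + c.fp)            # precision
    sensitivity = _ratio(c.tp, c.tp + c.fn)    # recall
    specificity = _ratio(c.tn, c.tn + c.fp)
    accuracy = _ratio(c.tp + c.tn, c.tp + c.fp + c.tn + c.fn)
    f1 = _ratio(2 * ppv * sensitivity, ppv + sensitivity)  # harmonic mean of PPV and sensitivity
    return {"accuracy": accuracy, "ppv": ppv, "sensitivity": sensitivity,
            "specificity": specificity, "f1": f1}


def brier_score(y_true, p_pred) -> float:
    """Mean squared difference between predicted probability and the 0/1 outcome."""
    return sum((p - y) ** 2 for y, p in zip(y_true, p_pred)) / len(y_true)


# Illustration with full-cohort counts (2412 non-survivors, 15,844 survivors).
deaths, survivors = 2412, 15844
all_negative = Confusion(tp=0, fp=0, tn=survivors, fn=deaths)  # never predicts death
m = threshold_metrics(all_negative)
print({k: round(v, 3) for k, v in m.items()})
# {'accuracy': 0.868, 'ppv': 0.0, 'sensitivity': 0.0, 'specificity': 1.0, 'f1': 0.0}

prevalence = deaths / (deaths + survivors)
y = [1] * deaths + [0] * survivors
print(round(brier_score(y, [prevalence] * len(y)), 3))  # 0.115
```

For a constant prediction equal to the event rate π, the Brier score reduces to π(1 − π), about 0.115 for this cohort. That value is a rough reference point for the Brier column: a model that only predicts the base rate already attains it.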

| Model | Accuracy | PPV | Sensitivity | Specificity | AUC | AUPRC | F1 Score | Brier Score |
|---|---|---|---|---|---|---|---|---|
| Machine learning models (ECG Score + APS-III + Age) | | | | | | | | |
| Decision tree | 0.813 | 0.304 | 0.307 | 0.891 | 0.599 | 0.352 | 0.305 | 0.187 |
| Random forest | 0.871 | 0.543 | 0.234 | 0.970 | 0.780 | 0.402 | 0.327 | 0.099 |
| XGBoost | 0.865 | 0.490 | 0.197 | 0.968 | 0.774 | 0.374 | 0.281 | 0.102 |
| Logistic regression models | | | | | | | | |
| SIRS | 0.866 | 0.000 | 0.000 | 1.000 | 0.604 | 0.283 | 0.000 | 0.114 |
| CHARLSON | 0.869 | 0.727 | 0.033 | 0.998 | 0.692 | 0.273 | 0.063 | 0.109 |
| OASIS | 0.864 | 0.389 | 0.029 | 0.993 | 0.716 | 0.316 | 0.053 | 0.105 |
| SOFA | 0.867 | 0.536 | 0.061 | 0.992 | 0.677 | 0.289 | 0.110 | 0.108 |
| SAPS-II | 0.868 | 0.548 | 0.070 | 0.991 | 0.758 | 0.336 | 0.124 | 0.103 |
| APS-III | 0.871 | 0.588 | 0.123 | 0.987 | 0.777 | 0.373 | 0.203 | 0.101 |
| ECG Score | 0.866 | 0.000 | 0.000 | 1.000 | 0.721 | 0.299 | 0.000 | 0.107 |
| ECG Score Plus APS-III | 0.879 | 0.667 | 0.189 | 0.985 | 0.794 | 0.440 | 0.294 | 0.095 |
| E3A Score | 0.881 | 0.696 | 0.197 | 0.987 | 0.804 | 0.466 | 0.307 | 0.093 |
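
AUC and AUPRC are threshold-free ranking metrics, which explains why they can differ sharply from the threshold-dependent columns. The sketch below is an illustration, not the authors' implementation. It computes ROC AUC as the probability that a randomly chosen non-survivor receives a higher score than a randomly chosen survivor, with ties counting one half. It computes AUPRC as average precision, the mean of the precision values at the rank of each positive case. The text here does not say which AUPRC estimator the study used, so average precision is an assumption. The function names are hypothetical; scikit-learn's `roc_auc_score` and `average_precision_score` are the usual library equivalents, and the latter matches this sketch when scores contain no ties. A non-informative model has an expected AUPRC close to the event rate (about 0.13 for 28-day mortality in this cohort). The two metrics therefore start from different baselines, which is why every AUPRC in the tables sits well below the corresponding AUC.

```python
def roc_auc(y_true, scores) -> float:
    """ROC AUC as the Mann-Whitney probability P(score_pos > score_neg); ties count 0.5."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for sp in pos:          # O(n_pos * n_neg): fine for a sketch, slow for large cohorts
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def average_precision(y_true, scores) -> float:
    """Step-wise area under the precision-recall curve (average precision).

    Tied scores are broken by sort order, a simplification."""
    n_pos = sum(y_true)
    if n_pos == 0:
        raise ValueError("need at least one positive case")
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = 0
    total = 0.0
    for rank, i in enumerate(order, start=1):
        if y_true[i] == 1:
            tp += 1
            total += tp / rank  # precision at the rank where this positive is retrieved
    return total / n_pos


# Toy example: 1 = death within 28 days, scores are predicted risks.
y = [1, 0, 1, 0, 0]
s = [0.9, 0.8, 0.7, 0.3, 0.1]
print(round(roc_auc(y, s), 3), round(average_precision(y, s), 3))  # 0.833 0.833
```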

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Q.; Wang, B.; Chen, B.; Li, Q.; Zhao, Y.; Dong, T.; Wang, Y.; Zhang, P. Interpretable Machine Learning Model Integrating Electrocardiographic and Acute Physiology Metrics for Mortality Prediction in Critical Ill Patients. J. Clin. Med. 2025, 14, 7163. https://doi.org/10.3390/jcm14207163
