Individual Differences in Infants’ Temperament Affect Face Processing

Abstract

1. Introduction

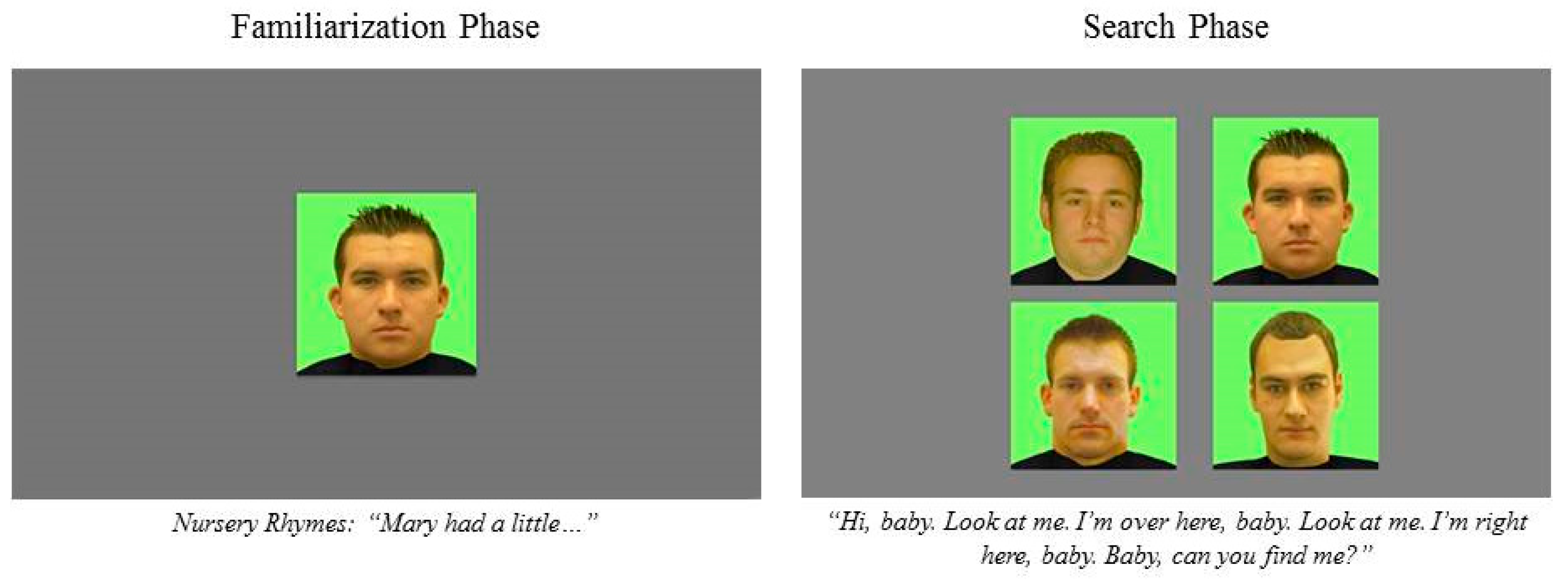

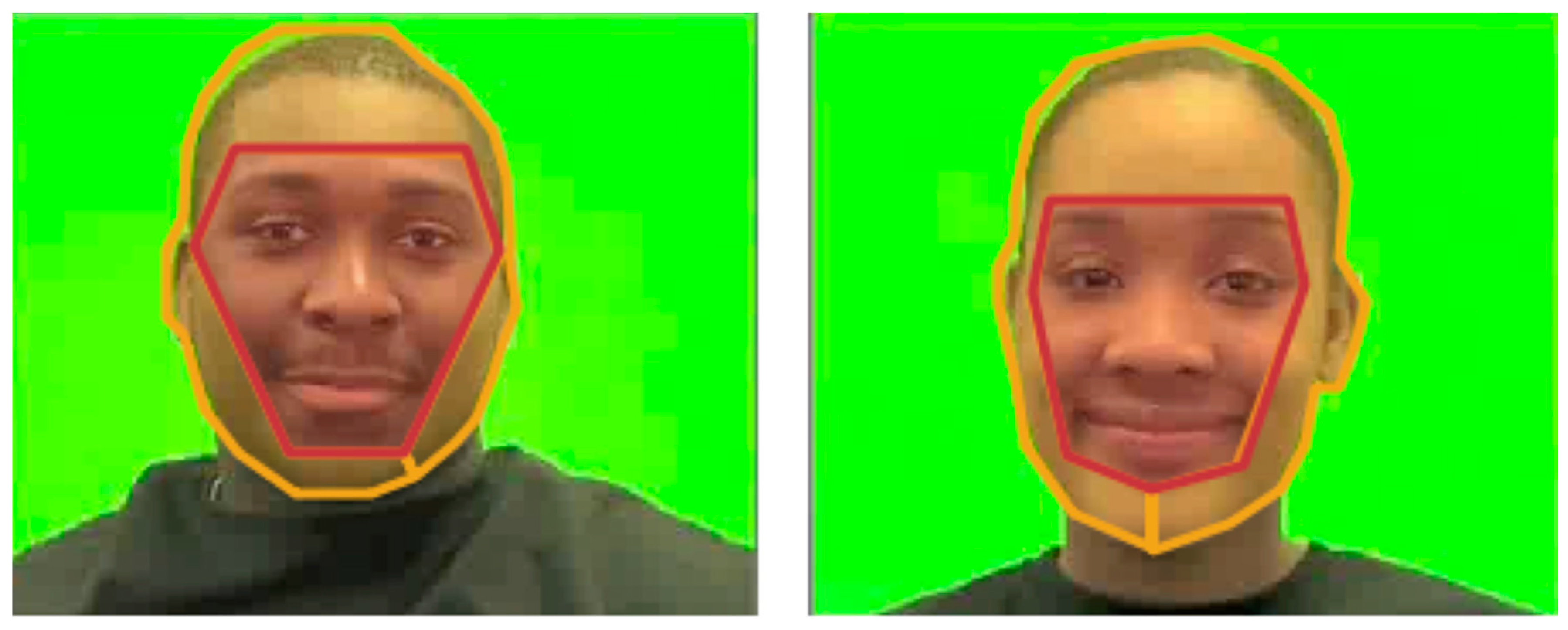

2. Materials and Methods

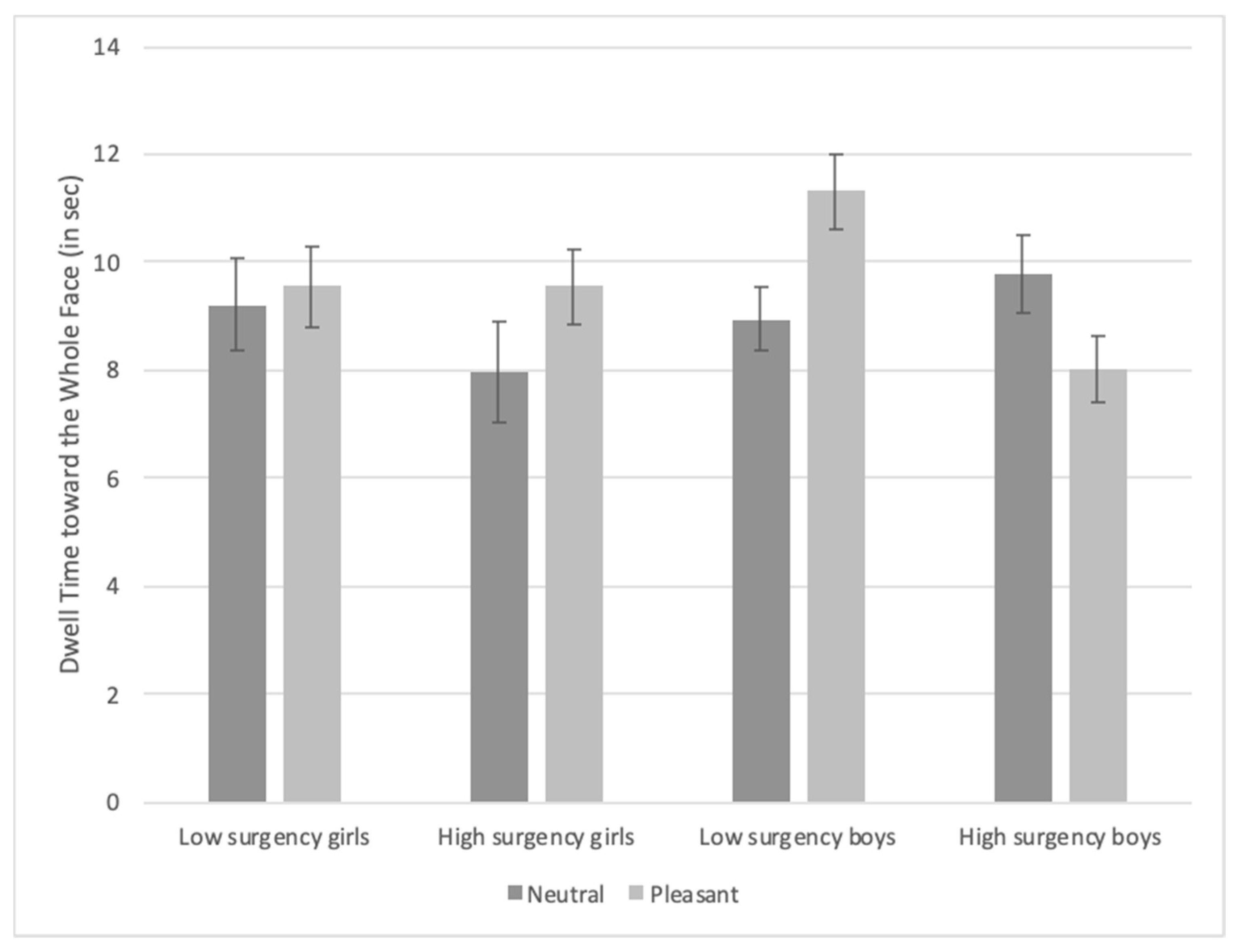

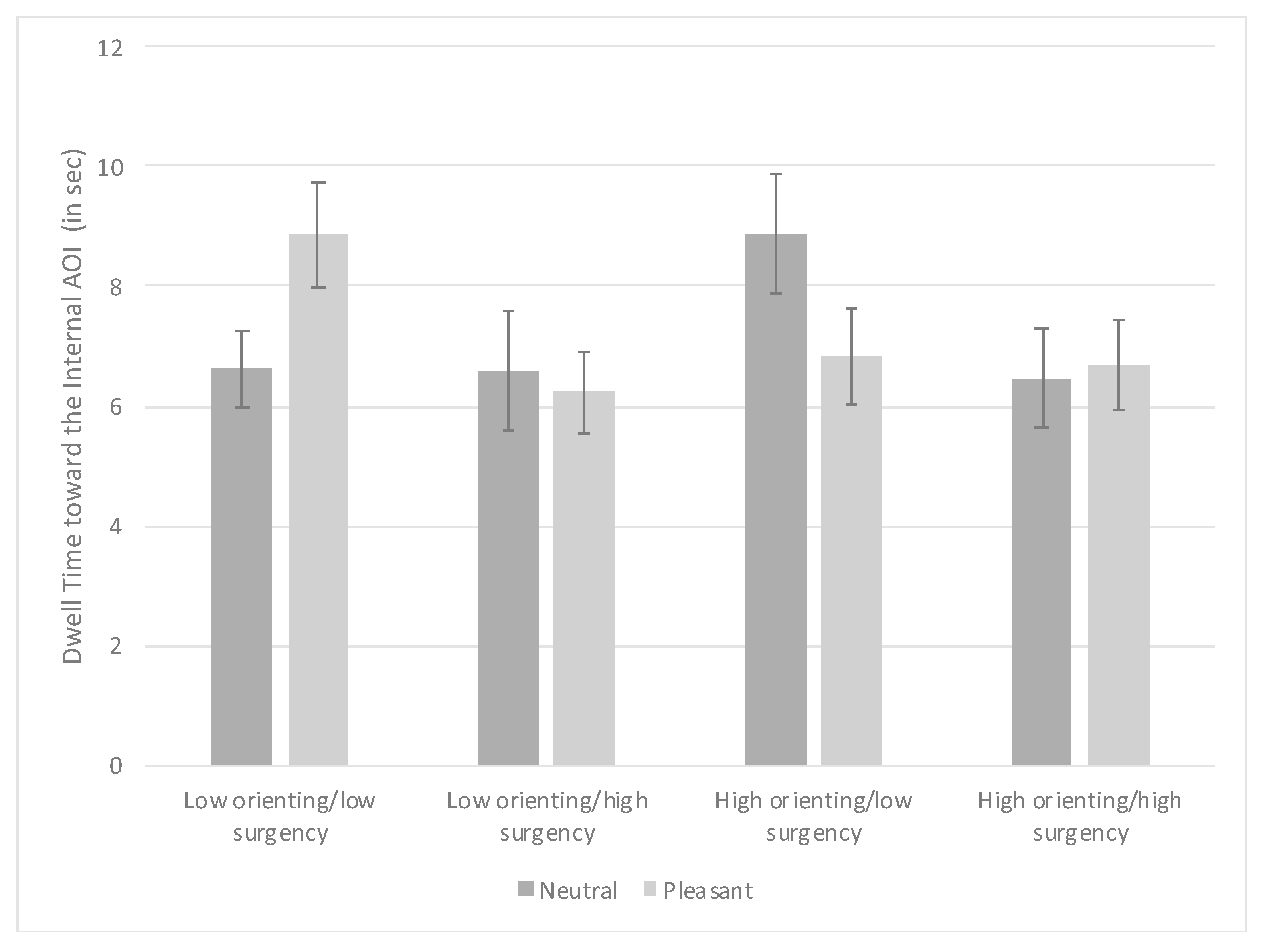

3. Results

3.1. Facial Experience

3.2. Temperament

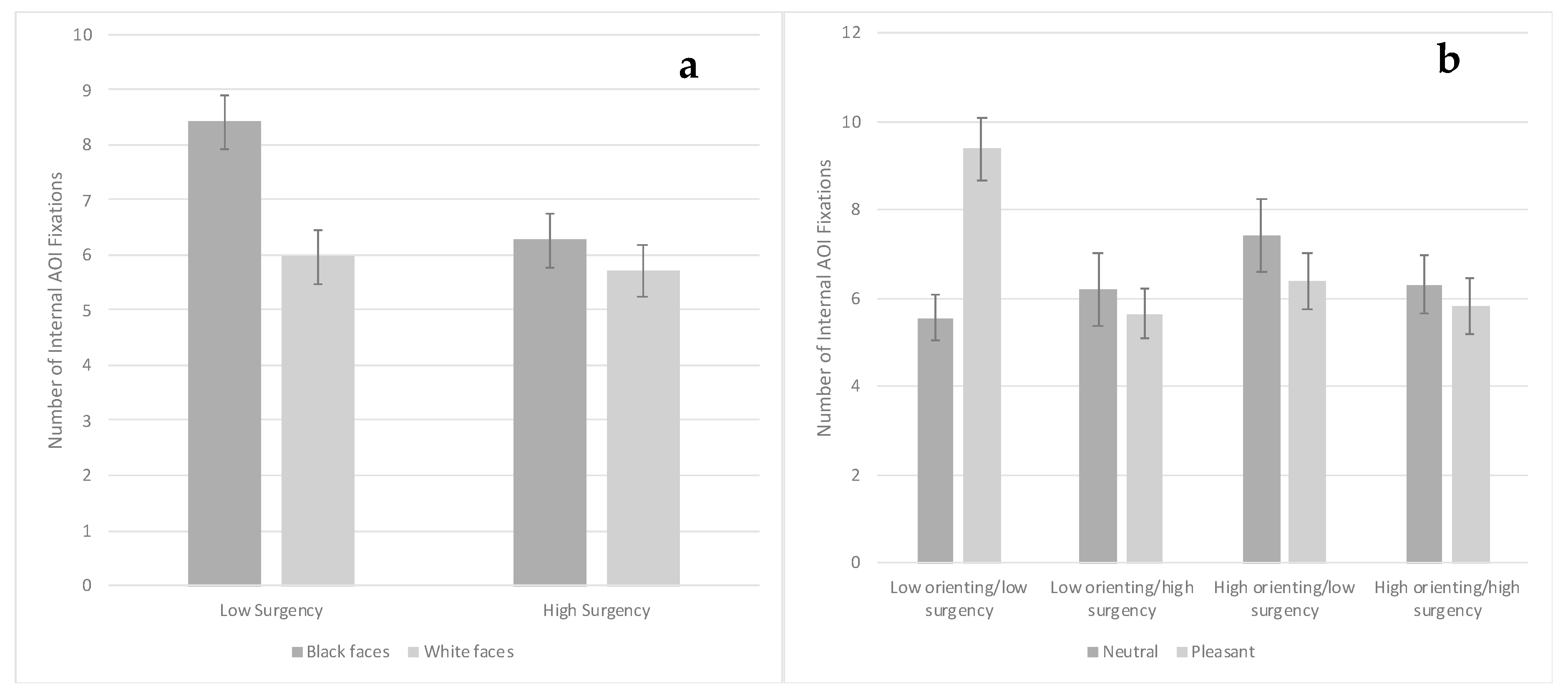

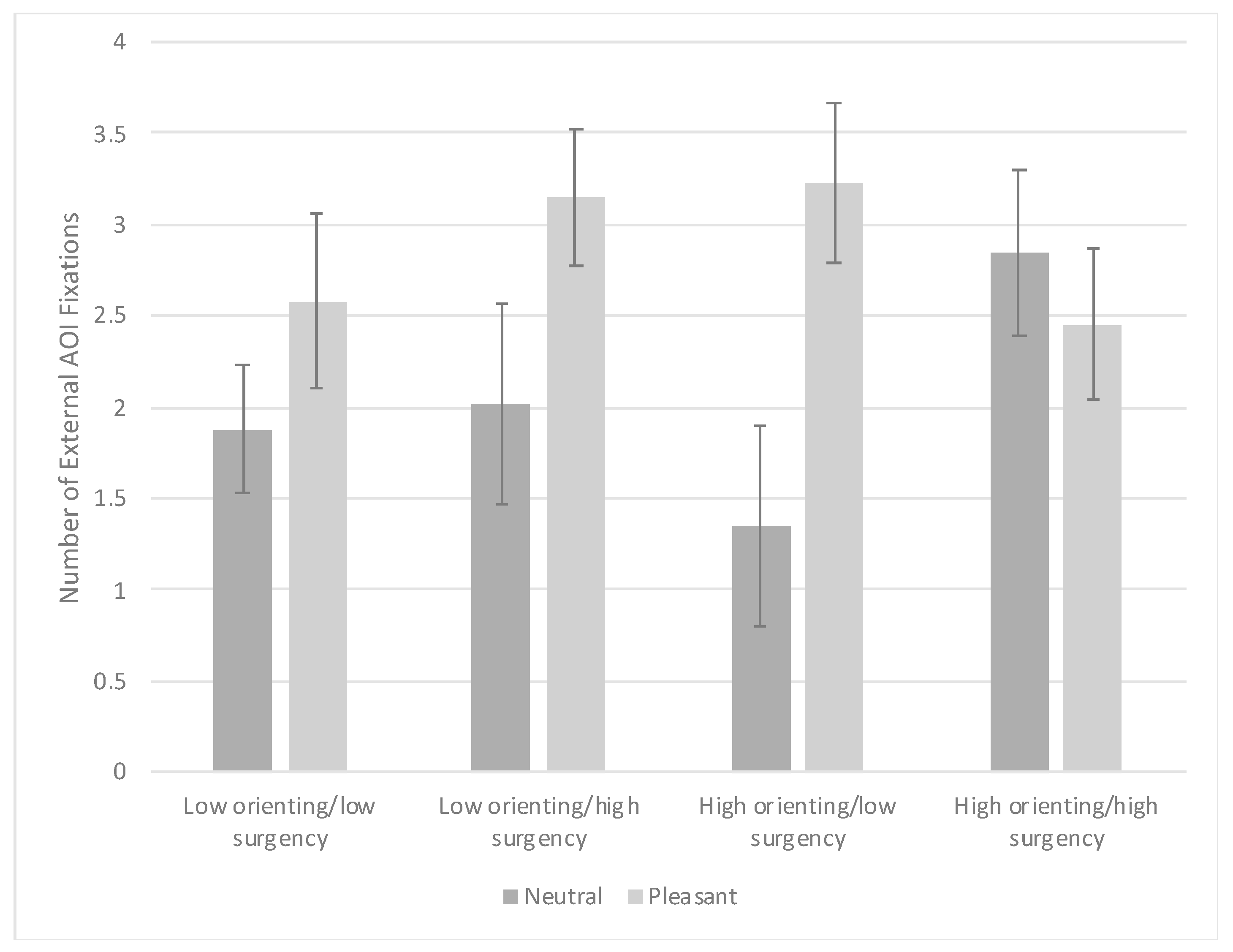

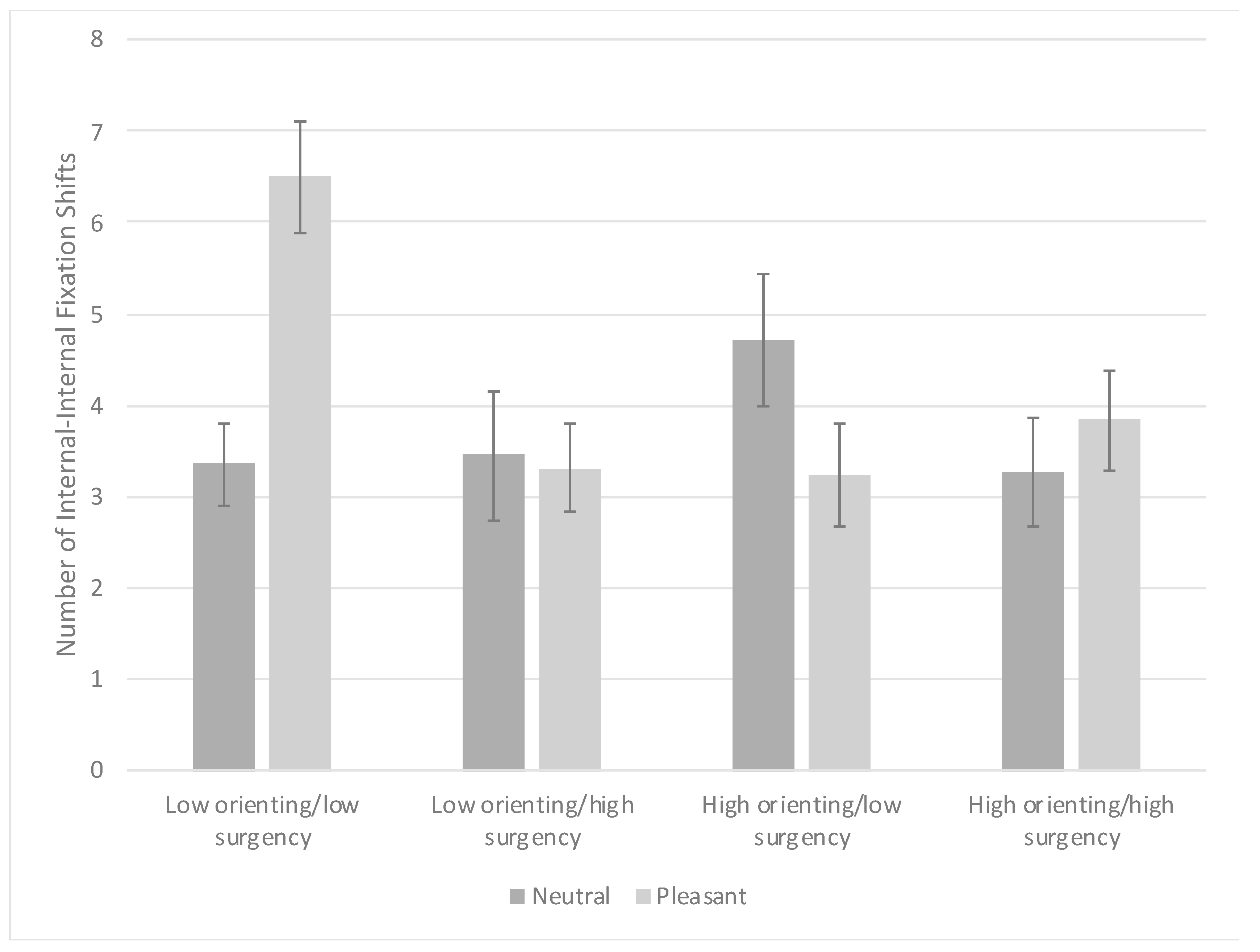

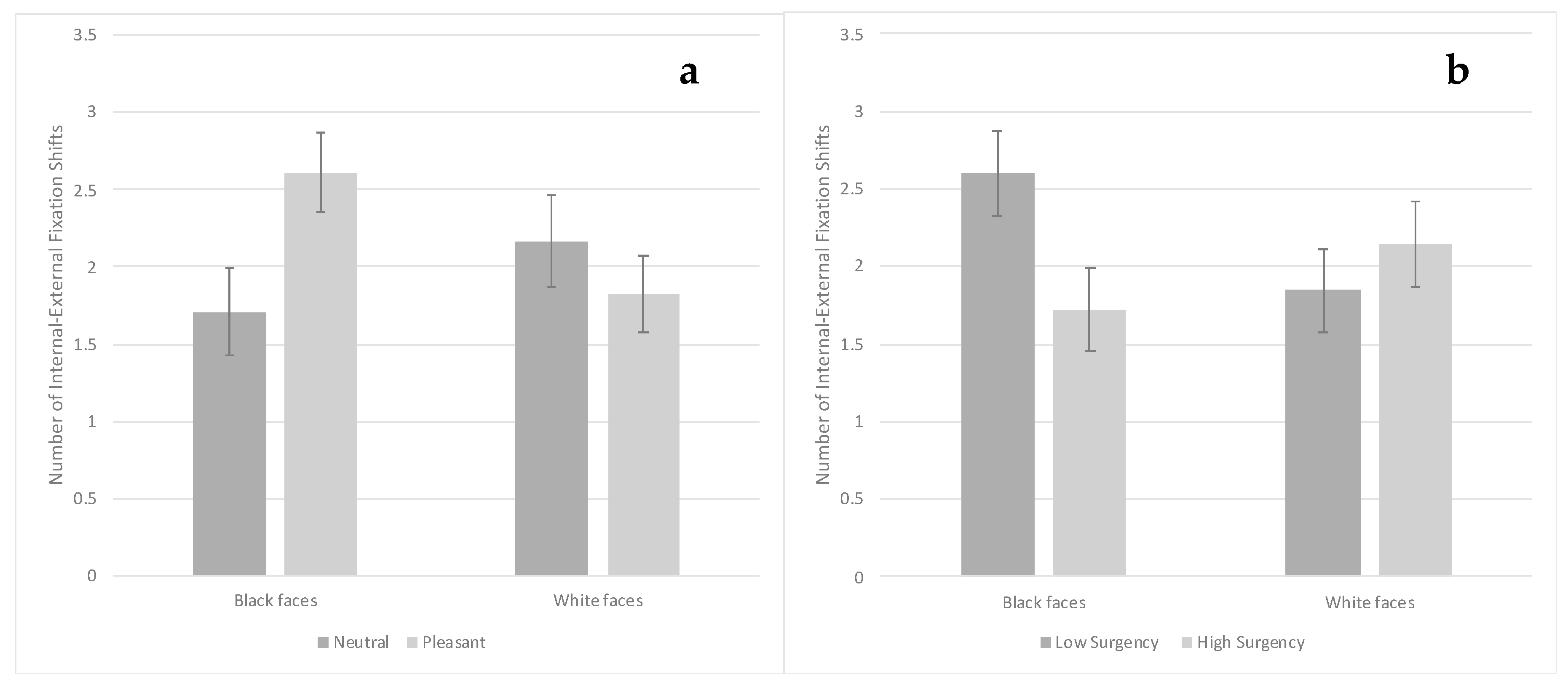

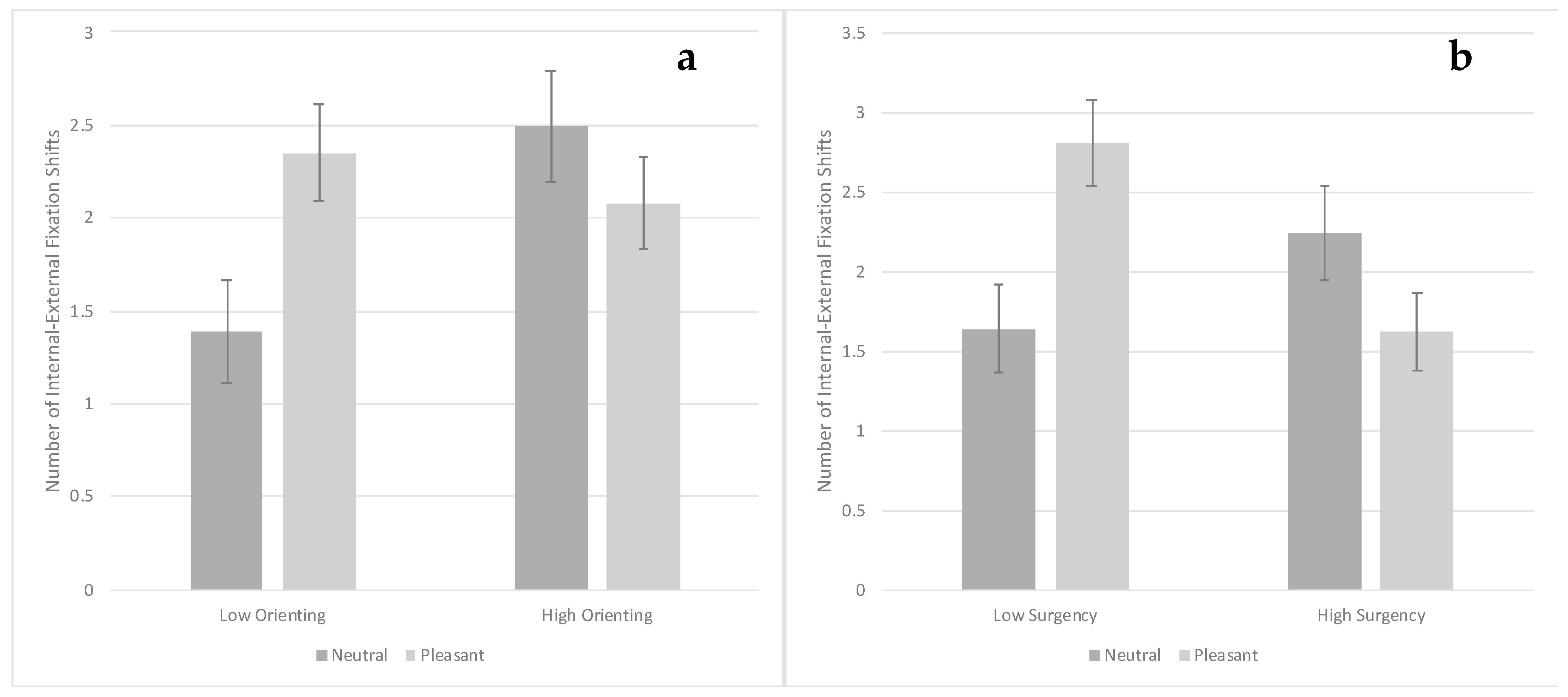

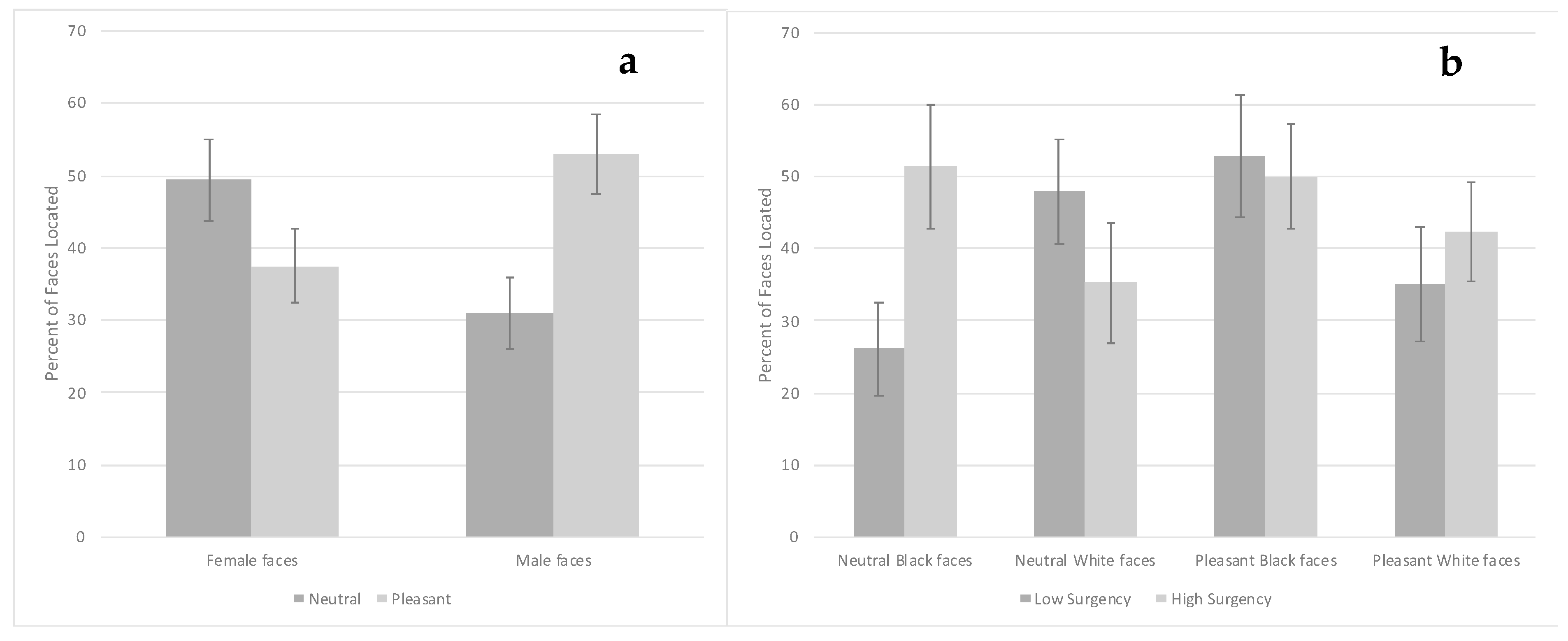

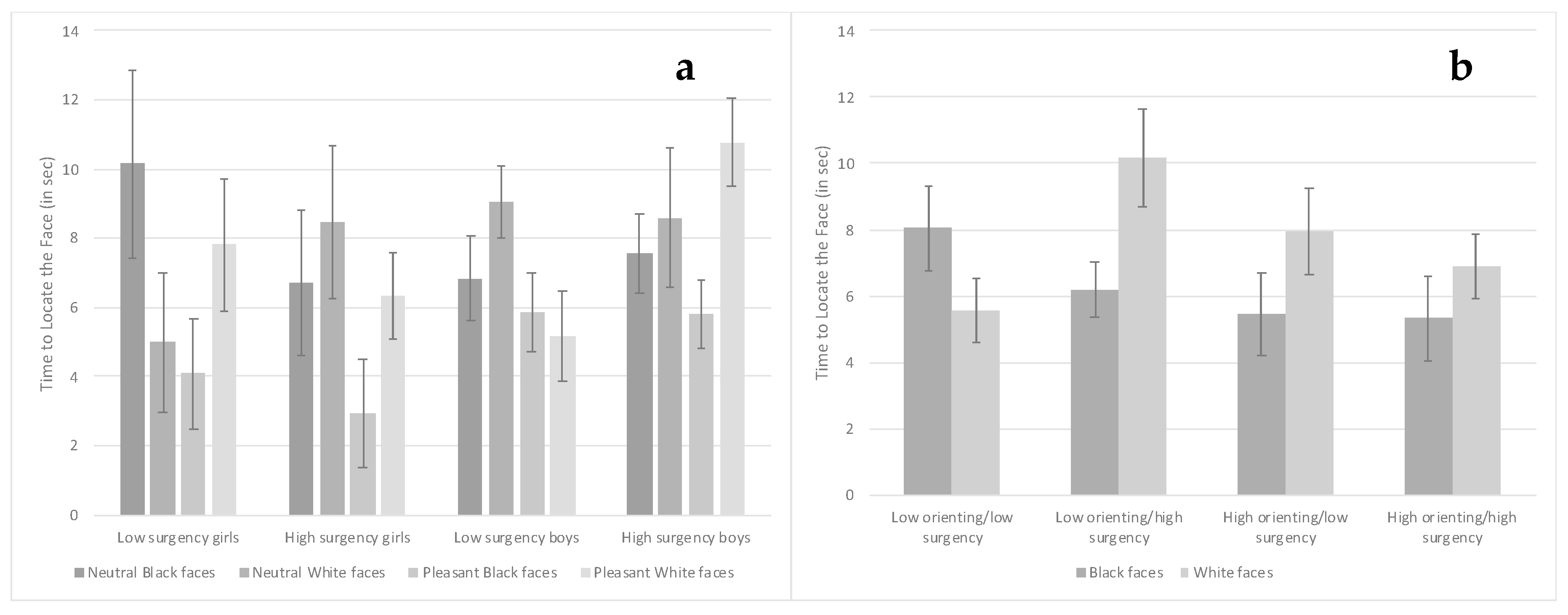

3.3. Visual Search Task

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Average Facial Experience (Proportion) | Temperament |
|---|---|
| White females (M = 0.42, SD = 0.29; range = 0–0.94) | Low surgency (M = 4.43, SD = 0.48), low orienting (M = 4.32, SD = 0.57); n = 30 |
| White males (M = 0.20, SD = 0.15; range = 0–0.56) | Low surgency (M = 4.36, SD = 0.54), high orienting (M = 5.61, SD = 0.42); n = 23 |
| Black females (M = 0.05, SD = 0.15; range = 0–0.91) | High surgency (M = 5.45, SD = 0.30), low orienting (M = 4.56, SD = 0.50); n = 25 |
| Black males (M = 0.02, SD = 0.05; range = 0–0.32) | High surgency (M = 5.72, SD = 0.42), high orienting (M = 5.64, SD = 0.40); n = 30 |
| Other race females (M = 0.21, SD = 0.24; range = 0–0.84) | |
| Other race males (M = 0.10, SD = 0.14; range = 0–0.65) | |
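As a quick consistency check on the facial-experience and temperament table above, the short Python sketch below sums the transcribed values. Assuming the six face categories are exhaustive, each infant's six proportions sum to 1, so by linearity of the mean the six category means must also sum to 1. The sketch also derives the share of female and of White faces and the number of infants across the four surgency × orienting groups. The dictionary names and the `total_by` helper are hypothetical, introduced only for illustration.

```python
# Mean proportions of facial experience, transcribed from the table.
# Assumption: the six (race, gender) categories are exhaustive.
face_experience_means = {
    ("White", "female"): 0.42,
    ("White", "male"): 0.20,
    ("Black", "female"): 0.05,
    ("Black", "male"): 0.02,
    ("Other race", "female"): 0.21,
    ("Other race", "male"): 0.10,
}

# Group sizes for the four surgency x orienting temperament groups.
temperament_group_n = {
    ("low surgency", "low orienting"): 30,
    ("low surgency", "high orienting"): 23,
    ("high surgency", "low orienting"): 25,
    ("high surgency", "high orienting"): 30,
}


def total_by(means, index, level):
    """Sum the mean proportions of all categories whose key[index] equals level."""
    return sum(value for key, value in means.items() if key[index] == level)


total_proportion = sum(face_experience_means.values())
female_share = total_by(face_experience_means, 1, "female")
white_share = total_by(face_experience_means, 0, "White")
total_n = sum(temperament_group_n.values())

print(f"sum of mean proportions: {total_proportion:.2f}")  # 1.00
print(f"female faces: {female_share:.2f}, White faces: {white_share:.2f}")  # 0.68, 0.62
print(f"infants across temperament groups: {total_n}")  # 108
```

The group total counts only infants classified into the four temperament groups; it is not necessarily the full sample size.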

| | Whole Face Dwell Time (sec) | Internal AOI Dwell Time (sec) | External AOI Dwell Time (sec) | Internal AOI Fixations | External AOI Fixations | Internal–Internal Fixation Shifts | Internal–External Fixation Shifts |
|---|---|---|---|---|---|---|---|
| Mean | 9.07 | 6.96 | 2.12 | 6.33 | 2.48 | 3.79 | 1.01 |
| Std. Error | 0.23 | 0.26 | 0.15 | 0.22 | 0.14 | 0.19 | 0.07 |
| Range | 1.00–15.02 | 0.00–15.02 | 0.00–15.02 | 0–20 | 0–18 | 0–18 | 0–6 |
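The dwell-time summary table above also supports a simple arithmetic check. Assuming the internal and external AOIs together partition the whole face, their mean dwell times should add up to the whole-face mean within rounding, and simple ratios give a rough share of looking directed at internal features. This is a sketch only: a ratio of group means is not the same as the mean of per-infant ratios, and the variable names are hypothetical.

```python
# Mean values transcribed from the dwell-time and fixation summary table.
whole_dwell = 9.07     # whole face dwell time (sec)
internal_dwell = 6.96  # internal AOI dwell time (sec)
external_dwell = 2.12  # external AOI dwell time (sec)
internal_fix = 6.33    # internal AOI fixations
external_fix = 2.48    # external AOI fixations

# Assumption: internal + external AOIs cover the whole face, so their dwell
# times should match the whole-face value up to rounding of the reported means.
rounding_gap = abs((internal_dwell + external_dwell) - whole_dwell)

# Ratio of means (not a mean of per-infant ratios).
internal_dwell_share = internal_dwell / whole_dwell
internal_fix_share = internal_fix / (internal_fix + external_fix)

print(f"AOI sum vs whole face: gap = {rounding_gap:.2f} sec")       # 0.01
print(f"internal share of dwell time: {internal_dwell_share:.1%}")  # 76.7%
print(f"internal share of AOI fixations: {internal_fix_share:.1%}")  # 71.9%
```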

| | Whole Face Dwell Time | Internal AOI Dwell Time | External AOI Dwell Time | Internal AOI Fixations | External AOI Fixations | Internal–Internal Fixation Shifts | Internal–External Fixation Shifts |
|---|---|---|---|---|---|---|---|
| Face located | 0.228 *** | 0.146 ** | 0.130 * | 0.087 | 0.078 | 0.092 † | 0.108 * |
| Face located (adjusted for temperament) | 0.236 *** | 0.151 ** | 0.129 * | 0.093 † | 0.078 | 0.096 † | 0.110 * |
| Time to locate | −0.255 ** | −0.180 * | −0.056 | −0.03 | 0.03 | −0.02 | −0.135 |
| Time to locate (adjusted for temperament) | −0.260 ** | −0.186 * | −0.052 | −0.1 | 0.032 | −0.032 | −0.134 |

| Dependent Variable | AICc without Temperament Measures | AICc with Temperament Measures |
|---|---|---|
| Dwell time toward whole face | 1907.6 | 1688.1 |
| Dwell time toward internal AOI | 1975.0 | 1754.5 |
| Dwell time toward external AOI | 1638.4 | 1465.3 |
| Fixations toward internal AOI | 1866.4 | 1642.0 |
| Fixations toward external AOI | 1588.7 | 1427.8 |
| Internal–internal fixation shifts | 1783.4 | 1570.0 |
| Internal–external fixation shifts | 1508.4 | 1333.7 |
| Time to locate face | 785.4 | 660.1 |
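AICc is the Akaike information criterion with a small-sample correction: AIC = 2k - 2 ln L and AICc = AIC + 2k(k + 1)/(n - k - 1), where k is the number of estimated parameters, n the number of observations, and L the maximized likelihood. Lower values indicate a better balance of fit and complexity. The Python sketch below implements that standard formula as a generic helper (not necessarily how the models in this study were fit) and computes the drop in AICc when temperament measures are added, using the values in the table above. Such differences are only interpretable when both models are fit to the same set of observations; the helper name `aicc` and the toy inputs are hypothetical.

```python
def aicc(log_likelihood: float, k: int, n: int) -> float:
    """Return the small-sample corrected Akaike information criterion.

    log_likelihood: maximized log-likelihood ln(L) of the fitted model
    k: number of estimated parameters
    n: number of observations
    """
    if n - k - 1 <= 0:
        raise ValueError("AICc is undefined unless n > k + 1")
    aic = 2 * k - 2 * log_likelihood
    return aic + (2 * k * (k + 1)) / (n - k - 1)


# AICc values transcribed from the model-comparison table:
# (without temperament measures, with temperament measures)
reported = {
    "Dwell time toward whole face": (1907.6, 1688.1),
    "Dwell time toward internal AOI": (1975.0, 1754.5),
    "Dwell time toward external AOI": (1638.4, 1465.3),
    "Fixations toward internal AOI": (1866.4, 1642.0),
    "Fixations toward external AOI": (1588.7, 1427.8),
    "Internal-internal fixation shifts": (1783.4, 1570.0),
    "Internal-external fixation shifts": (1508.4, 1333.7),
    "Time to locate face": (785.4, 660.1),
}

# Positive difference = lower (preferred) AICc once temperament is included.
deltas = {
    dv: round(without_t - with_t, 1)
    for dv, (without_t, with_t) in reported.items()
}

for dv, delta in deltas.items():
    print(f"{dv:<36} delta AICc = {delta:6.1f}")

# Toy illustration of the helper (hypothetical numbers, not from the study).
print(round(aicc(log_likelihood=-100.0, k=3, n=50), 2))  # 206.52
```

With the transcribed values every difference is positive, ranging from 125.3 (time to locate face) to 224.4 (fixations toward internal AOI), so each model that includes temperament measures has the lower AICc.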

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Rennels, J.L.; Kayl, A.J.; Kulhanek, K.M. Individual Differences in Infants’ Temperament Affect Face Processing. Brain Sci. 2020, 10, 474. https://doi.org/10.3390/brainsci10080474
