1. Introduction

Visual object tracking is a crucial research issue in computer vision and has many applications, including video security, traffic monitoring, robotics and human-computer interfaces. In the past decade, visual tracking algorithms [1,2,3,4,5,6] have improved greatly; meanwhile, large visual tracking datasets and benchmarks, such as the Object Tracking Benchmark (OTB)-50 [7], OTB-100 [8], Visual Object Tracking (VOT) [9,10], Need for Speed (NfS) [11] and Multi-Object Tracking (MOT) [12], have sparked the interest of numerous scholars and given appreciable impetus to the research area. Despite the considerable headway made in recent decades, tracking is still considered a major challenge in scenarios such as illumination variation, scale variation, occlusion, fast motion, deformation and background clutter.

Lately, correlation filter (CF) based methods [13,14,15] have been widely pursued in visual tracking because of their excellent performance. CF-based trackers learn a correlation filter from training samples obtained by cyclically shifting base samples. For each new frame, the trained filter locates the new target position at the point of maximum response. The filter is then updated at the new location. By exploiting the circulant structure of the training samples, CF-based trackers enable efficient learning and detection in the Fourier domain.

Despite their appealing accuracy and high frames-per-second (FPS) rate, CF-based trackers have some drawbacks that reduce their performance. A major drawback is the boundary effect caused by circular shifting. To inhibit the boundary effect, a cosine window is usually employed. However, the cosine window limits the search area, so the quantity of samples, specifically negative samples, is sharply reduced. Therefore, CF-based trackers usually have insufficient context and readily drift in scenes with fast motion or background clutter. Moreover, CF-based trackers learn only a holistic model, so they cannot deal well with partial occlusion.

To further improve the performance of CF-based trackers, several effective methods have been proposed. By handling large scale changes in complex image sequences, the Scale Adaptive with Multiple Features tracker (SAMF) [16] and the Discriminative Scale Space Tracker (DSST) [17] have achieved state-of-the-art performance. The Sum of Template and Pixel-wise LEarners (Staple) tracker [18] learns a filter that is robust to color variations and deformations by combining two representations that are sensitive to complementary factors. However, these trackers lack an effective approach to mitigating the boundary effect. The Spatially Regularized Discriminative Correlation Filters (SRDCF) tracker [19] reduces boundary effects by adopting a spatial regularization component in training that penalizes correlation filter coefficients according to their position. However, solving the resulting optimization problem is time-consuming. Lately, context-aware (CA) [20] and background-aware [21] CF-based trackers have demonstrated notable improvements in visual tracking performance. These methods incorporate global context information into filter training to increase the number of negative samples, which clearly affects tracking results. However, there are two major problems: (1) context patches are simply selected at fixed positions around the target, so the selected negative samples may be non-representative; and (2) only a holistic model is learned, so these trackers lack robustness against partial occlusion.

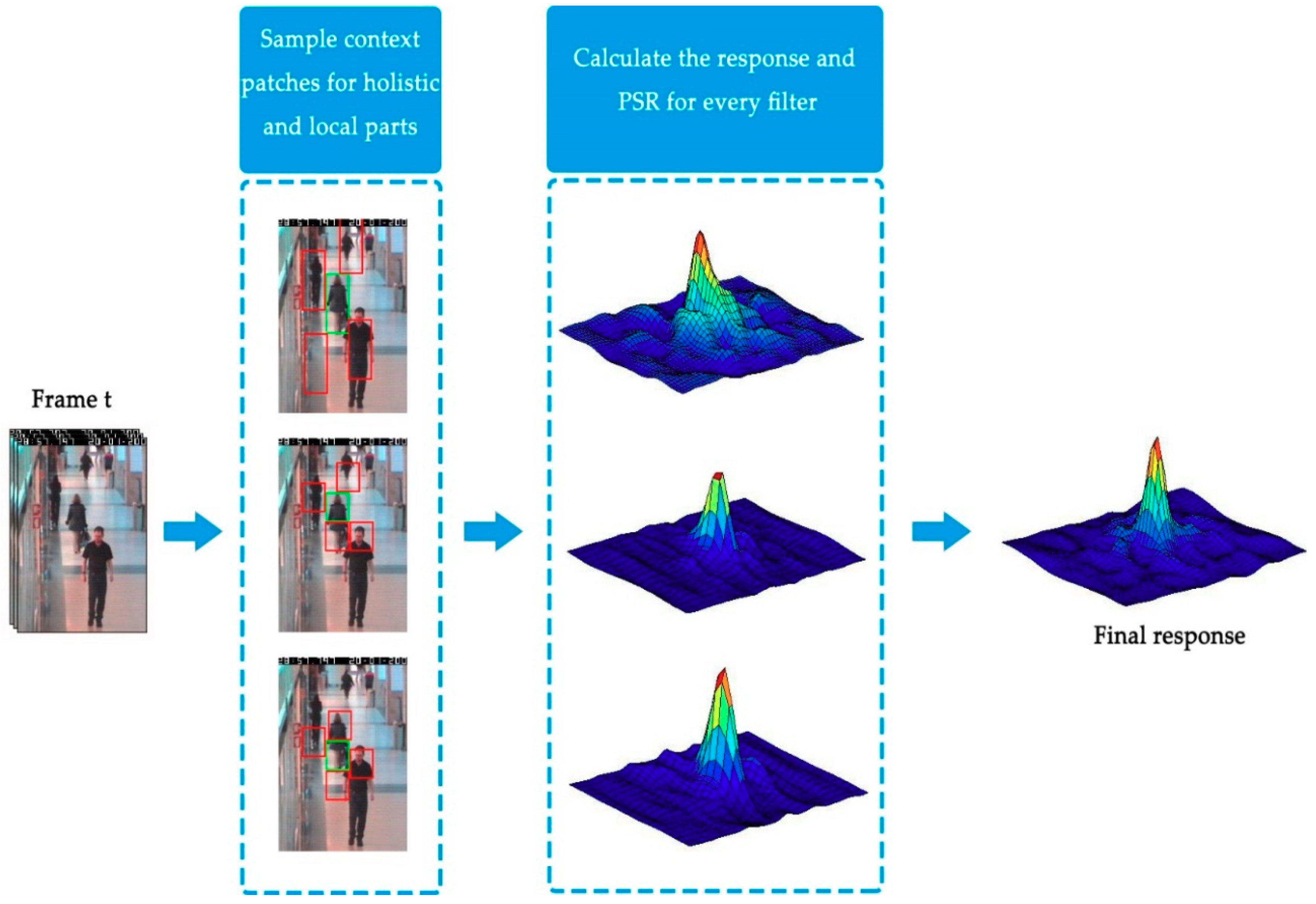

To tackle the above problems, we propose an adaptive context-aware and structural correlation filter for visual tracking, which contributes in three respects. Firstly, in order to add informative negative samples that inhibit the boundary effect caused by circular shifting, we introduce a novel context selection strategy. We evenly divide the target neighborhood into four blocks and select the maximum-response patch from each block as a context patch for collecting negative samples. Negative samples selected by this strategy are more informative. Secondly, to gain robustness against partial occlusion, we construct a structural correlation filter by learning both holistic and local models. The final response of the correlation filter is obtained by integrating every component with an adaptive weight based on the Peak to Sidelobe Ratio (PSR), which measures the reliability of each component. The new location of the target is predicted from the final weighted response. Finally, we propose an adaptive updating scheme that uses a fluctuation parameter. The parameter is calculated from the deviation of the PSR between two consecutive frames, which describes the degree to which the appearance change is easing or worsening. The updating scheme adaptively adjusts the updating rate for different frames, thereby updating the filter more accurately.

We evaluate our proposed method on the OTB-100 benchmark. The benchmark annotates 100 video sequences with 11 sub-categories (occlusion, background clutter, illumination variation, scale variation, deformation, motion blur, fast motion, in-plane rotation, out-of-plane rotation, out-of-view and low resolution) that describe the different challenges in the tracking problem. Precision and success plots are used for quantitative analysis on the benchmark. The procedure of the adaptive context-aware and structural correlation filter is shown in Figure 1. Extensive experiments on the OTB-100 dataset demonstrate that our approach performs favorably against state-of-the-art methods.

The rest of the paper is arranged as follows. In Section 2, we discuss recent visual tracking algorithms. Section 3 elaborates the proposed adaptive context-aware and structural correlation filter. Section 4 evaluates the performance of our proposed tracker on the Visual Tracking benchmark [8] datasets and compares it with several state-of-the-art trackers, and Section 5 concludes this paper.

2. Related Works

Visual tracking is a challenging research issue [22] in computer vision. Existing trackers are generally partitioned into two categories [23]. The first category, called generative [24,25,26,27,28], tracks by modeling the target and combining the model with a target detection method. Kwon et al. [2] proposed a visual tracking decomposition algorithm that employs diverse observation and motion models to account for comparatively large appearance changes caused by lighting variation and fast motion. The algorithm was further developed to search for proper trackers by Markov Chain Monte Carlo sampling [3]. The second category, called discriminative, treats tracking as a classification task between foreground (target) and background, and trains classifiers that combine detection and tracking to follow the target. Discriminative methods are usually referred to as tracking-by-detection [22]. Tracking-by-detection trackers [1,5,29,30] are more popular in current research because of their high performance and efficiency. Hare et al. [1] propose a tracker based on a structured output Support Vector Machine (SVM) with Gaussian kernels that locates the target directly. Kalal et al. [5] use structural constraints to guide the sampling of a boosting method. Zhang et al. [29] propose compressive tracking, which learns a naive Bayes model and uses compressive sensing theory to reduce the dimensionality of Haar-like features and accelerate computation. Khattak et al. [31] merge multiple features into tracking to improve robustness. Additionally, the Sparsity-based Collaborative Model (SCM) [4] integrates discriminative and generative classifiers to produce a more robust tracker.

2.1. Correlation Filter Based Trackers

Over the past decade, CF-based trackers [32] have attracted growing interest in tracking research and have achieved substantial improvements in diverse challenges [9] and benchmarks [8]. Traditionally, a correlation filter is designed to produce a correlation peak for each patch of interest in a frame while yielding a low response to the background, and it is therefore often used as a detector of the desired pattern. Although such filters can resolve the tracking problem, their training requirements make them unsuitable for online tracking. This situation changed when the Minimum Output Sum of Squared Error (MOSSE) [13] filter was proposed. With its adaptive learning method, the MOSSE tracker produces robust and efficient tracking results. Multiple improved algorithms have followed, building on the fundamental theory of MOSSE. For instance, Henriques et al. [14] improve the MOSSE filter by exploiting the Circulant Structure with Kernels (CSK). Danelljan et al. [33] employ Color Name features, which convey color attributes, to improve the tracking performance of the CSK tracker. Because the color features are high-dimensional, the updating scheme of [33] is adapted to reduce the feature dimension by Principal Component Analysis (PCA). The improved Kernelized Correlation Filter (KCF) [15] employs multi-channel features in place of raw pixels and is the most widely adopted filter, since it has good overall performance and a high FPS rate. Zhang et al. [34] formulate a distribution scheme in a Bayesian optimization framework to alleviate drifting.

By further coping with scale variation, the correlation-filter-based SAMF [16] and DSST [17] trackers achieve appealing performance and have outperformed the other participating methods in accuracy and robustness in recent competitions. Li et al. [35] introduce a variant of SAMF based on the water-flow-driven minimum barrier distance (MBD) algorithm. Staple [18] learns a model that is robust to both color variations and deformations by combining two representations that are sensitive to complementary factors. To reduce boundary effects, the SRDCF tracker [19] exploits a spatial regularization component in training that penalizes correlation filter coefficients according to their position. Recently, convolutional features have also been used in visual tracking to improve accuracy and robustness. Deep SRDCF [36] employs deep features from a single convolutional layer of a Convolutional Neural Network (CNN) for tracking, and the Continuous Convolution Operator Tracker (C-COT) [37] extends this to multiple convolutional layers.

2.2. Context-Aware Based Trackers

Furthermore, context-aware trackers have demonstrated notable improvement in tracking performance. Dinh et al. [30] propose a method that automatically explores distractors and supporters around the tracked object using a sequential random forest classifier. Distractors are patches that have a similar appearance to the target and consistently produce high response values. Xiao et al. [38] exploit contextual information using multi-level clustering. More recently, Mueller et al. [20] incorporate global context information into filter training to add negative samples and remodel the original regression function, while offering a closed-form solution for both single-channel and multi-channel features. However, the CA tracker [20] simply samples context patches at fixed locations around the target, so the selected negative samples may be non-representative. Qie et al. [39] sample the patches with the top m response values directly from the whole neighboring region of the target and then employ the K-means clustering algorithm to select hard negative samples. Our proposed context selection strategy is similar to [39], but the two methods differ clearly. The method of [39] directly samples the patches with the top m responses around the target. These patches may be concentrated in a small area, thus ignoring distractors from other areas. Our method divides the target neighborhood evenly into four blocks and then selects the maximum-response patch from each block, so the distractors sampled by our scheme are more representative. In addition, the clustering process employed by [39] consumes extra time.

2.3. Part-Based Correlation Filter Trackers

Jeong et al. [40] propose an adaptive partial block scheme to attenuate the influence of partial occlusion. Sun et al. [41] propose a framework that employs a shape-preserving scheme to handle object variations for each individual sub-part. Liu et al. [42] retain the spatial distribution structure of the sub-parts and take advantage of the inherent connections between them to improve tracking performance. However, these trackers [41,42] focus only on local parts and ignore the relationship between the holistic target and the local models. Akin et al. [43] cope with partial occlusion by applying coupled interactions between a holistic tracker and several local trackers. Fan et al. [44] combine holistic and local models to capture the internal structure of the target, making the filter more robust to partial occlusion. The structural models of [43,44] are based on the response value, which may be misleading because it is the response between the tracking window and the current target model, which is itself continuously adapted by learning from previous matches. Jeong et al. [45] pick the part with the maximum response among the global block and the sub-blocks as the designated tracking object, but relying on an independent model is not reliable under occlusion. Different from the above methods, we construct a structural correlation filter by learning both holistic and local models. The final response of the correlation filter is obtained by integrating every component with an adaptive weight based on the Peak to Sidelobe Ratio (PSR), which measures the reliability of each component. The final location of the target is then predicted from the final weighted response.

2.4. Adaptive Update Schemes

Moreover, to ensure long-term tracking, the correlation filter should be updated robustly. While the object is being lost, the updating rate must be reduced to avoid absorbing harmful information into the appearance model. Several studies have focused on long-term components and failure-handling strategies. Ma et al. [46] propose an online random fern classifier as a re-detection component for long-term tracking (LCT). Jeong et al. [45] introduce an adaptive learning rate computed as the ratio between the value in the current frame and a desired value, based on the Peak to Sidelobe Ratio. However, these schemes neglect the inter-frame deviation, which can measure the degree to which the appearance change is easing or worsening.

In this paper, we propose an adaptive context-aware and structural correlation filter for object tracking. Firstly, we propose a novel context selecting strategy to obtain negative samples, instead of sampling on fixed locations. Secondly, to gain robustness against partial occlusion, we construct a structural correlation filter by learning both the holistic and local models. Finally, we introduce an adaptive update scheme by using a fluctuation parameter.

3. The Proposed Tracker

In this section, we elaborate our proposed adaptive context-aware and structural correlation filter. Firstly, we review the classical KCF tracker [15], and then introduce a novel context selection strategy for obtaining negative samples. Moreover, we construct a structural correlation filter to improve robustness against partial occlusion. Finally, a fluctuation parameter is proposed to update the model.

3.1. The KCF Tracker

Like other CF-based methods, our proposed method is based on the KCF tracker. Therefore, before discussing our method in detail, we first review the KCF tracker [15]. The KCF tracker achieves excellent results and high speed on the visual tracker benchmark [8], even though its idea and implementation are very simple. The KCF tracker collects positive and negative samples around the target using the structure of the circulant matrix, which improves the discriminative capability of the tracking-by-detection tracker. The circulant matrix can be diagonalized with the Discrete Fourier Transform (DFT), enabling fast element-wise products instead of expensive matrix algebra.

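The goal of the KCF tracker is to find a function $f(\mathbf{z}) = \mathbf{w}^{T}\mathbf{z}$ that minimizes the squared error over the data matrix $X$ and the regression target $\mathbf{y}$,

$$\min_{\mathbf{w}} \; \|X\mathbf{w} - \mathbf{y}\|_2^2 + \lambda \|\mathbf{w}\|_2^2, \qquad (1)$$

where the square matrix $X$ contains all circulant shifts of the base sample $\mathbf{x}$, the regression target $\mathbf{y}$ is Gaussian-shaped, and $\lambda$ is a regularization parameter that ensures the generalization performance of the classifier. Equation (1) has the closed-form solution

$$\mathbf{w} = (X^{T}X + \lambda I)^{-1} X^{T}\mathbf{y}. \qquad (2)$$

The circulant matrix $X$ has some intriguing properties [47,48], the most useful of which is that it can be diagonalized by the Discrete Fourier Transform (DFT):

$$X = F\,\mathrm{diag}(\hat{\mathbf{x}})\,F^{H}, \qquad (3)$$

where $F$ is the DFT matrix, $F^{H}$ is its Hermitian transpose, and $\hat{\mathbf{x}}$ denotes the DFT of $\mathbf{x}$. Applying Equation (3) to the solution of the linear regression (Equation (2)), we obtain

$$\hat{\mathbf{w}} = \frac{\hat{\mathbf{x}}^{*} \odot \hat{\mathbf{y}}}{\hat{\mathbf{x}}^{*} \odot \hat{\mathbf{x}} + \lambda}, \qquad (4)$$

where $\hat{\mathbf{x}}^{*}$ is the complex conjugate of $\hat{\mathbf{x}}$. The symbol $\odot$ and the fraction denote element-wise product and division, respectively.

To detect the new location of the target in the next frame, we compute the response $\mathbf{r} = Z\mathbf{w}$ for all candidate patches, where $Z$ is the circulant matrix of the search patch $\mathbf{z}$, and diagonalize $Z$ to obtain

$$\hat{\mathbf{r}} = \hat{\mathbf{z}} \odot \hat{\mathbf{w}}. \qquad (5)$$

The candidate patch with the maximum response is taken as the new location of the target.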
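The training and detection steps reviewed above can be sketched in a few lines of NumPy. This is a minimal single-channel linear filter on raw pixels, not the authors' implementation: the function names, the Gaussian width `sigma` and the regularization value `lam` are assumptions chosen for illustration, and the kernel trick, feature extraction and cosine window of the full KCF tracker are omitted.

```python
import numpy as np

def gaussian_target(shape, sigma=2.0):
    """Gaussian-shaped regression target with its peak wrapped to index (0, 0)."""
    rows = np.arange(shape[0])
    cols = np.arange(shape[1])
    # Cyclic distance of every index from 0 along each axis.
    dr = np.minimum(rows, shape[0] - rows)[:, None]
    dc = np.minimum(cols, shape[1] - cols)[None, :]
    return np.exp(-(dr ** 2 + dc ** 2) / (2.0 * sigma ** 2))

def train_filter(x, y, lam=1e-4):
    """Ridge regression over all cyclic shifts of x, solved element-wise in the Fourier domain.

    lam is an assumed value, not one taken from the paper.
    """
    x_hat = np.fft.fft2(x)
    y_hat = np.fft.fft2(y)
    return np.conj(x_hat) * y_hat / (np.conj(x_hat) * x_hat + lam)

def detect(w_hat, z):
    """Response map for search patch z and the location of its maximum."""
    response = np.real(np.fft.ifft2(np.fft.fft2(z) * w_hat))
    peak = np.unravel_index(np.argmax(response), response.shape)
    return response, (int(peak[0]), int(peak[1]))

# Toy check: a cyclically shifted copy of the training patch moves the peak.
rng = np.random.default_rng(0)
x = rng.standard_normal((32, 32))
w_hat = train_filter(x, gaussian_target(x.shape))
z = np.roll(x, shift=(3, 5), axis=(0, 1))
_, peak = detect(w_hat, z)
print(peak)
```

In this toy setting the response peak follows the cyclic displacement of the patch, which is how the tracker reads off the new target position.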

3.2. Context Selection Strategy for Tracking

Context information of the tracking target has a significant effect on overall performance. For instance, in the scenery with lots of background clutter, selecting optimal context patches is very important for successful tracking. Mueller et al. [

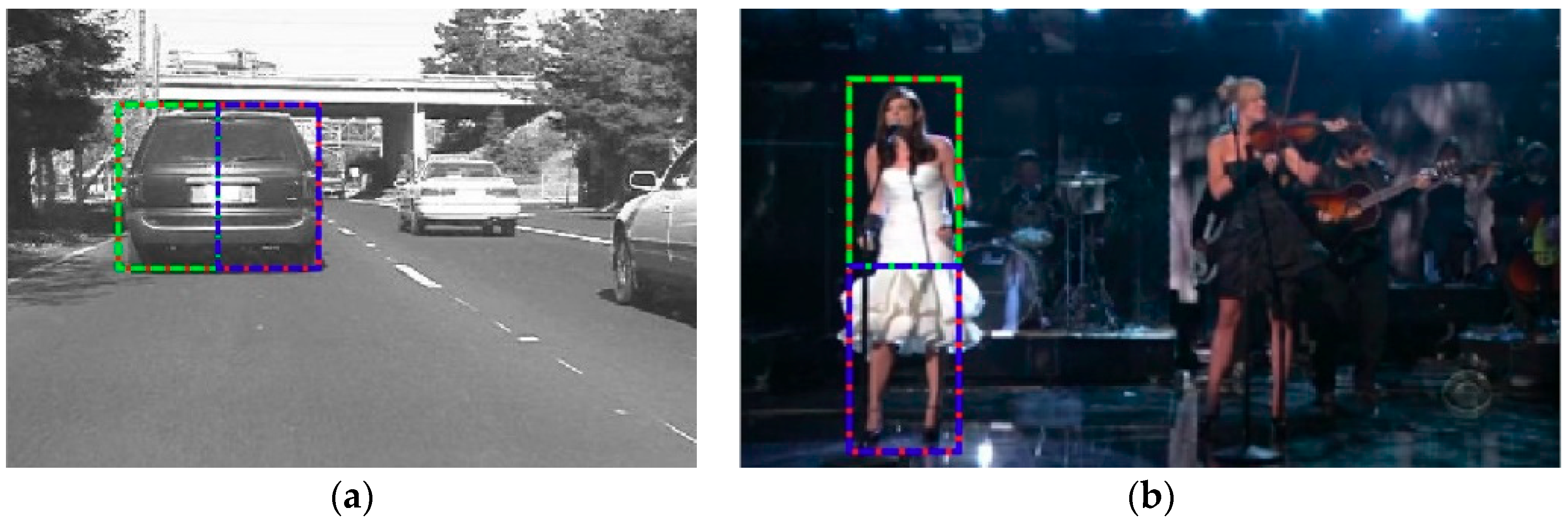

20] introduced a method based on a context-aware framework to add contextual information to the filter and got competitive results in some tracking challenges. However, the conventional CA tracker [

20] simply samples context patches on fixed locations around the target, which causes the problem that these selected negative samples are perhaps non-representative (see

Figure 2). The ideal strategy of selecting context patches is that context patches are sampled at locations where the filter response is high and spatially far from the maximum.

In this paper, we propose a novel context selection strategy to obtain negative samples. Firstly, we divide the surroundings of the tracked object into four areas, each twice the size of the target. Then, the patch with the maximum response value in each area is selected as a context patch for collecting negative samples (as shown in Figure 3).

The method has two advantages. Firstly, the strategy of the conventional CA tracker may yield meaningless negative samples. By contrast, the negative patches sampled by our strategy are more similar to the target; in other words, these context patches are more likely to become background distractors in subsequent frames. Secondly, our method avoids the redundancy caused by sampling the top-response patches directly from the whole neighboring region of the target.
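The selection rule described above can be sketched as follows. The exact geometry of the four areas is given only in the figure, so this sketch assumes a hypothetical layout: the search window is split into four quadrants around the target center, and the target region itself is masked out. The function name and the `(top, left, height, width)` box format are likewise illustrative.

```python
import numpy as np

def select_context_patches(response, target_box):
    """Return one (row, col) location per block: the maximum response in that block.

    response   : 2-D response map over the search window
    target_box : (top, left, height, width) of the target inside the window
    Assumes the target lies strictly inside the window, so no block is empty.
    """
    top, left, h, w = target_box
    masked = response.astype(float).copy()
    # Exclude the target itself so that only background locations compete.
    masked[top:top + h, left:left + w] = -np.inf
    cy, cx = top + h // 2, left + w // 2
    # Assumed layout: four quadrants around the target center.
    blocks = [
        (slice(0, cy), slice(0, cx)),        # top-left
        (slice(0, cy), slice(cx, None)),     # top-right
        (slice(cy, None), slice(0, cx)),     # bottom-left
        (slice(cy, None), slice(cx, None)),  # bottom-right
    ]
    centers = []
    for rows, cols in blocks:
        sub = masked[rows, cols]
        r, c = np.unravel_index(np.argmax(sub), sub.shape)
        centers.append((rows.start + int(r), cols.start + int(c)))
    return centers

# Toy response map with one distractor per quadrant and a strong target peak.
response = np.zeros((40, 40))
response[20, 20] = 10.0   # target peak, masked out by the function
response[5, 7] = 1.0
response[3, 30] = 2.0
response[33, 4] = 3.0
response[35, 36] = 4.0
print(select_context_patches(response, (15, 15, 10, 10)))
```

Because exactly one location is taken from each block, the four context patches cannot all cluster on one side of the target, which is the redundancy the strategy is meant to avoid.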

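Next, we learn the correlation filter using the target samples and the negative samples, following CA [20]. Our goal is to train a filter that has a high response on the target patch and a response close to zero on the context patches. The regression function that adds context patches can be written as Equation (6):

$$\min_{\mathbf{w}} \; \|A_0\mathbf{w} - \mathbf{y}\|_2^2 + \lambda_1\|\mathbf{w}\|_2^2 + \lambda_2 \sum_{i=1}^{k} \|A_i\mathbf{w}\|_2^2, \qquad (6)$$

where $A_0$ and $A_i$ are the circulant matrices of the target and context patches, respectively, $k$ is the number of context patches, and $\lambda_1$ is the ridge regularization parameter. The parameter $\lambda_2$ controls how strongly the context patches are regressed to zero.

We can stack the context image patches under the target patch to form a new data matrix $B$, and concatenate $\mathbf{y}$ with zeros to form a new regression target $\bar{\mathbf{y}}$. Equation (6) can then be rewritten as

$$\min_{\mathbf{w}} \; \|B\mathbf{w} - \bar{\mathbf{y}}\|_2^2 + \lambda_1\|\mathbf{w}\|_2^2, \qquad (7)$$

where $B = [A_0^{T}, \sqrt{\lambda_2}A_1^{T}, \ldots, \sqrt{\lambda_2}A_k^{T}]^{T}$ and $\bar{\mathbf{y}} = [\mathbf{y}^{T}, \mathbf{0}^{T}, \ldots, \mathbf{0}^{T}]^{T}$.

Because Equation (7) is convex, it can be minimized by setting the gradient to zero, which gives the closed-form solution

$$\mathbf{w} = (B^{T}B + \lambda_1 I)^{-1} B^{T}\bar{\mathbf{y}}.$$

Applying Equation (3), the closed-form solution in the Fourier domain is

$$\hat{\mathbf{w}} = \frac{\hat{\mathbf{a}}_0^{*} \odot \hat{\mathbf{y}}}{\hat{\mathbf{a}}_0^{*} \odot \hat{\mathbf{a}}_0 + \lambda_1 + \lambda_2 \sum_{i=1}^{k} \hat{\mathbf{a}}_i^{*} \odot \hat{\mathbf{a}}_i},$$

where $\hat{\mathbf{a}}_i$ denotes the DFT of the base sample of $A_i$.

The detection formula is exactly the same as the standard formulation in Equation (5).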
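A minimal sketch of context-aware training in the Fourier domain is given below, following the closed-form single-channel solution of the CA framework [20]. The function names are illustrative; `lam2=0.5` is the value reported for Staple_SCA in Section 4.1, whereas `lam1=1e-4` is an assumed placeholder.

```python
import numpy as np

def train_context_aware_filter(target, contexts, y, lam1=1e-4, lam2=0.5):
    """Filter that regresses the target patch to y and every context patch to zero.

    lam1 is an assumed ridge value; lam2 weights the context (negative) patches.
    """
    a0_hat = np.fft.fft2(target)
    y_hat = np.fft.fft2(y)
    # Spectral energy of the context patches, added to the denominator.
    context_energy = np.zeros(a0_hat.shape)
    for patch in contexts:
        context_energy += np.abs(np.fft.fft2(patch)) ** 2
    denom = np.abs(a0_hat) ** 2 + lam1 + lam2 * context_energy
    return np.conj(a0_hat) * y_hat / denom

def respond(w_hat, z):
    """Response map of the filter on patch z."""
    return np.real(np.fft.ifft2(np.fft.fft2(z) * w_hat))

# Toy comparison: the context-aware filter responds less to a known distractor.
rng = np.random.default_rng(1)
target = rng.standard_normal((16, 16))
distractor = rng.standard_normal((16, 16))
y = np.zeros((16, 16))
y[0, 0] = 1.0  # sharp regression target for the toy example
plain = train_context_aware_filter(target, [], y)
aware = train_context_aware_filter(target, [distractor], y)
print(np.sum(respond(plain, distractor) ** 2), np.sum(respond(aware, distractor) ** 2))
```

With an empty context list the function reduces to the standard ridge-regression filter, so the only change relative to the baseline is the extra energy term in the denominator.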

3.3. Structural Correlation Filter

In visual tracking, partial occlusion is one of the major challenges limiting tracker performance. A correlation filter based on a single holistic appearance model is sensitive to partial occlusion, which may reduce overall performance and even lead to tracking failure. To address this defect, we combine the correlation filters of the holistic target and its local parts to preserve the inner spatial structure of the target. We divide the target into two local parts using one of two spatial layouts, one for horizontally aligned and one for vertically aligned objects, chosen according to the ratio of the height to the width of the target. The scale and positions of the local parts are strictly constrained, as illustrated in Figure 4.

In most situations, the relative movement between the holistic target and the local parts is limited, so we can combine all confidence maps to obtain a robust correlation filter. How the confidence maps of the parts are combined is critical. If we simply sum the confidence maps with equal weights, falsely tracked parts may be unexpectedly highlighted. Intuitively, the responses of reliable parts should be given larger weights, while parts whose detection has failed should receive smaller weights. The Peak to Sidelobe Ratio (PSR) is a suitable indicator of filter reliability: it measures the sharpness of the correlation peak, and a higher PSR score means a more confident detection. Hence, we adopt the PSR to define the weight of every part.

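The PSR is defined as:

$$\mathrm{PSR}_i = \frac{\max(R_i) - \mu_i}{\sigma_i},$$

where $R_i$ is the i-th confidence map, and $\mu_i$ and $\sigma_i$ are the mean and the standard deviation of the i-th confidence map, respectively.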

The final confidence map can be defined as:

where

denotes the holistic part,

denote local parts.
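The PSR-based weighting can be sketched as follows. The PSR follows the description above (peak minus mean, divided by the standard deviation of the confidence map). The normalization of the weights so that they sum to one is an assumption made for this sketch, and the component maps are assumed to be already aligned to a common coordinate frame and of equal size.

```python
import numpy as np

def psr(confidence_map, eps=1e-12):
    """Peak to Sidelobe Ratio: sharpness of the correlation peak."""
    peak = confidence_map.max()
    return (peak - confidence_map.mean()) / (confidence_map.std() + eps)

def fuse_confidence_maps(maps, eps=1e-12):
    """Weighted sum of holistic and local confidence maps.

    Weights are proportional to each map's PSR (assumed normalization).
    """
    scores = np.array([psr(m) for m in maps])
    weights = scores / (scores.sum() + eps)
    fused = sum(w * m for w, m in zip(weights, maps))
    return fused, weights

# Toy example: a sharp, reliable map and a noisy, unreliable one.
rng = np.random.default_rng(2)
sharp = np.zeros((21, 21))
sharp[10, 10] = 1.0
noisy = rng.random((21, 21))
fused, weights = fuse_confidence_maps([sharp, noisy])
print(weights)
```

The sharp map receives most of the weight, so an occluded part with a flat or noisy response contributes little to the fused map.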

3.4. Updating Strategy

During tracking, the appearance of the object changes due to many factors, such as deformation and rotation. Furthermore, the target may be occluded by other objects. A conventional CF tracker, such as KCF, uses a fixed learning rate to update the model. If the target is occluded or the tracker drifts, the correlation filter will be contaminated. To tackle this problem, we propose an adaptive updating scheme that uses a fluctuation parameter.

While the object is deforming or being occluded, we want to gradually reduce the learning rate to avoid absorbing corrupted information into the model. When the target is recovering, the learning rate should gradually increase to adapt to the change of the model. The deviation of the PSR between two consecutive frames describes the degree to which the appearance change is easing or worsening. Based on this motivation, we propose a fluctuation parameter that adaptively adjusts the updating rate for different frames. Moreover, when the PSR score drops to a threshold, this indicates that the object is heavily occluded or that tracking has failed. Therefore, the correlation filter should not be updated when the PSR score is below the threshold.

For both holistic and local components, the fluctuation parameter of the t-th frame can be computed as below:

where the

denotes the PSR threshold.

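Finally, if the PSR score of a component is not below the threshold, the component is updated individually with its own learning rate, which is obtained by adjusting the initial learning rate with the fluctuation parameter; otherwise, updating of its model is prohibited.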
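The gating and rate adaptation described above can be sketched as follows. The exact formula of the fluctuation parameter is not reproduced here, so this sketch substitutes a hypothetical form, the ratio of the current to the previous PSR clipped to [0, 1]; only the threshold gate and the idea of scaling the initial learning rate are taken from the text. The threshold of 7 and the initial rate of 0.015 are the values reported in Section 4.1.

```python
import numpy as np

def adaptive_learning_rate(psr_prev, psr_curr, eta0, threshold=7.0):
    """Learning rate for one component (holistic or local) in the current frame.

    Returns 0 when the PSR is below the threshold (no update).
    The fluctuation form below is a hypothetical stand-in, not the paper's formula.
    """
    if psr_curr < threshold:
        return 0.0
    # Assumed fluctuation: below 1 while the PSR is falling, capped at 1 while it recovers.
    fluctuation = float(np.clip(psr_curr / max(psr_prev, 1e-12), 0.0, 1.0))
    return eta0 * fluctuation

def update_model(model, new_model, rate):
    """Standard linear interpolation update used by CF trackers."""
    return (1.0 - rate) * model + rate * new_model

# A falling PSR lowers the rate; a PSR below the threshold freezes the model.
print(adaptive_learning_rate(30.0, 15.0, eta0=0.015))
print(adaptive_learning_rate(30.0, 5.0, eta0=0.015))
```

Each component calls this independently, so an occluded local part can stop updating while the holistic model and the other part continue to adapt.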

4. Experiments

In this section, we introduce the implementation details and parameters, and the experimental methodology. Moreover, to assess the performance of the proposed adaptive context-aware and structural correlation filter, we evaluate our method on the OTB-100 benchmark [8] and VOT2016, comparing it with several recent state-of-the-art trackers.

4.1. Detail and Parameters

We implement our proposed method on top of the Staple and SAMF trackers and name the results Staple_SCA and SAMF_SCA, respectively. The Staple_SCA tracker integrates HoG [49] and color histogram features, and its scale scheme is based on DSST, as in Staple; see [18] for more information. Similar to the SAMF tracker [16], SAMF_SCA copes with scale variation using a scaling pool

, and

. SAMF_SCA employs HoG and Color-naming [33] features, using a cell size of

and the number of bins is 9. We also increase the padding window from 2.5 times the target size to 3 times, owing to the increased robustness provided by the context patches. The number of context patches

is set to 4. The regularization factor

is set to

. We set the other regularization factor to {0.5, 0.4} and the initial learning rate to {0.015, 0.005} for Staple_SCA and SAMF_SCA, respectively.

For our proposed method, the PSR score of the filter generally ranges between 16 and 34 under normal tracking conditions. When the PSR score drops to around 7, the object is heavily occluded or tracking has failed. Thus, the PSR threshold is set to 7.

The proposed method is implemented in MATLAB R2014a. All experiments are conducted on a PC with an Intel Xeon(R) E3-1226 V3 CPU (3.30 GHz) and 32 GB RAM. The Staple_SCA and SAMF_SCA trackers run at 24.9 fps and 10.6 fps, respectively, which is still within the real-time range.

4.2. Experimental Methodology

We select two quantitative evaluation metrics from the object tracking benchmark [7,8]: precision and success. Precision is based on the center location error between the tracked bounding box and the ground-truth bounding box. In the precision plot, the center location error threshold in pixels varies along the x-axis, and the y-axis represents the percentage of frames whose error is within each threshold. The threshold is set to 20 pixels [8] for ranking trackers. Success is based on the intersection-over-union (IOU) of the tracked bounding box and the labeled ground truth. In the success plot, the IOU overlap threshold varies along the x-axis, and the y-axis represents the percentage of frames whose overlap exceeds each threshold. The final ranking is determined by the area under the curve (AUC), computed as the mean of the success rates at the sampled overlap thresholds from 0 to 1.
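The two metrics can be computed as in the sketch below. Boxes are assumed to be stored as `(x, y, width, height)` rows, and the 21 evenly spaced overlap thresholds are an assumption about how the curve is sampled; the function names are illustrative rather than taken from the benchmark toolkit.

```python
import numpy as np

def center_error(pred, gt):
    """Euclidean distance between box centers, one value per frame."""
    pred_center = pred[:, :2] + pred[:, 2:] / 2.0
    gt_center = gt[:, :2] + gt[:, 2:] / 2.0
    return np.linalg.norm(pred_center - gt_center, axis=1)

def iou(pred, gt):
    """Intersection-over-union of axis-aligned boxes, one value per frame."""
    x1 = np.maximum(pred[:, 0], gt[:, 0])
    y1 = np.maximum(pred[:, 1], gt[:, 1])
    x2 = np.minimum(pred[:, 0] + pred[:, 2], gt[:, 0] + gt[:, 2])
    y2 = np.minimum(pred[:, 1] + pred[:, 3], gt[:, 1] + gt[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    union = pred[:, 2] * pred[:, 3] + gt[:, 2] * gt[:, 3] - inter
    return inter / union

def precision(pred, gt, threshold=20.0):
    """Fraction of frames whose center error is within the pixel threshold."""
    return float(np.mean(center_error(pred, gt) <= threshold))

def success_auc(pred, gt, thresholds=np.linspace(0.0, 1.0, 21)):
    """Mean success rate over the sampled overlap thresholds (area under the curve)."""
    overlaps = iou(pred, gt)
    rates = [np.mean(overlaps > t) for t in thresholds]
    return float(np.mean(rates))

# Two frames: one tracked perfectly, one lost completely.
gt = np.array([[10.0, 10.0, 20.0, 20.0], [50.0, 50.0, 20.0, 20.0]])
pred = gt.copy()
pred[1, :2] += 30.0
print(precision(pred, gt), success_auc(pred, gt))
```

Precision reports a single point on its curve (20 pixels), whereas the success score averages over all overlap thresholds, which is why the two rankings can differ.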

4.3. Overall Performances

To indicate the performance improvements of our approach, we compare the Staple_SCA and SAMF_SCA trackers with five recent state-of-the-art trackers, Staple_CA [20], SAMF_CA [20], SRDCF [19], DSST [17] and KCF [15], on the OTB-100 dataset. Figure 5 shows the precision and success plots of the proposed trackers and the other methods.

It is apparent from the success and precision plots in Figure 5 that our proposed trackers have better overall performance than the other state-of-the-art trackers. As shown in Figure 5, the success rate and precision rate of the Staple_SCA tracker are 61.6% and 83.7%, respectively, which demonstrates the strong performance of the proposed method in the visual tracking challenge. Compared with the KCF tracker [15], the proposed tracker achieves improvements of 29.1% and 20.4% in success rate and precision rate, respectively. Furthermore, compared with the conventional context-aware tracker Staple_CA [20], the proposed tracker is better by 3% and 3.3% in success rate and precision rate, respectively.

For qualitative evaluation, we compare our proposed trackers with the five state-of-the-art trackers in several challenging situations, such as occlusion, background clutter, fast motion, deformation and motion blur. Figure 6 shows that the proposed trackers achieve favorable performance under different challenging scenarios. Specifically, in the Panda sequence (shown in Figure 6e), our trackers are the only two that complete the tracking task successfully. However, we also observe that our trackers, like the other trackers, fail on the Bird1 sequence. This failure is caused by long-term occlusion: it is hard for trackers to recover a long-missing target within a limited search window.

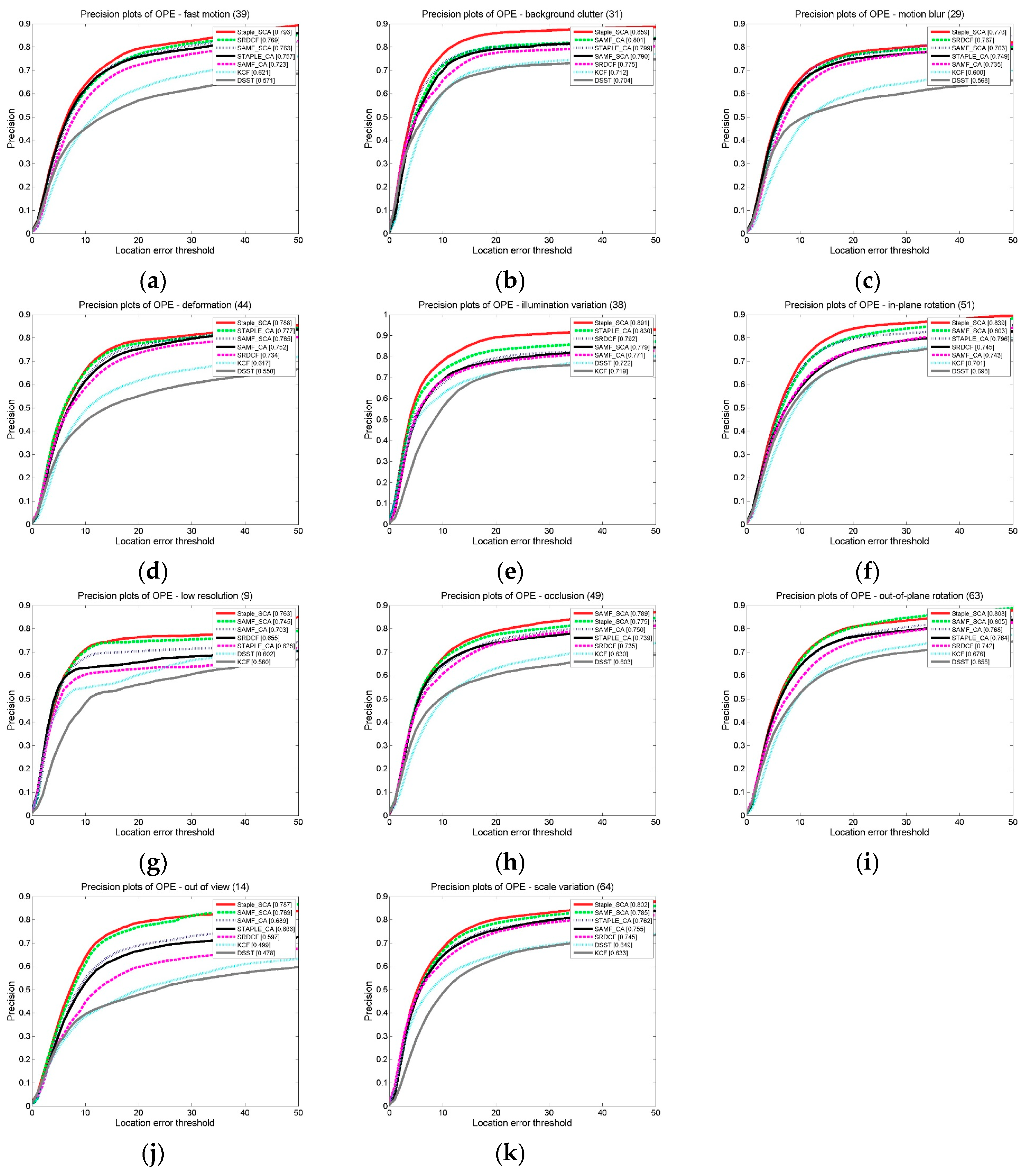

For attribute-based analysis, we evaluate the overall performance of the seven competing trackers under the 11 sub-categories tagged in OTB-100. Figure 7 shows the precision plots for the different sub-categories on the OTB-100 dataset for the seven competing trackers. Our proposed Staple_SCA tracker ranks first in ten of the 11 sub-categories and second in the remaining one, where first place goes to our SAMF_SCA tracker. For the background clutter attribute (Figure 7b), trackers using the context-aware framework outperform the other trackers. This demonstrates that incorporating context information into the filter can mitigate the drifting caused by background clutter, and that our proposed context selection strategy is more effective than the conventional method. Furthermore, the proposed tracker clearly exceeds the other trackers on the occlusion attribute, which suggests that the proposed structural correlation filter produces the desired effect under occlusion.

Furthermore, to compare our method comprehensively with the conventional CA method, we apply it to the Staple, SAMF, DCF and MOSSE trackers, as in [20]. Figure 8 shows the comparison. Compared with Staple_CA, SAMF_CA, DCF_CA and MOSSE_CA, our method improves the success plots by 3%, 4.9%, 3.7% and 1.6%, respectively, and the precision plots by 3.3%, 3.5%, 2.7% and 3.2%, respectively.

Unlike the conventional CA method, we divide the target neighborhood evenly into four blocks and select the maximum-response patch from each block, instead of sampling at fixed locations. The more representative negative samples enhance the discriminative power of the filter. Moreover, the structure that integrates the holistic and local models, and the updating scheme based on the inter-frame PSR deviation, further improve the performance of trackers built on our method.

4.4. Evaluation on VOT2016

To show the generality of our method, we evaluate it on the VOT2016 [10] dataset. VOT2016 includes 60 challenging video sequences in which background clutter is severe and occlusion is common. The overall performance of a tracker is evaluated by the expected average overlap (EAO), which combines the raw values of per-frame accuracies and failures in a principled manner and has a clear practical interpretation. We compare our Staple_SCA tracker with five baseline trackers (Staple, SRDCF, KCF, SAMF, DSST) on VOT2016. Figure 9 shows the result. Our Staple_SCA tracker with an EAO of 0.298 is superior to other trackers