Having a Smarter City through Digital Urban Interfaces: An Evaluation Method

Abstract

Featured Application

1. Introduction

2. Smart Cities and Urban Interfaces

2.1. Smart Cities

2.2. Urban Interfaces

3. Objectives and Hypothesis

3.1. Hypothesis

3.2. Objectives

- Develop specific guidelines to evaluate user experience in the urban interface domain;

- Create an open web application that helps to evaluate the user experience criteria for an urban interface;

- Plan and perform a field study that covers different types of urban interfaces using qualitative research methods with users from four cities in two countries;

- Document the results obtained in the field study, reviewing shared problems and challenges that support the general objective and hypothesis;

- Discuss the application of the proposed guidelines and identify areas of opportunity for future research and improvements.

4. Literature Review

4.1. Guidelines and Usability Heuristics

4.2. User Research and Evaluation of Urban Interface Usability

5. Methodology

- Defining usability guidelines exclusively for urban interfaces. In this step a series of 14 specific guidelines were defined that are based on the literature review mentioned above. The specific features of urban interfaces were also taken into account, including physical location, use schedule, and type of person or citizen using them, amongst others.

- Selecting the urban interfaces that will be evaluated. A total of eight interfaces were selected—from government and private organizations—each serving different purposes and situated in different physical locations. The interfaces are located in the following cities: Mexico City and Monterrey in Mexico, and Cali and Bogotá in Colombia.

- Performing a field study of each interface. To review the guidelines in action, a field study was conducted using qualitative research based on observations [24,36] of different types of users. Researchers observed individuals, as shown in Figure 1, as they used the eight selected interfaces. During each observation, researchers formally documented how each of the 14 guidelines influenced the experience of each user.

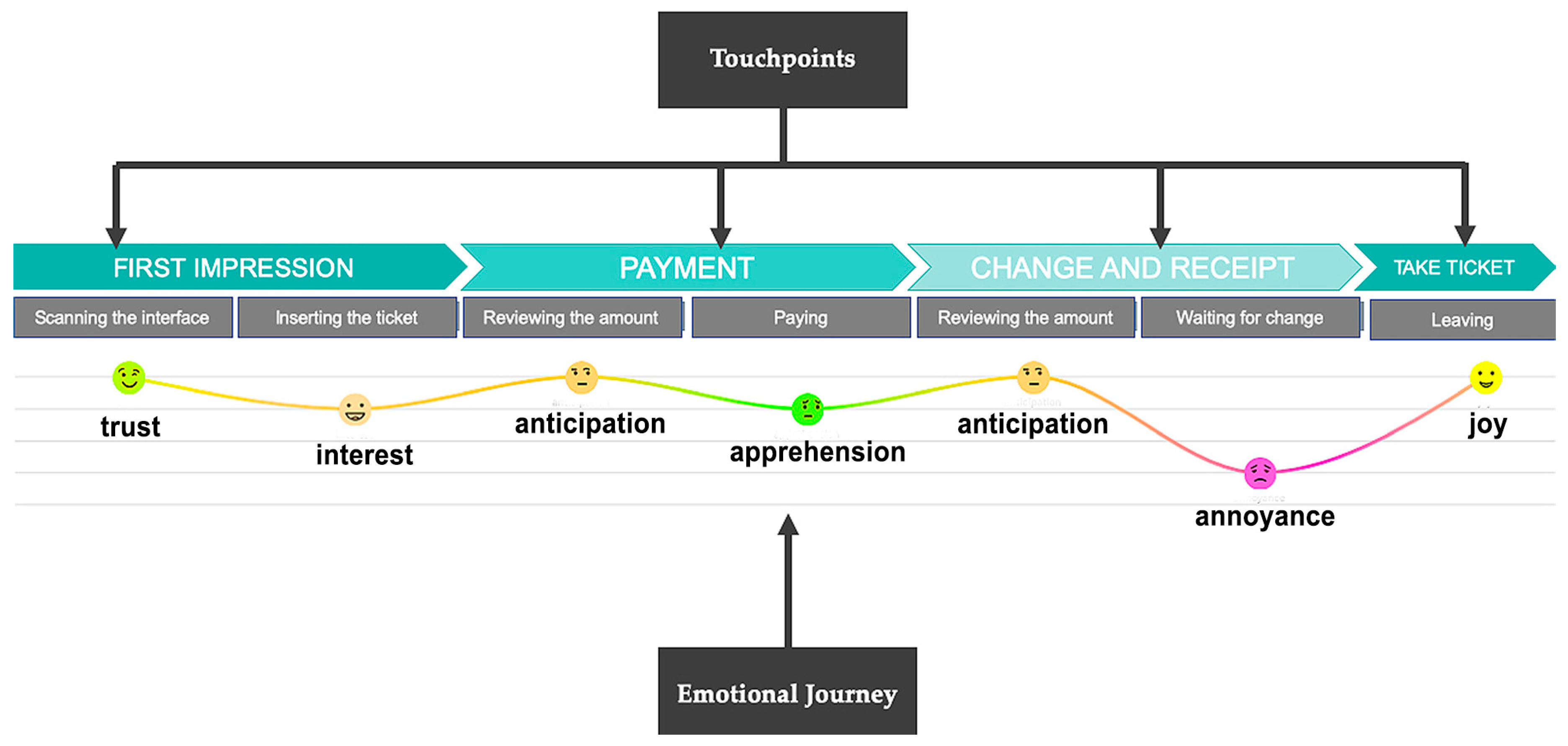

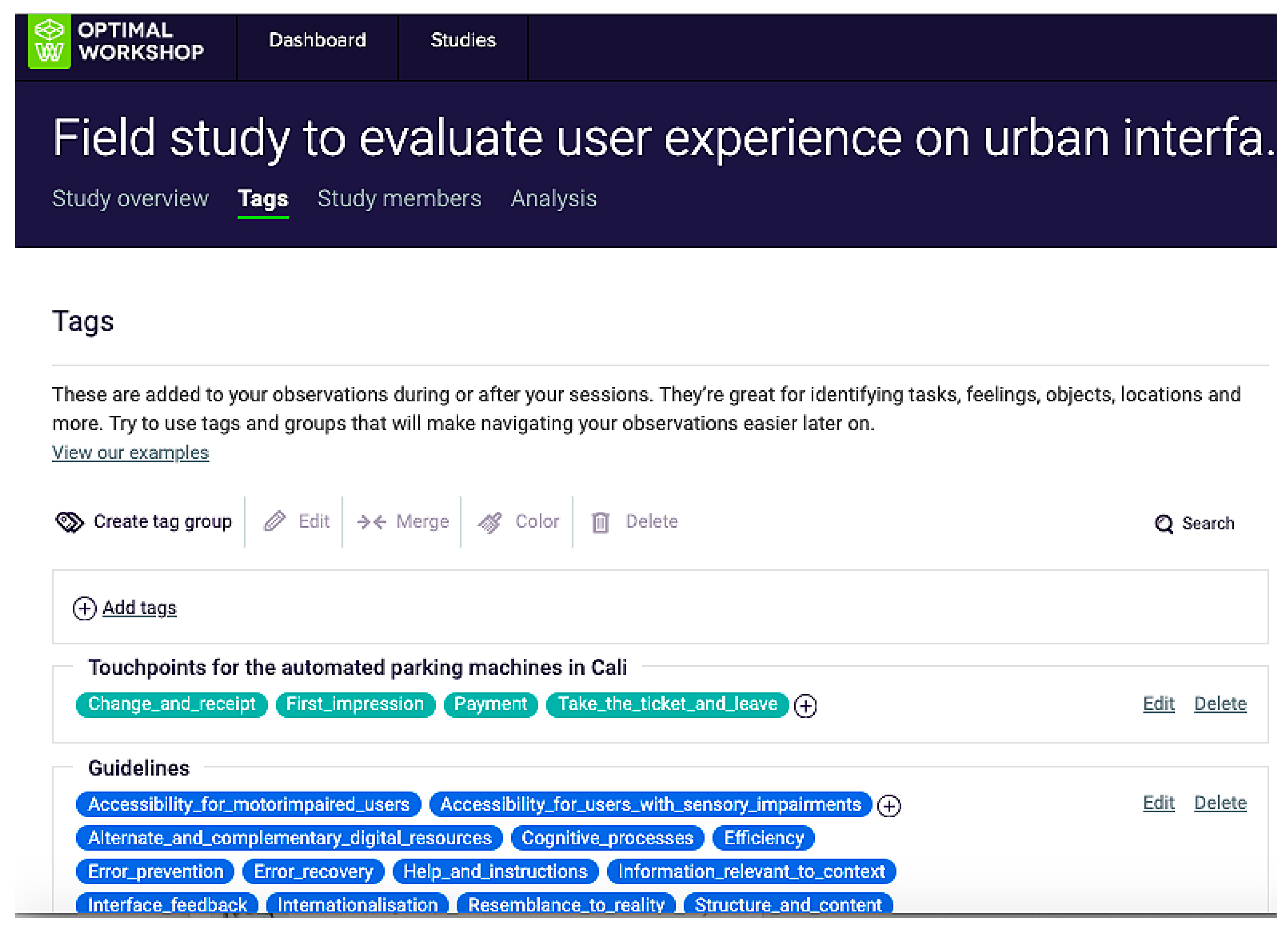

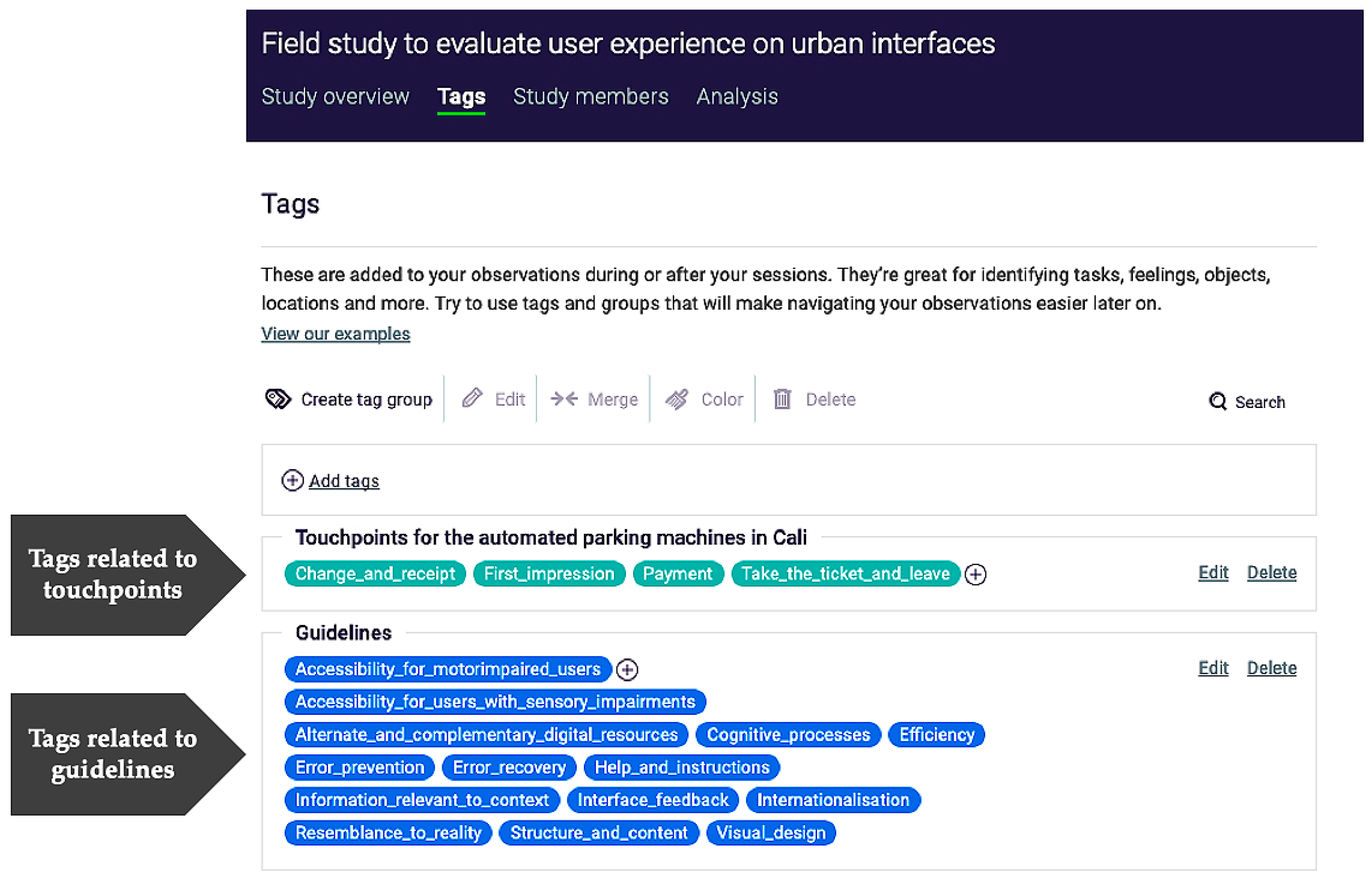

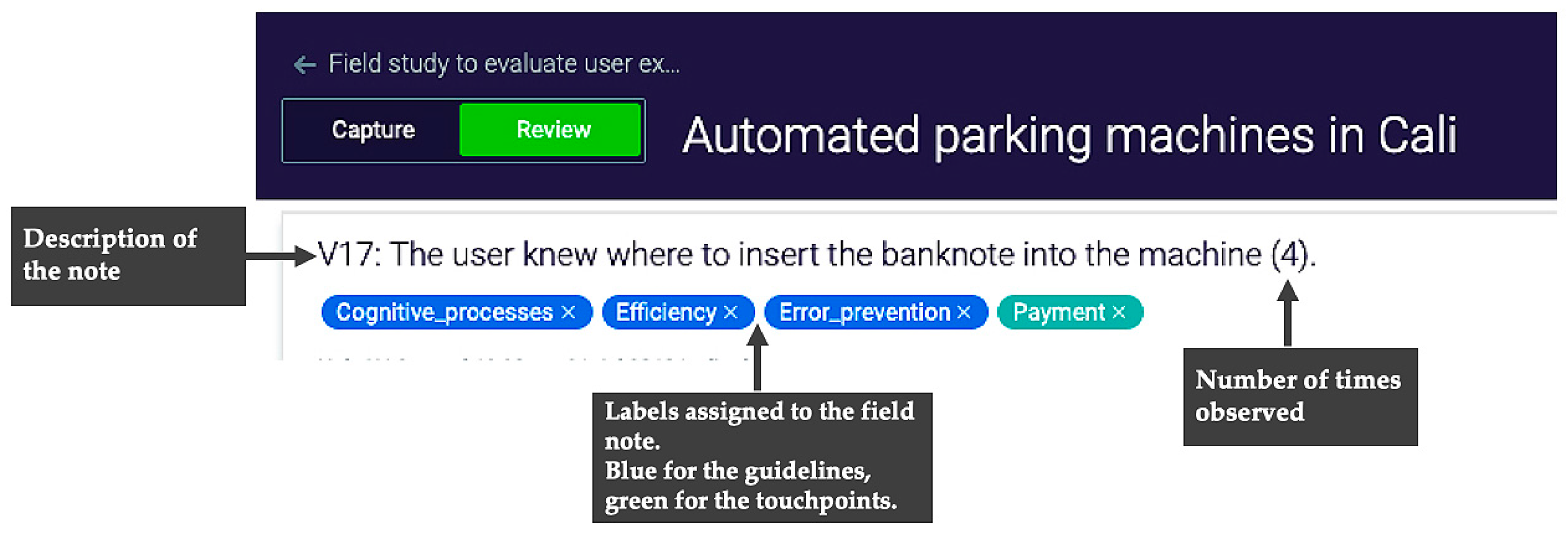

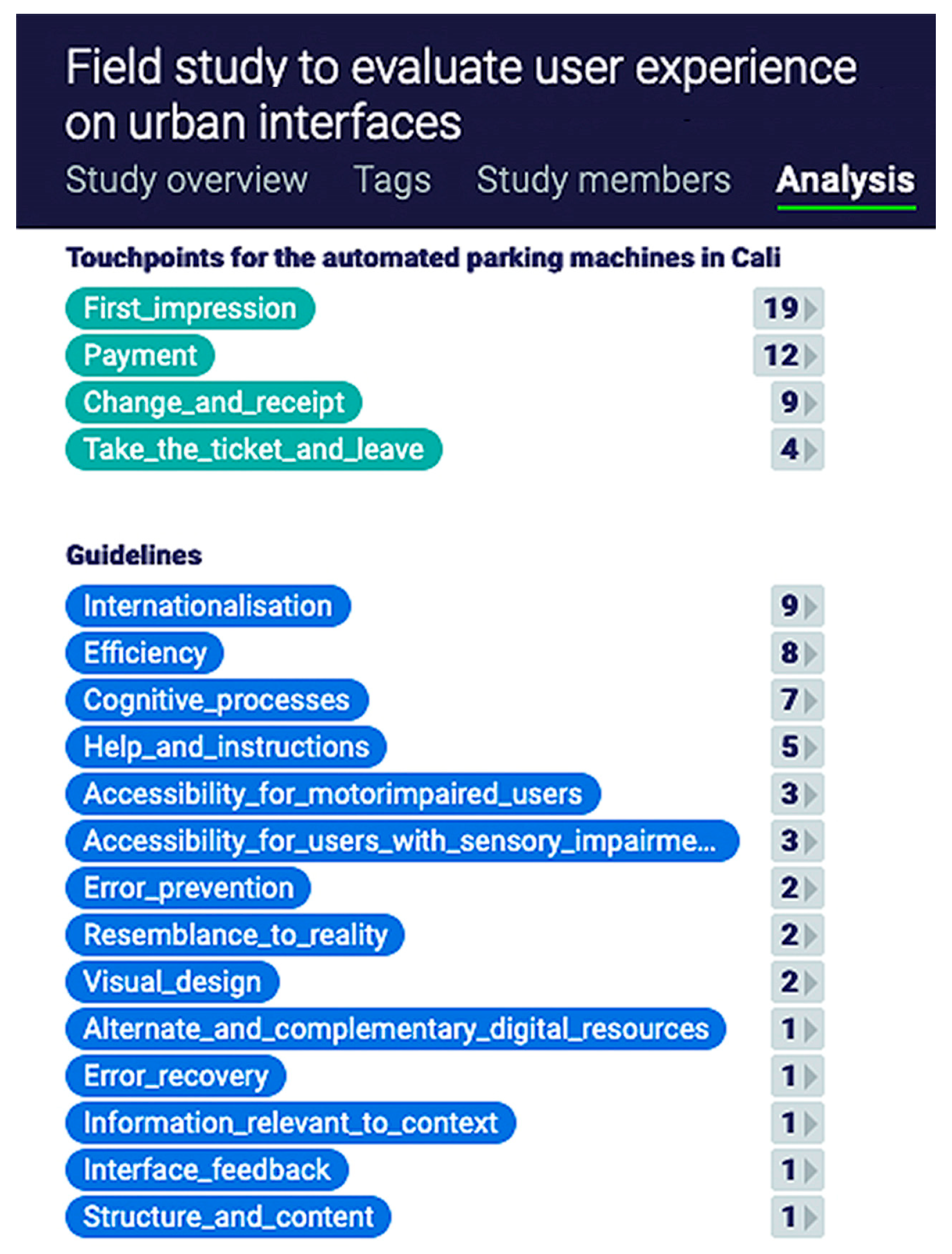

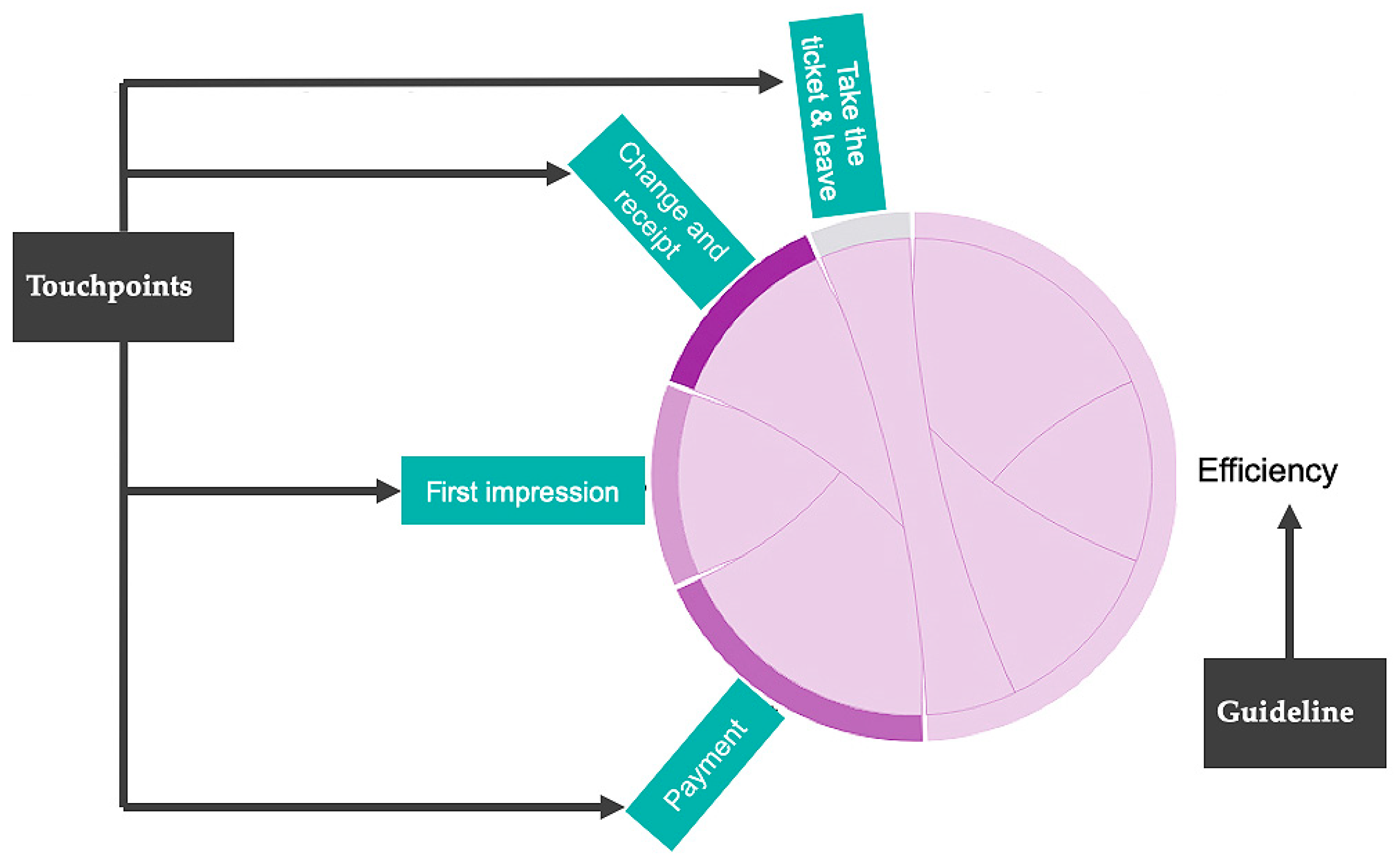

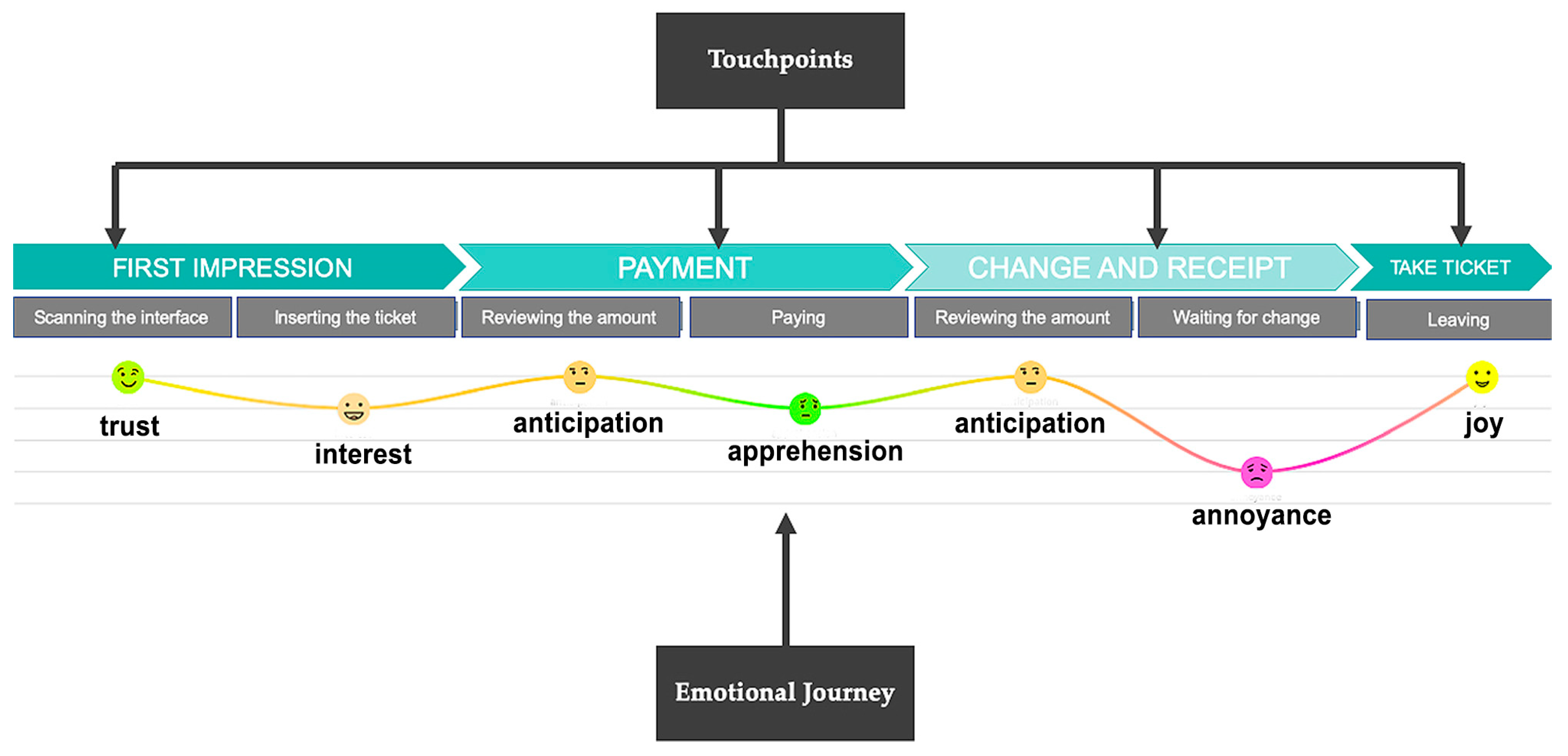

- Gathering and analyzing results on the use of each interface. To evaluate user experiences, both qualitative and quantitative data were collected. For the qualitative data, researchers documented the aspects of the urban interface that had a negative impact, those that had a positive impact or rating, and any comments offered by the users themselves. The data were then recorded in Reframer, a specialized cloud-based tool for logging field study notes and observations; once notes are logged, the tool allows users to analyze them to detect patterns and recurring themes (https://www.optimalworkshop.com/reframer) [51]. In addition, a customer experience map [52], as shown in Figure 2, was used to map the emotional journey of the user at each touchpoint, that is, each moment in which a person has contact with an interface. This visual map helps to show where the experience was better or worse, and which guidelines were followed and which were not.
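The observation notes and the experience map described in the steps above could be represented with a small data structure. The following is only a minimal sketch: the `Touchpoint` class, the emotion scale from -2 to +2, and the guideline numbers in the example data are illustrative assumptions, not the format used by Reframer or by the study.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Touchpoint:
    """One moment of contact between a person and an interface (hypothetical schema)."""
    name: str
    emotion: int  # assumed scale: -2 (very negative) to +2 (very positive)
    followed: set[int] = field(default_factory=set)  # guideline numbers observed as met
    violated: set[int] = field(default_factory=set)  # guideline numbers observed as not met
    comment: str = ""


def worst_touchpoint(journey: list[Touchpoint]) -> Touchpoint:
    """Return the touchpoint with the lowest emotion score (the first one on ties)."""
    return min(journey, key=lambda t: t.emotion)


def violation_counts(journey: list[Touchpoint]) -> Counter:
    """Count how many touchpoints violated each guideline."""
    counts: Counter = Counter()
    for touchpoint in journey:
        counts.update(touchpoint.violated)
    return counts


# Invented example data for a single observed session.
journey = [
    Touchpoint("find the kiosk", 0, followed={1}),
    Touchpoint("select destination", -1, violated={3, 7}),
    Touchpoint("pay", -2, violated={7, 14}, comment="card rejected, no explanation"),
    Touchpoint("collect ticket", 1, followed={5}),
]

print(worst_touchpoint(journey).name)
print(violation_counts(journey).most_common(1))
```

Keeping the followed and violated guidelines on each touchpoint makes it possible to read the map in both directions: where the experience dropped, and which guidelines were involved at that point.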

6. User Experience Guidelines for Designing Urban Interfaces

6.1. Process for Defining Guidelines

6.2. Description of Guidelines for Evaluating Urban Interfaces

- Does a person have the opportunity to skip a step to complete the task?

- Is he/she able to complete the task without making a mistake?

- Is he/she able to go one step backwards or all the way back to the beginning?

- Does a person obtain all the information from only one place?

- Does a person have to enter only the minimum amount of data?

- Does a person have to find information on how to perform a specific step?

- Is there a way to contact someone in case a mistake is made?

- Does he/she know exactly what to do to reach his/her goal?

- Once a person has completed the task, can he/she remember the steps required to complete it?

- Does he/she make mistakes when he/she enters the information?

- Does he/she understand what he/she will find based on the labelling?

- Can a person access the content in a simple and easy manner?

- Is there a search engine tool?

- Are any of the instructions ambiguous?

- Is the information grouped in such a way that anybody can comprehend how to complete the form and interpret the results?

- Are there other ways to find the same information?

- Did the individual have previous knowledge of the elements included in the interface before he/she interacted with them?

- Does the interface show relevant information?

- Does the interface force the user to memorize the previous steps?

- Is the information clear and concise?

- Does the interface allow users to choose options that are not valid?

- Did a person have to consult the same information more than once?

- If a mistake was made, was the person able to recognize what caused it?

- Do error messages allow a person to identify what went wrong?

- Do error messages allow him/her to discern how to recover from and correct the error?

- Did he/she perform an action more than once or did he/she use an element of the interface that wasn’t required?

- Does he/she know the percentage of progress made regarding task completion?

- Was it possible to find the desired option without making a mistake?

- Is a person familiar with the remaining steps needed to complete the task?

- Does the interface inform a person that an action is being performed (e.g., recording, consulting, or processing information)?

- Does the interface give the impression of being user friendly? This includes not only the screen but also the hardware’s appearance.

- Is the information difficult to remember? Does a person need to obtain these data from other sources?

- Does a user find distractors that hinder the process of completing the task?

- Does he/she feel safe when using the interface?

- Does the user feel satisfied after completing the task?

- Is the content available in relevant languages?

- Is the text translated properly?

- Are there options available in the original language to switch to a foreign language?

- Is it possible to choose the desired alphabet?

- Are special characters (accents, ñ, etc.) properly used in the text?

- Are formats (date, currency, units of measurement, names, addresses, etc.) adapted to the country?

- Does the interface use colors that correspond to the country’s cultural background and avoid those that do not?

- Are the images and symbols that have been selected appropriate for the local cultural context?

- Is the same visual treatment used in all elements throughout the entire interface?

- When a large number of elements are used: Are they similar to each other? Can they be mentally grouped together?

- Is the same structural grid used throughout the interface and across different devices?

- Does it use a suitable color palette with contrasting colors?

- Is suitable symbology used to help identify the size relationship between objects?

- Is it possible to activate and use the keyboard or screen only with a light touch?

- Are the shapes, texture and spacing between the keys adequate, so that fingers do not accidentally slip off keys or accidentally press two or more at the same time?

- Is it easy to access from a wheelchair?

- Is the height of the machine suitable, according to the country’s standards?

- Is it possible to access elements using only one hand without adopting awkward or uncomfortable postures?

- Is there an option of listening to audio using a clearly marked headphone jack?

- Does the interface work for everyone and every task that they attempt to perform?

- Does the interface present information in formats that people living with disabilities can recognize?

- Is there a way to tailor preferences to the individual user?

- Can a user select one or multiple information output channels to complement the interface process (e.g., receiving an email or SMS containing ticket details, or topping up a card via a mobile device)?

- Can a user select one or multiple information input channels (e.g., using a printed ticket, using a card with a bar code, scanning a code)?

- Is it possible to interact using a digital mobile device or other type of digital device?

- When necessary, is it possible to pay using methods other than cash (debit or credit cards, electronic money transfers, etc.)?
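Because each question above can be answered with yes or no, a checklist of this kind lends itself to simple tallying, for example in an evaluation tool such as the web application named in the objectives. The sketch below is an illustration under stated assumptions, not the authors' scoring method: the `Question` type, its `desired` field, and the fraction-based score are all hypothetical. It also makes explicit that some questions are phrased so that "no" is the favourable answer (for instance, "Are any of the instructions ambiguous?").

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Question:
    text: str
    desired: bool  # assumed: the answer that indicates a good experience


def score(questions: list[Question], answers: list[Optional[bool]]) -> float:
    """Fraction of answered questions whose answer matches the desired one.

    None marks a question that could not be observed; it is skipped.
    Raises ValueError if the lengths differ or nothing was answered.
    """
    if len(questions) != len(answers):
        raise ValueError("one answer per question is required")
    pairs = [(q, a) for q, a in zip(questions, answers) if a is not None]
    if not pairs:
        raise ValueError("no answered questions")
    favourable = sum(1 for q, a in pairs if a == q.desired)
    return favourable / len(pairs)


checklist = [
    Question("Is there a search engine tool?", desired=True),
    Question("Are any of the instructions ambiguous?", desired=False),
    Question("Is the information clear and concise?", desired=True),
    Question("Is it easy to access from a wheelchair?", desired=True),
]

# Invented observation: yes, yes, no, not observed.
print(score(checklist, [True, True, False, None]))
```

Storing the favourable answer per question avoids treating every "yes" as positive, which would misread negatively phrased items.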

7. Field Study to Evaluate User Experience of Urban Interfaces

7.1. Selected Urban Interfaces

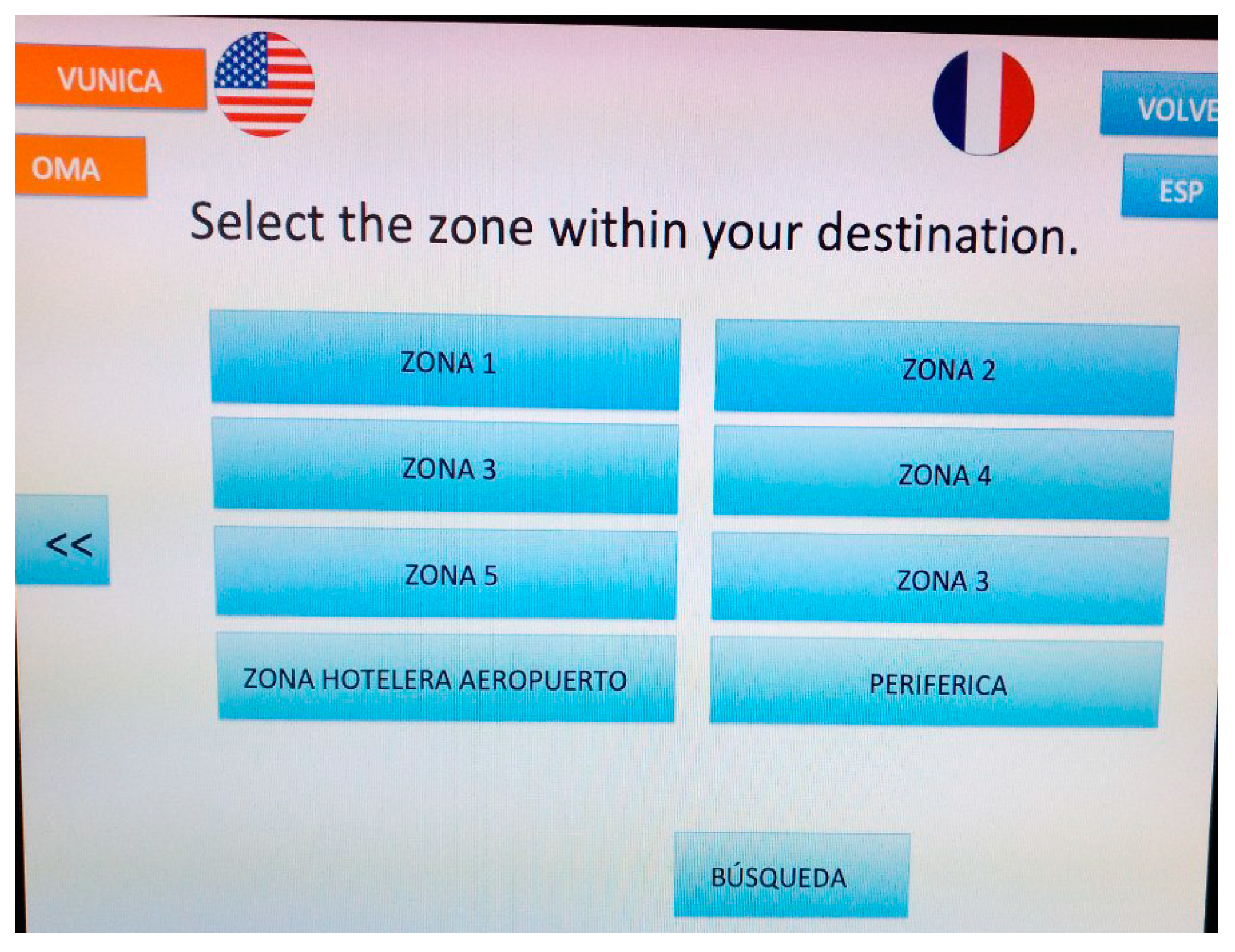

- Taxi ticketing kiosk in the Monterrey International Airport for purchasing airport transfers. This interface is located inside the airport building and is the only official means of buying a taxi ticket for an airport transfer service to the city. The kiosk is managed by a private company. It accepts different payment methods: bank cards and local currency in the form of bank notes and coins. Its target audience includes national and international citizens. Figure 3 provides a general and more detailed overview of the interface.

- ATM CFE-Mático for paying electricity bills. This interface is one of the official means for paying electricity bills. It is aimed at the entire population of Mexico and can generally be found inside the offices of the Comisión Federal de Electricidad, which belongs to the Government of Mexico. The only accepted payment method is banknotes and coins. See Figure 4 for details.

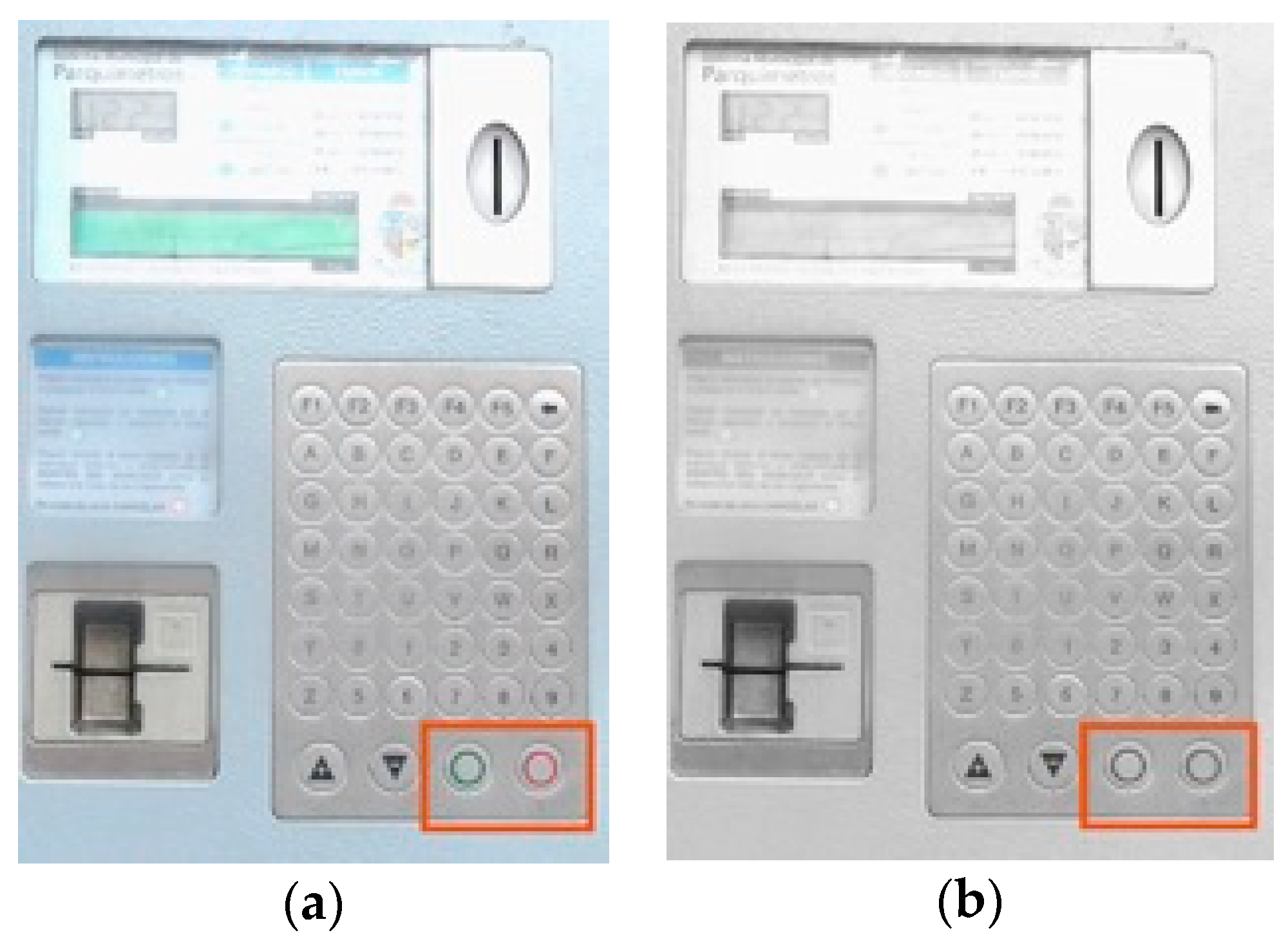

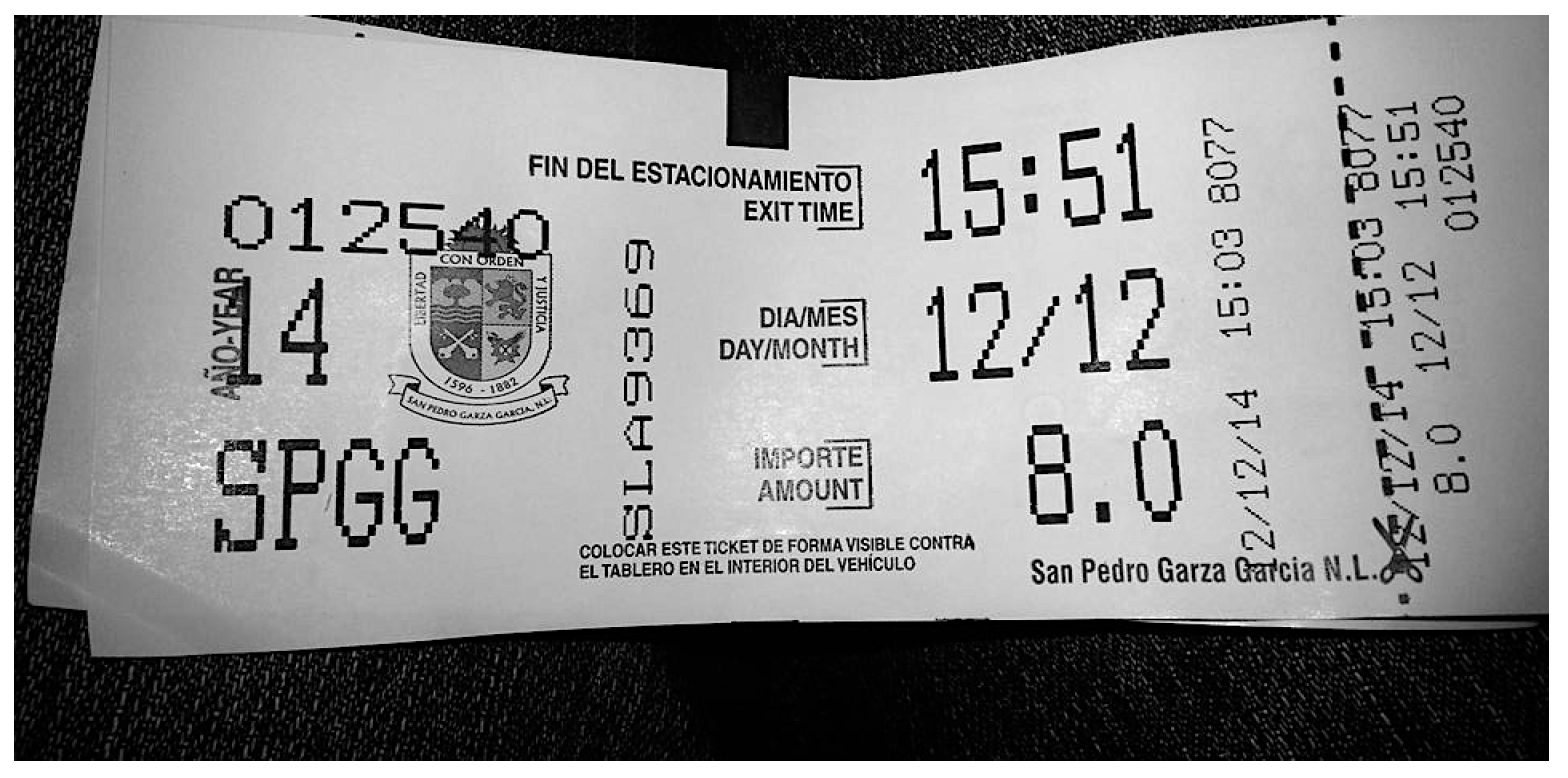

- Parking Meter of San Pedro Garza García Town Hall. This device is used to collect payment for outdoor parking in the city’s streets. The interface belongs to the Town Hall of San Pedro Garza García, which is located in Monterrey, Mexico. The target audience is any individual who parks their vehicle on a public road. Accepted payment methods include local currency, bank cards and a mobile app. See Figure 5 for details.

- Mexico City Government Treasury Kiosk. This kiosk is used by the entire population of Mexico City to perform official bureaucratic processes, such as obtaining birth certificates or paying local taxes. It only accepts bank notes and coins. See Figure 6 for details.

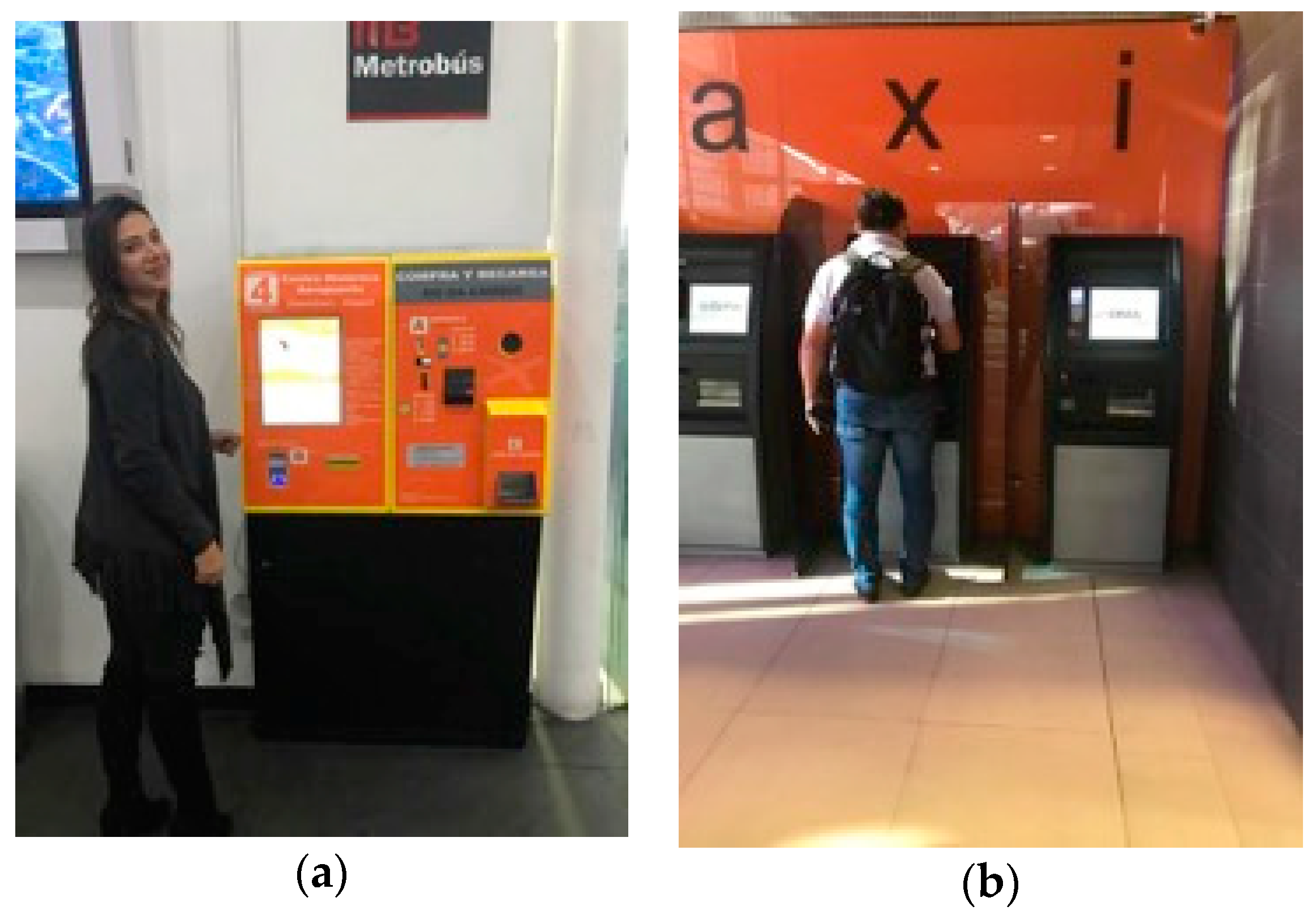

- Metrobús ticket vending machine for public transport in Mexico City. This machine can be found at different sites across Mexico City, such as the airport, local bus stations, and underground stations. It is aimed at local residents and tourists alike. The machine is owned by the Metrobús government consortium. Accepted methods of payment include bank notes, coins, and bank cards. See Figure 7 for details.

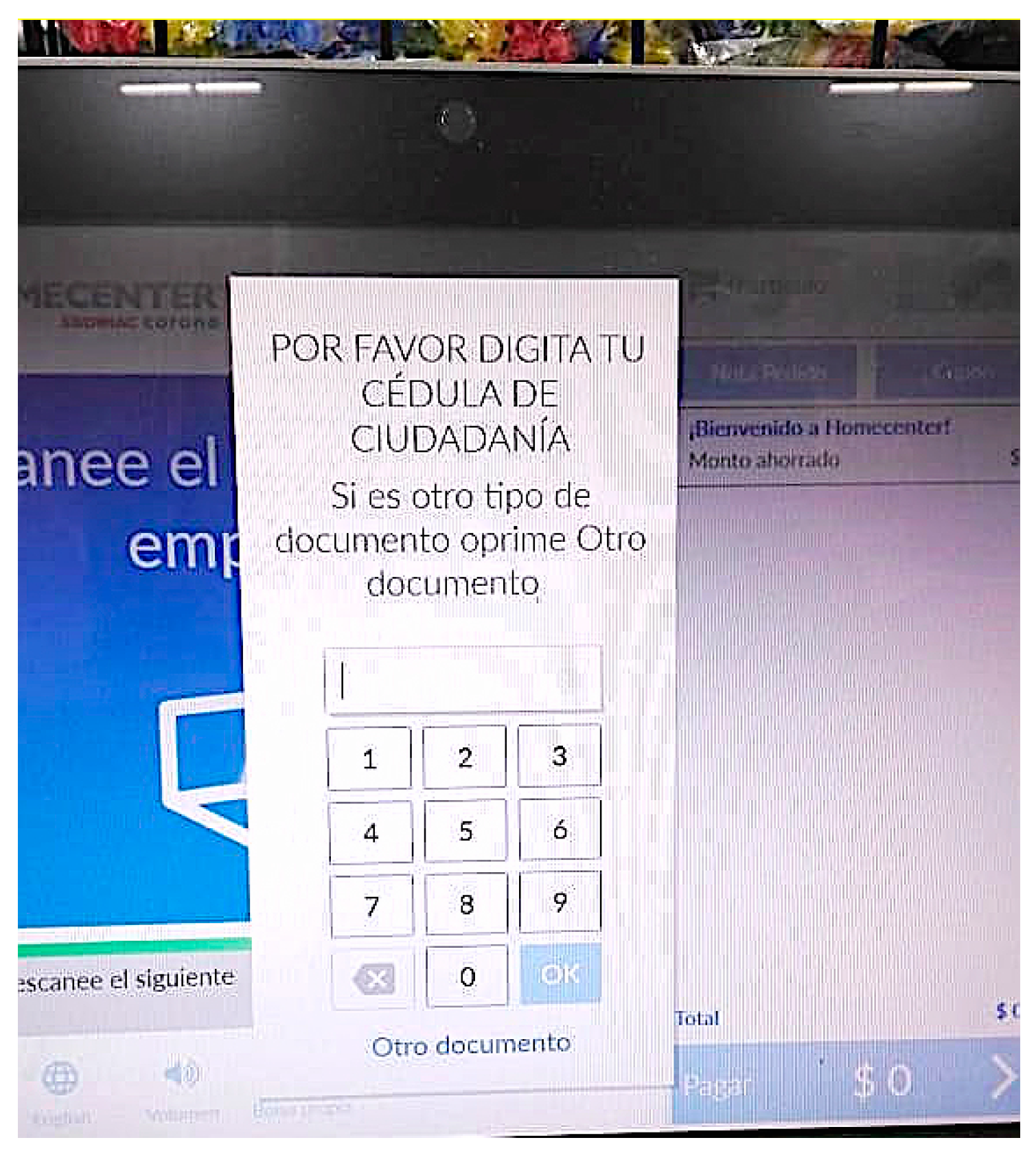

- Home Center Self-Checkout Machine in Bogotá. This interface is located inside the shop called Home Center, which sells construction and DIY materials. The self-service checkout provides clients with an alternative way in which to pay for the goods they wish to purchase. See Figure 8 for details.

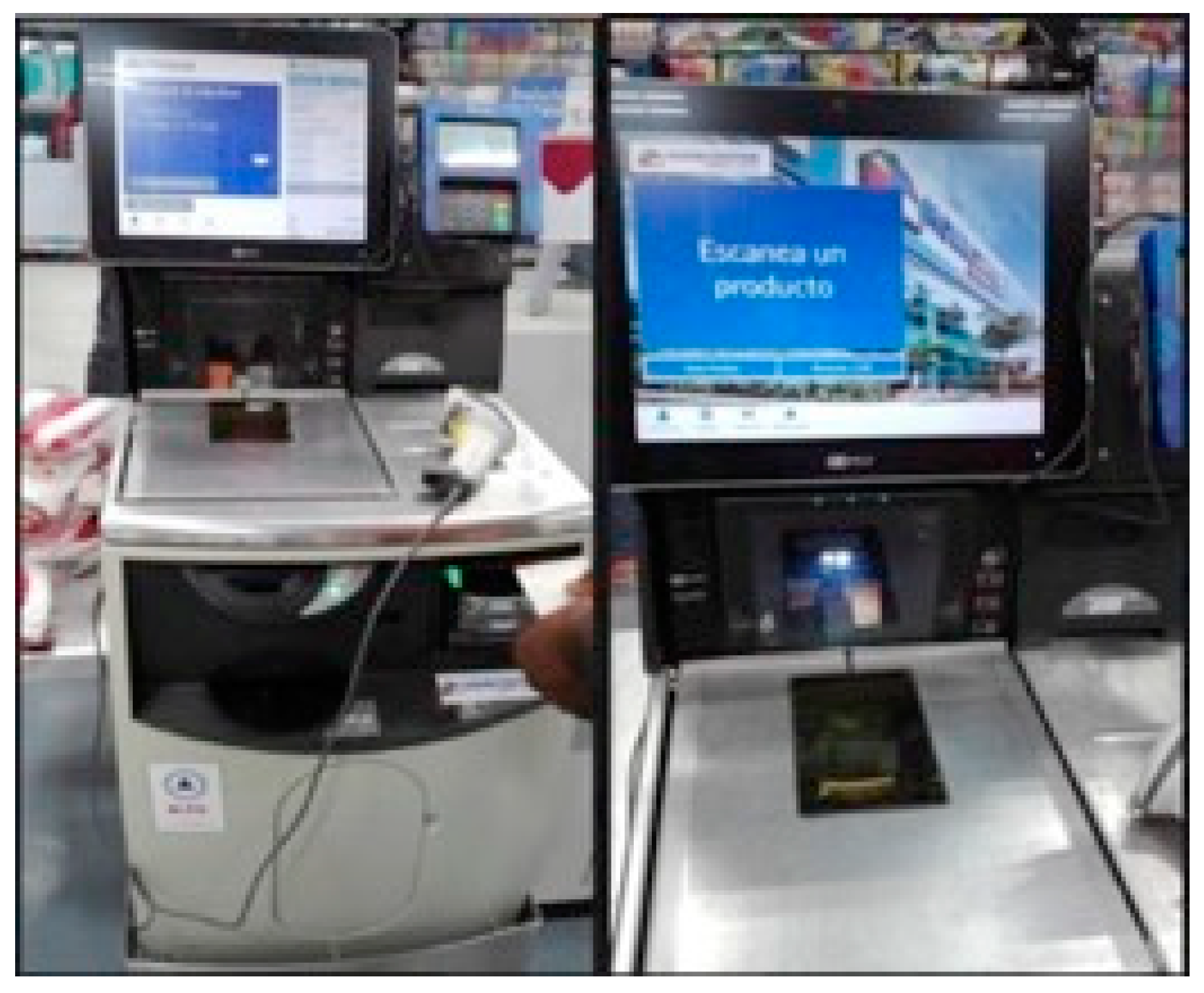

- Automated parking machines in commercial shopping centers in the city of Cali. This machine is located in malls and shopping centers in Cali. It is used to charge a parking fee for parking a vehicle inside the premises. See Figure 9 for details.

- Cine Colombia Ticketing Kiosk in Bogotá. This machine is located inside the cinema building and can be used to buy tickets for any performance any day of the week. The process involves selecting a film and performance time, using a rewards card, and making payment. See Figure 10 for details.

- Mío top-up terminal for public transport in Cali. This machine is aimed at local residents and foreign visitors who wish to use public transport in Cali. It is used to top up a travel card with funds and can be used in any station. See Figure 11 for details.

7.2. Description of Observed End Users

- Taxi ticketing kiosk in the Monterrey International Airport for purchasing airport transfers. Three groups of potential users of this kiosk were identified: foreign visitors on business trips, parents who are holidaymakers, and foreign students attending a local educational institution. In each case, these individuals require a taxi service and are unfamiliar with the interface. An additional variable was also considered: an individual’s native language. The kiosk offers information in Spanish, English and French, but in each group there are individuals who may need to use the kiosk in a different language. The rest of the characteristics are displayed in Table 4;

- ATM of CFE-Mático for paying electricity bills. Three groups were established: female homemakers dedicated to housework, parents with a stable job, and elderly people who have retired and collect a pension. In each case, these people use the machine to pay the electricity bill corresponding to their permanent address. In this instance, individuals’ familiarity with making payments via this digital channel was also taken into consideration, as some individuals will have used this method before whilst others may not. Table 4 displays the full set of characteristics of this group;

- Parking Meter of San Pedro Garza García Town Hall. Three groups were formed consisting of individuals who need to pay for parking using an urban interface: firstly, those visiting a local bank or business near the car park when time is a factor; secondly, those visiting a local restaurant when time is not a factor; and thirdly, women who go shopping and are not sure how long they will take. One criterion taken into consideration was that the individual using the car park must be the owner of the vehicle in question. Additionally, researchers checked that the people did not work in any of the nearby businesses. Table 4 displays the full set of characteristics of this group;

- Mexico City Government Treasury Kiosk. Three groups were observed: business owners or managers needing to perform business activities, and pensioners and parents needing to perform personal bureaucratic procedures. In each of these groups all individuals lived in Mexico City. Table 4 displays the full set of characteristics of this group;

- Metrobús ticket vending machine for public transport in Mexico City. Three groups were formed: individuals living in the city who have stable employment and who need to move about locally, tourists who are in the city and need to use public transport for a short period of time, and students who live in the city and need to get to one of the local academic institutions on a regular basis. As in the case of the airport taxi kiosk, the native language of users was taken into account, as tourists may need to use the machine in another language. Table 4 displays the full set of characteristics of this group;

- Home Center Self-Checkout Machine in Bogotá. Three groups were observed: self-employed professionals dedicated to construction and maintenance, maintenance employees, and individuals doing DIY and remodeling their homes. In each case the individuals observed lived in Bogotá. Table 4 displays the full set of characteristics of this group;

- Automated parking machines in commercial shopping centers in the city of Cali. Three groups were considered: individuals going to the shopping center to shop; students taking a stroll, window shopping, or spending leisure time with friends; and elderly people attending a specific activity in the shopping center at a specific time of day who limit their visit solely to that activity. For the first two groups, it was understood that they do not go to the shopping center on a specific schedule or for a limited amount of time. As in the case of the San Pedro Garza García Town Hall parking meter, everyone using the machine had to be the owner of the vehicle in question and could not be a worker from a nearby business. Table 4 displays the full set of characteristics of this group;

- Cine Colombia Ticketing Kiosk in Bogotá. Three groups were formed: parents going to the cinema with their partner or children at the weekend or on a specific date, students going to the cinema in groups, and individuals who are cinema fans. Also taken into consideration was whether individuals held a cinema rewards card or not. Table 4 displays the full set of characteristics of this group;

- Mío top-up terminal for public transport in Cali. Four groups were formed: local residents with stable work who need to get around the local area, tourists who are in the city and need to use public transportation for a short period of time, people living in rural communities or nearby areas who need to go into the city to complete bureaucratic processes, and students living in the city who need to get to local academic institutions on a regular basis. As in the case of the airport taxi kiosk, the native language of users was taken into account, as tourists may need to use the terminal in another language. Table 4 displays the full set of characteristics of this group.

7.3. Planning of Field Study

7.3.1. Research Method

7.3.2. Materials

7.3.3. Field Study Logistics

- Monterrey: November 2017 to February 2018,

- Mexico City: February to April 2018,

- Cali: July to October 2018,

- Bogotá: September to December 2018.

8. Debriefing & Analysis of Results

8.1. Classifying Field Notes

8.2. Analysis of Information

9. Results and Findings

9.1. The Majority of Interfaces Are Not Designed for Users with Disabilities

9.1.1. Interfaces Do Not Consider Issues Pertaining to Visual Impairments

9.1.2. The Ergonomics and Hardware Design Are Deficient

9.2. Poor Attention Paid to Internationalisation and Foreign Visitors

9.2.1. Cultural Differences Fail to Be Taken into Consideration

9.2.2. Use of Uncommon or Unknown Words, Information and Data

9.3. Digital Urban Interface Design Limited to the Digital Context

9.3.1. Hardware Location and Weather Conditions

9.3.2. Context and Physical Location

9.3.3. Improvised Directions and Instructions

9.4. Urban Interface Processes Have a Larger Cognitive Load than Personal Interface Processes

- Unlike other systems, urban interfaces are not used as frequently, and may even be used only once. This is most evident when a person performs a transaction for the very first time and is completely unfamiliar with the interface. It was observed that for some interfaces individuals spent up to 30 s trying to understand where to start, and another 15 s going from one step to another. Perceived pressure from other people waiting in line is a psychological factor that has a negative impact on individuals and makes them take longer to perform actions;

- Another issue that increases the cognitive load and response times is related to poor emotional design [27]. Psychological factors that influence decision-making are not taken into account when actions are executed in the interface. For example, in Figure 28 the keyboard is laid out in alphabetical order despite the majority of people being familiar with the QWERTY keyboard layout;

- Different characteristics and limitations unique to different demographic and psychographic audiences have been overlooked. These limitations can make an action more difficult for some groups to perform. These types of situations are extremely evident in government interfaces, such as those shown in Figure 29.
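To give a sense of scale for the timings reported in the first point above, the short sketch below adds them up for a multi-step transaction. It is only a rough illustration: it assumes, hypothetically, that every transition between steps costs the same 15 s and that the 30 s start-up delay occurs once, which the observations do not claim for every interface. The function name and the five-step example are invented.

```python
START_DELAY_S = 30       # observed upper bound before the first action (from the text)
TRANSITION_DELAY_S = 15  # observed delay when moving from one step to the next (from the text)


def orientation_overhead(steps: int) -> int:
    """Upper-bound seconds spent orienting in a task with `steps` steps.

    Assumes (hypothetically) one start-up delay plus an equal delay
    for each of the `steps - 1` transitions.
    """
    if steps < 1:
        raise ValueError("a task needs at least one step")
    return START_DELAY_S + TRANSITION_DELAY_S * (steps - 1)


print(orientation_overhead(5))  # 30 + 15 * 4 = 90
```

Under these assumptions, a five-step transaction could carry about a minute and a half of orientation time before any payment or printing delay is counted.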

9.5. Poorly Designed Service, Isolated Processes, and Lack of Omnichanneling

9.6. The Interface Requires ‘Independent’ Add-Ons in Order to Offer Omnichannel Processes

9.7. Greater Focus on Efficiency than on Learning Process

9.8. Face-to-Face Human ‘Support Staff’ Become Indispensable

10. Discussion

11. Conclusions, Challenges and Future Research

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Information and Data Availability

References

- We Are Social Ltd. Global Digital Report 2019. Available online: https://wearesocial.com/global-digital-report-2019 (accessed on 30 March 2019).

- Çalışkan, H.K. Technological Change and Economic Growth. Procedia Soc. Behav. Sci. 2015, 195, 649–654.

- Vesco, A.; Ferrero, F. Handbook of Research on Social, Economic, and Environmental Sustainability in the Development of Smart Cities. Available online: https://searchworks.stanford.edu/view/11474955 (accessed on 12 June 2019).

- Joshi, S.; Saxena, S.; Godbole, T. Developing Smart Cities: An Integrated Framework. Procedia Comput. Sci. 2016, 93, 902–909.

- Schipper, R.; Silvius, A. Characteristics of Smart Sustainable City Development: Implications for Project Management. Smart Cities 2018, 1, 75–97.

- Garcia, R.; Dacko, S. Design Thinking for Sustainability. In Design Thinking; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2015; pp. 381–400.

- Albino, V.; Berardi, U.; Dangelico, R.M. Smart Cities: Definitions, Dimensions, Performance, and Initiatives. J. Urban Technol. 2015, 22, 3–21.

- Verhoef, P.C.; Kannan, P.K.; Inman, J.J. From Multi-Channel Retailing to Omni-Channel Retailing: Introduction to the Special Issue on Multi-Channel Retailing. J. Retail. 2015, 91, 174–181.

- DeSouza, K.C. Citizen Apps to Solve Complex Urban Problems. J. Urban Technol. 2012, 19, 107–136.

- Roblek, V.; Meško, M.; Krapež, A. A Complex View of Industry 4.0. SAGE Open 2016, 1–11.

- Caprotti, F. Spaces of visibility in the smart city: Flagship urban spaces and the smart urban imaginary. Urban Stud. 2018.

- Lim, C.; Kim, K.J.; Maglio, P.P. Smart cities with big data: Reference models, challenges, and considerations. Cities 2018, 82, 86–99.

- Gutiérrez, V.; Galache, J.A.; Santana, J.; Sotres, P.; Sánchez, L.; Muñoz, L. The Smart City Innovation Ecosystem: A Practical Approach. IEEE COMSOC MMTC E-Lett. 2014, 9, 35–39.

- Ballesteros, L.G.M.; Alvarez, O.; Markendahl, J. Quality of Experience (QoE) in the smart cities context: An initial analysis. In Proceedings of the 2015 IEEE First International Smart Cities Conference (ISC2), Guadalajara, Mexico, 25–28 October 2015; pp. 1–7.

- Abbas, M. Challenges in Implementation of TVM (Ticket Vending Machine) in Developing Countries for Mass Transport System: A Study of Human Behavior while Interacting with Ticket Vending Machine-TVM; Springer: Cham, Switzerland, 2014; pp. 245–254.

- Verhoeff, N. Urban Interfaces: The Cartographies of Screen-Based Installations. Telev. New Media 2017, 18, 305–319.

- Benouaret, K.; Valliyur-Ramalingam, R.; Charoy, F. CrowdSC: Building Smart Cities with Large-Scale Citizen Participation. IEEE Internet Comput. 2013, 17, 57–63.

- Wac, K.; Ickin, S.; Hong, J.H.; Janowski, L.; Fiedler, M. Studying the experience of mobile applications used in different contexts of daily life. In Proceedings of the First ACM SIGCOMM Workshop on Measurements Up the Stack, Toronto, ON, Canada, 19 August 2011; pp. 7–12.

- Othman, M.K.; Sulaiman, M.N.; Aman, S. Heuristic Evaluation: Comparing Generic and Specific Usability Heuristics for Identification of Usability Problems in a Living Museum Mobile Guide App. Adv. Hum. Comput. Interact. 2018, 1–13.

- Hvannberg, E.T.; Law, E.L.C.; Lárusdóttir, M.K. Heuristic evaluation: Comparing ways of finding and reporting usability problems. Interact. Comput. 2007, 19, 225–240.

- Bader, F.; Schön, E.M.; Thomaschewski, J. Heuristics Considering UX and Quality Criteria for Heuristics. Int. J. Interact. Multimed. Artif. Intell. 2017, 4, 48–53.

- Nielsen, J.; Molich, R. Heuristic evaluation of user interfaces. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems Empowering People (CHI ’90), Seattle, WA, USA, 1–5 April 1990; pp. 249–256.

- Bruce, T. Bruce Tognazzini’s Tog on Interface: Developing User Interfaces for Microsoft Windows. Available online: http://flylib.com/books/en/2.847.1.19/1/ (accessed on 12 June 2019).

- Gerhardt-Powals, J. Cognitive engineering principles for enhancing human-computer performance. Int. J. Hum. Comput. Interact. 1996, 8, 189–211.

- Connell, I. Full Principles Set. Available online: http://www0.cs.ucl.ac.uk/staff/i.connell/DocsPDF/PrinciplesSet.pdf (accessed on 12 June 2019).

- Sanchez, J. Psychological Usability Heuristics|UX Magazine. Available online: http://uxmag.com/articles/psychological-usability-heuristics (accessed on 12 June 2019).

- Johnson, J. Designing with the Mind in Mind: Simple Guide to Understanding User Interface Design Guidelines; Elsevier: Waltham, MA, USA, 2014.

- Web Accessibility Initiative. WAI Guidelines and Techniques|Web Accessibility Initiative (WAI)|W3C. Available online: https://www.w3.org/WAI/standards-guidelines/ (accessed on 1 March 2019).

- Instituto de Biomecánica de Valencia. Guía de Recomendaciones Para el Diseño de Mobiliario Ergonómico. Available online: http://www.ibv.org/publicaciones/catalogo-de-publicaciones/ergonomia-y-mueble-guia-de-recomendaciones-para-el-diseno-de-mobiliario-ergonomico (accessed on 12 June 2019).

- Russo, P.; Boor, S. How fluent is your interface?: Designing for international users. In Proceedings of the INTERCHI ’93 Conference on Human Factors in Computing Systems, Amsterdam, The Netherlands, 24–29 April 1993; pp. 342–347.

- Weinschenk, S. The Psychologist’s View of UX Design|UX Magazine. Available online: https://uxmag.com/articles/the-psychologists-view-of-ux-design (accessed on 12 June 2019).

- Weinschenk, S. 100 MORE Things Every Designer Needs to Know About People; New Riders: Berkeley, CA, USA, 2015.

- Law, E.L.C.; Hvannberg, E.T. Analysis of Strategies for Improving and Estimating the Effectiveness of Heuristic Evaluation. In Proceedings of the Third Nordic Conference on Human-computer Interaction (NordiCHI ’04), Tampere, Finland, 23–27 October 2004; pp. 241–250.

- Martins, A.I.; Queirós, A.; Rocha, N.P. Validation of a usability assessment instrument according to the evaluators’ perspective about the users’ performance. Univers. Access Inf. Soc. 2019, 1–11.

- Sharon, T. Validating Product Ideas; Rosenfeld Media: New York, NY, USA, 2016.

- Marsh, S. User Research: A Practical Guide to Designing Better Products and Services; Kogan Page: London, UK, 2018.

- Rohrer, C. When to Use Which User-Experience Research Methods. Available online: https://www.nngroup.com/articles/which-ux-research-methods/ (accessed on 16 June 2019).

- Laufer, D.; Burnette, A.; Costa, T.; Hogan, A. The Digital Customer Experience Improvement Playbook for 2019. Available online: https://www.forrester.com/playbook/The+Digital+Customer+Experience+Improvement+Playbook+For+2019/-/E-PLA130 (accessed on 12 June 2019).

- Rodden, K.; Hutchinson, H.; Fu, X. Measuring the User Experience on a Large Scale: User-centered Metrics for Web Applications. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’10), Atlanta, GA, USA, 10–15 April 2010; pp. 2395–2398.

- McGinn, J.; Chang, A.R. RITE+Krug: A Combination of Usability Test Methods for Agile Design. J. Usability Stud. 2013, 8, 61–68.

- Sy, D. Adapting Usability Investigations for Agile User-centered Design. J. Usability Stud. 2007, 2, 112–132.

- Sheppard, B.; Sarrazin, H.; Kouyoumjian, G.; Dore, F. The Business Value of Design. Available online: https://www.mckinsey.com/business-functions/mckinsey-design/our-insights/the-business-value-of-design (accessed on 12 June 2019).

- Persson, M.; Grundstrom, C.; Väyrynen, K. A case for participatory practices in the digital transformation of insurance. In Proceedings of the 31st Bled Econference: Digital Transformation: Meeting the Challenges, Bled, Slovenia, 17–20 June 2018; pp. 429–440.

- MacDonald, C.M. User Experience (UX) Capacity-Building: A Conceptual Model and Research Agenda. In Proceedings of the 2019 on Designing Interactive Systems Conference (DIS ’19), San Diego, CA, USA, 23–28 June 2019; pp. 187–200.

- Ruud, O. Successful digital transformation projects in public sector with focus on municipalities (research in progress). In Proceedings of the Central and Eastern European e|Dem and e|Gov Days 2017, Budapest, Hungary, 4–5 May 2017.

- Berman, S.J.; Korsten, P.J.; Marshall, A. A four-step blueprint for digital reinvention. Strategy Leadersh. 2016, 44, 18–25.

- Bennett, D.; Pérez-Bustamante, D.; Medrano, M.L. Challenges for Smart Cities in the UK. In Sustainable Smart Cities: Creating Spaces for Technological, Social and Business Development; Springer: Cham, Switzerland, 2017; pp. 1–14.

- Oliveira, A.; Campolargo, M. From Smart Cities to Human Smart Cities. In Proceedings of the 48th Hawaii International Conference on System Sciences, Kauai, HI, USA, 5–8 January 2015; pp. 2336–2344.

- Polson, P.G.; Lewis, C.; Rieman, J.; Wharton, C. Cognitive Walkthroughs: A Method for Theory-based Evaluation of User Interfaces. Int. J. Man Mach. Stud. 1992, 36, 741–773.

- Farkas, D.; Nunnally, B. UX Research; O’Reilly Media: Sebastopol, CA, USA, 2016.

- Optimal Workshop. Reframer. Available online: https://www.optimalworkshop.com/reframer (accessed on 30 June 2019).

- Micheaux, A. Customer Journey Mapping as a New Way to Teach Data-Driven Marketing as a Service. J. Mark. Educ. 2018.

- Barrouillet, P.; Bernardin, S.; Portrat, S.; Vergauwe, E. Time and Cognitive Load in Working Memory. J. Exp. Psychol. Learn. Mem. Cogn. 2007, 33, 570–585.

- Sandnes, F. User Interface Design for Public Kiosks: An Evaluation of the Taiwan High Speed Rail Ticket Vending Machine. J. Inf. Sci. Eng. 2010, 307–321. Available online: https://www.researchgate.net/publication/220587882_User_Interface_Design_for_Public_Kiosks_An_Evaluation_of_the_Taiwan_High_Speed_Rail_Ticket_Vending_Machine (accessed on 12 July 2017).

- Hernández Sampieri, R.; Fernández Collado, C.; Baptista Lucio, P. Metodología de la Investigación. Quinta Edición; McGraw-Hill: Mexico DF, Mexico, 2014.

- Nielsen, J. Why You Only Need to Test with 5 Users. Available online: https://www.nngroup.com/articles/why-you-only-need-to-test-with-5-users/ (accessed on 3 December 2018).

- Turner, C.W.; Lewis, J.R.; Nielsen, J. Determining Usability Test Sample Size. In International Encyclopedia of Ergonomics and Human Factors; CRC Press: Boca Raton, FL, USA, 2006; pp. 3084–3088.

- Miaskiewicz, T.; Kozar, K.A. Personas and user-centered design: How can personas benefit product design processes? Des. Stud. 2011, 32, 417–430.

- Nielsen, L. Personas: User Focused Design (Human–Computer Interaction Series); Springer International Publishing: London, UK, 2019.

- Murthy, D. Ethnographic Research 2.0. J. Organ. Ethnogr. 2013, 2, 23–36.

- Reeves, S.; Kuper, A.; Hodges, B.D. Qualitative research methodologies: Ethnography. BMJ 2008, 337.

- Spool, J.M.; Schroeder, W. Testing web sites: Five users is nowhere near enough. In Proceedings of the CHI ’01 Extended Abstracts on Human Factors in Computing Systems, Seattle, WA, USA, 31 March–5 April 2001.

- Faulkner, L. Beyond the five-user assumption: Benefits of increased sample sizes in usability testing. Behav. Res. Methods Instrum. Comput. 2003, 35, 379–383.

- Romano Bergstrom, J.C.; Olmsted-Hawala, E.L.; Chen, J.M.; Murphy, E.D. Conducting Iterative Usability Testing on a Web Site: Challenges and Benefits. J. Usability Stud. 2011, 7, 9–13. Available online: http://uxpajournal.org/conducting-iterative-usability-testing-on-a-web-site-challenges-and-benefits/ (accessed on 12 June 2019).

- Virzi, R.A. Refining the Test Phase of Usability Evaluation: How Many Subjects Is Enough? Hum. Factors 1992, 34, 457–468.

- Nielsen, J. Guerrilla HCI: Using Discount Usability Engineering to Penetrate the Intimidation Barrier. Available online: https://www.nngroup.com/articles/guerrilla-hci/ (accessed on 15 June 2019).

- Cazañas, A.; de San Miguel, A.; Parra, E. Estimating Sample Size for Usability Testing. Enfoque UTE 2017, 8, 172–185.

- Guest, G.S.; Namey, E.M.; Mitchell, M.L. Collecting Qualitative Data: A Field Manual for Applied Research; SAGE Publications, Inc.: Thousand Oaks, CA, USA, 2012.

- Henry, D.; Dymnicki, A.B.; Mohatt, N.; Allen, J.; Kelly, J.G. Clustering Methods with Qualitative Data: A Mixed Methods Approach for Prevention Research with Small Samples. Prev. Sci. 2015, 16, 1007–1016.

- Watkins, D.C. Rapid and Rigorous Qualitative Data Analysis: The “RADaR” Technique for Applied Research. Int. J. Qual. Methods 2017, 16. [Google Scholar] [CrossRef]

- Haenssgen, M.J. The struggle for digital inclusion: Phones, healthcare, and marginalisation in rural India. World Dev. 2018, 104, 358–374. [Google Scholar] [CrossRef]

- Ghosal, A.; Halder, S. Building Intelligent Systems for Smart Cities: Issues, Challenges and Approaches. In Smart Cities; Springer: Cham, Switzerland, 2018; pp. 107–125. [Google Scholar] [CrossRef]

- Card, S.K.; Moran, T.P.; Newell, A. The Keystroke-Level Model for User Performance Time with Interactive Systems. Commun. ACM 1980, 23, 396–410. [Google Scholar] [CrossRef]

- Card, S.K.; Moran, T.P.; Newell, A. The Psychology of Human-Computer Interaction; Lawrence Erlbaum Associates: Mahwah, NJ, USA, 1986. [Google Scholar]

- Fox, J.E. The Science of Usability Testing. In Proceedings of the 2015 Federal Committee on Statistical Methodology (FCSM) Research Conference, Washington, DC, USA, 1–3 December 2015. [Google Scholar]

- Gonzalez-Holland, E.; Whitmer, D.; Moralez, L.; Mouloua, M. Examination of the Use of Nielsen’s 10 Usability Heuristics & Outlooks for the Future. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2017, 61, 1472–1475. [Google Scholar] [CrossRef]

| Author | Number of Guidelines, Principles or Heuristics | Main Objective | Limitations in the Context of Urban Interfaces |
|---|---|---|---|
| Nielsen [22] | 10 | Provide criteria for designing and evaluating interface design. | Does not consider evaluating the physical setting in which the interface will operate. |
| Tognazzini [23] | 21 | Evaluate user interactions with the interface. | Focuses primarily on the design aspect of the interaction. |
| Gerhardt-Powals [24] | 9 | Improve the performance and efficiency of a person when using an interface. | Mainly steered towards the efficiency of executed tasks. |
| Connell [25] | 30 | Provide principles for interactive system design. | Focuses on digital aspects, does not take setting or physical space into account. |
| Weinschenk [31,32] | 10 | Analyze user experience from a psychological approach. | Primarily considers learning and cognitive aspects. |
| Johnson [27] | 12 | Provide design principles based on cognition and reasoning. | Primarily considers learning and cognitive aspects. |
| WAI [28] | 61 | Evaluate the accessibility of a website for people with motor, cognitive, auditory and visual impairments. | Focuses on web, predominantly on HTML & CSS standards. |
| Biomechanical Institute of Valencia [29] | 12 | Assess ATM accessibility. | Only useful for ATMs that do not have touchscreen technology. |
| Russo & Boor [30] | 7 | Provide design recommendations for interface design used by an international audience. | Only mentions visual and communication elements. |

| Guideline | Main Objective |
|---|---|
| 1. Efficiency | Evaluate the degree to which a task is completed without delays or deviation. |
| 2. Help & instructions | Evaluate whether the interface provides contextual information to orientate and guide the user in the event of doubts. |
| 3. Structure & content | Evaluate the interface’s information architecture: organization, navigation, signage, and findability. |
| 4. Resemblance to reality | Evaluate the manner in which the interface displays elements that allow associations to be easily made with day-to-day objects. |
| 5. Information relevant to context | Evaluate whether only the bare minimum of information needed for decision-making is being presented. |
| 6. Error prevention | Evaluate how situations that may prevent task completion are predicted. |
| 7. Error recovery | Evaluate which elements are provided so that a task or process is completed in the event of an error. |
| 8. Interface feedback | Evaluate whether users are provided status updates regarding actions and processes. |
| 9. Cognitive processes | Evaluate whether a task can be performed in accordance with an individual’s cognitive abilities and technological know-how. |
| 10. Internationalization | Evaluate whether the interface is designed for different cultures. |
| 11. Visual design | Evaluate whether there is a consistently applied visual system design. |
| 12. Accessibility for motor-impaired users | Evaluate performance when used by individuals with permanent or temporary motor impairments. |
| 13. Accessibility for users with sensory impairments | Evaluate performance when used by individuals with temporary or permanent visual or auditory impairments. |
| 14. Alternate and complementary digital resources | Evaluate whether omnichannel or multi-channel options are available for achieving an objective. |

| Interface | Geographical Location | Ownership | Objective of Interface |
|---|---|---|---|
| Taxi ticketing kiosk | Monterrey, Mexico | Private | Purchase tickets for different taxi companies for airport transfer service. |
| ATM of CFE-Mático | Mexico City & Monterrey, Mexico | Government | Pay electricity bill. |
| Parking meter of San Pedro Garza García Town Hall | Monterrey, Mexico | Government | Pay fixed rate for parking in the street. |
| Mexico City Government Treasury Kiosk | Mexico City, Mexico | Government | Obtain official paperwork and pay for bureaucratic procedures managed by the Mexico City Government. |
| Metrobús ticket vending machine | Mexico City, Mexico | Government | Purchase public transport tickets for use in Mexico City. |
| Automated parking machine in Cali parking lot | Cali, Colombia | Private | Pay for parking in private lots and commercial centers. |
| Home Center self-checkout machine | Bogotá & Cali, Colombia | Private | Pay for items selected whilst shopping. |
| Cine Colombia ticketing kiosk | Bogotá & Cali, Colombia | Private | Buy cinema tickets. |
| Mío top-up terminal | Cali, Colombia | Government | Top up a travel card with funds for use on public transport in Cali. |

| Urban Interface | Description of Each Group |
|---|---|
| Taxi ticketing kiosk | Group 1: Foreign business travellers. High socioeconomic status. High technological know-how. Age range 35–50 years old. |
| | Group 2: Family holidaymakers. Medium or Medium-high socioeconomic status. High technological know-how. Age range 30–40 years old. |
| | Group 3: Students on study visits. Medium or High socioeconomic status. High technological know-how. Age range 18–25 years old. |
| ATM CFE-Mático | Group 1: Female homemakers. Medium or Medium-high socioeconomic status. Medium technological know-how. Age range 35–50 years old. |
| | Group 2: Pensioners. Medium socioeconomic status. Low technological know-how. Age range 60–80 years old. |
| | Group 3: Working parents. Medium or Medium-high socioeconomic status. High technological know-how. Age range 40–50 years old. |
| Parking Machine – San Pedro Garza García Town Hall | Group 1: Individuals visiting banks or local businesses. Medium or Medium-high socioeconomic status. High technological know-how. Age range 30–50 years old. |
| | Group 2: Individuals visiting restaurants, cafes or leisure activities. Medium or Medium-high socioeconomic status. High technological know-how. Age range 20–45 years old. |
| | Group 3: Women shopping. High socioeconomic status. Low technological know-how. Age range 40–70 years old. |
| Mexico City Government Treasury Kiosk | Group 1: Business owners or managers. Medium or High socioeconomic status. Medium technological know-how. Age range 30–50 years old. |
| | Group 2: Parents. Medium or Medium-high socioeconomic status. High technological know-how. Age range 30–40 years old. |
| | Group 3: Pensioners. Low or Medium socioeconomic status. Low technological know-how. Age range 60–80 years old. |
| Metrobús ticket vending machine in Mexico City | Group 1: Employees who are local residents. Medium or Medium-high socioeconomic status. High technological know-how. Age range 25–40 years old. |
| | Group 2: Tourists. Medium-high or High socioeconomic status. High technological know-how. Age range 25–50 years old. |
| | Group 3: Students who are local residents. Medium-low or Medium socioeconomic status. High technological know-how. Age range 15–25 years old. |
| Self-service Checkout in Home Center | Group 1: Independent professionals working in construction and maintenance. Medium socioeconomic status. Medium technological know-how. Age range 25–50 years old. |
| | Group 2: Maintenance employees. Medium or Medium-high socioeconomic status. Medium technological know-how. Age range 30–55 years old. |
| | Group 3: Individuals remodeling and performing DIY in their homes. Medium-high socioeconomic status. High technological know-how. Age range 25–40 years old. |
| Parking Machine in commercial shopping centers | Group 1: Individuals going shopping in the shopping center. Medium-high or High socioeconomic status. High technological know-how. Age range 25–50 years old. |
| | Group 2: Students going to the shopping center for leisure & entertainment activities or socializing. Medium or Medium-high socioeconomic status. High technological know-how. Age range 18–25 years old. |
| | Group 3: Pensioners. Medium-high or High socioeconomic status. Low technological know-how. Age range 60–80 years old. |
| Cine Colombia ticketing kiosk | Group 1: Parents. Medium or Medium-high socioeconomic status. High technological know-how. Age range 25–40 years old. |
| | Group 2: Students going to the cinema in groups. Medium or Medium-high socioeconomic status. High technological know-how. Age range 15–25 years old. |
| | Group 3: Young cinema fans. Medium-high or High socioeconomic status. High technological know-how. Age range 25–35 years old. |
| Mío top-up terminal for public transport in Cali | Group 1: Employees who are local residents. Medium or High socioeconomic status. High technological know-how. Age range 25–40 years old. |
| | Group 2: Tourists. Medium-high or High socioeconomic status. High technological know-how. Age range 25–50 years old. |
| | Group 3: People living in rural communities visiting the city to perform bureaucratic processes. Medium-low socioeconomic status. Low technological know-how. Age range 18–40 years old. |
| | Group 4: Students who are local residents. Medium-low or Medium socioeconomic status. High technological know-how. Age range 15–25 years old. |
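The group descriptions above share a regular shape (age range, technological know-how), which a recruitment screener could check mechanically. The following is a minimal sketch of that idea, not the authors' screener: the `Profile` class and `matching_profiles` function are hypothetical names, only the taxi kiosk groups are transcribed, and socioeconomic status is left out for brevity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Profile:
    """One user group from the table (hypothetical representation)."""
    interface: str
    group: str
    age_min: int
    age_max: int
    tech_know_how: str  # "Low", "Medium" or "High"


# Taxi ticketing kiosk groups, transcribed from the table above.
TAXI_KIOSK_PROFILES = [
    Profile("Taxi ticketing kiosk", "Group 1: Foreign business travellers", 35, 50, "High"),
    Profile("Taxi ticketing kiosk", "Group 2: Family holidaymakers", 30, 40, "High"),
    Profile("Taxi ticketing kiosk", "Group 3: Students on study visits", 18, 25, "High"),
]


def matching_profiles(age, tech_know_how, profiles):
    """Return every profile whose age range and know-how level fit the person."""
    return [
        p for p in profiles
        if p.age_min <= age <= p.age_max and p.tech_know_how == tech_know_how
    ]


if __name__ == "__main__":
    for p in matching_profiles(38, "High", TAXI_KIOSK_PROFILES):
        print(p.group)
```

Because the age ranges of Groups 1 and 2 overlap, a 38-year-old with high know-how matches both; a real screener would need a further question (for example, the purpose of travel) to separate them.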

| Activity | Description |
|---|---|
| User Observation Recruitment | Researchers went to the physical location of the urban interface under study. The previously designed screener was applied to confirm whether each individual matched one of the previously defined profiles. |
| Warm-up & Briefing | Researchers briefly explained the purpose of the study, gave brief instructions, and asked the user to perform the activities as naturally as possible. |
| Observation Session | Following the previously designed guidelines, researchers observed and recorded the actions each person performed when completing a task, without intervening. Part of each session was either recorded or photographed. Notes were taken using the previously established observation guidelines, and the execution time of each task was logged. Two researchers took part: one made observations and the other recorded data. |
| Debriefing & Analysis of Results | Upon completing the sessions for each group of users with shared characteristics, researchers met to establish where there was common ground with regard to each guideline. Using Reframer, researchers recorded and grouped the field notes obtained during the observation sessions. In the same tool, researchers tagged each note to indicate which of the 14 guidelines it corresponded to. Tagging the notes in this manner made it possible to identify which guidelines recurred most frequently and which problems were most common. Timings were also logged in the website mentioned above in order to establish the efficiency involved in completing a task. Following this, researchers met to draw up the customer experience map that best represented the journey observed for each group of individuals. |
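The tagging and timing steps in the last row can be illustrated with a short sketch. It does not reproduce Reframer or its export format: the note records, group labels, and timings below are invented for illustration, and summarising task times by their median is an assumption, since the study does not state which statistic was used.

```python
from collections import Counter
from statistics import median

# Hypothetical field notes; texts, groups and tags are invented examples.
NOTES = [
    {"group": "Group 1", "tags": ["Help & instructions", "Efficiency"],
     "text": "Looked for on-screen instructions before starting."},
    {"group": "Group 1", "tags": ["Error recovery"],
     "text": "Restarted the process after a rejected payment."},
    {"group": "Group 2", "tags": ["Efficiency", "Cognitive processes"],
     "text": "Paused for a long time at the rate selection step."},
]

# Hypothetical task durations in seconds, keyed by user group.
TIMINGS = {"Group 1": [62.0, 75.5, 58.0], "Group 2": [120.0, 98.0]}


def tag_frequencies(notes):
    """Count how many notes carry each guideline tag."""
    counts = Counter()
    for note in notes:
        counts.update(note["tags"])
    return counts


def median_task_time(timings):
    """Summarise logged task times per group (median is an assumed choice)."""
    return {group: median(values) for group, values in timings.items()}


if __name__ == "__main__":
    for tag, n in tag_frequencies(NOTES).most_common():
        print(f"{n}  {tag}")
    print(median_task_time(TIMINGS))
```

Sorting the tag counts with `most_common()` surfaces the guidelines that recur most often, which is the comparison the debriefing step relies on.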

| Problems & Findings | Related Guidelines |
|---|---|
| 1. The majority of interfaces are not designed for users with disabilities | - Accessibility for motor-impaired users |
| | - Accessibility for users with sensory impairments |
| | - Efficiency |
| | - Visual design |
| | - Cognitive processes |
| 2. Poor attention paid to internationalization and foreign visitors | - Internationalization |
| | - Cognitive processes |
| | - Help & instructions |
| | - Information relevant to context |
| | - Efficiency |
| | - Interface feedback |
| | - Structure & content |
| | - Error recovery |
| | - Error prevention |
| 3. Urban digital interface design limited to the digital context | - Help & instructions |
| | - Information relevant to context |
| | - Visual design |
| | - Resemblance to reality |
| | - Alternate and complementary digital resources |
| | - Structure & content |
| 4. Urban interface processes have a larger cognitive load than personal interface processes | - Cognitive processes |
| | - Help & instructions |
| | - Efficiency |
| | - Interface feedback |
| | - Error recovery |
| | - Error prevention |
| 5. Poorly designed service, isolated processes, and lack of omnichanneling | - Alternate and complementary digital resources |
| | - Information relevant to context |
| | - Error prevention |
| | - Efficiency |
| 6. The interface requires ‘independent’ add-ons in order to offer omnichannel processes | - Alternate and complementary digital resources |
| | - Help & instructions |
| | - Efficiency |
| | - Cognitive processes |
| | - Interface feedback |
| | - Error recovery |
| | - Error prevention |
| 7. Greater focus on efficiency than on learning process | - Efficiency |
| | - Cognitive processes |
| | - Error recovery |
| | - Error prevention |
| | - Interface feedback |
| 8. Face-to-face human ‘support staff’ become indispensable | - Cognitive processes |
| | - Error recovery |
| | - Error prevention |
| | - Interface feedback |
| | - Help & instructions |
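One way to read the table above is to invert it and ask how many of the eight findings each guideline is related to. The sketch below does this with a plain tally; the `FINDINGS` dictionary is a transcription of the table, while the function name and the tallying itself are illustrative additions rather than part of the study's method.

```python
from collections import Counter

# Finding number -> related guidelines, transcribed from the table above.
FINDINGS = {
    1: ["Accessibility for motor-impaired users",
        "Accessibility for users with sensory impairments",
        "Efficiency", "Visual design", "Cognitive processes"],
    2: ["Internationalization", "Cognitive processes", "Help & instructions",
        "Information relevant to context", "Efficiency", "Interface feedback",
        "Structure & content", "Error recovery", "Error prevention"],
    3: ["Help & instructions", "Information relevant to context", "Visual design",
        "Resemblance to reality", "Alternate and complementary digital resources",
        "Structure & content"],
    4: ["Cognitive processes", "Help & instructions", "Efficiency",
        "Interface feedback", "Error recovery", "Error prevention"],
    5: ["Alternate and complementary digital resources",
        "Information relevant to context", "Error prevention", "Efficiency"],
    6: ["Alternate and complementary digital resources", "Help & instructions",
        "Efficiency", "Cognitive processes", "Interface feedback",
        "Error recovery", "Error prevention"],
    7: ["Efficiency", "Cognitive processes", "Error recovery",
        "Error prevention", "Interface feedback"],
    8: ["Cognitive processes", "Error recovery", "Error prevention",
        "Interface feedback", "Help & instructions"],
}


def guideline_counts(findings):
    """Count the number of findings each guideline is related to."""
    counts = Counter()
    for guidelines in findings.values():
        counts.update(guidelines)
    return counts


if __name__ == "__main__":
    for guideline, n in guideline_counts(FINDINGS).most_common():
        print(f"{n}  {guideline}")
```

Under this transcription, Efficiency, Cognitive processes, and Error prevention each relate to six of the eight findings, while Internationalization, Resemblance to reality, and the two accessibility guidelines each relate to one.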

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Aceves Gutierrez, L.C.; Martin Gutierrez, J.; Del-Rio-Guerra, M.S. Having a Smarter City through Digital Urban Interfaces: An Evaluation Method. Appl. Sci. 2019, 9, 3498. https://doi.org/10.3390/app9173498