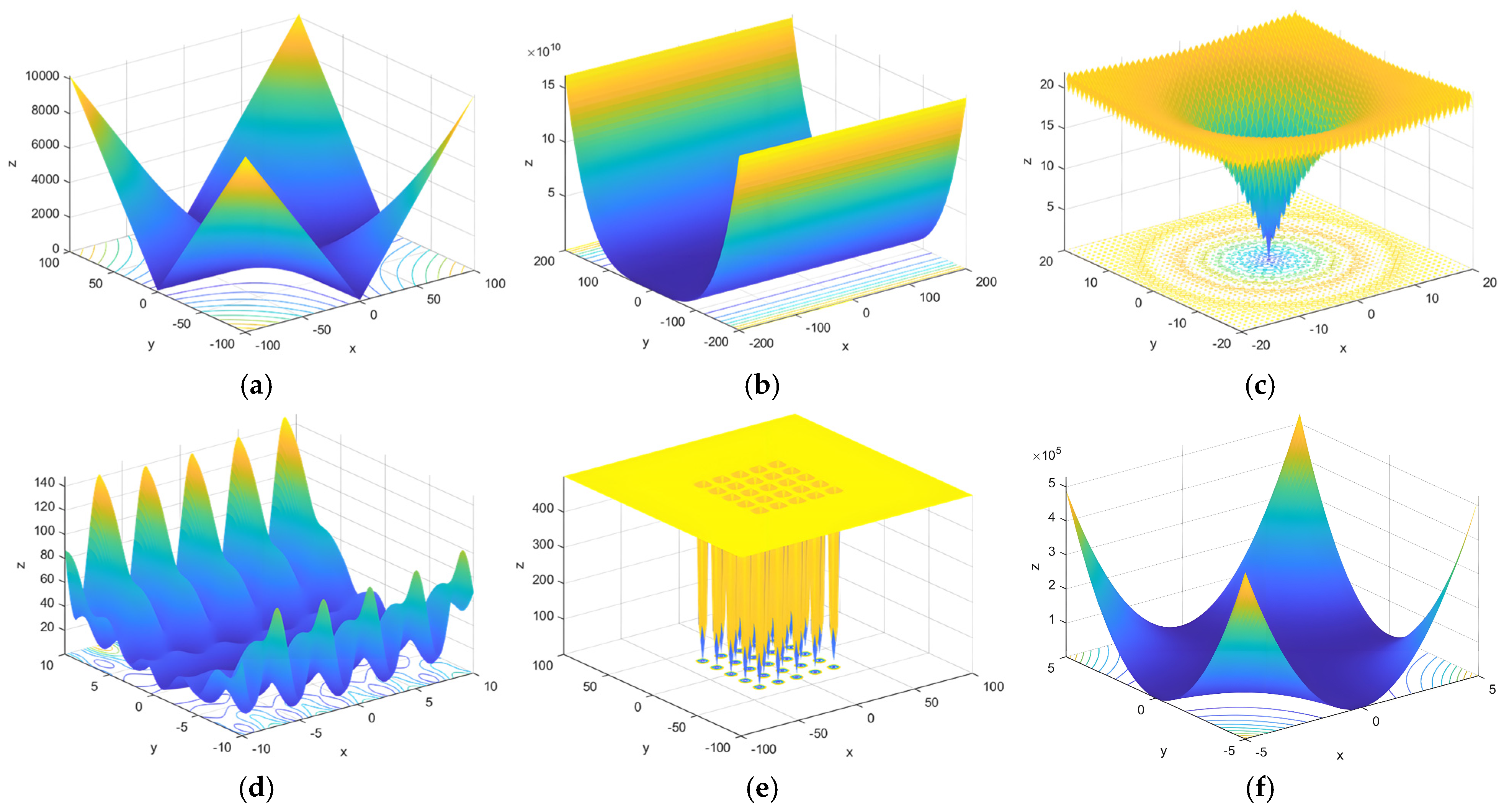

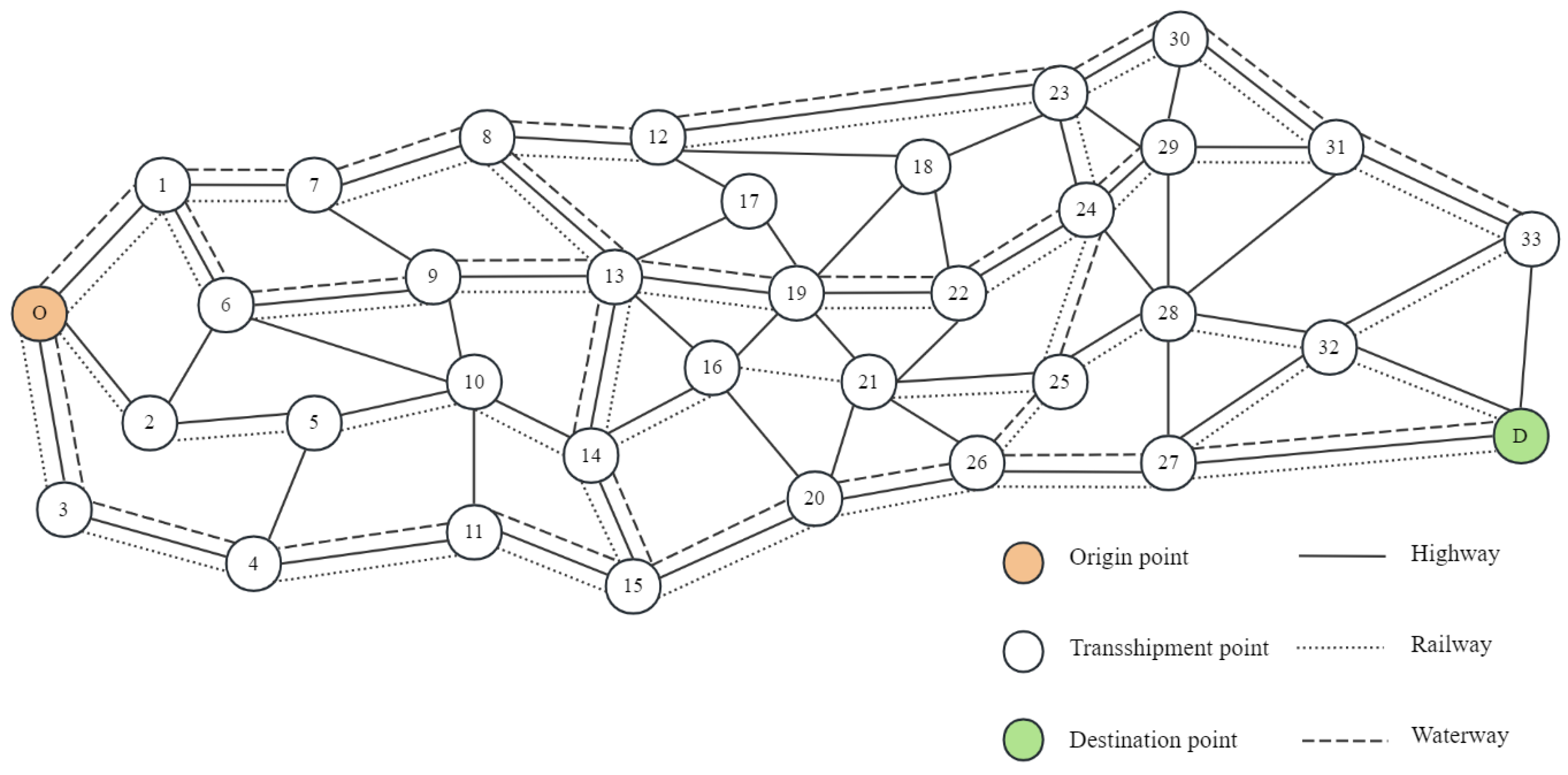

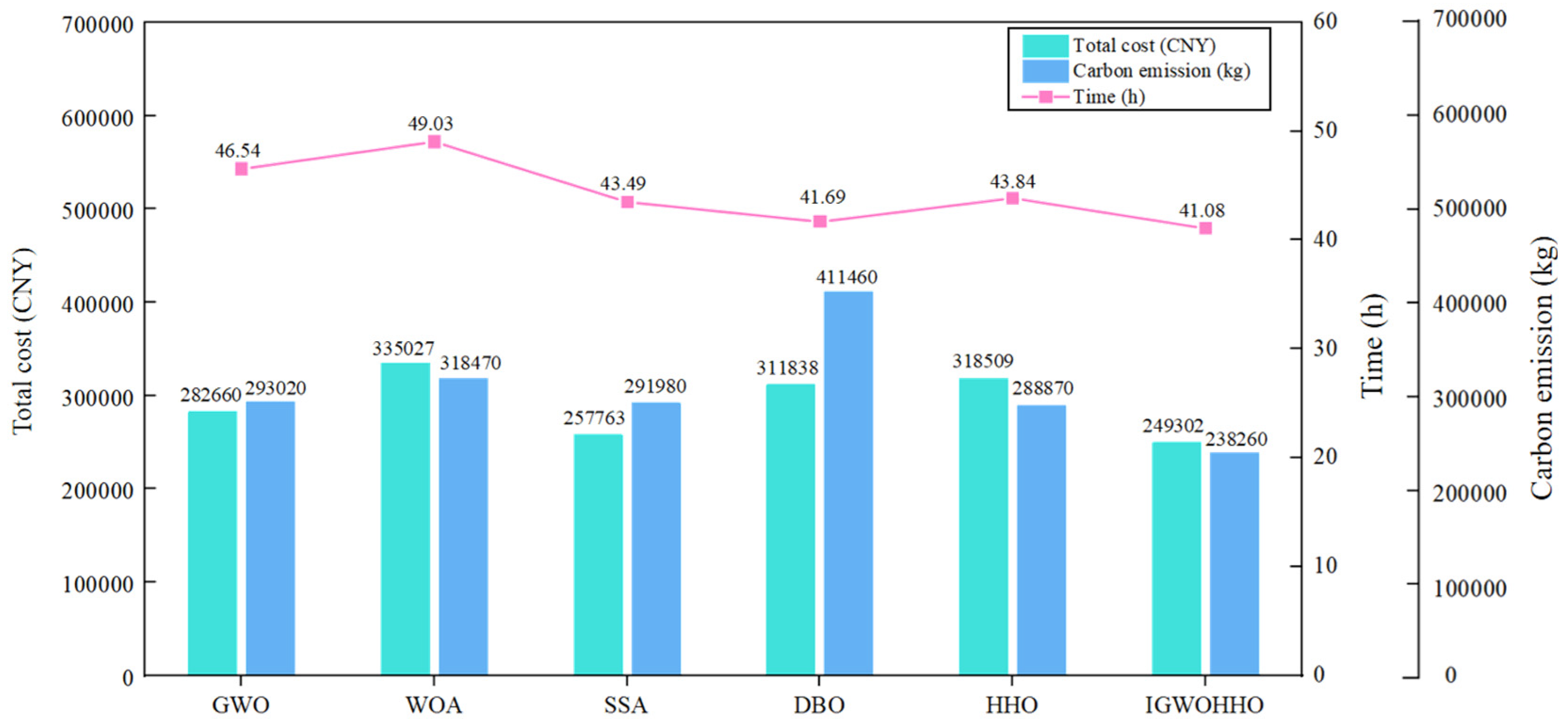

To address the complexity of container intermodal transport scheduling under low-carbon constraints, a multi-objective optimization model is proposed. This model simultaneously considers total cost, transport time, and carbon emissions, incorporating carbon tax pricing and penalties for soft time window violations. Given the model’s nonlinear characteristics and multi-objective structure, an enhanced solution approach based on the IGWOHHO algorithm is introduced to tackle the problem efficiently.

3.1. Container Multimodal Transport Model

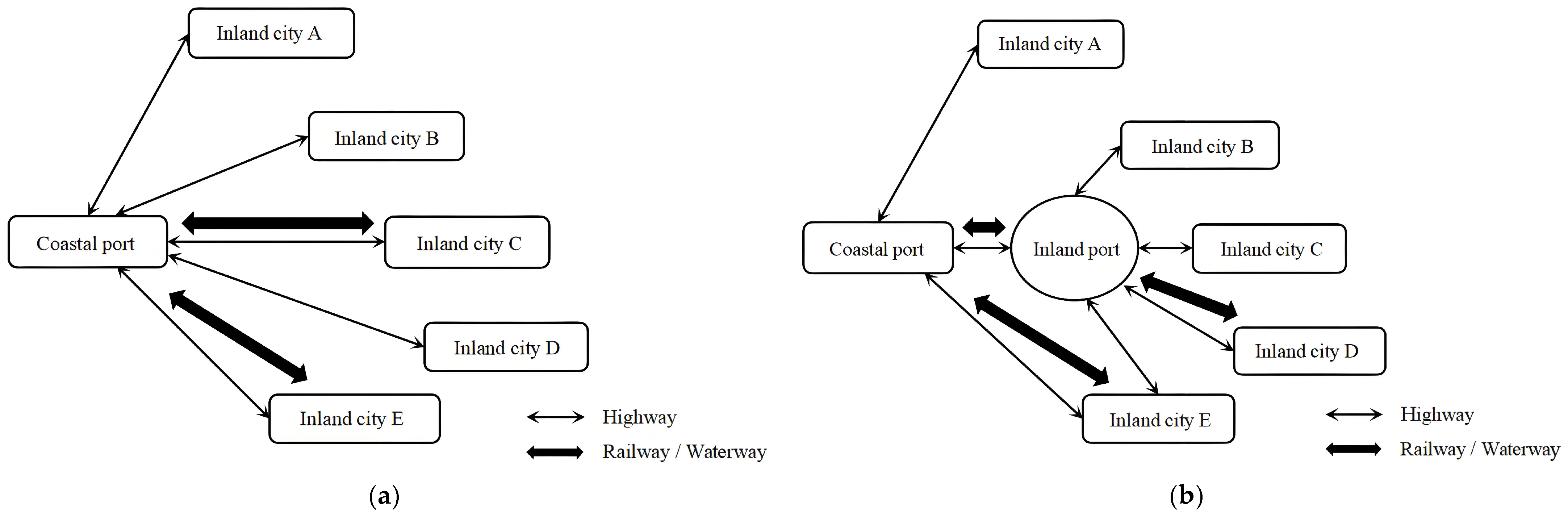

In intermodal container scheduling at inland ports, containers are typically transported directly from the yard to the railway yard or inland waterway terminal by road single-vehicle transport, without intermediate transshipment. To simplify cost calculation, this paper combines pickup and yard-to-yard operations into a single transshipment operation. Based on the actual scheduling process, the model divides the operation costs into three components: direct transport cost $C_w$, nodal transshipment cost $C_t$, and time penalty cost $C_p$. This categorization simplifies cost calculation, enhances the model’s practicality, and incorporates constraints related to transportation costs, time efficiency, and carbon emissions, thereby aligning more closely with the practical needs of multimodal transport scheduling optimization.

In this context, $N$ represents the set of nodes, and $K$ denotes the set of transport modes. The parameter $q$ indicates the container demand. Each arc between nodes $i$ and $j$ under mode $k$ has a distance and a per-unit transport cost, and a binary variable indicates whether mode $k$ is selected on that arc. Each node $i$ likewise has a per-unit transition cost from mode $k$ to mode $h$, paired with a binary transition variable. The pair $(E_i, L_i)$ defines the soft time window bounds, $T_i$ is the actual arrival time at node $i$, and $s_1$ and $s_2$ denote the unit penalty costs for early and late arrivals, respectively.
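The three cost components can be illustrated with a minimal sketch. The function names, the data layout, and the scaling of every term by the demand `q` are assumptions made for illustration; they are not taken from the model's equations.

```python
def transport_cost(q, legs):
    """Direct transport cost C_w.

    legs: list of (distance, unit_cost) pairs, one per arc, for the mode
    selected on that arc. Scaling by q is an assumption of this sketch.
    """
    return q * sum(distance * unit_cost for distance, unit_cost in legs)


def transshipment_cost(q, transfer_unit_costs):
    """Nodal transshipment cost C_t: one unit cost per mode change performed."""
    return q * sum(transfer_unit_costs)


def time_penalty(q, arrivals, windows, s1, s2):
    """Soft time window penalty C_p.

    arrivals: actual arrival times T_i; windows: (E_i, L_i) pairs.
    Early arrival is charged at s1 per time unit, late arrival at s2.
    """
    total = 0.0
    for t_i, (e_i, l_i) in zip(arrivals, windows):
        total += s1 * max(e_i - t_i, 0.0) + s2 * max(t_i - l_i, 0.0)
    return q * total


if __name__ == "__main__":
    q = 10
    c_w = transport_cost(q, [(100.0, 2.0), (200.0, 0.5)])
    c_t = transshipment_cost(q, [5.0])
    c_p = time_penalty(q, [8.0, 20.0], [(9.0, 12.0), (10.0, 18.0)], 1.0, 3.0)
    print(c_w, c_t, c_p)
```

Arrivals inside the window contribute nothing, so the penalty only grows once a schedule drifts outside $(E_i, L_i)$.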

Carbon emissions $E$ generated during intermodal transport arise from two primary sources. The first is fuel consumption by transport modes in operation, represented as $E_f$. The second source is the use of mechanized equipment in transshipment processes, represented as $E_m$.

where $e_k$ represents the unit carbon emission factor of mode $k$, and the transshipment emission factor gives the unit carbon emissions associated with transitioning from mode $k$ to mode $h$ at node $i$.

In addition to mandatory carbon emission policies, a carbon tax is a key tool for promoting emission reductions by enterprises. By translating carbon emissions into economic costs through carbon tax pricing, the transition to low-carbon transportation can be effectively supported.

Here, the carbon tax rate is applied to the total emissions. The expression incorporates both operational and transshipment-related emissions, converting them into monetary terms to reflect their economic impact within the scheduling model.
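As a rough illustration of how emissions are turned into a cost, the sketch below sums the in-transit and transshipment emissions and multiplies the total by a tax rate. The function names, argument layout, and demand scaling are assumptions for illustration, not the paper's equations.

```python
def carbon_emissions(q, legs, transfer_factors):
    """Return (E_f, E_m) for one route.

    legs: list of (e_k, distance) pairs for the mode used on each arc.
    transfer_factors: unit emission factor of each mode change performed.
    Scaling both terms by the demand q is an assumption of this sketch.
    """
    e_fuel = q * sum(e_k * distance for e_k, distance in legs)
    e_mech = q * sum(transfer_factors)
    return e_fuel, e_mech


def carbon_tax_cost(tax_rate, e_fuel, e_mech):
    """Monetary cost of emissions: tax rate times total emissions."""
    return tax_rate * (e_fuel + e_mech)


if __name__ == "__main__":
    e_f, e_m = carbon_emissions(10, [(0.5, 100.0), (0.02, 200.0)], [1.5])
    print(e_f, e_m, carbon_tax_cost(0.05, e_f, e_m))
```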

The total transport time $T$ consists of two components. The first is the travel time of the transport vehicle $T_s$, which represents the duration of goods in transit. The second is the transit time at the transport node $T_r$, which reflects the efficiency of node operations.

where $v_k$ denotes the average travel speed of the $k$th transport mode, and the remaining time term represents the transit time between nodes $i$ and $j$ for the selected $k$th mode.

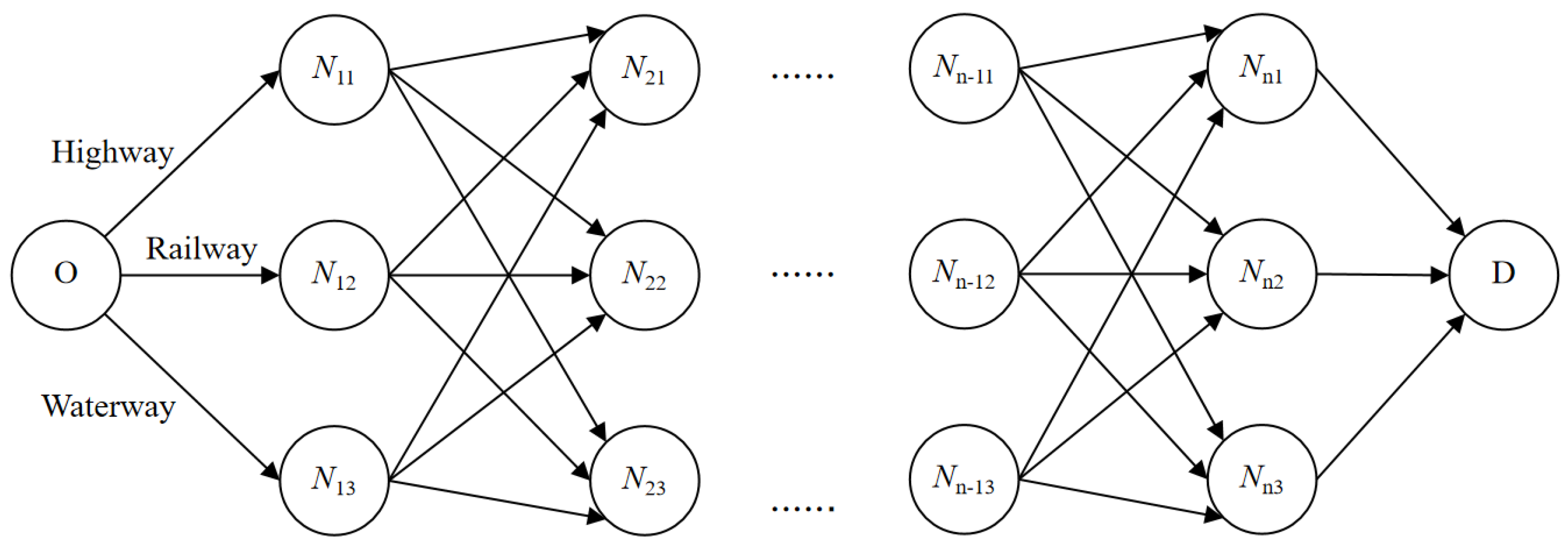

Based on the above parameter definitions, this study constructs a container intermodal transport path optimization model for inland ports within a given planning period. Considering practical constraints related to cost, transport time windows, and carbon emissions, the objective function is defined as shown in Equation (9).

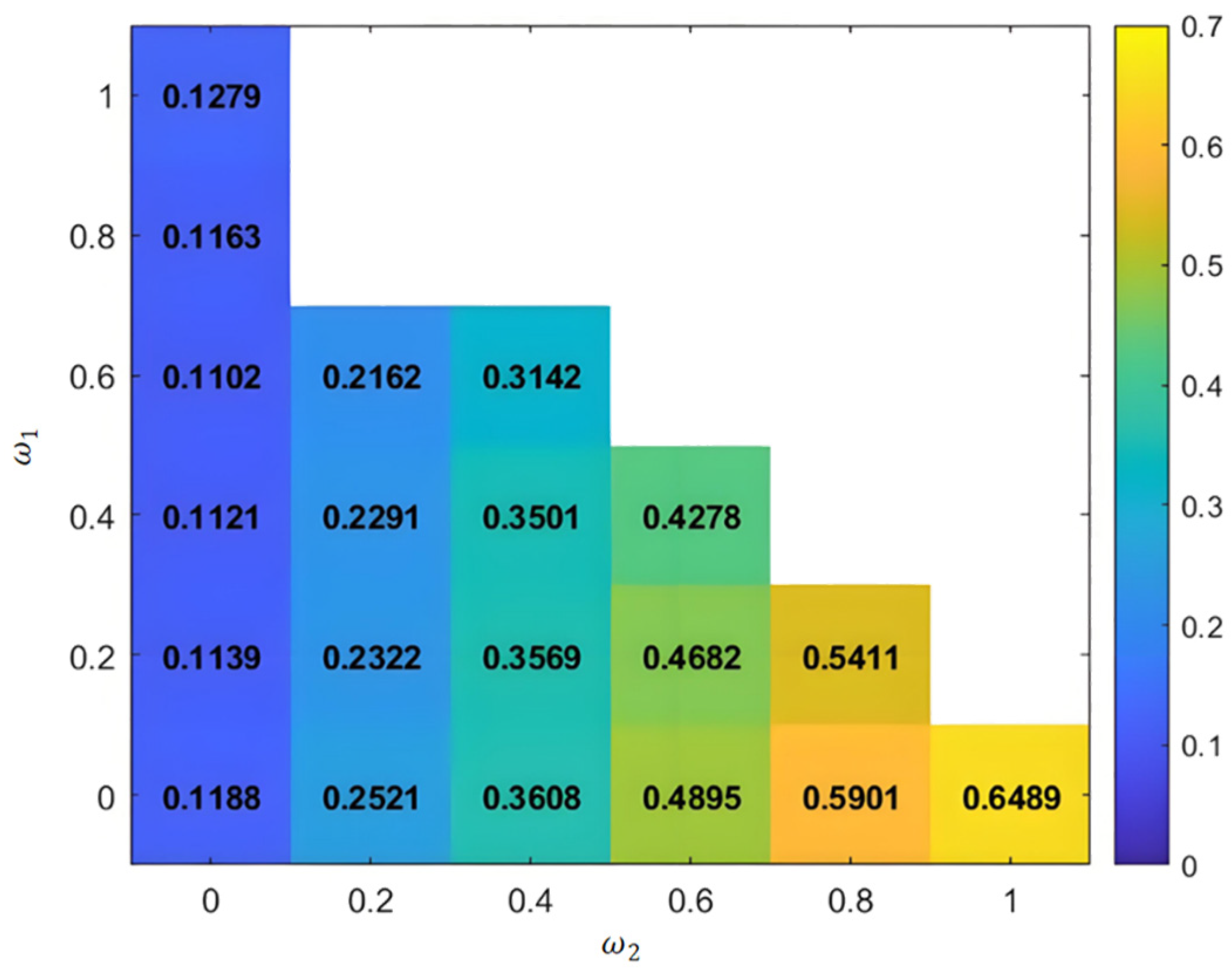

where $\omega_1$, $\omega_2$, and $\omega_3$ denote the weighting coefficients for total transport costs, transport time, and carbon emissions.

To ensure path uniqueness, the model restricts the selection to at most one transport mode between any two nodes, as specified in Equation (10).

The number of mode transitions per node is limited to one, as reflected in Equation (11), thereby avoiding multiple conversions between transport modes at the same node.

The coherence of transport modes is maintained by Equation (12), which ensures that if a mode transition occurs at node $i$, the inbound and outbound transport modes are logically consistent.

No transport mode conversion is allowed at the origin or destination node, as specified in Equation (13).

The non-negativity and normalization of weight parameters are specified in Equation (14), which ensures that all weights range between 0 and 1 and their total equals one.

To control emissions, Equation (15) limits total carbon emissions during the transport process, keeping them within the prescribed upper threshold.

The arrival time of goods at node $j$ is calculated based on the departure time from node $i$, the transition time, and the travel time, as shown in Equation (16).

The total transport time is constrained to remain within a predefined hard time window, as indicated in Equation (17).

The binary nature of the decision variables is specified in Equations (18) and (19), where both the mode-selection variable and the mode-transition variable are restricted to values of 0 or 1.

The balance of cargo flow is maintained by Equation (20), which ensures that the inflow and outflow at each node remain equal throughout the scheduling process.

The proposed model integrates soft time window constraints, alongside time penalty costs and carbon tax considerations, to balance the economic and environmental objectives. It includes a comprehensive set of constraints that guarantee the selection of feasible transport modes, compliance with time constraints, control of carbon emissions, and balance of flow. This approach establishes a strong foundation for optimizing intermodal transport scheduling with a focus on both cost efficiency and low-carbon objectives.

3.3. Improved Grey Wolf–Harris Hawks Hybrid Algorithm

Proposed by Mirjalili et al. [39] in 2014, the grey wolf optimizer (GWO) is a metaheuristic algorithm inspired by grey wolf hunting behavior and social hierarchy. It solves complex optimization problems by simulating leadership structures and collaborative strategies within the wolf pack. The population is divided into $\alpha$, $\beta$, $\delta$, and $\omega$ wolves, which correspond to the best, suboptimal, superior, and general solutions. The optimization process involves three main phases: encircling the prey, tracking its position, and performing the attack.

In the encirclement phase, each individual estimates the distance to the prey using the distance vector $D$ and updates its position by adjusting the step size based on the control coefficients $A$ and $C$, thereby gradually approaching the optimal solution.

Here, the prey’s position is the current optimal solution at iteration $t$, the grey wolf’s position is taken at the same iteration, and $T$ refers to the maximum number of iterations.

The adjustment coefficients $A$ and $C$ are determined by random variables $r_1$ and $r_2$, uniformly distributed within the range [0, 1], with their formulations provided in Equations (25) and (26).

The convergence factor $a$, which controls the magnitude of these coefficients, decreases linearly from 2 to 0 during the iteration process in accordance with Equation (27).

In the position update phase, the general grey wolf generates candidate solutions $X_1$, $X_2$, and $X_3$ by following the guidance of the three leading wolves, as illustrated in Equation (28).

where $X_\alpha$, $X_\beta$, and $X_\delta$ denote the positions of the $\alpha$, $\beta$, and $\delta$ wolves, which correspond to the top three solutions with the highest fitness. The distances of individual wolves from the $\alpha$, $\beta$, and $\delta$ head wolves are represented by $D_\alpha$, $D_\beta$, and $D_\delta$, respectively. Additionally, $C_1$, $C_2$, and $C_3$ are the random coefficients.

This process is further illustrated in Figure 3, which shows how the grey wolf updates its position based on the optimal, suboptimal, and superior solutions within the population.

The final position is calculated as a weighted average of the positions of the optimal, suboptimal, and superior individuals, guiding the population toward the optimal direction and enhancing convergence reliability, as presented in Equation (29).
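The update described above can be sketched as follows. This follows the standard GWO formulation from the literature, with $a = 2(1 - t/T)$, $A = 2 a r_1 - a$, $C = 2 r_2$, and an equal-weight average of the three candidates. It is an illustrative sketch, and the function name and list-based representation are assumptions.

```python
import random


def gwo_step(x, leaders, t, T, rng):
    """One standard GWO position update for a single wolf.

    x: current position (list of floats).
    leaders: (x_alpha, x_beta, x_delta), the three best positions.
    t, T: current and maximum iteration.
    """
    a = 2.0 * (1.0 - t / T)  # convergence factor, linear from 2 to 0
    new_x = []
    for d in range(len(x)):
        candidates = []
        for leader in leaders:
            A = 2.0 * a * rng.random() - a       # step coefficient in [-a, a]
            C = 2.0 * rng.random()               # random emphasis in [0, 2)
            dist = abs(C * leader[d] - x[d])     # distance to this leader
            candidates.append(leader[d] - A * dist)
        new_x.append(sum(candidates) / 3.0)      # average of X1, X2, X3
    return new_x


if __name__ == "__main__":
    rng = random.Random(0)
    leaders = ([1.0, 1.0], [2.0, 2.0], [3.0, 3.0])
    print(gwo_step([10.0, -5.0], leaders, t=1, T=50, rng=rng))
```

At the final iteration $a = 0$, so the wolf moves exactly to the mean of the three leaders. Early in the run, $|A|$ can exceed 1 and push the wolf away from them, which is what sustains exploration.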

Due to its dynamic balance between global exploration and local exploitation, GWO is highly efficient for solving complex scheduling problems. Its simple structure, rapid convergence, and effective global search capability make GWO a powerful tool. It demonstrates strong robustness and fast convergence in multi-objective optimization and is widely applied in path planning, intelligent scheduling, and low-carbon logistics [40,41,42]. However, GWO tends to fall into local optima, particularly in high-dimensional problems, which limits its solution accuracy. The Harris hawks optimization (HHO) algorithm addresses this issue by enhancing local search capabilities and improving adaptability in escaping local optima. Combining both algorithms enhances convergence stability and search performance for multi-objective scheduling problems.

Originally proposed by Heidari et al. [43] in 2019, the HHO algorithm is an emerging intelligent optimization method that has shown strong potential in logistics-related problems, such as the traveling salesman problem and vehicle routing [44,45]. Despite these promising results, its application in multimodal transport optimization remains limited and has not been systematically investigated in the literature.

During the exploration phase of the HHO algorithm, when $|E| \ge 1$, the Harris hawk switches between two position update strategies depending on the value of $q$. If $q \ge 0.5$, the hawk selects a random location within the population range for its next move. If $q < 0.5$, the hawk adjusts its position by considering the distance between the prey and the group’s central location. These two update strategies are presented in Equation (30), while Equation (31) defines the calculation of the group center.

where $X_{rand}(t)$ represents the current position of a randomly selected individual, while $X_{rabbit}(t)$ denotes the prey’s position. $X_m(t)$ corresponds to the central position of the hawk flock, which is typically calculated as the average position of all the hawks in the population. This central position plays a guiding role in the HHO algorithm, allowing the hawks to coordinate their movements toward the prey during the optimization process. The random factors $\{r_i \mid i = 1, 2, 3, 4\}$ and $q$ take values within the range (0, 1). Additionally, $B_l$ and $B_u$ define the lower and upper bounds of the parameters being optimized.
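The two exploration rules can be sketched as below, using the update forms from the original HHO formulation in the literature. The function name and the list-based representation are assumptions for illustration. Bound clamping is omitted for brevity.

```python
import random


def hho_explore(x, population, rabbit, lb, ub, rng):
    """HHO exploration update for one hawk (used when |E| >= 1).

    x: current hawk position; population: list of all hawk positions;
    rabbit: best position found so far; lb, ub: per-dimension bounds.
    """
    n, dim = len(population), len(x)
    q = rng.random()
    r1, r2, r3, r4 = (rng.random() for _ in range(4))
    if q >= 0.5:
        # Perch relative to a randomly selected member of the population.
        x_rand = population[rng.randrange(n)]
        return [x_rand[d] - r1 * abs(x_rand[d] - 2.0 * r2 * x[d])
                for d in range(dim)]
    # Perch relative to the prey and the flock's mean position X_m.
    x_mean = [sum(ind[d] for ind in population) / n for d in range(dim)]
    return [(rabbit[d] - x_mean[d]) - r3 * (lb[d] + r4 * (ub[d] - lb[d]))
            for d in range(dim)]


if __name__ == "__main__":
    rng = random.Random(42)
    pop = [[0.0, 1.0], [2.0, 3.0], [4.0, -1.0]]
    print(hho_explore(pop[0], pop, rabbit=[1.0, 1.0],
                      lb=[-5.0, -5.0], ub=[5.0, 5.0], rng=rng))
```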

The escape energy $E$ gradually decreases with the number of iterations, as formulated in Equation (32), reflecting the diminishing evasion capacity of the prey over time.

where $E_0$ represents the initial random energy value within the range (−1, 1), $t$ denotes the current iteration count, and $T$ indicates the maximum number of iterations.

When $|E| < 1$, the HHO algorithm enters the exploitation phase and applies different hunting strategies based on the prey’s escape behavior. Under the soft encirclement condition, where $|E| \ge 0.5$ and $r \ge 0.5$, the hawks update their position according to the prey’s escape pattern.

Here, the update uses the difference between the prey and hawk positions, and $J$ denotes the jump distance during the rabbit’s escape process, as expressed in Equations (34) and (35).

Upon satisfying the conditions $|E| < 0.5$ and $r \ge 0.5$, the algorithm transitions into the hard encirclement phase, during which the hawks fixate on the prey, gradually reduce the search radius, and update their positions, as shown in Equation (36).

When $|E| \ge 0.5$ and $r < 0.5$, the hawks either perform a high-speed dive to softly encircle the prey if it demonstrates strong escape ability or initiate Lévy flight behavior when predation fails. The updated position is expressed in Equation (37). The formulation of Lévy flight is provided in Equation (38).

where $D$ represents the problem dimension, and $S$ is a random vector of the same dimension. The variables $u$ and $v$ are random numbers uniformly distributed within the interval [0, 1]. The Lévy exponent is a constant set to 1.5.
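A minimal sketch of the Lévy step, following the form used in the original HHO formulation (scale 0.01, exponent 1.5, and $u$, $v$ drawn uniformly as stated above), is given below. The function names are assumptions. Mantegna's algorithm, a common alternative, draws $u$ and $v$ from normal distributions instead.

```python
import math
import random


def levy_sigma(beta=1.5):
    """Scale term of the Levy step for exponent beta."""
    num = math.gamma(1.0 + beta) * math.sin(math.pi * beta / 2.0)
    den = math.gamma((1.0 + beta) / 2.0) * beta * 2.0 ** ((beta - 1.0) / 2.0)
    return (num / den) ** (1.0 / beta)


def levy_step(dim, rng, beta=1.5):
    """Return a dim-dimensional Levy step vector."""
    sigma = levy_sigma(beta)
    steps = []
    for _ in range(dim):
        u = rng.random()
        v = rng.random() or 1e-12  # guard against division by zero
        steps.append(0.01 * u * sigma / abs(v) ** (1.0 / beta))
    return steps


if __name__ == "__main__":
    print(levy_step(3, random.Random(7)))
```

Because $v$ appears in the denominator, small draws of $v$ produce occasional long jumps, which is the heavy-tailed behavior the dive strategy relies on.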

When the Lévy flight fails to yield an improved solution and the conditions $|E| < 0.5$ and $r < 0.5$ are satisfied, the algorithm enters the hard encirclement phase with high-speed swooping. In this stage, the hawks contract around the group center, and the position is updated as shown in Equation (39).
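The phase logic described above can be summarized in a small dispatcher. The energy schedule uses the standard HHO form $E = 2E_0(1 - t/T)$ from the literature. The function names and the returned labels are assumptions for illustration.

```python
def escape_energy(e0, t, T):
    """Standard HHO escape energy; e0 is drawn from (-1, 1)."""
    return 2.0 * e0 * (1.0 - t / T)


def hho_phase(E, r):
    """Map escape energy E and random factor r to the HHO strategy."""
    e = abs(E)
    if e >= 1.0:
        return "exploration"
    if r >= 0.5:
        return "soft encirclement" if e >= 0.5 else "hard encirclement"
    if e >= 0.5:
        return "soft encirclement with high-speed dives"
    return "hard encirclement with high-speed dives"


if __name__ == "__main__":
    for t in (0, 50, 90):
        E = escape_energy(0.9, t, 100)
        print(t, round(E, 3), hho_phase(E, r=0.7), "|", hho_phase(E, r=0.2))
```

Since $|E|$ can only exceed 1 during the first half of the run, exploration is confined to early iterations and the four exploitation strategies take over afterwards.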

In the original GWO-HHO hybrid optimization algorithm, GWO is primarily responsible for global exploration, while HHO focuses on local exploitation. However, the use of fixed weight assignment often leads to performance degradation and premature convergence. To address this issue and improve the balance between exploration and exploitation, this study proposes the IGWOHHO algorithm. The enhanced method integrates adaptive weighting, hybrid search, elite retention, and perturbation strategies to enhance search efficiency, convergence precision, and overall robustness.

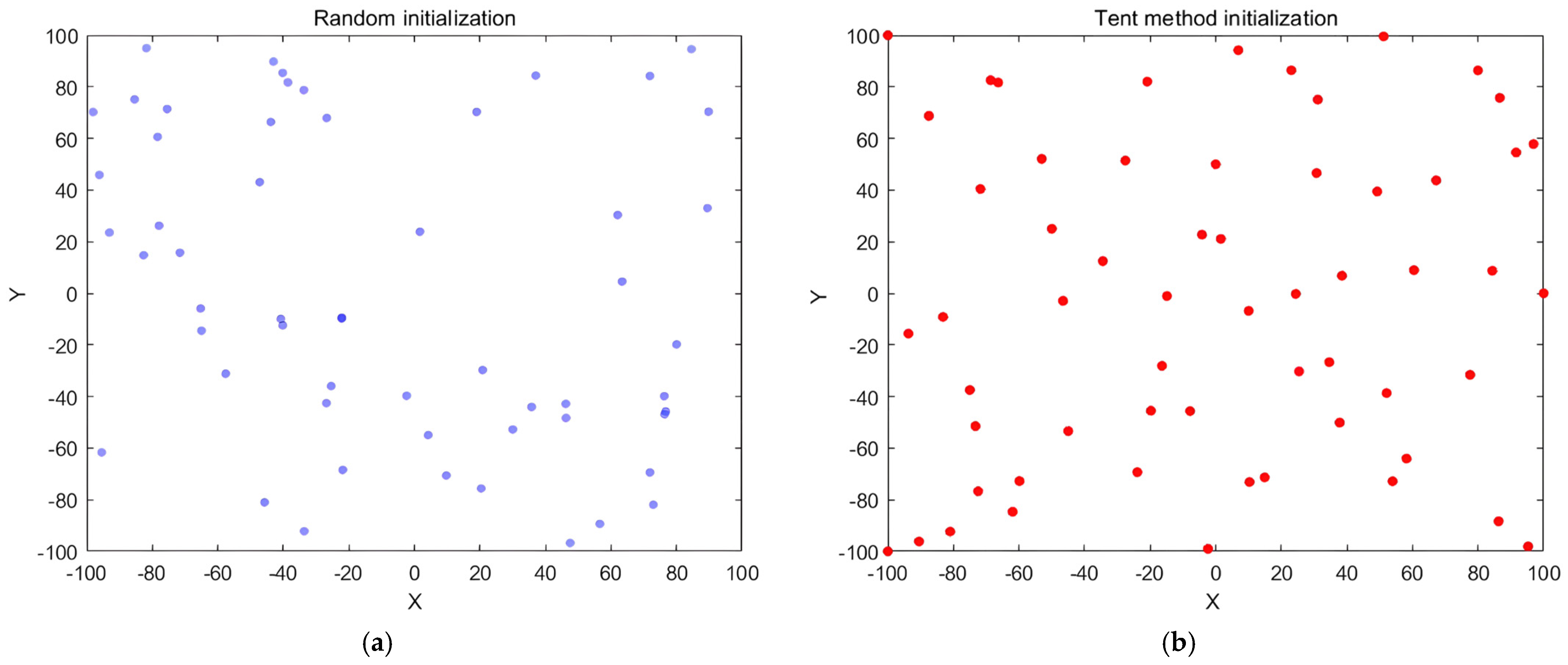

3.3.1. Tent Chaotic Mapping for Initial Population

At the initial stage of the algorithm, the Tent chaotic map is used to initialize the population, enhancing diversity and spatial coverage. As a representative chaotic mapping method, the Tent map exhibits strong traversal ability, uniform distribution, and dynamic complexity. These properties help avoid the clustering and sparsity issues often associated with random initialization, thereby improving the algorithm’s global search performance [46]. The mathematical expression of the Tent map used to generate the chaotic sequence is given in Equation (40).

where $z_n \in [0, 1]$ denotes the chaotic sequence of the $n$th generation. The initial value is typically generated randomly within the interval (0, 1). For an appropriate setting of its control parameter, the Tent map exhibits its most representative chaotic behavior.

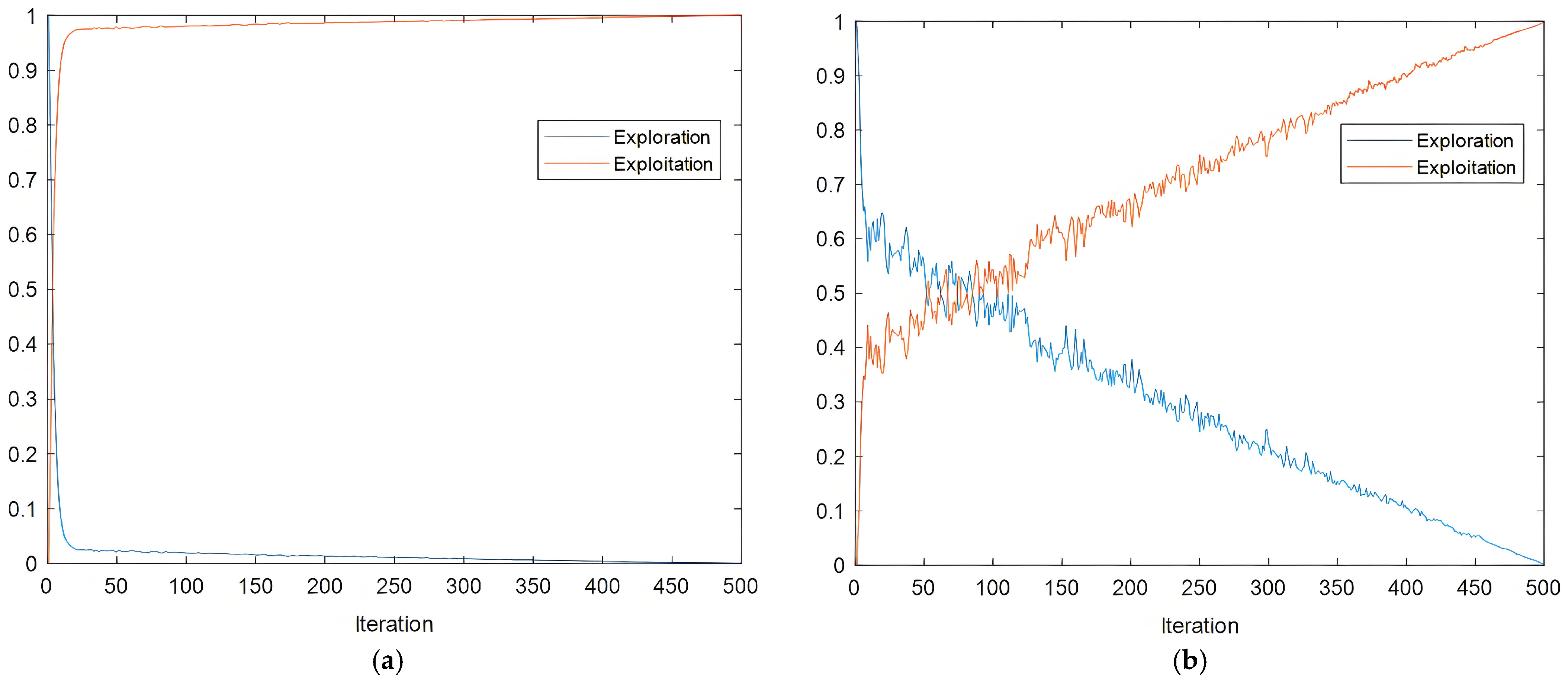

Figure 4 compares the population distribution resulting from random initialization and Tent map-based initialization. The Tent mapping generates a more uniformly distributed sequence in the solution space, effectively reducing blind search regions and enhancing algorithmic robustness. This initialization approach provides a more favorable starting point for subsequent optimization.

The initial chaotic sequence $z_n$, generated via the Tent map, is subsequently mapped into the problem domain $[X_{min}, X_{max}]$ to construct the initial population, as presented in Equation (41).
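A minimal sketch of Tent-map initialization is shown below, assuming the common piecewise form $z_{n+1} = z_n/\alpha$ for $z_n < \alpha$ and $(1 - z_n)/(1 - \alpha)$ otherwise, followed by a linear mapping into $[X_{min}, X_{max}]$. The function names and the default `alpha=0.7` are assumptions. The symmetric setting 0.5 is avoided in this sketch because it collapses to zero in binary floating point after a few dozen iterations.

```python
import random


def tent_next(z, alpha):
    """One Tent-map iteration on a value z in (0, 1)."""
    return z / alpha if z < alpha else (1.0 - z) / (1.0 - alpha)


def tent_population(n, dim, x_min, x_max, rng, alpha=0.7):
    """Generate n individuals of dimension dim from a Tent chaotic sequence."""
    z = [rng.uniform(0.05, 0.95) for _ in range(dim)]
    population = []
    for _ in range(n):
        z = [tent_next(zi, alpha) for zi in z]
        # Re-seed any coordinate that lands exactly on 0 or 1 (fixed points).
        z = [zi if 0.0 < zi < 1.0 else rng.uniform(0.05, 0.95) for zi in z]
        population.append([x_min + zi * (x_max - x_min) for zi in z])
    return population


if __name__ == "__main__":
    for individual in tent_population(5, 2, -10.0, 10.0, random.Random(1)):
        print(individual)
```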

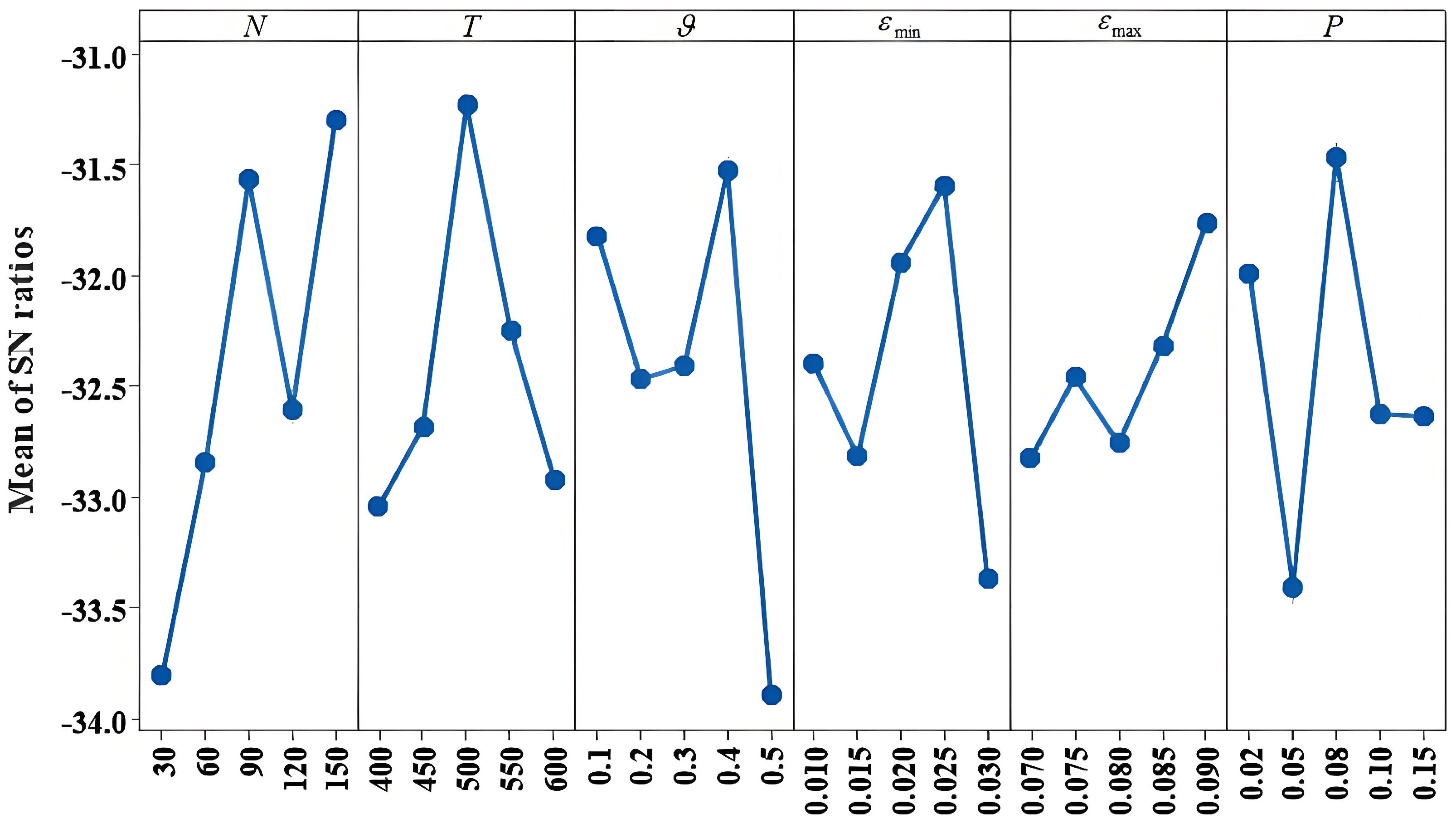

3.3.2. Convergence Factor and Flight Step Size Optimization

During the trapping phase of the GWO algorithm, the original implementation uses a linearly decreasing convergence factor $a$, as defined in Equation (27). However, this linear strategy may cause the search range to shrink prematurely in later iterations, reducing global exploration capability. To enhance search flexibility and avoid early convergence, this study introduces a stochastic disturbance based on the gamma distribution to adaptively adjust the convergence factor. The revised formulation is given in Equation (42).

where the perturbation adjustment factor controls the strength of the stochastic disturbance.

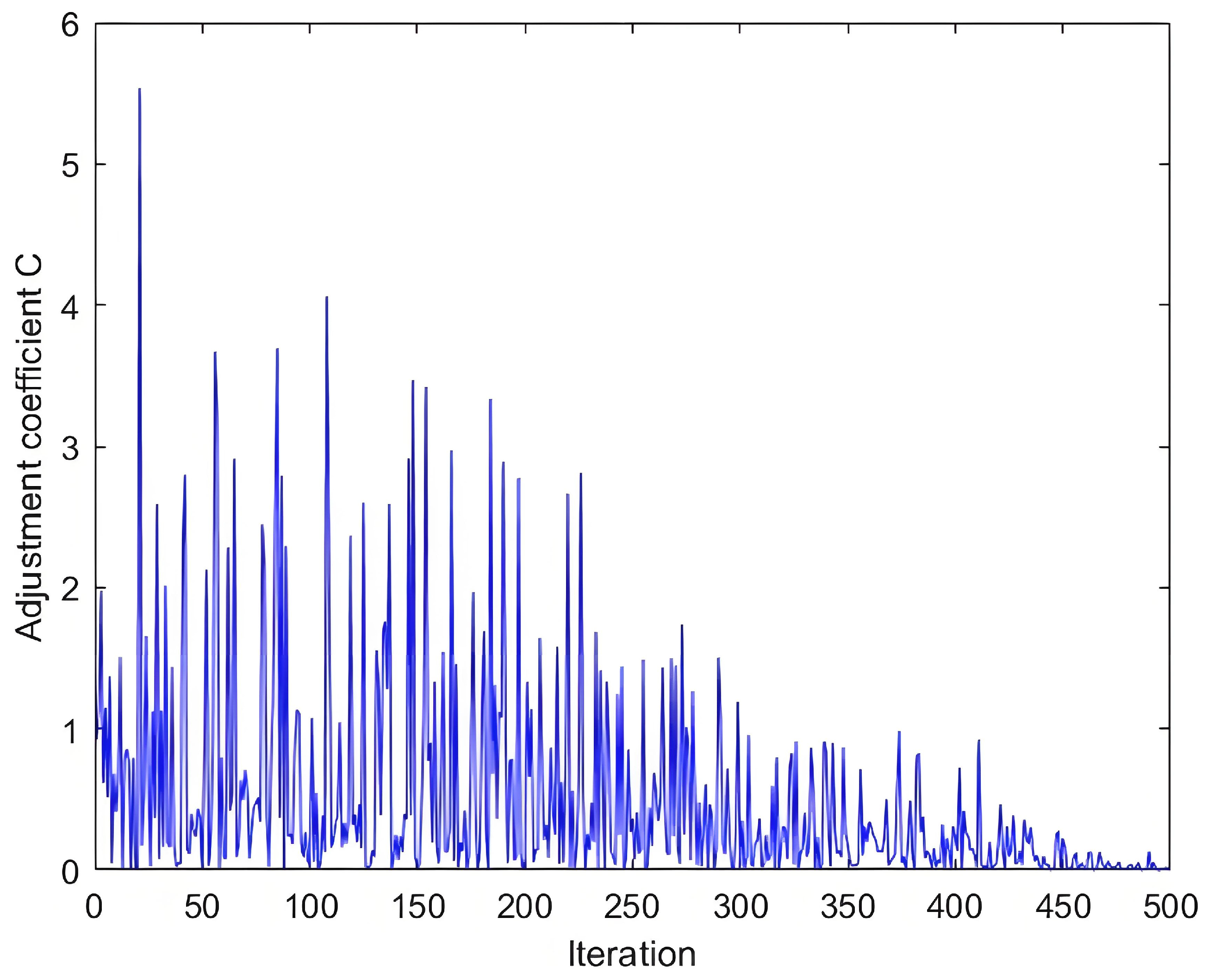

In addition, the adjustment coefficient $C$, which maintains stochastic fluctuations throughout the iteration process, does not fully capture the dynamic nature of the search phase. This limitation may lead to excessive dispersion during the early stage of the search. To address this issue, an adaptive convergence factor is introduced to refine the calculation of coefficient $C$ in the GWO algorithm, as defined in Equation (43).

Here, a random factor is included to improve search diversity.

Figure 5 illustrates that the dynamically adjusted value of coefficient $C$ fluctuates significantly in the early stages, enhancing global exploration. As the iteration progresses, the value gradually stabilizes, supporting a balanced transition between exploration and exploitation.

The Lévy flight mechanism, named after the mathematician Paul Lévy, is a random walk whose step lengths follow a heavy-tailed power-law distribution. In the local exploitation phase of the HHO algorithm, a fixed step size is typically used. In contrast, the original Lévy flight strategy introduces search jumps through randomly varying step sizes. Although its heavy-tailed distribution helps balance local intensification and global exploration, the step scaling factor is usually constant. This lack of adaptability may cause excessive variations in the search range during later iterations, which can undermine convergence stability and degrade solution quality. To address this issue, a dynamic step size adjustment mechanism is introduced. This allows the step length to gradually decrease with the iteration process, thereby adaptively controlling the search scope. The adjustment formulation is provided in Equation (44).

where $S_0$ is the initial step size and a contraction-rate parameter controls how quickly the step length shrinks.

To further enhance search efficiency, each round of Lévy flights incorporates a dynamic step size adjustment. If the fitness of the newly generated solution does not surpass that of the current best solution, the search position is updated using a modified step size. When no improvement is observed over three consecutive iterations, a more refined local search is triggered to intensify exploitation.

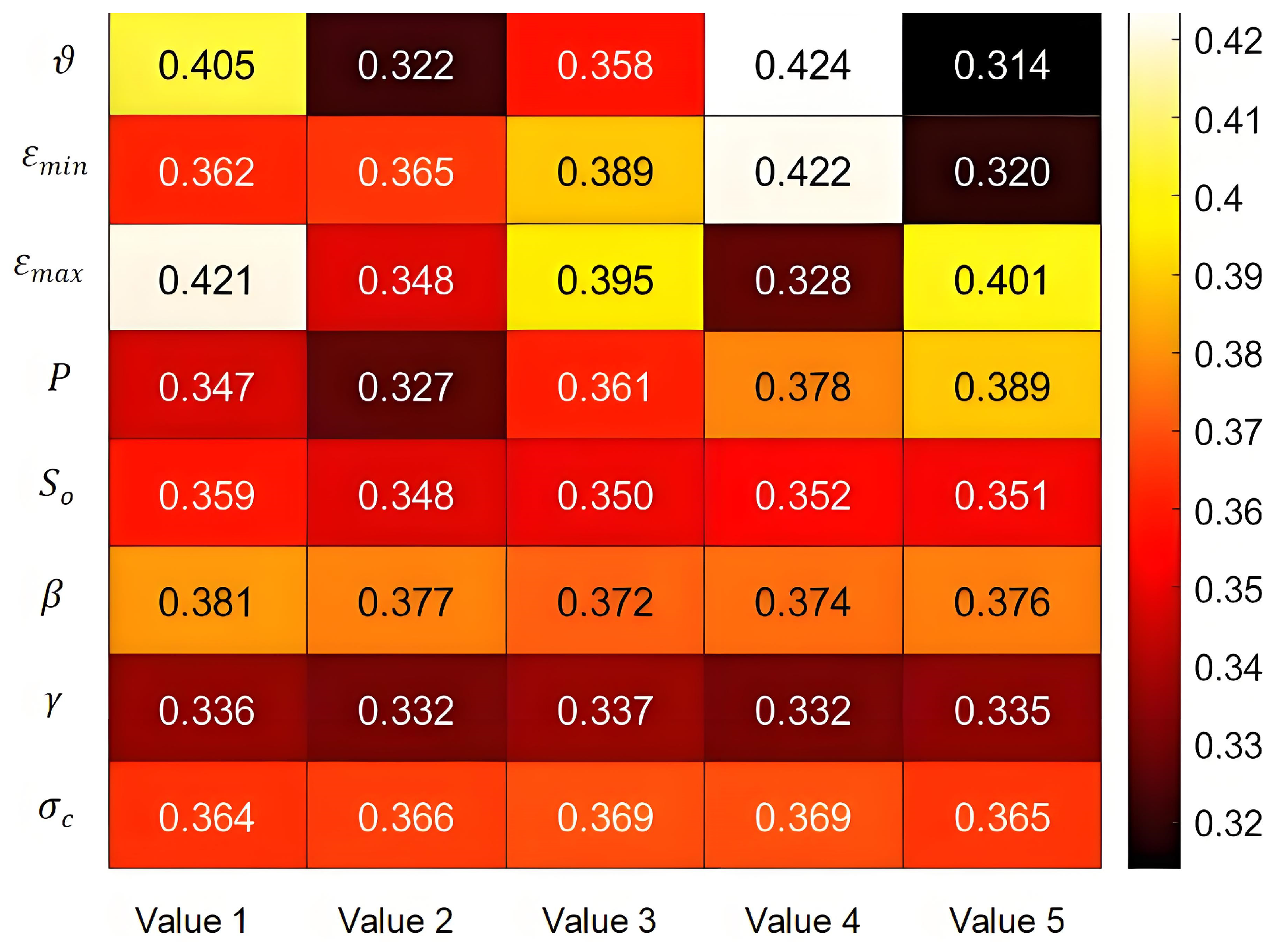

3.3.3. Adaptive Weight Dynamic Switching Strategy

To enable adaptive adjustment and ensure a smooth transition during the search process, this study introduces a switching threshold, as defined in Equation (45).

where the two bounding parameters denote the maximum and minimum values of the switching threshold, and a control parameter regulates the rate of change. Preliminary experiments indicated that setting this control parameter to 5 provides an optimal balance between exploration and exploitation. This setting enables the algorithm to effectively explore the search space while also converging to optimal or near-optimal solutions within a reasonable number of iterations.

To evaluate the search state in real time, the optimal solution change rate is introduced, as shown in Equation (46).

Here, the change rate is computed from the current optimal fitness, and $\eta = 10^{-8}$ is added to avoid a zero denominator.

If the change rate exceeds the threshold, i.e., $\Delta f(t) > \varepsilon(t)$, the search is still exhibiting significant improvement, and the GWO algorithm continues to guide global exploration. Conversely, when the change rate falls below the threshold, the solution tends to stabilize, and control is gradually shifted to the HHO algorithm to enhance local exploitation.

To prevent premature switching to the HHO algorithm caused by fluctuations in the optimal solution, the switching mechanism is further refined by introducing a weight ratio $R(t)$ as the dominant criterion. This mechanism dynamically adjusts the search weights of both algorithms based on the relationship between the change rate $\Delta f(t)$ and the threshold $\varepsilon(t)$, using a Sigmoid function as defined in Equation (47).

where the sensitivity adjustment parameter is typically set to 10 [47].

Based on the dynamic changes in the change rate $\Delta f(t)$ and the threshold $\varepsilon(t)$, different switching conditions are defined for various search phases. In the early stage, a higher threshold allows larger solution fluctuations, promoting global exploration. In the middle stage, the threshold gradually decreases and the weight ratio satisfies $0.5 \le R(t) \le 2.0$, so the GWO and HHO algorithms cooperate to maintain a balance between exploration and exploitation. In the late stage, the threshold reaches its minimum while the improvement rate increases, allowing the HHO algorithm to dominate and enhance local search accuracy.
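One way the switching logic could be realized is sketched below. The exponential threshold decay, the relative change rate, and the particular sigmoid weighting are assumed forms chosen to match the qualitative description, and they are not the paper's Equations (45) to (47). Only the phase boundaries on $R(t)$ follow Algorithm 1.

```python
import math


def switching_threshold(t, T, eps_min, eps_max, k=5.0):
    """ASSUMED form: exponential decay from eps_max toward eps_min."""
    return eps_min + (eps_max - eps_min) * math.exp(-k * t / T)


def change_rate(f_prev, f_curr, eta=1e-8):
    """ASSUMED form: relative change of the best fitness between iterations."""
    return abs(f_prev - f_curr) / (abs(f_prev) + eta)


def search_weights(delta_f, eps, s=10.0):
    """ASSUMED sigmoid weighting: GWO gains weight when delta_f > eps."""
    lam_gwo = 1.0 / (1.0 + math.exp(-s * (delta_f - eps)))
    return lam_gwo, 1.0 - lam_gwo


def select_phase(lam_gwo, lam_hho):
    """Phase boundaries on R(t) = lam_hho / lam_gwo, as in Algorithm 1."""
    ratio = lam_hho / max(lam_gwo, 1e-12)
    if ratio < 0.5:
        return "gwo"
    if ratio <= 2.0:
        return "hybrid"
    return "hho"


if __name__ == "__main__":
    eps = switching_threshold(t=20, T=100, eps_min=0.01, eps_max=0.5)
    delta = change_rate(f_prev=100.0, f_curr=90.0)
    print(eps, delta, select_phase(*search_weights(delta, eps)))
```

Under these assumed forms the ratio reduces to $R = e^{-s(\Delta f - \varepsilon)}$, so the hybrid band $0.5 \le R \le 2$ corresponds to $|\Delta f - \varepsilon| \le \ln 2 / s$.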

In the coordinated search phase, the GWO algorithm generates candidate solutions according to Equation (29), while the HHO algorithm selects appropriate position update strategies based on the prey escape energy $E$ and the random factor $r$. The final position of each individual is then determined by a weighted combination of the two solutions, as defined in Equation (48).

However, the weighted combination is treated as a candidate solution rather than the final output. A multi-source selection mechanism is subsequently applied to determine whether the optimal solution should be updated, as defined in Equation (49).

3.3.4. Elite Retention and Perturbation Strategies

In population-based optimization algorithms, the elite retention strategy enhances convergence by preserving the best-performing individuals in each iteration, thereby preventing the loss of high-quality solutions [48]. However, relying solely on elite retention may reduce population diversity and increase the risk of premature convergence. To address this issue, a Gaussian stochastic perturbation mechanism is introduced to perform localized searches around elite individuals, thereby enhancing search diversity and improving global exploration capability.

After each iteration, individuals are ranked from best to worst according to their fitness values (lowest first, since lower fitness is better here), and the top $P$% are selected to form the elite set. To ensure retention of the current best solution $X^*$, it is forcibly included in the elite set if it does not fall within the top $P$%. The elite set is defined in Equation (50).

To further enhance the exploration capability of elite individuals, Gaussian perturbation is introduced in Equation (51) to generate neighboring solutions. Building on this, Equation (52) incorporates a pair of sinusoidal functions to dynamically adjust the perturbation amplitude throughout the iteration process. In the early stage, a larger amplitude encourages broad exploration and reduces the risk of premature convergence. As the iteration progresses, the amplitude gradually decreases, enabling more focused local searches and improving solution refinement accuracy.

After each round of perturbation, the fitness of the newly generated neighboring solution is evaluated and compared with that of the original elite individual. The better-performing solution is retained for the next iteration, as defined in Equation (53).
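The perturb-then-compare step can be sketched as follows. The linearly decaying amplitude is a simplifying assumption standing in for the sinusoidal schedule of Equation (52), and the function name and arguments are hypothetical.

```python
import random


def perturb_elites(elites, fitness, t, T, sigma0, rng):
    """Gaussian perturbation of each elite with greedy replacement.

    fitness: callable to be minimized. sigma0: initial perturbation amplitude.
    The amplitude schedule below is an assumed placeholder, not Equation (52).
    """
    amplitude = sigma0 * (1.0 - t / T)
    survivors = []
    for x in elites:
        neighbor = [xi + amplitude * rng.gauss(0.0, 1.0) for xi in x]
        # Keep the neighbor only if it strictly improves on the elite.
        survivors.append(neighbor if fitness(neighbor) < fitness(x) else x)
    return survivors


if __name__ == "__main__":
    sphere = lambda x: sum(v * v for v in x)
    elites = [[1.0, 2.0], [0.5, -0.5]]
    print(perturb_elites(elites, sphere, t=10, T=100, sigma0=0.3,
                         rng=random.Random(3)))
```

Greedy replacement guarantees that an elite's fitness never worsens, so the perturbation adds diversity without discarding good solutions.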

During the hybrid search phase of the GWO and HHO algorithms, the fitness values of the combined candidate solutions and the elite-perturbed solutions are compared. The solution with the lowest fitness value is selected as the optimal one for the current iteration.

To maintain a constant population size, the updated elite and non-elite individuals after perturbation are merged and ranked in ascending order of fitness. The top $N$ individuals are then selected to form the next generation. This merging process is expressed in Equation (54).

Here, the merged pool comprises the perturbed elites and the set of updated non-elite individuals, and the SelectBest function selects the top $N$ solutions based on fitness values to keep the population size constant.
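The elite-set construction and the final merge of this subsection can be sketched in a few lines. The function names mirror the text, but the signatures are assumptions, and fitness is treated as a quantity to be minimized.

```python
import math


def elite_set(population, fitness, p_percent, x_best):
    """Top P% of the population, with the best-known solution forced in."""
    k = max(1, math.ceil(len(population) * p_percent / 100.0))
    elites = sorted(population, key=fitness)[:k]
    if x_best not in elites:
        elites.append(x_best)
    return elites


def select_best(elites, non_elites, fitness, n):
    """Merge both groups and keep the n fittest (lowest fitness first)."""
    return sorted(elites + non_elites, key=fitness)[:n]


if __name__ == "__main__":
    f = lambda x: x[0] ** 2
    pop = [[3.0], [1.0], [2.0], [5.0]]
    elites = elite_set(pop, f, p_percent=25, x_best=[0.0])
    print(elites)
    print(select_best(elites, [[3.0], [2.0]], f, n=3))
```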

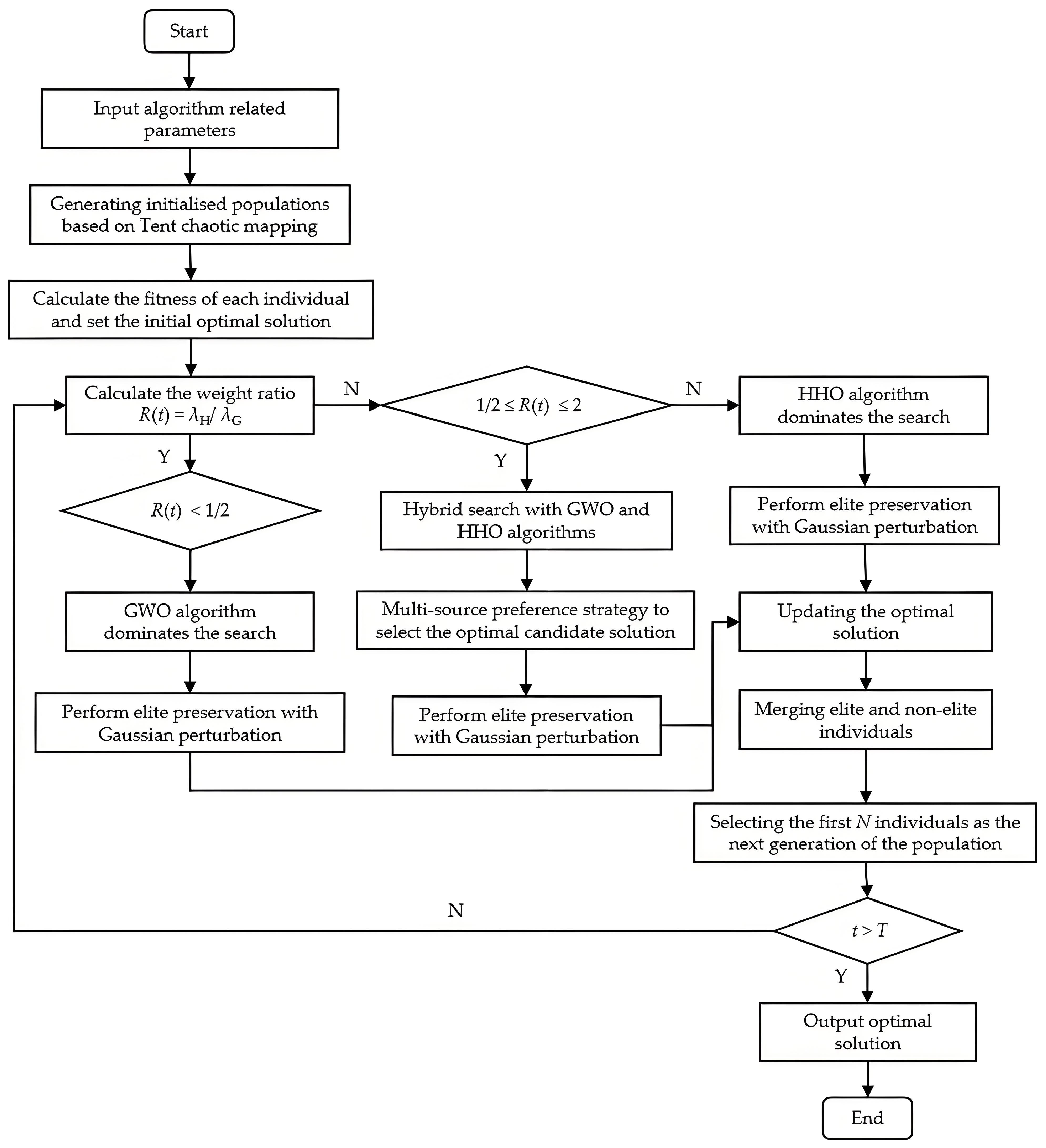

3.3.5. Algorithm Execution Framework

To systematically present the procedural logic of the proposed IGWOHHO algorithm, the pseudocode is provided in Algorithm 1, accompanied by a flowchart. These offer a clear and structured overview of the algorithm’s initialization, dynamic phase transition, hybrid search mechanism, and elite retention strategy.

| Algorithm 1: Framework of the IGWOHHO algorithm |
| --- |
| Input: population size N, max iterations T, perturbation adjustment coefficient, minimum and maximum switching thresholds, elite retention proportion P |
| Output: optimal solution X* |
| 1: Initialize parameters N, T, P, the perturbation adjustment coefficient, and the switching thresholds |
| 2: Generate initial population X0 using Tent chaotic mapping |
| 3: Evaluate fitness of each individual in X0 |
| 4: Set the best individual as X* |
| 5: for t = 1 to T do |
| 6: Evaluate fitness for all individuals |
| 7: Compute ε(t), change rate Δf(t), and dynamic weights λ_GWO, λ_HHO |
| 8: Compute ratio R(t) = λ_HHO/λ_GWO |
| 9: if R(t) < 0.5 then |
| 10: Perform the global search using GWO |
| 11: Apply elite retention and perturbation |
| 12: Update X* if a better solution is found |
| 13: else if 0.5 ≤ R(t) ≤ 2.0 then |
| 14: Perform hybrid search |
| 15: Generate candidate solutions using both GWO and HHO |
| 16: Compute weighted combination |
| 17: Apply multi-source selection |
| 18: Apply elite retention and perturbation |
| 19: Update X* if a better solution is found |
| 20: else |
| 21: Perform local search using HHO based on E and r |
| 22: Apply Lévy-based dynamic refinement |
| 23: Apply elite retention and perturbation |
| 24: Update X* if a better solution is found |
| 25: end if |
| 26: Merge elite and non-elite groups, sort by fitness |
| 27: Select top N individuals for next generation |
| 28: end for |
| 29: return X* |

Figure 6 presents the flowchart of the IGWOHHO algorithm, outlining the key steps from initialization to adaptive strategy selection and solution update. It illustrates the dynamic switching mechanism among GWO, HHO, and hybrid search phases.