Abstract

This work presents a mixed reality (MR) system designed to explore inaccessible cultural heritage sites through immersive and interactive experiences. The application features two versions: an asynchronous personalized guided system offering interactions tailored to individual users’ requests and a synchronous guided system providing a shared, collective navigation experience for all users. Both versions integrate innovative mechanics that allow users to explore virtual recreations of cultural sites. Multi-user functionality ensures the visibility of other users as avatars in the virtual environment, enabling collaborative exploration. The proposed application offers a GPS localization system for on-site experiences and a non-location-dependent option for remote settings. A user evaluation was conducted to assess the effectiveness and engagement of the system, providing insights into user preferences and the potential for MR technologies in preserving and promoting cultural heritage. The results highlight the application’s impact on accessibility, immersion, and multi-user interaction, paving the way for further innovation in MR cultural heritage exploration.

1. Introduction

Cultural heritage sites reflect the architectural, artistic, and social achievements of past civilizations. They offer invaluable insights into the evolution of societies, their cultural practices, and technological advancements. However, due to a variety of factors—ranging from the fragile state of many historical structures to ongoing conservation efforts and geographic remoteness—numerous heritage sites remain inaccessible to the general public [1]. Furthermore, excessive human intervention can accelerate the corrosion of delicate artifacts and structures, making it essential to balance access with preservation. As a result, there is a growing need to explore alternative methods of engaging with these sites without compromising their integrity.

Mixed reality (MR) offers a transformative solution to this challenge, providing a remote immersive cultural experience. With MR, users can virtually explore intricate details of historically significant locations, from ancient temples to modern museums all over the world [2], and engage with digital reconstructions, multimedia narratives, and contextual information. Additionally, a shared multi-user experience enhances cultural learning in virtual heritage by enabling collaboration and social interaction [3]. This not only bridges the gap between physical and virtual experiences but also provides a deeper understanding of historical contexts.

The implementation of digital transformation (DT) technology in cultural sites can produce a range of economic and social outcomes, since advanced technologies like MR attract a diverse range of visitors [4]. Additionally, the adoption of DT can contribute to sustainable tourism by providing immersive experiences and educational opportunities that deepen visitors’ understanding of the site and encourage responsible visitation. Ultimately, such initiatives can drive economic growth while preserving the cultural heritage and promoting long-term development in the region.

1.1. Related Work

Virtual reality (VR) applications offer a powerful tool for the preservation and exploration of cultural heritage, allowing users to experience historically significant sites in immersive, interactive ways [5,6]. By recreating ancient monuments, museums, or inaccessible cultural sites in virtual environments, VR allows users to engage with these spaces as if they were physically present, regardless of geographical limitations or site fragility [7,8]. Digital heritage utilizes augmented reality (AR) to transform cultural preservation by making digital content accessible to a broader audience [9]. AR applications enhance traditional conservation methods by overlaying historical data, imagery, and narratives onto real-world environments, thus creating a hybrid space where users can explore cultural heritage interactively [10,11,12]. As extended reality (XR) continues to evolve, it offers new methods to document, visualize, and share cultural knowledge, promoting both the preservation and dissemination of digital heritage [13].

The integration of 3D reconstruction technologies with AR has significantly impacted the preservation and visualization of cultural heritage artifacts [14]. Techniques like photogrammetry, laser scanning, and lidar are widely used to capture detailed 3D models of fragile or inaccessible artifacts and buildings, offering immersive experiences to users without requiring physical handling [15]. These 3D reconstructions enable museums and cultural institutions to digitally preserve and display items with a high level of realism. Through MR, users can interact with these reconstructions, exploring historical artifacts in their original context or virtually visiting archaeological sites [16,17].

In the context of tourism, MR enhances cultural tourism by enabling visitors to access historical details and reconstructions through mobile devices during site visits [18]. With AR, tourists can visualize missing or damaged parts of historical landmarks, bringing the past to life in real time as they explore [19]. This technology also offers personalized, immersive storytelling experiences and educational opportunities [20], blending digital content with the physical surroundings to enrich tourists’ understanding of historical locations [21]. By offering interactive and informative content, MR boosts the appeal of cultural heritage sites, transforming them into engaging destinations that combine education with exploration.

1.2. Design and Contribution

In this paper, we present the initial results of an MR application that enhances the exploration of cultural heritage sites through immersive and interactive experiences. It was developed in the framework of the project Digital Dynamic and Responsible Twins for XR: DIDYMOS-XR [22]. The application supports both VR and AR platforms, offering users the ability to navigate virtual representations of historic landmarks, interact with digital guides, and access personalized or shared educational content through a multi-user guided experience, either on-site or entirely remotely. The results highlight the potential of MR technologies to bridge accessibility gaps, increase engagement with cultural history, and contribute to the development of digital twins for virtual tourism.

A user evaluation study with 20 participants was conducted to assess both versions of the guided system (synchronous and asynchronous) and to explore the educational and immersive qualities of the application. Feedback from this study serves as a guide for the refinement of the interaction mechanisms and the extension of the system to other sites and contexts. The innovation of this application lies in the combination of advanced features, including multi-user interactivity, real-time environmental adaptation, and the integration of both GPS-enabled and non-location-based positioning. These features, coupled with intricate rendering effects for seamless transitions between physical and virtual environments, establish a highly immersive framework that can be applied to various cultural sites globally. Guided by continuous user feedback, this work contributes to the growing field of MR for cultural heritage, offering a flexible and engaging tool for both education and preservation.

2. Materials and Methods

2.1. Design and Development

The application was developed using Unity3D 2022.3, which was chosen for its robust capabilities in creating cross-platform immersive experiences. Unity’s Universal Render Pipeline (URP) was employed because it allows custom rendering setups to be added when needed. The application supports two hardware platforms: the Meta Quest 3 via the OpenXR SDK and mobile devices via Google ARCore. By targeting these platforms, the application caters to both VR and AR users, delivering an experience that adapts to their respective capabilities. The system integrates a variety of XR elements, such as interactive objects and environmental effects, to enhance users’ engagement and immersion.

For this work, two distinct 3D-scanned models were utilized to represent cultural heritage sites. The first was the Sacrification Church of Pyhämaa (“Sacrification Church of Pyhämaa” by Lassi Kaukonen, licensed under Creative Commons Attribution), located in Pyhämaa, Finland, known for its historic wooden architecture and 17th-century wall paintings [23]. The second was the Biblioteca Museu Víctor Balaguer in Vilanova i la Geltrú, Spain (“Biblioteca Museu Víctor Balaguer Art Gallery” by Neàpolis, Vilanova i la Geltrú), a cultural landmark with a rich collection of artifacts and literature. Both models were processed to enable interaction and exploration, ensuring smooth navigation while minimizing the rendering costs. This choice of models highlights the adaptability of the system to diverse cultural sites and enhances its applicability for broader heritage exploration.

2.2. Features

The application includes several core features designed to enhance users’ interaction and immersion. These features include portals, which allow users to view and access the interior of cultural sites; avatar selection and multi-player functionality, enabling users to explore the virtual space together; walk-in capabilities, allowing seamless movement between real and virtual environments; and real-time weather and lighting simulations that adapt the experience to match the current environmental conditions.

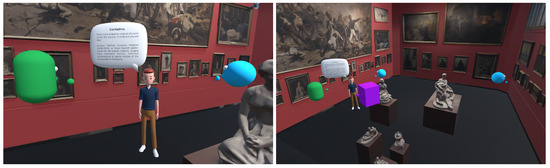

Multi-User. Each user can choose an avatar, which is shown to the other connected users. Connected clients see each other in the correct position within the virtual site because their transforms are synchronized relative to a shared origin point. When a client connects, their initial position is calculated from their local position relative to their origin in the virtual environment. Any subsequent movement is tracked and applied as a transformation relative to this origin. These position and rotation updates are transmitted to the other clients, which apply the same transforms to their local player origins, ensuring that each player appears in the correct location in the shared virtual space (Figure 1).
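The origin-relative synchronization described above can be sketched in a few lines. The following Python snippet is a minimal ground-plane illustration rather than the Unity implementation; the `Pose` type, the function names, and the restriction to yaw-only rotation are assumptions made for brevity.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float    # metres along the horizontal X axis
    z: float    # metres along the horizontal Z axis
    yaw: float  # radians, rotation about the vertical axis


def to_origin_space(world: Pose, origin: Pose) -> Pose:
    """Express a pose relative to an origin (what the sender transmits)."""
    dx, dz = world.x - origin.x, world.z - origin.z
    c, s = math.cos(origin.yaw), math.sin(origin.yaw)
    # Rotate the offset by -origin.yaw (inverse of the origin's rotation).
    return Pose(c * dx - s * dz, s * dx + c * dz, world.yaw - origin.yaw)


def from_origin_space(local: Pose, origin: Pose) -> Pose:
    """Re-apply an origin-relative pose to a (possibly different) origin."""
    c, s = math.cos(origin.yaw), math.sin(origin.yaw)
    # Rotate the offset by +origin.yaw, then translate by the origin.
    return Pose(origin.x + c * local.x + s * local.z,
                origin.z - s * local.x + c * local.z,
                local.yaw + origin.yaw)
```

A sender would call `to_origin_space` with its own origin before transmitting, and each receiver would call `from_origin_space` with its local copy of the origin, so that both sides agree on where the avatar stands relative to the site.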

Figure 1.

Demonstration of the multi-player mode from two different points of view during a synchronous guided tour in the Biblioteca Museu Víctor Balaguer. Capture from Quest 3 on the left and capture from the host PC on the right.

Portals. Portals are virtual openings that allow users to see and access the interior of a cultural site from the outside. They serve as interactive windows that can be placed on real-world surfaces. To achieve the “portal” effect, we implemented a custom rendering setup within the Unity Universal Render Pipeline (URP), leveraging three distinct render objects. Each render object corresponds to a specific layer, tailored to handle a key aspect of the portal’s functionality. This design enables the precise isolation and manipulation of the portal area, ensuring seamless integration between the virtual content and the physical environment.

- Layer 1 (Mask): Responsible for rendering the portal’s surface. This layer defines the visual boundary of the portal, distinguishing it from the surrounding scene.

- Layer 2 (Inside Portal): Handles the rendering of the virtual content visible through the portal. This ensures that only the area within the portal displays the virtual elements, creating the effect of looking into a different space.

- Layer 3 (Effects): Manages the transition effects, such as particle systems and custom visual shaders, providing a smooth and dynamic interaction at the portal’s edges.

Each layer is configured with specific rendering settings, as outlined in Table 1. Key properties include the following.

- Compare: Specifies how the layer’s reference value is compared against the value already stored in the stencil buffer to determine whether a pixel is drawn. For example, “Greater” means that the pixel is only drawn if the reference value is greater than the stored value.

- Pass: Defines the action taken on the stencil buffer when the test succeeds.

Fail and Z Fail rows are omitted as they are configured uniformly to “Keep” across all layers, meaning that no changes are made to the stencil buffer when the stencil test or the depth test fails. This multi-layered approach ensures that the portal effect maintains high visual quality and interactive realism, supporting immersive experiences within mixed-reality environments.

Table 1.

Custom render pipeline settings.

| Property | Mask (Layer 1) | Inside Portal (Layer 2) | Effects (Layer 3) |
|---|---|---|---|
| Applied to | Portals | Behind Portals | Around Portals |
| Queue | Opaque | Opaque | Transparent |
| Compare | Greater | Equal | Not Equal |
| Pass | Replace | Keep | Keep |
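One way to read Table 1 is as a sequence of stencil-style tests, in which each layer compares a reference value with a value stored per pixel. The Python sketch below simulates that reading on a single row of pixels. The reference value of 1, the pixel labels, the function names, and the assumption that the virtual scene covers every pixel are all illustrative choices, not the actual URP configuration.

```python
def stencil_passes(compare: str, reference: int, stored: int) -> bool:
    """Evaluate one comparison between the reference and the stored value."""
    if compare == "Greater":
        return reference > stored
    if compare == "Equal":
        return reference == stored
    if compare == "NotEqual":
        return reference != stored
    raise ValueError(f"unsupported compare function: {compare}")


def compose_row(portal_cover, effect_cover, reference=1):
    """Return what each pixel shows after the three layers are drawn."""
    n = len(portal_cover)
    stencil = [0] * n        # buffer starts cleared
    pixels = ["real"] * n    # camera feed or passthrough by default

    # Layer 1 (Mask): Compare = Greater, Pass = Replace.
    for i in range(n):
        if portal_cover[i] and stencil_passes("Greater", reference, stencil[i]):
            stencil[i] = reference

    # Layer 2 (Inside Portal): Compare = Equal, Pass = Keep.
    for i in range(n):
        if stencil_passes("Equal", reference, stencil[i]):
            pixels[i] = "virtual"

    # Layer 3 (Effects): Compare = Not Equal, Pass = Keep.
    for i in range(n):
        if effect_cover[i] and stencil_passes("NotEqual", reference, stencil[i]):
            pixels[i] = "effect"

    return pixels
```

With a portal covering the two middle pixels and effect geometry overlapping the left half, `compose_row([False, True, True, False], [True, True, False, False])` leaves virtual content only inside the portal and effects only outside it.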

For the user to be able to place portals, a model of the exterior of the cultural site was used, with a disabled mesh renderer, leaving only the collider active to provide collision feedback. The collision plane is designed to handle interactions with both mobile devices and the Quest 3. For mobile devices, it has an AR plane component to register tap events via ARCore, while, for the Quest 3, it has the XRSimpleInteractable component to detect controller input. When an interaction occurs, a portal prefab is instantiated at the selected location and, over time, expands smoothly to its final size, using a scaling animation. The portal prefab consists of a circular plane with the custom Mask layer and shader to display the virtual content behind it and a particle effect around its edges using the Effects layer (Figure 2).

Figure 2.

Screenshot from the mobile application showcasing the portal feature. The user taps on the screen to open multiple portals, allowing them to see inside an otherwise inaccessible virtual site (Sacrification Church of Pyhämaa).

When the user steps through the portal, the system switches from the portal effect to the full virtual environment by checking the position of the user’s camera. A trigger collider attached to the door portal detects the camera crossing through, and the sign of the dot product between the portal-to-camera vector and the portal’s plane normal determines which side of the portal the camera is on. If the camera is on the virtual side, the portal’s custom layers are disabled, and the camera’s culling mask is set to render the entire virtual scene, creating a seamless transition into the virtual space.
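The side test can be illustrated with plain vector arithmetic. This Python sketch assumes that the portal normal points toward the real-world side and that the test uses the vector from the portal centre to the camera; both conventions and the function names are assumptions for illustration.

```python
def dot(a, b):
    """Dot product of two 3D vectors given as tuples."""
    return sum(x * y for x, y in zip(a, b))


def is_on_virtual_side(camera_pos, portal_pos, portal_normal) -> bool:
    """True when the camera lies behind the portal plane.

    Assumes portal_normal points toward the real-world side, so a
    negative signed distance means the camera has crossed the portal.
    """
    to_camera = tuple(c - p for c, p in zip(camera_pos, portal_pos))
    return dot(to_camera, portal_normal) < 0.0
```

For a portal at the origin facing along +Z, a camera at `(0, 1.6, 0.5)` is still on the real side, while a camera at `(0, 1.6, -0.5)` has stepped through.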

Weather and Lighting. To render real-time weather conditions at the cultural site, we integrated the OpenWeatherMap API into Unity3D. This API allows us to retrieve live weather data via simple HTTP requests. Each request is constructed as a URL containing the API key, endpoint (such as current weather or forecasts), and parameters like the city name or geographic coordinates. Once the request is sent, the API returns a JSON response with detailed weather data, including the temperature, humidity, and specific conditions like cloud coverage or precipitation. We parse these data in Unity and use them to adapt the virtual environment based on the real-world conditions at the site.
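The request and parsing steps can be sketched as follows. This Python snippet is illustrative rather than the application’s Unity code: it builds a URL for the OpenWeatherMap current-weather endpoint and extracts a few fields from a response. The coordinates, the `YOUR_API_KEY` placeholder, the helper names, and the sample payload are assumptions; the payload only mimics the general shape of a response and is not real data.

```python
import json
from urllib.parse import urlencode

BASE_URL = "https://api.openweathermap.org/data/2.5/weather"


def build_request_url(lat: float, lon: float, api_key: str) -> str:
    """Compose the request URL from the endpoint, coordinates, and API key."""
    query = urlencode({"lat": lat, "lon": lon, "appid": api_key, "units": "metric"})
    return f"{BASE_URL}?{query}"


def parse_weather(payload: str) -> dict:
    """Pull out the fields needed to drive the virtual environment."""
    data = json.loads(payload)
    return {
        "condition": data["weather"][0]["main"],
        "temperature_c": data["main"]["temp"],
        "humidity_pct": data["main"]["humidity"],
        "cloud_cover_pct": data.get("clouds", {}).get("all", 0),
    }


# Illustrative payload only; values are placeholders, not measurements.
SAMPLE_PAYLOAD = (
    '{"weather": [{"id": 500, "main": "Rain", "description": "light rain"}],'
    ' "main": {"temp": 7.2, "humidity": 81}, "clouds": {"all": 90}}'
)

url = build_request_url(60.0, 20.0, "YOUR_API_KEY")
weather = parse_weather(SAMPLE_PAYLOAD)
```

In practice the URL would be fetched with an HTTP client and the response body passed to `parse_weather`; the network call is omitted here so that the sketch runs offline.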

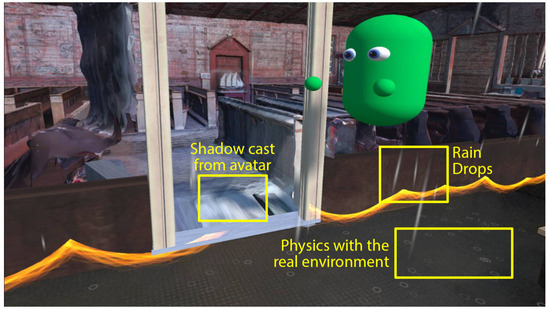

Six weather conditions were modeled to demonstrate the environmental adaptability of the application: clear sky, drizzle, rain, thunderstorm, clouds, and mist. Each condition is visually represented using Unity’s particle systems, optimized for low-resource usage on mobile devices. Rain types use simple, low-polygon raindrop effects, and mist is a combination of image-based fog and low-density particles used to simulate atmospheric diffusion, ensuring that each weather type is rendered with performance efficiency in mind. For the light direction estimation, we used a sun direction calculation based on the user’s location, and the light intensity is derived from the weather conditions [24] and then applied to the virtual scene’s directional light (Figure 3).
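A simple lookup is one way to connect the six modeled conditions to scene settings. In the Python sketch below, the effect names, the intensity factors, and the scaling by sun elevation are assumptions chosen for illustration; the paper derives its light intensity from the weather conditions following [24], which this sketch does not reproduce.

```python
import math

# Hypothetical presets: (particle effect, light intensity factor in 0..1).
CONDITION_PRESETS = {
    "Clear": ("none", 1.0),
    "Clouds": ("none", 0.6),
    "Mist": ("fog", 0.5),
    "Drizzle": ("light_rain", 0.5),
    "Rain": ("rain", 0.4),
    "Thunderstorm": ("heavy_rain", 0.25),
}


def scene_settings(condition: str, sun_elevation_deg: float):
    """Return (effect name, directional light intensity) for a condition.

    Unknown conditions fall back to the clear-sky preset. The intensity
    is scaled by the sine of the sun elevation and clamped at zero once
    the sun is below the horizon.
    """
    effect, weather_factor = CONDITION_PRESETS.get(
        condition, CONDITION_PRESETS["Clear"]
    )
    daylight = max(0.0, math.sin(math.radians(sun_elevation_deg)))
    return effect, round(weather_factor * daylight, 3)
```

Keeping the presets in a single table makes it straightforward to tune the values per device or to add further conditions later.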

Figure 3.

Visualization of weather and lighting simulations in the application. The virtual environment dynamically adapts to reflect the real site’s lighting and weather conditions. Capture from Quest 3.

2.3. Guide

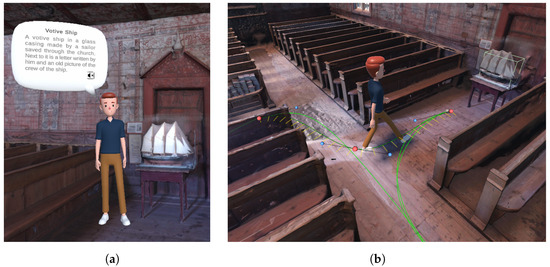

We implemented two versions of a virtual guide system—synchronous and asynchronous. Both versions share common logic for the delivery of contextual information about points of interest (POIs) through a JSON-based data retrieval mechanism. This JSON file, parsed using Unity’s JsonUtility, contains relevant details such as historical facts, names, and multimedia links. The data are displayed in real time through a speech bubble above the guide’s head, implemented with Unity’s Canvas system (Figure 4, left). This provides an interactive and educational experience while maintaining smooth and engaging communication.
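The POI lookup can be sketched as below. The schema (`pois`, `id`, `name`, `facts`, `mediaUrl`) and the helper names are hypothetical, since the paper does not list the actual file layout, and the sample entry is placeholder text. The top-level wrapper object is used because Unity’s JsonUtility does not deserialize a bare top-level array.

```python
import json
from dataclasses import dataclass


@dataclass
class PointOfInterest:
    name: str
    facts: str
    media_url: str


def load_pois(text: str) -> dict:
    """Parse the POI file into a dictionary keyed by POI id."""
    raw = json.loads(text)
    return {
        item["id"]: PointOfInterest(
            name=item["name"],
            facts=item["facts"],
            media_url=item.get("mediaUrl", ""),
        )
        for item in raw["pois"]
    }


def speech_bubble_text(poi: PointOfInterest) -> str:
    """Format the text shown in the guide's speech bubble."""
    return f"{poi.name}: {poi.facts}"


# Placeholder content; the real file holds the site's historical details.
SAMPLE_JSON = """
{"pois": [{"id": "poi_01",
           "name": "Example exhibit",
           "facts": "Placeholder description of the exhibit.",
           "mediaUrl": ""}]}
"""

pois = load_pois(SAMPLE_JSON)
bubble = speech_bubble_text(pois["poi_01"])
```

Both guide versions could share such a lookup, differing only in which POI id is requested and when.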

2.3.1. Synchronous Guide

The synchronous guide offers a shared experience for all connected users. The virtual guide follows a predefined path between selected POIs, ensuring that all users view the same content at the same time and visit the most important points of the site. The path is created using Bézier curves, which allow smooth transitions and fluid movement through the virtual site. Positions along these curves are computed as Unity Vector3 values and applied to the guide’s transform to achieve natural navigation. To enhance the realism, Unity’s Animator system synchronizes walking, turning, and idle animations with the guide’s progress along the path. This shared approach allows for collaborative and synchronized exploration, aligning all users’ experiences with the guide’s movements and POI presentations.
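Evaluating a point on a Bézier segment takes only a few lines. The sketch below assumes cubic segments with four control points, which the paper does not specify, and uses plain tuples in place of Unity’s Vector3; the function names are illustrative.

```python
def cubic_bezier(p0, p1, p2, p3, t: float):
    """Point on a cubic Bezier curve for a parameter t in [0, 1]."""
    u = 1.0 - t
    return tuple(
        u ** 3 * a + 3 * u * u * t * b + 3 * u * t * t * c + t ** 3 * d
        for a, b, c, d in zip(p0, p1, p2, p3)
    )


def sample_path(p0, p1, p2, p3, steps: int):
    """Evenly spaced parameter samples, including both end points."""
    return [cubic_bezier(p0, p1, p2, p3, i / steps) for i in range(steps + 1)]


# Control points for an illustrative segment between two POIs.
start, ctrl_a, ctrl_b, end = (0.0, 0.0, 0.0), (0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (1.0, 0.0, 0.0)
midpoint = cubic_bezier(start, ctrl_a, ctrl_b, end, 0.5)
```

Advancing `t` from 0 to 1 over the duration of a segment and writing the result to the guide’s position yields the smooth motion described above. Note that equal steps in `t` do not correspond to equal distances along the curve, so a constant walking speed would need an additional arc-length correction.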

2.3.2. Asynchronous Guide

The asynchronous guide adapts to individual users’ interactions, allowing each user to personalize their exploration by selecting whichever POI they find interesting. Instead of following a fixed path, the guide navigates to the selected POI and provides details about the artifact (Figure 4, right). Upon selection, the application calculates the guide’s path to the chosen POI using Bézier curves, ensuring smooth movement. As in the synchronous version, animations are triggered based on the guide’s progress along the path. The JSON-based information retrieval system ensures that the guide presents the relevant POI details through its speech bubble upon arrival. This approach enables a tailored, user-driven experience, offering flexibility and personalization without compromising on immersion or educational value.

Figure 4.

The virtual guide functionality in the application. The guide offers dynamic, educational content about POIs and follows predefined paths to enhance users’ interaction and engagement in the virtual heritage environment. Capture from Quest 3 on the left; capture from Unity’s scene view on the right. (a) The guide provides details about a user-selected POI. (b) The guide navigates through predefined paths based on the user’s selection.

2.4. Localization System

Our application supports two distinct localization systems: a GPS-dependent version, ensuring that the virtual model is appropriately aligned with the real-world environment, and a non-location-based version. Each version offers unique advantages depending on the user’s location and device capabilities [25,26].

2.4.1. GPS-Dependent Localization

The GPS-dependent localization system leverages the user’s real-world geographic coordinates (latitude, longitude, and heading) to precisely align the virtual building with its real-world counterpart. This method ensures that the virtual site is placed accurately, making it ideal for outdoor applications where GPS data are accessible. The process begins by calculating the distance between the user’s current position and the target building using the Haversine equation, which determines the shortest distance between two points on the Earth’s surface based on their latitude and longitude:

$$d = 2r \arcsin\left(\sqrt{\sin^2\left(\frac{\varphi_2 - \varphi_1}{2}\right) + \cos\varphi_1 \cos\varphi_2 \sin^2\left(\frac{\Delta\lambda}{2}\right)}\right)$$

where $d$ is the distance between the user and the building, $r$ is the Earth’s radius (≈6378 km), $\varphi_1$ and $\varphi_2$ are the latitudes of the user and the building, and $\Delta\lambda$ is the difference in longitude. Additionally, the bearing equation is used to compute the angle between the user and the building, with respect to the north:

$$\theta = \operatorname{atan2}\left(\sin\Delta\lambda \cos\varphi_2,\; \cos\varphi_1 \sin\varphi_2 - \sin\varphi_1 \cos\varphi_2 \cos\Delta\lambda\right)$$

where $\theta$ is the bearing angle between the user and the building. By knowing the user’s heading (rotation) and the bearing angle between them and the building, the relative orientation $\theta_{rel}$ is calculated. Using this angle and the distance, a polar-to-Cartesian conversion is applied to calculate the building’s virtual location relative to the user:

$$x = d \sin\theta_{rel}, \qquad z = d \cos\theta_{rel}$$

The calculated coordinates in the virtual world $(x, z)$ are then used to place the building within the MR environment, ensuring that the virtual model aligns with its true location in the real world. This GPS method is particularly beneficial for large-scale outdoor environments, as it maintains accurate positional and rotational alignment between the real and virtual worlds.
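The three steps (Haversine distance, bearing, and polar-to-Cartesian conversion) can be combined in a short Python sketch. The function names are illustrative, and two conventions are assumptions rather than details confirmed by the paper: the relative angle is taken as the bearing minus the user’s heading, and the result is expressed with X to the user’s right and Z straight ahead.

```python
import math

EARTH_RADIUS_M = 6_378_000.0  # matches the ~6378 km radius quoted in the text


def haversine_m(lat1, lon1, lat2, lon2) -> float:
    """Great-circle distance in metres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin((phi2 - phi1) / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def bearing_rad(lat1, lon1, lat2, lon2) -> float:
    """Initial bearing from point 1 to point 2, clockwise from north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlambda = math.radians(lon2 - lon1)
    y = math.sin(dlambda) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlambda))
    return math.atan2(y, x) % (2 * math.pi)


def building_offset(user_lat, user_lon, heading_deg, bld_lat, bld_lon):
    """Building position (x, z) in metres relative to the user's view."""
    d = haversine_m(user_lat, user_lon, bld_lat, bld_lon)
    # Assumed convention: relative angle = bearing - heading.
    rel = bearing_rad(user_lat, user_lon, bld_lat, bld_lon) - math.radians(heading_deg)
    return d * math.sin(rel), d * math.cos(rel)
```

For example, a building 0.001° of latitude due north of a user who faces north lands roughly 111 m straight ahead, and the same building appears to the left once the user turns to face east.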

2.4.2. Non-Location-Based Localization

In contrast, the non-location-based localization system is used when GPS data are unavailable or unnecessary. In this system, the virtual building automatically appears in front of the user when they open the app, typically centered within the AR camera’s view. The virtual model is instantiated at a default position, ensuring that the building is visible and proportionally aligned with the user’s viewpoint. While this method lacks external positioning data, it provides a straightforward way to engage with the virtual environment indoors or in locations where GPS signals may be unreliable.
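Default placement amounts to offsetting the model along the camera’s forward direction. In the Python sketch below, the 5 m distance, the ground height, the function name, and the choice to turn the model toward the user are assumptions; the paper states only that the building appears in front of the user at a default position.

```python
import math


def place_in_front(camera_pos, camera_yaw_deg, distance_m=5.0, ground_y=0.0):
    """Return (position, yaw in degrees) for a model placed ahead of the camera.

    Uses a Y-up convention in which yaw 0 looks along +Z and yaw 90
    looks along +X. Only the horizontal camera direction is used, so the
    model stays upright on the ground plane.
    """
    yaw = math.radians(camera_yaw_deg)
    forward_x, forward_z = math.sin(yaw), math.cos(yaw)
    position = (
        camera_pos[0] + distance_m * forward_x,
        ground_y,
        camera_pos[2] + distance_m * forward_z,
    )
    # Turn the model by 180 degrees so that its front faces the user.
    facing_yaw = (camera_yaw_deg + 180.0) % 360.0
    return position, facing_yaw
```

Calling `place_in_front((0.0, 1.6, 0.0), 0.0)` puts the model 5 m ahead on the ground, rotated to face the viewer.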

By offering both GPS-dependent and non-location-based systems, our application can cater to different environments and user needs, enabling accurate virtual site placement both in real-world outdoor settings and in locations where GPS is not available.

2.5. User Evaluation

After reaching a fully functional prototype state, the system was evaluated by 20 users, aged between 20 and 35 years old and all university students or graduates, who voluntarily participated in testing. Upon completing the XR experience, the users were asked to fill out a questionnaire comprising 17 questions. The questionnaire was designed to assess key aspects of the application, including the level of immersion, ease of interaction, educational value, and overall satisfaction. Several questions were adapted from the widely used Questionnaire of Presence (QoP) [27], focusing on control factors, sensory factors, distraction factors, and realism factors. These categories were used to evaluate the extent to which the users felt immersed and engaged, as well as the degree of realism and consistency in the virtual environment. Additionally, questions were included to gauge user perceptions of key features such as portals, the guide system, avatar interactions, and environmental elements like weather and lighting.

Before beginning the experience, the users received a brief verbal explanation of the navigation controls and interaction mechanisms for their respective device, either a mobile device or the Quest 3. In the asynchronous guide version, users could interact with the environment by selecting points of interest so that the guide could provide targeted information based on their requests. In the synchronous version, participants shared the guide experience simultaneously, observing the guide and accessing information in unison with others. Both versions allowed the users to explore the cultural site, open portals to reveal additional areas, and interact with elements within the environment. The questions focused on evaluating the perceived educational value, interaction quality, and overall engagement with the system. The feedback obtained from this study was instrumental in understanding user preferences and identifying areas for future improvement, ultimately contributing to the iterative refinement of the application.

3. Results and Discussion

The user evaluation results were highly positive, indicating that the application successfully delivered an engaging and immersive experience. Below, in Table 2, Table 3 and Table 4, we explore these findings in three distinct sections: the level of immersion, the importance of specific features, and the potential of mixed reality in enhancing cultural heritage.

3.1. Level of Immersion

The collected data suggest that the users experienced a moderate to high level of immersion during the MR experience (Table 2). Despite varying familiarity with XR technologies, as indicated by the mean experience scores for VR (2.95) and AR (2.30), the users reported a strong sense of involvement in the environment (3.75). The visual aspects of the application were particularly impactful (4.55), contributing significantly to the overall experience. While the naturalness of interactions scored lower (3.65), the environment’s responsiveness was rated highly (4.40), indicating that the dynamic feedback mechanisms were effective in maintaining users’ engagement and immersion.

Table 2.

Level of immersion.

| Evaluate the Level of Control and Immersion You Perceived During Your Experience | Mean | SD |
|---|---|---|
| How experienced are you with VR? | 2.95 | 1.36 |
| How experienced are you with AR? | 2.30 | 1.26 |
| How experienced are you with apps for cultural heritage? | 1.75 | 0.72 |
| How aware were you of events occurring in the real world around you? | 3.15 | 1.14 |
| How responsive was the environment to actions that you initiated (or performed)? | 4.40 | 0.68 |
| How natural did your interactions with the environment seem? | 3.65 | 0.88 |
| How much did the visual aspects of the environment contribute to your overall experience? | 4.55 | 0.60 |
| How much did your experiences in the virtual environment seem consistent with your real-world experiences? | 3.60 | 0.68 |
| How involved were you in the MR experience? | 3.75 | 0.72 |

3.2. Importance of Elements

Among the aspects contributing to a more engaging MR experience for cultural heritage, environmental realism and interaction with points of interest received the highest ratings, with identical mean scores of 4.35 (Table 3). The former aligns with the users’ emphasis on visual fidelity, underscoring its importance for overall immersion, while the latter indicates that direct engagement with elements in the environment significantly impacts user satisfaction. The multi-player synchronous experience (4.05) and the virtual guide (3.80) were also appreciated for their contributions to the sense of presence and collaborative exploration. These results highlight the need to prioritize detailed environments, interactive elements, and multi-user functionality in future developments to further improve the user experience.

Table 3.

Aspects of immersion.

| Evaluate the Importance of the Following for a More Engaging MR Experience for Cultural Heritage | Mean | SD |
|---|---|---|
| Virtual Guide | 3.80 | 0.89 |
| Multi-Player Synchronous Experience | 4.05 | 1.00 |
| Interacting with Points of Interest | 4.35 | 0.75 |
| Environmental Realism | 4.35 | 0.75 |

3.3. MR for Cultural Heritage

The user evaluation suggests that participants perceive MR systems as enhancing the educational value of cultural heritage experiences (Table 4). The participants agreed that such applications would increase their interest in cultural and educational content (4.20) and that visualization makes historic facts easier to remember (4.35). Moreover, the users acknowledged the broader potential of MR technology, with the highest score (4.65) reflecting their belief in its applicability to other fields like science and art. These results support the argument that museums and cultural institutions should integrate MR systems to enrich visitor engagement, learning, and retention through immersive and interactive experiences.

Table 4.

MR for cultural heritage.

| Mixed Reality for Cultural Heritage | Mean | SD |
|---|---|---|
| I think that my interest in cultural and educational content would be higher if interactive content and MR systems were used. | 4.20 | 0.83 |
| It is easier to remember a historic fact if it is visualized. | 4.35 | 0.67 |
| I believe that MR systems could be utilized in other fields. | 4.65 | 0.49 |
| I would use this or similar apps again for other cultural sites. | 4.10 | 0.72 |

3.4. Limitations

While the present work offers significant advancements in the use of mixed reality (MR) for cultural heritage, it is not without limitations. One of the primary challenges lies in the reliance on GPS-dependent localization for outdoor environments, which can be affected by signal accuracy, especially in urban areas with tall buildings or in remote regions. This limitation may result in minor misalignment between the virtual and real-world models, affecting the overall user experience. Additionally, the non-location-based system, while useful for indoor environments, lacks the contextual accuracy provided by GPS-based methods, potentially reducing the sense of immersion. Furthermore, the current implementation does not incorporate advanced haptic feedback or environmental interactions, which could further enhance the sense of presence and engagement.

Future work can address these limitations by integrating more robust localization methods, such as combining GPS with Simultaneous Localization and Mapping (SLAM) [28] or with geo-referenced image matching technologies for improved precision [29]. Expanding the application to include adaptive virtual guide behaviors, such as real-time responses to user queries or actions, could make the experience more interactive and personalized; alternatively, a human guide could be connected to the app through live chat [30]. Furthermore, conducting larger-scale user studies with diverse demographics will provide deeper insights into user preferences and areas for improvement. By addressing these aspects, the proposed system could evolve into a more versatile and comprehensive platform for the preservation and promotion of cultural heritage through MR technologies.

4. Conclusions

This study demonstrates the potential of integrating mixed reality (MR) technology into cultural heritage applications to enhance users’ engagement and learning experiences. By combining GPS-dependent and non-location-based localization systems, users can interact with virtual reconstructions of cultural sites, whether in outdoor or indoor environments. Unlike many existing applications for virtual monument visits, which often provide only static 3D models, our system integrates features like the portal effect, which provides visual access to otherwise inaccessible locations, dynamic environmental adaptation, and a shared multi-user experience, offering innovative methods to bridge the gap between physical and digital heritage. Additionally, including virtual guides and interactive elements creates a dynamic environment that encourages both individual exploration and collaborative engagement, enriching the user’s understanding of the historical and cultural contexts.

The significance of this work lies in its contribution to the evolving field of digital heritage by showcasing the potential of MR technologies in creating immersive, educational, and socially engaging experiences. By addressing challenges such as accurate localization, interactive storytelling, and multi-user functionality, this work highlights pathways for future development in virtual heritage. These technologies serve as valuable tools for the preservation and promotion of cultural heritage and pave the way for broader applications in education, tourism, and sustainable development. As MR continues to advance, its role in making cultural heritage more accessible and impactful will undoubtedly grow, providing opportunities to reimagine how we experience and preserve history in the digital age.

Author Contributions

Conceptualization, A.C. and T.C.; Methodology, A.C. and T.C.; Software, A.C. and T.C.; Validation, A.C. and T.C.; Formal Analysis, A.C. and T.C.; Investigation, A.C. and T.C.; Resources, A.C. and T.C.; Data Curation, A.C. and T.C.; Writing—Original draft Preparation, A.C.; Writing—Review and Editing, A.C., T.C., G.A. and K.M.; Visualization, A.C. and T.C.; Supervision, G.A. and K.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the European Union’s Horizon 2020 research and innovation program under Grant Agreement No. 101092875-DIDYMOS-XR: Digital dynamic and responsible twins for XR.

Institutional Review Board Statement

Ethical review and approval were waived for this study in accordance with the regulations of the Research Ethics and Deontology Committee of the University of Patras, which do not require review for this type of research.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data is contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Fanini, B.; Pagano, A.; Pietroni, E.; Ferdani, D.; Demetrescu, E.; Palombini, A. Augmented reality for cultural heritage. In Springer Handbook of Augmented Reality; Springer Handbooks; Springer: Cham, Switzerland, 2023; pp. 391–411.

2. Bekele, M.K.; Champion, E. A comparison of immersive realities and interaction methods: Cultural learning in virtual heritage. Front. Robot. AI 2019, 6, 91.

3. Bekele, M.K.; Champion, E.; McMeekin, D.A.; Rahaman, H. The influence of collaborative and multi-modal mixed reality: Cultural learning in virtual heritage. Multimodal Technol. Interact. 2021, 5, 79.

4. Florido-Benítez, L. The Use of Digital Twins to Address Smart Tourist Destinations’ Future Challenges. Platforms 2024, 2, 234–254.

5. Voinea, G.D.; Girbacia, F.; Postelnicu, C.C.; Marto, A. Exploring Cultural Heritage Using Augmented Reality Through Google’s Project Tango and ARCore. In VR Technologies in Cultural Heritage; Duguleană, M., Carrozzino, M., Gams, M., Tanea, I., Eds.; Springer: Cham, Switzerland, 2019; pp. 93–106.

6. Cecotti, H. Cultural heritage in fully immersive virtual reality. Virtual Worlds 2022, 1, 82–102.

7. Paladini, A.; Dhanda, A.; Reina Ortiz, M.; Weigert, A.; Nofal, E.; Min, A.; Gyi, M.; Su, S.; Van Balen, K.; Santana Quintero, M. Impact of virtual reality experience on accessibility of cultural heritage. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 42, 929–936.

8. De Paolis, L.T.; Chiarello, S.; Gatto, C.; Liaci, S.; De Luca, V. Virtual reality for the enhancement of cultural tangible and intangible heritage: The case study of the Castle of Corsano. Digit. Appl. Archaeol. Cult. Herit. 2022, 27, e00238.

9. Campi, M.; di Luggo, A.; Palomba, D.; Palomba, R. Digital surveys and 3D reconstructions for augmented accessibility of archaeological heritage. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 42, 205–212.

10. Boboc, R.G.; Duguleană, M.; Voinea, G.D.; Postelnicu, C.C.; Popovici, D.M.; Carrozzino, M. Mobile augmented reality for cultural heritage: Following the footsteps of Ovid among different locations in Europe. Sustainability 2019, 11, 1167.

11. Litvak, E.; Kuflik, T. Enhancing cultural heritage outdoor experience with augmented-reality smart glasses. Pers. Ubiquitous Comput. 2020, 24, 873–886.

12. Boboc, R.G.; Băutu, E.; Gîrbacia, F.; Popovici, N.; Popovici, D.M. Augmented reality in cultural heritage: An overview of the last decade of applications. Appl. Sci. 2022, 12, 9859.

13. Ch’ng, E.; Cai, S.; Leow, F.T.; Zhang, T.E. Adoption and use of emerging cultural technologies in China’s museums. J. Cult. Herit. 2019, 37, 170–180.

14. Van Nguyen, S.; Le, S.T.; Tran, M.K.; Tran, H.M. Reconstruction of 3D digital heritage objects for VR and AR applications. J. Inf. Telecommun. 2022, 6, 254–269.

15. Bevilacqua, M.G.; Russo, M.; Giordano, A.; Spallone, R. 3D reconstruction, digital twinning, and virtual reality: Architectural heritage applications. In Proceedings of the 2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Christchurch, New Zealand, 12–16 March 2022; pp. 92–96.

16. Teruggi, S.; Grilli, E.; Fassi, F.; Remondino, F. 3D surveying, semantic enrichment and virtual access of large cultural heritage. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, 8, 155–162.

17. Comes, R.; Neamțu, C.G.D.; Grec, C.; Buna, Z.L.; Găzdac, C.; Mateescu-Suciu, L. Digital Reconstruction of Fragmented Cultural Heritage Assets: The Case Study of the Dacian Embossed Disk from Piatra Roșie. Appl. Sci. 2022, 12, 8131.

18. Han, D.I.; tom Dieck, M.C.; Jung, T. User experience model for augmented reality applications in urban heritage tourism. J. Herit. Tour. 2018, 13, 46–61.

19. Panou, C.; Ragia, L.; Dimelli, D.; Mania, K. Outdoors Mobile Augmented Reality Application Visualizing 3D Reconstructed Historical Monuments. In Proceedings of the GISTAM, Funchal, Madeira, Portugal, 17–19 March 2018; pp. 59–67.

20. Vlizos, S.; Sharamyeva, J.A.; Kotsopoulos, K. Interdisciplinary design of an educational applications development platform in a 3D environment focused on cultural heritage tourism. In Proceedings of the Emerging Technologies and the Digital Transformation of Museums and Heritage Sites: First International Conference, RISE IMET 2021, Nicosia, Cyprus, 2–4 June 2021; Proceedings 1. Springer: Cham, Switzerland, 2021; pp. 79–96.

21. Blanco-Pons, S.; Carrión-Ruiz, B.; Lerma, J.L.; Villaverde, V. Design and implementation of an augmented reality application for rock art visualization in Cova dels Cavalls (Spain). J. Cult. Herit. 2019, 39, 177–185.

22. DIDYMOS-XR. Available online: https://didymos-xr.eu/ (accessed on 8 January 2025).

23. Michelin, C. Developing International Religious Tourism: Brochure for Pyhämaa Church of Sacrifice. Bachelor’s Thesis, Satakunta University of Applied Sciences, Pori, Finland, 2023.

24. Chrysanthakopoulou, A.; Moustakas, K. Real-time shader-based shadow and occlusion rendering in AR. In Proceedings of the 2024 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Orlando, FL, USA, 16–21 March 2024; pp. 969–970.

25. Kleftodimos, A.; Moustaka, M.; Evagelou, A. Location-based augmented reality for cultural heritage education: Creating educational, gamified location-based AR applications for the prehistoric lake settlement of Dispilio. Digital 2023, 3, 18–45.

26. Kanade, P.; Prasad, J.P. Mobile and location based service using augmented reality: A review. Eur. J. Electr. Eng. Comput. Sci. 2021, 5, 13–18.

27. Witmer, B.G.; Singer, M.J. Measuring presence in virtual environments: A presence questionnaire. Presence 1998, 7, 225–240.

28. Rui, Z.; Xu, W.; Feng, Z. FAST-LiDAR-SLAM: A Robust and Real-Time Factor Graph for Urban Scenarios With Unstable GPS Signals. IEEE Trans. Intell. Transp. Syst. 2024, 25, 20043–20058.

29. Song, Z.; Kang, X.; Wei, X.; Li, S.; Liu, H. Unified and Real-Time Image Geo-Localization via Fine-Grained Overlap Estimation. IEEE Trans. Image Process. 2024, 33, 5060–5072.

30. Gabellone, F. A digital twin for distant visit of inaccessible contexts. In Proceedings of the 2020 IMEKO TC-4 International Conference on Metrology for Archaeology and Cultural Heritage, Trento, Italy, 22–24 October 2020; pp. 232–237.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).