There is a great deal of research in the field of Intelligent Transportation Systems, driven by the ongoing need to regulate traffic, provide traffic assistance, and estimate arrival times. In this section, recent research on traffic speed prediction is reviewed under two categories: parametric and non-parametric approaches.

2.1. Parametric Approaches

Many parametric models have been used in the past to predict traffic speeds. They are effective when large amounts of data are not available, and they usually capture traffic flow characteristics faster than non-parametric approaches.

Autoregressive Integrated Moving Average (ARIMA) models, which are widely accepted as a standard in time series forecasting, are frequently used for traffic speed estimation. Recent studies combine ARIMA with other models to improve performance. Wang et al. [8] developed a model in which the original traffic volume values are first fed into an ARIMA model and then into a Support Vector Machine (SVM) model. They made predictions for the period from 6 a.m. to 10 a.m. with a MAPE of 13.4%. As expected, the MAPE increased to 14.8% when the prediction period was between 2 p.m. and 6 p.m., owing to the greater uncertainty of traffic flow during rush hours. Li et al. [9] proposed a hybrid approach that combines ARIMA with a radial basis function neural network (RBF-ANN) and achieved a MAPE of 13.67%. Seasonal ARIMA (SARIMA) models can also be used to predict traffic speeds, since traffic data usually follow a seasonal pattern; unlike ARIMA models, SARIMA models explicitly account for seasonal patterns in the data. Kumar et al. [10] developed a SARIMA model with limited data. Even with this sample size limitation, the results were acceptable for intelligent traffic system applications.
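Most of the studies above are compared through MAPE, and RMSE and MAE appear later in this section. For reference, the following is a minimal sketch of the standard definitions of these three metrics in plain Python. It assumes non-zero ground-truth values for MAPE, and the function names are illustrative rather than taken from any of the cited works.

```python
import math
from typing import Sequence


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error, in the same units as the data (e.g. km/h)."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean squared error; penalizes large errors more heavily than MAE."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mape(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute percentage error in percent; assumes no zero ground-truth values."""
    if any(t == 0 for t in y_true):
        raise ValueError("MAPE is undefined when a ground-truth value is zero")
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / len(y_true)


if __name__ == "__main__":
    observed = [60.0, 50.0, 40.0]   # hypothetical observed speeds
    predicted = [57.0, 55.0, 40.0]  # hypothetical model output
    print(mae(observed, predicted), rmse(observed, predicted), mape(observed, predicted))
```

Because MAPE divides by the observed value, the same absolute error weighs more at low speeds, which is one reason congested periods tend to show higher MAPE.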

Another popular parametric model used in time series prediction is the SVM. In its regression form, an SVM tries to find a hyperplane that contains the maximum number of samples within a threshold margin. Feng et al. [11] proposed an SVM model with a hybrid Gaussian and polynomial kernel whose parameters are adaptively adjusted by an improved version of the particle swarm optimization (PSO) algorithm. They achieved a MAPE of 10.26% with this optimized adaptive SVM model. Duan [12] similarly used the original PSO algorithm to obtain an optimal SVM model.

The k-Nearest Neighbor (k-NN) model is an instance-based learning technique that is also used in time series prediction. It stores every sample in the training set and uses all of them when making predictions. For a test sample, the algorithm finds the k closest training samples, as measured by a distance function, and predicts the mean of their target values. Cheng et al. [13] proposed a k-NN model that also considers spatial and temporal relations in the data. They lowered the MAPE of the traditional k-NN algorithm by up to two percentage points.
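The k-NN procedure described above can be sketched for traffic speeds as follows. This is a minimal illustration, assuming that each training sample is a window of past speeds paired with the next observed speed and that similarity is measured with Euclidean distance. It is not the spatiotemporal variant of Cheng et al. [13], and the function name `knn_predict` is hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical sample format: (window of past speeds, speed observed right after it).
Sample = Tuple[Sequence[float], float]


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Distance function used to measure similarity between two windows."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_predict(train: List[Sample], query: Sequence[float], k: int) -> float:
    """Predict the next speed as the mean target of the k closest stored windows."""
    if not 1 <= k <= len(train):
        raise ValueError("k must be between 1 and the number of training samples")
    # Instance-based learning: every stored sample is compared against the query.
    ranked = sorted(train, key=lambda sample: euclidean(sample[0], query))
    nearest = ranked[:k]
    return sum(target for _, target in nearest) / k


if __name__ == "__main__":
    history = [
        ([50.0, 52.0, 51.0], 53.0),
        ([30.0, 28.0, 25.0], 24.0),
        ([49.0, 51.0, 52.0], 55.0),
        ([31.0, 29.0, 27.0], 26.0),
    ]
    print(knn_predict(history, [50.0, 51.0, 52.0], k=2))  # mean of 53.0 and 55.0
```

Since there is no training phase, the whole cost is paid at prediction time, which is why practical k-NN traffic models restrict the candidate set, for example to similar times of day.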

In some studies, parametric approaches act as a preprocessing step before non-parametric approaches are applied. Luo et al. [14] developed a model in which k-NN is used to select road segments with high spatial correlation and an LSTM is used to process the temporal features of the selected segments. On their experimental dataset, the baseline LSTM model achieved an RMSE of 1.81, while their proposed kNN-LSTM method achieved an RMSE of 1.74.
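One simple way to illustrate selecting the segments most related to a target segment is to rank candidate segments by the Pearson correlation of their speed histories with the target's history. The sketch below makes that assumption for illustration only; it does not reproduce the selection procedure of Luo et al. [14], and the segment IDs and data are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def pearson(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equally long series."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # a constant series carries no correlation information
    return cov / math.sqrt(var_a * var_b)


def select_correlated_segments(
    speeds: Dict[str, Sequence[float]], target: str, k: int
) -> List[str]:
    """Return the k other segments whose speed series correlate most with the target."""
    scores = {
        seg: pearson(series, speeds[target])
        for seg, series in speeds.items()
        if seg != target
    }
    return sorted(scores, key=scores.get, reverse=True)[:k]
```

In a kNN-LSTM-style pipeline, the speed series of the selected segments would then form the multivariate input to the temporal model.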

2.2. Non-Parametric Approaches

The popularity of non-parametric models such as neural networks has increased considerably thanks to improvements in graphics processing units and to new, simpler, and faster ways of training large networks. Since these models make few assumptions about the data, they are highly effective for predicting traffic speeds, which are affected by many external factors such as holidays, weather conditions, accidents, and sports, cultural, and music events.

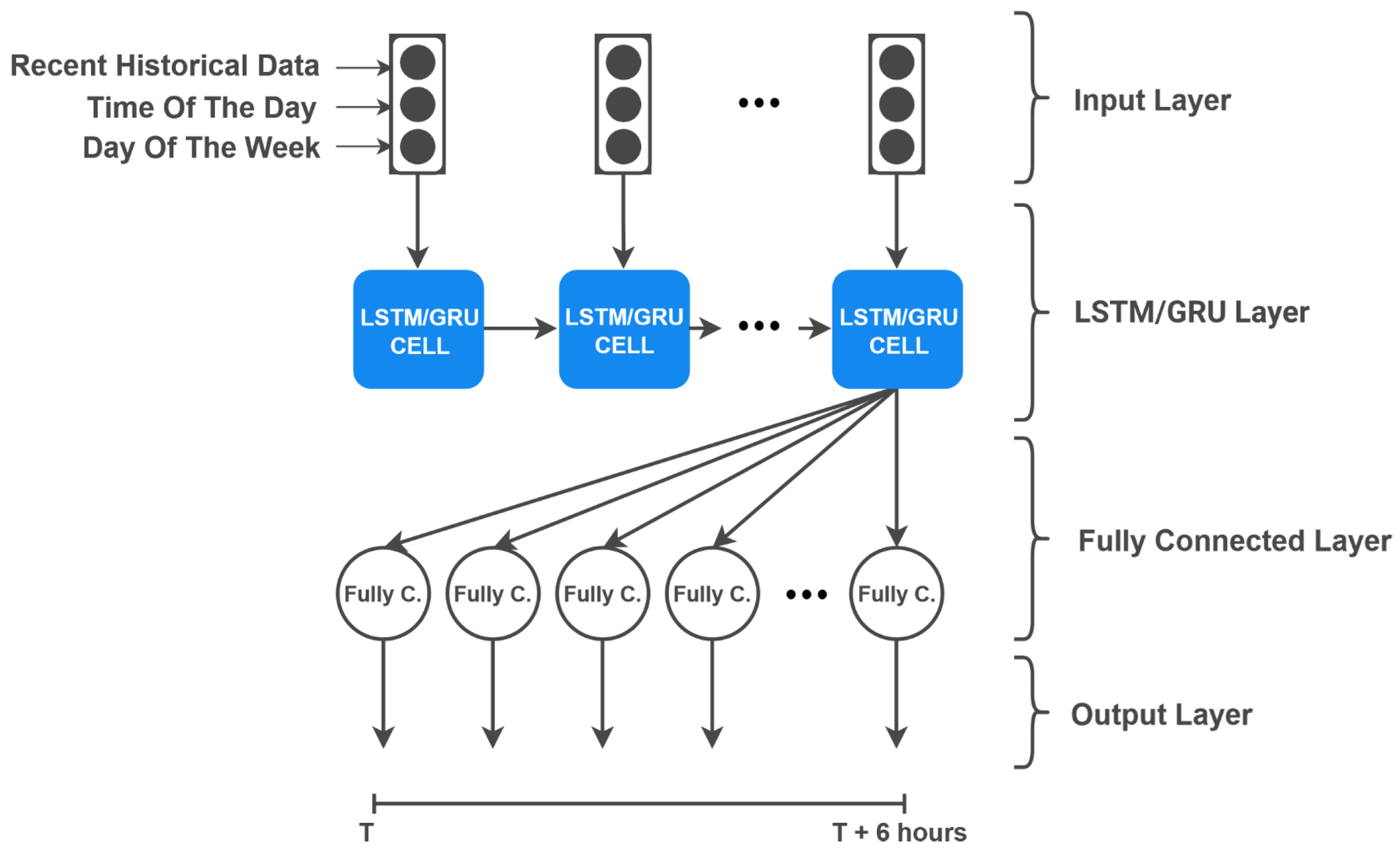

One of the most popular deep learning architectures for time series prediction is the Recurrent Neural Network (RNN). Since RNNs are designed to work on sequential data, they are well suited to predicting the next steps of a time series. A special type of RNN, the Long Short-Term Memory (LSTM) network, is widely used in traffic flow prediction because its gating mechanism largely avoids the vanishing gradient problem. Yongxue et al. [15] used an LSTM network to predict traffic flow on working days. They developed models for different time intervals, achieving a MAPE of 6.49% for 15 min interval predictions and 6.25% for 60 min intervals. Since the data used in traffic prediction are mostly time series, temporal features can be extracted from the dataset. Qu et al. [16] proposed a model that exploits, in addition to traffic speed values, other features extracted from the dataset (day, hour, minute, weekday, and holidays). Traffic speed values were fed into a stacked LSTM model while the other features were fed into an autoencoder, and a merging layer then combined the outputs of both parts. Their proposed model, Fi-LSTM, obtained a MAPE of 7.73% for 15 min interval predictions, compared with 8.18% for the baseline LSTM model. The study demonstrated that using temporal features can improve prediction results.
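Calendar features of the kind listed above (day, hour, minute, weekday, and holidays) can be derived directly from each timestamp. The sketch below uses Python's standard `datetime` module; the holiday set and the `calendar_features` name are hypothetical placeholders, and a real system would use the official holiday calendar of the study region.

```python
from datetime import date, datetime
from typing import Dict, Set

# Hypothetical holiday calendar; replace with the official one for the region.
HOLIDAYS: Set[date] = {date(2024, 1, 1), date(2024, 12, 25)}


def calendar_features(ts: datetime, holidays: Set[date] = HOLIDAYS) -> Dict[str, int]:
    """Extract day, hour, minute, weekday and holiday indicators from a timestamp."""
    return {
        "day": ts.day,                # day of the month, 1-31
        "hour": ts.hour,              # 0-23
        "minute": ts.minute,          # 0-59; multiples of 5 for 5 min data
        "weekday": ts.weekday(),      # Monday = 0 ... Sunday = 6
        "is_holiday": int(ts.date() in holidays),
    }


if __name__ == "__main__":
    print(calendar_features(datetime(2024, 1, 1, 8, 35)))
```

These integer codes would typically be one-hot encoded or embedded before being passed to a network branch such as an autoencoder.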

It is also possible to apply a preprocessing procedure to the traffic data before training the model. Zhao et al. [17] applied an adaptive time series decomposition method called CEEMDAN [18] to traffic flow data and used the Grey Wolf Optimizer (GWO) [19] algorithm to find the optimal hyperparameters of the LSTM network. With their GWO-CEEMDAN-LSTM approach, they reduced the baseline LSTM model's MAPE from 15.13% to 10.62%. Ma et al. [20] applied first-order differencing to the traffic data before feeding it into multiple stacked LSTM networks.
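First-order differencing replaces each value by its change from the previous step, which removes a slowly varying level from the series; predictions made on the differenced scale are converted back into speeds by cumulative summation from the last known value. The sketch below also supports differencing at a seasonal lag s, the analogous operation used by SARIMA-style models (for 5 min data, a daily lag would be s = 288). The function names are illustrative.

```python
from typing import List, Sequence


def difference(series: Sequence[float], lag: int = 1) -> List[float]:
    """Return y[t] - y[t - lag]; lag=1 is first-order differencing."""
    if lag < 1:
        raise ValueError("lag must be a positive integer")
    return [series[t] - series[t - lag] for t in range(lag, len(series))]


def invert_first_difference(last_value: float, diffs: Sequence[float]) -> List[float]:
    """Rebuild levels from first differences, starting after `last_value`."""
    levels = []
    current = last_value
    for d in diffs:
        current += d
        levels.append(current)
    return levels


if __name__ == "__main__":
    speeds = [52.0, 50.0, 47.0, 49.0]
    diffs = difference(speeds)  # [-2.0, -3.0, 2.0]
    print(diffs, invert_first_difference(speeds[0], diffs))
```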

Researchers have also developed new model structures to improve prediction results. Huang et al. [21] proposed a model called Long Short-Term Graph Convolutional Networks, which consists of two special learning blocks, GLU and GCN: the GLU units learn temporal relations in the data, while the GCN units learn spatial relations. They tested their model on the PeMS dataset and achieved a MAPE below 10%. Zheng et al. [22] proposed a Convolutional Bidirectional LSTM network that also includes an attention mechanism to learn long-term relations in the data. Yang et al. [23] proposed a model in which speed values are passed through a spatial attention unit called CBAM, a CNN, and an LSTM, while other features such as meteorological data, road information, and date information are fed into a multilayer perceptron (MLP). The outputs of both branches are then merged in a fusion layer.

Another deep learning architecture used to predict time series is the Wavelet Neural Network (WNN), in which the activation function of the hidden layers is replaced by a function based on wavelet analysis. Chen et al. [24] proposed an improved WNN whose hyperparameters are optimized by an improved particle swarm optimization algorithm. They modified the original PSO algorithm so that the particles have better knowledge of their surroundings at their initial positions, which makes it converge faster. With this improvement, they decreased the baseline WNN model's MAE from 31.32% to 16.32%. There are also studies that use the WNN model as a preprocessing layer for RNN layers. Huang et al. [25] proposed a model structure in which an improved WNN preprocesses the data before feeding it into an LSTM.
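In a WNN, a hidden unit computes a wavelet of a shifted and scaled input instead of a sigmoid. The sketch below assumes the Morlet mother wavelet ψ(x) = cos(1.75x)·exp(−x²/2), a common choice in the WNN literature, and a single hidden layer with a translation b and a dilation a for each unit. The parameters would normally be learned (or tuned by an optimizer such as PSO), and the layout shown is illustrative rather than the architecture of any cited study.

```python
import math
from typing import Sequence


def morlet(x: float) -> float:
    """Morlet mother wavelet used as the hidden-layer activation."""
    return math.cos(1.75 * x) * math.exp(-x * x / 2.0)


def wnn_forward(
    inputs: Sequence[float],
    w_in: Sequence[Sequence[float]],  # w_in[j][i]: weight from input i to hidden unit j
    translations: Sequence[float],    # b_j: shifts the wavelet of hidden unit j
    dilations: Sequence[float],       # a_j: stretches the wavelet of unit j (must be non-zero)
    w_out: Sequence[float],           # weight from hidden unit j to the single output
) -> float:
    """One forward pass of a single-hidden-layer wavelet neural network."""
    output = 0.0
    for weights, b, a, v in zip(w_in, translations, dilations, w_out):
        net = sum(w * x for w, x in zip(weights, inputs))
        output += v * morlet((net - b) / a)
    return output
```

Unlike a sigmoid, each wavelet unit responds strongly only near its translation b, so the hidden units act as localized detectors at different scales.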

Convolutional Neural Networks (CNNs) are also commonly used to predict traffic speeds and are especially useful for learning spatial relations in the data. However, researchers usually combine CNNs with RNNs to learn both spatial and temporal relations. Cao et al. [26] proposed a CNN-LSTM model in which speed values from consecutive days are fed into a CNN and then passed through stacked LSTM layers. Zhuang et al. [27] developed a model that takes a matrix of historical speed and flow data from multiple road segments as input to a CNN layer, whose outputs are then processed by a Bidirectional LSTM layer. With this approach, they achieved a MAPE of 8.12% for 30 min interval predictions. Some studies also combine the attention mechanism with convolutional networks to learn long-term dependencies. Liu et al. [4] proposed an attention convolutional neural network in which flow, speed, and occupancy data are merged and passed into convolutional layers and an attention model.
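Models that consume a matrix of historical measurements from several road segments need those matrices to be cut from the raw data. A minimal sketch of building inputs and targets with a sliding window is shown below. It assumes a speed table with one row per segment and one column per time step, and the window length, horizon, and function name are illustrative assumptions rather than settings from the cited works.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def sliding_windows(
    speeds: Sequence[Sequence[float]], window: int, horizon: int, target_row: int
) -> List[Tuple[Matrix, float]]:
    """Build (segments x window) input matrices and the target `horizon` steps ahead.

    speeds[r][t] is the speed of segment r at time step t.
    """
    n_steps = len(speeds[0])
    samples = []
    # The last start index leaves room for the input window plus the horizon.
    for start in range(n_steps - window - horizon + 1):
        x = [list(row[start:start + window]) for row in speeds]
        y = speeds[target_row][start + window + horizon - 1]
        samples.append((x, y))
    return samples
```

With 5 min data, `horizon=6` would correspond to a 30 min ahead prediction, and `target_row` selects the segment whose speed is being forecast.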

Research in this field typically focuses on measuring the effect of external data such as weather and accidents, on developing deep learning architectures that allow models to learn both spatial and temporal relations from the data, and on combining various deep learning techniques to improve forecasting outcomes. Only a few studies [28] incorporate past speed values in different ways using deep learning techniques.

In a recent work [29], researchers exploited short-, medium-, and long-term temporal information along with spatial features to enhance short-term traffic prediction. They introduced an ensemble prediction framework that uses ARIMA to model short-term temporal features and LSTM networks to model medium- and long-term temporal features, while global spatial features are extracted with a stacked autoencoder (SAE). In another study [30], the outputs of three residual neural networks, trained on distant, near, and recent data to model temporal trend, period, and closeness, respectively, were dynamically aggregated and further combined with external factors such as weather conditions and events. The researchers evaluated their method on the BikeNYC and TaxiBJ datasets. Both studies are considered effective approaches because they leverage short-, medium-, and long-term temporal features, as well as spatial dependencies, simultaneously. From a different perspective, a few computational solutions [31] also leverage spatiotemporal features. For example, the authors of [32] further improve spatiotemporal prediction accuracy while reducing model complexity for traffic forecasting tasks.
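Closeness, period, and trend inputs amount to sampling history at different offsets from the prediction time. The sketch below shows one way to gather the corresponding indices for a series sampled every 5 min. The numbers of recent, daily, and weekly lags are hypothetical parameters, and the function only collects indices, leaving aside the models that consume them.

```python
from typing import Dict, List

STEPS_PER_DAY = 24 * 60 // 5  # 288 five-minute intervals per day
STEPS_PER_WEEK = 7 * STEPS_PER_DAY


def temporal_dependency_indices(
    t: int, n_closeness: int = 3, n_period: int = 2, n_trend: int = 1
) -> Dict[str, List[int]]:
    """Indices of past observations used to predict time step t.

    closeness: the most recent intervals before t
    period:    the same time of day on previous days
    trend:     the same time of day in previous weeks
    """
    indices = {
        "closeness": [t - i for i in range(1, n_closeness + 1)],
        "period": [t - d * STEPS_PER_DAY for d in range(1, n_period + 1)],
        "trend": [t - w * STEPS_PER_WEEK for w in range(1, n_trend + 1)],
    }
    earliest = min((i for group in indices.values() for i in group), default=t)
    if earliest < 0:
        raise ValueError("not enough history before t for the requested lags")
    return indices
```

Varying `n_closeness`, `n_period`, and `n_trend` is one way to control how much recent versus distant history a model sees.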

Lately, Graph Neural Network (GNN)-based models have been used increasingly often to forecast different aspects of traffic [33,34,35]. These models suit the problem very well when one has access not only to speed features but also to the graph that represents the relationships between road segments. Unlike previous studies, in which researchers had to add some form of spatial understanding layer, GNNs capture spatial dependencies by naturally processing graph-structured data and capture temporal dynamics through mechanisms such as recurrent layers or time-aware architectures [36,37]. Additionally, in [38], the authors present a comprehensive approach to spatial–temporal traffic data representation learning that strategically extracts discriminative embeddings from both traffic time series and graph-structured data.
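How a GNN processes graph-structured data can be illustrated with a single graph convolution layer in the widely used form H' = ReLU(Â X W), where Â = D^(-1/2) (A + I) D^(-1/2) is the symmetrically normalized adjacency matrix with self-loops and D is the degree matrix of A + I. The pure-Python sketch below assumes a small road graph given as an adjacency matrix A, node features X (for example, recent speeds per segment), and a weight matrix W. It is a generic GCN layer, not the architecture of any cited study.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of an (n x m) and an (m x p) matrix."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def normalized_adjacency(adj: Matrix) -> Matrix:
    """Compute D^-1/2 (A + I) D^-1/2, where D is the degree matrix of A + I."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    inv_sqrt_deg = [1.0 / math.sqrt(sum(row)) for row in a_hat]
    return [[inv_sqrt_deg[i] * a_hat[i][j] * inv_sqrt_deg[j] for j in range(n)]
            for i in range(n)]


def gcn_layer(adj: Matrix, features: Matrix, weights: Matrix) -> Matrix:
    """One graph convolution: each node mixes its neighbours' transformed features."""
    propagated = matmul(normalized_adjacency(adj), matmul(features, weights))
    return [[max(0.0, v) for v in row] for row in propagated]  # ReLU
```

The self-loops keep each segment's own features in the mix, and the symmetric normalization prevents highly connected segments from dominating the propagated values.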

However, because of their high computational cost and the limited accessibility that stems from their complexity, GNNs face challenges in practical deployment, and industry lags behind in adopting them [39]. To address these issues, research has focused on making these models more efficient [40]. There are also studies in which researchers combine resource-efficient, optimized layers with efficient graph computations to minimize computational requirements. Han et al. [41] proposed a graph neural network that approximates the adjacency matrix using lightweight feature matrices. This approach, combined with linearized spatial convolution and attention mechanisms, enables scalable processing of large-scale graphs with linear complexity.
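The linear-complexity idea behind approximating the adjacency matrix with lightweight feature matrices can be illustrated with a low-rank factorization. If A ≈ E1 E2ᵀ, where E1 and E2 are N × r matrices with r much smaller than N, then A X can be computed as E1 (E2ᵀ X) without ever forming the N × N matrix. This reduces the cost from O(N²d) to O(Nrd) for d feature columns. The sketch below assumes this generic factorization with hypothetical embedding values. It does not reproduce the specific layers of Han et al. [41].

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of an (n x m) and an (m x p) matrix."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    """Swap rows and columns."""
    return [list(col) for col in zip(*m)]


def dense_propagation(e1: Matrix, e2: Matrix, x: Matrix) -> Matrix:
    """Materialize A = E1 E2^T (N x N) first: quadratic in the number of nodes."""
    adjacency = matmul(e1, transpose(e2))
    return matmul(adjacency, x)


def factorized_propagation(e1: Matrix, e2: Matrix, x: Matrix) -> Matrix:
    """Compute E1 (E2^T X): only r x d intermediates, linear in the number of nodes."""
    compressed = matmul(transpose(e2), x)  # r x d summary of all node features
    return matmul(e1, compressed)
```

Both functions return the same result, because matrix multiplication is associative. Only the order of operations changes, and that is what removes the quadratic term.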

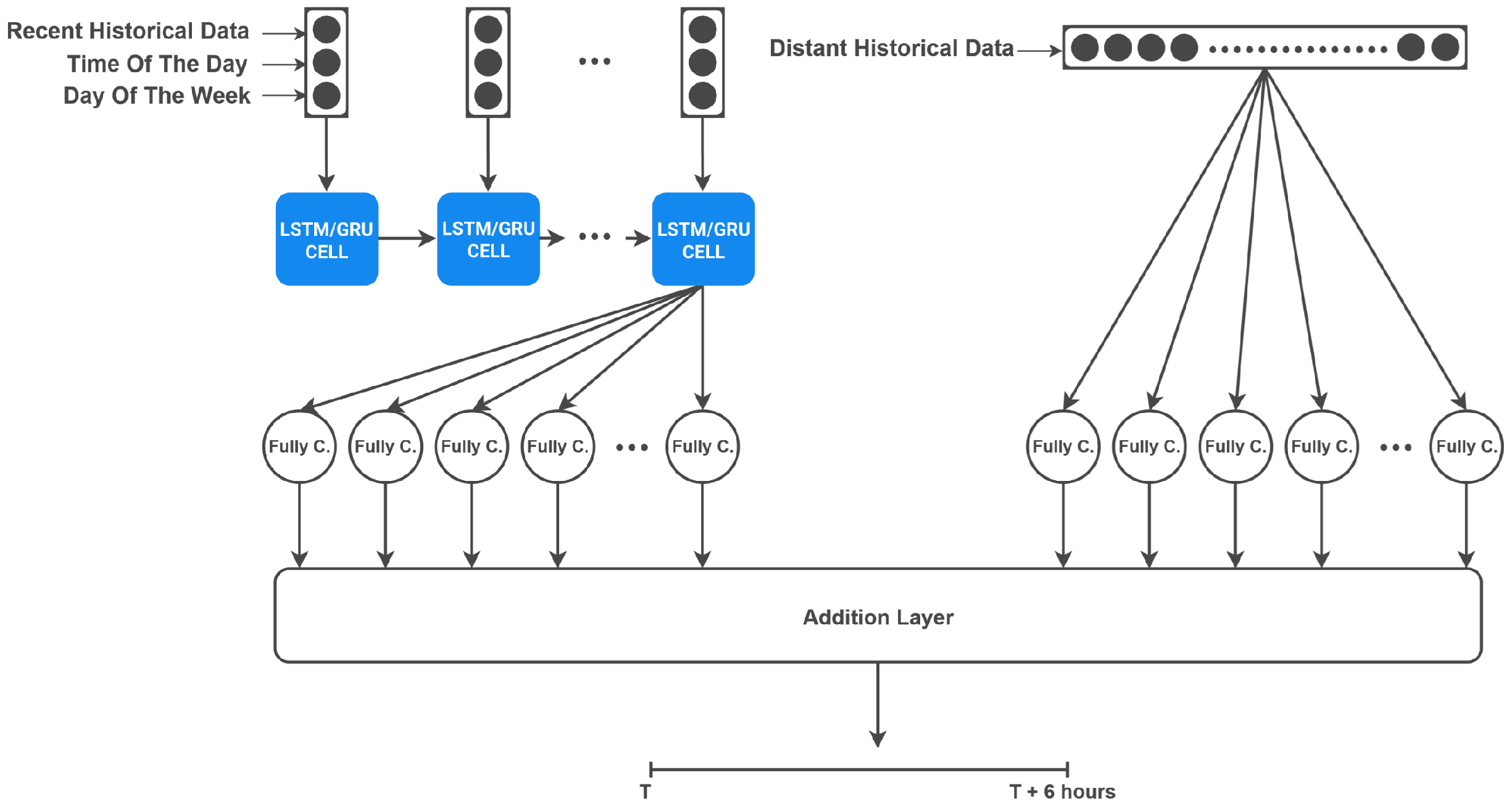

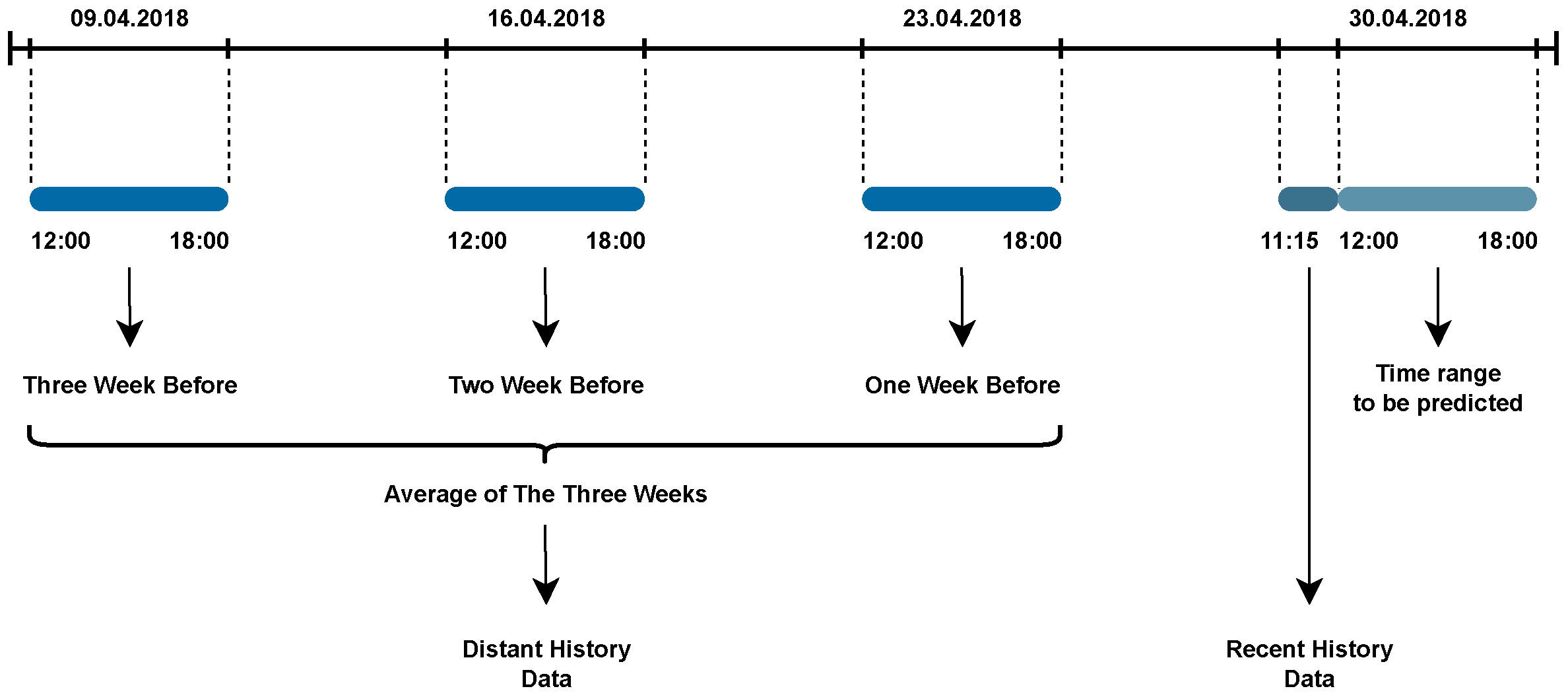

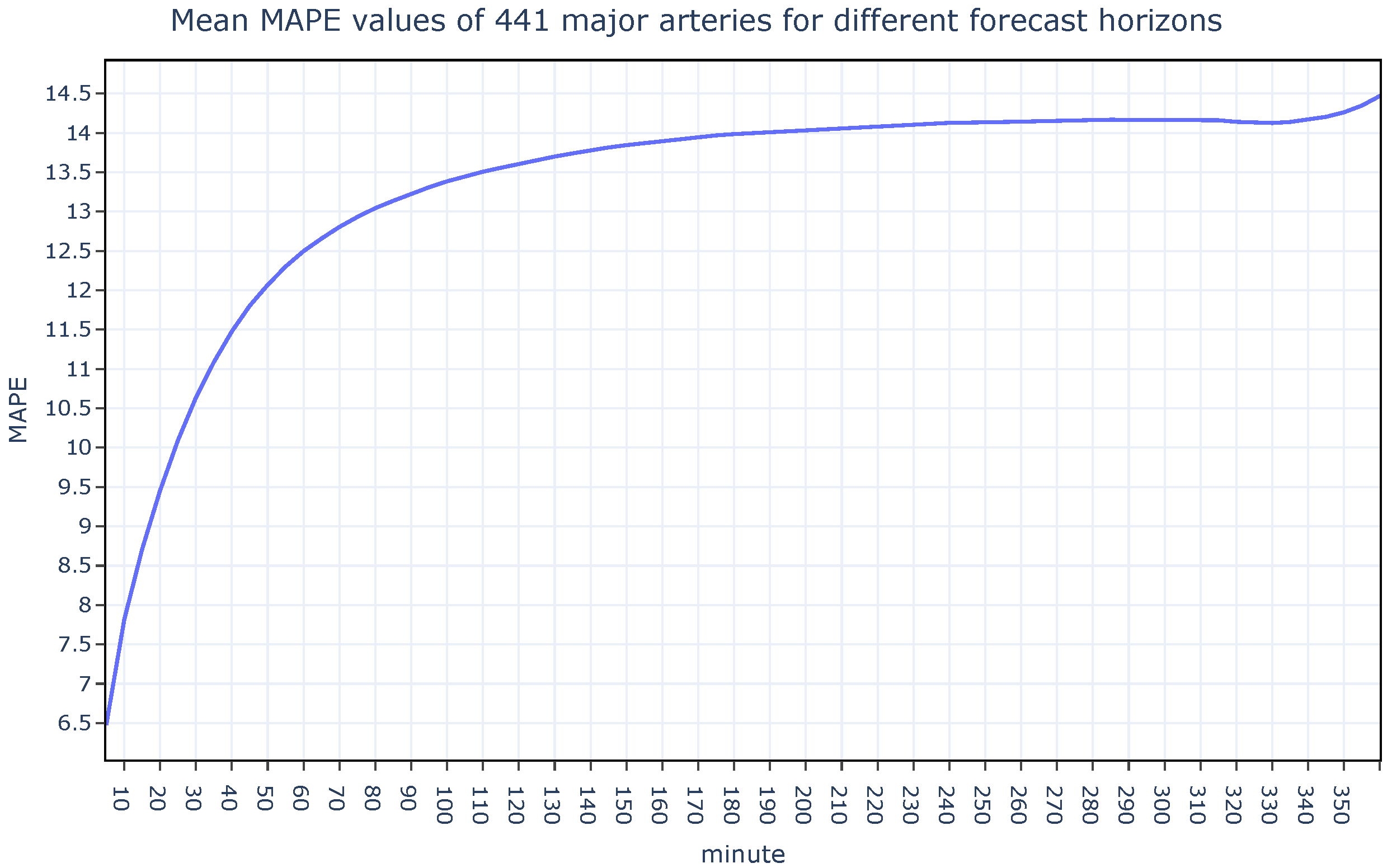

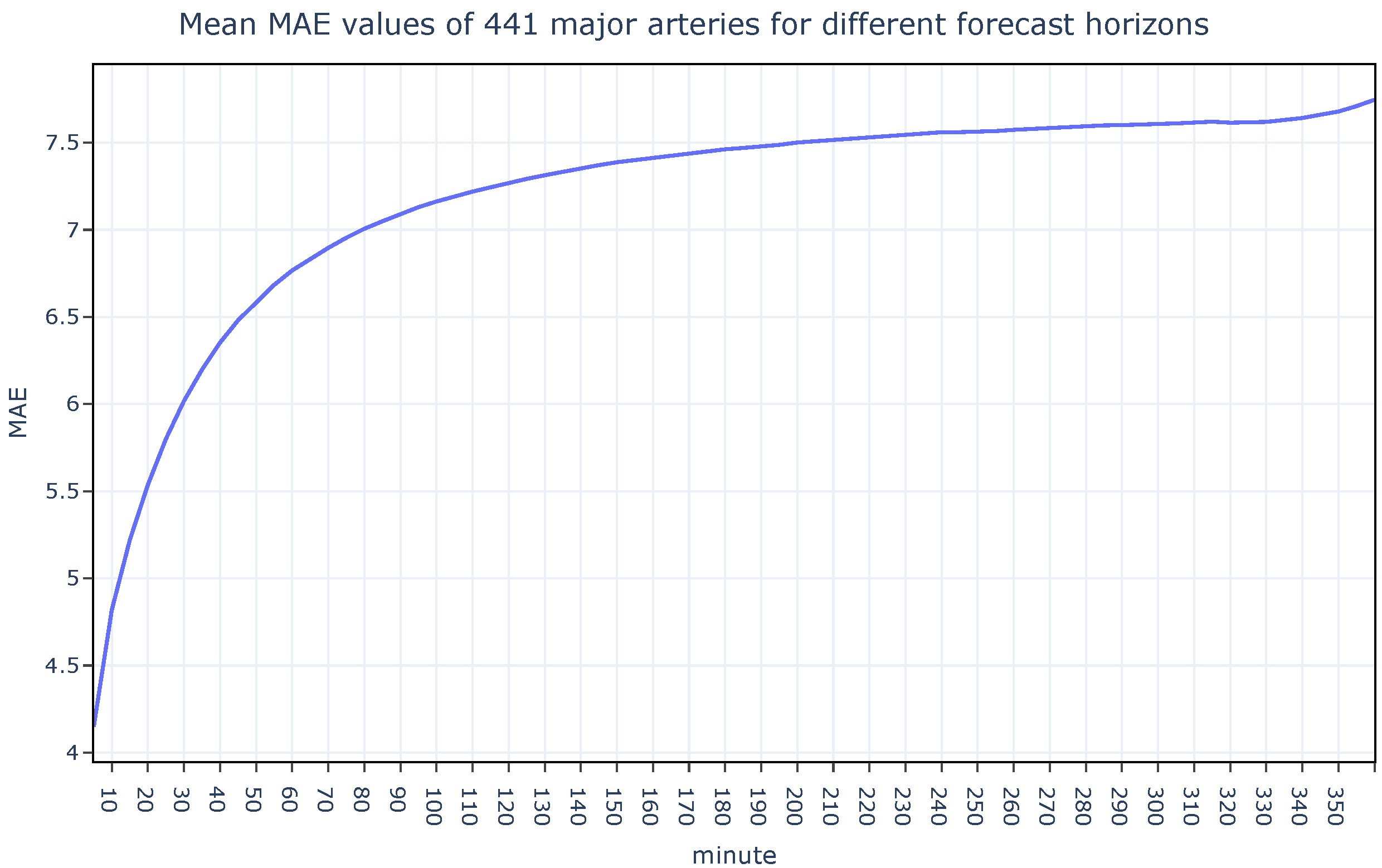

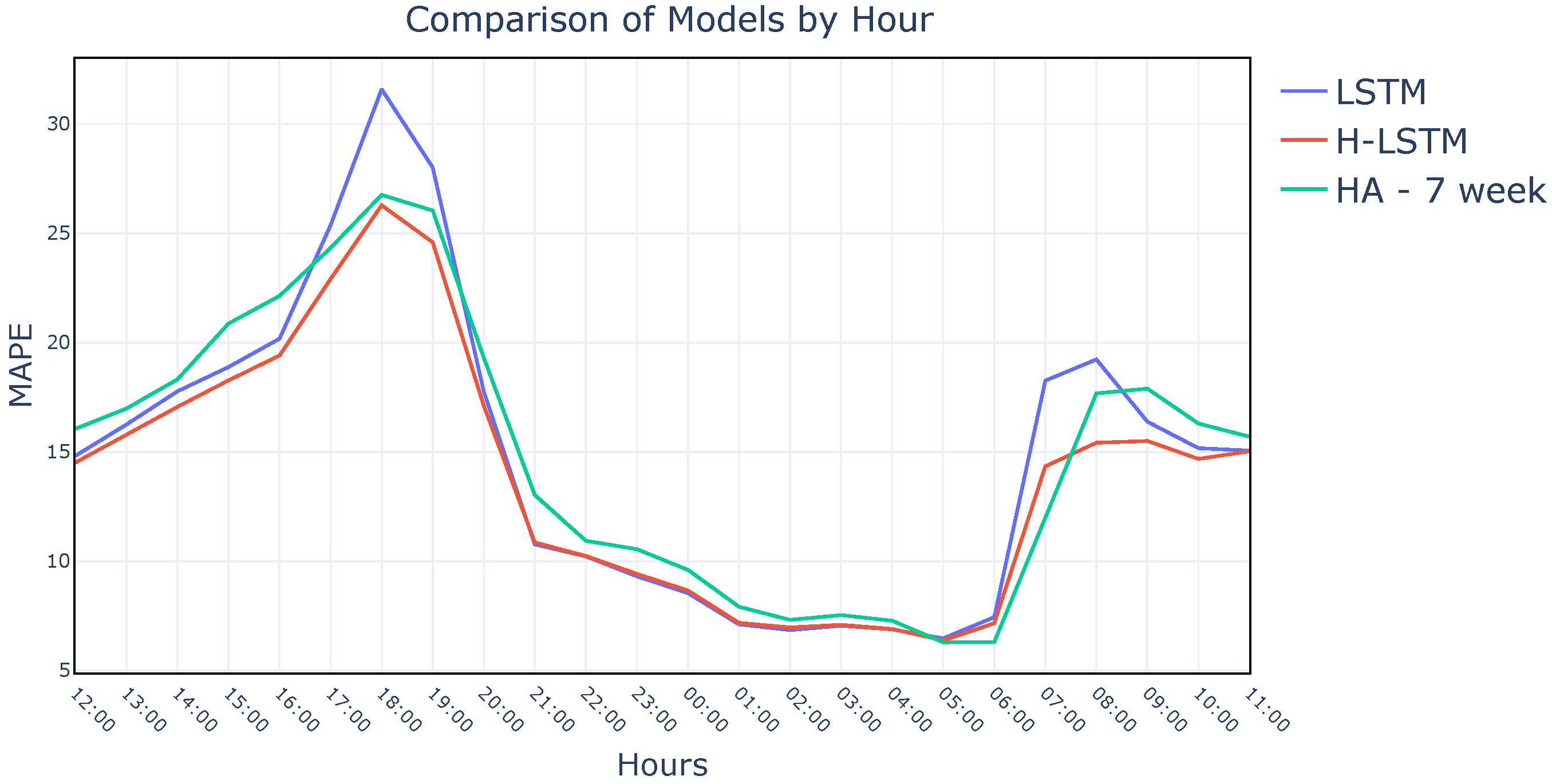

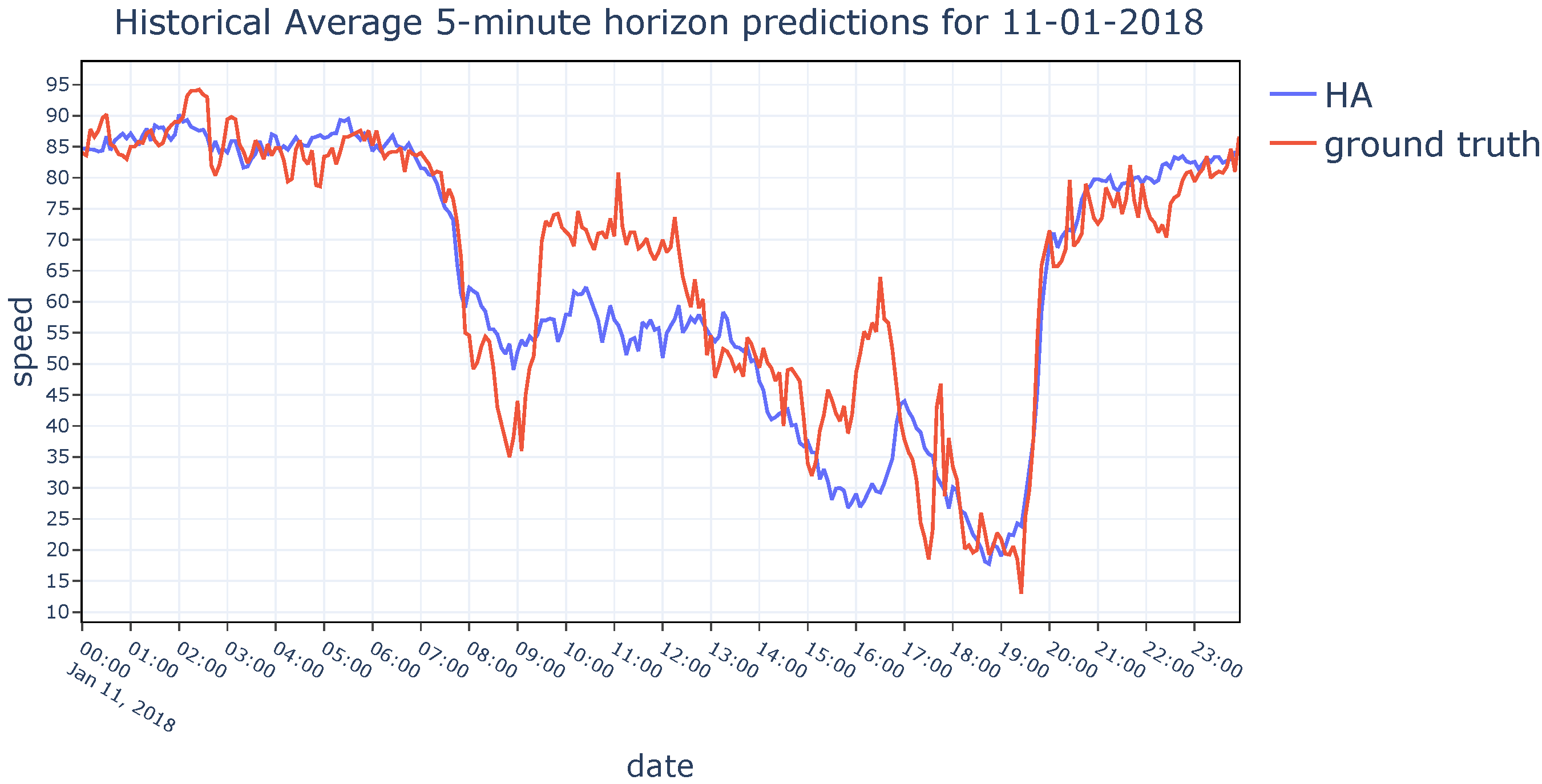

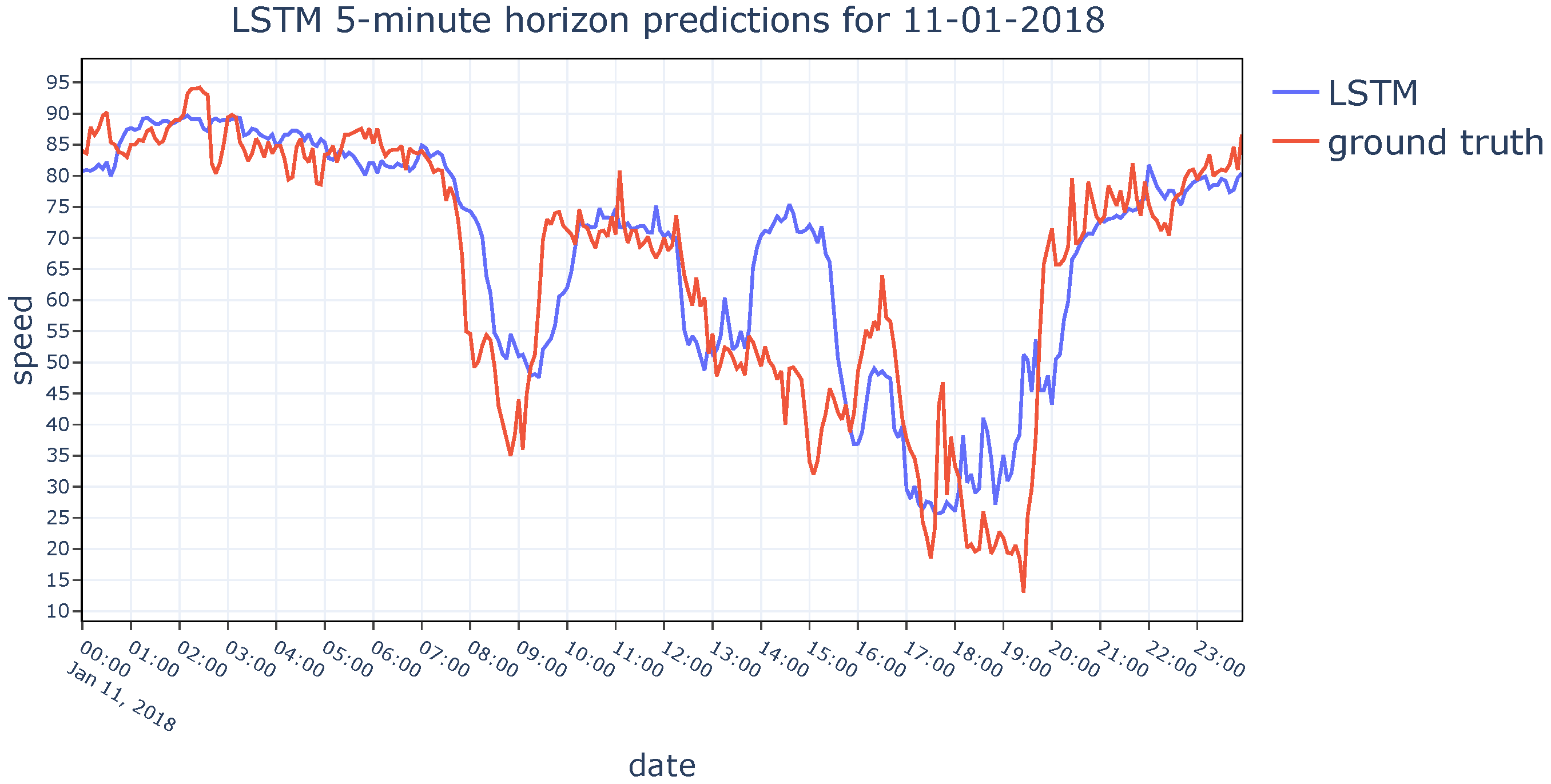

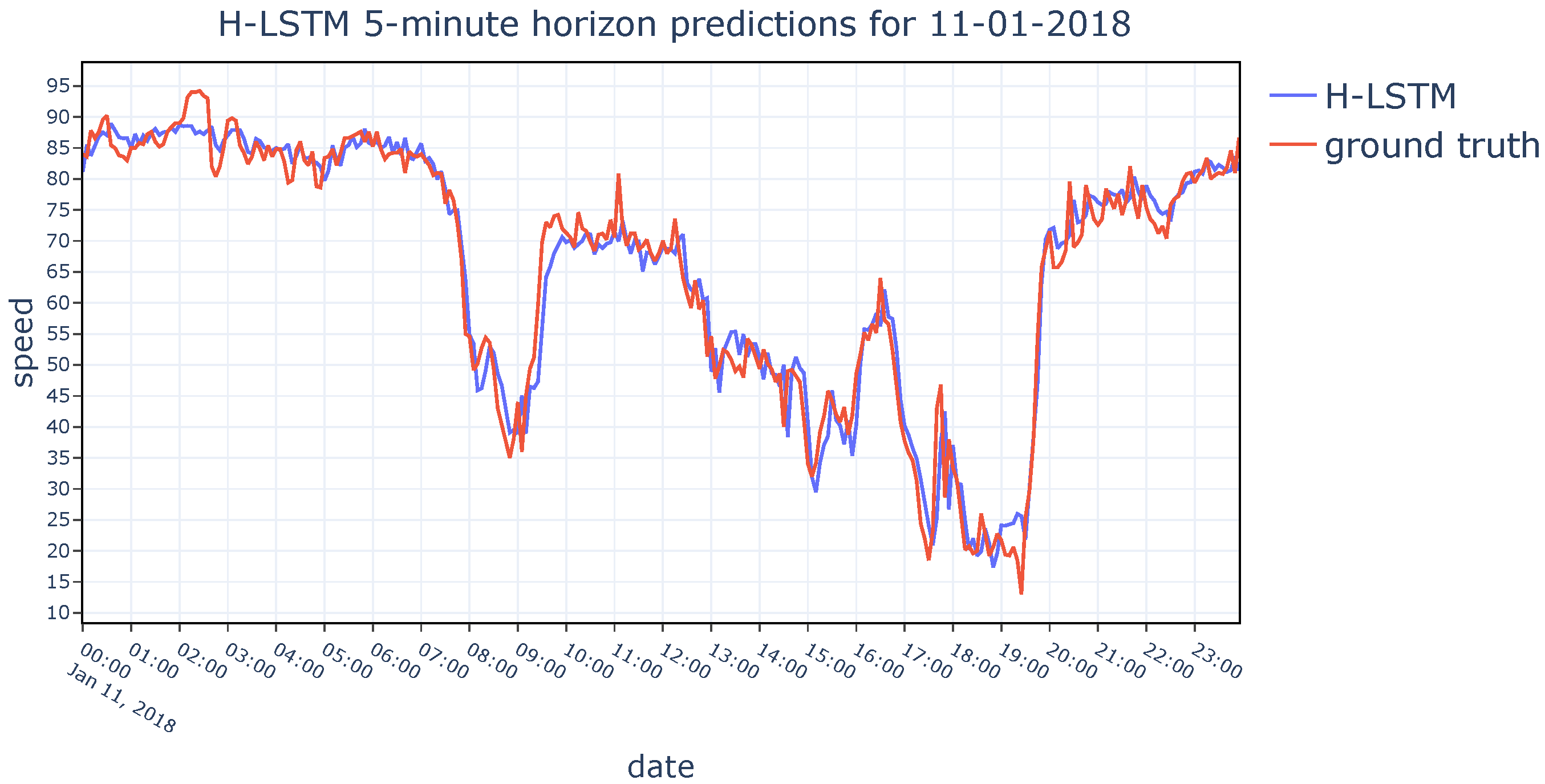

Although these studies demonstrate that combining short- and long-term dependencies improves prediction accuracy, none of them examined how using historical values of different lengths and from different time periods affects traffic forecasting outcomes. In this research, unlike previous studies, we apply the proposed method not only to short-term but also to medium-term predictions. We evaluate the system's performance across various forecast horizons, times of day, and days of the week. Furthermore, in contrast to other studies, the results were obtained at a notably high prediction frequency of every 5 min. These assessments offer valuable insights to researchers seeking to leverage both recent and distant historical data to develop robust and reliable systems.
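An evaluation broken down by forecast horizon, time of day, and day of the week can be organized by grouping absolute percentage errors. The sketch below assumes a hypothetical record format (target time, horizon in minutes, observed speed, predicted speed) and reports MAPE per group. With a 5 min prediction frequency, a horizon of h minutes corresponds to h / 5 steps ahead. The function names are illustrative.

```python
from collections import defaultdict
from datetime import datetime
from typing import Dict, Iterable, Tuple

# Hypothetical record: (target time, horizon in minutes, observed speed, predicted speed).
Record = Tuple[datetime, int, float, float]


def grouped_mape(records: Iterable[Record], by: str) -> Dict[int, float]:
    """MAPE (%) grouped by 'horizon', 'hour' or 'weekday'; assumes non-zero observations."""
    keys = {
        "horizon": lambda r: r[1],
        "hour": lambda r: r[0].hour,
        "weekday": lambda r: r[0].weekday(),  # Monday = 0
    }
    key_fn = keys[by]
    sums: Dict[int, float] = defaultdict(float)
    counts: Dict[int, int] = defaultdict(int)
    for record in records:
        _, _, observed, predicted = record
        group = key_fn(record)
        sums[group] += abs((observed - predicted) / observed)
        counts[group] += 1
    return {g: 100.0 * sums[g] / counts[g] for g in sums}


def horizon_to_steps(horizon_minutes: int, interval_minutes: int = 5) -> int:
    """Convert a forecast horizon in minutes into a number of steps ahead."""
    if horizon_minutes % interval_minutes:
        raise ValueError("horizon must be a multiple of the sampling interval")
    return horizon_minutes // interval_minutes
```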