AgentReport: A Multi-Agent LLM Approach for Automated and Reproducible Bug Report Generation

Abstract

1. Introduction

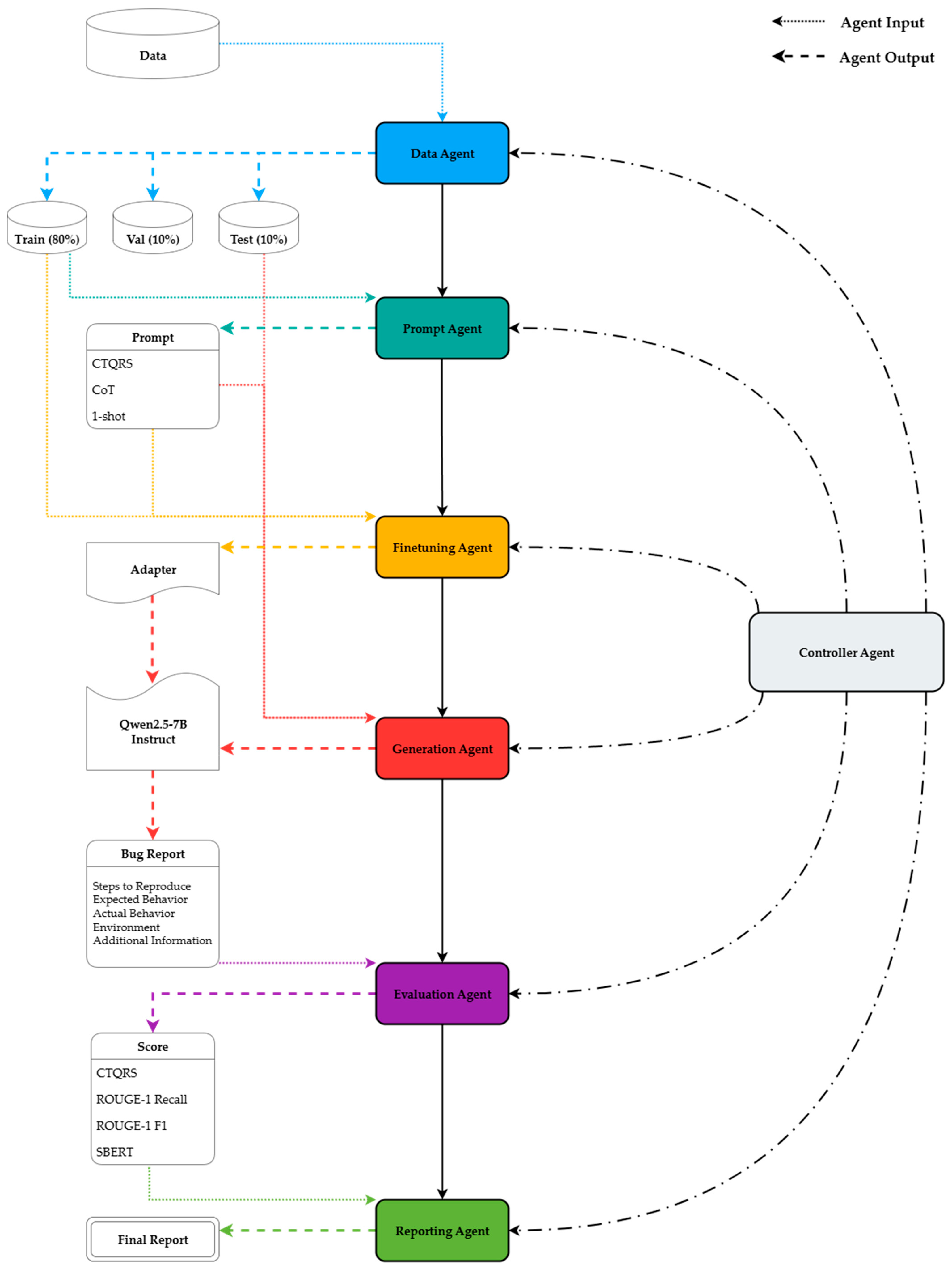

- Proposal of a multi-agent modular pipeline: We designed an architecture composed of Data, Prompt, Fine-tuning, Generation, Evaluation, Reporting, and Controller modules. This modular design overcomes the limitations of single-pipeline approaches and provides a reproducible and extensible framework.

- Introduction of a new evaluation baseline: We report ROUGE-1 F1, which previous studies did not report, and obtain 56.8%. Because F1 incorporates precision, it complements recall-oriented assessments and gives later work a reference point for comparison.

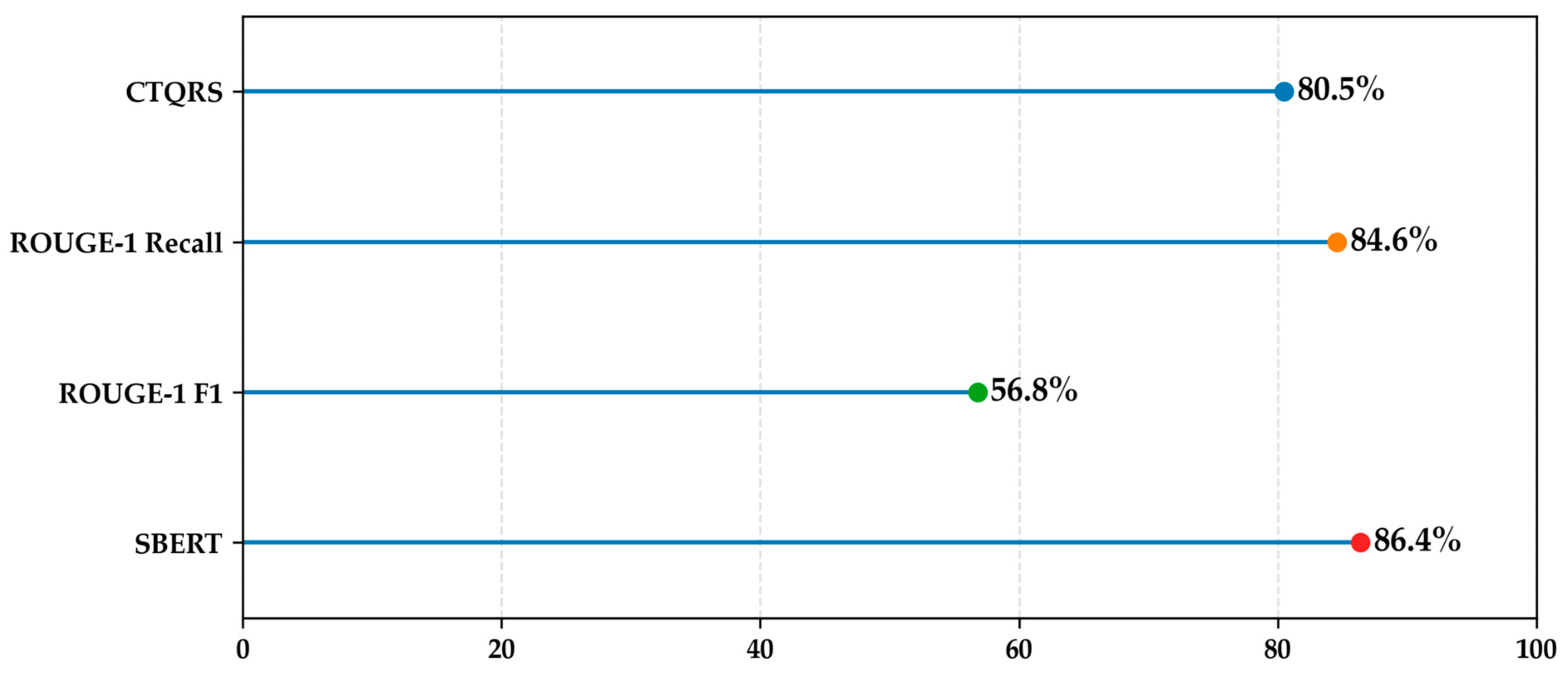

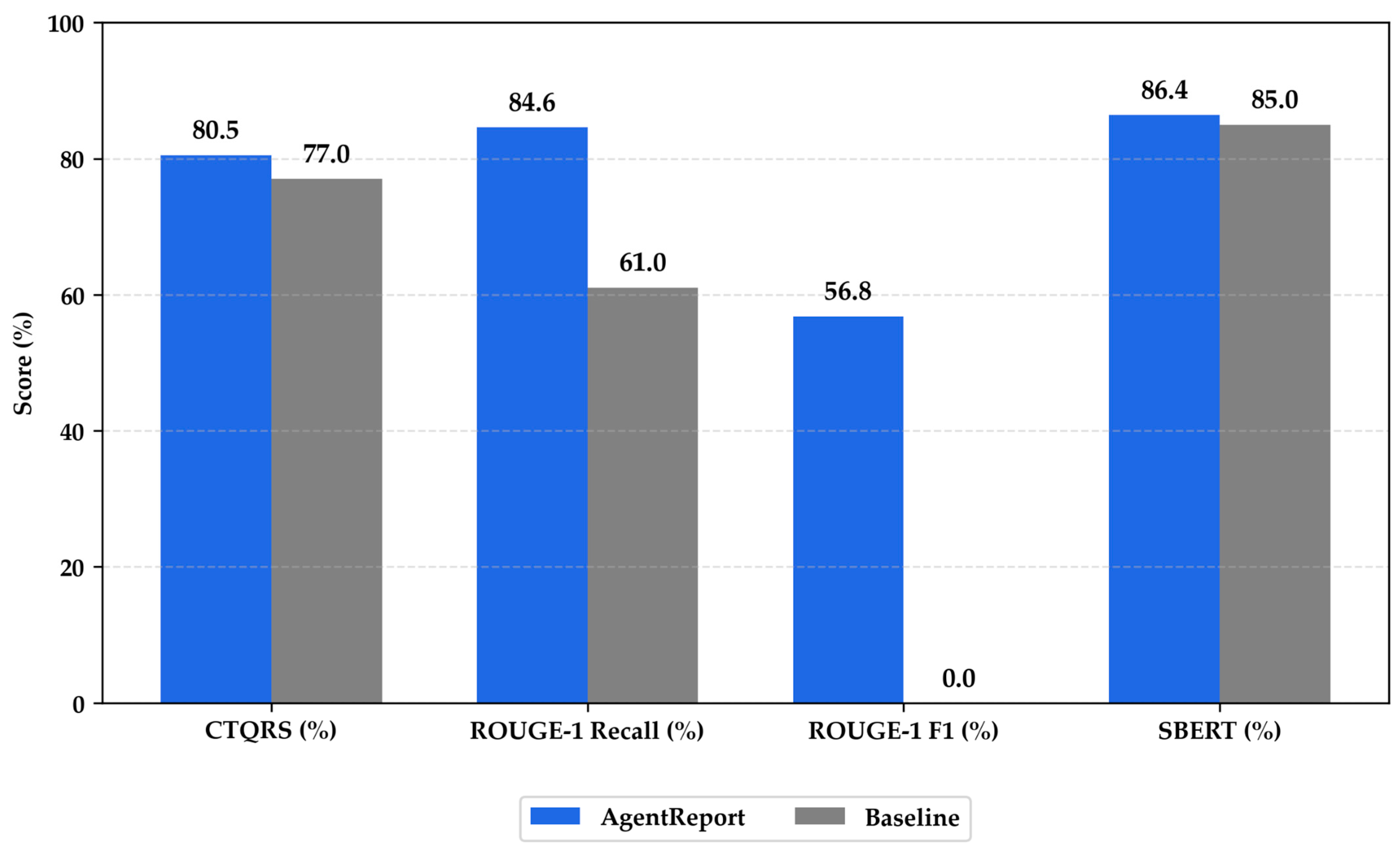

- Validation of performance improvements over the Baseline: In experiments with 3966 Bugzilla summary–report pairs, AgentReport improved CTQRS (+3.5 pp), ROUGE-1 Recall (+23.6 pp), and SBERT (+1.4 pp). These results confirm that AgentReport delivers overall enhancements in structural completeness, lexical fidelity, and semantic consistency.
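The modular design in the first contribution can be pictured with a minimal sketch, assuming each agent is a plain function over a shared state dictionary and the Controller simply runs them in order. All function names, state keys, and stub behaviours below are hypothetical; the source only names the seven modules.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical shared state passed from agent to agent.
State = Dict[str, object]
Agent = Callable[[State], State]


@dataclass
class Controller:
    """Runs registered agents in a fixed order and records which ones ran."""
    agents: List[Tuple[str, Agent]] = field(default_factory=list)
    log: List[str] = field(default_factory=list)

    def register(self, name: str, step: Agent) -> None:
        self.agents.append((name, step))

    def run(self, state: State) -> State:
        for name, step in self.agents:
            state = step(dict(state))  # pass a copy so steps stay isolated
            self.log.append(name)
        return state


# Stub agents: each one only adds an illustrative key to the state.
def data_agent(s: State) -> State:
    s["summary"] = str(s["raw"]).strip()
    return s

def prompt_agent(s: State) -> State:
    s["prompt"] = f"Write a bug report for: {s['summary']}"
    return s

def finetuning_agent(s: State) -> State:
    s["model"] = "stub-model"  # placeholder for a fine-tuned checkpoint
    return s

def generation_agent(s: State) -> State:
    s["report"] = f"[{s['model']}] {s['prompt']}"
    return s

def evaluation_agent(s: State) -> State:
    s["scores"] = {"length": len(str(s["report"]))}  # placeholder metric
    return s

def reporting_agent(s: State) -> State:
    s["artifact"] = {"report": s["report"], "scores": s["scores"]}
    return s


def build_pipeline() -> Controller:
    controller = Controller()
    for name, step in [
        ("Data", data_agent),
        ("Prompt", prompt_agent),
        ("Fine-tuning", finetuning_agent),
        ("Generation", generation_agent),
        ("Evaluation", evaluation_agent),
        ("Reporting", reporting_agent),
    ]:
        controller.register(name, step)
    return controller


if __name__ == "__main__":
    pipeline = build_pipeline()
    result = pipeline.run({"raw": "  App crashes on save  "})
    print(pipeline.log)
    print(result["artifact"])
```

Keeping every stage behind the same function signature is one way to make a single stage replaceable without touching the others, which is the property the modular design is meant to provide.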

2. Background Knowledge

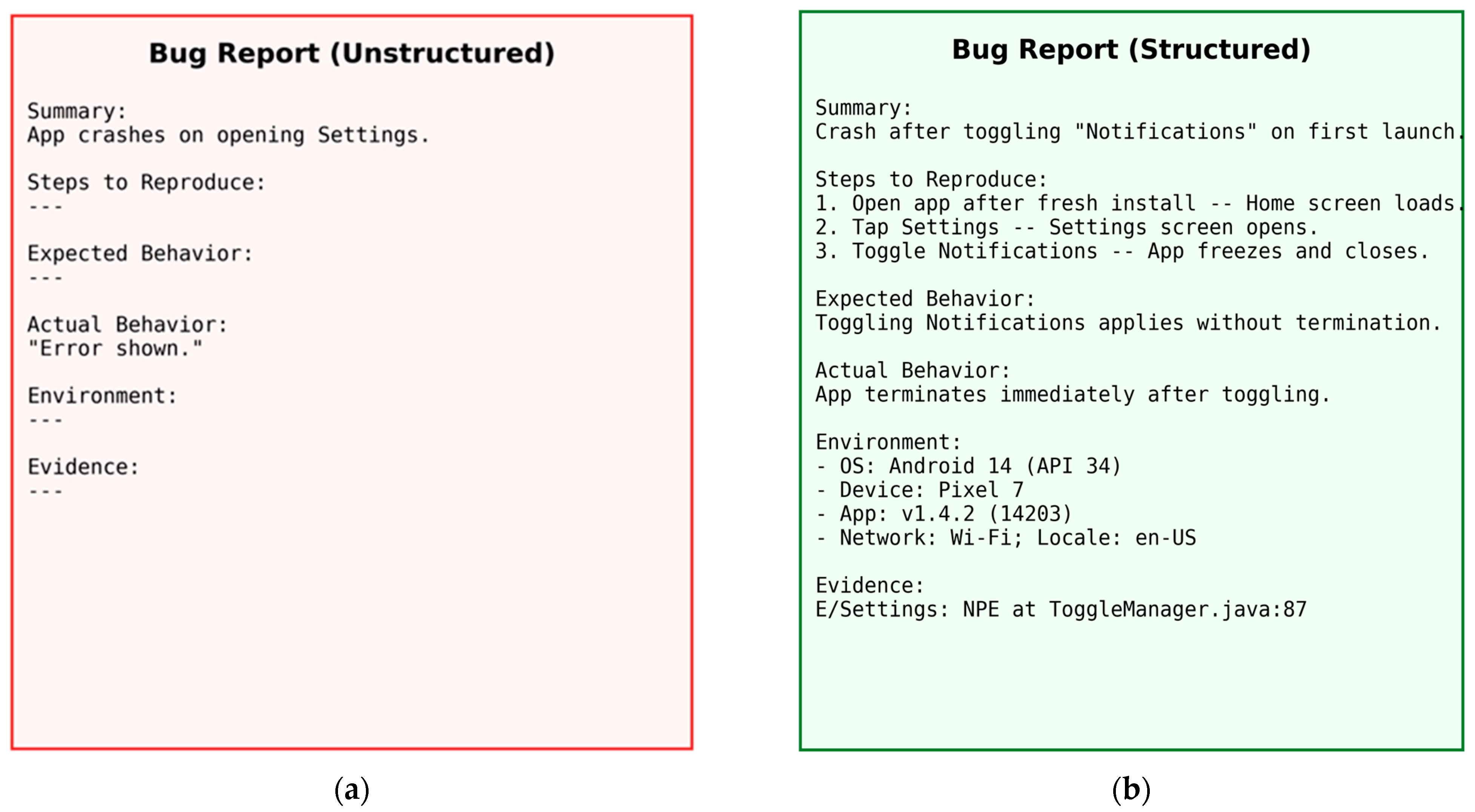

2.1. Variability in Bug Report Quality and the Need for Structuring

2.2. Trends in Automated Bug Report Research

2.3. Baseline Studies and Their Limitations

3. Methodology

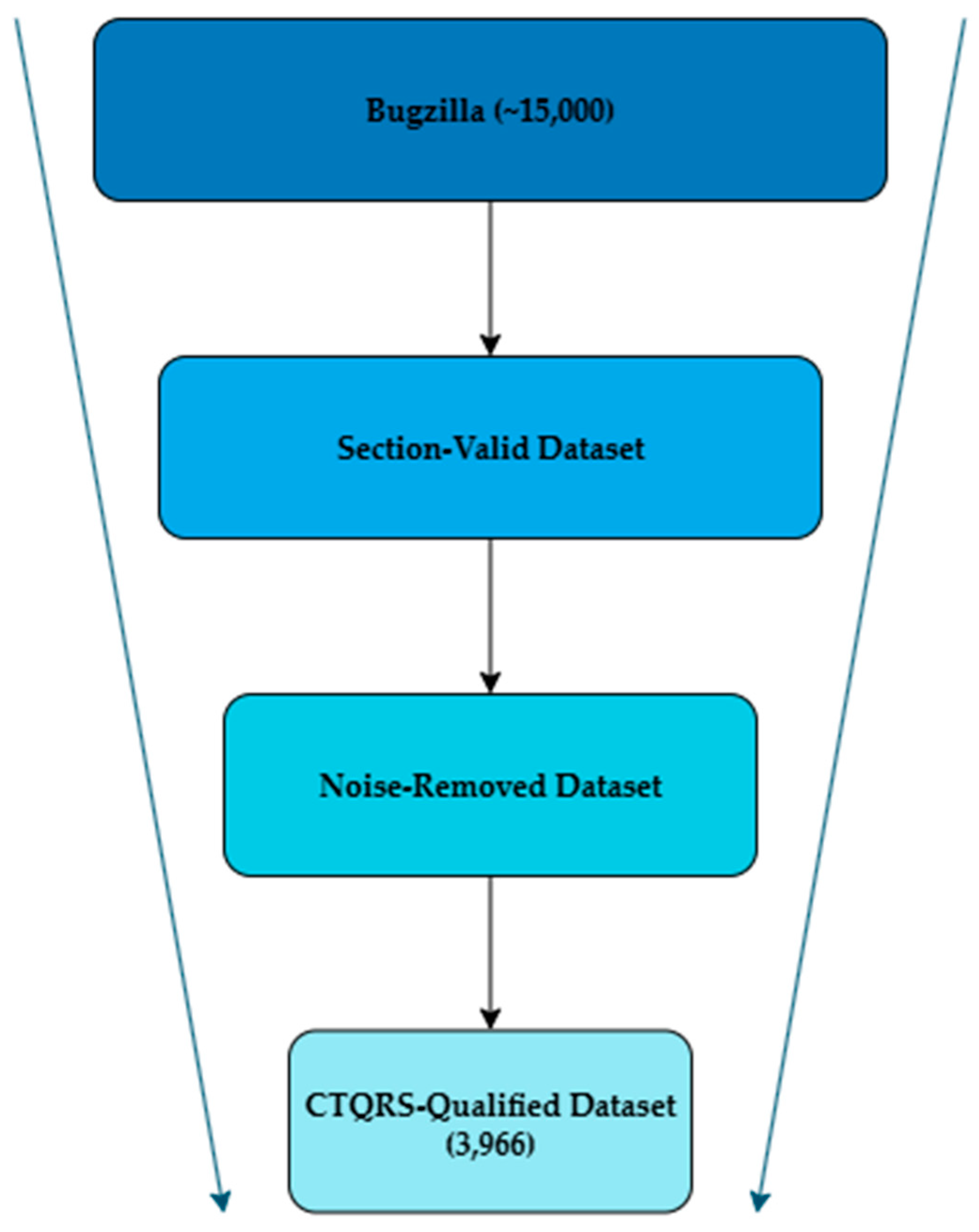

3.1. Data Preprocessing and Data Agent

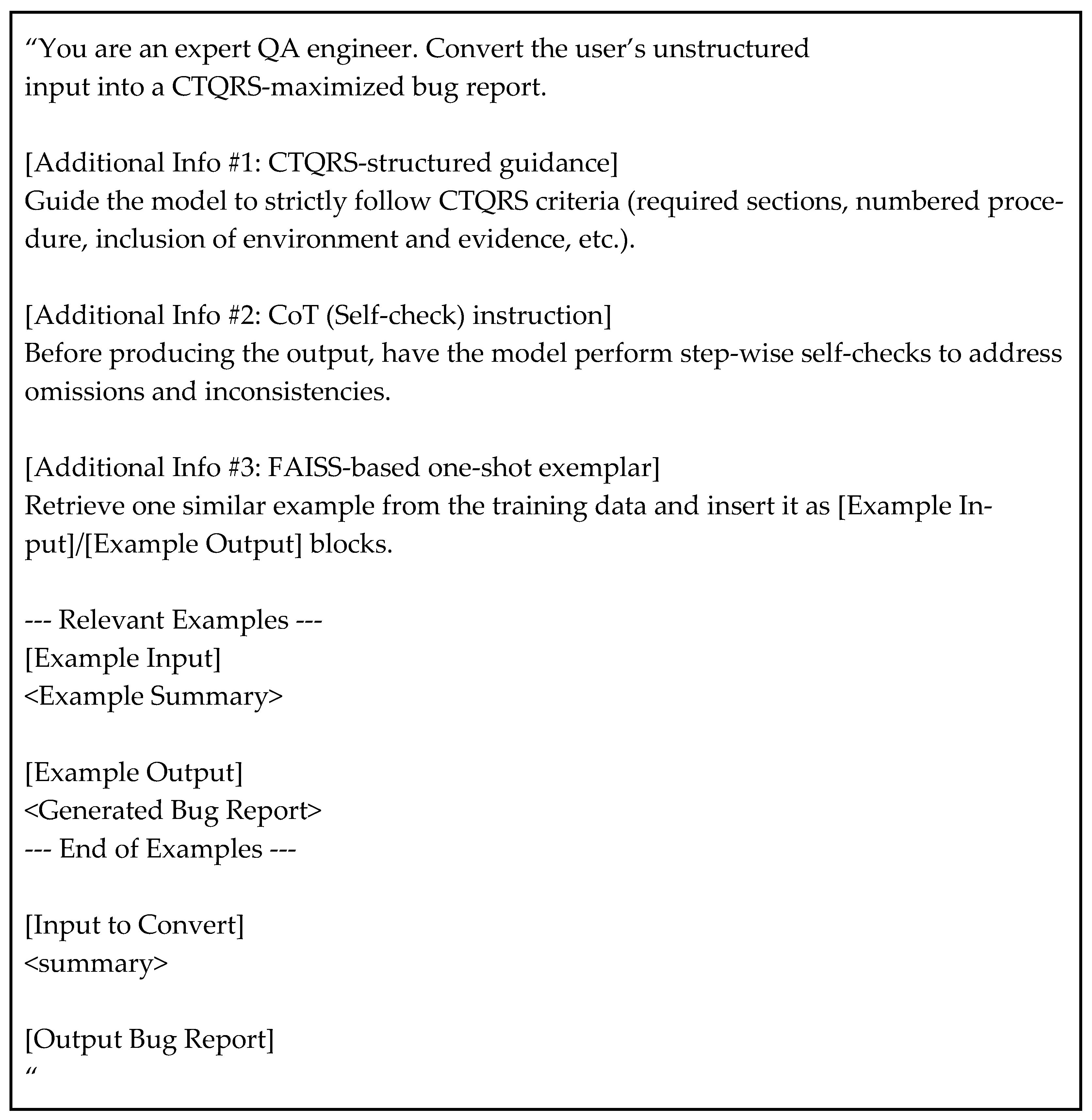

3.2. Prompt Agent

3.3. Fine-Tuning Agent

3.4. Generation Agent

3.5. Evaluation Agent

3.6. Reporting Agent

3.7. Controller Agent

4. Experiments

4.1. Experimental Settings

4.2. Dataset

4.3. Evaluation Metrics

4.4. Baseline and Research Questions

- RQ1. Validation of AgentReport’s Performance:

- Does the multi-agent pipeline (AgentReport) consistently achieve a reliable level of performance in bug report generation, as measured by CTQRS, ROUGE-1 Recall/F1, and SBERT? This question examines whether AgentReport independently shows its capability to produce high-quality bug reports. To further ensure generality, we additionally compared AgentReport with ChatGPT-4o (OpenAI, 2025), a powerful general-purpose LLM, under both zero-shot and three-shot settings using the same Bugzilla test set and evaluation protocol.

- RQ2. Appropriateness of AgentReport Compared to the Baseline:

- When directly compared with the Baseline (LoRA-based instruction fine-tuning combined with a simple directive prompt), does the proposed approach serve as a substantially more suitable alternative in terms of structural completeness, lexical fidelity, and semantic consistency?

- Baseline: Bug reports are generated by combining LoRA-based instruction fine-tuning with a simple directive prompt that directly utilizes the input summary. The Baseline followed the Alpaca-LoRA instruction template described in [1], which provides concise task-related context and formats outputs into a structured bug report. No CTQRS guidance, CoT reasoning, or one-shot exemplar were applied.

- The exact Baseline prompt is illustrated in Figure 5. It imposes a fixed four-section structure but includes no CTQRS guidance, Chain-of-Thought reasoning, self-verification step, or retrieval-based examples, so it serves as a minimal directive setup representing conventional instruction fine-tuning. To ensure a fair comparison with AgentReport, the Baseline used the same Qwen2.5-7B-Instruct model under the standard LoRA configuration with identical hyperparameters.

- AgentReport: Bug reports are generated by integrating QLoRA-4bit lightweight fine-tuning, CTQRS-based structured prompts, step-wise reasoning (CoT), one-shot exemplar, and a multi-agent modular pipeline composed of Data, Prompt, Fine-tuning, Generation, Evaluation, Reporting, and Controller agents.
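The difference between the two prompt styles described above can be sketched as follows. This is an illustration only: the wording of both prompts and the four section names are hypothetical (the source states that four fixed sections are used but does not name them here), and the actual Baseline prompt is the one shown in Figure 5. Only the five CTQRS dimension names are taken from the paper.

```python
from typing import List

# Dimension names as defined in the paper's abbreviation list.
CTQRS_DIMENSIONS: List[str] = [
    "Completeness",
    "Traceability",
    "Quantifiability",
    "Reproducibility",
    "Specificity",
]

# Hypothetical section names, used only to make the example concrete.
SECTIONS: List[str] = [
    "Summary",
    "Steps to Reproduce",
    "Expected Behavior",
    "Actual Behavior",
]


def baseline_prompt(summary: str) -> str:
    """Directive prompt: fixed sections, no CTQRS guidance, CoT, or exemplar."""
    return "\n".join([
        "Write a structured bug report for the summary below.",
        "Use these sections: " + ", ".join(SECTIONS) + ".",
        f"Summary: {summary}",
    ])


def agentreport_prompt(summary: str, exemplar_summary: str, exemplar_report: str) -> str:
    """Structured prompt: CTQRS guidance + step-wise reasoning + one exemplar."""
    return "\n".join([
        "Write a structured bug report for the summary below.",
        "Use these sections: " + ", ".join(SECTIONS) + ".",
        "The report should satisfy: " + ", ".join(CTQRS_DIMENSIONS) + ".",
        "Reason step by step about which details are needed before writing.",
        f"Example summary: {exemplar_summary}",
        f"Example report:\n{exemplar_report}",
        f"Summary: {summary}",
    ])


if __name__ == "__main__":
    print(baseline_prompt("Crash on save"))
    print("---")
    print(agentreport_prompt(
        "Crash on save",
        "Login fails",
        "Summary: Login fails with a valid password",
    ))
```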

4.5. Experimental Results and Ablation Analysis

4.5.1. Main Results

4.5.2. Ablation Study

5. Discussion

5.1. Analysis of Experimental Results

5.2. Threats to Validity

6. Related Work

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| LLM | Large Language Model |
| CTQRS | Completeness, Traceability, Quantifiability, Reproducibility, Specificity |
| CoT | Chain-of-Thought |
| QLoRA | Quantized Low-Rank Adaptation |
| SBERT | Sentence-BERT |
| ROUGE-1 | Recall-Oriented Understudy for Gisting Evaluation-1 |

Appendix A. Glossary

- AgentReport: The proposed multi-agent large language model (LLM) framework for automated bug report generation. It integrates data preprocessing, structured prompting, fine-tuning, generation, evaluation, and reporting in a modular pipeline.

- CTQRS: Completeness, Traceability, Quantifiability, Reproducibility, Specificity. A structural quality metric assessing whether a bug report contains sufficient contextual and procedural information.

- CoT (Chain-of-Thought): A reasoning strategy that encourages the LLM to generate intermediate reasoning steps, improving contextual completeness and logical coherence.

- QLoRA (Quantized Low-Rank Adaptation): A parameter-efficient fine-tuning method that enables low-memory training of large models using 4-bit quantization and rank decomposition.

- SBERT (Sentence-BERT): A semantic embedding model that measures sentence-level similarity using cosine similarity in a shared vector space.

- ROUGE-1 Recall/F1: Lexical evaluation metrics measuring overlap between generated and reference texts; Recall reflects coverage, while F1 balances precision and recall.

- One-shot exemplar: A single reference instance provided to guide the model’s response pattern or structural format during generation.

- Multi-agent Architecture: A modular framework where independent agents (Data, Prompt, Fine-tuning, Generation, Evaluation, Reporting, and Controller) cooperate sequentially to achieve reproducible and scalable experimentation.

References

- Acharya, A.; Ginde, R. RAG-based bug report generation with large language models. In Proceedings of the IEEE/ACM International Conference on Software Engineering (ICSE), Ottawa, ON, Canada, 27 April–3 May 2025.

- Bettenburg, N.; Just, S.; Schröter, A.; Weiss, C.; Premraj, R.; Zimmermann, T. What makes a good bug report? In Proceedings of the 16th ACM SIGSOFT International Symposium on Foundations of Software Engineering (FSE-16), Atlanta, GA, USA, 9–14 November 2008; pp. 308–318.

- Medeiros, M.; Kulesza, U.; Coelho, R.; Bonifacio, R.; Treude, C.; Barbosa, E.A. The impact of bug localization based on crash report mining: A developers’ perspective. arXiv 2024, arXiv:2403.10753.

- Fan, Y.; Xia, X.; Lo, D.; Hassan, A.E. Chaff from the wheat: Characterizing and determining valid bug reports. IEEE Trans. Softw. Eng. 2018, 46, 495–525.

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Virtual, 6–12 December 2020.

- OpenAI; Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; et al. GPT-4 technical report. arXiv 2023, arXiv:2303.08774.

- Bubeck, S.; Chandrasekaran, V.; Eldan, R.; Gehrke, J.; Horvitz, E.; Kamar, E.; Lee, P.; Lee, Y.T.; Li, Y.; Lundberg, S.; et al. Sparks of artificial general intelligence: Early experiments with GPT-4. arXiv 2023, arXiv:2303.12712.

- Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-rank adaptation of large language models. arXiv 2021, arXiv:2106.09685.

- Zhang, H.; Zhao, Y.; Yu, S.; Chen, Z. Automated quality assessment for crowdsourced test reports based on dependency parsing. In Proceedings of the 9th International Conference on Dependable Systems and Their Applications (DSA), Wulumuqi, China, 4–5 August 2022; pp. 34–41.

- Lin, C.-Y. ROUGE: A package for automatic evaluation of summaries. In Proceedings of the Workshop on Text Summarization Branches Out (ACL), Barcelona, Spain, 25–26 July 2004; pp. 74–81.

- Dettmers, T.; Pagnoni, A.; Holtzman, A.; Zettlemoyer, L. QLoRA: Efficient finetuning of quantized LLMs. arXiv 2023, arXiv:2305.14314.

- Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Xia, F.; Chi, E.; Le, Q.V.; Zhou, D. Chain-of-thought prompting elicits reasoning in large language models. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), New Orleans, LA, USA, 28 November–9 December 2022.

- Wang, L.; Ma, C.; Feng, X.; Zhang, Z.; Yang, H.; Zhang, J.; Chen, Z.; Tang, J.; Chen, X.; Lin, Y.; et al. A survey on large language model based autonomous agents. Front. Comput. Sci. 2024, 18, 186345.

- Zhang, Z.; Dai, Q.; Bo, X.; Ma, C.; Li, R.; Chen, X.; Zhu, J.; Dong, Z.; Wen, J. A survey on the memory mechanism of LLM-based agents. arXiv 2024, arXiv:2404.02889.

- Yao, S.; Zhao, J.; Yu, D.; Du, N.; Shafran, I.; Narasimhan, K.R.; Cao, Y. ReAct: Synergizing reasoning and acting in language models. arXiv 2022, arXiv:2210.03629.

- Wu, Q.; Bansal, G.; Zhang, J.; Wu, Y.; Li, B.; Zhu, E.; Jiang, L.; Zhang, X.; Zhang, S.; Liu, J.; et al. AutoGen: Enabling Next-Gen LLM Applications via Multi-Agent Conversation. arXiv 2023, arXiv:2308.08155.

- Pandya, K.; Holia, M. Automating Customer Service using LangChain: Building custom open-source GPT Chatbot for organizations. arXiv 2023, arXiv:2310.05421.

- Reimers, N.; Gurevych, I. Sentence-BERT: Sentence embeddings using Siamese BERT-networks. In Proceedings of the Empirical Methods in Natural Language Processing (EMNLP), Hong Kong, China, 3–7 November 2019; pp. 3980–3990.

- Sun, C.; Lo, D.; Khoo, X. Towards more accurate retrieval of duplicate bug reports. In Proceedings of the ACM International Conference on Systems and Software Engineering (ASE), Lawrence, KS, USA, 6–12 November 2011; pp. 253–262.

- Alipour, A.; Hindle, A.; Stroulia, E. A contextual approach towards more accurate duplicate bug report detection and ranking. Empir. Softw. Eng. 2015, 21, 565–604.

- He, Z.; Marcus, A.; Poshyvanyk, D. Using information retrieval and NLP to classify bug reports. In Proceedings of the International Conference on Program Comprehension (ICPC), Braga, Portugal, 30 June–2 July 2010; pp. 148–157.

- Cubranic, D.; Murphy, G.C. Automatic bug triage using text categorization. In Proceedings of the Sixteenth International Conference on Software Engineering & Knowledge Engineering (SEKE), Banff, AB, Canada, 20–24 June 2004; pp. 92–97.

- Rastkar, S.; Murphy, G.C.; Murray, G. Summarizing software artifacts: A case study of bug reports. In Proceedings of the 32nd International Conference on Software Engineering (ICSE), Cape Town, South Africa, 2–8 May 2010; Volume 1, pp. 505–514.

- Mani, S.; Catherine, R.; Sinha, V.S.; Dubey, A. AUSUM: Approach for unsupervised bug report summarization. In Proceedings of the ACM SIGSOFT 20th International Symposium on the Foundations of Software Engineering (FSE), Cary, NC, USA, 10–17 November 2012; pp. 1–11.

- Rastkar, S.; Murphy, G.C.; Murray, G. Automatic summarization of bug reports. IEEE Trans. Softw. Eng. 2014, 40, 366–380.

- Lotufo, R.; Malik, Z.; Czarnecki, K. Modeling the ‘hurried’ bug report reading process to summarize bug reports. Empir. Softw. Eng. 2015, 20, 516–548.

- GitHub. Bug Report Summarization Benchmark Dataset. Available online: https://github.com/GindeLab/Ease_2025_AI_model (accessed on 21 September 2025).

- Beijing Academy of Artificial Intelligence (BAAI). BAAI/bge-large-en-v1.5 (English) Embedding Model; Technical Report (Model Release); Beijing Academy of Artificial Intelligence (BAAI): Beijing, China, 2023; Available online: https://huggingface.co/BAAI/bge-large-en-v1.5 (accessed on 21 September 2025).

- Team, Q. Qwen2.5 technical report. arXiv 2024, arXiv:2409.12121.

- Team, U. Unsloth: Efficient Fine-Tuning Framework for LLMs. GitHub Repository, 2025. Available online: https://github.com/unslothai/unsloth (accessed on 21 September 2025).

- Johnson, J.; Douze, M.; Jégou, H. Billion-scale similarity search with GPUs. IEEE Trans. Big Data 2021, 7, 535–547.

- Fang, S.; Tan, Y.-S.; Zhang, T.; Xu, Z.; Liu, H. Effective prediction of bug-fixing priority via weighted graph convolutional networks. IEEE Trans. Reliab. 2019, 7, 535–547.

- Fang, S.; Zhang, T.; Tan, Y.; Jiang, H.; Xia, X.; Sun, X. RepresentThemAll: A universal learning representation of bug reports. In Proceedings of the IEEE/ACM 45th International Conference on Software Engineering (ICSE), Melbourne, Australia, 14–20 May 2023; pp. 602–614.

- Liu, H.; Yu, Y.; Li, S.; Guo, Y.; Wang, D.; Mao, X. BugSum: Deep context understanding for bug report summarization. In Proceedings of the IEEE/ACM International Conference on Program Comprehension (ICPC), Seoul, Republic of Korea, 13–15 July 2020; pp. 94–105.

- Shao, Y.; Xiang, B. Towards effective bug report summarization by domain-specific representation learning. IEEE Access 2024, 12, 37653–37662.

- Lamkanfi, A.; Demeyer, S.; Giger, E.; Goethals, B. Predicting the severity of a reported bug. In Proceedings of the IEEE International Conference on Mining Software Repositories (MSR), Cape Town, South Africa, 2–3 May 2010; pp. 1–10.

- Sarkar, A.; Rigby, P.C.; Bartalos, B. Improving Bug Triaging with High Confidence Predictions at Ericsson. In Proceedings of the IEEE International Conference on Software Maintenance and Evolution (ICSME), Cleveland, OH, USA, 30 September–4 October 2019; pp. 81–91.

- Chen, M. Evaluating large language models trained on code. arXiv 2021, arXiv:2107.03374.

- Allal, L.B.; Muennighoff, N.; Umapathi, L.K.; Lipkin, B.; von Werra, L. StarCoder: Open source code LLMs. arXiv 2023, arXiv:2305.06161.

- Rozière, B.; Gehring, J.; Gloeckle, F.; Sootla, S.; Gat, I.; Tan, X.E.; Adi, Y.; Liu, J.; Sauvestre, R.; Remez, T.; et al. Code LLaMA: Open foundation models for code. arXiv 2023, arXiv:2308.12950.

- Luo, Z.; Xu, C.; Zhao, P.; Sun, Q.; Geng, X.; Hu, W.; Tao, C.; Ma, J.; Lin, Q.; Jiang, D. WizardCoder: Empowering code LLMs to speak code fluently. arXiv 2023, arXiv:2306.08568.

- Madaan, A.; Tandon, N.; Gupta, P.; Hallinan, S.; Gao, L.; Wiegreffe, S.; Alon, U.; Dziri, N.; Prabhumoye, S.; Yang, Y.; et al. Self-Refine: Iterative refinement with feedback. arXiv 2023, arXiv:2303.17651.

- Chen, X.; Lin, M.; Schärli, N.; Zhou, D. Teaching LLMs to self-debug. arXiv 2023, arXiv:2304.05125.

- Gou, Z.; Shao, Z.; Gong, Y.; Shen, Y.; Yang, Y.; Duan, N.; Chen, W. CRITIC: LLMs can self-correct with tool-interactive critiquing. arXiv 2023, arXiv:2305.11738.

- Nye, M.; Andreassen, A.J.; Gur-Ari, G.; Michalewski, H.; Austin, J.; Bieber, D.; Dohan, D.; Lewkowycz, A.; Bosma, M.; Luan, D.; et al. Show your work: Scratchpads for intermediate reasoning. arXiv 2021, arXiv:2112.00114.

- Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.-J. BLEU: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 6–7 July 2002; pp. 311–318.

- Zhang, T.; Kishore, V.; Wu, F.; Weinberger, K.Q.; Artzi, Y. BERTScore: Evaluating text generation with BERT. In Proceedings of the 8th International Conference on Learning Representations (ICLR 2020), Addis Ababa, Ethiopia, 26–30 April 2020.

- Fabbri, A.; Kryscinski, V.; McCann, B.; Xiong, C.; Socher, R.; Radev, D. SummEval: Re-evaluating summarization evaluation. Trans. Assoc. Comput. Linguist. 2021, 9, 391–409.

- Eghbali, A.; Pradel, M. CrystalBLEU: Precisely and efficiently measuring code generation quality. In Proceedings of the 37th IEEE/ACM International Conference on Automated Software Engineering, Belfast, UK, 10–12 June 2022; pp. 1–12.

- Zhou, S.; Alon, U.; Agarwal, S.; Neubig, G. CodeBERTScore: Evaluating code generation with BERT-based similarity. arXiv 2023, arXiv:2302.05527.

- Fu, J.; Ng, S.-K.; Jiang, Z.; Liu, P. GPTScore: Evaluate as you desire. arXiv 2023, arXiv:2302.04166.

- Wang, Z.; Zhou, S.; Fried, D.; Neubig, G. Execution-based evaluation for open-domain code generation. arXiv 2022, arXiv:2212.10481.

- Lu, S.; Guo, D.; Ren, R.; Huang, J.; Svyatkovskiy, A.; Blanco, A.; Clement, C.; Drain, D.; Jiang, D.; Tang, D.; et al. CodeXGLUE: A machine learning benchmark dataset for code understanding and generation. arXiv 2021, arXiv:2102.04664.

- Yasunaga, M.; Liang, P. Break-it-fix-it: Unsupervised learning for program repair. In Proceedings of the 38th International Conference on Machine Learning (ICML), Virtual, 18–24 July 2021; pp. 11941–11952.

- Ye, H.; Martinez, M.; Durieux, T.; Monperrus, M. A comprehensive study of automated program repair on QuixBugs. J. Syst. Softw. 2021, 171, 110825.

- GitHub. AgentReport: A Multi-Agent LLM Approach for Automated and Reproducible Bug Report Generation. Available online: https://github.com/insui12/AgentReport (accessed on 1 November 2025).

| Metric | Evaluated Aspect | Description |
|---|---|---|
| CTQRS | Structural Completeness | Evaluates overall completeness, traceability, quantifiability, reproducibility, and specificity. Scores are computed on a 17-point scale, then normalized to a 0–1 range. A score below 0.5 indicates a low-quality report, while a score of 0.9 or higher represents a high-quality report. This study employed the publicly released automatic scoring script from prior research. |
| ROUGE-1 Recall | Lexical Coverage | Measures how well the key terms of the reference report are included in the generated output. Scores between 0.3 and 0.5 indicate many missing key terms, while scores above 0.8 indicate sufficient coverage. However, Recall alone cannot effectively filter out unnecessary words. |
| ROUGE-1 F1 | Lexical Precision | Considers both Recall and Precision to evaluate whether essential terms are sufficiently included while unnecessary expressions are suppressed. Prior baseline work used only Recall, but this study additionally applied F1 to reflect precision. A low score suggests excessive redundant wording, while scores above 0.8 indicate a well-balanced report. |
| SBERT | Semantic Consistency | Assesses semantic alignment with the reference report using Sentence-BERT embeddings and cosine similarity. A score below 0.6 indicates semantic mismatch, while scores above 0.85 indicate that the generated report conveys the same meaning and context despite different phrasing. |
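To make the metric definitions above concrete, here is a minimal sketch of unigram-overlap scoring, cosine similarity, and the 17-point CTQRS normalization. It is not the scoring script used in the study: the tokenizer is a simplifying assumption, the cosine function takes precomputed embedding vectors rather than calling a Sentence-BERT model, and all function names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Sequence


def tokenize(text: str) -> List[str]:
    """Lowercase and keep alphanumeric runs (an assumed, simplified tokenizer)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def rouge1(candidate: str, reference: str) -> Dict[str, float]:
    """Unigram recall, precision, and F1 with clipped counts."""
    cand = Counter(tokenize(candidate))
    ref = Counter(tokenize(reference))
    overlap = sum((cand & ref).values())  # per-token minimum of the two counts
    recall = overlap / sum(ref.values()) if ref else 0.0
    precision = overlap / sum(cand.values()) if cand else 0.0
    f1 = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return {"recall": recall, "precision": precision, "f1": f1}


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two embedding vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def normalize_ctqrs(raw_points: float, max_points: int = 17) -> float:
    """Map a raw score on the 17-point scale to the 0-1 range."""
    return raw_points / max_points


if __name__ == "__main__":
    reference = "app crashes when saving a file"
    candidate = ("the app crashes when saving a file "
                 "after the latest update on windows")
    print(rouge1(candidate, reference))
    print(cosine_similarity([1.0, 0.0], [1.0, 1.0]))
    print(normalize_ctqrs(14))
```

In the example, the candidate contains all six reference tokens, so Recall is 1.0, but only 6 of its 13 tokens match, so Precision is 6/13 and F1 is 12/19 (about 0.63). This is the situation the table describes: Recall alone does not penalize extra wording, while F1 does.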

| Metric | Mean (%) | 95% CI Low (%) | 95% CI High (%) |
|---|---|---|---|
| CTQRS | 80.5 | 79.3 | 81.7 |
| ROUGE-1 Recall | 84.6 | 82.7 | 86.4 |
| ROUGE-1 F1 | 56.8 | 54.9 | 58.9 |
| SBERT | 86.4 | 85.2 | 87.5 |
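The table above reports a 95% confidence interval around each mean. How the intervals were computed is not stated in this section; one common option is a percentile bootstrap over per-report scores, sketched below as an assumption rather than the authors' procedure. The function name, resample count, and example scores are all hypothetical.

```python
import random
from statistics import mean
from typing import List, Tuple


def bootstrap_ci(
    scores: List[float],
    n_resamples: int = 2000,
    alpha: float = 0.05,
    seed: int = 0,
) -> Tuple[float, float, float]:
    """Return (mean, low, high) using a percentile bootstrap of the mean."""
    rng = random.Random(seed)  # fixed seed so repeated runs give the same interval
    n = len(scores)
    # Resample with replacement and collect the mean of each resample.
    resampled_means = sorted(
        mean(rng.choices(scores, k=n)) for _ in range(n_resamples)
    )
    low = resampled_means[int((alpha / 2) * n_resamples)]
    high = resampled_means[int((1 - alpha / 2) * n_resamples) - 1]
    return mean(scores), low, high


if __name__ == "__main__":
    # Made-up per-report scores, for illustration only.
    example_scores = [0.70, 0.80, 0.90, 0.85, 0.75, 0.80, 0.95, 0.60]
    m, low, high = bootstrap_ci(example_scores)
    print(f"mean={m:.3f}, 95% CI=({low:.3f}, {high:.3f})")
```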

| Configuration | CTQRS (%) | ROUGE-1 Recall (%) | ROUGE-1 F1 (%) | SBERT (%) |
|---|---|---|---|---|
| Base | 74.7 | 65.6 | 24.1 | 83.0 |
| CTQRS only | 76.0 | 67.8 | 24.5 | 84.3 |
| CoT only | 76.5 | 67.5 | 24.9 | 84.6 |
| one-shot only | 80.0 | 79.9 | 26.8 | 83.4 |
| QLoRA-4bit only | 79.8 | 72.0 | 57.7 | 87.3 |
| All except CoT | 81.0 | 84.3 | 54.9 | 84.8 |
| All except one-shot | 80.0 | 70.0 | 56.5 | 86.7 |
| All except QLoRA | 76.9 | 75.4 | 24.9 | 82.5 |
| All except CTQRS | 81.1 | 84.7 | 55.1 | 85.9 |
| All combined | 80.5 | 84.6 | 56.8 | 86.4 |
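One way to read the leave-one-out rows of the ablation table is to subtract the "All combined" row from each "All except X" row. The sketch below does only that arithmetic on the values reported above; the dictionary layout and function name are hypothetical.

```python
from typing import Dict

METRICS = ["CTQRS", "ROUGE-1 Recall", "ROUGE-1 F1", "SBERT"]

# Values copied from the ablation table (percent).
ABLATION: Dict[str, Dict[str, float]] = {
    "All combined": dict(zip(METRICS, [80.5, 84.6, 56.8, 86.4])),
    "All except CoT": dict(zip(METRICS, [81.0, 84.3, 54.9, 84.8])),
    "All except one-shot": dict(zip(METRICS, [80.0, 70.0, 56.5, 86.7])),
    "All except QLoRA": dict(zip(METRICS, [76.9, 75.4, 24.9, 82.5])),
    "All except CTQRS": dict(zip(METRICS, [81.1, 84.7, 55.1, 85.9])),
}


def removal_effect(component: str) -> Dict[str, float]:
    """Change in percentage points when one component is removed."""
    full = ABLATION["All combined"]
    without = ABLATION[f"All except {component}"]
    return {m: round(without[m] - full[m], 1) for m in METRICS}


if __name__ == "__main__":
    for component in ["CoT", "one-shot", "QLoRA", "CTQRS"]:
        print(component, removal_effect(component))
```

On these numbers, removing QLoRA produces the largest drop in ROUGE-1 F1 (-31.9 pp), and removing the one-shot exemplar produces the largest drop in ROUGE-1 Recall (-14.6 pp). Removing CoT or the CTQRS prompt changes CTQRS and Recall by less than 1 pp in either direction.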

| Category | Baseline | AgentReport |
|---|---|---|
| Structural Features | LoRA-based instruction fine-tuning + simple prompts | QLoRA-4bit + CTQRS-based structured prompts + CoT + one-shot + multi-agent modular pipeline |
| CTQRS | 77.0% | 80.5% (consistent inclusion of core elements, stable improvement) |
| ROUGE-1 Recall | 61.0% | 84.6% (greater coverage, faithful reflection of reference vocabulary) |
| ROUGE-1 F1 | – | 56.8% (not high in absolute terms, precision improvement needed) |
| SBERT | 85.0% | 86.4% (semantic consistency maintained with refined expressions) |
| Advantages | Simple implementation, provides baseline reference | Improved performance, structural completeness, semantic stability, reproducibility, scalability |
| Limitations | Insufficient CTQRS coverage, recall-oriented bias, lack of precision, no reproducibility or scalability | F1 relatively low, requiring improvement in conciseness and accuracy |
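The gains quoted in percentage points (pp) follow directly from the comparison table. The short sketch below reproduces that arithmetic and, for contrast, shows the corresponding relative change; the variable and function names are hypothetical, and the Baseline has no ROUGE-1 F1 value to compare against.

```python
from typing import Dict, Optional

# Values copied from the comparison table (percent); None marks "not reported".
BASELINE: Dict[str, Optional[float]] = {
    "CTQRS": 77.0,
    "ROUGE-1 Recall": 61.0,
    "ROUGE-1 F1": None,
    "SBERT": 85.0,
}
AGENTREPORT: Dict[str, float] = {
    "CTQRS": 80.5,
    "ROUGE-1 Recall": 84.6,
    "ROUGE-1 F1": 56.8,
    "SBERT": 86.4,
}


def pp_gain(metric: str) -> Optional[float]:
    """Absolute difference in percentage points, or None if no baseline value."""
    base = BASELINE[metric]
    if base is None:
        return None
    return round(AGENTREPORT[metric] - base, 1)


def relative_gain(metric: str) -> Optional[float]:
    """Relative change in percent of the baseline value, or None if unavailable."""
    base = BASELINE[metric]
    if base is None:
        return None
    return round((AGENTREPORT[metric] - base) / base * 100, 1)


if __name__ == "__main__":
    for metric in AGENTREPORT:
        print(metric, pp_gain(metric), relative_gain(metric))
```

For ROUGE-1 Recall, the +23.6 pp gain corresponds to a relative increase of about 38.7% over the Baseline value, which is why the two kinds of figures should not be mixed when comparing results.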

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Choi, S.; Yang, G. AgentReport: A Multi-Agent LLM Approach for Automated and Reproducible Bug Report Generation. Appl. Sci. 2025, 15, 11931. https://doi.org/10.3390/app152211931