PrivRewrite: Differentially Private Text Rewriting Under Black-Box Access with Refined Sensitivity Guarantees

Abstract

1. Introduction

- We propose PrivRewrite, a novel method for DP text rewriting that achieves formal $\varepsilon$-DP guarantees using only black-box access to LLMs. The approach is lightweight and practical, and it avoids reliance on model internals, fine-tuning, or local inference.

- We formally define the selection utility for the exponential mechanism in the text-rewriting setting and derive its global sensitivity. Our analysis yields a tighter sensitivity bound than naive estimates, enabling improved utility at the same privacy level.

- We conduct extensive experiments to assess the privacy–utility trade-off of PrivRewrite. Results demonstrate that our method achieves high semantic quality while maintaining formal DP guarantees and remains effective even in constrained API-only access scenarios.

2. Related Work

3. Preliminary and Problem Definition

3.1. Preliminary

3.2. Problem Definition

- Utility: The rewritten text should preserve the semantic content of x and remain fluent and coherent.

- Privacy: The mechanism must satisfy $\varepsilon$-DP with respect to x.

- Practicality: The mechanism should operate with only black-box access to a language model, without relying on internal components such as logits, gradients, or model weights.
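For reference, the privacy requirement can be written in the standard form of pure differential privacy. This is the textbook definition rather than a statement copied from this paper; the symbols $\mathcal{M}$ (the rewriting mechanism), $x'$ (a neighboring input), and $S$ (a set of possible outputs) are notation assumed here for illustration, and which pairs of inputs count as neighbors is fixed by the paper's preliminaries.

$$
\Pr[\mathcal{M}(x) \in S] \;\le\; e^{\varepsilon} \, \Pr[\mathcal{M}(x') \in S]
\quad \text{for all neighboring inputs } x, x' \text{ and all output sets } S .
$$

A smaller $\varepsilon$ forces the output distributions for $x$ and $x'$ closer together, which strengthens privacy and typically reduces utility.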

3.3. Threat Model and Assumptions

4. Proposed Method

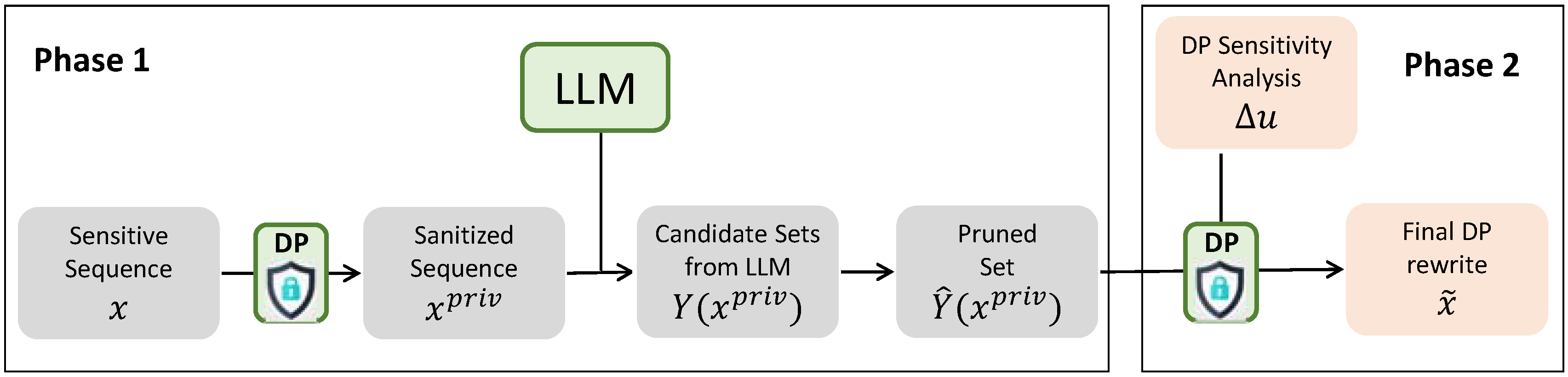

- Phase 1 (Sanitized candidate generation): Given an input sequence, we construct a privatized view using a per-token DP sanitizer. The sanitized text is then submitted to the black-box LLM, which produces a set of k rewrite candidates. To reduce redundancy, near-duplicate candidates are pruned based on candidate-to-candidate similarity.

- Phase 2 (Differentially private selection): From the candidate set, we choose a single rewrite using the exponential mechanism. The mechanism samples each candidate with a probability that increases exponentially with its bounded similarity score with respect to the input, so the final output tends to preserve meaning while the randomness of the selection provides the privacy guarantee.
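The selection step in Phase 2 can be illustrated with a minimal sketch of the standard exponential mechanism, which samples a candidate with probability proportional to exp(ε · u / (2 · Δu)). This is not the paper's implementation: the function names, the example scores, and the sensitivity values are hypothetical, and the scores are assumed to be bounded similarity values that have already been computed against the input.

```python
import math
import random
from typing import List, Optional, Sequence


def exponential_mechanism_probs(
    scores: Sequence[float], epsilon: float, sensitivity: float
) -> List[float]:
    """Return selection probabilities proportional to exp(eps * u / (2 * sens))."""
    if not scores:
        raise ValueError("scores must be non-empty")
    if epsilon <= 0 or sensitivity <= 0:
        raise ValueError("epsilon and sensitivity must be positive")
    scale = epsilon / (2.0 * sensitivity)
    # Subtract the maximum score before exponentiating for numerical stability;
    # this does not change the normalized probabilities.
    top = max(scores)
    weights = [math.exp(scale * (s - top)) for s in scores]
    total = sum(weights)
    return [w / total for w in weights]


def select_rewrite(
    candidates: Sequence[str],
    scores: Sequence[float],
    epsilon: float,
    sensitivity: float,
    rng: Optional[random.Random] = None,
) -> str:
    """Sample one candidate according to the exponential-mechanism distribution."""
    if len(candidates) != len(scores):
        raise ValueError("candidates and scores must have the same length")
    rng = rng or random.Random()
    probs = exponential_mechanism_probs(scores, epsilon, sensitivity)
    return rng.choices(list(candidates), weights=probs, k=1)[0]


if __name__ == "__main__":
    # Hypothetical candidates and similarity scores, for illustration only.
    cands = ["rewrite A", "rewrite B", "rewrite C"]
    sims = [0.9, 0.5, 0.1]
    print(exponential_mechanism_probs(sims, epsilon=2.0, sensitivity=1.0))
    print(select_rewrite(cands, sims, epsilon=2.0, sensitivity=1.0))
```

With ε held fixed, a smaller sensitivity value concentrates more probability on the highest-scoring candidate, which is why a tighter sensitivity bound can improve utility at the same privacy level.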

4.1. Phase 1 (Sanitized Candidate Generation)

4.1.1. Token-Level Sanitization

4.1.2. Candidate Generation Using LLM

4.1.3. Near-Duplicate Pruning

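- Initialize the set of retained candidates as empty.

- For each candidate index j in generation order, add the j-th candidate to the retained set if its similarity to every candidate already retained stays below the near-duplicate threshold.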
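The greedy pruning pass can be sketched as follows. This is a minimal illustration under assumptions not stated in the text: candidates are represented by embedding vectors, candidate-to-candidate similarity is cosine similarity, and the threshold value is chosen by the caller. The names `cosine_similarity` and `prune_near_duplicates` are hypothetical.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two vectors; returns 0.0 if either has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def prune_near_duplicates(
    embeddings: Sequence[Sequence[float]], threshold: float
) -> List[int]:
    """Return indices of candidates kept by a single greedy pass.

    A candidate is kept only if its similarity to every previously kept
    candidate is strictly below `threshold` (an assumed hyperparameter).
    """
    kept: List[int] = []
    for j, emb in enumerate(embeddings):
        if all(cosine_similarity(emb, embeddings[i]) < threshold for i in kept):
            kept.append(j)
    return kept


if __name__ == "__main__":
    # Toy 2-D embeddings: the second vector is nearly parallel to the first.
    toy = [[1.0, 0.0], [0.99, 0.01], [0.0, 1.0]]
    print(prune_near_duplicates(toy, threshold=0.95))
```

Because the pass is order-dependent, the earliest candidate in each cluster of near-duplicates is the one that survives.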

4.2. Phase 2 (Differentially Private Selection)

4.2.1. Utility Function

4.2.2. Exponential-Mechanism Selection

4.3. Privacy Guarantee and Utility Analysis

4.3.1. Privacy Guarantee

4.3.2. Utility Analysis and Fallback Under Post-Processing

5. Experiments

5.1. Experimental Setup

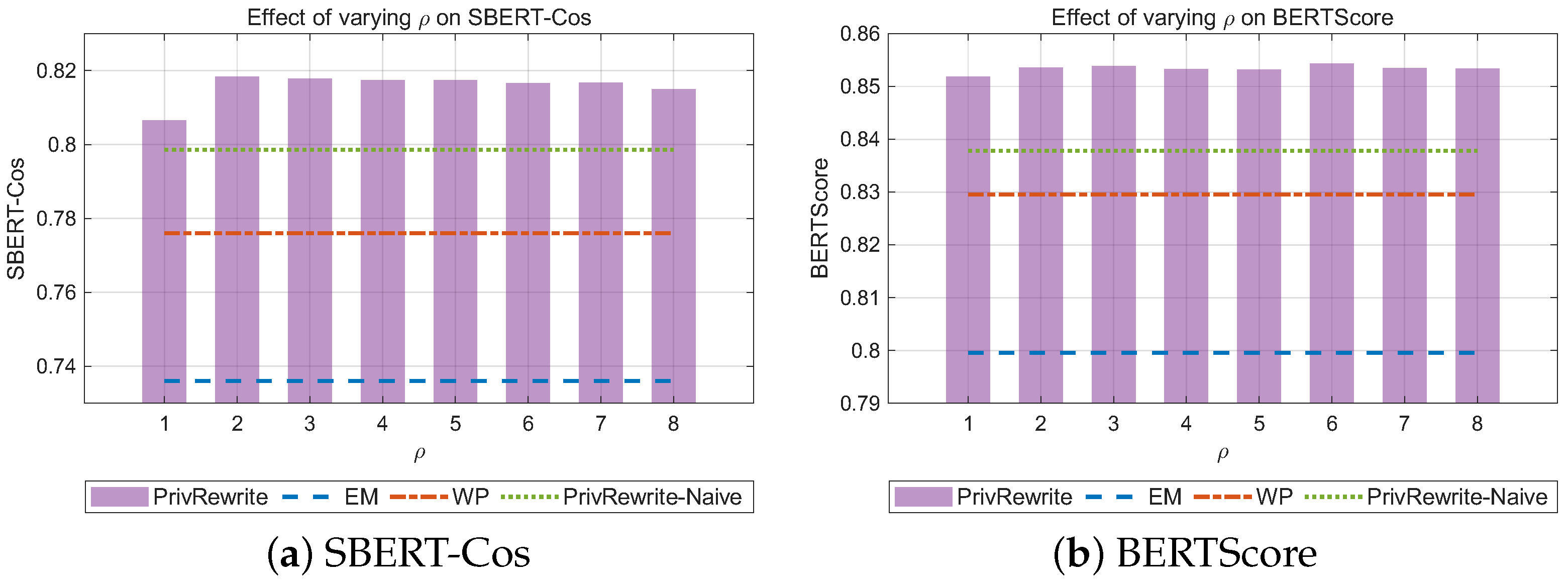

- WordPerturb (WP) [11]: A word-level privatization approach based on $d_\chi$-privacy, a metric-based relaxation of DP. Each word embedding is perturbed with calibrated noise in the vector space and then mapped back to the nearest vocabulary word. This provides metric-DP guarantees while aiming to preserve semantic meaning.

- Exponential mechanism-based text rewriting (EM): An approach that applies the exponential mechanism [39] to rewrite each token independently. This corresponds to directly releasing the token-level sanitized text produced in Phase 1.

- PrivRewrite with naive sensitivity (PrivRewrite-Naive): A variant of our method where the global sensitivity in Phase 2 is conservatively set to . This baseline isolates the effect of our proposed tight sensitivity analysis.

- PrivRewrite: The proposed approach introduced in this paper, which combines token-level sanitization (Phase 1) with tight-sensitivity exponential selection (Phase 2).
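To make the word-level baseline concrete, the perturb-then-project step of WordPerturb can be sketched as below. This is an illustration, not the cited implementation: it assumes the commonly described sampling scheme for metric-DP noise (a uniformly random direction scaled by a Gamma-distributed magnitude with shape equal to the embedding dimension and scale 1/ε), and the toy two-dimensional embedding table and the function names are hypothetical.

```python
import math
import random
from typing import Dict, List


def sample_metric_dp_noise(dim: int, epsilon: float, rng: random.Random) -> List[float]:
    """Draw a noise vector: uniform direction, Gamma(dim, 1/epsilon) magnitude."""
    if dim <= 0 or epsilon <= 0:
        raise ValueError("dim and epsilon must be positive")
    norm = 0.0
    while norm == 0.0:  # resample in the (practically impossible) zero-vector case
        direction = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        norm = math.sqrt(sum(v * v for v in direction))
    magnitude = rng.gammavariate(dim, 1.0 / epsilon)
    return [magnitude * v / norm for v in direction]


def perturb_word(
    word: str,
    embeddings: Dict[str, List[float]],
    epsilon: float,
    rng: random.Random,
) -> str:
    """Add noise to the word's embedding and return the nearest vocabulary word."""
    vector = embeddings[word]
    noise = sample_metric_dp_noise(len(vector), epsilon, rng)
    noisy = [v + n for v, n in zip(vector, noise)]
    # Project back onto the vocabulary by Euclidean nearest neighbor.
    return min(embeddings, key=lambda w: math.dist(embeddings[w], noisy))


if __name__ == "__main__":
    # Hypothetical toy embeddings; real systems use pretrained word vectors.
    toy = {
        "tumor": [1.0, 0.0],
        "growth": [0.8, 0.3],
        "liver": [0.0, 1.0],
    }
    rng = random.Random(0)
    print([perturb_word("tumor", toy, epsilon=5.0, rng=rng) for _ in range(5)])
```

A larger ε shrinks the expected noise magnitude, so the output word is more likely to be the original word; a smaller ε pushes the noisy vector toward unrelated words, which is consistent with the heavily distorted token-level samples shown in the results.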

5.2. Experimental Results

6. Conclusions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Song, S.; Kim, J. Adapting Geo-Indistinguishability for Privacy-Preserving Collection of Medical Microdata. Electronics 2023, 12, 2793.

2. Saura, J.R.; Ribeiro-Soriano, D.; Palacios-Marques, D. From user-generated data to data-driven innovation: A research agenda to understand user privacy in digital markets. Int. J. Inf. Manag. 2021, 60, 102331.

3. Kim, J.W.; Lim, J.H.; Moon, S.M.; Jang, B. Collecting health lifelog data from smartwatch users in a privacy-preserving manner. IEEE Trans. Consum. Electron. 2019, 65, 369–378.

4. Dash, S.; Shakyawar, S.K.; Sharma, M.; Kaushik, S. Big data in healthcare: Management, analysis and future prospects. J. Big Data 2019, 6, 1–25.

5. Li, M.; Liu, J.; Yang, Y. Automated Identification of Sensitive Financial Data Based on the Topic Analysis. Future Internet 2024, 16, 55.

6. Health Insurance Portability and Accountability Act. Available online: https://www.hhs.gov/hipaa/index.html (accessed on 18 April 2025).

7. General Data Protection Regulation. Available online: https://gdpr-info.eu/ (accessed on 18 April 2025).

8. Dwork, C. Differential privacy. In Proceedings of the International Colloquium on Automata, Languages, and Programming, Venice, Italy, 10–14 July 2006; pp. 1–12.

9. Erlingsson, U.; Pihur, V.; Korolova, A. RAPPOR: Randomized aggregatable privacy-preserving ordinal response. In Proceedings of the ACM SIGSAC Conference on Computer and Communications Security, Scottsdale, AZ, USA, 3–7 November 2014; pp. 1054–1067.

10. Wang, T.; Blocki, J.; Li, N.; Jha, S. Locally differentially private protocols for frequency estimation. In Proceedings of the USENIX Conference on Security Symposium, Berkeley, CA, USA, 16–18 August 2017.

11. Feyisetan, O.; Balle, B.; Drake, T.; Diethe, T. Privacy- and utility-preserving textual analysis via calibrated multivariate perturbations. In Proceedings of the International Conference on Web Search and Data Mining, Houston, TX, USA, 3–7 February 2020; pp. 178–186.

12. Xu, Z.; Aggarwal, A.; Feyisetan, O.; Teissier, N. A differentially private text perturbation method using regularized Mahalanobis metric. In Proceedings of the Second Workshop on Privacy in NLP, Online, 20 November 2020; pp. 7–17.

13. Carvalho, R.S.; Vasiloudis, T.; Feyisetan, O. TEM: High utility metric differential privacy on text. arXiv 2021, arXiv:2107.07928.

14. Meehan, C.; Mrini, K.; Chaudhuri, K. Sentence-level privacy for document embeddings. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics, Dublin, Ireland, 22–27 May 2022; pp. 3367–3380.

15. Li, X.; Wang, S.; Zeng, S.; Wu, Y.; Yang, Y. A survey on LLM-based multi-agent systems: Workflow, infrastructure, and challenges. Vicinagearth 2024, 1, 9.

16. Zhou, H.; Hu, C.; Yuan, Y.; Cui, Y.; Jin, Y.; Chen, C. Large Language Model (LLM) for Telecommunications: A Comprehensive Survey on Principles, Key Techniques, and Opportunities. IEEE Commun. Surv. Tutor. 2025, 27, 1955–2005.

17. Mattern, J.; Weggenmann, B.; Kerschbaum, F. The Limits of Word Level Differential Privacy. In Findings of the Association for Computational Linguistics: NAACL 2022; Association for Computational Linguistics: Singapore, 2022; pp. 867–881.

18. Utpala, S.; Hooker, S.; Chen, P.-Y. Locally Differentially Private Document Generation Using Zero Shot Prompting. In Findings of the Association for Computational Linguistics: EMNLP 2023; Association for Computational Linguistics: Singapore, 2023; pp. 8442–8457.

19. Meisenbacher, S.; Chevli, M.; Vladika, J.; Matthes, F. DP-MLM: Differentially Private Text Rewriting Using Masked Language Models. In Findings of the Association for Computational Linguistics: ACL 2024; Association for Computational Linguistics: Singapore, 2024; pp. 9314–9328.

20. Tong, M.; Chen, K.; Zhang, J.; Qi, Y.; Zhang, W.; Yu, N.; Zhang, T.; Zhang, Z. InferDPT: Privacy-Preserving Inference for Black-box Large Language Model. arXiv 2023, arXiv:2310.12214.

21. Miglani, V.; Yang, A.; Markosyan, A.; Garcia-Olano, D.; Kokhlikyan, N. Using Captum to Explain Generative Language Models. In Proceedings of the 3rd Workshop for Natural Language Processing Open Source Software, Singapore, 6 December 2023; pp. 165–173.

22. Chang, Y.; Cao, B.; Wang, Y.; Chen, J.; Lin, L. XPrompt: Explaining Large Language Model’s Generation via Joint Prompt Attribution. arXiv 2024, arXiv:2405.20404.

23. Zhou, X.; Lu, Y.; Ma, R.; Gui, T.; Wang, Y.; Ding, Y.; Zhang, Y.; Zhang, Q.; Huang, X. TextObfuscator: Making Pre-trained Language Model a Privacy Protector via Obfuscating Word Representations. In Findings of the Association for Computational Linguistics: ACL 2023; Association for Computational Linguistics: Singapore, 2023; pp. 5459–5473.

24. Yue, X.; Du, M.; Wang, T.; Li, Y.; Sun, H.; Chow, S.S.M. Differential Privacy for Text Analytics via Natural Text Sanitization. In Findings of the Association for Computational Linguistics: ACL-IJCNLP 2021; Association for Computational Linguistics: Singapore, 2021; pp. 3853–3866.

25. Chen, H.; Mo, F.; Wang, Y.; Chen, C.; Nie, J.-Y.; Wang, C.; Cui, J. A Customized Text Sanitization Mechanism with Differential Privacy. In Findings of the Association for Computational Linguistics: ACL 2023; Association for Computational Linguistics: Singapore, 2023; pp. 4606–4621.

26. Bollegala, D.; Otake, S.; Machide, T.; Kawarabayashi, K. A Metric Differential Privacy Mechanism for Sentence Embeddings. ACM Trans. Priv. Secur. 2025, 28, 1–34.

27. Li, M.; Fan, H.; Fu, S.; Ding, J.; Feng, Y. DP-GTR: Differentially Private Prompt Protection via Group Text Rewriting. arXiv 2025, arXiv:2503.04990.

28. Lin, S.; Hua, W.; Wang, Z.; Jin, M.; Fan, L.; Zhang, Y. EmojiPrompt: Generative Prompt Obfuscation for Privacy-Preserving Communication with Cloud-based LLMs. arXiv 2025, arXiv:2402.05868.

29. Mai, P.; Yan, R.; Huang, Z.; Yang, Y.; Pang, Y. Split-and-Denoise: Protect Large Language Model Inference with Local Differential Privacy. arXiv 2024, arXiv:2310.09130.

30. Wu, H.; Dai, W.; Wang, L.; Yan, Q. Cape: Context-Aware Prompt Perturbation Mechanism with Differential Privacy. arXiv 2025, arXiv:2505.05922.

31. Hong, J.; Wang, J.T.; Zhang, C.; Li, Z.; Li, B.; Wang, Z. DP-OPT: Make Large Language Model Your Privacy-Preserving Prompt Engineer. arXiv 2024, arXiv:2312.03724.

32. Zhou, Y.; Ni, T.; Lee, W.-B.; Zhao, Q. A Survey on Backdoor Threats in Large Language Models (LLMs): Attacks, Defenses, and Evaluation Methods. Trans. Artif. Intell. 2025, 1, 28–58.

33. Wang, J.; Ni, T.; Lee, W.-B.; Zhao, Q. A Contemporary Survey of Large Language Model Assisted Program Analysis. Trans. Artif. Intell. 2025, 1, 105–129.

34. Jaffal, N.O.; Alkhanafseh, M.; Mohaisen, D. Large Language Models in Cybersecurity: A Survey of Applications, Vulnerabilities, and Defense Techniques. AI 2025, 6, 216.

35. Choi, S.; Alkinoon, A.; Alghuried, A.; Alghamdi, A.; Mohaisen, D. Attributing ChatGPT-Transformed Synthetic Code. In Proceedings of the IEEE International Conference on Distributed Computing Systems, Glasgow, Scotland, 20–23 July 2025; pp. 89–99.

36. Lin, J.; Mohaisen, D. From Large to Mammoth: A Comparative Evaluation of Large Language Models in Vulnerability Detection. In Proceedings of the Network and Distributed System Security Symposium, San Diego, CA, USA, 24–28 February 2025.

37. Alghamdi, A.; Mohaisen, D. Through the Looking Glass: LLM-Based Analysis of AR/VR Android Applications Privacy Policies. In Proceedings of the International Conference on Machine Learning and Applications, Vienna, Austria, 21–27 July 2024; pp. 534–539.

38. Lin, J.; Mohaisen, D. Evaluating Large Language Models in Vulnerability Detection Under Variable Context Windows. In Proceedings of the International Conference on Machine Learning and Applications, Vienna, Austria, 21–27 July 2024; pp. 1131–1134.

39. Dwork, C.; Roth, A. The algorithmic foundations of differential privacy. Found. Trends Theor. Comput. Sci. 2014, 9, 211–407.

40. Ben Abacha, A.; Demner-Fushman, D. A Question-Entailment Approach to Question Answering. BMC Bioinform. 2019, 20, 511.

41. Maas, A.L.; Daly, R.E.; Pham, P.T.; Huang, D.; Ng, A.Y.; Potts, C. Learning Word Vectors for Sentiment Analysis. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics, Portland, OR, USA, 19–24 June 2011.

42. Reimers, N.; Gurevych, I. Sentence-BERT: Sentence embeddings using Siamese BERT networks. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Hong Kong, China, 3–7 November 2019; pp. 3982–3992.

43. Zhang, T.; Kishore, V.; Wu, F.; Weinberger, K.Q.; Artzi, Y. BERTScore: Evaluating text generation with BERT. In Proceedings of the International Conference on Learning Representations, Addis Ababa, Ethiopia, 26–30 April 2020.

| Dataset | vs. | | | | | vs. Phase 1 Candidates | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| MedQuAD | 0.708 | 0.725 | 0.737 | 0.736 | 0.746 | 0.798 | 0.801 | 0.802 | 0.806 | 0.809 |
| IMDB | 0.679 | 0.689 | 0.696 | 0.713 | 0.722 | 0.772 | 0.778 | 0.788 | 0.792 | 0.798 |

| Method | Text |
|---|---|
| Sample 1 | |
| Original | Pilomatrixoma is a benign (non-cancerous) skin tumor of the hair follicle (structure in the skin that makes hair). They tend to develop in the head and neck area and are usually not associated with any other signs and symptoms (isolated). |
| EM | Pilomatrixoma is a impervious (basis-carcinoma) plastic nerve of the wear pineal (includes in the yellowish that always bones). They get to establishing in the over and neck situated and are usually not possible with any no real and infection (remain). |
| PrivRewrite-Naive | Pilomatrixoma is a benign skin tumor that originates from hair follicle cells and often contains calcifications, giving it a firm texture. These tumors commonly appear in the head and neck region, typically presenting as painless, slow-growing masses without signs of inflammation. |
| PrivRewrite | Pilomatrixoma is a benign skin tumor, sometimes confused with more concerning conditions. These growths tend to occur on the head and neck and typically present without pain or signs of infection. |
| Sample 2 | |
| Original | Autoimmune hepatitis is a chronicor long lastingdisease in which the body’s immune system attacks the normal components, or cells, of the liver and causes inflammation and liver damage. The immune system normally protects people from infection by identifying and destroying bacteria, viruses, and other potentially harmful foreign substances. |
| EM | Autoimmune hepatitis is a chronicor in which the similar’s inhibiting provide arson the life aircraft, or bacterial, of the virus and chronic rashes and treat threatening. The protects provide if protect wanted from malignancy by diagnosing and tearing micro-organisms, viruses, and known cause deleterious foreigners toxin. |
| PrivRewrite-Naive | Autoimmune hepatitis is a chronic inflammatory liver disease where the body’s defense system mistakenly attacks the liver. This can cause prolonged liver damage, potentially leading to severe health risks and even life-threatening complications if left untreated. |
| PrivRewrite | Autoimmune hepatitis is a chronic inflammatory condition affecting the liver, where the body’s immune system mistakenly attacks its own liver cells. This can result in ongoing inflammation and damage to the liver, potentially leading to severe health problems and even life-threatening complications if left untreated. |

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kim, J. PrivRewrite: Differentially Private Text Rewriting Under Black-Box Access with Refined Sensitivity Guarantees. Appl. Sci. 2025, 15, 11930. https://doi.org/10.3390/app152211930