Abstract

AI chatbots have the potential to facilitate students’ academic progress and enhance knowledge accessibility in higher education, yet learners’ attitudes toward these technologies vary amid AI-driven disruptions, with factors influencing acceptance remaining debated. The current study constructs an integrated model based on Technology Acceptance Model 3 (TAM3), an extension of the original TAM, incorporating factors including Self-Efficacy, Perceived Enjoyment, Anxiety, Perceived Ease of Use, Perceived Usefulness, Output Quality, Social Influence, and Behavioral Intention, to explore determinants and mechanisms influencing learners’ acceptance of AI chatbots. This addresses key challenges in AI-augmented learning, such as personalization benefits versus risks like information inaccuracy and ethical concerns. Results from the questionnaire survey analysis with 265 valid responses reveal significant relationships: (1) self-efficacy significantly predicts perceived ease of use; (2) both perceived enjoyment and perceived ease of use positively influence perceived usefulness; and (3) self-efficacy, perceived usefulness, and social influence collectively exert significant effects on behavioral intention. Measurement invariance tests further indicate significant differences in acceptance between undergraduate and graduate students, suggesting academic level moderates behavioral intentions. Findings offer principled guidance for designing inclusive AI tools that mitigate accessibility barriers and promote equitable adoption in educational environments.

1. Introduction

In recent years, the surge in computing power has driven the development and application of artificial intelligence technology in education [1], mainly in personalized tutoring, homework help, concept learning, standardized test preparation, discussion and collaboration, and mental health support [2]. Existing literature emphasizes its ability to significantly enhance emotional communication in the learning process and to provide a more personalized learning experience for each student [3]. An AI chatbot is an automated conversational system capable of interacting with humans in natural language. Functioning as a virtual personal assistant, it supports a variety of tasks [4]. AI chatbots can meet students’ needs for academic consultation [5] and improve communication efficiency by responding to inquiries 24/7, overcoming human limitations [6]. They also allow students to communicate in their native language, which makes them more inclusive [7]. AI-driven chatbots can further use predictive technology to provide early intervention support for students who are at risk or going through rebellious periods [8]. Although AI chatbots may have multiple positive impacts on learners, their current acceptance rate remains relatively low, and the key factors influencing learners’ adoption of AI chatbots, as well as the relationships among these factors, remain unclear.

Davis proposed the Technology Acceptance Model (TAM) to evaluate technology adoption in organizational settings [9]. Sánchez-Prieto et al. used TAM to study students’ acceptance of AI-based assessment tools [10], and Gupta applied TAM to investigate the determinants of teachers’ adoption of emerging technologies such as AI in teaching [11]. Venkatesh and Bala extended TAM2 by adding two groups of determinants, individual differences and system characteristics, and proposed TAM3, a more comprehensive and widely applicable model. Their theoretical advancement posits that these two groups of variables play significant roles in shaping individuals’ acceptance and use of information technology systems [12]. Lin et al. used TAM3 to study the factors influencing students’ acceptance and use of handheld technology for digitizing MOOCs [13]. Kim et al. focused on perceived ease of communication and perceived usefulness within TAM to examine students’ perceptions of AI teaching assistants [14].

Some studies have extended TAM to investigate students’ perceptions of educational tools. Shamsi et al. expanded TAM with constructs such as subjective norms, enjoyment, facilitating conditions, trust, and security to explore how students use AI-driven conversational agents for learning [15]. Ragheb et al. combined UTAUT with the social influence construct to study students’ acceptance of chatbot technology [16]. Bilquise integrated TAM, the service robot acceptance model (sRAM), and self-determination theory (SDT) to understand UAE students’ acceptance of academic advisory chatbots [17]. The existing literature suggests that, as users’ expectations of technology have evolved, the original TAM alone offers limited insight into behavioral intentions in educational contexts. It is therefore important to introduce external factors such as social influence to gain a more comprehensive understanding of users’ acceptance of intelligent chatbots.

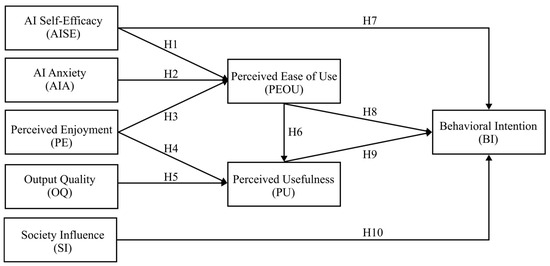

This study builds on the TAM3 model and incorporates external factors such as social influence, while omitting elements of the original model, including job relevance, result demonstrability, perceptions of external control, computer playfulness, and objective usability, because these factors are less directly related to students’ immediate learning experiences and needs in an educational context. The study analyzes eight constructs: Perceived Ease of Use (PEOU), Perceived Usefulness (PU), Self-Efficacy (AISE), Anxiety (AIA), Perceived Enjoyment (PE), Output Quality (OQ), Social Influence (SI), and Behavioral Intention (BI), the last of which serves as the outcome representing students’ intention to use AI chatbots. These constructs were selected to capture students’ beliefs and expectations regarding the acceptance of AI chatbots. In addition, education level has been examined as a moderator of behavioral intention in previous TAM-based studies; for example, Qaid et al. found that education level affects university lecturers’ attitudes toward using e-government services [18].

Integrating chatbots into educational settings has the potential to create more efficient learning environments [19]. AI tools can provide students with timely feedback anytime and anywhere, potentially increasing student success rates and engagement, especially among students from disadvantaged backgrounds [20]. For homework and learning assistance, AI chatbots can offer detailed feedback and suggestions on student assignments [21]. For example, ChatGPT can serve as a useful learning companion, providing step-by-step solutions and guiding students through complex homework problems [22], and it can write essays at a level similar to that of a third-year medical student [23]. For personalized learning, AI chatbots can provide individualized guidance that identifies learning blind spots and enhances learning outcomes [24], meeting each student’s unique needs and helping them learn difficult concepts and deepen their understanding [25]. Khan et al. studied the impact of ChatGPT on medical education and clinical management, emphasizing its ability to provide students with tailored learning opportunities [26]. The interactive, conversational nature of ChatGPT can increase student engagement and motivation, making learning more enjoyable and personalized [27]. For skill development, AI chatbots can help students improve their writing by offering syntax and grammar corrections [28] and can foster problem-solving abilities [29].

However, integrating AI chatbots into student education also presents challenges. The first is information reliability and accuracy: AI chatbots may provide biased or inaccurate information [27], potentially misleading students and hindering their learning progress. Ensuring the reliability and accuracy of chatbot-provided information is especially crucial in medical education [26]. Moreover, biased training data can lead chatbots to echo distortions, stereotypes, or discriminatory advice. The second is academic integrity: educators may find it difficult to determine whether students’ answers are original or AI-generated, which affects the accuracy of grading and feedback and raises concerns about fair assessment practices [30]. The third concerns social relationships: unlike human teachers, AI chatbots cannot sense emotions or provide real-time emotional support [24]. Finally, ethical concerns arise, particularly around data privacy, security, and accountability. Because AI chatbots collect student data during interactions, strong safeguards are necessary. In medical education, these concerns also extend to patient confidentiality and related ethical considerations, making the ethical and proper use of chatbots especially important [31].

In summary, applying AI chatbots in the education field can provide students with personalized study assistance and improve educators’ efficiency. However, the public has growing concerns about the accuracy of information, academic integrity, and ethical considerations. Therefore, striking a balance between these advantages and challenges is crucial for integrating AI chatbots into education responsibly.

This study reviews the literature on the current state of AI chatbots, the application of the Technology Acceptance Model (TAM) in education, and the benefits and challenges of integrating AI chatbots into student learning. It then employs Structural Equation Modeling (SEM) to analyze the key factors influencing Chinese university students’ acceptance of educational chatbots within an extended TAM framework.

2. Materials and Methods

2.1. Research Model Construction

This section presents the main research questions and hypotheses. First, two primary research questions are proposed, and the factors influencing the acceptance of educational chatbots are identified through a review of the relevant literature. We then formulate hypotheses on the relationships between the three core constructs (PEOU, PU, and BI) and the other variables, thereby constructing a TAM3-based research model for educational chatbots.

This study builds on the TAM3 model [12] and includes the following variables: AI self-efficacy (AISE), perceived enjoyment (PE), AI anxiety (AIA), perceived ease of use (PEOU), perceived usefulness (PU), output quality (OQ), social influence (SI), and behavioral intention (BI). It addresses two main research questions:

- What factors determine AI chatbot acceptance among Chinese higher education students?

- What role does the level of education play in the acceptance of AI chatbots?

A comprehensive literature review indicates that the PEOU of AI chatbots is influenced by three factors: AISE, AIA, and PE. PEOU reflects an individual’s perception of how easy a new technology is to use [32]. AISE refers to people’s judgments of their ability to organize and execute the actions required to achieve a specified type of performance; it concerns not the skills a person has, but their judgment of what they can do with the skills they possess [33]. PE captures the degree to which using a technology is enjoyable in its own right, independent of its consequences [34]. Previous research indicates that AISE [35] and PE [36] positively influence PEOU, whereas AIA negatively affects it [37]. The study therefore proposes the following three hypotheses:

H1.

AISE significantly and positively predicts PEOU.

H2.

AIA significantly and negatively predicts PEOU.

H3.

PE significantly and positively predicts PEOU.

PU is another key driver affecting user performance [38]. According to previous research, PE [36], OQ [39] and PEOU [40,41] have a positive impact on the PU of AI chatbots. Therefore, the study proposes the following hypotheses:

H4.

PE significantly and positively predicts PU.

H5.

OQ significantly and positively predicts PU.

H6.

PEOU significantly and positively predicts PU.

BI is positively influenced by AISE [35], and PU and PEOU are two important predictors of BI [42]. Dwivedi et al. suggested improving a system’s PEOU to enhance users’ BI [43], and Chocarro et al. found that the PU of chatbots positively affects teachers’ BI to use the technology [44]. In addition, studies have shown that SI has a positive impact on students’ BI to use chatbots for teaching [16]. We therefore propose the following hypotheses:

H7.

AISE significantly and positively predicts BI.

H8.

PEOU significantly and positively predicts BI.

H9.

PU significantly and positively predicts BI.

H10.

SI significantly and positively predicts BI.

The model we proposed is shown in Figure 1.

Figure 1.

Research model with hypotheses based on Technology Acceptance Model 3 (TAM3).
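For readers who prefer the hypotheses in equation form, the model in Figure 1 can be sketched as three structural equations. The coefficient symbols and disturbance terms below are illustrative labels introduced here, not notation from the original analysis; they follow the common convention of γ for paths from exogenous constructs and β for paths between endogenous constructs. Each coefficient corresponds to one hypothesis and is expected to be positive, except γ₂ (H2), which is expected to be negative.

```latex
\begin{aligned}
\mathrm{PEOU} &= \gamma_{1}\,\mathrm{AISE} + \gamma_{2}\,\mathrm{AIA} + \gamma_{3}\,\mathrm{PE} + \zeta_{1}
  && \text{(H1, H2, H3)}\\
\mathrm{PU}   &= \gamma_{4}\,\mathrm{PE} + \gamma_{5}\,\mathrm{OQ} + \beta_{1}\,\mathrm{PEOU} + \zeta_{2}
  && \text{(H4, H5, H6)}\\
\mathrm{BI}   &= \gamma_{6}\,\mathrm{AISE} + \beta_{2}\,\mathrm{PEOU} + \beta_{3}\,\mathrm{PU} + \gamma_{7}\,\mathrm{SI} + \zeta_{3}
  && \text{(H7, H8, H9, H10)}
\end{aligned}
```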

2.2. Methods

This section describes the methods used for data collection and analysis and for validating the proposed theoretical model. A quantitative approach was adopted, using a questionnaire built from established scales to collect the data. The section covers participant demographics, the selection of measurement instruments, the data collection procedure, and the analytical methods applied, and concludes by examining the reliability and validity, including the discriminant validity, of the measurement model.

2.2.1. Participants and Data Collection

The study employed convenience sampling, a non-probability sampling technique suitable for researching specific groups of people that is often adopted for its cost-effectiveness, as it requires fewer resources. The target population was students currently enrolled in higher education, and the purpose was to understand their views on, and willingness to use, AI chatbots for study. Students were recruited to complete an online questionnaire via the Wenjuanxing platform (https://www.wjx.cn/). Participants came from institutions in 25 Chinese provinces and represented over 30 academic disciplines. Of the 348 respondents, 265 provided valid data (76.15% of all respondents). Invalid questionnaires were excluded according to the following criteria: first, a completion time of less than 100 s; second, contradictory answers to reverse-worded check questions; and third, selecting “completely unfamiliar” as one’s level of experience with AI chatbots. Hair et al. recommend 5 to 10 observations per item [45]; with 24 items, this implies 120 to 240 observations, so the 265 valid responses are sufficient for this study. Table 1 presents the demographic characteristics of the participants. All participants provided written informed consent prior to the study. The research protocol was approved by the Academic Ethics Review Committee of Guangdong University of Technology.
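The three exclusion rules and the sample-size rule of thumb can be expressed as a short screening routine. This is a minimal sketch under stated assumptions: the `Response` fields, the single reverse-worded item pair per respondent, and the two-point tolerance used to flag contradictory answers are hypothetical choices made for illustration, since the exact consistency rule applied to the questionnaire is not specified.

```python
from dataclasses import dataclass
from typing import Tuple

SCALE_MAX = 7          # seven-point Likert scale
MIN_SECONDS = 100      # completion-time cut-off used for screening


@dataclass
class Response:
    completion_seconds: float
    # Hypothetical: one item and its reverse-worded twin, both raw scores on 1..7.
    reverse_pair: Tuple[int, int]
    chatbot_experience: str


def reverse_code(score: int, scale_max: int = SCALE_MAX) -> int:
    """Map a reverse-worded answer back onto the item's direction (1 <-> 7)."""
    return scale_max + 1 - score


def is_contradictory(pair: Tuple[int, int], tolerance: int = 2) -> bool:
    """Assumed rule: flag if an item and its reverse-coded twin differ by more than `tolerance`."""
    item, reversed_item = pair
    return abs(item - reverse_code(reversed_item)) > tolerance


def is_valid(r: Response) -> bool:
    """Apply the three exclusion criteria in the order described in the text."""
    if r.completion_seconds < MIN_SECONDS:
        return False
    if is_contradictory(r.reverse_pair):
        return False
    return r.chatbot_experience != "completely unfamiliar"


def required_sample_range(n_items: int, low: int = 5, high: int = 10) -> Tuple[int, int]:
    """Rule of thumb: 5 to 10 observations per questionnaire item."""
    return n_items * low, n_items * high


if __name__ == "__main__":
    print(required_sample_range(24))      # (120, 240)
    print(round(265 / 348 * 100, 2))      # 76.15
```

With 24 items the rule of thumb gives 120 to 240 observations, which the 265 valid responses exceed.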

Table 1.

Demographic characteristics of students (N = 265).

As indicated in Table 1, there were 265 valid responses, of which 38.87% came from male and 61.13% from female participants. The majority of respondents were graduate or doctoral students (56.98%), and students of the humanities and social sciences predominated (66.42%). All respondents had experience using AI chatbots, and 93.96% of them were at least generally familiar with AI chatbots. Most (85.66%) had first encountered AI chatbots within the past year and commonly used them for information retrieval (80.00%), text writing (77.36%), language translation (48.30%), and interactive dialog (44.15%).

2.2.2. Measurement Tool

This study was conducted in the spring of 2024. In the preliminary stage, a two-part questionnaire was designed. The first part collects demographic information such as gender, education level, and major. The second part is adapted from established scale items and covers eight variables: PU, PEOU, AISE, AIA, PE, OQ, SI, and BI. A pilot study with 36 respondents tested the wording of the adapted items, the clarity of language, and the reliability and validity of the scale, and items with low reliability or validity were revised. Table 2 presents the constructs, their sources, and the corresponding items. Empirical research by leading scholars in structural equation modeling indicates that scales with more response points outperform scales with fewer points in terms of reliability and validity [46,47]. This study’s questionnaire therefore adopts a seven-point Likert scale ranging from 1 (strongly disagree) to 7 (strongly agree).

Table 2.

Questionnaire.

2.2.3. Data Analysis

This study assessed the internal consistency, reliability, convergent validity, and discriminant validity of the conceptual model with SPSS (version 27.0.1.0). Confirmatory factor analysis (CFA) was performed in AMOS 28 to test the validity and reliability of the latent structure, and structural equation modeling (SEM) with maximum likelihood estimation was used to assess the causal relationships [62]. Model fit was evaluated with the chi-square test together with the comparative fit index (CFI), Tucker–Lewis index (TLI), incremental fit index (IFI), root mean square error of approximation (RMSEA), and standardized root mean square residual (SRMR). Because χ² is highly sensitive to sample size, the ratio χ²/df was also used. In addition, measurement invariance was tested in AMOS 28 [63]. The equality of measurement weights, structural covariances, and measurement residuals was tested in sequence [64], with progressively stricter constraints, and invariance was assessed using the p-values of the comparisons between nested models. A p-value below 0.05 rejects the null hypothesis of invariance, indicating a significant difference in fit between the nested models [65].
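The nested-model comparison described above is commonly carried out as a chi-square difference test: the constrained model's χ² minus the less constrained model's χ² is referred to a chi-square distribution whose degrees of freedom equal the difference in df. The sketch below implements that test with the Python standard library only, computing the upper-tail probability from the series expansion of the regularized incomplete gamma function. It is an illustration, not the AMOS procedure itself, and the fit values in the usage example are hypothetical rather than taken from Tables 8 or 9.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X > x) for X ~ chi-square(df).

    Uses the series expansion of the regularized lower incomplete gamma
    function P(a, z) with a = df / 2 and z = x / 2; adequate for the
    moderate values that arise in nested-model comparisons.
    """
    if x <= 0:
        return 1.0
    a, z = df / 2.0, x / 2.0
    term = 1.0 / a          # n = 0 term of sum z^n / (a (a+1) ... (a+n))
    total = term
    n = 1
    while term > total * 1e-15:
        term *= z / (a + n)
        total += term
        n += 1
    lower = math.exp(a * math.log(z) - z - math.lgamma(a)) * total
    return max(0.0, 1.0 - lower)


def chi_square_difference(chi2_constrained: float, df_constrained: int,
                          chi2_free: float, df_free: int):
    """Return (delta_chi2, delta_df, p) for a constrained vs. a less constrained model."""
    d_chi2 = chi2_constrained - chi2_free
    d_df = df_constrained - df_free
    return d_chi2, d_df, chi2_sf(d_chi2, d_df)


if __name__ == "__main__":
    # Hypothetical fit values for illustration only.
    d_chi2, d_df, p = chi_square_difference(850.0, 480, 820.0, 464)
    print(d_chi2, d_df, p < 0.05)   # 30.0 16 True
```

A p-value below 0.05 here corresponds to rejecting the null hypothesis that the added equality constraints hold.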

2.2.4. Measurement Model Analysis

The scale used in this study was adapted from established scales and comprises 8 variables with a total of 24 items. The extracted factors explain 64.813% of the total variance, exceeding the recommended standard of 60% [45]. As shown in Table 3, the internal consistency of each variable, measured by Cronbach’s alpha, ranged from 0.719 to 0.878. Factor loadings above 0.50 indicate acceptable measurement validity [45], and the loadings of all items ranged from 0.604 to 0.911, meeting the threshold set by Hair et al.
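Cronbach’s alpha compares the sum of the individual item variances with the variance of the summed scale score: for k items, α = k / (k − 1) · (1 − Σ item variances / variance of the total). A minimal standard-library sketch follows; the item scores in the example are toy values, not the study’s data.

```python
from statistics import variance
from typing import List, Sequence


def cronbach_alpha(items: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for k item columns, each holding n respondents' scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total score)).
    Sample variances are used throughout; population variances give the same ratio.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    totals: List[float] = [sum(col[i] for col in items) for i in range(n)]
    item_var_sum = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - item_var_sum / variance(totals))


if __name__ == "__main__":
    # Toy data: two items answered by three respondents.
    print(round(cronbach_alpha([[1, 2, 3], [1, 3, 2]]), 4))  # 0.6667
```

In practice each construct’s item columns would be passed separately, giving one alpha per construct as in Table 3.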

Table 3.

Reliability and convergent validity indicators used in this research.

According to the criteria of Fornell et al., the AVE should ideally exceed 0.5, and values between 0.36 and 0.5 are acceptable [66]. As shown in Table 3, the AVE values of all constructs except AISE (0.498, within the acceptable range) reach the ideal level. Construct reliability is assessed through composite reliability (CR). The CR values of all factors exceed the required threshold of 0.7 [67], ranging from 0.719 to 0.879, indicating that the scale items are internally consistent. Construct validity is assessed through convergent validity and discriminant validity. Convergent validity indicates that the items serving as indicators of a construct are correlated with one another and consistently measure the same construct.
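Both indicators are usually computed from a construct’s standardized factor loadings λᵢ: AVE is the mean of λᵢ², and CR = (Σλᵢ)² / ((Σλᵢ)² + Σ(1 − λᵢ²)), where 1 − λᵢ² is item i’s error variance under standardization. The sketch below assumes standardized loadings and uncorrelated item errors. The three loadings of 0.70 in the example are hypothetical and only illustrate how a construct can fall just short of AVE = 0.5 while still clearing CR = 0.7.

```python
from typing import Sequence


def average_variance_extracted(loadings: Sequence[float]) -> float:
    """AVE = mean of squared standardized loadings."""
    return sum(lam * lam for lam in loadings) / len(loadings)


def composite_reliability(loadings: Sequence[float]) -> float:
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances).

    Assumes standardized loadings, so each item's error variance is 1 - loading^2.
    """
    s = sum(loadings)
    error_variance = sum(1 - lam * lam for lam in loadings)
    return s * s / (s * s + error_variance)


if __name__ == "__main__":
    hypothetical = [0.70, 0.70, 0.70]
    print(round(average_variance_extracted(hypothetical), 3))  # 0.49
    print(round(composite_reliability(hypothetical), 3))       # 0.742
```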

Table 4 reports the correlations between constructs together with the square root of each construct’s AVE, which are used to verify the discriminant validity of the latent variables. Each diagonal element of the matrix (the square root of AVE) should exceed the off-diagonal correlations in its row and column [66]. The data show that the square root of AVE for each construct is higher than its correlations with the other constructs; however, because the AISE-PEOU pair raised a concern about overlap, its discriminant validity was further examined using the Heterotrait-Monotrait (HTMT) ratio.
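The Fornell-Larcker comparison reduces to checking every pair of constructs: the correlation between two constructs should be smaller than the square root of the AVE of each. A minimal sketch follows, with hypothetical construct names (A, B, C) and made-up AVE and correlation values rather than those in Table 4.

```python
import math
from typing import Dict, List, Tuple

Pair = Tuple[str, str]


def fornell_larcker_violations(ave: Dict[str, float],
                               corr: Dict[Pair, float]) -> List[Pair]:
    """Return construct pairs whose |correlation| is not below sqrt(AVE) of both constructs."""
    flagged = []
    for (a, b), r in corr.items():
        threshold = min(math.sqrt(ave[a]), math.sqrt(ave[b]))
        if abs(r) >= threshold:
            flagged.append((a, b))
    return flagged


if __name__ == "__main__":
    ave = {"A": 0.49, "B": 0.64, "C": 0.81}                 # hypothetical AVEs
    corr = {("A", "B"): 0.72, ("A", "C"): 0.30, ("B", "C"): 0.50}
    print(fornell_larcker_violations(ave, corr))            # [('A', 'B')]
```

A flagged pair is the kind of result that motivates a follow-up check such as HTMT.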

Table 4.

Discriminant validity for the model.

The HTMT results are presented in Table 5. An HTMT value below 0.85 typically indicates adequate discriminant validity between two constructs. All HTMT values fall below 0.85, confirming clear conceptual differentiation among the constructs. In particular, the AISE-PEOU pair yields an HTMT of 0.761, which resolves the overlap concern raised by the Fornell-Larcker test and supports the distinctiveness of the two constructs. Overall, the discriminant validity of the measurement model is acceptable.
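HTMT divides the mean correlation between items of two different constructs (heterotrait-heteromethod correlations) by the geometric mean of each construct’s average within-construct item correlation (monotrait-heteromethod correlations). The standard-library sketch below assumes the item correlation matrix is available as a nested list and that each construct has at least two items; the four-item matrix in the example is made up.

```python
import math
from itertools import combinations
from statistics import fmean
from typing import Sequence


def htmt(corr: Sequence[Sequence[float]], items_i: Sequence[int],
         items_j: Sequence[int]) -> float:
    """Heterotrait-monotrait ratio for constructs i and j.

    corr: item correlation matrix; items_i / items_j: indices of each construct's items.
    """
    hetero = [corr[a][b] for a in items_i for b in items_j]
    mono_i = [corr[a][b] for a, b in combinations(items_i, 2)]
    mono_j = [corr[a][b] for a, b in combinations(items_j, 2)]
    return fmean(hetero) / math.sqrt(fmean(mono_i) * fmean(mono_j))


if __name__ == "__main__":
    # Items 0-1 belong to construct X, items 2-3 to construct Y (hypothetical).
    corr = [
        [1.0, 0.6, 0.3, 0.3],
        [0.6, 1.0, 0.3, 0.3],
        [0.3, 0.3, 1.0, 0.6],
        [0.3, 0.3, 0.6, 1.0],
    ]
    value = htmt(corr, [0, 1], [2, 3])
    print(round(value, 3), value < 0.85)   # 0.5 True
```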

Table 5.

Heterotrait-Monotrait (HTMT) ratio results.

3. Results

The SEM results and the standardized path coefficients of the research model are reported first, followed by the measurement invariance testing procedure and the interpretation of its findings.

3.1. Structural Model Analysis

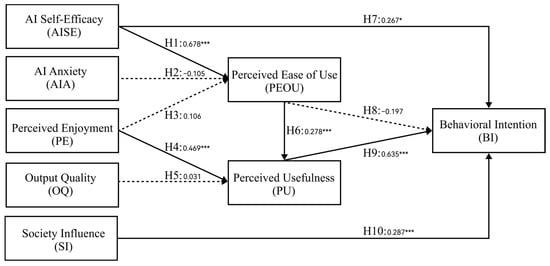

As shown in Table 6, the goodness-of-fit indices indicate good fit for the SEM: CMIN = 404.631, DF = 232, CMIN/DF = 1.744, TLI = 0.93, CFI = 0.941, RMSEA = 0.053, SRMR = 0.0528, IFI = 0.942, PGFI = 0.685, and PNFI = 0.734. Table 7 and Figure 2 report the standardized path coefficients of the proposed research model. Of the 10 hypotheses tested, 6 were supported. The path coefficients of the 6 supported hypotheses ranged from 0.267 to 0.678, with the smallest being the path from AISE to BI (p = 0.025) and the largest the path from AISE to PEOU (p < 0.001). The paths from AIA to PEOU (β = −0.105, p = 0.146), PE to PEOU (β = 0.106, p = 0.158), OQ to PU (β = 0.031, p = 0.739), and PEOU to BI (β = −0.197, p = 0.099) were not statistically significant; therefore, H2, H3, H5, and H8 were not supported. Finally, the R² values indicate that AIA, AISE, and PE explain 62.5% of the variance in PEOU; PE, OQ, and PEOU explain 44.4% of the variance in PU; and AISE, PEOU, PU, and SI explain 64.4% of the variance in BI.
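Two of the reported indices can be recomputed from the model χ² (404.631), its degrees of freedom (232), and the sample size (265), which offers a quick consistency check. The sketch below uses the common point-estimate formula RMSEA = √(max(χ² − df, 0) / (df · (N − 1))) and reproduces χ²/df = 1.744 and RMSEA = 0.053. CFI and TLI would additionally require the χ² of the baseline (independence) model, which is not reported here, so they are not recomputed.

```python
import math


def rmsea(chi2: float, df: int, n: int) -> float:
    """Point estimate of RMSEA using the N - 1 convention."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


if __name__ == "__main__":
    chi2, df, n = 404.631, 232, 265          # values reported for the structural model
    print(round(chi2 / df, 3))                # 1.744
    print(round(rmsea(chi2, df, n), 3))       # 0.053
```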

Table 6.

Test results of SEM fit indices.

Table 7.

Hypothesis testing of the overall model.

Figure 2.

Hypothesis testing of the overall model. Dashed lines indicate non-significant paths; *** p < 0.001; * p < 0.05.

3.2. Measurement Invariance Test

Before testing measurement invariance, the sample needs to be split into two datasets [71]: undergraduates (n = 114) and graduate students (n = 151). Because the measurement model is replicable in each sample, we conducted a hierarchical test of measurement invariance covering measurement weights, structural covariances, and measurement residuals (Table 8), with progressively stricter constraints, to determine whether the measurement parameters are invariant across educational levels. The first model tested was the unconstrained baseline model, against which increasingly restrictive nested models were compared. This least restrictive model fit the data well, with the same items measuring the same constructs for both undergraduates and graduate students. Next, metric invariance was tested by constraining the factor loadings to be equal across groups; invariance of factor loadings is considered the minimum acceptable criterion for measurement invariance [74]. As shown in Table 9, the p-value for the comparison between the two models was 0.0077, below the 0.05 threshold, indicating that the difference in fit is statistically significant and that the factor loadings differ across educational levels. However, both |ΔCFI| and |ΔTLI| were less than 0.01, indicating that the core measurement structure remained fundamentally consistent across the two groups; this meets the minimum criterion for metric invariance and justifies cross-group comparisons, although the two models are not strictly equivalent. We then constrained the structural covariances to be equal and found no statistically significant difference, which implies that education level did not alter the intrinsic relationships among the latent variables. Finally, we constrained the measurement residuals to be equal across the two groups.
The p-value of this comparison was close to zero, with |ΔCFI| = 0.027 and |ΔTLI| = 0.022, indicating that the strictly constrained model did not pass the invariance test. Students with different educational backgrounds therefore responded differently to specific observed variables, which may reflect the influence of education level on the depth of technological understanding, preferences for usage scenarios, or other individual differences. This analysis of group differences based on measurement invariance testing helps guard against measurement bias and strengthens the validity of the preceding conclusions. Moreover, it provides a theoretical basis for the design of chatbots in education.
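The decision logic applied in this section combines a statistical criterion (the p-value of the nested-model comparison) with a practical one (|ΔCFI| and |ΔTLI| below 0.01). A minimal sketch of that logic follows. The ΔCFI and ΔTLI values for the metric model and the p-value for the residual model are placeholders, because the text reports only that the former were below 0.01 and that the latter was close to zero.

```python
def invariance_verdict(p_value: float, delta_cfi: float, delta_tli: float,
                       alpha: float = 0.05, cutoff: float = 0.01) -> str:
    """Classify one nested-model comparison in a measurement invariance sequence."""
    if p_value >= alpha:
        return "invariant"                   # chi-square difference not significant
    if abs(delta_cfi) < cutoff and abs(delta_tli) < cutoff:
        return "practically invariant"       # significant, but the change in fit is negligible
    return "not invariant"


if __name__ == "__main__":
    # Metric model: p = 0.0077 as reported; the deltas are placeholders below 0.01.
    print(invariance_verdict(0.0077, 0.004, 0.003))   # practically invariant
    # Residual model: deltas as reported; p is a placeholder for "close to zero".
    print(invariance_verdict(1e-6, 0.027, 0.022))     # not invariant
```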

Table 8.

Multi-group analysis: model fit (undergraduate and graduate students).

Table 9.

Measurement invariance test results (undergraduate and graduate students).

4. Discussion

Generative large AI models are being deeply integrated across many fields, yet their impact on students at different educational levels and the factors influencing their acceptance remain unclear. This study constructs a research model using selected variables from TAM3 and finds that undergraduate and graduate students exhibit different levels of acceptance of educational AI chatbots, indicating that educational level significantly influences students’ willingness to use them. The model was tested with survey data from China. Six of the ten hypotheses were supported, and four were not. The discussion below addresses each hypothesis in turn.

4.1. Supported Hypothesis

- AISE → PEOU

Prior research shows that AISE [35] can increase users’ confidence when using chatbots, leading them to perceive the tool as easier to use. This relationship also applies to educational AI chatbots, where students’ confidence in the technology may lower usage barriers. Students’ AISE directly influences their judgment of the PEOU of AI chatbots. In higher education settings, students generally have considerable experience with AI tools, and this familiarity with AI technology lowers the operational threshold.

- PE → PU

PE [36] may enhance users’ PU of AI chatbots through positive experience. The enjoyment and interactivity of AI educational chatbots could increase students’ recognition of the tool’s value. A pleasant learning experience could make students more likely to believe that AI tools can improve their learning efficiency.

- PEOU → PU

Previous studies have reported that PEOU [40,41] has a positive effect on PU. In educational settings, when students perceive AI chatbots as easy to use, they also tend to perceive them as useful. This finding accords with the core logical chain of the classic TAM: operational convenience directly shapes students’ judgment of a tool’s utility, and when AI chatbots serve as educational tools, ease of use may often be equated with usefulness. For example, ChatGPT’s natural language interaction reduces learning costs, and the resulting efficiency gains can translate directly into greater perceived usefulness.

- AISE → BI

BI is positively influenced by AISE [35], meaning that confident students are more willing to use AI chatbots. Such students may perceive AI tools as enhancing rather than diminishing their thinking abilities, which further increases their readiness to adopt new technologies and reflects a pathway of “confidence driving BI.” High AISE may lower the psychological threshold for technology use and thus directly encourage BI.

- PU → BI

Chocarro et al. found that the PU of chatbots positively affects teachers’ intention to use the technology [44]. Similar results were observed in this study: students who perceive AI chatbots as useful may be more inclined to use them autonomously to learn material that is typically difficult to grasp, which can enhance their self-motivation and positively influence BI.

- SI → BI

Research has shown that SI has a positive impact on students’ BI to use chatbots for teaching [16]. In higher education settings, social factors such as teacher-student interaction, peer recommendations, and academic pressure can strongly influence individual decision-making. Moreover, Chinese society generally holds a positive attitude toward technological development, and people tend to be more willing to learn and use AI tools than to resist them. This is especially true of higher education students, who are inclined to use AI tools to enhance their competitiveness.

Based on the supported hypotheses, more confident students in higher education show greater BI to use AI chatbots to enhance their abilities. Developers of AI educational chatbots should therefore design interactions that are convenient and provide feedback that reinforces AISE, for example by incorporating gamification and personalization while simplifying the interface. Together, these designs may directly or indirectly strengthen students’ BI to use AI educational chatbots. It is also crucial to create a favorable social environment and regulatory framework for AI technology. In addition, building shareable social networks into educational AI chatbots may help increase students’ intention to use them. Integrating these design principles may help establish a virtuous “AISE-BI-AISE” cycle that enhances students’ self-motivation and promotes exploratory learning.

4.2. Unverified Hypothesis

- AIA → PEOU

Previous studies have suggested that AIA has a negative impact on PEOU [37], but this study did not support that finding. A possible reason is students’ high familiarity with AI tools: 93.96% of students reported being “generally familiar” or more familiar with them, which may be the main reason AIA did not significantly affect PEOU. In addition, current AI chatbots generally feature user-friendly interaction designs with timely feedback, which could also reduce students’ AIA. Furthermore, the AIA of higher education students, the focus of this study, toward new technologies may be lower than that of the general population.

- PE → PEOU

The findings did not support a positive relationship between PE [36] and PEOU. A possible reason is that, in higher education settings, students may prioritize the practicality of AI tools, making the impact of PE on PEOU relatively minor. In addition, the questionnaire item “Interacting with AI chatbots makes me happy” may not adequately capture learning enjoyment in an educational context.

- OQ → PU

This study does not support the earlier finding regarding OQ [39], possibly because students apply differing standards when evaluating OQ. The OQ items in the questionnaire focus on general output quality rather than on educational contexts specifically, which may also have contributed to this result. Additionally, AI chatbots such as ChatGPT 3.5, OpenAI o3, and ERNIE Bot generally exhibit high hallucination rates, which may have affected the reliability of the results.

- PEOU → BI

Dwivedi et al. suggested improving a system’s PEOU to enhance users’ intention to use it [43]. However, this study did not support this finding (β = −0.197, p = 0.099), possibly because PU plays a more dominant role and PEOU may affect intention indirectly through PU. The non-significant PEOU → BI path suggests that participants placed greater importance on PU than on PEOU, which runs counter to a core assumption of TAM3. This may reflect a characteristic of educational AI applications: compared with traditional interactive software, AI products such as ChatGPT are inherently easy to use and require minimal training, so ease of use differentiates them little. However, this conclusion warrants further validation with cross-cultural samples.

Among the unsupported hypotheses, three involve PEOU (H2, H3, and H8). One possible explanation is the intense competition among current large AI models. Higher education students frequently use various AI-assisted learning tools, which feature user-friendly interactions and fast feedback. The PEOU of these tools far surpasses that of ordinary search and auxiliary tools, yet the differences among AI tools themselves are minimal. Students also have clear expectations of AI-generated results. Together, these factors may greatly reduce their sensitivity to PEOU. Compared with PEOU, the high hallucination rate of AI in 2024 (e.g., ChatGPT 3.5, OpenAI o3, ERNIE Bot 3.5) remains a more serious issue, making PU a greater focus for higher education students.

This study focuses on educational chatbots, employing the Technology Acceptance Model to explore the factors influencing students’ intention to use them and constructing an acceptance model of educational chatbots for higher education students. Grounded in empirical data, the model establishes a practical framework for educational AI chatbots, offers insights for their implementation in higher education, and provides a theoretical reference for the development of AI in higher education. In future research, the model can be translated into targeted design principles to guide the interface and functional design of educational chatbots.

4.3. Theoretical Innovations

This study makes several theoretical contributions. First, although AI chatbots are widely applied in education, research on how higher education students at different educational levels accept AI tools with different forms and interaction methods remains insufficient; this study addresses this gap with an in-depth exploration of higher education students’ acceptance of educational AI chatbots. Second, the results support most of the TAM3-based hypotheses under the given research conditions, offering theoretical references for the design and application of educational AI chatbots. Third, this study incorporates educational level as a research variable. Measurement invariance testing shows that educational level moderates higher education students’ acceptance of AI chatbots, so that differences in educational level indirectly influence students’ BI toward educational AI chatbots.
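The measurement invariance logic behind this moderation claim can be made concrete with a chi-square difference (Δχ²) test between nested multigroup models, one common procedure in the multigroup invariance literature (e.g., [63]). The sketch below assumes that procedure: a model with parameters held equal across undergraduates and graduates is compared with a less constrained model, and Δχ² is referred to a chi-square distribution with Δdf degrees of freedom. This section does not state which invariance criteria the authors applied, the function names are hypothetical, and the fit values in the usage lines are invented placeholders, not results from this study. Only the Python standard library is used, so the chi-square tail probability is computed from closed forms valid for integer degrees of freedom.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X >= x) of a chi-square variable with integer df.

    Evaluates the regularized upper incomplete gamma function Q(df/2, x/2)
    through its closed forms for integer degrees of freedom.
    """
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    y = x / 2.0
    if df % 2 == 0:
        # Even df: Q(k, y) = exp(-y) * sum_{i=0}^{k-1} y**i / i!, with k = df / 2
        term, total = 1.0, 1.0
        for i in range(1, df // 2):
            term *= y / i
            total += term
        return math.exp(-y) * total
    # Odd df: start from Q(1/2, y) = erfc(sqrt(y)) and step upward with
    # Q(a + 1, y) = Q(a, y) + y**a * exp(-y) / Gamma(a + 1).
    q = math.erfc(math.sqrt(y))
    a = 0.5
    for _ in range(df // 2):
        q += math.exp(a * math.log(y) - y - math.lgamma(a + 1.0))
        a += 1.0
    return q


def chi2_difference_test(chi2_constrained: float, df_constrained: int,
                         chi2_free: float, df_free: int, alpha: float = 0.05):
    """Compare a constrained multigroup model (e.g., loadings held equal across
    undergraduate and graduate groups) with the less constrained model in
    which it is nested."""
    delta_chi2 = chi2_constrained - chi2_free
    delta_df = df_constrained - df_free
    p_value = chi2_sf(delta_chi2, delta_df)
    # p < alpha: the equality constraints significantly worsen fit, i.e. the
    # constrained parameters are not invariant across the groups.
    return delta_chi2, delta_df, p_value, p_value < alpha


if __name__ == "__main__":
    # HYPOTHETICAL fit values for illustration only; not this study's results.
    d_chi2, d_df, p, non_invariant = chi2_difference_test(845.9, 467, 812.4, 448)
    print(f"delta chi2 = {d_chi2:.1f}, delta df = {d_df}, p = {p:.3f}, "
          f"non-invariant = {non_invariant}")
```

Because Δχ² is sensitive to sample size, invariance analyses often also inspect changes in approximate fit indices such as CFI alongside it; a significant Δχ² by itself indicates that some constrained parameters differ between groups, not the direction of the difference.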

4.4. Practical Application Potential

This work has practical value for AI-driven educational scenarios in three respects:

- Hierarchical design for different educational levels: Students at different educational levels exhibit significantly varying BI toward educational AI chatbots, so design should address both undergraduates’ search needs and graduate students’ research requirements. For example, undergraduates may prioritize ease of use and interface friendliness, so providing a pleasant user experience (e.g., scaffolded teaching support) may enhance their BI to adopt the technology. Graduate students may focus more on answer depth and OQ, so delivering precise and in-depth responses may foster their trust in educational AI chatbots.
- Gamification design for AI chatbots: Based on the findings, enhancing PE, PEOU, and SI in educational AI chatbots can directly or indirectly increase students’ BI. Gamification design is recommended, including reward mechanisms, points, levels, leaderboards, and community-based social features. Showcasing usage experiences from peers and experts can further improve students’ PU and acceptance.
- Personalized learning support: Chatbots should provide personalized learning recommendations based on students’ knowledge levels, learning speeds, and preferences [75]. Such recommendations could be generated by algorithmically analyzing students’ behavior patterns and usage history, ensuring a tailored experience for each student and improving their satisfaction.

4.5. Limitations of the Current Study

This study has several limitations. First, artificial intelligence technology is developing rapidly, as the emergence of DeepSeek V3/R1 earlier this year illustrates, and the data collected and analyzed last year reflect user behavior toward the specific AI models prevalent during the data collection period (e.g., ChatGPT 3.5, OpenAI o3, ERNIE Bot). Nevertheless, the patterns of human-AI interaction and adoption captured at this specific historical juncture retain theoretical and practical relevance, and because the target users of this study are current Chinese higher education students, the findings also provide a theoretical foundation for the development of customized AI chatbots in higher education. Second, the total sample size of the questionnaire (265 valid responses) is relatively small, and 66.42% of the respondents are students from humanities and social sciences disciplines, potentially introducing disciplinary bias. Third, all samples were collected from Chinese universities, where technology acceptance behaviors may be shaped by Chinese culture, potentially amplifying the effect of SI. The conclusions of this study might therefore vary across regions and cultural contexts and should not be directly generalized to other cultural settings. Fourth, the study employed convenience sampling, so the sample may not represent a broadly defined population because of high selection bias, and the findings are susceptible to various sources of interference, making it difficult to generalize the results to the entire population. Fifth, the moderating effect of academic level may reflect differences in students’ exposure to AI technology across educational stages; however, this finding is based on a limited sample and should not be directly generalized to macro-social phenomena such as the digital divide.

These limitations suggest opportunities for further study. First, future research could classify and analyze student groups by cultural background, education level, quality of education, gender, age, and experience with AI, enabling more in-depth exploration. Second, the sole reliance on questionnaires in this study is a limitation. Although education level was identified as a statistically significant moderator, the lack of detailed subgroup analysis prevented us from determining the direction of its effect or its underlying mechanism. Future studies should therefore employ mixed methods. For instance, combining physiological measurements (e.g., electrodermal activity [EDA] and respiration [RESP]) with qualitative approaches (e.g., focus groups, in-depth interviews) under a stratified sampling design could yield a deeper and more comprehensive understanding of educational AI chatbots. Finally, future research should follow AI models such as DeepSeek V3/R1 to track how technological advances affect the theoretical model and the design principles derived from it.

5. Conclusions

This study extends the TAM3 framework to elucidate key drivers of Chinese university students’ acceptance of educational AI chatbots, revealing significant effects of self-efficacy, perceived usefulness, and social influence on behavioral intention, as well as a moderating effect of academic level that highlights potential disparities in adoption. These insights underscore the double-edged nature of AI in higher education: while chatbots offer opportunities for personalized learning and timely knowledge access, they also pose risks such as information inaccuracy, ethical dilemmas related to academic integrity, and accessibility barriers for learners who may differ in self-efficacy and familiarity with AI tools.

To overcome these accessibility challenges in AI-disrupted learning environments, developers should prioritize inclusive design features, such as adaptive interfaces that reduce anxiety through simplified interactions, gamified elements that boost perceived enjoyment, and mechanisms that ensure output quality and mitigate bias. In addition, multilingual support and emotion-aware responses could address inclusivity issues and promote equitable adoption across diverse student populations. Educators and institutions are encouraged to offer training initiatives that build self-efficacy, particularly for undergraduates, to bridge the digital divide and foster information literacy in the emerging era of generative AI.

Furthermore, the findings can inform educational policies for responsible AI use, supporting frameworks that emphasize ethical guidelines, data privacy safeguards, and assessments of benefits versus risks. By guiding the development of AI-enhanced tools and policies that prioritize universal access, this research helps mitigate AI-driven disruptions and ensure that educational chatbots enhance knowledge acquisition, creative problem-solving, and overall inclusiveness in higher education. Future studies could explore longitudinal effects or cross-cultural variations to further refine these strategies.

Author Contributions

J.X.: Conceptualization, Investigation, Funding acquisition, Supervision. D.P.: Writing—Original draft preparation. R.G.: Software, Writing—Original draft preparation, Validation. T.X.: Conceptualization, Methodology. X.Z.: Conceptualization. D.Y.: Methodology, Writing—Reviewing and Editing, Funding acquisition. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by grants from the Philosophy and Social Sciences Fund of Guangdong Province (GD24CYS04), the Postgraduate Education Innovation Program of Guangdong University of Technology (2024-21) and the Humanities and Social Sciences Research Foundation of the Ministry of Education, China (23YJA760026).

Institutional Review Board Statement

The study was approved by the Ethics Committee of Guangdong University of Technology (Approval No. GDUTXS20250135, 10 April 2025).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to privacy restrictions.

Acknowledgments

AI tools (DeepSeek V3 and Grok 3) were used for grammar checking and language polishing during manuscript preparation.

Conflicts of Interest

The authors report there are no competing interests to declare.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| AISE | Artificial Intelligence Self-Efficacy |
| PE | Perceived Enjoyment |
| AIA | Artificial Intelligence Anxiety |
| PEOU | Perceived Ease of Use |
| PU | Perceived Usefulness |
| OQ | Output Quality |
| SI | Social Influence |
| BI | Behavioral Intention |

References

1. Zheng, C.; Yu, M.; Guo, Z. The application of artificial intelligence in language teaching over the past three decades: Retrospect and prospect. Foreign Lang. Educ. 2024, 45, 59–68.
2. Labadze, L.; Grigolia, M.; Machaidze, L. Role of AI chatbots in education: Systematic literature review. Int. J. Educ. Technol. High. Educ. 2023, 20, 56.
3. Moraes, C.L. Chatbot as a Learning Assistant: Factors Influencing Adoption and Recommendation. Master’s Thesis, Universidade NOVA de Lisboa, Lisboa, Portugal, 2021.
4. Sheehan, B.; Jin, H.S.; Gottlieb, U. Customer service chatbots: Anthropomorphism and adoption. J. Bus. Res. 2020, 115, 14–24.
5. Ho, C.C.; Lee, H.L.; Lo, W.K.; Lui, K.F.A. Developing a chatbot for college student programme advisement. In Proceedings of the 2018 International Symposium on Educational Technology (ISET), Osaka, Japan, 31 July–2 August 2018; pp. 52–56.
6. Bilquise, G.; Shaalan, K. AI-based academic advising framework: A knowledge management perspective. Int. J. Adv. Comput. Sci. Appl. 2022, 13, 193–203.
7. Bilquise, G.; Ibrahim, S.; Shaalan, K. Bilingual AI-driven chatbot for academic advising. Int. J. Adv. Comput. Sci. Appl. 2022, 13, 50–57.
8. Lim, M.S.; Ho, S.-B.; Chai, I. Design and functionality of a university academic advisor chatbot as an early intervention to improve students’ academic performance. In Proceedings of the Computational Science and Technology: 7th ICCST 2020, Pattaya, Thailand, 29–30 August 2020; Springer: Berlin/Heidelberg, Germany, 2021; pp. 167–178.
9. Davis, F.D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989, 13, 319–340.
10. Sánchez-Prieto, J.C.; Cruz-Benito, J.; Therón Sánchez, R.; García-Peñalvo, F.J. Assessed by machines: Development of a TAM-based tool to measure AI-based assessment acceptance among students. Int. J. Interact. Multimed. Artif. Intell. 2020, 6, 80.
11. Gupta, P.; Yadav, S. A TAM-based Study on the ICT Usage by the Academicians in Higher Educational Institutions of Delhi NCR. In Proceedings of the Congress on Intelligent Systems: Proceedings of CIS 2021, Bengaluru, India, 4–5 September 2021; Volume 2, pp. 329–353.
12. Venkatesh, V.; Bala, H. Technology acceptance model 3 and a research agenda on interventions. Decis. Sci. 2008, 39, 273–315.
13. Lin, H.-M.; Qian, Y.-Y.; Wu, B.-Z. Study on the Influencing Factors of the Willingness to Use the Handheld Technology Digital Experiment MOOC Using PLS-SEM Measurement Based on TAM3 Model. Chin. J. Chem. Educ. 2021, 42, 105–112.
14. Kim, J.; Merrill, K.; Xu, K.; Sellnow, D.D. My teacher is a machine: Understanding students’ perceptions of AI teaching assistants in online education. Int. J. Hum.–Comput. Interact. 2020, 36, 1902–1911.
15. Al Shamsi, J.H.; Al-Emran, M.; Shaalan, K. Understanding key drivers affecting students’ use of artificial intelligence-based voice assistants. Educ. Inf. Technol. 2022, 27, 8071–8091.
16. Ragheb, M.A.; Tantawi, P.; Farouk, N.; Hatata, A. Investigating the acceptance of applying chat-bot (Artificial intelligence) technology among higher education students in Egypt. Int. J. High. Educ. Manag. 2022, 8, 1–13.
17. Bilquise, G.; Ibrahim, S.; Salhieh, S.E.M. Investigating student acceptance of an academic advising chatbot in higher education institutions. Educ. Inf. Technol. 2024, 29, 6357–6382.
18. Qaid, E.H.; Ateeq, A.A.; Abro, Z.; Milhem, M.; Alzoraiki, M.; Alkadash, T.M.; Al-Fahim, N.H. Conceptual Framework in Attitude Factors Affecting Yemeni University Lecturers’ Adoption of E-Government. In Information and Communication Technology in Technical and Vocational Education and Training for Sustainable and Equal Opportunity: Business Governance and Digitalization of Business Education; Springer: Berlin/Heidelberg, Germany, 2024; pp. 345–358.
19. Chen, Y.; Jensen, S.; Albert, L.J.; Gupta, S.; Lee, T. Artificial intelligence (AI) student assistants in the classroom: Designing chatbots to support student success. Inf. Syst. Front. 2023, 25, 161–182.
20. Sullivan, M.; Kelly, A.; McLaughlan, P. ChatGPT in higher education: Considerations for academic integrity and student learning. J. Appl. Learn. Teach. 2023, 6, 31–40.
21. Celik, I.; Dindar, M.; Muukkonen, H.; Järvelä, S. The promises and challenges of artificial intelligence for teachers: A systematic review of research. TechTrends 2022, 66, 616–630.
22. Crawford, J.; Cowling, M.; Allen, K.-A. Leadership is needed for ethical ChatGPT: Character, assessment, and learning using artificial intelligence (AI). J. Univ. Teach. Learn. Pract. 2023, 20, 2.
23. Sedaghat, S. Success through simplicity: What other artificial intelligence applications in medicine should learn from history and ChatGPT. Ann. Biomed. Eng. 2023, 51, 2657–2658.
24. Lan, G.; Song, F. Artificial Intelligence Education Application: Intelligent Tools, Advantages, Challenges and Future Development. Yuejiang Acad. J. 2025, 17, 157–169+175.
25. Fariani, R.I.; Junus, K.; Santoso, H.B. A systematic literature review on personalised learning in the higher education context. Technol. Knowl. Learn. 2023, 28, 449–476.
26. Khan, R.A.; Jawaid, M.; Khan, A.R.; Sajjad, M. ChatGPT-Reshaping medical education and clinical management. Pak. J. Med. Sci. 2023, 39, 605.
27. Kasneci, E.; Seßler, K.; Küchemann, S.; Bannert, M.; Dementieva, D.; Fischer, F.; Gasser, U.; Groh, G.; Günnemann, S.; Hüllermeier, E. ChatGPT for good? On opportunities and challenges of large language models for education. Learn. Individ. Differ. 2023, 103, 102274.
28. Kaharuddin, K. Assessing the effect of using artificial intelligence on the writing skill of Indonesian learners of English. Linguist. Cult. Rev. 2021, 5, 288–304.
29. Benvenuti, M.; Cangelosi, A.; Weinberger, A.; Mazzoni, E.; Benassi, M.; Barbaresi, M.; Orsoni, M. Artificial intelligence and human behavioral development: A perspective on new skills and competences acquisition for the educational context. Comput. Hum. Behav. 2023, 148, 107903.
30. AlAfnan, M.A.; Dishari, S.; Jovic, M.; Lomidze, K. ChatGPT as an educational tool: Opportunities, challenges, and recommendations for communication, business writing, and composition courses. J. Artif. Intell. Technol. 2023, 3, 60–68.
31. Masters, K. Ethical use of artificial intelligence in health professions education: AMEE Guide No. 158. Med. Teach. 2023, 45, 574–584.
32. Venkatesh, V.; Davis, F.D. A theoretical extension of the technology acceptance model: Four longitudinal field studies. Manag. Sci. 2000, 46, 186–204.
33. Compeau, D.R.; Higgins, C.A. Computer self-efficacy: Development of a measure and initial test. MIS Q. 1995, 19, 189–211.
34. Davis, F.D.; Bagozzi, R.P.; Warshaw, P.R. Extrinsic and intrinsic motivation to use computers in the workplace. J. Appl. Soc. Psychol. 1992, 22, 1111–1132.
35. Park, S.Y. An analysis of the technology acceptance model in understanding university students’ behavioral intention to use e-learning. J. Educ. Technol. Soc. 2009, 12, 150–162.
36. Teo, T.; Noyes, J. An assessment of the influence of perceived enjoyment and attitude on the intention to use technology among pre-service teachers: A structural equation modeling approach. Comput. Educ. 2011, 57, 1645–1653.
37. Van Raaij, E.M.; Schepers, J.J. The acceptance and use of a virtual learning environment in China. Comput. Educ. 2008, 50, 838–852.
38. Lee, M.K.; Cheung, C.M.; Chen, Z. Acceptance of Internet-based learning medium: The role of extrinsic and intrinsic motivation. Inf. Manag. 2005, 42, 1095–1104.
39. Faqih, K.M.; Jaradat, M.-I.R.M. Assessing the moderating effect of gender differences and individualism-collectivism at individual-level on the adoption of mobile commerce technology: TAM3 perspective. J. Retail. Consum. Serv. 2015, 22, 37–52.
40. Alfadda, H.A.; Mahdi, H.S. Measuring students’ use of zoom application in language course based on the technology acceptance model (TAM). J. Psycholinguist. Res. 2021, 50, 883–900.
41. Davis, F.D.; Bagozzi, R.P.; Warshaw, P.R. User acceptance of computer technology: A comparison of two theoretical models. Manag. Sci. 1989, 35, 982–1003.
42. Scherer, R.; Teo, T. Unpacking teachers’ intentions to integrate technology: A meta-analysis. Educ. Res. Rev. 2019, 27, 90–109.
43. Dwivedi, Y.K.; Rana, N.P.; Jeyaraj, A.; Clement, M.; Williams, M.D. Re-examining the unified theory of acceptance and use of technology (UTAUT): Towards a revised theoretical model. Inf. Syst. Front. 2019, 21, 719–734.
44. Chocarro, R.; Cortinas, M.; Marcos-Matás, G. Teachers’ attitudes towards chatbots in education: A technology acceptance model approach considering the effect of social language, bot proactiveness, and users’ characteristics. Educ. Stud. 2023, 49, 295–313.
45. Hair, J.F. Multivariate Data Analysis; Pearson: London, UK, 2009.
46. Dawes, J. Five point vs. eleven point scales: Does it make a difference to data characteristics? Australas. J. Mark. Res. 2002, 10, 39–47.
47. Brown, J.D. Likert items and scales of measurement. Shiken JALT Test. Eval. SIG Newsl. 2011, 15, 10–14.
48. Li, K. Determinants of college students’ actual use of AI-based systems: An extension of the technology acceptance model. Sustainability 2023, 15, 5221.
49. Xu, J.; Zhang, X.; Li, H.; Yoo, C.; Pan, Y. Is everyone an artist? A study on user experience of AI-based painting system. Appl. Sci. 2023, 13, 6496.
50. Zhang, C.; Schießl, J.; Plößl, L.; Hofmann, F.; Gläser-Zikuda, M. Acceptance of artificial intelligence among pre-service teachers: A multigroup analysis. Int. J. Educ. Technol. High. Educ. 2023, 20, 49.
51. Joo, Y.J.; So, H.-J.; Kim, N.H. Examination of relationships among students’ self-determination, technology acceptance, satisfaction, and continuance intention to use K-MOOCs. Comput. Educ. 2018, 122, 260–272.
52. Salloum, S.A.; Alhamad, A.Q.M.; Al-Emran, M.; Monem, A.A.; Shaalan, K. Exploring students’ acceptance of e-learning through the development of a comprehensive technology acceptance model. IEEE Access 2019, 7, 128445–128462.
53. Kamal, S.A.; Shafiq, M.; Kakria, P. Investigating acceptance of telemedicine services through an extended technology acceptance model (TAM). Technol. Soc. 2020, 60, 101212.
54. Saadé, R.G.; Kira, D. The emotional state of technology acceptance. Issues Informing Sci. Inf. Technol. 2006, 3, 529–539.
55. Kang, J.-W.; Namkung, Y. The information quality and source credibility matter in customers’ evaluation toward food O2O commerce. Int. J. Hosp. Manag. 2019, 78, 189–198.
56. Khan, M.I.; Saleh, M.A.; Quazi, A. Social media adoption by health professionals: A TAM-based study. In Proceedings of the Informatics, Madrid, Spain, 25–27 October 2021; p. 6.
57. Kohnke, A.; Cole, M.L.; Bush, R. Incorporating UTAUT predictors for understanding home care patients’ and clinician’s acceptance of healthcare telemedicine equipment. J. Technol. Manag. Innov. 2014, 9, 29–41.
58. Li, C.; He, L.; Wong, I.A. Determinants predicting undergraduates’ intention to adopt e-learning for studying English in Chinese higher education context: A structural equation modelling approach. Educ. Inf. Technol. 2021, 26, 4221–4239.
59. Venkatesh, V.; Thong, J.Y.; Xu, X. Consumer acceptance and use of information technology: Extending the unified theory of acceptance and use of technology. MIS Q. 2012, 36, 157–178.
60. Yang, H.-L.; Lin, R.-X. Why do people continue to play mobile game apps? A perspective of individual motivation, social factor and gaming factor. J. Internet Technol. 2019, 20, 1925–1936.
61. Pan, L.; Xia, Y.; Xing, L.; Song, Z.; Xu, Y. Exploring use acceptance of electric bicycle-sharing systems: An empirical study based on PLS-SEM analysis. Sensors 2022, 22, 7057.
62. Hair, J.F.; Gabriel, M.; Patel, V. AMOS covariance-based structural equation modeling (CB-SEM): Guidelines on its application as a marketing research tool. Braz. J. Mark. 2014, 13, 44–55.
63. Byrne, B.M. Testing for multigroup invariance using AMOS graphics: A road less traveled. Struct. Equ. Model. 2004, 11, 272–300.
64. Byrne, B.M.; Shavelson, R.J.; Muthén, B. Testing for the equivalence of factor covariance and mean structures: The issue of partial measurement invariance. Psychol. Bull. 1989, 105, 456.
65. Byrne, B.M. Structural Equation Modeling with AMOS: Basic Concepts, Applications, and Programming (Multivariate Applications Series); Taylor & Francis Group: New York, NY, USA, 2010.
66. Fornell, C.; Larcker, D.F. Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 1981, 18, 39–50.
67. Nunnally, J. Psychometric Theory; McGraw-Hill Inc.: Columbus, OH, USA, 1994.
68. Hayduk, L.A. Structural Equation Modeling with LISREL: Essentials and Advances; JHU Press: Baltimore, MD, USA, 1987.
69. Hu, L.-t.; Bentler, P.M. Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Struct. Equ. Model. A Multidiscip. J. 1999, 6, 1–55.
70. Bagozzi, R.P.; Yi, Y. On the evaluation of structural equation models. J. Acad. Mark. Sci. 1988, 16, 74–94.
71. Brown, T.A. Confirmatory Factor Analysis for Applied Research; Guilford Publications: New York, NY, USA, 2015.
72. Marsh, H.W.; Hocevar, D. Application of confirmatory factor analysis to the study of self-concept: First- and higher order factor models and their invariance across groups. Psychol. Bull. 1985, 97, 562.
73. Wheaton, B.; Muthen, B.; Alwin, D.F.; Summers, G.F. Assessing reliability and stability in panel models. Sociol. Methodol. 1977, 8, 84–136.
74. Byrne, B.M. Structural equation modeling with AMOS, EQS, and LISREL: Comparative approaches to testing for the factorial validity of a measuring instrument. Int. J. Test. 2001, 1, 55–86.
75. Kikalishvili, S. Unlocking the potential of GPT-3 in education: Opportunities, limitations, and recommendations for effective integration. Interact. Learn. Environ. 2023, 32, 5587–5599.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).