1. Introduction

Knowledge of algorithms is a fundamental part of Computer Science education. A widely used method for teaching algorithms is pseudocode [1], which provides an abstract representation of their core principles. Pseudocode provides a crucial link between high-level algorithmic ideas and their implementation in various programming languages. However, the effectiveness of pseudocode as a teaching tool is not always straightforward. Students often struggle to understand pseudocode and translate it into functional code, especially when the pseudocode is not sufficiently detailed or when more complex paradigms, such as recursion, are involved [2].

A balance between abstraction and detail is critical for a successful implementation based on the provided pseudocode. On the one hand, highly abstract pseudocode [1] fosters problem-solving skills by encouraging students to interpret and implement the solution themselves, but overly ambiguous descriptions may lead to confusion and errors during implementation. On the other hand, pseudocode that is too detailed can result in mere copying, where students simply rewrite the instructions into code without fully understanding the core concepts. Furthermore, debugging becomes difficult when students rely on pseudocode, as discrepancies between the intended logic and the implementation are often hard to identify without proper guidance [3]. Another critical aspect is the presence of recursion in pseudocode [4], a concept that students find difficult because they need to be able to visualise the execution flow of the function. This often results in incomplete implementations or logical errors that are tedious to debug without explicit examples and support.

The motivation behind this study comes from the development of the FLoCIC algorithm (Few Lines of Code for Image Compression) [5], a concise algorithm for lossless raster image compression. The problem is practical and interesting for students. FLoCIC requires only a small number of lines of code to achieve results that are competitive with established compression methods [6]. Its straightforward logic makes it an ideal candidate for educational purposes, especially for teaching algorithms based on pseudocode. Given its structure, students can focus on understanding core algorithmic concepts, such as recursion, lossless image compression, prediction models, and entropy encoding [7], without being overwhelmed by unnecessary complexity. In this paper, our objective is to evaluate the impact of pseudocode on students’ learning outcomes and coding practices by analysing their implementations of the FLoCIC algorithm. We conducted a comprehensive questionnaire among undergraduate and graduate students of Computer Science. The participants were provided with the FLoCIC pseudocode and tasked with implementing the algorithm, after which they answered a structured set of questions assessing their understanding and the challenges they faced. By comparing the responses of undergraduate and graduate students, we gained insights into differences in their levels of understanding, debugging strategies, and learning outcomes, and into the impact of prior knowledge on their algorithmic implementations. The analysis revealed how varying levels of experience influenced the students’ approaches to problem-solving, particularly in dealing with complex pseudocode components and selecting effective implementation strategies. The contributions of this work include the following:

Evaluation of the FLoCIC algorithm as a simple but effective tool for teaching fundamental algorithmic principles in lossless image compression, promoting both theoretical understanding and practical implementation skills.

Identification of the role of external resources, such as generative AI and code from the WEB, in supporting implementation efforts, while highlighting the importance of a fundamental understanding of algorithms.

Examination of the differences in perceptions of algorithm complexity, implementation duration, and learning effectiveness between undergraduate and graduate students, offering insights into how past programming experiences affect performance in algorithmic tasks.

Recommendations for improving pedagogical practices, including the need for detailed pseudocode for complex tasks, additional support for less experienced students, and promoting systematic debugging and testing approaches.

Previous methods, like PRIMM [8], Parsons problems [9,10], and Media Computation [11], are based on concepts more suitable for beginners and use basic programming techniques. They focus on fundamental skills, such as structured code reading, careful code tracing, testing existing code, rearranging existing code fragments, and, more directly, visualising the results of executed code. In contrast, the proposed FLoCIC method stands out because it targets more advanced programming techniques. FLoCIC introduces an optimised, comprehensive pipeline that is independent of any specific programming language and which students are required to implement fully. This assignment is conceptually challenging, deliberately incorporating advanced programming concepts like recursion, interpolative coding, and binary (bit-level) writing. These are recognised as difficult topics in programming education, making this task suitable for upper-year undergraduate or graduate students.

The remainder of the paper is organised as follows. In Section 2, we review related work, including existing approaches to data compression, challenges in teaching programming, and the role of pseudocode in the learning process. Section 3 describes the elementary concepts of data compression and briefly presents the FLoCIC algorithm. In Section 4, we describe the methodology, including the design of the study, the questionnaire process, and the data collection. Section 5 presents the results of the questionnaire and their analysis, while Section 6 offers a detailed discussion of the findings, comparing the students’ levels of performance and comprehension and the implications for teaching.

2. Related Work

Traditional methods, such as Huffman coding [12], Lempel–Ziv–Welch (LZW) [13], and arithmetic coding (AC) [14], have proven effective in compressing structured and repetitive data by exploiting redundancies in the data. In image compression, however, additional techniques are required that exploit redundancies at the pixel level. Image compression techniques can generally be categorised as lossy or lossless. While lossy compression achieves higher compression ratios by tolerating inaccuracies in the reconstructed data, lossless compression guarantees exact reconstruction of the original data. Lossless compression is therefore crucial in domains where the original data must be recovered exactly, such as medical imaging and archival storage. Lossless techniques used in file formats such as Portable Network Graphics (PNG) [15] and Joint Photographic Experts Group Lossless (JPEG-LS) [16] usually combine predictive models with entropy coding. For example, JPEG-LS uses local pixel prediction and context modelling to reduce redundancy in image data. These methods perform well, achieving good compression ratios while preserving data integrity. Other approaches, such as interpolative coding (IC) [17], have shown potential in specific domains by simplifying the encoding process while maintaining efficiency. For example, Niemi and Teuhola [18] explored its application to bi-level image compression, highlighting its adaptability.
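To make "local pixel prediction" concrete, the following is a minimal Python sketch of the median edge detector (MED), the fixed predictor used in JPEG-LS, applied to a small greyscale image. It deliberately omits the JPEG-LS context modelling, bias correction, and its actual border handling; the border rule used here (first pixel predicted as 0, rest of the first row from the left neighbour, rest of the first column from the pixel above) and the function names `med_predict`, `predict_at`, `prediction_errors`, and `reconstruct` are assumptions made for illustration. It is not presented as FLoCIC's prediction step.

```python
from typing import List

Image = List[List[int]]  # greyscale image stored as rows of pixel values


def med_predict(a: int, b: int, c: int) -> int:
    """Median edge detector: a = left, b = above, c = upper-left neighbour."""
    if c >= max(a, b):
        return min(a, b)  # c is large: likely an edge, take the smaller neighbour
    if c <= min(a, b):
        return max(a, b)  # c is small: likely an edge, take the larger neighbour
    return a + b - c      # no edge detected: planar (gradient) prediction


def predict_at(img: Image, x: int, y: int) -> int:
    """Prediction for pixel (x, y) using only neighbours visited earlier in raster order."""
    if x == 0 and y == 0:
        return 0                      # assumed border rule: first pixel predicted as 0
    if y == 0:
        return img[y][x - 1]          # assumed border rule: first row uses the left neighbour
    if x == 0:
        return img[y - 1][x]          # assumed border rule: first column uses the pixel above
    return med_predict(img[y][x - 1], img[y - 1][x], img[y - 1][x - 1])


def prediction_errors(img: Image) -> List[int]:
    """Residuals e = pixel - prediction, in row-major order."""
    return [img[y][x] - predict_at(img, x, y)
            for y in range(len(img)) for x in range(len(img[0]))]


def reconstruct(errors: List[int], width: int, height: int) -> Image:
    """Inverse step: the decoder repeats the same predictions in the same order."""
    img = [[0] * width for _ in range(height)]
    k = 0
    for y in range(height):
        for x in range(width):
            img[y][x] = predict_at(img, x, y) + errors[k]
            k += 1
    return img
```

For example, `prediction_errors([[10, 12, 13], [11, 12, 14], [11, 13, 15]])` returns `[10, 2, 1, 1, 0, 1, 0, 1, 1]`. On smooth content most residuals are small, which is what the subsequent entropy coding exploits, and `reconstruct` recovers the image exactly because the decoder performs identical predictions from already decoded pixels.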

FLoCIC [5], the algorithm used in this study, is also designed for lossless compression. Lossless compression is easier for students to understand, and its results are straightforward to verify, since the decompressed image must match the original exactly. The algorithm builds on prediction and interpolative coding [17] to achieve competitive performance with minimal implementation complexity, making it very suitable for educational purposes. Table 1 summarises the mentioned methods.
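The recursive interpolative coding idea on which FLoCIC builds can be illustrated with a minimal Python sketch. It assumes a non-decreasing sequence of integers whose first and last values are already known to the decoder (for example, stored in a file header), writes each midpoint value in plain fixed-width binary (practical coders often use shorter minimal binary codes), and represents the bit stream as a string of '0' and '1' characters for readability. The names `ic_encode`, `ic_decode`, and `BitReader` are hypothetical; this is not the paper's Algorithm A3.

```python
from typing import List


def _write(bits: List[str], value: int, width: int) -> None:
    """Append `value` as a `width`-bit binary number, most significant bit first."""
    if width > 0:
        bits.append(format(value, f"0{width}b"))


def ic_encode(c: List[int], lo: int, hi: int, bits: List[str]) -> None:
    """Encode c[lo+1 .. hi-1] of a non-decreasing list; c[lo] and c[hi] are known."""
    if hi - lo <= 1 or c[hi] == c[lo]:
        return  # nothing in between, or every value in between equals c[lo]
    mid = (lo + hi) // 2
    # c[mid] - c[lo] lies in 0 .. c[hi] - c[lo], which needs bit_length(c[hi] - c[lo]) bits
    width = (c[hi] - c[lo]).bit_length()
    _write(bits, c[mid] - c[lo], width)
    ic_encode(c, lo, mid, bits)   # left half, bounded by c[lo] .. c[mid]
    ic_encode(c, mid, hi, bits)   # right half, bounded by c[mid] .. c[hi]


class BitReader:
    """Reads fixed-width unsigned integers from a string of '0'/'1' characters."""

    def __init__(self, bits: str) -> None:
        self.bits = bits
        self.pos = 0

    def read(self, width: int) -> int:
        if width == 0:
            return 0
        value = int(self.bits[self.pos:self.pos + width], 2)
        self.pos += width
        return value


def ic_decode(c: List[int], lo: int, hi: int, reader: BitReader) -> None:
    """Mirror of ic_encode: fills c[lo+1 .. hi-1] in place from the bit stream."""
    if hi - lo <= 1:
        return
    if c[hi] == c[lo]:
        for i in range(lo + 1, hi):
            c[i] = c[lo]  # the encoder wrote no bits for a constant run
        return
    mid = (lo + hi) // 2
    width = (c[hi] - c[lo]).bit_length()
    c[mid] = c[lo] + reader.read(width)
    ic_decode(c, lo, mid, reader)
    ic_decode(c, mid, hi, reader)


if __name__ == "__main__":
    seq = [0, 2, 2, 5, 9, 9, 14]
    out: List[str] = []
    ic_encode(seq, 0, len(seq) - 1, out)
    stream = "".join(out)
    decoded = [0] * len(seq)
    decoded[0], decoded[-1] = seq[0], seq[-1]  # endpoints known to the decoder
    ic_decode(decoded, 0, len(decoded) - 1, BitReader(stream))
    print(len(stream), decoded == seq)  # 16 True
```

Encoding `[0, 2, 2, 5, 9, 9, 14]` produces 16 bits, and decoding them into a list that holds only the two endpoints restores the original sequence. When both endpoints of an interval are equal, no bits are written at all, and the encoder and decoder must visit the intervals in exactly the same order for the stream to be decodable. Because each call halves its interval, the recursion depth grows only logarithmically with the sequence length.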

Teaching programming remains a challenging task, particularly with novice students [19]. The transition from understanding abstract concepts to implementing functional code is often problematic [20]. Common challenges include understanding syntax, debugging, and developing problem-solving skills [21]. These problems become even greater when students encounter complex programming patterns such as recursion and dynamic memory management [22]. Studies have shown that pseudocode can enhance the understanding of algorithmic logic and encourage critical thinking [23]. However, it has also been noted that poorly designed pseudocode may lead to confusion or complicate bug detection [24]. In academic environments, pseudocode is often used in assignments and examinations to assess students’ understanding of algorithms. Research [2] suggests that the use of pseudocode in teaching enables students to better visualise execution flows, especially for concepts such as recursion and nested loops. Moreover, it allows teachers to focus on logic and structure rather than syntax, which is especially beneficial in first-year programming courses [25].

Questionnaires [26] are used frequently in educational research to assess learning outcomes, understand challenges, and collect feedback. For example, student questionnaires have been used to evaluate the effectiveness of teaching methods, the difficulty of course material, and student participation [27]. In the context of programming education, questionnaires have been crucial in identifying common challenges faced by students, such as debugging difficulties and a lack of algorithmic thinking [28]. Combining questionnaires with performance-based assessments provides a comprehensive perspective on educational effectiveness [29]. This study builds on these foundations by incorporating pseudocode into a programming task and evaluating its impact through a detailed questionnaire. The FLoCIC algorithm, designed for simplicity, serves as an ideal case study for evaluating how students interpret and implement pseudocode. The results of the questionnaire offer insight into the effectiveness of pseudocode as a teaching tool and highlight areas where students require additional support.

4. Materials and Methods

The main aim of this study is to evaluate the educational value of the FLoCIC algorithm. The study began with the preparation of implementation instructions for FLoCIC. The students were tasked with implementing the algorithm in a programming language of their choice. Afterwards, they were invited to complete a questionnaire, and their responses form the core of this paper.

4.1. Conducting the Questionnaire

The study was conducted in 2024 at the University of Maribor, Faculty of Electrical Engineering and Computer Science. The participants were undergraduate and postgraduate students of Computer Science. The undergraduates were in their final year of study, while the postgraduates were in their first year. Among the 68 undergraduate students, 47 were enrolled in a professional Higher Education Programme (Bachelor of Applied Science, B.A.Sc.), while 21 were attending a University Study Programme (Bachelor of Science, B.Sc.). In addition, 53 students were enrolled in a postgraduate programme (Master of Science, M.Sc.).

The students received detailed, structured, step-by-step instructions on how to implement the algorithm. These instructions were delivered in person during a live session, where the concepts, functionalities, and implementation steps of the algorithm were explained systematically. To support understanding and continuity, the students had constant access to a presentation containing all the necessary pseudocode, visual aids, and key algorithmic principles. Throughout the task, a teaching assistant was available via e-mail and instant messaging channels to provide support, answer questions, and assist with any implementation challenges. This ensured a supportive learning environment that promoted engagement and encouraged independent problem-solving. In addition, the students were informed from the beginning that their participation would involve completing an anonymous questionnaire to share their insights and experiences.

4.2. The Questionnaire

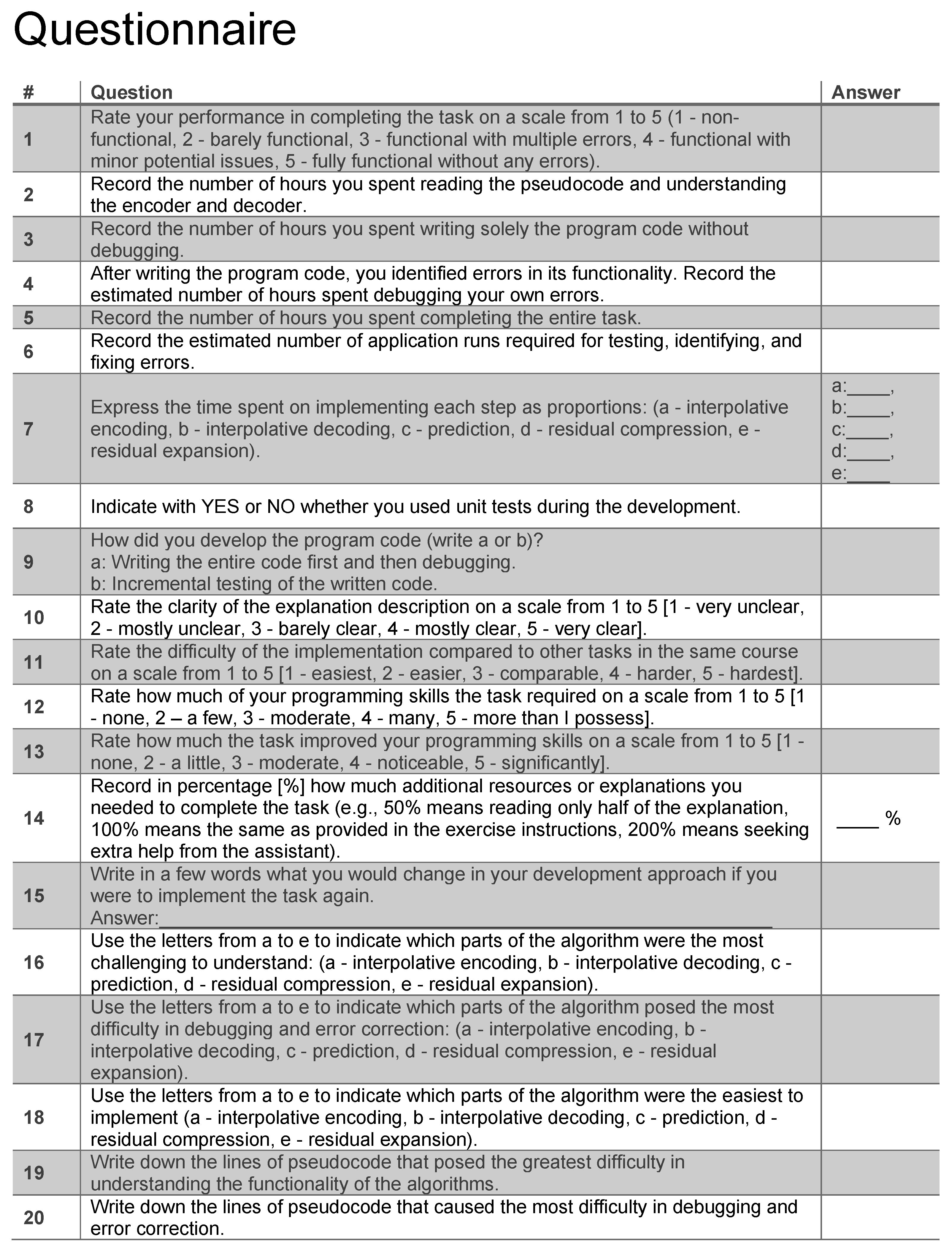

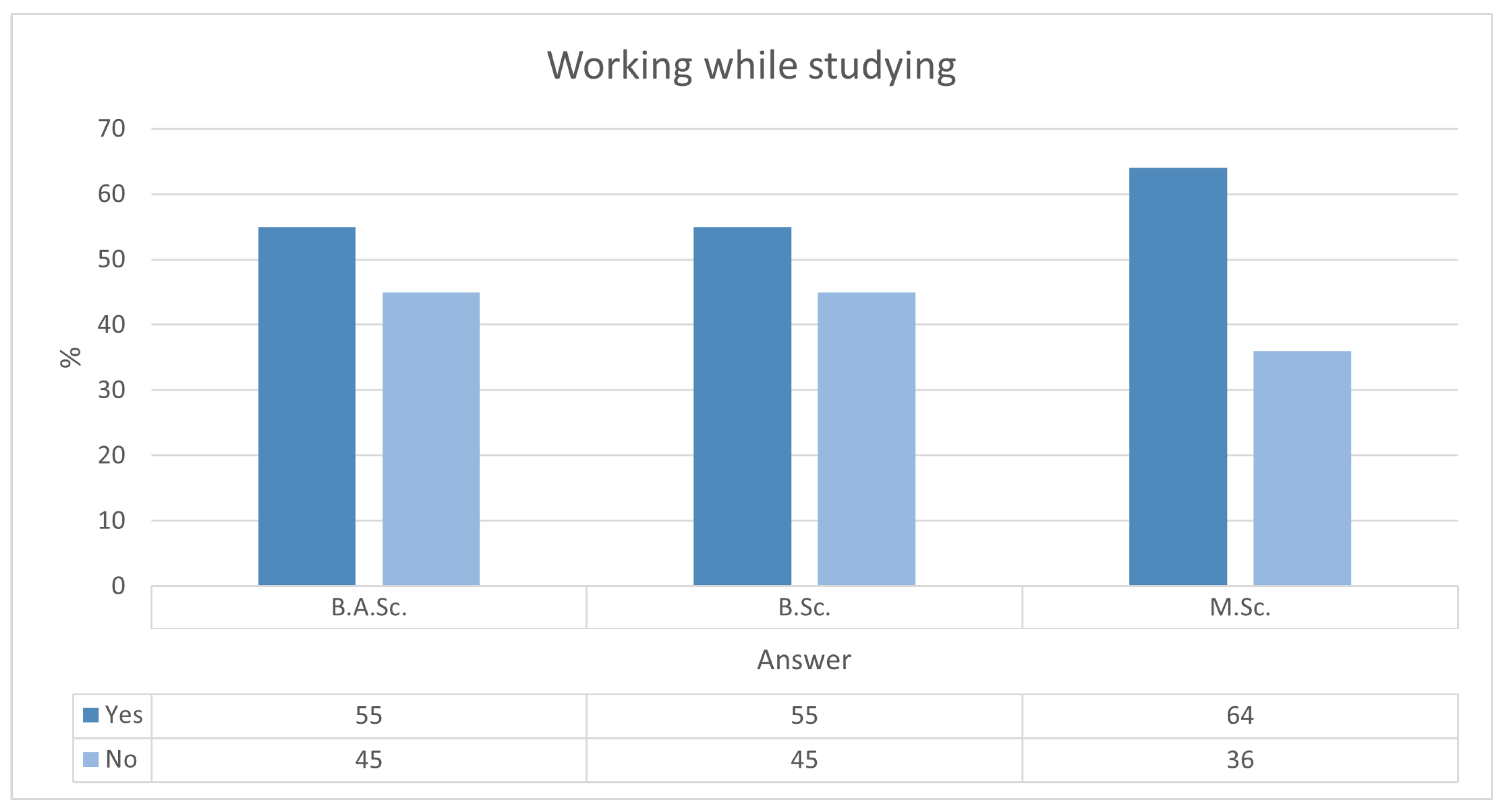

The questionnaire consisted of 36 questions (see Figure A1 and Figure A2) covering different aspects of the implementation process, such as the students’ technical approach, their level of understanding, and the perceived difficulty of the task. The categories were derived from established constructs in computing education, such as algorithmic understanding, self-efficacy in debugging, and perceived cognitive load, based on the relevant literature and prior teaching experience. To ensure the validity and clarity of the content, both the categories and the individual questions were reviewed by two Faculty experts and piloted with a small group of Computer Science education colleagues prior to the main study. To avoid bias in the data, the questions were designed carefully (simple and unambiguous), the questionnaire was completely anonymous, and the whole cohort of students from the B.A.Sc., B.Sc. and M.Sc. programmes was included, regardless of the students’ backgrounds. All the students received the same instructions and were not informed in advance about the content of the questionnaire.

The questionnaire, designed to evaluate the students’ experiences and perceptions, was distributed at the end of the assignment. Participation was entirely voluntary, and the questions were intentionally not revealed beforehand to ensure spontaneous and unbiased responses. Completing the questionnaire had no impact on the grading of the assignment, maintaining the objectivity of the academic evaluation. The questionnaire served as a reflective tool, encouraging the students to critically evaluate their learning process, the challenges encountered, and the support provided during the task. To capture a complete picture of the students’ experiences, the questions were organised into five thematic categories:

Programming background: This evaluated the existing programming experience of the students, including their familiarity with data structures, algorithms, and basic compression techniques, providing information on how these foundational skills influenced their ability to implement the FLoCIC algorithm.

Implementation process: This measured how well the students understood the pseudocode provided and their ability to translate it into a functional implementation of the FLoCIC algorithm. It was assessed through the clarity and accessibility of the instructions provided, as well as through how successfully the students adapted the pseudocode to their chosen programming environment.

Challenges: This identified specific difficulties the students encountered during the implementation process. This category focused especially on debugging issues and on difficulties with recursive functions, modular design, and interpreting the pseudocode. It also aimed to highlight the differences in problem-solving strategies between students with varying levels of experience and levels of study.

External help: This analysed the availability and effectiveness of the resources provided to the students. It examined how useful the students found access to the presentation materials, the role of the teaching assistant, and the adequacy of the instructional materials. In addition, it evaluated the students’ use of external resources, such as generative AI and code from WEB repositories, to support their implementation efforts.

Self-assessment: This gathered information about the students’ overall learning experience. The questions explored whether the task improved their understanding of algorithmic principles, increased their confidence in implementing pseudocode, and provided skills applicable to future assignments and projects. It also examined how the learning process differed between student groups and how the assignment could be adjusted to provide more targeted support.

These thematic categories provided a structured framework for analysing the questionnaire responses and identifying patterns in the students’ experiences. By incorporating various aspects of the task, including prior knowledge, understanding, challenges, and reflections, the questionnaire offered a comprehensive view of the educational impact of implementing the FLoCIC algorithm. This structured approach formed a solid foundation for the analysis presented in this study, highlighting both the pedagogical successes and areas for improvement.

5. Results

The following section presents the analysis of the questionnaire data collected from a total of 121 (out of 159 enrolled) students who participated in the study, aimed at evaluating their experience in implementing a pseudocode-based algorithm. The data revealed differences in response patterns between students of different educational levels and programmes. Not all the students answered every question, as some responses were left blank, either accidentally or due to uncertainty, resulting in varying numbers of responses across individual questions. This analysis focused on identifying trends in student performance and feedback, highlighting both common challenges and distinctive characteristics of the subgroups.

5.1. Programming Background

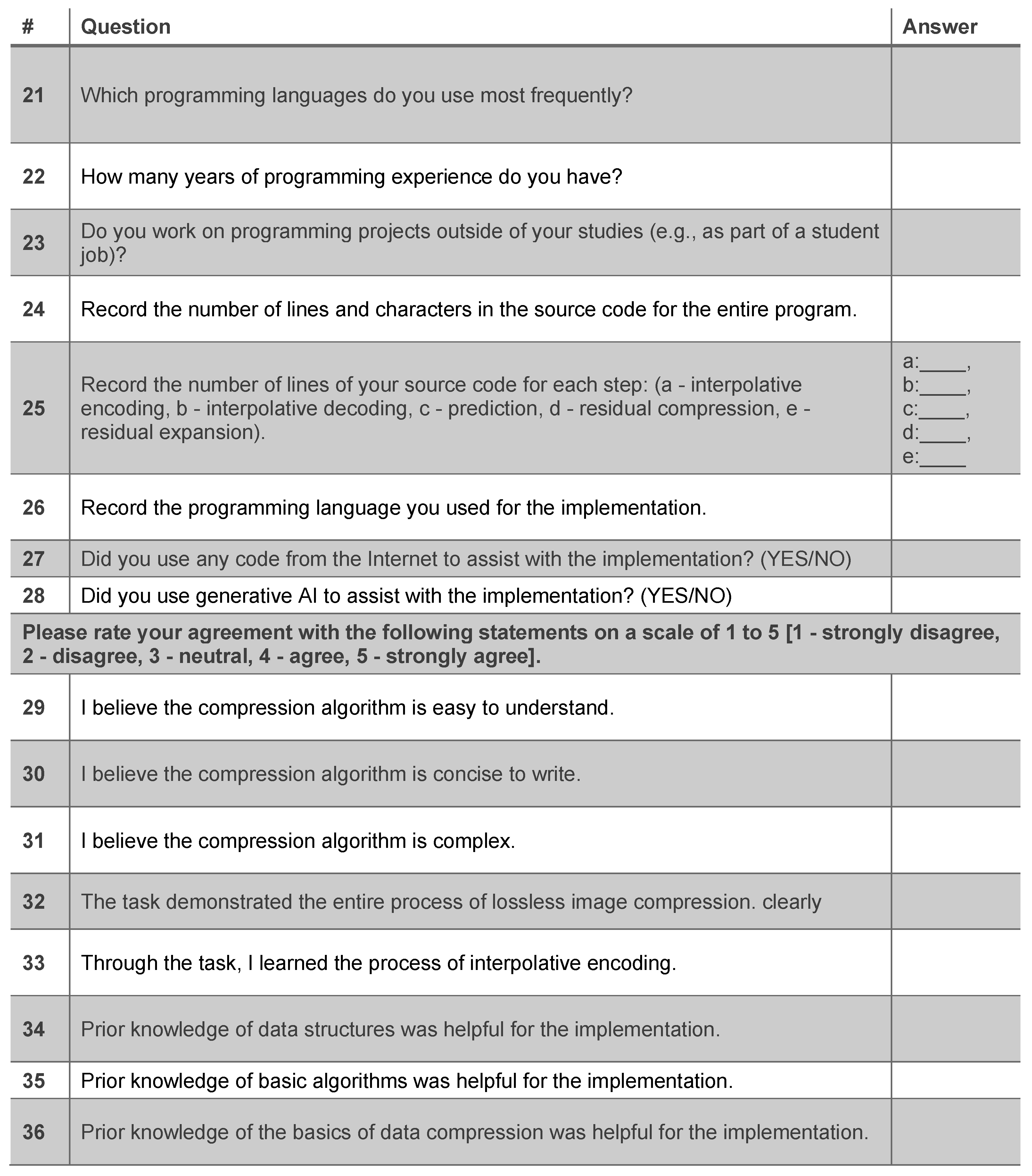

The questionnaire began with general questions, such as the number of years of programming experience, to establish a baseline for the participants’ technical backgrounds. The bar chart in Figure 1 illustrates the distribution of the students’ programming experience at the different study levels. The number of years of experience varied considerably, with 5 years being the most frequent answer, particularly among the M.Sc. students. Although most of the students had between 2 and 8 years of experience, some M.Sc. students reported over 10 years, indicating a smaller group of highly experienced participants. The analysis revealed that the B.A.Sc. students generally had more programming experience than the B.Sc. students, likely due to their backgrounds in high schools specialising in Computer Engineering. In contrast, the B.Sc. students, who come primarily from grammar schools (Gymnasiums), had less programming experience.

5.1.1. Work Experience

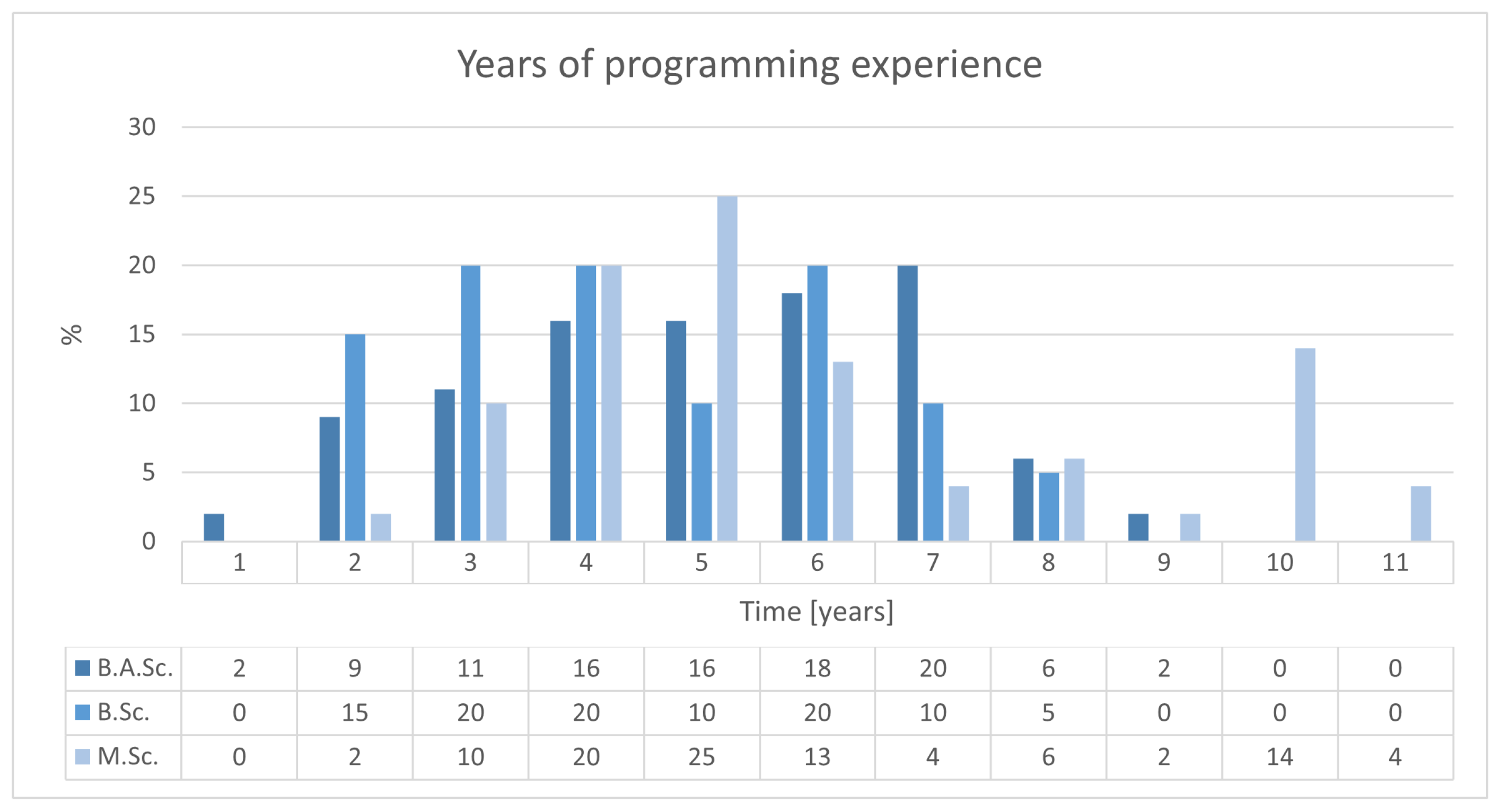

Figure 2 shows the number of students who work for local IT companies while studying. Among the B.A.Sc. students, slightly more than half reported working, indicating a balance between those who work and those who do not. The B.Sc. students showed a similar distribution, with 55% working and 45% not working. However, the M.Sc. students demonstrated a markedly higher proportion of working individuals, with 64% of them actively engaged in professional work. Most of the students who work are employed in the IT sector, where they are directly involved in programming and software development tasks, allowing them to apply and expand their technical knowledge in real-world scenarios. The students are involved in various forms of work, including regular employment, part-time jobs through student employment agencies, and their own independent IT projects.

Figure 2 also suggests that postgraduate students are more likely to work alongside their studies, probably due to their more advanced programming experience and professional readiness.

5.1.2. Programming Languages

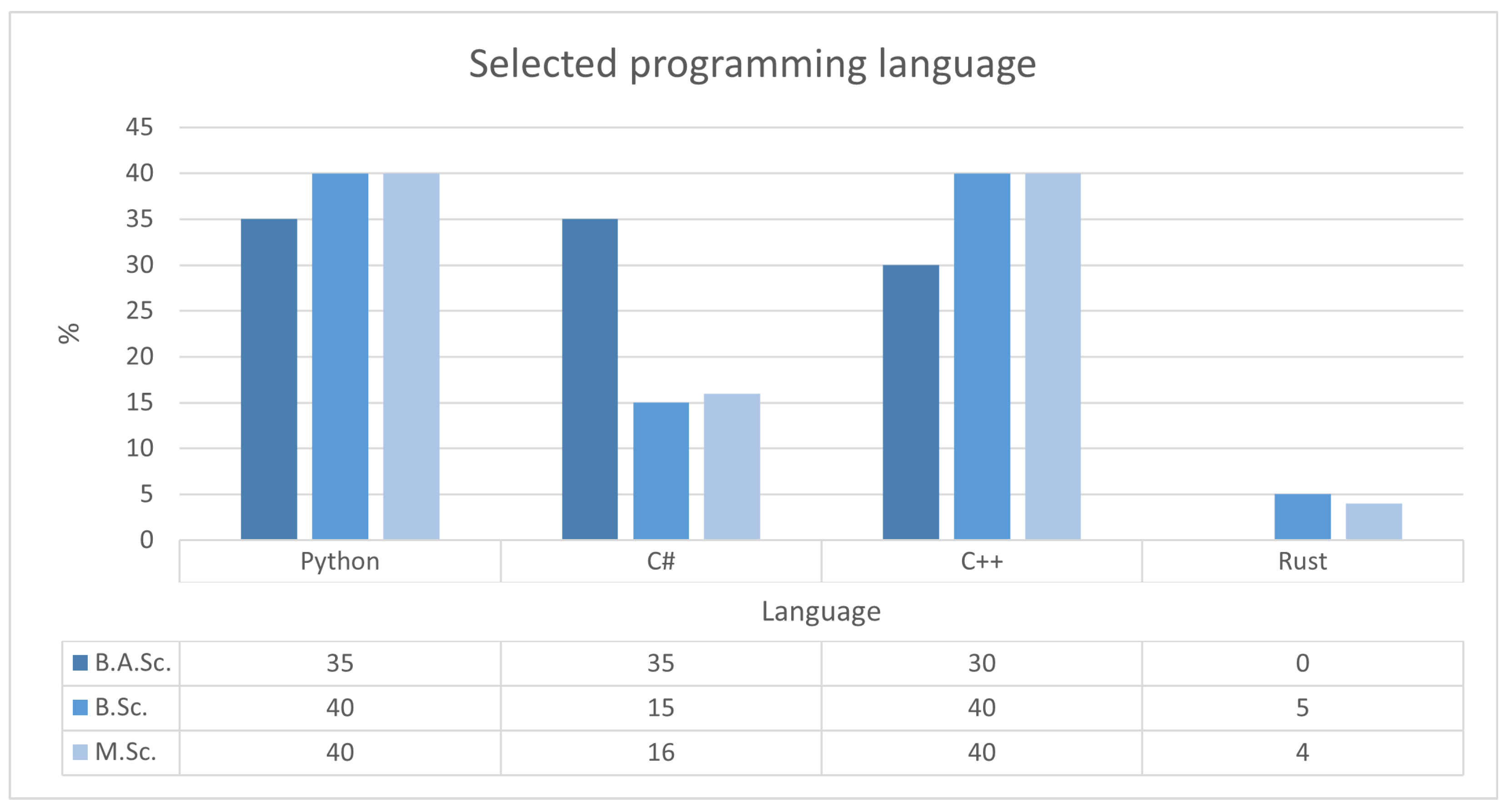

The students were also asked to identify the programming languages that they use most frequently, and the responses revealed distinct patterns between study levels. Figure 3 illustrates the distribution of programming languages, with C++ standing out as the most popular, mentioned by 29% of the students, followed by Python 3.12 with 20% and C# with 13%. The M.Sc. students predominantly favoured C++17, Python, and JavaScript 2024, reflecting their alignment with widely applicable languages. The B.A.Sc. students also showed a strong preference for C++, with notable use of Python, C# 12, and Kotlin 1.9, indicating a broader adoption of languages suited for practical applications. The B.Sc. students demonstrated a more diverse set of preferences, with Python, C++, and C being the most widely used, and less emphasis on JavaScript and Java than the other groups. Less common languages such as Kotlin, Rust, TypeScript, and PHP were also represented, but their usage was limited mostly to individual students. The data reflect a strong preference for flexible general-purpose languages like C++, Python, and C#, which are used commonly in academic and professional settings. The differences in language preferences between the M.Sc., B.A.Sc. and B.Sc. students likely reflect variations in programme focus and project requirements at each level of study.

The chart in Figure 4 illustrates the programming languages selected by the students to implement the pseudocode (the final question in this section of the questionnaire), with C++, Python, and C# emerging as the most popular choices at all study levels. Rust saw minimal use, chosen by only three students across all groups.

5.2. Implementation Process

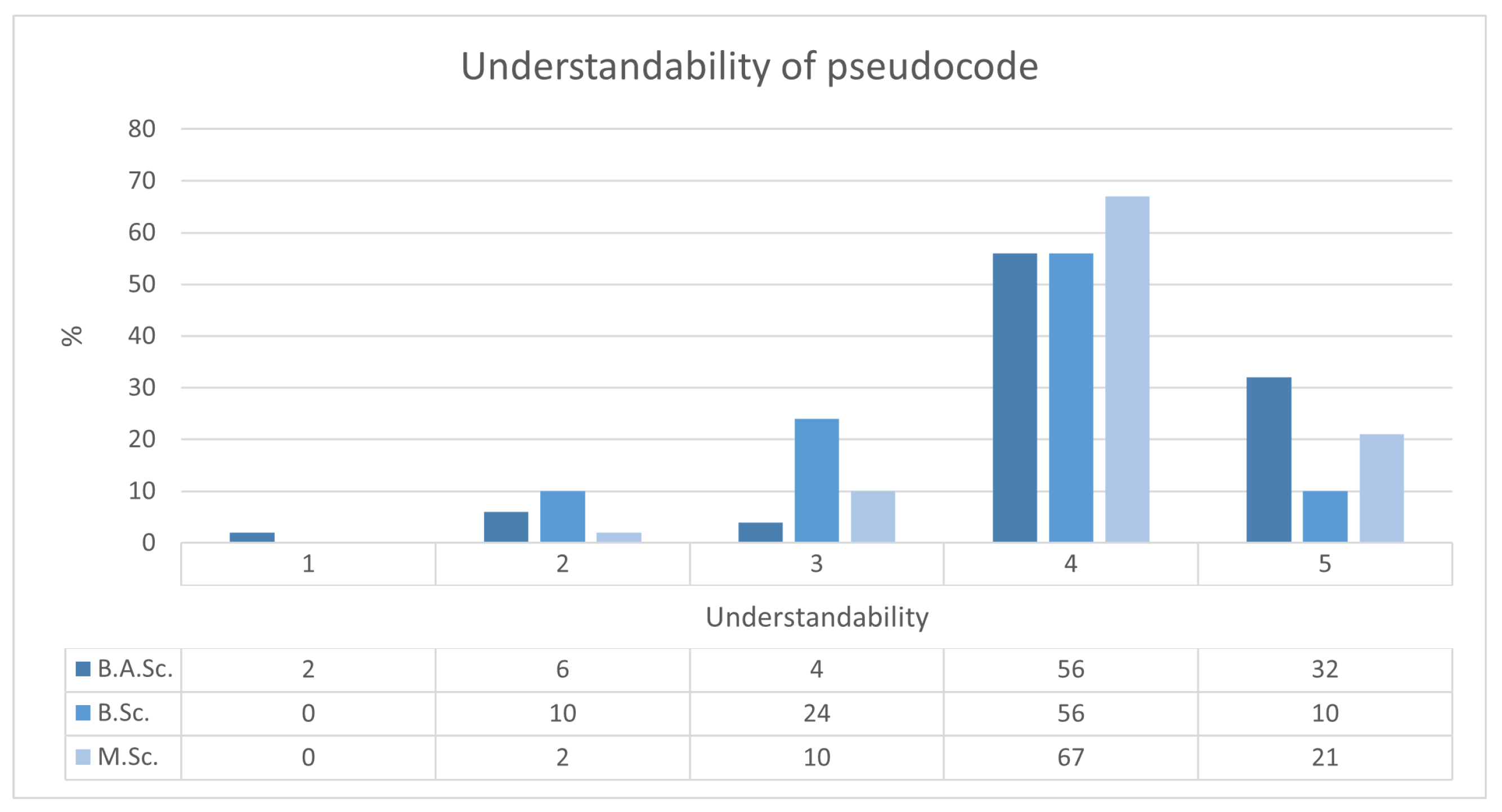

Figure 5 illustrates how the students rated the understandability of the oral explanation of the pseudocode provided prior to implementation. The ratings ranged from 1 (very unclear) to 5 (very clear). Most of the students found the explanation mostly clear (rating 4) or very clear (rating 5). Among the postgraduate students, 67% rated the explanation as mostly clear and 21% as very clear, indicating a strong general understanding. Similarly, a large percentage of the B.A.Sc. students (86%) rated it mostly clear or very clear. The B.Sc. students, while fewer in number, showed a similar trend. This distribution suggests that the explanation was generally effective across all groups, although a few students had difficulty understanding it fully.

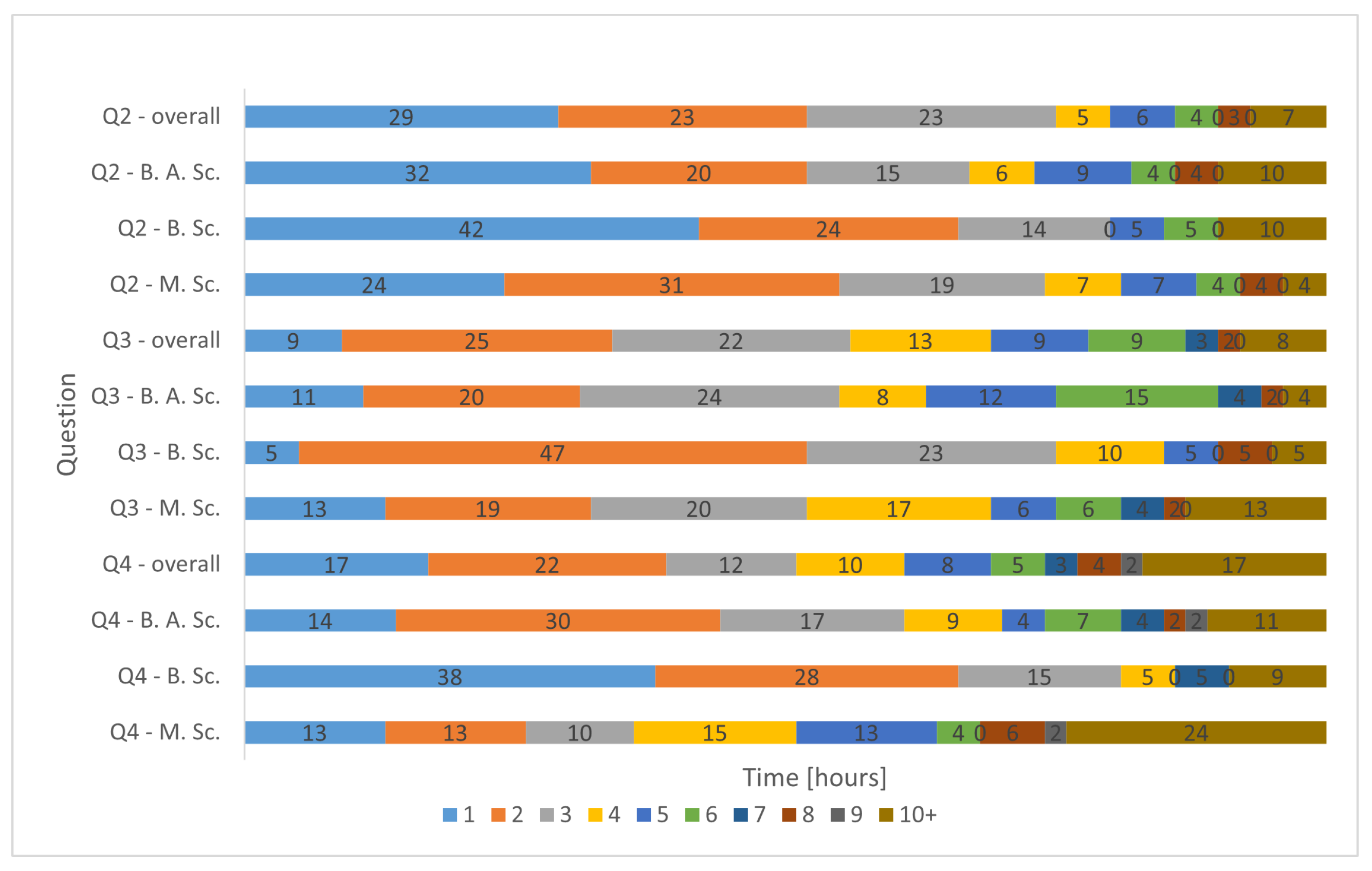

The data collected provide a detailed overview of how the students implemented the pseudocode-based algorithm, covering aspects such as reading and understanding the pseudocode, writing the code, debugging, completion time, and specific coding practices. All the times presented are self-reported approximations and depend on the students’ levels of rigour. As shown for Q2 in Figure 6, most of the students at all study levels required between 1 and 3 h to understand the pseudocode, after having already been given an oral presentation on the algorithm. The B.A.Sc. students showed a slightly higher proportion requiring more than 10 h than the other groups, indicating a small subset of participants with potential difficulties in understanding the instructions. The next step was writing the complete code without debugging, as illustrated by Q3 in Figure 6. Here, most of the students in all groups required between 2 and 4 h, indicating general consistency in coding efficiency. However, a small group of M.Sc. students reported spending more than 10 h, suggesting either a highly conscientious approach or difficulties in structuring the code independently. Debugging, shown as Q4 in Figure 6, proved to be a significant part of the implementation process; most B.A.Sc. and B.Sc. students completed it in 1 to 3 h, whereas the M.Sc. students were more likely to exceed 10 h of debugging.

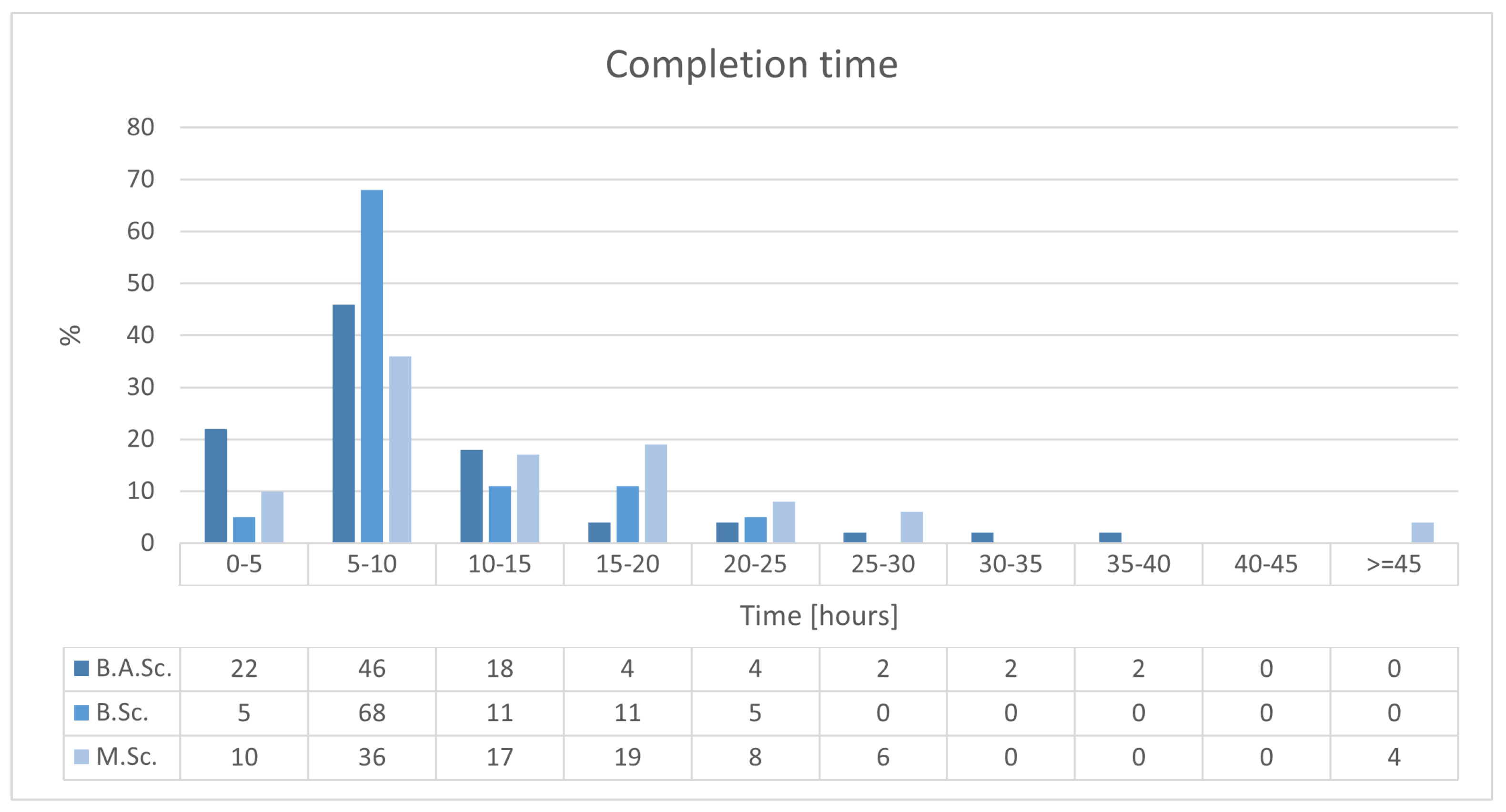

The total completion time of the task is summarised in Figure 7. Most of the students in all groups completed the implementation in 5 to 10 h, although the M.Sc. students displayed a wider spread, with some reporting over 20 h or even more than 45 h.

Most of the students wrote between 250 and 500 lines of code for their implementations (excluding documentation), as presented in Figure 8, with variations influenced by the programming languages they selected. A notable portion of the students, especially among the B.Sc. students, wrote fewer than 250 lines of code, reflecting either a concise implementation style or the use of languages with less verbose syntax. The B.A.Sc. students were distributed evenly across the mid-length categories, indicating a balance between detailed solutions and concise approaches. Among the three students who wrote between 100 and 150 lines of code, two used Python, while one selected C++. This diversity illustrates how programming experience and language choice may affect code length. However, the number of lines of code should be interpreted with caution, as this metric alone does not adequately reflect the complexity or quality of the solutions. Factors such as the programming language used, the individual coding style, and the amount of code reuse can significantly affect the line count, making it an unreliable indicator of effort or skill.

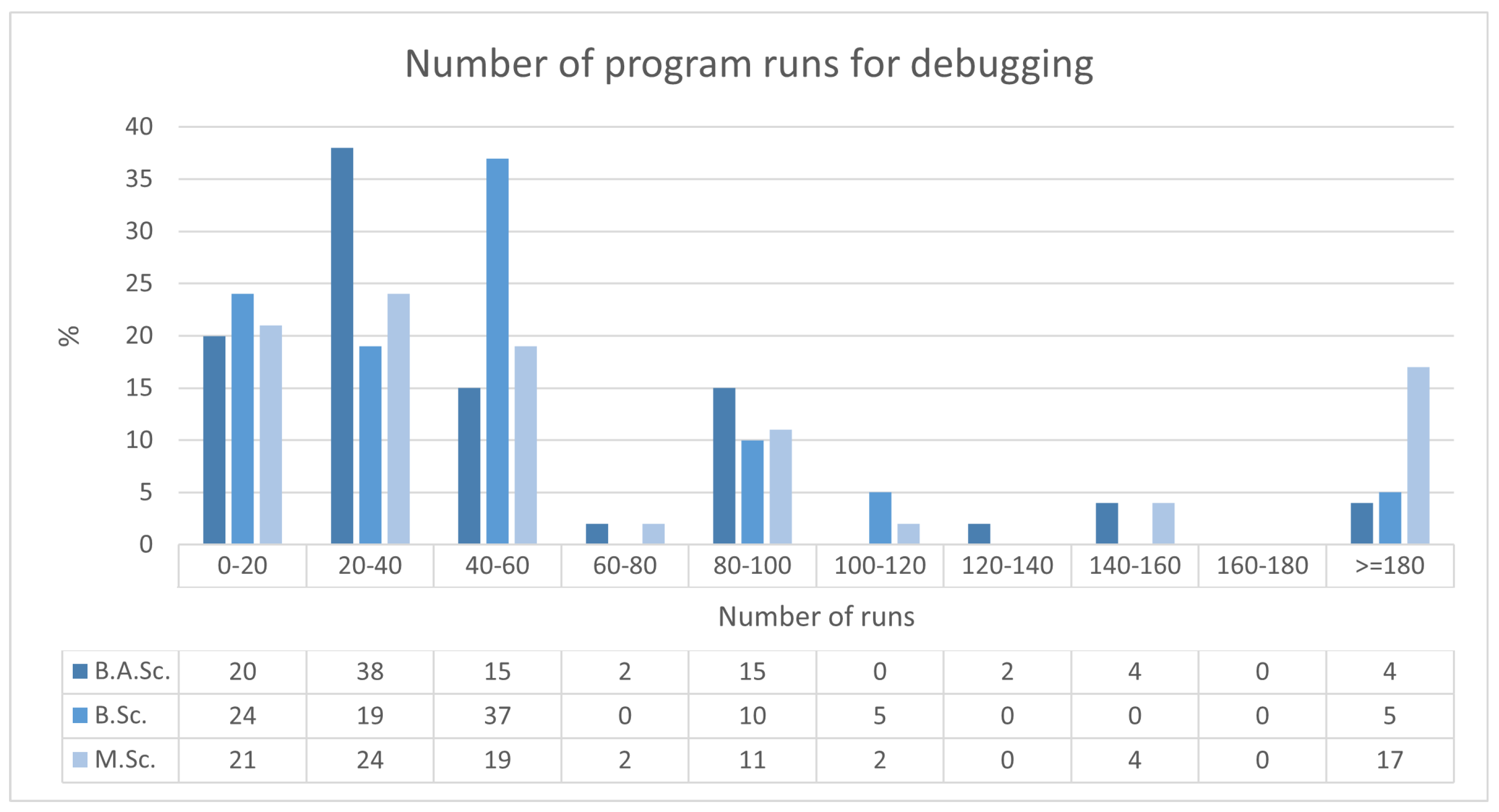

Most of the students ran their programmes between 20 and 40 times during debugging, but the M.Sc. students tended to debug more intensively, with several exceeding 180 runs (see Figure 9). The B.A.Sc. and B.Sc. students generally debugged less, remaining under 100 runs in most cases. The high number of runs among the M.Sc. students suggests a more iterative, test-oriented development style, likely reflecting their greater experience and careful implementation strategies.

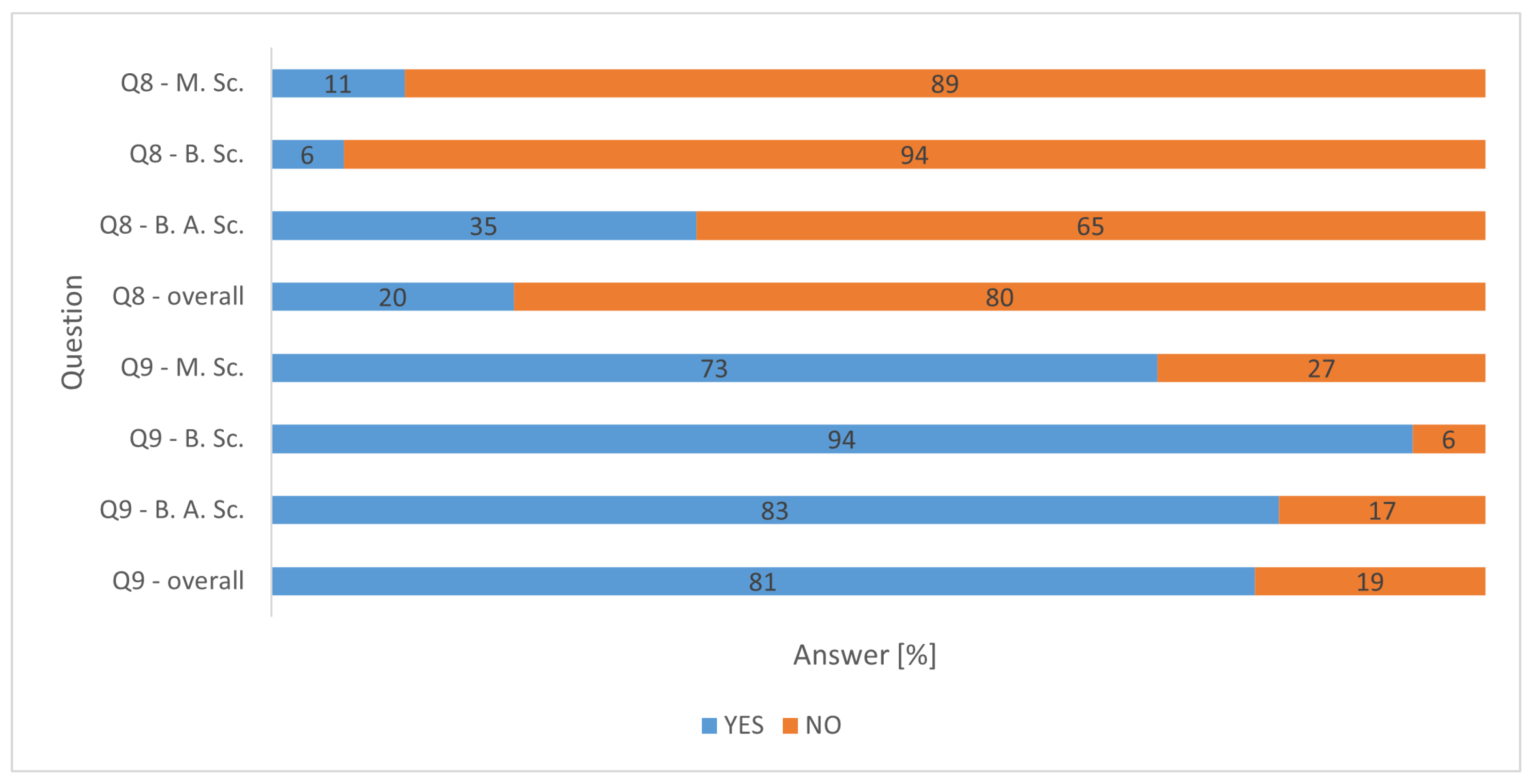

Figure 10 shows, in Q9, that real-time code testing was the dominant strategy in all groups, especially among the M.Sc. and B.A.Sc. students. The B.Sc. students were less consistent, performing fewer incremental tests during development. The preference for real-time testing aligns with best practices and may have contributed to fewer errors during debugging. A minority of the students postponed testing until their implementations were complete, potentially increasing the later debugging effort. Unit testing was used rarely, and most of the students in all groups did not use this technique. The B.A.Sc. students reported the highest adoption, compared to only six M.Sc. students and one B.Sc. student (see Q8 in Figure 10). The low usage suggests that unit testing is not yet a standard practice among students, even at the postgraduate level, highlighting an area for improvement in programming education.

5.3. Challenges

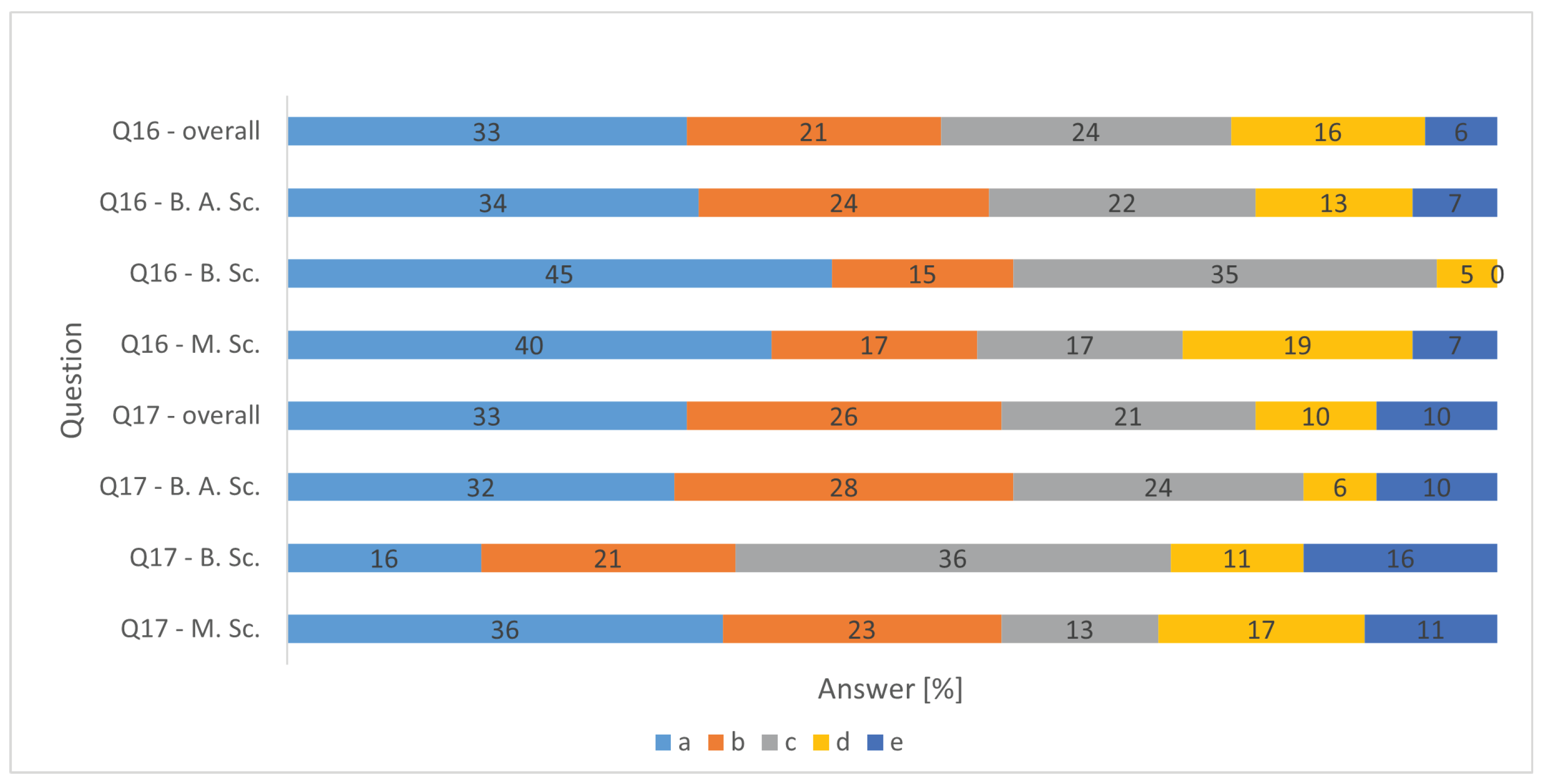

We anticipated that the students might face challenges during the implementation process, particularly in understanding and debugging specific parts of the pseudocode. To explore these difficulties, we asked the students to identify the sections of the code they found most difficult to understand and debug. Q16 in Figure 11 shows that interpolative coding (answer a) presented the most significant challenge for the students in all groups. The M.Sc. students reported the highest difficulty, with 21 responses, followed by the B.A.Sc. (16) and B.Sc. students (9), altogether comprising 33% of all the participants. Other challenging sections included prediction (answer c) and compression of the remainder (answer d). Similarly, Q17 in Figure 11 reveals that debugging interpolative coding (answer a) was especially problematic, with 33% of the students marking it as the hardest section. Debugging difficulties were also observed for prediction (answer c), although these challenges were distributed more evenly among the groups.

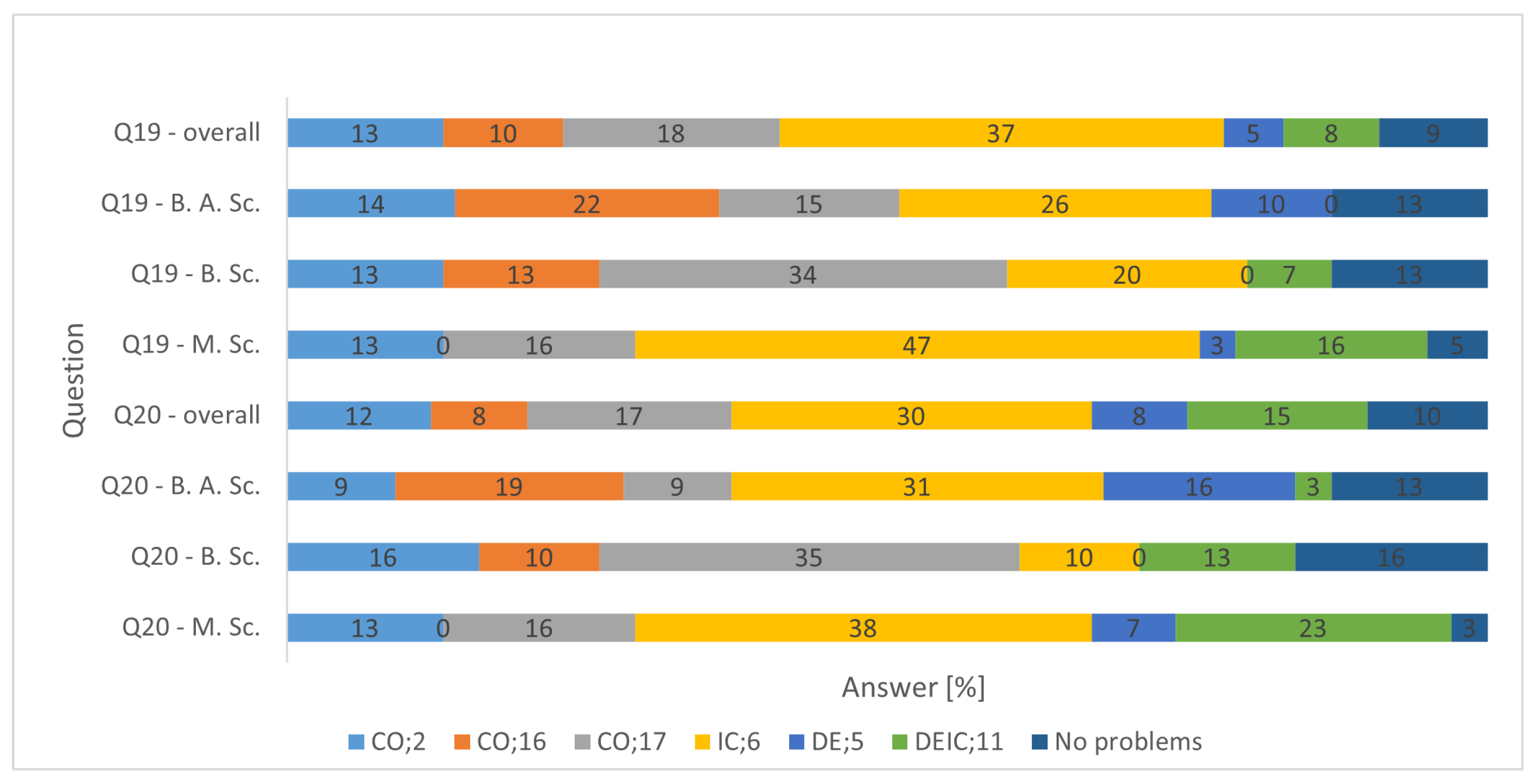

Figure 12 provides insight into the specific pseudocode lines that the students found most difficult to understand and debug. In Q19 in Figure 12, IC;6, corresponding to line 6 in Algorithm A3, emerged as the most difficult to understand, particularly among the M.Sc. (47% of the participants) and B.A.Sc. students (26% of the participants). This indicates that the functionality in this section of the pseudocode was conceptually complex. Similarly, CO;17, denoting line 17 in Algorithm A1, which represents the entire interpolative coding function, was also frequently identified as difficult to understand, especially by the M.Sc. students. CO;16 (see Algorithm A1, line 16), which deals with writing the file header, and CO;2 (see Algorithm A1, line 2), corresponding to the prediction function (Algorithm A2), were highlighted by the B.A.Sc. students as less intuitive. The B.Sc. students reported fewer problems overall, with many indicating that they had no major difficulties in understanding specific lines.

The debugging difficulties mirrored the understanding challenges, with IC;6 again being the most problematic line, accounting for 38% of all responses (see Q20 in Figure 12). This suggests that IC;6 presented both conceptual and practical challenges during the implementation. Furthermore, DE;5 (see Algorithm A4, line 5), which contains the entire interpolative decoding function (Algorithm A5), was marked as challenging, particularly by the M.Sc. students. CO;16 and CO;2 were also mentioned as debugging bottlenecks, with the B.A.Sc. students facing the most problems. As with understanding, the B.Sc. students reported considerably fewer debugging problems, with some indicating that they had no difficulty debugging specific lines. The inverse prediction function in decompression (Algorithm A6) caused no reported difficulties in understanding or implementation.

The consistent identification of IC;6, CO;17, and DE;5 as challenging highlights their inherent complexity in both understanding and debugging. These lines, representing entire functions or critical operations, such as file header writing or prediction, require deeper understanding and greater implementation accuracy. Recursion, however, did not represent a significant burden.

5.4. External Help

Q28 in Figure 13 highlights the adoption of generative AI tools, such as ChatGPT 4 [35], across the different groups of students. Most of the students (71%) reported using generative AI as a support tool for the implementation. This widespread use suggests that students at all levels recognised the value of generative AI for troubleshooting, generating code snippets, or clarifying parts of the pseudocode. Q27 in Figure 13 shows the reliance on code found in WEB repositories. This resource was particularly popular among the B.A.Sc. students, 67% of whom acknowledged its use. The M.Sc. and B.Sc. students also relied on WEB-sourced code. The lower adoption rate among the B.Sc. students could indicate limited awareness of, or hesitancy towards, using such resources. In contrast, the B.A.Sc. students, with their background in applied technical education, might be more experienced in incorporating online resources into their work.

These results reflect the increasing reliance on external tools like generative AI and WEB resources in programming tasks. The widespread use of generative AI shows its potential as a valuable educational aid, particularly for debugging and understanding complex pseudocode. However, the variability in adoption between the groups suggests different levels of comfort with, or awareness of, these tools. Educators might consider formal training on how to use AI tools and WEB-sourced code effectively and ethically to enhance the students’ problem-solving skills and efficiency.

5.5. Self-Assessment

The histogram in Figure 14 presents the distribution of the students’ self-assessed performance ratings, segmented by academic level. The ratings range from 1 (complete failure) to 5 (complete functionality without errors). No student rated their performance as 1, indicating that all the participants implemented the algorithm successfully to some extent. Only four students rated their implementation as barely functional, and 12 students rated it as functional with significant errors. A substantial proportion of the participants rated their implementations as mostly functional with minor potential problems. The largest group of respondents, more than half of the participants, rated their performance as 5, representing fully functional and error-free solutions. The distribution in Figure 14 highlights a trend in which the postgraduate students demonstrated the highest self-confidence in their implementations. The data suggest an upward trajectory in self-assessed performance with increasing academic level.

The self-assessment question was followed by an open question asking what the students would change if they had to implement the pseudocode again, to which they gave short written answers. A considerable number of the students mentioned the importance of integrating testing into their workflow. Many reflected that they would test their code more frequently and systematically. This suggests that the students recognised the value of continuous testing in identifying and addressing issues earlier in the development process, which could reduce debugging time and improve the overall outcomes. Another prevalent theme was the need to understand the problem or pseudocode better before beginning the implementation. Many students felt unprepared or had rushed into the implementation without fully understanding the requirements of the task. This lack of preparation may have led to inefficiencies and errors that more detailed planning could have avoided. The choice of programming language also appeared to be a critical factor in the students’ reflections. Many expressed a preference for switching to a language like Python, which they found easier to use than languages such as C++ or Rust. Time management was another recurring issue: the students frequently mentioned starting the task earlier or dedicating more time to it. This suggests that some students may have underestimated the complexity of the task or struggled with procrastination, which affected their ability to complete the assignment optimally. In addition, many students recognised the importance of external help and resources, and some answers indicated that seeking support, either from assistants or from online resources, could have made the task more manageable. Similarly, several students highlighted the need to follow the instructions more carefully. Finally, better code organisation and optimisation was a common reflection: many students mentioned improving modularity, reducing redundancy, and optimising specific functions. This feedback highlights the importance of teaching good coding practices that improve the maintainability and readability of code.

Figure 15 presents the students’ feedback on a set of questions, providing a comprehensive view of their perceptions of the task, the algorithm, and the usefulness of their prior knowledge. The students responded on a scale from 1 to 5, where 1 indicated strong disagreement, 2 disagreement, 3 a neutral stance, 4 agreement, and 5 strong agreement. This scale allowed for a nuanced picture of their experiences and evaluations.
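As an illustration of how per-group distributions such as those in Figure 15 can be tabulated, the following Python sketch counts the ratings of one question for each academic group and reports the share of agreement (ratings 4 or 5). The function name and the example responses are hypothetical placeholders, not the questionnaire data.

```python
from collections import Counter

# Scale used in the questionnaire: 1 = strong disagreement ... 5 = strong agreement.
SCALE = range(1, 6)


def summarise_likert(responses_by_group):
    """Return, per group, the count of each rating 1..5 and the share of ratings 4 or 5."""
    summary = {}
    for group, responses in responses_by_group.items():
        counts = Counter(responses)
        if any(r not in SCALE for r in counts):
            raise ValueError(f"rating outside 1..5 in group {group!r}")
        n = len(responses)
        summary[group] = {
            "counts": [counts[r] for r in SCALE],
            "agree_share": (counts[4] + counts[5]) / n if n else 0.0,
        }
    return summary


# Hypothetical responses to a single question (not the study's data).
example = {"B.Sc.": [3, 3, 4, 2, 5], "M.Sc.": [5, 4, 5, 5]}
for group, s in summarise_likert(example).items():
    print(group, s["counts"], f"{s['agree_share']:.0%}")
```

Reporting the share of 4 and 5 ratings per group corresponds to the "agreed or strongly agreed" statements used in the discussion of Figure 15.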

The responses in Figure 15 indicate that the students generally found the algorithm understandable (Q29); most of the B.A.Sc. and M.Sc. students agreed or strongly agreed with this assessment. When asked whether the algorithm was quick to implement (Q30), a substantial proportion of the B.A.Sc. and M.Sc. students rated it positively (4 or 5), suggesting that the more experienced students may have found it efficient to implement. However, a notable proportion of the students rated it neutrally, indicating that the task was not universally perceived as straightforward. Responses to Q31, concerning the complexity of the algorithm, varied. The M.Sc. students showed the highest concentration of neutral responses, indicating a perceived moderate level of complexity, while the B.A.Sc. and B.Sc. students were divided between neutral and slightly positive ratings. Most of the students at all levels agreed or strongly agreed (4 or 5) that the assignment demonstrated the lossless image compression process effectively (Q32), which emphasises the effectiveness of the task in achieving its educational objectives. The responses to Q33, which asked whether the assignment taught them the interpolative coding process, were notable: the M.Sc. students rated it overwhelmingly highly, showing that they valued the learning experience, the B.A.Sc. students also showed strong agreement, and the B.Sc. responses were slightly more varied.

Q34 in Figure 15 shows that prior knowledge of data structures was considered useful, especially among the M.Sc. students, who rated it very positively. The B.Sc. students were more neutral, suggesting that their knowledge of data structures may not have been as extensive or applicable. As with data structures, the usefulness of algorithmic knowledge (Q35) was rated highly by the B.A.Sc. and M.Sc. students, the majority of whom indicated that it was beneficial, whereas the B.Sc. responses were distributed more evenly, with some students finding it less relevant. For the usefulness of prior knowledge of data compression (Q36), the M.Sc. students gave the highest ratings, reflecting their more advanced preparation. The B.A.Sc. students also found it helpful, while the B.Sc. responses were more neutral, indicating varying levels of familiarity.

The task successfully demonstrated the concepts of lossless image compression and interpolative coding, which was particularly beneficial to the B.A.Sc. and M.Sc. students. Prior knowledge, especially of data structures, algorithms, and compression, was rated as a considerable advantage by the M.Sc. and B.A.Sc. students, while the B.Sc. students expressed a more neutral stance, suggesting the need for additional preparation at this level. The FLoCIC algorithm was generally perceived as understandable and moderately complex, although opinions varied on how quickly it could be implemented. From a pedagogical perspective, the task achieved its learning objectives effectively, but highlighted the need for tailored support for less experienced students.
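The interpolative coding process referred to in Q33 is recursive, which is one reason why several students found it hard to debug. The Python sketch below illustrates the general idea of binary interpolative coding for a non-decreasing integer sequence, such as cumulative sums of non-negative values, under stated assumptions: the first and last elements are known to the decoder, the middle element is coded within the range bounded by its neighbours using a classical binary code of ⌈log2(range size)⌉ bits, and the two halves are then processed recursively. It is a simplified illustration, not the FLoCIC pseudocode itself; the function names are hypothetical, and bits are collected in a Python list rather than written to a file.

```python
def bits_needed(size):
    """Bits of a classical binary code for `size` distinct values: ceil(log2(size))."""
    return (size - 1).bit_length()  # 0 bits when size == 1


def ic_encode(c, low, high, out):
    """Append to `out` the bits for c[low+1 .. high-1]; c[low] and c[high] are known."""
    if high - low < 2 or c[low] == c[high]:
        # Nothing in between, or (non-decreasing input) everything in between equals c[low].
        return
    mid = (low + high) // 2
    width = bits_needed(c[high] - c[low] + 1)
    value = c[mid] - c[low]  # offset of c[mid] inside [c[low], c[high]]
    out.extend((value >> i) & 1 for i in reversed(range(width)))
    ic_encode(c, low, mid, out)   # left half first ...
    ic_encode(c, mid, high, out)  # ... then right half


def ic_decode(bits, c, low, high, pos=0):
    """Inverse of ic_encode: fill c[low+1 .. high-1] and return the next bit position."""
    if high - low < 2:
        return pos
    if c[low] == c[high]:
        for i in range(low + 1, high):
            c[i] = c[low]
        return pos
    mid = (low + high) // 2
    width = bits_needed(c[high] - c[low] + 1)
    value = 0
    for b in bits[pos:pos + width]:
        value = (value << 1) | b
    c[mid] = c[low] + value
    pos = ic_decode(bits, c, low, mid, pos + width)
    return ic_decode(bits, c, mid, high, pos)


# Usage with a hypothetical non-decreasing sequence (e.g. cumulative sums).
seq = [0, 3, 3, 7, 12, 12, 12, 20]
bits = []
ic_encode(seq, 0, len(seq) - 1, bits)
restored = [seq[0]] + [0] * (len(seq) - 2) + [seq[-1]]
ic_decode(bits, restored, 0, len(restored) - 1)
assert restored == seq
```

The decoder must visit the sub-ranges in exactly the same order as the encoder; swapping the two recursive calls on only one side is a typical source of errors that are hard to trace.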

5.6. Statistical Analysis

5.6.1. Pearson Correlation Coefficient

Figure 16 shows the Pearson correlation coefficients calculated between the questions with numerical answers. As illustrated in Figure 16, the questions Q2–Q6 are positively correlated with one another. These questions refer to reading and understanding the pseudocode, writing the code, debugging, completion time, and running the code to test, identify, and fix errors. This suggests that students who had difficulties understanding the algorithm also faced challenges in implementing it (writing and debugging the code). This interpretation is further supported by the strongest positive correlation within this group, between Q2 (reading and understanding the pseudocode) and Q5 (completion time). Another very strong positive correlation is observed between Q35 and Q36, which refer to prior knowledge of general algorithms and of compression algorithms, respectively. Adding Q34 (prior knowledge of data structures) to Q35 and Q36 yields another group of questions, which is negatively correlated with the group Q2–Q6 in Figure 16. This indicates that greater prior knowledge is associated with fewer difficulties during implementation and shorter task completion times. The strongest negative correlation in Figure 16 was observed between Q29 and Q31, which address the ease of understanding the algorithm and the complexity of its implementation, respectively; this outcome is consistent with expectations. Expected results can also be observed in Figure 16 for Q7a and Q7b, which represent the interpolative encoding and decoding times; Q7d and Q7e, the residual compression and decompression times; Q25a and Q25b, the numbers of lines of code for interpolative encoding and decoding; and Q25d and Q25e, the numbers of lines of code for residual compression and decompression. All of these pairs are strongly positively correlated.
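For readers who wish to reproduce a matrix such as the one in Figure 16, a minimal, dependency-free sketch of the Pearson coefficient is given below, together with a helper that computes it for every pair of questions. It assumes complete numeric answers (no missing values); the question keys and values are hypothetical. In practice, `scipy.stats.pearsonr(x, y)` also returns a p-value for each coefficient.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient r between two equally long numeric sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))  # co-deviation
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("r is undefined for a constant sequence")
    return sxy / sqrt(sxx * syy)


def correlation_matrix(columns):
    """Pairwise Pearson r for a dict {question: list of numeric answers}."""
    keys = list(columns)
    return {(a, b): pearson(columns[a], columns[b]) for a in keys for b in keys}


# Hypothetical answers of five students (e.g. hours spent); not the survey data.
answers = {"Q2": [1, 2, 2, 4, 5], "Q5": [3, 5, 6, 9, 12]}
print(round(correlation_matrix(answers)[("Q2", "Q5")], 3))
```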

5.6.2. Cramér’s V Test

Cramér’s V was used to measure the strength of association between pairs of categorical variables, in order to assess whether a meaningful relationship existed rather than random variation. A bias-corrected Cramér’s V analysis (see Figure 17) identified several coherent groups of questions. One group consists of Q24 and Q25, which refer to the total number of lines of code and the number of lines in individual sections of the implemented algorithm. Q26, which represents the selected programming language, can also be included in this group, which suggests that the number of lines is strongly associated with the chosen programming language. Another highly coherent group is formed by Q29–Q36, which capture the perception of the algorithm in terms of comprehensibility, clarity, complexity, and completeness (already discussed in Figure 15). Students who considered the algorithm clear (Q29) also tended to evaluate it as less complex (Q31 in Figure 17) and as demonstrating the compression process well (Q32). Q35 and Q36 further indicate that prior knowledge was strongly associated with how the students implemented and evaluated the task, as already observed in Figure 16. Furthermore, Q27 and Q28 in Figure 17, which represent the use of code from the WEB and of generative AI, also show a strong association with this group of questions, and the association was even stronger for generative AI than for code from the WEB. This suggests that the students who relied on additional assistance evaluated the task more positively. The high Cramér’s V values in Figure 17 between Q26, the programming language chosen for the implementation, and questions Q27–Q36 highlight the role of the selected language in the positive evaluation of the task. A similar pattern can be observed in Figure 17 for Q22 and Q23, representing years of programming experience and current employment status. Furthermore, Q19 and Q20, which refer to lines of code that were difficult to understand and implement, also show high Cramér’s V values.
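The section does not state which bias correction was applied. The sketch below assumes the widely used correction proposed by Bergsma, which subtracts the expected bias from φ² = χ²/n and also corrects the numbers of rows and columns; it is an illustration of that technique, not necessarily the exact procedure used for Figure 17. The function name and the example categories are hypothetical.

```python
from collections import Counter
from math import sqrt


def cramers_v_corrected(x, y):
    """Bias-corrected Cramér's V (Bergsma-style correction) for two categorical sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    rows, cols = list(dict.fromkeys(x)), list(dict.fromkeys(y))  # categories, in order seen
    r, k = len(rows), len(cols)
    if r < 2 or k < 2:
        raise ValueError("each variable needs at least two categories")
    observed = Counter(zip(x, y))
    row_tot, col_tot = Counter(x), Counter(y)
    # Pearson chi-squared statistic of the r x k contingency table.
    chi2 = 0.0
    for a in rows:
        for b in cols:
            expected = row_tot[a] * col_tot[b] / n
            chi2 += (observed[(a, b)] - expected) ** 2 / expected
    phi2 = chi2 / n
    # Bias corrections of phi^2 and of the table dimensions.
    phi2_corr = max(0.0, phi2 - (k - 1) * (r - 1) / (n - 1))
    r_corr = r - (r - 1) ** 2 / (n - 1)
    k_corr = k - (k - 1) ** 2 / (n - 1)
    denom = min(k_corr - 1, r_corr - 1)
    return sqrt(phi2_corr / denom) if denom > 0 else 0.0


# Hypothetical example: a language label and a variable fully determined by it.
langs = ["Python", "C++", "Rust"] * 4
print(round(cramers_v_corrected(langs, [len(s) for s in langs]), 3))
```

Unlike Pearson’s r, Cramér’s V only measures the strength of an association and carries no sign, so statements about the direction of a relationship have to come from other analyses.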

5.6.3. T-Test

The t-test analysis in Table 2 revealed several statistically significant (p < 0.05) differences between undergraduate (UG, combined B.A.Sc. and B.Sc.) and postgraduate (PG, M.Sc.) students. The PG students rated their task performance higher (Q1) and invested more time in debugging (Q4) and in completing the entire task (Q5). They also executed a greater number of program runs (Q6), indicating a more iterative and systematic approach to testing. Furthermore, the PG students allocated more effort to specific algorithmic phases, such as interpolative encoding (Q7a), which likely reflects their attempts to implement more complex binary codes (truncated binary or FELICS codes), and reported a higher overall level of programming experience (Q22). They also perceived the compression algorithm as easier to understand (Q29). In contrast, the UG students rated the task as requiring more of their programming skills (Q12), suggesting that they considered the exercise more demanding relative to their current knowledge and abilities. Taken together, these results suggest that the PG students approached the assignment more thoroughly and with greater confidence, while the UG students experienced the task as more challenging, reflecting the differences in prior knowledge and programming experience between the two groups.
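The t-test variant is not specified in this section. The sketch below assumes Welch’s unequal-variance t-test, a common choice when two groups differ in size and variance, and computes the t statistic and the Welch-Satterthwaite degrees of freedom with the standard library only. The p-value would then be read from the t distribution; `scipy.stats.ttest_ind(a, b, equal_var=False)` performs the whole test in one call. The function name and the ratings are hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom for two samples."""
    if len(a) < 2 or len(b) < 2:
        raise ValueError("each group needs at least two observations")
    # Squared standard errors of the two means (sample variance uses n - 1).
    va, vb = variance(a) / len(a), variance(b) / len(b)
    t = (mean(a) - mean(b)) / sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Hypothetical self-assessment ratings (Q1); not the study's data.
pg = [5, 5, 4, 5, 4]
ug = [3, 4, 2, 5, 3, 4]
t, df = welch_t(pg, ug)
print(f"t = {t:.2f}, df = {df:.1f}")
```

Because Likert ratings are ordinal, a rank-based alternative such as the Mann-Whitney U test is sometimes reported alongside the t-test.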

6. Discussion

The results of the questionnaire provide an extensive view of the students’ perceptions, challenges, and learning outcomes regarding the implementation of the FLoCIC algorithm [5]. These findings align with existing research and highlight key areas for improvement in instructional strategies. The B.A.Sc. and M.Sc. students rated prior knowledge of data structures, algorithms, and compression concepts as highly useful during the implementation process (Q34–Q36 in Figure 15). This finding is consistent with research by Su et al. [36], which showed that students with stronger prior knowledge tend to achieve better learning outcomes. The study also highlights the importance of adjusting instructional approaches to meet the diverse needs of students, suggesting that additional preliminary support should be provided for less experienced students. It is also important to note that the study programmes at our Faculty are open to all candidates who meet the formal admission criteria, including those without prior programming experience. Similarly, the M.Sc. programme is accessible to students from other academic disciplines and institutions, including international candidates who have completed a B.Sc. in a relevant field (mathematics, physics, or another technical discipline). These students do not have the same fundamental knowledge as those with a Computer Science background, which may explain outliers in some responses owing to individual differences in prior experience and knowledge. These findings are consistent with the literature, where several studies have consistently demonstrated the importance of prior programming knowledge in predicting student success in foundational Computer Science courses. Bui et al. [37] observed a persistent achievement gap of approximately one grade level between students with any prior programming experience and those without. Similarly, Uhanova et al. [38] confirmed a positive correlation between prior programming knowledge and academic performance among first-year Computer Science students. Veerasamy et al. [39] further showed that prior programming knowledge was a statistically significant predictor of final exam performance. Finally, Lau and Yuen [40] identified previous programming experience, together with self-efficacy and knowledge organisation, as key predictors of programming performance.

The feedback revealed recurring challenges, including debugging recursive functions, implementing modular code, and understanding specific components of the pseudocode, such as interpolative coding and compression functions, which were identified as particularly difficult to understand and debug (Q19 and Q20 in Figure 12). In particular, IC;6, which represented the greatest challenge for the students, involved writing binary-represented values to a file. The students could choose between several options, such as classical binary codes, truncated binary codes [31], and FELICS codes [34], but the lack of detailed explanations or pseudocode for these methods added to the difficulty. This also indicates that students struggle to implement complex concepts independently without clear guidance, and suggests that, in the absence of pseudocode, they were also less able to rely on generative AI to produce the code. Future improvements in pseudocode design should therefore focus on simplifying or better explaining these critical areas, to facilitate both understanding and debugging. In addition, perceptions of the implementation duration (Q30 in Figure 15) and of complexity (Q31 in Figure 15) varied. The longer implementation times among the more experienced students could be attributed to their attempts to implement the most challenging option, FELICS codes. Without detailed pseudocode, the students had to work out key parts of the algorithm themselves, which likely led to longer development and debugging times. The more experienced students were generally more inclined to take on this challenge, whereas the less experienced students selected simpler encoding methods. Choosing the more demanding option likely led to increased debugging difficulties and more frequent runs of the program, contributing to the observed differences in time and complexity.
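IC;6 left the choice of code to the students. As a hedged illustration of one of the options, the sketch below implements truncated binary codes [31] for an alphabet of n values: with k = ⌊log2 n⌋ and u = 2^(k+1) - n, the first u values receive k-bit codewords and the remaining values (shifted by u) receive (k + 1)-bit codewords. A minimal MSB-first bit writer and reader are included. All class and function names are hypothetical and do not come from the FLoCIC pseudocode, and FELICS codes [34] are not shown. Writing the result to a file would amount to something like `open(path, "wb").write(writer.to_bytes())`, where `path` is a placeholder.

```python
class BitWriter:
    """Collects bits MSB-first and packs them into bytes (last byte zero-padded)."""

    def __init__(self):
        self.bits = []

    def write(self, value, width):
        self.bits.extend((value >> i) & 1 for i in reversed(range(width)))

    def to_bytes(self):
        padded = self.bits + [0] * (-len(self.bits) % 8)
        return bytes(
            int("".join(map(str, padded[i:i + 8])), 2) for i in range(0, len(padded), 8)
        )


class BitReader:
    """Reads bits MSB-first from a list of 0/1 values."""

    def __init__(self, bits):
        self.bits, self.pos = bits, 0

    def read(self, width):
        value = 0
        for _ in range(width):
            value = (value << 1) | self.bits[self.pos]
            self.pos += 1
        return value


def truncated_binary_encode(writer, v, n):
    """Write v (0 <= v < n) with a truncated binary code for an alphabet of n values."""
    k = n.bit_length() - 1      # floor(log2 n)
    u = (1 << (k + 1)) - n      # number of short (k-bit) codewords
    if v < u:
        writer.write(v, k)
    else:
        writer.write(v + u, k + 1)


def truncated_binary_decode(reader, n):
    """Read one value written by truncated_binary_encode for the same n."""
    k = n.bit_length() - 1
    u = (1 << (k + 1)) - n
    x = reader.read(k)
    if x < u:
        return x
    return ((x << 1) | reader.read(1)) - u


# Usage: code all values of a 5-symbol alphabet and read them back.
writer = BitWriter()
for v in range(5):
    truncated_binary_encode(writer, v, 5)
reader = BitReader(writer.bits)
assert [truncated_binary_decode(reader, 5) for _ in range(5)] == list(range(5))
```

When n is a power of two, u equals n and the code degenerates to the classical fixed-width binary code, so the same functions also cover that option.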

We assume that, owing to the lack of detailed explanations or pseudocode for implementing the more complex binary writing algorithms, many students relied on external resources, such as generative AI (Q28 in Figure 13) or code from the WEB (Q27 in Figure 13), to help with their implementations. The fact that most of the M.Sc. students used generative AI (Q28 in Figure 13), a higher share than among the undergraduate students, could indicate that the postgraduate students have a better understanding of how to integrate AI tools into their workflow effectively. Although such help can be valuable for improving productivity, its use should be guided to ensure that students develop a complete understanding of the underlying concepts rather than relying entirely on external solutions. Despite this assistance, many students still did not complete the task without errors.

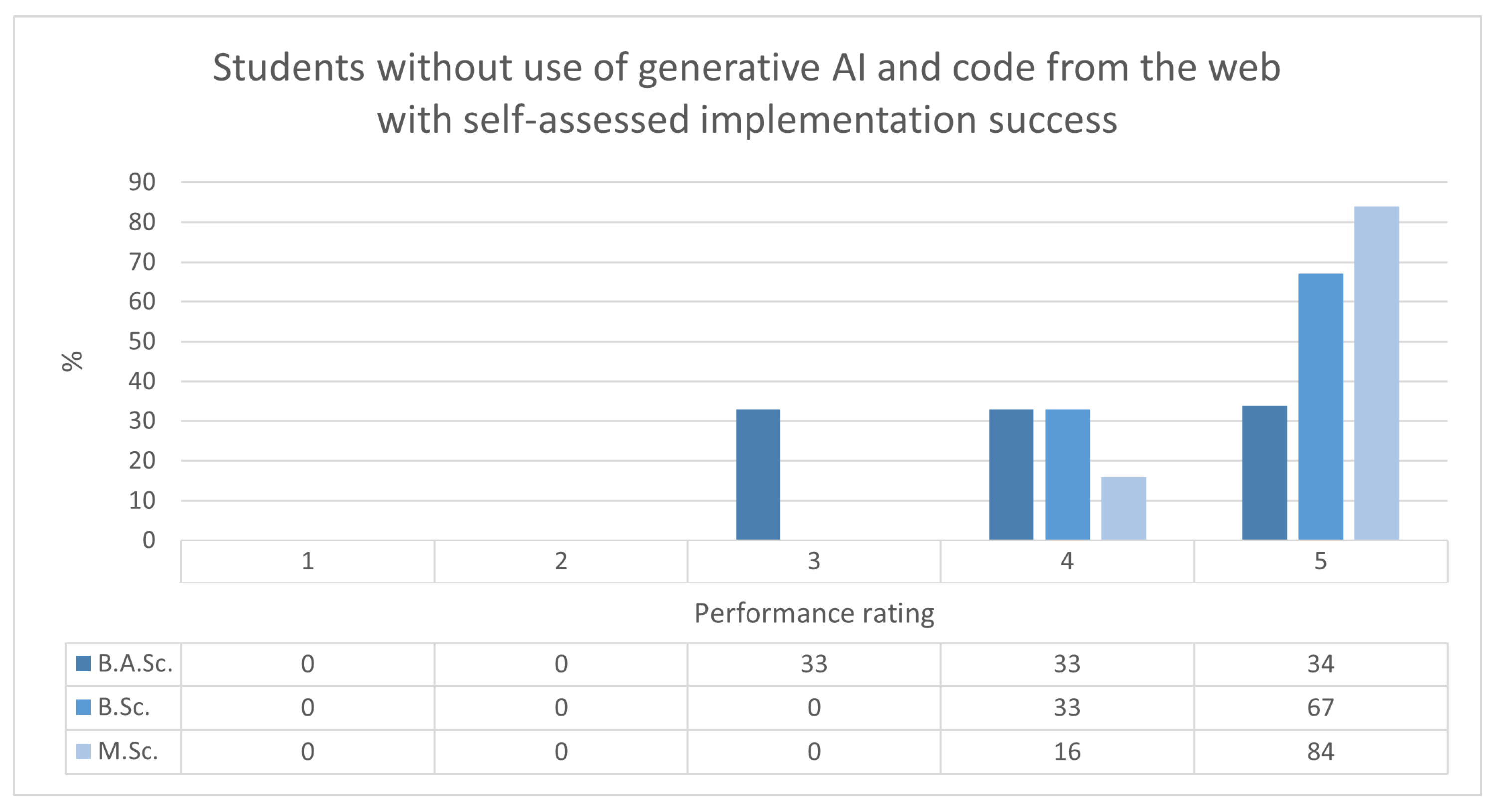

Figure 18 illustrates that, even among those who used code from the WEB and generative AI, a considerable number did not achieve a fully functional implementation. This finding highlights the importance of understanding the underlying algorithm and its components, as external tools alone are insufficient for a successful implementation without a solid understanding of the content. Moreover, Figure 19 shows that many students who did not rely on generative AI or code from the WEB still reported high levels of implementation success. In particular, a majority of the M.Sc. students in this group rated their implementation with the highest score, suggesting that prior experience and conceptual understanding of algorithmic principles can compensate for the absence of external support. This supports the view that understanding, rather than external help (such as generative AI), is the primary factor in successful algorithmic problem solving.

The increased use of generative AI among students poses pedagogical risks, as over-reliance on such tools can bypass productive and critical thinking and obscure the algorithmic reasoning underlying the problem to be solved. We therefore propose guidelines aligned with Computer Science education practices, such as transparent declarations of AI use, the requirement to justify AI-generated code, and assessment designs that include code reading, version-controlled development, and brief oral explanations of the implemented algorithms. These measures aim to retain the learning benefits of these tools while discouraging their use for solving problems superficially, without understanding the actual problem.

These findings indicate that, while the algorithm balances complexity and clarity effectively, its implementation may require further assistance to accommodate less experienced students. Many students expressed a wish to make better use of debugging tools (Figure 9) and a desire for more systematic testing practices (Figure 10, Q9). These reflections highlight the importance of teaching students effective debugging strategies and promoting incremental development to identify and address errors earlier in the implementation process. This aligns with the work presented in [41], which highlighted the importance of developing effective learning strategies to tackle problem-solving tasks. By developing a systematic debugging mindset, students can approach challenges with confidence and efficiency, ultimately improving their problem-solving skills and results. The students’ reflections also indicate a strong interest in improving their approach if given another opportunity, with many suggesting earlier preparation, continuous testing, and better time management (Figure 7). These insights provide a foundation for future educational improvements, including structuring complex concepts, offering additional debugging resources, and integrating methodical training in best programming practices.

Several measures could be implemented to address these challenges. One option is to provide students with an executable version of the algorithm, allowing them to test the correctness of their solutions on their own input data. In addition, offering examples with correct outputs for specific code segments could support step-by-step verification of individual functions. A further approach would be to emphasise explanations and practices aligned with the principles of test-driven development [42], encouraging the students to validate their implementations through systematic testing. However, it should be noted that the undergraduates were in the final year of their studies and the postgraduates in the first year of theirs, so both groups are expected to already possess the organisational and methodological skills needed to adopt such practices independently.
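One concrete form of the step-by-step verification mentioned above is a round-trip test, in which every encoding stage is checked against its decoder on many inputs, plus a few examples with outputs fixed in advance, before the stages are combined. The sketch below uses Python’s built-in `unittest` module and, to stay self-contained, takes a classical fixed-width binary code (one of the options in IC;6) as the component under test; `encode_fixed` and `decode_fixed` are hypothetical names, and the same pattern could be applied to the interpolative or residual coding functions of a student’s own implementation.

```python
import random
import unittest


def encode_fixed(values, width):
    """Classical binary code: each value in [0, 2**width) becomes `width` bits, MSB first."""
    bits = []
    for v in values:
        if not 0 <= v < (1 << width):
            raise ValueError(f"{v} does not fit in {width} bits")
        bits.extend((v >> i) & 1 for i in reversed(range(width)))
    return bits


def decode_fixed(bits, width):
    """Inverse of encode_fixed."""
    if width == 0 or len(bits) % width:
        raise ValueError("bit count is not a multiple of the code width")
    values = []
    for start in range(0, len(bits), width):
        v = 0
        for b in bits[start:start + width]:
            v = (v << 1) | b
        values.append(v)
    return values


class RoundTripTest(unittest.TestCase):
    def test_known_output(self):
        # An example whose correct output is fixed in advance.
        self.assertEqual(encode_fixed([5, 0], 3), [1, 0, 1, 0, 0, 0])

    def test_random_round_trip(self):
        rng = random.Random(42)  # fixed seed, so any failure is reproducible
        for _ in range(200):
            width = rng.randint(1, 12)
            values = [rng.randrange(1 << width) for _ in range(rng.randint(0, 50))]
            self.assertEqual(decode_fixed(encode_fixed(values, width), width), values)
```

Saved to a file, the tests can be run with `python -m unittest <file>`; writing such tests before the corresponding function is the core habit that test-driven development promotes.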

Finally, the assignment was successful in achieving its educational objectives, as most of the students agreed that it provided a clear demonstration of lossless image compression and the interpolative coding process (Figure 15, Q32 and Q33). The students typically found the FLoCIC algorithm understandable, as demonstrated by their high scores in this category (Figure 15, Q29). In future research, we plan to complement the surveys with in-depth follow-up interviews with the students, in order to identify more specific reasons for certain deviations and findings observed in the questionnaire results. In addition, future surveys will pay much greater attention to the role of AI, as this is currently a highly relevant topic in education. The outcomes of this work should further strengthen the case for practical tasks that connect theoretical concepts with practical applications.