Augmented Reality in Dental Extractions: Narrative Review and an AR-Guided Impacted Mandibular Third-Molar Case

Abstract

1. Introduction

2. Materials and Methods

2.1. Search Strategy

2.2. Inclusion and Exclusion Criteria

2.3. Article Selection Process

2.4. Data Extraction and Quality Assessment

2.5. Surgical Workflow of the Case

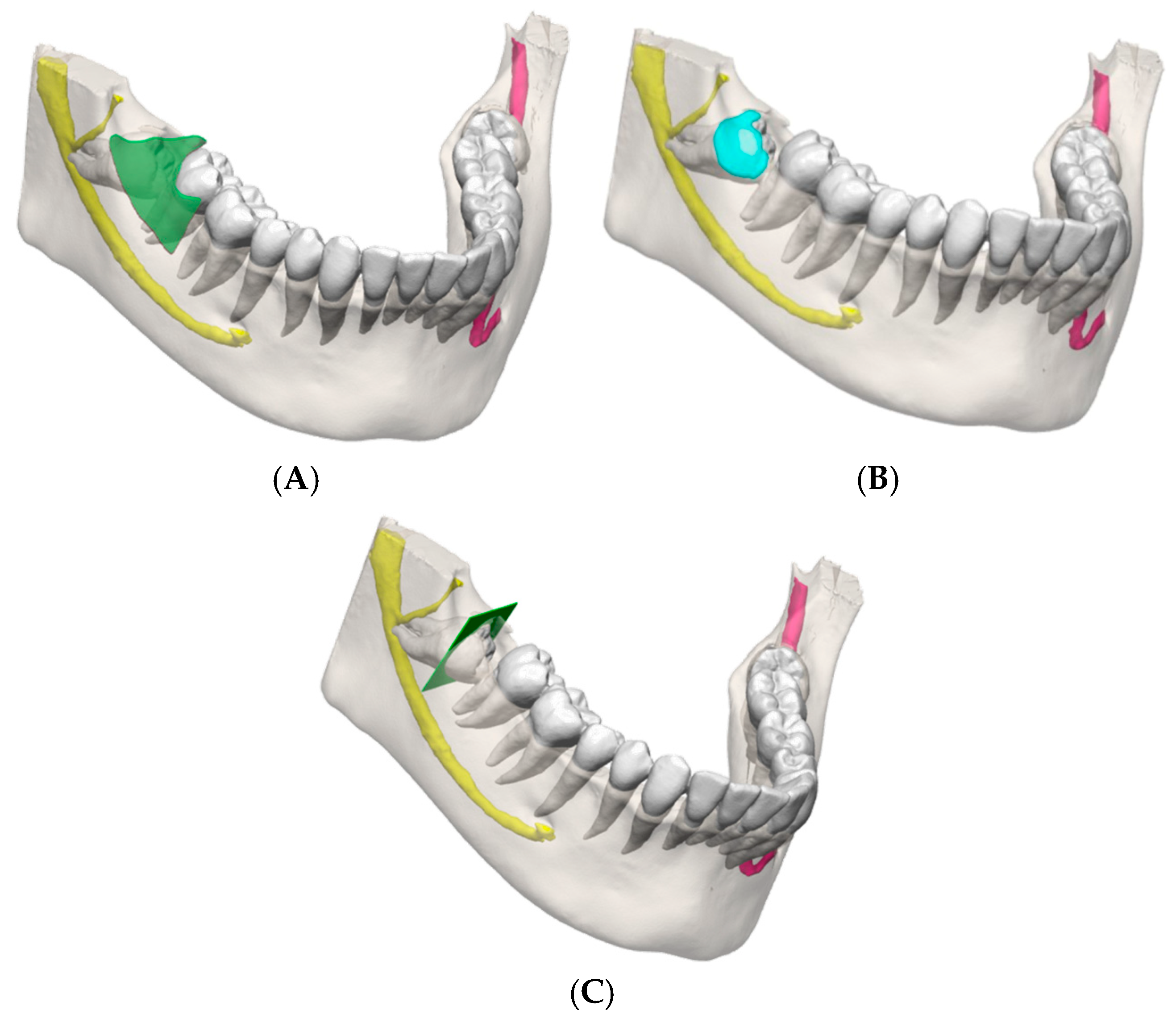

2.5.1. Digital Workflow

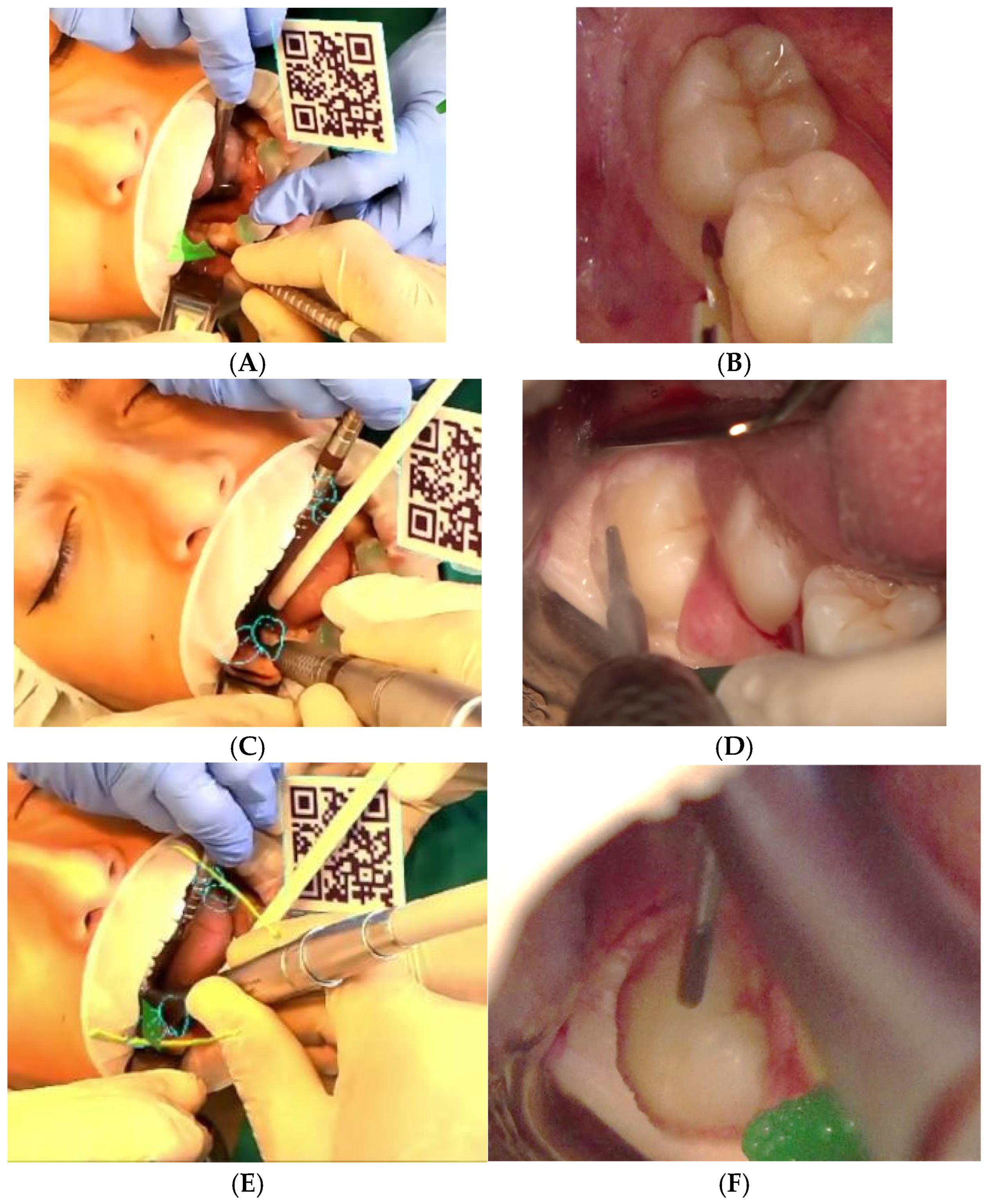

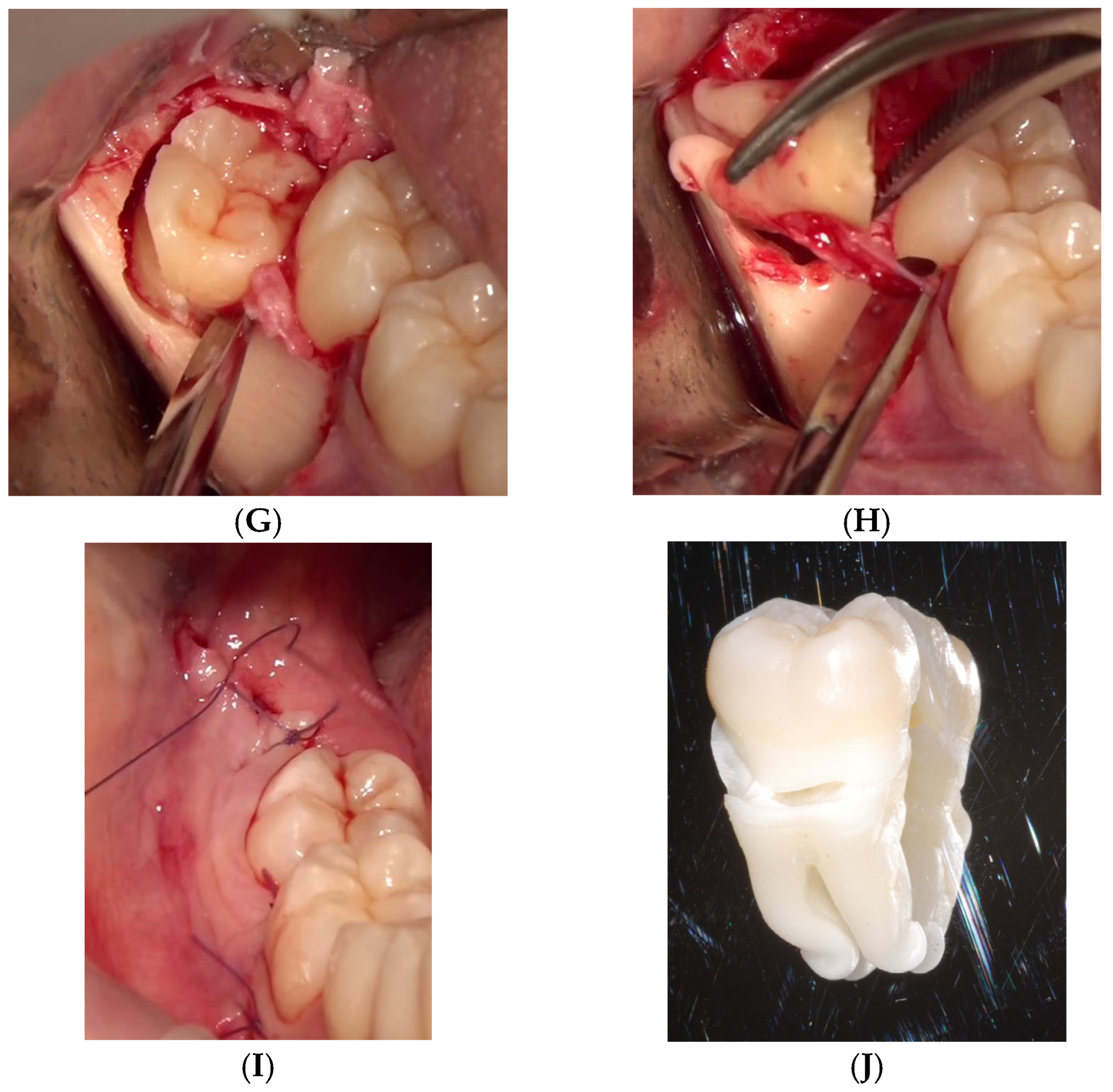

2.5.2. Surgical Execution

3. Results

3.1. Literature Synthesis

| Title | Authors | Year | Study Type | Extraction Context | AR Modality | Key Findings |
|---|---|---|---|---|---|---|
| Vision-based marker-less registration using stereo vision and an augmented reality surgical navigation system: a pilot study | Suenaga et al. [28] | 2015 | Pilot volunteer | Impacted third molar (visualised) | Marker-less stereo-vision AR | <1 mm registration error; real-time overlay of canal + roots |
| Video see-through augmented reality for oral and maxillofacial surgery | Wang et al. [29] | 2017 | Proof of concept (phantom + volunteer) | Impacted wisdom tooth and mandibular canal visualised (no extraction performed) | Video see-through AR | ~1 mm overlay error; real-time workflow |
| A proof-of-concept augmented reality system in oral and maxillofacial surgery | Pham Dang et al. [30] | 2021 | Proof of concept (phantom, pig jaw, 1 patient) | Bone window and removal of a maxillary cyst/impacted tooth root; visualises nerve and foramina | Marker-less screen-based AR using dental-cusp landmarks | Accurate alignment; successful cyst removal |
| Computer-Assisted Pre-operative Simulation & AR for Extraction of Impacted Supernumerary Teeth: A Clinical Case Report of Two Cases | Suenaga et al. [31] | 2023 | Case report (2 patients) | Deeply impacted supernumerary maxillary incisors | Marker-less video see-through AR (CT + intra-oral-scan fused) | Precise localisation; atraumatic removals; no complications; demonstrates clinical feasibility |
| Mixed reality for extraction of maxillary mesiodens | Koyama et al. [34] | 2023 | Case series (3 paediatric patients) | Mesiodens (supernumerary maxillary incisors) extractions | Microsoft HoloLens-based mixed reality (CarnaLife Holo; marker-less volume rendering) | Boys aged 7–11; mean operative time 32 min; palatal approach in 2 cases, labial in 1; minimal bleeding, no intra/postoperative complications; shared holograms among surgeons |
| AR-Assisted Surgical Exposure of an Impacted Tooth: a pilot study | Macrì et al. [32] | 2023 | Pilot single case (1 patient) | Impacted maxillary canine (exposure) | Marker-less, video-based AR (VisLab; single camera, monitor view) | Targeted flap and osteotomy; uneventful healing |
| Mixed reality-based technology to visualise impacted teeth: proof of concept | Fudalej et al. [36] | 2024 | Proof of concept (demo + survey) | Impacted-canine planning | HoloLens 2 mixed reality | >90 % clinicians found MR beneficial |
| AR-Guided Extraction of Fully Impacted Lower Third Molars | Rieder et al. [35] | 2024 | Pilot (2 cadaver + 4 patients) | Fully impacted lower third molars | HoloLens 2 CBCT overlay | Set-up 166 s; surgery 21 min; SUS ≈ 79 |
| Marker-less AR-assisted surgery for resection of a dentigerous cyst in the maxillary sinus | Suenaga et al. [33] | 2024 | Case report | Dentigerous cyst + ectopic wisdom tooth | Marker-less HUD AR | Conservative window; complete enucleation |
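Two of the quantitative outcomes in the table, registration error in millimetres and the System Usability Scale (SUS) score, follow simple conventions that can be sketched in a few lines. The Python below is a minimal illustration only, not the method used in any of the cited studies: the function names and sample values are hypothetical. It assumes the standard ten-item SUS questionnaire scored on a 1 to 5 scale, and it assumes registration error is summarised as the mean Euclidean distance between paired overlay and reference points.

```python
import math


def sus_score(responses):
    """Return the 0-100 SUS score for ten Likert responses (each 1-5)."""
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("SUS needs ten responses, each an integer from 1 to 5")
    # Items 1, 3, 5, 7, 9 (even indices) are positively worded: contribute r - 1.
    # Items 2, 4, 6, 8, 10 (odd indices) are negatively worded: contribute 5 - r.
    total = sum((r - 1) if i % 2 == 0 else (5 - r) for i, r in enumerate(responses))
    return total * 2.5


def mean_registration_error(overlay_points, reference_points):
    """Mean Euclidean distance between paired 3D points, in the input unit."""
    if not overlay_points or len(overlay_points) != len(reference_points):
        raise ValueError("need two non-empty point lists of equal length")
    distances = [math.dist(p, q) for p, q in zip(overlay_points, reference_points)]
    return sum(distances) / len(distances)


if __name__ == "__main__":
    # Hypothetical questionnaire answers, not data from any cited study.
    print(sus_score([4, 2, 4, 2, 4, 2, 4, 2, 4, 2]))
    # Hypothetical landmark coordinates in millimetres.
    overlay = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
    reference = [(0.0, 0.0, 1.0), (10.0, 0.0, 0.0)]
    print(mean_registration_error(overlay, reference))
```

Individual studies may define their error metric differently (for example, maximum rather than mean deviation, or 2D overlay error on the image plane), so values across rows of the table are not necessarily directly comparable.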

3.2. Case Report

4. Discussion

4.1. Strengths of AR-Guided Extraction

4.2. Clinical Relevance

4.3. Educational Potential

4.4. Limitations

4.5. Future Directions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DIBINEM | Department of Biomedical and Neuromotor Sciences |
| AI | Artificial Intelligence |
| CAD | Computer-Aided Design |
| DICOM | Digital Imaging and Communications in Medicine |
| HUD | Head-Up Display |
| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |
| QR | Quick Response |
| STL | Stereolithography |
| SUS | System Usability Scale |
| AR | Augmented Reality |
| CT | Computed Tomography |
| CBCT | Cone-Beam Computed Tomography |
| TRE | Target-Registration Error |
| VAS | Visual Analogue Scale |
| VR | Virtual Reality |

Appendix A

Appendix A.1. PubMed—Query (Verbatim)

Appendix A.2. Cochrane Library

References

1. Shuhaiber, J.H. Augmented reality in surgery. Arch. Surg. 2004, 139, 170–174.

2. Benmahdjoub, M.; van Walsum, T.; van Twisk, P.; Wolvius, E.B. Augmented reality in craniomaxillofacial surgery: Added value and proposed recommendations through a systematic review of the literature. Int. J. Oral Maxillofac. Surg. 2021, 50, 969–978.

3. Joda, T.; Gallucci, G.O.; Wismeijer, D.; Zitzmann, N.U. Augmented and virtual reality in dental medicine: A systematic review. Comput. Biol. Med. 2019, 108, 93–100.

4. Queisner, M.; Eisenträger, K. Surgical planning in virtual reality: A systematic review. J. Med. Imaging 2024, 11, 062603.

5. Badiali, G.; Ferrari, V.; Cutolo, F.; Freschi, C.; Caramella, D.; Bianchi, A.; Marchetti, C. Augmented reality as an aid in maxillofacial surgery: Validation of a wearable system allowing maxillary repositioning. J. Cranio-Maxillofac. Surg. 2014, 42, 1970–1976.

6. Blanchard, J.; Koshal, S.; Morley, S.; McGurk, M. The use of mixed reality in dentistry. Br. Dent. J. 2022, 233, 261–265.

7. Badiali, G.; Cercenelli, L.; Battaglia, S.; Marcelli, E.; Marchetti, C.; Ferrari, V.; Cutolo, F. Review on Augmented Reality in Oral and Cranio-Maxillofacial Surgery: Toward “Surgery-Specific” Head-Up Displays. IEEE Access 2020, 8, 59015–59028.

8. Pellegrino, G.; Mangano, C.; Mangano, R.; Ferri, A.; Taraschi, V.; Marchetti, C. Augmented reality for dental implantology: A pilot clinical report of two cases. BMC Oral Health 2019, 19, 158.

9. Mai, H.N.; Dam, V.V.; Lee, D.H. Accuracy of Augmented Reality-Assisted Navigation in Dental Implant Surgery: Systematic Review and Meta-analysis. J. Med. Internet Res. 2023, 25, e42040.

10. Mosch, R.; Alevizakos, V.; Ströbele, D.A.; Schiller, M.; von See, C. Exploring Augmented Reality for Dental Implant Surgery: Feasibility of Using Smartphones as Navigation Tools. Clin. Exp. Dent. Res. 2025, 11, e70110.

11. Glas, H.H.; Kraeima, J.; van Ooijen, P.M.A.; Spijkervet, F.K.L.; Yu, L.; Witjes, M.J.H. Augmented Reality Visualization for Image-Guided Surgery: A Validation Study Using a Three-Dimensional Printed Phantom. J. Oral Maxillofac. Surg. 2021, 79, 1943.e1–1943.e10.

12. Tang, Z.N.; Hui, Y.; Hu, L.H.; Yu, Y.; Zhang, W.B.; Peng, X. Application of mixed reality technique for the surgery of oral and maxillofacial tumors. Beijing Da Xue Xue bao. Yi Xue Ban = J. Peking University. Health Sci. 2020, 52, 1124–1129. (In Chinese)

13. Fushima, K.; Kobayashi, M. Mixed-reality simulation for orthognathic surgery. Maxillofac. Plast. Reconstr. Surg. 2016, 38, 13.

14. Tang, Z.N.; Hu, L.H.; Soh, H.Y.; Yu, Y.; Zhang, W.B.; Peng, X. Accuracy of Mixed Reality Combined With Surgical Navigation Assisted Oral and Maxillofacial Tumor Resection. Front. Oncol. 2022, 11, 715484.

15. Stevanie, C.; Ariestiana, Y.Y.; Hendra, F.N.; Anshar, M.; Boffano, P.; Forouzanfar, T.; Sukotjo, C.; Kurniawan, S.H.; Ruslin, M. Advanced outcomes of mixed reality usage in orthognathic surgery: A systematic review. Maxillofac. Plast. Reconstr. Surg. 2024, 46, 29.

16. Yang, R.; Li, C.; Tu, P.; Ahmed, A.; Ji, T.; Chen, X. Development and Application of Digital Maxillofacial Surgery System Based on Mixed Reality Technology. Front. Surg. 2022, 8, 719985.

17. Chai, Y.; Dong, Y.; Lu, Y.; Wei, W.; Chen, M.; Yang, C. Risk Factors Associated With Inferior Alveolar Nerve Injury After Extraction of Impacted Lower Mandibular Third Molars: A Prospective Cohort Study. J. Oral Maxillofac. Surg. 2024, 82, 1100–1108.

18. Rapaport, B.H.J.; Brown, J.S. Systematic review of lingual nerve retraction during surgical mandibular third molar extractions. Br. J. Oral Maxillofac. Surg. 2020, 58, 748–752.

19. Li, Y.; Ling, Z.; Zhang, H.; Xie, H.; Zhang, P.; Jiang, H.; Fu, Y. Association of the Inferior Alveolar Nerve Position and Nerve Injury: A Systematic Review and Meta-Analysis. Healthcare 2022, 10, 1782.

20. Zhu, M.; Liu, F.; Chai, G.; Pan, J.J.; Jiang, T.; Lin, L.; Xin, Y.; Zhang, Y.; Li, Q. A novel augmented reality system for displaying inferior alveolar nerve bundles in maxillofacial surgery. Sci. Rep. 2017, 7, 42365.

21. Sarikov, R.; Juodzbalys, G. Inferior alveolar nerve injury after mandibular third molar extraction: A literature review. J. Oral Maxillofac. Res. 2014, 5, e1.

22. Bjelovucic, R.; Wolff, J.; Nørholt, S.E.; Pauwels, R.; Taneja, P. Effectiveness of Mixed Reality in Oral Surgery Training: A Systematic Review. Sensors 2025, 25, 3945.

23. Ayoub, A.; Pulijala, Y. The application of virtual reality and augmented reality in Oral & Maxillofacial Surgery. BMC Oral Health 2019, 19, 238.

24. Barausse, C.; Felice, P.; Pistilli, R.; Pellegrino, G.; Bonifazi, L.; Tayeb, S.; Neri, I.; Koufi, F.D.; Fazio, A.; Marvi, M.V.; et al. Anatomy Education and Training Methods in Oral Surgery and Dental Implantology: A Narrative Review. Dent. J. 2024, 12, 406.

25. Bosc, R.; Fitoussi, A.; Hersant, B.; Dao, T.H.; Meningaud, J.P. Intraoperative augmented reality with heads-up displays in maxillofacial surgery: A systematic review of the literature and a classification of relevant technologies. Int. J. Oral Maxillofac. Surg. 2019, 48, 132–139.

26. Puleio, F.; Tosco, V.; Pirri, R.; Simeone, M.; Monterubbianesi, R.; Lo Giudice, G.; Lo Giudice, R. Augmented Reality in Dentistry: Enhancing Precision in Clinical Procedures-A Systematic Review. Clin. Pract. 2024, 14, 2267–2283.

27. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

28. Suenaga, H.; Tran, H.H.; Liao, H.; Masamune, K.; Dohi, T.; Hoshi, K.; Takato, T. Vision-based markerless registration using stereo vision and an augmented reality surgical navigation system: A pilot study. BMC Med. Imaging 2015, 15, 51.

29. Wang, J.; Suenaga, H.; Yang, L.; Kobayashi, E.; Sakuma, I. Video see-through augmented reality for oral and maxillofacial surgery. Int. J. Med. Robot. Comput. Assist. Surg. 2017, 13, e1754.

30. Dang, N.P.; Chandelon, K.; Barthélémy, I.; Devoize, L.; Bartoli, A. A proof-of-concept augmented reality system in oral and maxillofacial surgery. J. Stomatol. Oral Maxillofac. Surg. 2021, 122, 338–342.

31. Suenaga, H.; Sakakibara, A.; Taniguchi, A.; Hoshi, K. Computer-Assisted Preoperative Simulation and Augmented Reality for Extraction of Impacted Supernumerary Teeth: A Clinical Case Report of Two Cases. J. Oral Maxillofac. Surg. 2023, 81, 201–205.

32. Macrì, M.; D’Albis, G.; D’Albis, V.; Timeo, S.; Festa, F. Augmented Reality-Assisted Surgical Exposure of an Impacted Tooth: A Pilot Study. Appl. Sci. 2023, 13, 11097.

33. Suenaga, H.; Sakakibara, A.; Koyama, J.; Hoshi, K. A clinical presentation of markerless augmented reality assisted surgery for resection of a dentigerous cyst in the maxillary sinus. J. Stomatol. Oral Maxillofac. Surg. 2024, 125, 101767.

34. Koyama, Y.; Sugahara, K.; Koyachi, M.; Tachizawa, K.; Iwasaki, A.; Wakita, I.; Nishiyama, A.; Matsunaga, S.; Katakura, A. Mixed reality for extraction of maxillary mesiodens. Maxillofac. Plast. Reconstr. Surg. 2023, 45, 1.

35. Rieder, M.; Remschmidt, B.; Gsaxner, C.; Gaessler, J.; Payer, M.; Zemann, W.; Wallner, J. Augmented Reality-Guided Extraction of Fully Impacted Lower Third Molars Based on Maxillofacial CBCT Scans. Bioengineering 2024, 11, 625.

36. Fudalej, P.S.; Garlicka, A.; Dołęga-Dołegowski, D.; Dołęga-Dołegowska, M.; Proniewska, K.; Voborna, I.; Dubovska, I. Mixed reality-based technology to visualize and facilitate treatment planning of impacted teeth: Proof of concept. Orthod. Craniofacial Res. 2024, 27 (Suppl. 2), 42–47.

37. Lin, P.Y.; Chen, T.C.; Lin, C.J.; Huang, C.C.; Tsai, Y.H.; Tsai, Y.L.; Wang, C.Y. The use of augmented reality (AR) and virtual reality (VR) in dental surgery education and practice: A narrative review. J. Dent. Sci. 2024, 19 (Suppl. 2), S91–S101.

38. Pellegrino, G.; Bellini, P.; Cavallini, P.F.; Ferri, A.; Zacchino, A.; Taraschi, V.; Marchetti, C.; Consolo, U. Dynamic Navigation in Dental Implantology: The Influence of Surgical Experience on Implant Placement Accuracy and Operating Time. An in Vitro Study. Int. J. Environ. Res. Public Health 2020, 17, 2153.

39. Pellegrino, G.; Ferri, A.; Del Fabbro, M.; Prati, C.; Gandolfi, M.G.; Marchetti, C. Dynamic Navigation in Implant Dentistry: A Systematic Review and Meta-analysis. Int. J. Oral Maxillofac. Implant. 2021, 36, e121–e140.

40. Stucki, J.; Dastgir, R.; Baur, D.A.; Quereshy, F.A. The use of virtual reality and augmented reality in oral and maxillofacial surgery: A narrative review. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. 2024, 137, 12–18.

41. Pellegrino, G.; Lizio, G.; Ferri, A.; Marchetti, C. Flapless and bone-preserving extraction of partially impacted mandibular third molars with dynamic navigation technology. A report of three cases. Int. J. Comput. Dent. 2021, 24, 253–262.

42. Heredero, S.; Gómez, V.J.; Sanjuan-Sanjuan, A. The Role of Virtual Reality, Augmented Reality and Mixed Reality in Modernizing Free Flap Reconstruction Techniques. Oral Maxillofac. Surg. Clin. 2025, 37, 479–486.

43. Nasir, N.; Cercenelli, L.; Tarsitano, A.; Marcelli, E. Augmented reality for orthopedic and maxillofacial oncological surgery: A systematic review focusing on both clinical and technical aspects. Front. Bioeng. Biotechnol. 2023, 11, 1276338.

44. Lungu, A.J.; Swinkels, W.; Claesen, L.; Tu, P.; Egger, J.; Chen, X. A review on the applications of virtual reality, augmented reality and mixed reality in surgical simulation: An extension to different kinds of surgery. Expert Rev. Med. Devices 2021, 18, 47–62.

45. Koyachi, M.; Sugahara, K.; Tachizawa, K.; Nishiyama, A.; Odaka, K.; Matsunaga, S.; Sugimoto, M.; Katakura, A. Mixed-reality and computer-aided design/computer-aided manufacturing technology for mandibular reconstruction: A case description. Quant. Imaging Med. Surg. 2023, 13, 4050–4056.

46. Puladi, B.; Ooms, M.; Bellgardt, M.; Cesov, M.; Lipprandt, M.; Raith, S.; Peters, F.; Möhlhenrich, S.C.; Prescher, A.; Hölzle, F.; et al. Augmented Reality-Based Surgery on the Human Cadaver Using a New Generation of Optical Head-Mounted Displays: Development and Feasibility Study. JMIR Serious Games 2022, 10, e34781.

47. Lin, Y.K.; Yau, H.T.; Wang, I.C.; Zheng, C.; Chung, K.H. A novel dental implant guided surgery based on integration of surgical template and augmented reality. Clin. Implant. Dent. Relat. Res. 2015, 17, 543–553.

48. Farronato, M.; Maspero, C.; Lanteri, V.; Fama, A.; Ferrati, F.; Pettenuzzo, A.; Farronato, D. Current state of the art in the use of augmented reality in dentistry: A systematic review of the literature. BMC Oral Health 2019, 19, 135.

49. Mangano, F.G.; Admakin, O.; Lerner, H.; Mangano, C. Artificial intelligence and augmented reality for guided implant surgery planning: A proof of concept. J. Dent. 2023, 133, 104485.

50. Wang, J.; Shen, Y.; Yang, S. A practical marker-less image registration method for augmented reality oral and maxillofacial surgery. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 763–773.

51. Ruggiero, F.; Cercenelli, L.; Emiliani, N.; Badiali, G.; Bevini, M.; Zucchelli, M.; Marcelli, E.; Tarsitano, A. Preclinical Application of Augmented Reality in Pediatric Craniofacial Surgery: An Accuracy Study. J. Clin. Med. 2023, 12, 2693.

52. Strong, E.B.; Patel, A.; Marston, A.P.; Sadegh, C.; Potts, J.; Johnston, D.; Ahn, D.; Bryant, S.; Li, M.; Raslan, O.; et al. Augmented Reality Navigation in Craniomaxillofacial/Head and Neck Surgery. OTO Open 2025, 9, e70108.

53. Chegini, S.; Edwards, E.; McGurk, M.; Clarkson, M.; Schilling, C. Systematic review of techniques used to validate the registration of augmented-reality images using a head-mounted device to navigate surgery. Br. J. Oral Maxillofac. Surg. 2023, 61, 19–27.

54. Ceccariglia, F.; Cercenelli, L.; Badiali, G.; Marcelli, E.; Tarsitano, A. Application of Augmented Reality to Maxillary Resections: A Three-Dimensional Approach to Maxillofacial Oncologic Surgery. J. Pers. Med. 2022, 12, 2047.

55. Li, X.; Sun, Q.; Shao, L.; Zhu, Z.; Zhao, R.; Meng, F.; Zhao, Z.; Jihu, K.; Xiang, X.; Fu, T.; et al. Robot-assisted augmented reality navigation for osteotomy and personalized guide-plate in mandibular reconstruction: A preclinical study. BMC Oral Health 2025, 25, 1309.

56. Schneider, B.; Ströbele, D.A.; Grün, P.; Mosch, R.; Turhani, D.; von See, C. Smartphone application-based augmented reality for pre-clinical dental implant placement training: A pilot study. Oral Maxillofac. Surg. 2025, 29, 38.

57. Tao, B.; Fan, X.; Wang, F.; Chen, X.; Shen, Y.; Wu, Y. Comparison of the accuracy of dental implant placement using dynamic and augmented reality-based dynamic navigation: An in vitro study. J. Dent. Sci. 2024, 19, 196–202.

58. Won, Y.J.; Kang, S.H. Application of augmented reality for inferior alveolar nerve block anesthesia: A technical note. J. Dent. Anesth. Pain Med. 2017, 17, 129–134.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Pellegrino, G.; Barausse, C.; Tayeb, S.; Vignudelli, E.; Casaburi, M.; Stradiotti, S.; Ferretti, F.; Cercenelli, L.; Marcelli, E.; Felice, P. Augmented Reality in Dental Extractions: Narrative Review and an AR-Guided Impacted Mandibular Third-Molar Case. Appl. Sci. 2025, 15, 9723. https://doi.org/10.3390/app15179723
