1. Introduction

Crowdsourcing platforms are experiencing rapid growth in task volume, making it increasingly difficult for workers to identify promising bids. As systems scale up, operational platforms, including critical financial oversight systems such as Sichuan University’s fiscal management platform, face proportional increases in computational and network load. This platform, representative of high-stakes financial operations, executes complex functions including high-volume transaction processing, multi-campus budget allocation tracking, and comprehensive year-end settlement calculations. Such systems must execute these tasks reliably, which demands meticulous precision and long processing cycles. This underscores the need for efficient, dynamic resource allocation strategies that remain robust under load while reducing processing time; such efficiency also helps reduce human error in intricate financial tasks. Recommender systems have been deployed to relieve this information overload, but most existing models inherit the user-independence assumption from e-commerce and media domains and thus fail to capture the fierce, real-time competition among workers for the same task. Developing efficient, competition-aware task recommendation algorithms is therefore paramount.

Recommendation algorithms for crowdsourcing fall into two main categories [1]: mainstream recommendation algorithms based on neural networks [2,3,4,5], and recommendation algorithms built on manual modeling of crowdsourcing scenarios [6,7]. Both categories have shortcomings in crowdsourcing scenarios. Neural network-based recommendation algorithms typically use pointwise [4], pairwise [8], or listwise [9] methods to generate output sequences. All three approaches model the interrelation of items under the assumption that users are independent, and therefore overlook the non-independence of users in crowdsourcing scenarios, which leads to inadequate recommendation accuracy. Manual modeling-based algorithms do account for user non-independence, but they require extensive domain knowledge and human input, and their learning and predictive capabilities are inferior to those of neural network-based methods. Consequently, no existing recommendation algorithm can simultaneously account for user non-independence and exploit the learning and predictive strengths of neural networks, which severely compromises recommendation accuracy and reduces work efficiency. This gap is especially serious when crowdsourcing is deployed within mission-critical platforms such as the Sichuan University Finance Office Management System, where sensitive financial data demand precision and reliability and leave no tolerance for error. This study therefore addresses the following research question: how can the inaccurate and inefficient recommendations caused by traditional algorithms’ failure to account for the non-independence among workers be remedied?

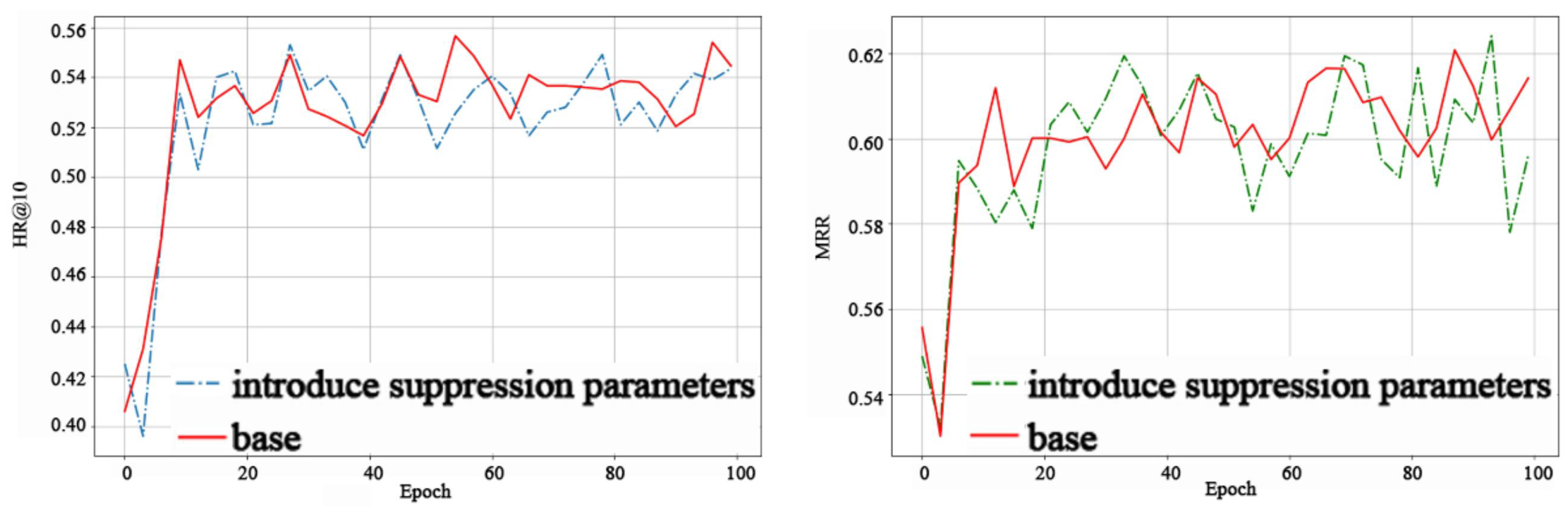

To address these issues, we propose an Adaptive Parameter Exponential Decay Algorithm (APAED) that accounts for user competition. APAED introduces user non-independence into crowdsourcing scenarios by quantifying real-time competition among workers and using it to adjust the prediction scores generated by a neural network model. This approach retains the strong learning and predictive capabilities of neural networks while also capturing the effect of user non-independence on the score. Moreover, because the distribution of predicted values varies across models, recommendation lists, and tasks, APAED incorporates an adaptive decay factor into its decay functions, which improves its generalizability. By attenuating the prediction scores of the neural network model, APAED addresses user non-independence without altering the underlying model, thereby improving recommendation accuracy, reducing processing time, and enhancing system efficiency. Experiments show that APAED reduces the residual RMSE of HR@10 by 38% and that of MRR by 75%, substantially reducing score fluctuations across epochs and consistently outperforming four strong neural baselines. The improved accuracy speeds up processing and workflow, and it supports better task allocation that mitigates risk and better satisfies the requirements of financial management systems.

The primary contributions of this study are as follows:

The introduction of the Adaptive Parameter Exponential Decay Algorithm (APAED), a novel recommendation algorithm that adaptively adjusts its decay factor according to the distribution of the offline model’s predicted values. During prediction, APAED splits the scoring of a worker’s tasks into an offline part, handled by the neural network model, and an online part, in which the APAED decay algorithm attenuates the score. The algorithm attenuates the offline neural network score according to the real-time intensity of competition among workers to produce the final prediction.

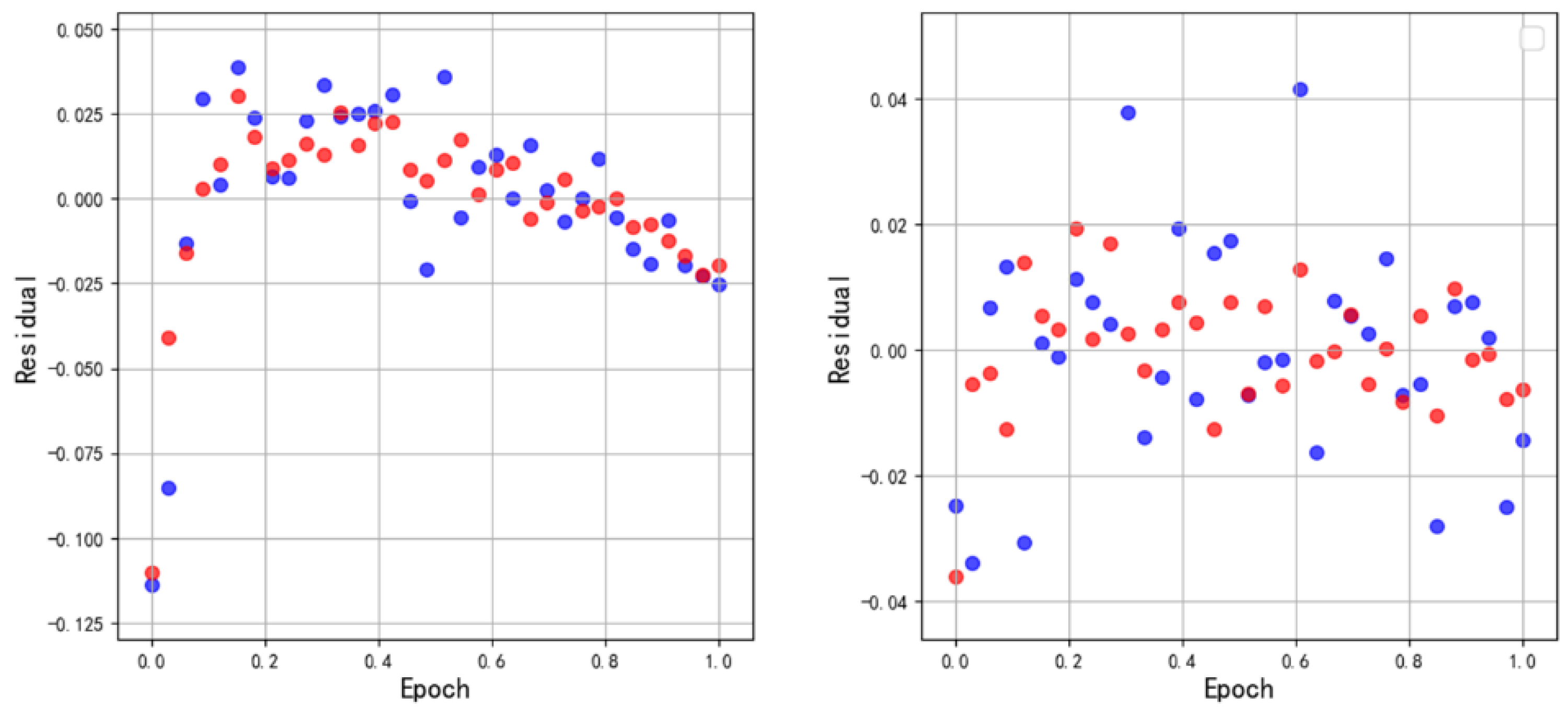

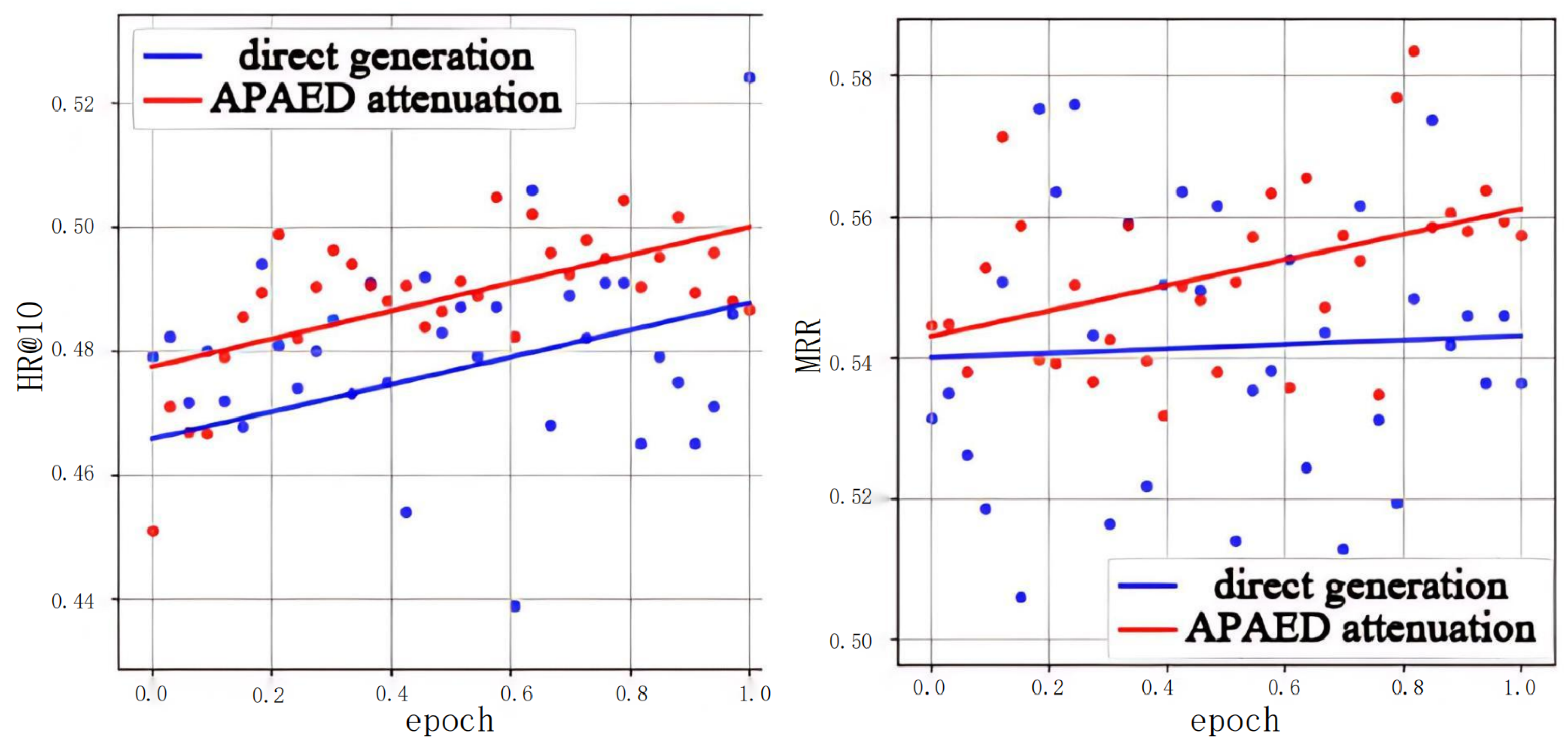

A comprehensive evaluation of APAED’s effectiveness on real-world datasets. The results show that APAED significantly improves the relevant indicators compared with models that rely solely on neural networks, and they confirm APAED’s robust generalization across different offline models. When applied to the OPCA-CF backbone, APAED reduces the residual RMSE of HR@10 by 38% and that of MRR by 75%, markedly reducing indicator fluctuations across training epochs. Similar gains hold for three additional neural baselines (NAIS, NAIS+AP, and NAIS+DCA), on which APAED improves HR@10 and MRR on average while consistently tightening residual spreads. APAED thus combines the expressive power of deep recommenders with an explicit model of real-time worker competition, delivering state-of-the-art accuracy and stability for crowdsourcing task recommendation.

This paper is structured in the following manner: The Introduction segment elucidates the importance of this research and outlines the key contributions of this paper. The Related Work section offers a comprehensive review of existing research in the fields of crowdsourcing and recommendation algorithms. The section on the APAED Algorithm provides a detailed examination of the design and implementation of the APAED algorithm. The Algorithm Evaluation section uses real-world datasets to assess the performance and generalizability of the APAED algorithm. The Results section presents the data and descriptions of the experimental results, while the Discussion section discusses the main findings of the study as well as its limitations. Finally, the Conclusion summarizes the paper, encapsulating the key findings and their implications.

3. APAED Algorithm

The APAED algorithm is the first crowdsourcing recommendation model to integrate offline model predictions with real-time bidding dynamics in order to quantify the competitive intensity of current tasks in crowdsourcing environments. APAED uses both the global and local distributions of predicted values to derive decay parameters, which are then used to attenuate those predicted values. Within APAED, decay and gain work symmetrically, but the following discussion focuses on how the decay parameters are established. This focus rests on the assumption that a worker’s bidding success is influenced primarily by competition-induced decay. Accordingly, the decay parameter, which embodies real-time competition information, is the primary quantity, and the gain only adjusts it at the final step. By accounting for the non-independence among workers, APAED more effectively mitigates resource waste and improves work efficiency on high-pressure platforms, especially systems with stringent task efficiency requirements such as Sichuan University’s Financial Management System.

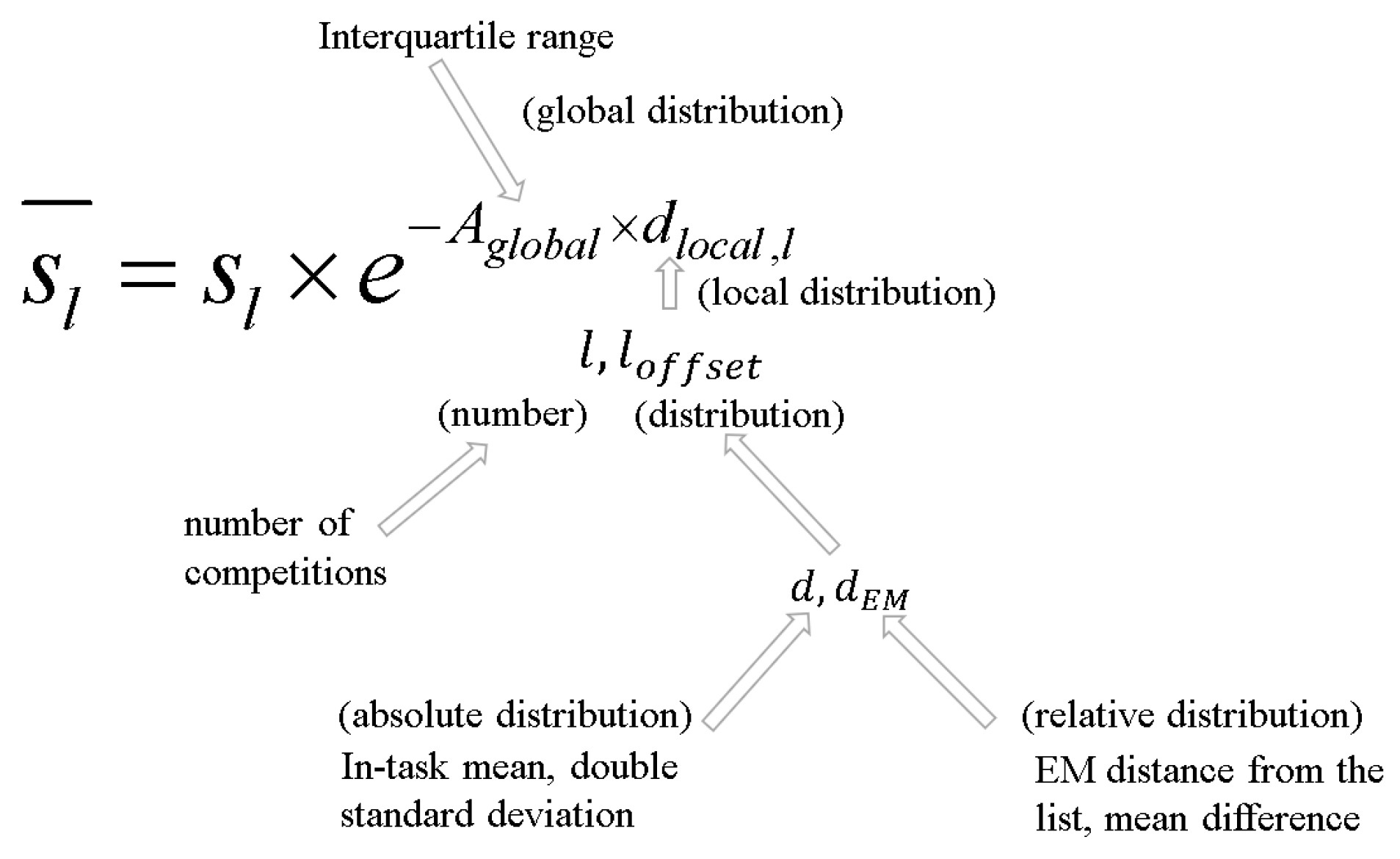

When attenuating a predicted value, APAED determines the decay or gain parameters from two views of the predicted-value distribution: the global distribution and the local distribution. The global distribution is the distribution of predicted values over the entire recommendation list generated for a given worker, and the local distribution is the distribution of predicted values within a single task in that list. Because only the predicted values of existing bids are known when the recommendation list is generated, competition information can be derived only from the bid records. Figure 1 shows how the global and local decay functions relate to the final decay function, along with the criteria involved. In this figure, the final recommendation score is the score after decay adjustment by APAED, and the initial recommendation score is the score generated by the offline neural network model for the l-th task. The global decay factor is calculated from the interquartile range of the recommendation list, as shown in Formula (2). The local decay factor for the l-th task is determined by the number of bids on the task and by the absolute and relative distributions of its scores; it adjusts the decay magnitude of that task’s recommendation score, as shown in Formula (3). By combining global and local information, the exponential decay formula aligns the recommendation results with both the historical preferences of workers and the real-time competitive environment, improving the accuracy and stability of task recommendations. The parameters involved, including d, are detailed in the following subsections.

3.1. Offline Prediction Model

In this research, NAIS [27] serves as the framework of the offline model, and the APAED algorithm attenuates the offline predicted values that this model generates. Further details about NAIS are given in the comparison of algorithms in Section 4. Because crowdsourcing tasks are time-sensitive, task IDs cannot be used directly as feature inputs as they are in NAIS. Instead, following NEWS [28], which learns news features from news attributes, we learn crowdsourcing task features from the attributes of the tasks.

3.2. Global Decay Parameter

The decayed predicted value is given by Formula (1). The decay applies to all predicted values in the recommendation list generated for a given worker, where l is the number of tasks in the list.

In Formula (1), the global decay factor determines how strongly the distribution of predicted values across the entire recommendation list influences the decay intensity.

The purpose of decay is to adjust the relative ranking of each task’s predicted value within a given worker’s recommendation list according to competition information. Because the global decay factor sets the upper limit of the decay strength available for changing relative ranking positions, it is determined by the overall distribution of the predicted values in the list: the more dispersed those values are, the greater the decay strength needed to change their relative ranking.
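Formula (1) is not reproduced in this text. As a minimal sketch, assume a multiplicative exponential decay, decayed = score * exp(-lambda_g * lambda_l), where lambda_g stands for the global decay factor and lambda_l in [0, 1] for a task's local decay factor (both names are ours, not the paper's notation). This assumed form keeps the total decay strength between 0 and lambda_g, as Section 3.3.1 requires, and the example shows how such decay can reorder a worker's list. The function names `apply_decay` and `rank` are hypothetical.

```python
import math


def apply_decay(scores, local_factors, global_factor):
    """Hypothetical form of Formula (1): score * exp(-global * local).

    scores:        task id -> offline predicted value from the neural model
    local_factors: task id -> local decay factor, assumed to lie in [0, 1]
    global_factor: list-level decay factor (upper bound of decay strength)
    """
    decayed = {}
    for task, score in scores.items():
        lam_local = local_factors.get(task, 0.0)  # no competition -> no decay
        if not 0.0 <= lam_local <= 1.0:
            raise ValueError("local decay factor must lie in [0, 1]")
        decayed[task] = score * math.exp(-global_factor * lam_local)
    return decayed


def rank(scores):
    """Return task ids ordered from highest to lowest score."""
    return sorted(scores, key=scores.get, reverse=True)


if __name__ == "__main__":
    offline = {"A": 0.9, "B": 0.8, "C": 0.5}
    local = {"A": 1.0, "B": 0.0, "C": 0.0}  # only task A is heavily contested
    decayed = apply_decay(offline, local, global_factor=math.log(2))
    print(rank(offline), "->", rank(decayed))
```

In this toy list the heavily contested task A falls from first to last, while the uncontested tasks keep their offline scores.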

The interquartile range (IQR) of the list’s predicted values is used as the benchmark for the global decay factor. The IQR measures the dispersion of the predicted values in a worker’s recommendation list: it reflects the spread of the middle 50% of the data and is calculated as the difference between the upper quartile (Q3, at the 75th percentile) and the lower quartile (Q1, at the 25th percentile). The IQR is insensitive to extreme values, which matters here because, after OPCA-CF prediction, the smallest value in each recommendation list tends to 0 and the largest tends to 1, so the extremes do not reflect how dispersed the data are.

The global decay factor allows the decay of a predicted value to shift its position in the list by up to 50%, that is, to attenuate a value at Q3 down to Q1. It is therefore defined as the intensity required to attenuate Q3 to Q1 using exponential decay, which is obtained by Formula (2).
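Formula (2) is not reproduced in this text. Reading the description literally, and assuming the decay is multiplicative (score * exp(-lam)), solving Q3 * exp(-lam) = Q1 gives lam = ln(Q3 / Q1). The sketch below computes this under that assumption; the linear-interpolation quantile convention and the helper names `quantile` and `global_decay_factor` are our own choices.

```python
import math


def quantile(values, q):
    """Linear-interpolation quantile of a list (NumPy's default convention)."""
    data = sorted(values)
    pos = (len(data) - 1) * q
    lo = math.floor(pos)
    hi = min(lo + 1, len(data) - 1)
    return data[lo] + (data[hi] - data[lo]) * (pos - lo)


def global_decay_factor(list_scores):
    """Exponential-decay intensity that maps Q3 onto Q1 (assumed reading).

    Solving Q3 * exp(-lam) = Q1 gives lam = ln(Q3 / Q1): the wider the middle
    half of the list relative to Q1, the larger the decay intensity.
    """
    q1 = quantile(list_scores, 0.25)
    q3 = quantile(list_scores, 0.75)
    if q1 <= 0.0:
        raise ValueError("Q1 must be positive for a log-ratio decay factor")
    return math.log(q3 / q1)
```

For the list [0.1, 0.2, 0.3, 0.4, 0.5], Q1 = 0.2 and Q3 = 0.4, so the factor is ln 2: decaying 0.4 by exp(-ln 2) lands exactly on 0.2.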

3.3. Local Decay Parameter

The local decay parameter serves as the decay factor within each task and represents the influence of other workers’ predicted values in the l-th task on the decay intensity, where m denotes the predicted values of other workers’ bid records in the l-th task. The local decay parameter is determined by Formula (3); its component parameters, including d, are explained below.

3.3.1. Bid Quantity Parameter

Each bid whose predicted value exceeds the current worker’s predicted value in a task intensifies the competition for that task and causes the current predicted value to decay. Because the upper limit of the decay strength is set by the global distribution, the bid quantity parameter, which is determined by the number of bids, should approach 1 when competition is strongest, so that the final decay strength lies between 0 and the global decay factor. In its definition, a scaling term maps the number of bids into a range in which the mapping is meaningful; this term is a hyperparameter chosen according to the magnitude of the bid count.
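The exact mapping used for the bid quantity parameter is not reproduced here. The sketch below assumes a saturating map, tanh(n / scale), where n counts only bids with a higher predicted value than the current worker's; `scale` stands in for the bid-magnitude hyperparameter, and `bid_quantity_parameter` is a hypothetical name. Any map that is 0 without competing bids and approaches 1 under heavy competition would meet the stated requirement.

```python
import math


def bid_quantity_parameter(own_score, bid_scores, scale):
    """Map the number of stronger competing bids into [0, 1).

    Only bids whose predicted value exceeds the current worker's predicted
    value are counted, since those are the ones that intensify competition.
    tanh(n / scale) is an assumed saturating map: 0 with no stronger bids,
    approaching 1 as competition becomes strongest.
    """
    if scale <= 0:
        raise ValueError("scale must be positive")
    stronger = sum(1 for s in bid_scores if s > own_score)
    return math.tanh(stronger / scale)
```

Multiplying this value by the global decay factor keeps the resulting decay strength inside [0, global factor), which is the bound the text asks for.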

3.3.2. Parameter d, Determined by the Absolute Distribution of the Predicted Value of the Bid Within the Task and the Relative Distribution of the Predicted Value Between the Lists

In addition to the influence of the number of bids on the decay intensity, the distribution of

will also affect the current decay intensity, which can be used to correct

. In the case of the same number of bids, different

distributions will also cause different decay strengths. As shown in

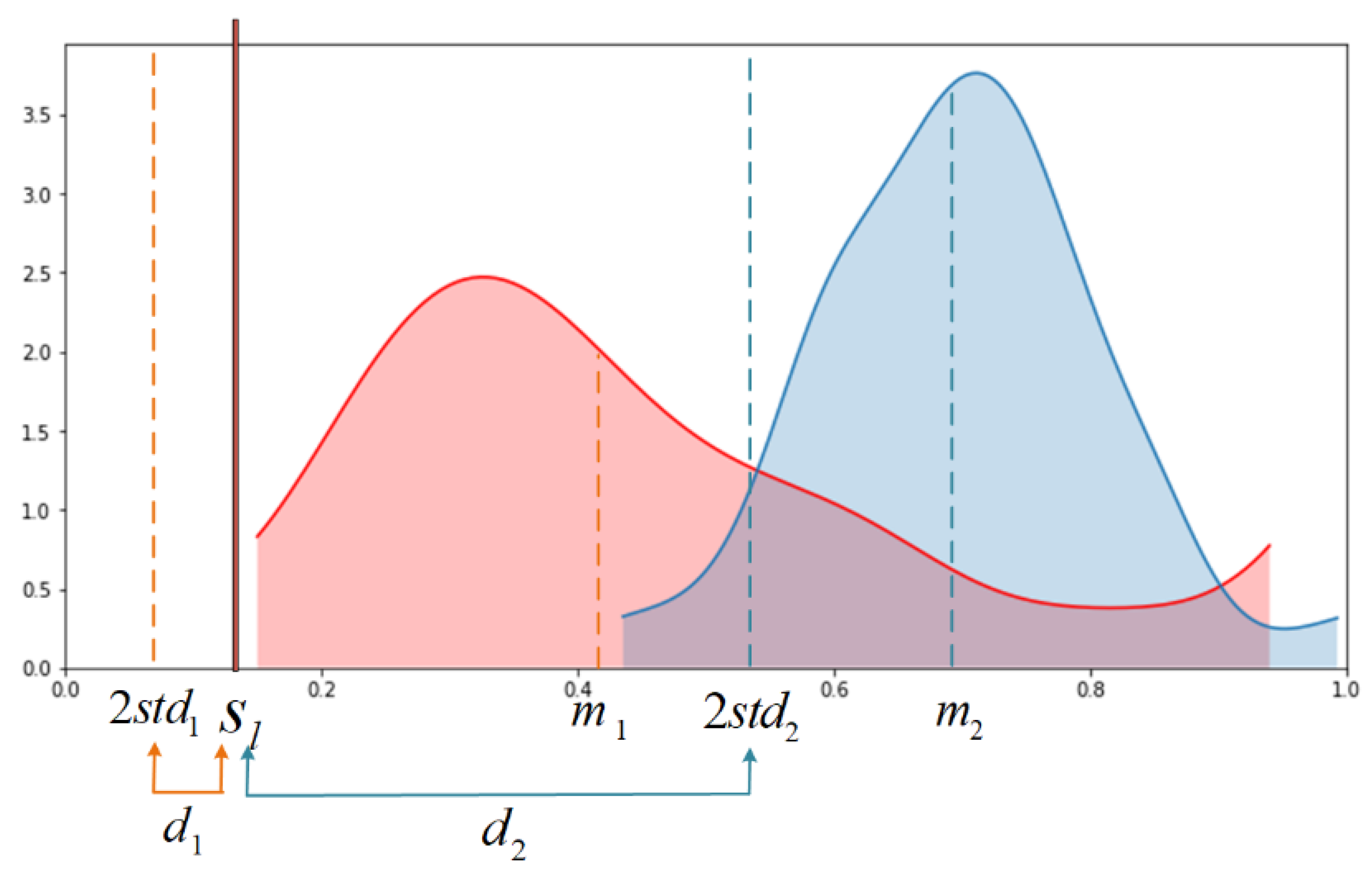

Figure 2, the blue and red shades represent the two types of predicted value density distributions with the same bid quantity but different distributions. Obviously, the decay intensity of the blue distribution to the predicted value is greater than that of the red distribution.

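Therefore, the parameter d is introduced to express the distance between the current predicted value and the bid distribution, defined using two standard deviations of m. In this way, d reflects how concentrated or dispersed the bid predicted values m are. Because the blue distribution in Figure 2 produces stronger competition than the red one, the value of d for the blue distribution indicates stronger competition than that for the red one.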
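The paper's exact formula for d is not reproduced in this text. One possible reading, sketched under our own assumptions, is d = (mean(m) - score) / (2 * std(m)): the gap between the mean of the competing bids and the current prediction, measured in units of two standard deviations. `distance_parameter` is a hypothetical helper, and the example checks only that a tight cluster of stronger bids yields a larger d than a widely spread one with the same mean.

```python
import statistics


def distance_parameter(own_score, bid_scores, eps=1e-12):
    """Assumed reading of d: (mean(m) - own_score) / (2 * std(m)).

    A tight cluster of bids just above the current prediction gives a large
    d (strong competition); a widely spread cluster gives a smaller d.
    """
    mu = statistics.mean(bid_scores)
    sigma = statistics.pstdev(bid_scores)  # population std; 0.0 for one bid
    return (mu - own_score) / (2.0 * max(sigma, eps))  # eps avoids division by 0
```

Because d depends only on the mean and standard deviation, two bid distributions with equal moments but different shapes receive the same d, which is exactly the limitation the following paragraphs address.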

In addition to the distance

d between the distribution and the current predicted value

, the shape of the distribution itself will also cause differences in the intensity of competition, and

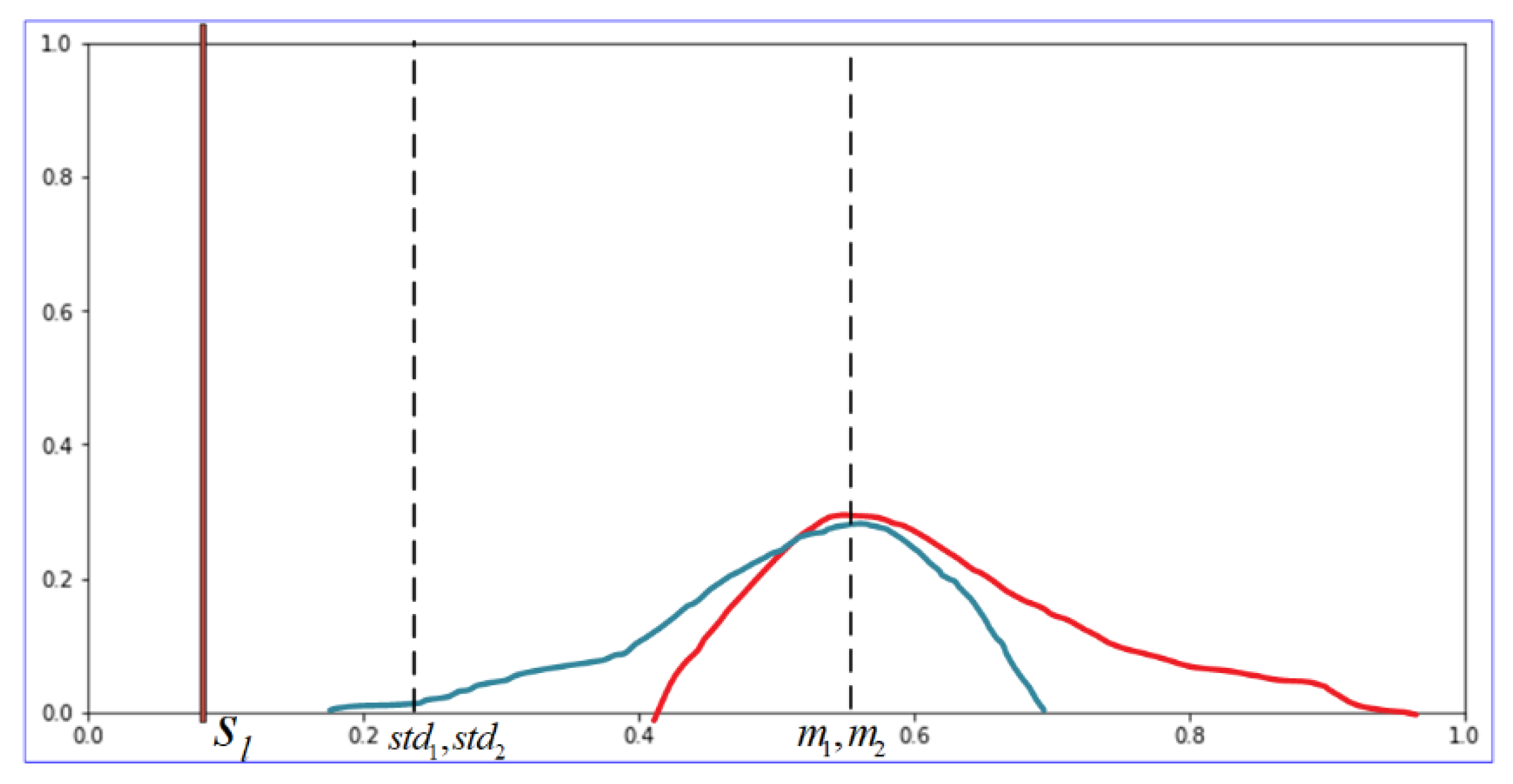

d only includes the mean and variance of the data and cannot reflect the shape of the distribution. Because the predicted value does not necessarily conform to the normal distribution, there may be information where the mean and variance are the same but the competition intensity is different. As shown in

Figure 3, the blue and red distributions have the same mean and variance, that is,

s is the same, but obviously the red distribution represents greater competition.

Therefore,

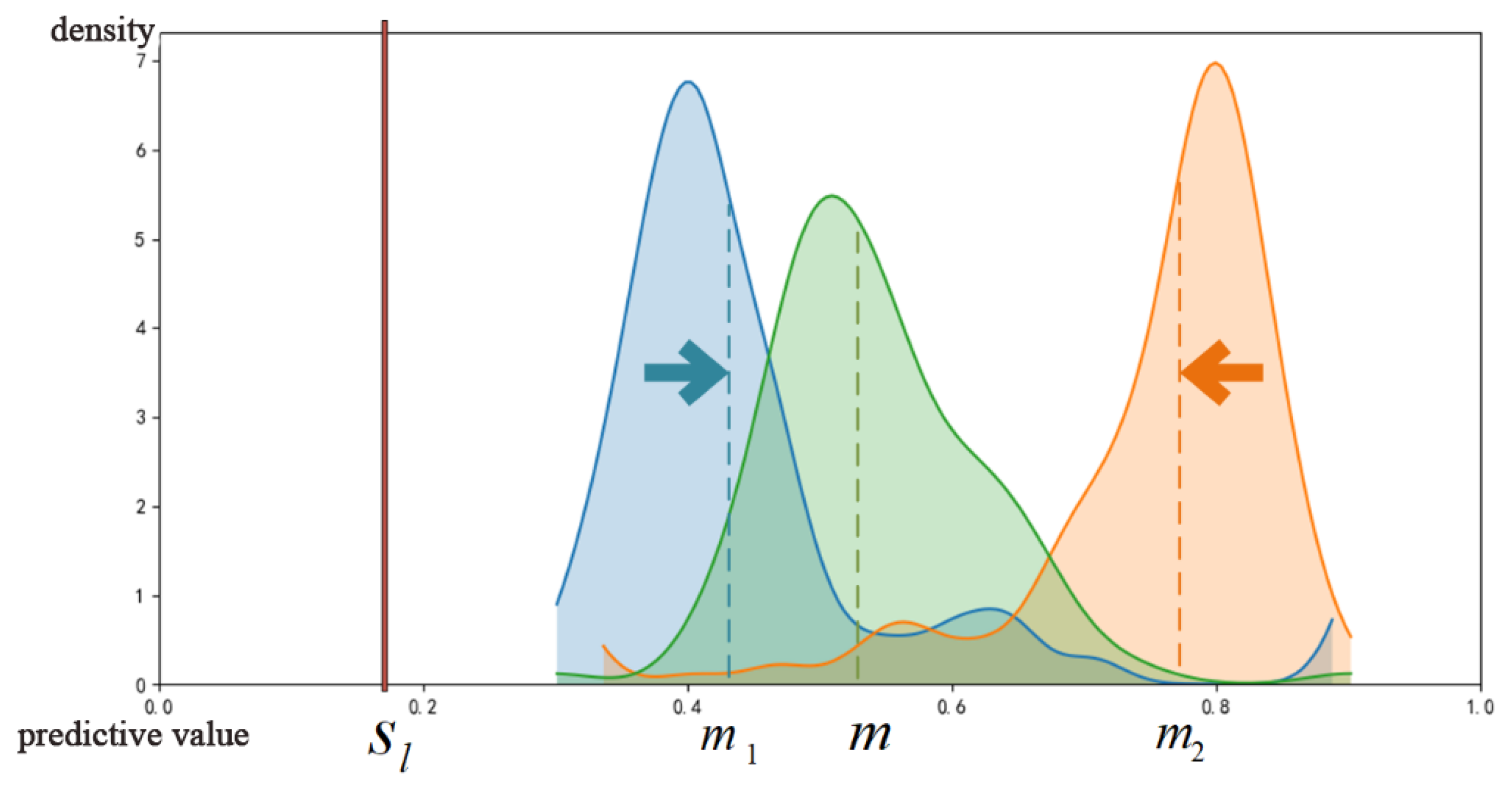

d needs to be strengthened or suppressed according to the distribution pattern. In order to determine whether to strengthen or suppress and perform quantitative analysis, it is necessary to use a certain distribution as a benchmark. Here, we use the prediction value distribution

of the current recommendation list between the extreme values of

as the benchmark, as shown in the green part of

Figure 4. Then, we use EMD (Earth Mover’s Distance) to calculate the distance from distribution

to

in the task, which is obtained by Formula (

4).

where the set of couplings contains all joint distributions whose marginals are the two distributions. For each such joint distribution, sample pairs x and y are drawn from it, and the expected distance between the paired samples is computed. The greatest lower bound (infimum) of this expected distance over all joint distributions is the EM distance from the task distribution to the benchmark distribution.
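For reference, this description matches the standard definition of the Earth Mover's (Wasserstein-1) distance. Writing P_m for the normalized bid distribution of the task, P_r for the normalized benchmark distribution, and Pi(P_m, P_r) for their set of couplings (labels chosen here for illustration, not the paper's notation), the definition and its one-dimensional form, where F_m and F_r are the cumulative distribution functions, read:

```latex
W(P_m, P_r) = \inf_{\gamma \in \Pi(P_m, P_r)} \mathbb{E}_{(x, y) \sim \gamma}\left[ \lVert x - y \rVert \right]

W(P_m, P_r) = \int_{-\infty}^{\infty} \left| F_m(t) - F_r(t) \right| \, dt \quad \text{(one-dimensional case)}
```

The second identity holds only on the real line, which is the setting here since each predicted value is a scalar; it is what makes the distance cheap to compute from sorted samples.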

Intuitively, the EM distance treats each probability distribution as a pile of dirt and measures the minimum amount of work needed to reshape one pile into the other. In Figure 4, the task distributions (blue and red parts) are moved in the direction of the arrows, and the EM distance is the minimum work required to pile them into the target shape of the benchmark distribution (green part).

To prevent the scale of the predicted values from affecting W, the task distribution and the benchmark distribution are normalized before the calculation, because the scale of the local predicted values is unrelated to the decay intensity caused by their distribution. For example, if the predicted values of two tasks differ only in order of magnitude, the current predicted value faces the same competition intensity in both. Next, because EM distances are always non-negative, W alone cannot indicate whether d should be strengthened or suppressed. The mean difference between the task distribution and the benchmark distribution is therefore used as the criterion: if it is positive, d is strengthened, and if it is negative, d is suppressed. The resulting decay caused by the distribution of predicted values is given by Formula (5).
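Formula (5) is not reproduced in this text, so the sketch below only follows the three steps described above: build the benchmark from the list scores lying between the extremes of the task's bids, normalize both samples, and sign the EM distance by the mean difference. The min-max normalization over the task's bid range, returning the signed distance itself, returning 0 when no benchmark exists, and the names `emd_1d` and `signed_distribution_distance` are all our assumptions. The one-dimensional EMD uses the CDF identity, which is exact for empirical distributions on the real line.

```python
import statistics


def emd_1d(u, v):
    """Earth Mover's (Wasserstein-1) distance between two 1-D samples.

    Uses W1 = integral of |F_u(t) - F_v(t)| dt. Both empirical CDFs are step
    functions that change only at sample values, so the integral is a finite
    sum over consecutive sorted breakpoints.
    """
    u, v = sorted(u), sorted(v)
    points = sorted(set(u) | set(v))
    total, iu, iv = 0.0, 0, 0
    for left, right in zip(points, points[1:]):
        while iu < len(u) and u[iu] <= left:  # F_u is constant on [left, right)
            iu += 1
        while iv < len(v) and v[iv] <= left:
            iv += 1
        total += abs(iu / len(u) - iv / len(v)) * (right - left)
    return total


def signed_distribution_distance(bid_scores, list_scores):
    """Hypothetical shape-correction term for d.

    1. Benchmark: list scores lying between the extremes of the bid scores.
    2. Normalize both samples by the bids' min/max so scale does not matter.
    3. Sign the EM distance by the mean difference (+ strengthens d).
    """
    lo, hi = min(bid_scores), max(bid_scores)
    benchmark = [s for s in list_scores if lo <= s <= hi]
    if hi == lo or not benchmark:
        return 0.0  # no spread or no benchmark: leave d unchanged
    task_n = [(s - lo) / (hi - lo) for s in bid_scores]
    bench_n = [(s - lo) / (hi - lo) for s in benchmark]
    w = emd_1d(task_n, bench_n)
    diff = statistics.mean(task_n) - statistics.mean(bench_n)
    return w if diff >= 0 else -w
```

If SciPy is available, `scipy.stats.wasserstein_distance` computes the same one-dimensional distance and could replace `emd_1d`.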

Finally, d and the distribution-based correction from Formula (5) jointly determine the distribution decay term, as shown in Formula (6). An improved sigmoid function is used here because its bounded output prevents outliers from causing excessive changes in this term. Two hyperparameters scale and correct the overall intensity.
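Formula (6) is not reproduced in this text either. The sketch below is one hypothetical way to realize the described behavior: the signed EM distance scales d multiplicatively, two hyperparameters (here `alpha` for scaling and `beta` for shifting, our names) adjust the result, and a numerically stable logistic function bounds it to [0, 1]. The standard logistic function stands in for the paper's improved sigmoid, whose exact form is not given here.

```python
import math


def stable_sigmoid(x):
    """Logistic function that does not overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)  # x < 0, so z <= 1 and cannot overflow
    return z / (1.0 + z)


def distribution_decay(d, signed_w, alpha=1.0, beta=0.0):
    """Hypothetical form of Formula (6).

    A positive signed EM distance strengthens d and a negative one
    suppresses it; alpha scales and beta shifts the result before the
    sigmoid bounds it, so an outlying d cannot cause an unbounded change.
    """
    corrected = d * (1.0 + signed_w)
    return stable_sigmoid(alpha * corrected + beta)
```

The stable branch matters because a naive 1 / (1 + exp(-x)) raises `OverflowError` for very negative inputs, which is precisely the outlier case the sigmoid is meant to absorb.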

Finally, the gain part of the local decay parameter is calculated symmetrically. The gain does not increase the predicted value; instead, it weakens the decay obtained above. For example, even under the same competition intensity, a predicted value in the top 10% of a task clearly has a different probability of winning the bid than one in the bottom 10%. The gain part should therefore be used to adjust the decay, which is suppressed as the gain increases. Because decay is considered more important than gain in competition, the local distribution gain parameter obtained by the symmetric method is penalized. The ratio of the gain to the decay is then used to weaken the decay: a sigmoid function smooths this ratio, and cosine decay yields the final local decay parameter, as shown in Formula (7).

Here, a hyperparameter controls the maximum proportion by which the gain part can suppress the decay.

The cosine function ensures that even when this suppression reaches its maximum, the local decay parameter is not attenuated to 0, so decay remains dominant in competition.
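The sketch below illustrates one way to meet the two stated requirements of Formula (7): the gain weakens the decay through a smoothed gain-to-decay ratio, and a cosine term keeps the decay from reaching 0. The smoothing 2 * sigmoid(r) - 1, the quarter-period cosine, and the names `final_local_decay` and `max_suppression` are all our assumptions; with `max_suppression` below 1, the decay shrinks to at most (1 - max_suppression) of its original value.

```python
import math


def final_local_decay(decay, gain, max_suppression):
    """Hypothetical form of Formula (7).

    decay:           local decay strength before the gain correction (>= 0)
    gain:            (already penalized) local gain strength (>= 0)
    max_suppression: hyperparameter in [0, 1) bounding how much the gain
                     may weaken the decay
    """
    if not 0.0 <= max_suppression < 1.0:
        raise ValueError("max_suppression must lie in [0, 1)")
    if gain < 0.0:
        raise ValueError("gain must be non-negative")
    if decay <= 0.0:
        return 0.0
    ratio = gain / decay
    smoothed = 2.0 / (1.0 + math.exp(-ratio)) - 1.0  # in [0, 1); 0 when gain == 0
    # Quarter-period cosine: multiplier falls from 1 to (1 - max_suppression).
    multiplier = 1.0 - max_suppression * (1.0 - math.cos(math.pi / 2 * smoothed))
    return decay * multiplier
```

With `max_suppression = 0.5`, a decay of 0.8 stays at 0.8 when there is no gain and approaches, but never drops below, 0.4 as the gain grows.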

4. Algorithm Evaluation

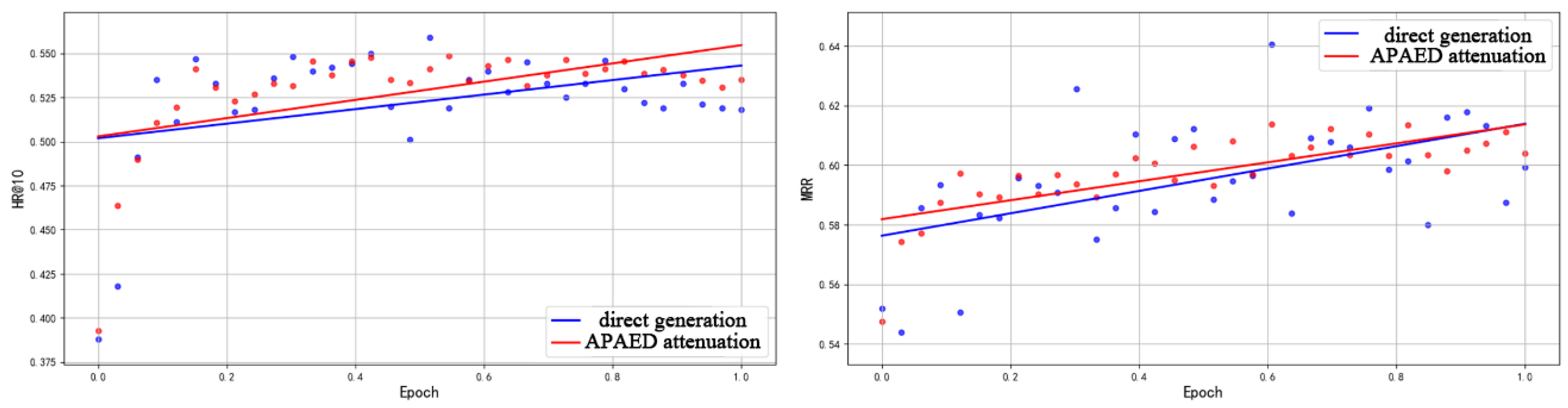

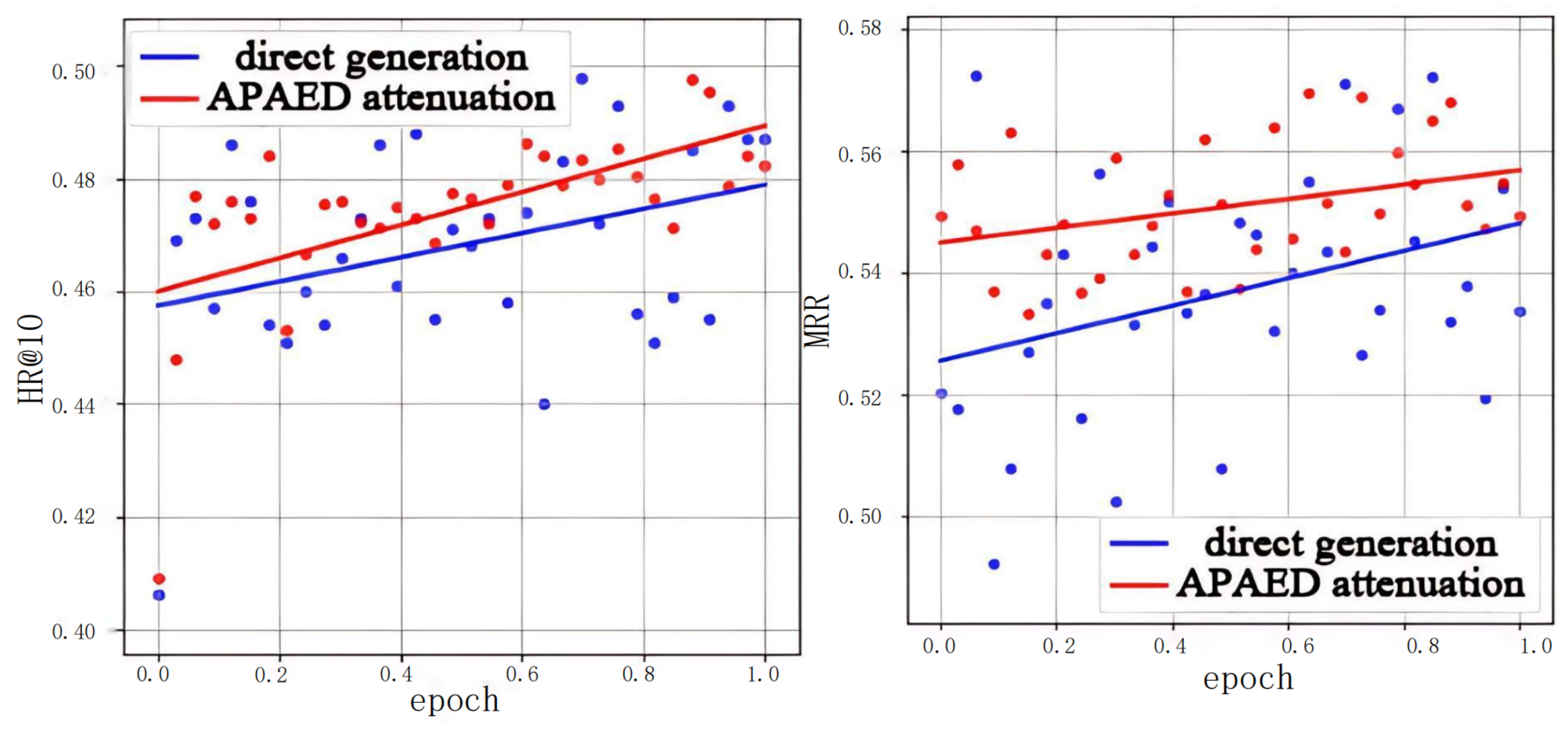

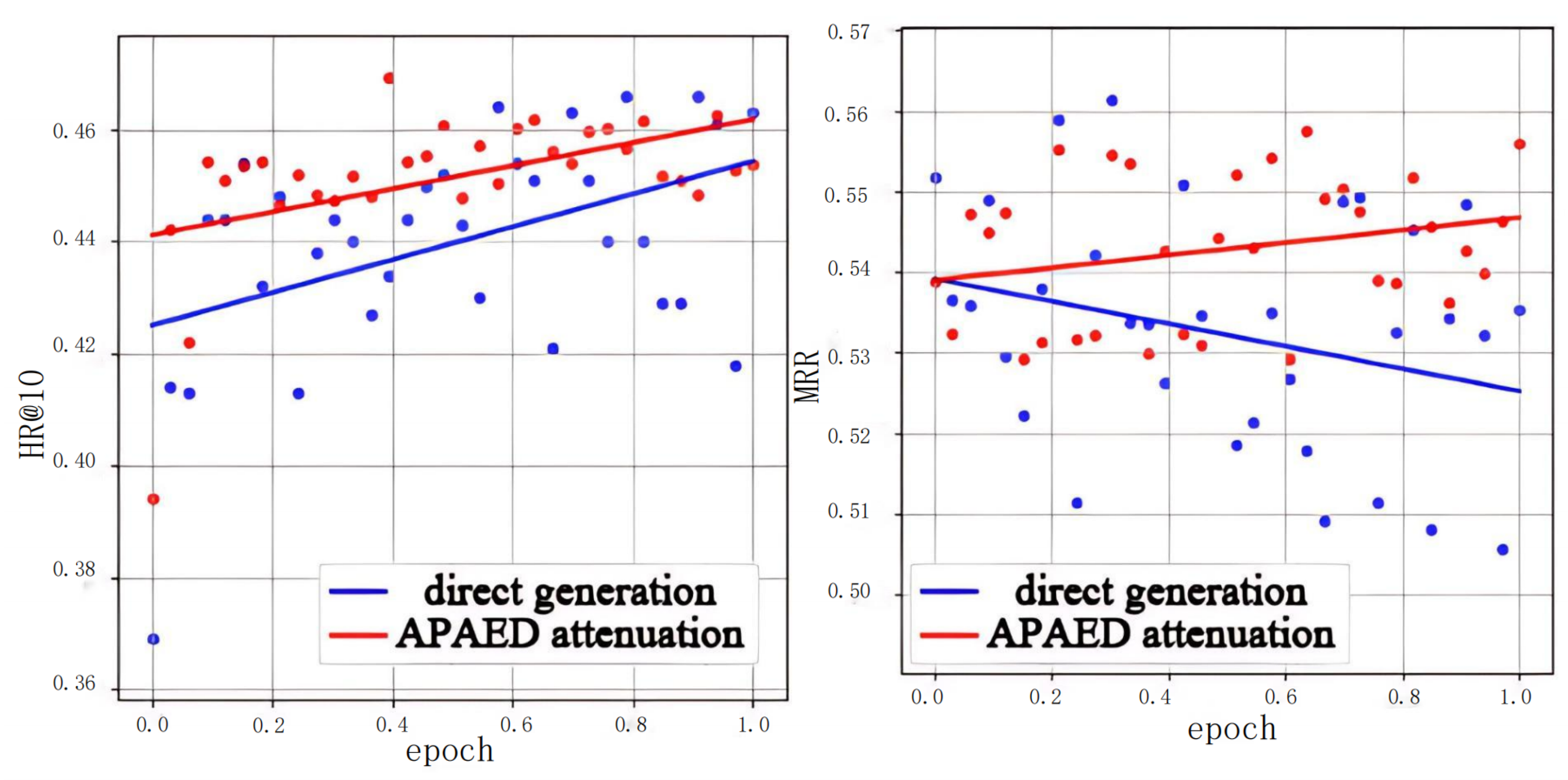

The experiments compare the recommendation list generated directly by the offline model with the list generated using the APAED algorithm on the HR@10 and MRR indicators, and show that APAED suppresses abnormal fluctuations of these indicators in the crowdsourcing scenario.

The experimental environment comprised Windows 10 Professional 64-bit, an Intel Core i7-8700 CPU @ 3.20 GHz (six cores), and an NVIDIA GeForce RTX 2060 GPU (6 GB). The algorithm was implemented with TensorFlow 1.13.1 and Python 3.6.8.

Table 1 shows the statistics of the filtered dataset; the original data contained 963,352 records before filtering. Without a personalized recommendation algorithm, half of the workers on the original website had never won a bid.

To quantify fluctuation, a straight line is fitted to the HR and MRR curves over training epochs, using Root Mean Squared Error (RMSE) as the loss function and training for 8000 steps with the SGD optimizer. The training residual of this fit reflects the intensity of the fluctuation.
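The fluctuation measure can be sketched as follows, assuming one metric value per epoch. The helper `residual_rmse` is hypothetical; it fits a line by plain full-batch gradient descent for 8000 steps, mirroring the described setup, rather than calling TensorFlow's SGD optimizer. Because RMSE is a monotone function of MSE, both losses yield the same line, so the simpler MSE gradient is used, and epochs are rescaled to [0, 1] to keep the fit well conditioned.

```python
import math


def residual_rmse(metric_by_epoch, steps=8000, lr=0.5):
    """Fit y = a * x + b to a metric curve and return the residual RMSE.

    A larger residual means the metric fluctuates more around its trend.
    """
    n = len(metric_by_epoch)
    if n < 2:
        raise ValueError("need at least two epochs")
    xs = [i / (n - 1) for i in range(n)]  # epochs rescaled to [0, 1]
    a = b = 0.0
    for _ in range(steps):
        errs = [a * x + b - y for x, y in zip(xs, metric_by_epoch)]
        grad_a = 2.0 * sum(e * x for e, x in zip(errs, xs)) / n  # d(MSE)/da
        grad_b = 2.0 * sum(errs) / n                             # d(MSE)/db
        a -= lr * grad_a
        b -= lr * grad_b
    residuals = [a * x + b - y for x, y in zip(xs, metric_by_epoch)]
    return math.sqrt(sum(r * r for r in residuals) / n)
```

A closed-form least-squares fit would give the same line; gradient descent is used here only to stay close to the described procedure.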

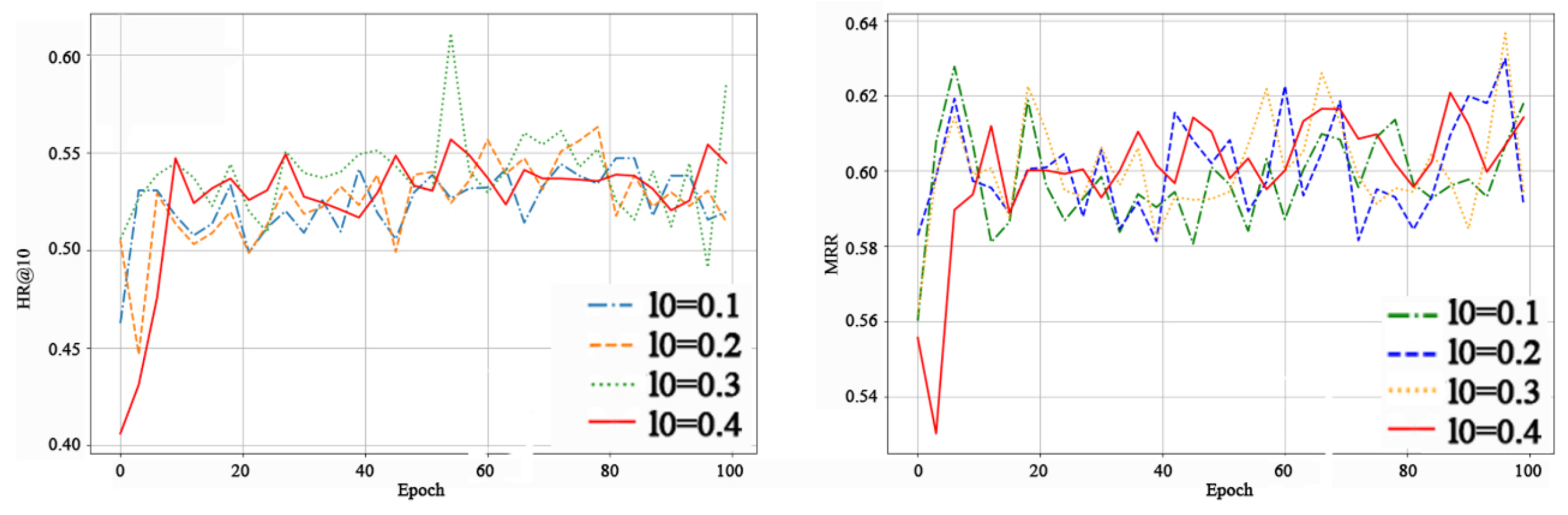

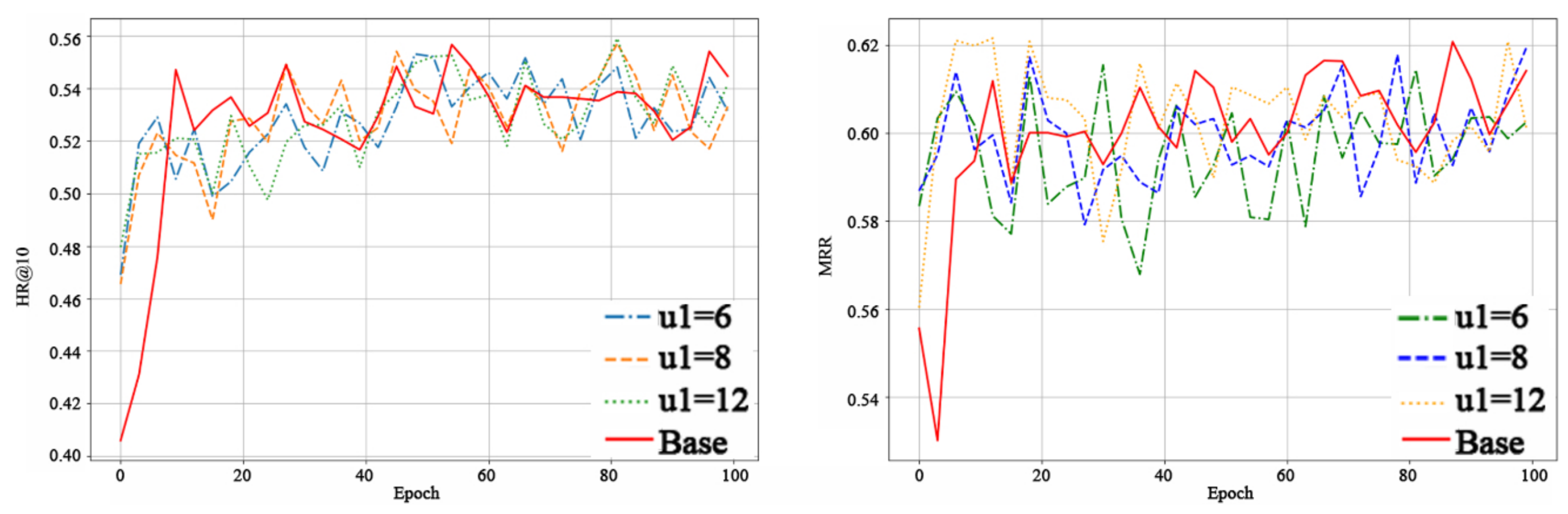

To verify that APAED improves the indicators of the recommendation list and to test its generalization across offline models, different offline models are needed to produce different distributions of offline predicted values. However, most existing neural network algorithms cannot be applied directly to crowdsourcing. The offline model therefore keeps a fixed main network and swaps the attention sub-network to produce different distributions of offline predicted values. Four attention mechanisms are selected.

NAIS: In this experiment, tasks replace items and workers replace users. Taking the worker sequence as an example, the attention mechanism is shown in Formula (8). In this formula, the task embedding is the feature embedding vector of the i-th task that the target worker has historically interacted with, built from attributes such as task type, reward, and deadline, and r denotes the embedding vector of the target worker g. The element-wise product of the worker and task embedding vectors captures how well the task matches the worker’s preferences or capabilities. A weight matrix applies a linear transformation to these features, and the activation function filters out negative signals while retaining positive matching features. The output weight vector of the attention network projects the worker–task matching features into a scalar weight, and a bias term corrects scoring errors. L is the total number of tasks in the worker’s interaction history; it controls the calculation range of max-pooling to ensure that all possible task matches are covered. After normalization, the attention weight for the i-th task encountered by the worker is obtained; the greater this weight, the more useful this historical task is for the current recommendation.
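A minimal pure-Python sketch of the NAIS-style attention described above, assuming embeddings are given as plain lists. For each historical task it computes score_i = h . ReLU(W (e_i * r) + b), with * the element-wise product, and normalizes the scores with a softmax. The optional `smoothing` exponent on the softmax denominator follows the original NAIS model rather than anything stated in this text, and the function names are hypothetical.

```python
import math


def matvec(W, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def nais_attention(history, worker, W, b, h, smoothing=1.0):
    """NAIS-style attention weights over a worker's historical tasks.

    history:   list of task embeddings e_i (one per historical task)
    worker:    embedding r of the target worker
    W, b:      hidden-layer weight matrix and bias vector
    h:         output weight vector projecting hidden features to a scalar
    smoothing: exponent on the softmax denominator (1.0 = plain softmax)
    """
    scores = []
    for e in history:
        match = [x * y for x, y in zip(e, worker)]  # element-wise product
        hidden = [max(0.0, z + bias) for z, bias in zip(matvec(W, match), b)]  # ReLU
        scores.append(sum(w * z for w, z in zip(h, hidden)))
    # Log-sum-exp keeps exp() from overflowing for large scores.
    m = max(scores)
    log_norm = m + math.log(sum(math.exp(s - m) for s in scores))
    return [math.exp(s - smoothing * log_norm) for s in scores]
```

With `smoothing = 1.0` the weights sum to 1; values below 1 shrink the denominator and give larger weights overall.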

Attentive pooling networks [29] (hereinafter AP): Because this model was designed for question answering (QA), in the experiment it replaces the attention mechanism of NAIS (hereinafter NAIS+AP). Taking the worker sequence as an example, the attention mechanism is shown in Formula (9). In this formula, the worker-side original feature matrix has dimensions (number of workers) × (worker feature dimensions) and includes features such as workers’ historical completion rates and specialized task types. The worker feature projection matrix, with dimensions (target embedding dimension) × (worker feature dimensions), compresses the high-dimensional original worker features into a low-dimensional embedding space. The task-side original feature matrix has dimensions (number of tasks) × (task feature dimensions) and includes attributes such as task rewards, difficulty levels, and deadlines. The task feature projection matrix works like the worker projection matrix, compressing high-dimensional task features into a space with the same dimension as the worker embedding. U is the cross-feature attention weight matrix for workers and tasks, with dimensions (target embedding dimension) × (target embedding dimension); its core role is to capture high-matching interactions between worker and task features. The projected worker embedding matrix has one row per worker embedding vector, and the transpose of the projected task embedding matrix has one column per task embedding vector. M is the worker–task interaction score matrix with dimensions (number of workers) × (number of tasks); its element in row j and column m is the matching score between the j-th worker and the m-th task. After tanh activation, its values lie in (−1, 1), and a larger value indicates a better match. The maximum matching weight of the j-th worker for the target task g is obtained by taking the maximum of the scores between this worker and all tasks, and is used to select the contribution of the task features most suitable for the worker.
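The sketch below follows the AP description above with small matrices stored as lists of rows: both sides are projected into a shared embedding space, M = tanh(worker_emb U task_emb^T) gives worker–task matching scores, and row-wise max pooling keeps each worker's best match. The final softmax over the pooled scores follows the original attentive pooling design and is an assumption here, as are all helper names.

```python
import math


def matmul(A, B):
    """Multiply two matrices stored as lists of rows."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def softmax(v):
    m = max(v)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in v]
    total = sum(exps)
    return [x / total for x in exps]


def attentive_pooling(worker_feats, P_w, task_feats, P_t, U):
    """Attentive-pooling-style weights over workers (sketch).

    worker_feats: workers x worker_dim   P_w: embed_dim x worker_dim
    task_feats:   tasks x task_dim       P_t: embed_dim x task_dim
    U:            embed_dim x embed_dim cross-feature attention matrix
    Returns the workers x tasks matrix M (entries in (-1, 1)) and the
    softmax over each worker's best match.
    """
    worker_emb = matmul(worker_feats, transpose(P_w))  # workers x embed_dim
    task_emb = matmul(task_feats, transpose(P_t))      # tasks x embed_dim
    raw = matmul(matmul(worker_emb, U), transpose(task_emb))
    M = [[math.tanh(x) for x in row] for row in raw]
    pooled = [max(row) for row in M]                   # row-wise max pooling
    return M, softmax(pooled)
```

Max pooling keeps only each worker's strongest task match, so a single highly compatible task dominates that worker's weight.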

The collaborative attention mechanism used in ref. [30]: because its attention mechanism relies on matrix dot products, it is referred to as DCA (Dot Co-Attention). It replaces the attention mechanism of NAIS (hereinafter NAIS+DCA). Taking the worker sequence as an example, the attention mechanism is shown in Formula (10). In this formula, the target worker’s low-dimensional feature embedding vector has dimensions (target embedding dimension) × 1, and the target task’s low-dimensional feature embedding vector has the same dimensions. The worker-side self-attention weight matrix has dimensions (target embedding dimension) × (target embedding dimension), and the worker–task cross-side attention weight matrix has the same dimensions. After tanh activation, the two projected vectors take values in (−1, 1), which avoids gradient explosion and highlights valid features. Their element-wise product is the worker–task bidirectional feature fusion vector. The fusion output weight matrix, with dimensions K × (target embedding dimension), where K is the number of candidate recommended tasks, projects the fusion vector into K candidate task scores. The output-layer bias term, with dimensions K × 1, corrects the baseline bias of the projected scores and avoids overall score deviation caused by uneven feature distributions. After Softmax normalization, the target worker’s attention weights over the candidate tasks lie in (0, 1) and sum to 1. A higher weight indicates a stronger willingness of the worker to accept the task and leads to a higher ranking in the recommendation list.
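Under the dimensions listed above, one DCA-style forward pass can be sketched as follows. The function and argument names are hypothetical, the embeddings are assumed to be given already, and small matrices are stored as lists of rows.

```python
import math


def matvec(W, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def dot_co_attention(worker, task, W_self, W_cross, W_out, b_out):
    """DCA-style attention weights over K candidate tasks (sketch).

    worker, task:    embed_dim vectors
    W_self, W_cross: embed_dim x embed_dim matrices
    W_out:           K x embed_dim output weights; b_out: K biases
    fusion  = tanh(W_self worker) * tanh(W_cross task)   (element-wise)
    weights = softmax(W_out fusion + b_out)
    """
    a = [math.tanh(x) for x in matvec(W_self, worker)]  # values in (-1, 1)
    c = [math.tanh(x) for x in matvec(W_cross, task)]
    fusion = [x * y for x, y in zip(a, c)]              # bidirectional fusion
    logits = [z + bias for z, bias in zip(matvec(W_out, fusion), b_out)]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [x / total for x in exps]
```

Because both tanh outputs are bounded, every fusion entry lies in (−1, 1), so the logits stay on a moderate scale before the softmax.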

The collaborative attention mechanism used in this article also replaces the attention mechanism of NAIS; this variant is hereinafter referred to as OPCA-CF.

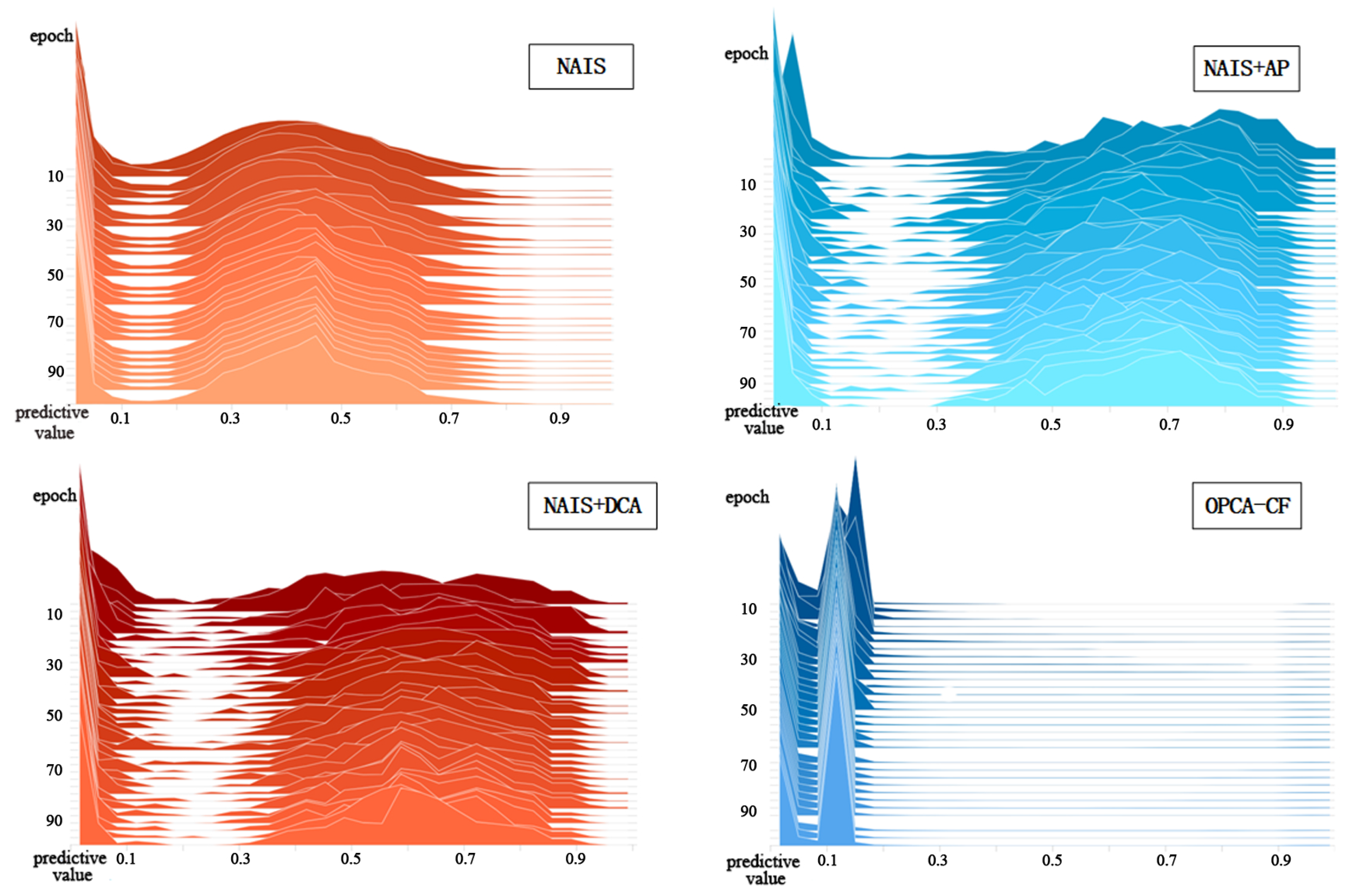

Figure 5 shows the distributions of predicted values generated by the four offline models. Replacing the attention sub-network produces the required variety of predicted-value distributions.