An Extended Survey Concerning the Vector Commitments

Abstract

1. Introduction

- Hiding: the commitment should reveal nothing about the committed message before it is opened.

- Binding: there exists an opening procedure through which the receiver R can verify that the opened message is the same as the one the sender S committed to, so S cannot later open the commitment to a different message.

- Committing: using a specific algorithm, the sender S commits to the message m, producing a commitment C.

- Decommitting: when revealing the message, S should convince R that C is indeed a commitment to m.
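The commit and decommit phases can be illustrated with a minimal hash-based sketch. This is not a construction taken from the surveyed works: the function names `commit` and `verify`, the use of SHA-256, and the 32-byte randomness length are assumptions made for illustration. Hiding here relies on treating the hash as a random oracle, and binding on its collision resistance.

```python
import hashlib
import hmac
import secrets


def commit(message: bytes) -> tuple[bytes, bytes]:
    """Sender S: return (commitment C, opening randomness r)."""
    r = secrets.token_bytes(32)  # fresh randomness hides the message
    c = hashlib.sha256(r + message).digest()
    return c, r


def verify(c: bytes, message: bytes, r: bytes) -> bool:
    """Receiver R: recompute the hash and compare in constant time."""
    expected = hashlib.sha256(r + message).digest()
    return hmac.compare_digest(c, expected)


c, r = commit(b"bid: 42")
print(verify(c, b"bid: 42", r))  # opening with the committed message
print(verify(c, b"bid: 43", r))  # attempt to open to a different message
```

The fixed-length randomness is prepended so that the pair (r, m) is parsed unambiguously from the hashed string.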

Scientific Objectives

- To survey the related research literature in order to determine its weaknesses and propose possible solutions.

- To identify the most relevant studies in the field of vector commitments.

- To prioritize studies relevant to vector commitments according to various criteria: citations, scientific relevance, objectives achieved, reproducibility of experiments, generality of solutions, etc.

- To classify, by various criteria, the frameworks dedicated to the open problem, in order to determine the relevant scientific research trends.

- To identify the relevant research problems.

- To define the scientific relevance of the corresponding research content.

2. Research Methodology

2.1. Research Questions

- What is the related significant literature that approaches conceptual problems and reports adequate solutions?

- What are the relevant scientific research trends?

- What are the determined research questions and weak points?

- What is the conceptual, scientific, and real-world importance of the reviewed research scope?

2.2. The Research Process

2.3. Inclusion and Exclusion Criteria

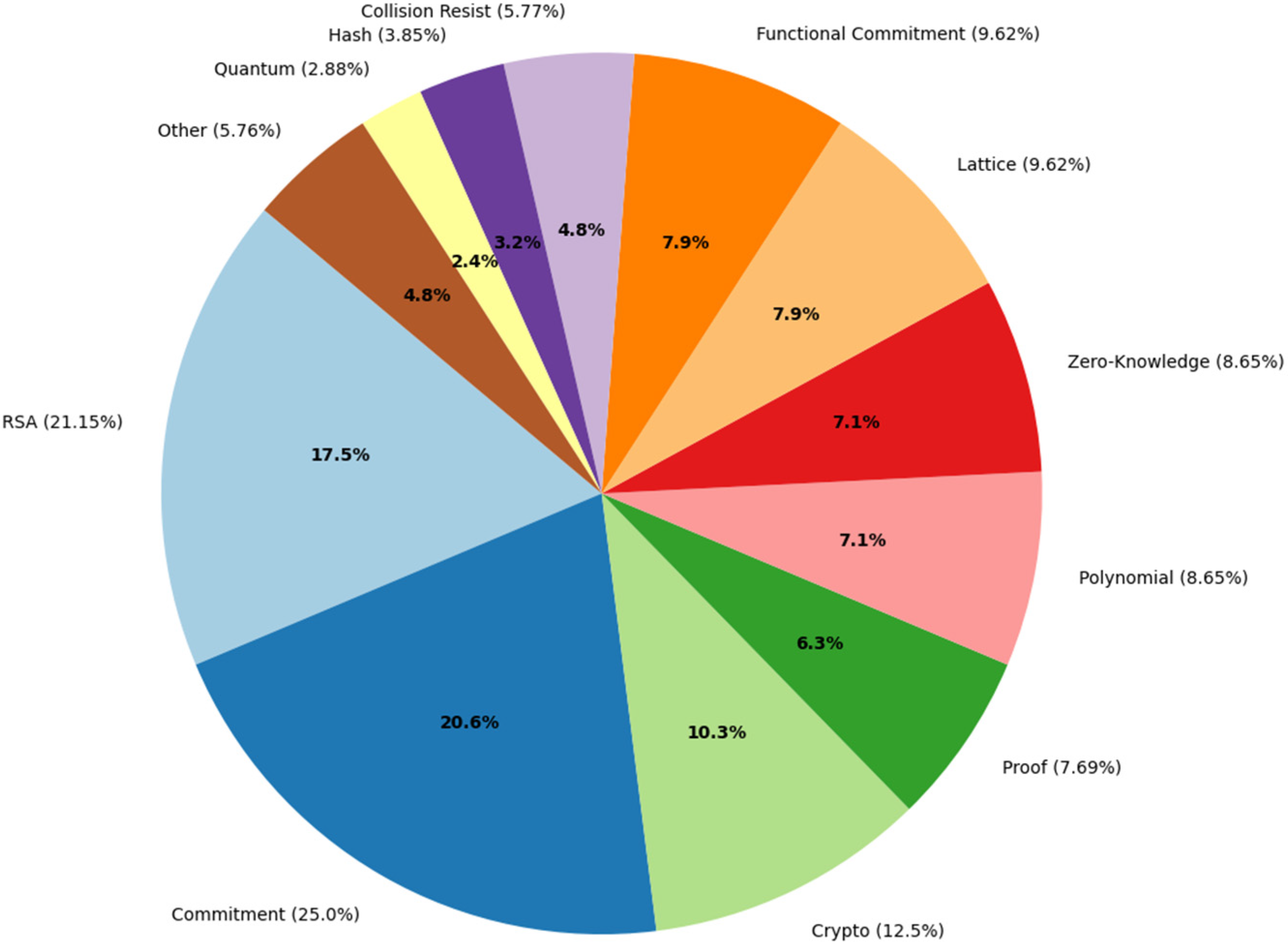

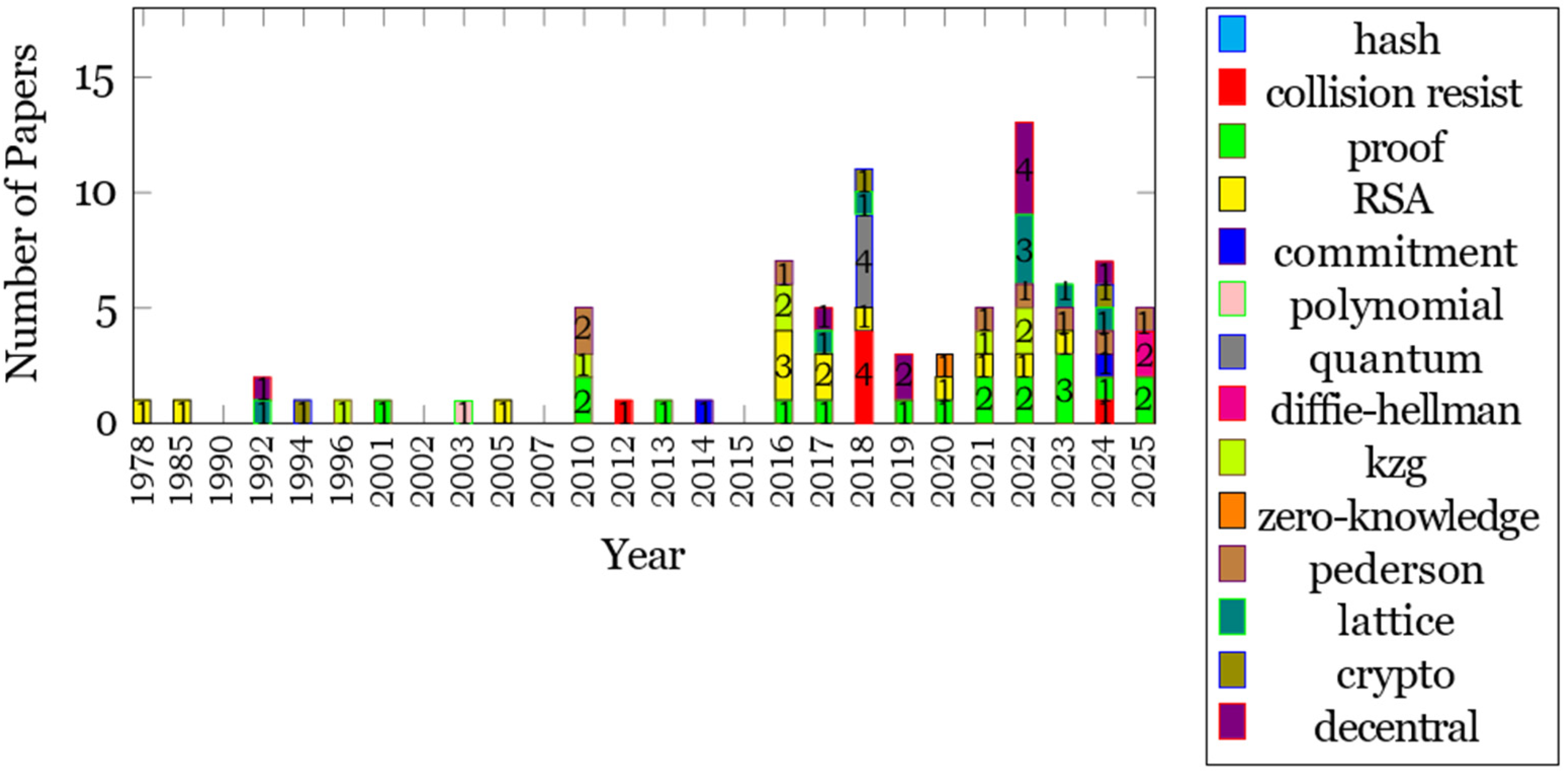

2.4. Quantitative Comparison

3. Vector Commitments

3.1. Classical Vector Commitments

3.2. RSA-Based VC

- Use a strong prime number generator to ensure that the prime numbers are unpredictable and cannot be easily guessed by an attacker.

- Avoid using weak prime numbers, such as small primes or primes too close to each other.

- Use a minimum length of 2048 bits for the RSA key.

- Take necessary actions to protect against fault-based attacks, such as using tamper-resistant hardware.

- Manage and secure the RSA keys properly using techniques like regular key rotation and different keys for different applications.
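The advice to avoid primes that are too close to each other can be motivated with Fermat's factorization method, which searches for a representation n = a² − b² and succeeds almost immediately when the two factors are near √n. The sketch below is illustrative only: the function name `fermat_factor`, the step limit, and the toy primes are assumptions, and real RSA moduli are far larger.

```python
from math import isqrt


def fermat_factor(n: int, max_steps: int = 1_000_000):
    """Try to write n = a^2 - b^2 = (a - b)(a + b); fast when the factors are close."""
    a = isqrt(n)
    if a * a < n:
        a += 1  # start at ceil(sqrt(n))
    for _ in range(max_steps):
        b2 = a * a - n
        b = isqrt(b2)
        if b * b == b2:
            return a - b, a + b
        a += 1
    return None  # gave up within the step budget


# Toy modulus built from two primes that are deliberately close together.
p, q = 1_000_003, 1_000_033
print(fermat_factor(p * q))
```

With factors this close, the very first candidate a = (p + q)/2 already yields a perfect square, which is why key generation should reject such pairs.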

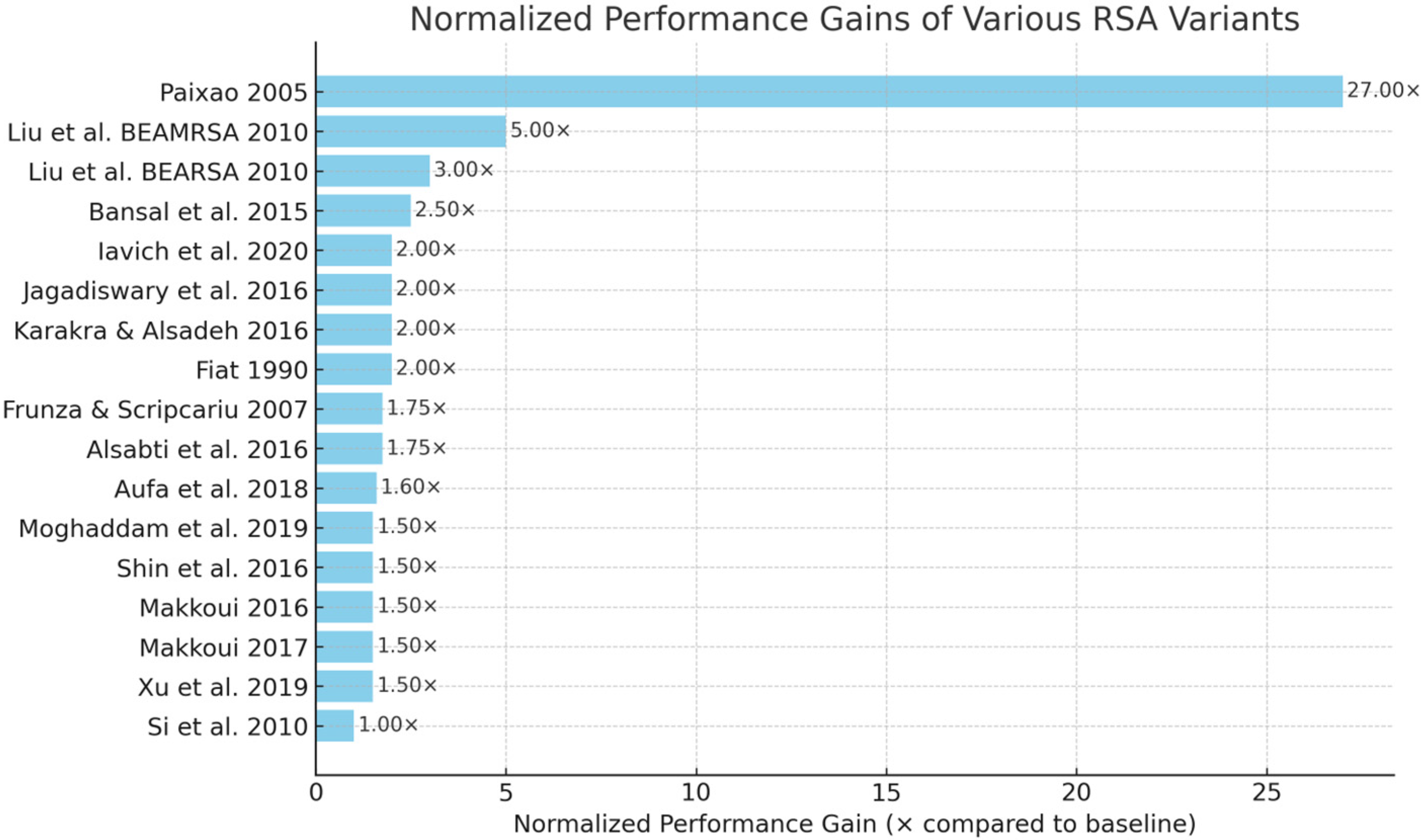

- Performance of RSA variants: The variants show large improvements over classical RSA, obtained through mathematical optimizations (multi-prime, re-balanced RSA, Fast Cloud RSA), batch processing (Batch RSA, BEARSA/BEAMRSA), and hybrid use of symmetric and asymmetric methods (AES + RSA, Blowfish + RSA).

- Security tradeoffs: Most variants preserve the base RSA security model, and some add homomorphic encryption, improved brute-force resistance, or image scrambling for better domain-specific security.

- Application of RSA to specific fields: Many recent RSA variants have been designed for particular applications, for example, cloud data storage (HE-RSA), medical image encryption (MDRSA), and fast digital signatures (Aufa et al.), which shows the wide range of use of these adaptations.

- Trends: Earlier work concentrated on batch processing and multi-prime optimization. Current attention is directed toward hybrid cryptosystems and ASCE (for example, images, biomedical collections, cloud storage).

| Year | Authors | Scheme | Performance Gain Factor | Security Notes | Application Domain |
|---|---|---|---|---|---|
| 1990 | Fiat [27] | Batch RSA | N/A | Standard RSA security | Electronic communication |
| 2005 | Paixao et al. [5] | Multi-Prime + Re-balanced RSA | 27× | Same as RSA (strong integer factorization assumption) | General purpose |
| 2007 | Frunză and Scripcariu [26] | AFF-RSA | 1.1–1.2× | Larger key space | Wireless |
| 2010 | Liu et al. [28] | BEARSA | 1.3–1.5× | Standard RSA | Electronic communication |
| 2010 | Liu et al. [28] | BEAMRSA | 1.5–1.6× | Standard RSA | Electronic communication |
| 2010 | Si et al. [24] | Efficient RSA signature | N/A | Standard RSA security | Digital signatures |
| 2015 | Bansal et al. [12] | RSA + Blowfish | 1.2–1.3× | Symmetric + asymmetric hybrid | Cloud security |
| 2016 | Karakra and Alsadeh [7] | A-RSA (RSA + Rabin + Huffman Coding) | 1.3–1.4× | More secure; shorter ciphertext; better brute-force resistance | General purpose |
| 2016 | Alsabti et al. [16] | RSA for MATLAB images | 1.1–1.15× | Secure image transfer | Image encryption |
| 2016 | Jagadiswary et al. [17] | MDRSA | 1.1–1.15× | Stronger biomedical data security | Medical |
| 2017 | Shin et al. [18] | Non-Mersenne Prime RSA | 1.1× | Image scrambling | Medical |
| 2017 | Makkaoui [14] | Cloud RSA | 1.1× | Homomorphic property retained | Cloud |
| 2018 | Makkaoui [14] | Fast Cloud RSA (N = p^r q^s) | 1.2× | Same as RSA | Cloud |
| 2018 | Xu et al., Jiao et al., Sabir et al., Soni et al. [19,20,21,22] | RSA + Arnold’s Map | 1.05–1.1× | Image scrambling | Image encryption |
| 2018 | Aufa et al. [24] | RSA + Digital Signature | 1.6× | Maintains RSA + DS security | Digital signatures |
| 2018 | Moghaddam et al. [11] | HE-RSA | 1.5× | Maintains homomorphic property | Cloud storage |
| 2020 | Iavich et al. [10] | AES + RSA, Twofish + RSA | 1.2–1.3× | Inherits AES/Twofish + RSA security | General purpose |
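The speed-ups of the multi-prime variants in the table come from performing the private-key operation modulo each prime factor and recombining the results with the Chinese Remainder Theorem (CRT). The following is a minimal sketch of that idea with a three-prime toy modulus; the function name `rsa_decrypt_crt` and all parameter values are illustrative assumptions, not taken from any of the cited schemes.

```python
def rsa_decrypt_crt(c: int, d: int, primes: list[int]) -> int:
    """Decrypt c modulo each prime factor, then recombine with the CRT."""
    n = 1
    for p in primes:
        n *= p
    m = 0
    for p in primes:
        m_p = pow(c % p, d % (p - 1), p)  # small exponentiation modulo p
        n_p = n // p
        m += m_p * n_p * pow(n_p, -1, p)  # CRT recombination term
    return m % n


# Toy three-prime modulus (far too small for real use).
primes = [61, 53, 47]
n = 61 * 53 * 47
phi = 60 * 52 * 46
e = 17
d = pow(e, -1, phi)  # modular inverse, Python 3.8+
c = pow(1234, e, n)
print(rsa_decrypt_crt(c, d, primes) == pow(c, d, n))
```

Each exponentiation works with a modulus and an exponent that are roughly a third of the full size, which is where the gain over a single exponentiation modulo n comes from.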

3.3. Diffie–Hellman-Based VC

- Standard DH Groups: 2048-bit primes p = kq + 1, where q is a prime dividing p − 1, with |p| = 2048 and |q| = 256 bits (112-bit NIST security); in the safe-prime case p = 2q + 1, q has 2047 bits.

- Elliptic Curve Groups: secp256k1 ($y^2 = x^3 + 7$ over $\mathbb{F}_p$, where $p = 2^{256} - 2^{32} - 977$) or Curve25519.

- Pairing Groups: BLS12-381 (G1 elements: 381 bits; G2 elements: 762 bits) with Type-3 pairings.

1. Pedersen Commitments [31]:
   - Binding: Reduces to the Discrete Log Problem (DLP) in G.
   - Hiding: Unconditional (perfect hiding).
   - Proof size: 2|G| (64 bytes for secp256k1).

2. ElGamal-style [32]:
   - IND-CPA security ⇐ DDH in G.
   - Concrete advantage: Adv_DDH ≤ ε + q/|G| for q queries.
   - Verification: 2 exp. operations (≈15 ms on modern CPUs).

3. Polynomial Commitments:
   - q-SDH assumption for evaluation binding.
   - Requires |p| ≥ 256 bits for 128-bit security.
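To make the Pedersen entry above concrete, the following sketch commits to a message as C = g^m · h^r in a prime-order subgroup and checks the additive homomorphism. The group (p = 2039, q = 1019), the generators 4 and 9, and the function names are toy assumptions chosen for readability; binding additionally assumes that nobody knows the discrete logarithm of h with respect to g, which a real deployment would have to ensure when deriving h.

```python
import secrets

# Toy Schnorr group: P = 2Q + 1 with P and Q prime. Far too small for real use.
P, Q = 2039, 1019
G, H = 4, 9  # squares modulo P, so both generate the order-Q subgroup
# Assumption: log_G(H) is unknown to the committer.


def commit(m: int, r: int) -> int:
    """Pedersen commitment C = g^m * h^r mod p."""
    return (pow(G, m % Q, P) * pow(H, r % Q, P)) % P


def verify(c: int, m: int, r: int) -> bool:
    """Open by revealing (m, r) and recomputing the commitment."""
    return c == commit(m, r)


r1, r2 = secrets.randbelow(Q), secrets.randbelow(Q)
c1, c2 = commit(5, r1), commit(7, r2)
print(verify(c1, 5, r1))
# Additive homomorphism: the product commits to the sum of the messages.
print(verify((c1 * c2) % P, 5 + 7, r1 + r2))
```

The opening consists of the two scalars (m, r), which matches the two-element opening size quoted in the list.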

3.4. Pedersen Commitments

| Scheme | Security (Bits) | Commit Size | Commit Time | Verify Time | ZK Proof Size |
|---|---|---|---|---|---|
| Metere and Dong [36] | 128 (DLP) | 256 bytes | 220 ms | 180 ms | N/A |
| Chen et al. [38] | 128 (DLP) | 192 bytes | 15 ms | 22 ms | 1.2 KB |
| Wang et al. [39] | 128 (DLP) | 64 bytes | 8 ms | 22 ms | 3.8 KB |
| Feng et al. [40] | 128 (DLP) | 48 bytes | 0.8 ms | 1.5 ms | 2.1 KB |
| Yang et al. [41] | 128 (DLP) | 80 bytes | 5 ms | 18 ms | 500 bytes |

3.5. Post-Quantum Vector Commitments

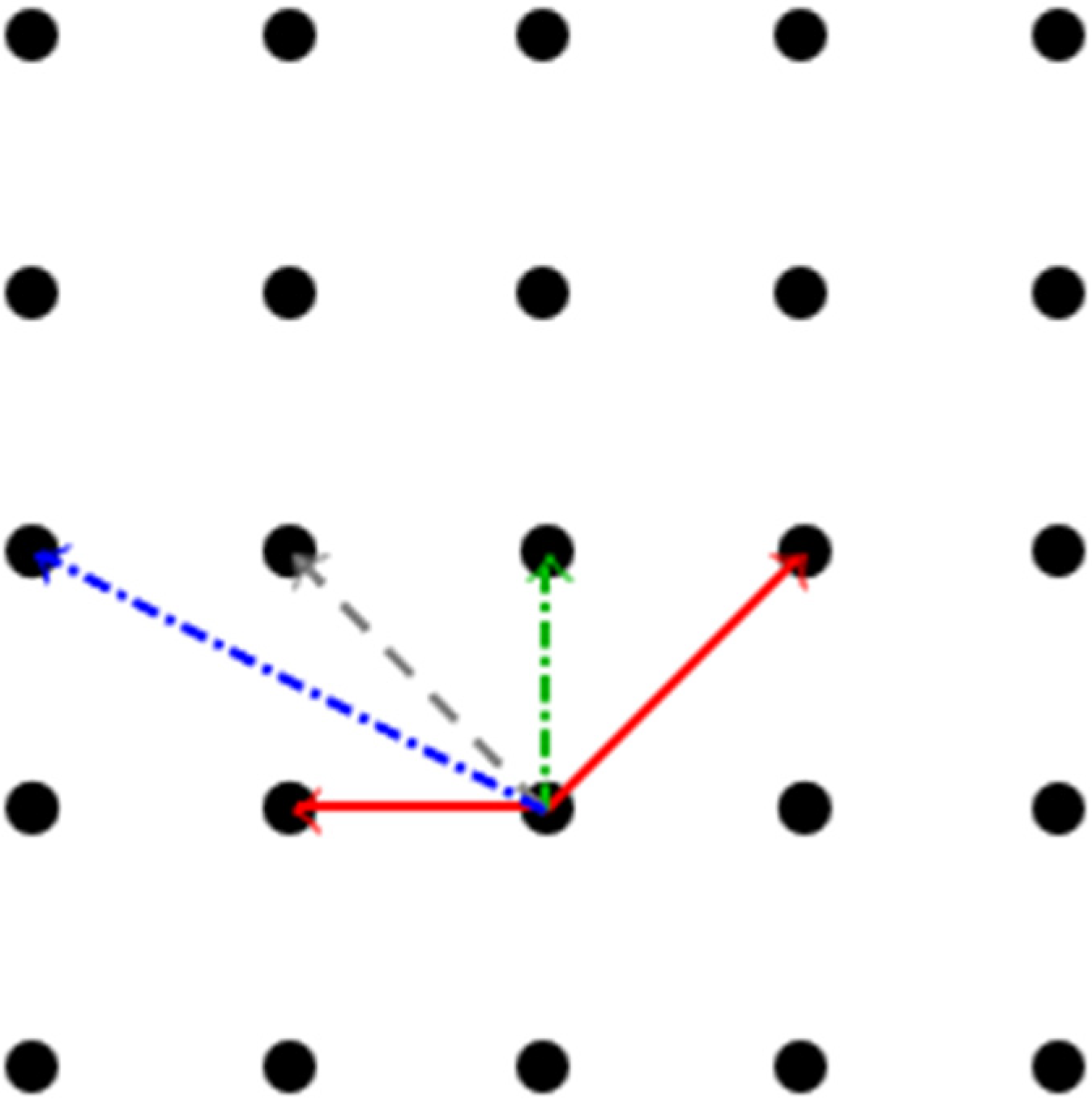

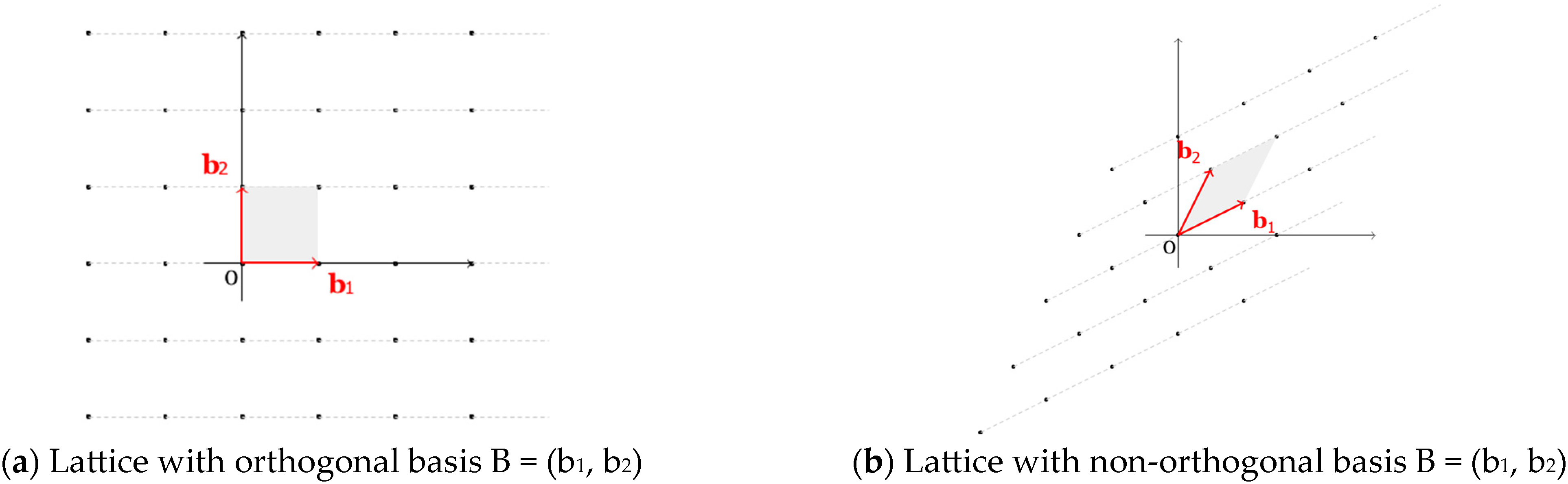

1. The Shortest Vector Problem (SVP): Given a lattice basis B, find a shortest non-zero vector in the lattice L(B), i.e., find a non-zero vector v ∈ L(B) such that $\|v\| = \lambda_1(L(B))$, where $\lambda_1$ denotes the length of a shortest non-zero lattice vector.

2. The Shortest Independent Vectors Problem (SIVP): Given a lattice basis B of an n-dimensional lattice L(B), find n linearly independent vectors $v_1, \dots, v_n \in L(B)$ such that $\max_i \|v_i\| \le \lambda_n(L(B))$, where $\lambda_n$ denotes the n-th successive minimum of the lattice.

3. The Closest Vector Problem (CVP): Given a lattice basis B and a target vector t that is not in the lattice L(B), find a vector in L(B) that is closest to t, i.e., find a vector v ∈ L(B) such that for all w ∈ L(B), it satisfies $\|v - t\| \le \|w - t\|$.

4. The α-Bounded Distance Decoding Problem (BDDα): Given a lattice basis B of an n-dimensional lattice L and a target vector $t \in \mathbb{R}^n$ satisfying $\mathrm{dist}(t, L) < \alpha \lambda_1(L)$, find the lattice vector closest to t.
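As a small illustration of the Shortest Vector Problem, the sketch below enumerates integer combinations of a deliberately skewed basis of the integer lattice Z² and returns the shortest non-zero vector it finds. The function name `shortest_vector`, the coefficient bound, and the example basis are assumptions made for this toy; exhaustive search of this kind is only feasible in very small dimensions, which is exactly why the problem is believed hard in high dimensions.

```python
from itertools import product


def shortest_vector(basis, bound=10):
    """Brute-force SVP: try all integer combinations with |coefficient| <= bound."""
    best, best_norm2 = None, None
    dim = len(basis[0])
    for coeffs in product(range(-bound, bound + 1), repeat=len(basis)):
        if not any(coeffs):
            continue  # skip the zero vector
        v = tuple(sum(c * b[i] for c, b in zip(coeffs, basis)) for i in range(dim))
        norm2 = sum(x * x for x in v)
        if best_norm2 is None or norm2 < best_norm2:
            best, best_norm2 = v, norm2
    return best, best_norm2


# A "bad" basis of Z^2: determinant 1, but both basis vectors are long.
bad_basis = [(5, 8), (3, 5)]
v, norm2 = shortest_vector(bad_basis)
print(v, norm2)
```

The basis vectors have squared norms 89 and 34, while the lattice they generate contains unit vectors; recovering such short vectors from a bad basis is the task that lattice reduction algorithms approximate.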

3.6. Gaps in Research

- Post-Quantum Secure Vector Commitments: Most VC schemes are vulnerable to quantum attacks. In [52], Boneh, Bünz, and Fisch ask whether an accumulator with constant-size witnesses can be constructed under a quantum-resistant assumption. In [30], Peikert et al. offer post-quantum constructions of vector and functional commitments based on the Short Integer Solution (SIS) lattice problem, but further techniques are needed to strengthen post-quantum VC schemes.

- Sublinear Update and Proof Times: Most VC schemes do not support efficient updates when vector elements change. Tas and Boneh offer such an approach in [53], but further ways to improve update efficiency should be investigated.

- Efficient Zero-Knowledge Vector Commitments: Few VC constructions address zero-knowledge proofs for committed values. Although Wang et al. investigate a (zero-knowledge) VC with sum binding in [54], this area needs further improvement.

- Multi-Vector or Hierarchical Commitments: The subject of committing to multiple related vectors or nested structures is underexplored. Miao et al. try an approach in [55], but more research directions should be explored.

- Applications to Decentralized Systems: With the advent of blockchains and cryptocurrencies, the practical integration of VCs into blockchain and rollup systems is still emerging. Future work could explore intersections with domain-specific data, such as underwater image verification, where commitments might enhance tamper-proofing for enhanced images [23].

4. Polynomial Commitments

4.1. Classical Polynomial Commitments

1. The vector elements $v_1, \dots, v_n$ can be the coefficients of the polynomial: $p(x) = \sum_{i=1}^{n} v_i x^{i-1}$.

2. The polynomial can interpolate the points $(i, v_i)$. Consequently, we can construct a polynomial that passes through each of those points. To do this, for each i, we first construct a polynomial $Q_i(x)$ which evaluates to 0 at each j for j ≠ i and evaluates to 1 at i: $Q_i(x) = \prod_{j \ne i} \frac{x - j}{i - j}$.
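The interpolation route can be sketched over a prime field as follows. The code builds each Lagrange basis polynomial (0 at every other index, 1 at its own index), scales it by the corresponding vector entry, and sums the results. The indexing of the points as (i, v_i) for i = 1, …, n, the field modulus 2³¹ − 1, and the helper names are assumptions for this illustration.

```python
P = 2**31 - 1  # a Mersenne prime, used here as a toy field modulus


def poly_mul(a, b):
    """Multiply two coefficient lists (lowest degree first) modulo P."""
    out = [0] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            out[i + j] = (out[i + j] + x * y) % P
    return out


def interpolate(values):
    """Return coefficients of p with p(i) = values[i - 1] for i = 1..n."""
    n = len(values)
    coeffs = [0] * n
    for i in range(1, n + 1):
        q_i, denom = [1], 1
        for j in range(1, n + 1):
            if j != i:
                q_i = poly_mul(q_i, [(-j) % P, 1])  # factor (x - j)
                denom = denom * (i - j) % P
        scale = values[i - 1] * pow(denom, -1, P) % P
        for k, c in enumerate(q_i):
            coeffs[k] = (coeffs[k] + scale * c) % P
    return coeffs


def evaluate(coeffs, x):
    """Horner evaluation modulo P."""
    acc = 0
    for c in reversed(coeffs):
        acc = (acc * x + c) % P
    return acc


vector = [17, 4, 99, 23]
poly = interpolate(vector)
print([evaluate(poly, i) for i in range(1, len(vector) + 1)])  # [17, 4, 99, 23]
```

Opening position i of the vector then corresponds to proving the evaluation p(i) = v_i, which is what a polynomial commitment scheme provides.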

4.2. Pedersen Polynomial Commitment

4.3. KZG Polynomial Commitments

| Scheme | Type | Proof Size | Verification Time | Setup | Security Model | Unique Contribution |
|---|---|---|---|---|---|---|
| Belohorec et al. (PoKoP) [60] | Multivariate KZG | 288 bytes | 12 ms | Trusted | Standard model (w/extractability) | First extractable multivariate KZG |
| Behemoth [61] | Transparent Poly. | 1.5 KB | 45 ms | Transparent | ROM + Generic Group | Resolves Nikolaenko’s open problem |
| Avery and Sheek (Storage Audit) [63] | Univariate KZG | 96 bytes | 8 ms | Trusted | Generic Group | 10× fewer audits vs. Merkle trees |
| Verkle trees [64,65] | KZG-based | 128 bytes/node | 6 ms/node | Trusted | Generic Group | 30× smaller proofs than Merkle |

4.4. Zero-Knowledge Proofs

4.5. Post-Quantum Polynomial Commitments

| Scheme | Type | Security Assumption | Proof Size | Prover Time | Verifier Time | Trusted Setup | Soundness |
|---|---|---|---|---|---|---|---|
| OWF-Based Commit [72] | One-Way Function Commit | OWF Existence | N/A | N/A | N/A | No | Yes |
| Variant of [72,73] | Enhanced OWF Commit | Stronger OWF Assumption | N/A | N/A | N/A | No | Yes |
| Orion (Original) [74] | ZK Polynomial Commit | Small Set Expansion | 2.5 KB | O(n) | O(1) | No | No (Unsound) |
| Orion (Fixed) [78] | ZK Polynomial Commit | Diamond Reduction [79] | 1.8 KB (reduced by 28% compared to the original) | O(n) | O(log n) | No | Yes |
| Polaris [82] | Merkle-Based Commit | CRHFs | 4.2 KB | O(n) | O(log n) | No | Yes |
| Lattice SNARK [83] | Lattice-Based SNARK | SIS/LWE | 1.2 KB | O(n log n) | O(√n) | Yes | Yes |

4.6. Gaps in the Research

- Post-Quantum Secure Polynomial Commitments: The existing efficient polynomial commitments (e.g., KZG) rely on pairings and discrete logarithm assumptions, which are insecure against quantum adversaries. Few post-quantum constructions balance succinctness, universality, and efficiency. The challenge lies in achieving short proofs, efficient verification, and universality under lattice-based assumptions like SIS or LWE. Although some research works [69,85] proposed feasible solutions and implementations, there is still room to research and propose other approaches for this purpose.

- Efficient Batch Openings (Multi-point, Multi-polynomial): While batching is supported in KZG-based schemes [52,69,86,87], it is less efficient or absent in the transparent or the post-quantum settings. One challenge is to design non-interactive aggregation techniques that have minimal overhead and are secure under standard assumptions.

- Dynamic or Updatable Commitments: Most existing schemes are static; thus, updating the coefficients of the polynomials typically requires full recommitment. One solution might be to develop schemes that allow local updates to committed polynomials with minimal computational or communication costs. Some works have already addressed this issue [85,86]; however, future work should focus on more efficient and less expensive constructions.

- Universally Composable and Simulation-Sound Security: Many PC constructions lack proofs of security under UC frameworks or simulation-based zero-knowledge. The works of Peikert et al. [85], Chiesa and Spooner [70], or Boneh et al. [52] attempted to offer implementations of such constructions. Consequently, it is essential to focus further on constructing polynomial commitment schemes that are composable within larger cryptographic protocols like SNARKs [69] or multi-party computation (MPC) [88].

- Efficient Verifier Time in Transparent Settings: Transparent schemes such as STARKs [69] incur higher verifier cost and larger proof sizes than trusted-setup schemes. The key goal is to achieve sublinear-time verification without trusted setup or non-standard assumptions.

5. Functional Commitments

5.1. Classical Schemes

5.2. Post-Quantum Approaches

- In [29], Chris Peikert et al. introduced a stateless updatable FC scheme based on SIS:
  - Supports compact proofs and efficient updates.
  - Allows commitments to arbitrary Boolean circuits.
  - Operates under falsifiable assumptions.

- Hoeteck Wee and David J. Wu in [89] focused on the following:
  - Fast verification for constant-degree polynomials.
  - Use of transparent setup and SIS variants.
  - Avoidance of non-black-box cryptographic tools.

- Based on lattice assumptions, not pairings.

- Require minimal trust in setup.

- Enable provable security under quantum threat models.

5.3. SIS-Based Functional Commitments

Mathematical Framework

- Setup: Generates public parameters $pp$.

- Commit: Produces a commitment $C$ to function $f$.

- Open: Generates a proof $\pi$ that $f(x) = y$.

- Verify: Checks proofs $\pi$ against $(C, x, y)$.
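The four algorithms above can be read as a programming interface. The sketch below shows that syntax with a deliberately naive instantiation for linear functions over a toy modulus: the commitment is a salted hash of the coefficient vector, and the "proof" simply reveals the whole function. It is binding under collision resistance of the hash, but it is neither succinct nor function-hiding after opening, so it is not one of the surveyed constructions. All names (`setup`, `commit`, `open_at`, `verify`) and the modulus 97 are assumptions for illustration.

```python
import hashlib
import json
import secrets

Q = 97  # toy modulus for linear functions f(x) = <f, x> mod Q


def setup() -> dict:
    """Public parameters; nothing secret is needed for this naive scheme."""
    return {"q": Q, "hash": "sha256"}


def commit(pp: dict, f: list[int]) -> tuple[bytes, dict]:
    """Commit to the coefficient vector of f; keep (f, salt) as opening state."""
    salt = secrets.token_hex(16)
    digest = hashlib.sha256(json.dumps([salt, f]).encode()).digest()
    return digest, {"f": f, "salt": salt}


def open_at(pp: dict, state: dict, x: list[int]) -> tuple[int, dict]:
    """Return y = f(x) and a proof (here simply the full opening state)."""
    y = sum(a * b for a, b in zip(state["f"], x)) % pp["q"]
    return y, state


def verify(pp: dict, c: bytes, x: list[int], y: int, proof: dict) -> bool:
    """Recompute the commitment from the proof, then re-evaluate f at x."""
    digest = hashlib.sha256(json.dumps([proof["salt"], proof["f"]]).encode()).digest()
    y_check = sum(a * b for a, b in zip(proof["f"], x)) % pp["q"]
    return digest == c and y == y_check


pp = setup()
c, state = commit(pp, [3, 1, 4])
y, proof = open_at(pp, state, [1, 2, 3])
print(y, verify(pp, c, [1, 2, 3], y, proof))
```

The point of the lattice-based schemes discussed in this section is to keep this interface while making the proof short and independent of the size of f.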

5.4. Lattice-Based Construction

1. Transparent setup: The public parameters are just uniformly random matrices, requiring no trusted setup.

2. Support for all functions: The scheme can handle arbitrary functions of bounded complexity via their Boolean or arithmetic circuit representations.

3. Stateless updates: Commitments can be updated to g∘f without knowing f.

4. Efficient verification: Reduces to checking a single SIS relation.
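The flavor of a transparent setup and of verification via a single SIS-type relation can be seen in an Ajtai-style commitment, where the public parameter is a uniformly random matrix A and a short vector x is committed to as c = A·x mod q. This sketch is not the functional commitment scheme discussed in this section; the dimensions, the modulus, and the function names are toy assumptions, and the parameters are far too small to be secure.

```python
import secrets

N, M, Q = 4, 16, 257  # toy dimensions and modulus; not secure at this size


def setup():
    """Transparent setup: a uniformly random matrix A in Z_q^{n x m}."""
    return [[secrets.randbelow(Q) for _ in range(M)] for _ in range(N)]


def commit(A, x):
    """Ajtai-style commitment c = A x mod q to a short vector x."""
    return [sum(a * b for a, b in zip(row, x)) % Q for row in A]


def verify(A, c, x, bound=1):
    """Accept iff x is short (entries in [-bound, bound]) and A x = c mod q."""
    is_short = all(-bound <= xi <= bound for xi in x)
    return is_short and commit(A, x) == c


A = setup()
x1 = [secrets.randbelow(2) for _ in range(M)]
x2 = [secrets.randbelow(2) for _ in range(M)]
c1, c2 = commit(A, x1), commit(A, x2)
print(verify(A, c1, x1))
# Linearity: c1 + c2 commits to x1 + x2, whose entries grow to at most 2.
c_sum = [(a + b) % Q for a, b in zip(c1, c2)]
x_sum = [a + b for a, b in zip(x1, x2)]
print(verify(A, c_sum, x_sum, bound=2))
```

Two different short openings of the same commitment would differ by a short non-zero vector z with A·z = 0 mod q, which is a solution to the SIS problem; the growth of the norm bound under addition also hints at why parameters must account for homomorphic operations.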

5.4.1. Core Algebraic Structure

5.4.2. Efficiency Analysis

5.5. Gaps and Limitations in Lattice-Based Functional Commitments

5.5.1. Proof Size Scalability

5.5.2. Adaptive Security Limitation

1. Complexity leveraging.

2. New techniques to avoid the security loss.

5.5.3. Concrete Efficiency Bottlenecks

1. Homomorphic Multiplication Cost.

2. Verification Overhead.

3. Key Sizes.

5.6. Updated Research Gaps and Open Questions in Functional Commitments

6. Comparative Analysis and Open Questions

7. Discussion

- Batch verification and multi-function aggregation are underdeveloped in post-quantum schemes.

- Selective revealability and fairness—key for applications like credit scoring or automated decision-making—lack formal models and efficient cryptographic support.

- Security in concurrent or adversarial settings (e.g., asynchronous networks, MPC frameworks) has not yet been robustly addressed in most FC proposals.

- Hybrid models that combine classical efficiency with post-quantum guarantees are scarcely explored, though they may offer transitional solutions during the quantum migration phase.

8. Conclusions

- Hybrid Classical–Quantum Schemes: Investigating commitments that leverage classical hardness assumptions alongside post-quantum primitives for transitional security.

- Domain-Specialized Optimizations: Tailoring schemes for emerging applications (e.g., privacy-preserving machine learning or encrypted image verification [93]) without compromising generality.

- Standardization and Benchmarks: Establishing unified metrics to evaluate proof sizes, verification times, and composability across post-quantum constructions.

- Hardware Acceleration: Exploring GPU/FPGA implementations to mitigate computational overhead in lattice-based functional commitments.

This work serves as both a reference for researchers and a roadmap for future innovations in the field.

Funding

Data Availability Statement

Conflicts of Interest

References

- Catalano, D.; Fiore, D. Vector commitments and their applications. In Public-Key Cryptography–PKC 2013: 16th International Conference on Practice and Theory in Public-Key Cryptography, Nara, Japan, February 26–March 1, 2013; Proceedings 16; Springer: Berlin/Heidelberg, Germany, 2013; pp. 55–72.

- Wang, H.; Sun, S.; Ren, P. Underwater Color Disparities: Cues for Enhancing Underwater Images Toward Natural Color Consistencies. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 738–753.

- Rivest, R.L.; Shamir, A.; Adleman, L. A method for obtaining digital signatures and public-key cryptosystems. Commun. ACM 1978, 21, 120–126.

- Imam, R.; Areeb, Q.M.; Alturki, A.; Anwer, F. Systematic and Critical Review of RSA Based Public Key Cryptographic Schemes: Past and Present Status. IEEE Access 2021, 9, 155949–155976.

- Paixao, C.A.M.; Filho, D.L.G. An efficient variant of the RSA cryptosystem. In Simpósio Brasileiro em Segurança da Informação e de Sistemas Computacionais (SBSeg); Springer: Berlin/Heidelberg, Germany, 2005; pp. 14–27.

- Boneh, D.; Shacham, H. Fast variants of RSA. CryptoBytes 2002, 5, 1–9.

- Karakra, A.; Alsadeh, A. A-RSA: Augmented RSA. In Proceedings of the 2016 SAI Computing Conference (SAI), London, UK, 13–15 July 2016; pp. 1016–1023.

- Rabin, M.O. Digitalized Signatures and Public-Key Functions as Intractable as Factorization; MIT Technical Report (1979); Massachusetts Institute of Technology: Cambridge, MA, USA, 1979.

- Huffman, D.A. A Method for the Construction of Minimum-Redundancy Codes. Proc. IRE 1952, 40, 1098–1101.

- Jintcharadze, E.; Iavich, M. Hybrid implementation of TWOFISH, AES, ELGAMAL and RSA cryptosystems. In Proceedings of the 2020 IEEE East-West Design and Test Symposium (EWDTS), Varna, Bulgaria, 4–7 September 2020; pp. 1–5.

- Moghaddam, F.F.; Alrashdan, M.T.; Karimi, O. A Hybrid Encryption Algorithm Based on RSA Small-e and Efficient-RSA for Cloud Computing Environments. J. Adv. Comput. Netw. 2013, 1, 238–241.

- Bansal, V.P.; Singh, S. A hybrid data encryption technique using RSA and Blowfish for cloud computing on FPGAs. In Proceedings of the 2015 2nd International Conference on Recent Advances in Engineering and Computational Sciences (RAECS), Chandigarh, India, 21–22 December 2015; pp. 1–5.

- Schneier, B. The Blowfish encryption algorithm. Dr Dobb’s J.-Softw. Tools Prof. Program. 1994, 19, 38–43.

- El Makkaoui, K.; Ezzati, A.; Beni-Hssane, A. Cloud-RSA: An enhanced homomorphic encryption scheme. In Europe and MENA Cooperation Advances in Information and Communication Technologies; Springer: Berlin/Heidelberg, Germany, 2017; pp. 471–480.

- El Makkaoui, K.; Beni-Hssane, A.; Ezzati, A.; El-Ansari, A. Fast cloud-RSA scheme for promoting data confidentiality in the cloud computing. Procedia Comput. Sci. 2017, 113, 33–40.

- AlSabti, K.D.M.; Hashim, H.R. A new approach for image encryption in the modified RSA cryptosystem using MATLAB. Glob. J. Pure Appl. Math. 2016, 12, 3631–3640.

- Jagadiswary, D.; Saraswady, D. Estimation of Modified RSA Cryptosystem with Hyper Image Encryption Algorithm. Indian J. Sci. Technol. 2017, 10, 1–5.

- Shin, S.-H.; Yoo, W.S.; Choi, H. Development of modified RSA algorithm using fixed Mersenne prime numbers for medical ultra-sound imaging instrumentation. Comput. Assist. Surg. 2019, 24, 73–78.

- Xu, Q.; Sun, K.; Zhu, C. A visually secure asymmetric image encryption scheme based on RSA algorithm and hyperchaotic map. Phys. Scr. 2020, 95, 035223.

- Jiao, K.; Ye, G.; Dong, Y.; Huang, X.; He, J. Image Encryption Scheme Based on a Generalized Arnold Map and RSA Algorithm. Secur. Commun. Networks 2020, 2020, 9721675.

- Sabir, S.; Guleria, V. A novel multi-layer color image encryption based on RSA cryptosystem, RP2DFrHT and generalized 2D Arnold map. Multimed. Tools Appl. 2023, 82, 38509–38560.

- Soni, G.K.; Arora, H.; Jain, B. A novel image encryption technique using Arnold transform and asymmetric RSA algorithm. In International Conference on Artificial Intelligence: Advances and Applications 2019: Proceedings of ICAIAA 2019; Springer: Singapore, 2020; pp. 83–90.

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G.; PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. Int. J. Surg. 2010, 8, 336–341.

- Si, H.; Cai, Y.; Cheng, Z. An improved RSA signature algorithm based on complex numeric operation function. In Proceedings of the 2010 International Conference on Challenges in Environmental Science and Computer Engineering, Wuhan, China, 6–7 March 2010; Volume 2, pp. 397–400.

- Aufa, F.J.; Endroyono; Affandi, A. Security system analysis in combination method: RSA encryption and digital signature algorithm. In Proceedings of the 2018 4th International Conference on Science and Technology (ICST), Yogyakarta, Indonesia, 7–8 August 2018; pp. 1–5.

- Frunza, M.; Scripcariu, L. Improved RSA encryption algorithm for increased security of wireless networks. In Proceedings of the 2007 International Symposium on Signals, Circuits and Systems, Iasi, Romania, 13–14 July 2007; Volume 2, pp. 1–4.

- Fiat, A. Batch RSA. In Advances in Cryptology–CRYPTO’89 Proceedings 9; Springer: New York, NY, USA, 1990; pp. 175–185.

- Li, Y.; Liu, Q.; Li, T. Design and implementation of two improved batch RSA algorithms. In Proceedings of the 2010 3rd International Conference on Computer Science and Information Technology, Chengdu, China, 9–11 July 2010; Volume 4, pp. 156–160.

- Rothblum, R.D.; Vasudevan, P.N. Collision resistance from multi-collision resistance. J. Cryptol. 2024, 37, 14.

- Peikert, C.; Pepin, Z.; Sharp, C. Vector and Functional Commitments from Lattices. Cryptology ePrint Archive, Article 2021/1254. Available online: https://eprint.iacr.org/2021/1254 (accessed on 18 June 2025).

- Pedersen, T.P. Non-interactive and information-theoretic secure verifiable secret sharing. In Annual International Cryptology Conference; Springer: Berlin/Heidelberg, Germany, 1991; pp. 129–140.

- Elgamal, T. A public key cryptosystem and a signature scheme based on discrete logarithms. IEEE Trans. Inf. Theory 1985, 31, 469–472.

- Bao, F.; Deng, R.H.; Zhu, H. Variations of Diffie-Hellman problem. In Proceedings of the International Conference on Information and Communications Security; Springer: Berlin/Heidelberg, Germany, 2003; pp. 301–312.

- Cash, D.; Kiltz, E.; Shoup, V. The twin Diffie–Hellman problem and applications. J. Cryptol. 2009, 22, 470–504.

- Canetti, R.; Gennaro, R.; Goldfeder, S.; Makriyannis, N.; Peled, U. UC non-interactive, proactive, threshold ECDSA with identifiable aborts. In Proceedings of the 2020 ACM SIGSAC Conference on Computer and Communications Security, Virtual Event, 9–13 November 2020; pp. 1769–1787.

- Metere, R.; Dong, C. Automated cryptographic analysis of the Pedersen commitment scheme. arXiv 2017, arXiv:1705.05897.

- Barthe, G.; Dupressoir, F.; Grégoire, B.; Kunz, C.; Schmidt, B.; Strub, P.-Y. EasyCrypt: A tutorial. In Proceedings of the International School on Foundations of Security Analysis and Design, Bertinoro, Italy, 3–8 September 2012; pp. 146–166.

- Chen, B.; Li, X.; Xiang, T.; Wang, P. SBRAC: Blockchain-based sealed-bid auction with bidding price privacy and public verifiability. J. Inf. Secur. Appl. 2022, 65, 103082.

- Wang, H.; Liao, J. Blockchain privacy protection algorithm based on Pedersen commitment and zero-knowledge proof. In Proceedings of the 2021 4th International Conference on Blockchain Technology and Applications, ICBTA ’21, Xi’an, China, 17–19 December 2022; pp. 1–5.

- Liu, F.; Yang, J.; Kong, D.; Qi, J. A secure multi-party computation protocol combines Pederson commitment with Schnorr signature for blockchain. In Proceedings of the 2020 IEEE 20th International Conference on Communication Technology (ICCT), Nanning, China, 28–31 October 2020; pp. 57–63.

- Yang, Y.; Rong, C.; Zheng, X.; Cheng, H.; Chang, V.; Luo, X.; Li, Z. Time controlled expressive predicate query with accountable anonymity. IEEE Trans. Serv. Comput. 2022, 16, 1444–1457.

- Shor, P.W. Algorithms for Quantum Computation: Discrete Logarithms and Factoring. In Proceedings of the 35th Annual Symposium on Foundation of Computer Science, Washington, DC, USA, 20–22 November 1994; pp. 124–134.

- Peikert, C. A decade of lattice cryptography. Found. Trends® Theor. Comput. Sci. 2016, 10, 283–424.

- Li, Y.; Ng, K.S.; Purcell, M. A tutorial introduction to lattice-based cryptography and homomorphic encryption. arXiv 2022, arXiv:2208.08125.

- Ajtai, M. Generating hard instances of lattice problems. In Proceedings of the Twenty-Eighth Annual ACM Symposium on Theory of Computing, Philadelphia, PA, USA, 22–24 May 1996; pp. 99–108.

- Berman, I.; Degwekar, A.; Rothblum, R.D.; Vasudevan, P.N. Multi-collision resistant hash functions and their applications. In Advances in Cryptology–EUROCRYPT 2018: 37th Annual International Conference on the Theory and Applications of Cryptographic Techniques, Tel Aviv, Israel, April 29–May 3, 2018; Proceedings, Part II 37; Springer: Cham, Switzerland, 2018; pp. 133–161.

- Komargodski, I.; Naor, M.; Yogev, E. White-box vs. black-box complexity of search problems: Ramsey and graph property testing. J. ACM (JACM) 2019, 66, 34.

- Bitansky, N.; Kalai, Y.T.; Paneth, O. Multi-collision resistance: A paradigm for keyless hash functions. In Proceedings of the 50th Annual ACM SIGACT Symposium on Theory of Computing, Los Angeles, CA, USA, 25–29 June 2018; pp. 671–684.

- Komargodski, I.; Naor, M.; Yogev, E. Collision resistant hashing for paranoids: Dealing with multiple collisions. In Advances in Cryptology–EUROCRYPT 2018: 37th Annual International Conference on the Theory and Applications of Cryptographic Techniques, Tel Aviv, Israel, April 29–May 3, 2018; Proceedings, Part II 37; Springer: Berlin/Heidelberg, Germany, 2018; pp. 162–194.

- Komargodski, I.; Yogev, E. On distributional collision resistant hashing. In Proceedings of the Annual International Cryptology Conference, Santa Barbara, CA, USA, 19–23 August 2018; pp. 303–327.

- Papamanthou, C.; Shi, E.; Tamassia, R.; Yi, K. Streaming authenticated data structures. In Advances in Cryptology–EUROCRYPT 2013; Volume 7881 of Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2013; pp. 353–370.

- Boneh, D.; Bünz, B.; Fisch, B. Batching techniques for accumulators with applications to IOPs and stateless blockchains. In Advances in Cryptology–CRYPTO 2019: 39th Annual International Cryptology Conference, Santa Barbara, CA, USA, 18–22 August 2019; Proceedings, Part I 39; Springer: Cham, Switzerland, 2019; pp. 561–586.

- Tas, E.N.; Boneh, D. Vector commitments with efficient updates. arXiv 2023, arXiv:2307.04085.

- Wang, Q.; Zhou, F.; Xu, J.; Xu, Z. A (zero-knowledge) vector commitment with sum binding and its applications. Comput. J. 2019, 63, 633–647.

- Miao, M.; Wang, J.; Ma, J.; Susilo, W. Publicly verifiable databases with efficient insertion/deletion operations. J. Comput. Syst. Sci. 2017, 86, 49–58.

- Kate, A.; Zaverucha, G.M.; Goldberg, I. Constant-Size Commitments to Polynomials and Their Applications. In Proceedings of the ASIACRYPT 2010: 16th International Conference on the Theory and Application of Cryptology and Information Security, Singapore, 5–9 December 2010; Springer: Berlin/Heidelberg, Germany, 2010; pp. 177–194.

- Belling, A.; Soleimanian, A.; Ursu, B. Vortex: A List Polynomial Commitment and Its Application to Arguments of Knowledge. Cryptology ePrint Archive, 2024, Article 2024/185. Available online: https://eprint.iacr.org/2025/185 (accessed on 18 June 2025).

- Golovnev, A.; Lee, J.; Setty, S.; Thaler, J.; Wahby, R.S. Brakedown: Linear-time and field-agnostic SNARKs for R1CS. In 43rd Annual International Cryptology Conference, CRYPTO 2023, Santa Barbara, CA, USA, 20–24 August 2023, Proceedings, Part II; Springer: Cham, Switzerland, 2023; pp. 193–226.

- Kattis, A.A.; Panarin, K.; Vlasov, A. Redshift: Transparent SNARKs from list polynomial commitments. In Proceedings of the 2022 ACM SIGSAC Conference on Computer and Communications Security, Los Angeles, CA, USA, 7–11 November 2022; pp. 1725–1737.

- Belohorec, J.; Dvořák, P.; Hoffmann, C.; Hubáček, P.; Mašková, K.; Pastyřík, M. On Extractability of the KZG Family of Polynomial Commitment Schemes. Cryptology ePrint Archive, 2025, Article 2025/514. Available online: https://eprint.iacr.org/2025/514 (accessed on 8 May 2025).

- Seres, I.A.; Burcsi, P. Behemoth: Transparent Polynomial Commitment Scheme with Constant Opening Proof Size and Verifier Time. Cryptology ePrint Archive, 2023, Article 2023/670. Available online: https://eprint.iacr.org/2023/670 (accessed on 7 May 2025).

- Nikolaenko, V.; Ragsdale, S.; Bonneau, J.; Boneh, D. Powers-of-tau to the people: Decentralizing setup ceremonies. In Proceedings of the International Conference on Applied Cryptography and Network Security, Abu Dhabi, United Arab Emirates, 5–8 March 2024; Springer: Cham, Switzerland, 2024; pp. 105–134. [Google Scholar]

- Avery, C.; Sheek, J. Storage Auditing Using Merkle Trees and KZG Commitments. 2025. Available online: https://www.orchid.com/storage-auditing-latest.pdf (accessed on 27 May 2025).

- Lee, J. Dory: Efficient, transparent arguments for generalised inner products and polynomial commitments. In Theory of Cryptography; Springer: Cham, Switzerland, 2021; pp. 1–34. [Google Scholar] [CrossRef]

- Kuszmaul, J. Verkle Trees. Verkle Trees 2018, Kuszmaul, J. Verkle Trees. 2019. Available online: https://math.mit.edu/research/highschool/primes/materials/2018/Kuszmaul.pdf (accessed on 17 May 2025).

- Blum, M.; Feldman, P.; Micali, S. Non-interactive zero- knowledge and its applications. In Providing Sound Foundations for Cryptography: On the Work of Shafi Goldwasser and Silvio Micali; Association for Computing Machinery: New York, NY, USA, 2019; pp. 329–349. [Google Scholar] [CrossRef]

- Bitansky, N.; Canetti, R.; Chiesa, A.; Tromer, E. From extractable collision resistance to succinct non-interactive arguments of knowledge, and back again. In Proceedings of the 3rd Innovations in Theoretical Computer Science Conference, Cambridge, MA, USA, 8–10 January 2012; pp. 326–349.

- Sasson, E.B.; Chiesa, A.; Garman, C.; Green, M.; Miers, I.; Tromer, E.; Virza, M. Zerocash: Decentralized anonymous payments from Bitcoin. In Proceedings of the 2014 IEEE Symposium on Security and Privacy, Berkeley, CA, USA, 18–21 May 2014; pp. 459–474.

- Hopwood, D.; Bowe, S.; Hornby, T.; Wilcox, N. Zcash Protocol Specification, Version 2024.1.1. 2024. Available online: https://zips.z.cash/protocol/protocol.pdf (accessed on 1 April 2025).

- Ben-Sasson, E.; Chiesa, A.; Riabzev, M.; Spooner, N.; Virza, M.; Ward, N.P. Aurora: Transparent succinct arguments for R1CS. In Advances in Cryptology–EUROCRYPT 2019: 38th Annual International Conference on the Theory and Applications of Cryptographic Techniques, Darmstadt, Germany, 19–23 May 2019; Proceedings, Part I; Springer: Cham, Switzerland, 2019; pp. 103–128.

- Masip-Ardevol, H.; Baylina-Melé, J.; Guzmán-Albiol, M. eSTARK: Extending STARKs with arguments. Des. Codes Cryptogr. 2024, 92, 3677–3721.

- Ashur, T.; Dhooghe, S. Marvellous: A STARK-Friendly Family of Cryptographic Primitives. Cryptology ePrint Archive, 2018, Article 2018/1098. Available online: https://eprint.iacr.org/2018/1098 (accessed on 19 May 2025).

- Ben-Sasson, E.; Bentov, I.; Horesh, Y.; Riabzev, M. Fast Reed-Solomon interactive oracle proofs of proximity. In Proceedings of the 45th International Colloquium on Automata, Languages, and Programming (ICALP 2018), Prague, Czech Republic, 9–13 July 2018; pp. 14–19.

- Ames, S.; Hazay, C.; Ishai, Y.; Venkitasubramaniam, M. Ligero: Lightweight sublinear arguments without a trusted setup. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security, Dallas, TX, USA, 30 October–3 November 2017; pp. 2087–2104.

- Xie, T.; Zhang, Y.; Song, D. Orion: Zero knowledge proof with linear prover time. In Proceedings of the Annual International Cryptology Conference, Santa Barbara, CA, USA, 15–18 August 2022; pp. 299–328.

- Bootle, J.; Cerulli, A.; Ghadafi, E.; Groth, J.; Hajiabadi, M.; Jakobsen, S.K. Linear-time zero-knowledge proofs for arithmetic circuit satisfiability. In Proceedings of the International Conference on the Theory and Application of Cryptology and Information Security, Hong Kong, China, 3–7 December 2017; pp. 336–365.

- Bootle, J.; Chiesa, A.; Groth, J. Linear-time arguments with sublinear verification from tensor codes. In Proceedings of the Theory of Cryptography–18th International Conference, TCC 2020, Durham, NC, USA, 16–19 November 2020; pp. 19–46.

- Bootle, J.; Chiesa, A.; Liu, S. Zero-knowledge IOPs with linear-time prover and polylogarithmic-time verifier. In Annual International Conference on the Theory and Applications of Cryptographic Techniques; Springer: Cham, Switzerland, 2022; pp. 275–304.

- den Hollander, T.; Slamanig, D. A Crack in the Firmament: Restoring Soundness of the Orion Proof System and More. Cryptology ePrint Archive, 2024, Article 2024/1164. Available online: https://eprint.iacr.org/2024/1164 (accessed on 12 May 2025).

- Diamond, B.E.; Posen, J. Proximity Testing with Logarithmic Randomness. Cryptology ePrint Archive, 2023, Article 2023/630. Available online: https://eprint.iacr.org/2023/630 (accessed on 11 June 2025).

- Setty, S. Spartan: Efficient and general-purpose zkSNARKs without trusted setup. In Proceedings of the 40th Annual International Cryptology Conference, CRYPTO 2020, Santa Barbara, CA, USA, 17–21 August 2020; Proceedings, Part III; Springer: Cham, Switzerland, 2020; pp. 704–737.

- Fu, S.; Gong, G. Polaris: Transparent succinct zero-knowledge arguments for R1CS with efficient verifier. In Proceedings of the Privacy Enhancing Technologies, Virtual, 11–14 July 2022.

- Kilian, J. A note on efficient zero-knowledge proofs and arguments. In Proceedings of the Twenty-Fourth Annual ACM Symposium on Theory of Computing, Victoria, BC, Canada, 4–6 May 1992; pp. 723–732.

- Albrecht, M.R.; Cini, V.; Lai, R.W.F.; Malavolta, G.; Thyagarajan, S.A. Lattice-Based SNARKs: Publicly Verifiable, Preprocessing, and Recursively Composable. Cryptology ePrint Archive, 2022, Article 2022/941. Available online: https://eprint.iacr.org/2022/941 (accessed on 11 June 2025).

- de Castro, L.; Peikert, C. Functional commitments for all functions, with transparent setup and from SIS. In Annual International Conference on the Theory and Applications of Cryptographic Techniques; Springer: Cham, Switzerland, 2023; pp. 287–320.

- Canetti, R.; Fischlin, M. Universally composable commitments. In Advances in Cryptology–CRYPTO 2001: 21st Annual International Cryptology Conference, Santa Barbara, CA, USA, 19–23 August 2001; Proceedings; Springer: Berlin/Heidelberg, Germany, 2001; pp. 19–40.

- Srinivasan, S.; Karantaidou, I.; Baldimtsi, F.; Papamanthou, C. Batching, aggregation, and zero-knowledge proofs in bilinear accumulators. In Proceedings of the 2022 ACM SIGSAC Conference on Computer and Communications Security, Los Angeles, CA, USA, 7–11 November 2022; pp. 2719–2733.

- Du, W.; Atallah, M.J. Secure multi-party computation problems and their applications: A review and open problems. In Proceedings of the 2001 Workshop on New Security Paradigms, Cloudcroft, NM, USA, 10–13 September 2001; pp. 13–22.

- Wee, H.; Wu, D.J. Succinct functional commitments for circuits from k-Lin. In Proceedings of the 43rd Annual International Conference on the Theory and Applications of Cryptographic Techniques, Zurich, Switzerland, 26–30 May 2024; pp. 280–310.

- Wee, H.; Wu, D.J. Succinct vector, polynomial, and functional commitments from lattices. In Proceedings of the 42nd Annual International Conference on the Theory and Applications of Cryptographic Techniques, Lyon, France, 23–27 April 2023; pp. 385–416.

- Peikert, C.; Pepin, Z.; Sharp, C. Vector and functional commitments from lattices. In Theory of Cryptography: 19th International Conference, TCC 2021, Raleigh, NC, USA, 8–11 November 2021; Proceedings, Part III; Springer: Cham, Switzerland, 2021; pp. 480–511.

- Boneh, D.; Nguyen, W.; Ozdemir, A. Efficient Functional Commitments: How to Commit to a Private Function. Cryptology ePrint Archive, 2021, Article 2021/1342. Available online: https://eprint.iacr.org/2021/1342 (accessed on 1 April 2025).

- Wee, H.; Wu, D.J. Lattice-based functional commitments: Fast verification and cryptanalysis. In Proceedings of the 29th International Conference on the Theory and Application of Cryptology and Information Security, Guangzhou, China, 4–8 December 2023; pp. 201–235.

- Lin, H.; Pass, R.; Venkitasubramaniam, M. Concurrent non-malleable commitments from any one-way function. In Theory of Cryptography: Fifth Theory of Cryptography Conference, TCC 2008, New York, NY, USA, 19–21 March 2008; Proceedings; Springer: Berlin/Heidelberg, Germany, 2008; pp. 571–588.

- Namboothiry, B. Revealable Functional Commitments: How to Partially Reveal a Secret Function. Cryptology ePrint Archive, 2023, Article 2023/1250. Available online: https://eprint.iacr.org/2023/1250 (accessed on 2 June 2025).

- Liu, B.; Song, W.; Zheng, M.; Fu, C.; Chen, J.; Wang, X. Semantically enhanced selective image encryption scheme with parallel computing. Expert Syst. Appl. 2025, 279, 127404.

- Song, W.; Fu, C.; Zheng, Y.; Tie, M.; Liu, J.; Chen, J. A parallel image encryption algorithm using intra bitplane scrambling. Math. Comput. Simul. 2023, 204, 71–88.

- Song, W.; Fu, C.; Zheng, Y.; Zhang, Y.; Chen, J.; Wang, P. Batch image encryption using cross image permutation and diffusion. J. Inf. Secur. Appl. 2024, 80, 103686.

| Database | Public URL |
|---|---|
| arXiv-DL | https://arxiv.org (accessed on 12 June 2025) |
| Science Direct-Elsevier-DL | https://www.sciencedirect.com (accessed on 16 June 2025) |
| Scopus-SE | https://www.scopus.com (accessed on 19 May 2025) |
| IEEE Xplore-DL | https://ieeexplore.ieee.org (accessed on 13 June 2025) |
| ACM Digital Library-DL | https://dl.acm.org (accessed on 12 June 2025) |
| Web of Science-SE | https://www.webofscience.com (accessed on 1 June 2025) |
| Google Scholar-SE | https://scholar.google.com (accessed on 12 June 2025) |
| MDPI-DL | https://www.mdpi.com (accessed on 30 May 2025) |
| Springer-DL | https://www.springer.com (accessed on 12 May 2025) |
| Research Gate-Scientific Social Networking | https://www.researchgate.net (accessed on 12 June 2025) |
| Edinburgh Library database-DL | https://library.ed.ac.uk (accessed on 20 May 2025) |
| RefSeek-SE | https://www.refseek.com (accessed on 7 June 2025) |
| Bielefeld Academic Search Engine-SE | https://www.base-search.net (accessed on 11 May 2025) |

| Inclusion Criteria | Exclusion Criteria |
|---|---|
| | |

| VC Scheme Type | Underlying Cryptographic Primitive |
|---|---|
| Merkle tree-based | Collision-resistant hash functions |
| RSA-based | Strong RSA assumption, accumulators |
| KZG (pairing-based) | Bilinear pairings, structured reference strings |
| Lattice-based | Learning With Errors (LWE) or Ring-LWE |
| Hash-based (Post-Quantum) | Hash functions only (e.g., SHA-3) |

| Scheme | Primitive | Collision Size (t) | Proof Size | Limitations |
|---|---|---|---|---|
| [45,46,47,48,49] (MCRH) | t-MCRH | t = {3,4} | N/A | Only t ≤ 4 feasible |
| [50] (CHR Conversion) | t-MCRH → CHR | t = {3,4} | Non-constructive | No explicit construction for t > 4 |
| [29] (Peikert et al.) | SIS-based VC | N/A | 0.3 KB | Requires trusted setup |
| [30] (Papamanthou et al.) | Merkle-based VC | N/A | 1.2 KB | Linear proof growth |

| Component | Size (Bits) | Asymptotic |
|---|---|---|
| Public Parameters | | |
| Commitment | | |
| Proof | | |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Nutu, M.; Akhalaia, G.; Bocu, R.; Iavich, M. An Extended Survey Concerning the Vector Commitments. Appl. Sci. 2025, 15, 9510. https://doi.org/10.3390/app15179510
