An Open-Source Virtual Reality Traffic Co-Simulation for Enhanced Traffic Safety Assessment

Abstract

1. Introduction

1.1. VR Traffic Simulation to Analyze Road User Behavior

- Study Goal Definition: Define clear objectives, such as improving in-vehicle systems or infrastructure designs.

- Scenario Development: Create multiple scenarios (for example, different in-vehicle systems).

- Measures of Effectiveness (MOEs) Development: Compare scenario outcomes using measures such as reaction time, collision rates, eye movements, simulation sickness, and questionnaires.

- VR Traffic Simulation Design: Build a VR environment where participants interact with various traffic objects.

- Inviting Participants for Data Collection: Invite participants to provide study data such as vehicle trajectory (x, y, z coordinates).

- Best Scenario Identification: Select the best scenario based on the MOEs.

1.2. Problem Statement

1.3. Study Goal and Objectives

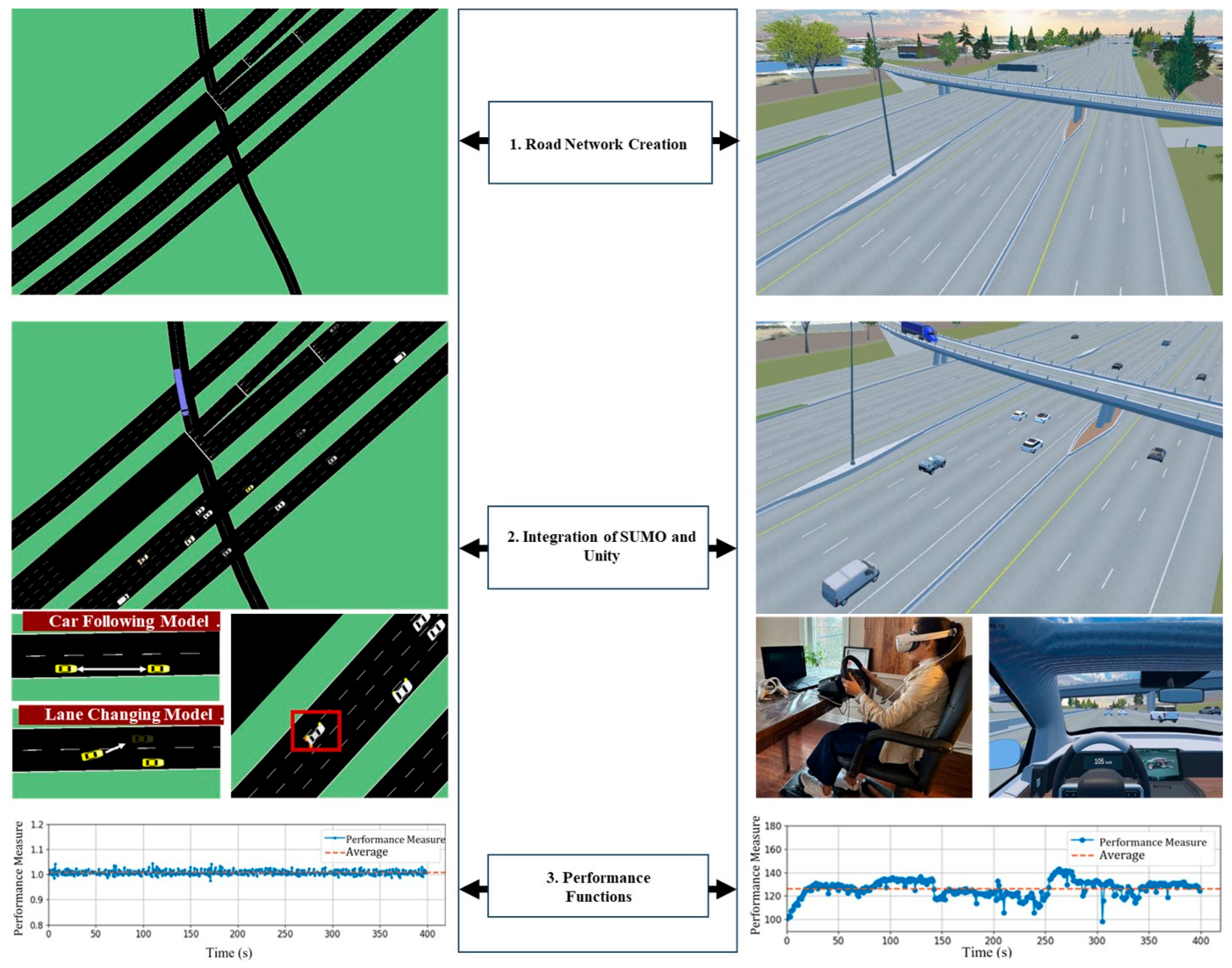

- A road network creation process that can be used in both SUMO and Unity to develop a road network that includes several lanes and interchanges.

- SUMO and Unity integration that enables realistic vehicle-to-vehicle interactions and supports different traffic densities (different levels of service).

- Development of performance functions to evaluate the performance of the integration.

1.4. The VR Traffic Simulation Development

2. Literature Review

2.1. SUMO

2.2. Unity

2.3. Integration Challenges of SUMO and Unity

3. Methodology

3.1. Road Network Creation Process

- (a) GIS Maps: We georeferenced each map to real-world coordinates, which aligns the map to its correct position and scale in the real world. We exported the aerial imagery as a PNG file and the elevation map as a TIF file. This step ensures accurate terrain representation (see Figure 2a).

- (b) Road Network in RoadRunner: We imported the maps into MathWorks RoadRunner as reference layers. RoadRunner, a road-network-generation tool developed by MathWorks (the maker of MATLAB), provides tools for creating a detailed road network that captures highway geometry, on/off-ramps, and elevation changes (see Figure 2b). First, we drew lanes (3.75 m) and shoulders (3.0 m) that matched the road’s horizontal geometry. Then, RoadRunner’s Project Roads tool automatically fitted the roadway surface to the terrain with an accuracy of about 2 m. After creating the road surface, we manually added road markings based on the aerial imagery reference.

- (c) Road Network in Unity and SUMO: RoadRunner keeps the 2-D road network in SUMO consistent with the 3-D road network in Unity by exporting through the OpenDRIVE format. OpenDRIVE, introduced by VIRES Simulationstechnologie GmbH in the mid-2000s, is a standard that describes road geometry and lane counts in a machine-readable form for different software (Dupuis et al. [20]). In practice, RoadRunner converts the 3-D road network to a 2-D OpenDRIVE file that SUMO can read (see Figure 2c).

- (d) Buildings, road signs, and trees (see Figure 2d): We created buildings with SketchUp software (https://sketchup.trimble.com). We used real-world images to create 38 buildings near the highway. The study area contains several residential areas with many buildings. We created 12 generic residential buildings and placed them randomly in the residential areas. For road signs, we first located each real-world sign using Google Street View or dashboard-camera images. Then, we added road signs in MathWorks RoadRunner, which includes a wide range of existing Canadian road signs. If an Ontario-specific sign was absent from RoadRunner (e.g., signs with local place names), we created it manually using the sign tool in RoadRunner.

- (1) Junctions: A junction is where two or more roads meet, usually as an intersection or a merge. Importing OpenDRIVE junctions into SUMO may initially produce simplified junctions. Figure 3a shows an example of a simplified junction. The problem can be corrected by manually editing the junction to improve alignment and smooth the transitions, so that vehicles can navigate junctions and merging lanes as intended (see Figure 3e).

- (2) Geometry: Geometric alignment refers to the precise layout, orientation, and continuity of road segments. In OpenDRIVE, roads can include complex curves with varying widths. When imported into SUMO, these roads may initially appear misaligned (see Figure 3b). Refining the import process, adjusting lane definitions, or revising geometry parameters yields a smoother, more coherent roadway (see Figure 3f).

- (3)

- (4)

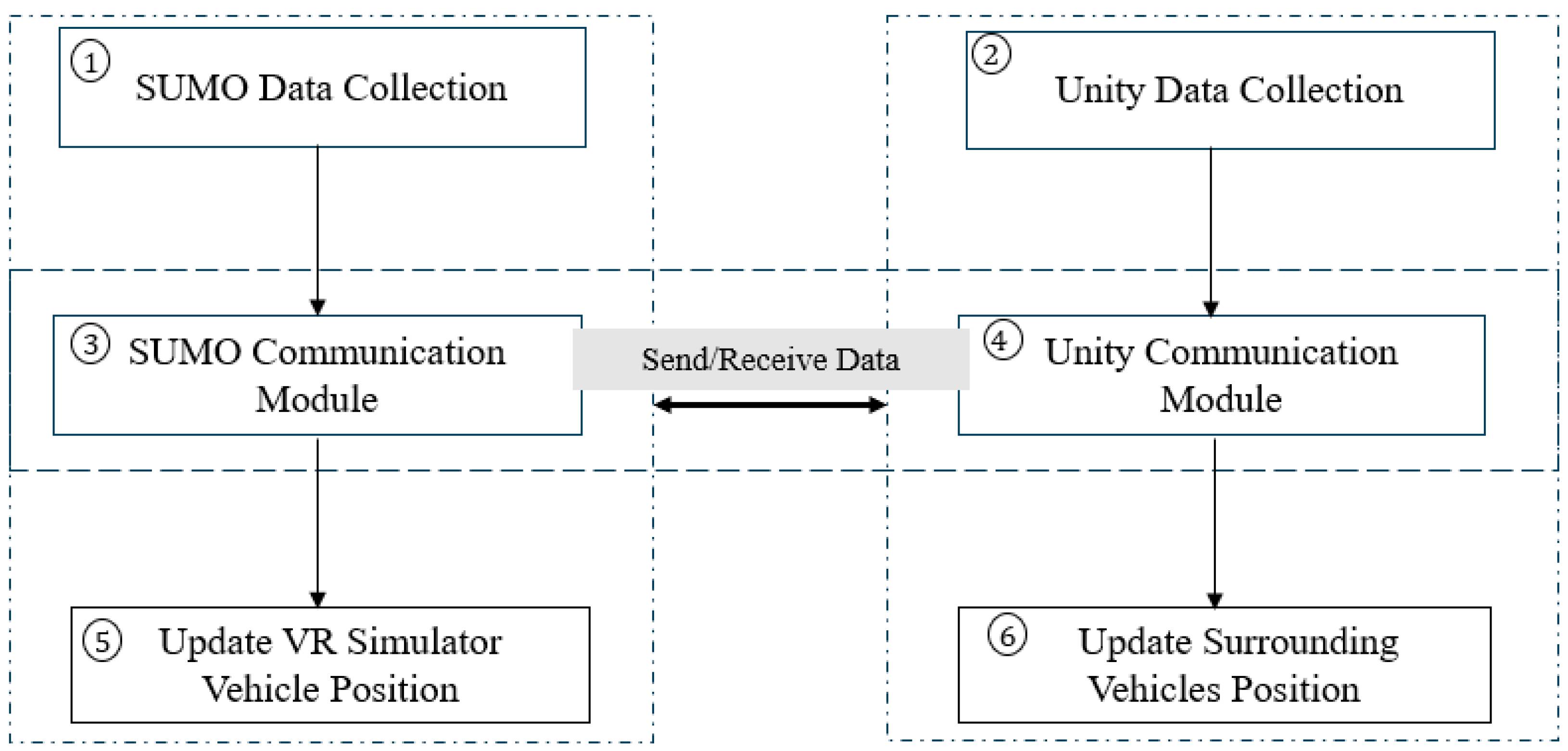
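The OpenDRIVE import described in step (c) can be illustrated with SUMO's `netconvert` tool. The following is a minimal sketch, not the authors' exact procedure: it only builds the command line, the file names are hypothetical, and any additional import options (for example, those used to refine junctions or geometry) are left out because this section does not state them.

```python
from pathlib import Path
from typing import List


def build_netconvert_command(opendrive_file: str, sumo_net_file: str) -> List[str]:
    """Build a netconvert call that imports an OpenDRIVE file into a SUMO network."""
    # OpenDRIVE files exported by RoadRunner normally use the .xodr extension.
    if Path(opendrive_file).suffix.lower() != ".xodr":
        raise ValueError("expected an OpenDRIVE (.xodr) file")
    return [
        "netconvert",
        "--opendrive-files", opendrive_file,  # input: OpenDRIVE road network
        "--output-file", sumo_net_file,       # output: SUMO network (.net.xml)
    ]


# Hypothetical file names, for illustration only.
command = build_netconvert_command("highway.xodr", "highway.net.xml")
```

On a machine with SUMO installed, the resulting list can be passed to `subprocess.run(command, check=True)`. The imported network would then still need the manual junction and geometry checks described above.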

3.2. SUMO and Unity Integration

3.2.1. Cycle Time

- (a) Step 1 (SUMO Data Collection): To collect data from SUMO, the SUMO communication module retrieves data from the SUMO traffic simulation. These data include trajectory data of vehicles (e.g., positions and orientation). Gathering the data requires calling the appropriate methods of the SUMO application programming interface (known as the TraCI interface). The time taken in this step depends on factors such as the complexity of the network and the number of vehicles. During step 1, the script must wait for SUMO to provide all the information needed for an accurate reflection of the current state of the simulated traffic.

- (b) Step 2 (Unity Data Collection): After collecting the data from SUMO, the Unity communication module retrieves the trajectory data of the VR simulator vehicle from Unity. Unity provides the vehicle’s current position and orientation. This step ensures that the module is aware of the VR simulator vehicle’s latest state, so that this state can be integrated back into SUMO. The time required here depends on the data collection from Unity.

- (c) Step 3 (SUMO Communication Module): In step 3, the integration uses the SUMO communication module to handle two primary data-exchange tasks. First, the SUMO communication module receives traffic state data, such as vehicle positions, speeds, and traffic light states, from SUMO. These data are then prepared and sent to the Unity communication module so that Unity can accurately represent the current simulation scenario in its VR environment. Second, the SUMO communication module receives the updated VR simulator vehicle trajectory data from Unity. These data are passed forward to Step 5, where SUMO incorporates the VR simulator vehicle’s position and behavior into its traffic environment. The time taken for this step depends on the size of the data exchange.

- (d) Step 4 (Unity Communication Module): In step 4, the integration code interacts with Unity through a dedicated communication link that handles two main data-exchange tasks. The first exchange involves sending the processed SUMO traffic state data (vehicle positions, speeds, etc.) to Unity, which enables Unity to update its virtual environment and ensures that what the user sees in the VR headset mirrors the current simulation state. In the second exchange, the script receives the VR simulator vehicle’s latest trajectory from Unity. The updated trajectory data reflect the human participant’s real-time inputs (e.g., steering and acceleration) and will be used in subsequent steps to inform SUMO’s traffic model and maintain continuous integration and realism between the user’s actions and the evolving traffic scenario. The time taken here depends on the size of the data exchange.

- (e) Step 5 (Update VR Simulator Vehicle Position in SUMO): After the VR simulator vehicle’s new position has been sent to SUMO and processed, SUMO integrates the VR simulator vehicle’s state into the network. This step involves applying SUMO’s car-following and lane-changing models to adjust traffic conditions accordingly. The time this step takes depends largely on the complexity of the simulation and the number of vehicles. It is a computational step executed by SUMO to ensure the simulation reflects the VR simulator vehicle’s influence on other vehicles and traffic elements.

- (f) Step 6 (Update Surrounding Vehicles’ Positions in Unity): Finally, Unity processes the incoming data about surrounding vehicles and updates their positions, movements, and states in the VR environment. This step involves rendering the updated scenario so that the participant sees a realistic and synchronized traffic environment. The time for this step depends on Unity’s rendering speed and scene complexity.
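Steps 1 and 5 can be sketched with calls from SUMO's Python TraCI client. This is an illustrative sketch under stated assumptions, not the integration code itself. The `vehicle_api` argument is expected to be `traci.vehicle` (or any object with the same methods), the ego vehicle ID is hypothetical, and the `keepRoute=2` setting, which lets SUMO place the vehicle at the exact reported position, is one possible choice rather than a setting confirmed by this section.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VehicleState:
    veh_id: str
    x: float          # SUMO network x coordinate (m)
    y: float          # SUMO network y coordinate (m)
    angle_deg: float  # heading reported by SUMO (degrees)
    speed: float      # speed (m/s)


def collect_sumo_states(vehicle_api, ego_id: str) -> List[VehicleState]:
    """Step 1: read the state of every SUMO vehicle except the VR simulator vehicle."""
    states = []
    for veh_id in vehicle_api.getIDList():
        if veh_id == ego_id:
            continue  # the ego vehicle is driven from Unity, not from SUMO
        x, y = vehicle_api.getPosition(veh_id)
        states.append(
            VehicleState(veh_id, x, y,
                         vehicle_api.getAngle(veh_id),
                         vehicle_api.getSpeed(veh_id))
        )
    return states


def push_ego_to_sumo(vehicle_api, ego_id: str, x: float, y: float, angle_deg: float) -> None:
    """Step 5: place the VR simulator vehicle at the pose reported by Unity."""
    # An empty edge ID and lane index -1 give SUMO no placement hint, so the
    # pose is mapped onto the network from the coordinates alone (assumption).
    vehicle_api.moveToXY(ego_id, "", -1, x, y, angle=angle_deg, keepRoute=2)
```

With a running SUMO instance, the same functions would be called as `collect_sumo_states(traci.vehicle, "ego")` after each `traci.simulationStep()`.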

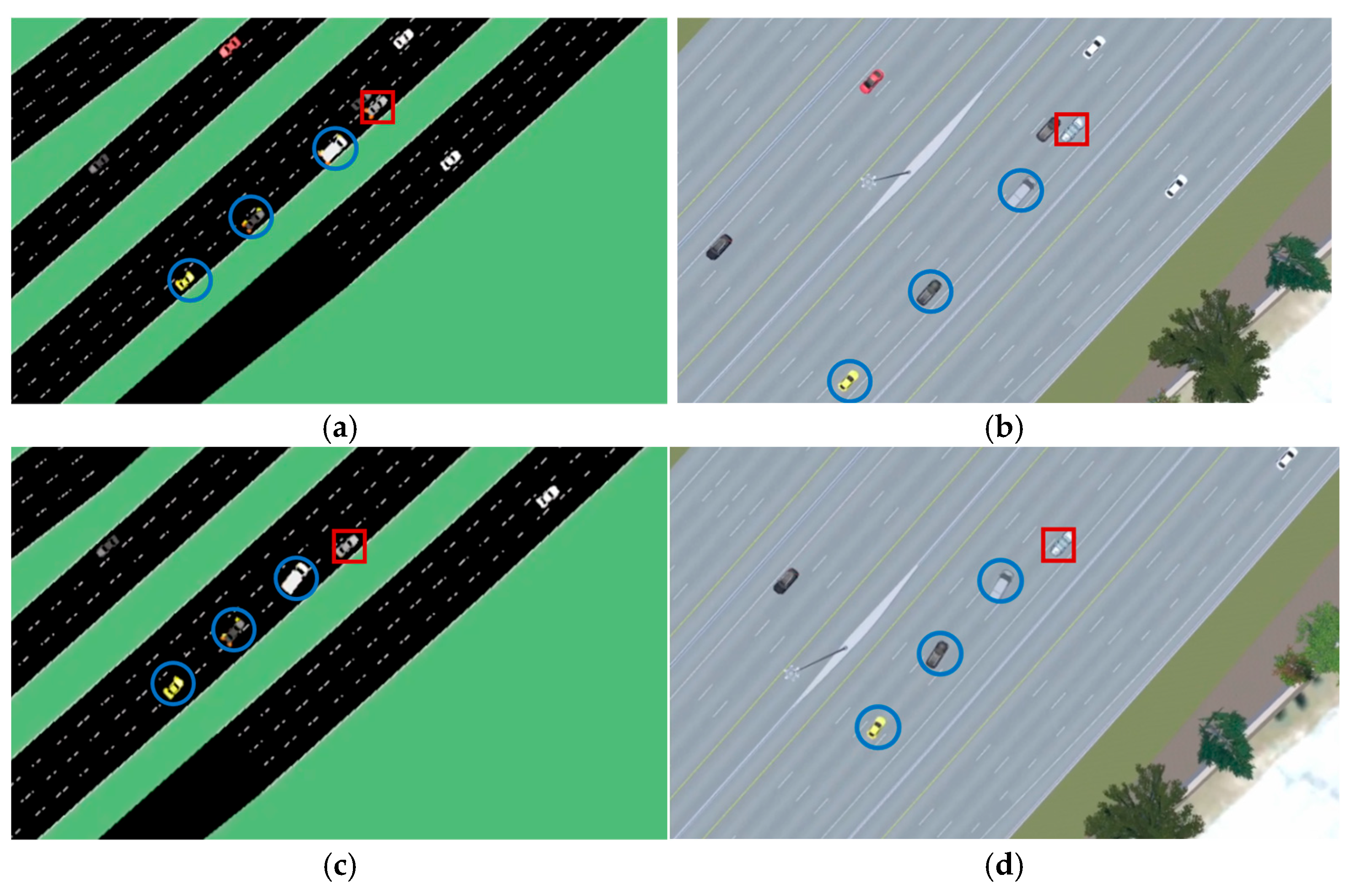
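Because the cycle time is the sum of the time spent in the six steps, it can be measured by timing each step separately. The sketch below assumes that each step is available as a Python callable; the step names and the no-op placeholders are hypothetical and stand in for the real SUMO and Unity calls.

```python
import time
from typing import Callable, Dict, Sequence, Tuple


def run_timed_cycle(steps: Sequence[Tuple[str, Callable[[], None]]]) -> Dict[str, float]:
    """Run one integration cycle and return the duration of each step in seconds."""
    durations: Dict[str, float] = {}
    for name, step in steps:
        start = time.perf_counter()
        step()
        durations[name] = time.perf_counter() - start
    # The cycle time is the sum of the individual step durations.
    durations["cycle_time"] = sum(durations.values())
    return durations


# Placeholder steps; in a real setup each callable would wrap the
# corresponding SUMO or Unity operation.
example_steps = [
    ("sumo_data_collection", lambda: None),
    ("unity_data_collection", lambda: None),
    ("sumo_communication", lambda: None),
    ("unity_communication", lambda: None),
    ("update_ego_in_sumo", lambda: None),
    ("update_vehicles_in_unity", lambda: None),
]
timings = run_timed_cycle(example_steps)
```

Logging these per-step durations over many cycles shows which step dominates as the network or the number of vehicles grows.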

3.2.2. Vehicle-to-Vehicle Interactions

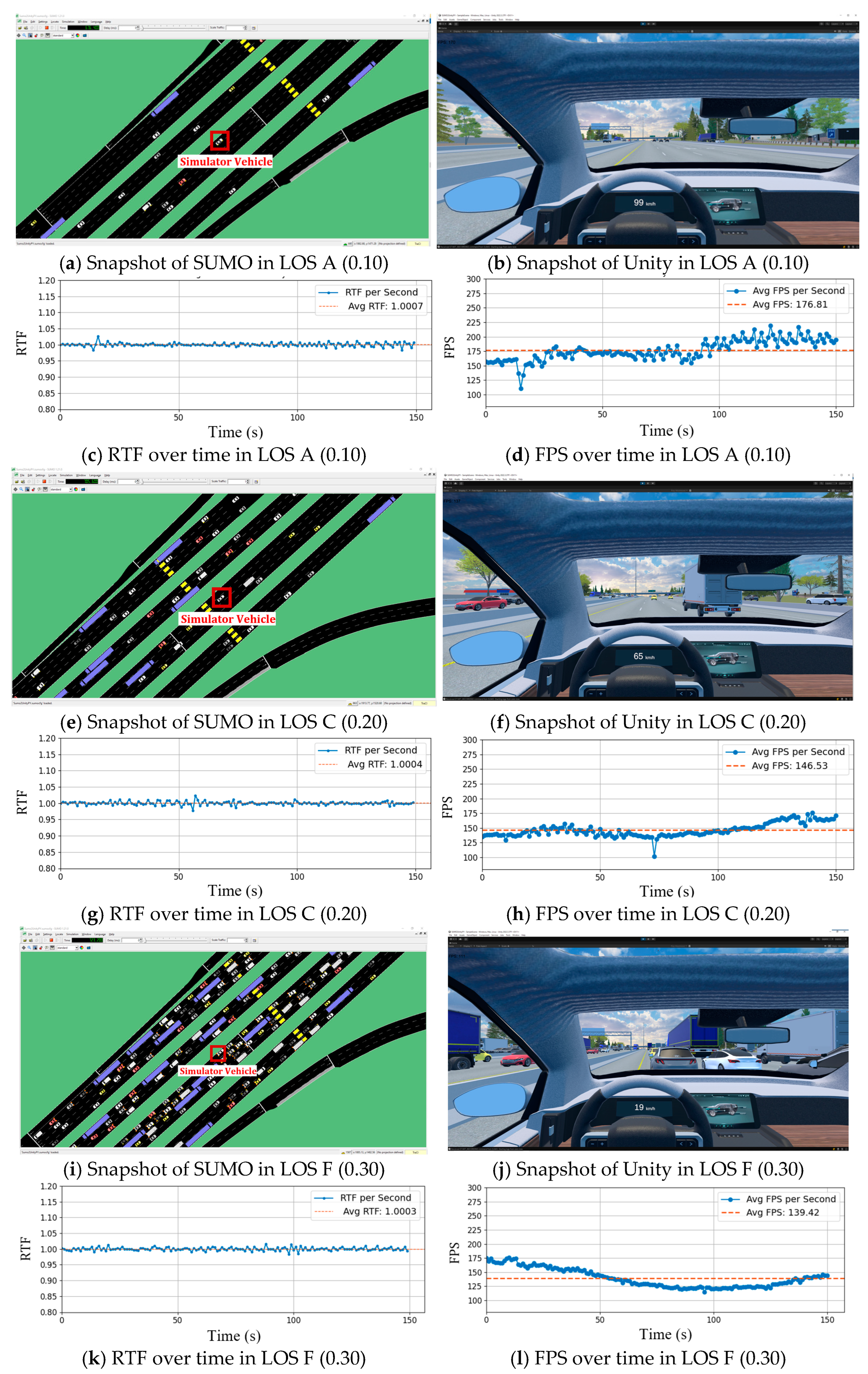

3.2.3. Handling Traffic Densities (Different LOS)

- (A) Adding Detectors

- (B) Limiting the data exchange to vehicles near the VR simulator vehicle
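Approach (B) can be illustrated with a simple distance filter: only vehicles within a given radius of the VR simulator vehicle are forwarded to Unity. This is a minimal sketch; the 200 m radius and the dictionary layout are assumptions made for illustration, not values taken from the study.

```python
import math
from typing import Dict, Tuple

Position = Tuple[float, float]


def vehicles_near_ego(ego_xy: Position,
                      vehicle_positions: Dict[str, Position],
                      radius_m: float = 200.0) -> Dict[str, Position]:
    """Keep only the vehicles within radius_m of the VR simulator vehicle."""
    ego_x, ego_y = ego_xy
    return {
        veh_id: (x, y)
        for veh_id, (x, y) in vehicle_positions.items()
        if math.hypot(x - ego_x, y - ego_y) <= radius_m
    }


# Hypothetical positions in metres: one vehicle inside the radius, one outside.
nearby = vehicles_near_ego((0.0, 0.0), {"v1": (0.0, 100.0), "v2": (0.0, 300.0)})
```

With this filter the amount of data exchanged per cycle depends on the local traffic around the participant, not on the total number of vehicles in the network.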

3.3. Develop Performance Functions

- (A) Real-Time Factor (RTF)

- (B) Frames Per Second (FPS)
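The two performance functions can be sketched as follows. The definitions are assumptions based on common usage, since the formulas are not given in this section. The real-time factor is taken as simulated time divided by wall-clock time, as in the Gazebo performance metrics cited in the references. The frame rate is taken as rendered frames divided by wall-clock time.

```python
def real_time_factor(simulated_seconds: float, wall_clock_seconds: float) -> float:
    """Simulated time divided by elapsed wall-clock time (1.0 means real time)."""
    if wall_clock_seconds <= 0:
        raise ValueError("wall_clock_seconds must be positive")
    return simulated_seconds / wall_clock_seconds


def frames_per_second(frame_count: int, wall_clock_seconds: float) -> float:
    """Average number of frames rendered per second of wall-clock time."""
    if wall_clock_seconds <= 0:
        raise ValueError("wall_clock_seconds must be positive")
    return frame_count / wall_clock_seconds


# Example: 10 s of simulated traffic that took 20 s to compute runs at half
# real-time speed; 600 frames rendered in 5 s average 120 frames per second.
rtf_example = real_time_factor(10.0, 20.0)
fps_example = frames_per_second(600, 5.0)
```

An RTF below 1.0 means the co-simulation cannot keep up with real time. When a simulation is paced to the wall clock, the measured RTF does not exceed 1.0.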

4. Analysis of Results

4.1. Traffic Density

4.2. SUMO Simulation Time

4.3. Typical Traffic Scenarios

4.4. Capturing Car-Following and Lane-Changing Dynamics

5. Conclusions and Recommendations

Recommendations

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Pasetto, M.; Barbati, S.D. How the interpretation of drivers’ behavior in virtual environment can become a road design tool: A case study. Adv. Hum.-Comput. Interact. 2011, 2011, 673585.

2. Wulf, F.; Rimini-Döring, M.; Arnon, M.; Gauterin, F. Recommendations supporting situation awareness in partially automated driver assistance systems. IEEE Trans. Intell. Transp. Syst. 2015, 16, 2290–2296.

3. Shi, J.; Liu, M. Impacts of differentiated per-lane speed limit on lane changing behaviour: A driving simulator-based study. Transp. Res. Part F Traffic Psychol. Behav. 2019, 60, 93–104.

4. DeGuzman, C.A.; Donmez, B. Training benefits driver behaviour while using automation with an attention monitoring system. Transp. Res. Part C Emerg. Technol. 2024, 165, 104752.

5. Krasniuk, S.; Toxopeus, R.; Knott, M.; McKeown, M.; Crizzle, A.M. The effectiveness of driving simulator training on driving skills and safety in young novice drivers: A systematic review of interventions. J. Saf. Res. 2024, 91, 20–37.

6. Gilandeh, S.S.; Hosseinlou, M.H.; Anarkooli, A.J. Examining bus driver behavior as a function of roadway features under daytime and nighttime lighting conditions: Driving simulator study. Saf. Sci. 2018, 110, 142–151.

7. Dowling, R.; Skabardonis, A.; Alexiadis, V. Traffic Analysis Toolbox, Volume III: Guidelines for Applying Traffic Microsimulation Modeling Software (No. FHWA-HRT-04-040); United States. Federal Highway Administration. Office of Operations: Washington, DC, USA, 2004.

8. Barcelo, J. Fundamentals of Traffic Simulation; Springer: New York, NY, USA, 2010; Volume 145, p. 439.

9. Brackstone, M.; McDonald, M. Car-following: A historical review. Transp. Res. Part F Traffic Psychol. Behav. 1999, 2, 181–196.

10. Toledo, T.; Koutsopoulos, H.N.; Ben-Akiva, M. Integrated driving behavior modeling. Transp. Res. Part C Emerg. Technol. 2007, 15, 96–112.

11. Transportation Research Board. Highway Capacity Manual, 7th ed.; The National Academies Press: Washington, DC, USA, 2022.

12. Farooq, B.; Cherchi, E.; Sobhani, A. Virtual immersive reality for stated preference travel behavior experiments: A case study of autonomous vehicles on urban roads. Transp. Res. Rec. 2018, 2672, 35–45.

13. Olaverri-Monreal, C.; Errea-Moreno, J.; Díaz-Álvarez, A.; Biurrun-Quel, C.; Serrano-Arriezu, L.; Kuba, M. Connection of the SUMO microscopic traffic simulator and the unity 3D game engine to evaluate V2X communication-based systems. Sensors 2018, 18, 4399.

14. Mohammadi, A.; Park, P.Y.; Nourinejad, M.; Cherakkatil, M.S.B.; Park, H.S. SUMO2Unity: An Open-Source Traffic Co-Simulation Tool to Improve Road Safety. In Proceedings of the 2024 IEEE Intelligent Vehicles Symposium (IV), Jeju, Republic of Korea, 2–5 June 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 2523–2528.

15. Pechinger, M.; Lindner, J. Sumonity: Bridging SUMO and Unity for Enhanced Traffic Simulation Experiences. In Proceedings of the SUMO Conference Proceedings, Berlin-Adlershof, Germany, 13–15 May 2024; Volume 5, pp. 163–177.

16. Liao, X.; Zhao, X.; Wu, G.; Barth, M.; Wang, Z.; Han, K.; Tiwari, P. A game theory based ramp merging strategy for connected and automated vehicles in the mixed traffic: A unity-sumo integrated platform. arXiv 2021, arXiv:2101.11237.

17. Nagy, V.; Májer, C.J.; Lepold, S. Eclipse SUMO and Unity 3D integration for emission studies based on driving behaviour in virtual reality environment. Procedia Comput. Sci. 2025, 257, 396–403.

18. Krajzewicz, D.; Erdmann, J.; Behrisch, M.; Bieker, L. Recent development and applications of SUMO – Simulation of Urban MObility. Int. J. Adv. Syst. Meas. 2012, 5, 128–138.

19. Yu, Y. Use Inspired Research in Using Virtual Reality for Safe Operation of Exemplar Critical Infrastructure Systems. Doctoral Dissertation, Rutgers The State University of New Jersey, School of Graduate Studies, New Brunswick, NJ, USA, 2022.

20. Dupuis, M.; Strobl, M.; Grezlikowski, H. OpenDRIVE 2010 and beyond – status and future of the de facto standard for the description of road networks. In Proceedings of the Driving Simulation Conference Europe, Paris, France, 9–10 September 2010; pp. 231–242.

21. Blochwitz, T.; Otter, M.; Arnold, M.; Bausch-Gall, I.; Elmqvist, H.; Junghanns, A. The Functional Mockup Interface for Tool independent Exchange of Simulation Models. In Proceedings of the 8th International Modelica Conference, Dresden, Germany, 20–22 March 2011.

22. AASHTO (American Association of State Highway and Transportation Officials). A Policy on Geometric Design of Highways and Streets; American Association of State Highway and Transportation Officials: Washington, DC, USA, 2011.

23. Macedonia, M.R.; Zyda, M.J.; Pratt, D.R.; Brutzman, D.P.; Barham, P.T. Exploiting reality with multicast groups: A network architecture for large-scale virtual environments. IEEE Comput. Graph. Appl. 1995, 9, 10–1109.

24. Singhal, S.; Zyda, M. Networked Virtual Environments: Design and Implementation; ACM Press: New York, NY, USA; Addison-Wesley Publishing Co.: Boston, MA, USA, 1999.

25. Koenig, N.; Howard, A. Design and use paradigms for gazebo, an open-source multi-robot simulator. In Proceedings of the 2004 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Sendai, Japan, 28 September–2 October 2004; IEEE: Piscataway, NJ, USA, 2004; Volume 3, pp. 2149–2154.

26. Mirauda, D.; Capece, N.; Erra, U. Sustainable water management: Virtual reality training for open-channel flow monitoring. Sustainability 2020, 12, 757.

27. Pense, C.; Tektaş, M.; Kanj, H.; Ali, N. The use of virtual reality technology in intelligent transportation systems education. Sustainability 2022, 15, 300.

28. Oculus VR. Oculus Best Practices. 2022. Available online: https://developer.oculus.com/ (accessed on 12 August 2025).

29. Gazebo Classic. Performance Metrics [Tutorial]. Gazebo Classic Documentation. 2025. Available online: https://classic.gazebosim.org/tutorials?cat=tools_utilities&tut=performance_metrics (accessed on 25 June 2025).

| Study | Elevated Road Network | Vehicle-Detector LOS Control | Manual VR Vehicle Control | Quantified Integration Performance |
|---|---|---|---|---|
| Olaverri-Monreal et al. [13] | ✗ | ✗ | ✓ | ✗ |
| Mohammadi et al. [14] | ✗ | ✗ | ✓ | ✗ |
| Pechinger and Lindner [15] | ✗ | ✗ | ✗ | ✗ |
| Liao et al. [16] | ✓ | ✗ | ✗ | ✗ |
| Nagy et al. [17] | ✗ | ✗ | ✓ | ✗ |
| This Study | ✓ | ✓ | ✓ | ✓ |

| LOS (A–F) | Step Length (s) | Traffic Density (veh/mile/lane) | RTF (unitless) | FPS (Integer) |
|---|---|---|---|---|
| A | 0.30 | 9.14 | 1.00 | 206 |
| A | 0.20 | 9.05 | 1.00 | 187 |
| A | 0.10 | 9.03 | 1.00 | 177 |
| B | 0.30 | 13.17 | 1.00 | 166 |
| B | 0.20 | 13.46 | 1.00 | 150 |
| B | 0.10 | 14.37 | 0.84 | 142 |
| C | 0.30 | 25.34 | 1.00 | 158 |
| C | 0.20 | 25.25 | 1.00 | 146 |
| C | 0.10 | 22.98 | 0.63 | 137 |
| D | 0.30 | 28.86 | 1.00 | 158 |
| D | 0.20 | 27.00 | 1.00 | 152 |
| D | 0.10 | 28.01 | 0.63 | 143 |
| E | 0.30 | 44.57 | 1.00 | 153 |
| E | 0.20 | 43.91 | 1.00 | 155 |
| E | 0.10 | 40.71 | 0.87 | 145 |
| F | 0.30 | 77.09 | 1.00 | 139 |
| F | 0.20 | 84.10 | 0.96 | 141 |
| F | 0.10 | 80.24 | 0.52 | 123 |
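The densities in the table can be related to the LOS letters with a simple threshold lookup. The sketch below assumes the density thresholds for basic freeway segments from the Highway Capacity Manual (11, 18, 26, 35, and 45 pc/mi/ln). Whether the study used exactly these boundaries is not stated in this section, and the table reports vehicles, not passenger cars, so the mapping is illustrative only.

```python
# Assumed upper density bounds per LOS (HCM basic freeway segments, pc/mi/ln).
LOS_DENSITY_UPPER_BOUNDS = (
    ("A", 11.0),
    ("B", 18.0),
    ("C", 26.0),
    ("D", 35.0),
    ("E", 45.0),
)


def level_of_service(density: float) -> str:
    """Map a traffic density (veh/mile/lane) to an LOS letter from A to F."""
    if density < 0:
        raise ValueError("density must be non-negative")
    for los, upper_bound in LOS_DENSITY_UPPER_BOUNDS:
        if density <= upper_bound:
            return los
    return "F"  # any density above the LOS E bound


# Densities measured at the 0.30 s step length in the table above.
measured = {"A": 9.14, "B": 13.17, "C": 25.34, "D": 28.86, "E": 44.57, "F": 77.09}
classified = {los: level_of_service(density) for los, density in measured.items()}
```

Under these assumed thresholds, every density in the table falls inside the band of its labelled LOS.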

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mohammadi, A.; Cherakkatil, M.S.B.; Park, P.Y.; Nourinejad, M.; Asgary, A. An Open-Source Virtual Reality Traffic Co-Simulation for Enhanced Traffic Safety Assessment. Appl. Sci. 2025, 15, 9351. https://doi.org/10.3390/app15179351
