Abstract

The integration of emerging eXtended Reality (XR) technologies, digital twins (DTs), smart environments, and advanced mobile and wireless networks is set to enable novel forms of immersive interaction and communication. This paper proposes a high-level conceptual framework for shared presence via XR-based communication and interaction within a virtual reality (VR) representation of the digital twin of a smart space. The digital twin is continuously updated and synchronized—both spatially and temporally—with a physical smart space equipped with sensors and actuators. This architecture enables interactive experiences and fosters a sense of co-presence between a local user in the smart physical environment utilizing augmented reality (AR) and a remote VR user engaging through the digital counterpart. We present our lab deployment architecture used as a basis for ongoing experimental work related to testing and integrating functionalities defined in the conceptual framework. Finally, key technology requirements and research challenges are outlined, aiming to provide a foundation for future research efforts in immersive, interconnected XR systems.

1. Introduction

Digital twins (DTs) and emerging eXtended Reality (XR) technologies are widely recognized as key enablers for blending the digital and physical worlds [1]. According to the Digital Twin Consortium (https://www.digitaltwinconsortium.org/ accessed on 30 July 2025), a DT may be defined as a synchronized virtual representation of real-world entities and processes. DTs are increasingly being used in domains outside of industrial production, as building blocks of novel applications based on wireless connectivity and data exchange between the physical environment, objects, people, and the digital replicas thereof. By providing visualization and immersion, as well as accurate alignment and integration of real and virtual objects, XR technologies enhance the human ability to effectively manage and interact with DTs [2,3]. The term XR is often used as an umbrella term for a wide range of “realities”, including Augmented, Mixed, and Virtual Reality (AR, MR, VR) [4].

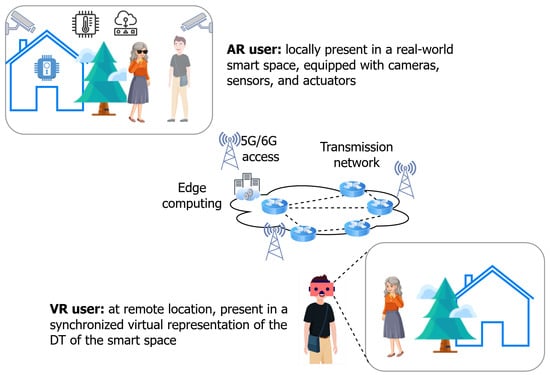

Innovative platforms that allow users to connect and interact within immersive environments signify a major advancement in communication and collaboration services. In this conceptual paper, we aim to outline the research perspectives on technologies needed to facilitate immersive communication and interaction between physically remote users, providing a sense of joint presence in a shared space based on a dynamically updated DT (Figure 1). We consider the dynamically updated DT as a digital representation and information model of a physical smart space, equipped with cameras, sensors, and actuators, in which local and remote users interact with each other and with objects within the actual physical environment. Spatial and temporal synchronization of the DT with the physical environment is achieved on the basis of real-time input from smart space devices. A user located in the physical smart space uses an AR headset, and is referred to as an AR user. Another user (VR user) participates in the shared space remotely, through a VR device and synchronized virtual representation of the DT. The position/orientation of users, as well as any changes in the positions/orientations or states of both real and virtual objects, are synchronized between the AR and VR views.

Figure 1. Shared presence enabled by a dynamically updated digital twin of a smart space.

The key infrastructures needed to achieve this vision encompass various communication networks and remote computing to process and render the visual representation of a DT deployed on cloud and edge infrastructures. Furthermore, tools are needed to create and dynamically update the DT to strengthen the link between the real and virtual world, integrating interoperable Internet of Things (IoT) platforms for managing devices in physical smart spaces. To contribute to ongoing discussions in the field, we present a conceptual framework for immersive and intuitive XR communication and interaction between people and objects in the visual representation of a dynamically updated DT. For clarity, we refer to the overall concept as DIGIPHY. We delve into selected technology requirements for the DT of a smart space supporting XR communication and interaction in the form of research challenges, presenting state-of-the-art trends and avenues for further research. We note that a preliminary version of the concept was published as an extended abstract in [5]. The current manuscript substantially extends previous work by providing a conceptual framework and an in-depth discussion of technology requirements and research challenges.

The paper is organized as follows. Two illustrative use cases are outlined in Section 2. The conceptual framework and research challenges are presented in Section 3 and Section 4, respectively. Section 5 gives a brief overview of our ongoing experimental work, while Section 6 concludes the paper and provides an outlook for future work.

2. Example Use Case Scenarios

Our use cases focus on communication and interaction in assisted living environments and remote training/instruction. The use cases differ primarily in the tightness of interaction coupling and the level of spatial accuracy required.

2.1. Remote Visitor in an Active Assisted Living Care Facility

The concept of Active Assisted Living (AAL) [6] broadly refers to supporting individuals with various special needs to live independently; for example, older adults and people with disabilities. The technology aspect of assisted living involves integrating various “smart” control and monitoring devices into the living environment, for example, a smart home or a specialized care facility. We envision a DT system of an in- and outdoor “smart space” equipped with cameras, sensors (e.g., temperature, rain), and actuators (e.g., opening/closing doors, turning on light switches), supporting an XR service that enables a shared experience of remotely visiting a resident in the care facility in the real world.

In the absence of physical meetings, facilitating such shared experiences could prevent or alleviate social isolation among individuals living in care facilities [6]. The goal is for the remote virtual visitor (VR user) and the person in the care facility (AR user) to interact with each other in real-time, while having a suitably adapted audiovisual display of each other (e.g., a hologram) within a common world view. Their interactions may include switching on the lights or opening doors for each other, and joint activities such as taking a walk, sitting together on a bench, and playing a game of chess [7]. The feeling of shared presence will enable experientially richer interactions through XR (“richer” as compared to only voice and/or video calls).

2.2. Remote Instructor in an Industrial Environment

With cost-effective and adaptable practical experience in a simulated environment, DTs can help individuals gain a deeper understanding of complex industrial systems, e.g., to practice diagnostics and repairs. For example, “Industrial Metaverse” [8] aims at supporting remote maintenance tasks by leveraging avatars, DTs, and collaborative XR technologies. We expand the idea of enabling remote training and collaboration through a DT system by using XR-supported remote communication and interaction. We consider the use case of a remote expert (VR user), represented in the virtual DT of the smart space, providing assistance to a less experienced user or trainee (AR user) located in the physical smart space. The system enables the VR user to remotely operate robotic devices located in the physical space, provide real-time spatial annotations, and demonstrate operations using virtual replicas of real-world objects. Spatial and temporal synchronization between the DT and the real physical world is one of the key challenges for analysis and simulation of various situations of interest.

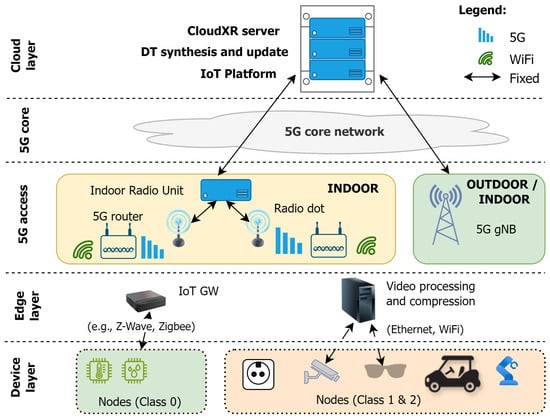

2.3. Deployment Architecture

Our lab deployment architecture to support the use cases is shown in Figure 2. Diverse IoT devices employed at the (bottom) Device layer can be classified as Class 0, Class 1, and Class 2, as specified in RFC 7228 [9]. Class 0 devices are severely constrained and typically use a constrained communication protocol for data transmission to gateways (in the Edge layer), since they do not have sufficient resources to run the complete IP stack. Class 1 devices have more resources than Class 0 devices but still cannot easily run a full protocol stack (e.g., HTTP over TLS); they instead use protocols designed for constrained nodes (e.g., CoAP over UDP) to interact with cloud and edge services without a gateway. Class 2 devices have increased resource capabilities as compared to Class 1 and can employ a full implementation of the IP stack to connect directly to IoT platforms and other cloud or local/edge services.

Figure 2. Lab deployment architecture.

Considering these device capabilities, diverse wireless network technologies are used to accommodate requirements ranging from low-power, low-data-rate sensor transmissions to high-bandwidth AR/VR video streams.
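The device classes described above can be illustrated with a minimal sketch. It assumes the approximate memory boundaries given in RFC 7228 (about 10 KiB of RAM and 100 KiB of flash for Class 1, about 50 KiB and 250 KiB for Class 2) and treats them as hard thresholds, which is a simplification. The `Device` type, the function names, and the returned path descriptions are hypothetical and are not part of the lab deployment.

```python
from dataclasses import dataclass

KIB = 1024


@dataclass(frozen=True)
class Device:
    name: str
    ram_bytes: int    # data size (RAM)
    flash_bytes: int  # code size (flash)


def rfc7228_class(device: Device) -> int:
    """Return 0, 1, or 2 using approximate RFC 7228 boundaries as thresholds."""
    if device.ram_bytes >= 50 * KIB and device.flash_bytes >= 250 * KIB:
        return 2  # devices beyond Class 2 are also reported as 2 in this sketch
    if device.ram_bytes >= 10 * KIB and device.flash_bytes >= 100 * KIB:
        return 1
    return 0


def connectivity_path(device_class: int) -> str:
    """Map a device class to the connectivity option described in the text."""
    paths = {
        0: "constrained link to an edge gateway",
        1: "constrained application protocol, no gateway",
        2: "full IP stack, direct connection to IoT platform",
    }
    return paths[device_class]


if __name__ == "__main__":
    door_sensor = Device("door-sensor", ram_bytes=16 * KIB, flash_bytes=128 * KIB)
    print(connectivity_path(rfc7228_class(door_sensor)))
```

In practice, the class of a device is a design-time property rather than something computed at runtime; the sketch only makes the decision rule explicit.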

The (private) 5G network (5G access and 5G core) and the servers shown in the Cloud layer are parts of the experimental setup in our lab. A CloudXR server is included to support offloading of computationally intensive tasks (e.g., physics simulation, localization, and mapping) and graphics rendering from the XR headsets to a cloud infrastructure with powerful GPUs and CPUs. Rendered and encoded content is then streamed to end-user devices with low latency using real-time streaming protocols. Further details regarding DT synthesis and update, as well as the integration of IoT data via an IoT platform, are discussed in the following section.

3. Conceptual Framework

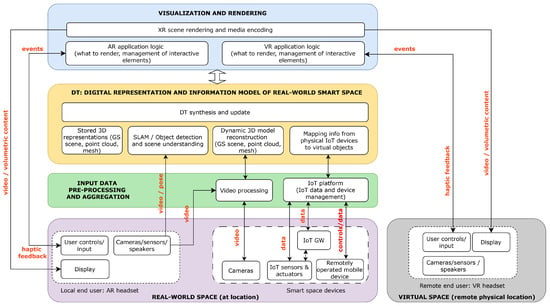

A conceptual framework of the proposed DIGIPHY system and its key functionalities is given in Figure 3, loosely inspired by the DT system architecture specified by the Digital Twin Consortium (https://www.digitaltwinconsortium.org/glossary/glossary/ accessed on 30 July 2025). Starting from the bottom of the picture, we distinguish between devices belonging to the real-world physical space (including AR headset) and the VR headset located remotely (and represented in virtual space). Various input data feeds, including video from static cameras as well as the AR headset, user events from both AR and VR headsets, VR user controller events, user pose, and IoT sensor data, are collected, (pre)processed, and aggregated as input to the DT, i.e., the digital representation and information model of the real-world environment. Output flows sent from the DT include 3D output sent to both AR and VR displays, and actuation commands sent to IoT devices and remotely operated mobile devices (e.g., mobile robotic platform).

Figure 3. DIGIPHY conceptual framework: XR communication and interaction (shared presence) within a dynamically updated DT.

Four key components that need to be integrated for DT synthesis and update include the following:

- Stored 3D representations, referring to currently displayed static point clouds, meshes, or Gaussian Splatting-based 3D reconstructions, which can be constructed in advance (e.g., the original 3D environment model);

- Simultaneous Localization and Mapping (SLAM)/object detection: spatial computation output used to accurately position the AR user and to understand the scene;

- Dynamic 3D model reconstruction, referring to dynamically generated models used to update the stored 3D representations if changes are detected;

- Mapping information from physical IoT devices (sensors/actuators managed by an IoT platform) to virtual objects in the DT space.
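The last component in the list, mapping physical IoT devices to virtual objects, can be sketched as a small lookup structure. This is an illustrative assumption about how such a mapping might be held in memory, not the DIGIPHY implementation; the `VirtualObject` and `TwinRegistry` names, the JSON message fields (`device_id`, `property`, `value`), and the example identifiers are all hypothetical.

```python
import json
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple


@dataclass
class VirtualObject:
    object_id: str
    position_m: Tuple[float, float, float]  # position in the DT coordinate frame
    state: Dict[str, object] = field(default_factory=dict)


class TwinRegistry:
    """Binds physical device identifiers to virtual objects in the DT."""

    def __init__(self) -> None:
        self._by_device: Dict[str, VirtualObject] = {}

    def bind(self, device_id: str, obj: VirtualObject) -> None:
        self._by_device[device_id] = obj

    def apply_reading(self, payload: str) -> Optional[VirtualObject]:
        """Apply one JSON-encoded device update; return the updated object."""
        message = json.loads(payload)
        obj = self._by_device.get(message["device_id"])
        if obj is None:
            return None  # unknown device: nothing in the DT to update
        obj.state[message["property"]] = message["value"]
        return obj


if __name__ == "__main__":
    registry = TwinRegistry()
    registry.bind("lamp-01", VirtualObject("lamp-mesh-01", (1.0, 2.0, 0.5)))
    updated = registry.apply_reading(
        '{"device_id": "lamp-01", "property": "on", "value": true}'
    )
    print(updated)
```

The reverse direction (a user action on a virtual object producing an actuation command for the bound device) would use the same binding, looked up by object identifier.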

With respect to the DT, we note that the functionality may be extended beyond synthesis and update to further include simulation models of real-world systems, processes and entities based on collected contextual data. However, such functionalities are considered outside the scope of the proposed framework.

Following DT synthesis and update, the visualization and rendering functionality includes the AR and VR application logic, responsible for making decisions on what digital content to add to the scene and what to render. The XR scene rendering and media encoding is responsible for generating graphics to be shown to the user on the respective AR or VR display. We note that both the application logic and rendering functionalities can be run locally on the client device or be pushed onto the edge cloud. Due to stringent latency requirements, certain functionality (e.g., pose correction) will likely continue to be executed on the XR device.

Key technology requirements and research challenges supporting practical realization of the proposed conceptual framework are discussed in the following section.

4. Technology Requirements and Research Challenges

4.1. 3D Model Reconstruction

In the context of 3D model reconstruction, the main challenge we highlight is real-time updating and rendering of a 3D visual representation of the DT of the smart space. Selective updating of an initially reconstructed 3D model based on detected changes in the environment requires sophisticated techniques for data integration. For detecting changes, we foresee using an object recognition system, combined with breaking down the environment 3D model into smaller, more manageable segments, followed by determining the exact position of all partial reconstructions in the complete model of the environment.

In the context of photorealistic scene reconstruction and use of radiance field techniques for rendering, studies have investigated the applicability of Neural Radiance Fields (NeRFs) [10]. NeRF photogrammetry algorithms use deep learning to generate 3D representations of scenes from camera data (lens characteristics and camera positions) and 2D images [11]. Despite being able to achieve high image quality, NeRFs have been shown to suffer from significant time and memory inefficiencies [12], with computationally intensive methods requiring extensive training times and rendering resources [13].

Following the need for faster and more efficient rendering, 3D Gaussian Splatting (3D-GS) emerged as an innovative technique in 3D graphics and scene reconstruction. Popularized in 2023 by Kerbl et al. [14], 3D-GS delivers a breakthrough in real-time, high-resolution image rendering without dependence on deep neural networks [15]. A 3D-GS model consists of a dense distribution of “splats” (ellipsoids in 3D space) modeled as Gaussian functions in 3D space and characterized by parameters such as position, orientation, color, and opacity. 3D-GS has recently gained attention for its potential applications in novel view synthesis, real-time graphics, VR/AR experiences [16], and visualization of DT models due to its efficient handling of complex geometry and textures [17]. Moreover, 3D-GS can be integrated into commercial game engines such as Unity, with plugins available [18]. The lack of a rigid mesh structure allows 3D-GS to be more flexible for tasks that involve dynamic environments. Additionally, the smooth representation offered by Gaussian functions helps mitigate artifacts typically seen in meshed models and point clouds, particularly in regions where surface data is sparse or occluded. A challenge relevant to the context of the conceptual framework proposed in Section 3 is related to the reconstruction of dynamic scenes from sparse input [19,20], depending on the number and position of available camera feeds.
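For readers unfamiliar with the representation, the splat parameters listed above can be written compactly. The following is a sketch of the formulation as it is commonly stated following [14]; the symbols are introduced here for illustration and are not used elsewhere in this paper. Each splat is an anisotropic 3D Gaussian with mean position $\boldsymbol{\mu}$ and covariance $\Sigma$, where $\Sigma$ is factorized into a rotation $R$ (orientation) and a diagonal scaling $S$, and the color $C$ of a pixel is obtained by alpha-blending the $N$ depth-ordered splats that overlap it:

```latex
G(\mathbf{x}) = \exp\!\left(-\tfrac{1}{2}\,(\mathbf{x}-\boldsymbol{\mu})^{\top}\,\Sigma^{-1}\,(\mathbf{x}-\boldsymbol{\mu})\right),
\qquad
\Sigma = R\,S\,S^{\top}R^{\top},
\qquad
C = \sum_{i=1}^{N} c_i\,\alpha_i \prod_{j=1}^{i-1}\left(1-\alpha_j\right)
```

Here $c_i$ is the color of the $i$-th splat and $\alpha_i$ is its opacity weighted by the projected Gaussian at the pixel.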

With respect to real-time rendering, an additional challenge is reducing memory usage (related to millions of Gaussians that represent the scene) while maintaining sufficient quality [21]. Research directions include reducing the number of 3D Gaussians [22] and applying novel compression methods [23]. Furthermore, while GS models are typically trained or optimized offline, dynamic scenes require incremental updates or re-optimization of Gaussians as objects change or move [24]. Accurate object tracking and segmentation are necessary to identify which parts of the scene have changed and need to be adapted, a process that is highly computationally intensive.
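To give a rough sense of the memory footprint mentioned above, the following back-of-the-envelope sketch estimates the size of an uncompressed scene. It assumes each Gaussian stores position (3 values), scale (3), rotation as a quaternion (4), opacity (1), and view-dependent color as spherical harmonics coefficients, all as 32-bit floats. This layout is an assumption for illustration; actual implementations and file formats differ.

```python
FLOAT_BYTES = 4  # assumed 32-bit floats


def floats_per_gaussian(sh_degree: int = 3) -> int:
    """Number of stored values per Gaussian under the assumed layout."""
    position, scale, rotation_quaternion, opacity = 3, 3, 4, 1
    # (degree + 1)^2 spherical harmonics coefficients per RGB channel.
    sh_coefficients = 3 * (sh_degree + 1) ** 2
    return position + scale + rotation_quaternion + opacity + sh_coefficients


def scene_megabytes(num_gaussians: int, sh_degree: int = 3) -> float:
    """Uncompressed scene size in MB (10^6 bytes)."""
    return num_gaussians * floats_per_gaussian(sh_degree) * FLOAT_BYTES / 1e6


if __name__ == "__main__":
    for count in (1_000_000, 5_000_000):
        print(count, "Gaussians:", scene_megabytes(count), "MB")
```

Under these assumptions, one million Gaussians occupy about 236 MB, which illustrates why both pruning the number of Gaussians and compressing their attributes are active research directions.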

Finally, ongoing challenges relate to the integration of GS-created 3D environments into game engines (e.g., Unity) as part of the production pipeline to deliver immersive applications [16], coupled with the segmentation of parts of the scene to allow for editing and interactions, and the incorporation of various 3D assets into the scene [25].

For more in-depth discussions of challenges and potential avenues for future research, we refer the interested reader to recently published survey papers [15,26,27].

4.2. Localization of AR User and Spatial Synchronization Between Digital and Physical Environments

The synchronization of DT representations with the physical world, especially in AR deployments, is a rapidly advancing field that relies on multiple localization technologies. It is necessary to accurately position the AR user (located in the physical smart space) within the spatial model of the environment so that the remote VR user is presented with the correct AR user’s position. Furthermore, AR elements displayed to the AR user must be correctly positioned in space. To achieve this, we propose combining three localization methods as follows:

- SLAM of AR glasses: Simultaneously building a map of an unknown environment while keeping track of the device location within that environment [28]. SLAM uses sensors such as cameras, inertial measurement units (IMUs), and LiDAR to track movement and map surroundings. It provides only relative positioning and cannot determine the user’s initial position in absolute terms.

- Visual Positioning System (VPS) localization of AR glasses: Provides precise absolute positioning by matching the user’s current view (captured by the AR glasses’ camera) against a database of 3D maps created beforehand [29]. VPS allows the system to determine the user’s exact location within the pre-mapped area.

- Optical tracking using on-site cameras: Involves the use of external cameras positioned around the environment to track the user’s movement through optical means. Computer vision algorithms, often powered by neural networks, analyze video feeds from cameras to detect and track the user’s position. The detected position is mapped to the spatial model using known camera positions.

An open research challenge is how to design a highly accurate positioning algorithm that aggregates data from the three localization methods (SLAM, VPS, and optical tracking) while resolving discrepancies and ensuring real-time processing.
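As a simple baseline for the aggregation problem, and not the algorithm we propose, position estimates from the three methods could be combined by inverse-variance weighting. The sketch below assumes that all estimates are already expressed in the DT coordinate frame (for SLAM, this implies the relative track has been anchored, e.g., by an earlier VPS fix), that they refer to the same time instant, and that each source reports an isotropic 1-sigma uncertainty. The `Estimate` type and all numeric values are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Estimate:
    source: str        # e.g., "slam", "vps", "optical"
    position_m: Vec3   # position in the DT frame (assumed already aligned)
    sigma_m: float     # assumed isotropic 1-sigma uncertainty in meters


def fuse(estimates: Sequence[Estimate]) -> Tuple[Vec3, float]:
    """Inverse-variance weighted mean and the resulting 1-sigma uncertainty."""
    if not estimates:
        raise ValueError("at least one estimate is required")
    weights = [1.0 / (e.sigma_m ** 2) for e in estimates]
    total = sum(weights)
    fused = tuple(
        sum(w * e.position_m[axis] for w, e in zip(weights, estimates)) / total
        for axis in range(3)
    )
    return fused, (1.0 / total) ** 0.5


if __name__ == "__main__":
    position, sigma = fuse([
        Estimate("slam", (1.02, 0.00, 2.01), 0.10),
        Estimate("vps", (1.00, 0.00, 2.00), 0.05),
        Estimate("optical", (1.10, 0.00, 1.95), 0.20),
    ])
    print(position, sigma)
```

This baseline does not resolve discrepancies: a single grossly wrong estimate still shifts the result, and it ignores timing differences between sources. Handling these cases in real time is part of the open challenge.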

4.3. Interoperability of Smart-Space Data and Interaction Mechanisms Between Digital and Physical Environments

Important elements of DTs are IoT platforms that manage sensors and actuators in the real world and dynamically update their digital replicas by enabling bi-directional flow of data between the real and the digital worlds. Our use cases illustrate scenarios in which a remote user observes and intuitively controls or interacts with the smart space environment via a VR-based visual representation of a DT in real-time, while a local user can explore real-world sensor data and actuation functions through an AR interface. In this context, object detection in immersive environments is enhanced with features which tag and identify IoT devices as actionable objects [30].

Major challenges in such scenarios are interoperability and security for IoT platforms [31]. A smart space information model is needed to provide semantics to the space and enable the interoperability between the IoT platform, which manages smart space devices, and the DT. Appropriate communication protocols are needed for the efficient transmission of real-world data through the IoT platform to the DT for (near) real-time updates of status, interactions, and remote control of real-world devices. The commonly used application protocols include MQTT, CoAP, and HTTP; however, given that none of these protocols consider (near) real-time data transmission, the applicability of other protocols (such as QUIC) should be explored, as well as approaches based on goal-oriented semantic communication [32].

Another significant challenge for the DT in our context is that interactions with devices, either from the AR or VR user, happen in (near) real time, making it necessary that management and processing services be in close proximity to the devices themselves. Various computing nodes deployed in the edge computing continuum facilitate data processing close to the physical environment to alleviate issues associated with limited bandwidth and higher latency when relying solely on cloud resources for DT provisioning. Finally, processing data close to the physical world is advantageous in terms of security and privacy.

Furthermore, efficient interaction among devices, humans, and objects in the hybrid, digital–physical world, while ensuring the Quality of Experience (QoE) from the user’s perspective, requires novel algorithms for service orchestration. For the visual representation of the DT, the dynamics between local (edge) and remote (cloud) processing will be explored. For example, computation-intense tasks (such as rendering) can be executed on an edge server, while user pose correction mechanisms can be executed on the XR device.
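The trade-off between on-device and edge execution can be made concrete with a minimal placement rule. This is an illustrative sketch and not an orchestration algorithm: it assumes a single task, known execution times, and a fixed network round-trip time. The `Task` type and all timing values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    name: str
    device_ms: float    # assumed execution time on the XR device
    edge_ms: float      # assumed execution time on an edge server
    deadline_ms: float  # assumed latency bound for this task


def place(task: Task, network_rtt_ms: float) -> str:
    """Return 'device', 'edge', or 'infeasible' for one task."""
    # Offloading pays the network round trip on top of the edge compute time.
    candidates = {
        "device": task.device_ms,
        "edge": task.edge_ms + network_rtt_ms,
    }
    feasible = {
        where: ms for where, ms in candidates.items() if ms <= task.deadline_ms
    }
    if not feasible:
        return "infeasible"
    return min(feasible, key=feasible.get)


if __name__ == "__main__":
    rendering = Task("rendering", device_ms=40.0, edge_ms=6.0, deadline_ms=20.0)
    pose_correction = Task("pose correction", device_ms=2.0, edge_ms=1.0, deadline_ms=5.0)
    for task in (rendering, pose_correction):
        print(task.name, "->", place(task, network_rtt_ms=10.0))
```

With these example values, rendering is placed on the edge while pose correction stays on the device, because the round trip alone exceeds its deadline. A practical orchestrator would additionally have to account for variable network conditions, shared server load, and QoE.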

4.4. Data Transmission over 5G and Future Networks

Challenges with respect to dimensioning and allocating network resources stem from highly heterogeneous and uncertain requirements (due to diverse traffic flows), with dynamic traffic patterns resulting from six degrees-of-freedom (6DoF) movements, gestures, and real-time user interactions with the system [33]. With respect to the motion-to-photon latency, studies indicate it should be below 20 ms to avoid cybersickness [34,35]. In the context of DT data synthesis and visualization, we identify several broad categories of XR-related data flows as follows:

- User position and orientation data: sensory and 6DoF tracking information collected by the AR/VR headset and used for localization and viewport generation (determining which part of the scene to display to the user);

- User controls: Input events for managing interactive elements; haptic feedback;

- Environment data synchronization: Camera feeds for dynamic 3D model reconstruction;

- XR streaming: AR/VR content rendered in the cloud and streamed to end-user displays in the form of stereo video or volumetric content;

- Voice data streaming between users.

Clearly, data compression can enable faster transmission and efficient use of network resources. Furthermore, we recognize that varying degrees of offloading computationally intensive tasks (such as SLAM, object detection and tracking, and rendering) from the headset to the network create different networking requirements. A promising solution relies on combining 5G and edge cloud for offloading rendering tasks, referred to as Cloud XR [36,37], with existing solutions including NVIDIA’s CloudXR Suite (https://developer.nvidia.com/cloudxr-sdk, accessed on 30 July 2025). In such cases, high-fidelity XR content is streamed to thin-client devices. The latency and bandwidth requirements for such data streams can be met with network slicing and QoS prioritization in 5G networks. In efforts driven by the Video Quality Experts Group (VQEG) Immersive Media Group (IMG), the VQEG 5G KPI working group contributed to the informative ITU-T Technical Report “QoE requirements for real-time multimedia services over 5G networks” [38], where scenarios involving the offloading of heavy-duty algorithms to 5G edge computing platforms are considered.
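The role of compression in XR streaming can be illustrated with simple arithmetic. The sketch below computes the raw bit rate of an uncompressed stereo video stream and the bit rate after applying an assumed compression ratio. The resolution, frame rate, and ratio are hypothetical example values, not measurements from our setup or properties of a particular headset or codec.

```python
def raw_bitrate_bps(width_px: int, height_px: int, fps: int,
                    bits_per_pixel: int = 24, views: int = 2) -> int:
    """Uncompressed video bit rate in bit/s (views=2 for stereo rendering)."""
    return width_px * height_px * fps * bits_per_pixel * views


def compressed_bitrate_mbps(raw_bps: int, compression_ratio: float) -> float:
    """Bit rate in Mbit/s after applying an assumed compression ratio."""
    if compression_ratio <= 0:
        raise ValueError("compression_ratio must be positive")
    return raw_bps / compression_ratio / 1e6


if __name__ == "__main__":
    raw = raw_bitrate_bps(width_px=2000, height_px=2000, fps=90)
    print("raw:", raw / 1e9, "Gbit/s")
    print("compressed:", compressed_bitrate_mbps(raw, compression_ratio=300), "Mbit/s")
```

For these example values the raw stream is about 17.3 Gbit/s, and an assumed 300:1 ratio brings it to roughly 58 Mbit/s. Stronger compression lowers the bit rate further but adds encoding and decoding delay, which counts against the motion-to-photon budget.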

Ongoing efforts within standards organizations such as 3GPP are centered on specifying key ‘5G and beyond’ technologies to support various XR service scenarios [39]. 5G-Advanced features such as 5G New Radio enhancements (e.g., providing information about XR application requirements to the radio access network) continue to evolve to support the demands of emerging XR applications [40]. Initial proposals have been made for incorporating 5G-XR functionalities within the 5G system; however, details regarding the offered services, exposed APIs, and communication with other core 5G functions are still being developed. In the context of immersive multi-user mobile XR services, millimeter-wave links have recently been envisioned as a potential last-hop connectivity option for head mounted displays (HMDs) [41]. Finally, 6G connectivity is foreseen as providing the true ultra-low delay, ultra-high throughput, high reliability, and security necessary for collaboration and communication via a dynamic high-fidelity DT [42,43,44]. The Horizon Europe project 6G-XR (https://6g-xr.eu/ accessed on 30 July 2025) further looks to enable next-generation XR services and infrastructures that aim to provide beyond-state-of-the-art capabilities towards the 6G era.

4.5. QoE for Cooperative and Collaborative XR

In designing advanced XR services that facilitate communication and collaboration, the goal is to achieve an intuitive digital–physical user experience [45]. This implies achieving user-perceived presence and immersion by enabling natural interaction between the digital and physical worlds, as well as the natural appearance and behavior of people depicted in the virtual world, including their gestures and movement. QoE for immersive communications continues to be a highly active area of research [46].

With respect to understanding end-user needs and requirements, ITU-T Rec. P.1320 [47] specifies QoE assessment procedures and metrics for the evaluation of XR telemeetings, outlining various categories of QoE influence factors and use cases (adopted from [39]). The Recommendation targets XR services taking place in a virtual location or combining virtual and real locations, services with mixtures of real and virtual participants, services with augmented elements for collaboration, virtual conferences, and joint teleoperation of equipment. Ongoing joint efforts between the VQEG Immersive Media Group (IMG) and ITU-T Study Group 12 are targeted towards specifying interactive test methods for subjective assessment of XR communications using structured tasks for interaction and environment evaluation (draft Rec. ITU-T P.IXC, as of Jan. 2025) [48].

Relevant indicators of QoE have been referred to as QoE constituents, specified as being “formed during an individual’s experience of a service” [47]. Methods involving questionnaires, conversation and behavior analysis, performance measures, and physiological measures are proposed for assessing the following QoE constituents: simulator sickness (“cybersickness”); perceived immersion (in AR, refers to how augmented virtual objects are considered part of the real world environment, while in VR refers to the feeling of being fully immersed in a virtual environment); presence, co-presence, and social presence (perceiving oneself as existing within an environment, alone or with others); plausibility (realistic responses produced by an environment); fatigue and cognitive load; ability to achieve goals; and ethics of XR use.

Open research challenges remain with respect to designing and evaluating different user representations, interaction modalities [7], navigation techniques, and simultaneous use of different AR/VR environments by participants. In tasks requiring interaction and/or collaboration between users in separate physical and virtual spaces, effective communication and shared control and interaction mechanisms are essential [8]. Test methods and findings for collaboration and engagement of users in AR/VR remote assistance environments, reported in [49], provide valuable initial insights. New evaluation methods for collaborative XR are needed, as current tools like questionnaires often fail to capture complex social interactions and immersion.

4.6. Privacy and Ethics

As XR applications can involve the collection of a substantial amount of personal user data, mechanisms are needed to ensure data protection and privacy [50]. According to [51], “existing privacy regulations that address virtual or real-world privacy issues fail to adequately address the convergence of realities that exists in XR”. Our conceptual framework involves the use of both on-device (XR headset) and external cameras, microphones, and sensors to capture and display people and their surroundings in XR. In a report published as a result of work within the IEEE Global Initiative on Ethics of Extended Reality (XR) [52], one of the highlighted key concerns related to public use of XR and corresponding visual and auditory data capture is ensuring the anonymity and privacy of bystanders, i.e., people physically within the sensing range of an AR headset. Thus, we acknowledge the need to ensure that bystanders are made aware of data capture [53] and have the capacity to revoke assumed consent for capture. Where there is a risk that the privacy of bystanders would be violated, avatars (or any other form of representation) of bystanders not directly involved in the communication scenario should be anonymized (i.e., they should not be identifiable). Privacy concerns and the handling of potentially sensitive data should thus be carefully considered and integrated into the technology design phase, following an ethically aware design approach [54].

As the impact of XR technologies on individuals and society is still under exploration, the corresponding standards are in the early stages of development. Particularly significant is the development of the IEEE P7016 Standard for Ethically Aligned Design and Operation of Metaverse Systems [55] by the IEEE Social Implications of Technology Standards Committee (SSIT/SC). The analysis of ethical issues should be carried out in parallel with the development of targeted technologies.

4.7. Summary of Research Challenges

To help readers understand the interdisciplinary scope and future research directions, Table 1 summarizes key research challenges, along with future directions and technologies.

Table 1. Summary of research challenges across domains.

5. Ongoing Work

In our ongoing experimental work, we are testing and integrating functionalities defined in the conceptual framework while working towards the development of end-to-end prototypes that showcase target use cases. The prototypes have so far progressed through early-stage development and validation in a lab environment. The current experimental setup provides a foundation for evaluating the range of capabilities of integrated technologies, and for refining the requirements that will inform future research and technological solutions. The targets we aim to achieve with the end-to-end system implementation, as defined at the time of writing, are as follows:

- Deviation of surface position between the synchronized virtual representation of the DT and the physical real-world smart space less than 2%;

- Change in the real-world smart space visible in the virtual representation of the DT with less than 5 s delay;

- Change in the real-world smart space visible in the virtual representation of the DT with position deviation less than 5 cm;

- Current position of each user displayed with a deviation from the actual position less than 5 cm and position update delay less than 2 s;

- Data from sensors displayed with a delay less than 2 s;

- Anonymized point-cloud hologram (GDPR compliant) displayed to ensure bystander privacy;

- Explored impact of network performance on user experience;

- Identified key quality metrics for assessing end-user QoE.
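The quantitative targets in the list above lend themselves to an automated check once measurements become available. The sketch below encodes the stated thresholds and reports which targets are not met; the metric names and the example measurements are hypothetical, and the qualitative targets (anonymization, network impact, QoE metrics) are outside its scope.

```python
from typing import Dict, List

# Upper bounds taken from the target list; every target is "less than" the bound.
TARGETS: Dict[str, float] = {
    "surface_deviation_pct": 2.0,
    "dt_update_delay_s": 5.0,
    "change_position_deviation_cm": 5.0,
    "user_position_deviation_cm": 5.0,
    "user_position_update_delay_s": 2.0,
    "sensor_display_delay_s": 2.0,
}


def unmet_targets(measured: Dict[str, float]) -> List[str]:
    """Return the sorted names of targets that are missing or not met."""
    return sorted(
        name
        for name, bound in TARGETS.items()
        if name not in measured or measured[name] >= bound
    )


if __name__ == "__main__":
    example = {  # hypothetical measurements, not experimental results
        "surface_deviation_pct": 1.4,
        "dt_update_delay_s": 6.2,
        "change_position_deviation_cm": 3.0,
        "user_position_deviation_cm": 4.1,
        "user_position_update_delay_s": 0.8,
        "sensor_display_delay_s": 1.1,
    }
    print(unmet_targets(example))
```

Treating a missing measurement as unmet keeps the check conservative while individual components are still being integrated.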

At this stage, we have developed and are testing individual components, with some early results already published [5,7] or to appear [56], and others currently in preparation. A fully integrated end-to-end system has not yet been realized. Table 2 summarizes the current development status of key DIGIPHY components.

Table 2. Current development status of key DIGIPHY components.

With respect to 3D model reconstruction, we are evaluating and developing solutions for real-time updating and rendering of a 3D visual representation of the DT of the smart space using Gaussian Splatting techniques. Our preliminary setup uses monocular depth estimation and Gaussian Splatting reconstruction to generate a usable 3D GS environment from a single stationary camera feed. We are currently working on merging scans from multiple stationary cameras to increase environment coverage, reduce occluded geometry, and improve 3D representation accuracy. Ongoing activities include investigating techniques for real-time updates and difference highlighting. With respect to the integration of IoT data, we are testing the integration of various communication technologies including MQTT and WebSocket.
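As an illustration of what difference highlighting between two scans could look like, the sketch below compares two point sets on a voxel grid and reports which cells became occupied or empty. This is a generic occupancy comparison and not the technique under development in the project; the function names are hypothetical, and the 5 cm default voxel size is an assumption chosen only to mirror the position deviation target.

```python
import math
from typing import Iterable

Point = tuple[float, float, float]
Voxel = tuple[int, int, int]


def voxelize(points: Iterable[Point], voxel_size: float) -> set[Voxel]:
    """Map each point (in metres) to the integer index of the voxel containing it."""
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    return {
        (
            math.floor(x / voxel_size),
            math.floor(y / voxel_size),
            math.floor(z / voxel_size),
        )
        for x, y, z in points
    }


def scene_difference(
    before: Iterable[Point],
    after: Iterable[Point],
    voxel_size: float = 0.05,  # assumed 5 cm grid, not a project parameter
) -> tuple[set[Voxel], set[Voxel]]:
    """Return (appeared, disappeared) voxel sets between two scans."""
    old = voxelize(before, voxel_size)
    new = voxelize(after, voxel_size)
    return new - old, old - new
```

Points that move by less than one voxel within the same cell are treated as unchanged, so the grid size acts as a noise tolerance. A real pipeline would additionally need to handle sensor noise, occlusion, and registration between cameras.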

Furthermore, we are conducting a number of user studies to assess various QoE constituents corresponding to user-to-user and user-to-object interaction scenarios. Initial results have provided insights into the object manipulation techniques preferred by AR and VR users [7], as well as preferred methods for teleoperating a remote mobile robot via a VR-based interface [56].

In our first study [7], we investigated the impact of different virtual object manipulation techniques on user experience and task performance in a networked cross-reality application. A total of 40 participants took part, organized into pairs, with one participant situated in an AR environment in a real-world lab and the other in its corresponding VR digital representation. Participants were tasked with moving virtual chess pieces to set positions using three manipulation techniques across two chess set sizes. We measured task completion time, error rates, and user feedback on experience, usability, and interaction quality. The Pick, Drag, and Snap (PDaS) technique outperformed the others, yielding faster task completion and higher satisfaction. Furthermore, the AR group completed tasks significantly faster than the VR group, highlighting key design considerations for cross-reality systems.

In a second study [56], involving 27 participants, we evaluated teleoperation of a remote mobile robot via a VR-based interface in terms of user experience and navigation task completion time for different teleoperation view modes (first-person view, VR view with camera feed, VR view without camera feed) and robot control methods (manual vs. point-and-click). Results indicate that users preferred the combination of a real-time camera feed with a virtual representation and manual control.

The findings from both studies will be utilized in the two use cases outlined in Section 2. Interested readers are encouraged to refer to [7,56] for further study details.

Finally, we are focusing on the utilization of 5G networks for system evaluation and validation. Network performance measurements were conducted using UDP and TCP protocols immediately after the initial provisioning of the 5G infrastructure, yielding preliminary results for throughput and latency. As a next (ongoing) step, various data flows (illustrated in Figure 3) are being mapped to 5G Quality of Service (QoS) identifiers to enable evaluation of 5G QoS mechanisms in meeting end-user requirements.
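To make the mapping step more concrete, the sketch below selects a standardized 5G QoS Identifier (5QI) for a flow from its delay requirement. The 5QI values and packet delay budgets are a small subset recalled from the standardized table in 3GPP TS 23.501 and should be verified against the specification. The `select_5qi` helper and its selection rule are assumptions for illustration, and the sketch does not reflect the actual flows of Figure 3 or the mapping used in the project.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FiveQI:
    value: int
    resource_type: str  # "GBR" or "Non-GBR"
    packet_delay_budget_ms: int


# Subset of standardized 5QIs (3GPP TS 23.501); verify against the specification.
STANDARD_5QI = [
    FiveQI(80, "Non-GBR", 10),   # low-latency eMBB applications, augmented reality
    FiveQI(3, "GBR", 50),        # real-time gaming
    FiveQI(7, "Non-GBR", 100),   # voice, live video streaming, interactive gaming
    FiveQI(9, "Non-GBR", 300),   # default best-effort traffic
]


def select_5qi(max_delay_ms: float, allow_gbr: bool = False) -> Optional[FiveQI]:
    """Pick the 5QI with the loosest delay budget that still meets the requirement.

    Hypothetical rule: choosing the loosest sufficient budget avoids requesting
    stricter treatment than the flow needs. Returns None if nothing qualifies.
    """
    candidates = [
        q for q in STANDARD_5QI
        if q.packet_delay_budget_ms <= max_delay_ms
        and (allow_gbr or q.resource_type == "Non-GBR")
    ]
    if not candidates:
        return None
    return max(candidates, key=lambda q: q.packet_delay_budget_ms)
```

For example, a hypothetical pose-update flow with a 20 ms requirement would map to 5QI 80, while sensor telemetry that tolerates 2 s would map to 5QI 9. Note that the packet delay budget covers only the path between the device and the user plane function, so application-level delay targets need additional margin.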

6. Conclusions and Outlook

In this paper, we highlight the critical technologies and research challenges that we consider relevant to enable user-centric immersive shared presence via XR communication and interaction through the integration of DT, smart spaces, and advanced network infrastructures. By leveraging DTs as dynamically updated virtual representations of physical environments, synchronized in real time, the proposed DIGIPHY concept facilitates seamless interaction and collaboration between remote VR users and local AR users in shared immersive spaces.

We propose a conceptual framework integrating key functionalities needed to realize a shared presence experience within a dynamically updated DT. Key technologies and challenges related to the framework and lab deployment architecture are discussed, including dynamic 3D scene reconstruction; transmission via high-bandwidth, low-latency energy-efficient networks; utilization of cloud and edge computing for real-time processing; IoT platforms for maintaining spatial and temporal synchronization; Quality of Experience assessment methods and metrics; and the need to address privacy concerns and ethical issues.

Looking ahead, incorporating a number of advanced features requires a move towards future 6G infrastructures. Support for volumetric user representations (e.g., users portrayed as holograms rather than avatars) requires bandwidth reaching Gbps speeds, given the limited availability of real-time compression algorithms and novel streaming protocols. Furthermore, achieving true real-time 3D model reconstruction of complex environments, together with virtual/physical object synchronization between local AR and remote VR views and adequate human-to-device interaction in smart spaces, requires ultra-low latency, edge computing support, and the use of AI algorithms for both scene generation and optimized network resource allocation.

Author Contributions

Conceptualization, L.S.-K., M.M., D.S., V.S., D.H., I.P.Z., M.K. and A.G.; investigation, L.S.-K., M.M., D.S., V.S., D.H., I.P.Z., M.K. and A.G.; writing—original draft preparation, L.S.-K., M.M. and I.P.Z.; writing—review and editing, M.M., D.S., V.S., D.H., I.P.Z., M.K. and A.G.; project administration, L.S.-K.; funding acquisition, L.S.-K., D.S. and M.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research has been funded by the European Union—NextGenerationEU, project XR Communication and Interaction Through a Dynamically Updated Digital Twin of a Smart Space—DIGIPHY, Grant NPOO.C3.2.R3-I1.04.0070. Views and opinions expressed are however those of the author(s) only and do not necessarily reflect those of the European Union or the European Commission. Neither the European Union nor the European Commission can be held responsible for them.

Acknowledgments

Images were created using draw.io and flaticon.com.

Conflicts of Interest

Authors Vedran Skarica and Darian Skarica are employed by the company Delta Reality, while author Andrej Grguric is employed by the company Ericsson Nikola Tesla d.d. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| 3D | Three Dimensional (width, height, and depth) |
| 3D-GS | 3D Gaussian Splatting |
| AAL | Active Assisted Living |
| AR | Augmented Reality |
| CoAP | Constrained Application Protocol |
| CPU | Central Processing Unit |
| DoF | Degrees of Freedom |
| DT | Digital Twin |
| GDPR | General Data Protection Regulation |
| GPU | Graphics Processing Unit |
| HMD | Head Mounted Display |
| HTTP | Hypertext Transfer Protocol |
| IEEE | Institute of Electrical and Electronics Engineers |
| IMG | Immersive Media Group |
| IMU | Inertial Measurement Unit |
| IoT | Internet of Things |
| ITU-T | International Telecommunication Union - Telecommunication Standardization Sector |
| KPI | Key Performance Indicator |
| MQTT | Message Queuing Telemetry Transport |
| MR | Mixed Reality |
| NeRFs | Neural Radiance Fields |
| QoE | Quality of Experience |
| QoS | Quality of Service |
| QUIC | Quick UDP Internet Connections |
| RFC | Request for Comments |
| SLAM | Simultaneous Localization and Mapping |
| TCP | Transmission Control Protocol |
| UDP | User Datagram Protocol |
| VPS | Visual Positioning System |
| VQEG | Video Quality Experts Group |
| VR | Virtual Reality |
| XR | eXtended Reality |

References

- New European Media (NEM). NEM: List of Topics for the Work Program 2023–2024. 2022. Available online: https://nem-initiative.org/wp-content/uploads/2022/05/nem-list-of-topics-for-the-work-program-2023-2024.pdf?x46896 (accessed on 28 November 2024).

- Stacchio, L.; Angeli, A.; Marfia, G. Empowering Digital Twins with Extended Reality Collaborations. Virtual Real. Intell. Hardw. 2022, 4, 487–505.

- Künz, A.; Rosmann, S.; Loria, E.; Pirker, J. The Potential of Augmented Reality for Digital Twins: A Literature Review. In Proceedings of the 2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Christchurch, New Zealand, 12–16 March 2022; pp. 389–398.

- Rauschnabel, P.A.; Felix, R.; Hinsch, C.; Shahab, H.; Alt, F. What is XR? Towards a framework for augmented and virtual reality. Comput. Hum. Behav. 2022, 133, 107289.

- Skorin-Kapov, L.; Matijasevic, M.; Podnar Zarko, I.; Kusek, M.; Huljenic, D.; Skarica, V.; Skarica, D.; Grguric, A. DIGIPHY: XR Communication and Interaction within a Dynamically Updated Digital Twin of a Smart Space. In Proceedings of the EUCNC & 6G Summit, Poznań, Poland, 3–6 June 2025. Available online: https://tinyurl.com/2y4dvcdy (accessed on 30 July 2025).

- Florez-Revuelta, F.; Ake-Kob, A.; Climent-Perez, P.; Coelho, P.; Colonna, L.; Dahabiyeh, L.; Dantas, C.; Dogru-Huzmeli, E.; Ekenel, H.K.; Jevremovic, A.; et al. 50 Questions on Active Assisted Living Technologies, Global ed.; Zenodo: Geneva, Switzerland, 2024.

- Brzica, L.; Matanović, F.; Vlahović, S.; Pavlin Bernardić, N.; Skorin-Kapov, L. Analysis of User Experience and Task Performance in a Multi-User Cross-Reality Virtual Object Manipulation Task. In Proceedings of the 17th International Workshop on IMmersive Mixed and Virtual Environment Systems, Stellenbosch, South Africa, 31 March–3 April 2025; pp. 22–28.

- Oppermann, L.; Buchholz, F.; Uzun, Y. Industrial Metaverse: Supporting remote maintenance with avatars and digital twins in collaborative XR environments. In Proceedings of the Extended Abstracts of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; pp. 1–5.

- Bormann, C.; Ersue, M.; Keränen, A. Terminology for Constrained-Node Networks; RFC 7228; RFC Editor: Los Angeles, CA, USA, 2014.

- Mildenhall, B.; Srinivasan, P.P.; Tancik, M.; Barron, J.T.; Ramamoorthi, R.; Ng, R. Nerf: Representing scenes as neural radiance fields for view synthesis. Commun. ACM 2021, 65, 99–106.

- Gao, K.; Gao, Y.; He, H.; Lu, D.; Xu, L.; Li, J. NeRF: Neural Radiance Field in 3D Vision, a Comprehensive Review. arXiv 2022, arXiv:2210.00379.

- Lindell, D.B.; Martel, J.N.; Wetzstein, G. Autoint: Automatic integration for fast neural volume rendering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 14556–14565.

- Reiser, C.; Peng, S.; Liao, Y.; Geiger, A. Kilonerf: Speeding up neural radiance fields with thousands of tiny MLPs. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 14335–14345.

- Kerbl, B.; Kopanas, G.; Leimkühler, T.; Drettakis, G. 3D Gaussian Splatting for Real-Time Radiance Field Rendering. ACM Trans. Graph. 2023, 42, 139.

- Chen, G.; Wang, W. A survey on 3D Gaussian Splatting. arXiv 2024, arXiv:2401.03890.

- Haslbauer, P.; Pullen, M.; Praprotnik, M.; Stimec, S.; Hospital, M.; Grosbois, A.; Reichherzer, C.; Smolic, A. VR Planica: Gaussian Splatting Workflows for Immersive Storytelling. In Proceedings of the ACM International Conference on Interactive Media Experiences Workshops, Porto Alegre, RS, Brasil, 9–11 June 2025; pp. 47–53.

- Jiang, Y.; Yu, C.; Xie, T.; Li, X.; Feng, Y.; Wang, H.; Li, M.; Lau, H.; Gao, F.; Yang, Y.; et al. VR-GS: A Physical Dynamics-Aware Interactive Gaussian Splatting System in Virtual Reality. In Proceedings of the ACM SIGGRAPH 2024 Conference Papers, Denver, CO, USA, 27 July–1 August 2024; p. 78.

- Aras Pranckevičius. UnityGaussianSplatting. Available online: https://github.com/aras-p/UnityGaussianSplatting (accessed on 21 June 2025).

- Zheng, S.; Zhou, B.; Shao, R.; Liu, B.; Zhang, S.; Nie, L.; Liu, Y. Gps–Gaussian: Generalizable pixel-wise 3D Gaussian Splatting for real-time human novel view synthesis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 19680–19690.

- Liu, Z.; Su, J.; Cai, G.; Chen, Y.; Zeng, B.; Wang, Z. Georgs: Geometric regularization for real-time novel view synthesis from sparse inputs. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 13113–13126.

- Ren, K.; Jiang, L.; Lu, T.; Yu, M.; Xu, L.; Ni, Z.; Dai, B. Octree-GS: Towards consistent Real-Time Rendering with LOD-Structured 3D Gaussians. arXiv 2024, arXiv:2403.17898.

- Lee, J.C.; Rho, D.; Sun, X.; Ko, J.H.; Park, E. Compact 3D Gaussian representation for radiance field. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 21719–21728.

- Niedermayr, S.; Stumpfegger, J.; Westermann, R. Compressed 3D Gaussian Splatting for accelerated novel view synthesis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 10349–10358.

- Wu, G.; Yi, T.; Fang, J.; Xie, L.; Zhang, X.; Wei, W.; Liu, W.; Tian, Q.; Wang, X. 4D Gaussian Splatting for real-time dynamic scene rendering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 20310–20320.

- Schieber, H.; Young, J.; Langlotz, T.; Zollmann, S.; Roth, D. Semantics-controlled gaussian splatting for outdoor scene reconstruction and rendering in virtual reality. In Proceedings of the 2025 IEEE Conference Virtual Reality and 3D User Interfaces (IEEE VR), Saint-Malo, France, 8–12 March 2025; pp. 318–328.

- Fei, B.; Xu, J.; Zhang, R.; Zhou, Q.; Yang, W.; He, Y. 3D Gaussian Splatting as New Era: A Survey. IEEE Trans. Vis. Comput. Graph. 2024, 1–20, early access.

- Bao, Y.; Ding, T.; Huo, J.; Liu, Y.; Li, Y.; Li, W.; Gao, Y.; Luo, J. 3D Gaussian Splatting: Survey, technologies, challenges, and opportunities. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 7.

- Sheng, X.; Mao, S.; Yan, Y.; Yang, X. Review on SLAM algorithms for Augmented Reality. Displays 2024, 84, 102806.

- Li, J.; Wang, C.; Kang, X.; Zhao, Q. Camera localization for augmented reality and indoor positioning: A vision-based 3D feature database approach. Int. J. Digit. Earth 2020, 13, 727–741.

- Jo, D.; Kim, G.J. AR Enabled IoT for a Smart and Interactive Environment: A Survey and Future Directions. Sensors 2019, 19, 4330.

- Podnar Žarko, I.; Mueller, S.; Plociennik, M.; Rajtar, T. The symbIoTe Solution for Semantic and Syntactic Interoperability of Cloud-based IoT Platforms. In Proceedings of the 2019 IEEE Global IoT Summit (GIoTS), Aarhus, Denmark, 17–21 June 2019; pp. 1–6.

- Strinati, E.C.; Barbarossa, S. 6G networks: Beyond Shannon towards semantic and goal-oriented communications. Comput. Netw. 2021, 190, 107930.

- Lecci, M.; Drago, M.; Zanella, A.; Zorzi, M. An open framework for analyzing and modeling XR network traffic. IEEE Access 2021, 9, 129782–129795.

- Aijaz, A.; Dohler, M.; Aghvami, A.H.; Friderikos, V.; Frodigh, M. Realizing the tactile Internet: Haptic communications over next generation 5G cellular networks. IEEE Wirel. Commun. 2017, 24, 82–89.

- Torres Vega, M.; Liaskos, C.; Abadal, S.; Papapetrou, E.; Jain, A.; Mouhouche, B.; Kalem, G.; Ergüt, S.; Mach, M.; Famaey, J.; et al. Immersive interconnected virtual and augmented reality: A 5G and IoT perspective. J. Netw. Syst. Manag. 2020, 28, 796–826.

- Yeregui, I.; Mejías, D.; Pacho, G.; Viola, R.; Astorga, J.; Montagud, M. Edge Rendering Architecture for multiuser XR Experiences and E2E Performance Assessment. arXiv 2024, arXiv:2406.07087.

- Theodoropoulos, T.; Makris, A.; Boudi, A.; Taleb, T.; Herzog, U.; Rosa, L.; Cordeiro, L.; Tserpes, K.; Spatafora, E.; Romussi, A.; et al. Cloud-based XR services: A survey on relevant challenges and enabling technologies. J. Netw. Netw. Appl. 2022, 2, 1–22.

- ITU-T GSTR-5GQoE; Quality of Experience (QoE) Requirements for Real-Time Multimedia Services over 5G Networks. ITU-T Technical Report; International Telecommunication Union: Geneva, Switzerland, 2022.

- 3GPP TR 26.928; Technical Specification Group Services and System Aspects; Extended Reality (XR) in 5G (Release 18). Technical report; 3rd Generation Partnership Project; Version 18.0.0; 3GPP Organizational Partners: Sophia Antipolis, France, 2023.

- 3GPP TR 38.835; Technical Specification Group Radio Access Network; NR; Study on XR enhancements for NR (Release 18). Technical report; 3rd Generation Partnership Project; Version 18.0.1; 3GPP Organizational Partners: Sophia Antipolis, France, 2023.

- Struye, J.; Van Damme, S.; Bhat, N.N.; Troch, A.; Van Liempd, B.; Assasa, H.; Lemic, F.; Famaey, J.; Vega, M.T. Toward Interactive Multi-User Extended Reality Using Millimeter-Wave Networking. IEEE Commun. Mag. 2024, 62, 54–60.

- Taleb, T.; Boudi, A.; Rosa, L.; Cordeiro, L.; Theodoropoulos, T.; Tserpes, K.; Dazzi, P.; Protopsaltis, A.I.; Li, R. Toward supporting XR services: Architecture and enablers. IEEE Internet Things J. 2022, 10, 3567–3586.

- Tang, F.; Chen, X.; Zhao, M.; Kato, N. The Roadmap of Communication and Networking in 6G for the Metaverse. IEEE Wirel. Commun. 2022, 30, 72–81.

- Chen, W.; Lin, X.; Lee, J.; Toskala, A.; Sun, S.; Chiasserini, C.F.; Liu, L. 5G-Advanced toward 6G: Past, Present, and Future. IEEE J. Sel. Areas Commun. 2023, 41, 1592–1619.

- Fiedler, M.; Skorin-Kapov, L. Towards Immersive Digiphysical Experiences. ACM SIGMultimedia Rec. 2025, 16, 1.

- Pérez, P.; Gonzalez-Sosa, E.; Gutiérrez, J.; García, N. Emerging immersive communication systems: Overview, taxonomy, and good practices for QoE assessment. Front. Signal Process. 2022, 2, 917684.

- ITU-T P.1320; Quality of Experience Assessment of Extended Reality Meetings. ITU-T Recommendation; International Telecommunication Union: Geneva, Switzerland, 2022.

- ITU-T P.IXC; First Draft of P.IXC ‘Interactive Test Methods for Subjective Assessment of Extended Reality Communications’. ITU-T SG12 Contribution 199; International Telecommunication Union: Geneva, Switzerland, 2024.

- Chang, E.; Lee, Y.; Billinghurst, M.; Yoo, B. Efficient VR-AR communication method using virtual replicas in XR remote collaboration. Int. J. Hum.-Comput. Stud. 2024, 190, 103304.

- Sivelle, C.; Palma, D.; De Moor, K. Security and Privacy for VR in non-entertainment sectors: A practice-based study of the challenges, strategies and gaps. In Proceedings of the 2025 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Saint-Malo, France, 8–12 March 2025; pp. 295–298.

- Pahi, S.; Schroeder, C. Extended privacy for extended reality: XR technology has 99 problems and privacy is several of them. Notre Dame J. Emerg. Tech. 2023, 4, 1.

- McGill, M. The IEEE Global Initiative on Ethics of Extended Reality (XR) Report–Extended Reality (XR) and the Erosion of Anonymity and Privacy. In Extended Reality (XR) and the Erosion of Anonymity and Privacy–White Paper; IEEE: New York, NY, USA, 2021; pp. 1–24.

- O’Hagan, J.; Saeghe, P.; Gugenheimer, J.; Medeiros, D.; Marky, K.; Khamis, M.; McGill, M. Privacy-enhancing technology and everyday augmented reality: Understanding bystanders’ varying needs for awareness and consent. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2023, 6, 1–35.

- Florez-Revuelta, F. (Ed.) Proceedings of the Joint visuAAL-GoodBrother Conference on Trustworthy Video- and Audio-Based Assistive Technologies; Zenodo: Geneva, Switzerland, 2024.

- IEEE Social Implications of Technology Standards Committee (SSIT/SC). IEEE P7016 Standard for Ethically Aligned Design and Operation of Metaverse Systems. Available online: https://standards.ieee.org/ieee/7016/11178/ (accessed on 21 June 2025).

- Paladin, M.; Brzica, L.; Matanovic, F.; Kljajic, D.; Skorin-Kapov, L. QoE and Task Performance Assessment of Mobile Robot Teleoperation via a VR-based Interface. In Proceedings of the 17th International Conference on Quality of Multimedia Experience (Accepted for Publication), Madrid, Spain, 30 September–2 October 2025.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).