1. Introduction

The advance of Industry 4.0 has introduced new paradigms, constantly modifying industrial processes through the integration of digital technologies that optimize production, improve operational efficiency and strengthen maintenance strategies [1]. In this context, the monitoring and advanced diagnosis of faults in industrial equipment has become vitally important within production processes, not only to ensure operational continuity and reliability of machinery, but also to improve the safety of workers and minimize costs associated with unplanned shutdowns. In parallel, the integration of immersive simulation tools such as virtual reality and digital twins also opens new opportunities for technical training and capacity building in industrial environments [2].

Within this framework, condition-based and preventive maintenance approaches play a fundamental role in industrial asset management. Preventive maintenance is based on the scheduled execution of inspections and the replacement of components before a fault occurs, ensuring optimal performance and extending the useful life of equipment. However, with the advancement of digitalization, predictive maintenance has emerged, which, in conjunction with preventive maintenance, employs advanced technologies such as IoT sensors and digital tools to monitor the condition of assets in real time and predict faults before they occur. This approach enables more efficient maintenance planning, avoiding unnecessary interventions and reducing operating costs.

The growing complexity of new industrial systems and their increasingly dominant automation, together with the need for greater maintenance accuracy, has led to the adoption of innovative, though costly, tools such as virtual reality (VR), augmented reality (AR) and digital twins (DTs) [3]. These technologies, in combination with IoT sensors, cloud storage and real-time data analytics, enable the more efficient lifecycle management of industrial assets, optimizing decision making and reducing downtime.

As these improvements continue to evolve, their implementation becomes increasingly necessary in all types of industries to keep organizations competitive. The market’s growing demand for more rigorous safety standards that protect worker integrity reinforces this need, since the same technologies make it possible to manage the health and availability of industrial assets, ensuring a more efficient and reliable production environment.

1.1. Issues and Applications

Despite these advances, industries face significant challenges in implementing such technological solutions. First, there is a lack of trained personnel to operate and maintain increasingly digitized systems. The introduction of advanced machinery requires ensuring the adequate training of all workers, which is critical to maintaining productivity and operational safety [

4]. Recent reviews highlight that the continuous training of industrial personnel is vital in order to equip them with the skills needed in changing environments [

5].

Secondly, many operations occur in remote or hazardous environments, such as underground mines, power facilities located far from urban centers and highly automated factories, among others. This has generated a need for tools that help overcome adverse equipment conditions. Physical access for inspection and maintenance at these sites is difficult, costly and risky, which has motivated the search for remote monitoring and remote operation alternatives [6,7]. For example, BP implemented digital twins to monitor and maintain oil facilities in isolated regions, increasing operational reliability without the constant presence of personnel in the field [8].

Third, the increasing complexity of industrial systems poses an additional obstacle. The convergence of multiple technologies and the enormous amount of data generated in smart factories make it difficult to comprehensively understand processes [

9]. Managers and personnel in charge of overseeing and managing maintenance face the challenge of handling massive volumes of information in real time, which is indispensable for harnessing the potential of Industry 4.0 but requires new analytical and management capabilities [

4]. Likewise, integrating these advanced technologies without affecting the existing safety culture brings with it a major challenge, where automation and intelligent monitoring promise to simplify risk management but must coexist with the human and organizational factors that have historically underpinned safe performance [

10].

Faced with these problems, there is a need for innovative technological tools to support industrial maintenance and personnel training while improving safety in remote or complex operations. One of these tools is remote monitoring through digital twins, which allows users to supervise equipment and processes remotely in real time. Several use cases have demonstrated the value of this technology: for example, Siemens developed digital twins of electrical systems and treatment plants to monitor pipelines in real time and detect fault trends in advance, issuing early warnings before incidents occurred [8]. Similarly, SAP reports that virtual twin inspections can identify incipient faults more cost-effectively than physical inspections, improving the safety of equipment by predicting its future evolution [8]. These predictive capabilities are especially useful in remote environments, where early responses can prevent disasters.

Another indispensable tool is virtual reality applied to training. VR makes it possible to create immersive environments where operators practice maintenance tasks and operating procedures without interrupting production or exposing themselves to real hazards. For example, Aziz et al. developed a VR system for equipment maintenance in the oil industry, demonstrating that workers can train off-site without affecting daily operations and receive immediate feedback on their actions [

11]. Similarly, Oliveira et al. (2020) [

11] implemented a VR simulator for the maintenance of diesel engines in mining locomotives, given that the size and weight of these engines made their availability for training difficult. The virtual solution allowed for the training of personnel in a safe and low-cost environment, achieving satisfactory results in comfort, immersion and usability, validated by maintenance experts [

11]. In general, the literature reports multiple advantages of VR-based training: greater commitment and speed in learning, the possibility of measuring performance, scenario customization and cost reduction, all while preserving the physical integrity of the operators by avoiding real risks [

5]. Thus, VR is emerging as a present and future alternative for the efficient training of human resources in the industry. For its part, augmented reality offers direct support in the field: with mobile devices or AR glasses, technicians can receive visual instructions superimposed on the real environment during maintenance tasks, facilitating the step-by-step execution of complex procedures [

12]. Recent studies show that these AR/VR applications in maintenance tend to improve worker performance—for example, by reducing operation errors—and in most cases the impacts are positive for employees and companies. Di Pasquale et al. (2024) identified that well-designed AR/VR solutions increase the efficiency of corrective and preventive interventions [

12]. Additionally, AR allows the connecting of remote experts with local operators: by livestreaming the actual scene annotated virtually, a specialist can guide plant personnel in the resolution of a breakdown, combining remote monitoring with real-time expert assistance [

12]. This type of AR-based remote support has been successfully tested, showing that it is feasible to perform maintenance with remote expert assistance when on-site access is restricted [

13]. In sum, tools such as VR and AR are bridging the skills gap by training and assisting less experienced operators in complex industrial environments [

13].

Another technological pillar in Industry 4.0 is the use of digital twins for safety and proactive risk management. The digitization and extensive sensorization of operations open the possibility of automating the monitoring of abnormal conditions and generating early warnings of potential faults or hazardous situations [

4]. For example, environmental sensors connected to a digital twin can detect chemical leaks or abrupt changes in process parameters [

4]. Likewise, AR/VR is being applied in order to improve personal protective equipment: through visors, a worker can visualize environmental information (temperatures, gas concentrations, etc.) over his or her field of view while operating, increasing situational awareness and safety [

4]. These innovations make up what Liu et al. (2022) call Safety 3.0, a new generation of industrial safety management integrated with Industry 4.0 [

4]. However, the incorporation of automation in safety must be complemented by the existing safety culture. Lees (2021) warns that relying exclusively on technical solutions (e.g., automated monitoring and warning systems) without involving the human factor could undermine established safety practices [

9]. Therefore, they recommend an approach that allows the worker to be included in these processes when implementing Industry 4.0 safety features, where technology serves to empower workers and not displace them [

9]. In other words, digital tools should be integrated in a coherent way, supporting informed decision making by operators and maintaining high industrial safety standards.

Unlike previous studies that focus individually on remote monitoring, predictive models, or isolated VR training simulators, the present work proposes a structured methodology that integrates all these technologies into a single immersive and interactive system. This approach is novel in that it combines real-time data acquisition from industrial equipment, critical parameter analysis and visual feedback through virtual reality in a training-oriented environment. Furthermore, the methodology is designed using open-source or widely available tools, enabling its cost-effective deployment in educational and industrial settings alike. This integration offers not only technical advantages for fault prevention but also pedagogical value by enhancing operator training through simulated emergency scenarios based on real sensor input.

1.2. Challenges and Value Proposition

Despite the multiple benefits of implementing these technologies in industry, they are not yet the norm in all industries due to several factors, such as the following:

High implementation costs: These technologies require specialized hardware, such as virtual reality headsets and computers powerful enough to develop and run the virtual environment, and they must handle a considerable amount of data. Together, these requirements represent a barrier for a significant number of companies [13].

Resistance to change: In many companies, new technologies are not adopted because of misconceptions about them, the training required to handle the equipment and the learning curve that operators face, all of which tend to cause rejection [11].

Technological limitations: Factors such as latency, internet connection stability and data processing capacity are still, in many cases, obstacles that hinder the correct implementation of these technologies.

Given this background, the need for comprehensive solutions that combine remote monitoring, virtual simulation and immersive training to address the current limitations in industrial maintenance is evident. The literature supports the potential of an immersive system based on digital twins with early warning capabilities as an answer to these challenges. The methodological section that follows proposes precisely such a technological architecture, which comprises a systematic way to implement these technologies for any industrial equipment, by collecting data based on previous analysis, and integrating a digital twin into an immersive virtual reality environment. This system will seek to anticipate faults and operational risks through early warnings, while facilitating continuous training and remote assistance to personnel through the use of open-source software and programs that are easy to understand for the user in order to expand the implementation of these technologies to any type of area in the industry, thus democratizing the use of these new technologies, as well as improving the reliability of the processes and safety in the different industrial fields.

2. Materials and Methods

This research proposes the integration of virtual reality technologies and real-time sensing to develop a collaborative, safe and interactive environment that supports both the remote monitoring of equipment and training in maintenance tasks. The Design Science Research Methodology (DSRM) was adopted for this project, since it is widely accepted in technological and industrial development contexts and structures research in a systematic way.

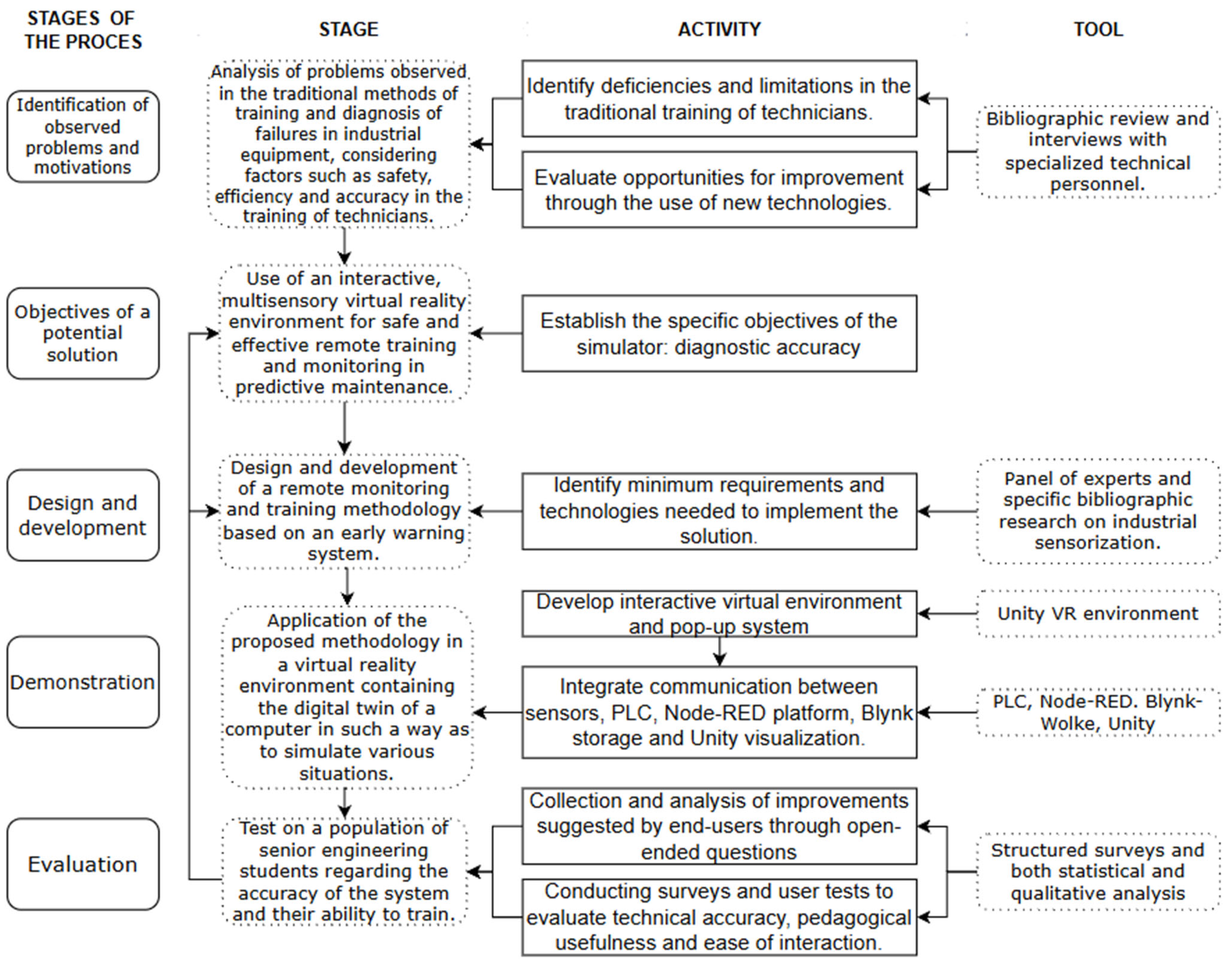

Figure 1 shows the activities necessary to carry out this research, together with the tools and methodologies that allow its direct implementation.

2.1. Objectives of Solution

To provide a solution to the problems, this research sought to meet specific objectives, which were as follows:

Provide an immersive virtual reality environment that enables the monitoring of critical parameters.

Integrate a sensor system to deliver real-time information to Unity, with the data already processed by Node-RED.

Integrate a system of emerging alerts linked to the critical operating limits of the measured parameters and the delivery of instructions for maintenance actions to be performed.

2.2. Methodological Proposal

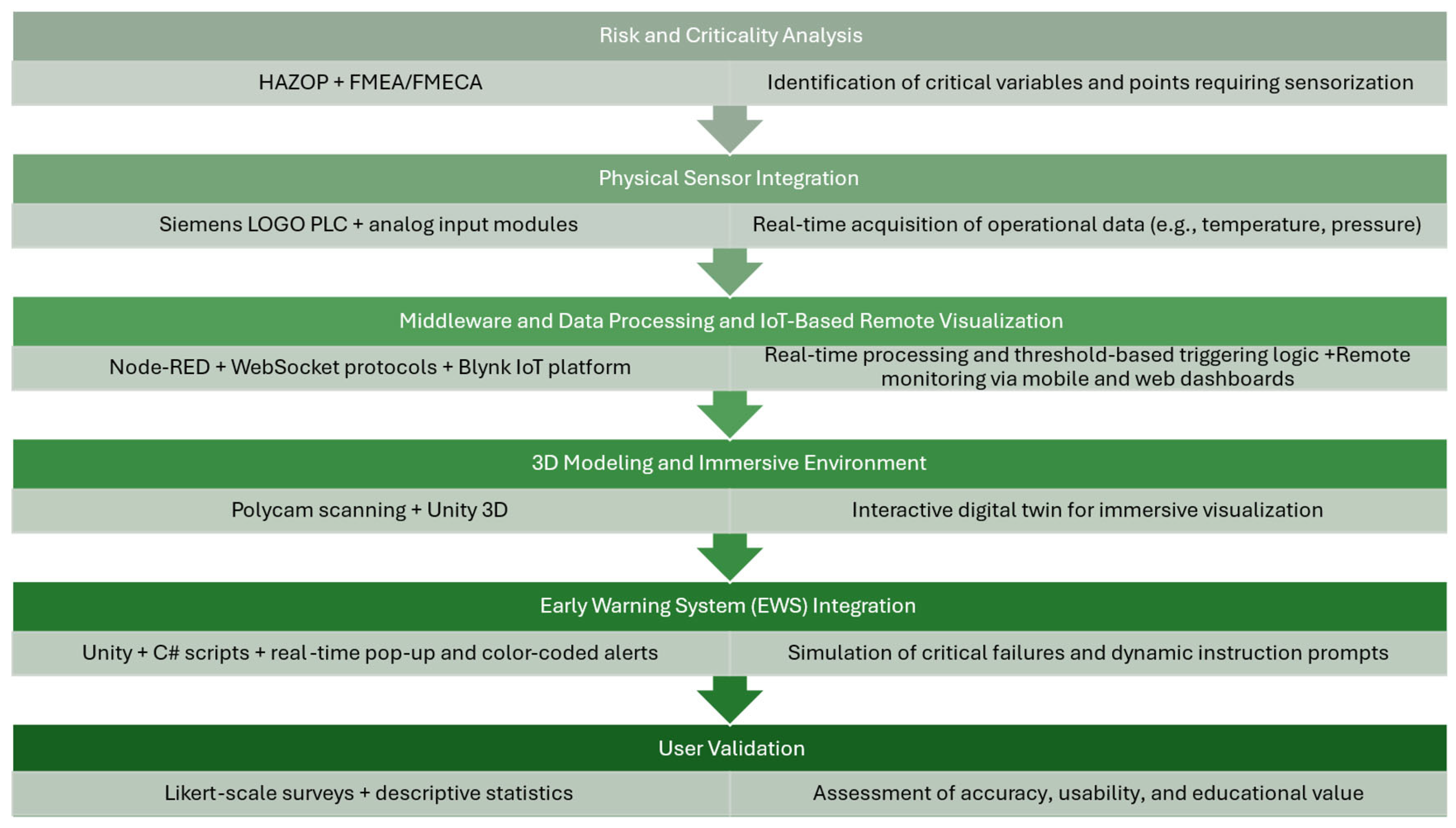

Figure 2 presents the proposed workflow, detailing the sequential tasks, tools involved and expected outputs for the implementation of the digital twin and early warning system in a VR environment.

To develop a technological implementation of this type, a sequence of steps was designed that begins with selecting the equipment to which the technology will be applied, under defined criteria and guidelines.

The first step is to analyze the equipment environment and the possible risks that its handling could represent for operators and technicians. To do this, it is essential to perform a HAZOP analysis (Hazard and Operability Analysis), a systematic methodology to identify deviations in the operation of the different pieces of equipment involved in a production process and to evaluate their possible consequences. This analysis facilitates the implementation of appropriate mitigation measures, ensuring a safe working environment and optimizing the integration of technology in industrial operation.

The inclusion of HAZOP analysis makes it possible to determine the need for the soundproofing of factors external to the machine, with the aim of preserving the integrity of the workers. The identification of noise sources or interferences in the working environment is crucial, as they can affect both safety and operational efficiency. In this context, the combination of HAZOP analysis with virtual reality technologies enables the simulation of different working conditions, allowing the evaluation of corrective and preventive measures in different types of scenarios. This reduces risks, improves personnel training and optimizes maintenance and operation processes [

8].

Once the HAZOP analysis has been performed, it is essential to carry out a detailed study to identify which parts or systems of the equipment should be parameterized according to the occurrence of failures and the criticality of each component for the correct operation of the equipment. For these purposes, in an industrial environment it is recommended to perform a Failure Mode and Effect Analysis (FMEA) or its extended version, the Failure Mode, Effect and Criticality Analysis (FMECA) [

14].

FMEA is a structured methodology used in reliability engineering to identify and evaluate the possible failure modes of a system and their causes and effects, with the objective of mitigating possible risks before they occur. FMECA allows the extension of FMEA by including a criticality assessment, being able to prioritize failure modes according to their impact on operation and safety [

15].

Including FMEA- or FMECA-type analyses in the initial phase of the process allows users to achieve the following:

Identify potential faults in key equipment components prior to implementation.

Evaluate the severity and probability of the occurrence of each possible fault, prioritizing those with the greatest impact.

Optimize equipment parameterization, ensuring that critical variables are adequately monitored.

Reduce maintenance costs and unexpected downtime by implementing preventive and predictive maintenance strategies.

Improve industrial safety, minimizing risks for operators and technicians.
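As an illustration of how the prioritization described above could be computed, the following minimal sketch ranks failure modes by the conventional Risk Priority Number (severity × occurrence × detection). The source does not state which scoring scheme was used, so the 1 to 10 scales, the `FailureMode` class and the example entries are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class FailureMode:
    """One FMEA row. All scores use an assumed 1-10 scale."""
    component: str
    description: str
    severity: int    # 1 = negligible effect, 10 = catastrophic
    occurrence: int  # 1 = very unlikely, 10 = very frequent
    detection: int   # 1 = easily detected, 10 = practically undetectable

    @property
    def rpn(self) -> int:
        # Conventional Risk Priority Number used in FMEA worksheets.
        return self.severity * self.occurrence * self.detection


def rank_failure_modes(modes: List[FailureMode]) -> List[FailureMode]:
    """Return the failure modes ordered from highest to lowest RPN."""
    return sorted(modes, key=lambda m: m.rpn, reverse=True)


# Hypothetical entries for illustration only; not taken from the study.
modes = [
    FailureMode("lubrication", "oil overheating", 8, 4, 3),
    FailureMode("exhaust", "excessive exhaust temperature", 7, 3, 2),
    FailureMode("cylinder", "loss of compression", 9, 2, 5),
]

ranked = rank_failure_modes(modes)
for mode in ranked:
    print(f"{mode.component:12s} RPN={mode.rpn}")
```

The components at the top of such a ranking would be the first candidates for sensorization; an FMECA would replace or complement the RPN with an explicit criticality measure.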

The combination of analysis in conjunction with digital tools, such as virtual reality and digital twins, allows users to simulate faults and evaluate maintenance strategies in a virtual environment before applying them in the real world [

16].

Once the initial analyses have been performed and the correct sensorization of the equipment has been justified, the development of the digital twin and its corresponding virtual reality (VR) based environment begins. This virtual reality environment must provide an immersive, accurate and detailed experience, replicating as closely as possible the physical dimensions of the equipment as well as the characteristics of its real operating environment [

17].

To ensure effective monitoring, this virtual model must also facilitate the dynamic visualization of operational parameters captured by sensors installed on the actual equipment, allowing operators to view this data at its exact location within the virtual environment.

The development of the digital twin with VR involves the integration of multiple advanced technologies. First, 3D scanning techniques are used to obtain an accurate representation of the equipment, ensuring that the virtual geometry accurately matches the actual dimensions. Next, graphics engines, such as Unity, are used to generate an immersive and interactive environment that enhances the operator’s experience. Finally, the connection with software such as Node-RED and Blynk allows users to establish efficient and continuous communication between the physical sensors and the digital environment, facilitating the accurate and seamless synchronization of sensory information in real time [

13].

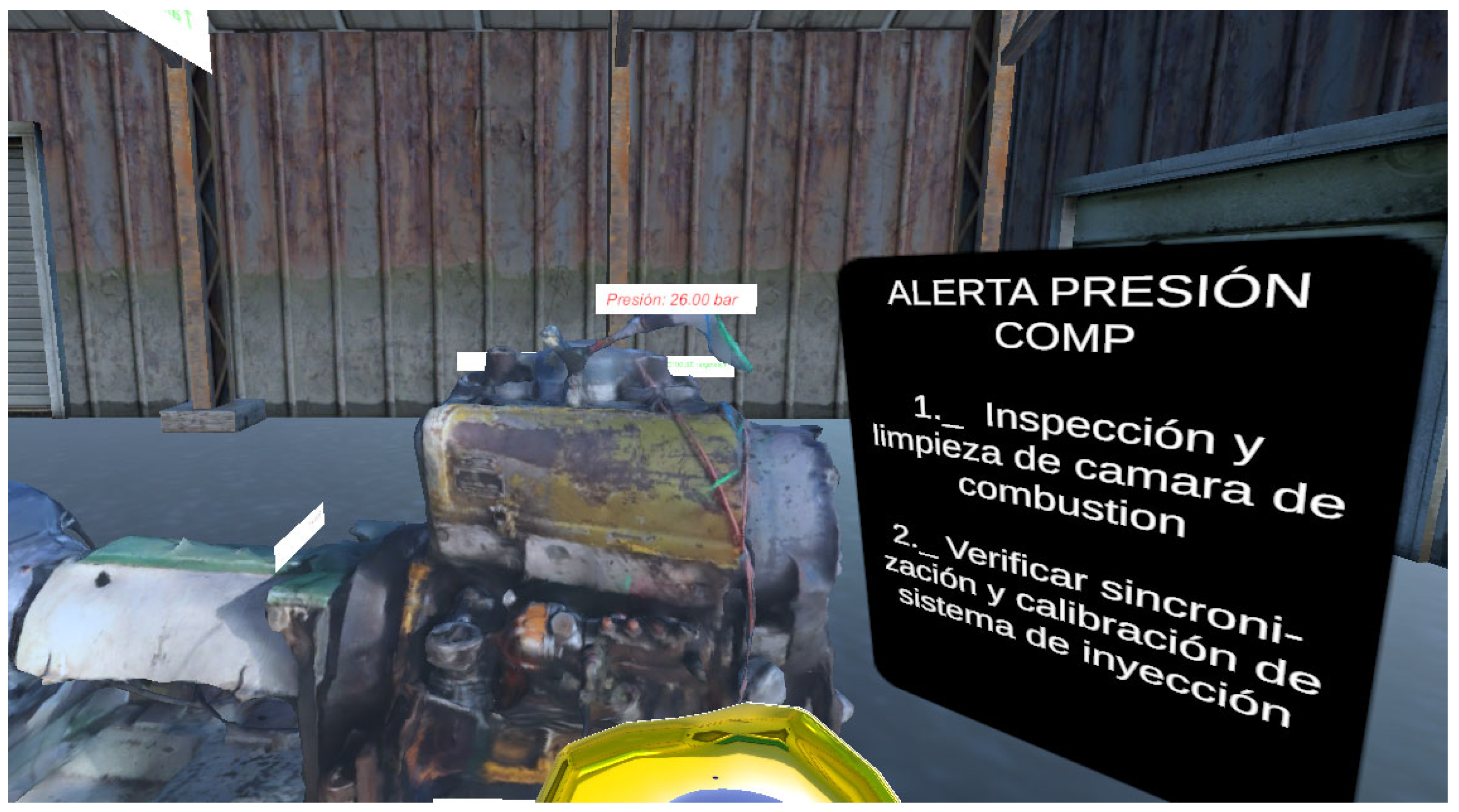

Following this, the model receives real-time data from temperature and pressure sensors installed on the physical equipment, which are represented in the virtual environment through interactive visual elements. Each sensor parameter is displayed as a digital readout, with its color dynamically changing based on its proximity to critical thresholds: green (normal), yellow (warning), and red (critical).
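The color logic described above can be sketched as a simple threshold classifier. This is a minimal illustration rather than the authors' Unity implementation: it assumes that only high-side limits matter, and the names `Level` and `classify` as well as the numeric limits in the usage example are hypothetical.

```python
from enum import Enum


class Level(Enum):
    """Display color associated with each criticality level."""
    NORMAL = "green"
    WARNING = "yellow"
    CRITICAL = "red"


def classify(value: float, warning_limit: float, critical_limit: float) -> Level:
    """Map a reading to a level, assuming higher values are more dangerous."""
    if warning_limit >= critical_limit:
        raise ValueError("warning_limit must be below critical_limit")
    if value >= critical_limit:
        return Level.CRITICAL
    if value >= warning_limit:
        return Level.WARNING
    return Level.NORMAL


# Hypothetical limits for an oil temperature readout, in degrees Celsius.
print(classify(80.0, warning_limit=95.0, critical_limit=110.0).value)
print(classify(100.0, warning_limit=95.0, critical_limit=110.0).value)
print(classify(115.0, warning_limit=95.0, critical_limit=110.0).value)
```

A parameter with a dangerous low side (for example, oil pressure) would need a mirrored comparison or a two-sided band.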

Interaction with the model is primarily observational: users can navigate the virtual environment, inspect the sensorized areas and monitor parameter changes in real time. When a parameter exceeds its critical value, an automated pop-up alert is displayed, providing corresponding maintenance instructions, thus simulating fault conditions.

The joint implementation of the digital twin and virtual reality brings several significant benefits for industrial monitoring and maintenance, which consolidate its application through aspects such as the following:

The immersive and interactive visualization in the VR environment allows operators to interact directly with the three-dimensional model of the equipment, allowing them to explore specific details without the need for direct physical contact, thus increasing their understanding of the operation, assembly characteristics and spatial location of the various components.

Real-time monitoring, thanks to the integration of sensors, allows the equipment’s operating parameters to be dynamically visualized within the virtual environment. This facilitates the immediate detection of anomalies and optimizes preventive or corrective maintenance decisions, thus increasing operational reliability [

17].

The simulation of failures and virtual training benefit from the very nature of the virtual environment, which allows critical situations or specific fault scenarios to be recreated without any risk to the physical equipment or the operators. This improves the training of technical personnel, strengthening their practical skills in real operating situations [18].

Optimization, efficiency and operational safety benefit from the use of a digital twin with VR, which minimizes the need for frequent physical interventions in potentially hazardous areas and significantly reduces risks for operators. In addition, the early detection of abnormal operating conditions decreases unexpected downtime, generating operational savings and improving the overall safety of industrial facilities [17].

Once the analyses of Step 1 are completed, where the need to integrate new technologies and sensors into the equipment is evaluated and maintenance plans are considered and built, and after the digital twin is implemented in Step 2, the last step is the implementation of an early warning system (EWS). The EWS is based on the capture and processing of real-time information from the sensors installed on the physical equipment; it functionally integrates all the technologies used and allows different types of scenarios and conditions to be simulated. This system allows users to detect anomalous conditions in early stages, generating immediate alerts that can be visual and/or audible depending on the criticality of the detected conditions. Its objective is to complement and optimize predictive maintenance strategies and the continuous supervision and training of operating personnel [19].

The implementation of an EWS in the industrial context is justified by the imperative to increase operational safety and the ability to react to potentially catastrophic events. The integration of digital technologies such as advanced sensors, digital twins and virtual reality allows the early detection of operational deviations before they become critical faults, generating proactive responses that reduce unplanned downtime and increase occupational safety [19].

In this sense, the proposed system combines the use of a PLC for the direct acquisition of sensory data on key parameters (e.g., temperature, vibrations, flow or pressure), and uses platforms such as Node-RED as middleware that performs the function of managing the processing of these data in real time. The processed data is then simultaneously transmitted to Blynk for its remote display in the Unity graphics engine, where it is plotted on a digital twin. This application allows the easy visualization of operational values and the detection of anomalies in their early stages [

20].

Node-RED is especially relevant in this integration, acting as an intermediary tool that facilitates efficient and fluid communication between the physical sensors (through the PLC) and the digital monitoring and alerting systems (Unity and Blynk). This platform not only processes and injects the data but also allows the generation of specific flows graphically that can be easily programmed to trigger automatic alarms when the sensorized values exceed the established thresholds [

21].

Blynk functions as a means of direct communication with Unity about critical events in the production process or equipment status. The inclusion of visual and audible signals allows operators to act in a timely manner in the event of abnormal conditions before they lead to major damage or risk situations [

16].

Unity, as the development platform for the VR environment, allows the immersive and dynamic visualization of the sensorized parameters in the exact location of the equipment within the digital twin. Thus, operators can intuitively visualize which sensors are indicating risk conditions, as well as have the possibility of observing the warnings that are presented at the moment a fault emerges or even before, improving the response capacity to these situations. In addition, virtual reality facilitates the simulation of these possible fault scenarios, strengthening the education and training of operating personnel without any real associated risk [

18].

Finally, the effective implementation of early warning systems based on the integration of technologies such as virtual reality and the real-time monitoring of operational parameters will allow all types of industry to move towards an intelligent operation and maintenance model, thus increasing the efficiency and safety in their production processes and strengthening the essential principles of Industry 4.0 [

22].

3. Workflow

For the purposes of this research, a DEUTZ F3L912W engine was used, which is located on the premises of the School of Civil Mechanical Engineering of the Pontifical Catholic University of Valparaiso. This engine was chosen because of the industrial applications the model had in its time: given its robustness and efficiency, it was widely used in industries such as mining, construction and maritime, where it powered heavy machinery, pumping equipment and electric generators, among other uses.

In this context, Norambuena et al. (2025) developed a real-time digital twin based on an engine, incorporating it into an immersive visualization environment in Unity 3D [23]. The 3D model of the engine was obtained by scanning it with Polycam to achieve high-fidelity imaging, thus enabling the faithful replication of the physical equipment located in the university laboratory [19]. This synchronized virtual environment allowed multiple students to interact simultaneously with the digital engine through VR viewers, observing its operational behavior in a safe and controlled space (Norambuena et al., 2025 [23]).

First, it was determined that an advanced sensing system was needed to obtain accurate and complete measurements of the equipment. For this, specific sensors were selected according to key parameters for the experience:

Temperature: We decided to use multiple sensors to obtain detailed temperature data at different critical points of the engine, such as intake, exhaust and oil temperature.

Pressure: An analog pressure sensor was incorporated to evaluate the internal state of the compression chambers in the absence of combustion, providing valuable information on the internal behavior of the engine.

These improvements significantly enriched the analytical capability of the experience by allowing a more accurate and representative capture of actual engine behavior.

Then, for the effective capture and management of this sensorized data, a Siemens LOGO! 7 PLC was implemented. This PLC was chosen for its robustness and ease of integration. To manage the installed sensors, additional AM2 RTD-type analog modules (for temperature sensing) and standard AM2 analog modules (for 0–20 mA and 4–20 mA analog current signals) were used [23].

The PLC configuration involved converting and correctly scaling the analog signals received, an essential process because it normalizes the sensory readings, transforming them into easily interpretable values for later visualization and analysis in real time.
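The scaling step mentioned above is, in essence, a linear mapping from the loop current to engineering units. The sketch below shows that mapping for a 4–20 mA signal; it is written in Python for illustration only (the actual scaling was configured in the PLC), and the function name and the 0 to 10 bar range in the usage example are assumptions.

```python
def scale_current_loop(
    current_ma: float,
    eng_min: float,
    eng_max: float,
    ma_min: float = 4.0,
    ma_max: float = 20.0,
) -> float:
    """Linearly convert a loop current (mA) into engineering units.

    Readings outside the loop range raise ValueError; on a 4-20 mA loop a
    current well below 4 mA usually indicates a wiring or sensor fault.
    """
    if not ma_min <= current_ma <= ma_max:
        raise ValueError(f"current {current_ma} mA outside {ma_min}-{ma_max} mA")
    fraction = (current_ma - ma_min) / (ma_max - ma_min)
    return eng_min + fraction * (eng_max - eng_min)


# Hypothetical pressure transmitter spanning 0 to 10 bar.
pressure_bar = scale_current_loop(12.0, eng_min=0.0, eng_max=10.0)
print(f"{pressure_bar:.2f} bar")
```

For a 0–20 mA signal the same function applies with `ma_min=0.0`, at the cost of losing the ability to distinguish a zero reading from a broken wire.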

Communication between the PLC and the digital environment was established through Node-RED, a platform that acts as technological middleware. Node-RED receives data from the PLC, processes it and, using protocols such as WebSocket, transmits this data in real time to a centralized server.
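To make the data flow more concrete, the following sketch shows how a receiving client might decode one reading sent over such a channel. The paper does not specify the message format, so the JSON shape (`sensor`, `value`, `unit`) and the `Reading` type are purely hypothetical.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Reading:
    """One sensor reading as assumed to arrive from the middleware."""
    sensor: str
    value: float
    unit: str


def decode_reading(message: str) -> Reading:
    """Parse a JSON text message into a Reading.

    Raises KeyError if 'sensor' or 'value' is missing and ValueError if
    the text is not valid JSON or the value is not numeric.
    """
    payload = json.loads(message)
    return Reading(
        sensor=str(payload["sensor"]),
        value=float(payload["value"]),
        unit=str(payload.get("unit", "")),
    )


# Hypothetical message; the real payload layout is not given in the text.
example = '{"sensor": "oil_temperature", "value": 87.5, "unit": "C"}'
reading = decode_reading(example)
print(reading)
```

Validating each message at the boundary keeps malformed data from reaching the visualization layer.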

For the remote visualization and management of this data, Blynk was selected as the IoT platform. Blynk stands out for its capacity for remote monitoring and centralized storage of data from the PLC, offering an interactive dashboard that facilitates immediate analysis and continuous monitoring of the measured parameters.

Finally, the information stored and centralized in the Blynk server was integrated into a multi-user environment developed with Unity 3D. To create an accurate and detailed representation, the physical engine in the laboratory was scanned using the Polycam application, generating a digital model of the equipment. Interactive interfaces were designed in Unity to clearly display the data and readings coming from the sensor system in real time, creating an immersive environment and substantially enriching the students’ educational experience [23].

Although this environment was developed with a purely educational purpose, it could also be used as a simulation tool, given that its infrastructure was the same as that required by the proposed methodology. In addition, as mentioned above, the creation of this virtual environment included a study of the application case for which it was designed and the subsequent sensorization, both of which were compatible with the objectives of the case applied in this study. This allowed us to simulate the operating conditions of the engine not as a motored (externally driven) engine, but as a running internal combustion engine. This was feasible because exhaust gas temperature, ambient temperature and pressure sensors were available, whose readings could be simulated through data injection.

Thanks to the possibility of using the environment described above, the first two steps proposed by the methodology presented in this text were already completed. Therefore, all that remained was to implement the early warning system or EWS.

For the implementation of the early warning system, it was first necessary to perform an analysis of the critical operational limits of the parameters measured by the sensors installed in the equipment. This type of analysis is an essential step in the design of any effective EWS, since it allows the early identification of deviations that could compromise the operational safety of the system. As has been demonstrated in studies on predictive models based on time series data, an adequate delimitation of these thresholds allows users not only to foresee faults, but also to reduce downtime and avoid economic losses associated with unplanned outages [24]. In this context, we proceeded to review the official technical and service manuals of the equipment in question, in addition to obtaining direct operational information from the professional in charge of machine safety and maintenance. This approach was aligned with scenario-based methodologies that integrate both historical data analysis and operator experience to establish causal networks that anticipate undesired events [25]. The key parameters and critical limits are summarized in Table 1.

Once the critical operational limits of the measured parameters were defined, a list of inspection and maintenance tasks to be performed in case any of these values were exceeded was prepared. This information was then integrated into the virtual environment, where a system of pop-up windows was developed that activated automatically when any of the parameterized values reached its critical limit. These pop-up windows indicated the previously defined inspection and/or maintenance tasks, with the objective of improving and accelerating the response process in the event of an imminent equipment failure. This automated response logic was consistent with solutions applied in intelligent structural monitoring systems, where distributed sensors provide alerts in real time about critical risks, allowing immediate intervention even in adverse conditions [26].

In addition to this pop-up system, a criticality indicator was added to show how close the parameterized values were to their critical values. This indicator changed color with the measured values (green for normal values, yellow when they were at an intermediate level and red when they reached their critical values), as shown by the color-coded indicators, which visually reflect the criticality level of the monitored parameter. Such a visual representation constitutes an effective tool for real-time decision making, as it facilitates the interpretation of the risk level without the need for a complex technical analysis, thus reinforcing the usefulness of the TSS as an operational and preventive support [

24].

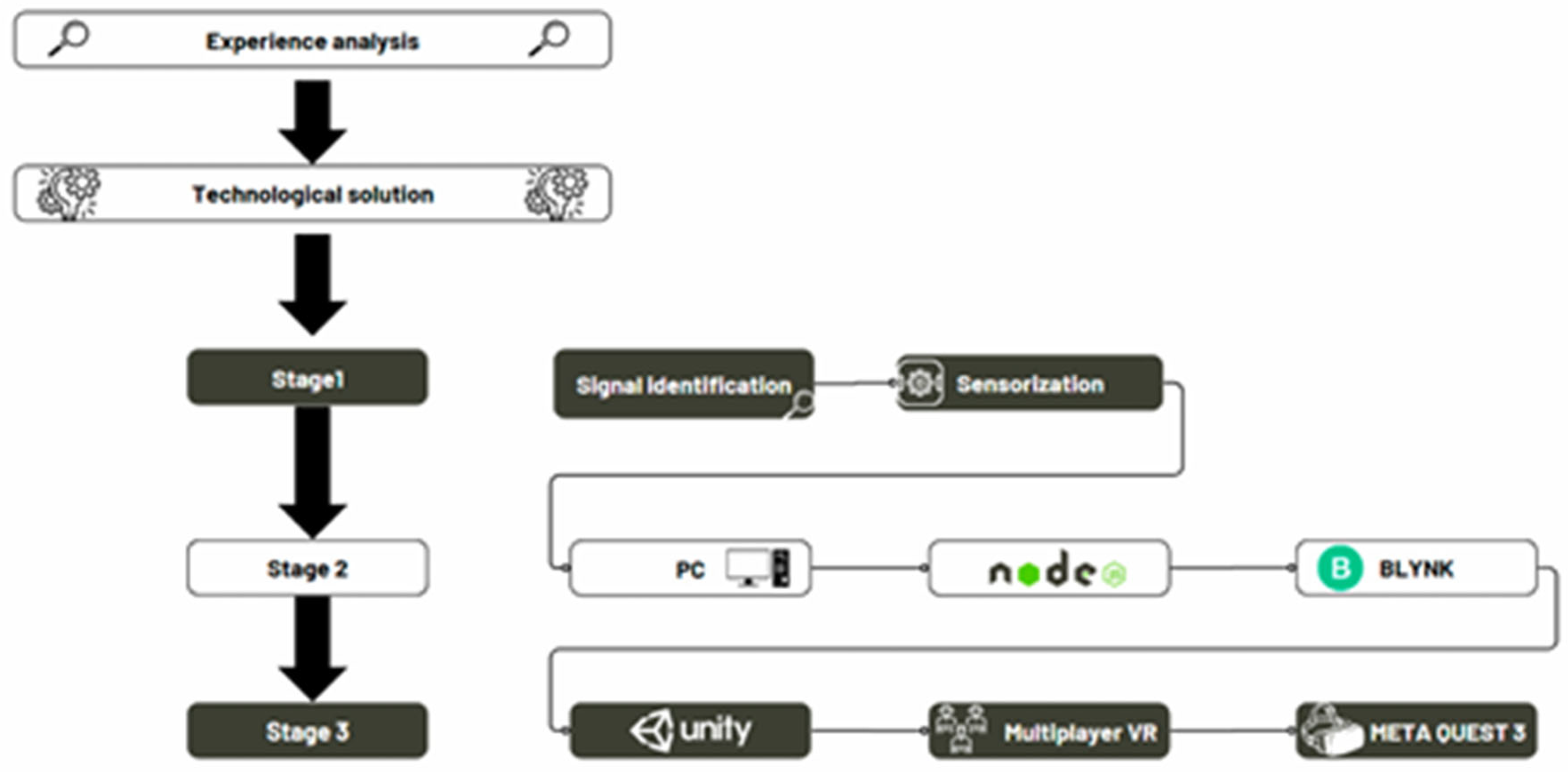

To implement the early warning system, we first focused on the sensorization process and signal transmission, using as a reference the configuration diagram presented in [

23]. This initial stage allowed us to establish a stable communication flow between the physical sensors and the digital environment, ensuring that real-time data could be accurately processed and visualized. The signal management logic, based on modular structures, was adapted and extended from the approach detailed in that previous work (

Figure 3).

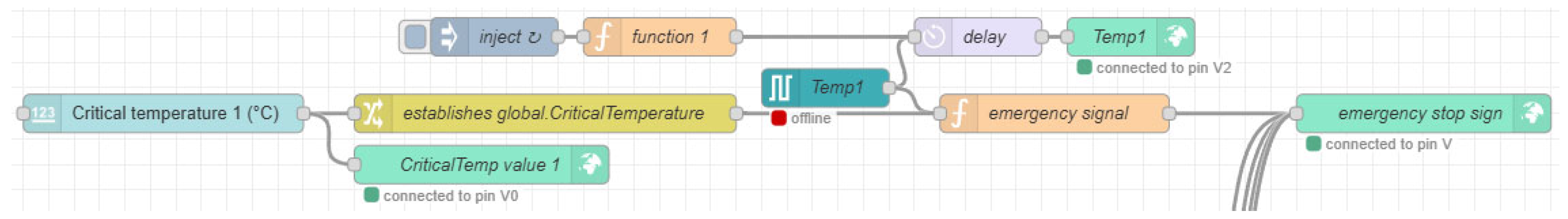

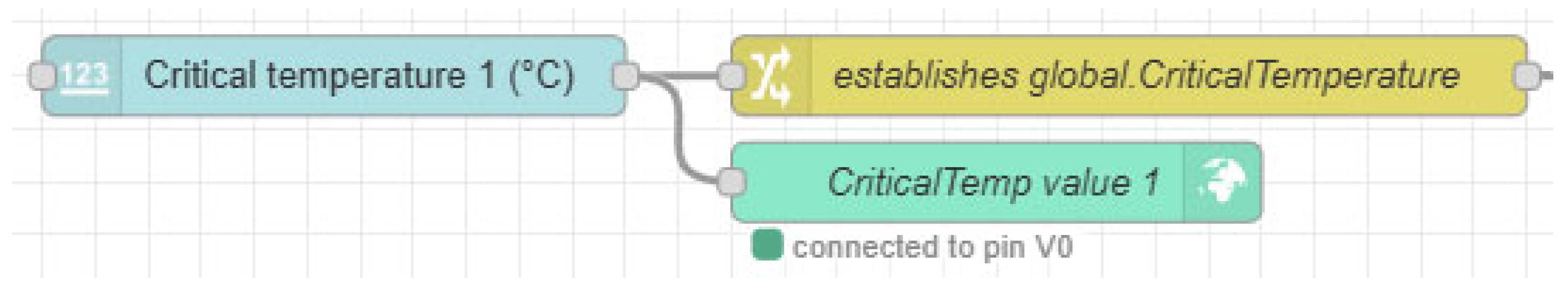

Figure 4 shows the final configuration of Node-RED, the module that collects the data and transmits the information to Unity. It contains five structures connected to a final module, one for each of the sensors connected to the equipment.

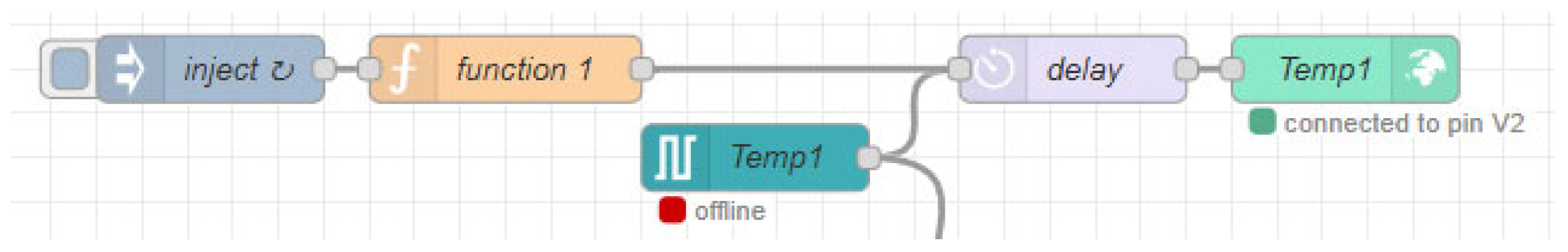

Each of these structures was configured with the same structure depicted in

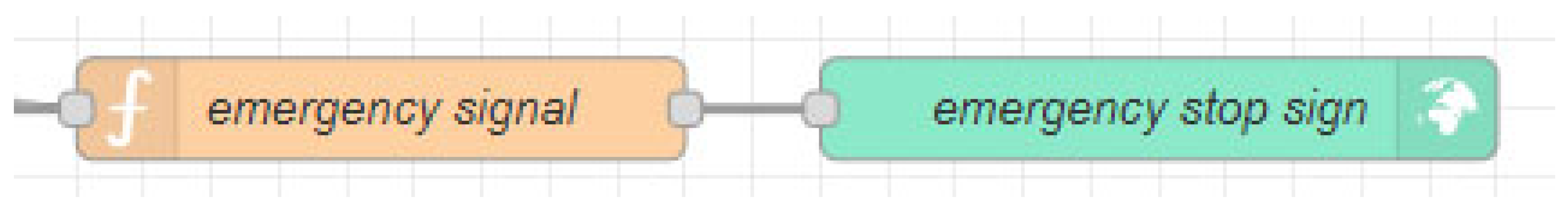

Figure 5 and was divided into three main blocks, each with a specific function but interconnected to ensure the proper functioning of the proposed early warning system.

Figure 6 presents a diagram of the structure whose function was to define and restrict the critical value of a parameter. The next structure, which operated in parallel and is represented in

Figure 7, was responsible for injecting data so that the values fluctuated as required for the simulation; it also passed the sensor data directly to the Blynk server and to its representation in Unity. Finally, the structure shown in

Figure 8 received data from both previous structures and interpreted the values to send a warning signal to the simulation in Unity when a parameter reached its critical value. This froze the simulation, preserving the last data received, while feeding the pop-up system in Unity.
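The three-block logic described above can be illustrated with a minimal Python sketch. It is not the Node-RED flow used in this work: the class name, the parameter name and the numeric values are hypothetical, and it assumes that every parameter has an upper critical limit that is considered reached when the reading is greater than or equal to it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ParameterMonitor:
    """Comparator for one sensor channel (hypothetical names and values)."""

    name: str
    critical_limit: float               # first block: configured critical value
    last_value: Optional[float] = None  # last reading accepted before freezing
    frozen: bool = False

    def update(self, reading: float) -> bool:
        """Feed one injected reading; return True while a warning is active."""
        if self.frozen:
            # The simulation is frozen: ignore new data, keep the last value.
            return True
        self.last_value = reading
        if reading >= self.critical_limit:
            # Third block: raise the warning and freeze the simulation.
            self.frozen = True
        return self.frozen


monitor = ParameterMonitor(name="coolant_temperature", critical_limit=100.0)
warnings = [monitor.update(v) for v in [82.0, 95.5, 101.2, 90.0]]
print(warnings, monitor.last_value)  # prints [False, False, True, True] 101.2
```

In this sketch the reading of 90.0 that arrives after the limit is exceeded is discarded, so the value that triggered the warning (101.2) remains available to the pop-up system.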

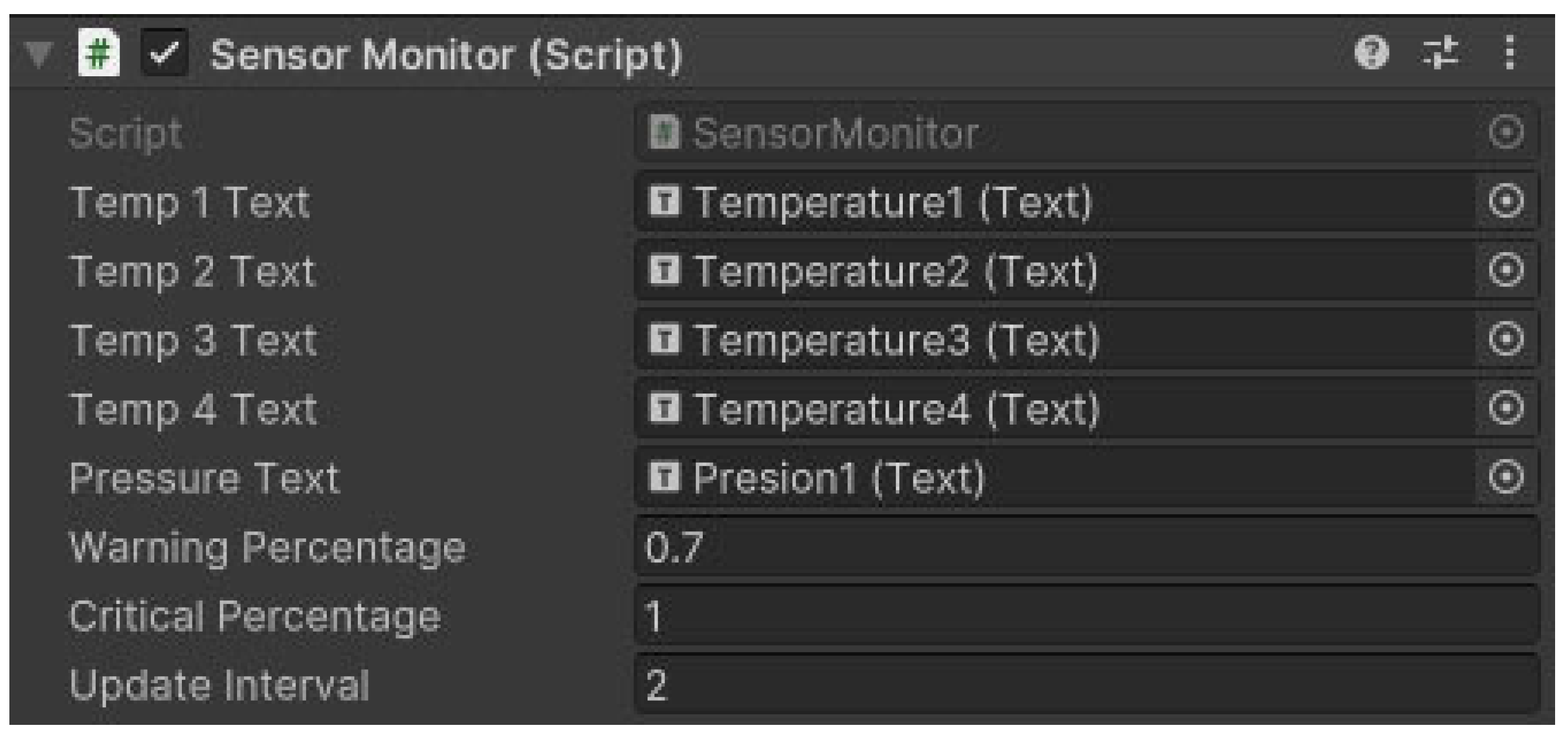

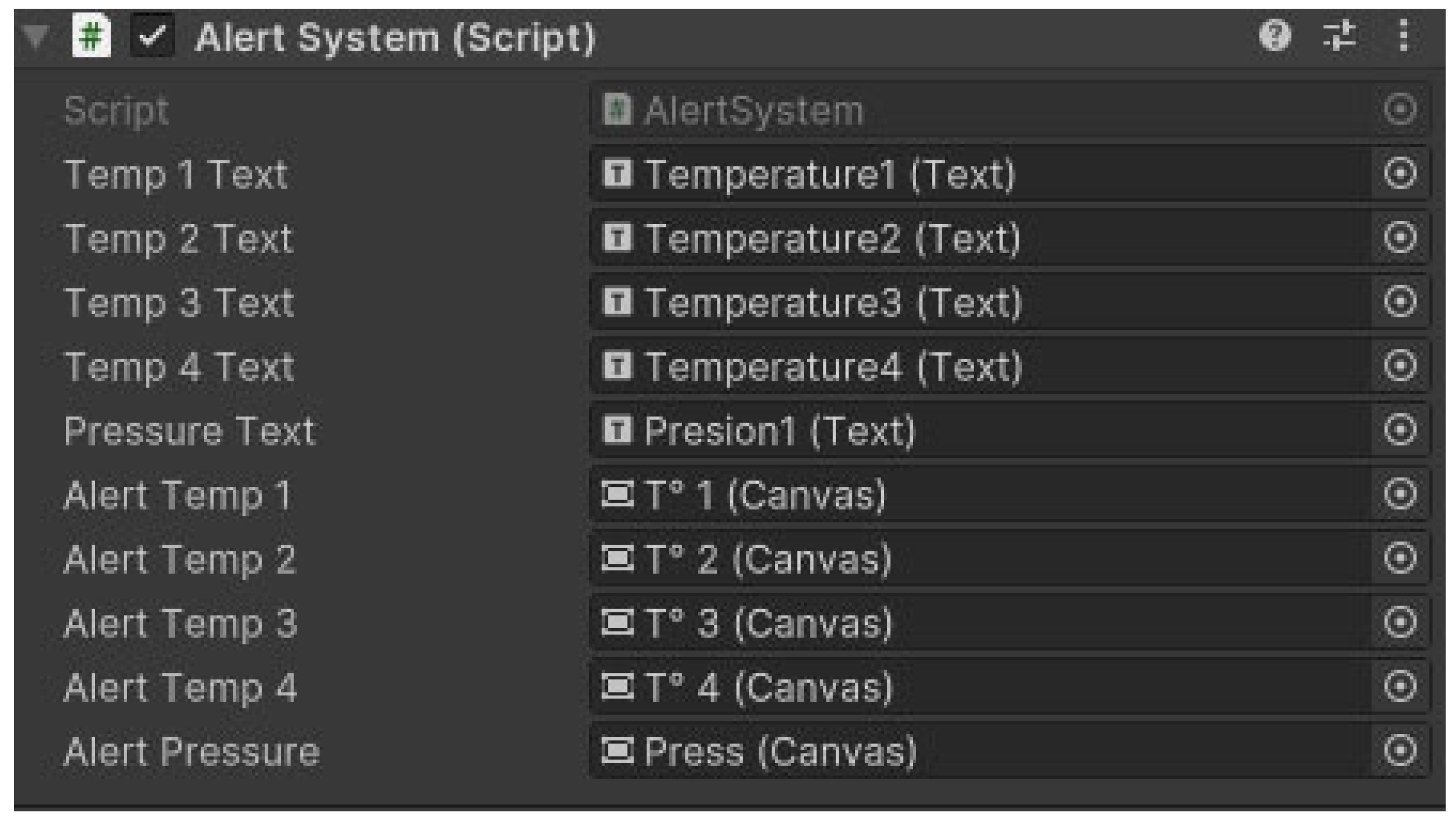

For the pop-up-window system, two applications embedded in Unity were developed. The first one, whose configuration panel in Unity corresponds to the one shown in

Figure 9, interpreted the data provided by Node-RED and changed the color of the text representing the parameter values, signaling three different operational states. Green indicated correct operation without problems; yellow appeared when the value approached a critical state, defined as a user-selected percentage of the critical value; and red indicated that the value had reached its maximum critical limit.
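As an illustration of this three-state rule, the following Python sketch classifies a reading against its critical limit. It is a simplified stand-in for the Unity application, not its actual code: the function name and the 0.8 default for the user-selected percentage are assumptions, and the limit is assumed to be a positive upper bound.

```python
def criticality_color(value: float, critical_limit: float,
                      warning_fraction: float = 0.8) -> str:
    """Return 'green', 'yellow' or 'red' for a reading.

    warning_fraction is the user-selected share of the critical limit at
    which the yellow state begins; 0.8 is an illustrative default, not a
    value taken from this work.
    """
    if not 0.0 < warning_fraction < 1.0:
        raise ValueError("warning_fraction must lie strictly between 0 and 1")
    if value >= critical_limit:
        return "red"       # critical limit reached
    if value >= warning_fraction * critical_limit:
        return "yellow"    # approaching the critical limit
    return "green"         # normal operation


for reading in (60.0, 85.0, 100.0):
    print(reading, criticality_color(reading, critical_limit=100.0))
```

With a limit of 100.0 and the assumed fraction of 0.8, the three readings map to green, yellow and red, respectively.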

The second application, corresponding to

Figure 10, communicated directly with the previous one and had the function of identifying a color change in the text of any of the sensorized parameters. When a monitored parameter reached its critical limit, this application detected the red color in the text of the parameter and automatically activated the pop-up window, which indicated that the motor must be turned off and the respective inspection and/or maintenance work must be carried out. For further details, see

Table 2 (Unity code).
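The behavior of this second application can be sketched in Python as follows. This is only an illustration of the trigger logic, not the Unity code in Table 2: the parameter names and maintenance tasks are hypothetical placeholders, since the real tasks come from the equipment manuals and the maintenance staff.

```python
from typing import Dict, List, Optional

# Hypothetical task list used only for illustration.
MAINTENANCE_TASKS: Dict[str, List[str]] = {
    "oil_pressure": ["Check oil level", "Inspect oil pump and filter"],
    "coolant_temperature": ["Check coolant level", "Inspect radiator and fan"],
}


def build_popup(colors: Dict[str, str]) -> Optional[str]:
    """Return the pop-up text for the first parameter shown in red, if any."""
    for parameter, color in colors.items():
        if color == "red":
            # Fall back to a generic task when no specific list is defined.
            tasks = MAINTENANCE_TASKS.get(parameter, ["Inspect the equipment"])
            lines = [f"CRITICAL: {parameter}", "Turn off the motor, then:"]
            lines += [f"  {i}. {task}" for i, task in enumerate(tasks, start=1)]
            return "\n".join(lines)
    return None  # no parameter is in the red state, so no pop-up is shown


print(build_popup({"oil_pressure": "yellow", "coolant_temperature": "red"}))
```

Keeping the task list in a lookup table separate from the trigger means that the inspection tasks can be updated without changing the detection logic.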

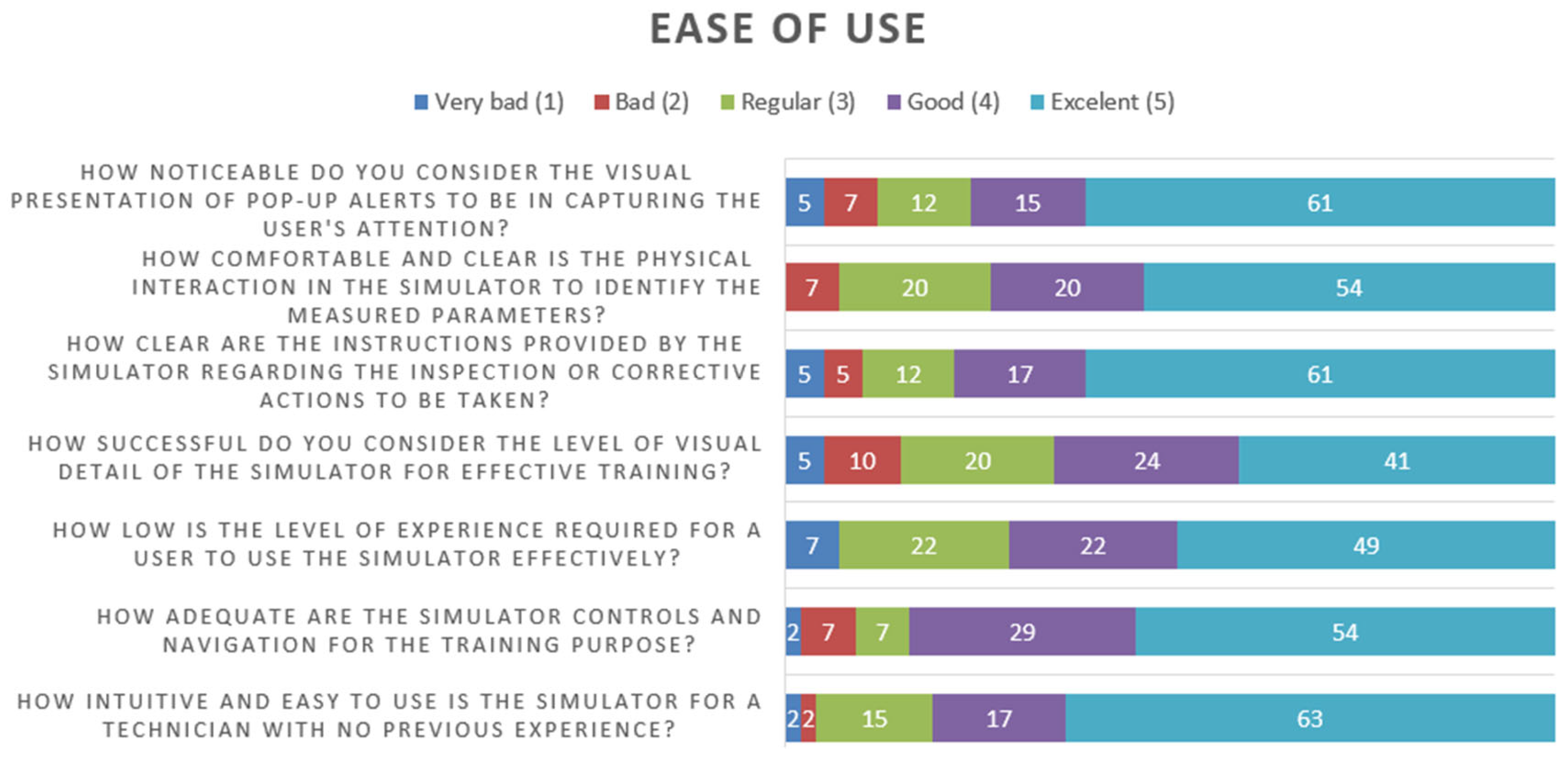

5. Discussion

The use of technologies such as virtual reality (VR) and digital twins in industrial environments has become increasingly necessary, allowing significant progress in monitoring, maintenance and training tasks by offering a safe, accessible and controlled alternative to field operations. However, there are still technical limitations that prevent the implementation of these technologies on a large scale. These include the absence of realistic tactile feedback, which reduces the effectiveness of hands-on learning in industrial simulations [

27]. In addition, current systems face latency problems that hinder synchronization between the physical environment and its virtual representation, especially in critical contexts such as underground or hard-to-access facilities [

28]. Added to this is the need for more intuitive interfaces, as many platforms remain complex to use for non-specialized operators, making it more difficult to increase efficiency in design validation and assisted maintenance processes [

29].

In the face of these challenges, future lines of research are aimed at improving the accuracy and responsiveness of systems through solutions such as advanced haptic devices, 5G networks and edge computing to reduce latency, along with the integration of artificial intelligence to complement predictive analytics and personalize the user experience. These improvements would enable not only more realistic and effective training, but also safer remote operations, such as real-time remote inspection in hostile industrial environments, thanks to immersive 360° video streams combined with smart sensors [

30]. Likewise, the adoption of digital twins coupled to VR environments allows us to visualize in advance the behavior of equipment under different operating conditions, facilitating decision making in preventive and predictive maintenance [

31].

Despite the promising results of the proposed system, several practical limitations may hinder its large-scale adoption in industrial contexts. These include latency issues in data transmission, especially in remote environments; the cost of VR-compatible hardware and sensing equipment, which may not be affordable for all companies; and network stability, which is critical for ensuring real-time synchronization between physical assets and their digital counterparts. To address these challenges, future research will explore the incorporation of 5G communication technologies and edge computing to reduce latency and increase system responsiveness. Additionally, integrating artificial intelligence for automated diagnosis and decision support may enhance the robustness and scalability of the platform, paving the way for more resilient and intelligent maintenance systems under the Industry 4.0 paradigm.

The proposed methodology demonstrates a strong potential for scalability and generalization across different industrial scenarios. Although this study focused on a specific use case involving an internal combustion engine, the system architecture is modular and adaptable. By modifying the 3D digital model and updating the types of sensors connected to the PLC, the same communication flow—from data acquisition to immersive visualization—can be applied to a wide range of equipment or processes. This flexibility allows the platform to be deployed in various industrial environments such as fluid transport systems, robotic workcells, or energy plants, making it a replicable and extensible solution for Industry 4.0 training and monitoring applications.

Despite the system’s successful performance in a controlled environment, certain technological limitations must be considered for real industrial deployment. These include latency due to network instability, limitations in data throughput, and the processing load of real-time visualization. Future iterations of the system may incorporate edge computing to decentralize data processing closer to the sensor source, and implement industrial communication protocols such as OPC UA or MQTT with quality-of-service control. These enhancements would improve system robustness, reduce response time and facilitate deployment in time-sensitive or remote industrial contexts.

Future enhancements to the system should be aimed at increasing realism, usability and scalability. One key direction involves the integration of advanced haptic devices and 360° immersive video, which would provide more authentic tactile feedback and contextual visualization. These features were not implemented in the current version due to hardware constraints and cost considerations but are considered high-impact improvements for simulation-based training in high-risk scenarios. Another relevant challenge is latency and data synchronization in remote or mission-critical industrial environments. Although the current implementation was tested under stable conditions, future versions may incorporate edge computing to offload processing closer to the data source, as well as 5G or industrial-grade communication protocols with QoS to support real-time responsiveness in complex deployments. Additionally, AI integration is proposed as a future development path, using machine learning models such as LSTM or rule-based systems to support predictive maintenance and personalized training. This would require a structured data infrastructure and greater computational capacity but would significantly enhance system intelligence and adaptability. Finally, recognizing that system interfaces may pose a barrier for non-specialized operators, future versions will focus on user interface simplification, adding guided workflows, voice-assisted cues and customizable interaction layers to improve accessibility and ease of use across different user profiles.

6. Conclusions

The advance of Industry 4.0 has led to the implementation of technologies such as virtual reality and digital twins, which in the industrial context represent a significant advance in the digitization of maintenance, monitoring and training processes. This work has demonstrated, through the systematic application of the proposed methodology, the technical feasibility of integrating physical sensors, PLC processing and immersive visualization with early warning systems implemented in Unity through the use of open-source software, allowing these technologies to be applied more widely.

However, the system has some limitations. The lack of haptic feedback and the dependence on connectivity with IoT platforms may affect accuracy and user experience in critical scenarios. In addition, quantitative metrics such as latency, the error rate in alerts and user performance on real work tasks were not evaluated; evaluating them is considered essential for future validations.

Future work will focus on implementing a quantitative evaluation of the system’s performance in industrial scenarios. This will include measuring sensor-to-visual latency, synchronization accuracy between real-world data and the digital twin model, and transmission delay through the communication layers. In addition, user performance during training sessions will be assessed using objective metrics such as task completion time, error rate and response to alerts. These indicators will provide a more comprehensive validation of the platform’s effectiveness, both technically and pedagogically, beyond subjective perception.

As directions for future work, we propose the following: (1) the incorporation of haptic feedback to enrich the interaction with the virtual environment, (2) the use of 5G networks and edge computing to reduce latency and improve system stability, and (3) the integration of artificial intelligence algorithms for automatic diagnosis and the personalization of training according to the user’s profile. These improvements would allow us not only to increase the fidelity of the environment but also to move towards predictive maintenance and adaptive learning models, contributing to safer, more efficient and resilient industrial environments.