Featured Application

This study contributes to the design of adaptive gesture-based control systems using smart rings, optimizing user interactions across diverse contexts—from relaxed 2D screen interfaces to immersive XR environments—by aligning gesture modalities with user expectations and contextual settings.

Abstract

As gesture-based interactions expand across traditional 2D screens and immersive XR platforms, designing intuitive input modalities tailored to specific contexts becomes increasingly essential. This study explores how users cognitively and experientially engage with gesture-based interactions in two distinct environments: a lean-back 2D television interface and an immersive XR spatial environment. A within-subject experimental design was employed in which participants, wearing a smart ring mockup that simulated gesture recognition, performed tasks using three gesture modalities: (a) Surface-Touch gestures, (b) mid-air gestures, and (c) micro finger-touch gestures. The results revealed clear, context-dependent user preferences: Surface-Touch gestures were preferred in the 2D context for their controlled and pragmatic nature, whereas mid-air gestures were favored in the XR context for their immersive, intuitive qualities. Interestingly, longer gesture execution times did not consistently reduce user satisfaction, indicating that compatibility between the gesture modality and the interaction environment matters more than efficiency alone. This study concludes that successful gesture-based interface design must account for how well each gesture modality fits its context, highlighting the nuanced interplay among user expectations, environmental context, and gesture modality. These findings provide practical considerations for designing Natural User Interfaces (NUIs) for various interaction contexts.

1. Introduction

Hand-gesture-driven interaction has matured into a prominent input method, with practical applications across high-tech domains. This evolution has been particularly evident in industries such as gaming, robotics, and IoT-powered home appliances [1]. Within the Human–Computer Interaction (HCI) field, gesture recognition has become a central research topic. Companies such as Microsoft, Google, Apple, Meta, and Sony have integrated their own gesture recognition technology into their platforms, for example, the Google Soli project, Microsoft Kinect and the HoloLens Head-Mounted Display (HMD), the Meta Quest HMD, and, more recently, the Apple Vision Pro HMD. These technologies showcase how gestures can act as intuitive, organic methods for engaging with digital and virtual environments [2]. For instance, the hand recognition and tracking technology in the Meta Quest Software Development Kit (SDK) can replace conventional VR controllers, demonstrating how hand-gesture-driven interaction can enhance immersive in-game experiences within VR/MR environments [3]. Likewise, Apple Vision Pro relies on pinch gestures as a core mechanism for navigating spatial UIs, intentionally minimizing reliance on traditional XR controllers [4].

In consumer technology, smart TVs and media devices increasingly support free-hand gestures for interface navigation and control, moving beyond button presses on a remote to hand swipes and waves. For example, Sony and Apple have secured patents for mid-air gesture controls designed for navigating TV home interfaces as alternatives to traditional physical remotes [5,6]. Moreover, pairing such gesture controls with wearable technology further enhances gesture recognition accuracy. Compared with camera-based free-hand tracking, lightweight wearable devices, such as smart rings equipped with Inertial Measurement Unit (IMU) sensors, offer a more accurate and user-friendly way to detect fine gestural actions such as subtle muscle vibrations and directional finger movements [7].

A key reason for spotlighting smart rings as an interaction method is the high computational demand of bare-hand gesture recognition, which typically requires multiple cameras and substantial processing power for visual sensing [8]. In addition, many users prefer lighter, glasses-style XR gear over bulky headsets when engaging with XR experiences [9]. Addressing this preference, Meta’s Orion project has introduced a wristband that uses electromyography (EMG) to improve the precision of gesture recognition for lightweight XR devices [10]. Drawing on such developments, this study focuses on enhancing natural user interactions through everyday wearable form factors, such as the increasingly popular eyewear-like XR devices. Consequently, we identified the smart ring as a promising form factor for gesture-based interactions because of its potential for seamless integration into everyday routines.

Taken together, the widespread use of hand gesture technology highlights the growing relevance of gesture-based interfaces and supports the idea that Natural User Interfaces (NUIs) could offer an intuitive and efficient way for users to interact with digital systems [11]. It is therefore important to examine how users prefer to perform, and how they perceive, hand gestures across different interface contexts, especially in two of the most active application domains: TV-based 2D screen interfaces and spatial interfaces. These two platforms present notably different usability contexts. A TV-platform-based UI typically involves a lean-back control experience on a living room couch. By contrast, an XR (AR/VR/MR)-based spatial UI places users either inside a fully rendered 3D virtual environment or in front of virtual content superimposed onto the real world, allowing them to gesture more freely than in a TV experience because spatial UIs are larger and more widely distributed around the user. In particular, users wearing XR headsets tend to perform more expressive hand movements, such as grasping, pinching, swiping, or pointing in mid-air, to interact with virtual elements and interfaces [12]. While TV interfaces also allow for gesture control, the fixed size of the 2D screen and the physical distance between the user and the screen impose spatial constraints. As a result, gestures on TVs are often more fixed in orientation and more limited in range than the dynamic, flexible gestures possible in XR.

Despite the increasing adoption of gesture interactions on both platforms, the two have rarely been compared in terms of gesture interaction and usability, and it remains an open question how users’ preferences for particular hand gestures differ between controlling a 2D-screen-based interface and an XR-based interface. To address this gap, this study explores hand-gesture-based interactions in both a TV-platform-based 2D interface and an XR-platform-based 3D virtual spatial interface, aiming to measure the differences and similarities in how users experience and evaluate gestural controls across the two platforms (Figure 1).

Figure 1.

Images of participants (wearing a smart ring mockup) interacting with the UI components of a landing page interface by performing predefined gestures under two experimental conditions: a TV-screen-based interface (TV-type projector: (A-1,A-2)) and an XR-based spatial interface (B-1,B-2).

Specifically, our objectives are to

- Compare the user experiences and performance of hand-gesture-based interactions for a TV-platform-based 2D interface versus an XR-platform-based 3D spatial interface;

- Determine which gesture types (e.g., hand/finger swipes, point and click, grab and release) are relatively preferred in each contextual condition;

- Assess the user comfort levels and usability issues (physical fatigue, cognitive load, or social discomfort) associated with different gestures on the two different platforms.

By examining usability, preferences, and usability issues, we seek to deepen our understanding of how environmental and platform-specific contexts shape the effectiveness of gesture-driven user interfaces, and to identify the user preferences and usability requirements that can sustain convenient, usable gesture interactions on both traditional and immersive media platforms.

2. Related Studies

2.1. Natural User Interfaces (NUIs)

Natural User Interfaces (NUIs) are designed to enhance user interactions by utilizing instinctive human actions such as hand gestures, eye gaze, and voice commands, aiming to make the user experience smoother and more intuitive [13]. A NUI focuses on reducing the learning curve by aligning interactive behaviors with the user’s physical and cognitive habits, for example, by employing mid-air gestures to browse or manipulate digital content [14]. Research on tactile and gesture-based systems has shown that physically interacting with digital elements improves users’ spatial recall and lessens their cognitive strain, as users feel that the interaction is a natural extension of their body movements [15,16]. Similarly, a NUI promotes interaction with digital content through intuitive actions, for instance, swiping or waving a hand to dismiss content rather than clicking a delete button [17]. This approach reflects the concept of hands-on interaction, enabling users to feel as if they are physically engaging with and handling digital components [18]. Additionally, NUI-based systems typically respond to environmental cues, offering context-aware suggestions and adapting in real time to users’ interaction patterns [19].

In terms of real-world application, NUIs have been adopted across numerous technological sectors. Since the early 2010s, Microsoft has played a pioneering role in NUI development, especially through its Kinect series and accompanying SDKs for Xbox, which enabled users to interact with games using full-body gestures [20,21]. Likewise, Apple’s touchscreen interfaces on iPhones and iPads have set industry standards for intuitive gesture interactions such as finger-based pinching and dragging [22]. Especially since 2020, major IT companies have been actively advancing NUI technologies and integrating intuitive multimodal interaction methods such as gesture recognition, eye tracking, and voice commands into their products and platforms. As previously mentioned, the Apple Vision Pro and the Meta Quest series have pioneered seamless interactions in mixed and virtual reality environments, harnessing sophisticated hand tracking, eye tracking, and voice control to enhance user immersion [3,23]. Among consumer smart home devices, Google Nest Hub used Soli radar technology to enable touch-free gesture interactions, demonstrating the integration of NUIs into daily life [24,25]. Meanwhile, Amazon Alexa’s voice-driven NUI has opened up new usability possibilities, as the AI assistant applies contextual awareness and responds to user conversations with human-like responsiveness [26]. Together, these examples from major tech companies illustrate how NUI-driven technologies and principles can be embedded into products to transform how we engage with digital interfaces.

2.2. Gesture Interactions in TV Interfaces

In home entertainment and television, mid-air gesture interactions serve as an alternative to traditional remote controls. Research in this area primarily aims to identify gesture sets that feel instinctive, allowing users to browse, select, and operate TV content from a distance. For instance, Ramis et al. recorded a wide range of hand motions from participants to determine the most intuitive gestures for essential TV operations such as channel switching and volume control [27]. Other studies have used gesture elicitation techniques to create user-centric and accessible interaction methods for TVs. Vatavu and Zaiti conducted a gesture elicitation study on television interactions, evaluating the ease of execution and the memorability of free-hand gestures across multiple TV control functions [28]. Similarly, Dong et al. investigated how users naturally perform gestures for smart TV control, emphasizing recallability and usability in developing a practical gesture system [29]. He et al. carried out a gesture elicitation study to develop intuitive gestures for smart TV interactions, assessing them in terms of user-friendliness, retention, and ease of learning and memorization [30]. These studies suggest that TV gestural controls should align with users’ inherent mental models of natural physical actions to maximize intuitiveness.

Beyond this perspective, some studies have highlighted significant usability challenges for gesture-driven TV interaction. Notably, gesture-controlled TV interfaces may impose greater cognitive load and mental stress on users than conventional remote controls. For instance, Xuan et al. compared mid-air gestures with remote control methods and found that although gesture-based input led to increased mental effort and stress, users also perceived enhanced task effectiveness and adaptability [31]. Similarly, Bernardos et al. examined how users responded to gesture-based control systems in smart environments, including smart devices such as TVs, and found that while gesture interactions offer novel, forward-thinking possibilities, mid-air gestures tend to elevate users’ mental strain and cognitive load compared with conventional input techniques [32]. Additional concerns in the literature involve the precision of gesture detection and the commonly cited “Gorilla Arm” phenomenon: the physical strain that occurs when users hold their arms or hands in extended positions (such as maintaining a pointing gesture in mid-air) for a long time, which can cause muscle fatigue and degrade user comfort and overall interaction usability [33]. Overall, research in the TV domain indicates that gesture-based TV control is feasible and can be enjoyable, but hand gestures should be designed to mitigate cognitive load and physical strain.

2.3. Gesture Interactions in XR (VR/AR) Interfaces

In recent years, natural gesture-based interactions have become an integral input method in extended reality (XR) interfaces, encompassing both virtual and augmented reality. Progress in machine learning and depth-sensing tools has significantly enhanced gesture recognition, allowing users to manipulate virtual objects and components as they would in real life [34]. Major IT companies have contributed significantly to these developments. The Apple Vision Pro offers a seamless interaction experience by combining eye tracking with hand gesture recognition. For example, users can select elements by looking at them and pinching two fingers together, move elements by moving their pinched fingers, and scroll by flicking their wrist. This integration allows for precise and fluid interactions within virtual environments, eliminating the need for physical controllers [35]. Similarly, Microsoft’s HoloLens series combines depth sensors with advanced gesture recognition algorithms to enable intuitive hand tracking and interaction within an augmented reality environment [36]. Also, as mentioned above, the Meta Orion project recently unveiled lightweight smart XR glasses that render holographic user interfaces superimposed onto real-world environments and the objects within them [37]. Collectively, these advancements highlight the growing role of gesture recognition technology in shaping more immersive, intuitive, and controller-free interactions within XR environments.

From another standpoint, wearable technologies have been explored either as auxiliary tools for XR devices or as primary devices for enhancing gestural interactions in XR environments. Again, a notable example is the Meta Orion project, which includes an EMG-sensor-embedded wristband that reads neuromuscular signals to interpret a user’s hand movements. This technology converts subtle finger and wrist motions into precise digital commands during XR-based interactions [38]. Another significant EMG-enabled device is the Myo Armband by Thalmic Labs. Like the Meta Orion wristband, this armband employs EMG sensors to capture the bioelectrical signals generated by muscle contractions [39,40,41]. Moreover, wearable technology ranges from armbands and smart gloves to full-body suits designed to recognize and translate human gestures in different contextual settings. Among haptic gloves, the HaptX Gloves DK2 have notably advanced tactile interaction by simulating the sensations of touch, pressure, and texture through microfluidic systems, offering users a realistic experience of digital objects [42]. Thus, advances in natural gesture-driven interaction, combined with the continuing convergence of wearable technology, are fostering more fluid and intuitive digital experiences. As these advancements continue, such integrations are expected to further bridge the gap between physical and virtual spaces, transforming how users engage with immersive environments.

2.4. Smart Ring Interactions

Smart rings have emerged as versatile wearable devices that can enhance gesture-based interactions across a variety of digital environments, including TVs, computers, and XR devices. Equipped with advanced sensing technologies, smart rings can interpret a broad spectrum of hand and finger movements, providing seamless and user-friendly interaction experiences. For instance, the RingGesture system integrates an IMU sensor and electrodes to detect mid-air gestures, enabling text input and navigation across digital platforms [43]. Similarly, Ring-a-Pose employs acoustic sensing to continuously track three-dimensional hand poses and identify various types of micro-gestures [44]. Empirical studies further underline the broad applicability of smart ring technology. Gheran et al. conducted an in-depth study that collected and analyzed extensive user-proposed gesture data, revealing low agreement among participants and the need for personalized gesture mapping to make smart ring interfaces more intuitive [45]. Building on this, a follow-up study reaffirmed the need for a participatory design process by formalizing user-specified gesture sets and assessing their cognitive load across multiple interaction use cases [46]. Additionally, PeriSense used capacitive sensing to capture the subtle distances between the ring’s electrodes and the user’s skin, enabling more refined and complex interactions involving multiple fingers [47].

The growing presence of smart rings in consumer electronics showcases their potential for everyday use. The Samsung Galaxy Ring supports gesture-based controls such as a double-pinch gesture, allowing users to handle common tasks like taking photos or dismissing alarms hands-free and enhancing the convenience of interactions within the Samsung ecosystem [48,49,50]. Similarly, the Neyya smart ring lets users control presentations, manage calls, skip media tracks, and adjust the volume on a variety of devices, including laptops, TVs, and smartphones [51]. Additionally, the Y-RING features personalized gesture inputs and an integrated NFC module for contactless payments, offering diverse interaction possibilities and transactional convenience [52]. Given the growing integration of gesture recognition and cross-platform compatibility, smart rings are poised to become a useful, intuitive interface for seamless, context-aware interactions.

3. Methods

3.1. Overview

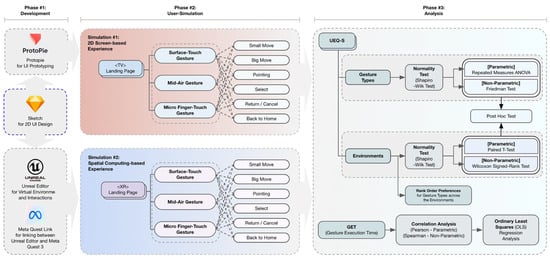

To systematically investigate gesture-based interactions in different user interface environments, this study was structured into three sequential stages: the development phase, the user simulation phase, and the analysis phase (Figure 2). First, the development phase involved (a) designing a user interface using Sketch (ver. 67.2, Bohemian Coding, The Hague, Netherlands); (b) prototyping a 2D interface using ProtoPie (ver. 9.1.0, Studio XID, Seoul, Republic of Korea); and (c) developing, linking, and prototyping the interactions of a 3D spatial user interface using Unreal Editor (ver. 5.2.1, Epic Games, Cary, NC, USA) and Meta Quest Link (ver. 76.0.0.452.315, Meta Platforms, Menlo Park, CA, USA). After the basic UI architecture and components were designed in Sketch, these assets were migrated into both ProtoPie and Unreal Editor, where interaction logic was added to produce interactive prototypes for the 2D screen and 3D spatial environments. To display the spatial user interface in XR, Meta Quest Link served as a bridge between Unreal Editor and the Meta Quest 3 (Meta Platforms, Menlo Park, CA, USA). For hand recognition and interaction with virtual interfaces in XR, we purchased the Hand Tracking Plugin (ver. 0.6.3) developed by Just2Devs via the Epic Games Launcher (Epic Games, Cary, NC, USA) [53] and used the Meta XR Plugin (ver. 1.88.0, Meta Platforms, Menlo Park, CA, USA) from the Meta for Developers website [54].

Figure 2.

The framework for the development, user simulation, and analysis of hand-gesture-based interactions in 2D and XR environments.

In the user simulation phase, before the experiment began, the participants were informed that the study did not use a functioning smart ring with gesture-detection capabilities. Instead, they were instructed to assume that the smart ring they would wear recognized their gestures and enabled UI control within a simulated experiment. Following this briefing, the participants performed the experiment while wearing a smart ring mockup on either their left or right index finger. These settings meant that the user testing in this study followed the “Wizard of Oz” method [55], which enables rapid, effective prototyping of interactive UIs and user simulation experiments.

Building on these settings, the participants first completed a pre-experiment questionnaire assessing their prior experience with IT devices and VR/MR technologies. They then received instructions on performing three distinct gesture types (Figure 2): Surface-Touch gestures, mid-air gestures, and micro finger-touch gestures. Following this guidance, the participants used the instructed gestures to interact with two primary platforms: (a) a landing page implemented as a TV-screen-based user interface and (b) a landing page designed as an XR-based spatial user interface (see the “Phase 2: User Simulation” part of Figure 2). During the simulations, the participants were guided to perform specific hand/finger gestures in predefined user scenarios. Large and small finger movements were used for corresponding cursor adjustments; finger pointing and selection motions (pausing a finger swipe and then tapping either on a surface or in mid-air) were used for free cursor movement and selection; and hand-grabbing and waving motions were used for functions such as “return/cancel” and “back to home”.
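To make the Wizard-of-Oz setup concrete, the sketch below shows one way an experimenter-side console could translate observed gestures into prototype commands. The key bindings, gesture labels, and `Command` names are hypothetical illustrations: the source does not describe how the wizard triggered UI responses, and pairing grabbing with “return/cancel” and waving with “back to home” is assumed from the order in which the text lists them.

```python
from enum import Enum
from typing import Optional


class Command(Enum):
    """Hypothetical UI commands sent to the prototype (assumed, not from the study)."""
    CURSOR_SMALL_STEP = "move cursor by one card"
    CURSOR_LARGE_STEP = "move cursor by several cards"
    SELECT = "select focused card"
    RETURN_CANCEL = "return/cancel"
    BACK_TO_HOME = "back to home"


# Hypothetical wizard key bindings: the experimenter presses a key as soon as
# the participant performs the matching gesture with the ring mockup.
WIZARD_KEYMAP: dict[str, tuple[str, Command]] = {
    "s": ("small finger movement", Command.CURSOR_SMALL_STEP),
    "l": ("large finger movement", Command.CURSOR_LARGE_STEP),
    "t": ("pause swipe, then tap", Command.SELECT),
    "g": ("hand grab", Command.RETURN_CANCEL),
    "w": ("hand wave", Command.BACK_TO_HOME),
}


def wizard_dispatch(key: str, modality: str, log: list) -> Optional[Command]:
    """Translate a wizard keypress into a UI command and log it.

    Unknown keys are ignored so that accidental presses do not move the UI.
    """
    binding = WIZARD_KEYMAP.get(key)
    if binding is None:
        return None
    gesture, command = binding
    # Record modality, observed gesture, and triggered command for later review.
    log.append((modality, gesture, command.name))
    return command


if __name__ == "__main__":
    session_log: list = []
    for key in ["l", "s", "t", "g", "x"]:  # "x" is an accidental press
        wizard_dispatch(key, "Surface-Touch", session_log)
    for entry in session_log:
        print(entry)
```

Keeping the gesture-to-command table in one place means the same console could serve all three modalities; only the `modality` label changes between conditions.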

After all three gesture types, the participants completed a usability preference survey and a post-experiment interview, lasting approximately 13 min, to assess their overall experience with hand gesture interactions while wearing the smart ring.

The experiment took place from 9 to 23 September 2024 in the UX research lab on the basement floor of the Samsung R&D campus in Umyeon-dong, Seoul. Each session lasted approximately 65 to 80 min per participant, and participants were recruited via an internal company email. To keep the participants’ cognitive characteristics consistent, recruitment was limited to individuals who (a) had some knowledge of VR/MR/AR technology, (b) had experienced these technologies at least once, and (c) were working professionals aged between 20 and 45. A total of 32 participants were recruited, of whom 30 (17 male and 13 female) ultimately took part in the study. The participants were primarily UI designers, GUI designers, VR engineers, and robotics engineers from the Samsung R&D campus in Umyeon-dong. It was also confirmed that none of the participants had physical or mental conditions that could have affected their involvement in the XR experiment.

Before the simulation, each participant was informed that the experiment could be paused or stopped if they experienced motion sickness while performing the gesture interactions with the projected TV-screen motion graphics or the VR headset. Participants who completed all experimental procedures received modest compensation, such as a coffee voucher or a gift card worth USD 15. All experimental procedures were conducted with the approval of the Public Institutional Review Board designated by the Ministry of Health and Welfare.

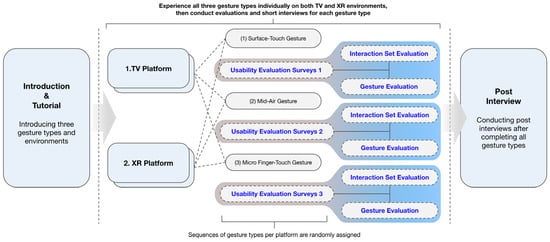

After completing each gesture type, the participants filled out two evaluation surveys: the “Interaction Set Evaluation” and the “Gesture Evaluation” (Figure 3). To comprehensively measure the usability of each gesture type, these surveys primarily used the User Experience Questionnaire, Short Version (UEQ-S), which assesses participants’ experiential and emotional feedback in terms of pragmatic quality, hedonic quality, and overall quality [56]. The collected user data were then analyzed statistically in the analysis phase.
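For readers unfamiliar with the UEQ-S, the sketch below shows its standard scoring: the eight 7-point items are shifted to a -3 to +3 range, items 1 to 4 are averaged into pragmatic quality, items 5 to 8 into hedonic quality, and all eight into overall quality. It assumes raw responses are coded 1 to 7 with the positive pole at 7; the function names and input layout are illustrative, since the source does not describe the study’s analysis scripts.

```python
from statistics import mean

# UEQ-S has eight items: items 1-4 form pragmatic quality, items 5-8 hedonic quality.
PRAGMATIC = slice(0, 4)
HEDONIC = slice(4, 8)


def ueq_s_scores(raw: list[int]) -> dict[str, float]:
    """Return UEQ-S scale means for one participant's responses to one condition.

    Assumes responses are coded 1..7 with the positive pole at 7, so each
    item is centered by subtracting 4 to obtain the -3..+3 range.
    """
    if len(raw) != 8:
        raise ValueError("UEQ-S expects exactly 8 item responses")
    if any(not 1 <= r <= 7 for r in raw):
        raise ValueError("responses must be on the 1..7 scale")
    centered = [r - 4 for r in raw]
    return {
        "pragmatic": float(mean(centered[PRAGMATIC])),
        "hedonic": float(mean(centered[HEDONIC])),
        "overall": float(mean(centered)),
    }


def condition_means(responses: list[list[int]]) -> dict[str, float]:
    """Average per-participant scale scores across all participants in a condition."""
    per_participant = [ueq_s_scores(r) for r in responses]
    return {
        scale: float(mean(p[scale] for p in per_participant))
        for scale in ("pragmatic", "hedonic", "overall")
    }


# Illustrative ratings for a single gesture condition (not study data).
print(ueq_s_scores([6, 6, 5, 7, 4, 5, 3, 4]))
# {'pragmatic': 2.0, 'hedonic': 0.0, 'overall': 1.0}
```

Because both subscales have four items, the overall score equals the mean of the pragmatic and hedonic scores, which makes a quick consistency check on any spreadsheet-based analysis.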

Figure 3.

The detailed experimental procedure for evaluating usability and preference in “Phase 2: User Simulation” of Figure 2.

3.2. The Rationale for the Selection of the Gesture Types

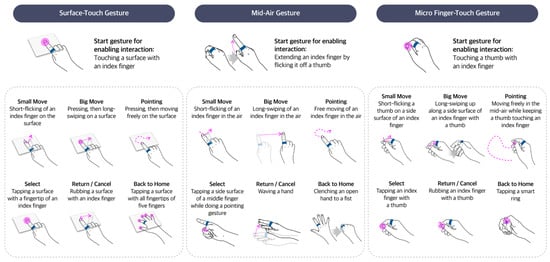

To comprehensively explore gesture-based interactions in both conventional and immersive environments, this study adopted a triadic gesture classification consisting of Surface-Touch gestures, mid-air gestures, and micro finger-touch gestures (Figure 4). The selection of these gesture types was grounded in a synthesis of prior empirical studies and cognitive ergonomics, so that each modality addressed a distinct facet of user input behavior relevant to its interaction context.

Figure 4.

Typological classification of specific hand gestures based on three main gesture types: Surface-Touch gestures, mid-air gestures, and micro finger-touch gestures.

Surface-Touch gestures were selected as the baseline gesture modality, inspired by well-established paradigms for direct manipulation on physical surfaces, such as trackpad-based finger gestures [57]. Derived from the tactile and spatial familiarity of touchpad interactions and body–surface contact modalities [58], this gesture type uses a resting surface, such as the armrest of a sofa or a tabletop, on which finger-based gestures are performed. Moreover, similar approaches in wrist-mounted and surface-coupled input systems have demonstrated high precision and user satisfaction [59], reinforcing the viability of surface-based input for casual, low-effort interactions. Given these characteristics, this gesture type is particularly well suited to everyday lean-back scenarios, enabling intuitive control of TV interfaces while seated comfortably on a living room couch.

Mid-air gestures were incorporated to examine a more immersive and expressive form of interaction, especially relevant in spatial computing environments where unconstrained free-hand movements are possible. Prior research has emphasized the naturalness and intuitiveness of mid-air gestures for controlling digital content in XR environments [60,61]. In particular, gestures such as pointing, swiping, and grabbing in mid-air align well with the interactive affordances of virtual objects [45]. However, in lean-back scenarios such as television viewing, mid-air gestures often suffer from a mismatch between spatial scale and user comfort, as the lack of tactile feedback can reduce precision when navigating the interface and increase fatigue over time [32]. As mentioned above, mid-air gestures can also induce physical fatigue (commonly referred to as the “Gorilla Arm” effect), which raises usability concerns in constrained or prolonged use [16].

Micro finger-touch gestures offer an alternative that retains the benefits of hands-free interaction while substantially reducing physical strain. Unlike arm and wrist movements, these micro gestures (side finger taps, presses, and swipes) require only minimal motion and can be executed with the hand resting naturally. Such finger-based micro gestures are particularly advantageous for prolonged interactions [62]. Furthermore, integrating IMU and capacitive sensors into compact form factors such as smart rings enables real-time, high-accuracy tracking of micro finger movements [59,63]. Approaches such as MicroPress and AuraRing further illustrate how micro finger-touch gestures can provide a rich interaction vocabulary while maintaining ergonomic comfort [64,65]. Consequently, micro touch (press)-based gestures appear to be a promising modality for seamless, fatigue-reducing interaction in both screen-based and spatial computing environments.

The selection of these three gesture modalities is not only theoretically grounded but also pragmatically motivated by the human-centered, technical, and contextual considerations in the studies above. By mapping each gesture type to an environmental affordance, namely (a) Surface-Touch gestures for grounded stability, (b) mid-air gestures for immersive freedom, and (c) micro finger-touch gestures for subtle comfort, this framework aims to support usability-oriented, cognitively valid comparisons of gesture-driven interactions across 2D and spatial interfaces. These gestural designs also allow for a robust comparative analysis of user preferences, cognitive load, and interaction efficiency, contributing to a deeper understanding of how gestures can be adapted to diverse computing environments.

3.3. The Experimental Design

In this study, we adopt a controlled within-subject experimental design [66] to compare gesture-based interactions across two platforms (TV and XR) and three gesture input types. Each participant experiences both platform conditions: a conventional TV-screen-based 2D interface and a 3D spatial interface in a virtual space resembling a real environment. In each environment, the participants are guided to use all three gesture modalities: (a) Surface-Touch gestures (touch-based input on a physical surface); (b) mid-air gestures (free-hand movements in mid-air); and (c) micro finger-touch gestures (subtle finger-level touch input on the side of the index finger on which the smart ring mockup is worn). Crossing the three gesture types with the two environments yields six conditions. The within-subject design allows the same individuals to compare all conditions directly. To mitigate learning and order effects, the sequence of gesture types and the testing order are rotated using a Latin-square design [67,68], so that no single gesture type or environment is systematically encountered first.
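The counterbalancing step can be illustrated with a small Python sketch. It treats the six platform-gesture combinations as a single factor and builds a balanced (Williams) Latin square, a common variant in which every condition appears once in each serial position and each condition directly precedes every other condition exactly once. The source states only that a Latin-square design was used; counterbalancing platform and gesture jointly (rather than, for example, blocking by platform to avoid repeatedly putting on the headset) and assigning participants to rows cyclically are assumptions.

```python
from itertools import product

PLATFORMS = ["TV", "XR"]
GESTURES = ["Surface-Touch", "Mid-air", "Micro finger-touch"]

# The 2 x 3 within-subject design yields six platform/gesture conditions.
CONDITIONS = [f"{p}/{g}" for p, g in product(PLATFORMS, GESTURES)]


def balanced_latin_square(n: int) -> list[list[int]]:
    """Williams-style balanced Latin square for an even number of conditions.

    Each condition appears once per row and once per column (serial position),
    and each ordered pair of adjacent conditions occurs exactly once.
    """
    if n % 2:
        raise ValueError("this construction assumes an even number of conditions")
    # First row follows the pattern 0, 1, n-1, 2, n-2, 3, ...
    first = [0]
    low, high = 1, n - 1
    while len(first) < n:
        first.append(low)
        low += 1
        if len(first) < n:
            first.append(high)
            high -= 1
    # Each subsequent row shifts every entry by the row index (mod n).
    return [[(c + r) % n for c in first] for r in range(n)]


def order_for_participant(index: int, square: list[list[int]]) -> list[str]:
    """Assign participants to square rows cyclically (an assumed allocation rule)."""
    row = square[index % len(square)]
    return [CONDITIONS[c] for c in row]


square = balanced_latin_square(len(CONDITIONS))
for pid in range(2):
    print(f"P{pid + 1:02d}", order_for_participant(pid, square))
```

With 30 participants and six rows, cyclic assignment uses each row order exactly five times, so every condition occupies every serial position equally often across the sample.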

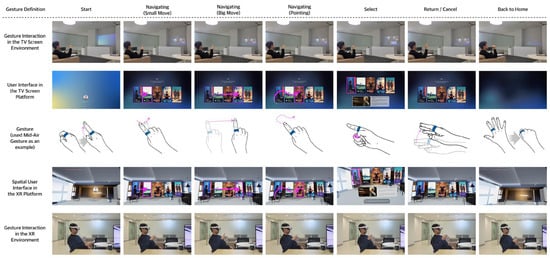

For each experimental condition, the participants perform a set of equivalent tasks on the given platform using the assigned gesture mode, reflecting common user objectives. In both the TV and XR scenarios, they are instructed to carry out a range of related actions, including navigating card components on the landing page, selecting a card component to enter the content details page, and canceling a selection to return to the landing page (Figure 5). The tasks are kept as similar as possible across TV and XR so that usability performance can be compared fairly across platforms and gesture modalities.

Figure 5.

Sequence of the same gestures performed for the same task in the TV and XR environments (the pink-highlighted cursors and arrows on the user interface are exaggerated for visibility).

3.4. The Data Collection and Analysis Methods

Multiple data collection methods were employed to gather both quantitative and qualitative data on usability, experiential user feedback, and user preferences for each gesture type under each platform condition. To protect the participants’ privacy, all collected data were kept confidential and anonymous: questionnaire responses, performance data, and interview transcripts were labeled with anonymous participant IDs.

3.4.1. Observational and Performance Data

During the experiment, each task was video-recorded for each participant (with consent obtained before the session) from an angle that captured the participant’s hand gestures while interacting with the TV-screen-based interface and the XR-based spatial user interface. In addition, the monitoring screen used to control and monitor the TV and XR interfaces (in particular, the first-person view from the Meta Quest 3) was screen-recorded to check the overall usability of the hand gestures. All recordings were saved anonymously per participant and later reviewed for in-depth observation of usability. The objective measure was the “gesture execution time (GET)” for each gesture type. In Adobe Premiere Pro (ver. 25.2.3, Adobe Inc., San Jose, CA, USA), the interval corresponding to each gesture was identified in the recorded video clip and marked with the marker tool (Figure 6), so that the segments in which specific gestures were performed could be located visually.

Figure 6.

A screenshot of marking specific gesture segments using the marker tools in Adobe Premiere Pro (ver. 25.2.3).

The duration of the performed gestures was measured as follows. In both the TV and XR environments, the total duration of each ring gesture was measured on the default 30 fps timecode. Measurement began the moment a user raised their hand or wrist to initiate a small movement and ended when the final home gesture was completed and triggered a screen transition. Gestures repeated for confirmation and long pauses due to conversation were included in the total time, on the reasoning that higher engagement (hedonic quality) implies continued focus on the gesture. Where no corresponding video was available, XR measurements were taken from the monitoring screen recordings.
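Because GET is read off a 30 fps timecode, each measurement reduces to converting two marker timecodes into frame counts and dividing their difference by the frame rate. A minimal sketch, assuming Premiere-style non-drop-frame `HH:MM:SS:FF` markers; the function names are hypothetical and not part of any Adobe API.

```python
FPS = 30  # timecode rate stated in Section 3.4.1 (assumed non-drop-frame)


def timecode_to_frames(tc: str, fps: int = FPS) -> int:
    """Convert an 'HH:MM:SS:FF' timecode into an absolute frame count."""
    hours, minutes, seconds, frames = (int(part) for part in tc.split(":"))
    if not 0 <= frames < fps:
        raise ValueError(f"frame field {frames} out of range for {fps} fps")
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


def gesture_execution_time(start_tc: str, end_tc: str, fps: int = FPS) -> float:
    """GET in seconds: end marker minus start marker."""
    n_frames = timecode_to_frames(end_tc, fps) - timecode_to_frames(start_tc, fps)
    if n_frames < 0:
        raise ValueError("end marker precedes start marker")
    return n_frames / fps


print(gesture_execution_time("00:01:02:10", "00:01:05:25"))
```

Working in integer frames avoids rounding drift: the markers above are 105 frames apart, which gives a GET of 3.5 s.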

3.4.2. Surveys and Questionnaires

After completing each gesture type on each platform, the participants filled out a usability and preference survey to evaluate their subjective user experience. To measure user feedback on the gesture types quantitatively, we used the User Experience Questionnaire, Short Version (UEQ-S) [56]. On 7-point semantic differential scales, the participants assessed each of the three gesture types they used with the TV and XR interfaces on three criteria: (a) pragmatic quality (usability aspects such as efficiency, dependability, and usefulness), (b) hedonic quality (emotional aspects such as satisfaction and enjoyment), and (c) overall quality. The survey was administered immediately after each gesture-type task and took 3 min, so that the participants could rate each of the six conditions while their memory was still fresh. Because each participant completed three gesture-type tasks on the TV platform and three on the XR platform, each participant completed six surveys in total.
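The three UEQ-S scores can be derived from the eight item responses. The sketch below assumes the standard UEQ-S layout (items 1 to 4 pragmatic, items 5 to 8 hedonic), raw answers coded 1 to 7 with the positive pole at 7, and the usual shift to a −3 to +3 range. The study used the official UEQ-S analysis tool [69]; this hypothetical helper is only meant to show the arithmetic.

```python
from statistics import mean

# Assumed UEQ-S item order: indices 0-3 pragmatic, 4-7 hedonic.
PRAGMATIC_ITEMS = range(0, 4)
HEDONIC_ITEMS = range(4, 8)


def ueq_s_scores(raw):
    """Pragmatic, hedonic, and overall scores for one UEQ-S response.

    `raw` holds eight answers on the 1-7 scale, with 7 at the positive pole.
    """
    if len(raw) != 8 or not all(1 <= r <= 7 for r in raw):
        raise ValueError("expected eight responses in the range 1-7")
    centred = [r - 4 for r in raw]  # map 1..7 onto -3..+3
    return {
        "pragmatic": mean(centred[i] for i in PRAGMATIC_ITEMS),
        "hedonic": mean(centred[i] for i in HEDONIC_ITEMS),
        "overall": mean(centred),  # mean of all eight items
    }


print(ueq_s_scores([6, 5, 6, 5, 4, 5, 3, 4]))
```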

In addition to the UEQ-S, the survey included a few custom questions targeting preferences and perceived workload. For example, we asked the participants to rank the three gesture types by preference for each platform and to rate how physically or mentally demanding each gesture interaction felt. We also included an overall comparison question (e.g., “Which platform did you find easier to interact with, and why?”), answered with a multiple-choice selection followed by an open-ended explanation.

3.4.3. Interviews

After completing all gesture types on the two platforms, the participants took part in semi-structured post-interviews to provide in-depth qualitative feedback. The experiment facilitator asked open-ended questions focusing on the participants’ experiences and their opinions of the different gesture interactions in both environments. Key questions included “Describe any difficulties you faced using mid-air gestures on the TV versus in XR”, “Which gesture type did you prefer overall and what made it better?”, and “How did the XR platform-based experience compare to the TV—in what ways did you find one more immersive or more frustrating than the other?”. Follow-up questions probed for explanations (e.g., if a participant found a gesture tiring or unintuitive, the facilitator asked why). The interviews also allowed the participants to suggest improvements or discuss contextual factors (such as whether they might feel self-conscious performing mid-air gestures in a living room compared with the privacy of VR). Each interview lasted around 10–15 min, or longer if the participant had more to share. With the participants’ prior consent, the interviews were video-recorded. After the experiment, the audio tracks were extracted from the recorded video files in WAV format and processed with the NAVER CLOVA app (ver. 3.7.11, NAVER Corp., Seongnam, Republic of Korea) to generate text transcripts. The voice recordings and resulting transcripts were anonymized and stored securely in an encrypted storage system for one year.

3.5. The Data Analysis Methods

Based on the user data collected from the surveys, this study analyzes the participants’ experiences with the different gesture types in the TV and XR environments, using Python (v.3.13) for data processing. The participants’ UEQ-S responses were processed with the UEQ-S data analysis tool [69], which provides descriptive statistics and comparative outcomes for the users’ hedonic and pragmatic perceptions across the experimental conditions. We then assessed internal consistency (Cronbach’s α) for the two UEQ-S dimensions, pragmatic and hedonic quality. For both dimensions, Cronbach’s α exceeded 0.7 for all gesture types, indicating acceptable reliability. Additionally, we referred to the benchmark data provided by Team UEQ to interpret the score level of each gesture type in each environment.
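Cronbach’s α compares the sum of the item variances with the variance of each respondent’s summed score: α = k/(k − 1) · (1 − Σ σ²ᵢ / σ²ₜ). A dependency-free sketch, assuming rows are participants and columns are the four items of one UEQ-S dimension; `cronbach_alpha` is a hypothetical helper, not a SciPy or Statsmodels function.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for rows of respondents, each a list of k item scores.

    Uses sample variances (n - 1 denominator) for items and totals.
    """
    if len(item_scores) < 2 or len(item_scores[0]) < 2:
        raise ValueError("need at least two respondents and two items")
    k = len(item_scores[0])
    columns = list(zip(*item_scores))  # one tuple per item
    item_var_sum = sum(variance(col) for col in columns)
    total_var = variance([sum(row) for row in item_scores])
    return k / (k - 1) * (1 - item_var_sum / total_var)
```

Applied separately to the pragmatic and the hedonic items of each gesture type, a value above 0.7 would correspond to the “acceptable” threshold reported above.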

Statistical analyses were then performed on the UEQ-S results. Each analysis began with a Shapiro–Wilk test for normality, after which parametric or non-parametric tests were applied depending on whether the within-subject data met the normality assumption (see “Phase #3: Analysis” in Figure 2). For post hoc tests, Bonferroni correction was applied to the p-values to control the familywise error rate across the multiple comparisons. Furthermore, to examine whether the participants’ preferences for gesture types differed between the TV and XR environments, we conducted both quantitative and qualitative analyses using the UEQ-S scores and the rank-order data, in which the participants ranked the three gesture types by preference in each environment.
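The normality-gated choice of omnibus test can be sketched with NumPy and SciPy. This is an assumption-laden illustration: it checks each condition column with `scipy.stats.shapiro` (the paper does not say whether normality was tested per condition or on residuals), falls back to `scipy.stats.friedmanchisquare`, and otherwise runs a hand-written one-way repeated-measures ANOVA with no sphericity correction. The study reports using SciPy and Statsmodels; the helpers below are stand-ins, not its code.

```python
import numpy as np
from scipy import stats


def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA on an (n_subjects, k_conditions) array."""
    data = np.asarray(data, dtype=float)
    n, k = data.shape
    grand = data.mean()
    ss_cond = n * np.sum((data.mean(axis=0) - grand) ** 2)
    ss_subj = k * np.sum((data.mean(axis=1) - grand) ** 2)
    ss_total = np.sum((data - grand) ** 2)
    ss_error = ss_total - ss_cond - ss_subj  # condition x subject residual
    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    f_value = (ss_cond / df_cond) / (ss_error / df_error)
    p_value = stats.f.sf(f_value, df_cond, df_error)
    return f_value, (df_cond, df_error), p_value


def omnibus_test(data, alpha=0.05):
    """Shapiro-Wilk per condition; RM-ANOVA if all pass, else Friedman."""
    data = np.asarray(data, dtype=float)
    all_normal = all(stats.shapiro(column)[1] > alpha for column in data.T)
    if all_normal:
        f_value, dfs, p_value = rm_anova_oneway(data)
        return "rm-anova", f_value, dfs, p_value
    chi2, p_value = stats.friedmanchisquare(*data.T)  # one sample per gesture
    return "friedman", chi2, (data.shape[1] - 1,), p_value
```

With 30 participants and three gesture types, the ANOVA degrees of freedom are (2, 58), which matches the F(2, 58) values reported for the XR environment.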

To further examine the relationship between the gesture execution time (GET) for each gesture type (see Section 3.4.1) and the user experience measured by the UEQ-S scores, an additional correlation analysis was conducted. After testing for normality, either Pearson’s or Spearman’s correlation was applied to assess the relationship between the two variables. In addition, Ordinary Least Squares (OLS) regression was performed to identify potential linear associations between gesture efficiency and user satisfaction more clearly. All statistical analyses were executed in Python (v.3.13) using the SciPy (v.1.15.2) and Statsmodels (v.0.14.4) libraries, enabling a comprehensive evaluation of differences in the user experience metrics across gesture types and environments.
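A sketch of the Pearson-or-Spearman decision, assuming both variables are tested with Shapiro–Wilk at α = 0.05 and Pearson is used only when both pass (the paper does not give its exact rule). It relies only on the standard `scipy.stats` functions `shapiro`, `pearsonr`, and `spearmanr`; the wrapper name is hypothetical.

```python
from scipy import stats


def correlate_get_with_ueq(get_seconds, ueq_scores, alpha=0.05):
    """Pearson if both variables look normal, otherwise Spearman.

    Returns (method, coefficient, p_value).
    """
    _, p_get = stats.shapiro(get_seconds)
    _, p_ueq = stats.shapiro(ueq_scores)
    if p_get > alpha and p_ueq > alpha:
        r, p = stats.pearsonr(get_seconds, ueq_scores)
        return "pearson", r, p
    rho, p = stats.spearmanr(get_seconds, ueq_scores)  # rank-based fallback
    return "spearman", rho, p
```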

For the interview analysis, open coding was applied to the documents containing the interview transcripts. Open coding is a process in which the research team repeatedly reviews the qualitative data to identify the implicit categories embedded within it. The dispersed open-coded categories are then organized analytically to identify and group the core messages from individual participants and from the collective feedback. Through this process, participant comments that represent the key messages are selected.

4. Results

4.1. The Evaluation of the Gesture Types in the TV Environment

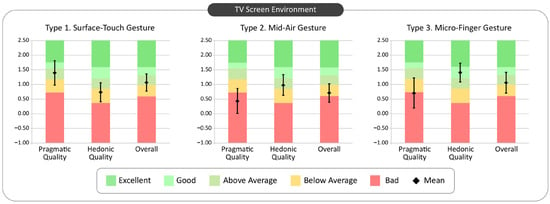

In the TV environment, the UEQ-S evaluation of the three gesture types revealed the following (see Figure 7 and Table 1). The Surface-Touch gesture was rated highest in pragmatic quality (“Above Average”) but lowest in hedonic quality (“Below Average”); nonetheless, it attained the highest overall satisfaction score (1.07, “Above Average”). The mid-air gesture was rated lowest in pragmatic quality (“Bad”) and also had a low average hedonic quality (0.70, “Below Average”), resulting in the lowest overall satisfaction score of the three gesture types. In contrast, the micro finger-touch gesture received a low pragmatic quality rating (“Bad”) but the highest hedonic quality rating (“Good”), and it achieved the second-highest overall satisfaction score (1.05, “Above Average”).

Figure 7.

A graph of the UEQ-S results for the three gesture types in the TV environment.

Table 1.

Statistics of the UEQ-S results for the three gesture types in the TV environment.

Also, we conducted statistical analyses to examine the differences among the three gesture types within the TV environment (Table 2). First, the Shapiro–Wilk test results indicated that none of the three UEQ-S scales met the assumption of normality for the TV conditions (all p-values < 0.05). Accordingly, the non-parametric Friedman test was applied. The results revealed a statistically significant difference among the three gesture types in their pragmatic quality (χ²(2) = 9.89, p = 0.0071) and hedonic quality (χ²(2) = 10.16, p = 0.0062). However, for overall satisfaction, the result was only marginally significant (χ²(2) = 4.78, p = 0.0918).

Table 2.

Statistical significance results between gesture types in the TV environment (the Friedman test and post hoc testing using the Wilcoxon signed-rank test with Bonferroni correction).

Post hoc analyses were then performed using the Wilcoxon signed-rank test with Bonferroni correction. Given the marginal significance of overall satisfaction, we also included it in the post hoc comparisons to examine potential differences among the gesture types. For pragmatic quality, a significant difference was found between the mid-air and Surface-Touch gestures (Mean_diff (Mid-Air, Surface) = −0.98, W = 90, corrected p = 0.01). For hedonic quality, significant differences were found between the micro finger and mid-air gestures (Mean_diff (Micro Finger, Mid-Air) = 0.42, W = 87, corrected p = 0.04) and between the micro finger and Surface-Touch gestures (Mean_diff (Micro Finger, Surface) = 0.67, W = 65.5, corrected p = 0.0086). Finally, for overall satisfaction, a significant difference was observed between the mid-air and Surface-Touch gestures (Mean_diff (Mid-Air, Surface) = −0.35, W = 100, corrected p = 0.033).
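The post hoc step (Wilcoxon signed-rank tests on every gesture pair with Bonferroni-adjusted p-values) might look like the following sketch. It assumes each gesture’s scores are listed in the same participant order. The helpers are hypothetical, while `scipy.stats.wilcoxon` is SciPy’s standard paired test. Mean_diff is computed as the first gesture minus the second, which is one plausible reading of the paper’s notation.

```python
from itertools import combinations
from statistics import fmean

from scipy import stats


def bonferroni(p_values):
    """Multiply each raw p-value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


def pairwise_wilcoxon(scores_by_gesture):
    """Wilcoxon signed-rank test on every gesture pair, Bonferroni-corrected.

    `scores_by_gesture` maps a gesture name to per-participant scores,
    listed in the same participant order for every gesture.
    Returns (gesture_a, gesture_b, mean_diff, W, corrected_p) tuples.
    """
    raw = []
    for a, b in combinations(scores_by_gesture, 2):
        xa, xb = scores_by_gesture[a], scores_by_gesture[b]
        mean_diff = fmean(x - y for x, y in zip(xa, xb))  # a minus b
        w, p = stats.wilcoxon(xa, xb)
        raw.append((a, b, mean_diff, w, p))
    corrected = bonferroni([row[-1] for row in raw])
    return [row[:-1] + (p_adj,) for row, p_adj in zip(raw, corrected)]
```

With three gestures there are three pairs, so each raw p-value is multiplied by 3.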

These results suggest that the Surface-Touch gesture is perceived as the most practical gesture type but lacks enjoyment. In contrast, the micro finger-touch gesture received relatively negative evaluations for practicality but was perceived as more enjoyable to use. In terms of overall satisfaction, the mid-air gesture was evaluated most negatively in the TV environment, while the Surface-Touch and micro finger-touch gestures were rated more positively. Notably, these two gestures received the highest evaluations in pragmatic quality and hedonic quality, respectively, which appears to have contributed to their higher overall satisfaction scores.

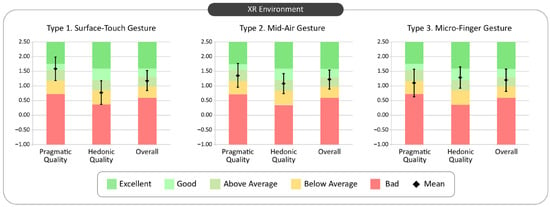

4.2. The Evaluation of the Gesture Types in the XR Environment

For the XR environment (see Figure 8 and Table 3), the evaluation results show similar yet distinct trends. The Surface-Touch gesture again ranked highest in pragmatic quality (“Good”) and lowest in hedonic quality (“Below Average”). Interestingly, although this gesture type received the highest overall satisfaction in the TV environment, it ranked lowest of the three gesture types on the XR platform, with an overall satisfaction score of 1.17 (“Above Average”). The mid-air gesture, on the other hand, was rated notably higher in this context, obtaining the second-highest pragmatic quality rating (“Above Average”) and a moderate hedonic quality rating (“Above Average”). Remarkably, despite its negative ratings in the TV environment, this gesture type received the highest overall satisfaction score (1.22, “Above Average”) in the XR environment. The micro finger-touch gesture exhibited patterns similar to those in the TV environment: it was rated lowest in pragmatic quality (“Below Average”) but highest in hedonic quality (“Good”), achieving the second-highest overall satisfaction score (1.20, “Above Average”).

Figure 8.

A graph of the UEQ-S results for the three gesture types in the XR environment.

Table 3.

Statistics of the UEQ-S results for the three gesture types in the XR environment.

Subsequently, a statistical analysis was conducted to examine the differences among the three gesture types within the XR environment (Table 4). First, the normality tests showed that pragmatic quality did not meet the assumption of normality; therefore, a non-parametric Friedman test was conducted. For hedonic quality and overall satisfaction, the data satisfied the normality assumption, and thus a repeated-measures ANOVA was applied. The Friedman test for pragmatic quality revealed no significant differences among the three gesture types (χ²(2) = 1.94, p = 0.379). In contrast, the ANOVA for hedonic quality indicated a statistically significant difference (F(2, 58) = 4.45, p = 0.016). However, no significant difference was observed in overall satisfaction (F(2, 58) = 0.04, p = 0.96).

Table 4.

Statistical significance results between gesture types in the XR environment (Friedman test/repeated-measures ANOVA and post hoc pairwise t-tests with Bonferroni correction).

Given the lack of significance for pragmatic quality and overall satisfaction, post hoc comparisons were performed only for hedonic quality, using pairwise t-tests with Bonferroni correction. The results showed a significant difference between the micro finger and Surface-Touch gestures (Mean_diff (Micro Finger, Surface) = 0.52, t = 2.62, corrected p = 0.0138).

Apart from hedonic quality, the analysis revealed no statistically significant differences among the three gesture types in the XR environment. Nevertheless, consistent with the findings in the TV environment, the Surface-Touch gesture was perceived as relatively less engaging, while the micro finger-touch gesture was considered the most enjoyable. Interestingly, although the difference was not statistically significant, the mid-air gesture, which received the most negative evaluations in the TV environment, showed the highest mean overall satisfaction score in the XR environment. Conversely, the Surface-Touch gesture, which had received the most favorable evaluations in the TV environment, recorded the lowest mean score in the XR environment. These findings suggest that the nature of the XR environment, which allows gestures to be executed without the need for physical devices, may have increased the perceived utility of mid-air gestures relative to Surface-Touch gestures, potentially enhancing users’ sense of immersion and freedom.

4.3. Comparative Analysis of the Results Between the TV and XR Environments

As the next step, a comparative analysis of the TV and XR environments was conducted. To compare the two environments in terms of pragmatic quality, hedonic quality, and overall satisfaction, normality tests were first performed. Depending on the outcome, either a paired t-test (for normally distributed data) or a Wilcoxon signed-rank test (for non-normal data) was applied.
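For a two-environment comparison, a paired t-test assumes that the within-participant differences are normal, so the sketch below runs Shapiro–Wilk on the TV − XR differences rather than on each environment separately. That choice is an assumption, since the paper only states that normality tests were performed first, and the function name is hypothetical. Computing differences as TV minus XR yields negative statistics when XR scores are higher, which would be consistent with the signs of the reported t(29) values.

```python
from scipy import stats


def compare_environments(tv_scores, xr_scores, alpha=0.05):
    """Paired t-test if TV - XR differences look normal, else Wilcoxon.

    Scores must be in the same participant order for both environments.
    Returns (method, statistic, degrees_of_freedom_or_None, p_value).
    """
    diffs = [tv - xr for tv, xr in zip(tv_scores, xr_scores)]
    _, p_norm = stats.shapiro(diffs)
    if p_norm > alpha:
        t, p = stats.ttest_rel(tv_scores, xr_scores)
        return "paired t", t, len(diffs) - 1, p
    w, p = stats.wilcoxon(tv_scores, xr_scores)
    return "wilcoxon", w, None, p
```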

The analysis revealed significant differences between the two environments in pragmatic quality and overall satisfaction. Specifically, pragmatic quality did not meet the assumption of normality, and thus the Wilcoxon signed-rank test was used, which showed a statistically significant difference between the two environments (W = 54.50, p = 0.0007). For overall satisfaction, the data met the assumption of normality; the paired t-test also revealed a significant difference (t(29) = −3.41, p = 0.0019). In contrast, no significant difference was found in hedonic quality between the environments (t(29) = −0.16, p = 0.876). Based on these findings, gesture-level comparisons between the two environments (Table 5) were analyzed further for the dimensions that showed significant differences: pragmatic quality and overall satisfaction.

Table 5.

Results of the gesture-level comparative analysis between the TV and XR environments for pragmatic quality and overall satisfaction.

The analysis revealed that the mid-air gesture was the only gesture type with a statistically significant difference between the two environments. Specifically, the mid-air gesture received significantly higher pragmatic quality ratings in the XR environment than in the TV environment (t(29) = −4.275, p = 0.0002), and its mean hedonic quality score was also higher in XR. Consequently, its overall satisfaction improved substantially in the XR environment compared with the TV environment (t(29) = −3.768, p = 0.0007).

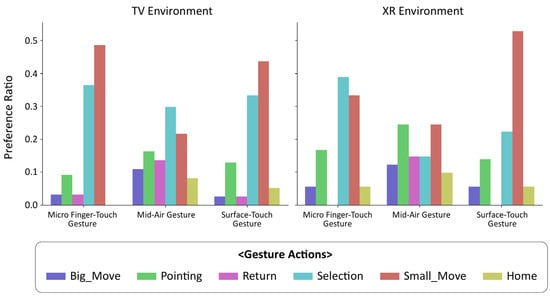

In summary, the UEQ-S analysis indicated that a significant difference between the TV and XR environments was observed only for the mid-air gesture. To investigate these environment-dependent differences further, we analyzed the participants’ rank order preferences for the gesture types across the two environments. After experiencing all gestures in both environments, the participants were asked to rank the gesture types in order of preference. The results of this ranking task are presented in Figure 9.

Figure 9.

Ranking results for preferred gesture types in TV/XR environments. The gesture type rated highest in each environment is highlighted with a blue rectangular outline, while the gesture type rated lowest is highlighted with a red rectangular outline.

In the TV environment, the Surface-Touch gesture was most frequently selected as either the first or second preference (27 times), whereas the mid-air gesture was the least selected in the first or second positions (14 times) and was most frequently ranked third (16 times). In contrast, in the XR environment, the mid-air gesture received the highest number of first and second rankings (25 times), while the Surface-Touch gesture was least frequently selected in the top two positions (16 times) and most frequently ranked third (14 times).

To provide a more intuitive comparison, a weighted ranking score was calculated by assigning three points to first place, two points to second place, and one point to third place, and the totals were converted to a 100-point scale. The micro finger-touch gesture scored identically in both environments (64.4 points). The mid-air gesture scored 55.6 points in the TV environment and 78.9 points in the XR environment. Finally, the Surface-Touch gesture received the highest score in the TV environment (80 points) but the lowest score in the XR environment (56.7 points). Notably, in each environment the rank order of these scores matched that of the mean overall satisfaction scores from the UEQ-S, indicating that the participants’ gesture preferences varied substantially depending on the environment.
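The paper does not state how the weighted totals were converted to the 100-point scale. One reading that reproduces the reported values, assuming 30 participants (consistent with the t(29) degrees of freedom), divides each gesture’s points by the maximum possible (3 × 30 = 90): for example, 72 of 90 points gives 80. Under this reading, the three scores within an environment must sum to 200, which holds for both TV (80 + 64.4 + 55.6) and XR (78.9 + 64.4 + 56.7). The function below is a hypothetical sketch of that calculation.

```python
def weighted_rank_score(first: int, second: int, third: int) -> float:
    """3/2/1 points for 1st/2nd/3rd place, as a percentage of the maximum.

    Assumes every participant ranks every gesture exactly once, so the
    number of participants is first + second + third.
    """
    n = first + second + third
    if n == 0:
        raise ValueError("no rankings supplied")
    points = 3 * first + 2 * second + 1 * third
    return 100 * points / (3 * n)  # maximum is 3 points per participant
```

Because every gesture earns at least one point per participant, the lowest attainable score on this scale is 33.3 rather than 0.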

4.4. The Evaluation Results for Specific Gesture Actions by Type

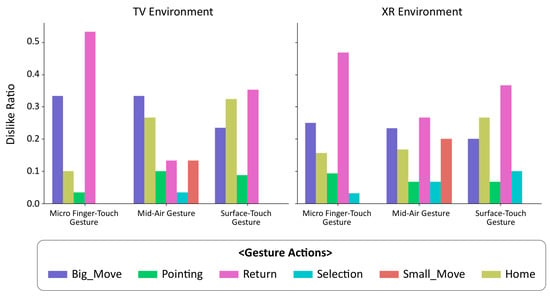

The participants evaluated the specific actions within each gesture type and were asked to identify actions they perceived as natural and those they found uncomfortable. This approach enabled an analysis of the strengths and weaknesses of each gesture type; the results are illustrated in Figures 10 and 11.

Figure 10.

Gestures positively evaluated by the participants in TV/XR environments for each of the three gesture types.

Figure 11.

Gestures negatively evaluated by the participants in the TV/XR environments for each of the three gesture types.

The participants predominantly reported that the small movement and selection gestures were the most natural, describing them as intuitive, simple, and easy to use. Examples include briefly moving a finger to shift the selection area and performing basic selection taps on a surface or with the index finger/thumb. These actions appeared intuitive to the participants largely because they resemble interactions already familiar from everyday smart-device use. Notably, the pointing gesture in the mid-air category received more positive evaluations in the XR environment than in the TV environment. This favorable response is likely attributable to the mixed-reality setting, in which the spatial user interface panels and card components are presented directly in front of users, making fingertip pointing substantially more intuitive and natural than similar gestures directed toward a distant TV screen.

Conversely, as illustrated in Figure 11, most participants reported that the large movement, return/cancel, and back-to-home gestures were particularly awkward or uncomfortable. These gestures either deviated considerably from previously embodied interaction patterns (e.g., the back-to-home gesture in the Surface-Touch type, performed by swiping all fingers across a surface) or were difficult to distinguish cognitively from more convenient gestures (e.g., large movement gestures versus small movement gestures). Consequently, these gestures may impose additional cognitive demands, requiring users to learn new patterns and increasing the mental effort needed to recall specific gesture mappings while interacting with the interfaces.

4.5. The Evaluation Results for Gesture Execution Times: An Analysis of the Correlation with the UEQ-S Scores

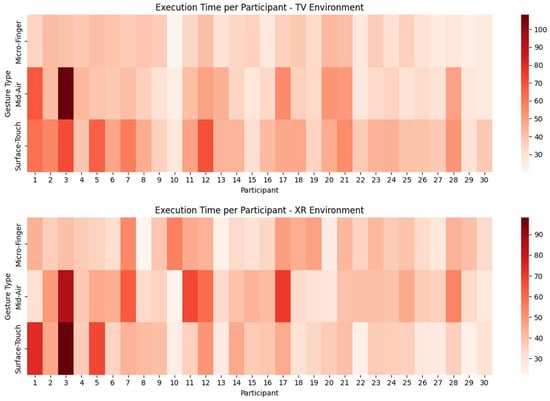

To further investigate how gesture performance time influenced the user experience in different environments, we analyzed the gesture execution time (GET) of the hand gestures and its correlation with the UEQ-S scores. The primary objective was to examine whether temporal efficiency in gesture execution influenced users’ satisfaction and usability perceptions across environments and gesture types. First, the GET data were collected according to the rules established in Section 3.4.1 (Figure 12).

Figure 12.

Heat map of the measured gesture execution time for each gesture type.

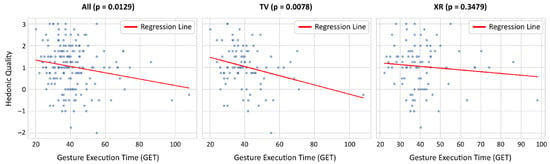

The correlation analysis between GET and the UEQ-S scores revealed a marginally significant negative correlation with pragmatic quality (Spearman’s ρ = −0.135, p = 0.070) and statistically significant negative correlations with hedonic quality (Spearman’s ρ = −0.151, p = 0.044) and overall satisfaction (Spearman’s ρ = −0.176, p = 0.018). These weak correlations suggest that longer execution times are associated with slightly lower overall satisfaction and a slightly weaker emotional experience of gesture interactions.

To clarify further the extent to which GET influenced the UEQ-S outcomes (pragmatic quality, hedonic quality, and overall satisfaction) and to explore potential differences across environments, multiple Ordinary Least Squares (OLS) regression analyses were conducted on the combined dataset (TV + XR) and on the TV and XR subsets individually (Table 6).
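With GET as the single predictor, an OLS fit per subset can be sketched with `scipy.stats.linregress`, which returns the same slope, intercept, and two-sided slope p-value as a one-predictor Statsmodels model such as `sm.OLS(y, sm.add_constant(x)).fit()`. The record layout `(environment, get_seconds, ueq_score)` and the function name are assumptions for illustration.

```python
from scipy import stats


def get_ols_by_environment(records):
    """Simple OLS of one UEQ-S score on GET for combined, TV, and XR subsets.

    `records` is a list of (environment, get_seconds, ueq_score) tuples.
    """
    subsets = {
        "TV + XR": records,
        "TV": [r for r in records if r[0] == "TV"],
        "XR": [r for r in records if r[0] == "XR"],
    }
    results = {}
    for name, rows in subsets.items():
        x = [r[1] for r in rows]
        y = [r[2] for r in rows]
        fit = stats.linregress(x, y)
        results[name] = {
            "slope": fit.slope,  # change in UEQ-S score per second of GET
            "intercept": fit.intercept,
            "r_squared": fit.rvalue ** 2,  # explanatory power of the model
            "p_slope": fit.pvalue,  # two-sided test of slope = 0
        }
    return results
```

Running it once per UEQ-S outcome (pragmatic, hedonic, overall) would give the nine models summarized in Table 6.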

Table 6.

The results of OLS regression analyses assessing the effect of gesture execution time on the UEQ-S scores across different environments.

The regression results indicated that GET had no statistically significant effect on most UEQ-S outcomes, and the explanatory power of the models was generally low. However, GET had a significant effect on hedonic quality in both the combined dataset and the TV environment, but not in the XR environment (Figure 13). These findings suggest that in the TV environment, longer execution times may reduce the enjoyment of gesture interactions, whereas in the XR environment, gesture duration appears to have little or no influence on participants’ gesture experiences.

Figure 13.

Scatter plots with red linear regression lines evaluating the relationship between gesture execution time and hedonic quality among the UEQ-S scores.

The findings suggest a subtle negative influence of GET on the participants’ interaction satisfaction within the TV environment, although the strength of this relationship is limited. Conversely, no statistically significant relationship between GET and interaction satisfaction emerged within the XR environment. These outcomes may be interpreted from several perspectives. First, the participants may have perceived gesture interactions as inherently more intuitive and natural in XR than on TV, leading them to prioritize intuitiveness and fluidity over the duration of gesture execution. In other words, this intuitiveness may have allowed the participants to focus less exclusively on executing the gestures and to spend more time naturally exploring content or concurrently performing other activities, such as interacting with the surrounding virtual environment [70]. On this interpretation, immersive qualities and natural flow may outweigh efficiency in XR.

Second, the participants’ established mental models from prior experience with remote controls might have led them to expect quicker and simpler gestures in the TV environment, where interaction conventionally takes place at a distance through a handheld remote [71]. This interpretation suggests that task efficiency plays a greater role in shaping user experiences on the TV platform. This distinction underscores the context-dependent nature of gestural interaction usability and the importance of platform-specific UX considerations in interface design.

5. Discussions

This study aimed to comprehensively understand the participants’ gesture interaction experiences and to derive meaningful insights by utilizing diverse data collection methods and analytical techniques. Synthesizing the quantitative data analysis with the qualitative participant feedback from surveys and post-interviews revealed specific gesture-type preferences under particular environmental conditions. These patterns underscore the importance of context-specific gesture interactions, suggesting that the same gestures may be experienced differently depending on environmental factors [72]. The outcomes of this study not only highlight the factors influencing user satisfaction and interaction quality but also suggest directions for future research. Building on these insights, future studies could apply deeper analytical approaches and explore user-friendly scenarios that rely mainly on hand gestures for TV and XR environments [73].

Building on these possibilities, this study organized and analyzed post-interviews with the participants who had just finished all gesture-based interaction tasks (three gesture types in each of the TV and XR environments, i.e., six conditions). Through this interview-based analysis, we sought to uncover the rationale behind user behaviors and preferences and to extract in-depth insights that may inform future gesture-based interaction systems.

5.1. [Objective #1] Does a Difference Arise in the Participants’ Gesture Interaction Experiences Between the TV and XR Environments?

The first objective of this study was to examine differences in gesture interaction experiences between the TV and XR environments. To this end, we designed multiple experimental scenarios and platform-specific environments and conducted experiments in which participants interacted through gestures in different contexts. The participants responded differently (Table 1 and Table 3) and gave diverse feedback when performing gesture interactions across the environmental settings (see “Related Interviews” in Table 7). These differences suggest that a single, universal design solution for gesture interactions is unlikely to be sufficient. Instead, they highlight the need for a user-centered approach to designing gesture interactions tailored to each environment, one that carefully considers the users’ device contexts and interface styles.

Table 7.

Analysis results and related interview answers for Objective #1.

Consequently, the environment-specific variations identified in this study emphasize the importance of experience-based design, since technical considerations alone cannot sufficiently guide effective user interface development. Future research should further examine user behaviors and preferences across varied interaction environments, using the resulting insights to systematically design and validate gestures that align with users’ intuitive expectations and enhance their overall satisfaction.

5.2. [Objective #2] Do Users Have Preferred Gesture Types Depending on TV and XR Environments?

Based on the UEQ-S-derived UX scores, notable differences among gesture types were evident mainly for the mid-air gesture (Table 5). However, significant variations emerged in the participants’ rank-order preferences for gesture types in each environmental context (Figure 9). Specifically, the participants most preferred the Surface-Touch gesture in the TV environment, whereas the mid-air gesture was most preferred in the XR environment. Conversely, the mid-air gesture received the lowest evaluations in the TV environment, and the Surface-Touch gesture was the least favored in the XR environment.

These contrasts were also reflected in the qualitative insights gathered from the participant interviews (see “Related Interviews” in Table 8). For instance, participant [P06] commented, “There seems to be a substantial spatial difference between TV and XR environments, influencing gesture preferences. For TV environments, comfortable gestures for a lean-back situation are preferable, whereas in XR environments, gestures enabling more active selection and manipulation of virtual objects are relatively preferred.” Participant [P15] similarly noted that the flat, two-dimensional nature of the TV environment naturally aligns with the Surface-Touch gesture, whereas gestures that draw on a three-dimensional spatial sense, such as the mid-air gesture, felt more intuitive and fitting for the XR environment because of its inherent spatial characteristics.

Table 8.

Analysis results and related interview answers for Objective #2.

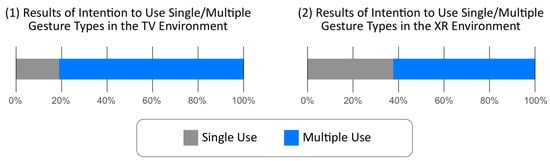

Additionally, the participants showed a clear inclination towards using multiple gesture types, depending on the context, rather than a single type exclusively (Figure 14). Specifically, most participants preferred a mix of gesture types in both environments: over 80% in the TV environment and more than 60% in the XR environment. These findings suggest that users do not rigidly adhere to one gesture type but instead adaptively combine multiple types according to their physical comfort and contextual needs.

Figure 14.

Participants’ choices between single and mixed use of gesture types in the TV and XR environments.

These findings suggest the following considerations for gesture interface design and future UX research:

- Environment-specific gesture design is crucial. Developing gestures optimized for each environmental context is likely to enhance user experiences substantially.

- Consideration of spatial awareness is necessary. Gesture designs must thoughtfully incorporate the characteristics of the interaction space, effectively utilizing either two-dimensional or three-dimensional elements according to the specific environmental setting.

- UX research should examine both categorical preferences and usage behavior, including combinations and context-based adaptations. These insights should be reflected in gesture interface development to support real-world usage patterns more comprehensively.
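To make the first and third considerations more concrete, the following is a minimal, hypothetical sketch of how an adaptive smart-ring controller might encode them. It assumes that the active context (TV or XR) is already known to the system and that all gesture modalities can remain enabled at the same time; the names `Gesture`, `Context`, `PREFERENCE_ORDER`, `GestureProfile`, and `build_profile` are illustrative and are not part of the study's apparatus. The per-context ordering mirrors the rank-order preferences reported for Figure 9, with micro finger-touch placed second in both contexts by elimination.

```python
from dataclasses import dataclass
from enum import Enum


class Gesture(Enum):
    SURFACE_TOUCH = "Surface-Touch"
    MID_AIR = "mid-air"
    MICRO_FINGER_TOUCH = "micro finger-touch"


class Context(Enum):
    TV = "2D lean-back TV"
    XR = "3D immersive XR"


# Hypothetical ranking per context, most preferred first. The first and last
# entries follow the reported rank-order preferences; micro finger-touch is
# placed second in both contexts by elimination (an assumption).
PREFERENCE_ORDER: dict[Context, tuple[Gesture, ...]] = {
    Context.TV: (Gesture.SURFACE_TOUCH, Gesture.MICRO_FINGER_TOUCH, Gesture.MID_AIR),
    Context.XR: (Gesture.MID_AIR, Gesture.MICRO_FINGER_TOUCH, Gesture.SURFACE_TOUCH),
}


@dataclass(frozen=True)
class GestureProfile:
    primary: Gesture              # modality promoted first, e.g., in onboarding hints
    enabled: tuple[Gesture, ...]  # every remaining modality stays active for mixed use


def build_profile(
    context: Context, opted_out: frozenset[Gesture] = frozenset()
) -> GestureProfile:
    """Order modalities for a context, dropping any the user has switched off."""
    enabled = tuple(g for g in PREFERENCE_ORDER[context] if g not in opted_out)
    if not enabled:
        raise ValueError("at least one gesture modality must remain enabled")
    return GestureProfile(primary=enabled[0], enabled=enabled)
```

For example, `build_profile(Context.XR, opted_out=frozenset({Gesture.MID_AIR}))` keeps micro finger-touch and Surface-Touch active and promotes micro finger-touch as the primary modality. Keeping every non-opted-out modality enabled, rather than locking each context to a single gesture, reflects the finding that most participants preferred mixed use.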

Future research should build on these insights through more extensive and rigorous user testing that validates the effectiveness of the proposed gestures, and should aim to develop gesture interfaces suited to diverse environmental contexts. We expect the outcomes of this study to provide useful guidance for detailed gesture interaction design in practical implementations.

5.3. [Objective #3] What Actions Are Preferred or Found Uncomfortable for Each Type of Gesture?

Overall, the gestures that participants evaluated positively mainly involved basic interactions, such as moving the selection area with finger movements or performing simple taps on a surface or with one’s fingers (Table 9). These actions were perceived as intuitive and natural because they resemble the touch-based interactions people already perform in daily life. In contrast, gestures such as tapping one’s palm or clenching one’s fist to return to the home screen, and shaking one’s finger or rubbing it on a surface to perform return or cancel actions, mostly received negative responses [74]. The participants found these gestures challenging, primarily because they deviated markedly from conventional interaction methods and had unclear mappings between actions and their intended functions (see “Related Interviews” in Table 9).

Table 9.

Analysis results and related interview answers for Objective #3.

In summary, gestures involving finger movements and taps analogous to conventional touch interactions were evaluated favorably, underscoring that familiar actions tend to be perceived as intuitive and natural. Conversely, unfamiliar gestures, such as palm tapping, fist clenching, and finger shaking, were evaluated negatively, suggesting that unclear action–function mappings can confuse users, particularly when gestures depart substantially from established interaction patterns.

5.4. Challenges and Opportunities in Designing Gesture Interfaces

The results of this study suggest that the effectiveness of gesture-based interactions is closely tied to users’ subjective experiences and perceived convenience. Designing gestures that are easy to perform and physically comfortable is essential for sustaining user acceptance and satisfaction. Accordingly, successful gesture interaction systems should be developed through a user-centered design approach that incorporates users’ contextual needs and individual preferences. To enhance the scalability and practical relevance of these findings, we outline the following directions for future research.

First, gesture-based interactions should be examined across a broader range of environments, as hand input techniques are increasingly integrated into diverse applications and situational conditions. While the present study focused on spatially confined scenarios, namely TV and XR settings in home-like environments, emerging use contexts demand broader consideration. For instance, interacting with spatial interfaces overlaid onto real-world environments through hand gestures and AI while wearing lightweight XR smart glasses in a public space is no longer a distant scenario but an emerging part of everyday experience [37,75,76]. Based on our findings, mid-air gestures, which were rated highly in XR contexts, may appear to be the most suitable option. However, prior research suggests that motion-based gestures are more susceptible to recognition errors and unintended activation than surface-based gestures during real-world use [77]. Furthermore, AR/VR users in public environments often experience social discomfort, as they tend to attract more looks and attention from bystanders than users of conventional devices such as laptops [78]. In this regard, subtler gesture types, such as micro finger-touch gestures, have been proposed as a more contextually appropriate modality, particularly in public or socially constrained environments [79]. Taken together, these findings underscore the need for gesture interaction design that accommodates a wide range of situational and environmental contexts. In particular, factors such as public visibility and mobility constraints must be addressed to ensure that gesture-based inputs remain usable, discreet, and reliable in everyday scenarios beyond controlled environments.

Second, designing gesture-based interaction systems requires nuanced consideration of cultural and behavioral diversity to support the development of gestures that are universally meaningful and acceptable. Because the current study involved only participants from South Korea, cultural variability was not taken into account. Nonetheless, achieving universality in gesture interactions requires designers to consider the diverse cultural backgrounds of target user groups during gesture development. Although gestures are a fundamental and ubiquitous aspect of human communication, prior research shows that their forms, meanings, and usage patterns vary considerably across cultures. Kita emphasizes that divergence in gesture interpretation is rooted in how each culture associates the physical forms of gestures with meaning, shaped by its specific ways of perceiving space, language, and social communicative norms [80]. Consequently, a gesture perceived as neutral or even positive in one cultural context may convey unintended or negative connotations in another.

To create gesture interfaces that are broadly accessible and socially appropriate across user populations, it is crucial to integrate cultural distinctions into both gesture design and interpretation frameworks. Wu et al. empirically support this perspective through a comparative analysis of gesture preferences in American and Chinese user groups [81]. Notably, even commonly recognized gestures such as numerical hand signs and a “throat-slitting” motion were interpreted differently depending on cultural background, reinforcing the importance of contextual sensitivity in gesture design. This evidence therefore advocates a culturally informed design approach to enable more universally applicable gesture-based interfaces.

Third, to further strengthen the potential for commercialization, future research should place greater emphasis on user diversity and explore gesture experiences over long-term use. The current study involved participants aged 20 to 45 who were relatively familiar with gesture design and XR-based interactions. To improve the generalizability of gesture design outcomes, however, it is necessary to include participants with limited prior exposure to specific digital environments or stimuli. In particular, expanding the age range beyond that typically covered in prior experiments would allow the examination of users who may be less experienced or who take longer to perceive or process information [82,83]. Such an expansion would capture a wider spectrum of technological proficiency, especially among users less experienced with gesture-based and spatial interactions.

In terms of long-term usage, although this study engaged participants for over an hour across the two platform conditions with the established gesture types, it did not address habitual or repeated use in daily life. As prior research on the significance of long-term use testing indicates [84,85], user perceptions, preferences, and fatigue may shift when gestures are used in real-life scenarios. Integrating long-term usage scenarios into future studies could therefore offer deeper insights into how the gesture interaction paradigm adapts, or fails to adapt, to the demands of real-world environments.

6. Conclusions

6.1. An Executive Summary

This study explores how users experience and evaluate gesture-based interactions across two distinct digital environments: a 2D lean-back TV interface and a 3D immersive XR interface. In a controlled user experiment, participants completed interaction tasks in each environment using three gesture types (Surface-Touch, mid-air, and micro finger-touch). By combining quantitative analysis with qualitative feedback from surveys and interviews, this study identified clear preferences for specific gesture types depending on the environmental context. The quantitative results showed that Surface-Touch gestures were most favored in the TV setting, reflecting their practicality, whereas mid-air gestures were preferred in XR, reflecting their spatial intuitiveness. These findings were reinforced by the qualitative insights, which emphasized how environmental factors, such as display distance, interaction scale, and spatial awareness, shape user perceptions of gesture usability and satisfaction.

In addition, this study examined how users perceived the intuitiveness of different gesture actions. Simple, touch-like movements were generally favored, whereas unfamiliar gestures (e.g., palm taps, full-hand swipes) were often deemed uncomfortable or unintuitive. These findings underscore key factors influencing user satisfaction and interaction quality and suggest practical directions for future research, such as deeper analytical approaches and gesture-based scenarios tailored to users’ needs across TV and XR environments. We hope that this study highlights the importance of context-aware gesture interaction design and contributes to future research on more natural and user-centered digital interfaces.

6.2. Limitations and Future Work

While this study provides meaningful insights into gesture-based interactions across TV and XR environments, several limitations must be acknowledged.

First, the limited sample size may constrain the generalizability of the findings. Although the experiment yielded meaningful insights into gesture-based interactions across two interface environments, the relatively small number of participants may not adequately represent the broader user population. In particular, this study did not account for heterogeneity among user groups, such as a wider age range, technical versus non-technical users, and differing cultural backgrounds. These factors may affect how well the results apply to real-world gesture interaction scenarios, particularly in spatial computing contexts. As discussed in Section 5.4, future research should include more demographically and culturally diverse participant groups to enhance the ecological validity and inclusivity of the results.