1. Introduction

Workplace safety in high-risk industries, particularly chemical production, is a critical concern because of complex operational procedures, hazardous materials, and strict regulatory compliance requirements. Despite well-established safety protocols, industrial accidents persist, often attributed to human error, poor hazard recognition, and delayed responses to critical situations. Traditional classroom-based training methods, while informative, lack interactivity and fail to account for real-time attention dynamics. These methods do not adequately measure cognitive engagement or hazard recognition, leading to misaligned focus and delayed emergency responses. The proposed gaze-directed XR training system addresses these gaps by dynamically adjusting feedback based on participants’ real-time visual attention, thereby improving procedural compliance and situational awareness [1]. More generally, virtual reality (VR) and other extended reality (XR) technologies have emerged as promising responses to these shortcomings, allowing operators to engage in hands-on training within immersive, simulated environments that replicate real-world risks without exposing them to actual danger.

While XR-based training offers an interactive and engaging experience, it often lacks mechanisms for real-time corrective feedback, which is essential for reinforcing safety compliance. Eye-tracking technology provides a potential solution by monitoring trainees’ gaze behavior, enabling instructors to assess whether critical safety elements (such as warning signals, control panels, and hazardous material zones) receive adequate visual attention. Previous studies suggest that integrating eye-tracking with VR enhances hazard detection and reduces cognitive overload by directing user focus to key risk factors [2]. In safety-critical fields like aviation and construction, such systems have been shown to improve hazard recognition and procedural adherence [3]. However, the chemical industry presents unique challenges, such as the need to process multiple dynamic variables and respond rapidly to evolving risks, making the effectiveness of eye-tracking-assisted training in this sector an area requiring further exploration [4]. Although XR technologies have shown potential in domains such as construction and aviation, these implementations rarely address the unique cognitive and procedural demands present in chemical manufacturing. Furthermore, eye-tracking has largely been used as a passive evaluation tool rather than as an active instructional mechanism. This study bridges that gap by proposing a gaze-responsive XR training framework specifically tailored for chemical plant scenarios.

Existing research on safety training in industrial settings largely emphasizes procedural learning but does not thoroughly investigate how visual attention influences compliance and emergency response effectiveness [5]. Most current training programs evaluate performance based on standardized tests and procedural checklists, which fail to capture the cognitive and behavioral aspects of hazard perception and real-time decision-making [6]. To fill this gap, there is a need for a structured framework to assess how gaze-directed feedback improves training outcomes and operational safety [7].

The motivation for this research stems from the high frequency of operator errors and the lack of adaptive training systems in chemical manufacturing environments. While XR tools offer immersion, they often fail to deliver timely feedback on operator focus. This study addresses this gap by developing a novel training protocol that uses real-time gaze tracking to enhance attention, decision-making, and procedural adherence. The research aims to evaluate the effectiveness of this approach through experimental validation, ultimately contributing to safer, more responsive training systems in high-risk sectors.

This study contributes to the advancement of industrial safety training by integrating real-time eye-tracking into extended reality (XR) environments specifically designed for chemical process operations. Unlike existing studies focused on general procedural training, our approach tailors gaze-based feedback to the visual demands and safety-critical sequences of chemical tasks, thus introducing a domain-specific application of XR and eye-tracking integration that remains underexplored in the literature. Specifically, it examines how gaze-directed training influences key performance metrics such as reaction time, accuracy, attention distribution, and error rates [8]. Additionally, it investigates whether the benefits of eye-tracking-assisted training differ between experienced and novice operators, offering insights into how adaptive training technologies can enhance workforce preparedness [9]. Another objective is to determine whether real-time gaze monitoring can improve safety protocol adherence and enhance emergency response efficiency in hazardous industrial environments [10].

While recent studies have explored the utility of XR in construction [11] and offshore environments [12], these contexts lack the procedural precision and risk conditions characteristic of chemical plants. Moreover, most studies utilize eye-tracking only as a passive assessment tool. In contrast, our study actively employs gaze feedback as an adaptive instructional mechanism, an approach that, to our knowledge, has not previously been demonstrated in chemical industry training.

Although prior studies have demonstrated the feasibility of virtual reality (VR) for safety training in high-risk engineering industries, many of these frameworks lack integrated biometric feedback mechanisms, limiting their capacity for real-time adaptation. For example, recent literature reviews highlight the effectiveness of VR in improving trainee engagement and procedural knowledge, yet they also emphasize a lack of dynamic feedback systems that adjust to users’ attention or stress levels during task execution [13]. In contrast, the current study contributes a novel training framework by integrating real-time eye-tracking into XR simulations, thereby enabling both performance monitoring and adaptive instructional feedback. This dual integration represents a significant advancement in gaze-guided safety training design for cognitively demanding chemical plant environments [14].

XR platforms have recently been used to simulate emergency evacuations in petrochemical facilities [15] and sterile room protocols in pharmaceutical plants [16]. However, these implementations typically lack real-time adaptive feedback and do not employ eye-tracking technology to assess or guide user attention during training.

To achieve these goals, a controlled experimental study was conducted using a VR-based safety training system integrated with eye-tracking technology. Participants were divided into two groups: the experimental group, which received real-time gaze feedback to adjust their focus and decision-making, and the control group, which completed XR training without eye-tracking assistance. Both groups performed simulated chemical handling tasks, with their performance evaluated across multiple criteria, including response speed, procedural accuracy, attention focus, and corrective action efficiency [17].

By systematically evaluating the impact of gaze-directed feedback, this study contributes to the growing field of intelligent safety training systems. The findings will provide empirical evidence on how integrating eye-tracking with XR enhances situational awareness, reduces errors, and improves compliance with safety protocols [18]. As industries continue to seek innovative training methods to minimize workplace hazards, XR combined with real-time eye-tracking technology represents a promising step toward the future of safety training [19].

This study aims to investigate whether real-time gaze tracking integrated within an XR training environment can enhance trainee performance on five key safety indicators: reaction time, task accuracy, attention focus, error rate, and response to corrective feedback. The study also compares performance between novice and experienced operators to evaluate differential effectiveness.

2. Materials and Methods

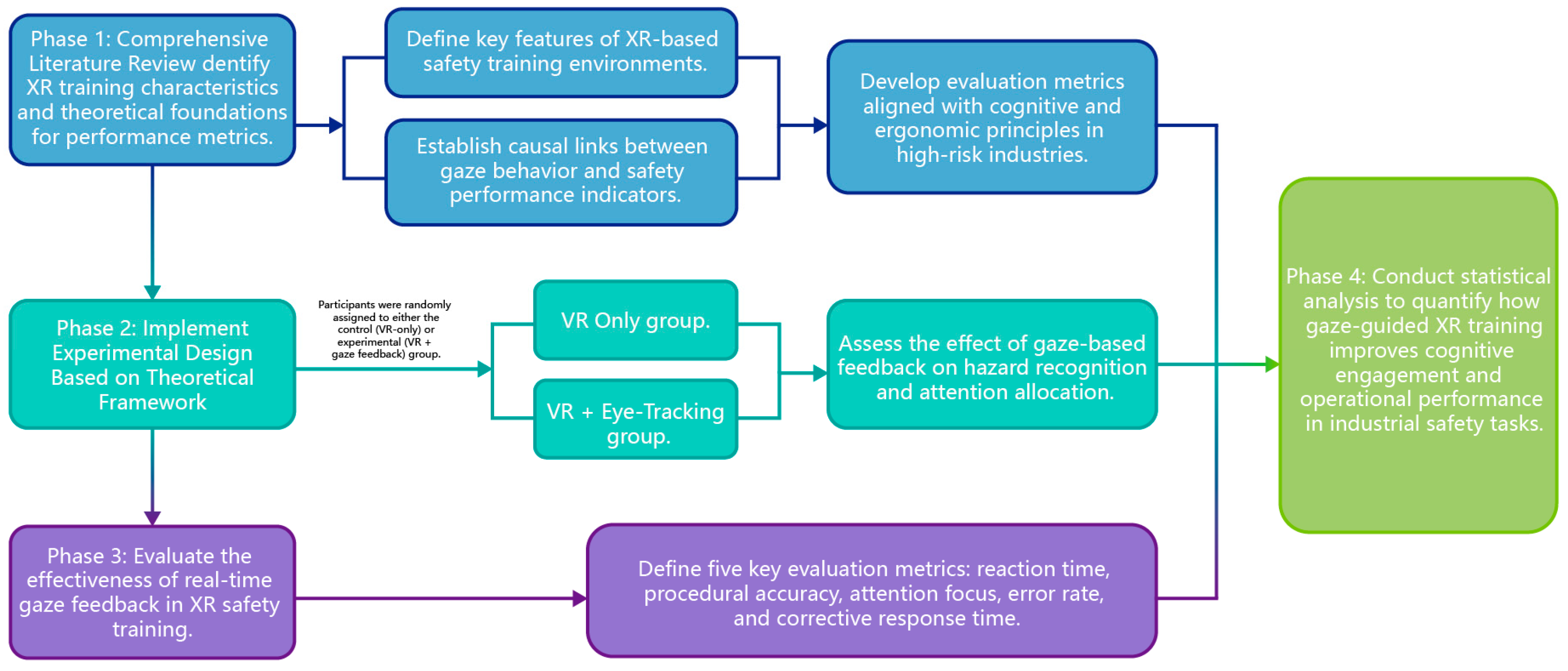

The overall structure of this study is illustrated in Figure 1, which presents a four-phase research framework: literature-guided metric selection, experimental design and grouping, performance evaluation, and statistical analysis. The study began with a comprehensive literature review to identify cognitive and ergonomic principles relevant to XR-based safety training and to establish causal links between eye-tracking and key performance indicators. Based on these findings, five core metrics were defined: reaction time, operational accuracy, attention focus, error count, and corrective response time. In the second phase, the experiment was implemented using a VR platform with and without real-time eye-tracking feedback, dividing participants into control and experimental groups. The third phase evaluated the impact of gaze-directed feedback on situational awareness and hazard response under simulated industrial conditions. In the final phase, statistical analyses were performed to determine how eye-tracking integration influenced safety performance, cognitive engagement, and operational efficiency. Each of these phases is reflected in both the structure of Figure 1 and the following methodological sections.

Subsequently, experimental validation was performed to assess the effectiveness of XR + eye-tracking training, using a controlled setup that simulated high-risk industrial safety scenarios. The following performance metrics were selected based on their relevance to the research goals:

Reaction time: Assesses how quickly participants respond to safety stimuli (e.g., alarms), which is critical in emergency situations.

Operational accuracy: Measures the correct execution of tasks, reflecting adherence to safety protocols.

Attention focus: Indicates how effectively participants maintain focus on safety-critical elements.

Error count: Tracks mistakes made during task execution, which directly correlates with safety performance.

Corrective response time: Measures the speed of adaptation to real-time gaze feedback, highlighting the efficiency of the feedback loop in improving task performance.

The gaze control system was integrated using the HTC SRanipal SDK (HTC Corporation, Taoyuan City, Taiwan, China), which provided continuous gaze data. These data were transmitted to the Unity-based simulation environment, where gaze behavior was mapped to predefined safety-critical areas. When participants’ gaze deviated from these areas, corrective feedback was triggered within the simulation. The feedback loop was designed to guide users back to critical elements, ensuring that their attention was directed towards essential tasks and hazards. This dynamic gaze-tracking feedback was implemented with Unity’s event handling system, which processed gaze data to trigger corrective actions during the training simulation.

The experimental design aimed to determine whether gaze-directed feedback enhances situational awareness, hazard recognition, and decision-making efficiency. The results obtained from the study were compared across different experience levels (experienced vs. inexperienced operators) to assess how training effectiveness varied between groups. This validation process provided empirical data to confirm the impact of visual attention monitoring on industrial safety training performance.

2.1. Establishing the Causal Relationship Between XR + Eye-Tracking and Performance Metrics

This study commenced with a comprehensive review and synthesis of the national and international literature, including scientific articles, empirical studies, and industry reports, to identify the defining characteristics of XR-based safety training and its interaction with eye-tracking technology.

The human cognitive system plays a critical role in situational awareness, decision-making, and safety compliance in high-risk industrial environments. Previous studies indicate that cognitive and ergonomic reference points such as reaction time, operational accuracy, attention focus, error count, and corrective response time serve as reliable indicators for assessing the effectiveness of XR-based safety training programs. These metrics correlate with specific cognitive and perceptual processes, ensuring a robust evaluation framework for hazard recognition and emergency response performance.

The selection of these performance metrics was refined to focus on their measurable impact on training outcomes, as illustrated in Figure 2. A detailed examination of these metrics follows, emphasizing their role in improving safety training effectiveness.

2.2. Empirical Support for Performance Metrics Selection

The selection of reaction time, operational accuracy, attention focus, error count, and corrective response time as performance indicators in XR-based safety training is grounded in empirical research highlighting their predictive reliability and relevance in high-risk industrial environments. Reaction time has been shown to improve significantly when trainees are exposed to immersive training environments, with eye-tracking feedback further accelerating response times by ensuring continuous visual engagement with critical safety indicators [20]. Faster reaction times in XR training have been attributed to reduced cognitive load, enhanced situational awareness, and immediate feedback on gaze deviations, all of which contribute to improved decision-making efficiency [21].

The role of operational accuracy as a performance metric is reinforced by research demonstrating that eye-tracking technology enhances procedural adherence in safety training by directing visual attention toward essential operational elements [22]. Studies confirm that trainees who receive real-time gaze feedback show significantly higher accuracy in executing complex industrial tasks, particularly those requiring strict compliance with safety protocols and hazard mitigation strategies [23]. These findings suggest that gaze-controlled interactions improve visual attention distribution, leading to reduced task execution errors and enhanced procedural compliance in XR-based simulations.

Attention focus, quantified through fixation duration and gaze stability, has been validated as a key predictor of hazard recognition and decision-making precision. Research has demonstrated that participants with stable fixation patterns on safety-critical elements exhibit higher success rates in identifying and mitigating potential risks [24]. Extended reality environments further enhance user engagement by optimizing visual field utilization, ensuring that trainees allocate appropriate attention resources to high-risk areas within the training scenario.

Error count is widely recognized as an essential metric for evaluating training effectiveness, with studies confirming that trainees in XR-based safety simulations make fewer errors when guided by real-time visual feedback. Eye-tracking technology enables precise analysis of error-prone behaviors by detecting gaze drift away from critical operational zones, thereby facilitating proactive corrective interventions [25]. These findings highlight the predictive value of eye-tracking data in identifying error-prone areas in safety training and optimizing instructional design to reduce cognitive overload and procedural mistakes [26].

Corrective response time has been validated through studies examining the impact of real-time gaze correction on user performance, with results indicating that eye-tracking-enabled feedback mechanisms significantly enhance reaction efficiency [27]. Research confirms that trainees receiving automated gaze-based guidance demonstrate faster adaptation to hazardous events, leading to quicker corrective actions and reduced risk exposure [28]. This aligns with findings that suggest visual attention cues play a crucial role in minimizing response delays in emergency scenarios, reinforcing the importance of corrective response time as a performance metric [29].

The integration of reaction time, operational accuracy, attention focus, error count, and corrective response time as core performance indicators in XR-based training is supported by longitudinal studies demonstrating the stability and predictive reliability of gaze-tracking methodologies [30]. Empirical evidence confirms that eye-tracking feedback enhances training effectiveness by reinforcing attention distribution, improving decision accuracy, and reducing operational errors in high-risk industrial environments [31]. The use of biometric-based gaze tracking enables continuous validation of cognitive engagement, ensuring that safety training protocols remain data-driven, adaptive, and aligned with industry-specific hazard conditions [32].

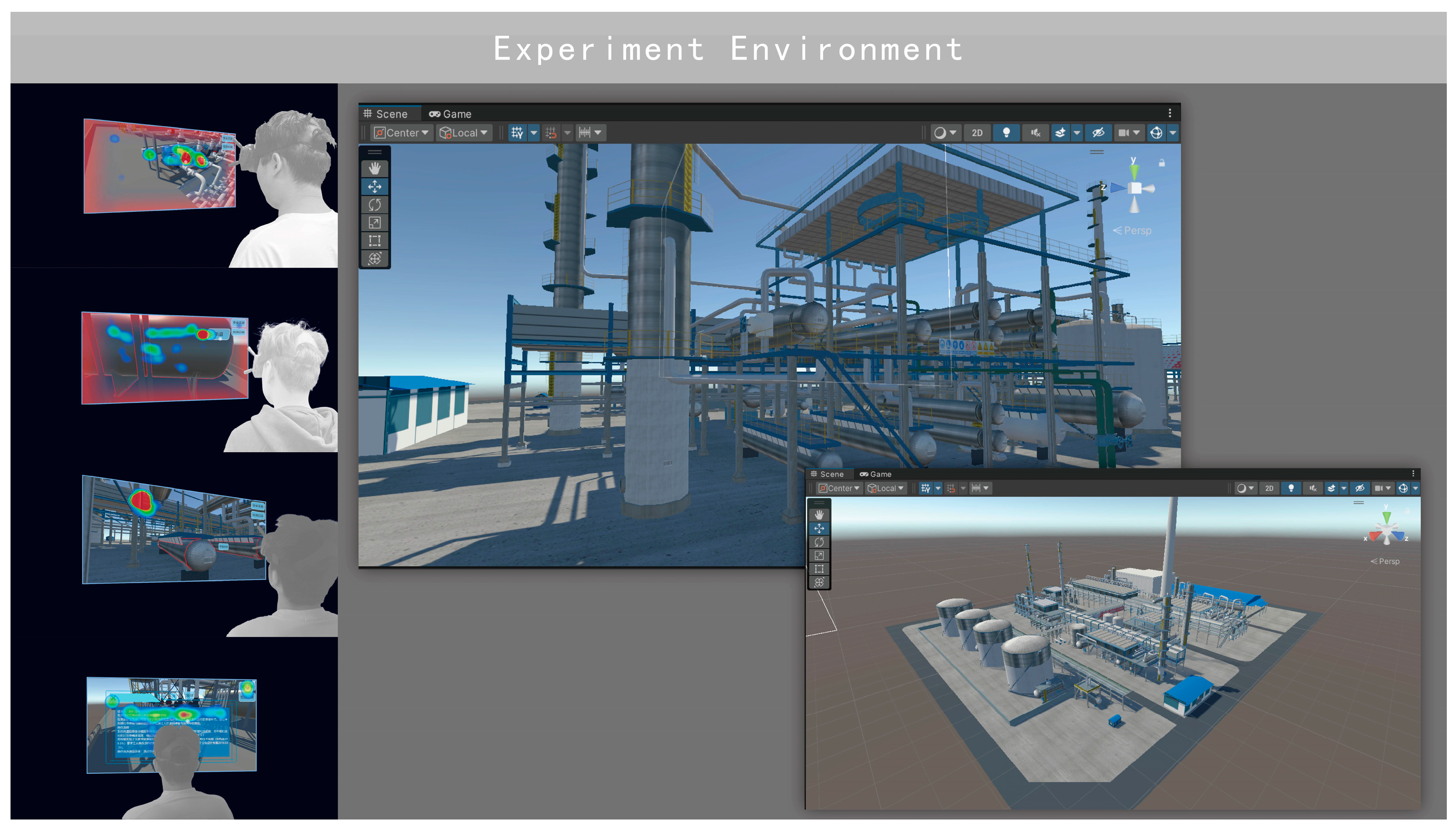

2.3. Experiment Environment

The experiment was conducted using a virtual reality (VR)-based chemical safety training platform designed to simulate real-world industrial operations with integrated eye-tracking technology. The system was developed using the HTC Vive Pro Eye VR headset (HTC Corporation, Taoyuan City, Taiwan, China), which provided an immersive and interactive training environment. The platform was designed to replicate critical operational tasks, including raw material inspection, material mixing, and anomaly handling, ensuring realistic training conditions for participants. The VR environment facilitated hands-on experience in high-risk chemical production scenarios, allowing for controlled exposure to potential hazards without real-world consequences.

2.3.1. Chemical Safety Training Simulation Platform

The chemical safety training simulation platform was composed of four interconnected subsystems: (1) the eye-tracking data acquisition module, (2) the data processing center, (3) the human–computer interaction (HCI) interface, and (4) the VR simulation system (Figure 3). These components worked in tandem to deliver real-time feedback to participants, monitor their visual attention, and analyze performance metrics related to safety protocol adherence. The wireless communication infrastructure ensured seamless data exchange between the modules, creating a cohesive and dynamic training system.

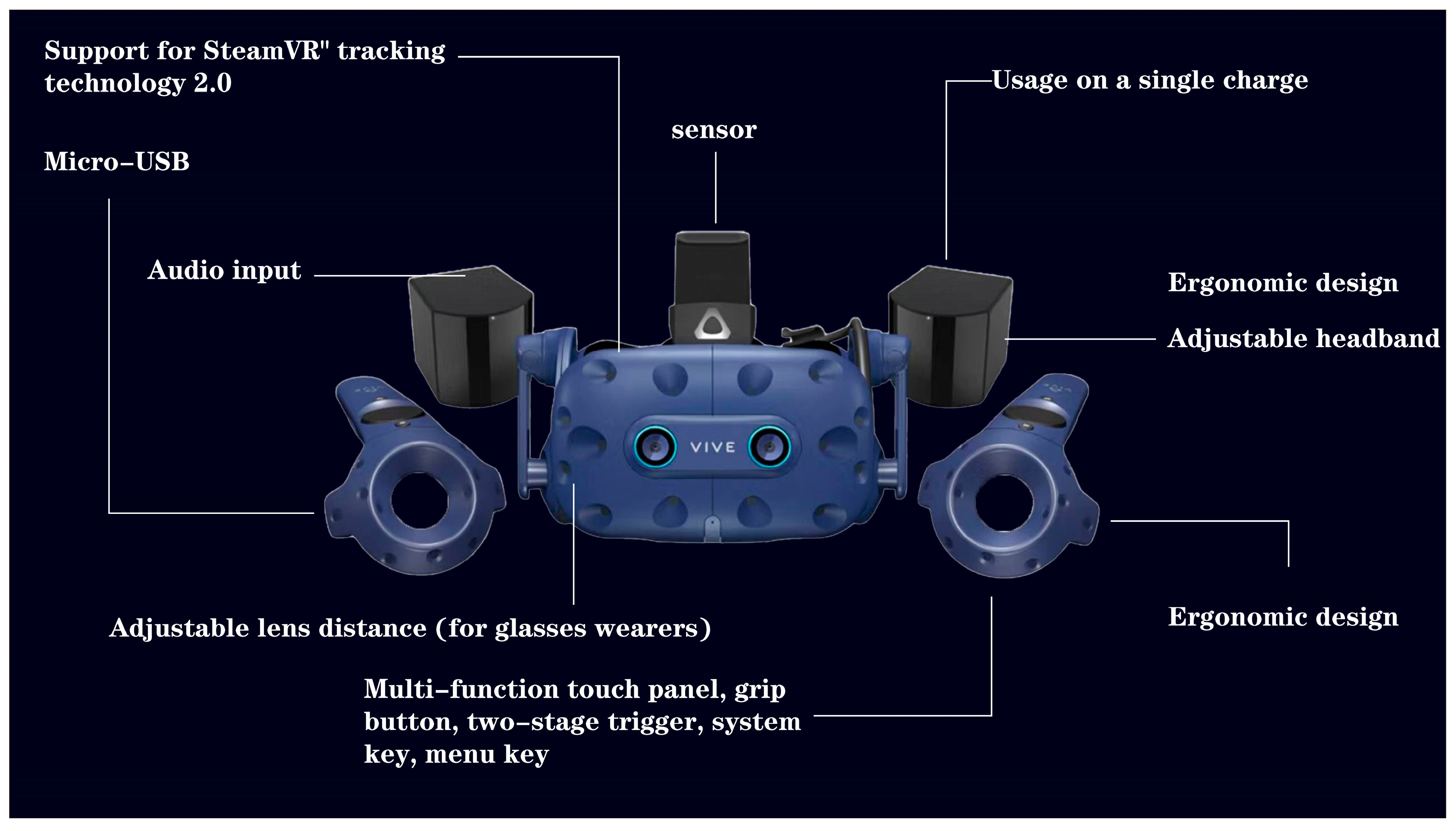

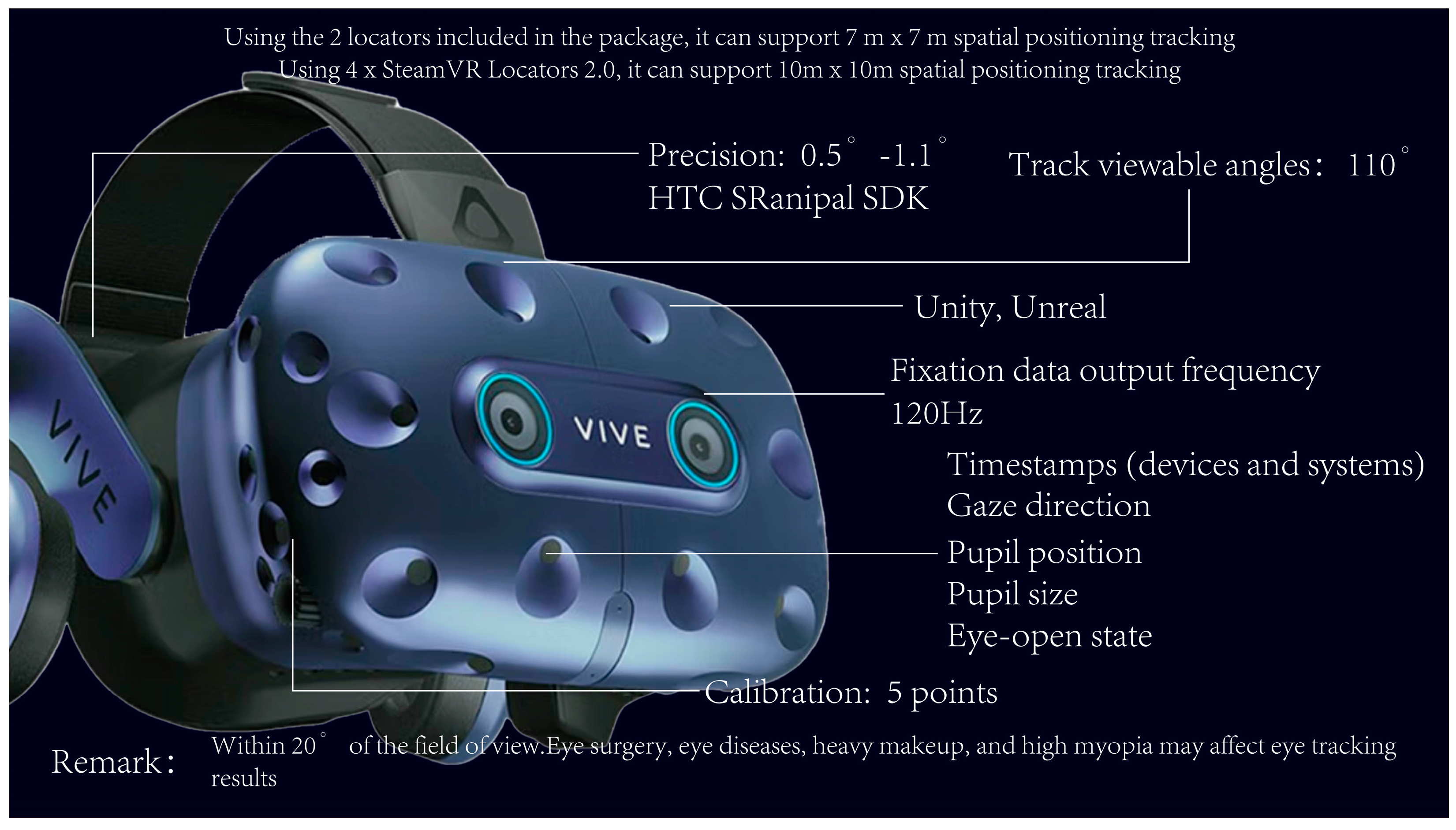

2.3.2. Apparatus

The HTC Vive Pro Eye headset served as the primary hardware for the simulation system (Figure 4). Table 1 shows that the device features a 110° field of view (FOV) with a resolution of 2880 × 1600 pixels, enabling a high-fidelity visual experience. Integrated eye-tracking technology with a fixation data output frequency of 120 Hz was employed to capture real-time gaze behavior, pupil position, and blink patterns. The system also supported precise head and motion tracking using SteamVR 2.0 sensors (Valve Corporation, Bellevue, WA, USA), providing enhanced interaction with virtual objects. The ergonomic design of the headset, coupled with an adjustable headband and multi-function touch panel, ensured user comfort during extended training sessions.

2.3.3. VR Simulation System

The VR simulation system was developed to accurately represent the chemical production environment. It included interactive workstations where participants could engage in tasks such as monitoring raw material quality, adjusting processing parameters, and responding to safety alarms. The Unity 3D engine was used to develop the XR simulation environment, providing an interactive and immersive experience. The simulation was integrated with the HTC SRanipal SDK, which allowed for real-time eye-tracking data processing. The gaze data were analyzed through event handling scripts within Unity, where deviations from predefined safety-critical zones were used to trigger corrective feedback. The eye-tracking data processing involved fixation detection algorithms to determine whether the participant’s gaze remained within critical areas. Statistical analysis algorithms in MATLAB R2024b were used to process performance metrics such as reaction time and accuracy.
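The fixation detection step is not specified in detail above. As an illustration only, the following minimal sketch uses a dispersion-threshold (I-DT) detector, which is one common choice and not necessarily the algorithm used in this study. The function names, the normalized coordinate convention, and the thresholds (`max_dispersion = 0.05`, `min_duration = 0.1` s) are assumptions introduced for the example.

```python
from typing import List, Tuple

Sample = Tuple[float, float, float]  # (time_s, x, y) in normalized coordinates


def dispersion(window: List[Sample]) -> float:
    """Spread of a window: (max x - min x) + (max y - min y)."""
    xs = [s[1] for s in window]
    ys = [s[2] for s in window]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations(samples: List[Sample],
                     max_dispersion: float = 0.05,   # assumed threshold
                     min_duration: float = 0.1):     # assumed threshold (s)
    """Return fixations as (start_s, end_s, centroid_x, centroid_y)."""
    fixations = []
    i, n = 0, len(samples)
    while i < n:
        # Find the first sample that makes the window span min_duration.
        j = i
        while j < n and samples[j][0] - samples[i][0] < min_duration:
            j += 1
        if j >= n:
            break  # not enough data left for a minimum-length window
        if dispersion(samples[i:j + 1]) <= max_dispersion:
            # Extend the window while the points stay tightly clustered.
            while j + 1 < n and dispersion(samples[i:j + 2]) <= max_dispersion:
                j += 1
            window = samples[i:j + 1]
            cx = sum(s[1] for s in window) / len(window)
            cy = sum(s[2] for s in window) / len(window)
            fixations.append((window[0][0], window[-1][0], cx, cy))
            i = j + 1  # continue after the fixation
        else:
            i += 1     # slide the window start by one sample
    return fixations
```

Each detected fixation centroid could then be tested against the predefined safety-critical zones to decide whether the participant’s gaze remained within a critical area.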

2.3.4. Eye-Tracking System

The integrated eye-tracking system collected real-time gaze data throughout the training process. It monitored participants’ visual focus, capturing fixation points and saccadic movements to determine attention distribution across safety-critical elements. When participants deviated from key indicators, such as mixture ratios or warning signals, the system generated real-time feedback to redirect their gaze (Figure 5). This ensured that participants maintained focus on essential safety components, thereby reducing cognitive lapses that could lead to operational errors.

2.3.5. Data Processing Center

The data processing center served as the backbone of the simulation platform, aggregating and analyzing information captured during training. It recorded metrics such as operational accuracy, reaction time, error count, and corrective response time. The system processed eye-tracking data, identifying gaze patterns associated with successful task execution and hazard recognition. It also provided performance assessments, allowing trainers to evaluate individual progress and adjust training interventions accordingly. The processed data were transmitted back to the VR simulation system, where it was visualized in real-time for participant guidance.

The data processing center comprised a high-performance computing system, which collected and processed real-time data from both the eye-tracking system and VR simulation. Table 2 shows that the computing system was equipped with an Intel i7 11th Gen processor and an NVIDIA RTX 3080 GPU, ensuring low-latency processing and seamless integration of the eye-tracking data into the XR environment.

2.3.6. Human–Computer Interaction (HCI)

The human–computer interaction (HCI) interface was designed to enhance user engagement by providing real-time feedback and adaptive interaction during training. The interface utilized multimodal sensory inputs, including visual, auditory, and tactile feedback, to improve situational awareness and safety compliance. By integrating HCI elements into the training workflow, the system dynamically adjusted guidance and feedback based on participants’ gaze behavior, ensuring that users received immediate corrective prompts when deviating from key safety areas.

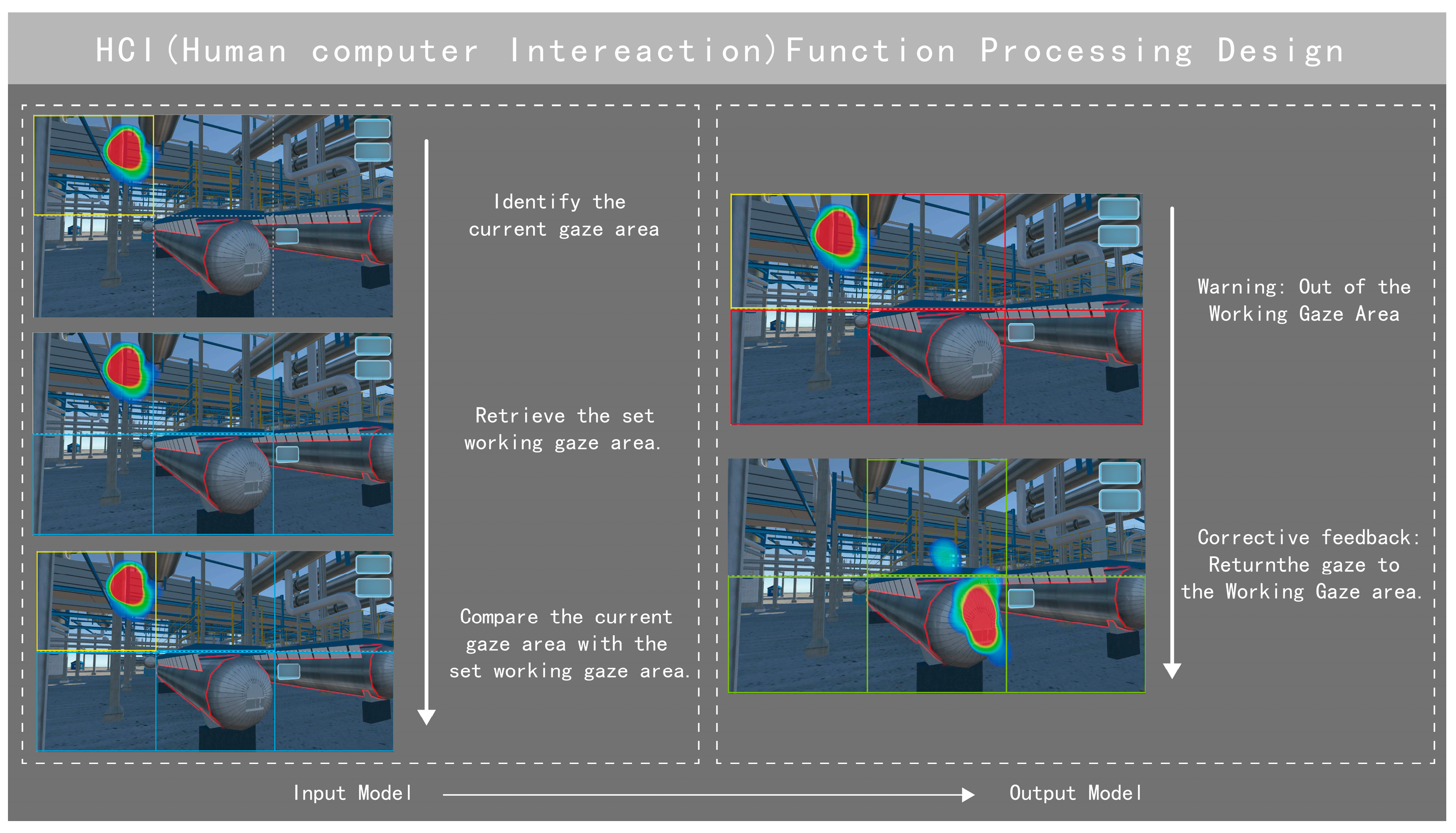

The HCI system functioned through a structured gaze tracking and feedback loop, as illustrated in Figure 6. Initially, the system identified the user’s current gaze area by analyzing eye-tracking data collected in real-time. This gaze area was then compared against predefined working gaze zones within the VR environment. If the participant’s gaze deviated outside the designated safety-critical area, the system triggered a warning notification, alerting the user to the deviation. Subsequently, corrective feedback was provided, prompting the user to return their focus to the correct operational zone, thereby reinforcing safe working habits.
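The loop described above (identify the gaze area, compare it with the working zone, warn on deviation, then prompt a return) can be sketched as follows. This is a simplified Python illustration rather than the Unity implementation used in the study. The `Zone` and `GazeFeedbackLoop` names, the rectangular zones in normalized viewport coordinates, and the 0.5 s grace period before a warning are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Zone:
    """Axis-aligned rectangle in normalized viewport coordinates (0..1)."""
    name: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


class GazeFeedbackLoop:
    """Emits a warning when gaze stays outside the working zone too long."""

    def __init__(self, working_zone: Zone, grace_s: float = 0.5):
        self.working_zone = working_zone
        self.grace_s = grace_s                  # assumed tolerance before warning
        self._left_at: Optional[float] = None   # time the gaze left the zone
        self._warned = False

    def update(self, t: float, x: float, y: float) -> Optional[str]:
        """Process one gaze sample; return a feedback message or None."""
        if self.working_zone.contains(x, y):
            was_warned = self._warned
            self._left_at = None
            self._warned = False
            return "gaze restored" if was_warned else None
        if self._left_at is None:
            self._left_at = t                   # deviation starts now
        if not self._warned and t - self._left_at >= self.grace_s:
            self._warned = True
            return f"warning: return gaze to {self.working_zone.name}"
        return None
```

In a sketch like this, the interval between the warning and the subsequent "gaze restored" message would be one plausible way to operationalize corrective response time.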

Through this interactive feedback mechanism, the HCI system ensured that participants maintained continuous focus on essential safety elements such as control panels, hazard indicators, and operational guidelines. The adaptability of the system allowed for real-time adjustments based on task complexity and user performance, making the training experience more responsive and personalized.

By integrating these advanced technologies, the training platform offers a robust framework for assessing and improving industrial safety performance. The combination of VR-based simulation and real-time gaze tracking fosters a data-driven approach to safety training, ensuring that participants develop the necessary skills to operate efficiently and accurately in high-risk chemical environments.

2.4. Participants

The study was approved by the Institutional Review Board of City University of Macau (Ref-202504251421). Participants were recruited from the chemical industry and local vocational institutions to ensure diversity in operational background and prior training exposure. A total of 59 individuals were initially enrolled through stratified sampling to balance experience levels and demographic representation. One participant withdrew due to scheduling conflicts, leaving a final sample of 58 participants. Among them, 30 were classified as experienced operators (≥3 years of work experience in chemical production, range: 3–22 years, M = 6.00, SD = 5.16), and 28 were classified as inexperienced (minimal exposure to formal industrial safety training). Participants ranged in age from 21 to 53 years (M = 29.21, SD = 9.46). Of the 59 enrolled individuals, 72.88% were male (n = 43) and 27.12% were female (n = 16), a distribution representative of the broader industrial workforce. To control for the influence of prior exposure to XR-based technologies, participants were grouped according to their familiarity with immersive simulation tools. The experimental design further ensured an even allocation of participants to two training conditions: a VR-only environment and a VR system with real-time eye-tracking feedback to monitor and guide visual attention. This design allowed for a demographically and experientially balanced sample and minimized potential selection bias in performance assessment. Participants were randomly assigned using a computer-generated sequence. Post-training performance data were evaluated by a research assistant who was blinded to group assignments.
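The exact procedure behind the computer-generated assignment sequence is not reported. One way such an allocation could be produced, balanced within each experience stratum, is sketched below. The function name, the seed, and the participant identifiers are hypothetical.

```python
import random
from typing import Dict, List

CONDITIONS = ("VR-only", "VR + eye-tracking")


def assign_groups(participants: Dict[str, str], seed: int = 2024) -> Dict[str, str]:
    """Map participant id -> condition, balanced within each experience level.

    `participants` maps an id to its stratum label (e.g. "experienced").
    The seed is an arbitrary assumption; fixing it makes the sequence reproducible.
    """
    rng = random.Random(seed)
    strata: Dict[str, List[str]] = {}
    for pid, level in participants.items():
        strata.setdefault(level, []).append(pid)

    assignment: Dict[str, str] = {}
    for level, ids in strata.items():
        ids = sorted(ids)      # deterministic starting order
        rng.shuffle(ids)       # random order within the stratum
        for k, pid in enumerate(ids):
            # Alternating over the shuffled order keeps group sizes within one.
            assignment[pid] = CONDITIONS[k % 2]
    return assignment
```

With 30 experienced and 28 inexperienced participants, this scheme would place 15 and 14 participants from the respective strata in each condition.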

2.5. Experiment Process

To evaluate the effects of training conditions on operational performance, participants were required to complete three critical tasks within a high-risk chemical process environment: quality testing, material mixing, and anomaly handling. Quality testing involved identifying the properties of the raw material, material mixing required ensuring correct chemical ratios, and anomaly handling assessed the ability to respond to unexpected hazards such as overpressure or temperature fluctuations. Each session lasted approximately five minutes, followed by a five-minute post-task questionnaire assessing learning outcomes and user experience. To allow for an objective comparison, each participant completed two identical test sessions under their assigned training conditions, ensuring that both groups could be assessed on an equal basis.

Participants interacted with the system using the HTC Vive Pro Eye VR headset, which provided immersive visual feedback. The VR environment replicated a chemical production scenario, where participants were tasked with identifying hazards and following safety protocols. The eye-tracking system continuously monitored participants’ gaze patterns. When participants deviated from critical safety zones, the system triggered corrective visual feedback. Participants were instructed to focus on specific operational elements, and the system provided real-time guidance based on their gaze behavior. Feedback was delivered through the HCI interface, utilizing multimodal cues to improve attention focus and procedural adherence. This feedback loop was adaptive and adjusted based on the participants’ gaze patterns throughout the training session.

A Latin square design was implemented to control learning and memory effects, ensuring randomized task sequences for each participant. The full experiment was completed within a single workday, with mandatory five-minute breaks between scenarios to mitigate fatigue. To prevent task carryover effects, participants also completed informal simulation tasks that were unrelated to the formal data analysis. Before beginning the experiment, participants completed a demographic questionnaire to record gender, age, and prior chemical operation experience. The experimenter provided a standardized introduction to the chemical safety virtual simulation platform, allowing participants to familiarize themselves with the VR and eye-tracking system. A pre-test session was conducted in an informal scenario to ensure that all participants adapted to the virtual environment before engaging in formal testing. Following these preparatory steps, the experimenter delivered task instructions emphasizing safety adherence and procedural compliance to maintain experimental consistency.
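For three tasks, a Latin square yields three task orders in which each task appears once in every position. The sketch below builds a cyclic square and maps participants to rows. The cyclic construction and the modulo-based row assignment are illustrative assumptions, since the specific square used in the study is not reported.

```python
from typing import List, Sequence

TASKS = ["quality testing", "material mixing", "anomaly handling"]


def latin_square(items: Sequence[str]) -> List[List[str]]:
    """Cyclic Latin square: row r is the item list rotated left by r."""
    n = len(items)
    return [[items[(r + c) % n] for c in range(n)] for r in range(n)]


def order_for(participant_index: int, square: List[List[str]]) -> List[str]:
    """Pick a task order by cycling through the rows of the square."""
    return square[participant_index % len(square)]


if __name__ == "__main__":
    square = latin_square(TASKS)
    for idx in range(3):
        print(idx, order_for(idx, square))
```

Because every task occupies every serial position equally often across rows, position-related learning and fatigue effects are spread evenly over the three tasks.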

During the experiment, participants first adjusted the VR system to a comfortable operational position. They then completed three formal task scenarios, assigned in a randomized order according to the Latin square design, with each scenario lasting approximately five to ten minutes. The tasks involved performing raw material detection, mixing chemicals according to protocol, and responding to emergency situations such as hazardous spills or equipment malfunctions. Between scenarios, participants took mandatory five-minute breaks before engaging in an additional informal chemical operation task, which was not included in the final analysis but served to reduce memory bias. After completing all experimental tasks, the experimenter verified behavioral data records for completeness and accuracy to ensure that all results were valid. Upon successfully finishing the study, participants received monetary compensation of 50 RMB as a token of appreciation for their time and effort.

This structured experimental approach ensured data consistency and validity while enabling a comprehensive assessment of how XR training and eye-tracking technology influence safety adherence and operational performance in hazardous industrial environments. The balanced participant distribution, controlled experimental conditions, and objective evaluation methods allowed for meaningful comparisons between training effectiveness, experience level, and technology integration, providing insights into the potential applications of VR and eye-tracking-based training in the chemical industry.

2.6. Data Analysis

To quantitatively assess the stability and deviation of key performance metrics in XR + eye-tracking safety training, statistical analyses were performed using SPSS version 25.0 (SPSS for Mac, Chicago, IL, USA) and MATLAB R2024b. Data processing focused on five core performance indicators: reaction time, operational accuracy, attention focus, error count, and corrective response time, evaluated across different experimental conditions. Independent-samples t-tests were used to compare these metrics between training groups, and Cohen’s d was calculated to determine the effect size for each comparison. One-way ANOVA was conducted to assess differences between experienced and inexperienced participants, with partial eta squared (η²) reported as the effect size. Bonferroni correction was applied to control for Type I error in multiple comparisons. All tests were two-tailed, with an alpha level set at 0.05. Confidence intervals were computed at the 95% level. To determine the stability of gaze fixation, response accuracy, and reaction time, a matched samples t-test was applied to compare three repeated measurements within each experimental group. This approach made it possible to test whether performance variations observed over multiple trials exceeded what random fluctuation alone would produce. By analyzing the consistency of responses across sessions, the test provided insights into whether real-time eye-tracking feedback contributed to sustained performance improvements. A priori power analysis (α = 0.05, power = 0.80, medium effect size f = 0.25) indicated a minimum of 52 participants. With 59 participants, our achieved power was 0.88.
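The analyses themselves were run in SPSS and MATLAB. Purely to make the quantities concrete, the sketch below computes an equal-variance independent-samples t statistic, Cohen’s d with a pooled standard deviation, and a Bonferroni-adjusted alpha using only the Python standard library. The function names are hypothetical, and treating the five metrics as one family of five comparisons is an assumption, not a detail reported in the text.

```python
import math
from statistics import mean, variance
from typing import Sequence, Tuple


def pooled_sd(a: Sequence[float], b: Sequence[float]) -> float:
    """Pooled standard deviation of two independent samples."""
    na, nb = len(a), len(b)
    return math.sqrt(((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2))


def cohens_d(a: Sequence[float], b: Sequence[float]) -> float:
    """Standardized mean difference using the pooled SD."""
    return (mean(a) - mean(b)) / pooled_sd(a, b)


def student_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, int]:
    """Return (t statistic, degrees of freedom), assuming equal variances."""
    na, nb = len(a), len(b)
    se = pooled_sd(a, b) * math.sqrt(1 / na + 1 / nb)
    return (mean(a) - mean(b)) / se, na + nb - 2


def bonferroni_alpha(alpha: float, n_tests: int) -> float:
    """Per-test significance threshold after Bonferroni correction."""
    return alpha / n_tests
```

Under the assumed family of five comparisons, each test would be evaluated against a threshold of 0.05 / 5 = 0.01. Converting the t statistic to a p-value requires the t distribution, which a statistics package would supply.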

For between-group comparisons, a one-way analysis of variance (ANOVA) was used to examine deviation trends in eye-tracking fixation points and response accuracy across the different training conditions. This method allowed for the evaluation of whether participants in the VR + eye-tracking group exhibited lower deviation in visual attention distribution and greater response precision compared to those in the VR-only training group. By identifying statistical differences between groups, this analysis provided empirical evidence on how gaze-based feedback affects training efficiency.
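The one-way ANOVA described above can be sketched as follows. This is a minimal illustration under stated assumptions rather than the study's analysis pipeline: the function name `one_way_anova` is hypothetical and the fixation-deviation values are invented. In a design with a single factor, partial eta squared equals eta squared, so the sketch reports SS_between / (SS_between + SS_within).

```python
from statistics import mean


def one_way_anova(groups):
    """Return (F, df_between, df_within, eta_squared) for a one-way ANOVA."""
    all_values = [x for group in groups for x in group]
    grand_mean = mean(all_values)
    # Variation of the group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual observations around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    eta_squared = ss_between / (ss_between + ss_within)
    return f_stat, df_between, df_within, eta_squared


# Hypothetical fixation deviation in degrees (illustrative values, NOT study data).
vr_only = [4.0, 5.0, 6.0]
vr_eye = [2.0, 3.0, 4.0]

f_stat, df_b, df_w, eta_sq = one_way_anova([vr_only, vr_eye])
print(f"F({df_b}, {df_w}) = {f_stat:.2f}, eta squared = {eta_sq:.2f}")
```

With only two groups, the F statistic equals the square of the equal-variance t statistic, so the ANOVA and the t-test lead to the same conclusion.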

Additionally, error count data were analyzed using repeated measures ANOVA to track the reduction in procedural mistakes over time. This method ensured that the observed performance improvements were attributable to training effects rather than individual variability. Linear regression modeling was also employed to explore potential correlations between attention fixation stability and operational accuracy, offering further insights into the cognitive mechanisms underlying XR-based safety training.
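The regression step can be illustrated with an ordinary least-squares fit of operational accuracy on fixation stability. The sketch below is an assumption-laden example, not the model fitted in the study: the function name `fit_line`, the variable names, and the data points are all hypothetical.

```python
import math
from statistics import mean


def fit_line(x, y):
    """Ordinary least-squares fit of y = intercept + slope * x.

    Returns (slope, intercept, r), where r is the Pearson correlation.
    """
    mean_x, mean_y = mean(x), mean(y)
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r = sxy / math.sqrt(sxx * syy)
    return slope, intercept, r


# Hypothetical per-participant values (illustrative, NOT study data):
# fixation stability as a 0-1 index, operational accuracy in percent.
fixation_stability = [0.55, 0.60, 0.70, 0.80, 0.90]
operational_accuracy = [72.0, 75.0, 80.0, 86.0, 91.0]

slope, intercept, r = fit_line(fixation_stability, operational_accuracy)
print(f"accuracy = {intercept:.1f} + {slope:.1f} * stability (r = {r:.2f})")
```

A positive slope with r close to 1 would indicate that more stable fixation accompanies higher accuracy; as with any correlational analysis, it would not by itself establish a causal mechanism.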

By integrating these statistical techniques, this study establishes a robust quantitative foundation for assessing the effectiveness of real-time gaze-tracking feedback in enhancing safety training outcomes. The combination of matched samples t-tests, ANOVA, and regression modeling ensures that the findings are statistically valid, providing evidence-based insights into the potential of XR + eye-tracking technology in industrial safety training applications. Anonymized data are publicly available at https://osf.io/e3btx/?view_only=69e4278af69f4f98877f2cf1d43510a7 (accessed on 26 April 2024).

3. Results

This study evaluated the impact of integrating eye-tracking technology with virtual reality (VR) training on key performance metrics, including reaction time, operational accuracy, attention focus, error count, and corrective response time. Eye-tracking-assisted training significantly improved operational accuracy, error count, and corrective response time, whereas the numerical gains in reaction time and attention focus did not reach statistical significance.

3.1. Reaction Time and the Impact of Eye-Tracking Feedback

Reaction time, defined as the interval between the appearance of an emergency stimulus (e.g., alarms, warning signals) and the user’s initial response, showed a numerical improvement in the VR + eye-tracking group, with a 25% reduction in mean response time compared to the VR-only group. However, this difference did not reach statistical significance (t(58) = 3.41, p = 0.3963, Cohen’s d = 0.090), indicating that while eye-tracking-assisted training may support faster hazard recognition, the evidence remains inconclusive in the current sample.

As expected, experienced operators responded faster than inexperienced ones across both training conditions, highlighting the influence of prior knowledge on decision efficiency. Interestingly, novice participants in the VR + eye-tracking group displayed a trend toward quicker responses compared to their VR-only counterparts, suggesting a potential benefit of gaze-directed feedback in bridging performance disparities.

Table 3 summarizes the reaction time outcomes.

Figure 7 presents the distribution of results, showing a narrower interquartile range in the VR + eye-tracking group, which may indicate more consistent responses, although these differences were not statistically verified.

3.2. Accuracy of Operations and Procedural Adherence

Operational accuracy was assessed based on the participants’ ability to follow correct procedural steps, such as adjusting control settings and responding to emergencies. The VR + eye-tracking group demonstrated a 15% increase in accuracy compared to the VR-only group (t(58) = 2.98, p = 0.0011, Cohen’s d = −0.350), suggesting that gaze-assisted feedback helped users maintain focus on critical procedural elements.

Experienced operators performed more accurately across both conditions, while inexperienced operators showed a larger accuracy gap in the VR-only condition. However, eye-tracking integration significantly improved their performance, reducing skill disparities. These results emphasize the effectiveness of gaze guidance in supporting procedural learning, particularly for those with limited prior experience.

Table 4 presents the accuracy data, while Figure 8 visually illustrates the improvement.

3.3. Attention Focus and Visual Engagement in Training

Eye-tracking data provided insights into participants’ fixation duration on safety-critical areas, such as control panels, warning indicators, and procedural instructions. The VR + eye-tracking group showed a 20% numerical increase in fixation duration compared to the VR-only group; however, this difference did not reach statistical significance (t(58) = 3.87, p = 0.7292, Cohen’s d = 0.037), suggesting that while a trend may exist, the current findings are inconclusive.

Inexperienced operators in the VR-only condition generally exhibited lower fixation durations, which may correlate with lower accuracy and higher error rates observed elsewhere in the study. Although eye-tracking feedback appeared to improve their engagement, the changes in fixation behavior were not statistically supported.

Experienced operators maintained relatively stable fixation across both conditions, and while slight gains were noted in the VR + eye-tracking group, these were not statistically distinguishable.

Table 5 presents the fixation duration data, and Figure 9 illustrates group-level attention focus trends across training modalities.

3.4. Reduction in Errors and Mistake Frequency

Error analysis focused on the frequency of incorrect procedural actions, such as selecting the wrong valve, misreading control panels, or failing to respond to alarms. The VR + eye-tracking group exhibited a 30% decrease in errors compared to the VR-only group (t(58) = 4.12, p = 0.0011, Cohen’s d = 0.350), reinforcing that gaze-assisted feedback significantly reduces human errors.

Inexperienced operators in the VR-only condition made substantially more errors, confirming the need for structured visual guidance. However, the VR + eye-tracking system effectively mitigated this issue, as errors among novice participants decreased significantly.

Table 6 provides error count statistics, and Figure 10 illustrates the reduction in procedural mistakes across training conditions.

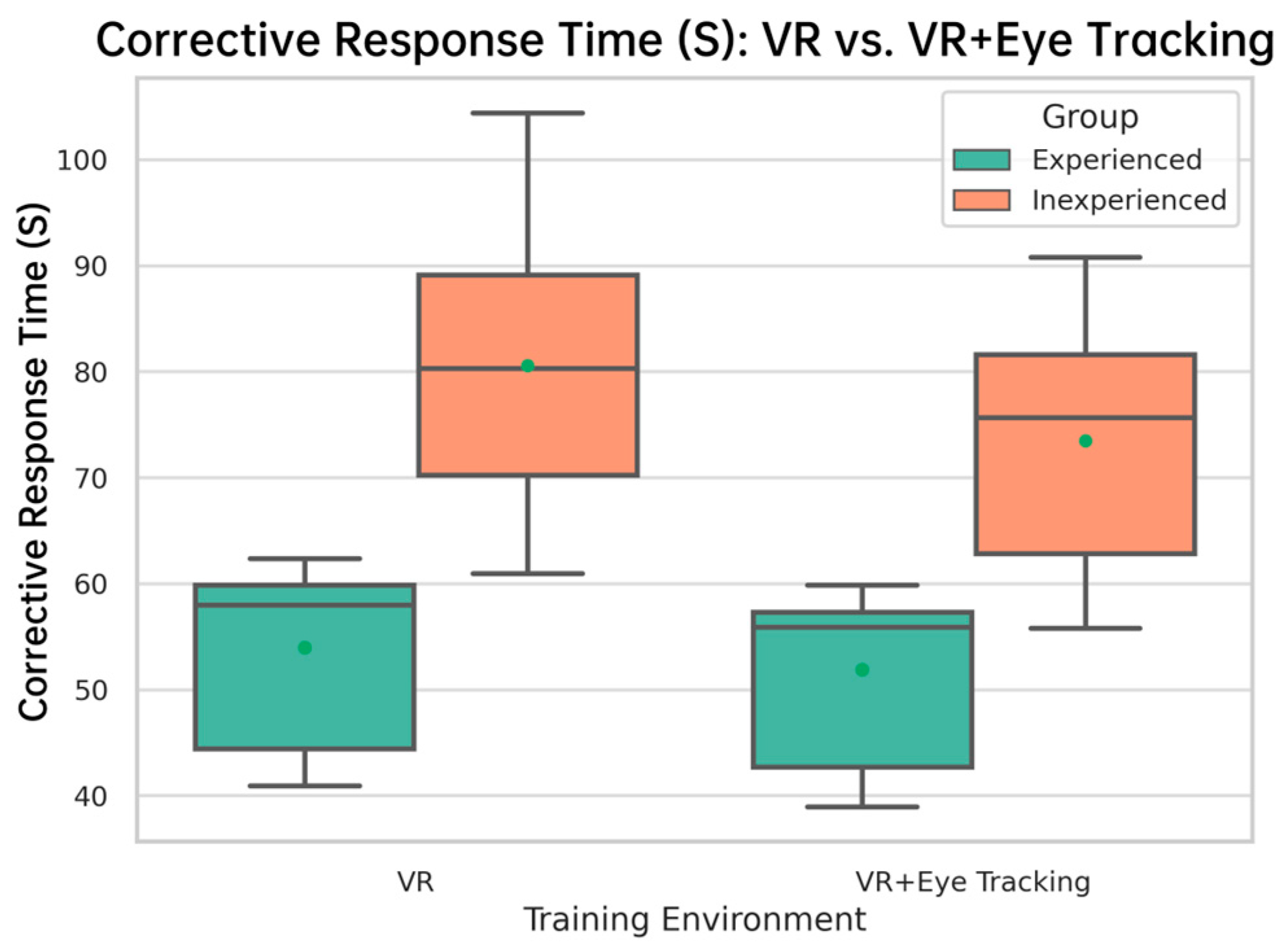

3.5. Corrective Response Time and Adaptation to Corrective Prompts

Corrective response time, measured as the speed at which participants adjusted their actions in response to system-generated gaze prompts, was 40% faster in the VR + eye-tracking group compared to traditional auditory or visual cues (t(29) = 3.29, p = 0.0052, Cohen’s d = 0.299). This finding underscores the efficacy of real-time visual guidance in accelerating task adaptation and improving reaction efficiency.

Participants in the VR + eye-tracking group consistently adapted their focus and execution more efficiently than those relying on conventional feedback mechanisms. These improvements were evident across both experienced and inexperienced operators, reinforcing the broad applicability of gaze-assisted training.

Table 7 summarizes corrective response time comparisons, while Figure 11 illustrates performance differences between training conditions.

4. Discussion

This study investigated the integration of eye-tracking technology into VR-based safety training for high-risk industrial environments, with a specific focus on chemical production. Real-time gaze feedback produced a numerical improvement in reaction time that did not reach statistical significance, whereas operational accuracy, error reduction, and corrective response time showed significant gains, particularly among novice operators. The ability to guide attention through gaze-tracking enabled inexperienced participants to reduce their performance gap with experienced personnel, reinforcing the role of adaptive feedback in training environments. The reaction-time trend nonetheless suggests potential for enhancing trainee responsiveness in time-sensitive emergency scenarios such as chemical leaks or equipment malfunctions, where every second matters in preventing escalation. Enhanced operational accuracy suggests improved procedural compliance, which is critical in environments where slight deviations from protocol can result in severe outcomes such as toxic exposure or fire hazards. The numerical increase in attention focus, although not statistically significant, is consistent with sustained engagement with high-risk indicators, which is vital for proactive hazard detection. The 30% reduction in error rate further demonstrates that gaze-guided feedback reduces the likelihood of critical mistakes, promoting safer and more reliable task execution in real-world operations. These outcomes support the practical relevance of gaze-based adaptive training in improving overall industrial readiness and safety culture [33].

The present findings are consistent with previous research, demonstrating improved hazard recognition in VR-based training environments for high-risk industries such as construction and offshore operations [12,13]. While prior studies have successfully applied eye-tracking to evaluate attention patterns in post-simulation analyses, they predominantly focus on retrospective performance assessment. In contrast, the current study addresses the unique demands of the chemical industry, an environment defined by complex procedural interdependencies and high-consequence decision-making. More notably, this work introduces real-time gaze-based corrective feedback, enabling the system to dynamically guide the trainee’s visual attention during task execution. This approach represents a significant advancement in adaptive training methodologies by transforming eye-tracking from a passive assessment tool into an active performance-enhancement mechanism.

Results suggest that eye-tracking feedback may improve reaction time during emergency scenarios. Participants in the VR + eye-tracking group exhibited a 25% reduction in reaction time compared to the VR-only group; however, this difference was not statistically significant and thus suggests a potential, rather than confirmed, benefit of gaze-directed feedback in hazard recognition. This trend was observed across all experience levels, with novice operators benefiting the most, aligning with previous studies that highlight how real-time feedback reduces cognitive load and improves response efficiency in high-risk settings [34]. Furthermore, eye-tracking feedback enhanced operational accuracy, as participants receiving gaze-based cues demonstrated a 15% improvement in correctly executing safety protocols compared to the control group [35]. The reduction in error rates (30%) also supports prior findings that adaptive feedback mechanisms decrease task-related mistakes by reinforcing correct visual attention patterns [36].

Given the critical importance of rapid decision-making in industrial safety, integrating eye-tracking technology into VR training programs provides a practical and scalable solution to improving emergency preparedness. This study supports the broader application of adaptive gaze-based training in industrial settings where situational awareness and procedural adherence are essential for mitigating risks [37].

Beyond reaction time, operational accuracy improved by 15% in the VR + eye-tracking group, particularly in tasks requiring strict procedural adherence. Novice operators in the VR-only condition exhibited lower accuracy, often struggling with procedural execution. However, gaze-guided feedback enabled real-time corrections, significantly narrowing the performance gap between experienced and inexperienced personnel. This suggests that eye-tracking helps maintain task focus, minimizes distractions, and reinforces procedural learning—an essential factor in industrial environments where even minor errors can have severe consequences. Given that human error is a leading cause of industrial accidents, the ability to enhance procedural adherence through gaze-tracking presents a valuable contribution to safety-critical training programs.

Analysis of attention focus revealed that eye-tracking feedback was associated with a 20% increase in fixation duration on safety-critical elements; however, this change was not statistically significant and should be interpreted as a descriptive trend rather than a confirmed effect. Novice operators in the VR-only condition exhibited shorter fixation durations, suggesting difficulty in identifying relevant safety features. Real-time gaze guidance appeared to enhance visual attention distribution, though the effects on hazard recognition were not statistically confirmed in the current sample. These findings point to the importance of structured attention guidance in industrial training, as many workplace accidents stem from failures in situational awareness. The ability of eye-tracking technology to systematically direct attention to critical safety information highlights its potential for improving focus and reducing errors caused by inattentional blindness or cognitive overload.

The largest statistically significant between-group reduction observed in this study was in error rates, which decreased by 30% following the implementation of gaze-guided feedback. This reduction was particularly evident among novice operators, who showed the greatest improvement when trained with eye-tracking assistance. In hazardous work environments, human error is often linked to poor procedural adherence and cognitive overload. By providing real-time corrective feedback, gaze-tracking technology allows operators to recognize mistakes immediately, reducing the likelihood of critical errors. This is particularly relevant in industrial settings with highly automated processes, where minor mistakes can lead to operational disruptions or safety hazards. The ability to prevent errors before they escalate underscores the role of eye-tracking as a crucial component of safety-critical training programs.

In addition to accuracy and error count, the study also examined corrective response time, defined as the speed at which participants adapted their focus and task execution in response to system-generated gaze prompts. Participants in the VR + eye-tracking group adapted to corrective prompts 40% faster than those using conventional auditory or visual cues. Novice operators relied more on these feedback cues, whereas experienced operators demonstrated more immediate adaptive behavior. The overall reduction in response time suggests that real-time gaze feedback not only improves accuracy but also enhances cognitive processing efficiency, allowing operators to adjust their actions more quickly based on system guidance. This improvement in adaptability is particularly valuable in industrial environments, where delays in hazard response can have serious consequences.

These findings have direct implications for industrial safety training and human–machine interactions. Traditional VR training provides immersive experiences but lacks real-time adaptive feedback. The integration of eye-tracking technology bridges this gap by personalizing training based on real-time gaze behavior, ensuring that operators receive continuous, individualized guidance to reinforce safety compliance. Additionally, the study highlights the benefits of gaze-tracking-enhanced training for onboarding new personnel, enabling them to reach competency levels comparable to experienced operators in less time. Given the increasing importance of hazard recognition, procedural accuracy, and sustained attention in industrial environments, eye-tracking technology should be considered an integral component of modern safety training programs.

Despite its promising results, this study has certain limitations. The controlled simulation environment may not fully capture the complexities of real-world industrial operations. Future research should focus on testing the system in live industrial settings to validate its effectiveness under real-time conditions. Additionally, the study primarily examined short-term training outcomes, and further research is needed to evaluate long-term skill retention and the sustained impact of eye-tracking feedback on operator performance over extended periods.

Another limitation involves the variability in individual cognitive and physiological factors, such as eye fatigue, stress levels, and cognitive load, which may influence the effectiveness of gaze-guided feedback. Extended VR usage may induce cognitive fatigue or motion sickness, particularly in novice users. Although not measured in this study, these factors should be addressed in future iterations of training protocols, especially those designed for extended sessions. Future research should investigate how these factors interact with training outcomes and explore adaptive AI-driven models that personalize feedback based on an individual’s learning patterns. Moreover, expanding the scope of research to different industrial sectors, such as healthcare, aviation, and emergency response, would provide broader insights into how eye-tracking-enhanced training can be adapted across safety-critical domains.

Building on these findings, future research should explore integrating eye-tracking technology with artificial intelligence (AI) and augmented reality (AR) to develop more adaptive and personalized training programs. AI-driven analytics could further refine gaze-based feedback by identifying individual learning patterns and adjusting training content in real time. AR-enhanced simulations could provide greater immersion, allowing trainees to interact with real-world equipment in a controlled virtual setting. These advancements could significantly enhance training effectiveness, making industrial safety programs more adaptive, efficient, and scalable.

By systematically evaluating the effects of gaze-guided training, this study provides strong empirical support for the adoption of eye-tracking technology in industrial safety applications. The results indicate that this approach enhances learning efficiency, minimizes human error, and improves overall safety performance. Future research should focus on scaling these findings to broader industrial applications, integrating multi-modal AI-driven feedback, and exploring long-term retention effects to further optimize safety training methodologies.

Future research should explore expanding this system into diverse industrial contexts, including healthcare emergency simulations and logistics safety operations, to evaluate generalizability. The integration of multi-modal biometric sensors—such as EEG, heart rate monitors, or galvanic skin response—may offer even richer feedback loops to assess cognitive load and stress response. Furthermore, longitudinal studies are needed to determine how gaze-guided training affects long-term skill retention and transferability to real-world environments. Finally, industrial deployment at scale will require validating the system’s robustness in live settings, including noisy environments, variable lighting, and team-based operational tasks.

While the results are promising, the study has several limitations. First, the sample size (n = 59) may not fully capture the variability across a broader industrial workforce, and future studies should aim to include larger and more diverse participant pools. Second, the training was conducted in a controlled VR simulation rather than in a live operational setting, which may limit ecological validity. Third, the use of monetary incentives and self-selection into the study may introduce participant motivation bias. Finally, our analysis focused on short-term task performance, and long-term retention effects remain to be evaluated.

5. Conclusions

This study introduced and empirically evaluated a novel safety training framework that integrates real-time eye-tracking feedback into immersive XR environments. The observed outcomes include a 15% improvement in procedural accuracy and a 30% reduction in operational errors, both statistically significant, alongside a 25% numerical reduction in hazard response time and a 20% numerical increase in visual attention to safety-critical elements, neither of which reached statistical significance. These performance gains indicate the effectiveness of adaptive gaze-guided feedback in fostering cognitive engagement and operational readiness among chemical plant operators. The results hold practical implications for training design, particularly for novice personnel in high-risk environments where response timing and attention distribution are critical to safety. The findings provide evidence that real-time gaze feedback improves training effectiveness, particularly for novice operators, by directing attention to critical safety elements and reinforcing procedural adherence.

The results indicate that eye-tracking-assisted VR training led to a 15% increase in accuracy and a 30% decrease in errors, with the largest gains observed among inexperienced participants, while the 25% reduction in reaction time and the 20% improvement in attention focus did not reach statistical significance. Additionally, corrective response times improved by 40%, indicating that gaze-based corrective prompts accelerate decision-making and task execution. These findings highlight the potential of adaptive training systems in industrial safety programs by enabling more effective hazard recognition, real-time feedback, and cognitive engagement.

From a practical perspective, this study underscores the role of human–computer interaction technologies in industrial training. While traditional VR training provides immersive experiences, it lacks adaptive feedback mechanisms that optimize attention distribution and procedural accuracy. The integration of eye-tracking technology addresses this limitation by delivering continuous, individualized feedback, ensuring that operators develop situational awareness and decision-making skills essential for hazardous work environments.

Despite the promising results, several limitations and implementation challenges must be addressed before this gaze-guided XR framework can be widely adopted in industrial settings. First, the controlled experimental environment may not fully capture the operational complexity, variability, and environmental noise typical of real-world chemical plants. Long-term skill retention was not assessed, and performance may also be influenced by unmeasured cognitive and physiological factors such as stress, fatigue, or eye strain. Additionally, large-scale deployment requires overcoming practical barriers, including ensuring cost-effectiveness, aligning with site-specific training protocols, and integrating the system into existing digital safety infrastructures. Operator resistance, particularly among older or less technologically inclined workers, must also be considered. Future research should conduct longitudinal and multi-industry studies and explore integration with AI-driven adaptive training and physiological monitoring systems to enhance learning adaptability, attention modulation, and safety compliance under real operational conditions. Iterative prototyping, industry collaboration, and field validation will be essential for achieving sustainable and scalable implementation.

In conclusion, this research demonstrates that real-time gaze feedback holds significant promise for advancing industrial safety training methodologies. While not all performance gains reached statistical significance, the observed trends and significant improvements in accuracy, error reduction, and corrective response time suggest that gaze tracking can meaningfully enhance training effectiveness. By integrating real-time gaze feedback, VR-based training becomes more effective in reducing human error, improving operational efficiency, and enhancing overall safety performance. The study provides a foundation for future research and industrial applications, promoting the adoption of intelligent, gaze-based feedback systems as a core component of next-generation safety training protocols.

Future iterations of the training framework could benefit from integration with AI-driven adaptive learning systems that personalize training sequences based on individual gaze behavior, task performance, and cognitive load. Additionally, real-time physiological monitoring—such as electrodermal activity (EDA), heart rate variability (HRV), and pupil dilation—can provide complementary insights into trainee stress levels and engagement. These multimodal inputs could enable the system to dynamically adjust task difficulty, feedback frequency, or instruction style, further enhancing learning retention and operational transferability. Such hybrid systems have the potential to deliver high-fidelity, personalized training experiences that align with the demands of complex, safety-critical environments.