Abstract

The adoption of artificial intelligence (AI) in sports training has the potential to revolutionize skill development, yet cost-effective solutions remain scarce, particularly in table tennis. To bridge this gap, we present an intelligent training system that leverages computer vision and machine learning for real-time performance analysis. The system integrates YOLOv5 for high-precision ball detection (98% landing-point accuracy) and MediaPipe for athlete posture evaluation. A dynamic time warping (DTW) algorithm further assesses stroke effectiveness, discriminating significantly between beginner and intermediate players (p = 0.004; Cohen’s d = 0.86) in a cohort of 50 participants. By automating feedback and reducing reliance on expert observation, the system offers a scalable tool for coaching, self-training, and sports analysis. Its modular design also allows adaptation to other racket sports, highlighting broader utility in athletic training and entertainment applications.

1. Introduction

The integration of technology into sports has ushered in a new era, transforming the way that athletes train, compete, and recover [1,2,3,4]. From grassroots levels to the pinnacle of professional sports, technological advancements are redefining the boundaries of human performance and enhancing the overall experience for players, coaches, teachers, and fans alike. Technology in sports encompasses a broad spectrum of innovations, including advanced training equipment, performance monitoring devices, and sophisticated analytics software [5], which could provide valuable feedback to players.

Table tennis, a sport celebrated for its lightning-fast rallies and strategic finesse, is also undergoing a technological transformation with the integration of science and technology into training and teaching [6]. The combination of science and technology with sports training can contribute to innovative training concepts, methods, and techniques [3,7,8,9,10,11,12]. Although technology is revolutionizing training methods in table tennis, the popularity of intelligent technology in table tennis teaching remains relatively low. One of the biggest challenges in table tennis is the accurate determination (over 90%) of the position of a fast-moving (up to 100 km/h) [13] and small table tennis ball (40+ mm diameter), as well as the evaluation of the effectiveness of a player’s stroke. In the field of table tennis ball recognition, sensor arrays are the most widely used technique to provide feedback on ball position [8,14]. However, the deployment of sensors is still troublesome and time-consuming. Video streams represent a more effective way of tracking objects, but the accuracy of video-based techniques has been problematic because the small ball only occupies a few pixels on the camera screen. To address this issue, machine learning algorithms, such as deep learning, have been proposed [15,16,17]. These algorithms can be easily integrated into computer vision systems for both ball recognition and stroke effectiveness analysis. For instance, Goh et al. developed an artificial intelligence (AI)-based system for badminton that enables shuttlecock tracking and automatic game analysis [18]. Similarly, Messelodi et al. proposed TrackNet, a neural network-based ball trajectory tracking framework for tennis, achieving accurate predictions of ball trajectories in real time [19]. 
Compared to these applications, our work focuses on table tennis, which involves smaller, faster-moving balls and requires different algorithmic considerations for ball detection and player evaluation. To the best of our knowledge, few studies have addressed both ball tracking and stroke effectiveness evaluation in table tennis simultaneously.

Moreover, unlike professional table tennis training, a widely adopted intelligent table tennis training system would require robustness and a relatively low cost. Existing products are expensive due to complex technology and outdated algorithms. For example, a high-speed image processing stereo camera [8] and/or the combination of inertial measurement units [20] are commonly used to estimate the trajectory and state of the table tennis ball to assist in training. However, these devices significantly increase the cost of the system, often over USD 10,000, which hinders large-scale deployment of the technology.

This study aims to develop a cost-effective AI-driven table tennis instruction system that unites real-time 3D ball trajectory analysis (with the YOLOv5 algorithm) with advanced human motion evaluation (dynamic time warping) to enable a precise diagnosis of ball dynamics and player technique. Using consumer-grade hardware, our system links player technique (e.g., joint angles) with ball dynamics (e.g., speed and placement) at less than 10% of conventional costs. This allows coaches and athletes to identify the reasons behind the success or failure of specific strokes, delivering expert-level diagnostic accuracy while challenging the paradigm that advanced sports analytics are limited to institutional settings. Guided by this objective, this study addresses two key research questions: (i) How can integrated AI systems simultaneously track high-speed equipment dynamics and human kinematics using affordable equipment? (ii) Can AI-driven motion assessments quantitatively align with professional coaches’ evaluation of stroke quality? By addressing these questions, our research demonstrates how cost-effective technologies can provide elite-level training insights to broader populations. Furthermore, this study establishes a scalable framework for equitable motor skill development, with potential implications extending to racket sports, rehabilitation, and global communities lacking traditional coaching infrastructure.

2. Materials and Methods

2.1. The Architecture of the System and Ball Detection

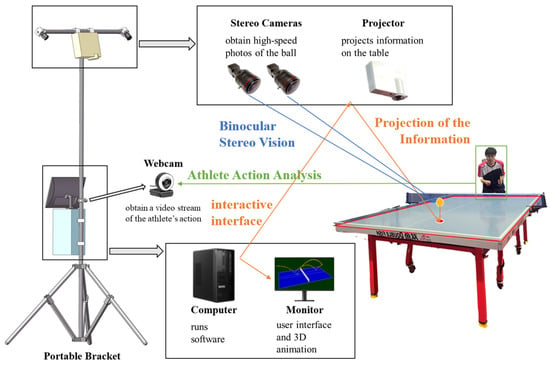

In table tennis training, ball landing accuracy and hitting pose are key factors for beginner and intermediate players. We therefore built a system based on computer vision and an interactive user interface. The general architecture of the system is illustrated in Figure 1. The hardware of the table tennis training system comprises cameras, a computer, a monitor, and a projector. Since the goal of this study is to build a system costing less than USD 800, we chose two low-cost, high-speed industrial cameras (0.9 megapixels, 3 ms exposure time, 120 frames per second) to capture the table tennis ball and a webcam to capture the player’s hitting pose. The computer (Core i5-10400F (Intel, Santa Clara, CA, USA) with an RTX 2060 Super (Nvidia, Santa Clara, CA, USA)) runs the system’s software, processes the captured images, and outputs information and interactive content. The monitor and projector display this processed information, including three-dimensional animations of the table tennis ball and projections of its drop points.

Figure 1.

General architecture of the system based on computer vision. The hardware of the table tennis training system comprises cameras, a computer, a monitor, and a projector.

The software for the intelligent table tennis training system includes table tennis motion state analysis, human motion analysis, and an interactive interface. The motion state analysis uses images captured by the stereo vision system to identify the table tennis ball through deep learning methods and to calculate its three-dimensional position. The athlete motion analysis utilizes the video stream from the webcam to detect the athlete’s body posture, analyze their movements, and score their actions. The interactive interface then displays information such as three-dimensional animations of the table tennis ball and the drop point positions on the table.

Ball localization is achieved through binocular vision. Specifically, we apply stereo rectification to the binocular images to obtain two images with parallel image planes. In this setup, T is the horizontal distance (baseline) between the two cameras, x_l and x_r are the horizontal image coordinates of a point P in the left and right camera images, respectively, Z is the depth of P, and f is the focal length of the camera. The depth Z follows from similar triangles:

$$\frac{T - (x_l - x_r)}{Z - f} = \frac{T}{Z} \quad\Longrightarrow\quad Z = \frac{fT}{x_l - x_r} \qquad (1)$$

In Equation (1), x_l − x_r is the disparity d. Therefore, to estimate the depth of an object, the core task is to find the pixels belonging to the object in the left and right camera images, match them one by one, and compute the disparity of their horizontal image coordinates [21].
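A minimal Python sketch of Equation (1) follows: it turns the matched horizontal coordinates of the ball in the rectified left and right images into depth and then into a 3D point in the left-camera frame. The function names, the assumption that both rectified images share the principal point (cx, cy), and the example numbers are illustrative, not the system's actual calibration; in practice the matched points might be, for example, the centres of the detected bounding boxes in each image.

```python
from typing import Tuple


def depth_from_disparity(x_left: float, x_right: float, focal_px: float, baseline: float) -> float:
    """Depth Z = f * T / (x_l - x_r) for a rectified stereo pair (Equation (1))."""
    disparity = x_left - x_right  # d = x_l - x_r, in pixels
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of both cameras")
    return focal_px * baseline / disparity  # same length unit as the baseline T


def triangulate(x_left: float, y_left: float, x_right: float,
                focal_px: float, baseline: float,
                cx: float, cy: float) -> Tuple[float, float, float]:
    """3D point in the left-camera frame via the pinhole model.

    Assumes (hypothetically) that both rectified images share the principal point (cx, cy).
    """
    z = depth_from_disparity(x_left, x_right, focal_px, baseline)
    x = (x_left - cx) * z / focal_px  # back-project the horizontal offset
    y = (y_left - cy) * z / focal_px  # back-project the vertical offset
    return x, y, z
```

For example, with f = 800 px and T = 0.3 m, a disparity of 80 px gives Z = 800 × 0.3 / 80 = 3.0 m. Because depth resolution degrades as disparity shrinks, a sufficiently wide baseline helps when the target is as small as a table tennis ball.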

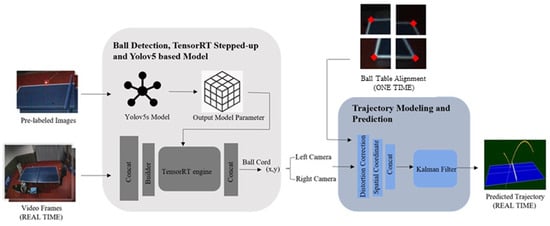

For table tennis tracking and position estimation, our system employs a lightweight yet high-performance object detection neural network, combined with classical computer vision and camera imaging algorithms, to achieve highly accurate measurements within cost constraints [22]. Through efficient optimization and software architecture, the system computes table tennis trajectories in real time, in less than 10 ms, even on platforms with limited computational capabilities, surpassing the human eye’s capture rate by a factor of two. In summary, the system delivers robust, efficient, sub-centimeter-level ball positioning in real table tennis rooms, where varying lighting, background colors, and multiple players can all interfere with ball recognition.

The following paragraphs outline the principles and algorithms used in the tracking and position estimation system. Ball detection is based on the YOLOv5 model accelerated with TensorRT 7 [23,24]; the overall process is presented in Figure 2. The method extracts features from, and performs classification on, real-time images captured by the stereo cameras, enabling ball recognition and tracking in 3D [25].

Figure 2.

Technological workflow for real-time estimation and prediction of table tennis trajectories.

Compared to traditional image processing algorithms and motion-based models, deep learning methods demonstrate superior generalization and robustness to interference [26]. Among detectors, YOLOv5 uses a lightweight network structure that enables rapid detection on resource-constrained edge devices while maintaining high accuracy [26]. Because the YOLOv5s variant is lightweight, easy to deploy, and efficient, we employ it for table tennis ball detection. A high capture frame rate is essential for reconstructing the trajectories of fast-moving objects: such objects move considerably within short time intervals, so a low frame rate cannot capture the trajectory accurately and the reconstruction becomes unreliable. On platforms with limited computational resources, however, the inference speed of the original YOLOv5s model is insufficient to keep up with the ball, so the model must be optimized. We therefore convert and compress a pre-trained YOLOv5 model with TensorRT, which restructures the network into a format better suited to GPU computation and thereby increases inference speed; similar works have evaluated this approach on other objects [27,28]. At run time, the input image is read and preprocessed, then passed to the TensorRT inference engine, which performs the forward computation and generates raw detection results. Post-processing is then applied to these results to yield the final detections.
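The TensorRT engine itself is not reproduced here, but the post-processing step can be sketched in plain Python. The sketch assumes the raw engine output has already been decoded into (x1, y1, x2, y2, confidence) boxes for a single ball class; the thresholds (0.25 confidence, 0.45 IoU) are assumed illustrative values, and the greedy non-maximum suppression shown is a generic version, not necessarily the authors' exact implementation.

```python
from typing import List, Optional, Sequence, Tuple

# (x1, y1, x2, y2, confidence) in pixels; a single "ball" class is assumed.
Box = Tuple[float, float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], iou_thresh: float) -> List[Box]:
    """Greedy non-maximum suppression: keep the most confident box, drop heavy overlaps."""
    kept: List[Box] = []
    for box in sorted(boxes, key=lambda b: b[4], reverse=True):
        if all(iou(box, k) < iou_thresh for k in kept):
            kept.append(box)
    return kept


def postprocess(raw: Sequence[Box], conf_thresh: float = 0.25,
                iou_thresh: float = 0.45) -> Optional[Tuple[float, float]]:
    """Return the pixel centre of the most confident ball detection, or None."""
    candidates = [b for b in raw if b[4] >= conf_thresh]  # confidence filter
    kept = nms(candidates, iou_thresh)
    if not kept:
        return None
    x1, y1, x2, y2, _ = kept[0]  # kept is ordered by descending confidence
    return (x1 + x2) / 2, (y1 + y2) / 2
```

Returning only the box centre reflects the stereo step, which needs one matched point per image rather than the full box.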

2.2. Hitting Pose Analysis

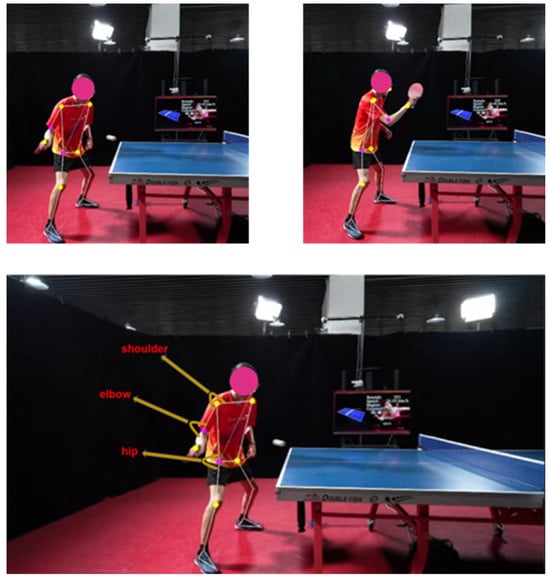

Evaluating the player’s performance in terms of the ball’s trajectory and landing point is necessary but not sufficient: a player who lands the ball on the table with improper technique may be harming their long-term development of proper table tennis skills. The correctness of the player’s movements is therefore also an important metric for scoring their performance. There are currently two main approaches to motion capture: sensor-based and vision-based. Sensor-based solutions rely on specialized sensors and devices to capture and track the player’s movements, whereas vision-based solutions use computer vision techniques to extract motion information from video or image sequences captured by cameras or depth sensors, such as the Kinect [29]. Both approaches have advantages and limitations. Because vision-based solutions offer a more flexible and non-intrusive setup, we chose a vision-based approach. The proposed framework, shown in Figure 3, consists of two parts, described below.

Figure 3.

The framework of body skeleton tracking and scoring.

The first part of the framework uses existing videos or recordings from smartphone or computer cameras to estimate the skeleton keypoints of the player in the footage. Human pose estimation has a wide range of applications, such as quantifying sports exercises, sign language recognition, and full-body gesture control. Representative methods include MediaPipe [30] and YOLOv7 [31]. MediaPipe is an open-source project by Google that provides cross-platform ML solutions, including high-fidelity human pose tracking. Its pose solution builds on BlazePose research and obtains 33 2D landmarks (or 25 upper-body landmarks) from RGB video frames, and it runs in real time on most modern smartphones and even on the web. Given these advantages, we use MediaPipe 0.10.21 for human pose estimation. From the player’s skeletal data, we select the three joints with the most significant impact on table tennis strokes, namely the elbow, shoulder, and hip on the racket-hand side, and compute the joint angle at each. These angles serve as the input for the second part of the framework, which evaluates the player’s hitting actions.
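The joint-angle computation can be illustrated with a short sketch. It takes the 33 (x, y) landmarks of one frame, indexed as in MediaPipe Pose (12/14/16 = right shoulder/elbow/wrist, 24/26 = right hip/knee), and returns the angle at each vertex. Which three points define the shoulder and hip angles, and the assumption of a right-handed player, are hypothetical choices for illustration. Since MediaPipe reports x and y normalized by image width and height, they should be scaled back to pixels before computing angles, or non-square frames will distort them.

```python
import math
from typing import Dict, Sequence, Tuple

Point = Tuple[float, float]

# MediaPipe Pose landmark indices (right side); a right-handed player is assumed.
R_SHOULDER, R_ELBOW, R_WRIST, R_HIP, R_KNEE = 12, 14, 16, 24, 26

# Hypothetical choice of the three points defining each angle (vertex in the middle).
ANGLE_TRIPLETS = {
    "elbow": (R_SHOULDER, R_ELBOW, R_WRIST),
    "shoulder": (R_HIP, R_SHOULDER, R_ELBOW),
    "hip": (R_SHOULDER, R_HIP, R_KNEE),
}


def joint_angle(a: Point, b: Point, c: Point) -> float:
    """Angle ABC in degrees, at vertex b, from 2D image coordinates."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1, n2 = math.hypot(*v1), math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        raise ValueError("coincident landmarks: angle undefined")
    cos_t = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_t))))  # clamp rounding error


def stroke_angles(landmarks: Sequence[Point]) -> Dict[str, float]:
    """Elbow, shoulder and hip angles for one frame of 33 (x, y) landmarks in pixels."""
    return {name: joint_angle(landmarks[i], landmarks[j], landmarks[k])
            for name, (i, j, k) in ANGLE_TRIPLETS.items()}
```

Applying `stroke_angles` to every frame yields the three angle time series used in the second part of the framework.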

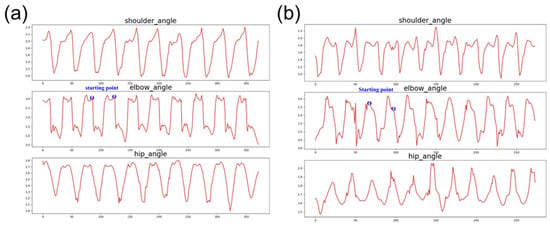

The second part of the framework processes the joint-angle data as follows. Because the player’s movements are recorded continuously, a complete video contains multiple repetitions of the hitting action (typically, the tested player is asked to perform ten consecutive strokes). Using a time window function (Figure 3), we identify the starting point of each repetition by detecting a rapid decrease in the elbow joint angle (Figure 4), which separates the recording into individual strokes. After this sliding-window segmentation, we apply wavelet denoising [32] to the angle data to remove noise. The denoised strokes are then compared against those of a standard athlete and scored.
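The segmentation step can be sketched as below: a window slides over the elbow-angle series and marks a stroke start wherever the angle falls by at least a threshold within the window. The window length (5 frames), drop threshold (30°), and minimum gap between strokes (15 frames) are hypothetical values, not parameters reported by the authors. Wavelet denoising is omitted; a library such as PyWavelets could supply it.

```python
from typing import List, Sequence


def find_stroke_starts(elbow_angles: Sequence[float], window: int = 5,
                       drop_deg: float = 30.0, min_gap: int = 15) -> List[int]:
    """Indices where the elbow angle falls by >= drop_deg within `window` frames."""
    starts: List[int] = []
    i = 0
    while i + window < len(elbow_angles):
        if elbow_angles[i] - elbow_angles[i + window] >= drop_deg:
            starts.append(i)
            i += min_gap  # skip ahead so one stroke is not detected twice
        else:
            i += 1
    return starts


def segment(elbow_angles: Sequence[float], starts: List[int]) -> List[List[float]]:
    """Split the series into strokes, each running from one start to the next."""
    bounds = starts + [len(elbow_angles)]
    return [list(elbow_angles[bounds[k]:bounds[k + 1]]) for k in range(len(starts))]
```

The same start indices can be reused to cut the shoulder and hip series, so that all three angles stay aligned stroke by stroke.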

Figure 4.

Skeleton model in computer setting with real player.

The MediaPipe system estimates 2D body key points from RGB camera input and infers relative depth cues based on a learned regression model. While this approach is cost-effective, real-time capable, and easy to deploy, it does not provide true 3D spatial measurements of joint positions. We acknowledge that relying solely on 2D key points may lead to potential inaccuracies, particularly in cases of out-of-plane motion, varying camera perspectives, or depth-related ambiguities. To mitigate these effects, the camera was placed perpendicular to the player and parallel to the plane of motion, and players were instructed to maintain a consistent lateral position relative to the table during tests. These arrangements help to reduce the impact of depth loss for the main stroke movements, such as shoulder rotations and elbow swings, which predominantly occur within the imaging plane. However, we recognize the inherent limitations of this setup in fully capturing complex three-dimensional motions, especially for strokes involving significant forward or backward body movement. Therefore, integrating stereo vision or depth cameras to enable true 3D pose estimation will be considered in our future work to further enhance the accuracy and robustness of motion evaluation. These improvements are expected to provide more precise joint position measurements, enabling a more comprehensive biomechanical analysis of player performance.

The hitting pose analysis with MediaPipe was performed with 53 players (47 male, 6 female) aged 18–25 with various table tennis skill levels. All procedures were approved by the Human Research Ethics Committee of the Education University of Hong Kong (Ref. no. 2024-2025-0238) and conducted in accordance with the Declaration of Helsinki. Written informed consent was obtained from each participant before experimentation. The confidentiality of the participants’ information was ensured both during and after data collection. All measurements were carried out in an indoor multi-game court by an experienced sports scientist, supported by a research assistant, to ensure accuracy and consistency.

3. Results

3.1. Detection Rate and Accuracy Test

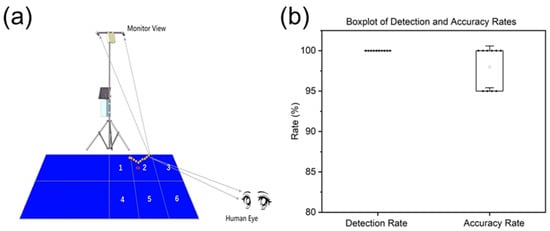

The detection rate and accuracy of the system are tested with real hitting. First, the half-table is divided into six equal areas of approximately 45 cm × 75 cm, labeled 1–6 (Figure 5a). This six-zone division follows standard table tennis training practice: it is commonly used in foundational training programs to assess players’ ability to place the ball across different areas of the table, and it reflects conventional coaching strategies for skill categorization and stroke accuracy improvement. The test player then hits the ball randomly to the different areas with varying ball heights and speeds. A total of 10 sets of tests are conducted, each consisting of 20 balls. For each ball, the landing event and the landing point are recorded by both the system and a human observer. The detection rate is defined as the number of ball landings recognized by the system divided by the number of landings confirmed by the human observer. The accuracy is defined as the consistency between the landing points recognized by the system and by the observer: a ball whose system-recorded landing point matches the observer’s counts as correct (100% agreement). While human observation remains a widely accepted standard in practical table tennis training, where coaches visually assess ball landing positions and player performance, we recognize the need for an objective quantitative measurement to strengthen the scientific rigor of our approach. The evaluation of detection rate and landing-point accuracy is therefore also referenced against camera-based ground truth, obtained through a precise calibration process performed before system operation.
The four corners of the table are carefully calibrated with the stereo camera setup to ensure accurate spatial positioning. The table coordinate system, with its origin at the table center, is aligned with the coordinate system used to track the ball’s trajectory in the air. This alignment allows the ball’s detected trajectory to be mapped directly onto the physical table surface with high spatial consistency, so that the system can reliably identify landings even at the table edges and corners, ensuring robustness in real-world scenarios.
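The zone assignment and the two metrics defined above can be sketched as follows. The sketch assumes table coordinates in centimetres with the origin at the table centre, x running from the net (x = 0) to the receiving end line (x = 137 cm) and y across the width (±76.25 cm), which yields six zones of about 45.7 cm × 76.25 cm. The zone numbering and the choice to compute accuracy over detected balls only are assumptions, since Figure 5a is not reproduced here.

```python
from typing import Optional, Sequence, Tuple

HALF_LENGTH_CM = 137.0  # net to end line (half of a 274 cm table)
HALF_WIDTH_CM = 76.25   # centre line to side line (half of a 152.5 cm table)


def landing_zone(x_cm: float, y_cm: float) -> Optional[int]:
    """Map a landing point to a hypothetical zone 1..6 (3 depth bands x 2 sides)."""
    if not (0.0 <= x_cm <= HALF_LENGTH_CM and -HALF_WIDTH_CM <= y_cm <= HALF_WIDTH_CM):
        return None  # outside the receiving half-table
    row = min(int(x_cm // (HALF_LENGTH_CM / 3)), 2)  # clamp the end line into band 2
    col = 0 if y_cm < 0 else 1
    return row * 2 + col + 1


def detection_and_accuracy(system: Sequence[Optional[int]],
                           observer: Sequence[int]) -> Tuple[float, float]:
    """Detection rate and zone agreement; system entries are None for missed balls."""
    if len(system) != len(observer) or not observer:
        raise ValueError("need equal-length, non-empty records")
    detected = [(s, o) for s, o in zip(system, observer) if s is not None]
    detection_rate = len(detected) / len(observer)
    accuracy = sum(s == o for s, o in detected) / len(detected) if detected else 0.0
    return detection_rate, accuracy
```

Per-set rates from 10 sets of 20 balls would then feed the box plot in Figure 5b.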

Figure 5.

(a) Schematic of the detection rate and accuracy test with 200 balls. (b) Box plot of the detection and accuracy rates.

The test results are shown in the box plot in Figure 5b; the y-axis presents the rate, and the x-axis distinguishes the detection and accuracy rates. The raw data are given in Table S1 in the Supplementary Materials. With the YOLOv5 algorithm and TensorRT, the system achieves an average ball detection rate of 100% while maintaining a high accuracy rate of 98.1 ± 2.5%, demonstrating its effectiveness in detecting fast-moving objects. This is better than other reported algorithms, such as ViBe (94.1%) or the dynamic color gamut method (93.2%) [17].

3.2. Evaluation of Hitting Pose: Pilot Study with Dynamic Time Warping (DTW)

Because of individual differences, players take different lengths of time to complete a technical action, and the timing within each phase of the action also varies. When evaluating the test player’s technical movements, it is therefore not appropriate to judge them as incorrect simply because the player’s speed and rhythm differ from those of a standard athlete. As Figure 6 shows, the length of the time series required to complete the movement differs between test players A and B. We first interpolate the time series with cubic polynomial interpolation to bring them to the same length. To then compare the series despite the remaining differences in timing, we use DTW [33], a time series similarity algorithm that finds an optimal path matching two time series and thereby measures their similarity.
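As a sketch of the length-equalization step, the function below resamples an angle series to m points with a natural cubic spline through the original samples. The text says only "cubic polynomial interpolation", so the natural-spline variant and the function name are assumptions.

```python
from typing import List, Sequence


def resample_cubic(y: Sequence[float], m: int) -> List[float]:
    """Resample y (knots at x = 0..n-1) to m evenly spaced points with a natural cubic spline."""
    n = len(y)
    if n < 3 or m < 2:
        raise ValueError("need at least 3 samples and m >= 2")
    # Second derivatives M at the knots; natural boundary: M[0] = M[n-1] = 0.
    # Interior equations (unit spacing): M[i-1] + 4*M[i] + M[i+1] = 6*(y[i+1] - 2*y[i] + y[i-1]).
    size = n - 2
    diag = [4.0] * size
    rhs = [6.0 * (y[i + 2] - 2.0 * y[i + 1] + y[i]) for i in range(size)]
    # Thomas algorithm; the sub- and super-diagonals are all 1.
    for i in range(1, size):
        w = 1.0 / diag[i - 1]
        diag[i] -= w
        rhs[i] -= w * rhs[i - 1]
    inner = [0.0] * size
    inner[-1] = rhs[-1] / diag[-1]
    for i in range(size - 2, -1, -1):
        inner[i] = (rhs[i] - inner[i + 1]) / diag[i]
    M = [0.0] + inner + [0.0]
    out: List[float] = []
    for k in range(m):
        x = k * (n - 1) / (m - 1)  # position on the original time axis
        i = min(int(x), n - 2)     # interval [i, i+1] containing x
        t = x - i
        out.append((M[i] * (1 - t) ** 3 + M[i + 1] * t ** 3) / 6
                   + (y[i] - M[i] / 6) * (1 - t)
                   + (y[i + 1] - M[i + 1] / 6) * t)
    return out
```

A spline passes exactly through the measured angles, so resampling changes only the time axis, not the recorded values at the original frames.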

Figure 6.

With a camera frame rate of 30 Hz, two test players (a,b) each completed 10 right-handed forehand attacking strokes, producing different joint-angle curves.

Thus, to evaluate the effectiveness of a player’s stroke, we first recorded videos of professional athletes (with their consent). Each player’s video is then compared with the professional athletes’ video. The DTW calculation computes

$$\mathrm{DTW}(A, B) = \min_{\pi} \sum_{(i,j) \in \pi} d(a_i, b_j)$$

where d(a_i, b_j) is the deviation between point a_i from series A (professional athletes) and point b_j from series B (player), and the sum is taken over all points (i, j) in the optimal alignment path π. In our hitting pose analysis, we use the elbow, shoulder, and hip angles on the racket-hand side, as these are related to stroke effectiveness according to the biomechanics literature [34]. The DTW value thus provides a measure of similarity between a player and the professional athletes.
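The DTW value can be computed with the classic dynamic-programming recurrence D[i][j] = d(a_i, b_j) + min(D[i−1][j], D[i][j−1], D[i−1][j−1]), sketched here in Python. Taking d as the absolute angle difference is an assumption, and the normalized DTW defined next in the text is not implemented.

```python
from typing import Sequence


def dtw_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Sum of |a_i - b_j| along the optimal warping path between series a and b."""
    n, m = len(a), len(b)
    inf = float("inf")
    # D[i][j] = minimal cumulative cost aligning a[:i] with b[:j]; row/column 0 are padding.
    D = [[inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = abs(a[i - 1] - b[j - 1])  # assumed local deviation d(a_i, b_j)
            D[i][j] = cost + min(D[i - 1][j],      # a advances, b repeats
                                 D[i][j - 1],      # b advances, a repeats
                                 D[i - 1][j - 1])  # both advance
    return D[n][m]
```

For instance, `dtw_distance([1, 2, 3], [1, 2, 2, 3])` is 0 because the repeated 2 is absorbed by warping, whereas a point-by-point comparison would not even be defined for unequal lengths.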

However, since each player has different rates of motion, the normalized DTW function is defined as

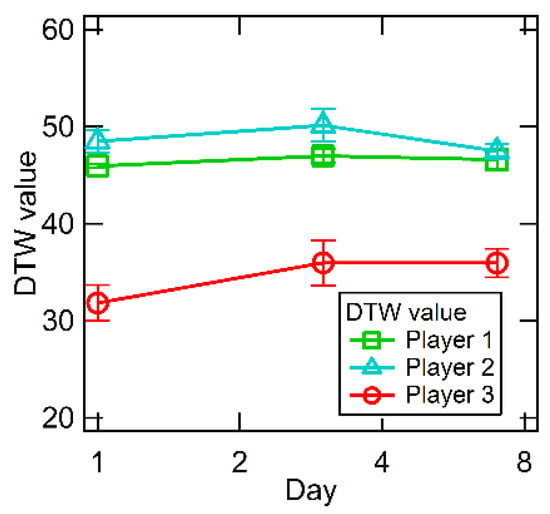

Thus, the normalized DTW function can be used to quantitatively evaluate stroke effectiveness. We first tested the stability of the DTW value to ensure that it accurately reflects player performance. After obtaining consent, three male players aged 18–25 with different skill levels performed the DTW test (15 s video recording) over a 7-day period, with the test conducted 3 times, 5 min apart, on days 1, 3, and 7. The players were not allowed to train during this period, to minimize learning effects. The average normalized DTW values for each day are plotted and compared in Figure 7 and Table 1. The DTW values are stable from day to day, indicating that DTW is a reliable tool for evaluating players’ strokes. The intraclass correlation coefficient (ICC) across all nine tests is 0.94 (raw data and calculation in the Supporting Information), indicating very good stability.
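For reference, an intraclass correlation of this kind can be sketched as below. The text does not state which ICC form was used; ICC(3,1) (two-way mixed effects, consistency, single measurement, in Shrout and Fleiss's notation) is shown as one common choice, with rows as players and columns as test days.

```python
from typing import Sequence


def icc_3_1(data: Sequence[Sequence[float]]) -> float:
    """ICC(3,1) from an n-subjects x k-sessions table via two-way ANOVA mean squares."""
    n, k = len(data), len(data[0])
    if n < 2 or k < 2 or any(len(row) != k for row in data):
        raise ValueError("need a rectangular table with at least 2 rows and 2 columns")
    grand = sum(sum(row) for row in data) / (n * k)
    row_means = [sum(row) / k for row in data]
    col_means = [sum(data[i][j] for i in range(n)) / n for j in range(k)]
    ss_rows = k * sum((rm - grand) ** 2 for rm in row_means)  # between subjects
    ss_cols = n * sum((cm - grand) ** 2 for cm in col_means)  # between sessions
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_err = ss_total - ss_rows - ss_cols                     # residual
    ms_rows = ss_rows / (n - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))
    return (ms_rows - ms_err) / (ms_rows + (k - 1) * ms_err)
```

Because this form measures consistency, a uniform shift across all players on one day does not lower the coefficient; an absolute-agreement form such as ICC(2,1) would penalize it.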

Figure 7.

DTW stability test for 3 players.

Table 1.

DTW pilot study for 3 players.

3.3. DTW Validation Study

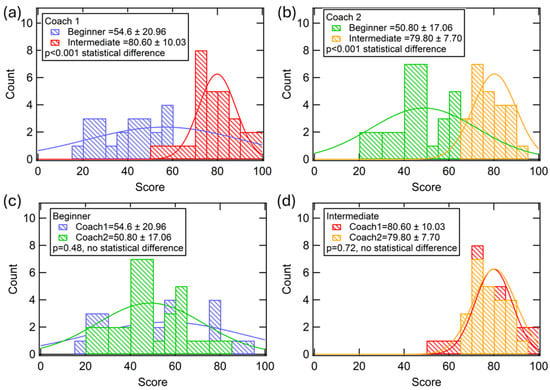

With DTW stability established, we validated the correlation of the normalized DTW function with human evaluation. We performed an experiment with a much larger group consisting of 25 beginner players (no prior table tennis experience; 22 male, 3 female; aged 18–25) and 25 intermediate players (at least 2 years of table tennis experience; 22 male, 3 female; aged 18–25). After giving consent, the players received 2 h of basic training on the forehand stroke. Their hitting poses were then recorded with the system to obtain a 15 s video stream, and the normalized DTW value was displayed for each player. At the same time, each recording was sent to two professional table tennis coaches (certified national level two table tennis players), who rated the hitting pose with a composite score from 0 (least effective) to 100 (most effective). The scoring criteria were timing, speed, landing point, and movement rationalization, weighted equally (25% each) at both the beginner and intermediate levels, and the sum of the four criterion scores formed the composite score. Timing was assessed by observing the player’s ability to execute shots at the optimal moment during rallies, such as anticipating the opponent’s movements or reacting quickly to fast-paced exchanges. The landing point was judged by the accuracy of the ball’s placement on the table, focusing on whether the player consistently targeted advantageous positions. Movement rationalization was assessed by analyzing the player’s decision-making and movement efficiency, such as whether they used the most effective and economical movements to reach the ball or position themselves strategically.
Speed was evaluated based on the player’s reaction time and movement efficiency, including how quickly they transitioned between positions and how fast they hit the ball. Each coach independently evaluated each video using a standardized scoring sheet and could review the videos as often as needed using slow motion and freeze frame. Using two independent observers can improve internal validity, but interpretation bias from the coaches could still affect validity. We therefore first compared the two coaches’ composite scores to check inter-rater reliability. The data are summarized in Figure 8, and the raw data are presented in the Supporting Information.

Figure 8.

Comparison of coaches’ scoring of players of different levels. (a) Coach 1’s and (b) Coach 2’s scores; (c) beginner and (d) intermediate players’ scores compared between the two coaches.

For the beginners, the two coaches gave similar ratings of 54.60 ± 20.96 (coach 1) and 50.80 ± 17.06 (coach 2), with p = 0.48. The same trend was observed for the intermediate group, where coach 1’s rating was 81.61 ± 10.54 and coach 2’s rating was 80.71 ± 8.01 (p = 0.72). However, individual scores fluctuated between the coaches. For example, player 6 in the beginner group received a score of 30 from coach 1 and 50 from coach 2, while player 20 received 80 from coach 1 and 50 from coach 2. Since each player can have a very different style of play and each coach has interpretation bias, such individual variation could affect the reliability of the composite score. We can therefore only conclude that, on average, the two coaches’ composite scores did not differ significantly within either the beginner or the intermediate group.

For the beginners vs. intermediates, the coaches’ average composite scores were significantly different. For coach 1, the beginners’ score was 54.60 ± 20.96, while the intermediates’ score was 81.61 ± 10.54 (p < 0.001). For coach 2, the beginners’ score was 50.80 ± 17.06, while the intermediates’ score was 80.71 ± 8.01 (p < 0.001). However, individual differences still existed; for example, player 25 in the intermediate group received a score of 50 from coach 1 and 70 from coach 2. Our analysis therefore suggests that the beginner and intermediate groups differ significantly in skill level and that the coaches’ ratings, on average, provide a reliable measure of skill level.

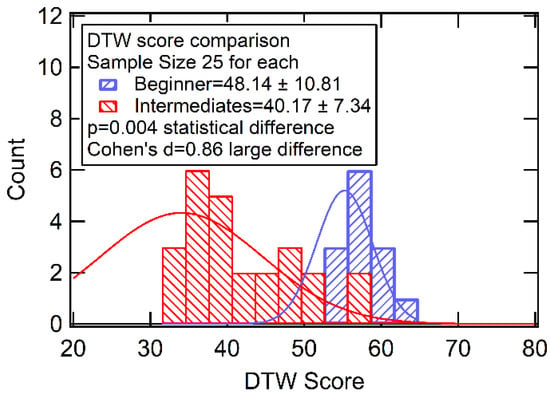

We then turned to analyze the raw DTW values of the two groups. The results are shown in Figure 9. The two groups had different DTW values, with higher DTW values for the beginners (48.14 ± 10.81) vs. intermediates (40.17 ± 7.34), with a p value of 0.004. Cohen’s d was also calculated to be 0.86, meaning that a large difference was observed for the beginners versus the intermediates. Thus, the DTW value could be used to determine the statistical difference in player stroke effectiveness between beginners and intermediates, showing the usefulness of the DTW function. The raw data for the coaches’ scoring and DTW are summarized in Table 2 and Table 3.
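The reported effect size can be cross-checked from the summary statistics alone. The sketch assumes Cohen's d with a pooled standard deviation and an equal-variance Student's t-test; the text does not specify which variants were used.

```python
import math


def _pooled_sd(s1: float, n1: int, s2: float, n2: int) -> float:
    """Pooled standard deviation of two independent groups."""
    return math.sqrt(((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / (n1 + n2 - 2))


def cohens_d(m1: float, s1: float, n1: int, m2: float, s2: float, n2: int) -> float:
    """Standardized mean difference using the pooled SD."""
    return (m1 - m2) / _pooled_sd(s1, n1, s2, n2)


def students_t(m1: float, s1: float, n1: int, m2: float, s2: float, n2: int) -> float:
    """Equal-variance two-sample t statistic (df = n1 + n2 - 2)."""
    se = _pooled_sd(s1, n1, s2, n2) * math.sqrt(1 / n1 + 1 / n2)
    return (m1 - m2) / se
```

With the values above (48.14 ± 10.81 vs. 40.17 ± 7.34, n = 25 each), d ≈ 0.86, matching the reported value, and t ≈ 3.05 with 48 degrees of freedom, which corresponds to a two-tailed p between 0.001 and 0.005, consistent with the reported p = 0.004.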

Figure 9.

Raw DTW comparison for players of different levels.

Table 2.

Coaches’ ratings and DTW for players in beginner group.

Table 3.

Coaches’ ratings and DTW for players in intermediate group.

3.4. Limitation of DTW for Individual Scoring

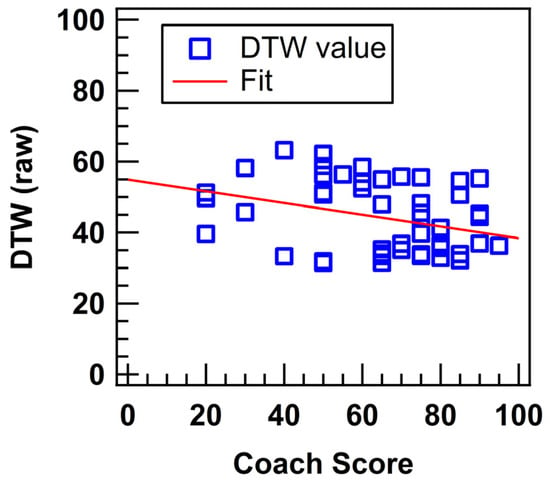

With the reliability of the coaches’ scores and the DTW values established, we next correlated each player’s DTW value with their coach scores to test its effectiveness in evaluating individual hitting poses. The data are compared and plotted in Figure 10, and the raw data are provided in the Supporting Information. The scatter plot suggests a linear relationship between the DTW values and the coaches’ scores.

Figure 10.

Comparison of DTW score with coach score.

However, the R² of the linear fit is only 0.15, indicating a weak correlation. This reveals the limitation of the DTW value in accurately evaluating individual players. Many factors, such as player height, arm span, and individual kinematics, could influence individual DTW values, resulting in a ceiling effect. More data can be collected in the future to better correlate individual DTW values with the coaches’ scores.
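The coefficient of determination quoted above comes from an ordinary least-squares line; a minimal sketch follows, where x would be the players' DTW values and y the corresponding coach scores (this pairing is an assumption, as the raw data are in the Supporting Information).

```python
from typing import Sequence, Tuple


def linear_fit_r2(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float, float]:
    """Least-squares slope, intercept and R^2 of y against x."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x values are all identical")
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    ss_res = sum((yi - (slope * xi + intercept)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - my) ** 2 for yi in y)
    r2 = 1 - ss_res / ss_tot if ss_tot > 0 else 1.0  # constant y: treat as perfect fit
    return slope, intercept, r2
```

An R² of 0.15 means the fitted line explains only about 15% of the variance in coach scores, which is why the group-level difference does not translate into reliable individual scoring.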

4. Discussion

The integration of technology into sports is rapidly transforming how athletes train, compete, and improve their performance. The use of AI, in particular, has become widespread across various areas of sports [35,36,37]. This trend is particularly evident in sports like table tennis, where precision, speed, and technique are crucial. Our system’s architecture combines binocular vision with an optimized YOLOv5 deep learning framework, achieving a 100% ball detection rate and >98% landing-point accuracy under normal training conditions. These results are comparable to, and slightly surpass, the 97% achieved with other machine learning methods [8]. Additionally, MediaPipe-based kinematic analysis enables robust quantification of player form through frame-by-frame comparison against biomechanically optimal stroke patterns. Notably, our DTW-based assessment discriminates significantly between skill levels (25 beginner and 25 intermediate players), with p = 0.004 and Cohen’s d (effect size) of 0.86, representing a significant advancement in automated sports pedagogy. With a total implementation cost under USD 800, this solution establishes a new benchmark for cost-effective, scalable AI training systems.

Our system enables detailed load monitoring of players’ physical activities during table tennis training, such as tracking the number of rallies and the speed of the ball. This functionality supports training load optimization and injury prevention by providing actionable insights into player performance [35]. Beyond training, the system’s adaptability allows it to be applied to other racket sports for tactical analysis. By quantifying match-play dynamics, it gives coaches and analysts insights derived from comprehensive data analysis [36], which supports better decision-making and strategy development. Equally important is the system’s ability to analyze and assess the player’s hitting pose in real time. Using the DTW algorithm, the system quantifies the difference in hitting-pose joint angles between each player and professional athletes. This allows teachers and coaches to give personalized feedback on posture, grip, and swing mechanics, helping players refine their technique. Such immediate and precise feedback can contribute to more powerful, accurate, and consistent shots.
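The sketch below illustrates, under stated assumptions, how a joint-angle difference between a player and a professional reference could be quantified with DTW. It assumes 2D keypoints such as the shoulder, elbow, and wrist landmarks produced by a pose estimator like MediaPipe Pose, passed in as plain `(x, y)` tuples. No MediaPipe API is called here. The code computes one joint angle per frame, then uses the classic DTW recurrence with an absolute-difference local cost to compare the two angle sequences. The function names, the single-joint setup, the absence of a warping window, and the example angles are all hypothetical. The joints, cost function, and normalization used in the actual system are not specified in this section.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at vertex b formed by 2D keypoints a-b-c.

    For example, a = shoulder, b = elbow, c = wrist gives the elbow angle.
    """
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("degenerate joint: two keypoints coincide")
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    cos_theta = max(-1.0, min(1.0, cos_theta))  # guard against rounding just outside [-1, 1]
    return math.degrees(math.acos(cos_theta))


def dtw_distance(seq_a, seq_b):
    """Classic dynamic time warping distance between two 1D sequences.

    Local cost is the absolute difference between samples; no warping-window
    constraint or length normalization is applied.
    """
    n, m = len(seq_a), len(seq_b)
    if n == 0 or m == 0:
        raise ValueError("sequences must be non-empty")
    inf = float("inf")
    # acc[i][j] = minimal accumulated cost of aligning seq_a[:i] with seq_b[:j]
    acc = [[inf] * (m + 1) for _ in range(n + 1)]
    acc[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(seq_a[i - 1] - seq_b[j - 1])
            acc[i][j] = local + min(acc[i - 1][j],      # seq_a advances, seq_b repeats
                                    acc[i][j - 1],      # seq_b advances, seq_a repeats
                                    acc[i - 1][j - 1])  # both advance
    return acc[n][m]


# Hypothetical elbow-angle sequences (degrees) for one stroke: a professional
# reference and a slightly slower player stroke with one extra frame.
reference = [150.0, 120.0, 95.0, 110.0, 140.0]
player = [150.0, 125.0, 120.0, 100.0, 112.0, 138.0]
score = dtw_distance(player, reference)  # lower means closer to the reference
```

Because DTW aligns sequences in time before summing differences, a stroke performed at a different tempo is not penalized for timing alone. The raw distance does grow with sequence length, however, so comparing strokes of very different durations may require some normalization.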

With a total cost of less than USD 800, the system is highly accessible and can be deployed in diverse settings, such as schools or training facilities. Its high ball-tracking accuracy gives teachers and coaches deep insights into patterns of play, strengths, and areas for improvement. These data can then be used to individualize training sessions around specific skills or strategies, making practice more efficient and targeted [36]. Additionally, the system’s cost-effectiveness could foster the widespread adoption of AI in the sports professions, transforming the role and knowledge requirements of coaches [37]. Rather than relying solely on subjective observation and experience, coaches can leverage precise, data-driven insights to guide their training strategies. This shift allows coaches to focus on interpreting analytics, designing personalized programs, and fostering strategic thinking among players, ultimately elevating the quality of coaching and athlete development.

While promising, our findings have limitations. First, the system’s performance was validated under controlled lighting and camera angles; variable environments (e.g., outdoor settings or uneven illumination) may reduce tracking accuracy. Second, the dataset, though balanced (25 beginners vs. 25 intermediates), lacks elite athletes and players with diverse body types, potentially limiting generalizability. For example, taller players’ extended reach may alter stroke kinematics, a factor not accounted for in our current DTW model. Third, real-time feedback latency—though acceptable for post-stroke correction—remains inadequate for in-swing adjustments, a challenge also noted in wearable sensor systems [2].

To enhance the system’s applicability, we also propose several directions for future research. The first is refinement of the DTW algorithm. Incorporating anthropometric factors (e.g., arm span-to-height ratios) could improve personalization, and collaborative datasets with international coaching bodies could expand the diversity of the training data. The second is multi-modal integration: DTW results could be compared with data from wearable devices [2] to resolve ambiguities in pose estimation. The third is to apply the DTW algorithm to other sports in which cyclic movements are important, such as free-throw analysis in basketball or serve analysis in tennis. Because DTW quantifies the similarity between players’ movement sequences, it could serve as a general method in sports science for quantitatively evaluating pose effectiveness. However, sport-specific calibration is needed to define key joint trajectories (e.g., joint angles in tennis serves [20]) and to validate the results against expert benchmarks. Finally, the DTW algorithm could be applied to para-athletics. Using DTW to analyze compensatory techniques in wheelchair table tennis could reveal optimized kinetic chains for athletes with mobility impairments. Pilot studies with Paralympic coaches are needed to establish disability-specific biomechanical models.

5. Conclusions

In conclusion, we developed an artificial intelligence-based table tennis teaching system, suitable for the general population, that combines ball-tracking information with athlete action recognition. With binocular camera vision and a YOLOv5-based deep learning algorithm, the system achieved a ball detection rate of 100% and a ball position accuracy of 98%. In addition, the MediaPipe framework extracts and displays the key joint points of a player’s body from the input video stream, which are used to evaluate the similarity and correctness of the player’s actions compared with standard actions. With a DTW algorithm, the system could differentiate between beginner and intermediate players, with a p value of 0.004 and a Cohen’s d of 0.86. Moreover, the total system cost is less than USD 800, making it suitable for large-scale deployment. This research opens up possibilities for technology-based training in many other sports, which could benefit the wider sports science community in the future.

6. Patents

The authors have two approved Chinese patents, with numbers ZL202120616967.8 and ZL202110320026.4, and a pending Chinese patent application with number PSU332024094CN1.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/app15105370/s1, Table S1: Raw data for the accuracy and precision test.

Author Contributions

Conceptualization, Z.H., Z.Y., G.C.-C.C. and X.C.; methodology, J.X. and H.C.; software, X.L.; validation, A.W. and J.Y.; data curation, Z.H.; writing—original draft preparation, X.C.; writing—review and editing, G.C.-C.C. and X.C.; funding acquisition, Z.H., G.C.-C.C. and X.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the Teaching Reform Fund from the Southern University of Science and Technology (XJZLGC202227) and the Cultivation of Guangdong College Students’ Scientific and Technological Innovation Fund (PDJH2024A326).

Institutional Review Board Statement

All procedures were approved by the Human Research Ethics Committee of the Education University of Hong Kong (Ref. no. 2024-2025-0238, approved 25 March 2025) and were conducted in accordance with the Declaration of Helsinki.

Informed Consent Statement

Written informed consent was obtained from each participant before the experiment. The confidentiality of the participants’ information was ensured both during and after data collection.

Data Availability Statement

The original contributions presented in this study are included in the article or the Supplementary Materials. The data are not publicly available due to player privacy. Further inquiries can be directed to the corresponding author.

Acknowledgments

We thank Fan Du, Jixian Wang, and Haoning Jiang for assistance with data collection.

Conflicts of Interest

The authors have two approved Chinese patents, with numbers ZL202120616967.8 and ZL202110320026.4, and a pending Chinese patent application with number PSU332024094CN1.

References

- Liebermann, D.G.; Katz, L.; Hughes, M.D.; Bartlett, R.M.; McClements, J.; Franks, I.M. Advances in the application of information technology to sport performance. J. Sports Sci. 2002, 20, 755–769.

- Rana, M.; Mittal, V. Wearable Sensors for Real-Time Kinematics Analysis in Sports: A Review. IEEE Sens. J. 2021, 21, 1187–1207.

- Capasa, L.; Zulauf, K.; Wagner, R. Virtual Reality Experience of Mega Sports Events: A Technology Acceptance Study. J. Theor. Appl. Electron. Commer. Res. 2022, 17, 686–703.

- Zadeh, A.; Taylor, D.; Bertsos, M.; Tillman, T.; Nosoudi, N.; Bruce, S. Predicting Sports Injuries with Wearable Technology and Data Analysis. Inf. Syst. Front. 2021, 23, 1023–1037.

- Giménez-Egido, J.M.; Ortega, E.; Verdu-Conesa, I.; Cejudo, A.; Torres-Luque, G. Using Smart Sensors to Monitor Physical Activity and Technical–Tactical Actions in Junior Tennis Players. Int. J. Environ. Res. Public Health 2020, 17, 1068.

- Dokic, K.; Mesic, T.; Martinovic, M. Table Tennis Forehand and Backhand Stroke Recognition Based on Neural Network. In Proceedings of Advances in Computing and Data Sciences; Springer: Singapore, 2020; pp. 24–35.

- Oagaz, H.; Schoun, B.; Choi, M.H. Performance Improvement and Skill Transfer in Table Tennis Through Training in Virtual Reality. IEEE Trans. Vis. Comput. Graph. 2022, 28, 4332–4343.

- Lin, H.-I.; Yu, Z.; Huang, Y.-C. Ball Tracking and Trajectory Prediction for Table-Tennis Robots. Sensors 2020, 20, 333.

- Mat Sanusi, K.A.; Mitri, D.D.; Limbu, B.; Klemke, R. Table Tennis Tutor: Forehand Strokes Classification Based on Multimodal Data and Neural Networks. Sensors 2021, 21, 3121.

- Büchler, D.; Guist, S.; Calandra, R.; Berenz, V.; Schölkopf, B.; Peters, J. Learning to Play Table Tennis From Scratch Using Muscular Robots. IEEE Trans. Robot. 2022, 38, 3850–3860.

- Michalski, S.C.; Szpak, A.; Saredakis, D.; Ross, T.J.; Billinghurst, M.; Loetscher, T. Getting your game on: Using virtual reality to improve real table tennis skills. PLoS ONE 2019, 14, e0222351.

- Oagaz, H.; Schoun, B.; Choi, M.-H. Real-time posture feedback for effective motor learning in table tennis in virtual reality. Int. J. Hum. Comput. Stud. 2022, 158, 102731.

- Ralf, S.; Lars, L.; Stefan, K.; Christian, S. Table Tennis and Physics. In Simulation Modeling—Recent Advances, New Perspectives, and Applications; Abdo Abou, J., Ed.; IntechOpen: Rijeka, Croatia, 2022; Chapter 11.

- Yu, H.-I.; Hong, S.-C.; Ju, T.-Y. Low-cost system for real-time detection of the ball-table impact position on ping-pong table. Appl. Acoust. 2022, 195, 108832.

- Rong, Z. Optimization of table tennis target detection algorithm guided by multi-scale feature fusion of deep learning. Sci. Rep. 2024, 14, 1401.

- Li, W.; Liu, X.; An, K.; Qin, C.; Cheng, Y. Table Tennis Track Detection Based on Temporal Feature Multiplexing Network. Sensors 2023, 23, 1726.

- Ning, T.; Wang, C.; Fu, M.; Duan, X. A study on table tennis landing point detection algorithm based on spatial domain information. Sci. Rep. 2023, 13, 20656.

- Goh, G.L.; Goh, G.D.; Pan, J.W.; Teng, P.S.; Kong, P.W. Automated Service Height Fault Detection Using Computer Vision and Machine Learning for Badminton Matches. Sensors 2023, 23, 9759.

- Messelodi, S.; Modena, C.M.; Ropele, V.; Marcon, S.; Sgrò, M. A Low-Cost Computer Vision System for Real-Time Tennis Analysis. In Proceedings of Image Analysis and Processing—ICIAP 2019; Springer International Publishing: Cham, Switzerland, 2019; pp. 106–116.

- Brocherie, F.; Dinu, D. Biomechanical estimation of tennis serve using inertial sensors: A case study. Front. Sports Act. Living 2022, 4, 962941.

- Yin, Y.; Zhu, H.; Yang, P.; Yang, Z.; Liu, K.; Fu, H. High-precision and rapid binocular camera calibration method using a single image per camera. Opt. Express 2022, 30, 18781–18799.

- Gomez-Gonzalez, S.; Nemmour, Y.; Schölkopf, B.; Peters, J. Reliable Real-Time Ball Tracking for Robot Table Tennis. Robotics 2019, 8, 90.

- Jocher, G.; Chaurasia, A.; Stoken, A.; Borovec, J.; NanoCode012; Kwon, Y.; TaoXie; Fang, J.; Imyhxy; Michael, K.; et al. Ultralytics/yolov5: v6.1—TensorRT, TensorFlow Edge TPU and OpenVINO Export and Inference; Zenodo: Geneve, Switzerland, 2022.

- Jocher, G.; Chaurasia, A.; Stoken, A.; Borovec, J.; NanoCode012; Kwon, Y.; TaoXie; Michael, K.; Fang, J.; Imyhxy; et al. Ultralytics/Yolov5: v6.2—YOLOv5 Classification Models, Apple M1, Reproducibility, ClearML and Deci.ai Integrations; Zenodo: Geneve, Switzerland, 2022.

- Barone, S.; Neri, P.; Paoli, A.; Razionale, A.V. 3D acquisition and stereo-camera calibration by active devices: A unique structured light encoding framework. Opt. Lasers Eng. 2020, 127, 105989.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Fang, J.; Liu, Q.; Li, J. A Deployment Scheme of YOLOv5 with Inference Optimizations Based on the Triton Inference Server. In Proceedings of the 2021 IEEE 6th International Conference on Cloud Computing and Big Data Analytics (ICCCBDA), Chengdu, China, 24–26 April 2021; pp. 441–445.

- Qiaoshou, L.I.U.; Zhiyuan, Z.; Juncheng, W.; Shengwen, P.I. High Performance YOLOv5: Research on High Performance Target Detection Algorithm for Embedded Platform. J. Electron. Inf. Technol. 2023, 45, 2205–2215.

- Zhang, Z. Microsoft Kinect Sensor and Its Effect. IEEE MultiMedia 2012, 19, 4–10.

- Lugaresi, C.; Tang, J.; Nash, H.; McClanahan, C.; Uboweja, E.; Hays, M.; Zhang, F.; Chang, C.-L.; Yong, M.; Lee, J. Mediapipe: A framework for perceiving and processing reality. In Proceedings of the Third Workshop on Computer Vision for AR/VR at IEEE Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 17 June 2019.

- Wang, Y.; Wang, H.; Xin, Z. Efficient Detection Model of Steel Strip Surface Defects Based on YOLO-V7. IEEE Access 2022, 10, 133936–133944.

- Sardy, S.; Tseng, P.; Bruce, A. Robust wavelet denoising. IEEE Trans. Signal Process. 2001, 49, 1146–1152.

- Dynamic Time Warping. In Information Retrieval for Music and Motion; Müller, M., Ed.; Springer: Berlin/Heidelberg, Germany, 2007; pp. 69–84.

- Wong, D.W.; Lee, W.C.; Lam, W.-K. Biomechanics of Table Tennis: A Systematic Scoping Review of Playing Levels and Maneuvers. Appl. Sci. 2020, 10, 5203.

- Mateus, N.; Abade, E.; Coutinho, D.; Gómez, M.-Á.; Peñas, C.L.; Sampaio, J. Empowering the Sports Scientist with Artificial Intelligence in Training, Performance, and Health Management. Sensors 2025, 25, 139.

- Wei, S.; Huang, P.; Li, R.; Liu, Z.; Zou, Y. Exploring the Application of Artificial Intelligence in Sports Training: A Case Study Approach. Complexity 2021, 2021, 4658937.

- Naughton, M.; Salmon, P.M.; Compton, H.R.; McLean, S. Challenges and opportunities of artificial intelligence implementation within sports science and sports medicine teams. Front. Sports Act. Living 2024, 6, 1332427.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).