A Review of Multimodal Interaction in Remote Education: Technologies, Applications, and Challenges

Abstract

1. Introduction

- Real-time multimodal data fusion and latency mitigation in authentic classroom scenarios require more robust solutions;

- There is a lack of systematic research on the cross-cultural and multilingual adaptability of multimodal systems;

- Most empirical validations are conducted in controlled laboratory environments, with limited large-scale data from real-world educational settings.

2. Development and Application of Multimodal Interaction Technology in Remote Education

2.1. Development of Multimodal Interaction Technology

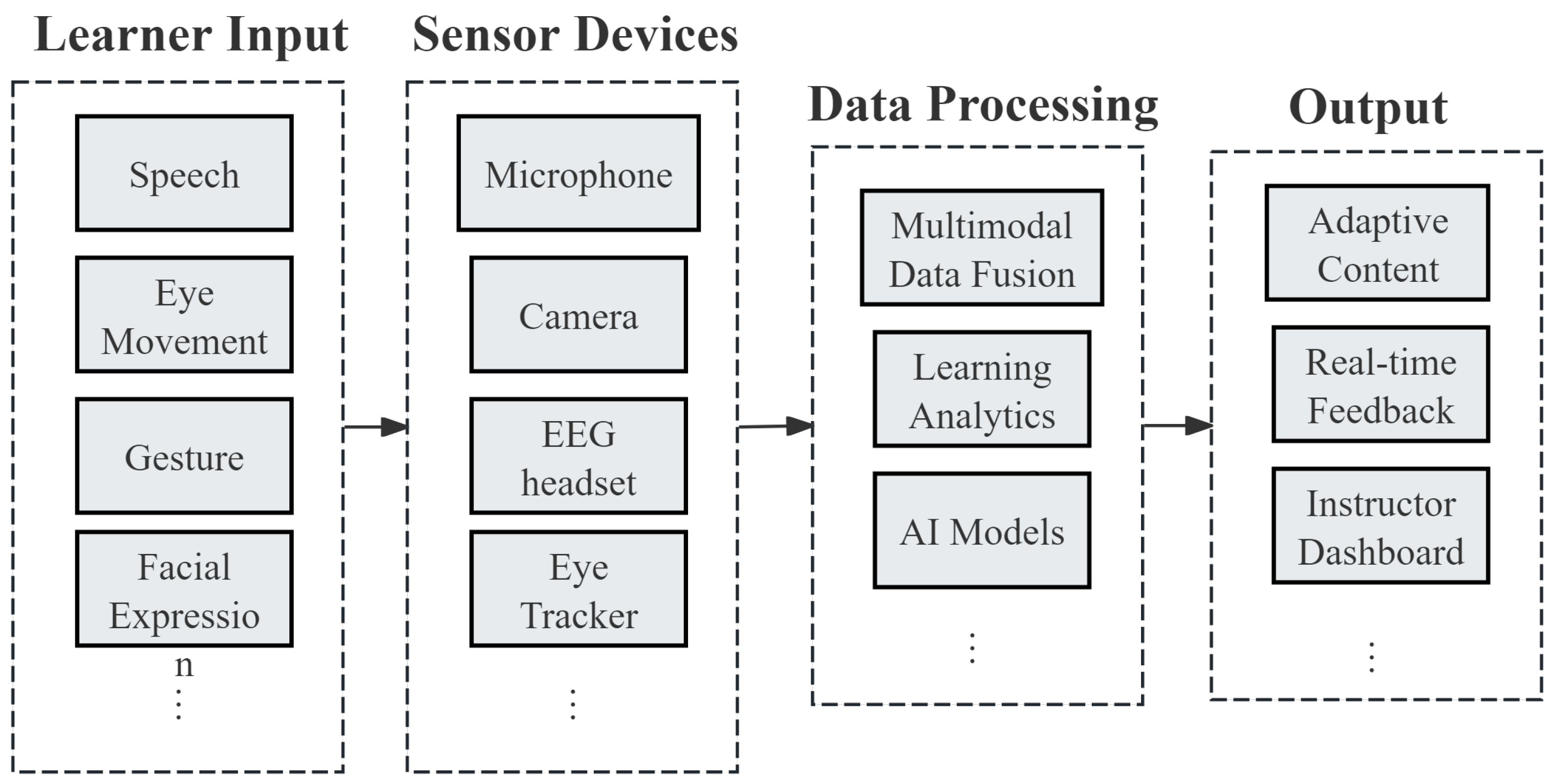

2.2. Key Technologies in Remote Interaction

2.3. Applications of Multimodal Interaction Technology in Enhancing Educational Models

- Interactive Teaching Tools: Multimodal technology has been integrated into intelligent learning environments such as the MMISE system, which uses multimodal input and output channels, including speech, gestures, and facial expressions, to make instruction more effective. Such systems proved especially valuable for remote education during the COVID-19 pandemic [11,22].

- Improving Student Engagement: Eye-tracking data on how students interact with multimodal study materials can reveal how they comprehend the content and allocate their attention [23]. In addition, applying digital media entertainment technologies to remote music education has helped students improve their learning motivation and engagement [24,25].

- Remote Learning State Monitoring System: Multimodal data fusion techniques combine speech, video, facial expression, and gesture data in deep learning models to study users' attention patterns [26,27]. Furthermore, learning state monitoring systems based on EEG and eye tracking make it possible to decode users' cognitive attentional states in real time during remote learning [28,29].

- Experimental Teaching Platforms: Platforms such as the “Remote Experimental Teaching Platform for Digital Signal Processing” enhance instructional efficacy by enabling remote program debugging and data-sharing functionalities [30]. Moreover, VR technology has been employed to develop interactive remote teaching systems, thereby improving teacher–student engagement and enhancing learning outcomes in remote education [31].

- Remote User Attention Assessment Methods: Sensor-based learning analytics approaches, including the use of smartphones' built-in sensors to collect data on student behavior and learning, have been combined with embedded hardware–software systems and back-end data-processing frameworks to enable real-time, dynamic assessment in remote education [31,32,33].

- Data Fusion and Visualization: Data fusion methodologies employ tools such as “physiological heat maps” to integrate eye-tracking and physiological data, dynamically visualizing learners’ emotional and cognitive states [34]. Additionally, machine learning algorithms have been utilized to analyze students’ visual attention trajectories, enabling the prediction of learning outcomes and the refinement of instructional design strategies [35].
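The "physiological heat map" idea in [34] can be illustrated with a small sketch: each gaze fixation is weighted by a physiological value measured at the same moment, and the weights are spread over a coarse screen grid. This is an illustrative approximation under stated assumptions, not the procedure of [34]: fixations are assumed to be already detected, the physiological signal is assumed normalized to [0, 1], and the Gaussian kernel, grid size, and the names `Fixation` and `physiological_heatmap` are hypothetical choices.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Fixation:
    """One gaze fixation with a concurrently measured physiological value."""
    x: float            # screen x-coordinate in pixels
    y: float            # screen y-coordinate in pixels
    duration_ms: float  # fixation duration in milliseconds
    arousal: float      # physiological signal at fixation time, assumed normalized to [0, 1]


def physiological_heatmap(fixations: List[Fixation], width: int, height: int,
                          cell: int = 50, sigma: float = 60.0) -> List[List[float]]:
    """Accumulate arousal-weighted fixations on a coarse screen grid.

    Each fixation contributes duration * arousal, spread with a Gaussian
    kernel of width sigma (pixels) around the fixation point. The grid is
    normalized so that its largest cell equals 1.0.
    """
    cols = math.ceil(width / cell)
    rows = math.ceil(height / cell)
    grid = [[0.0] * cols for _ in range(rows)]
    for f in fixations:
        weight = f.duration_ms * f.arousal
        for r in range(rows):
            cy = (r + 0.5) * cell  # vertical centre of the grid cell
            for c in range(cols):
                cx = (c + 0.5) * cell  # horizontal centre of the grid cell
                dist2 = (cx - f.x) ** 2 + (cy - f.y) ** 2
                grid[r][c] += weight * math.exp(-dist2 / (2 * sigma ** 2))
    peak = max((v for row in grid for v in row), default=0.0)
    if peak > 0:
        grid = [[v / peak for v in row] for row in grid]
    return grid


# Usage: a long, high-arousal fixation top-left and a low-arousal one bottom-right.
heat = physiological_heatmap(
    [Fixation(25, 25, 300, 0.9), Fixation(175, 75, 300, 0.1)],
    width=200, height=100,
)
```

Cells with high values mark screen regions that were both looked at for a long time and accompanied by high arousal; a plain gaze heat map is the special case in which every arousal value is 1.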

3. Commonly Utilized Methods

3.1. Learning Indicators and Available Data

3.2. Data Collection Methods

- Digital Interaction Data: Traditional learning management systems (LMSs) and online learning platforms mainly collect learner behavioral data through log records (clickstreams), assignment submissions, and quiz performance metrics [9].

- Physical Learning Analytics: Physical learning analytics leverages sensor technologies and the Internet of Things (IoT) to embed computational capabilities into physical environments, thereby enabling real-time interaction and data collection [43].

- Sensors and Wearable Devices: Using hardware such as eye trackers, posture recognition cameras, and heart rate monitors, researchers can capture learners' spatial positioning, postural dynamics, and physical interactions [39]. This approach supports the seamless integration of learning activities across multiple environments [44] and extends learning analytics beyond digital interactions to real-world learning contexts.

- Physiological Data: Research on multimodal physiological signals primarily aims to improve the understanding of learners' emotional and cognitive states by integrating multiple physiological indicators, including electroencephalography (EEG), electrodermal activity (EDA), and electrocardiography (ECG) (Table 2).
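Integrating several physiological indicators usually requires first putting them on a common scale, because EEG band power, skin conductance, and heart rate have very different units and ranges. The sketch below shows one simple form of feature-level fusion, assuming each signal has already been reduced to one feature per time window; the per-session z-score, the feature names, and the function names are illustrative assumptions rather than a method taken from the cited studies.

```python
from statistics import mean, pstdev
from typing import Dict, List


def zscore(values: List[float]) -> List[float]:
    """Standardize one feature over a session (zero mean, unit variance)."""
    mu = mean(values)
    sd = pstdev(values)
    if sd == 0:
        # A constant feature carries no information within this session.
        return [0.0 for _ in values]
    return [(v - mu) / sd for v in values]


def fuse_features(per_modality: Dict[str, List[float]]) -> List[List[float]]:
    """Feature-level fusion: one standardized value per modality per window.

    per_modality maps a hypothetical feature name (e.g. "eeg_alpha",
    "eda_scl", "ecg_hr") to a list with one value per time window.
    Returns one fused vector per window, features in sorted-name order.
    """
    if not per_modality:
        raise ValueError("at least one modality is required")
    n_windows = len(next(iter(per_modality.values())))
    if n_windows == 0 or any(len(v) != n_windows for v in per_modality.values()):
        raise ValueError("all modalities must cover the same, non-empty set of windows")
    names = sorted(per_modality)
    normalized = {name: zscore(per_modality[name]) for name in names}
    return [[normalized[name][i] for name in names] for i in range(n_windows)]


# Usage: skin-conductance level rises over three windows; heart rate stays flat.
fused = fuse_features({
    "eda_scl": [1.0, 2.0, 3.0],
    "ecg_hr": [60.0, 60.0, 60.0],
})
```

Standardizing within a session removes baseline differences between learners (for example, resting heart rate) but also discards absolute levels; whether that trade-off is acceptable depends on the analysis.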

3.3. Data Processing

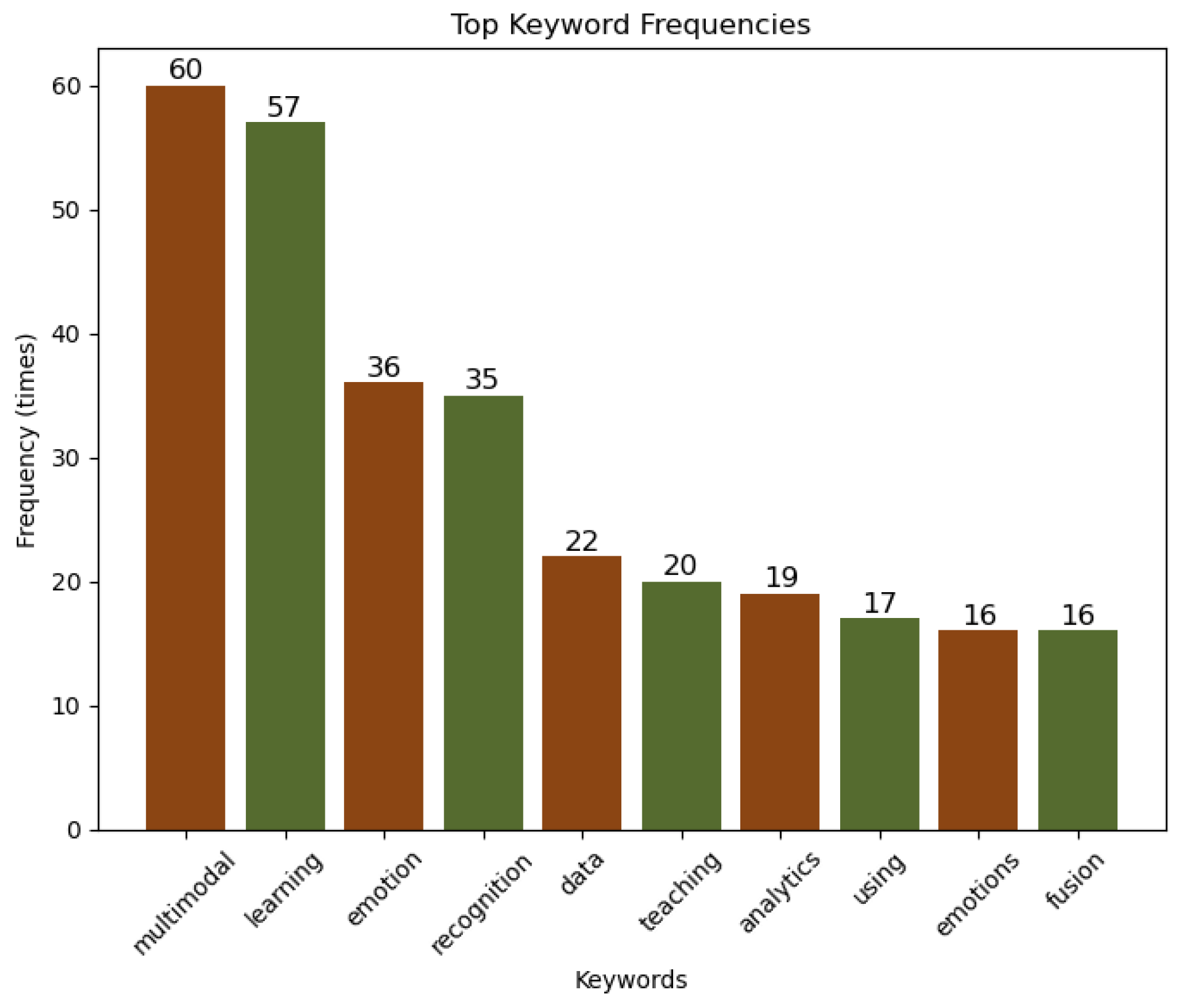

4. Keyword and Correlation Analysis

4.1. Word Frequency Analysis

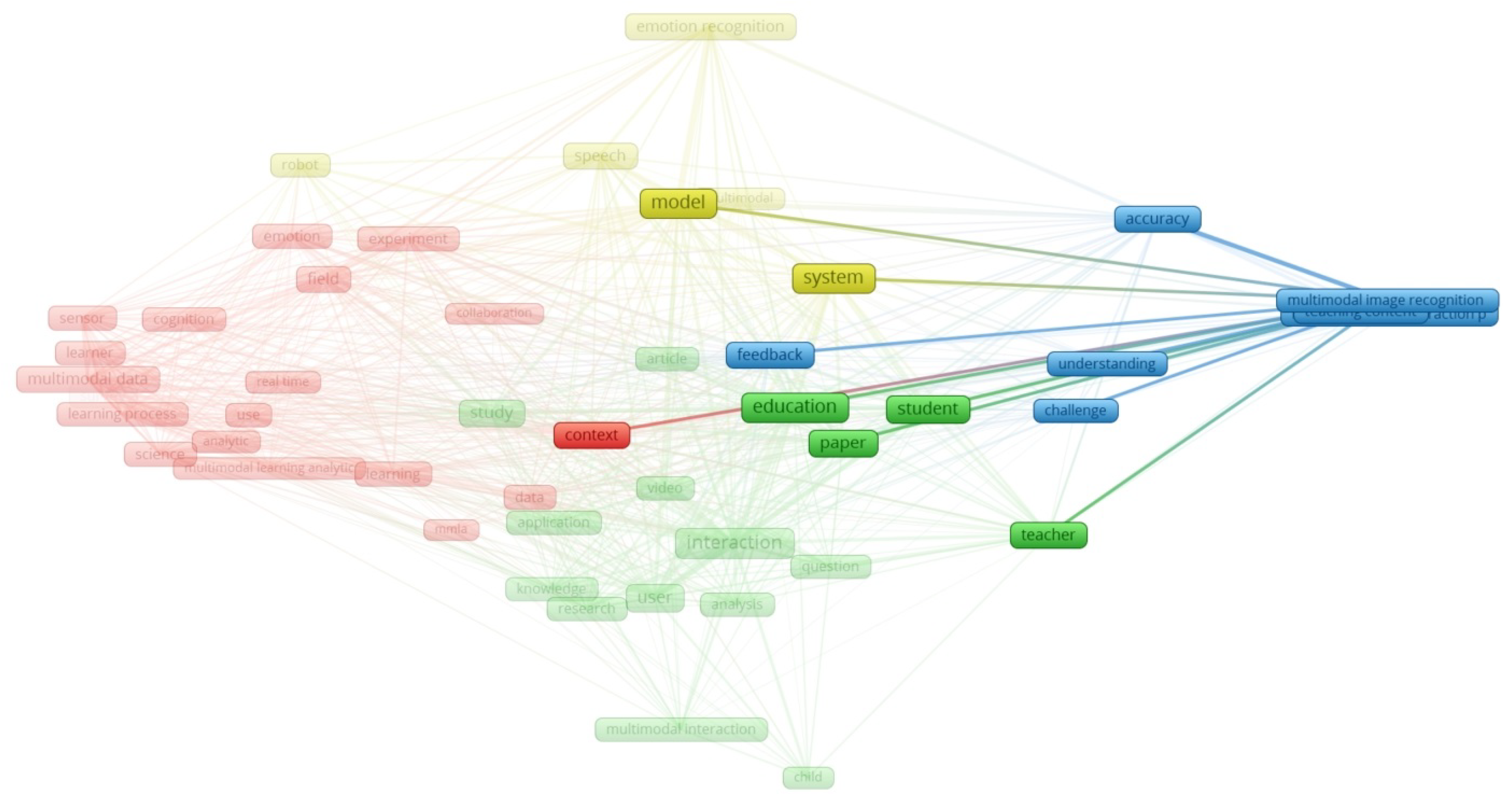

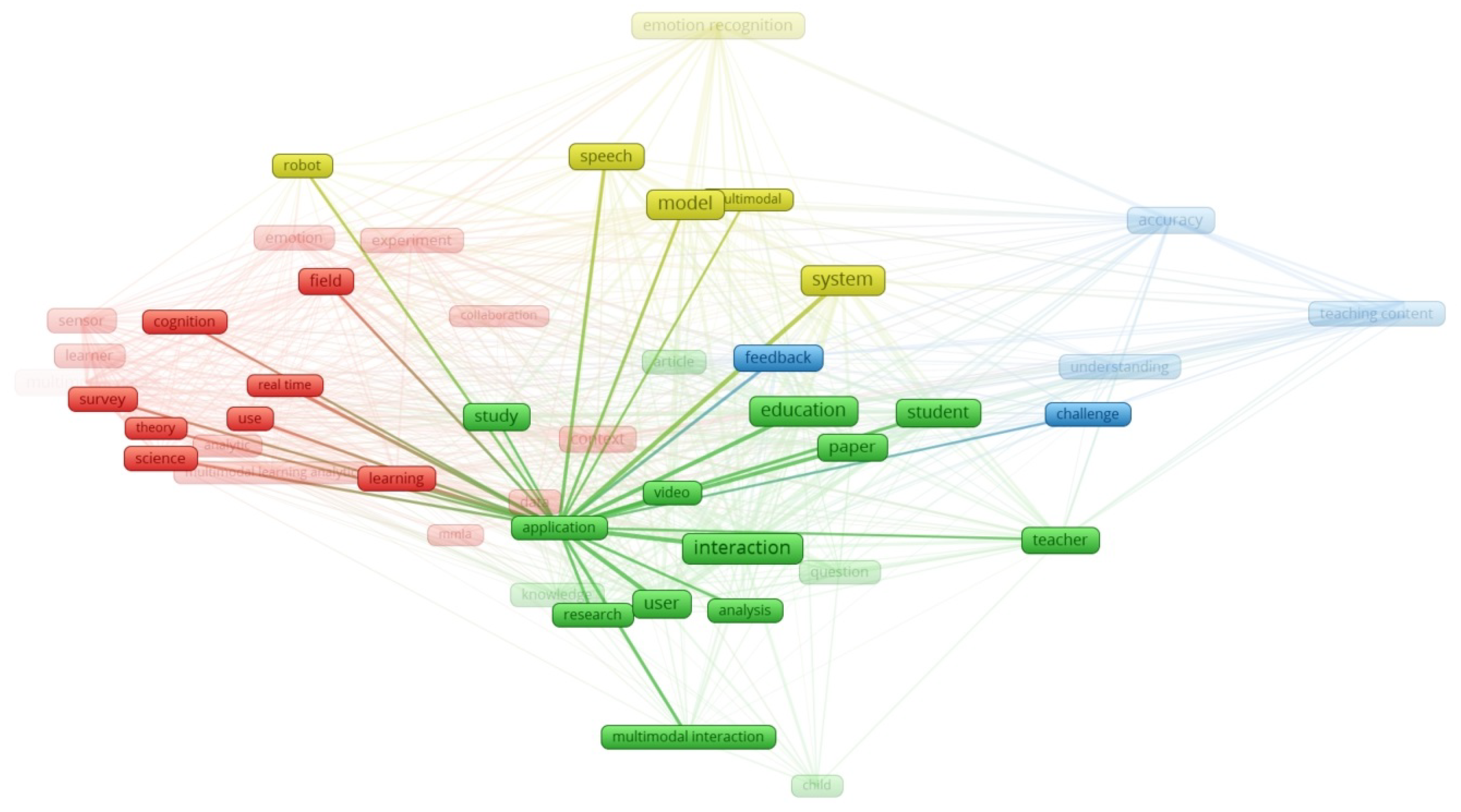

4.2. Correlation Analysis

4.2.1. Multimodal Image Recognition

4.2.2. Application

4.2.3. Feedback

4.3. Multimodal Interaction Across Educational Levels

4.3.1. K-12 Education

4.3.2. Higher Education

4.3.3. Vocational Training

5. Discussion

5.1. Technical Challenges

5.1.1. Data Processing

- Synchronization Issues in Multimodal Data: Multimodal data fusion requires synchronizing data acquired from different sensors, such as speech, gestures, and facial expressions. However, differences in sampling rates and timestamps across these sources make synchronization difficult. For instance, speech signals are typically sampled at a much higher rate than video frames (thousands of audio samples versus a few dozen frames per second), so the streams must be precisely aligned in time during fusion [62,63]. Hardware-induced latency and network transmission delays further reduce synchronization accuracy, complicating real-time data integration [64].

- High Computational Resource Demands and Limited Compatibility with Low-End Devices: Processing multimodal data relies on complex algorithms and deep neural networks that demand substantial computational power. Real-time processing requires strong CPU and GPU performance, which many devices used in remote education, such as ordinary personal computers and mobile devices, lack. This limits the use of multimodal technology in low-resource settings; developing lightweight, efficient algorithms can improve accessibility [65,66,67].

- Data Storage and Transmission Overhead: In remote education, multimodal interaction technology produces large volumes of high-dimensional data, and storing and transmitting these datasets is a major challenge for educational platforms and cloud computing systems. Because remote education depends on cloud storage and real-time streaming, high bandwidth demands can undermine system stability, especially in areas with poor network infrastructure [68,69].
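The synchronization problem described above is often handled by resampling every stream onto one reference clock, such as the video frame timestamps, after correcting for any known, constant device latency. A minimal sketch, assuming all timestamps already come from a shared clock and are strictly increasing; linear interpolation, edge holding, and the name `align_stream` are illustrative choices, and variable network delay (jitter) would need more than a fixed offset.

```python
from bisect import bisect_left
from typing import List


def align_stream(ref_times: List[float], src_times: List[float],
                 src_values: List[float], latency_s: float = 0.0) -> List[float]:
    """Resample a source stream onto reference timestamps.

    Timestamps are seconds on a shared clock and strictly increasing.
    latency_s is a known, constant delay of the source device; it is
    subtracted from the source timestamps before interpolation. Values are
    linearly interpolated; outside the source range the edge value is held.
    """
    if len(src_times) != len(src_values) or len(src_times) < 2:
        raise ValueError("need at least two source samples with matching values")
    shifted = [t - latency_s for t in src_times]
    aligned = []
    for t in ref_times:
        if t <= shifted[0]:
            aligned.append(src_values[0])
        elif t >= shifted[-1]:
            aligned.append(src_values[-1])
        else:
            i = bisect_left(shifted, t)  # guarantees shifted[i - 1] < t <= shifted[i]
            t0, t1 = shifted[i - 1], shifted[i]
            v0, v1 = src_values[i - 1], src_values[i]
            aligned.append(v0 + (v1 - v0) * (t - t0) / (t1 - t0))
    return aligned


# Usage: an audio feature at 100 Hz resampled onto 25 fps video frame times,
# assuming (hypothetically) that the microphone path lags the camera by 20 ms.
audio_t = [i / 100 for i in range(101)]   # 0.00 .. 1.00 s
audio_energy = [t * 10 for t in audio_t]  # toy feature that rises linearly
video_t = [i / 25 for i in range(25)]     # 0.00 .. 0.96 s
energy_per_frame = align_stream(video_t, audio_t, audio_energy, latency_s=0.02)
```

Linear interpolation suits slowly varying features such as audio energy; for categorical streams (for example, recognized gesture labels) taking the nearest preceding sample would be the more natural choice.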

5.1.2. User Experience

- Teachers’ Adaptation to Multimodal Technology: Multimodal technology has changed traditional teaching methods, so teachers must learn new tools and platforms. Some instructors have not been trained in these tools and struggle to use them to their full potential, which limits the benefits they can draw from multimodal systems [70]. Hardware failures and software glitches also add to instructors' workloads and reduce teaching effectiveness [71,72].

- Students’ Acceptance of Personalized Learning Pathways: Multimodal technology facilitates personalized learning pathways by adapting instructional content to students' individual learning styles and progress. However, students' acceptance of such personalized approaches varies: some learners prefer self-directed learning and customized content, whereas others are more inclined toward traditional teacher-led instruction [73]. Designing personalized learning pathways therefore requires balancing self-regulated learning with teacher guidance to ensure a well-structured learning experience [64].

- Complexity of Human–Computer Interaction: Beyond speech, multimodal systems support input modes such as touch interfaces, gestures, and facial recognition, and users must switch between these modes to interact with the system. This creates usability problems, commonly including complex interface design, delays after input, and low recognition accuracy [74]. High error rates further degrade the user experience: inaccurate speech recognition can trigger unintended actions, and poor gesture recognition can disrupt classroom teaching. A major challenge for multimodal instructional systems is therefore to offer rich multimodal functionality while keeping the system easy to use [27].
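One common way to reduce unintended actions caused by recognition errors is decision-level fusion with a rejection option: each recognizer scores the candidate commands, the scores are combined, and the system does nothing when the combined confidence is too low. A minimal sketch; the weighted-sum rule, the default weight and threshold, and the command names are hypothetical and would need to be tuned for a given system.

```python
from typing import Dict, Optional


def fuse_commands(speech: Dict[str, float], gesture: Dict[str, float],
                  w_speech: float = 0.6, threshold: float = 0.5) -> Optional[str]:
    """Decision-level fusion of two recognizers with a rejection option.

    speech and gesture map command names to the probability each recognizer
    assigns. Scores are combined as a weighted sum; if the best combined
    score is below threshold, no command is executed (None is returned).
    """
    if not 0.0 <= w_speech <= 1.0:
        raise ValueError("w_speech must be in [0, 1]")
    commands = sorted(set(speech) | set(gesture))  # sorted so ties resolve deterministically
    if not commands:
        return None
    scores = {c: w_speech * speech.get(c, 0.0) + (1 - w_speech) * gesture.get(c, 0.0)
              for c in commands}
    best = max(commands, key=lambda c: scores[c])
    return best if scores[best] >= threshold else None


# Both recognizers agree: the command is executed.
fuse_commands({"next_slide": 0.9, "pause": 0.1}, {"next_slide": 0.7, "pause": 0.3})
# → "next_slide"

# Recognizers disagree and neither is confident: nothing happens.
fuse_commands({"next_slide": 0.45, "pause": 0.55}, {"next_slide": 0.55, "pause": 0.45},
              threshold=0.6)
# → None
```

Rejecting low-confidence inputs trades a few missed commands for fewer wrong ones, which is usually the better failure mode during live teaching.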

5.1.3. Privacy and Ethical Issues

- Privacy Protection: In multimodal remote-teaching environments, students' physiological data are used extensively to determine learning status and adjust teaching practices, yet such data are highly sensitive. Most current remote learning systems have incomplete security measures for physiological data, leaving it vulnerable to unauthorized use, illegal access, and breaches. How data protection laws such as the General Data Protection Regulation (GDPR) apply in education is also unclear, which creates legal risks in data storage, processing, and use. A major challenge is to protect data privacy while making full use of multimodal data to improve education [75].

- Algorithmic Fairness: Multimodal learning systems are prone to algorithmic biases that can undermine fairness and inclusivity. AI models are trained on historical datasets that may contain cultural, gender, or linguistic biases, which can lead to unfair feedback or unequal learning experiences for students from different backgrounds. For example, some speech recognition systems struggle with non-native speakers' input, which can hinder their learning. Adaptive learning systems may also create inequalities: personalized recommendation algorithms might assign different difficulty levels based on existing gaps, thereby reinforcing disparities [76]. Future research should focus on improving the fairness of multimodal AI algorithms so that all students receive equal educational support.

- Security Concerns: As remote education expands, the cloud-based systems used to store and process its data become increasingly exposed to cyber attacks, data theft, and identity theft. Unauthorized access to student behavioral data, learning records, physiological information, and similar data poses serious security and privacy threats. Existing encryption techniques and access control mechanisms should be further optimized to keep pace with the growing number of attacks on new remote education systems. Technologies such as federated learning, which allow data to be used in a decentralized manner, help reduce the risk of data leakage, while blockchain-based identity authentication built on distributed ledger technology (DLT) provides stronger data security and integrity [77]. A robust data security framework is therefore a prerequisite for designing sustainable and resilient multimodal curricula.
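Federated learning, mentioned above, keeps raw student data on the device or institution that collected it and shares only model parameters. The sketch below shows federated averaging (FedAvg) for a toy linear model in pure Python. It is a teaching illustration under stated assumptions, not the approach of [77]: the model, learning rate, and number of rounds are arbitrary (the learning rate must be small enough for the scale of the features), and a real deployment would also need secure aggregation, encrypted transport, and access control, none of which is shown.

```python
from typing import List, Tuple

Sample = Tuple[List[float], float]  # (feature vector, target)


def local_update(weights: List[float], data: List[Sample],
                 lr: float = 0.1, epochs: int = 5) -> List[float]:
    """Full-batch gradient descent on mean squared error, run on one client."""
    w = list(weights)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            for j, xj in enumerate(x):
                grad[j] += 2 * err * xj / len(data)
        w = [wi - lr * gj for wi, gj in zip(w, grad)]
    return w


def federated_average(weights: List[float], clients: List[List[Sample]],
                      rounds: int = 20) -> List[float]:
    """FedAvg: clients train locally, then the server averages the returned
    weights, weighted by each client's sample count. Raw samples never leave
    the client; only weight vectors are shared."""
    total = sum(len(d) for d in clients)
    if total == 0:
        raise ValueError("clients hold no data")
    w = list(weights)
    for _ in range(rounds):
        updates = [(local_update(w, d), len(d)) for d in clients]
        w = [sum(u[j] * n for u, n in updates) / total for j in range(len(w))]
    return w


# Usage: two hypothetical institutions hold different slices of data from y = 2x.
# Features are [x, 1], so the second weight acts as a bias term.
school_a = [([0.0, 1.0], 0.0), ([0.5, 1.0], 1.0), ([1.0, 1.0], 2.0)]
school_b = [([1.5, 1.0], 3.0), ([2.0, 1.0], 4.0)]
model = federated_average([0.0, 0.0], [school_a, school_b], rounds=100)
```

Only weight vectors cross institutional boundaries; shared model updates can still leak some information about the training data, however, which is why federated learning is usually combined with the encryption and access-control measures discussed above.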

5.2. Limitations of Current Research

- Diversity and Representativeness of Data: Existing multimodal learning datasets are mostly acquired in controlled experimental environments, and sufficiently large-scale data from authentic classroom settings are lacking. This limits the external validity and generalizability of research findings. Immadisetty et al. [64] emphasized the importance of collecting diverse datasets in real-world educational settings to increase the relevance of multimodal learning studies.

- Gap Between Experimental Settings and Practical Use: Although randomized experiments are used to evaluate a wide variety of interventions for improving student learning, these interventions are often implemented in highly controlled laboratory environments that do not reflect the complexity of real educational settings. As a result, experimental findings may not transfer well to practice. Liu et al. [78] emphasized the need to validate multimodal interaction systems in real educational scenarios to ensure their real-world effectiveness and scalability.

- Assessment of Long-Term Learning Outcomes: Most existing research consists of short-term experimental studies, and few studies have rigorously investigated the sustained effects of multimodal interaction technology over time. Future studies should include long-term follow-up investigations of its impact on learners' ongoing cognitive and behavioral development.

5.3. Future Work

- Tailoring Adaptive Learning Journeys: Existing multimodal education systems use emotion recognition and behavioral analysis to provide real-time adaptive feedback, but more studies are needed to refine personalized learning paths through better use of multimodal data. For instance, Liu et al. [78] developed a personalized multimodal feedback generation network that integrates multiple modal inputs to produce customized feedback on student assignments, thereby improving learning efficiency.

- Flexibility for Deployment in Low-Resource Contexts: Multimodal interaction technology often requires high-performance computing resources, which makes it difficult to deploy in resource-constrained regions. Immadisetty et al. [64] highlighted the need to develop multimodal education systems tailored to low-bandwidth, low-computation environments, thereby fostering educational equity and technological access.

- International Adaptability: Existing multimodal interaction systems are typically designed within specific cultural and linguistic contexts, which may limit their cross-cultural relevance and scalability, for example in multicultural educational environments. Future research should focus on multimodal systems that adapt to diverse cultural contexts, ensuring broader usability and acceptance across educational institutions.

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

- Obrenovic, Z.; Starcevic, D. Modeling multimodal human-computer interaction. Computer 2004, 37, 65–72. [Google Scholar] [CrossRef]

- Saffaryazdi, N.; Goonesekera, Y.; Saffaryazdi, N.; Hailemariam, N.D.; Temesgen, E.G.; Nanayakkara, S.; Broadbent, E.; Billinghurst, M. Emotion Recognition in Conversations Using Brain and Physiological Signals. In Proceedings of the IUI’22: 27th International Conference on Intelligent User Interfaces, Helsinki, Finland, 22–25 March 2022; pp. 229–242. [Google Scholar] [CrossRef]

- Chollet, M.; Wörtwein, T.; Morency, L.P.; Shapiro, A.; Scherer, S. Exploring feedback strategies to improve public speaking: An interactive virtual audience framework. In Proceedings of the UbiComp’15: 2015 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Osaka, Japan, 7–11 September 2015; pp. 1143–1154. [Google Scholar] [CrossRef]

- Geiger, A.; Bewersdorf, I.; Brandenburg, E.; Stark, R. Visual Feedback for Grasping in Virtual Reality Environments for an Interface to Instruct Digital Human Models. In Proceedings of the Advances in Usability and User Experience, Barcelona, Spain, 19–20 September 2018; Ahram, T., Falcão, C., Eds.; Springer: Cham, Switzerland, 2018; pp. 228–239. [Google Scholar]

- Kotranza, A.; Lok, B.; Pugh, C.M.; Lind, D.S. Virtual Humans That Touch Back: Enhancing Nonverbal Communication with Virtual Humans through Bidirectional Touch. In Proceedings of the 2009 IEEE Virtual Reality Conference, Lafayette, LA, USA, 14–18 March 2009; pp. 175–178. [Google Scholar] [CrossRef]

- Wigdor, D.; Wixon, D. Brave NUI World: Designing Natural User Interfaces for Touch and Gesture; Elsevier: Amsterdam, The Netherlands, 2011. [Google Scholar]

- Schwendimann, B.A.; Rodríguez-Triana, M.J.; Vozniuk, A.; Prieto, L.P.; Boroujeni, M.S.; Holzer, A.; Gillet, D.; Dillenbourg, P. Perceiving Learning at a Glance: A Systematic Literature Review of Learning Dashboard Research. IEEE Trans. Learn. Technol. 2017, 10, 30–41. [Google Scholar] [CrossRef]

- Di Mitri, D.; Schneider, J.; Specht, M.; Drachsler, H. From signals to knowledge: A conceptual model for multimodal learning analytics. J. Comput. Assist. Learn. 2018, 34, 338–349. [Google Scholar] [CrossRef]

- Pardo, A.; Kloos, C.D. Stepping out of the box: Towards analytics outside the learning management system. In Proceedings of the LAK’11: 1st International Conference on Learning Analytics and Knowledge, New York, NY, USA, 27 February–1 March 2011; pp. 163–167. [Google Scholar] [CrossRef]

- Di Mitri, D. Digital Learning Projection. In Artificial Intelligence in Education; André, E., Baker, R., Hu, X., Rodrigo, M.M.T., du Boulay, B., Eds.; Springer: Cham, Switzerland, 2017; pp. 609–612. [Google Scholar]

- Jia, J.; Yunfan, H.; Huixiao, L. A Multimodal Human-Computer Interaction System and Its Application in Smart Learning Environments; Springer: Cham, Switzerland, 2020; pp. 3–14. [Google Scholar] [CrossRef]

- Lazaro, M.J.; Kim, S.; Lee, J.; Chun, J.; Kim, G.; Yang, E.; Bilyalova, A.; Yun, M.H. A Review of Multimodal Interaction in Intelligent Systems. In Human-Computer Interaction. Theory, Methods and Tools; Kurosu, M., Ed.; Springer: Cham, Switzerland, 2021; pp. 206–219. [Google Scholar]

- Jiang, Y.; Li, W.; Hossain, M.S.; Chen, M.; Alelaiwi, A.; Al-Hammadi, M. A snapshot research and implementation of multimodal information fusion for data-driven emotion recognition. Inf. Fusion 2020, 53, 209–221. [Google Scholar] [CrossRef]

- Nguyen, R.; Gouin-Vallerand, C.; Amiri, M. Hand interaction designs in mixed and augmented reality head mounted display: A scoping review and classification. Front. Virtual Real. 2023, 4, 1171230. [Google Scholar] [CrossRef]

- Tu, Y.; Luo, J. Accessibility Research on Multimodal Interaction for the Elderly; ACM: New York, NY, USA, 2024; pp. 384–398. [Google Scholar] [CrossRef]

- Ramaswamy, M.P.A.; Palaniswamy, S. Multimodal emotion recognition: A comprehensive review, trends, and challenges. WIREs Data Min. Knowl. Discov. 2024, 14, e1563. [Google Scholar] [CrossRef]

- Koromilas, P.; Giannakopoulos, T. Deep Multimodal Emotion Recognition on Human Speech: A Review. Appl. Sci. 2021, 11, 7962. [Google Scholar] [CrossRef]

- Wang, T.; Zheng, P.; Li, S.; Wang, L. Multimodal Human–Robot Interaction for Human-Centric Smart Manufacturing: A Survey. Adv. Intell. Syst. 2024, 6, 2300359. [Google Scholar] [CrossRef]

- Pan, B.; Hirota, K.; Jia, Z.; Dai, Y. A review of multimodal emotion recognition from datasets, preprocessing, features, and fusion methods. Neurocomputing 2023, 561, 126866. [Google Scholar] [CrossRef]

- Zhu, X.; Guo, C.; Feng, H.; Huang, Y.; Feng, Y.; Wang, X.; Wang, R. A Review of Key Technologies for Emotion Analysis Using Multimodal Information. Cogn. Comput. 2024, 16, 1504–1530. [Google Scholar] [CrossRef]

- Calvo, R.; D’Mello, S.; Gratch, J.; Kappas, A. The Oxford Handbook of Affective Computing; Oxford Academic: Oxford, UK, 2014. [Google Scholar]

- Cornide-Reyes, H.; Riquelme, F.; Monsalves, D.; Noël, R.; Cechinel, C.; Villarroel, R.; Ponce, F.; Muñoz, R. A Multimodal Real-Time Feedback Platform Based on Spoken Interactions for Remote Active Learning Support. Sensors 2020, 20, 6337. [Google Scholar] [CrossRef]

- Gatcho, A.; Manuel, J.P.; Sarasua, R. Eye tracking research on readers’ interactions with multimodal texts: A mini-review. Front. Commun. 2024, 9, 1482105. [Google Scholar] [CrossRef]

- Wang, J. Application of digital media entertainment technology based on soft computing in immersive experience of remote piano teaching. Entertain. Comput. 2025, 52, 100822. [Google Scholar] [CrossRef]

- Choo, Y.B.; Saidalvi, A.; Abdullah, T. Use of Multimodality in Remote Drama Performance among Pre-Service Teachers during the Covid-19 Pandemic. Int. J. Acad. Res. Progress. Educ. Dev. 2022, 11, 743–760. [Google Scholar] [CrossRef]

- Ranjan, R.; Patel, V.M.; Chellappa, R. HyperFace: A Deep Multi-Task Learning Framework for Face Detection, Landmark Localization, Pose Estimation, and Gender Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 121–135. [Google Scholar] [CrossRef]

- Cruciata, G.; Lo Presti, L.; Cascia, M.L. On the Use of Deep Reinforcement Learning for Visual Tracking: A Survey. IEEE Access 2021, 9, 120880–120900. [Google Scholar] [CrossRef]

- Miran, S.; Akram, S.; Sheikhattar, A.; Simon, J.Z.; Zhang, T.; Babadi, B. Real-time tracking of selective auditory attention from M/EEG: A bayesian filtering approach. Front. Neurosci. 2018, 12, 262. [Google Scholar] [CrossRef]

- Tanaka, N.; Watanabe, K.; Ishimaru, S.; Dengel, A.; Ata, S.; Fujimoto, M. Concentration Estimation in Online Video Lecture Using Multimodal Sensors. In Proceedings of the Companion of the 2024 on ACM International Joint Conference on Pervasive and Ubiquitous Computing, Melbourne, VIC, Australia, 5–9 October 2024. [Google Scholar] [CrossRef]

- Shang, Q.; Zheng, G.; Li, Y. Mobile Learning Based on Remote Experimental Teaching Platform. In Proceedings of the ICEMT’19: 3rd International Conference on Education and Multimedia Technology, Nagoya, Japan, 22–25 July 2019; pp. 307–310. [Google Scholar] [CrossRef]

- Ge, T.; Darcy, O. Study on the Design of Interactive Distance Multimedia Teaching System based on VR Technology. Int. J. Contin. Eng. Educ.-Life-Long Learn. 2021, 31, 1. [Google Scholar] [CrossRef]

- Engelbrecht, J.M.; Michler, A.; Schwarzbach, P.; Michler, O. Bring Your Own Device-Enabling Student-Centric Learning in Engineering Education by Incorporating Smartphone-Based Data Acquisition. In Proceedings of the International Conference on Interactive Collaborative Learning, Madrid, Spain, 26–29 September 2023; Springer: Berlin/Heidelberg, Germany, 2023; pp. 373–383. [Google Scholar]

- Javeed, M.; Mudawi, N.A.; Alazeb, A.; Almakdi, S.; Alotaibi, S.S.; Chelloug, S.; Jalal, A. Intelligent ADL Recognition via IoT-Based Multimodal Deep Learning Framework. Sensors 2023, 23, 7927. [Google Scholar] [CrossRef]

- Courtemanche, F.; Léger, P.M.; Dufresne, A.; Fredette, M.; Labonté-LeMoyne, É.; Sénécal, S. Physiological heatmaps: A tool for visualizing users’ emotional reactions. Multimed. Tools Appl. 2018, 77, 11547–11574. [Google Scholar] [CrossRef]

- Chettaoui, N.; Atia, A.; Bouhlel, M.S. Student performance prediction with eye-gaze data in embodied educational context. Educ. Inf. Technol. 2023, 28, 833–855. [Google Scholar]

- Siemens, G.; Long, P. Penetrating the Fog: Analytics in Learning and Education. EDUCAUSE Rev. 2011, 5, 30–32. [Google Scholar] [CrossRef]

- Gašević, D.; Dawson, S.; Pardo, A. How Do We Start? State Directions of Learning Analytics Adoption; ICDE—International Council For Open And Distance Education: Oslo, Norway, 2016. [Google Scholar]

- Gašević, D.; Dawson, S.; Siemens, G. Let’s not forget: Learning analytics are about learning. TechTrends 2015, 59, 64–71. [Google Scholar]

- Martinez-Maldonado, R.; Echeverria, V.; Santos, O.C.; Santos, A.D.P.D.; Yacef, K. Physical learning analytics: A multimodal perspective. In Proceedings of the LAK’18: 8th International Conference on Learning Analytics and Knowledge, Sydney, NSW, Australia, 7–9 March 2018; pp. 375–379. [Google Scholar] [CrossRef]

- Netekal, M.; Hegade, P.; Shettar, A. Knowledge Structuring and Construction in Problem Based Learning. J. Eng. Educ. Transform. 2023, 36, 186–193. [Google Scholar] [CrossRef]

- Roll, I.; Wylie, R. Evolution and revolution in artificial intelligence in education. Int. J. Artif. Intell. Educ. 2016, 26, 582–599. [Google Scholar]

- Mu, S.; Cui, M.; Huang, X. Multimodal Data Fusion in Learning Analytics: A Systematic Review. Sensors 2020, 20, 6856. [Google Scholar] [CrossRef]

- Ebling, M.R. Pervasive Computing and the Internet of Things. IEEE Pervasive Comput. 2016, 15, 2–4. [Google Scholar] [CrossRef]

- Kurti, A.; Spikol, D.; Milrad, M.; Svensson, M.; Pettersson, O. Exploring How Pervasive Computing Can Support Situated Learning. In Proceedings of the Pervasive Learning 2007, Toronto, ON, Canada, 13 May 2007. [Google Scholar]

- Verma, G.K.; Tiwary, U.S. Multimodal fusion framework: A multiresolution approach for emotion classification and recognition from physiological signals. NeuroImage 2014, 102, 162–172. [Google Scholar] [CrossRef]

- Li, L.; Gui, X.; Huang, G.; Zhang, L.; Wan, F.; Han, X.; Wang, J.; Ni, D.; Liang, Z.; Zhang, Z. Decoded EEG neurofeedback-guided cognitive reappraisal training for emotion regulation. Cogn. Neurodyn. 2024, 18, 2659–2673. [Google Scholar]

- Horvers, A.; Tombeng, N.; Bosse, T.; Lazonder, A.; Molenaar, I. Detecting Emotions through Electrodermal Activity in Learning Contexts: A Systematic Review. Sensors 2021, 21, 7869. [Google Scholar] [CrossRef]

- Lee, M.; Cho, Y.; Lee, Y.; Pae, D.; Lim, M.; Kang, T.K. PPG and EMG Based Emotion Recognition using Convolutional Neural Network. In Proceedings of the 16th International Conference on Informatics in Control, Automation and Robotics, Prague, Czech Republic, 29–31 July 2019; pp. 595–600. [Google Scholar] [CrossRef]

- Yang, G.; Kang, Y.; Charlton, P.H.; Kyriacou, P.; Kim, K.K.; Li, L.; Park, C. Energy-Efficient PPG-Based Respiratory Rate Estimation Using Spiking Neural Networks. Sensors 2024, 24, 3980. [Google Scholar] [CrossRef] [PubMed]

- Heideklang, R.; Shokouhi, P. Fusion of multi-sensory NDT data for reliable detection of surface cracks: Signal-level vs. decision-level. AIP Conf. Proc. 2016, 1706, 180004. [Google Scholar] [CrossRef]

- Uribe, Y.F.; Alvarez-Uribe, K.C.; Peluffo-Ordóñez, D.H.; Becerra, M.A. Physiological Signals Fusion Oriented to Diagnosis—A Review. In Communications in Computer and Information Science; Springer: Cham, Switzerland, 2018; pp. 1–15. [Google Scholar] [CrossRef]

- Sad, G.D.; Terissi, L.D.; Gómez, J. Decision Level Fusion for Audio-Visual Speech Recognition in Noisy Conditions. In Progress in Pattern Recognition, Image Analysis, Computer Vision, and Applications; Springer: Cham, Switzerland, 2016; pp. 360–367. [Google Scholar] [CrossRef]

- Rabbani, M.H.R.; Islam, S.M.R. Multimodal Decision Fusion of EEG and fNIRS Signals. In Proceedings of the 2021 5th International Conference on Electrical Engineering and Information & Communication Technology (ICEEICT), Dhaka, Bangladesh, 18–20 November 2021; pp. 1–6. [Google Scholar] [CrossRef]

- Xu, P.; Jiang, H. Review of the Application of Multimodal Biological Data in Education Analysis. IOP Conf. Ser. Earth Environ. Sci. 2018, 170, 022175. [Google Scholar] [CrossRef]

- Chango, W.; Lara, J.; Cerezo, R.; Romero, C. A review on data fusion in multimodal learning analytics and educational data mining. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2022, 12, e1458. [Google Scholar] [CrossRef]

- Blikstein, P.; Worsley, M. Multimodal Learning Analytics and Education Data Mining: Using computational technologies to measure complex learning tasks. J. Learn. Anal. 2016, 3, 220–238. [Google Scholar] [CrossRef]

- Doumanis, I.; Economou, D.; Sim, G.; Porter, S. The impact of multimodal collaborative virtual environments on learning: A gamified online debate. Comput. Educ. 2019, 130, 121–138. [Google Scholar] [CrossRef]

- Perveen, A. Facilitating Multiple Intelligences Through Multimodal Learning Analytics. Turk. Online J. Distance Educ. 2018, 19, 18–30. [Google Scholar] [CrossRef]

- Chen, Y.; Guo, Q.; Qiao, C.; Wang, J. A systematic review of the application of eye-tracking technology in reading in science studies. Res. Sci. Technol. Educ. 2023, 1–25. [Google Scholar] [CrossRef]

- Puffay, C.; Accou, B.; Bollens, L.; Monesi, M.J.; Vanthornhout, J.; Hamme, H.V.; Francart, T. Relating EEG to continuous speech using deep neural networks: A review. arXiv 2023, arXiv:2302.01736. [Google Scholar]

- Nam, J.; Chung, H.; ah Seong, Y.; Lee, H. A New Terrain in HCI: Emotion Recognition Interface using Biometric Data for an Immersive VR Experience. arXiv 2019, arXiv:1912.01177. [Google Scholar]

- Basystiuk, O.; Rybchak, Z.; Zavushchak, I.; Marikutsa, U. Evaluation of multimodal data synchronization tools. Comput. Des. Syst. Theory Pract. 2024, 6, 104–111. [Google Scholar] [CrossRef]

- Malawski, F.; Kapela, K.; Krupa, M. Synchronization of External Inertial Sensors and Built-in Camera on Mobile Devices. In Proceedings of the 2023 IEEE Symposium Series on Computational Intelligence (SSCI), Mexico City, Mexico, 5–8 December 2023; pp. 772–777. [Google Scholar] [CrossRef]

- Immadisetty, P.; Rajesh, P.; Gupta, A.; MR, D.A.; Subramanya, D.K. Multimodality in online education: A comparative study. Multimed. Tools Appl. 2025, 1–34. [Google Scholar] [CrossRef]

- Lyu, Y.; Zheng, X.; Kim, D.; Wang, L. OmniBind: Teach to Build Unequal-Scale Modality Interaction for Omni-Bind of All. arXiv 2024, arXiv:2405.16108. [Google Scholar]

- Cui, K.; Zhao, M.; He, M.; Liu, D.; Zhao, Q.; Hu, B. MultimodalSleepNet: A Lightweight Neural Network Model for Sleep Staging based on Multimodal Physiological Signals. In Proceedings of the 2024 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Lisbon, Portugal, 3–6 December 2024; pp. 5264–5271. [Google Scholar] [CrossRef]

- Guo, Z.; Ma, H.; Li, A. A lightweight finger multimodal recognition model based on detail optimization and perceptual compensation embedding. Comput. Stand. Interfaces 2024, 92, 103937. [Google Scholar] [CrossRef]

- Ranhel, J.; Vilela, C. Guidelines for creating man-machine multimodal interfaces. arXiv 2020, arXiv:1901.10408. [Google Scholar]

- Agrawal, D.; Kalpana, C.; Lachhani, M.; Salgaonkar, K.A.; Patil, Y. Role of Cloud Computing in Education. REST J. Data Anal. Artif. Intell. 2023, 2, 38–42. [Google Scholar] [CrossRef]

- Qushem, U.B.; Christopoulos, A.; Oyelere, S.; Ogata, H.; Laakso, M. Multimodal Technologies in Precision Education: Providing New Opportunities or Adding More Challenges? Educ. Sci. 2021, 11, 338. [Google Scholar] [CrossRef]

- Wu, Y.; Sun, Y.; Sundar, S.S. What Do You Get from Turning on Your Video? Effects of Videoconferencing Affordances on Remote Class Experience During COVID-19. Proc. ACM Hum.-Comput. Interact. 2022, 6, 3555773.

- Manamela, L.E.; Sumbane, G.O.; Mutshatshi, T.E.; Ngoatle, C.; Rasweswe, M.M. Multimodal teaching and learning challenges: Perspectives of undergraduate learner nurses at a higher education institution in South Africa. Afr. J. Health Prof. Educ. 2024, 16, e1299.

- Khor, E.; Tan, L.P.; Chan, S.H.L. Systematic Review on the Application of Multimodal Learning Analytics to Personalize Students’ Learning. AsTEN J. Teach. Educ. 2024, 1–16.

- Darin, T.G.R.; Andrade, R.; Sánchez, J. Usability evaluation of multimodal interactive virtual environments for learners who are blind: An empirical investigation. Int. J. Hum. Comput. Stud. 2021, 158, 102732.

- Liu, D.K. Predicting Stress in Remote Learning via Advanced Deep Learning Technologies. arXiv 2021, arXiv:2109.11076.

- Peng, X.; Wei, Y.; Deng, A.; Wang, D.; Hu, D. Balanced multimodal learning via on-the-fly gradient modulation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 8238–8247.

- Liu, Y.; Li, K.; Huang, Z.; Li, B.; Wang, G.; Cai, W. EduChain: A blockchain-based education data management system. In Proceedings of the Blockchain Technology and Application: Third CCF China Blockchain Conference, CBCC 2020, Jinan, China, 18–20 December 2020; Revised Selected Papers 3. Springer: Berlin/Heidelberg, Germany, 2021; pp. 66–81.

- Liu, H.; Liu, Z.; Wu, Z.; Tang, J. Personalized Multimodal Feedback Generation in Education. arXiv 2020, arXiv:2011.00192.

- Faridan, M.; Kumari, B.; Suzuki, R. ChameleonControl: Teleoperating Real Human Surrogates through Mixed Reality Gestural Guidance for Remote Hands-on Classrooms. In Proceedings of the CHI’23: 2023 CHI Conference on Human Factors in Computing Systems, ACM, Hamburg, Germany, 23–28 April 2023; pp. 1–13.

- Zhang, Z.; Zhang-Li, D.; Yu, J.; Gong, L.; Zhou, J.; Hao, Z.; Jiang, J.; Cao, J.; Liu, H.; Liu, Z.; et al. Simulating Classroom Education with LLM-Empowered Agents. arXiv 2024, arXiv:2406.19226.

- Hao, Y.; Li, H.; Ding, W.; Wu, Z.; Tang, J.; Luckin, R.; Liu, Z. Multi-Task Learning based Online Dialogic Instruction Detection with Pre-trained Language Models. arXiv 2021, arXiv:2107.07119.

- Li, H.; Ding, W.; Liu, Z. Identifying At-Risk K-12 Students in Multimodal Online Environments: A Machine Learning Approach. arXiv 2020, arXiv:2003.09670.

| Dimension | Description | Reference |
|---|---|---|
| Behavior | Learners’ interaction patterns, including digital behaviors (e.g., mouse clicks, scrolling, and text input) and physical actions in the learning environment | Martinez-Maldonado et al., 2018 [39] |
| Cognition | Learners’ cognitive processes, including problem solving, knowledge construction, and memory recall | Netekal et al., 2023 [40] |
| Emotion | Learners’ emotional states, such as anxiety, confidence, or frustration, which can be detected through physiological signals (e.g., EEG, EDA) or facial expression analysis | Pardo & Kloos, 2011 [9] |
| Engagement | Learners’ attention levels and sustained participation, often assessed through interaction behaviors or physiological data (e.g., heart rate variability) | Roll & Wylie, 2016 [41] |
| Collaboration | Learners’ interactions in group learning, including the distinction between individual and collective attention and differences in emotion between self-regulated and collaborative learning | Mu, Cui, & Huang, 2020 [42] |

| Modality | Application | Reference |
|---|---|---|
| EEG (electroencephalography) | Records brain activity to analyze emotional and cognitive states | Verma & Tiwary (2014) [45]; Lin & Li (2023) [46] |
| EDA (electrodermal activity) | Captures autonomic nervous system activity through changes in skin conductance, reflecting fluctuations in emotional arousal | Horvers et al. (2021) [47] |
| ECG (electrocardiography) | Analyzes heart rate variability to assess emotional responses | Lin & Li (2023) [46] |
| Other signals | Electromyography (EMG), respiratory patterns, and photoplethysmography (PPG), which can improve emotion detection accuracy in specific contexts | Verma & Tiwary (2014) [45] |
| Wearable devices | Flexible skin-attached sensors and smart devices, increasingly used for long-term monitoring of multiple physiological signals | Lee et al. (2019) [48]; Yang et al. (2024) [49] |
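
The table above lists ECG-based heart rate variability (HRV) analysis as one way to assess emotional responses. As a minimal sketch of where such an analysis can start, the Python snippet below computes two standard time-domain HRV metrics, RMSSD and SDNN, from RR intervals given in milliseconds. It assumes the intervals have already been extracted by R-peak detection and cleaned of artifacts; the function names and the sample window are hypothetical and are not taken from the cited studies, which may rely on other features or toolkits.

```python
import math
from statistics import stdev
from typing import Sequence


def rmssd(rr_ms: Sequence[float]) -> float:
    """Root mean square of successive differences between adjacent RR intervals (ms).

    Captures short-term, beat-to-beat variability.
    """
    if len(rr_ms) < 2:
        raise ValueError("at least two RR intervals are required")
    # Difference between each pair of consecutive intervals
    diffs = [later - earlier for earlier, later in zip(rr_ms, rr_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def sdnn(rr_ms: Sequence[float]) -> float:
    """Sample standard deviation of all RR intervals (ms), an overall variability measure."""
    if len(rr_ms) < 2:
        raise ValueError("at least two RR intervals are required")
    return stdev(rr_ms)


if __name__ == "__main__":
    # Hypothetical RR intervals (ms) for one short analysis window
    window = [800, 810, 790, 805, 795]
    print(f"RMSSD = {rmssd(window):.2f} ms")  # prints RMSSD = 14.36 ms
    print(f"SDNN  = {sdnn(window):.2f} ms")   # prints SDNN  = 7.91 ms
```

RMSSD reflects beat-to-beat changes and is commonly used for short recording windows, whereas SDNN summarizes overall variability and depends more strongly on recording length. How either metric maps onto emotional or attentional states in remote learning is an empirical question for the studies cited above; the metrics alone do not establish it.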

| Study | Modality Types Used | Education Level | Target Group | Environment | Limitations |
|---|---|---|---|---|---|
| Immadisetty et al., 2023 [64] | Posture and gesture recognition, facial analysis, eye-tracking, verbal recognition | Higher Education | General students | Controlled laboratory setting | Limited real-world classroom deployment |
| Faridan et al., 2023 [79] | Mixed reality gestural guidance | Higher Education | Physiotherapy students | Simulated classroom environment | Small sample size |
| Zhang et al., 2024 [80] | LLM-empowered agents simulating teacher-student interactions | Higher Education | General students | Virtual classroom simulation | Lack of real-world validation |
| Hao et al., 2021 [81] | Pre-trained language models for dialogic instruction detection | Higher Education | Online learners | Online educational platform | Limited to text-based interactions |
| Li et al., 2020 [82] | Machine learning for identifying at-risk students | K-12 Education | K-12 students | Multimodal online environments | Data imbalance; limited consideration of offline factors |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xie, Y.; Yang, L.; Zhang, M.; Chen, S.; Li, J. A Review of Multimodal Interaction in Remote Education: Technologies, Applications, and Challenges. Appl. Sci. 2025, 15, 3937. https://doi.org/10.3390/app15073937

Xie Y, Yang L, Zhang M, Chen S, Li J. A Review of Multimodal Interaction in Remote Education: Technologies, Applications, and Challenges. Applied Sciences. 2025; 15(7):3937. https://doi.org/10.3390/app15073937

Chicago/Turabian Style: Xie, Yangmei, Liuyi Yang, Miao Zhang, Sinan Chen, and Jialong Li. 2025. "A Review of Multimodal Interaction in Remote Education: Technologies, Applications, and Challenges" Applied Sciences 15, no. 7: 3937. https://doi.org/10.3390/app15073937

APA Style: Xie, Y., Yang, L., Zhang, M., Chen, S., & Li, J. (2025). A Review of Multimodal Interaction in Remote Education: Technologies, Applications, and Challenges. Applied Sciences, 15(7), 3937. https://doi.org/10.3390/app15073937