Abstract

In recent years, human activity monitoring and recognition have gained importance in providing valuable information to improve the quality of life. A lack of activity can cause health problems including falling, depression, and decreased mobility. Continuous activity monitoring can help to prevent progressive health problems. For this purpose, this study presents a wireless smart insole with four force-sensitive resistors (FSRs) that monitor foot contact states during activities, for both indoor and outdoor use. The designed insole is a compact solution and provides walking comfort with a slim and flexible structure. Moreover, the inertial measurement unit (IMU) sensors designed in our previous study were used to collect 3-axis accelerometer and 3-axis gyroscope outputs. Smart insoles were located in the shoe sole for both right and left feet, and two IMU sensors were attached to the thigh area of each leg. The sensor outputs were collected and recorded from forty healthy volunteers for eight different gait-based activities, including walking uphill and descending stairs. The obtained datasets were separated into three categories: foot contact states, the combination of acceleration and gyroscope outputs, and a set of all sensor outputs. The dataset for each category was separately fed into deep learning algorithms, namely, convolutional long–short-term memory neural networks. The performance of each neural network for each category type was examined. The results show that the neural network using only foot contact states achieves 90.1% accuracy and performs better than the network using the combination of acceleration and gyroscope datasets for activity recognition. Moreover, the neural network achieves the best results, with 93.4% accuracy, when using a combination of all the data compared with the other two categories.

1. Introduction

Immobility is one of the major problems that adversely affect quality of life. It may cause falls, various syndromes, and physical disabilities. The World Health Organization reports that approximately 28–35% of people above 65 years of age fall at least once each year [1]. This risk can be reduced by regular physical activity such as walking, playing sports, or cycling. However, people can forget or neglect daily activity because of work pace or lifestyle. To prevent possible health problems, especially in older age, monitoring and recognizing activities is highly important.

There are two popular methods to monitor activities: video-based systems and wearable systems. Video-based systems are the most reliable but have disadvantages such as cost, the need for a special environment, and suitability for indoor applications only [2,3]. Moreover, they raise privacy and ethical concerns [4]. With the rapid advances in sensor and connectivity technologies, wearable systems such as smartphones, smartwatches, smart insoles, and smart clothes allow daily movements to be monitored with advantages including low cost, light weight, and small size [5]. Moreover, they enable continuous monitoring with low power consumption, even when the user is outdoors.

Many researchers have presented a variety of wireless wearable devices, especially using inertial measurement unit (IMU) sensors, force-sensitive resistors (FSRs), and electromyography [6,7,8,9]. Roden et al. designed a kinetic insole consisting of FSRs and IMU sensors for physical therapy and athletic training [10]. Pierleoni et al. developed a wearable device with an accelerometer, gyroscope, magnetometer, and barometer sensor to detect falls in elderly people [11]. Hsu et al. built up a wearable system including inertial sensing modules to recognize sports activities [12]. Qian et al. proposed a smart insole based on pressure sensors for fall detection [13]. Yang et al. designed a wearable system based on air pressure and inertial measurement unit sensors to improve the performance of human activity recognition (HAR) [14]. Moreover, smartphones and smartwatches including IMU sensors have been used as wearable devices recently for activity recognition [15,16].

In the literature, both traditional and modern machine learning techniques have been used for human activity recognition. Traditional machine learning relies on hand-crafted features: the features are extracted from the datasets manually using techniques such as statistical measures and time-frequency transformations, and then machine learning algorithms are applied. Using this approach, De Leonardis et al. compared the performance of five algorithms, namely k-nearest neighbor, feedforward neural network, support vector machine, naïve Bayes, and decision tree, for activity recognition. They extracted 342 features from the input set and reduced them to 69 features using genetic algorithms and a correlation technique [17]. Davis et al. classified six basic activities using a support vector machine, hybrid versions of the support vector machine and a hidden Markov model, and artificial neural networks, based on 561 extracted features [18]. However, the generalization capability of the hand-crafted approach depends on human experience, and manual feature design is time-consuming.

Recently, deep learning has become a popular and powerful tool thanks to its automated feature extraction capability. This method does not need specialized knowledge or expertise and extracts features through hierarchical architectures. The most popular architectures are convolutional neural networks (CNN) and long–short-term memory neural networks (LSTM NN). CNN is actively used in big data problems. The key factor in CNN's success is its architectural design, which includes convolutional, pooling, normalizing, and fully connected layers that extract semantic outputs from the input data. Despite its efficiency, CNN is suited to fixed and short sequence classification problems but is not useful for long and complex time-series data problems [19]. LSTM NN is designed to extract hidden patterns from sequential data. In general, such neural networks analyze the latent sequence pattern of the input and predict future sequences by combining previous information with existing information from spatial and temporal dimensions. However, LSTM NN cannot remember information for a long time [20]. Considering these limitations of CNN and LSTM NN, a hybrid model, namely, a convolutional long–short-term memory neural network (ConvLSTM NN), is used in this study.

There are various studies on deep learning and human activity recognition. Ronao and Cho proposed CNN for human activity recognition using smartphone sensors [21]. Ordonez and Roggen made a network performance comparison between a convolutional neural network and a convolutional long–short-term memory neural network (ConvLSTM NN) using the Opportunity dataset [22]. Moreover, many researchers presented a performance comparison of methods based on handcrafted and automated feature extraction [23,24,25,26].

Smart insoles are equipped with sensors inside the insole or the base of the shoe. They can measure the force applied by the foot during movement. FSRs are a popular choice for smart insole design because of significant advantages such as low cost, simple use, and a thin surface. They can measure weights from 0.01 kg to 10 kg. However, the force applied during an activity may exceed this upper limit and saturate the sensor. In addition, the applied force differs with the user's weight and gait characteristics. These factors may result in inconsistent data and adversely affect deep learning performance. To overcome this challenge, this study suggests using foot contact states instead of ground reaction force information for activity recognition.

In our previous study, we designed a wireless sensor network (WSN) consisting of a master device and IMU slave devices [27]. For this study, we have expanded our WSN, adding smart insoles. These insoles were designed to collect foot contact states from four different points of the sole using force-sensitive resistors. Designed smart insoles and IMU sensors were placed on the soles of the feet and on the thigh part of the legs, respectively. The sensor outputs were collected and recorded by the master device during eight gait-based motions, including ascending stairs and running. The obtained data were fed into ConvLSTM NN by applying pre-processing techniques such as removing noise, data normalization, and data segmentation. The activity recognition performance of the networks was compared for different input sets including only foot contact states, only acceleration and gyroscope data, and a combination of them.

In this study, a low-cost smart insole design using force-sensitive resistors is presented for medical and sports applications, as well as for research that involves mass participation. FSRs have a limited capability to measure ground reaction force and are prone to saturation. To overcome this challenge, foot contact states were derived from the ground reaction forces, and a HAR approach based on foot contact states and deep learning is proposed. In the literature, many studies are available on activity recognition, and the IMU-based HAR approach is the most popular. The neural network performance of the proposed foot contact state-based HAR approach is compared with that of the IMU-based HAR approach. Moreover, the performance of a HAR approach that combines the smart insole and IMU sensor datasets with deep learning is examined.

2. Materials and Methods

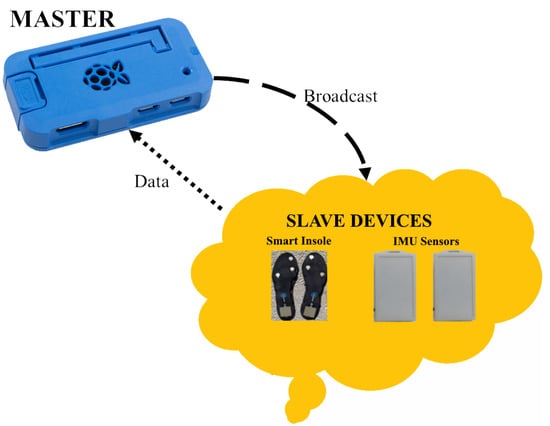

In our previous work, we built a modular and expandable WSN, which consists of a master device and IMU slave devices [27]. The master device establishes and manages the wireless network, and it collects and records incoming information from the slave devices. Slave devices connect via Wi-Fi to the network managed by the master device and send acceleration and gyroscope outputs to the master. The software on the master device interactively accepts various management commands that can be sent via a web interface on the same wireless network. Management commands range from starting the data flow of slave devices to viewing the status of the connected devices on the network. Moreover, the user can access and download recorded files from the master device through the web interface. For this study, the existing WSN was expanded by adding slave smart insoles. The block diagram of the updated WSN is shown in Figure 1.

Figure 1. The block diagram of the designed wireless sensor network.

Wireless IMU devices consist of a 3-axis accelerometer, a 3-axis gyroscope, and Wi-Fi modules. The built-in controller of the Wi-Fi module is utilized to collect the raw data from the accelerometer and the gyroscope. Then, these datasets are sent to the master device via Wi-Fi. The designed IMU devices are shielded by a box to isolate the circuit from users. The enclosure box has an attached elastic rope and stopper. The rope and the stopper allow the user to comfortably mount and secure the sensor on the human body.

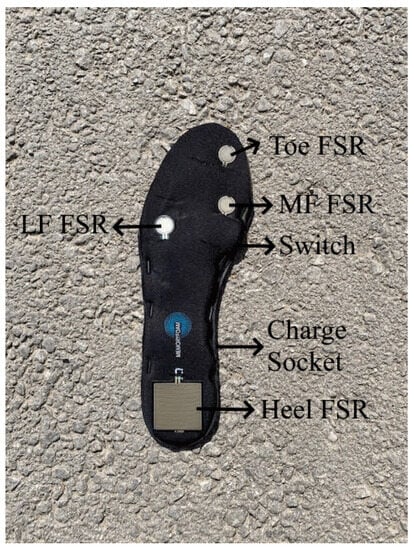

Wireless smart insoles were designed to collect foot contact states during activities. The electronic parts of the insole consist of FSRs, a small, low-capacity battery, and a development board supporting Wi-Fi communication. Four FSRs were used in each insole. Based on many studies in the literature, these sensors were placed on the heel, medial forefoot (MF), lateral forefoot (LF), and toe area of the foot [28,29,30,31,32]. The FSRs transmit their outputs via the analog interface to the development board, which processes the incoming FSR outputs and transfers them to the master device via Wi-Fi. The system also has a two-way switch. When the switch is set to “on”, the smart insole connects to the sensor network and waits for broadcast commands from the master device; these commands are transmitted to all slaves. Then, the master device continuously and sequentially requests data transmission from the slave devices, which prompts them to read and send sensor data. Moreover, these requests also allow the slave devices to work synchronously with each other. When the switch is set to “off”, the battery can easily be charged without removing it from the insole. The designed smart insole can be seen in Figure 2.

Figure 2. The designed smart insole and position of components.
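The request-driven data flow described above for the slave devices can be pictured with a small simulation. This is only a sketch: the `Slave` class, the `poll` function, and the node names are hypothetical stand-ins, and the actual message format and Wi-Fi transport of the WSN are not modeled.

```python
from typing import Dict, List


class Slave:
    """Stand-in for a wireless slave node (smart insole or IMU); purely illustrative."""

    def __init__(self, name: str) -> None:
        self.name = name

    def read(self, tick: int) -> Dict[str, object]:
        # A real node would sample its sensors here; we only record the request tick.
        return {"slave": self.name, "tick": tick}


def poll(slaves: List[Slave], n_rounds: int) -> List[Dict[str, object]]:
    """Request one sample from every slave, in order, once per round."""
    log = []
    for tick in range(n_rounds):
        for slave in slaves:  # sequential requests within one round
            log.append(slave.read(tick))
    return log


# Hypothetical node names for the two insoles and two thigh-mounted IMUs.
slaves = [Slave("insole_left"), Slave("insole_right"),
          Slave("imu_left"), Slave("imu_right")]
log = poll(slaves, n_rounds=3)
```

Because every sample in a round is triggered by the same master request cycle, samples from different slaves share a round index, which is one way to read the statement that the requests keep the slaves synchronized.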

We have used a memory foam sole in the insole design. It has advantages including flexibility, cushioning, and moisture absorption. This type of sole helps to reduce shocks and vibration and prevents perspiration, as well as providing optimal walking comfort.

The obtained FSR outputs were converted into foot contact states as “0” or “1” by using a threshold value. In other words, weight measurements were accepted as “No contact” when they were less than a threshold value but were otherwise accepted as “Contact”. There are many types of FSRs. We have preferred using circular and small-size FSRs for the toe, LF, and MF areas, while square and wider-size FSRs are used for the heel area in our insole design. These sensors have the same specifications except for size.
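The thresholding step can be sketched as follows. The threshold value and the channel ordering are assumptions made for illustration; the paper does not state the value that was used.

```python
from typing import List, Sequence

# Hypothetical threshold, in the same unit as the FSR reading (value not given in the paper).
CONTACT_THRESHOLD = 0.5


def to_contact_states(fsr_readings: Sequence[float],
                      threshold: float = CONTACT_THRESHOLD) -> List[int]:
    """Map raw FSR readings to binary contact states (1 = contact, 0 = no contact)."""
    # Readings below the threshold count as "No contact"; everything else is "Contact".
    return [0 if reading < threshold else 1 for reading in fsr_readings]


# One sample from a single insole, ordered heel, MF, LF, toe (ordering assumed).
sample = [3.2, 0.1, 0.7, 0.0]
states = to_contact_states(sample)
print(states)  # [1, 0, 1, 0]
```

A saturated reading still maps to "1", so the binary state is unaffected by the upper measurement limit of the sensor.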

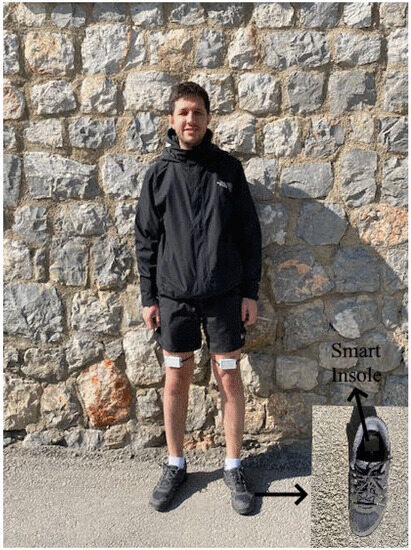

In this study, we have used a total of four slave sensors, namely, two smart insoles and two IMU sensors. Smart insoles were placed inside of the shoe sole for both the right and left feet, and IMU sensors were attached on the thigh part of each leg, as seen in Figure 3. The sampling frequency of all slave nodes was configured as 40 Hz.

Figure 3. Sensor position on the human body.

A total of 40 healthy volunteers (30 males and 10 females) participated, with ages ranging from 20 to 32 (mean 26.4), heights of 163–195 cm (mean 178), and weights of 50–103 kg (mean 78). Each volunteer was informed about the aim of the study and was asked to perform eight different gait-based activities, namely, walking on flat ground (WG), walking uphill (WU), walking downhill (WD), ascending stairs (ASC), descending stairs (DES), running (RN), jumping (JP), and sit down/stand up (SS).

For each subject, a total of 40 min of data were recorded for the eight activities by collecting 5 min of data per activity. Accelerometers and FSRs are highly sensitive sensors, and their outputs contain noise and artifacts during movement. Moving average filters were used to suppress them. The values of the 3-axis accelerometer and 3-axis gyroscope outputs and the foot contact states were not in the same range. To obtain a uniform data range, min–max normalization was applied separately to each feature of the accelerometer and gyroscope. This is important during neural network training and testing to prevent large values from dominating small values.
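A minimal sketch of these two pre-processing steps is given below. The filter length and the trailing (causal) form of the moving average are assumptions, since the paper does not give them, and the sample values are invented.

```python
from typing import List, Sequence


def moving_average(signal: Sequence[float], window: int = 5) -> List[float]:
    """Smooth a signal with a trailing moving average (window length is an assumption)."""
    smoothed = []
    running_sum = 0.0
    for i, value in enumerate(signal):
        running_sum += value
        if i >= window:
            running_sum -= signal[i - window]
        # Early samples are averaged over however many values are available so far.
        smoothed.append(running_sum / min(i + 1, window))
    return smoothed


def min_max_normalize(signal: Sequence[float]) -> List[float]:
    """Rescale one feature to the range [0, 1]."""
    lo, hi = min(signal), max(signal)
    if hi == lo:
        # A constant feature carries no range information; map it to zeros.
        return [0.0 for _ in signal]
    return [(value - lo) / (hi - lo) for value in signal]


# Invented accelerometer samples for one axis.
accel_x = [0.0, 2.0, 4.0, 2.0, 0.0, -2.0]
filtered = moving_average(accel_x, window=3)
normalized = min_max_normalize(filtered)
```

Applying `min_max_normalize` to each accelerometer and gyroscope axis separately puts those features on the same [0, 1] scale as the binary foot contact states.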

All the recorded data from all the volunteers were divided into frames using non-overlapping 1.5 s windows, chosen according to the average cycle duration of the activities. This yielded 8000 frames for each activity and a total of 64,000 frames. Then, the frames were divided into three sections for training, validation, and testing. Specifically, 70% and 30% of the total frames belonging to 28 volunteers were used for training and validation, respectively. Then, all frames of the other 12 volunteers were used to test and verify the results.
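The frame counts follow directly from the recording protocol, as the sketch below checks. The sampling rate, window length, and subject counts come from the text; the helper names and the choice of which subjects are held out are assumptions, and the per-split counts assume every subject contributes the same amount of data.

```python
from typing import List, Sequence, Tuple

SAMPLING_RATE_HZ = 40   # from the paper
WINDOW_SECONDS = 1.5    # from the paper
SAMPLES_PER_FRAME = int(SAMPLING_RATE_HZ * WINDOW_SECONDS)  # 60 samples per frame


def segment(recording: Sequence, frame_len: int = SAMPLES_PER_FRAME) -> List[Sequence]:
    """Cut a recording into non-overlapping frames, dropping any incomplete tail."""
    n_frames = len(recording) // frame_len
    return [recording[i * frame_len:(i + 1) * frame_len] for i in range(n_frames)]


def split_subjects(subject_ids: Sequence[int], n_test: int = 12) -> Tuple[List[int], List[int]]:
    """Hold out whole subjects for testing (which subjects are held out is an assumption)."""
    ids = list(subject_ids)
    return ids[:-n_test], ids[-n_test:]


# Frame-count check: 40 subjects x 5 min x 60 s / 1.5 s per frame, for each activity.
frames_per_activity = int(40 * 5 * 60 / WINDOW_SECONDS)
total_frames = frames_per_activity * 8

# A 5-minute recording at 40 Hz has 12,000 samples, i.e. 200 frames.
one_recording = list(range(5 * 60 * SAMPLING_RATE_HZ))
frames = segment(one_recording)

train_val_subjects, test_subjects = split_subjects(range(1, 41))

# Frames per split, assuming 200 frames per subject and activity.
train_val_frames = len(train_val_subjects) * 8 * len(frames)
train_frames = train_val_frames * 7 // 10
val_frames = train_val_frames - train_frames
test_frames = len(test_subjects) * 8 * len(frames)
```

Under these assumptions the split works out to 31,360 training frames, 13,440 validation frames, and 19,200 test frames, which sum to the 64,000 frames reported.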

The processed data were separated into three categories according to feature type: only foot contact states, the combination of accelerometer and gyroscope outputs, and a set of all the data. Each category was fed into the ConvLSTM NN in turn. The network performances were compared in terms of precision, recall, F1-score, and accuracy for the different input sets.
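The three input categories differ only in which channels of a frame are kept. The sketch below assumes one channel per sensor output, giving 8 foot contact channels (4 FSRs on each of 2 insoles) and 12 IMU channels (3-axis accelerometer plus 3-axis gyroscope on each of 2 thighs). The column ordering and the category names are hypothetical.

```python
from typing import Dict, List

# Assumed column layout of one sample (the paper does not specify an ordering):
# 8 foot-contact channels followed by 12 IMU channels.
N_CONTACT = 4 * 2
N_IMU = (3 + 3) * 2

CATEGORIES: Dict[str, List[int]] = {
    "foot_contact": list(range(0, N_CONTACT)),
    "acc_gyro": list(range(N_CONTACT, N_CONTACT + N_IMU)),
    "all": list(range(0, N_CONTACT + N_IMU)),
}


def select_channels(frame: List[List[float]], category: str) -> List[List[float]]:
    """Keep only the columns that belong to the requested input category."""
    columns = CATEGORIES[category]
    return [[sample[c] for c in columns] for sample in frame]


# A dummy frame of 60 samples x 20 channels (1.5 s at 40 Hz).
frame = [[float(c) for c in range(N_CONTACT + N_IMU)] for _ in range(60)]
shapes = {name: (len(select_channels(frame, name)), len(cols))
          for name, cols in CATEGORIES.items()}
print(shapes)  # {'foot_contact': (60, 8), 'acc_gyro': (60, 12), 'all': (60, 20)}
```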

Deep learning is a modern machine learning tool that extracts features automatically from raw data and processes them for prediction and classification. The most popular types are convolutional neural networks and long–short-term memory neural networks (LSTM NN). We have used a hybrid model, which is called convolutional long–short-term memory, that presents a superior performance compared to these two neural networks [22,27]. This model is a combination of the feature extraction layer of CNN and LSTM. CNN extracts the features from the raw data using convolutional and pooling layers. Then, the extracted features are input to the LSTM NN that models the temporal dynamics. The outputs of LSTM NN feed into fully connected layers (FC), consisting of one or more feed-forward hidden layers. Finally, the softmax activation function and classification layer produce a class label using outputs of FC.
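The final classification step can be illustrated in isolation. The sketch below shows only how a softmax layer turns the fully connected outputs into a class label; it does not reproduce the convolutional or LSTM layers, the class order is assumed, and the score values are invented.

```python
import math
from typing import List, Sequence

# Activity labels from the paper, in an assumed class order.
ACTIVITIES = ["WG", "WU", "WD", "ASC", "DES", "RN", "JP", "SS"]


def softmax(scores: Sequence[float]) -> List[float]:
    """Convert raw scores to probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(scores: Sequence[float]) -> str:
    """Return the label with the highest softmax probability."""
    probs = softmax(scores)
    return ACTIVITIES[probs.index(max(probs))]


# Made-up fully connected layer outputs for one frame (illustrative values only).
fc_outputs = [0.2, 1.1, -0.3, 0.0, 0.4, 3.0, 0.9, -1.2]
print(classify(fc_outputs))  # RN
```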

Hyperparameters are factors that affect the performance as well as network type. There are various parameters such as the number of layers, learning rate, filter number, filter size, etc. All these parameters are determined using the trial and error method.

All data processing and deep learning applications were conducted in MATLAB 2020a. To shorten the processing time, we used a graphics processing unit (GPU) [33,34], an NVIDIA RTX 2070 with 8 GB of memory and a 14 Gbps memory speed.

3. Results

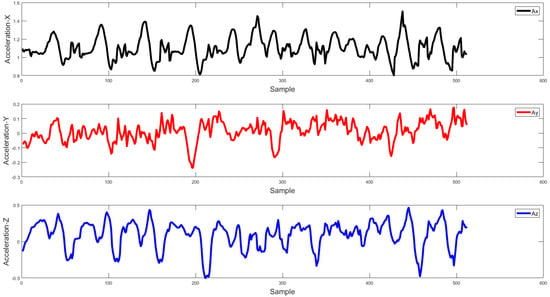

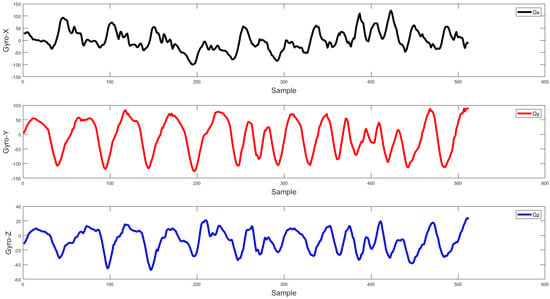

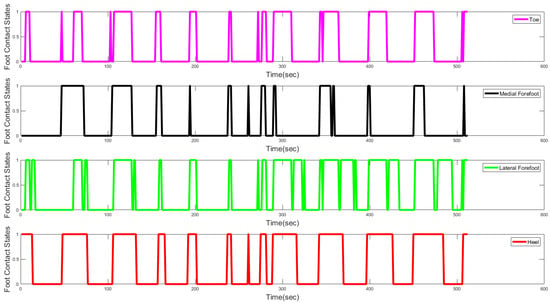

In this study, a wireless sensor network was established with smart insoles and IMU sensors. From forty healthy people, sensor outputs, including foot contact states and accelerometer and gyroscope readings, were collected and recorded. They performed eight activities including walking on flat ground, walking uphill, walking downhill, ascending stairs, descending stairs, running, jumping, and sitting down/standing up. Figure 4, Figure 5 and Figure 6 illustrate a part of the activity signals obtained from the IMU sensors placed on the thigh area of the left leg and FSR located on the sole of the left foot while walking on flat ground, respectively.

Figure 4. A part of the acceleration data collected from the thigh part of the left leg during walking.

Figure 5. A part of the gyroscope data collected from the thigh part of the left leg during walking.

Figure 6. A part of the foot contact states collected from the sole of the left foot during walking.

The classification performances of the proposed models were evaluated using four metrics: precision, recall, F1-score, and accuracy [35]. These metrics are calculated using Equations (1)–(4), where TP is true positive, FP is false positive, TN is true negative, and FN is false negative.
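For a multi-class problem, these counts are commonly obtained per class in a one-vs-rest fashion from the confusion matrix. The sketch below uses the standard definitions (precision = TP/(TP + FP), recall = TP/(TP + FN), F1-score as the harmonic mean of precision and recall, accuracy = (TP + TN)/(TP + TN + FP + FN)). Whether the study computed the metrics exactly this way is an assumption, and the confusion matrix is a toy example, not data from the study.

```python
from typing import Dict, List


def per_class_metrics(confusion: List[List[int]], k: int) -> Dict[str, float]:
    """One-vs-rest metrics for class k; confusion[i][j] counts true class i predicted as j."""
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    tp = confusion[k][k]
    fp = sum(confusion[i][k] for i in range(n)) - tp   # predicted k, actually another class
    fn = sum(confusion[k]) - tp                        # actually k, predicted another class
    tn = total - tp - fp - fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)) if precision + recall else 0.0
    accuracy = (tp + tn) / total if total else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}


# Toy 3-class confusion matrix (invented counts, not results from the study).
toy = [
    [8, 1, 1],
    [2, 7, 1],
    [0, 2, 8],
]
m = per_class_metrics(toy, 0)
```

For class 0 of the toy matrix, TP = 8, FP = 2, FN = 2, and TN = 18, so precision, recall, and F1-score are all 0.8 and the one-vs-rest accuracy is 26/30.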

The datasets were divided into three categories depending on features, such as only foot contact states, only accelerometer and gyroscope outputs, and a combination of all the data. Each category was fed into the ConvLSTM NN to examine and compare the classification performance. To have the best results, hyperparameters were determined by fine-tuning.

The classification performance of the neural networks for each input set is given in Table 1, Table 2 and Table 3.

Table 1. The classification performance of ConvLSTM NN when the input set is only foot contact states.

Table 2. The classification performance of ConvLSTM NN when the input set is the accelerometer and gyroscope outputs.

Table 3. The classification performance of ConvLSTM NN when the input set is a combination of all the data.

The classification results show that the neural network has the best performance for each activity when the input set is a combination of all the datasets. The sit-down/stand-up activity has the highest rate for each neural network in terms of precision and recall. The average accuracies of the neural networks are 90.1%, 88.6%, and 93.4% for foot contact states, accelerometer and gyroscope, and the combination of all the datasets, respectively.

The average accuracies show that the ConvLSTM NN with the foot contact state input set outperforms the network with the accelerometer and gyroscope input set by about 1.5 percentage points. Moreover, Table 4 shows the F1-score values of the neural networks for each input set. The combination of all the datasets performs better than the other two categories in terms of F1-score, with a value of 0.958.

Table 4. Comparison of the network performances for each input category.

4. Discussion and Conclusions

In this paper, we have designed a wireless smart insole. It is a low-cost, lightweight, and compact solution. Our design has no visible wires as in many other studies [36,37,38]. The advantage of this design is that it protects both the devices and the users from damage when the devices are put on and removed. Moreover, the memory foam soles provide walking comfort for users.

The number of commercial smart insole systems is increasing. Some of the most well-known are F-Scan [39] and the Pedar Sensole System [40]. These systems have high resolution, but they are expensive and used mainly for clinical diagnostics and research purposes. Moticon [41] and Orpyx [42] have low resolution due to a low number of sensors. Although these insoles are relatively cheaper than those with high resolution, they are not affordable enough to appeal to the masses. This study focused on the low-cost development of a designed smart insole system for research requiring mass participation.

There are many studies on activity monitoring. The most popular approach is IMU sensor-based monitoring because of its low cost and easy placement on any desired part of the human body. However, the user needs to carry the device on the body, which may cause discomfort for many people and reduce the willingness to use it. In contrast, smart insoles can be thought of as object-based devices that are located in shoes. Thus, continuous monitoring can be provided without users feeling as if they are carrying an external device.

Many researchers have used traditional and modern machine learning methods for activity recognition. Currently, deep learning is very efficient because of its automatic feature extraction ability. This study uses a hybrid model, namely, the convolutional LSTM neural network, because of its superior performance. Various sensor modalities, including foot contact states, acceleration, and gyroscope outputs, were fed into neural networks to examine the classification performance. There are many studies on activity recognition using deep learning. Challa et al. proposed a hybrid of CNN and bidirectional long–short-term memory [43]. Their model achieved accuracies of 96.37%, 96.05%, and 94.29% on the UCI-HAR, WISDM, and PAMAP2 datasets, respectively. Dua et al. proposed a model combining CNN with a gated recurrent unit and obtained accuracies of 96.20%, 97.21%, and 95.27% on the UCI-HAR, WISDM, and PAMAP2 datasets, respectively [44]. D’arco et al. proposed a design with inertial sensors and pressure sensors embedded into smart insoles. They set the sampling frequency to 200 Hz. Their study covered six activities: walking downstairs, sitting, sit to stand, standing, walking upstairs, and walking. The average accuracy was 94.66% [45]. In our study, the sampling rate is 40 Hz for lower battery consumption, and the total duration of the collected data was 1600 min. Moreover, the number of activities was eight. Compared with these studies, our study reports lower accuracy; however, the results are important in providing more generalized scores given the number of performed activities, the number of placed sensors, and the number of participating volunteers. Moreover, a high sampling rate may result in high power consumption for the device.

The results showed that the combination of all the data presented the best performance compared with other categories for activity recognition. Moreover, the neural network provided better results for foot contact states compared to the combination of the accelerometer and gyroscope data. Considering the ease of use and performance of the designed smart insole, it is seen that it may be sufficient for the specified activity recognition types alone.

The accuracy of the results could be improved by adding more FSRs to the smart insole or by attaching more IMU devices to the human body, for example on the shank part of the lower limb. However, accelerometers are sensitive sensors, and stable mounting is important to reduce noise. Hence, placing these sensors in stationary positions is important to improve the success rate. Moreover, more data does not always mean higher performance: it can increase the training duration of the neural networks and does not guarantee higher accuracy.

In our future work, we are planning to conduct a study about sports activity recognition such as playing football, basketball, tennis, etc., using different sensor modalities including blood pressure, electromyography, heart rate, etc. Moreover, traditional and modern machine learning techniques can be used to make a comparison of the performance.

Author Contributions

Conceptualization, G.A. and Y.S.; methodology, G.A. and Y.S.; software, G.A. and Y.S.; validation, G.A. and Y.S.; formal analysis, G.A. and Y.S.; investigation, G.A. and Y.S.; resources, G.A. and Y.S.; data curation, G.A. and Y.S.; writing—original draft preparation, G.A. and Y.S.; writing—review and editing, G.A. and Y.S.; visualization, G.A.; supervision, Y.S.; project administration, Y.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study. Written informed consent has been obtained from the patient(s) to publish this paper.

Data Availability Statement

The collected data are unavailable due to privacy or ethical restrictions.

Acknowledgments

This work was supported in part by grant 2020.KB.FEN.011.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Physical Activity. 2021. Available online: https://www.who.int/publications/i/item/9789241563536 (accessed on 24 September 2023).

- Cho, Y.S.; Jang, S.H.; Cho, J.S.; Kim, M.J.; Lee, H.D.; Lee, S.Y.; Moon, S.B. Evaluation of validity and reliability of inertial measurement unit-based gait analysis systems. Ann. Rehabil. Med. 2018, 42, 872. [Google Scholar] [CrossRef]

- Gabel, M.; Gilad-Bachrach, R.; Renshaw, E.; Schuster, A. Full body gait analysis with kinect. In Proceedings of the 2012 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, San Diego, CA, USA, 28 August–1 September 2012; pp. 1964–1967. [Google Scholar]

- Lago, P.; Lang, F.; Roncancio, C.; Jiménez-Guarín, C.; Mateescu, R.; Bonnefond, N. The contextact@ a4h real-life dataset of daily-living activities. In Modeling and Using Context, Proceedings of the 10th International and Interdisciplinary Conference, Paris, France, 20–23 June 2017; Springer International Publishing: Berlin/Heidelberg, Germany, 2017; pp. 175–188. [Google Scholar]

- Tapia, E.M.; Intille, S.S.; Larson, K. Activity recognition in the home using simple and ubiquitous sensors. In International Conference on Pervasive Computing; Springer: Berlin/Heidelberg, Germany, 2004; pp. 158–175. [Google Scholar]

- Mukhopadhyay, S.C. Wearable sensors for human activity monitoring: A review. IEEE Sensors J. 2014, 15, 1321–1330. [Google Scholar] [CrossRef]

- Saeedi, S.; El-Sheimy, N. Activity recognition using fusion of low-cost sensors on a smartphone for mobile navigation application. Micromachines 2015, 6, 1100–1134. [Google Scholar] [CrossRef]

- Nweke, H.F.; Teh, Y.W.; Al-Garadi, M.A.; Alo, U.R. Deep learning algorithms for human activity recognition using mobile and wearable sensor networks: State of the art and research challenges. Expert Syst. Appl. 2018, 105, 233–261. [Google Scholar] [CrossRef]

- Wang, Y.; Cang, S.; Yu, H. A survey on wearable sensor modality centred human activity recognition in health care. Expert Syst. Appl. 2019, 137, 167–190. [Google Scholar] [CrossRef]

- Roden, T.E.; LeGr, R.; Fernandez, R.; Brown, J.; Deaton, J.; Ross, J. Development of a smart insole tracking system for physical therapy and athletics. In Proceedings of the 7th International Conference on Pervasive Technologies Related to Assistive Environments, Rhodes, Greece, 27–30 May 2014; pp. 1–6. [Google Scholar]

- Pierleoni, P.; Belli, A.; Maurizi, L.; Palma, L.; Pernini, L.; Paniccia, M.; Valenti, S. A wearable fall detector for elderly people based on ahrs and barometric sensor. IEEE Sensors J. 2016, 16, 6733–6744. [Google Scholar] [CrossRef]

- Hsu, Y.L.; Chang, H.C.; Chiu, Y.J. Wearable sport activity classification based on deep convolutional neural network. IEEE Access 2019, 7, 170199–170212. [Google Scholar] [CrossRef]

- Qian, X.; Cheng, H.; Chen, D.; Liu, Q.; Chen, H.; Jiang, H.; Huang, M.C. The smart insole: A pilot study of fall detection. In EAI International Conference on Body Area Networks; Springer International Publishing: Cham, Switzerland, 2019; pp. 37–49. [Google Scholar]

- Yang, D.; Huang, J.; Tu, X.; Ding, G.; Shen, T.; Xiao, X. A wearable activity recognition device using air-pressure and IMU sensors. IEEE Access 2018, 7, 6611–6621. [Google Scholar] [CrossRef]

- Su, X.; Tong, H.; Ji, P. Activity recognition with smartphone sensors. Tsinghua Sci. Technol. 2014, 19, 235–249. [Google Scholar]

- Weiss, G.M.; Timko, J.L.; Gallagher, C.M.; Yoneda, K.; Schreiber, A.J. Smartwatch-based activity recognition: A machine learning approach. In Proceedings of the 2016 IEEE-EMBS International Conference on Biomedical and Health Informatics (BHI), Las Vegas, NV, USA, 24–27 February 2016; pp. 426–429. [Google Scholar]

- De Leonardis, G.; Rosati, S.; Balestra, G.; Agostini, V.; Panero, E.; Gastaldi, L.; Knaflitz, M. Human Activity Recognition by Wearable Sensors: Comparison of different classifiers for real-time applications. In Proceedings of the 2018 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Rome, Italy, 11–13 June 2018; pp. 1–6. [Google Scholar]

- Davis, K.; Owusu, E.; Bastani, V.; Marcenaro, L.; Hu, J.; Regazzoni, C.; Feijs, L. Activity recognition based on inertial sensors for ambient assisted living. In Proceedings of the 2016 19th International Conference on Information Fusion (FUSION), Heidelberg, Germany, 5–8 July 2016; pp. 371–378. [Google Scholar]

- Khan, I.; Afzal, S.; Lee, J. Human activity recognition via hybrid deep learning based model. Sensors 2022, 22, 323. [Google Scholar] [CrossRef] [PubMed]

- Ullah, W.; Ullah, A.; Hussain, T.; Khan, Z.; Baik, S. An efficient anomaly recognition framework using an attention residual LSTM in surveillance videos. Sensors 2021, 21, 2811. [Google Scholar] [CrossRef]

- Ronao, C.A.; Cho, S.B. Human activity recognition with smartphone sensors using deep learning neural networks. Expert Syst. Appl. 2016, 59, 235–244. [Google Scholar] [CrossRef]

- Ordóñez, F.J.; Roggen, D. Deep convolutional and lstm recurrent neural networks for multimodal wearable activity recognition. Sensors 2016, 16, 115. [Google Scholar] [CrossRef]

- Jiang, W.; Yin, Z. Human activity recognition using wearable sensors by deep convolutional neural networks. In Proceedings of the 23rd ACM international conference on Multimedia, New York, NY, USA, 26–30 October 2015; pp. 1307–1310. [Google Scholar]

- Preece, S.J.; Goulermas, J.Y.; Kenney, L.P.; Howard, D. A comparison of feature extraction methods for the classification of dynamic activities from accelerometer data. IEEE Trans. Biomed. 2009, 56, 871–879. [Google Scholar] [CrossRef]

- Wan, S.; Qi, L.; Xu, X.; Tong, C.; Gu, Z. Deep learning models for real-time human activity recognition with smartphones. Mob. Netw. Appl. 2020, 25, 743–755. [Google Scholar] [CrossRef]

- Chen, Y.; Xue, Y. A deep learning approach to human activity recognition based on single accelerometer. In Proceedings of the 2015 IEEE International Conference on Systems, Man, and Cybernetics, Hong Kong, China, 9–12 October 2015; pp. 1488–1492. [Google Scholar]

- Ascioglu, G.; Senol, Y. Design of a wearable wireless multi-sensor monitoring system and application for activity recognition using deep learning. IEEE Access 2020, 8, 169183–169195.

- Howell, A.M.; Kobayashi, T.; Hayes, H.A.; Foreman, K.B.; Bamberg, S.J.M. Kinetic gait analysis using a low-cost insole. IEEE Trans. Biomed. Eng. 2013, 60, 3284–3290.

- Xu, W.; Huang, M.C.; Amini, N.; Liu, J.J.; He, L.; Sarrafzadeh, M. Smart insole: A wearable system for gait analysis. In Proceedings of the 5th International Conference on Pervasive Technologies Related to Assistive Environments, Heraklion, Greece, 6–8 June 2014; pp. 1–4.

- Lin, F.; Wang, A.; Zhuang, Y.; Tomita, M.R.; Xu, W. Smart insole: A wearable sensor device for unobtrusive gait monitoring in daily life. IEEE Trans. Ind. Inform. 2016, 12, 2281–2291.

- Tee, K.S.; Javahar, Y.S.H.; Saim, H.; Zakaria, W.N.W.; Khialdin, S.B.M.; Isa, H.; Awad, M.I.; Soon, C.F. A portable insole pressure mapping system. Telkomnika 2017, 15, 1493–1500.

- Muñoz-Organero, M.; Parker, J.; Powell, L.; Davies, R.; Mawson, S. Sensor optimization in smart insoles for post-stroke gait asymmetries using total variation and L1 distances. IEEE Sensors J. 2017, 17, 3142–3151.

- Cheng, J.; Chen, X.; Shen, M. A framework for daily activity monitoring and fall detection based on surface electromyography and accelerometer signals. IEEE J. Biomed. Health Inform. 2013, 17, 38–45.

- Kouris, I.; Koutsouris, D. Activity recognition using smartphones and wearable wireless body sensor networks. In Wireless Mobile Communication and Healthcare, Proceedings of the Second International ICST Conference, Kos Island, Greece, 5–7 October 2011; Springer: Berlin/Heidelberg, Germany, 2012; pp. 32–37.

- Sokolova, M.; Japkowicz, N.; Szpakowicz, S. Beyond accuracy, F-score and ROC: A family of discriminant measures for performance evaluation. In Australasian Joint Conference on Artificial Intelligence; Springer: Berlin/Heidelberg, Germany, 2006; pp. 1015–1021.

- Manupibul, U.; Charoensuk, W.; Kaimuk, P. Design and development of smart insole system for plantar pressure measurement in imbalance human body and heavy activities. In Proceedings of the 7th 2014 Biomedical Engineering International Conference, Fukuoka, Japan, 26–28 November 2014; pp. 1–5.

- Tam, W.K.; Wang, A.; Wang, B.; Yang, Z. Lower-body posture estimation with a wireless smart insole. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 3348–3351.

- Domínguez-Morales, M.J.; Luna-Perejón, F.; Miró-Amarante, L.; Hernández-Velázquez, M.; Sevillano-Ramos, J.L. Smart footwear insole for recognition of foot pronation and supination using neural networks. Appl. Sci. 2019, 9, 3970.

- Tekscan. Tekscan: Pressure Mapping, Force Measurement & Tactile Sensors. Available online: https://www.tekscan.com/products-solutions/systems/f-scan-system (accessed on 9 September 2022).

- Novel, The Pedar System—The Quality in-Shoe Dynamic Pressure Measuring System. Available online: http://www.novel.de/novelcontent/pedar (accessed on 24 September 2023).

- Moticon GmbH. Monitor Pressure Underfoot with the Surrosense RX™. Available online: http://www.moticon.de/products/product-home (accessed on 24 September 2023).

- Orpyx Medical Technologies Inc. Mission: Sensing Foot Dynamics. Available online: http://orpyx.com/pages/surrosense-rx (accessed on 24 September 2023).

- Challa, S.; Kumar, A.; Semwal, V. A multibranch CNN-BiLSTM model for human activity recognition using wearable sensor data. Vis. Comput. 2022, 38, 4095–4109.

- Dua, N.; Singh, S.; Semwal, V. Multi-input CNN-GRU based human activity recognition using wearable sensors. Computing 2021, 103, 1461–1478.

- D’Arco, L.; Wang, H.; Zheng, H. Assessing impact of sensors and feature selection in smart-insole-based human activity recognition. Methods Protoc. 2022, 5, 45.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).