A Highly Accurate Forest Fire Prediction Model Based on an Improved Dynamic Convolutional Neural Network

Abstract

1. Introduction

- (1)

- The DCNN network was trained in combination with migration learning, and multiple pre-trained DCNN models were used to extract the features of forest fire images.

- (2)

- Principal component analysis (PCA) reconstruction technology was used to enhance image category differentiation.

- (3)

- A 15-layer DCNN model called “DCN_Fire”, as an improvement on the traditional DCNN model, was constructed and analyzed.

- (4)

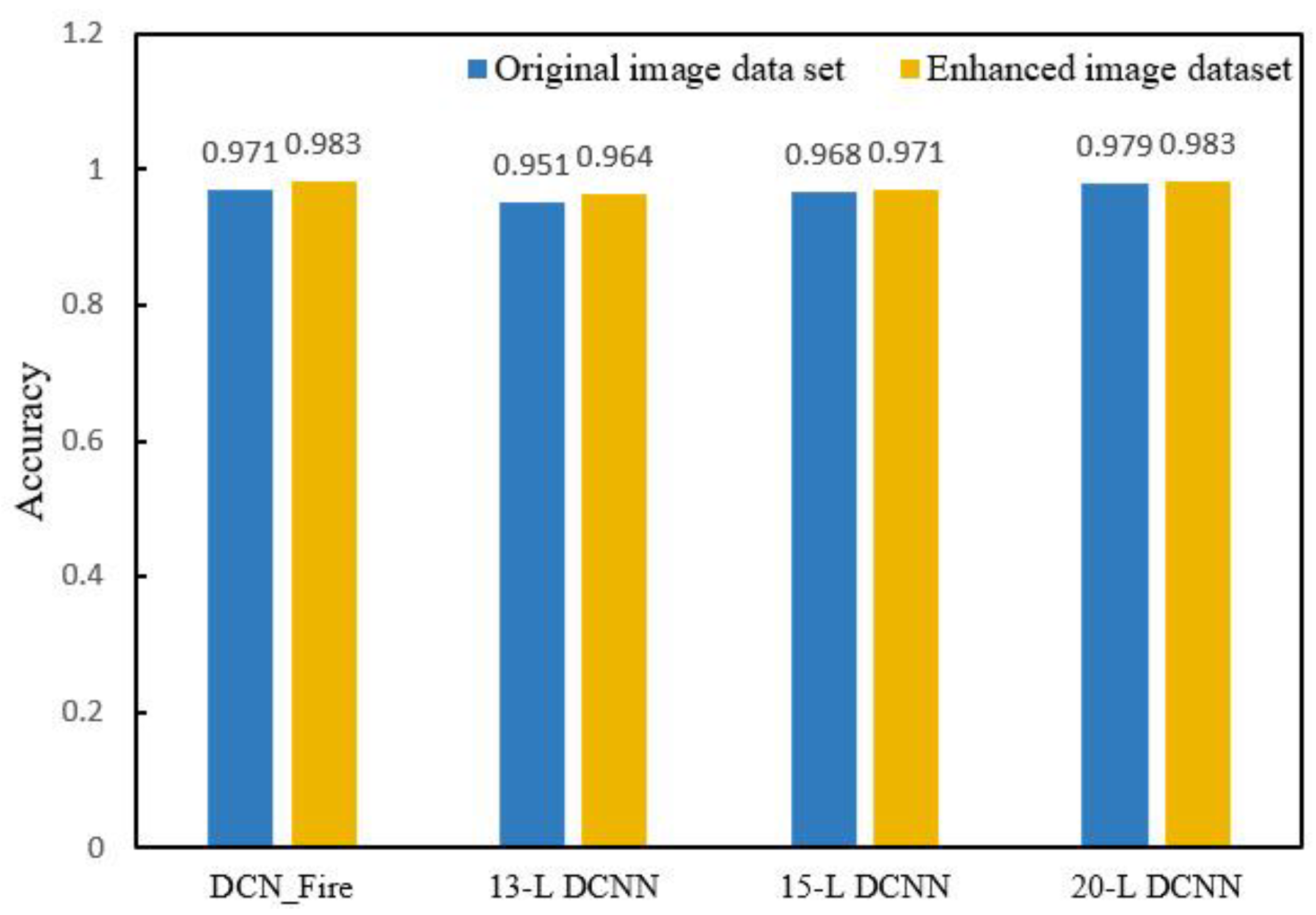

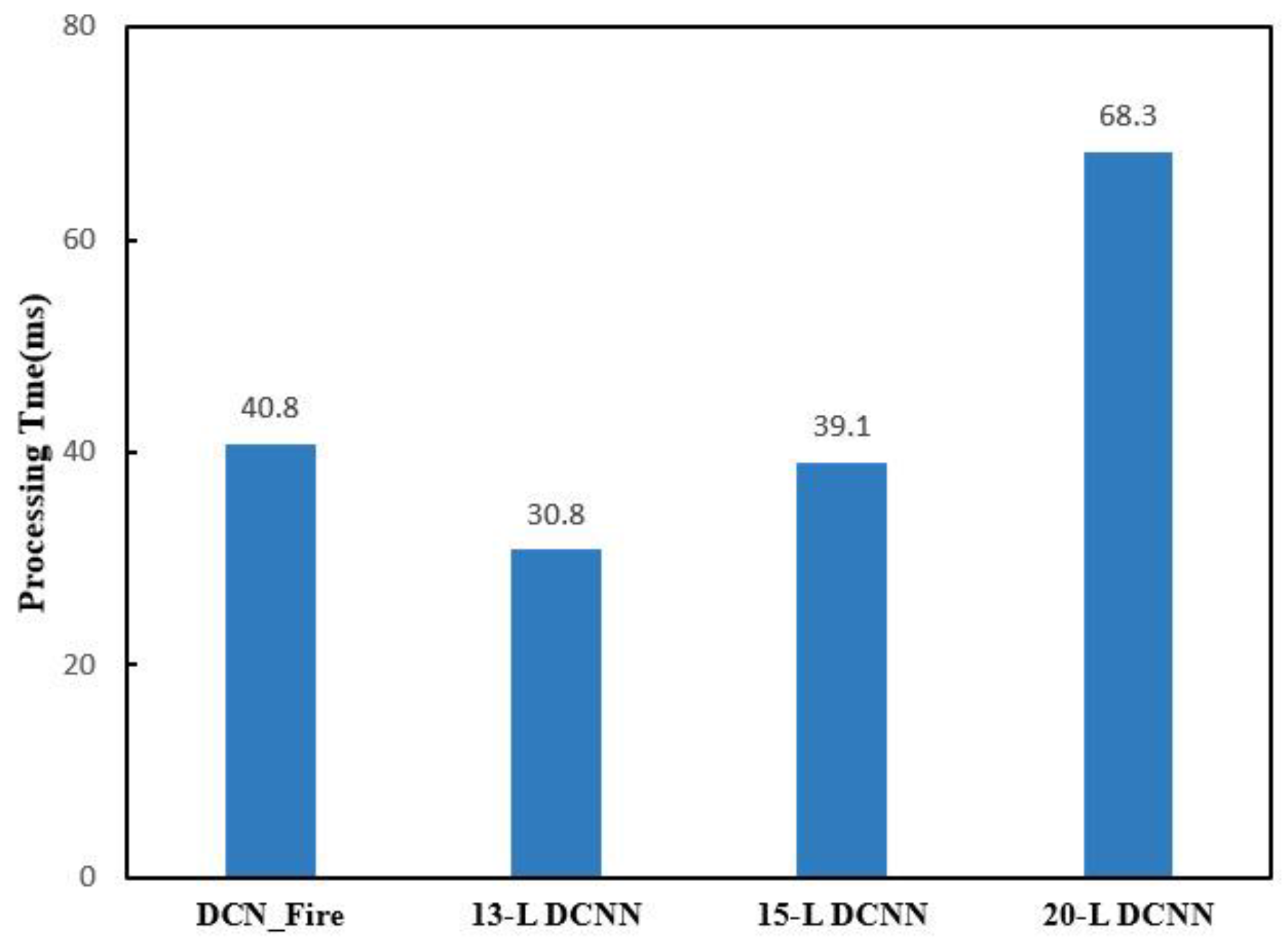

- The recognition speed and accuracy of the improved DCNN model were compared with three other DCNN models with different architectures.

- (5)

- The DCNN model could accurately recognize the risk of a forest fire risk in natural light; thus it was suitable as an early warning system for forest fires.
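Contributions (1) and (2) can be illustrated with a minimal sketch of projecting features from several pre-trained networks into one shared subspace with PCA. This is not the authors' implementation: the random arrays stand in for real penultimate-layer features, the feature sizes are shrunk for the demonstration, concatenating the features before PCA is an assumption, and `pca_fit` and `pca_transform` are hypothetical helper names.

```python
import numpy as np


def pca_fit(features: np.ndarray, n_components: int):
    """Return (mean, components) for a PCA fitted on row-wise feature vectors."""
    mean = features.mean(axis=0)
    centered = features - mean
    # SVD of the centered data: rows of vt are the principal directions,
    # ordered by decreasing explained variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return mean, vt[:n_components]


def pca_transform(features: np.ndarray, mean: np.ndarray, components: np.ndarray):
    """Project feature vectors onto the fitted principal directions."""
    return (features - mean) @ components.T


rng = np.random.default_rng(0)
n_images = 40

# Hypothetical stand-ins for features extracted by three pre-trained networks
# (sizes are assumptions, much smaller than real penultimate-layer outputs).
feats_a = rng.normal(size=(n_images, 64))
feats_b = rng.normal(size=(n_images, 64))
feats_c = rng.normal(size=(n_images, 32))

# Assumption: per-image features are concatenated before the PCA projection.
fused = np.concatenate([feats_a, feats_b, feats_c], axis=1)

mean, components = pca_fit(fused, n_components=16)
shared = pca_transform(fused, mean, components)
print(shared.shape)  # (40, 16)
```

The reduced vectors in `shared` would then be passed to a classifier; the choice of 16 components here is arbitrary.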

2. Model Construction and Analysis

2.1. Model Construction

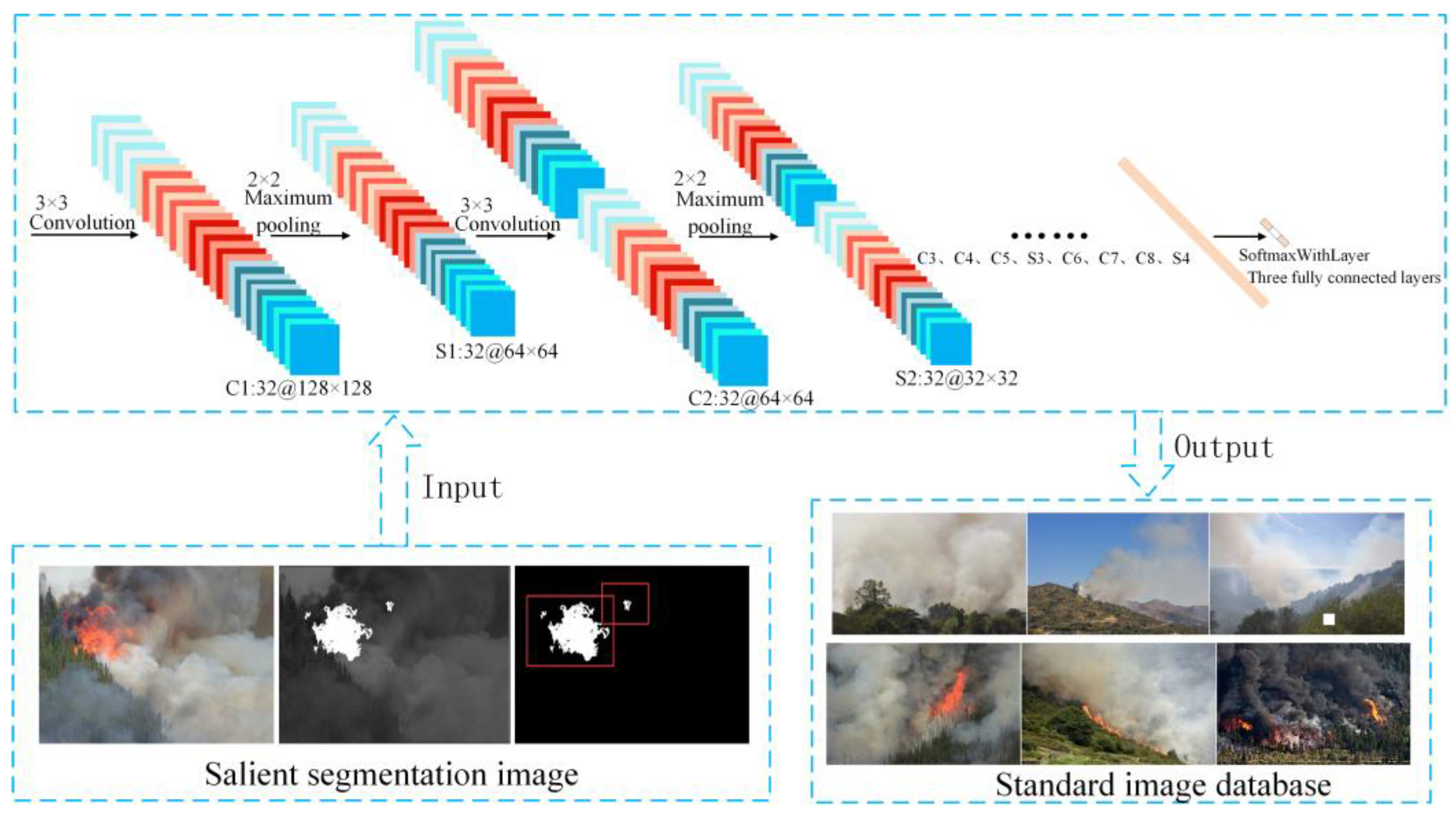

2.1.1. Overall Architecture

2.1.2. Details of the DCN_Fire Model

2.2. Transfer Learning

2.3. Feature Extraction and Classification

3. Comparative Analysis of the Algorithm Implementation Performance

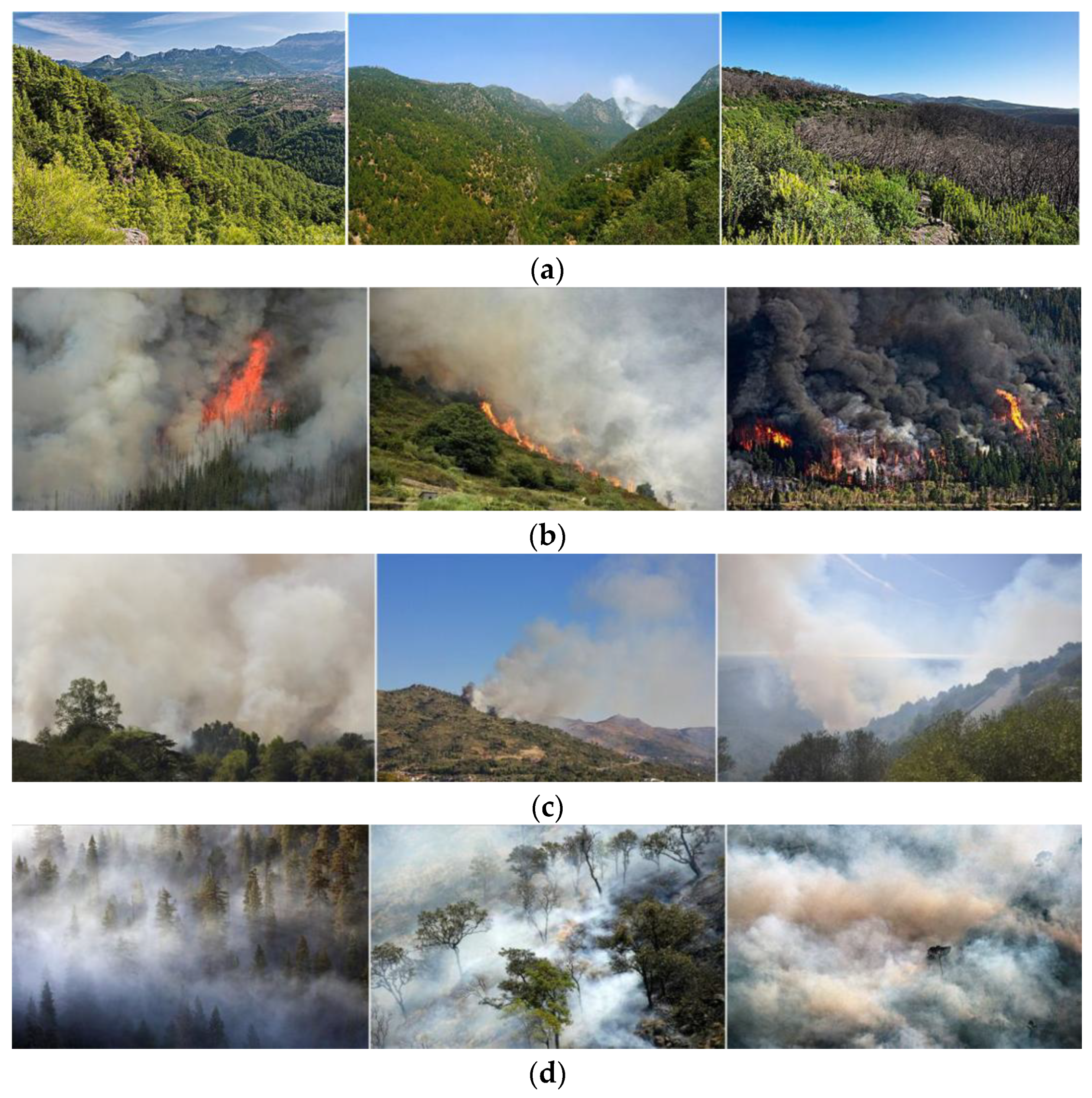

3.1. Data Source

- Step 1

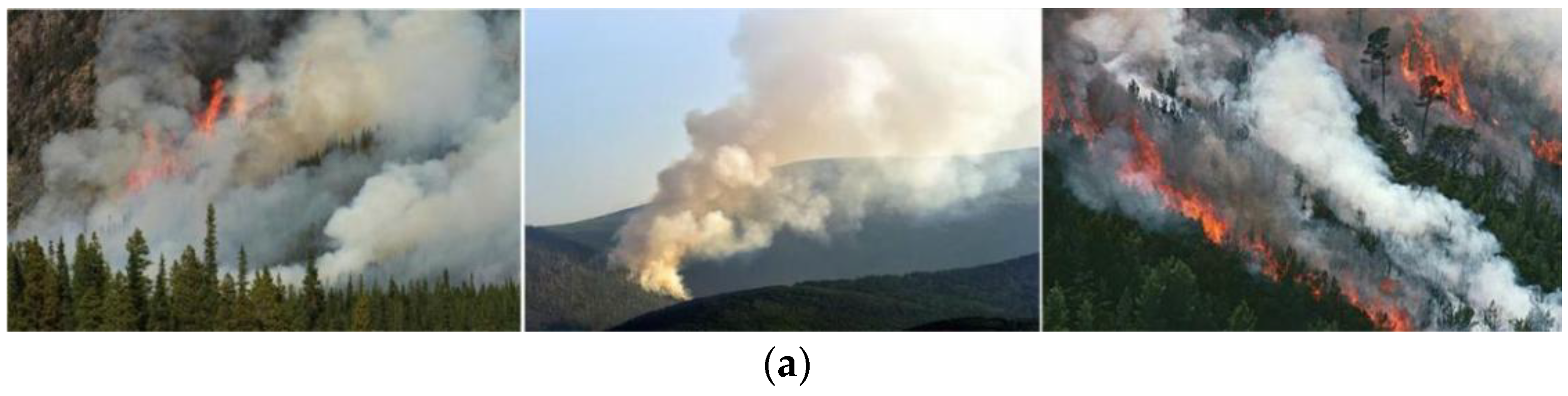

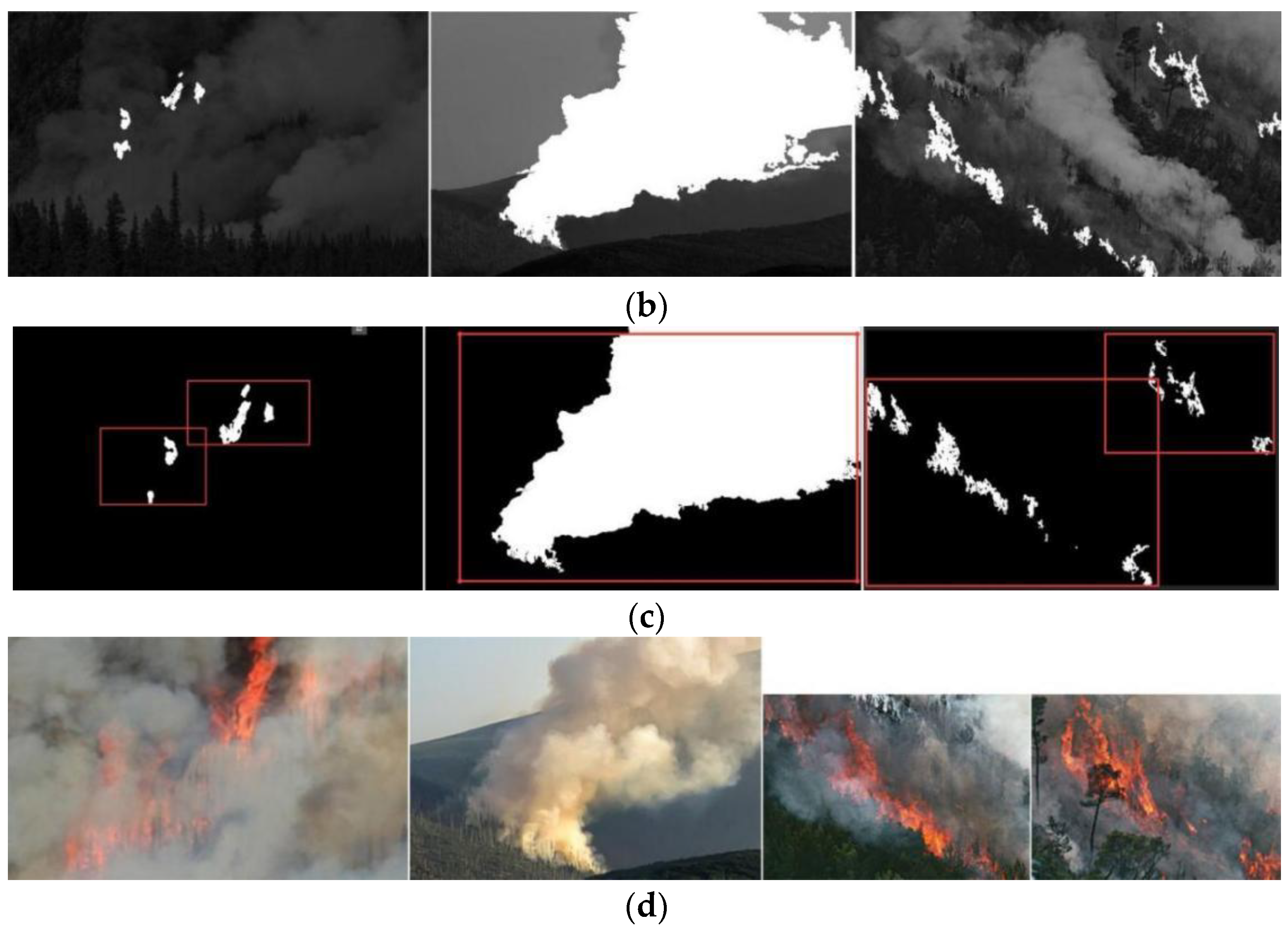

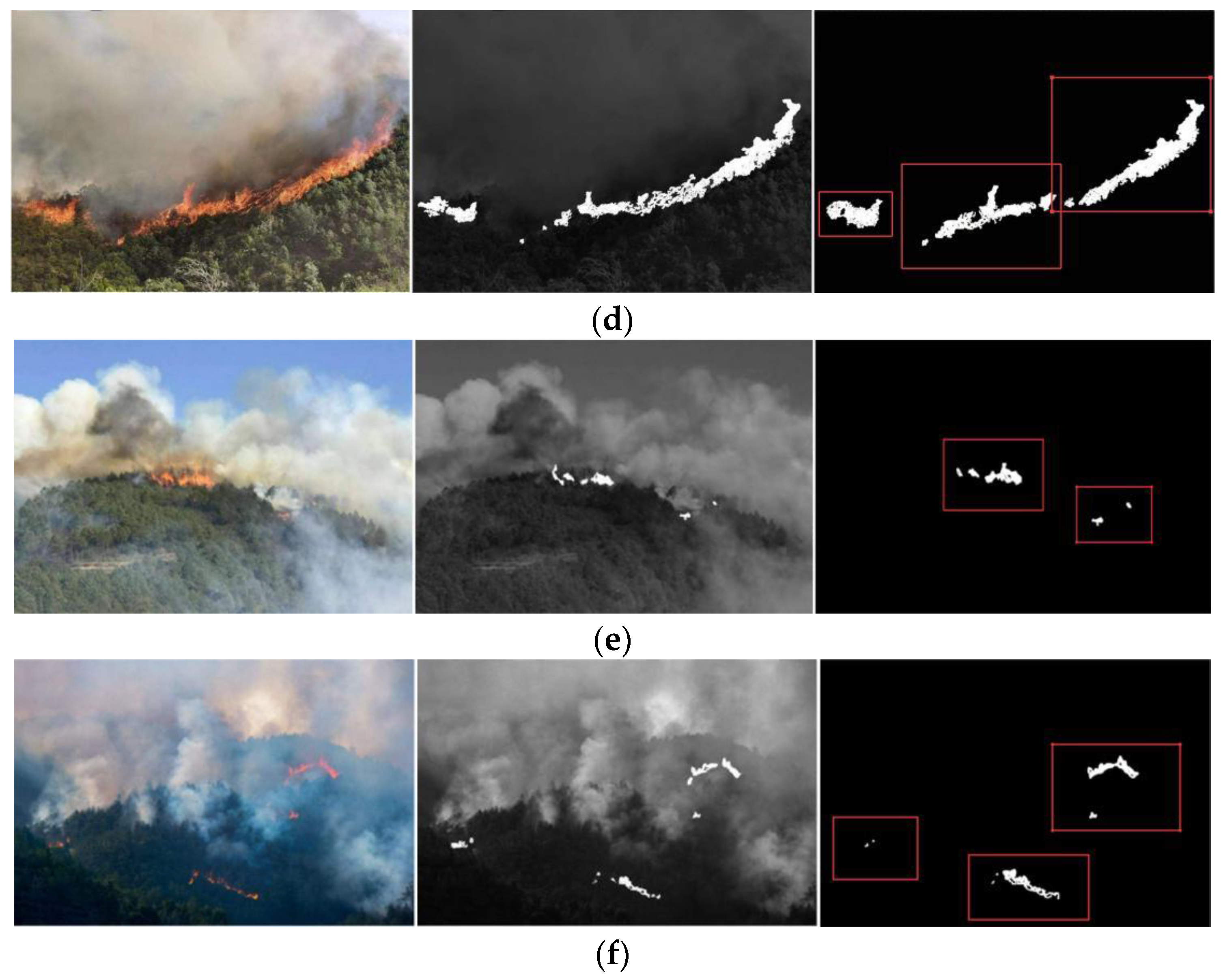

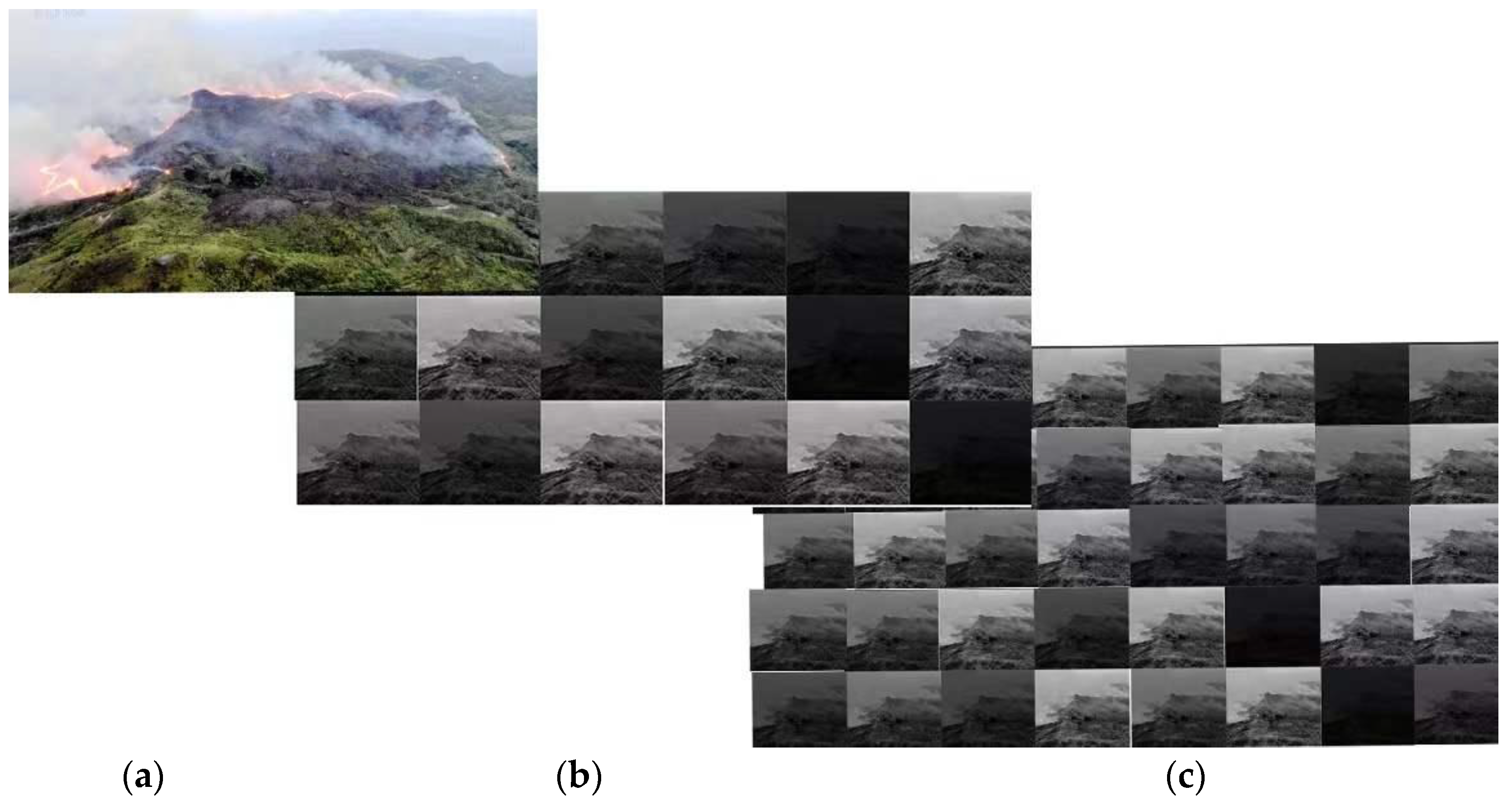

- The region of interest (ROI) proposal. Bayesian-based saliency detection was applied to an original image I; the saliency was mapped to the planarization in the image mask IM; and the original image was masked to areas R1–Rn.

- Step 2

- The color components, image energy, and entropy (vectors X1–Xn) of each ROI were determined to decide whether the characteristics vector in the ROI corresponded to flame or smoke, after which MBR and a fixed size boundary were used to divide the fire area.
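The per-ROI quantities named in Step 2 can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: energy is taken here as the sum of squared gray-level probabilities (one common definition; the paper's exact formula is not given in this text), the ROI is a toy list of gray levels, and `gray_entropy`, `gray_energy`, and `bounding_rectangle` are hypothetical helper names. The flame/smoke decision rule itself is not shown.

```python
import math
from collections import Counter


def gray_entropy(pixels):
    """Shannon entropy (in bits) of a flat list of gray levels."""
    counts = Counter(pixels)
    total = len(pixels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def gray_energy(pixels):
    """Energy as the sum of squared gray-level probabilities (assumed definition)."""
    counts = Counter(pixels)
    total = len(pixels)
    return sum((c / total) ** 2 for c in counts.values())


def bounding_rectangle(mask):
    """Axis-aligned bounding rectangle (top, left, bottom, right) of nonzero cells."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        return None  # empty mask: no region to bound
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    return rows[0], cols[0], rows[-1], cols[-1]


# Toy binary mask marking one salient region (assumption: 1 = salient pixel).
mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]
# Toy gray levels inside the region: two equally frequent values.
roi_pixels = [10, 10, 200, 200]

print(bounding_rectangle(mask))  # (1, 1, 2, 2)
print(gray_entropy(roi_pixels))  # 1.0
print(gray_energy(roi_pixels))   # 0.5
```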

3.2. Significance Segmentation and Data Enhancement

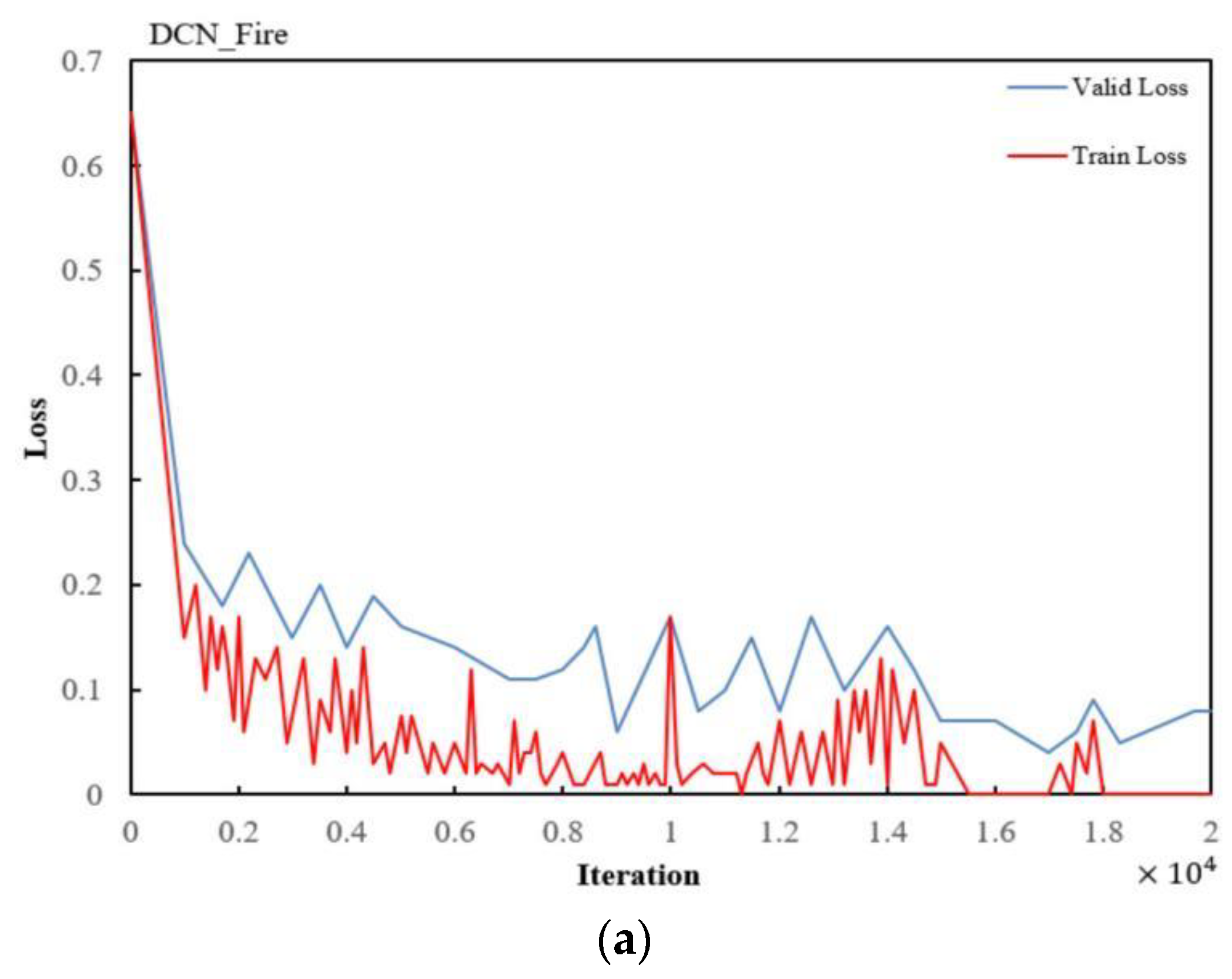

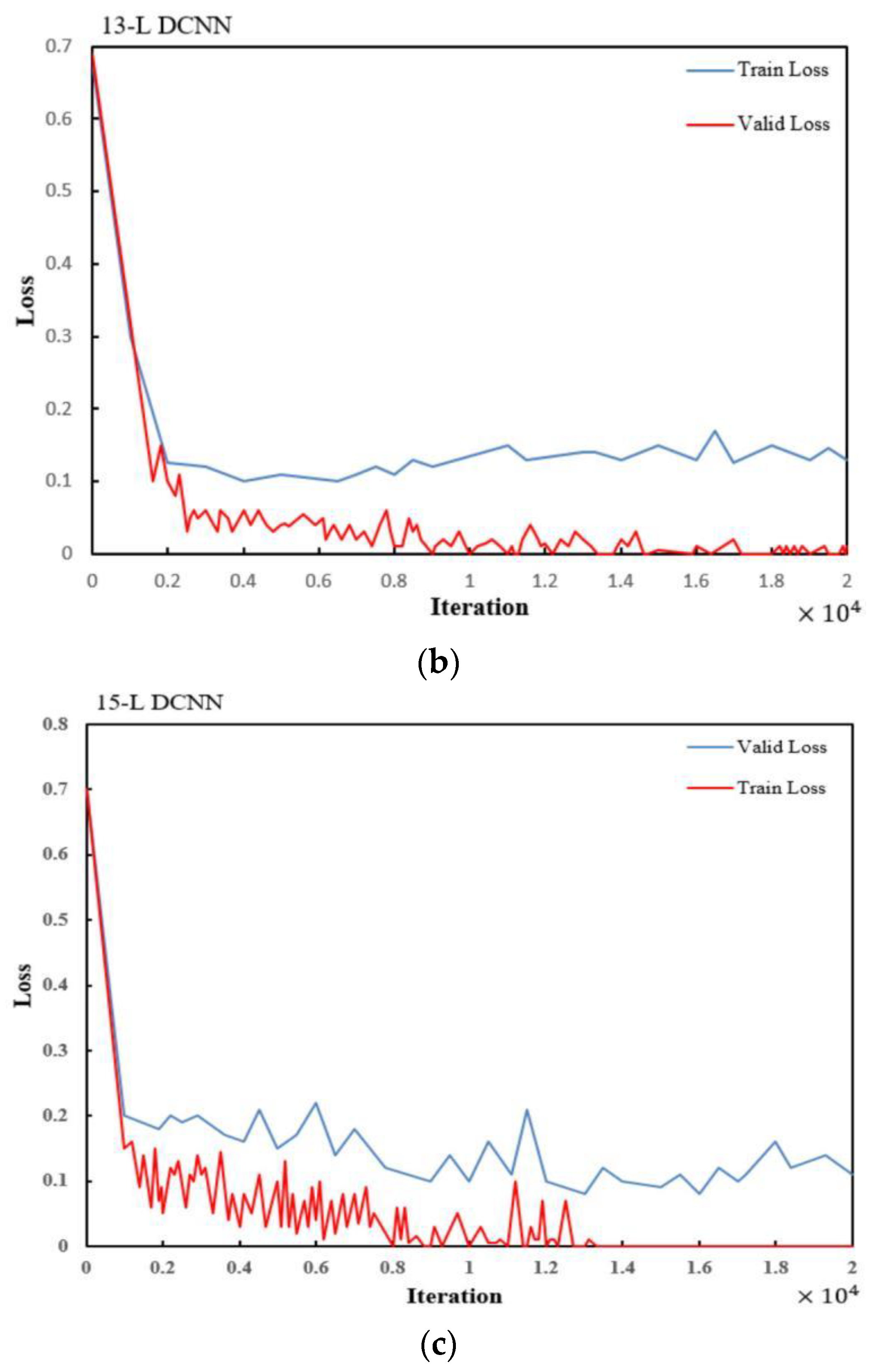

3.3. Optimization of the DCN_Fire Parameters

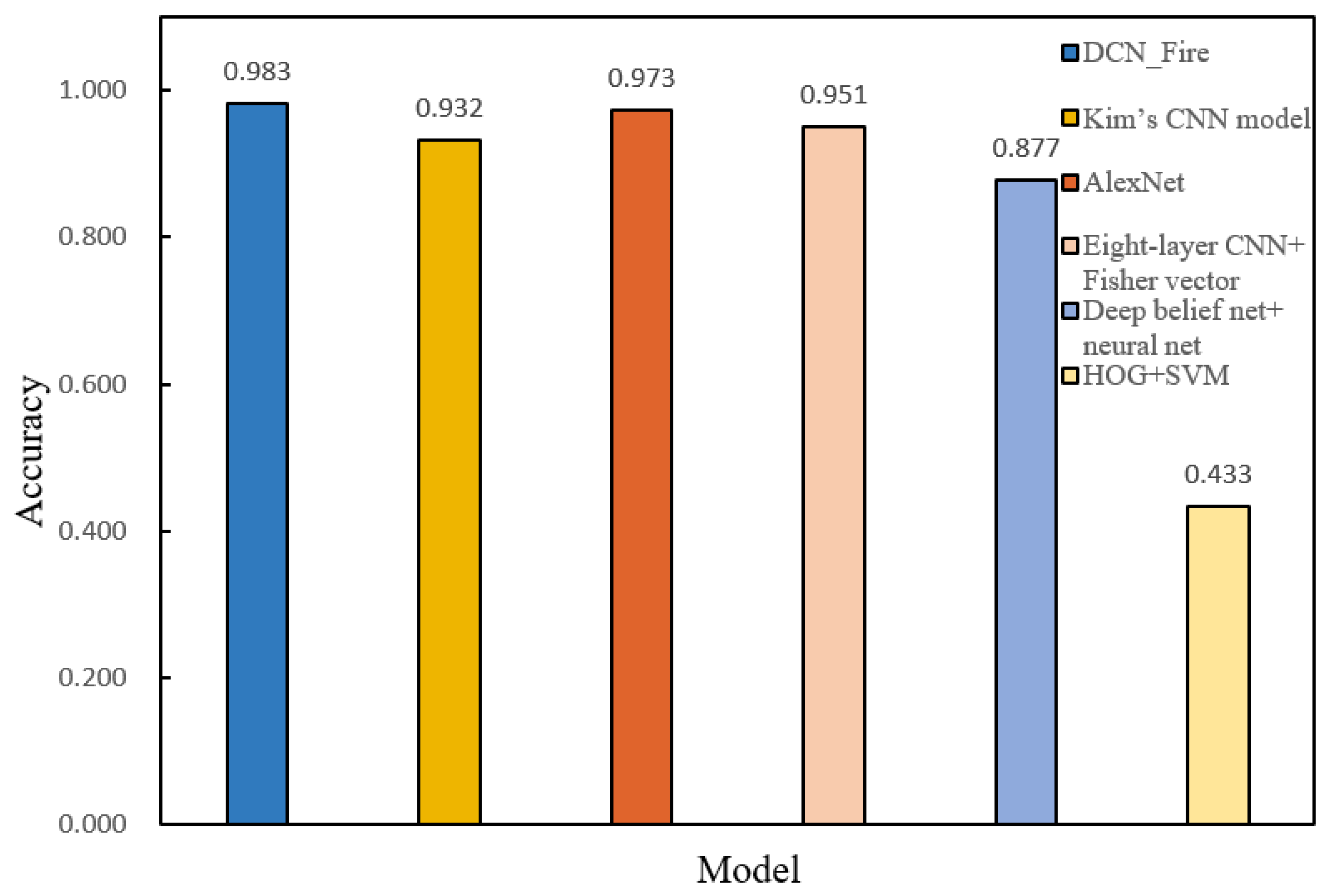

4. Model Validation

4.1. Model Performance Verification

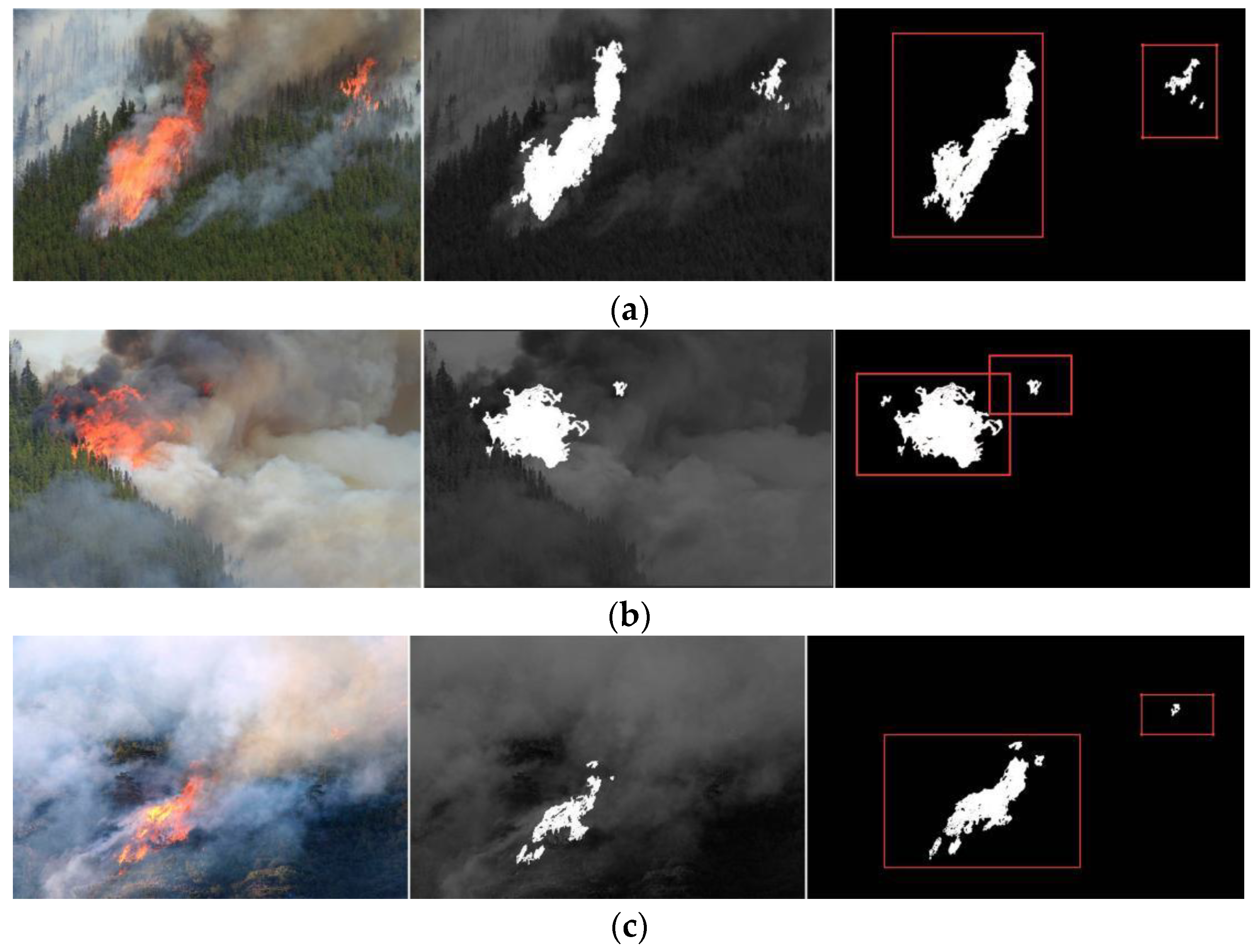

4.2. Analysis of Fire Recognition by the DCN_Fire Model

5. Conclusions

- (1) A DCNN combined with transfer learning was constructed and trained. Multiple pre-trained DCNN models extracted features from forest fire images, and PCA reconstruction converted these features into a shared feature subspace to establish a forest fire risk recognition model. We used 550 flame images to test the fire risk location performance and the segmentation algorithm. For the 240 aerial images, the true positive rate was 96.1% and the false positive rate was 4.8%; for the 310 normal-view images, they were 92.2% and 7.41%, respectively. When the impact of different batch sizes and loss rates on verification accuracy was examined, a loss rate of 0.5 and a batch size of 50 gave the best verification accuracy (0.983).

- (2) The test results of the improved DCNN model were compared with those of other models. In terms of processing time and verification accuracy, the DCN_Fire model was considered the better DCNN architecture for fire risk identification. Among the models whose training and verification losses were calculated, the DCN_Fire model had the lowest losses.

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Structure Name | Input Dimensions | Penultimate Layer | Feature Size |
|---|---|---|---|
| AlexNet | 227 × 227 × 3 | FC7 | 4096 |
| VGG16 | 224 × 224 × 3 | FC2 | 4096 |
| Inception-V3 | any × any × 3 | Pool_3 | 2048 |

| Image Type | Total ROIs Detected | TP | FN | FP | TN | TPR | FPR |
|---|---|---|---|---|---|---|---|
| Aerial fire images (240) | 492 | 329 | 19 | 8 | 136 | 96.1% | 4.8% |
| Normal-view images (310) | 683 | 463 | 47 | 16 | 157 | 92.2% | 7.41% |

| Image Type | Original Database: UAV Images | Original Database: Ordinary Images | Original Database: Total | Enhanced Database: UAV Images | Enhanced Database: Ordinary Images | Enhanced Database: Total |
|---|---|---|---|---|---|---|
| No flames | 354 | 762 | 1116 | 748 | 1476 | 2224 |
| With flames | 247 | 497 | 744 | 457 | 1164 | 1621 |
| Total | 601 | 1259 | 1860 | 1205 | 2640 | 3845 |

| Batch Size | Verification Accuracy (by Batch Size) | Loss Rate | Verification Accuracy (by Loss Rate) |
|---|---|---|---|
| 50 | 0.968 | 0.5 | 0.983 |
| 64 | 0.971 | 0.6 | 0.967 |
| 48 | 0.972 | 0.4 | 0.964 |
| 128 | 0.965 | 0.7 | 0.952 |
| 32 | 0.961 | 0.3 | 0.949 |
| 16 | 0.949 | 0.2 | 0.947 |

| TP | FN | TN | FP | TPR | TNR | FNR | Overall Accuracy |
|---|---|---|---|---|---|---|---|
| 592 | 8 | 588 | 12 | 98.7% | 98.0% | 1.3% | 98.3% |
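As a worked check, the rates in the table above can be recomputed from the four raw counts using the standard confusion-matrix definitions. The function name `confusion_metrics` is a hypothetical helper introduced only for this sketch.

```python
def confusion_metrics(tp: int, fn: int, tn: int, fp: int) -> dict:
    """Standard rates derived from confusion-matrix counts."""
    return {
        "tpr": tp / (tp + fn),  # true positive rate (sensitivity)
        "tnr": tn / (tn + fp),  # true negative rate (specificity)
        "fnr": fn / (tp + fn),  # false negative rate = 1 - TPR
        "accuracy": (tp + tn) / (tp + fn + tn + fp),
    }


# Counts taken from the validation table.
metrics = confusion_metrics(tp=592, fn=8, tn=588, fp=12)
for name, value in metrics.items():
    print(f"{name}: {value:.1%}")
# tpr: 98.7%
# tnr: 98.0%
# fnr: 1.3%
# accuracy: 98.3%
```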

| Model | Classifier | Accuracy |
|---|---|---|
| DCN_Fire | Softmax | 98.3% |
| Kim’s CNN model | Softmax | 93.2% |
| AlexNet | Softmax | 97.3% |
| Eight-layer CNN + Fisher vector | SVM | 95.1% |
| HOG + SVM | SVM | 43.3% |
| Deep belief net + neural net | BPNN | 87.7% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zheng, S.; Gao, P.; Wang, W.; Zou, X. A Highly Accurate Forest Fire Prediction Model Based on an Improved Dynamic Convolutional Neural Network. Appl. Sci. 2022, 12, 6721. https://doi.org/10.3390/app12136721
