Virtual Reality-Based Interface for Advanced Assisted Mobile Robot Teleoperation

Abstract

1. Introduction

1.1. Motivation

1.2. Literature Review

Virtual Reality-Based Interfaces

1.3. Proposal

- Unlike the works mentioned above, this work presents an intuitive interface designed to teleoperate mobile robots in completely unknown environments. To this end, the user guides the robot through the environment, contributing human intelligence and adaptability, while the robot automatically avoids collisions with objects in the environment, contributing its fast response.

- Contrary to the aforementioned works, the proposed interface does not pursue realism in the virtual environment; instead, it provides the minimum set of elements the user needs to carry out the teleoperation task in a natural and intuitive way. To this end, the interface includes several virtual elements (e.g., the mobile robot, the user reference, a 2D map of the environment, information about the robot or the task, and the 3D positions of objects detected in real time, among others) that allow the user to interact quickly with the interface and successfully perform the robot teleoperation task.

- In contrast to the virtual reality interfaces mentioned above, which rely on VR controllers to interact with the virtual environment, this work proposes the use of gamepads for this interaction. The aim is to improve user ergonomics so that operators can teleoperate the robots naturally over long periods of time.

- This work focuses on improving the interaction between human users and interfaces for mobile robot teleoperation. Hence, in addition to conventional studies similar to those in the abovementioned works, which establish the viability and efficiency of the proposed interface, this work also studies the experience of users of different ages, genders, and backgrounds when using the interface, in order to assess how natural and intuitive it is.
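
In this design the user steers by moving a reference for the robot with a gamepad instead of VR controllers. The gamepad mapping is not detailed in this section, so the following Python sketch assumes a hypothetical mapping in which one analog stick (axes in [-1, 1]) moves a planar reference at a bounded speed, with a small deadzone to suppress stick drift. The names `Reference`, `apply_deadzone` and `update_reference`, the 0.15 deadzone and the 0.5 m/s speed are illustrative assumptions, not values taken from this work.

```python
import math
from dataclasses import dataclass


@dataclass
class Reference:
    """Planar user reference (x, y), in metres, in the virtual environment frame."""
    x: float = 0.0
    y: float = 0.0


def apply_deadzone(value: float, deadzone: float = 0.15) -> float:
    """Zero small stick deflections and rescale the rest back to [-1, 1].

    The 0.15 deadzone is an assumed value.
    """
    if abs(value) < deadzone:
        return 0.0
    return math.copysign((abs(value) - deadzone) / (1.0 - deadzone), value)


def update_reference(ref: Reference, stick_x: float, stick_y: float,
                     dt: float, max_speed: float = 0.5) -> Reference:
    """Integrate one gamepad stick sample into a new reference position.

    stick_x, stick_y: raw axis values in [-1, 1] (hypothetical axis mapping).
    dt: time since the previous sample, in seconds.
    max_speed: reference speed in m/s at full deflection (assumed value).
    """
    vx = apply_deadzone(stick_x) * max_speed
    vy = apply_deadzone(stick_y) * max_speed
    return Reference(ref.x + vx * dt, ref.y + vy * dt)
```

For example, `update_reference(Reference(), 1.0, 0.1, dt=0.1)` moves the reference 0.05 m along x and ignores the small y deflection. Integrating a velocity, rather than mapping stick deflection directly to position, keeps the reference continuous, which suits a robot that tracks it. Section 2.2 notes that reference motion is blocked while teleporting is active; in that mode a caller would simply skip this update.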

1.4. Content of the Article

2. Proposed Application

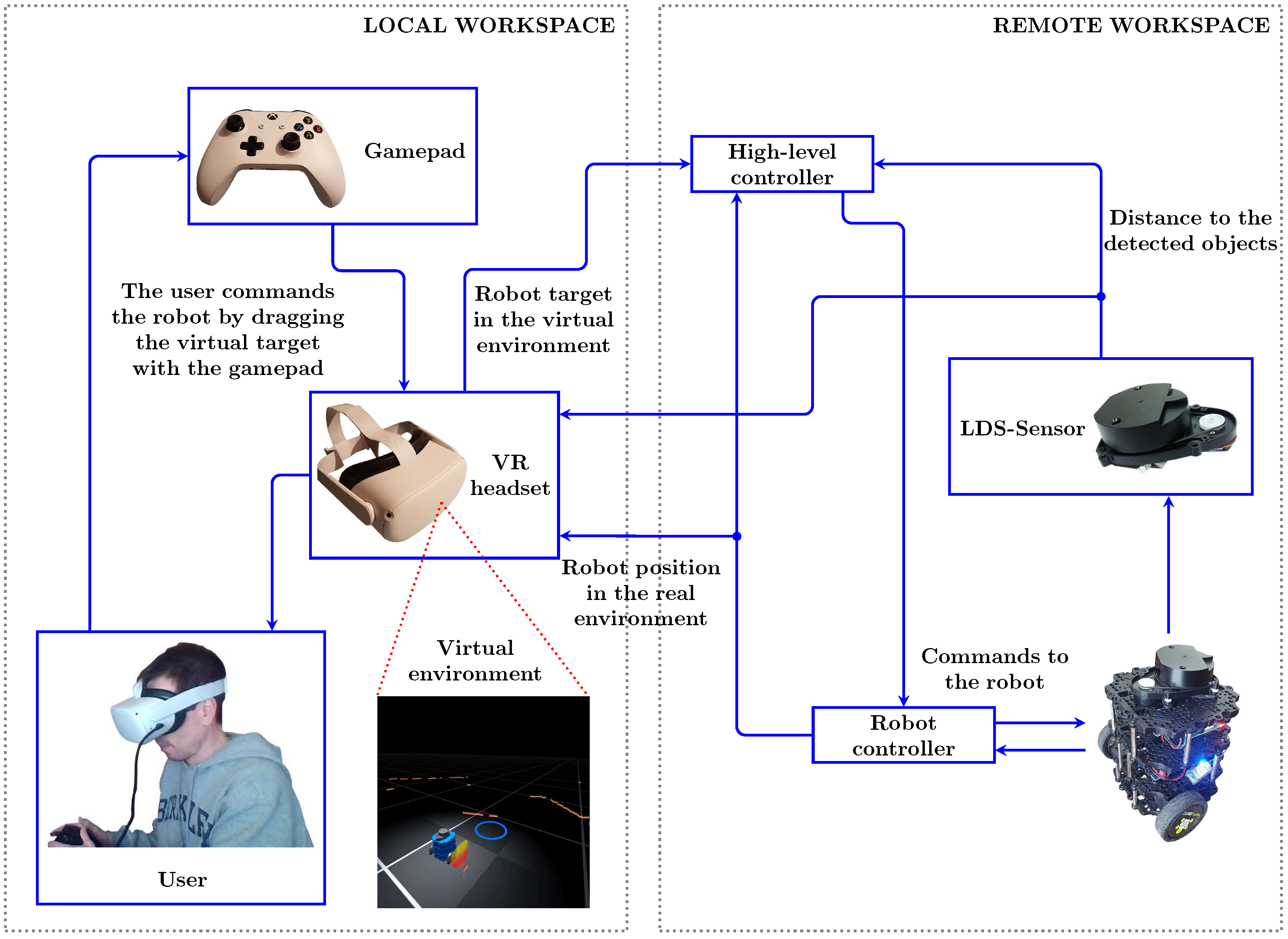

2.1. Overview

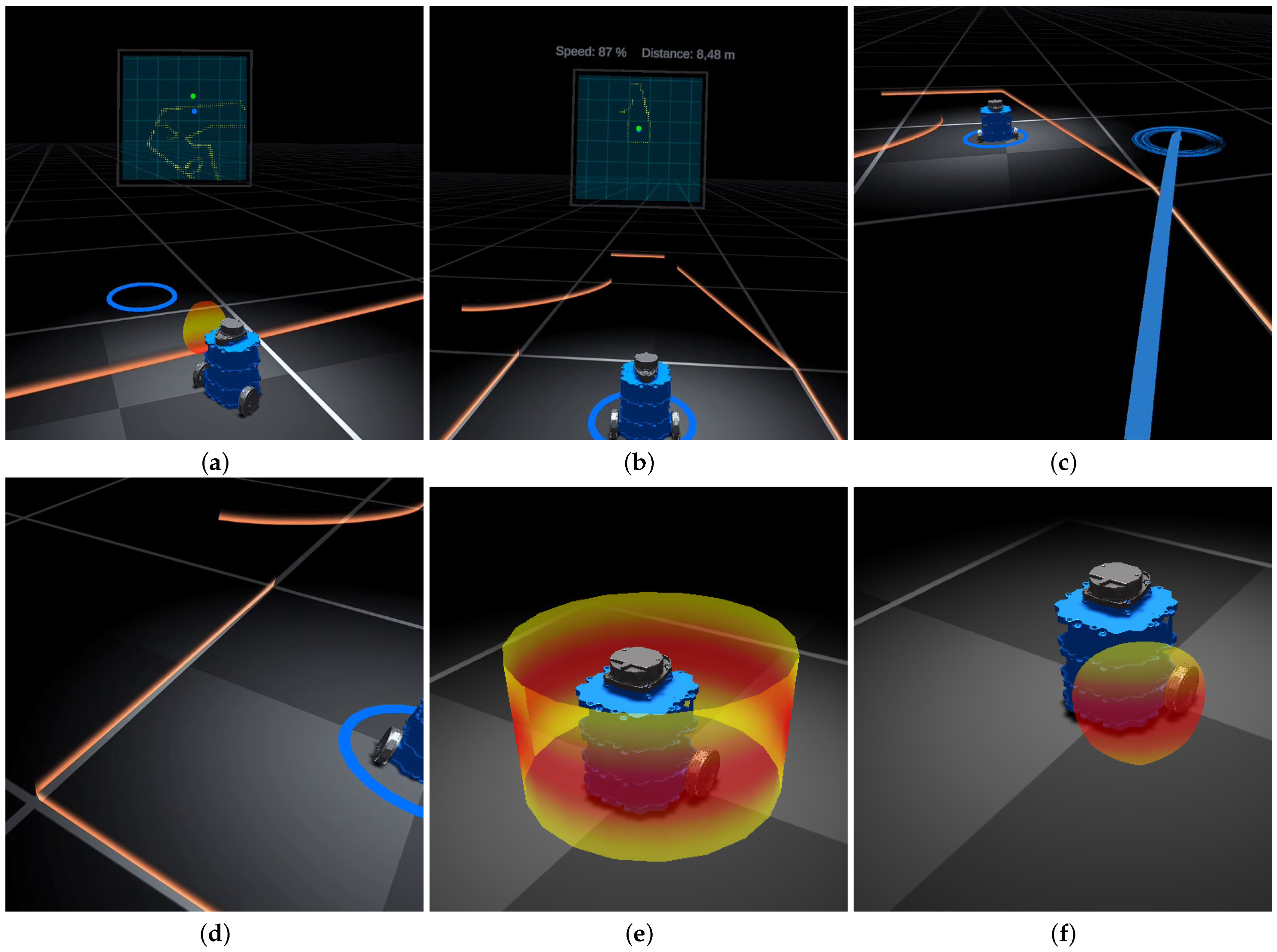

2.2. Virtual Environment

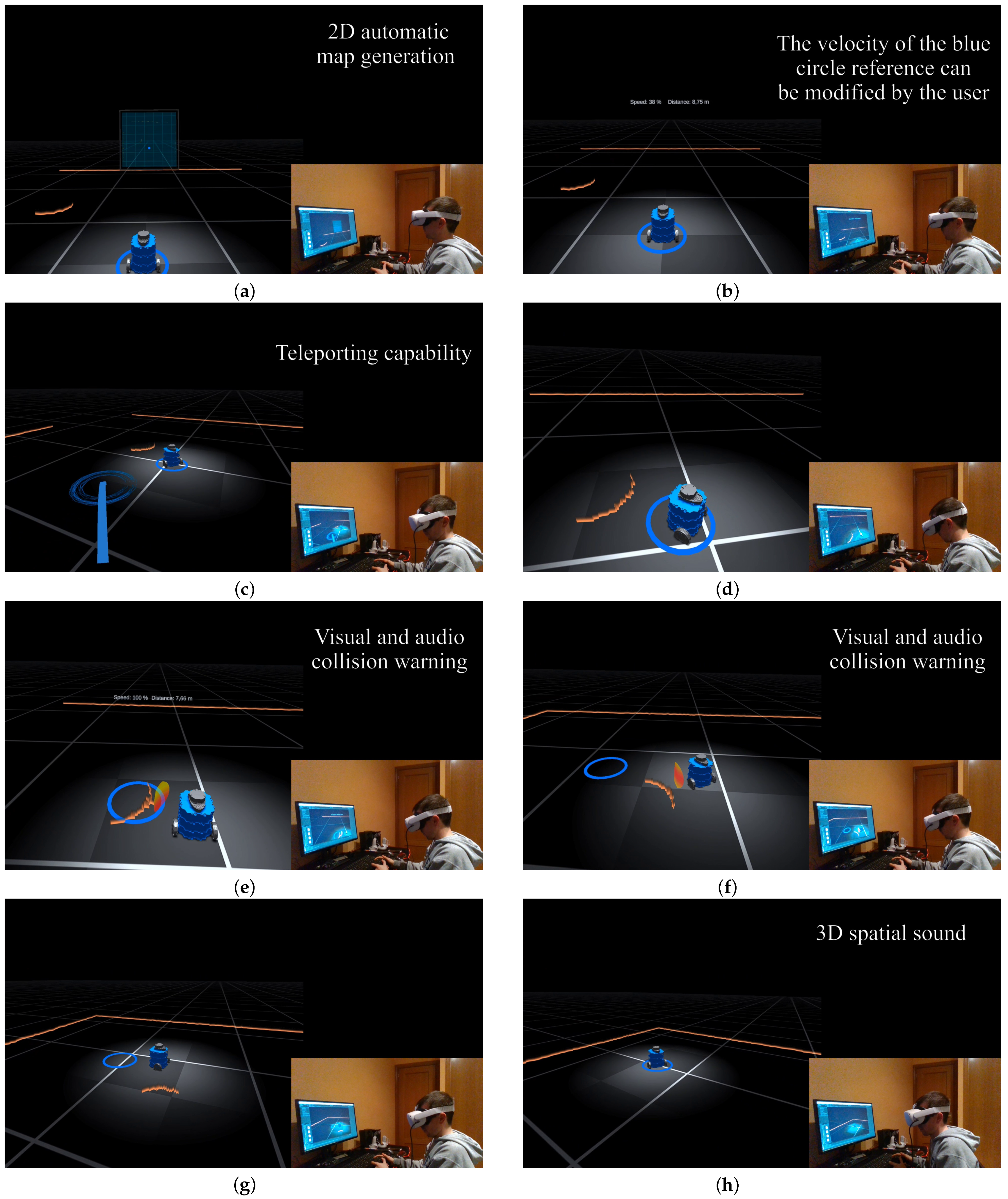

- Physically: the position and orientation of the VR headset are tracked at all times, so the user can move through the environment as if they were in the real workspace. In general, this movement is limited to an obstacle-free safety region established a priori; one way to overcome this limitation would be to use VR omnidirectional treadmills [58].

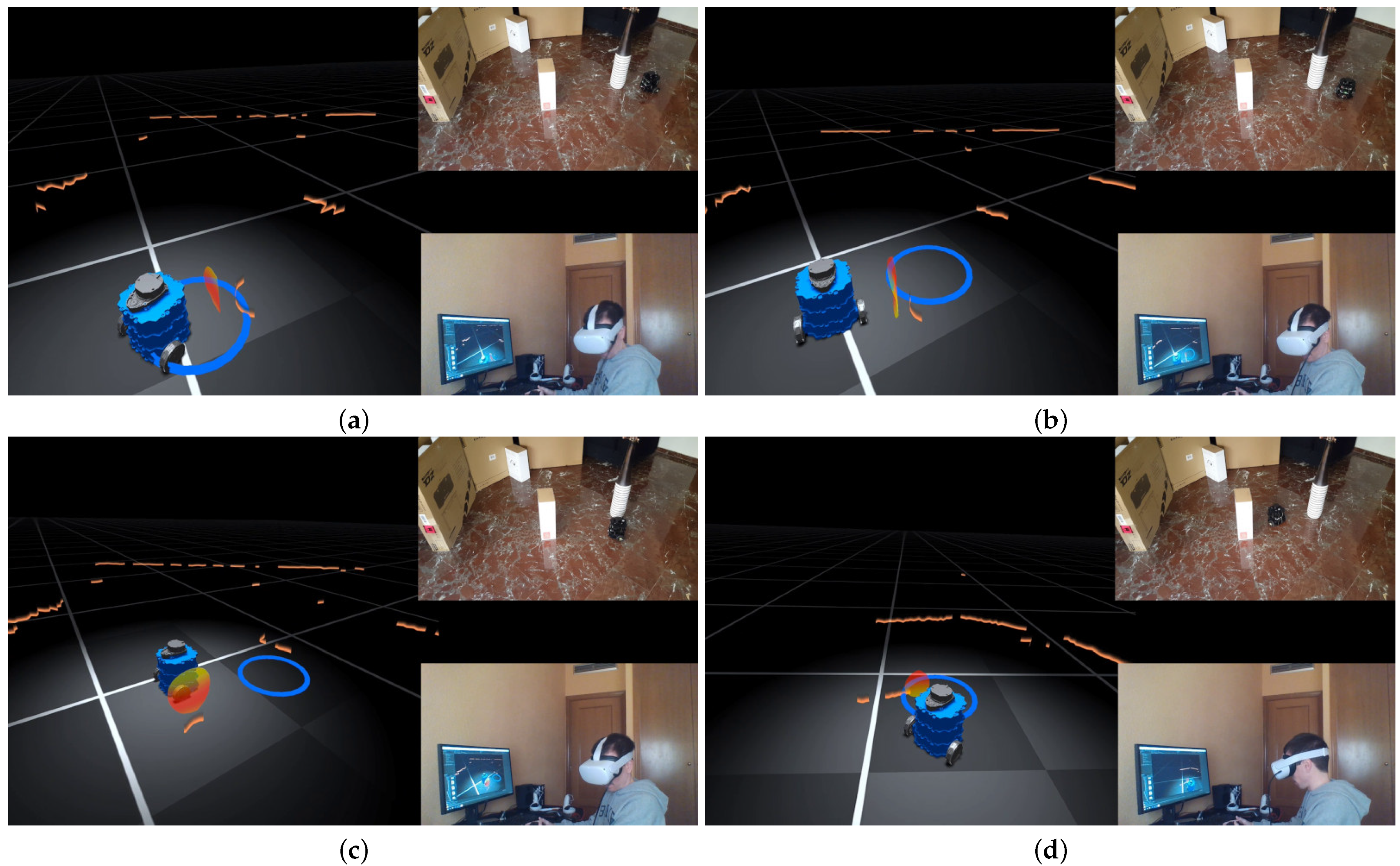

- Teleporting: the user can “jump” from their current position to another position in the environment using the gamepad. Figure 2c shows the designed teleporting element, an animated blue circle with arrows, which follows the standard representation of teleporting in most current VR applications. Note that, for safety reasons, the user cannot move the reference position of the robot while the teleporting option is active.

- The movement of the robot produces a characteristic sound from its servos, whose pitch varies with the robot's speed. For added realism, this base sound is recorded from the real robot moving at low speed, and its pitch is then shifted in proportion to the wheel speed, producing a realistic sensation of robot motion in the VE. The user cannot disable this sound effect. In addition, it is a 3D sound that varies with the distance between the user and the robot, which increases the user's immersion in the task.

- An alarm sound is also included to warn the user of collisions between the robot boundary and obstacles in the environment. Like the motor sound, it is a 3D sound. Unlike the motor sound, however, the user can deactivate it, because the proposed assisted teleoperation approach can lead to situations where the user, for instance, takes the robot into areas where collisions occur or into very tight zones where collisions cannot be avoided. In either case, the user's attention would already be on the robot, so the visual effect of the boundary alone would suffice. Moreover, hearing this warning for long periods could become annoying.
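
The two sound cues above can be summarized as a few small functions. The following Python sketch assumes a linear mapping from wheel speed to a pitch multiplier (the text states only that the pitch change is proportional to wheel speed), an inverse-distance gain for the 3D effect, and a circular robot boundary tested against detected obstacle points. All function names and parameter values are hypothetical. In Unity, which appears in the reference list, comparable settings are exposed on `AudioSource` (for example `pitch`, `volume` and the 3D rolloff options) rather than computed by hand.

```python
import math
from typing import Iterable, Tuple

Point = Tuple[float, float]


def motor_pitch(wheel_speed: float, max_wheel_speed: float,
                base_pitch: float = 1.0, max_pitch: float = 2.0) -> float:
    """Pitch multiplier for the recorded low-speed motor sound.

    The pitch change grows linearly with |wheel_speed| and saturates at
    max_pitch (base_pitch and max_pitch are assumed values).
    """
    ratio = min(abs(wheel_speed) / max_wheel_speed, 1.0)
    return base_pitch + (max_pitch - base_pitch) * ratio


def distance_gain(distance: float, min_distance: float = 1.0) -> float:
    """Assumed inverse-distance attenuation: full volume within min_distance."""
    if distance <= min_distance:
        return 1.0
    return min_distance / distance


def alarm_should_play(robot_xy: Point, robot_radius: float,
                      obstacles_xy: Iterable[Point], alarm_enabled: bool) -> bool:
    """True if an obstacle point lies inside the (assumed circular) robot
    boundary and the user has not muted the alarm."""
    if not alarm_enabled:  # the user may mute the alarm; the motor sound has no such switch
        return False
    rx, ry = robot_xy
    return any(math.hypot(ox - rx, oy - ry) < robot_radius
               for ox, oy in obstacles_xy)
```

Keeping the mute flag only on the alarm mirrors the asymmetry described above: the motor sound always plays, while the collision warning can be silenced when the user deliberately works in tight zones.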

2.3. High Level Controller: Mobile Robot Navigation with the Potential Field-Based Method
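
For reference, the classical potential-field method [60] treats the goal as an attractive potential U_att = ½ k_att ‖p − p_goal‖² and each obstacle within an influence distance ρ₀ as a repulsive potential U_rep = ½ η (1/ρ − 1/ρ₀)², and drives the robot along the negative gradient of their sum. The Python sketch below is a generic textbook version, not the formulation used in this work. It assumes that the user reference acts as the goal, that obstacles are available as 2D points (e.g., the real-time detections shown in the interface), and that the resulting virtual force is mapped to the linear and angular velocities (v, ω) of a differential-drive robot. All gains, `rho0` and the velocity limits are hypothetical.

```python
import math


def potential_field_force(pos, goal, obstacles, k_att=1.0, eta=0.05, rho0=0.5):
    """Return the 2D virtual force -grad(U_att + U_rep) at pos.

    U_att = 0.5 * k_att * |pos - goal|^2
    U_rep = 0.5 * eta * (1/rho - 1/rho0)^2 for each obstacle point with rho <= rho0
    """
    fx = -k_att * (pos[0] - goal[0])
    fy = -k_att * (pos[1] - goal[1])
    for ox, oy in obstacles:
        dx, dy = pos[0] - ox, pos[1] - oy
        rho = math.hypot(dx, dy)
        if 0.0 < rho <= rho0:  # skip rho == 0 to avoid division by zero
            # Magnitude eta*(1/rho - 1/rho0)/rho^2, directed away from the obstacle
            mag = eta * (1.0 / rho - 1.0 / rho0) / rho ** 2
            fx += mag * dx / rho
            fy += mag * dy / rho
    return fx, fy


def force_to_unicycle(force, theta, k_v=0.5, k_w=2.0, v_max=0.22, w_max=2.84):
    """Map the virtual force to (v, omega) for a differential-drive robot.

    theta is the robot heading in radians; gains and limits are assumed values.
    """
    fx, fy = force
    desired = math.atan2(fy, fx)
    # Wrap the heading error to [-pi, pi]
    error = math.atan2(math.sin(desired - theta), math.cos(desired - theta))
    # Forward speed from the force component along the current heading (no reversing)
    v = k_v * (fx * math.cos(theta) + fy * math.sin(theta))
    v = max(0.0, min(v, v_max))
    w = max(-w_max, min(k_w * error, w_max))
    return v, w
```

A known limitation of pure potential fields is that the robot can stall in local minima, for example when the goal lies directly behind a concave obstacle. In a shared-control scheme, a human-guided reference can help escape such situations by moving the goal.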

3. Results

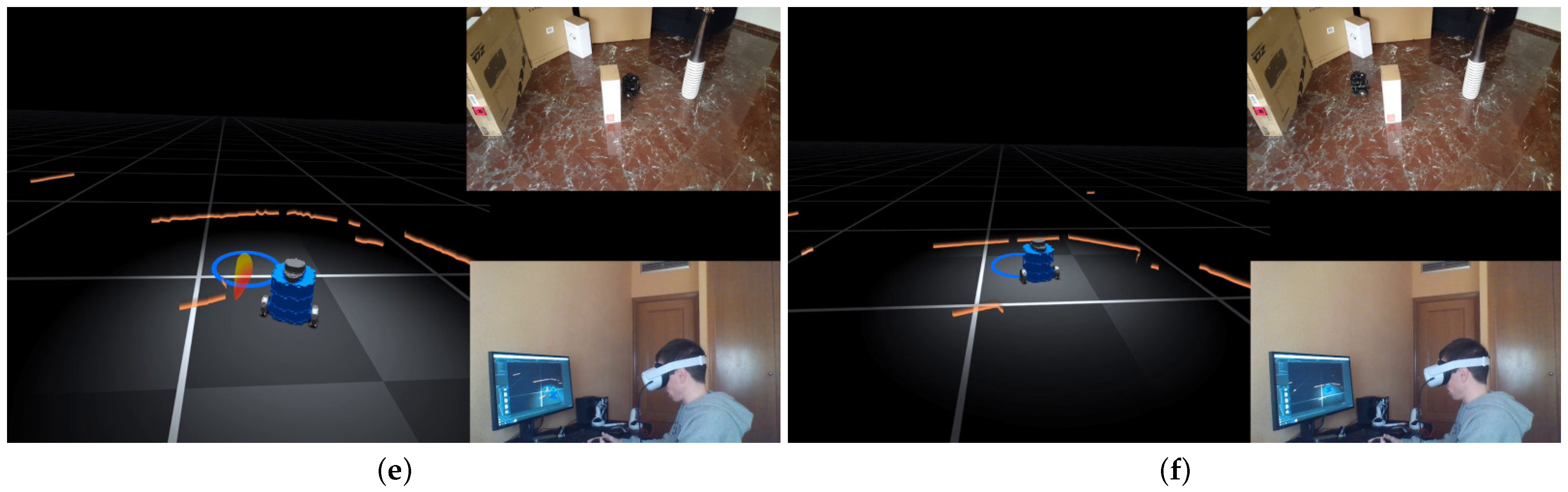

3.1. Case Study 1: Virtual Application Functionalities and Behavior

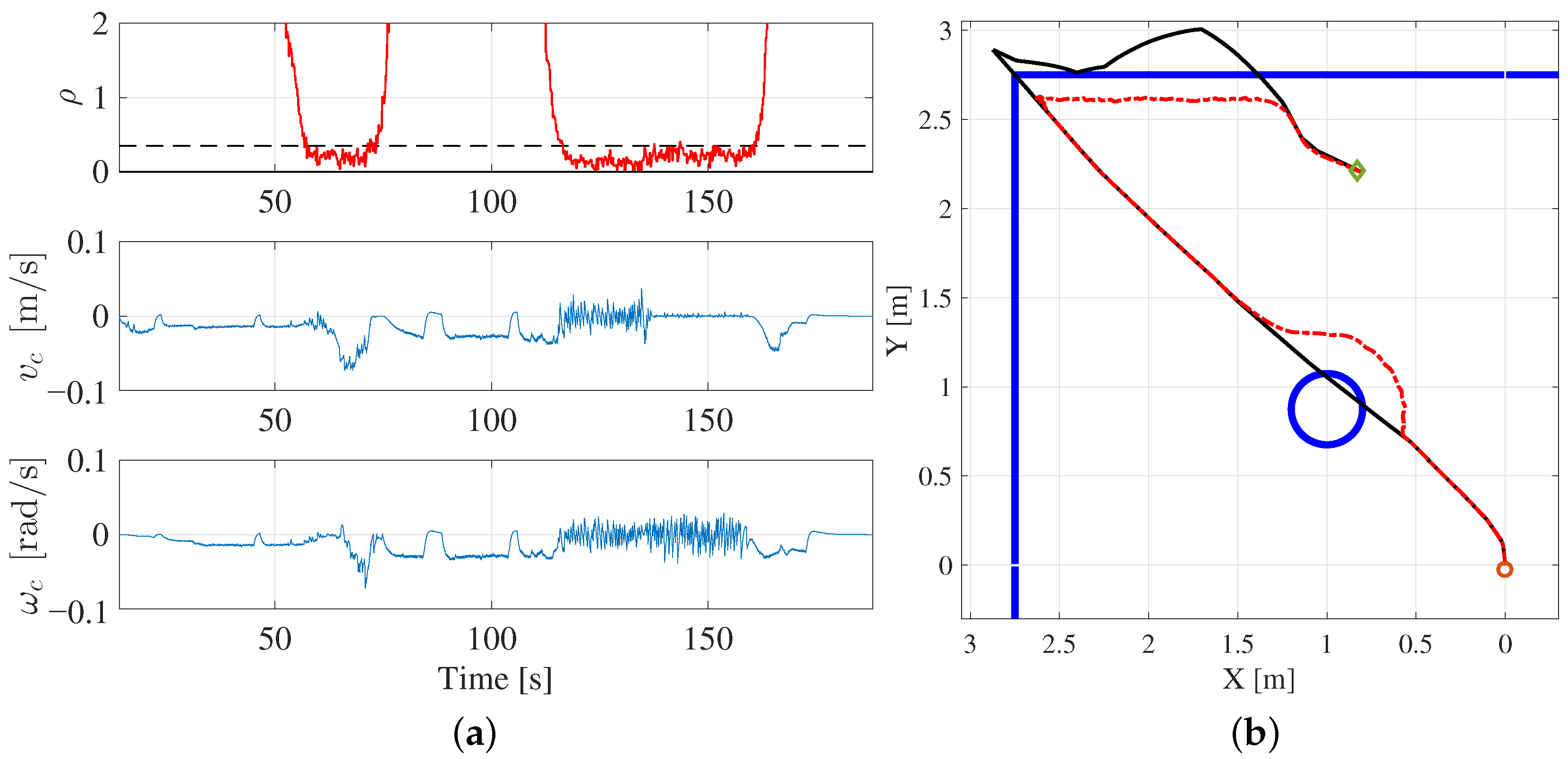

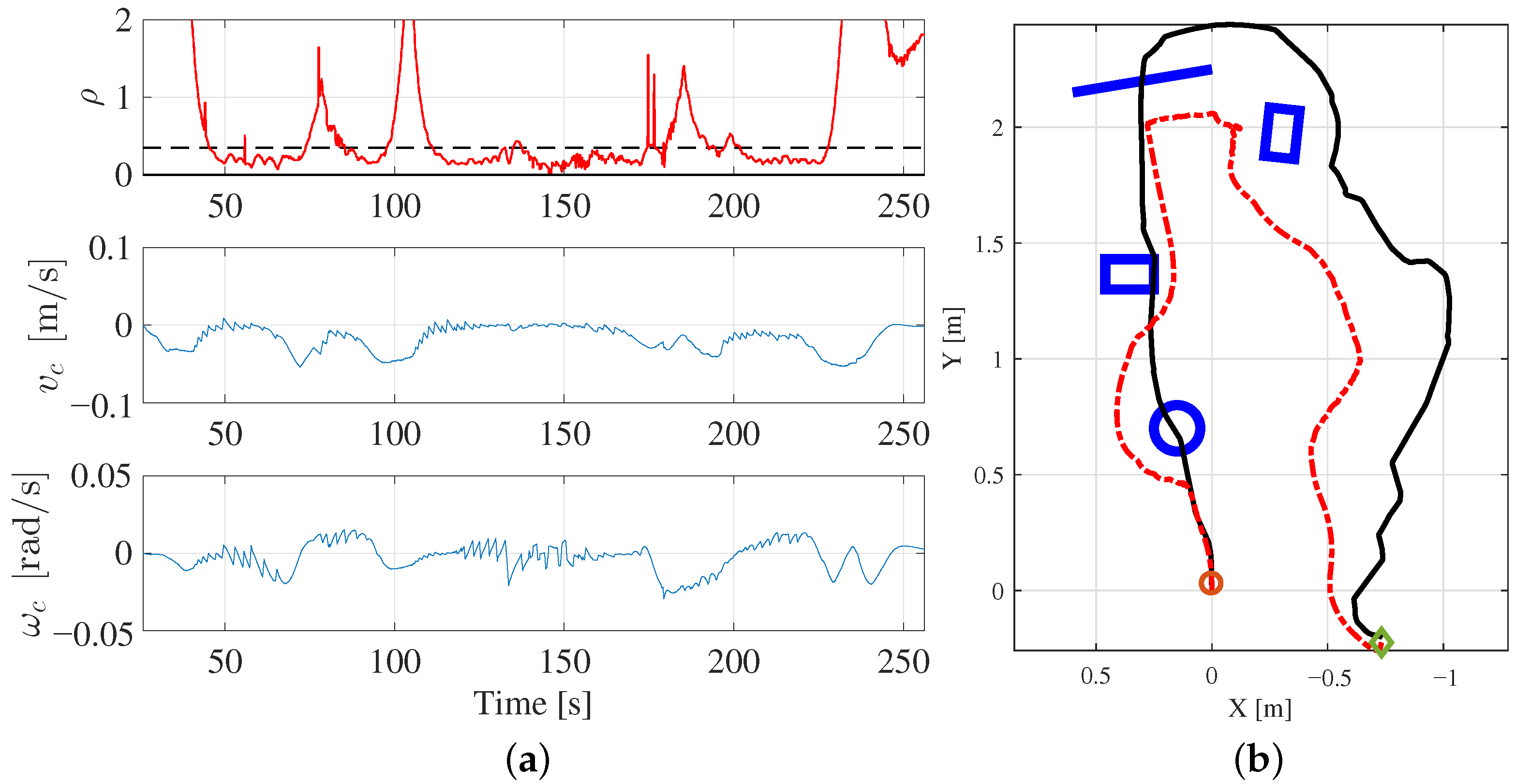

3.2. Case Study 2: Real Robot Behavior

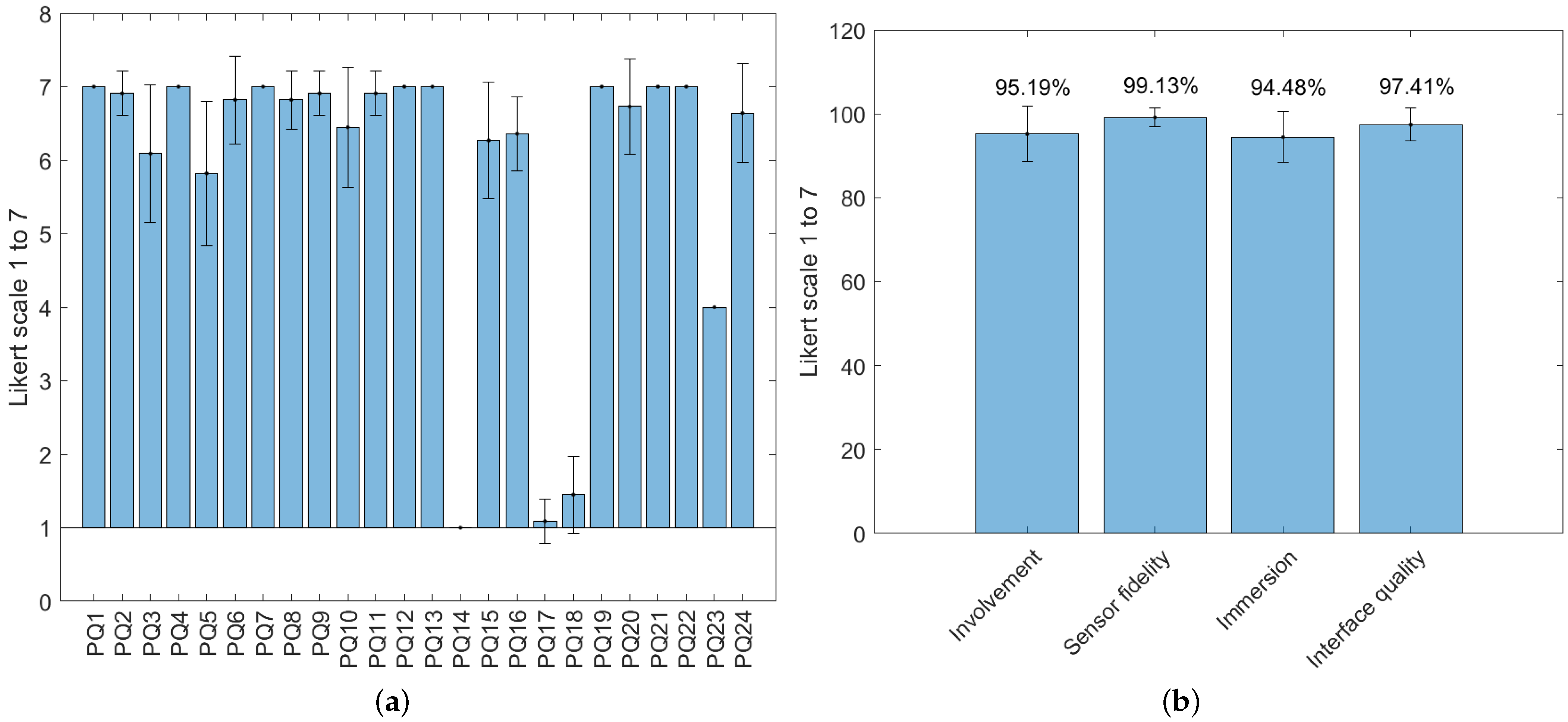

3.3. Usability Analysis Results

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Saputra, R.P.; Rakicevic, N.; Kuder, I.; Bilsdorfer, J.; Gough, A.; Dakin, A.; de Cocker, E.; Rock, S.; Harpin, R.; Kormushev, P. ResQbot 2.0: An Improved Design of a Mobile Rescue Robot with an Inflatable Neck Securing Device for Safe Casualty Extraction. Appl. Sci. 2021, 11, 5414.

- Habibian, S.; Dadvar, M.; Peykari, B.; Hosseini, A.; Salehzadeh, M.H.; Hosseini, A.H.M.; Najafi, F. Design and implementation of a maxi-sized mobile robot (Karo) for rescue missions. ROBOMECH J. 2021, 8, 1.

- Sun, Z.; Yang, H.; Ma, Y.; Wang, X.; Mo, Y.; Li, H.; Jiang, Z. BIT-DMR: A Humanoid Dual-Arm Mobile Robot for Complex Rescue Operations. IEEE Robot. Autom. Lett. 2022, 7, 802–809.

- Schuster, M.J.; Müller, M.G.; Brunner, S.G.; Lehner, H.; Lehner, P.; Sakagami, R.; Dömel, A.; Meyer, L.; Vodermayer, B.; Giubilato, R.; et al. The ARCHES Space-Analogue Demonstration Mission: Towards Heterogeneous Teams of Autonomous Robots for Collaborative Scientific Sampling in Planetary Exploration. IEEE Robot. Autom. Lett. 2020, 5, 5315–5322.

- Jia, X.; Sun, C.; Fu, J. Mobile Augmented Reality Centred IETM System for Shipping Applications. Int. J. Robot. Autom. 2022, 37, 147–162.

- Yin, K.; Sun, Q.; Gao, F.; Zhou, S. Lunar surface soft-landing analysis of a novel six-legged mobile lander with repetitive landing capacity. Proc. Inst. Mech. Eng. Part C J. Mech. Eng. Sci. 2022, 236, 1214–1233.

- Kot, T.; Novák, P. Application of virtual reality in teleoperation of the military mobile robotic system TAROS. Int. J. Adv. Robot. Syst. 2018, 15, 1–6.

- Kavitha, S.; SadishKumar, S.T.; Menaga, T.; Gomathi, E.; Sanjay, M.; Abarna, V.S. Military Based Voice Controlled Spy Bot with Weapon Detector. Biosci. Biotechnol. Res. Commun. 2020, 13, 142–146.

- Grabowski, A.; Jankowski, J.; Wodzyński, M. Teleoperated mobile robot with two arms: The influence of a human-machine interface, VR training and operator age. Int. J. Hum.-Comput. Stud. 2021, 156, 102707.

- Li, C.; Li, B.; Wang, R.; Zhang, X. A survey on visual servoing for wheeled mobile robots. Int. J. Intell. Robot. Appl. 2021, 5, 203–218.

- Szrek, J.; Jakubiak, J.; Zimroz, R. A Mobile Robot-Based System for Automatic Inspection of Belt Conveyors in Mining Industry. Energies 2022, 15, 327.

- Khalaji, A.K.; Zahedifar, R. Lyapunov-Based Formation Control of Underwater Robots. Robotica 2020, 38, 1105–1122.

- Mahmud, M.S.A.; Abidin, M.S.Z.; Buyamin, S.; Emmanuel, A.A.; Hasan, H.S. Multi-objective Route Planning for Underwater Cleaning Robot in Water Reservoir Tank. J. Intell. Robot. Syst. 2021, 101, 9.

- Doss, A.S.A.; Venkatesh, D.; Ovinis, M. Simulation and experimental studies of a mobile robot for underwater applications. Int. J. Robot. Autom. 2021, 36, 10–17.

- Guzman Ortiz, E.; Andres, B.; Fraile, F.; Poler, R.; Ortiz Bas, A. Fleet Management System for Mobile Robots in Healthcare Environments. J. Ind. Eng. Manag. 2021, 14, 55–71.

- Law, M.; Ahn, H.S.; Broadbent, E.; Peri, K.; Kerse, N.; Topou, E.; Gasteiger, N.; MacDonald, B. Case studies on the usability, acceptability and functionality of autonomous mobile delivery robots in real-world healthcare settings. Intell. Serv. Robot. 2021, 14, 387–398.

- Lim, H.; Kim, S.W.; Song, J.B.; Cha, Y. Thin Piezoelectric Mobile Robot Using Curved Tail Oscillation. IEEE Access 2021, 9, 145477–145485.

- Cardoso, J.C.S. Comparison of Gesture, Gamepad, and Gaze-Based Locomotion for VR Worlds. In Proceedings of the 22nd ACM Conference on Virtual Reality Software and Technology, Munich, Germany, 2–4 November 2016; pp. 319–320.

- Kitson, A.; Hashemian, A.M.; Stepanova, E.R.; Kruijff, E.; Riecke, B.E. Comparing leaning-based motion cueing interfaces for virtual reality locomotion. In Proceedings of the 2017 IEEE Symposium on 3D User Interfaces (3DUI), Los Angeles, CA, USA, 18–19 March 2017; pp. 73–82.

- Zhao, J.; Allison, R.S. Comparing head gesture, hand gesture and gamepad interfaces for answering Yes/No questions in virtual environments. Virtual Real. 2019, 24, 515–524.

- Solanes, J.E.; Muñoz, A.; Gracia, L.; Martí, A.; Girbés-Juan, V.; Tornero, J. Teleoperation of industrial robot manipulators based on augmented reality. Int. J. Adv. Manuf. Technol. 2020, 111, 1077–1097.

- Niemeyer, G.; Preusche, C.; Stramigioli, S.; Lee, D. Telerobotics. In Springer Handbook of Robotics; Siciliano, B., Khatib, O., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 1085–1108.

- Bandala, M.; West, C.; Monk, S.; Montazeri, A.; Taylor, C.J. Vision-Based Assisted Tele-Operation of a Dual-Arm Hydraulically Actuated Robot for Pipe Cutting and Grasping in Nuclear Environments. Robotics 2019, 8, 42.

- Abi-Farraj, F.; Pacchierotti, C.; Arenz, O.; Neumann, G.; Giordano, P.R. A Haptic Shared-Control Architecture for Guided Multi-Target Robotic Grasping. IEEE Trans. Haptics 2020, 13, 270–285.

- Suarez, A.; Real, F.; Vega, V.M.; Heredia, G.; Rodriguez-Castaño, A.; Ollero, A. Compliant Bimanual Aerial Manipulation: Standard and Long Reach Configurations. IEEE Access 2020, 8, 88844–88865.

- Isop, W.A.; Gebhardt, C.; Nägeli, T.; Fraundorfer, F.; Hilliges, O.; Schmalstieg, D. High-Level Teleoperation System for Aerial Exploration of Indoor Environments. Front. Robot. AI 2019, 6, 95.

- Brantner, G.; Khatib, O. Controlling Ocean One: Human–robot collaboration for deep-sea manipulation. J. Field Robot. 2021, 38, 28–51.

- Sivčev, S.; Coleman, J.; Omerdić, E.; Dooly, G.; Toal, D. Underwater manipulators: A review. Ocean Eng. 2018, 163, 431–450.

- Chen, H.; Huang, P.; Liu, Z. Mode Switching-Based Symmetric Predictive Control Mechanism for Networked Teleoperation Space Robot System. IEEE/ASME Trans. Mechatron. 2019, 24, 2706–2717.

- Yoon, H.; Jeong, J.H.; Yi, B. Image-Guided Dual Master–Slave Robotic System for Maxillary Sinus Surgery. IEEE Trans. Robot. 2018, 34, 1098–1111.

- Saracino, A.; Oude-Vrielink, T.J.C.; Menciassi, A.; Sinibaldi, E.; Mylonas, G.P. Haptic Intracorporeal Palpation Using a Cable-Driven Parallel Robot: A User Study. IEEE Trans. Biomed. Eng. 2020, 67, 3452–3463.

- Chen, Y.; Zhang, S.; Wu, Z.; Yang, B.; Luo, Q.; Xu, K. Review of surgical robotic systems for keyhole and endoscopic procedures: State of the art and perspectives. Front. Med. 2020, 14, 382–403.

- Kapoor, A.; Li, M.; Taylor, R.H. Spatial Motion Constraints for Robot Assisted Suturing Using Virtual Fixtures. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2005, Palm Springs, CA, USA, 26–29 October 2005; Duncan, J.S., Gerig, G., Eds.; Springer: Berlin/Heidelberg, Germany, 2005; pp. 89–96.

- Kono, H.; Mori, T.; Ji, Y.; Fujii, H.; Suzuki, T. Development of Perilous Environment Estimation System Using a Teleoperated Rescue Robot with On-board LiDAR. In Proceedings of the 2019 IEEE/SICE International Symposium on System Integration (SII), Paris, France, 14–16 January 2019; pp. 7–10.

- Johnson, M.; Vera, A. No AI Is an Island: The Case for Teaming Intelligence. AI Mag. 2019, 40, 16–28.

- Selvaggio, M.; Abi-Farraj, F.; Pacchierotti, C.; Giordano, P.R.; Siciliano, B. Haptic-Based Shared-Control Methods for a Dual-Arm System. IEEE Robot. Autom. Lett. 2018, 3, 4249–4256.

- Nicolis, D.; Palumbo, M.; Zanchettin, A.M.; Rocco, P. Occlusion-Free Visual Servoing for the Shared Autonomy Teleoperation of Dual-Arm Robots. IEEE Robot. Autom. Lett. 2018, 3, 796–803.

- Lu, Z.; Huang, P.; Liu, Z. Predictive Approach for Sensorless Bimanual Teleoperation Under Random Time Delays With Adaptive Fuzzy Control. IEEE Trans. Ind. Electron. 2018, 65, 2439–2448.

- Gorjup, G.; Dwivedi, A.; Elangovan, N.; Liarokapis, M. An Intuitive, Affordances Oriented Telemanipulation Framework for a Dual Robot Arm Hand System: On the Execution of Bimanual Tasks. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 3611–3616.

- Clark, J.P.; Lentini, G.; Barontini, F.; Catalano, M.G.; Bianchi, M.; O’Malley, M.K. On the role of wearable haptics for force feedback in teleimpedance control for dual-arm robotic teleoperation. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 5187–5193.

- Girbés-Juan, V.; Schettino, V.; Gracia, L.; Solanes, J.E.; Demiris, Y.; Tornero, J. Combining haptics and inertial motion capture to enhance remote control of a dual-arm robot. J. Multimodal User Interfaces 2022, 16, 219–238.

- García, A.; Solanes, J.E.; Gracia, L.; Muñoz-Benavent, P.; Girbés-Juan, V.; Tornero, J. Bimanual robot control for surface treatment tasks. Int. J. Syst. Sci. 2022, 53, 74–107.

- Selvaggio, M.; Ghalamzan, A.; Moccia, R.; Ficuciello, F.; Siciliano, B. Haptic-guided shared control for needle grasping optimization in minimally invasive robotic surgery. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Macau, China, 3–8 November 2019.

- Girbés-Juan, V.; Schettino, V.; Demiris, Y.; Tornero, J. Haptic and Visual Feedback Assistance for Dual-Arm Robot Teleoperation in Surface Conditioning Tasks. IEEE Trans. Haptics 2021, 14, 44–56.

- Laghi, M.; Ajoudani, A.; Catalano, M.G.; Bicchi, A. Unifying bilateral teleoperation and tele-impedance for enhanced user experience. Int. J. Robot. Res. 2020, 39, 514–539.

- Navarro, R.; Vega, V.; Martinez, S.; Jose Espinosa, M.; Hidalgo, D.; Benavente, B. Designing Experiences: A Virtual Reality Video Game to Enhance Immersion. In Proceedings of the 10th International Conference on Applied Human Factors and Ergonomics/AHFE International Conference on Human Factors and Wearable Technologies/AHFE International Conference on Game Design and Virtual Environments, Washington, DC, USA, 24–28 July 2019.

- Tao, G.; Garrett, B.; Taverner, T.; Cordingley, E.; Sun, C. Immersive virtual reality health games: A narrative review of game design. J. Neuroeng. Rehabil. 2021, 18, 31.

- Shafer, D.M. The Effects of Interaction Fidelity on Game Experience in Virtual Reality. Psychol. Pop. Media 2021, 10, 457–466.

- Ho, J.C.F.; Ng, R. Perspective-Taking of Non-Player Characters in Prosocial Virtual Reality Games: Effects on Closeness, Empathy, and Game Immersion. Behav. Inf. Technol. 2020, 41, 1185–1198.

- Wang, J.; Yuan, X.Q. Route Planning of Teleoperation Mobile Robot based on the Virtual Reality Technology. J. Robotics Netw. Artif. Life 2020, 7, 125–128.

- Urrea, C.; Matteoda, R. Development of a virtual reality simulator for a strategy for coordinating cooperative manipulator robots using cloud computing. Robot. Auton. Syst. 2020, 126, 103447.

- Kuo, C.Y.; Huang, C.C.; Tsai, C.H.; Shi, Y.S.; Smith, S. Development of an immersive SLAM-based VR system for teleoperation of a mobile manipulator in an unknown environment. Comput. Ind. 2021, 132, 103502.

- Meta, Facebook Reality Labs (Redmond, WA, USA). Oculus Quest 2 Hardware Details. Available online: https://www.oculus.com/quest-2/ (accessed on 4 March 2022).

- Unity (San Francisco, CA, USA). Unity Real-Time Development Platform. Available online: https://unity.com/ (accessed on 5 May 2022).

- Microsoft (Redmond, WA, USA). Xbox Wireless Controller Hardware Details. Available online: https://www.xbox.com/en-US/accessories/controllers/xbox-wireless-controller (accessed on 4 March 2022).

- Robotis (Lake Forest, CA, USA). Turtlebot3 Hardware Details. Available online: https://www.robotis.us/turtlebot-3/ (accessed on 4 March 2022).

- Unity (San Francisco, CA, USA). Shaders Core Concepts. Available online: https://docs.unity3d.com/Manual/Shaders.html (accessed on 4 March 2022).

- Virtuix (Austin, TX, USA). OmniOne Hardware Details. Available online: https://omni.virtuix.com/ (accessed on 4 March 2022).

- Latombe, J.C. Robot Motion Planning; Kluwer: Boston, MA, USA, 1991.

- Khatib, O. Real-time obstacle avoidance for manipulators and mobile robots. Int. J. Robot. Res. 1986, 5, 90–98.

- Gracia, L.; Tornero, J. Kinematic models and isotropy analysis of wheeled mobile robots. Robotica 2008, 26, 587–599.

- Gracia, L.; Tornero, J. Characterization of zero tracking error references in the kinematic control of wheeled mobile robots. Robot. Auton. Syst. 2009, 57, 565–577.

- Gracia, L.; Tornero, J. Kinematic control system for car-like vehicles. In Proceedings of the Ibero-American Conference on Artificial Intelligence, Seville, Spain, 12–15 November 2002; Springer: Berlin/Heidelberg, Germany, 2002; pp. 882–892.

- Koenig, N.; Howard, A. Design and Use Paradigms for Gazebo, An Open-Source Multi-Robot Simulator. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Sendai, Japan, 28 September–2 October 2004; pp. 2149–2154.

- Aguero, C.; Koenig, N.; Chen, I.; Boyer, H.; Peters, S.; Hsu, J.; Gerkey, B.; Paepcke, S.; Rivero, J.; Manzo, J.; et al. Inside the Virtual Robotics Challenge: Simulating Real-Time Robotic Disaster Response. IEEE Trans. Autom. Sci. Eng. 2015, 12, 494–506.

- Video of Experiment 1. 2022. Available online: https://media.upv.es/player/?id=7fac10d0-8ccd-11ec-ad20-231602f2b702 (accessed on 12 June 2022).

- Video of Experiment 2. 2022. Available online: https://media.upv.es/player/?id=31aac3c0-8cca-11ec-a6b9-39f61182889c (accessed on 12 June 2022).

- Blattgerste, J.; Strenge, B.; Renner, P.; Pfeiffer, T.; Essig, K. Comparing Conventional and Augmented Reality Instructions for Manual Assembly Tasks. In Proceedings of the 10th International Conference on PErvasive Technologies Related to Assistive Environments, Island of Rhodes, Greece, 21–23 June 2017; pp. 75–82.

- Attig, C.; Wessel, D.; Franke, T. Assessing Personality Differences in Human-Technology Interaction: An Overview of Key Self-report Scales to Predict Successful Interaction. In Proceedings of the HCI International 2017—Posters’ Extended Abstracts, Vancouver, BC, Canada, 9–14 July 2017; Stephanidis, C., Ed.; Springer International Publishing: Cham, Switzerland, 2017; pp. 19–29.

- Franke, T.; Attig, C.; Wessel, D. A Personal Resource for Technology Interaction: Development and Validation of the Affinity for Technology Interaction (ATI) Scale. Int. J. Hum.-Comput. Interact. 2018, 35, 456–467.

- Du, J.; Do, H.M.; Sheng, W. Human-Robot Collaborative Control in a Virtual-Reality-Based Telepresence System. Int. J. Soc. Robot. 2021, 13, 1295–1306.

- Uboe, J. Introductory Statistics for Business and Economics: Theory, Exercises and Solutions; Springer International Publishing AG: Cham, Switzerland, 2017; ISBN 9783319709369.

- Hess, R. Blender Foundations: The Essential Guide to Learning Blender 2.6; Focal Press: Waltham, MA, USA, 2010; Available online: https://www.sciencedirect.com/book/9780240814308/blender-foundations (accessed on 12 June 2022).

- Video of Experiment 3. 2022. Available online: https://media.upv.es/player/?id=f45e38d0-8cce-11ec-ad20-231602f2b702 (accessed on 12 June 2022).

- Witmer, B.G.; Singer, M.J. Measuring Presence in Virtual Environments: A Presence Questionnaire. Presence Teleoperators Virtual Environ. 1998, 7, 225–240.

- Witmer, B.G.; Jerome, C.J.; Singer, M.J. The Factor Structure of the Presence Questionnaire. Presence Teleoperators Virtual Environ. 2005, 14, 298–312.

- Schubert, T.; Friedmann, F.; Regenbrecht, H. The Experience of Presence: Factor Analytic Insights. Presence Teleoperators Virtual Environ. 2001, 10, 266–281.

- Regenbrecht, H.; Schubert, T. Real and Illusory Interactions Enhance Presence in Virtual Environments. Presence Teleoperators Virtual Environ. 2002, 11, 425–434.

- Schubert, T. The sense of presence in virtual environments: A three-component scale measuring spatial presence, involvement, and realness. Z. für Medien. 2003, 15, 69–71.

- Brooke, J. SUS: A “Quick and Dirty” Usability Scale. In Usability Evaluation in Industry; CRC Press: Boca Raton, FL, USA, 1996; ISBN 9780748404605.

- Gracia, L.; Garelli, F.; Sala, A. Reactive Sliding-Mode Algorithm for Collision Avoidance in Robotic Systems. IEEE Trans. Control. Syst. Technol. 2013, 21, 2391–2399.

- Tutsoy, O.; Barkana, D.E.; Balikci, K. A Novel Exploration-Exploitation-Based Adaptive Law for Intelligent Model-Free Control Approaches. IEEE Trans. Cybern. 2021, 1–9, in press.

| ID | Item |
|---|---|
| PQ1 | How much were you able to control events? |
| PQ2 | How responsive was the environment to actions that you initiated (or performed)? |
| PQ3 | How natural did your interactions with the environment seem? |
| PQ4 | How much did the visual aspects of the environment involve you? |
| PQ5 | How natural was the mechanism which controlled movement through the environment? |
| PQ6 | How compelling was your sense of objects moving through space? |
| PQ7 | How much did your experiences in the virtual environment seem consistent with your real world experiences? |
| PQ8 | How compelling was your sense of moving around inside the virtual environment? |
| PQ9 | How completely were you able to actively survey or search the environment using vision? |
| PQ11 | How well could you move or manipulate objects in the virtual environment? |
| PQ12 | How closely were you able to examine objects? |
| PQ13 | How well could you examine objects from multiple viewpoints? |
| PQ14 | How much did the auditory aspects of the environment involve you? |
| PQ15 | How well could you identify sounds? |
| PQ16 | How well could you localize sounds? |
| PQ17 | Were you able to anticipate what would happen next in response to the actions that you performed? |
| PQ18 | How quickly did you adjust to the virtual environment experience? |
| PQ19 | How proficient in moving and interacting with the virtual environment did you feel at the end of the experience? |
| PQ20 | How well could you concentrate on the assigned tasks or required activities rather than on the mechanisms used to perform those tasks or activities? |
| PQ21 | How much delay did you experience between your actions and expected outcomes? |
| PQ22 | How much did the visual display quality interfere or distract you from performing assigned tasks or required activities? |
| PQ23 | How much did the control devices interfere with the performance of assigned tasks or with other activities? |
| PQ24 | How much did the control devices interfere with the performance of assigned tasks or with other activities? |

| ID | Item |
|---|---|
| IPQ1 | In the computer generated world I had a sense of “being there” |
| IPQ2 | Somehow I felt that the virtual world surrounded me |
| IPQ3 | I felt like I was just perceiving pictures |
| IPQ4 | I did not feel present in the virtual space |
| IPQ5 | I had a sense of acting in the virtual space, rather than operating something from outside |
| IPQ6 | I felt present in the virtual space |
| IPQ7 | How aware were you of the real world surrounding while navigating in the virtual world (i.e., sounds, room temperature, and other people)? |
| IPQ8 | I was not aware of my real environment |
| IPQ9 | I still paid attention to the real environment |
| IPQ11 | I was completely captivated by the virtual world |
| IPQ12 | How real did the virtual world seem to you? |
| IPQ13 | How much did your experience in the virtual environment seem consistent with your real world experience? |
| IPQ14 | The virtual world seemed more realistic than the real world |

| ID | Item |
|---|---|
| SUS1 | I think that I would like to use this system frequently |
| SUS2 | I found the system unnecessarily complex |
| SUS3 | I thought the system was easy to use |
| SUS4 | I think that I would need the support of a technical person to be able to use this system |
| SUS5 | I found the various functions in this system were well integrated |
| SUS6 | I thought there was too much inconsistency in this system |
| SUS7 | I would imagine that most people would learn to use this system very quickly |
| SUS8 | I found the system very cumbersome to use |
| SUS9 | I felt very confident using the system |
| SUS10 | I needed to learn a lot of things before I could get going with this system |
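
The SUS items above alternate between positively worded (odd) and negatively worded (even) statements. Brooke's standard scoring [80] converts each 1–5 response into a 0–4 contribution (response minus 1 for odd items, 5 minus response for even items) and multiplies the sum by 2.5, giving a score between 0 and 100. A minimal Python sketch of that standard rule follows; it assumes responses are recorded on the usual five-point scale in the order SUS1 to SUS10, and the function name is illustrative.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """System Usability Scale score (0-100) from ten 1-5 Likert responses.

    responses[0] is SUS1, responses[9] is SUS10.
    """
    if len(responses) != 10:
        raise ValueError("SUS needs exactly 10 responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("responses must be integers from 1 to 5")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5
```

Per-participant scores are then usually averaged across participants; answering 3 to every item yields 50.0, and the most favorable answer pattern (5 on odd items, 1 on even items) yields 100.0.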

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Solanes, J.E.; Muñoz, A.; Gracia, L.; Tornero, J. Virtual Reality-Based Interface for Advanced Assisted Mobile Robot Teleoperation. Appl. Sci. 2022, 12, 6071. https://doi.org/10.3390/app12126071

Solanes JE, Muñoz A, Gracia L, Tornero J. Virtual Reality-Based Interface for Advanced Assisted Mobile Robot Teleoperation. Applied Sciences. 2022; 12(12):6071. https://doi.org/10.3390/app12126071

Chicago/Turabian Style: Solanes, J. Ernesto, Adolfo Muñoz, Luis Gracia, and Josep Tornero. 2022. "Virtual Reality-Based Interface for Advanced Assisted Mobile Robot Teleoperation" Applied Sciences 12, no. 12: 6071. https://doi.org/10.3390/app12126071

APA Style: Solanes, J. E., Muñoz, A., Gracia, L., & Tornero, J. (2022). Virtual Reality-Based Interface for Advanced Assisted Mobile Robot Teleoperation. Applied Sciences, 12(12), 6071. https://doi.org/10.3390/app12126071