Implementing Ethical, Legal, and Societal Considerations in Wearable Robot Design

Abstract

1. Introduction

2. Process, Principles and Domain-Specificity: The Challenge of Targeting Ethical, Legal and Social Implications (ELSI) Guidance Effectively

- The need for a clear process for integrating ELSI considerations into wearable robot (WR) development, as exemplified by process models for ethical design such as value-sensitive design (VSD) or ethics-by-design, the procedural focus of some responsible research and innovation (RRI) approaches, or the use of design flow-charts, for example, the assessment list in the guidelines of the High-Level Expert Group (HLEG) on AI [17];

- The need for “top-down” guidance, providing specific sets of substantive ELSI guidance that are often based on general principles, cover many application areas, and remain applicable to as-yet-unknown innovations, as exemplified by the HLEG AI guidelines [17], IEEE Ethically Aligned Design [23], privacy by design, universal design, equality, diversity and inclusion (EDI), standards, and professional ethics codes;

- The need for “bottom-up” guidance, that is, sufficiently domain-specific guidance that allows robot developers to apply relevant concerns easily to their practice, including attention to specific use cases and stakeholder involvement.

2.1. ELSI Design Tools That Focus on Process

2.2. ELSI Design Tools That Identify Substantive Principles

2.3. Desiderata for the Translation of ELSI Guidance into Domain-Specific Practice

3. ELSI Considerations for Wearable Robots: Methods Followed for Selecting Domain-Specific Principles and Core Concepts

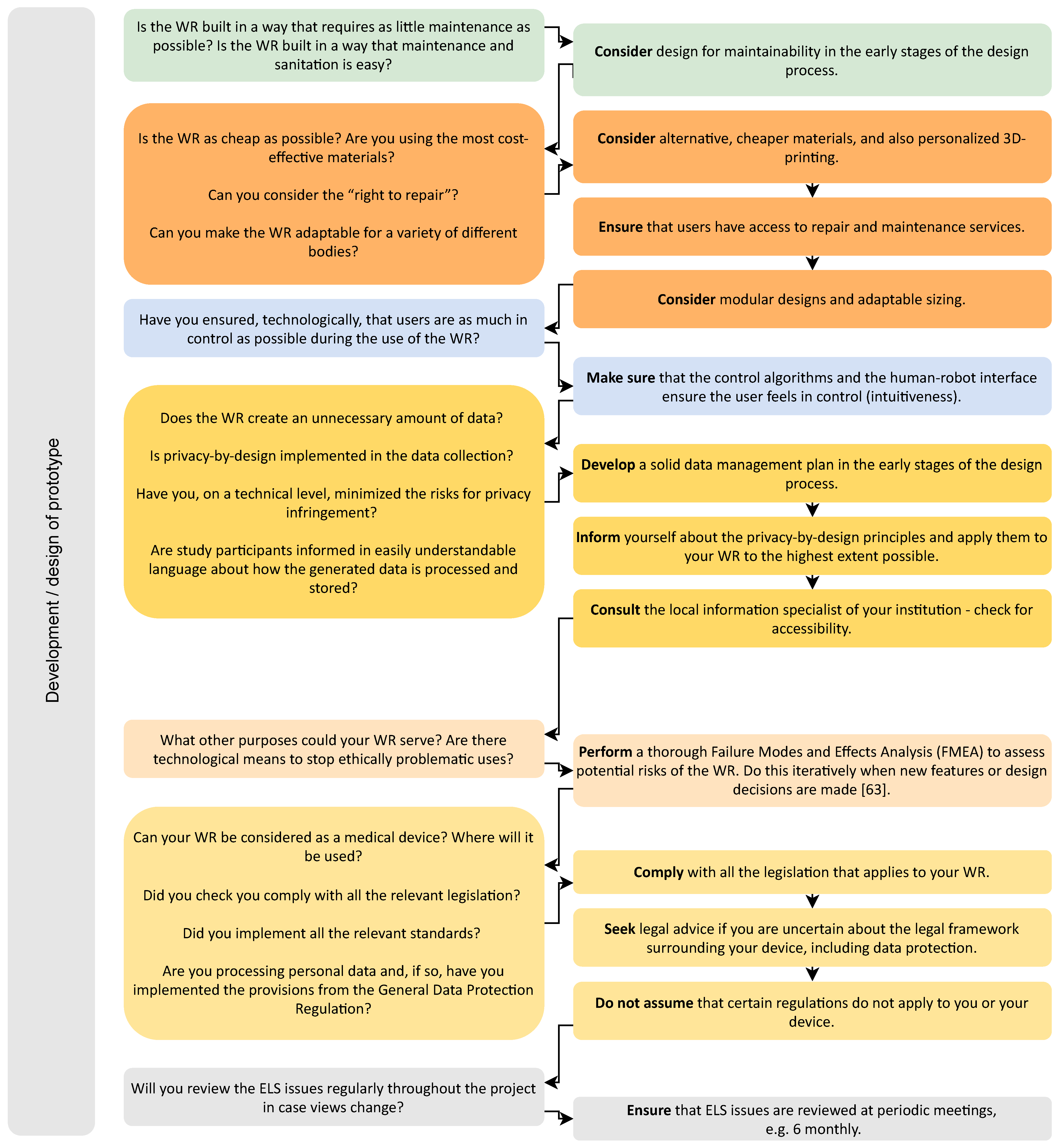

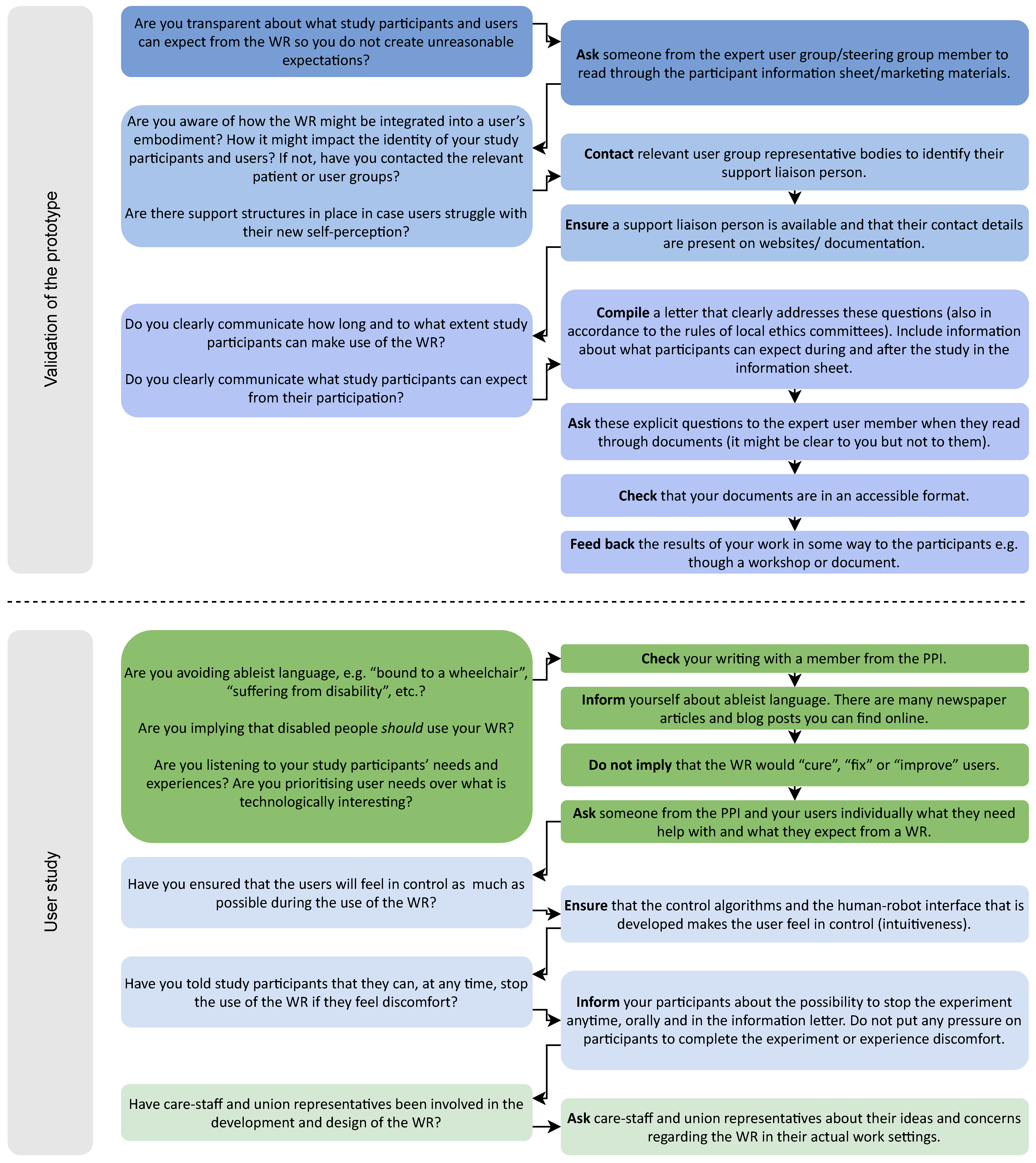

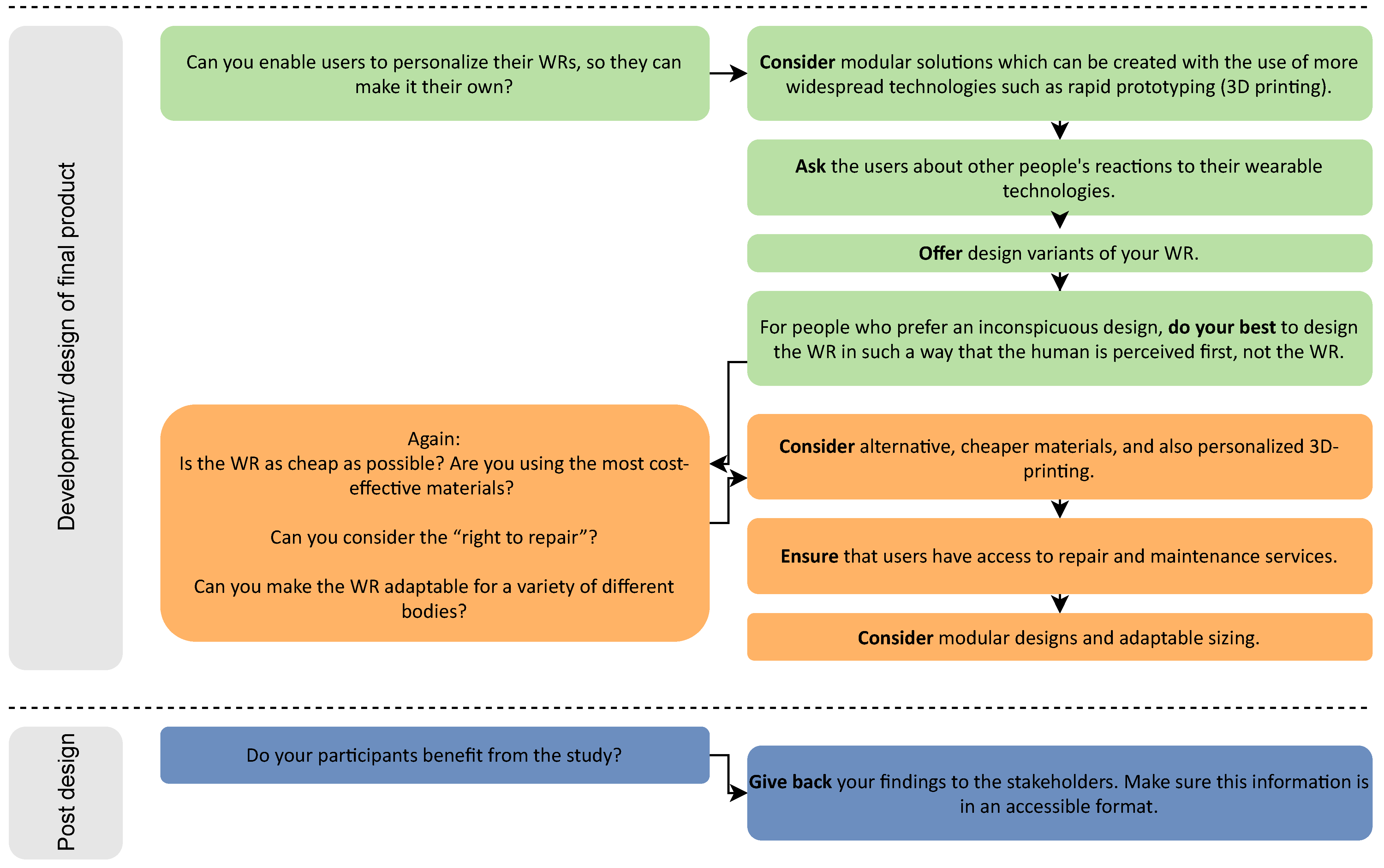

4. Implementing ELSI Considerations in Wearable Robotics: From Principles to Actionable Guidance

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. COST Action 16116 Wearable Robots. Augmentation, Assistance, or Substitution of Human Motor Functions. 2017. Available online: https://wearablerobots.eu/ (accessed on 20 July 2021).

2. Van Laar, E.; Van Deursen, A.J.; Van Dijk, J.A.; De Haan, J. Measuring the levels of 21st-century digital skills among professionals working within the creative industries: A performance-based approach. Poetics 2020, 81, 101434.

3. Benchmarking Locomotion: Benchmarking Bipedal Locomotion. Available online: http://www.benchmarkinglocomotion.org/new/ (accessed on 20 July 2021).

4. Eurobench: European Robotic Framework for Bipedal Locomotion Benchmarking. Available online: https://eurobench2020.eu (accessed on 20 July 2021).

5. Kapeller, A.; Felzmann, H.; Fosch-Villaronga, E.; Hughes, A.-M. A taxonomy of ethical, legal and social implications of wearable robots: An expert perspective. Sci. Eng. Ethics 2020, 26, 3229–3247.

6. European Parliament and the Council of Europe. ‘Leaked’ EU Regulation on AI. Available online: https://drive.google.com/file/d/1ZaBPsfor_aHKNeeyXxk9uJfTru747EOn/view (accessed on 14 April 2021).

7. Hill, D.; Holloway, C.; Ramirez, D.Z.M.; Smitham, P.; Pappas, Y. What are user perspectives of exoskeleton technology? A literature review. Int. J. Technol. Assess. Heal. Care 2017, 33, 160–167.

8. Almpani, S.; Mitsikas, T.; Stefaneas, P.; Frangos, P. ExosCE: A legal-based computational system for compliance with exoskeletons’ CE marking. Paladyn J. Behav. Robot. 2020, 11, 414–427.

9. Ármannsdóttir, A.L.; Beckerle, P.; Moreno, J.C.; Van Asseldonk, E.H.F.; Manrique-Sancho, M.-T.; Del-Ama, A.J.; Veneman, J.; Briem, K. Assessing the Involvement of Users During Development of Lower Limb Wearable Robotic Exoskeletons: A Survey Study. Hum. Factors J. Hum. Factors Ergon. Soc. 2020, 62, 351–364.

10. Kapeller, A.; Nagenborg, M.H.; Nizamis, K. Wearable robotic exoskeletons: A socio-philosophical perspective on Duchenne muscular dystrophy research. Paladyn J. Behav. Robot. 2020, 11, 404–413.

11. Beers, S. Teaching 21st Century Skills; ASCD: Alexandria, VA, USA, 2011.

12. Kilic-Bebek, E.; Nizamis, K.; Karapars, Z.; Gokkurt, A.; Unal, R.; Bebek, O.; Vlutters, M.; Vander, P.E.B.; Borghesan, G.; Decré, W.; et al. Discussing Modernizing Engineering Education through the Erasmus+ Project Titled “Open Educational Resources on Enabling Technologies in Wearable and Collaborative Robotics (WeCoRD)”, 3rd ed.; Accepted/In Press; International Instructional Technologies in Engineering Education Symposium IITEE: Izmir, Turkey, 2020.

13. Arntz, M.; Gregory, T.; Zierahn, U. ELS Issues in Robotics and Steps to Consider Them. Part 1: Robotics and Employment. Consequences of Robotics and Technological Change for the Structure and Level of Employment; Research Report; ZEW-Gutachten und Forschungsberichte, 2016. Available online: https://www.econstor.eu/bitstream/10419/146501/1/867017465.pdf (accessed on 12 March 2021).

14. Vandemeulebroucke, T.; de Casterlé, B.D.; Gastmans, C. The use of care robots in aged care: A systematic review of argument-based ethics literature. Arch. Gerontol. Geriatr. 2018, 74, 15–25.

15. Fosch-Villaronga, E. Robots, Healthcare, and the Law: Regulating Automation in Personal Care; Routledge: Abingdon, UK, 2019.

16. Holder, C.; Khurana, V.; Harrison, F.; Jacobs, L. Robotics and law: Key legal and regulatory implications of the robotics age (Part I of II). Comput. Law Secur. Rev. 2016, 32, 383–402.

17. High-Level Expert Group on Artificial Intelligence (HLEG AI). Ethics Guidelines for Trustworthy AI. European Commission. Available online: https://ec.europa.eu/digital-single-market/en/news/ethics-guidelines-trustworthy-ai (accessed on 12 March 2021).

18. Vallor, S. Carebots and Caregivers: Sustaining the Ethical Ideal of Care in the Twenty-First Century. Philos. Technol. 2011, 24, 251–268.

19. Van Wynsberghe, A. Designing Robots for Care: Care Centered Value-Sensitive Design. Sci. Eng. Ethics 2012, 19, 407–433.

20. Palmerini, E.; Bertolini, A.; Battaglia, F.; Koops, B.-J.; Carnevale, A.; Salvini, P. RoboLaw: Towards a European framework for robotics regulation. Robot. Auton. Syst. 2016, 86, 78–85.

21. Felt, U.; Fochler, M.; Sigl, L. IMAGINE RRI. A card-based method for reflecting on responsibility in life science research. J. Responsible Innov. 2018, 5, 1–24.

22. Felt, U. “Response-able practices” or “New bureaucracies of virtue”: The challenges of making RRI work in academic environments. In Responsible Innovation 3: A European Agenda? Asveld, L., van Dam-Mieras, R., Swierstra, T., Lavrijssen, S., Linse, K., van den Hoven, J., Eds.; Springer: Cham, Switzerland, 2017; pp. 49–68.

23. IEEE. Ethically Aligned Design. 2016. Available online: https://standards.ieee.org/news/2016/ethically_aligned_design.html (accessed on 14 April 2021).

24. Sutcliffe, H. A Report on Responsible Research and Innovation. MATTER European Commission. 2011. Available online: https://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.226.8407&rep=rep1&type=pdf (accessed on 20 July 2021).

25. Owen, R.; Macnaghten, P.; Stilgoe, J. Responsible research and innovation: From science in society to science for society, with society. Sci. Public Policy 2012, 39, 751–760.

26. Schomberg, R.V. Prospects for Technology Assessment in a Framework of Responsible Research and Innovation. In Technikfolgen abschätzen lehren: Bildungspotenziale transdisziplinärer Methoden; Dusseldorp, M., Beecroft, R., Eds.; Springer: Wiesbaden, Germany, 2012; pp. 39–61. Available online: https://app.box.com/s/f9quor8jo1bi3ham8lfc (accessed on 18 May 2021).

27. Burget, M.; Bardone, E.; Pedaste, M. Definitions and conceptual dimensions of responsible research and innovation: A literature review. Sci. Eng. Ethics 2017, 23, 1–19.

28. Nissenbaum, H. How computer systems embody values. Computer 2001, 34, 119–120.

29. Friedman, B.; Kahn, P.H.; Borning, A.; Huldtgren, A. Value sensitive design and information systems. In Early Engagement and New Technologies: Opening up the Laboratory; Springer: Dordrecht, The Netherlands, 2013; pp. 55–95.

30. Cavoukian, A. Privacy by design: The definitive workshop. A foreword by Ann Cavoukian, Ph.D. Identity Inf. Soc. 2010, 3, 247–251.

31. Cummings, M.L. Integrating ethics in design through the value-sensitive design approach. Sci. Eng. Ethics 2006, 12, 701–715.

32. ORBIT Project. AREA 4P Framework. Available online: https://www.orbit-rri.org/about/area-4p-framework/ (accessed on 18 May 2021).

33. RRI-Tools Project. Self Reflection Tool. Available online: https://rri-tools.eu/self-reflection-tool (accessed on 18 May 2021).

34. Winfield, A. Ethical standards in robotics and AI. Nat. Electron. 2019, 2, 46–48.

35. British Standards Institution. BS 8611: Robots and Robotic Devices. Guide to the Ethical Design and Application of Robots and Robotic Systems. United Kingdom. 2016. Available online: https://shop.bsigroup.com/ProductDetail?pid=000000000030320089 (accessed on 20 July 2021).

36. Salvini, P.; Laschi, C.; Dario, P. Do Service Robots Need a Driving License? [Industrial Activities]. IEEE Robot. Autom. Mag. 2011, 18, 12–13.

37. Fosch-Villaronga, E.; Heldeweg, M. “Regulation, I presume?” said the robot – Towards an iterative regulatory process for robot governance. Comput. Law Secur. Rev. 2018, 34, 1258–1277.

38. Yang, G.-Z.; Cambias, J.; Cleary, K.; Daimler, E.; Drake, J.; Dupont, P.E.; Hata, N.; Kazanzides, P.; Martel, S.; Patel, R.V.; et al. Medical robotics: Regulatory, ethical and legal considerations for increasing levels of autonomy. Sci. Robot. 2017, 2, eaam8638.

39. Fosch-Villaronga, E.; Khanna, P.; Drukarch, H.; Custers, B.H.M. A human in the loop in surgery automation. Nat. Mach. Intell. 2021, 3, 368–369.

40. Erden, Y.E.; Brey, P. Ethical Guidance for Research with a Potential for Human Enhancement (Version V1); Zenodo: Genève, Switzerland, 2021.

41. Beauchamp, T.L.; Childress, J.F. Principles of Biomedical Ethics; Oxford University Press: Oxford, UK, 1979.

42. Bissolotti, L.; Nicoli, F.; Picozzi, M. Domestic use of the exoskeleton for gait training in patients with spinal cord injuries: Ethical dilemmas in clinical practice. Front. Neurosci. 2018, 12, 78.

43. Bessler, J.; Prange-Lasonder, G.B.; Schaake, L.; Saenz, J.F.; Bidard, C.; Fassi, I.; Valori, M.; Lassen, A.B.; Buurke, J.H. Safety assessment of rehabilitation robots: A review identifying safety skills and current knowledge gaps. Front. Robot. AI 2021, 8, 33.

44. Bessler, J.; Prange-Lasonder, G.B.; Schulte, R.V.; Schaake, L.; Prinsen, E.C.; Buurke, J.H. Occurrence and type of adverse events during the use of stationary gait robots: A systematic literature review. Front. Robot. AI 2020, 7, 158.

45. Barfield, W.; Williams, A. Cyborgs and enhancement technology. Philosophies 2017, 2, 4.

46. Breen, J.S. The exoskeleton generation – disability redux. Disabil. Soc. 2015, 30, 1568–1572.

47. Palmerini, E.; Azzarri, F.; Battaglia, F.; Bertolini, A.; Carnevale, A.; Carpaneto, J.; Warwick, K. Guidelines on Regulating Robotics: Deliverable 6.2 (Regulating Emerging Robotic Technologies in Europe: Robotics facing Law and Ethics). 2014. Available online: http://www.robolaw.eu/RoboLaw_files/documents/robolaw_d6.2_guidelinesregulatingrobotics_20140922.pdf (accessed on 20 July 2021).

48. Tucker, M.R.; Olivier, J.; Pagel, A.; Bleuler, H.; Bouri, M.; Lambercy, O.; Gassert, R. Control strategies for active lower extremity prosthetics and orthotics: A review. J. Neuroeng. Rehabil. 2015, 12, 1–30.

49. Sawh, M. Getting all Emotional: Wearables that are Trying to Monitor How We Feel. Wareable. Available online: https://www.wareable.com/wearable-tech/wearables-that-track-emotion-7278 (accessed on 18 May 2021).

50. Greenbaum, D. Ethical, legal and social concerns relating to exoskeletons. ACM SIGCAS Comput. Soc. 2016, 45, 234–239.

51. Mackenzie, C.; Rogers, W.; Dodds, S. Vulnerability: New Essays in Ethics and Feminist Philosophy; Oxford University Press: Oxford, UK, 2014.

52. Klein, E.; Nam, C. Neuroethics and brain-computer interfaces (BCIs). Brain Comput. Interfaces 2016, 3, 123–125.

53. Søraa, R.A.; Fosch-Villaronga, E. Exoskeletons for all: The interplay between exoskeletons, inclusion, gender and intersectionality. Paladyn J. Behav. Robot. 2020, 11, 217–227.

54. Vrousalis, N. Exploitation: A primer. Philos. Compass 2018, 13, e12486.

55. Kittay, E.F. Love’s Labor: Essays on Women, Equality and Dependency, 2nd ed.; Routledge: New York, NY, USA, 2020.

56. Kittay, E.; Feder, E. (Eds.) The Subject of Care: Feminist Perspectives on Dependency; Rowman & Littlefield Publishers: Lanham, MD, USA, 2002.

57. Manning, J. Health, Humanity and Justice: Emerging Technologies and Health Policy in the 21st Century; An Independent Review Commissioned; Conservative Party: London, UK, 2010.

58. Fosch-Villaronga, E.; Čartolovni, A.; Pierce, R.L. Promoting inclusiveness in exoskeleton robotics: Addressing challenges for pediatric access. Paladyn J. Behav. Robot. 2020, 11, 327–339.

59. Food and Drug Administration. FDA Cybersecurity. 2019. Available online: https://www.fda.gov/medical-devices/digital-health/cybersecurity (accessed on 20 July 2021).

60. Floridi, L. Faultless responsibility: On the nature and allocation of moral responsibility for distributed moral actions. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 2016, 374, 20160112.

61. Matthias, A. The responsibility gap: Ascribing responsibility for the actions of learning automata. Ethics Inf. Technol. 2004, 6, 175–183.

62. Fosch-Villaronga, E.; Özcan, B. The progressive intertwinement between design, human needs and the regulation of care technology: The case of lower-limb exoskeletons. Int. J. Soc. Robot. 2019, 12, 959–972.

63. Stamatis, D.H. Failure Mode and Effect Analysis: FMEA from Theory to Execution, 2nd ed., revised and expanded; American Society for Quality (ASQ): Milwaukee, WI, USA, 2003.

| Dimension | Issue | Explanation | Examples (Non-Exhaustive List) |
|---|---|---|---|
| Wearable Robots and the Self | Benefits, Risks and Harms for Self | WRs are considered within the context of the key ethical principles of beneficence and non-maleficence [41]. Harm-benefit analyses usually seek to determine benefit profiles and justifiable risks connected to the development and use of WRs [42], but these profiles can be user-dependent. Recent work by Bessler et al. [43,44] identified multiple adverse events and safety hazards linked to the use of robots and their physical interaction with the human body. | Harms: functional restrictions, limited ease of use and battery life, intrusive appearance, data hacking, malware, risk of falling, or harmful physical interaction between the user and the WR. Benefits: being able to stand upright supported by a lower-limb WR, a reduced incidence of back strain in working environments, or being able to use the upper limb to manipulate the immediate environment. |
| | Body and Identity Impacts | WRs may affect a user’s self-perception and identity; they are likely to change not only users’ functional abilities but also how users experience themselves [45,46]. | Identity: WRs, like wheelchairs, can become incorporated into their users’ identities, necessitating a conceptual re-evaluation of the body [45,47]. This may include considering cosmetic [48] and emotional [49] aspects. Dependence: Dependence on a WR for essential activities of daily living, such as holding things, walking, or working, may affect the user’s identity if the technology is withdrawn without adequate replacement [42,50]. |
| | The Experience of Vulnerability | WRs could cause or ameliorate a user’s experience of vulnerability, defined as the ‘capacity to suffer that is inherent in human embodiment’ [51]. | Vulnerability: Vulnerability resulting from reduced mobility, with its concomitant health and social risks, could be ameliorated by the WR, or increased if the user came to depend on the WR and it was then withdrawn. |
| | Agency, Control and Responsibility | Training is required so that WR users can coordinate their own bodily movements with those of the WR. Especially at first, users might feel that they are not entirely in control of the combined body and machine, as solutions for reliably translating user intent into robotic movement remain limited. | Safety and Control: The WR user could experience unplanned and possibly harmful movements, as the WR may generate ‘destructive forces whose controlled output behavior may not always be in agreement with the user’s intent’ [48]. Users might try to move in a way that is incompatible with the WR’s programming, or may not want to use the WR’s automatically generated movements. |
| Wearable Robots and the Other | Ableism and Stigmatization in the Perception of the WR-Supported Body with Disabilities | WRs may reinforce the ableist view that disabilities are intrinsically bad, create stigma, narrow the socially acceptable range of bodies, and reduce the focus on improving accessibility; moreover, they may not be suitable for all users. | Accessibility: Claims for accessible infrastructure such as ramps and door openers [52] could be undermined by increased use of WRs. Intersectional approach: WRs have weight and height limitations and will be built around expected body shapes. Anyone who does not fit these will be excluded [37,53] unless WR developers account for these differences [53]. |
| | Overestimation and Alienation in the Perception of the WR-Enhanced Professional Body | Different regulations currently apply depending on the context of use, i.e., whether or not WRs are used in the healthcare field [15]. However, the boundaries can be indistinct: it is not always clear when health-supporting use turns into non-health-related enhancement [47,50], and therefore which regulations apply [15]. | Dehumanisation and Discrimination: Changing expectations of the work a WR-enhanced worker can achieve might lead to extra risks, unequal treatment, and exploitation [54]. WR-enhanced workers may be met with increased negative feelings, including alienation, from people unfamiliar with WRs. Work environments with significant public-facing contact may face greater challenges. |
| | Care-Giving, Dependencies and Trust | Care relationships, which are characterised by various dependencies, may be significantly affected by the use of WRs. Their complexity and ethical significance have been extensively explored in bioethics (e.g., [55,56]). Families may also be affected by patients’ WR use. | Trust: WRs can be used by caregivers to assist patients’ movements, thereby reducing the caregivers’ bodily strain, or by care-receivers to enhance their own movement. In both contexts, use may be limited to short periods of rehabilitation or extend over the longer term. Trust in the device and its role in care may be precarious and easily disrupted. Left behind: Patients’ increased physical independence through WR use may lead to a perceived, and potentially inappropriate, reduction in the need for human care. |
| Wearable Robots and Society | Technologisation, Dehumanisation and Exploitation | ‘Turning workers into machines’ has been connected to the dehumanisation of work and the possible exploitation of workers [50]. | Dehumanisation: Imposing the use of WRs on workers might be an act of domination and subjugation for financial reasons, rather than a measure aimed at improving workers’ health. If workers become stronger or more efficient owing to WR use, employers may raise performance targets instead of weighing efficiency gains holistically against the broader impacts of intensified work practices on workers. |
| | Social Justice, Resources and Access | Access is likely to be limited by cost and by the physical dimensions of WRs. | Accessibility: WRs may exacerbate social inequality by being available only to wealthy patients in developed countries [50,57]. WRs for growing children need to be adaptable and follow a life-based design approach [58]. |
| | Data Protection and Privacy | WR specifications determine the nature, sensitivity, and volume of the data processed. For human-exoskeleton interaction, this may include kinematic and kinetic data from training and use, data on exoskeleton performance and the environment, and the user’s health data. | Data Management: Much of this biometric data will fall into the sensitive data category of the General Data Protection Regulation (GDPR), requiring enhanced protective measures to safeguard the rights of WR users as data subjects. Cybersecurity and safety: Exploitation of technological vulnerabilities could allow unauthorized remote actors to access, control, and issue commands to compromised devices, potentially leading to patient harm [59]. For robotic devices directly attached to the user’s body, the implications may be greater and therefore deserve particular attention [37,50]. |
| | Accountability and Responsibility | Questions of responsibility and accountability for robot actions arise at every level, from the individual to the societal. | Responsibility: Challenges include: |
| | Legislation and Regulation for WRs | Legal frameworks governing WR use are unclear: legislation is complex, and the status of WRs as medical devices is uncertain. Some instruments are binding (e.g., the Medical Device Regulation, MDR), while others (e.g., ISO standards) are not. | Compliance with existing regulations: WRs, whether worn in a healthcare setting for patient rehabilitation or in a factory setting for worker support, may present similar risks to the user’s health and should thus comply with the Medical Device Regulation [15,62]. |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kapeller, A.; Felzmann, H.; Fosch-Villaronga, E.; Nizamis, K.; Hughes, A.-M. Implementing Ethical, Legal, and Societal Considerations in Wearable Robot Design. Appl. Sci. 2021, 11, 6705. https://doi.org/10.3390/app11156705
