The Limited Effect of Graphic Elements in Video and Augmented Reality on Children’s Listening Comprehension

Abstract

1. Introduction

2. Related Works

2.1. Listening Comprehension

2.2. Augmented Reality and Comprehension in Children

2.3. Effects of Visual Content Characteristics on Comprehension

3. Materials and Methods

3.1. Objective and Hypotheses

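- H1A: Participants who listen to the AR lesson will answer more comprehension test (CT) questions correctly than those who watch the video lesson.

- H2A: Participants who listen to the AR lesson will take longer to complete the comprehension test (CT) than those who watch the video lesson.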

3.2. Sample

- Augmented Reality Group (AR group)
  - High Reading Comprehension (HighAR): 8 participants
  - Low Reading Comprehension (LowAR): 8 participants

- Video Group (VIDEO group)
  - High Reading Comprehension (HighVideo): 8 participants
  - Low Reading Comprehension (LowVideo): 8 participants

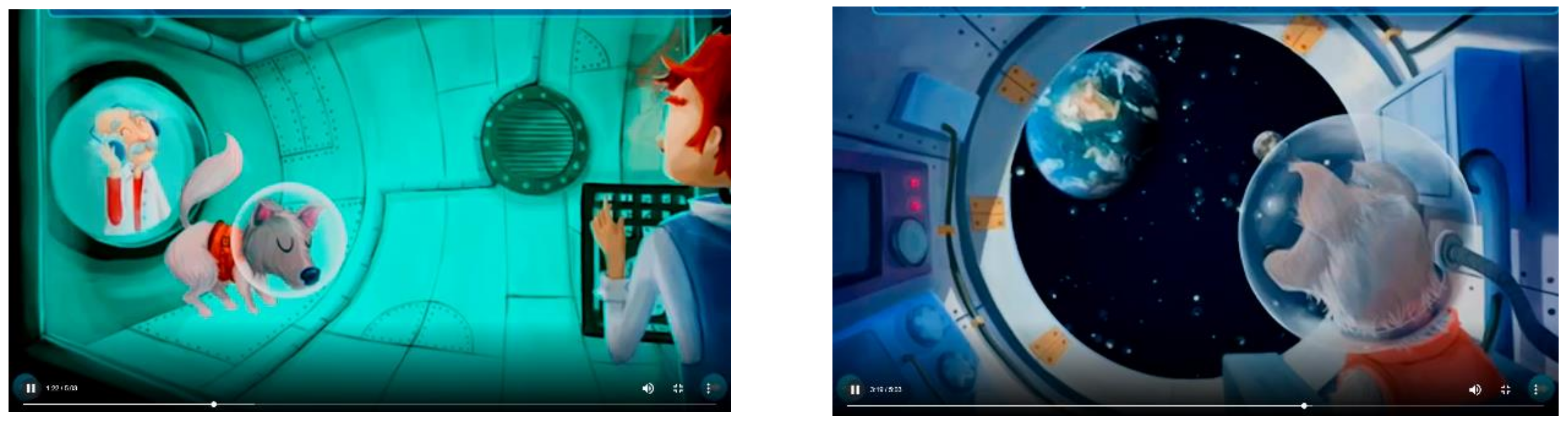

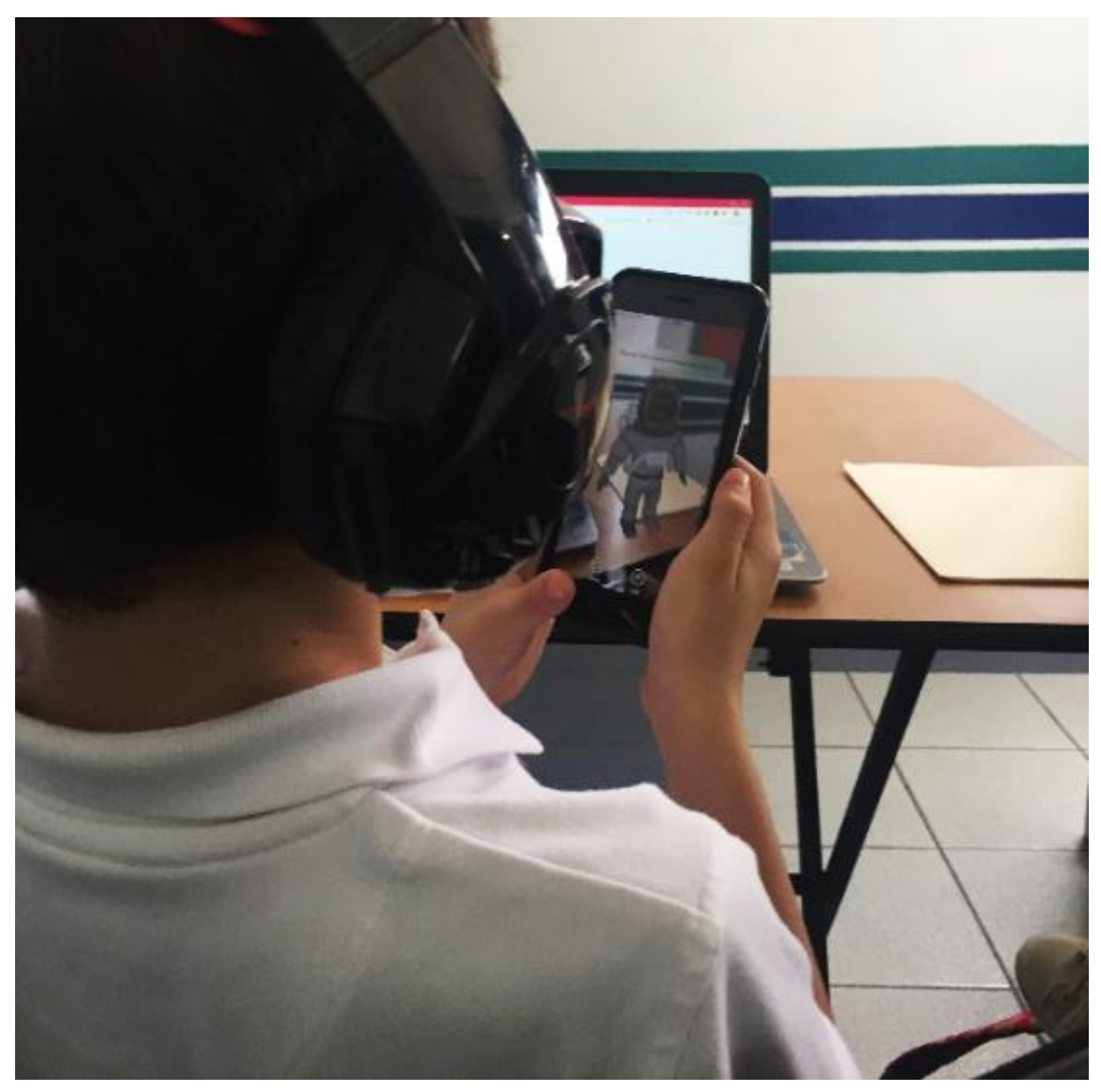

3.3. Software and Resources

3.4. Procedure

“I am [insert Monitor Name] and I will be with you throughout this activity. I’m studying computers and I like to learn how to use computers, tablets and cell phones to help people.

I asked you to be here today because I need your help. I’m trying to figure out how to make a reading app for kids like you. So, I want you to help me by doing an activity. It’s going to take about 25 minutes.

Don’t get nervous; we are testing the application, not you. Really, you’re not going to make a mistake here. There are no right answers or wrong answers but if I see that you’re paying attention I’ll give you a prize (I’ll give every participant a prize). […]. Now I am going to explain what we’re going to do. You know how in some classes professors show you videos to teach you something? Well, we’re going to show you a video and I want you to listen carefully while you watch it […].”

- What was the goal of the experiment?
  - To experiment with a dog in order to test whether humans could survive in space.
  - To watch animal behavior in situations of extreme fear.
  - To build an intelligent ship to explore the Moon and to find aliens.
  - To send living beings into space for them to live in another galaxy.

- How did scientists know that Laika was alive in space?
  - By her barking.
  - By her pulse.
  - Because of movements in the ship.
  - They saw her from their lab’s telescope.

- What was the name of the ship that Laika travelled in?
  - Sputnik 2.
  - Odyssey.
  - Apollo 2.
  - Hubble.

4. Results

4.1. Comparison of the Number of Successes in the Comprehension Test

4.2. Comparison of Times for Comprehension Test Completion

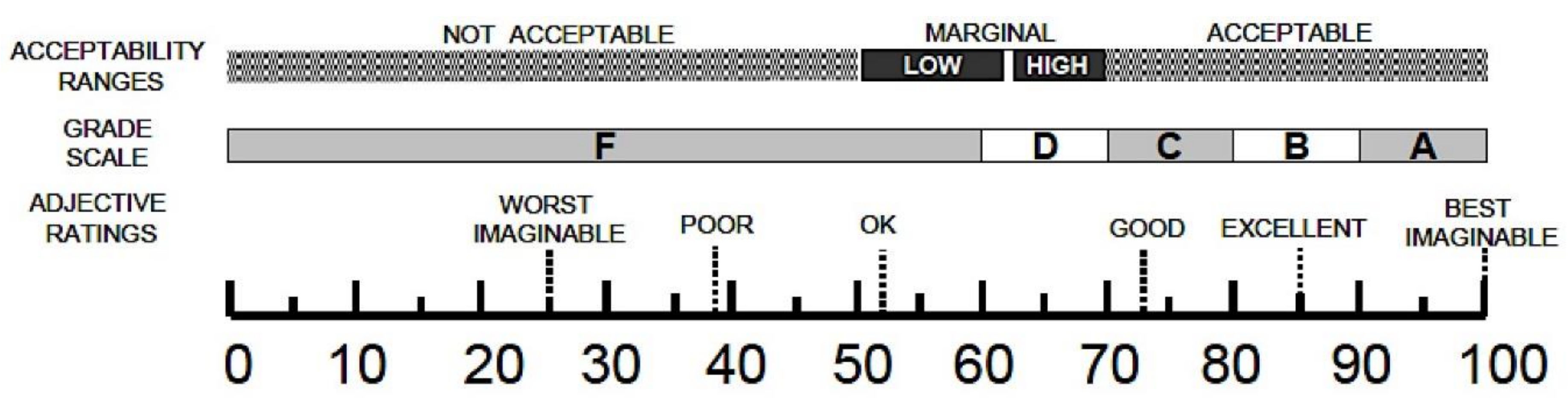

4.3. Usability

- Question #1: How much do you like the application? (Options: it is entertaining; it is boring; it attracts attention; you don’t like it.)

- Question #2: Have you been surprised by the virtual 3D drawings integrated into the real environment?

- Question #3: Did you find it difficult to use the app?

- Question #4: Would you like to have more AR apps to study or do you prefer the video version?

4.4. Observational Findings

5. Discussion and Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Liao, T. Future directions for mobile augmented reality research: Understanding relationships between augmented reality users, nonusers, content, devices, and industry. Mob. Media Commun. 2019, 7, 131–149.

- Gattullo, M.; Scurati, G.W.; Fiorentino, M.; Uva, A.E.; Ferrise, F.; Bordegoni, M. Towards augmented reality manuals for industry 4.0: A methodology. Robot. Comput. Integr. Manuf. 2019, 56, 276–286.

- Affolter, R.; Eggert, S.; Sieberth, T.; Thali, M.; Ebert, L.C. Applying augmented reality during a forensic autopsy—Microsoft HoloLens as a DICOM viewer. J. Forensic Radiol. Imaging 2019, 16, 5–8.

- Gan, A.; Cohen, A.; Tan, L. Augmented Reality-Assisted Percutaneous Dilatational Tracheostomy in Critically Ill Patients With Chronic Respiratory Disease. J. Intensive Care Med. 2019, 34, 153–155.

- Yip, J.; Wong, S.-H.; Yick, K.-L.; Chan, K.; Wong, K.-H. Improving quality of teaching and learning in classes by using augmented reality video. Comput. Educ. 2019, 128, 88–101.

- Bazarov, S.E.; Kholodilin, I.Y.; Nesterov, A.S.; Sokhina, A.V. Applying Augmented Reality in practical classes for engineering students. IOP Conf. Ser. Earth Environ. Sci. 2017, 87, 032004.

- Karagozlu, D.; Ozdamli, F. Student Opinions on Mobile Augmented Reality Application and Developed Content in Science Class. TEM J. 2017, 6, 660–670.

- Toledo-Morales, P.; Sánchez-García, J.M. Use of Augmented Reality in Social Sciences as Educational Resource. Turkish Online J. Distance Educ. 2018, 19, 38–52.

- Andres, H.P. Active teaching to manage course difficulty and learning motivation. J. Furth. High. Educ. 2019, 43, 1–16.

- Setiawan, A. Comparison of mobile learning applications in classroom learning in vocational education technology students based on usability testing. IOP Conf. Ser. Mater. Sci. Eng. 2018, 434, 012251.

- Tsai, C.W.; Fan, Y.T. Research trends in game-based learning research in online learning environments: A review of studies published in SSCI-indexed journals from 2003 to 2012. Br. J. Educ. Technol. 2013, 44, E115–E119.

- Martín-Gutiérrez, J.; Efrén-Mora, C.; Añorbe-Díaz, B.; González-Marrero, A. Virtual Technologies Trends in Education. EURASIA J. Math. Sci. Technol. Educ. 2017, 13, 469–486.

- Schmalstieg, D.; Hollerer, T. Augmented reality: Principles and practice. In Proceedings of the 2017 IEEE Virtual Reality (VR), Los Angeles, CA, USA, 18–22 March 2017; pp. 425–426.

- Shevat, A. Designing Bots: Creating Conversational Experiences; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2017.

- Seipel, P.; Stock, A.; Santhanam, S.; Baranowski, A.; Hochgeschwender, N.; Schreiber, A. Adopting conversational interfaces for exploring OSGi-based software architectures in augmented reality. In Proceedings of the 1st International Workshop on Bots in Software Engineering (BotSE’19), Montreal, QC, Canada, 27 May 2019; pp. 20–21.

- Van De Ven, F. Identibot: Combining AI and AR to Create the Future of Shopping. Available online: https://chatbotslife.com/identibot-combining-ai-and-ar-to-create-the-future-of-shopping-e30e726ed1c1 (accessed on 29 December 2019).

- Ati, M.; Kabir, K.; Abdullahi, H.; Ahmed, M. Augmented reality enhanced computer aided learning for young children. In Proceedings of the 2018 IEEE Symposium on Computer Applications & Industrial Electronics (ISCAIE), Penang, Malaysia, 28–29 April 2018; pp. 129–133.

- Pretto, F.; Manssour, I.H.; Lopes, M.H.I.; Pinho, M.S. Experiences using Augmented Reality Environment for training and evaluating medical students. In Proceedings of the 2013 IEEE International Conference on Multimedia and Expo Workshops (ICMEW), San Jose, CA, USA, 15–19 July 2013; pp. 1–4.

- Del Rio Guerra, M.; Martín-Gutiérrez, J.; Vargas-Lizárraga, R.; Garza-Bernal, I. Determining which touch gestures are commonly used when visualizing physics problems in augmented reality. In VAMR 2018: Virtual, Augmented and Mixed Reality: Interaction, Navigation, Visualization, Embodiment, and Simulation, Proceedings of the International Conference on Virtual, Augmented and Mixed Reality, Las Vegas, NV, USA, 15–20 July 2018; Springer: Cham, Switzerland, 2018; pp. 3–12.

- Logan, S.; Medford, E.; Hughes, N. The importance of intrinsic motivation for high and low ability readers’ reading comprehension performance. Learn. Individ. Differ. 2011, 21, 124–128.

- Giannakos, M.N.; Vlamos, P. Using webcasts in education: Evaluation of its effectiveness. Br. J. Educ. Technol. 2013, 44, 432–441.

- Luttenberger, S.; Macher, D.; Maidl, V.; Rominger, C.; Aydin, N.; Paechter, M. Different patterns of university students’ integration of lecture podcasts, learning materials, and lecture attendance in a psychology course. Educ. Inf. Technol. 2018, 23, 165–178.

- Olmos-Raya, E.; Ferreira-Cavalcanti, J.; Contero, M.; Castellanos-Baena, M.C.; Chicci-Giglioli, I.A.; Alcañiz, M. Mobile virtual reality as an educational platform: A pilot study on the impact of immersion and positive emotion induction in the learning process. EURASIA J. Math. Sci. Technol. Educ. 2018, 14, 2045–2057.

- Hung, I.C.; Chen, N.S. Embodied interactive video lectures for improving learning comprehension and retention. Comput. Educ. 2018, 117, 116–131.

- Hamdan, J.M.; Al-Hawamdeh, R.F. The Effects of ‘Face’ on Listening Comprehension: Evidence from Advanced Jordanian Speakers of English. J. Psycholinguist. Res. 2018, 47, 1121–1131.

- Karbalaie Safarali, S.; Hamidi, H. The Impact of Videos Presenting Speakers’ Gestures and Facial Clues on Iranian EFL Learners’ Listening Comprehension. Int. J. Appl. Linguist. English Lit. 2012, 1, 106–114.

- Lee, H.; Mayer, R.E. Visual Aids to Learning in a Second Language: Adding Redundant Video to an Audio Lecture. Appl. Cogn. Psychol. 2015, 29, 445–454.

- Pardo-Ballester, C. El uso del vídeo en tests de comprensión oral por internet. J. New Approaches Educ. Res. 2016, 5, 91–98.

- Rohloff, T.; Bothe, M.; Renz, J.; Meinel, C. Towards a Better Understanding of Mobile Learning in MOOCs. In Proceedings of the 2018 Learning With MOOCS (LWMOOCS), Madrid, Spain, 26–28 September 2018; pp. 1–4.

- Anton, D.; Kurillo, G.; Bajcsy, R. User experience and interaction performance in 2D/3D telecollaboration. Futur. Gener. Comput. Syst. 2018, 82, 77–88.

- Delgado, F.J.; Martinez, R.; Finat, J.; Martinez, J.; Puche, J.C.; Finat, F.J. Enhancing the reuse of digital resources for integrated systems to represent, understand and dynamize complex interactions in architectural cultural heritage environments. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, 1, 97–102.

- Saleh, M.; Al Barghuthi, N.; Baker, S. Innovation in Education via Problem Based Learning from Complexity to Simplicity. In Proceedings of the 2017 International Conference on New Trends in Computing Sciences (ICTCS), Amman, Jordan, 11–13 October 2017; pp. 283–288.

- Lesnov, R.O. Content-Rich Versus Content-Deficient Video-Based Visuals in L2 Academic Listening Tests. Int. J. Comput. Lang. Learn. Teach. 2018, 8, 15–30.

- Kashani, A.S.; Sajjadi, S.; Sohrabi, M.R.; Younespour, S. Optimizing visually-assisted listening comprehension. Lang. Learn. J. 2011, 39, 75–84.

- Seo, K. Research note: The effect of visuals on listening comprehension: A study of Japanese learners’ listening strategies. Int. J. Listening 2002, 16, 57–81.

- Tobar-Muñoz, H.; Baldiris, S.; Fabregat, R. Augmented Reality Game-Based Learning: Enriching Students’ Experience during Reading Comprehension Activities. J. Educ. Comput. Res. 2017, 55, 901–936.

- Yilmaz, R.M.; Kucuk, S.; Goktas, Y. Are augmented reality picture books magic or real for preschool children aged five to six? Br. J. Educ. Technol. 2017, 48, 824–841.

- Roohani, A.; Jafarpour, A.; Zarei, S. Effects of Visualisation and Advance Organisers in Reading Multimedia-Based Texts. 3L Southeast Asian J. Engl. Lang. Stud. 2015, 21, 47–62.

- Nirme, J.; Haake, M.; Lyberg Åhlander, V.; Brännström, J.; Sahlén, B. A virtual speaker in noisy classroom conditions: Supporting or disrupting children’s listening comprehension? Logop. Phoniatr. Vocology 2019, 44, 79–86.

- Rudner, M.; Lyberg-Åhlander, V.; Brännström, J.; Nirme, J.; Pichora-Fuller, M.K.; Sahlén, B. Listening comprehension and listening effort in the primary school classroom. Front. Psychol. 2018, 9, 1193.

- Merkt, M.; Weigand, S.; Heier, A.; Schwan, S. Learning with videos vs. learning with print: The role of interactive features. Learn. Instr. 2011, 21, 687–704.

- Dünser, A. Supporting low ability readers with interactive augmented reality. In Annual Review of Cybertherapy and Telemedicine. Changing the Face of Healthcare; Wiederhold, B.K., Gamberini, L., Bouchard, S., Riva, G., Eds.; Interactive Media Institute: San Diego, CA, USA, 2008; pp. 39–46.

- Augment. Augment Sales. Available online: https://www.augment.com/solutions/field_sales/ and https://www.augment.com/blocks/ar-viewer/ (accessed on 29 December 2019).

- Carter, M.D.; Walker, M.M.; O’Brien, K.; Hough, M.S. The effects of text length on reading abilities in accelerated reading tasks. Speech Lang. Hear. 2017, 22, 111–121.

- Brooke, J. SUS—A quick and dirty usability scale. Usability Eval. Ind. 1996, 189, 4–7.

- Digital Communications Division in the U.S. Department of Health and Human Services’ (HHS) Office of the Assistant Secretary for Public Affairs. Available online: https://www.usability.gov/how-to-and-tools/methods/system-usability-scale.html (accessed on 29 December 2019).

- Baumgartner, J.; Frei, N.; Kleinke, M.; Sauer, J.; Sonderegger, A. Pictorial System Usability Scale (P-SUS): Developing an Instrument for Measuring Perceived Usability. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (CHI ’19), Glasgow, Scotland, UK, 4–9 May 2019; pp. 1–11.

- Lewis, J.R.; Sauro, J. The factor structure of the system usability scale. In Proceedings of the International Conference on Human Centered Design (HCI International 2009), San Diego, CA, USA, 19–24 July 2009; Springer: Berlin, Germany, 2009; pp. 94–103.

- Bangor, A.; Kortum, P.; Miller, J. Determining what individual SUS scores mean: Adding an adjective rating scale. J. Usability Stud. 2009, 4, 114–123.

- Sauro, J. Measuring Usability with the System Usability Scale (SUS). Available online: https://measuringu.com/sus/ (accessed on 29 December 2019).

- Kostyrka-Allchorne, K.; Cooper, N.R.; Simpson, A. Touchscreen generation: Children’s current media use, parental supervision methods and attitudes towards contemporary media. Acta Paediatr. 2017, 106, 654–662.

- Dirin, A.; Laine, T.H. User Experience in Mobile Augmented Reality: Emotions, Challenges, Opportunities and Best Practices. Computers 2018, 7, 33.

| Study | Sample | Education | Scope/Results | Format |
|---|---|---|---|---|
| [25] Hamdan and Al-Hawamdeh | 60 | University and graduate students. Foreign language. | Listening comprehension in foreign language affected by flat audio and audio + video. | Audio and video on computer. |
| [33] Lesnov | 73 | Foreign language students. | Listening comprehension in foreign language affected by flat audio, audio + relevant visuals and audio + non-relevant visuals. No statistical difference in second language test-takers’ performance on an academic listening test in an audio-only mode versus an audio-video mode. | Audio and video on computer. |
| [38] Roohani, Jafarpour and Zarei | 80 | Foreign language students. | Listening comprehension in foreign language affected by flat audio, audio + relevant visuals and audio + non-relevant visuals. The animation type of visualization was more effective than the static one and embedding animations with question advance organizers improved reading comprehension significantly. | Text, animations and static images on computer. |
| [39] Nirme et al. | 55 | Children aged 8 to 9 (Swedish elementary schools). | Listening comprehension in noise and silence conditions by audio and audiovisual. The results are inconclusive regarding how seeing a virtual speaker affects listening comprehension. | Audio and audiovisual (virtual speaker video). |
| [42] Dünser | 21 | Children aged 6 to 7 (primary school students). | Reading comprehension for traditional picture books and for AR picture books. No statistical difference in the number of interactive lessons read between high and low reading comprehension groups. Readers with high reading comprehension remembered more events. | Text, images, AR on computer. A webcam is used to capture bookmarks. |
| Present study | 32 | Children aged 9 to 11 (primary school students). | Listening comprehension affected by visual elements of video and visual elements of AR. See Section 4. | Audio, video on computer, AR on mobile device. |

| Part | Main Idea | 3D Model | Duration (s) |
|---|---|---|---|
| 1 | The description of an experiment to find out if humans could survive in space. | An astronaut (Brewton, 2018) | 41 |
| 2 | Capturing the dog that would be the test subject in the experiment. | A dog (Pillen, 2018) | 26 |
| 3 | Description of the training that Laika underwent. | A washing machine (Coldesina, 2017) | 25 |
| 4 | Russian scientists publicly announce the upcoming launch of Laika. | A dog in an astronaut suit (Morcillo, 2018) | 40 |
| 5 | Laika is launched into space and vital signs are recorded. | A space rocket (Tvalashvili, 2016) | 48 |
| 6 | Laika and Sputnik II continue their mission around the Earth and Laika dies. | Planet Earth (Guggisberg, 2018) | 48 |
| 7 | Scientists announce their findings, causing unrest among the population. | A person protesting (Tepapalearninglab, 2017) | 42 |
| 8 | Protesters achieve the prevention of future animal abuse for scientific purposes and Laika is thanked for marking the beginning of the mission to send humans into space. | A rocket standing on a star (Lukashov, 2016) | 33 |
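The part durations in the table above can be added up to give the overall length of the lesson. The following is a minimal Python sketch; the durations are copied from the table and read as seconds, and the variable names are illustrative only.

```python
# Durations of the eight lesson parts, in seconds, copied from the table.
part_durations_s = [41, 26, 25, 40, 48, 48, 42, 33]

# Total length of the lesson and its minutes/seconds breakdown.
total_s = sum(part_durations_s)
minutes, seconds = divmod(total_s, 60)

print(f"{total_s} s = {minutes} min {seconds} s")
```

Under this reading, the eight parts together last a little over five minutes.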

| Group | High: Score | High: Time | Low: Score | Low: Time | Boys: Score | Boys: Time | Girls: Score | Girls: Time | All Participants: Score | All Participants: Time |
|---|---|---|---|---|---|---|---|---|---|---|
| AR Group | 7.75 (0.89) | 288.88 (256.97) | 5.12 (3.00) | 304.75 (124.36) | 6.00 (2.80) | 255.08 (119.38) | 7.75 (0.5) | 422.00 (332.24) | 6.44 (2.53) | 296.81 (195.19) |
| AR Group (sample size) | n = 8 | | n = 8 | | n = 12 | | n = 4 | | N = 16 | |
| VIDEO Group | 7.63 (0.74) | 161.88 (64.96) | 6.63 (0.92) | 391.75 (259.75) | 7.11 (1.05) | 337.00 (248.36) | 7.14 (0.90) | 199.42 (154.56) | 7.12 (0.96) | 276.81 (217.77) |
| VIDEO Group (sample size) | n = 8 | | n = 8 | | n = 9 | | n = 7 | | N = 16 | |
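The "All Participants" columns can be cross-checked against the boys and girls columns, since a pooled mean is the sample-size-weighted average of the subgroup means. The sketch below is a minimal Python illustration of that check, not part of the authors' analysis. The helper name `pooled_mean` is hypothetical, the values are copied from the table, and the VIDEO boys' time is read as 337.00 (an assumption; it is the value that reproduces the reported pooled time of 276.81).

```python
def pooled_mean(means, sizes):
    """Sample-size-weighted average of subgroup means."""
    total = sum(sizes)
    return sum(m * n for m, n in zip(means, sizes)) / total

# Subgroup means are (boys, girls); sizes are (n boys, n girls).
ar_score = pooled_mean([6.00, 7.75], [12, 4])
ar_time = pooled_mean([255.08, 422.00], [12, 4])
video_score = pooled_mean([7.11, 7.14], [9, 7])
# 337.00 is an assumed reading of the VIDEO boys' time (see lead-in).
video_time = pooled_mean([337.00, 199.42], [9, 7])

print(round(ar_score, 2), round(ar_time, 2))
print(round(video_score, 2), round(video_time, 2))
```

Rounded to two decimals, these weighted averages agree with the pooled scores and times reported for each group.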

| Factor | p-Value |
|---|---|
| Level of Reading Comprehension | 0.005 |
| Lesson Format | 0.254 |
| Level of Reading Comprehension × Lesson Format | 0.180 |

| Subgroup | Mean | Tukey Group |
|---|---|---|
| HighAR | 7.75 | A |
| HighVIDEO | 7.63 | A |
| LowVIDEO | 6.63 | A B |
| LowAR | 5.12 | B |
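Tukey grouping letters are conventionally read as follows: subgroups that share at least one letter are not significantly different, and subgroups with no letter in common are. The sketch below is a minimal Python illustration of that reading applied to the table above. The helper name `differing_pairs` is hypothetical, and LowAR's group is read as B.

```python
from itertools import combinations

# Grouping letters copied from the Tukey table (LowAR read as "B").
tukey_letters = {
    "HighAR": {"A"},
    "HighVIDEO": {"A"},
    "LowVIDEO": {"A", "B"},
    "LowAR": {"B"},
}

def differing_pairs(letters):
    """Return the pairs of subgroups that share no grouping letter."""
    return [
        (a, b)
        for a, b in combinations(letters, 2)
        if not (letters[a] & letters[b])
    ]

pairs = differing_pairs(tukey_letters)
print(pairs)  # [('HighAR', 'LowAR'), ('HighVIDEO', 'LowAR')]
```

Under this reading, only LowAR is separated from the two high reading comprehension subgroups, while LowVIDEO overlaps with all of the others.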

| Factor | p-Value |
|---|---|
| Reading Comprehension Level | 0.017 |
| Lesson Format | 0.731 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

del Río Guerra, M.S.; Garza Martínez, A.E.; Martin-Gutierrez, J.; López-Chao, V. The Limited Effect of Graphic Elements in Video and Augmented Reality on Children’s Listening Comprehension. Appl. Sci. 2020, 10, 527. https://doi.org/10.3390/app10020527
