1. Introduction

Virtual reality (VR) is considered one of the most widely announced applications of the fifth-generation cellular system (5G) [1,2,3]. In addition, many applications and market sectors are expected to emerge in many areas of life [4]. Furthermore, augmented reality (AR) is a new technology that augments real objects in the surrounding environment with perceptual information spanning multiple sensory modalities, including haptic, visual and auditory information [5,6]. In addition, recent developments and advances in sensor manufacturing, AR devices (e.g., Epson Moverio and Google Glass) and AR toolkits (e.g., ARKit) have led to a wide range of AR/VR applications in various domains [7,8]. These are ultra-low-latency applications that require an end-to-end latency on the order of 5 ms [9,10,11].

However, designing a reliable AR/VR system that meets the required latency constraints and other quality of service (QoS) requirements is challenging. This is due to the long distance between the application server and the end users, which increases network latency and thus degrades the user experience [12].

To address these issues, mobile edge computing (MEC), software-defined networking (SDN) and network function virtualization (NFV) have been proposed as promising technologies that have received much attention lately and can provide AR/VR applications with ultra-low latency [13,14,15,16]. MEC is a recent paradigm that brings computing capabilities to the edge of the radio access network (RAN), near the end users, and thus reduces communication latency. This paradigm replaces huge centralized data centers with small distributed edge servers and thus moves from centralized to decentralized computing resources [17]. Introducing edge-cloud servers between the AR end user and the application server brings many benefits, including reduced communication latency.

SDN and network softwarization are another key paradigm and recent communication technology, providing the required flexibility and opening the way for network innovation by separating the data and control planes. In addition, deploying SDN in AR networks provides the required reliability and flexibility. Furthermore, SDN can manage the distributed MEC servers and control the communication with the application server.

5G technology enables data to be transferred at high speed with low latency, ushering society into the new era of smart cities and the Internet of Things (IoT). Industry stakeholders have identified several potential use cases for 5G networks, and ITU-R has grouped them into three main categories: enhanced mobile broadband (eMBB), covering enhanced indoor and outdoor broadband, corporate collaboration, and augmented and virtual reality; massive machine-type communications (mMTC), covering IoT, smart agriculture, smart cities, energy monitoring, smart homes and remote monitoring; and ultra-reliable low-latency communications (URLLC), covering autonomous vehicles, smart grids, remote patient monitoring and telemedicine, and production automation.

According to wireless operators, eMBB will be the primary 5G use case in the early stages of network deployment. With eMBB, densely populated areas will be covered by high-speed mobile broadband, users will have on-demand access to high-speed streaming on home devices, screens and mobile devices, and new services for corporate collaboration will emerge. Some operators are also considering eMBB as a solution for areas where copper or fiber-optic connections to residences are insufficient. 5G technology is also expected to contribute to the development of smart cities and IoT through the large-scale deployment of low-power sensor networks in urban and rural areas. Owing to its safety and reliability, 5G technology can be used to ensure public safety and to provide critical services, for example, in smart grids, police and security services, energy and water supply enterprises, and healthcare.

With the development of computation offloading for MEC systems, several related system architectures and approaches have been proposed in which the intensive computation tasks of mobile devices are offloaded to resource-rich servers located at the edge of the radio access network (RAN) [18,19]. However, most of these studies address only two levels of computation offloading in MEC systems [20,21], while few studies address multi-level computation offloading [22]. In addition, most of these studies consider a single user, or multiple users each with only a single computation task, where minimizing energy consumption, allocating radio and computation resources efficiently, and/or satisfying the latency requirements of mobile users are the main goals. Consequently, the main motivations behind our work are based on the following observations:

Considering a multi-level environment for multi-player computation offloading is an important issue, especially when the number of mobile devices is large and the resources of the edge server are insufficient.

In the MEC system, most of the complex mobile applications have many tasks that need to be offloaded and executed. Therefore, addressing the multi-task issue is important.

Computing resources at the edge and cloud servers and the computing tasks of the mobile devices are the main factors that determine the efficiency of a multi-level, multi-player, multi-task edge-cloud computing system. It is therefore crucial to have an effective policy that considers them jointly.

Motivated by these considerations, in this paper, we introduce a reliable AR system able to support ultra-low-latency AR applications with the announced specifications. This system deploys a multi-level, multi-player, multi-task computation offloading environment that provides computing resources at the edge of the RAN, which reduces the communication latency of VR tasks. In addition, we formulate computation offloading as an integer optimization problem whose objective is to minimize the sum cost of the entire system in terms of network latency and energy consumption. The main contributions of this paper are as follows:

An efficient computation offloading model is formulated as an integer optimization problem with the objective of minimizing the sum cost of the entire system in terms of network latency and energy consumption for a multi-level, multi-player, multi-task edge-cloud computing system. In addition, our environment includes a single cloud computing server that is connected to the edge computing server via an intelligent core network built on SDN technology, which provides additional resources when the number of VR devices increases and the edge server resources become insufficient.

An efficient algorithm is designed that provides a comprehensive procedure for deriving the optimal computation offloading decision.

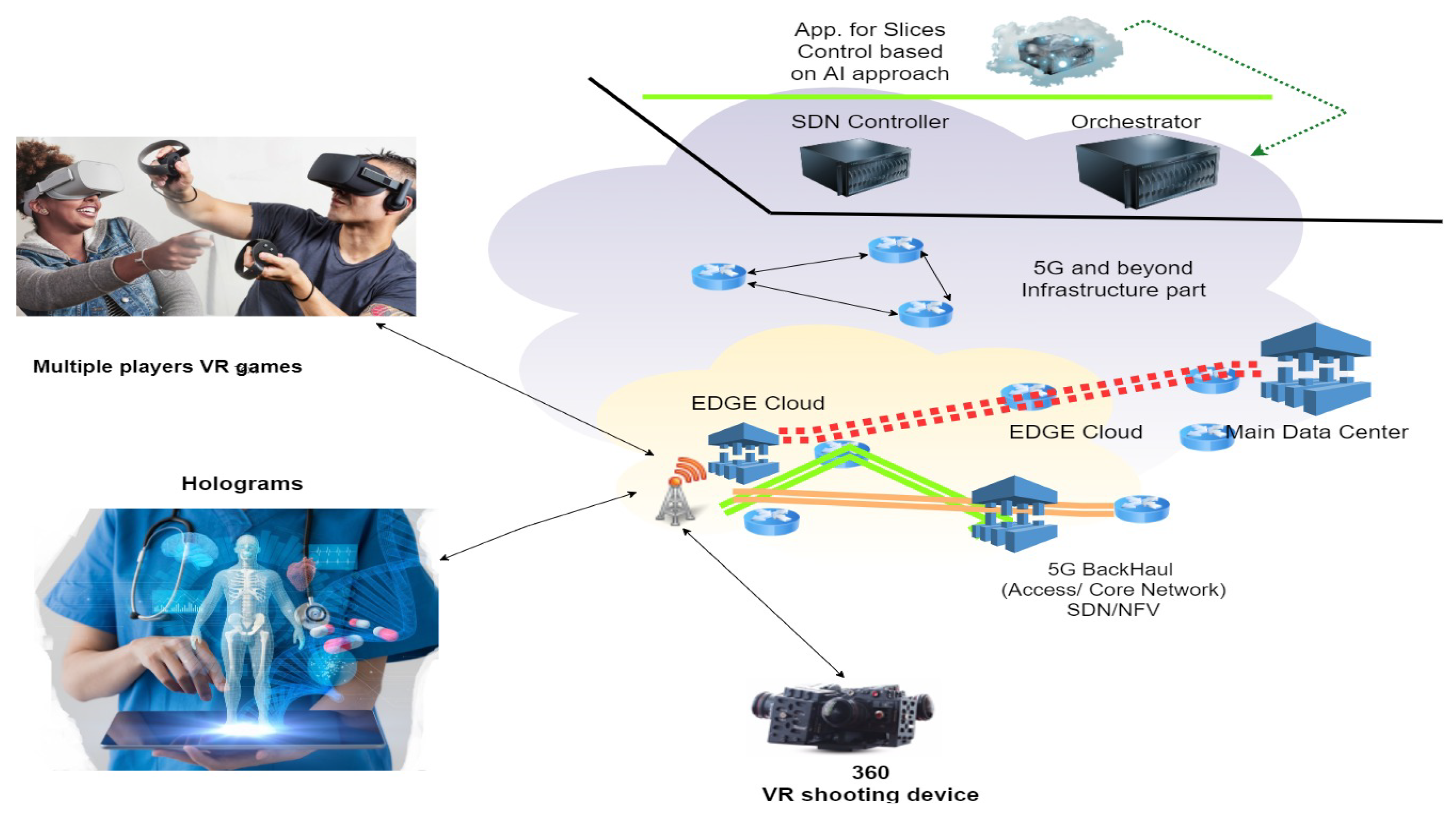

Three main VR applications are considered: multi-player VR games, holograms and 360-degree VR video applications.

Finally, simulations are conducted to validate the proposed model and show that the network latency and energy consumption can be reduced by up to 26.2%, 27.2% and 10.9%, 12.2% in comparison with edge and cloud execution, respectively. In addition, we provide a prototype and a real implementation of the proposed system using the OpenAirInterface software.

The rest of the paper is organized as follows.

Section 2 introduces three main VR applications.

Section 3 reviews related work on computation offloading policies.

Section 4 presents our system model for multi-level multi-player multi-task computation offloading and the designed algorithm.

Section 5 presents the simulation experiments and the prototype implementation.

Finally, Section 6 concludes the paper.

2. VR Applications

2.1. Holograms over Proposed System

To increase their effectiveness, augmented reality and virtual reality are increasingly used in conjunction with other technologies, for example, Internet of Things applications, the Tactile Internet and holographic telepresence [23,24,25,26]. Holographic presence makes AR/VR more spectacular for the user and allows them to see virtual holograms, which are volumetric color images. Modern equipment can create realistic holograms that are nearly indistinguishable from real objects. This effect is achieved by accurately tracking the user's position in a given space and presenting a stereoscopic image according to that position. AR/VR technologies allow static or animated objects to be projected into real environments, thereby extending the physical world. Earlier hologram designs for AR were based on so-called in-air displays, sometimes also called free-space displays, in which projected graphic objects are displayed in the air on free projection surfaces, such as a barely visible fog wall or a fog screen created by an installed fan [27]. One of the most popular devices is Microsoft HoloLens [28]. Since both Microsoft HoloLens and AR glasses can track head movements, they create the impression that holographic geospatial objects are constantly present in the user's environment. Even if the user walks around a certain area, usually indoors, the holograms remain and adapt to the user's location and viewing perspective. This constant and adaptable holographic projection can lead to visualization approaches that bring additional benefits for cognitive processing. Presented as the first stand-alone holographic computer, HoloLens unites the physical and digital worlds, allowing users to interact with digital content and holograms in mixed reality. The work in [29] presents a technical assessment of the use of HoloLens for multimedia applications.

2.2. Multiple Players VR Games over Proposed System

To illustrate the operation of the cloudlet and the higher-layer edge-cloud units, an example of multi-player VR games is considered. In multi-player VR games, players use their VR-enabled devices to play a game that runs on a remote application server. The proposed system is introduced to reduce latency and to make efficient use of the energy and computing resources of the users' VR devices.

Figure 1 presents the multi-player VR game system running over the proposed system.

A VR user with limited computing or energy resources searches its surroundings for devices with available computing and energy resources capable of hosting the computing tasks associated with the VR game. These devices are referred to as cloudlets; a cloudlet may be a powerful smartphone, notebook or tablet. Computing tasks are offloaded from the VR user to the cloudlet over a device-to-device (D2D) communication interface. WiFi Direct is an efficient interface for this purpose and can be deployed with the method proposed in [6]. The offloading process follows the algorithm developed in Section 4.4.

If the VR game user cannot find a nearby cloudlet, it offloads its computing tasks to the next level of edge-cloud units, namely the micro-cloud edge servers connected to cellular base stations. Micro-cloud edge servers are small edge units with limited computing and energy resources. They are deployed to provide computing and energy resources at the edge of the RAN, thereby reducing latency and the traffic passed to the core network. Micro-cloud edge servers receive and handle computing tasks from VR users or from cloudlets that lack sufficient computing or energy resources.

Based on the available resources and the considered offloading algorithm, micro-cloud edge servers either handle the received computing tasks or offload them to the higher edge-cloud units, i.e., the mini-cloud units. Each group of micro-cloud units is physically connected to a higher-capability edge-cloud unit, referred to as a mini-cloud. All distributed mini-cloud edge units are connected directly to the core network cloud, which represents the interface to the application server.
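The escalation path described above (cloudlet, then micro-cloud, then mini-cloud, then the core network cloud) can be pictured as a first-fit search up the hierarchy. The Python sketch below is a simplification under stated assumptions: each unit is reduced to a single hypothetical `free_cycles` budget, and `EdgeUnit` and `place_task` are illustrative names, not part of the proposed system. The actual placement in this paper comes from the offloading algorithm of Section 4.4, which also weighs latency and energy.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class EdgeUnit:
    """One tier of the hierarchy (hypothetical model: one pooled capacity)."""
    name: str
    free_cycles: float  # CPU cycles the unit can still host


def place_task(required_cycles: float, tiers: list) -> Optional[str]:
    """Offload to the nearest tier with enough free capacity, escalating upward.

    `tiers` is ordered from nearest to farthest (cloudlet, micro-cloud,
    mini-cloud, core network cloud). Returns the chosen tier name, or None
    if no tier can host the task.
    """
    for tier in tiers:
        if tier.free_cycles >= required_cycles:
            tier.free_cycles -= required_cycles  # reserve capacity on this tier
            return tier.name
    return None
```

For example, with tiers of 2×10^9, 10^10, 5×10^10 and unlimited cycles, a 10^9-cycle task stays on the cloudlet, while a 2×10^10-cycle task escalates to the mini-cloud.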

2.3. 360-Degree Video Streaming over Proposed System

To further illustrate the benefits of the proposed system, another important VR application is considered. 360-degree video streaming is in demand for many VR applications. Local video processing performs poorly for high-resolution video, e.g., 4K and higher resolutions. Therefore, MEC technology should be involved, and video computing tasks should be offloaded over an appropriate communication link to the edge-cloud server. Moreover, an efficient data offloading scheme is needed for offloading these computing tasks.

The introduction of heterogeneous distributed edge-cloud servers provides computing and energy resources to mobile VR devices, so that video processing and decoding tasks can be offloaded. The considered MM-MEC system can thus achieve high efficiency for 360-degree video applications on mobile VR devices. However, this kind of VR application requires a communication interface with high spectral efficiency that can deliver video with the required quality of experience (QoE).

To achieve high transmission QoS for 360-degree VR video applications, we consider millimeter wave (mmWave) as the communication interface. IEEE 802.11ad is a multi-gigabit wireless standard that operates in the 60 GHz V band. Such high wireless bandwidth is efficient for increasing the capacity of video-based applications. The main issue with mmWave, compared with traditional interfaces such as WiFi, is its limited communication range; thus, it is recommended for outdoor rather than indoor applications. Owing to recent advances in antenna design, techniques have been developed for adapting mmWave to indoor applications.

3. Related Works

In recent years, numerous approaches and optimization models have been proposed to address the challenges of mobile devices using MEC by applying the computation offloading concept. Most of these studies handle only two levels of computation offloading in MEC systems [20,21], while few studies address multi-level computation offloading [22]. This section briefly overviews the common approaches.

Colman-Meixner et al. [30] introduced 5G City and discussed how 5G technology will facilitate advanced media services such as ultra-high-definition video and augmented and virtual reality. In addition, they studied the opportunities provided by 5G technology and the changes it brings to the work of telecommunications service providers. Furthermore, they presented three use cases and described their use in public networks, as well as the advantages of this model for infrastructure owners and media service providers. In [31], Elbamby et al. studied the problem of low-latency wireless VR networks and proposed using information about user positions, proactive computing and caching to minimize computation latency.

Several real prototypes for VR applications have been implemented, some of which use edge computing [32,33], while others do not [34,35]. Among the prototypes using edge computing, Hou et al. [32] discussed how to wirelessly connect a portable mobile VR device with VR glasses to edge computing devices. In addition, the authors explored the main issues associated with this new approach to wireless VR with edge computing, as well as various application scenarios. Furthermore, they analyzed the delay requirements for enabling wireless VR and studied several possible solutions. Computation offloading for VR gaming applications was considered in [33], where minimizing network latency is the main goal.

Meanwhile, in [34], Hsiao et al. dealt with information security issues and addressed the shortcomings of existing security systems in augmented reality (AR) technologies, artificial intelligence, wireless, 5G, big data, massive computing and virtual stores. In [35], Le et al. addressed computation offloading over mmWave for mobile VR, using 360-degree video streaming as a case study. The authors first noted that 360-degree video streaming requires more bandwidth and faster user response. In addition, mobile VR devices perform video decoding, post-processing and rendering locally, but their performance is insufficient for streaming high-resolution video such as 4K–8K. Therefore, the authors proposed an adaptive computation offloading scheme using millimeter-wave (mmWave) communication. This scheme helps the mobile device share video decoding tasks with a powerful PC, which improves the ability of the mobile VR device to play high-definition video. mmWave IEEE 802.11ad wireless technology promises broadband wireless links that improve the throughput of multimedia systems.

In 5G networks, AR/VR applications will be among the leading applications in the ultra-reliable and low-latency communication (URLLC) category [20,36]. More specifically, in [20], Liu et al. proposed a computation offloading framework for URLLC in which computation tasks are divided into sub-tasks that are offloaded to and executed at nearby edge server nodes. In addition, the authors formulated optimization problems that jointly minimize the latency and the offloading failure probability, and designed three heuristic search-based algorithms to solve them and derive the computation offloading decision. However, this work does not consider a cloud computing environment, which can be leveraged when the edge server resources are insufficient. Similarly, in [36], Viitanen et al. described the basic functionality and a demo setup for remote control of 360-degree stereo VR games. They proposed a low-latency approach in which the execution of the VR game is offloaded from the end-user device to an edge-cloud server. The controller feedback is transmitted over the network to the server, from which the rendered game views are streamed to the user in real time as encoded HEVC video frames. Finally, this approach showed that the energy consumption and computational load of end terminals can be reduced by utilizing the latest advances in network connection speed.

From the above review of related work, it is observed that computation offloading has been investigated for different objectives, mostly with only a two-level architecture in MEC systems. Moreover, most of these studies address a single user, or multiple users with only a single computation task each. This motivates the work of this paper, which jointly considers computation offloading in multi-level, multi-player, multi-task edge-cloud computing systems. Our work aims to minimize the sum cost of the entire system in terms of network latency and energy consumption.

4. System Model

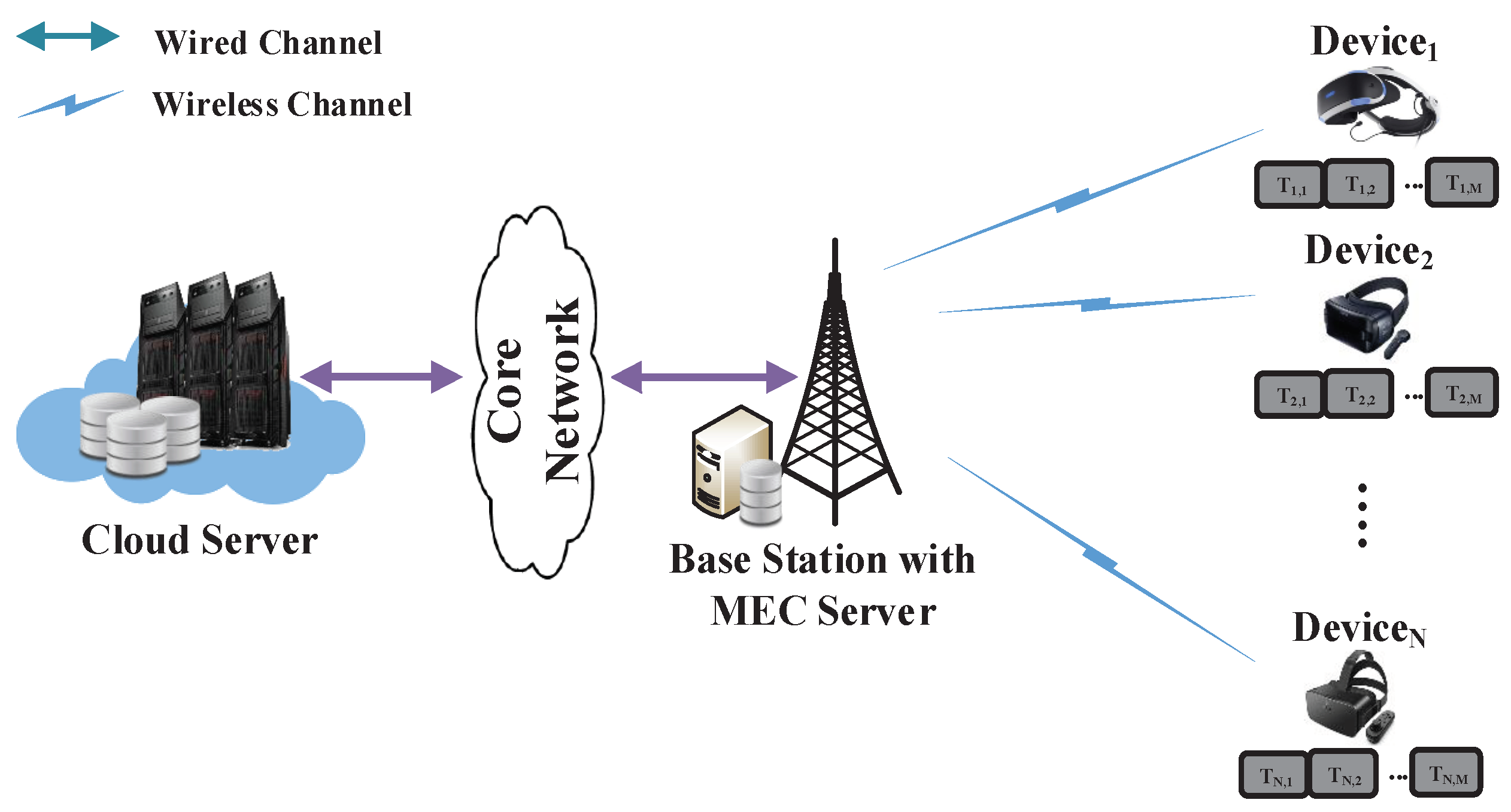

In this section, we introduce the system model adopted in this paper. As shown in Figure 2, we consider a set of $N$ VR game devices, each of which has a set of $M$ independent computation tasks that need to be completed. These devices are connected via a wireless channel to a single base station, which is equipped with a mobile edge computing server and connected to a centralized cloud via the core network. We denote the sets of VR devices and their computation tasks as $\mathcal{N} = \{1, 2, \dots, N\}$ and $\mathcal{M} = \{1, 2, \dots, M\}$, respectively, where each computation task can be executed locally on the device itself or offloaded and processed remotely on the edge server or the cloud server.

In the following subsections, the communication and computation models are presented in more detail, followed by the formulation of the optimization problem for our model.

The notations used in this study are summarized in Table 1.

4.1. Communication Model

We first introduce the communication model of the edge-cloud system, in which a single base station is connected to a set of VR game devices through a wireless channel, and the edge computing resources associated with the base station are connected to cloud computing via the core network. In addition, each device runs a VR mobile game application that has independent computation tasks to be completed.

Let $x_{ij}^{l}, x_{ij}^{e}, x_{ij}^{c} \in \{0,1\}$ denote the binary computation offloading decisions for computation task $j$ of VR device $i$, which define the execution place of the task. More specifically, $x_{ij}^{l}=1$ indicates that task $j$ of VR device $i$ is executed locally using the VR device's own resources, while $x_{ij}^{e}=1$ and $x_{ij}^{c}=1$ indicate that the task is offloaded and processed remotely at the base station and the cloud server, respectively. Overall, each computation task must be executed exactly once, either locally ($x_{ij}^{l}=1$) or remotely ($x_{ij}^{e}+x_{ij}^{c}=1$), i.e., $x_{ij}^{l}+x_{ij}^{e}+x_{ij}^{c}=1$.

According to the Shannon formula, the maximum uplink and downlink data rates of VR device $i$, over which its computation task data are transmitted, can be calculated as [4]:

$$r_i^{up} = B^{up}\log_2\left(1+\frac{p_i h_i}{N_0 B^{up}}\right), \tag{1}$$

$$r_i^{dw} = B^{dw}\log_2\left(1+\frac{p_B h_i}{N_0 B^{dw}}\right), \tag{2}$$

where $B^{up}$ and $B^{dw}$ denote the uplink and downlink channel bandwidths, $p_i$ and $p_B$ denote the transmission powers of VR device $i$ and the base station, and $N_0$ and $h_i$ denote the noise power spectral density and the corresponding channel gain between VR device $i$ and the base station due to path loss and shadowing attenuation.
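A minimal Python sketch of Eqs. (1) and (2) as written above follows. The function name and the numeric values in the check are hypothetical, chosen only so that the signal-to-noise ratio is easy to verify by hand.

```python
import math


def shannon_rate(bandwidth_hz: float, tx_power_w: float, channel_gain: float,
                 noise_psd_w_per_hz: float) -> float:
    """Achievable rate in bit/s: B * log2(1 + p * h / (N0 * B)).

    Use (B^up, p_i) for the uplink, Eq. (1), and (B^dw, p_B) for the
    downlink, Eq. (2).
    """
    noise_power_w = noise_psd_w_per_hz * bandwidth_hz  # N0 * B
    snr = tx_power_w * channel_gain / noise_power_w
    return bandwidth_hz * math.log2(1.0 + snr)
```

With $B = 1$ MHz, $p = 0.1$ W, $h = 10^{-12}$ and $N_0 = 10^{-19}$ W/Hz the SNR is 1, so the rate equals the bandwidth; tripling the power gives SNR 3 and doubles the rate.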

4.2. Computation Model

In this subsection, the computation offloading model is introduced. First, as mentioned above, our system has a single base station connected with a set of $N$ VR game devices, each of which has $M$ independent computation tasks that need to be completed. For each computation task $j$ of VR device $i$, we use a tuple $T_{ij} = (d_{ij}^{in}, d_{ij}^{out}, c_{ij}, \tau_{ij})$ to represent the computation task requirement, where $d_{ij}^{in}$ and $d_{ij}^{out}$ represent the sizes of the input and output data that need to be transmitted and received, respectively, whereas $c_{ij}$ and $\tau_{ij}$ represent the total number of CPU cycles and the completion deadline required for task $j$ of VR device $i$. The values of $d_{ij}^{in}$, $d_{ij}^{out}$ and $c_{ij}$ can be obtained through careful profiling of the task execution [37,38,39].

Next, the computation overhead in terms of execution time and energy consumption is discussed in detail for the local, edge and cloud execution approaches.

4.2.1. Local Execution

For the local execution approach, where computation task $j$ is executed locally at the VR device itself, the total execution time and energy consumption can be calculated, respectively, as:

$$t_{ij}^{l} = \frac{c_{ij}}{f_i^{l}}, \tag{3}$$

$$e_{ij}^{l} = \varepsilon_i c_{ij}, \tag{4}$$

where $f_i^{l}$ denotes the computational capability (CPU cycles per second) of VR device $i$, and $\varepsilon_i$ is a coefficient denoting the energy consumed per CPU cycle. We set $\varepsilon_i = \kappa (f_i^{l})^2$, where $\kappa$ is a hardware-dependent constant, so that the energy consumption is a superlinear function of the VR device frequency [40,41].
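Equations (3) and (4) can be checked numerically with the Python sketch below. It uses the simulation's 1500 cycles/bit to size a 1 MB task (taking 1 MB = 8×10^6 bits); the constant κ = 10^-27 and the 1 GHz device frequency are hypothetical placeholders, not values reported in this paper.

```python
def local_cost(cycles: float, f_local_hz: float, kappa: float) -> tuple:
    """Return (time_s, energy_j) for local execution, following Eqs. (3)-(4).

    The energy per cycle is modeled as eps = kappa * f**2, so energy grows
    superlinearly with the device frequency.
    """
    time_s = cycles / f_local_hz          # Eq. (3)
    eps = kappa * f_local_hz ** 2         # energy per CPU cycle (J/cycle)
    energy_j = eps * cycles               # Eq. (4)
    return time_s, energy_j


cycles = 1500 * 8e6                       # 1500 cycles/bit for a 1 MB task
t, e = local_cost(cycles, 1e9, 1e-27)     # hypothetical 1 GHz device, kappa = 1e-27
```

Here $t = 12$ s and $e \approx 12$ J. Doubling the frequency halves the time but quadruples the energy, since $e_{ij}^{l} = \kappa (f_i^{l})^2 c_{ij}$; this trade-off is one reason offloading compute-heavy tasks can pay off.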

4.2.2. Remote Execution

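For the remote execution approach, where computation task $j$ of VR device $i$ is offloaded and processed at the base station or the cloud server, the total execution times can be calculated, respectively, as:

$$t_{ij}^{e} = t_{ij}^{up} + t_{ij}^{exe,e} + t_{ij}^{dw}, \tag{5}$$

$$t_{ij}^{c} = t_{ij}^{up} + T^{prop} + t_{ij}^{exe,c} + t_{ij}^{dw}, \tag{6}$$

where $T^{prop}$ is a constant denoting the propagation delay for transferring the computation task between the base station and the cloud server, while $t_{ij}^{up}$, $t_{ij}^{dw}$, $t_{ij}^{exe,e}$ and $t_{ij}^{exe,c}$ denote the offloading time, the downloading time and the execution times of computation task $j$ of VR device $i$ at the base station and the cloud server, respectively, which can be expressed as:

$$t_{ij}^{up} = \frac{d_{ij}^{in}}{r_i^{up}}, \tag{7}$$

$$t_{ij}^{dw} = \frac{d_{ij}^{out}}{r_i^{dw}}, \tag{8}$$

$$t_{ij}^{exe,e} = \frac{c_{ij}}{f_i^{e}}, \tag{9}$$

$$t_{ij}^{exe,c} = \frac{c_{ij}}{f_i^{c}}, \tag{10}$$

where $f_i^{e}$ and $f_i^{c}$ denote the computational capabilities of the base station and the cloud server, respectively, assigned to VR device $i$.

Consequently, the energy consumption for offloading, downloading and processing computation task $j$ of VR device $i$ remotely at the base station and the cloud server can be expressed as:

$$e_{ij}^{e} = p_i t_{ij}^{up} + p_i^{r} t_{ij}^{dw} + p_i^{idle} t_{ij}^{exe,e}, \tag{11}$$

$$e_{ij}^{c} = p_i t_{ij}^{up} + p_i^{r} t_{ij}^{dw} + p_i^{idle}\left(T^{prop} + t_{ij}^{exe,c}\right), \tag{12}$$

where $p_i^{r}$ denotes the reception power of the VR device and $p_i^{idle}$ is a constant denoting the power the VR device consumes while idle, waiting for the task to be processed at the edge or the cloud.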
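The Python sketch below evaluates Eqs. (5) to (12) for a single task, treating edge execution as the case $T^{prop}=0$. All numeric inputs in the example (80 Mbit/s uplink, 16 Mbit/s downlink, 0.5 W, 0.2 W and 0.01 W powers, 50 ms propagation delay) are hypothetical; only the 1500 cycles/bit, the 20% output ratio and the 20/50 GHz server speeds come from the simulation settings of Section 5.

```python
def remote_cost(d_in, d_out, cycles, r_up, r_dw, f_server,
                p_tx, p_rx, p_idle, t_prop=0.0):
    """Return (time_s, energy_j) for edge (t_prop=0) or cloud (t_prop>0) execution.

    Upload, optional core-network propagation, remote execution, and download
    of the results, as in Eqs. (5)-(12).
    """
    t_up = d_in / r_up          # Eq. (7)
    t_dw = d_out / r_dw         # Eq. (8)
    t_exe = cycles / f_server   # Eq. (9) or Eq. (10)
    time_s = t_up + t_prop + t_exe + t_dw
    # The device transmits, receives, and idles while the task is away.
    energy_j = p_tx * t_up + p_rx * t_dw + p_idle * (t_prop + t_exe)
    return time_s, energy_j


task = dict(d_in=8e6, d_out=1.6e6, cycles=1.2e10, r_up=8e7, r_dw=1.6e7,
            p_tx=0.5, p_rx=0.2, p_idle=0.01)
edge = remote_cost(f_server=20e9, **task)               # about (0.8 s, 0.076 J)
cloud = remote_cost(f_server=50e9, t_prop=0.05, **task)  # about (0.49 s, 0.0729 J)
```

With these assumed numbers, the cloud's faster CPU outweighs the extra propagation delay for a compute-heavy task; with a slower core network the edge would win.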

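In view of the communication and computation models, the total overhead for executing computation task $j$ of VR device $i$, in terms of time and energy, can be expressed as:

$$Z_{ij} = \lambda_i^{t} T_{ij} + \lambda_i^{e} E_{ij}, \tag{13}$$

where $\lambda_i^{t}$ and $\lambda_i^{e}$ denote the weighting parameters of execution time and energy consumption for VR device $i$'s decision making, respectively, while $T_{ij}$ and $E_{ij}$ are the total time and energy, which can be expressed as:

$$T_{ij} = x_{ij}^{l} t_{ij}^{l} + x_{ij}^{e} t_{ij}^{e} + x_{ij}^{c} t_{ij}^{c}, \tag{14}$$

$$E_{ij} = x_{ij}^{l} e_{ij}^{l} + x_{ij}^{e} e_{ij}^{e} + x_{ij}^{c} e_{ij}^{c}. \tag{15}$$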
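To make the role of the decision variables concrete, the Python sketch below evaluates Eqs. (13) to (15) for one task, assuming the per-place times and energies have already been computed. The function name, the dictionary layout and the equal weights in the check are hypothetical.

```python
def weighted_overhead(x: dict, times: dict, energies: dict,
                      w_time: float, w_energy: float) -> float:
    """Z_ij = w_time * T_ij + w_energy * E_ij, with T_ij and E_ij from Eqs. (14)-(15).

    `x` maps each execution place ("local", "edge", "cloud") to a 0/1 decision.
    """
    if set(x) != {"local", "edge", "cloud"}:
        raise ValueError("x must cover local, edge and cloud")
    if any(v not in (0, 1) for v in x.values()) or sum(x.values()) != 1:
        raise ValueError("exactly one execution place must be selected")
    total_time = sum(x[k] * times[k] for k in x)          # Eq. (14)
    total_energy = sum(x[k] * energies[k] for k in x)     # Eq. (15)
    return w_time * total_time + w_energy * total_energy  # Eq. (13)
```

Because exactly one decision is 1, $T_{ij}$ and $E_{ij}$ simply pick out the time and energy of the chosen place; the validity check mirrors the requirement that each task is executed exactly once.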

4.3. Problem Formulation

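In this section, we consider the problem of achieving efficient computation offloading for multi-player VR edge-cloud computing systems. Based on the above communication and computation models, the computation offloading problem is formulated as the following constrained optimization problem:

$$
\begin{aligned}
\min_{\mathbf{x}}\quad & \sum_{i\in\mathcal{N}}\sum_{j\in\mathcal{M}} Z_{ij} \\
\text{s.t.}\quad
& \text{C1: } E_{ij} \le E_i^{max}, && \forall i\in\mathcal{N},\ j\in\mathcal{M},\\
& \text{C2: } T_{ij} \le \tau_{ij}, && \forall i\in\mathcal{N},\ j\in\mathcal{M},\\
& \text{C3: } x_{ij}^{l}+x_{ij}^{e}+x_{ij}^{c} = 1, && \forall i\in\mathcal{N},\ j\in\mathcal{M},\\
& \text{C4: } x_{ij}^{l},\, x_{ij}^{e},\, x_{ij}^{c} \in \{0,1\}, && \forall i\in\mathcal{N},\ j\in\mathcal{M}.
\end{aligned}
\tag{16}
$$

The objective function of the optimization problem is to minimize the sum cost of the entire system in terms of time and energy through task offloading. The constraints C1 and C2 are upper bounds on energy and time consumption, respectively, where $E_i^{max}$ is the maximum energy budget of VR device $i$ per task and $\tau_{ij}$ is the completion deadline. The constraint C3 guarantees that each computation task is executed exactly once. Finally, the constraint C4 guarantees that the computation offloading decision variables are binary.

Since the objective function and all constraints are linear in the decision variables, the optimization problem (16) is an integer linear program whose optimal solution can be obtained using the branch-and-bound method [42].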
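A short derivation shows why problem (16), in the per-task form of C1 and C2 written above (an assumption of this formulation), is easy to solve exactly. Each decision triple $(x_{ij}^{l},x_{ij}^{e},x_{ij}^{c})$ appears only in $Z_{ij}$ and in constraints on the same pair $(i,j)$, and C3 with C4 selects exactly one place $k$, so $T_{ij}=t_{ij}^{k}$ and $E_{ij}=e_{ij}^{k}$. The problem therefore separates into independent per-task choices:

$$
\min_{\mathbf{x}} \sum_{i\in\mathcal{N}}\sum_{j\in\mathcal{M}} Z_{ij}
= \sum_{i\in\mathcal{N}}\sum_{j\in\mathcal{M}} \min_{k\in\mathcal{K}_{ij}} \left(\lambda_i^{t} t_{ij}^{k} + \lambda_i^{e} e_{ij}^{k}\right),
\qquad
\mathcal{K}_{ij} = \left\{k\in\{l,e,c\} : e_{ij}^{k}\le E_i^{max},\ t_{ij}^{k}\le\tau_{ij}\right\}.
$$

The problem is feasible exactly when every $\mathcal{K}_{ij}$ is non-empty, and checking at most $3NM$ candidates then yields the optimum. A general method such as branch and bound [42] remains necessary once constraints coupling different tasks are added, for example a shared edge-server capacity.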

4.4. Multi-Player Computation Offloading Algorithm

In this subsection, we present the design of our multi-player computation offloading algorithm, which provides a comprehensive procedure for efficiently deriving the optimal computation offloading decision of the constrained optimization problem in Equation (16).

First, all VR devices initialize their offloading decisions to $x_{ij}^{l}=1$, i.e., local execution. Then, each device uploads its computation tasks' requirements, $T_{ij} = (d_{ij}^{in}, d_{ij}^{out}, c_{ij}, \tau_{ij})$, and its local computational capability $f_i^{l}$ to the edge server. Afterwards, the edge server calculates the uplink and downlink data rates of each VR device based on the current number of VR players. In addition, the edge server finds the optimal execution place for each computation task (i.e., local, edge or cloud) by solving the optimization problem in Equation (16). Finally, each VR device receives the execution places of its computation tasks from the edge server, thereby minimizing the sum cost of the entire system in terms of time and energy.

Algorithm 1 provides the detailed process of the multi-player computation offloading algorithm, whose time complexity is $O(NM)$, where $N$ and $M$ denote the total number of VR devices and the number of tasks per device, respectively.
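The edge-server side of the procedure above can be sketched in Python as follows. This is a simplified illustration under stated assumptions, not the authors' implementation: it assumes the per-task form of C1 and C2 in (16), for which the problem separates per task, so an exhaustive choice among the three places replaces a general branch-and-bound solver; a single edge frequency and cloud frequency are shared by all devices; and the data rates are passed in rather than computed from Eqs. (1) and (2). The class and function names are hypothetical.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Task:
    d_in: float      # input data size d_ij^in (bits)
    d_out: float     # output data size d_ij^out (bits)
    cycles: float    # required CPU cycles c_ij
    deadline: float  # completion deadline tau_ij (s), constraint C2


@dataclass
class Device:
    f_local: float   # local CPU frequency f_i^l (Hz)
    eps: float       # local energy per CPU cycle (J/cycle)
    r_up: float      # uplink rate (bit/s), e.g. from Eq. (1)
    r_dw: float      # downlink rate (bit/s), e.g. from Eq. (2)
    p_tx: float      # transmit power (W)
    p_rx: float      # reception power (W)
    p_idle: float    # idle power while the task is processed remotely (W)
    e_max: float     # per-task energy bound E_i^max (J), constraint C1
    w_t: float = 0.5  # weight on time
    w_e: float = 0.5  # weight on energy
    tasks: list = field(default_factory=list)


def candidates(dev: Device, task: Task, f_edge: float, f_cloud: float,
               t_prop: float) -> dict:
    """(time, energy) of each execution place, following Eqs. (3)-(12)."""
    t_up = task.d_in / dev.r_up
    t_dw = task.d_out / dev.r_dw
    comm_energy = dev.p_tx * t_up + dev.p_rx * t_dw
    t_exe_e = task.cycles / f_edge
    t_exe_c = task.cycles / f_cloud
    return {
        "local": (task.cycles / dev.f_local, dev.eps * task.cycles),
        "edge": (t_up + t_exe_e + t_dw, comm_energy + dev.p_idle * t_exe_e),
        "cloud": (t_up + t_prop + t_exe_c + t_dw,
                  comm_energy + dev.p_idle * (t_prop + t_exe_c)),
    }


def offload_decisions(devices: list, f_edge: float, f_cloud: float,
                      t_prop: float) -> list:
    """Return one execution place per task for every device."""
    decisions = []
    for dev in devices:                        # loop over the N devices
        row = ["local"] * len(dev.tasks)       # step 1: initialize to local
        for j, task in enumerate(dev.tasks):   # loop over M tasks: O(NM) overall
            best_cost = math.inf
            for place, (t, e) in candidates(dev, task, f_edge, f_cloud, t_prop).items():
                if t <= task.deadline and e <= dev.e_max:  # constraints C2 and C1
                    cost = dev.w_t * t + dev.w_e * e        # Z_ij, Eq. (13)
                    if cost < best_cost:
                        row[j], best_cost = place, cost
            # If no place satisfies C1 and C2, the initial "local" decision is
            # kept; a full solver would report the task as infeasible instead.
        decisions.append(row)
    return decisions
```

With hypothetical parameters, a compute-heavy task whose local execution misses its deadline is sent to the faster cloud, while a light task stays on the device.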

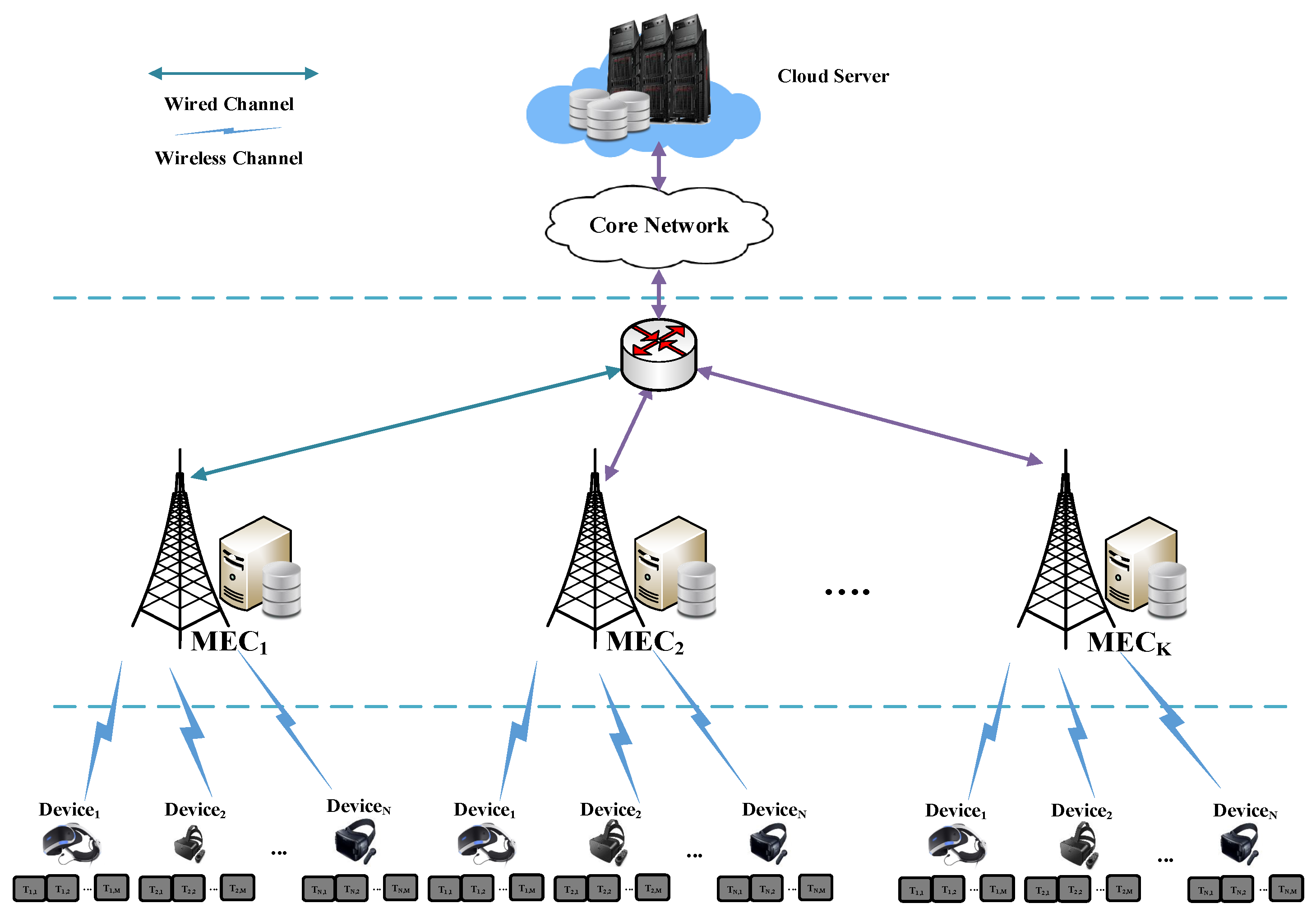

4.5. Multi-Level with Multi-Edge Computing Architecture

In this section, we describe the multi-level, multi-edge, multi-user, multi-task system architecture, which is composed of three levels, as shown in Figure 3. Starting from the bottom, the first level consists of a set of $N$ VR game devices, each of which has a set of $M$ independent computation tasks that need to be completed. At the next level, there is a set of mobile edge computing servers, across which the VR game devices are distributed and to which they are connected via wireless channels. In addition, a backbone router designed using SDN technology connects and controls the mobile edge computing servers via wired connections. Finally, the top level consists of a single cloud server, which provides additional resources and is connected to the backbone router through the core network.

Algorithm 1 Multi-Player Computation Offloading Algorithm

1: Initialization: Each VR device i initializes the offloading decision for its computation tasks with $x_{ij}^{l}=1$.

2: for each VR device i at a given time slot t do

3: for each computation task j do

4: Upload the computation tasks' requirements and the local computational capability to the edge server.

5: Calculate the uplink and downlink data rates for each VR device based on Equations (1) and (2).

6: Solve the optimization problem in Equation (16) and obtain the optimal computation offloading decision values for each computation task at the VR devices such that the sum cost of the entire system is minimized.

7: Send the offloading decision values to each VR device.

8: end for

9: end for

Regarding the simulation of this architecture, the following computation offloading issues should be handled:

Scalability Issue: In the multi-edge environment, the number of VR game devices becomes large, producing more computation tasks that need to be offloaded and executed remotely. At the same time, the mobile edge computing servers should provide scalable resources. Thus, an intelligent algorithm should be designed to scale the computation resources of an overloaded edge server using the resources of underloaded neighboring edge servers and the cloud server.

Load Balancing Issue: As mentioned above, the VR game devices are distributed across the edge computing servers. Due to the random distribution of users, some edge servers will be overloaded while others will be underloaded. This affects the computation offloading process and may lead to poor service quality and long delays due to network congestion. Therefore, it is important to propose an efficient algorithm that balances the load among the edge servers and improves the quality of service for VR game users.

Mobility Issue: In the multi-edge environment, each VR game device can move dynamically between edge servers within a computation offloading period. This is interesting and technically challenging because it affects the offloading decision and the execution location. Thus, an intelligent approach should be developed to determine the best execution place for each computation task such that the overall consumption in terms of time and energy is minimized.

Possible Output: Finally, if the scalability, load balancing and mobility issues are handled as mentioned above, the proposed model can operate and derive the computation offloading decision in an efficient manner. Further, the weighted sum cost of the entire system in terms of energy and time will be optimized.
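The scalability and load-balancing issues above are left open in this paper. Purely to illustrate the problem, the Python sketch below uses a simple greedy worst-fit rule, which is not the authors' method: each task is placed on the edge server with the most remaining capacity and spills over to the cloud when no edge server can host it. The function name `balance_load` and the single-number capacity model are hypothetical, and the sketch ignores mobility, communication cost and deadlines.

```python
import heapq


def balance_load(task_cycles: list, edge_capacity: list) -> list:
    """Greedy worst-fit placement with cloud spill-over.

    Returns, for each task, the index of the chosen edge server or "cloud".
    """
    # Max-heap on free capacity, emulated with negated values in heapq's min-heap.
    heap = [(-cap, sid) for sid, cap in enumerate(edge_capacity)]
    heapq.heapify(heap)
    placement = []
    for cycles in task_cycles:
        if heap and -heap[0][0] >= cycles:
            neg_free, sid = heap[0]  # server with the most free capacity
            # Reduce that server's free capacity and restore heap order.
            heapq.heapreplace(heap, (neg_free + cycles, sid))
            placement.append(sid)
        else:
            # Even the least-loaded edge server cannot host this task.
            placement.append("cloud")
    return placement
```

Choosing the server with the most free capacity spreads the load evenly and guarantees that a task goes to the cloud only when no edge server could host it.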

5. Simulation Results and Prototype Implementation

5.1. Simulation Results and Discussion

In our simulation settings, a Python-based simulator is used, running on a computer equipped with an Intel® Core™ i7-4770 CPU at 3.4 GHz and 8 GB of RAM under Windows 10 Professional 64-bit. We consider a multi-player multi-task edge-cloud computing system with a single cloud server, a single small base station,

VR devices and each device has

independent computation tasks that can be executed locally or offloaded and processed remotely at the available base station or cloud server. Each computation task has an input data size which is uniformly distributed within the range

MB while the output data size is assumed to be 20% of the input data size. In addition, the total number of CPU cycles required to complete each task is assigned to 1500 cycles/bit. The CPU computational capability of each VR device is uniformly distributed within range

GHz, while the CPU computational capability of edge server and cloud are set to 20 and 50 GHz, respectively. The local computing energy consumption per cycle follows a uniform distribution in the range

J/cycle. The other simulation settings employed in the simulations are summarized in

Table 2.
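The settings above can be turned into a per-task cost model for local, edge and cloud execution. The sketch below is a common textbook-style model assumed for illustration, and it may differ from the paper's own formulation. It fixes only the values stated in the text (1500 cycles/bit, 20 GHz edge, 50 GHz cloud, output equal to 20% of input). Everything else is a function argument, because those values come from Table 2 or from ranges that are not reproduced here: device CPU frequency, energy per cycle, link rates, transmit power, and an optional `extra_delay_s` for edge-to-cloud backhaul. Counting only transmission energy for offloaded tasks is also an assumption.

```python
# Values stated in the simulation settings
CYCLES_PER_BIT = 1500   # CPU cycles required per input bit
F_EDGE_HZ = 20e9        # edge server capability: 20 GHz
F_CLOUD_HZ = 50e9       # cloud capability: 50 GHz
OUTPUT_RATIO = 0.2      # output size = 20% of input size


def local_cost(bits, f_local_hz, energy_per_cycle_j):
    """(time in s, device energy in J) for executing a task on the VR device."""
    cycles = CYCLES_PER_BIT * bits
    return cycles / f_local_hz, cycles * energy_per_cycle_j


def remote_cost(bits, f_server_hz, uplink_bps, downlink_bps, tx_power_w,
                extra_delay_s=0.0):
    """(time in s, device energy in J) for offloading a task to a server.

    Time = uplink transfer + remote computation + result download
           + extra_delay_s (e.g. an assumed edge-to-cloud backhaul delay).
    Device energy counts only radio transmission (assumption).
    """
    cycles = CYCLES_PER_BIT * bits
    t_up = bits / uplink_bps
    t_down = OUTPUT_RATIO * bits / downlink_bps
    time_s = t_up + cycles / f_server_hz + t_down + extra_delay_s
    return time_s, tx_power_w * t_up


def weighted_cost(time_s, energy_j, w_time=0.5, w_energy=0.5):
    """Weighted sum of time and energy (the weights here are hypothetical)."""
    return w_time * time_s + w_energy * energy_j
```

Consider a hypothetical 1 MB (8 × 10^6 bit) task with 100 Mbit/s up- and downlinks and 0.5 W transmit power. Edge execution then takes 0.08 + 0.6 + 0.016 = 0.696 s. The same task on a 1 GHz device needs 1.2 × 10^10 cycles, or 12 s, which shows why offloading pays off at 1500 cycles/bit.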

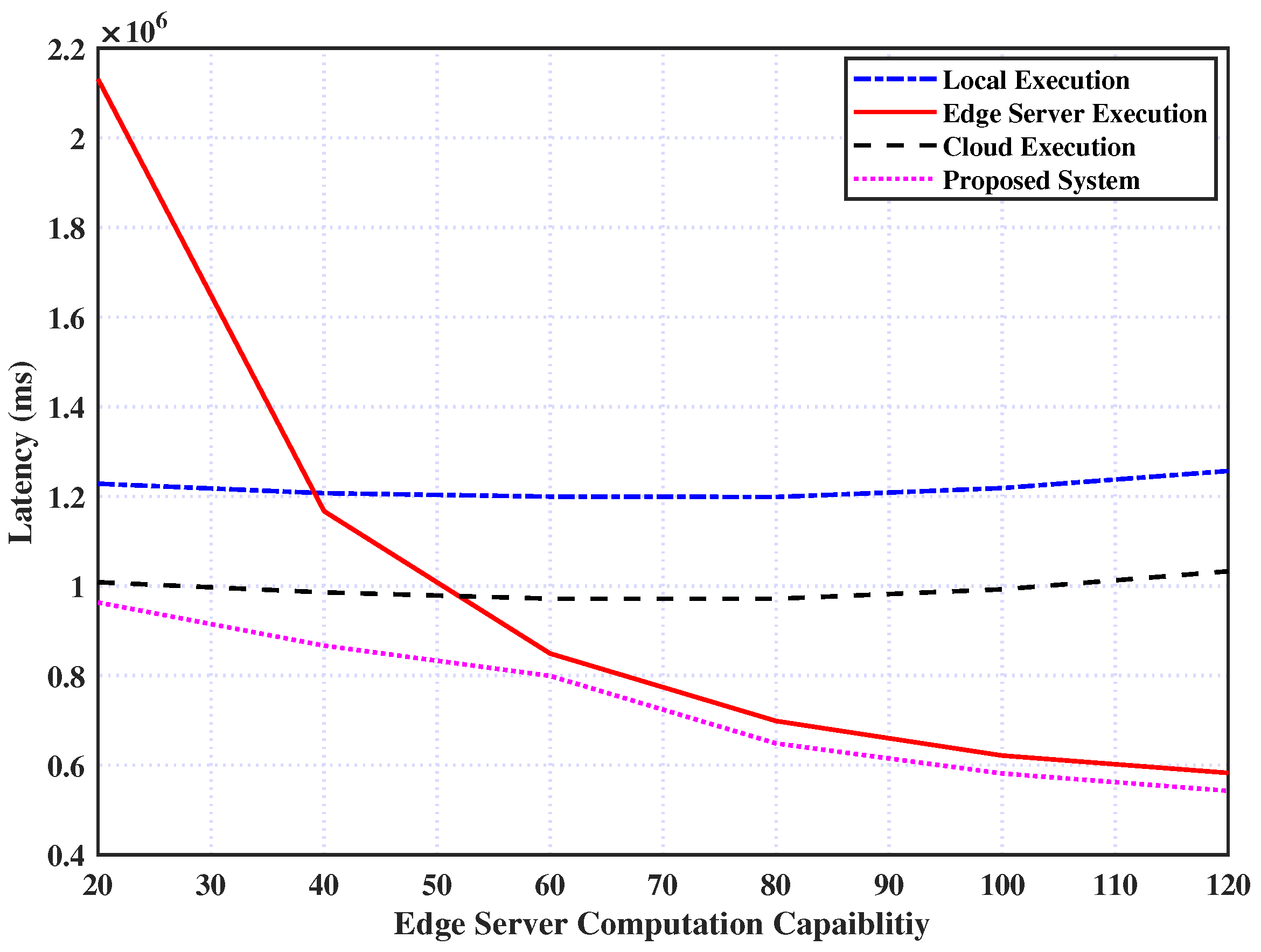

Figure 4 shows the energy consumption of executing the computation tasks for our proposed system and the three baseline scenarios under different values of the edge server computation capability. The figure shows that our proposed system achieves the lowest energy consumption. Specifically, the energy consumption of the edge execution scenario decreases as the edge server computation capability increases, and it eventually becomes lower than that of the cloud execution scenario. This is because energy consumption falls as the VR devices are allocated more edge resources. The cloud execution and local execution scenarios are unaffected because they do not depend on the edge server resources. In addition, our proposed system selects the best execution place (i.e., local, edge or cloud) for each task.

Figure 5 presents the processing time of executing the computation tasks for different edge server capabilities. The cloud execution and local execution policies are not affected by the edge server capability, whereas the execution time of our proposed system and of the edge execution policy gradually decreases as the edge server capability increases. This is because the VR devices are allocated more computing resources, which shortens the processing latency.

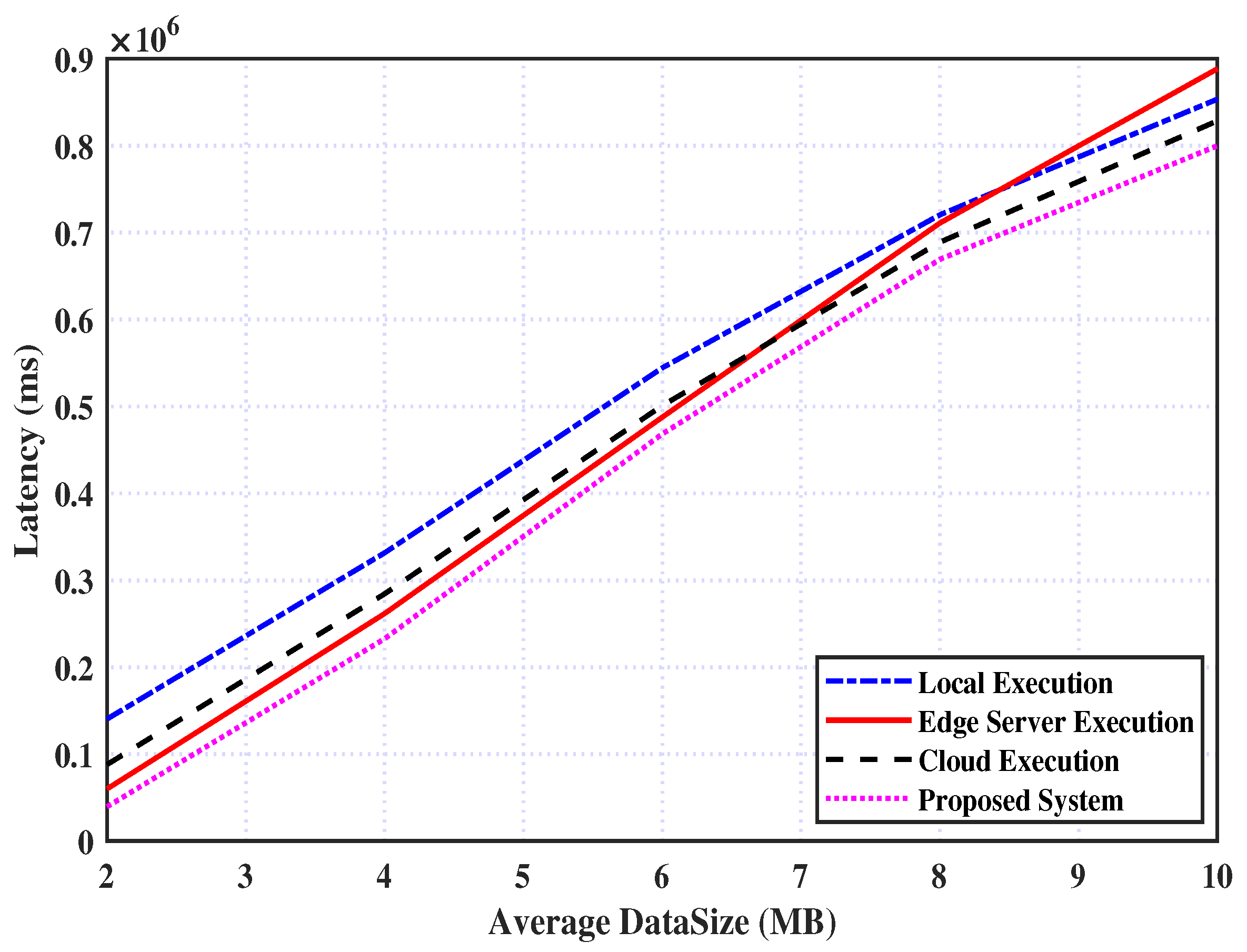

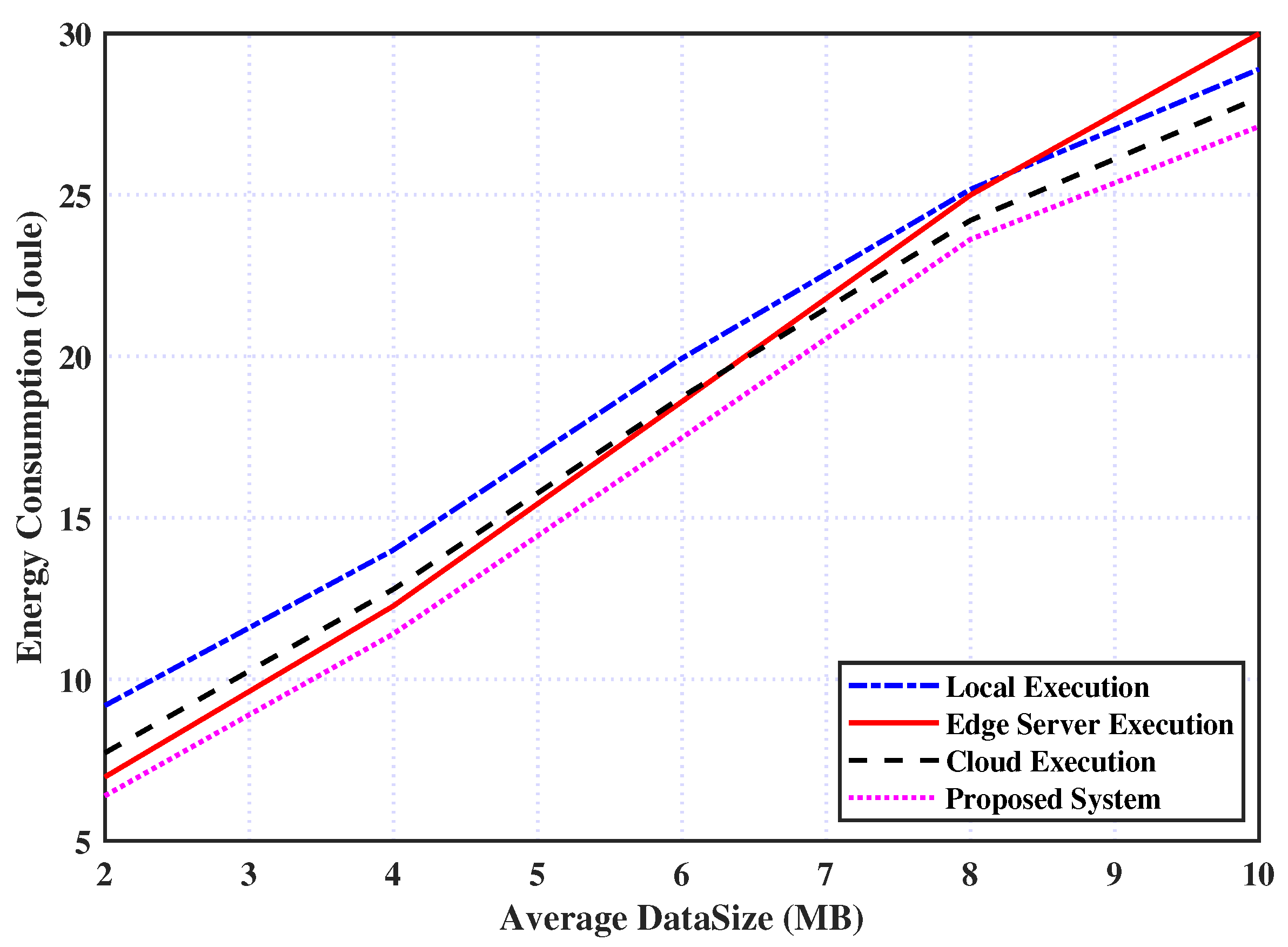

The processing time and energy consumption of executing the computation tasks for different values of the input data size (the input data size is uniformly distributed within the range (0, i) MB, where i is the value on the x-axis) are shown in Figure 6 and Figure 7, respectively. The figures show that our proposed system achieves better performance in terms of cost (time and energy) and maintains a lower overhead than the other policies. In addition, the edge execution policy exceeds local execution as the input data size increases (i.e., data size > 8 MB). This is because our proposed model can select appropriate tasks to be executed remotely (i.e., at the edge or cloud server) while rejecting the offloading of others in an optimal way, which minimizes the sum cost of the entire system.
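The advantage of choosing the execution place per task, rather than sending every task to one place, can be shown with a small worked sketch. It compares the total cost of the three fixed policies with a per-task choice of the cheapest place. This exhaustive per-task minimum is a simplification assumed for illustration. It holds only when tasks do not compete for shared resources. In the multi-player setting, edge capacity and bandwidth are shared, which is why the paper designs its own offloading algorithm. The function name `policy_costs` and the cost values are hypothetical.

```python
from typing import Dict, List, Tuple

PLACES = ("local", "edge", "cloud")


def policy_costs(task_costs: List[Dict[str, float]]
                 ) -> Tuple[Dict[str, float], List[str]]:
    """Total cost of each fixed policy versus per-task best placement.

    task_costs[i][place] is the weighted (time + energy) cost of running
    task i at `place`. Returns the totals and the per-task choices.
    """
    totals = {p: sum(c[p] for c in task_costs) for p in PLACES}
    # Each task independently goes to its own cheapest place
    choice = [min(PLACES, key=lambda p: c[p]) for c in task_costs]
    totals["per_task_best"] = sum(c[p] for c, p in zip(task_costs, choice))
    return totals, choice
```

Take a small task that is cheapest locally (costs 1/2/3 for local/edge/cloud) and a large task that is cheapest at the edge (10/4/5). The fixed policies then cost 11, 6 and 8, while the per-task choice costs 5. By construction, the per-task choice is never worse than any single fixed policy.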

5.2. Prototype Implementation and Measurements

The network segment includes hardware and software such as NI USRP boards, which make it possible to efficiently study, analyze and emulate LTE/LTE-A networks, 5G New Radio and other wireless technologies. It also includes the GNU Radio, Amarisoft and srsLTE software packages, which make it possible to study and test network protocols, signaling technologies and access to the radio channel. This experiment is conducted with the OpenAirInterface software package for virtualizing mobile communication components. In addition, a virtual environment is deployed in which vEPC and Amarisoft are installed as containers and virtual machines for convenient infrastructure management. This makes it possible to emulate virtualized mobile core network components (HSS, vSGW, vPGW, vMME).

The developed prototype consists of three parts. The first part is the transport network, a logical transport network that links the network core and the radio access network (RAN). It is based on SDN technology, which allows all nodes to be managed flexibly and quickly, and its configuration can be changed automatically through an API depending on the requirements. The second part is the core network, which consists of a virtualized segment. In this part, OpenAirInterface, srsLTE, Amarisoft and OpenEPC can be used, and each element of this zone can be represented as a Docker container or a virtual machine. The last part is the radio access network, an area consisting of NI USRP-2954R software-defined radio (SDR) systems on which LTE, New Radio sub-6, LoRa, NB-IoT and other access networks can be deployed. The radio interfaces are organized using OpenAirInterface, GNU Radio and srsENB. The deployed 5G NSA network model includes SDR hardware modules and a set of Docker containers into which the network elements are packed. A Docker image packages a service together with the dependencies and libraries required to run it; for example, the container for the HSS element includes hss.h, security.h, etc. The containers are installed either each in a separate VM or several in one VM. It is important to note that the N26 interface is a key interface between the MME (EPC) and the AMF, serving as a signaling exchange point for UE radio control. Therefore, these two elements must be placed within a suitable distance of each other to meet synchronization requirements. In our case, SDR is used for the network hardware devices. The SDRs can be controlled with a wide range of tools used in our laboratory for real-time or offline/post-processing. Examples are C++ with the open-source USRP Hardware Driver (UHD) API; open-source GNU Radio (Python, NumPy, SciPy, Matplotlib, etc.); LabVIEW™ (National Instruments); MATLAB™ (The MathWorks); and application-specific cellular software (OpenBTS, OpenBTS-UMTS, OpenLTE, srsLTE, Amarisoft).

As shown in Figure 8, the following devices were used in our prototype to realize the proposed system:

Edge hosting:

- CPU: Intel(R) Xeon(R) CPU E5-2620 v4 @ 2.10 GHz;
- RAM: 64 GB;
- GPU: GeForce GT 1030 per node.

Network node:

- Lanner x86;
- CPU: Intel(R) Xeon(R) CPU E5-2620 v4 @ 2.10 GHz;
- RAM: 32 GB;
- Interface: 10xSFP+;
- Virtual interfaces: VLAN, QinQ.

To build a rendering cluster for VR applications, we used several edge hosts interconnected via 10GbE interfaces. A mid-range graphics card, the GeForce GT 1030, was used as the rendering core.

Using clusters with different numbers of nodes, we measured the average energy consumption of a node per Mbps of information transfer. After rendering, the image is transmitted to the end device. This architecture allows us to study the average content delivery delay as a function of the number of edge hosts in the cluster.
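The "energy per Mbps" metric can be computed from paired power and throughput samples. The sketch below assumes, without confirmation from the source, that power (W) and throughput (Mbit/s) were sampled at the same equally spaced instants. Under that assumption, the ratio of the means equals total energy divided by total data. Because 1 W = 1 J/s, the result is in joules per megabit. The function name `energy_per_mbit` and the sample values are hypothetical.

```python
from statistics import mean
from typing import Sequence


def energy_per_mbit(power_w: Sequence[float],
                    throughput_mbps: Sequence[float]) -> float:
    """Average energy (J) a node spends per megabit transferred.

    power_w[i] and throughput_mbps[i] are samples taken at the same,
    equally spaced instants. Since W = J/s and Mbps = Mbit/s, W/Mbps = J/Mbit.
    """
    if not power_w or len(power_w) != len(throughput_mbps):
        raise ValueError("need equally sized, non-empty sample lists")
    # Ratio of means (total energy / total data) rather than mean of ratios,
    # so that near-idle samples with tiny throughput do not dominate.
    return mean(power_w) / mean(throughput_mbps)
```

With the hypothetical samples of 100, 120 and 110 W at 50, 60 and 55 Mbit/s, the node spends 110 / 55 = 2 J per megabit. Repeating this per cluster size gives one point per configuration.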

During the experiments, the following results were obtained from the edge computing clusters:

- the average power consumption of one edge computing node per Mbps of information transfer;
- the average delay between the end device and the VR application server, depending on the number of hosts in the rendering cluster.

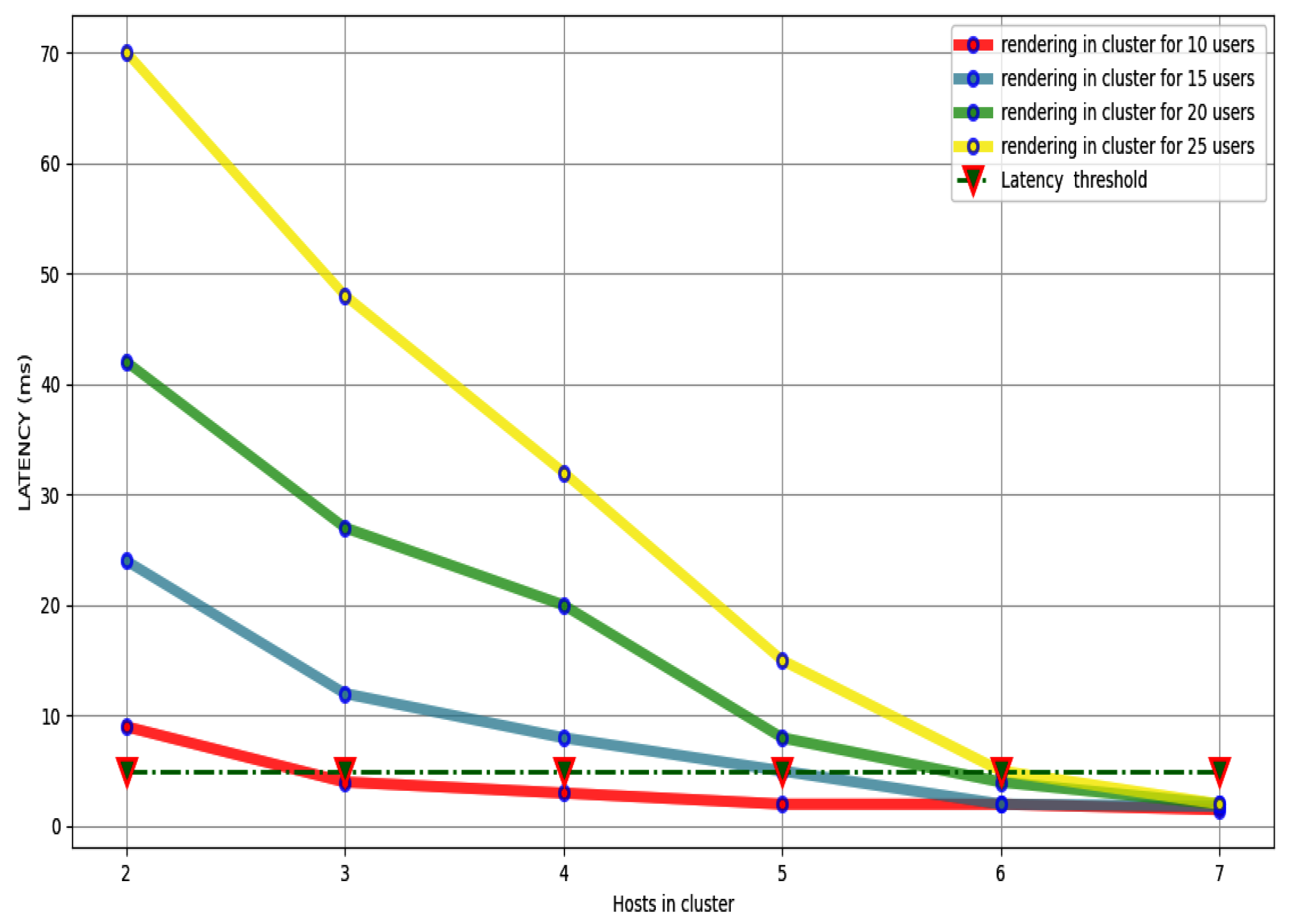

In general, deploying edge computing at base stations or near network nodes increases power consumption, and the consumption grows several-fold as more users access the node (Figure 9). However, the price of electricity falls annually, while the performance of the electronic component base increases.

To improve the QoS of the VR application, the energy consumption problem has to be solved. Figure 10 shows the dependence of the response delay on the number of devices in the VR rendering cluster. With a larger cluster, the VR application content is generated faster owing to computing parallelism.
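One standard way to reason about why a larger rendering cluster reduces delay, with diminishing returns, is Amdahl's law. Here it is used as a swapped-in explanatory model, not the authors' analysis of Figure 10. The parallelizable fraction and the optional per-node coordination overhead in the sketch are assumptions, not measured values. The function name `cluster_delay` is hypothetical.

```python
def cluster_delay(t_single: float, parallel_fraction: float, nodes: int,
                  per_node_overhead: float = 0.0) -> float:
    """Estimated response delay of a rendering cluster with `nodes` hosts.

    Amdahl's law: only `parallel_fraction` of the single-node work speeds up
    with more nodes; an optional coordination overhead (same units as
    t_single) grows linearly with the number of extra nodes.
    """
    if not 0.0 <= parallel_fraction <= 1.0 or nodes < 1:
        raise ValueError("invalid parameters")
    serial = (1.0 - parallel_fraction) * t_single
    parallel = parallel_fraction * t_single / nodes
    return serial + parallel + per_node_overhead * (nodes - 1)
```

Assume 90% of a 100 ms rendering job parallelizes. The delay then drops from 100 ms on one node to 55 ms on two and 32.5 ms on four, but it can never fall below the 10 ms serial part. A non-zero per-node overhead makes very large clusters counterproductive.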

6. Conclusions

In this study, we proposed an efficient multi-player computation offloading approach for VR edge-cloud computing systems. First, computation offloading is formulated as an integer optimization problem whose objective is to minimize the weighted cost of the entire system in terms of time and energy. The model is latency- and energy-aware: it selects the execution place for the VR computing tasks so as to achieve the best energy and latency efficiency. The proposed system is integrated to achieve the best VR user experience. In addition, a low-complexity multi-player computation offloading algorithm is designed to derive the optimal computation offloading decision. Finally, the system is simulated in a reliable environment under various scenarios. The simulations validate our proposed model and show that the network latency and energy consumption can be reduced by up to 26.2%, 27.2% and 10.9%, 12.2% in comparison with edge and cloud execution, respectively.

In ongoing and future work, a new effective compression layer will be introduced that uses an efficient algorithm to compress the offloading data when bandwidth is low. Hence, the communication time and energy will be reduced and the performance of the entire system will be enhanced. In addition, a more general case with multiple edge servers will be considered. This case will address the mobility issue, in which each mobile device may move dynamically between servers within a computation offloading period; this is interesting and technically challenging.
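The compression layer envisioned above rests on a simple trade-off: compress only when the uplink time saved exceeds the time spent compressing. The sketch below illustrates that rule with Python's standard `zlib` module, chosen here as an assumption. The paper does not name an algorithm. The parameter `compress_bps` (the device's compression throughput in bit/s) and the function name `offload_payload` are hypothetical, and decompression time at the server is ignored.

```python
import zlib
from typing import Tuple


def offload_payload(data: bytes, uplink_bps: float, compress_bps: float,
                    level: int = 6) -> Tuple[bytes, bool]:
    """Return (payload to send, whether it was compressed).

    Compression pays off when the uplink time saved exceeds the time spent
    compressing; server-side decompression time is ignored (assumption).
    """
    compressed = zlib.compress(data, level)
    t_raw = len(data) * 8 / uplink_bps
    t_compressed = (len(data) * 8 / compress_bps
                    + len(compressed) * 8 / uplink_bps)
    if t_compressed < t_raw:
        return compressed, True
    return data, False
```

On a hypothetical 1 Mbit/s link, highly redundant data is worth compressing. On a very fast link with slow compression, the raw data is sent instead. This matches the intuition that compression helps mainly in the low-bandwidth state.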