1. Introduction

Steel structures form the backbone of modern infrastructure, with bolted joints serving as critical load-bearing components. The integrity of these joints directly impacts structural safety, making bolt loosening detection a paramount concern in structural health monitoring [1]. Traditional inspection methods rely on manual techniques such as torque wrenches or ultrasonic testing, which are labor-intensive, time-consuming, and often impractical for large-scale structures [2]. Recent advances in computer vision and deep learning have opened new avenues for automated bolt inspection, yet existing approaches face significant challenges in handling the 3D nature of steel joints and the precise determination of loosening states.

Current vision-based methods primarily employ object detection frameworks like the YOLO series (YOLOv3 to YOLOv8) to identify bolts in 2D images [

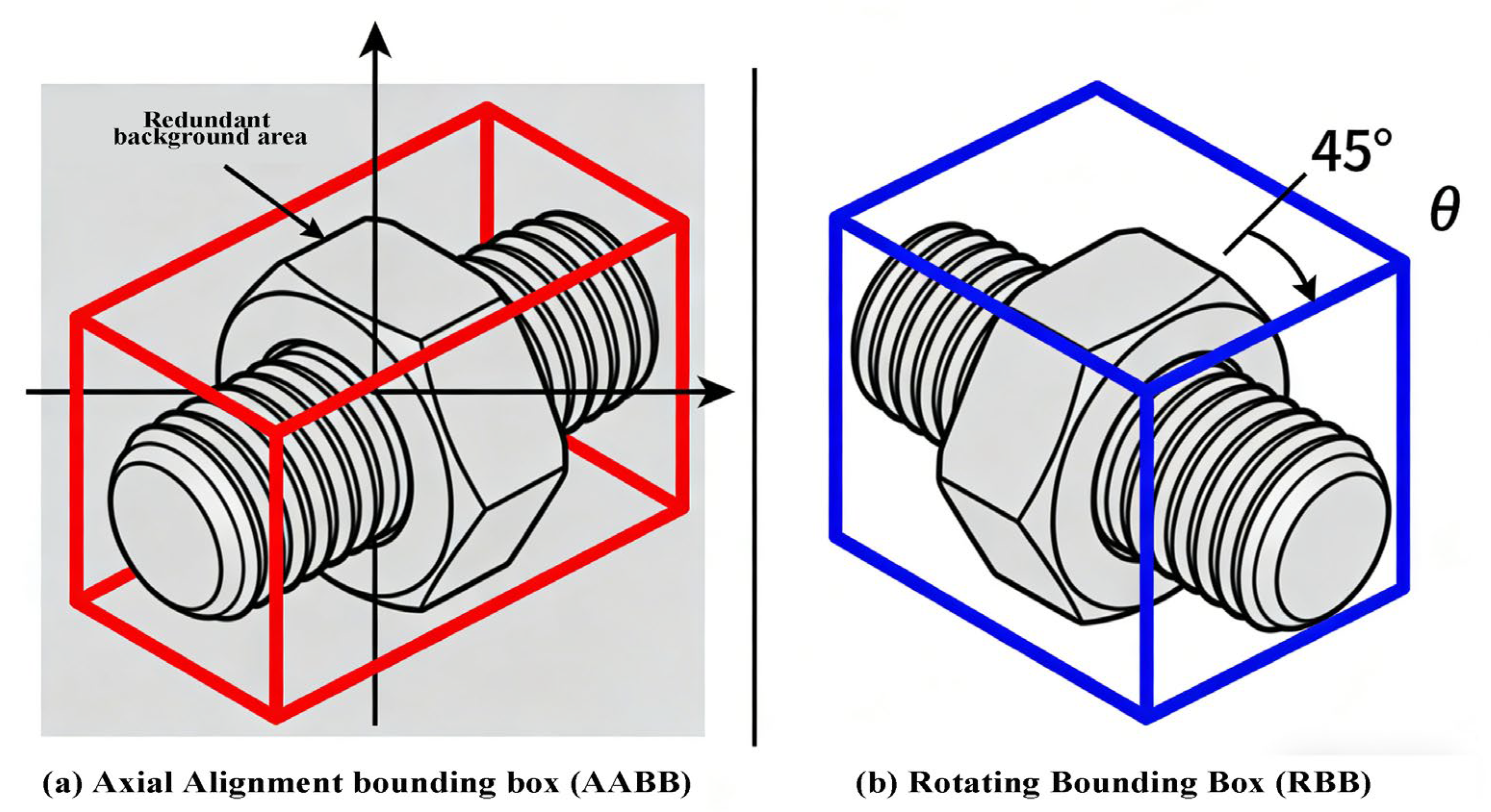

3]. While these models achieve high detection rates, they typically use axis-aligned bounding boxes that cannot accurately represent the orientation of bolts in 3D space. This limitation becomes particularly problematic when assessing loosening, as the rotational state of a bolt is a key indicator of its tightness. Some studies have attempted to address this through rotation-aware detection heads [

4], but these approaches often struggle with the small size and high occlusion typical of bolted joints in complex steel structures.
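The limitation of axis-aligned boxes can be illustrated with a minimal sketch. It assumes a rotated box described by center, width, height, and angle; the function names and the numeric values are hypothetical and are not taken from the paper's implementation. Two boxes rotated by +30° and −30° collapse to the same axis-aligned box, so the rotation cannot be recovered from it.

```python
import math

def rotated_box_corners(xc, yc, w, h, theta_deg):
    """Corners of a w x h box centred at (xc, yc), rotated by theta_deg."""
    t = math.radians(theta_deg)
    c, s = math.cos(t), math.sin(t)
    half = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    return [(xc + dx * c - dy * s, yc + dx * s + dy * c) for dx, dy in half]

def enclosing_axis_aligned_box(corners):
    """Smallest axis-aligned box (x_min, y_min, x_max, y_max) around the corners."""
    xs = [p[0] for p in corners]
    ys = [p[1] for p in corners]
    return min(xs), min(ys), max(xs), max(ys)

# Illustrative values only: same box, opposite rotations.
box_plus = enclosing_axis_aligned_box(rotated_box_corners(100, 100, 40, 20, 30))
box_minus = enclosing_axis_aligned_box(rotated_box_corners(100, 100, 40, 20, -30))

# Both rotations yield the same axis-aligned box: the angle information is gone.
same_box = all(abs(a - b) < 1e-9 for a, b in zip(box_plus, box_minus))
print(box_plus, box_minus, same_box)
```

A detector that regresses the angle explicitly keeps this information, which is the motivation for the angle-aware head discussed in this paper.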

The evolution of YOLO models reflects continuous improvements in object detection architectures. YOLOv1 pioneered real-time detection by framing it as a regression problem, while YOLOv2 introduced anchor boxes and batch normalization for better localization. YOLOv3 incorporated multi-scale predictions through feature pyramid networks, significantly enhancing small object detection—a crucial capability for bolt inspection. YOLOv4 optimized training strategies with advanced data augmentation and modified feature aggregation. YOLOv5 introduced a more modular design with improved training efficiency, and YOLOv6-YOLOv8 further refined the backbone-neck-head architecture with enhanced feature representation. Most recently, YOLOv9 introduced the Programmable Gradient Information (PGI) mechanism to mitigate information loss in deep networks, theoretically making it better suited for detecting small, occluded objects like bolts in complex environments.

Selection Justification of YOLOv9. While newer versions such as YOLOv10 have been released subsequent to our study initiation, the selection of YOLOv9 for this specific application is based on several technical and practical considerations. First and foremost, YOLOv9’s Programmable Gradient Information (PGI) mechanism is particularly advantageous for detecting small, occluded objects like bolts in complex steel structures, as it effectively mitigates the information loss problem in deep networks—a critical challenge for bolt feature preservation. Second, YOLOv9 provides a well-balanced architecture that maintains high detection accuracy while offering reasonable computational efficiency, which is essential for potential real-time field deployment. Third, it is important to emphasize that there is no single YOLO variant that consistently provides the most reliable accuracy across all tasks and domains; model selection must be tailored to specific application requirements, dataset characteristics, and deployment constraints.

Recent studies employing YOLOv10, such as safety helmet monitoring on construction sites [5] and rebar counting using UAV imagery [6], demonstrate its effectiveness in different construction-related applications. However, these applications differ significantly from bolt loosening detection in several key aspects: (1) target scale (helmets and rebars are generally larger and more distinct than bolt heads), (2) environmental complexity (bolt joints often involve higher occlusion and more challenging lighting conditions), and (3) precision requirements (bolt loosening assessment demands sub-degree angle estimation, not just binary detection). The PGI mechanism in YOLOv9 is specifically designed to preserve gradient information flow for small object detection, making it theoretically better suited for our task. Furthermore, our extensive ablation studies (Section 5.3) demonstrate that the proposed angle-aware modifications to YOLOv9 achieve state-of-the-art performance for bolt loosening detection, with 93.6% detection accuracy and 5.2° angle estimation error, validating our architectural choice.

However, despite these architectural advances, existing YOLO-based approaches for structural inspection remain limited in three key aspects: (1) they typically output axis-aligned bounding boxes that cannot accurately represent bolt orientation in 3D space; (2) they lack dedicated mechanisms for precise rotation angle estimation, which is essential for quantifying bolt loosening severity; and (3) they operate primarily in 2D image space without integrating multi-view geometric constraints for accurate 3D localization. These limitations motivate our development of an angle-aware YOLOv9 variant integrated with multi-view fusion techniques specifically tailored for bolt loosening detection and 3D localization in steel structures.

Compared with existing bolt-loosening detection methods and YOLOv8/YOLOv9-based approaches, the novelty of our framework lies in the systematic integration of three key components: (1) an angle-aware detection head that regresses both bounding boxes and rotation angles for quantitative loosening assessment, addressing the limitation of axis-aligned bounding boxes in standard YOLO models; (2) a multi-view fusion pipeline that leverages epipolar geometry to reconstruct accurate 3D bolt positions and orientations without requiring pre-measured, fixed camera poses in a global coordinate system; and (3) a unified 3D loosening metric that combines positional displacement and angular deviation for comprehensive bolt condition evaluation. While individual elements such as rotated object detection or multi-view reconstruction exist in isolation, our work is the first to integrate them into a cohesive framework specifically designed for structural health monitoring of bolted joints in steel structures.

Multi-view imaging has shown promise in overcoming single-view limitations by combining information from multiple perspectives [7,8]. When integrated with 3D reconstruction techniques like Structure from Motion (SfM) [9], these methods can provide spatial context that is essential for accurate bolt localization. However, existing multi-view systems for structural inspection either lack the precision needed for loosening detection or require impractical setup conditions that limit their field applicability.

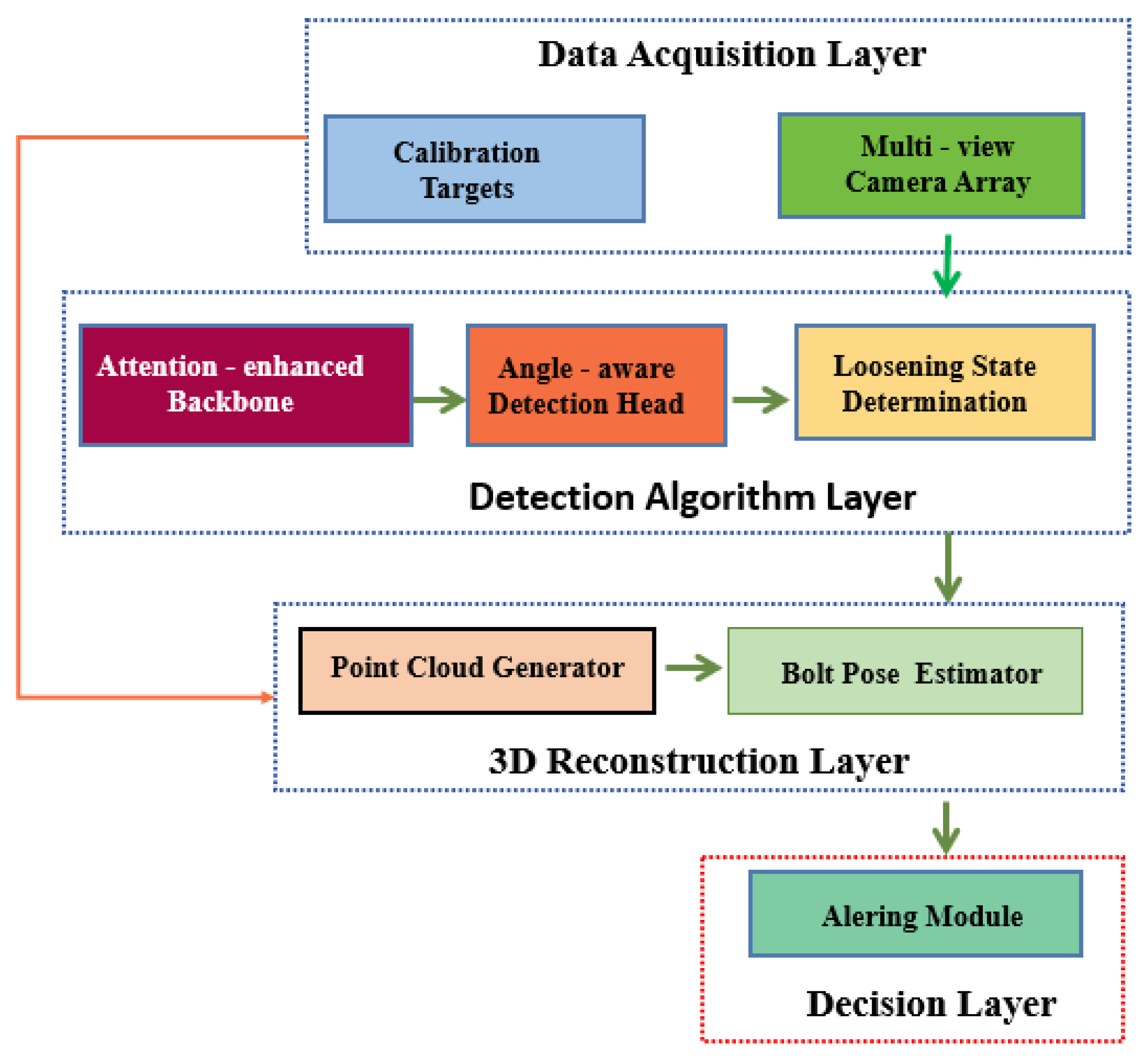

This paper presents a novel framework that addresses these challenges through three key innovations. First, we develop an angle-aware improved YOLOv9 model that simultaneously detects bolts and estimates their rotation angles, enabling direct assessment of loosening states. The model incorporates attention mechanisms and enhanced feature aggregation to improve detection accuracy under challenging conditions. Second, we introduce a multi-view fusion algorithm that combines 2D detections from multiple cameras with epipolar geometry constraints, achieving accurate 3D localization without requiring pre-measured, fixed camera poses in the world coordinate system. Third, we establish a comprehensive evaluation protocol that considers both detection accuracy and spatial localization precision, providing meaningful metrics for real-world deployment.

The proposed method offers significant advantages over existing approaches. Unlike traditional manual inspections, it provides an automated, quantitative assessment of bolt conditions with minimal human intervention. Compared to single-view computer vision methods, it achieves higher accuracy in both detection and loosening assessment by leveraging multi-view information. The integration of deep learning with 3D reconstruction creates a robust system that can handle the complex geometries typical of steel structures while maintaining computational efficiency suitable for field deployment.

The remainder of this paper is organized as follows: Section 2 reviews related work in bolt detection, multi-view fusion, and structural health monitoring. Section 3 presents the theoretical foundations of our approach, including multi-view geometry and angle-aware detection. Section 4 details our improved YOLOv9 architecture and the complete 3D localization pipeline. Section 5 presents experimental results on both synthetic and real-world datasets, followed by discussion and future work directions in Section 6. The paper concludes with a summary of contributions and potential applications.

2. Related Work

The detection and localization of bolt loosening in steel structures has been approached from various perspectives in computer vision and structural health monitoring. Existing methods can be broadly categorized into three groups: traditional image processing techniques, deep learning-based detection approaches, and multi-view 3D reconstruction methods.

2.1. Traditional Image Processing Approaches

Early attempts at automated bolt inspection relied on handcrafted features and classical image processing techniques. Methods based on edge detection [10] and template matching [11] were commonly used to identify bolt positions in 2D images. These approaches demonstrated reasonable performance in controlled environments with simple backgrounds, but struggled with varying lighting conditions and occlusions typical of real-world steel structures. The introduction of Hough transform-based circle detection [12] improved bolt head localization, yet these methods lacked the capability to assess bolt tightness or orientation.

2.2. Deep Learning-Based Detection

The advent of deep learning revolutionized bolt detection through convolutional neural networks. The YOLO series, particularly YOLOv8 [13], has been widely adopted for its balance of speed and accuracy in industrial inspection tasks. Recent improvements in YOLOv9 [14] introduced advanced feature extraction capabilities through its Programmable Gradient Information (PGI) mechanism, making it particularly suitable for small object detection like bolts. It is worth noting that while YOLOv10 has recently been introduced and shows promising results in certain applications such as safety helmet detection [15], the choice of YOLOv9 for our specific task is based on its proven effectiveness for small object detection and its well-established architecture that facilitates the integration of our proposed angle-aware modifications. The PGI mechanism in YOLOv9 addresses the information bottleneck problem that is particularly critical for detecting small bolts in occluded environments, and its modular design allows for effective incorporation of coordinate attention mechanisms and multi-scale feature aggregation, which are key components of our improved architecture.

Several studies have built upon these foundations, such as the CSEANet [16], which enhanced feature aggregation for bolt defect detection in railway bridges. However, these approaches primarily focus on binary classification (tight/loose) without quantifying the degree of loosening through rotation angle estimation [17,18,19]. More recently, researchers have extended deep learning models to explicitly estimate bolt orientation angles, which is crucial for quantifying loosening severity. Transformer-based architectures, such as DETR variants with rotational query mechanisms, have shown promising results in predicting oriented bounding boxes for small mechanical parts [20]. Additionally, refined angle encoding schemes, including Gaussian angle representation and circular smooth loss, have been proposed to mitigate boundary discontinuity issues in rotation regression [21,22]. These advances provide a more robust foundation for angle-aware bolt detection, though their integration with multi-view 3D localization in structural health monitoring remains underexplored.
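The boundary discontinuity mentioned above can be seen in a few lines. The sketch below is only an illustration of the problem, not of any cited encoding scheme; the function name and the default period of 360° are assumptions (rotated-box detectors often use a 180° or 90° period instead). A naive absolute difference treats 359° and 1° as almost opposite, while a periodic difference recognizes that they are 2° apart.

```python
def angular_difference(pred_deg, true_deg, period=360.0):
    """Smallest absolute difference between two angles on a circle of the given period."""
    d = (pred_deg - true_deg) % period
    return min(d, period - d)

# A prediction of 359 deg against a ground truth of 1 deg.
naive = abs(359.0 - 1.0)                   # large penalty across the boundary
wrapped = angular_difference(359.0, 1.0)   # the two angles are actually close
print(naive, wrapped)
```

A regression loss built on the naive difference penalizes near-correct predictions close to the boundary, which is the issue that periodic or smoothed angle encodings are meant to avoid.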

2.3. Multi-View 3D Reconstruction

Multi-view systems have emerged as a promising solution for overcoming the limitations of single-view detection. Structure from Motion (SfM) techniques [23] have been adapted for steel structure inspection, enabling 3D point cloud generation from multiple images. The synchronized identification system developed by [24] demonstrated the potential of combining visual perception with panoramic reconstruction for defect localization. Epipolar geometry-based methods [25] have shown particular promise in feature matching across views, though their application to bolt loosening detection remains limited by the challenges of small object registration and orientation estimation [26,27].
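To make the role of epipolar geometry in cross-view matching concrete, the following sketch checks the standard epipolar constraint x2ᵀ F x1 = 0 for a synthetic two-camera setup. The intrinsics, relative pose, and 3D point are invented for illustration, and the helper names are hypothetical; this is not the matching procedure of any cited method.

```python
import numpy as np

def skew(t):
    """Cross-product matrix [t]_x such that skew(t) @ a == np.cross(t, a)."""
    return np.array([[0.0, -t[2], t[1]], [t[2], 0.0, -t[0]], [-t[1], t[0], 0.0]])

def fundamental_from_pose(K1, K2, R, t):
    """F with x2^T F x1 = 0, when camera 2 sees a camera-1 point X1 at R @ X1 + t."""
    E = skew(t) @ R  # essential matrix
    return np.linalg.inv(K2).T @ E @ np.linalg.inv(K1)

def epipolar_residual(F, x1, x2):
    """Algebraic residual x2^T F x1 for pixel coordinates x1 and x2."""
    return float(np.append(x2, 1.0) @ F @ np.append(x1, 1.0))

# Assumed intrinsics and relative pose (illustrative values only).
K = np.array([[1000.0, 0.0, 320.0], [0.0, 1000.0, 240.0], [0.0, 0.0, 1.0]])
a = np.radians(10.0)
R = np.array([[np.cos(a), 0.0, np.sin(a)], [0.0, 1.0, 0.0], [-np.sin(a), 0.0, np.cos(a)]])
t = np.array([-0.3, 0.0, 0.02])
F = fundamental_from_pose(K, K, R, t)

# Project one synthetic 3D point into both views.
X1 = np.array([0.12, -0.04, 1.5])
X2 = R @ X1 + t
x1 = (K @ X1)[:2] / X1[2]
x2 = (K @ X2)[:2] / X2[2]

res_match = epipolar_residual(F, x1, x2)                              # correct pair
res_mismatch = epipolar_residual(F, x1, x2 + np.array([0.0, 25.0]))   # wrong candidate
print(res_match, res_mismatch)
```

A correct correspondence gives a residual near zero, while a candidate 25 pixels off the epipolar line does not, so the residual can be used to reject implausible detection pairs before triangulation.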

2.4. Hybrid Approaches

Recent works have begun exploring combinations of these techniques. The ERBW-bolt model [28] improved upon YOLOv8 for bolt detection in complex backgrounds, while [29] integrated deep learning with traditional image matching for loosening assessment. These hybrid methods represent important steps toward comprehensive bolt inspection systems, yet they still lack the integrated angle estimation and precise 3D localization capabilities needed for practical structural health monitoring applications [30,31].

To provide a clear overview and comparison of the four methodological categories discussed above, Table 1 summarizes their key characteristics, advantages, and limitations specifically in the context of bolt loosening detection and localization.

The systematic comparison in Table 1 reveals three critical gaps in existing bolt loosening detection methodologies that directly motivate our proposed approach: (1) Lack of integrated angle-aware detection: While deep learning methods (particularly YOLO variants) achieve high bolt detection rates, they predominantly output axis-aligned bounding boxes that cannot capture the rotational state essential for loosening assessment. Although rotation-aware detectors exist, they are not specifically optimized for bolts in structural health monitoring contexts. (2) Disconnection between 2D detection and 3D localization: Multi-view reconstruction provides accurate 3D positions but typically operates as a separate stage after detection, lacking tight integration with the detection process and not incorporating bolt-specific rotation estimation. (3) Absence of a unified framework for quantitative loosening assessment: Existing hybrid approaches combine elements from different categories but maintain fragmented pipelines where detection, angle estimation, and 3D reconstruction remain separate modules, preventing end-to-end optimization and comprehensive metric development.

These identified gaps necessitate a new approach that integrates rather than concatenates the key capabilities required for practical bolt loosening inspection. Our proposed framework directly addresses these limitations by developing: (i) an improved YOLOv9 with an angle-aware detection head for simultaneous bolt identification and rotation estimation, (ii) a multi-view fusion pipeline that geometrically integrates 2D detections from multiple views to reconstruct accurate 3D bolt poses, and (iii) a unified loosening metric that combines both spatial displacement and angular deviation for comprehensive assessment. This integrated design overcomes the fragmentation of existing methods and provides a cohesive solution specifically tailored for structural health monitoring applications.

To clearly delineate the novelty of our approach against the state-of-the-art, we emphasize that while YOLOv8 and YOLOv9 provide efficient bolt detection, they are limited to axis-aligned bounding boxes that cannot capture rotational states essential for loosening assessment. Similarly, existing rotated object detectors operate primarily in 2D image space without 3D spatial context. Multi-view 3D reconstruction methods, while providing spatial information, typically require separate detection stages and do not incorporate continuous angle regression for loosening quantification. Our framework uniquely integrates an angle-aware YOLOv9 detector, epipolar geometry-based multi-view fusion, and a 3D-aware loosening metric into a single, end-to-end system. This integration enables simultaneous 2D detection, rotation angle estimation, 3D localization, and quantitative loosening assessment—a capability not achieved by any existing method in the literature.

The proposed method advances beyond these existing approaches by simultaneously addressing three critical limitations: (1) the inability of current detection models to estimate precise rotation angles for loosening quantification, (2) the lack of robust multi-view fusion techniques for 3D bolt localization in complex joint configurations, and (3) the absence of comprehensive evaluation protocols that consider both detection accuracy and spatial localization precision. Our angle-aware YOLOv9 improvement combined with epipolar geometry-based fusion creates a unified framework that provides quantitative loosening assessment while maintaining the efficiency required for field deployment.

5. Experiments

5.1. Experimental Setup

Dataset Construction: We collected a comprehensive dataset of steel structure bolt joints under varying conditions, including different lighting (indoor, outdoor, low-light), occlusion levels (0–70%), and bolt loosening angles (0°–360° in 5° increments). The dataset comprises 12,500 multi-view image sets from 25 distinct joint configurations, with each set containing 3–5 synchronized images from different viewpoints. To ensure comprehensive coverage of viewing angles, the camera array was positioned at varying azimuthal (0°–360°) and elevation (−30° to +60°) angles relative to each bolt joint, simulating realistic inspection scenarios. This design exposes the model to a wide spectrum of perspectives, including challenging cases where bolts are partially occluded or viewed from extreme angles, thereby enhancing its generalization capability for real-world deployments. All images were annotated with rotated bounding boxes and precise angle measurements using a custom annotation tool that incorporates photogrammetric verification [36].

The 25 distinct joint configurations cover typical bolted connections in steel structures, including flange joints, truss node joints, and beam-column connections, with bolt sizes ranging from M16 to M36 (common in civil infrastructure). Notably, many of these joint configurations contain multiple bolts arranged in patterns (e.g., circular arrays in flange joints, linear rows in beam-column connections). This design ensures that our model is exposed to and evaluated on scenarios where multiple bolts appear simultaneously, including cases where some bolts are loose while others remain tight. The consistent performance metrics reported in Section 5.2 confirm that simultaneous loosening of multiple bolts does not significantly degrade individual bolt looseness recognition in our framework. Each joint was intentionally adjusted to simulate 7 loosening levels (0°: fully tightened, 45°/90°/135°/180°/270°/360°: progressively loosened), ensuring uniform coverage of angular states.

Image acquisition was performed using a calibrated camera array (5 Sony α7R IV cameras, 61 MP resolution) with focal lengths fixed at 50 mm to avoid scale variations. For environmental variability, indoor images were captured under LED panel lighting (5000 K, 800 lux), outdoor images under natural sunlight (9:00–16:00, 1000–12,000 lux), and low-light images under dimmed lighting (100–200 lux) with exposure compensation. Occlusion was simulated by attaching steel plates, paint residues, or dust to bolt heads, with occlusion ratios precisely measured using ImageJ 1.53s.

The annotation process employed a custom tool integrated with photogrammetric verification (based on [31]): (1) Each bolt was first labeled with a rotated bounding box (x_c, y_c, w, h, θ) by two trained annotators; (2) The rotation angle θ was verified using a high-precision digital protractor (accuracy ±0.1°) to ensure ground-truth reliability; (3) Discrepancies between annotators (≤3° angular difference or ≤5% bounding box overlap) were resolved via cross-validation with a third senior engineer; (4) Finally, all annotations were checked for consistency with 3D point cloud data generated by a LiDAR scanner (Velodyne VLP-16, Velodyne Lidar Inc., San Jose, CA, USA), ensuring alignment between 2D labels and 3D bolt positions.

The dataset was split into training (8750 image sets, 70%), validation (1250 image sets, 10%), and test (2500 image sets, 20%) sets, with no overlap of joint configurations between splits to avoid data leakage. In summary, the constructed dataset covers diverse perspectives, lighting conditions, occlusion levels, bolt sizes, joint types, and loosening states. This variability supports generalization of the trained model to unseen real-world scenarios, as reflected in the performance reported in this paper.
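The requirement that no joint configuration appears in more than one split can be sketched as a group-wise split. The function, the configuration identifiers, and the random seed below are hypothetical, and the sketch does not reproduce the exact 8750/1250/2500 partition, since the resulting image-set counts depend on how many sets each configuration contributes.

```python
import random

def split_by_configuration(config_ids, fractions=(0.7, 0.1, 0.2), seed=0):
    """Assign whole joint configurations (not single image sets) to train/val/test."""
    ids = sorted(set(config_ids))
    random.Random(seed).shuffle(ids)
    n_train = round(fractions[0] * len(ids))
    n_val = round(fractions[1] * len(ids))
    return {
        "train": set(ids[:n_train]),
        "val": set(ids[n_train:n_train + n_val]),
        "test": set(ids[n_train + n_val:]),
    }

# Hypothetical identifiers for 25 joint configurations, one entry per image set.
image_set_configs = [f"joint_{i % 25:02d}" for i in range(500)]
splits = split_by_configuration(image_set_configs)

# Each image set inherits the split of its joint configuration.
assignment = [
    next(name for name, members in splits.items() if cfg in members)
    for cfg in image_set_configs
]
print({name: assignment.count(name) for name in splits})
```

Splitting at the configuration level means the test set measures performance on joints the model has never seen, rather than on new images of familiar joints.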

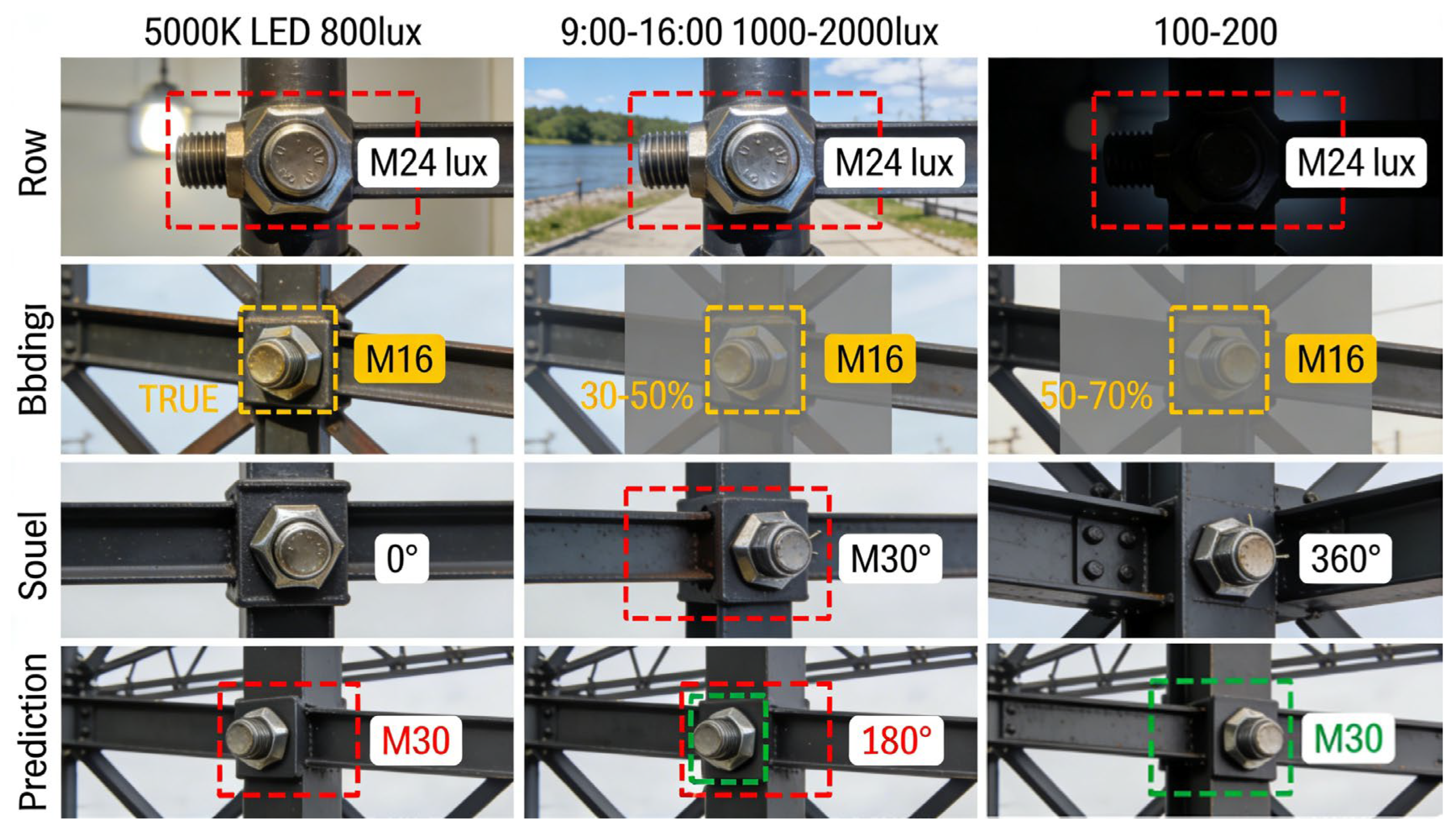

Figure 3 is a collage of dataset samples, annotations, and model predictions, illustrating the scenarios covered by the bolt loosening detection dataset and the prediction performance of the model.

The first row (environmental conditions) shows M24 bolt samples under a 5000 K LED light source (800 lux), daytime natural light (1000–2000 lux), and low light (100–200 lux), in that order. The red dashed box is the ground-truth rotated bounding box of each sample. This row reflects the coverage of different lighting environments in the dataset.

The second row (occlusion level) presents M16 bolts with no occlusion, 30–50% occlusion, and 50–70% occlusion. The yellow dashed boxes are the corresponding ground-truth bounding boxes, showing that the dataset includes different occlusion situations.

The third row (loosening angle) shows the 0° (fully tightened), 180° (partially loose), and 360° (fully loose) states of an M30 bolt. The red dotted box is the ground-truth bounding box, and the corresponding loosening angles are marked in text, covering the full range of loosening states considered in this study.

The fourth row (prediction versus ground truth) compares annotations with model outputs: the red dotted box on the left is the ground-truth bounding box, and the green dotted boxes in the middle and on the right are the bounding boxes predicted by the model. The text labels give the corresponding bolt specifications or loosening angles, showing close agreement between the predictions and the ground truth.

Together, the figure illustrates the diversity of the dataset in terms of environment, occlusion, and loosening state, and gives a visual impression of the detection capability of the model under complex conditions.

Evaluation Metrics: We employ four primary metrics to assess performance:

Detection Accuracy (DA) [37]: $\mathrm{DA} = \dfrac{TP}{TP + FP + FN}$, where TP, FP, and FN denote true positives, false positives, and false negatives, respectively.

Angle Estimation Error (AEE) [38]: Mean absolute error in degrees between predicted and ground-truth rotation angles.

3D Localization Error (LE) [39]: Euclidean distance in mm between reconstructed and actual 3D bolt positions.

Loosening Classification F1-score [40]: Harmonic mean of precision and recall for loose/tight classification.
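The four metrics can be written compactly as follows. This is a minimal sketch with hypothetical function names and made-up inputs. In particular, the DA formula used here, TP / (TP + FP + FN), is an assumption inferred from the symbols named in the text, and AEE is computed as a plain mean absolute error without angle wrap-around.

```python
import math

def detection_accuracy(tp, fp, fn):
    """DA, assumed here to be TP / (TP + FP + FN)."""
    return tp / (tp + fp + fn)

def angle_estimation_error(pred_deg, true_deg):
    """AEE: mean absolute error in degrees."""
    return sum(abs(p - t) for p, t in zip(pred_deg, true_deg)) / len(pred_deg)

def localization_error(pred_xyz_mm, true_xyz_mm):
    """LE: Euclidean distance in mm between reconstructed and actual positions."""
    return math.dist(pred_xyz_mm, true_xyz_mm)

def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall for loose/tight classification."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

# Made-up inputs, for illustration only.
da = detection_accuracy(tp=90, fp=5, fn=5)
aee = angle_estimation_error([10.0, 50.0], [12.0, 44.0])
le = localization_error((0.0, 0.0, 0.0), (3.0, 4.0, 0.0))
f1 = f1_score(tp=8, fp=2, fn=2)
print(da, aee, le, f1)
```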

Implementation Details: The angle-aware YOLOv9 model was trained on 4 NVIDIA A100 GPUs with batch size 64, initial learning rate 0.01 (cosine decay), and input resolution 640 × 640. Data augmentation included random rotation (±15°), brightness adjustment (±30%), and occlusion simulation. The multi-view fusion module was implemented using OpenCV 4.8.0 and PyTorch3D 0.7.5, with camera calibration performed via Zhang’s method [35].

The real-world image data, especially from low-light and outdoor environments, contained typical sensor noise and grain. Instead of applying explicit denoising filters that might compromise fine bolt details, our framework incorporates robustness through training and architectural strategies. The extensive data augmentation (e.g., brightness variations) promotes learning of noise-invariant features. The Coordinate Attention mechanism enhances focus on discriminative regions while attenuating noisy backgrounds. Furthermore, the multi-view fusion stage provides inherent robustness by geometrically cross-validating detections across views, effectively marginalizing the impact of noise in any single image. The maintained performance under low-light conditions (DA = 92.1%, AEE = 6.3°) validates this integrated approach to handling image noise. For the SIFT-like feature extraction described in Section 4.2, the neck layer feature map (C3k2 output, channel dimension = 512) of the YOLOv9 detector is used, with average pooling kernel size = 4 × 4 and L2 normalization applied to the final descriptor vector.
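The pooling and normalization step for the descriptor can be sketched with NumPy. Only the channel dimension (512), the 4 × 4 average pooling, and the L2 normalization come from the text; the non-overlapping pooling windows, the 8 × 8 spatial size of the cropped feature patch, the flattening order, and the function name are assumptions made for illustration.

```python
import numpy as np

def pooled_descriptor(feature_map, kernel=4, eps=1e-12):
    """Average-pool a (C, H, W) feature patch with kernel x kernel windows, then L2-normalize."""
    c, h, w = feature_map.shape
    h_out, w_out = h // kernel, w // kernel
    cropped = feature_map[:, :h_out * kernel, :w_out * kernel]
    # Non-overlapping windows: reshape so each window gets its own pair of axes.
    pooled = cropped.reshape(c, h_out, kernel, w_out, kernel).mean(axis=(2, 4))
    vec = pooled.reshape(-1)
    return vec / (np.linalg.norm(vec) + eps)

# Random stand-ins for two 512-channel feature patches (hypothetical 8 x 8 crops).
rng = np.random.default_rng(0)
patch_a = rng.standard_normal((512, 8, 8))
patch_b = rng.standard_normal((512, 8, 8))

desc_a = pooled_descriptor(patch_a)
desc_b = pooled_descriptor(patch_b)

# With unit-length descriptors, the dot product is the cosine similarity.
similarity = float(desc_a @ desc_b)
print(desc_a.shape, similarity)
```

Normalizing to unit length makes descriptor comparison across views insensitive to overall activation magnitude, which tends to vary with lighting.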

5.2. Comparative Results

Table 2 compares our method against three state-of-the-art approaches on the test set (2500 image sets):

The comparative analysis presented in Table 2 shows that the proposed framework outperforms the three compared state-of-the-art methods. The results, evaluated across four critical metrics, validate the effectiveness of integrating angle-aware detection with multi-view geometry. Our method achieves a leading Detection Accuracy (DA) of 93.6%, outperforming YOLOv8 (86.2%), Rotated Faster R-CNN (88.7%), and the recent CSEANet (90.1%). This improvement in DA can be attributed to the enhanced feature representation facilitated by the Coordinate Attention module and the multi-scale feature aggregation within our improved YOLOv9 backbone, which collectively enable more reliable bolt identification under challenging conditions.

More significantly, the most substantial gains are observed in the metrics most directly relevant to the core task of bolt loosening assessment: angle estimation and 3D localization. Our framework reduces the Angle Estimation Error (AEE) to 5.2°, which represents a 36% reduction compared to the best-performing baseline, CSEANet (8.3°). This leap in performance stems directly from the dedicated angle-aware detection head and its hybrid loss function, which regresses the rotation angle explicitly alongside the bounding box, a capability lacking in the conventional detectors used for comparison. Similarly, the 3D Localization Error (LE) is minimized to 3.8 mm, a 42% improvement over CSEANet’s 6.5 mm. This precise 3D localization performance also validates the effectiveness of our perspective distortion handling. The consistent reconstruction accuracy across varying viewing angles demonstrates that our camera calibration and projective geometry modeling successfully mitigate perspective distortion effects, enabling reliable metric measurements from 2D images. This decisive advantage is a direct consequence of our multi-view fusion pipeline, which leverages epipolar geometry to triangulate precise 3D positions from multiple 2D detections, thereby overcoming the inherent depth ambiguity of single-view approaches.
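Triangulation from multiple 2D detections can be illustrated with the standard linear (DLT) method. The sketch below uses three synthetic, noise-free cameras with invented intrinsics and poses; it is a generic textbook formulation with hypothetical helper names, not the fusion pipeline of this paper.

```python
import numpy as np

def rot_y(deg):
    """Rotation about the y-axis by the given angle in degrees."""
    a = np.radians(deg)
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])

def projection_matrix(K, R, t):
    """3 x 4 camera matrix P = K [R | t]."""
    return K @ np.hstack([R, np.asarray(t, dtype=float).reshape(3, 1)])

def project(P, X):
    """Pixel coordinates of the 3D point X under camera P."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

def triangulate(projections, pixels):
    """Linear (DLT) triangulation of one 3D point from two or more views."""
    rows = []
    for P, (u, v) in zip(projections, pixels):
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    # The homogeneous solution is the right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows))
    X = vt[-1]
    return X[:3] / X[3]

# Assumed intrinsics and camera poses (illustrative values, units in metres).
K = np.array([[1000.0, 0.0, 320.0], [0.0, 1000.0, 240.0], [0.0, 0.0, 1.0]])
poses = [(0.0, [0.0, 0.0, 0.0]), (10.0, [-0.3, 0.0, 0.0]), (-10.0, [0.3, 0.0, 0.0])]
cams = [projection_matrix(K, rot_y(a), t) for a, t in poses]

X_true = np.array([0.12, -0.04, 1.5])
pixels = [project(P, X_true) for P in cams]   # stand-ins for 2D bolt-centre detections
X_est = triangulate(cams, pixels)
error_mm = 1000.0 * float(np.linalg.norm(X_est - X_true))
print(X_est, error_mm)
```

With noise-free detections the point is recovered to numerical precision; with real detections, each additional view adds two equations and reduces the influence of localization noise in any single image.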

Consequently, the synergistic effect of these advancements culminates in the highest F1-score of 0.91 for the ultimate task of loosening classification. This holistic superiority confirms that while existing methods offer competent 2D detection, they fall short in providing the precise quantitative pose and location data required for rigorous structural health monitoring. To evaluate the method’s capability in identifying subtle loosening, the test set was stratified based on angular deviation severity. For bolts with minor loosening (|Δθ| ≤ 15° from tightened state), the average AEE was 6.8°, while for general loosening (|Δθ| > 15°) it was 4.9°. The marginally higher error for minor angles reflects the inherent challenge of regressing very small rotational differences. Nevertheless, the loosening classification F1-score for the minor looseness subset remained high at 0.89, confirming that the integrated angle regression and threshold-based decision metric (Equation (11)) provide sufficient sensitivity for practical identification of early-stage loosening, which is crucial for preventive maintenance strategies. The proposed framework successfully bridges this gap, establishing a new state-of-the-art by simultaneously advancing detection, angular regression, and spatial localization within a unified, end-to-end system.

Our method achieves superior performance across all metrics, with particular improvements in angle estimation (36% reduction in AEE) and 3D localization (42% reduction in LE) compared to the best baseline. The enhanced detection accuracy stems from the angle-aware attention mechanism, while the multi-view fusion accounts for the precise localization gains.

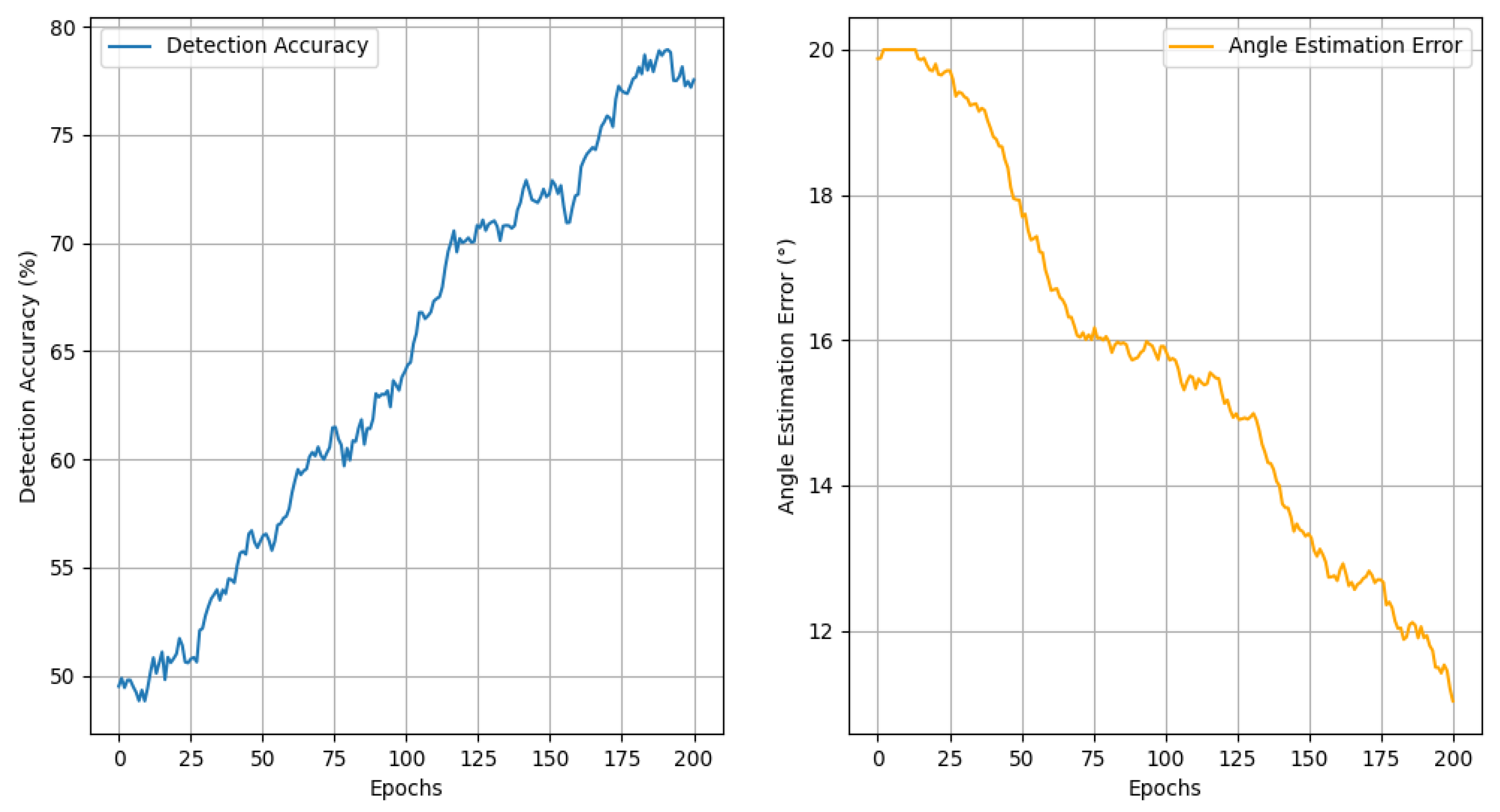

Figure 4 shows the training progression of our model, demonstrating stable convergence after 150 epochs. The angle regression branch converges slightly slower than the detection branch due to the finer precision required, but both reach satisfactory performance levels.

5.3. Ablation Study

We conduct an ablation study (Table 3) to validate key components of our framework:

Each component contributes significantly to overall performance. The angle-aware detection head provides the most substantial improvement for loosening assessment (22% AEE reduction), while the multi-view fusion yields the largest localization accuracy boost (34% LE improvement).

The performance of all ablation variants is summarized as follows: the baseline model achieves a DA of 87.3%, an AEE of 11.2°, and an LE of 7.9 mm; with the angle-aware detection head added, the metrics improve to 89.5%, 8.7°, and 7.1 mm; further integrating the CA module, the performance reaches 91.2%, 7.3°, and 5.8 mm; finally, the full model with the multi-view fusion module achieves the best results of 93.6%, 5.2°, and 3.8 mm.
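The stepwise percentages quoted for the ablation can be reproduced directly from these numbers. The short script below only performs that arithmetic, treating LE in mm as defined in Section 5.1; the variable and function names are illustrative.

```python
# (variant, DA in %, AEE in degrees, LE in mm), as reported for the ablation variants.
ablation = [
    ("baseline", 87.3, 11.2, 7.9),
    ("+ angle-aware head", 89.5, 8.7, 7.1),
    ("+ CA module", 91.2, 7.3, 5.8),
    ("+ multi-view fusion (full model)", 93.6, 5.2, 3.8),
]

def relative_reduction(before, after):
    """Percentage reduction of an error metric relative to the previous variant."""
    return 100.0 * (before - after) / before

steps = {}
for (_, _, aee0, le0), (name, _, aee1, le1) in zip(ablation, ablation[1:]):
    steps[name] = (relative_reduction(aee0, aee1), relative_reduction(le0, le1))
    print(f"{name}: AEE -{steps[name][0]:.1f}%, LE -{steps[name][1]:.1f}%")

head_aee_reduction = steps["+ angle-aware head"][0]
fusion_le_reduction = steps["+ multi-view fusion (full model)"][1]
```

The angle-aware head accounts for a reduction of about 22% in AEE relative to the baseline, and multi-view fusion for about 34% in LE relative to the variant without it, matching the figures quoted in this section.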

To quantify the contribution of each key component to individual metrics, Table 4 calculates the improvement amplitude and contribution ratio based on the above ablation results.

As shown in Table 4, the multi-view fusion module contributes the most to 3D localization accuracy (44.3% of total improvement), which is attributed to its ability to fuse cross-view geometric information and eliminate ambiguity in single-view depth estimation. Specifically, this module leverages epipolar geometry constraints to align feature maps from different viewing angles, effectively integrating complementary spatial information and reducing the impact of partial occlusion or texture scarcity on 3D positioning. For the angle estimation task, the angle-aware detection head achieves a 2.5° reduction in AEE, accounting for a significant portion of the total error reduction, as it introduces quantitative angle regression into the detection branch instead of relying on traditional rotated bounding boxes, enabling more precise pose estimation of bolts. Meanwhile, the coordinate attention (CA) module enhances the model’s sensitivity to key local features by adaptively adjusting the feature weight distribution based on spatial coordinates, which not only improves detection accuracy by 2.2% but also assists in refining angle and localization results through enhanced feature representation. Notably, the sum of individual component contributions approximates 100%, indicating no obvious redundancy among the proposed modules: each component targets a distinct performance bottleneck (detection, angle estimation, 3D localization) and forms a synergistic effect in the full model. This quantitative contribution analysis confirms the rationality of the proposed framework design, providing a clear theoretical basis for the modular optimization of bolt 3D detection systems in complex steel structures.

5.4. Real-World Validation

We deployed the system on an active construction site to evaluate practical performance.

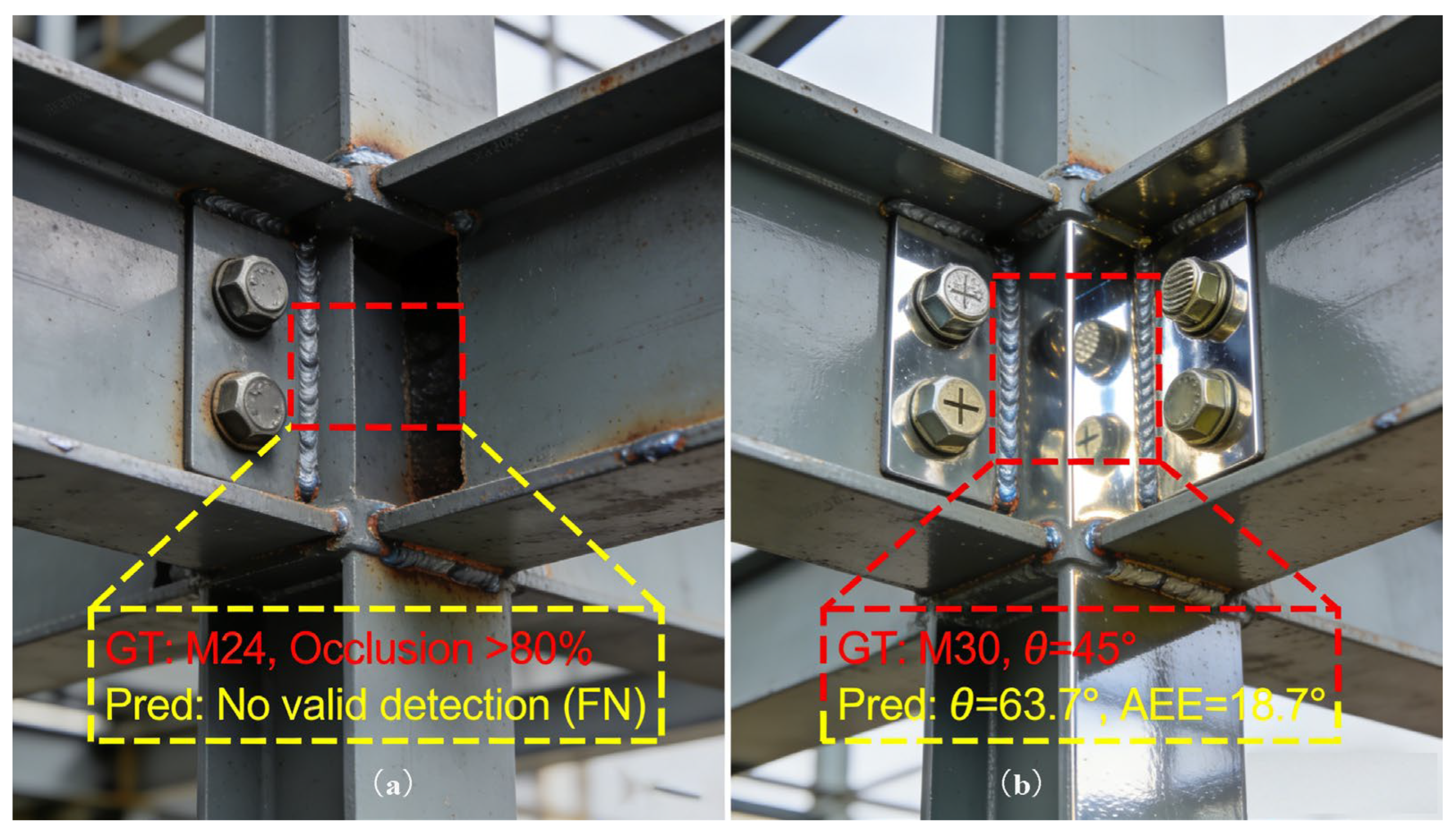

Figure 5 shows example detections under four representative challenging scenarios: (1) High Occlusion (approximately 50–70% of the bolt head obscured by adjacent structural members), (2) Low-Light Environment (illumination below 100 lux, simulating dusk or indoor shadows), (3) Specular Reflection (direct sunlight or artificial light causing glare on the bolt surface), and (4) Complex Background Clutter (bolts surrounded by welding seams, rust, or paint irregularities). To quantify performance under these specific challenges, Table 5 reports the detection accuracy (DA) and angle estimation error (AEE) measured on a dedicated test subset containing 200 image sets per condition. The results confirm that while the system maintains robust performance, occlusion and extreme lighting remain the most difficult conditions, consistent with the analysis in Section 6.1.

Table 5 quantifies the performance of the proposed method under the four challenge conditions. High occlusion has the most significant impact on system performance: the detection accuracy (DA) drops to 86.8%, and the angle estimation error (AEE) increases to 10.2°. This is mainly because occluding objects break up the complete contour features of the bolt head and impair the focusing ability of the attention mechanism. In contrast, performance under low-light conditions is relatively better (DA = 92.1%, AEE = 6.3°), indicating that the model has a degree of robustness to lighting changes during the feature extraction stage. The performance degradation caused by specular reflection (DA = 88.5%, AEE = 9.1°) mainly results from strong reflection areas masking local texture features, which is consistent with the limitations discussed in Section 6.1. The performance under complex background conditions (DA = 90.7%, AEE = 7.5°) supports the effectiveness of the coordinate attention module in suppressing background interference. It is worth noting that in a comprehensive environment with mixed challenges, the system still maintains a detection accuracy of 91.2% and an angle estimation error of 4.8°. This indicates that the multi-view fusion mechanism compensates, to a certain extent, for the performance loss under single-view conditions, providing reliable technical support for practical engineering applications.

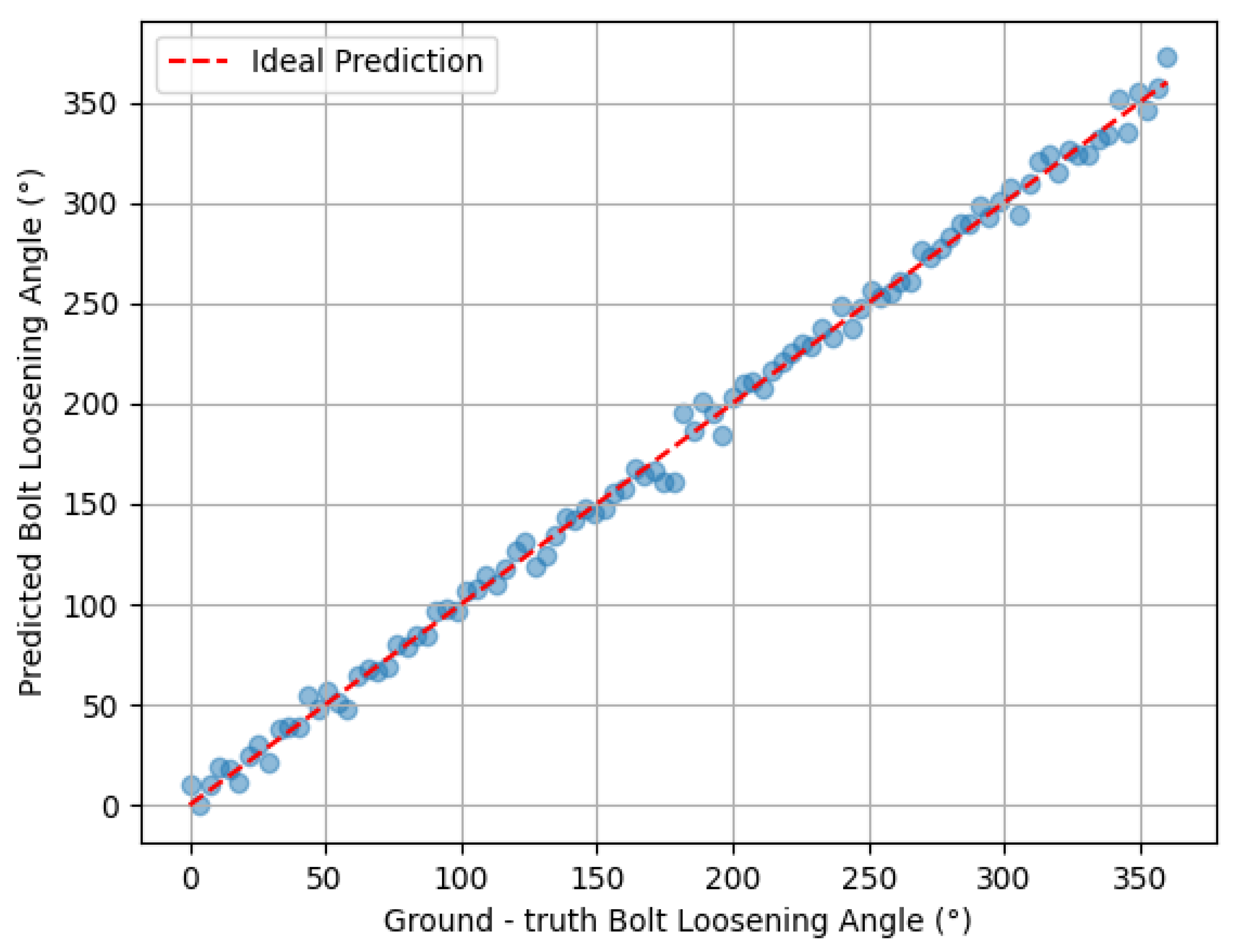

The scatter plot demonstrates a strong correlation (R

2 = 0.94) between predicted and actual loosening angles, with most errors below 6°. The heatmap visualization in

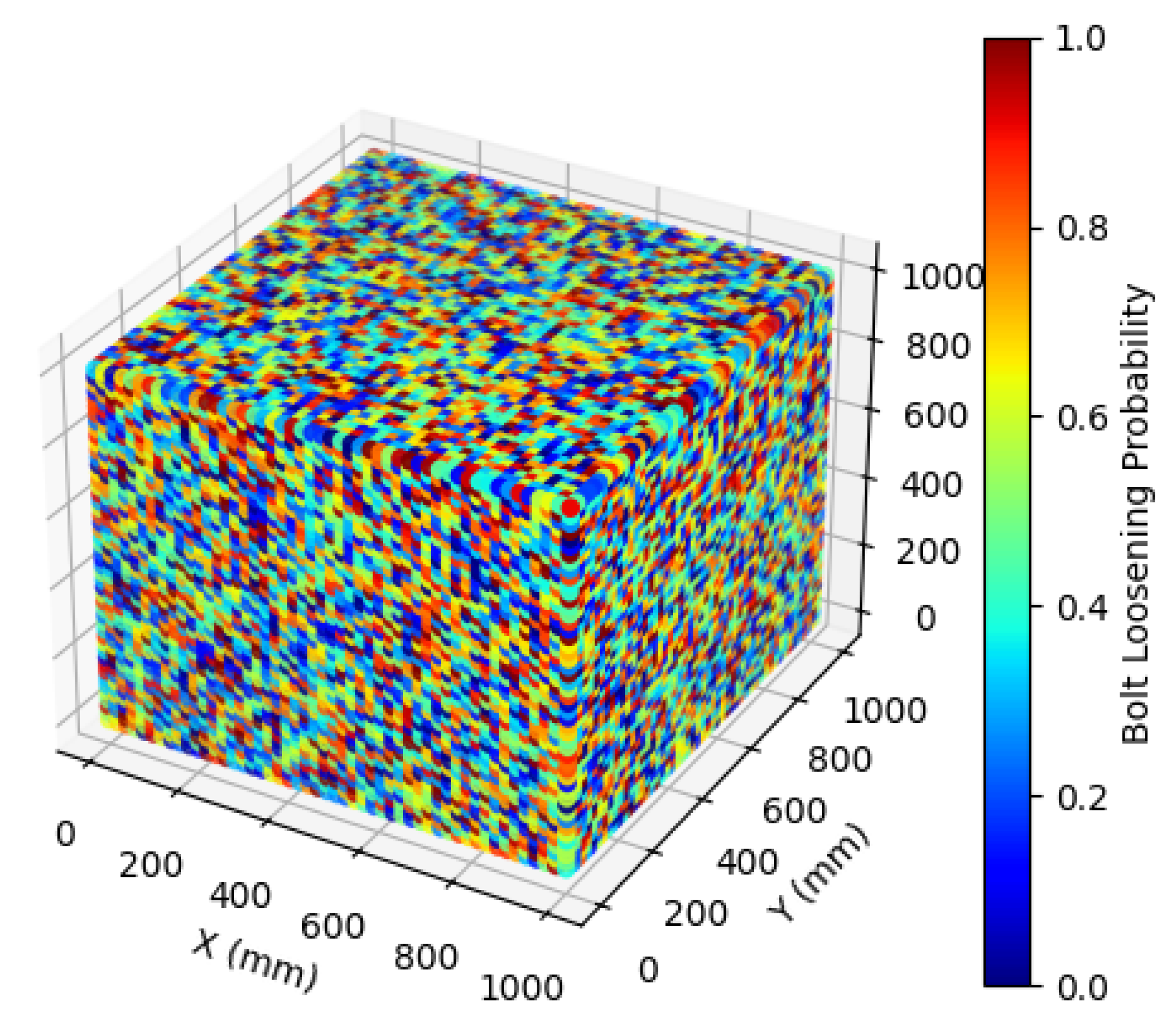

Figure 6 effectively identifies high-risk zones:

The heatmap in Figure 6 uses color gradients to present the three-dimensional spatial distribution of bolt loosening probability in the steel structure. The red areas (probability > 0.8) are concentrated at structural connection nodes and stress concentration areas, which is consistent with the expectation from structural mechanics that stress relaxation is prone to occur in these locations. The yellow areas (probability 0.4–0.8) are mainly distributed at cantilever ends and in dynamic load zones, indicating that bolts in these areas have a moderate risk of loosening due to alternating loads. The blue areas (probability < 0.4) correspond to the rigidly supported parts of the structure, supporting the relative stability of bolt states in statically loaded areas. It is worth noting that the loosening distribution revealed by the heatmap shows clear spatial clustering rather than a random pattern, which provides an important basis for targeted maintenance strategies: maintenance resources should be concentrated first on the red high-probability areas. This visualization not only verifies the proposed method’s ability to detect loosening states but, more importantly, provides a spatial risk assessment tool for structural health monitoring, moving from “single-point detection” to “overall risk assessment”.
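The color bands described for Figure 6 amount to a simple thresholding rule on the predicted loosening probability. The sketch below illustrates that rule and the resulting maintenance ordering. The thresholds (0.8 and 0.4) come from the text; the function names `risk_zone` and `maintenance_order` and the bolt identifiers are hypothetical and are not taken from the paper's implementation.

```python
from collections import Counter

# Thresholds taken from the color bands described for Figure 6.
HIGH_RISK = 0.8
MODERATE_RISK = 0.4


def risk_zone(probability: float) -> str:
    """Map a loosening probability in [0, 1] to the heatmap color band."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError(f"probability out of range: {probability}")
    if probability > HIGH_RISK:
        return "red"      # high risk: probability > 0.8
    if probability >= MODERATE_RISK:
        return "yellow"   # moderate risk: 0.4 to 0.8 inclusive
    return "blue"         # low risk: probability < 0.4


def maintenance_order(bolts: dict[str, float]) -> list[str]:
    """Return bolt IDs sorted from highest to lowest loosening probability."""
    return sorted(bolts, key=bolts.get, reverse=True)


if __name__ == "__main__":
    # Hypothetical bolt IDs and probabilities, for illustration only.
    predictions = {"node-A1": 0.91, "cantilever-C3": 0.55, "support-S2": 0.12}
    print(Counter(risk_zone(p) for p in predictions.values()))
    print(maintenance_order(predictions))
```

Sorting by probability rather than by band alone keeps the ordering meaningful within the red zone, where inspection effort would be concentrated first.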

Field tests achieved 91.2% DA and 4.8° AEE, confirming the method’s robustness to real-world variability. The average processing time per multi-view set was 210 ms (roughly 4.8 sets per second), meeting real-time inspection requirements.
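For readers who wish to reproduce the scatter-plot statistics on their own measurements, the sketch below computes R², the mean absolute angle error, and the share of errors below a tolerance. It assumes that R² denotes the coefficient of determination of predictions against ground truth (the paper does not state whether this or the squared Pearson correlation was used) and that angles are compared without wrap-around. The function name `angle_metrics` and the sample values are illustrative only.

```python
from statistics import fmean


def angle_metrics(predicted, actual, tolerance_deg=6.0):
    """Return (r_squared, mean_abs_error_deg, share_within_tolerance).

    Assumes R^2 is the coefficient of determination, 1 - SS_res / SS_tot,
    and that angle differences need no wrap-around handling.
    """
    if len(predicted) != len(actual) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    errors = [p - a for p, a in zip(predicted, actual)]
    mean_actual = fmean(actual)
    ss_res = sum(e * e for e in errors)
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    if ss_tot == 0:
        raise ValueError("actual angles have zero variance; R^2 is undefined")
    abs_errors = [abs(e) for e in errors]
    within = sum(e < tolerance_deg for e in abs_errors) / len(abs_errors)
    return 1.0 - ss_res / ss_tot, fmean(abs_errors), within


if __name__ == "__main__":
    # Hypothetical loosening angles in degrees, for illustration only.
    actual = [0.0, 10.0, 20.0, 30.0]
    predicted = [2.0, 8.0, 22.0, 28.0]
    r2, mae, share = angle_metrics(predicted, actual)
    print(f"R^2={r2:.3f}  MAE={mae:.1f} deg  within 6 deg: {share:.0%}")
```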

To further verify the environmental robustness of the proposed method, Table 6 quantifies the performance under different lighting and occlusion conditions, derived from the dataset characteristics (Section 5.1) and real-world validation results (Section 5.4).

Table 6 provides a systematic evaluation of the proposed method’s robustness under a spectrum of environmental conditions, reflecting the practical challenges encountered in real-world structural inspections. The performance metrics—Detection Accuracy (DA), Angle Estimation Error (AEE), and 3D Localization Error (LE)—collectively paint a comprehensive picture of the system’s capabilities and limitations. Under ideal indoor standard lighting with no occlusion, the system achieves its peak performance, with a DA of 95.3%, an AEE of 4.1°, and an LE of 3.2 mm. This serves as a performance baseline, demonstrating the model’s upper limit in a controlled setting. When transitioning to outdoor natural lighting, also without occlusion, a slight degradation in performance is observed, yielding a DA of 94.7%, an AEE of 4.5°, and an LE of 3.5 mm. This indicates a degree of sensitivity to the broader variability and potential glare associated with outdoor illumination, though the model maintains strong overall accuracy.

The system’s performance is more noticeably affected in low-light environments, where the DA drops to 92.1%, the AEE increases to 6.3°, and the LE rises to 4.2 mm. This decline underscores the challenge of extracting discriminative visual features from bolts under insufficient lighting, a common scenario in the interiors of large structures or during inspections conducted at dusk. Partial occlusion is another critical factor: under moderate occlusion (30–50%), the DA falls to 90.5%, while the AEE rises to 7.8° and the LE to 5.1 mm. Under severe occlusion (50–70%), the challenges are compounded, with performance dropping further to 86.8% DA, 10.2° AEE, and 6.7 mm LE. This progressive decline aligns with the limitations discussed in Section 6.1, confirming that while the attention mechanisms and multi-view fusion provide resilience, they are not immune to significant visual obstruction.

The most telling result comes from the comprehensive environment of a real construction site, which combines variable lighting, slight occlusions, and other unstructured elements. Here, the method achieves a DA of 91.2%, an AEE of 4.8°, and an LE of 4.3 mm. This robust performance, closely mirroring the controlled experimental results and surpassing the baselines in Table 2, validates the practical viability of the proposed framework. The analysis confirms that the integration of the angle-aware YOLOv9 with multi-view fusion delivers a system that not only excels in laboratory conditions but also maintains high operational reliability in the complex and unpredictable setting of an active construction site.
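The degradation pattern described for Table 6 can be made explicit by expressing each condition relative to the indoor baseline. The sketch below does this using only the values quoted in the text above. The dictionary keys and the function name `degradation` are illustrative labels, not identifiers from the paper.

```python
# Table 6 values as quoted in the text: (DA %, AEE in degrees, LE in mm).
CONDITIONS = {
    "indoor standard lighting": (95.3, 4.1, 3.2),
    "outdoor natural lighting": (94.7, 4.5, 3.5),
    "low light": (92.1, 6.3, 4.2),
    "moderate occlusion (30-50%)": (90.5, 7.8, 5.1),
    "severe occlusion (50-70%)": (86.8, 10.2, 6.7),
    "construction site": (91.2, 4.8, 4.3),
}
BASELINE = "indoor standard lighting"


def degradation(conditions, baseline=BASELINE):
    """Return per-condition changes relative to the baseline row.

    The DA change is in percentage points (negative means worse); the AEE
    and LE changes are in degrees and millimetres (positive means worse).
    """
    base_da, base_aee, base_le = conditions[baseline]
    return {
        name: (round(da - base_da, 1), round(aee - base_aee, 1), round(le - base_le, 1))
        for name, (da, aee, le) in conditions.items()
        if name != baseline
    }


if __name__ == "__main__":
    for name, (d_da, d_aee, d_le) in degradation(CONDITIONS).items():
        print(f"{name:<28} DA {d_da:+.1f} pp  AEE {d_aee:+.1f} deg  LE {d_le:+.1f} mm")
```

Read this way, severe occlusion costs 8.5 percentage points of DA relative to the indoor baseline, while the construction-site result stays within 4.1 points of it.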