The Application of a BiGRU Model with Transformer-Based Error Correction in Deformation Prediction for Bridge SHM

Abstract

1. Introduction

2. Methodology

2.1. BiGRU Model

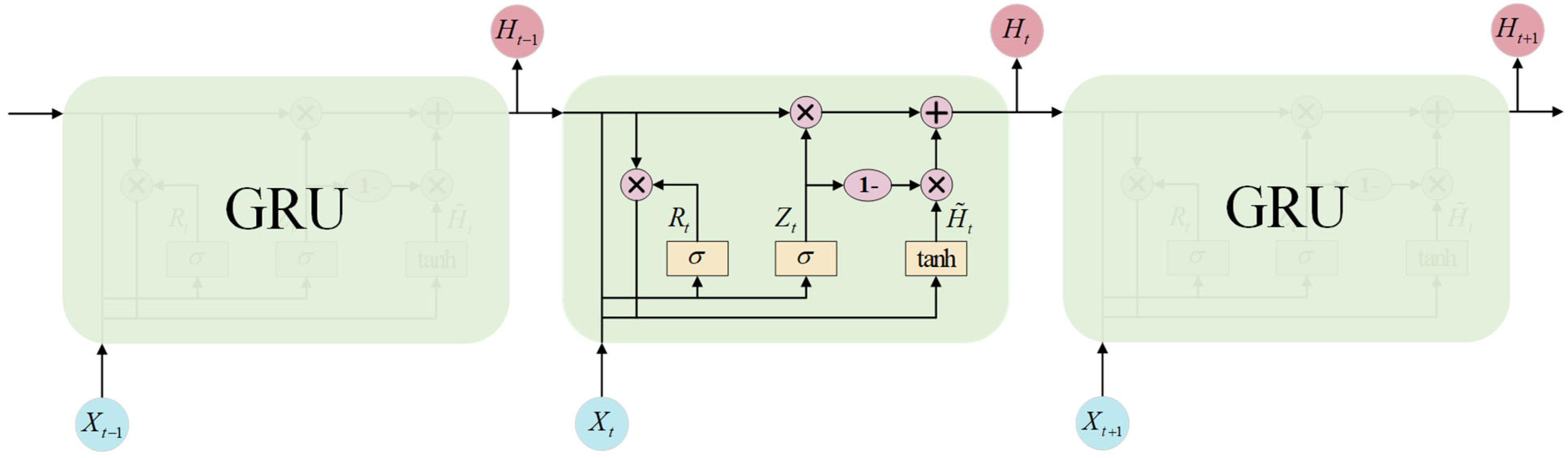

2.1.1. GRU Neural Network

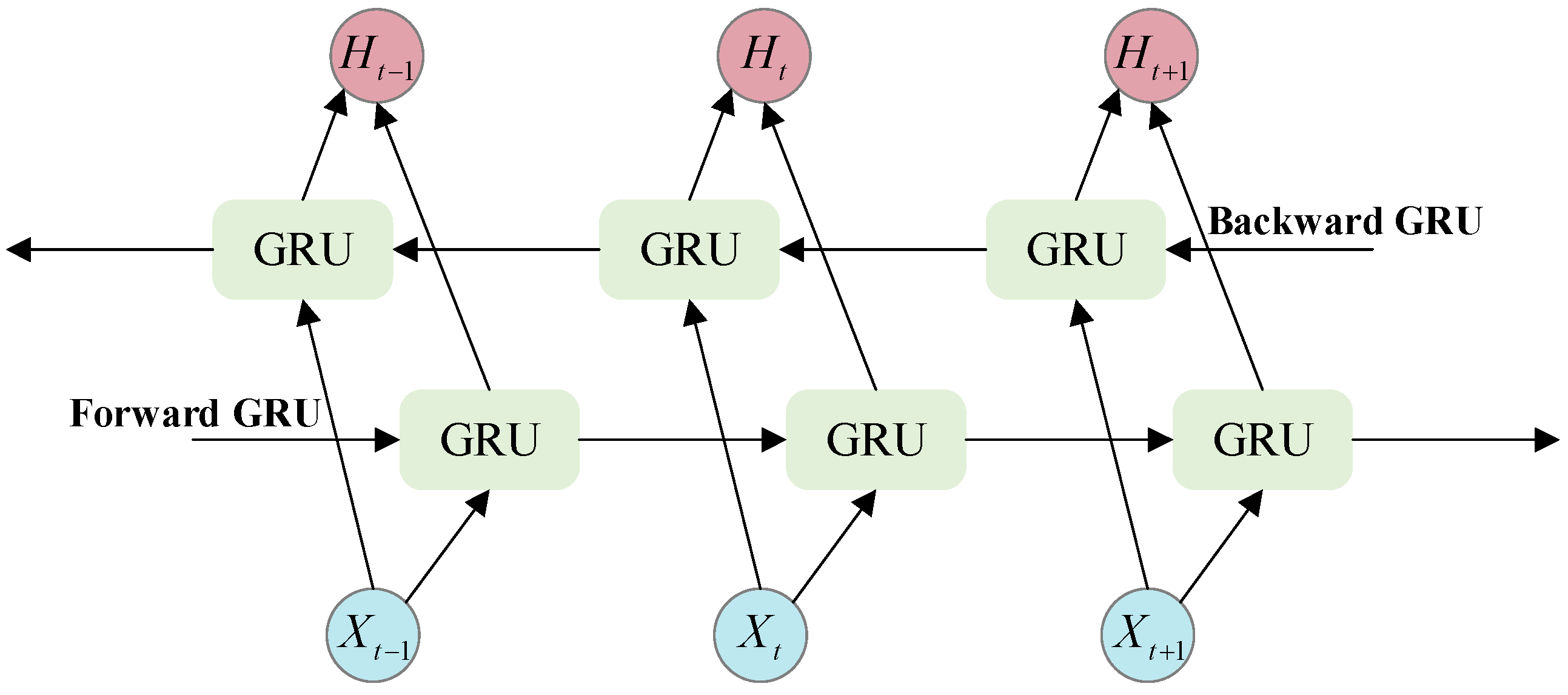

2.1.2. BiGRU Neural Network

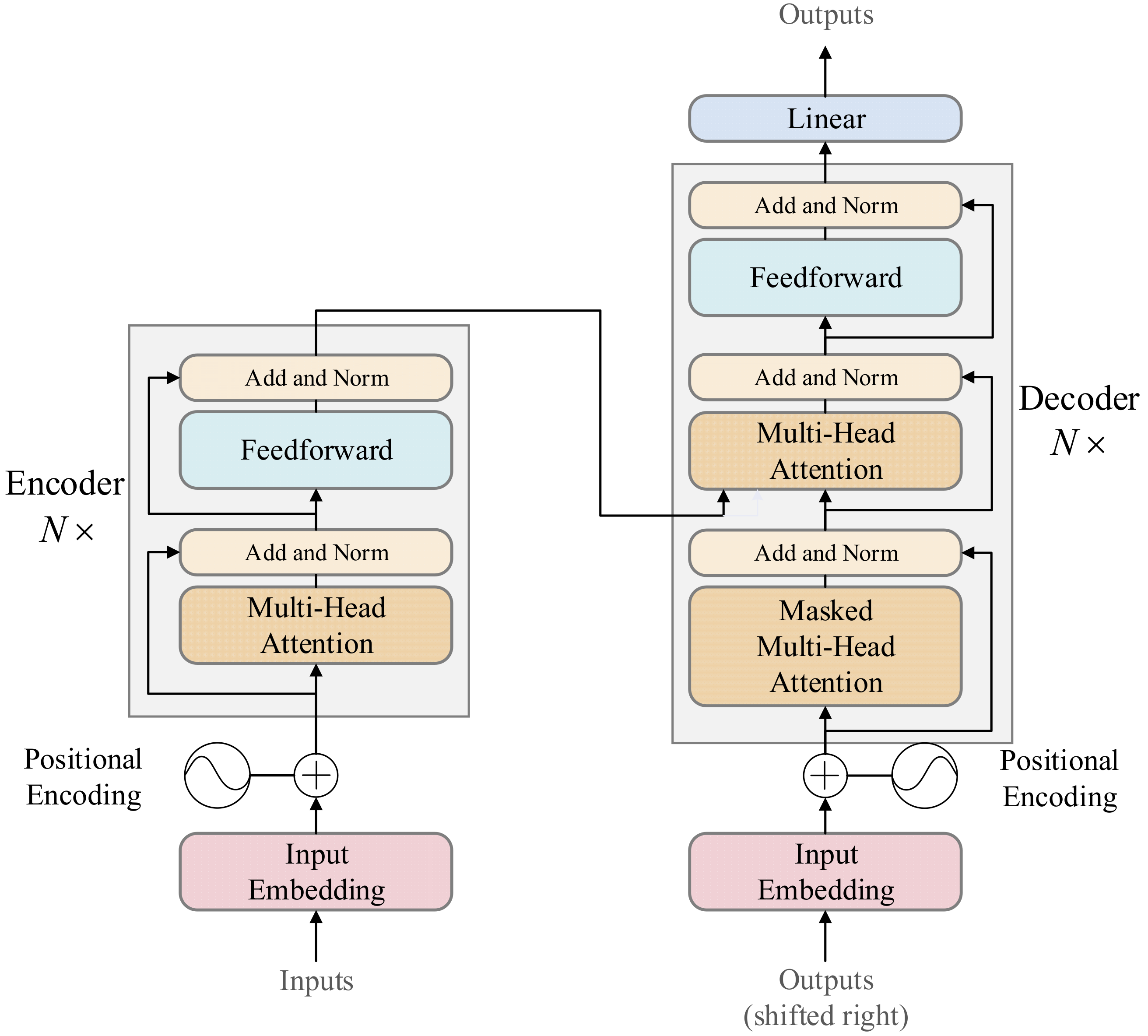

2.2. Transformer Model

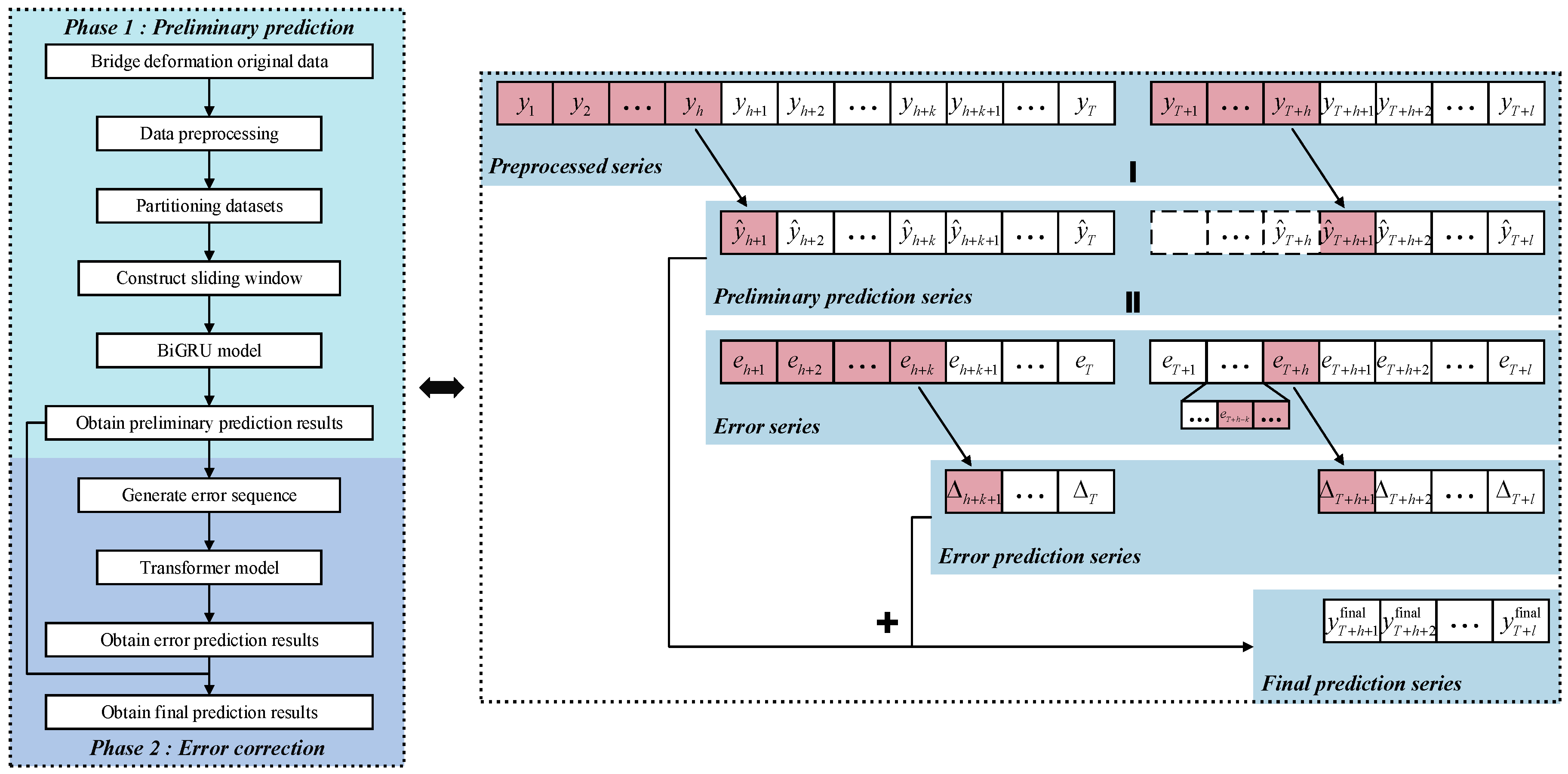

2.3. Bridge Deformation Prediction Method

2.3.1. Preliminary Forecasting Stage

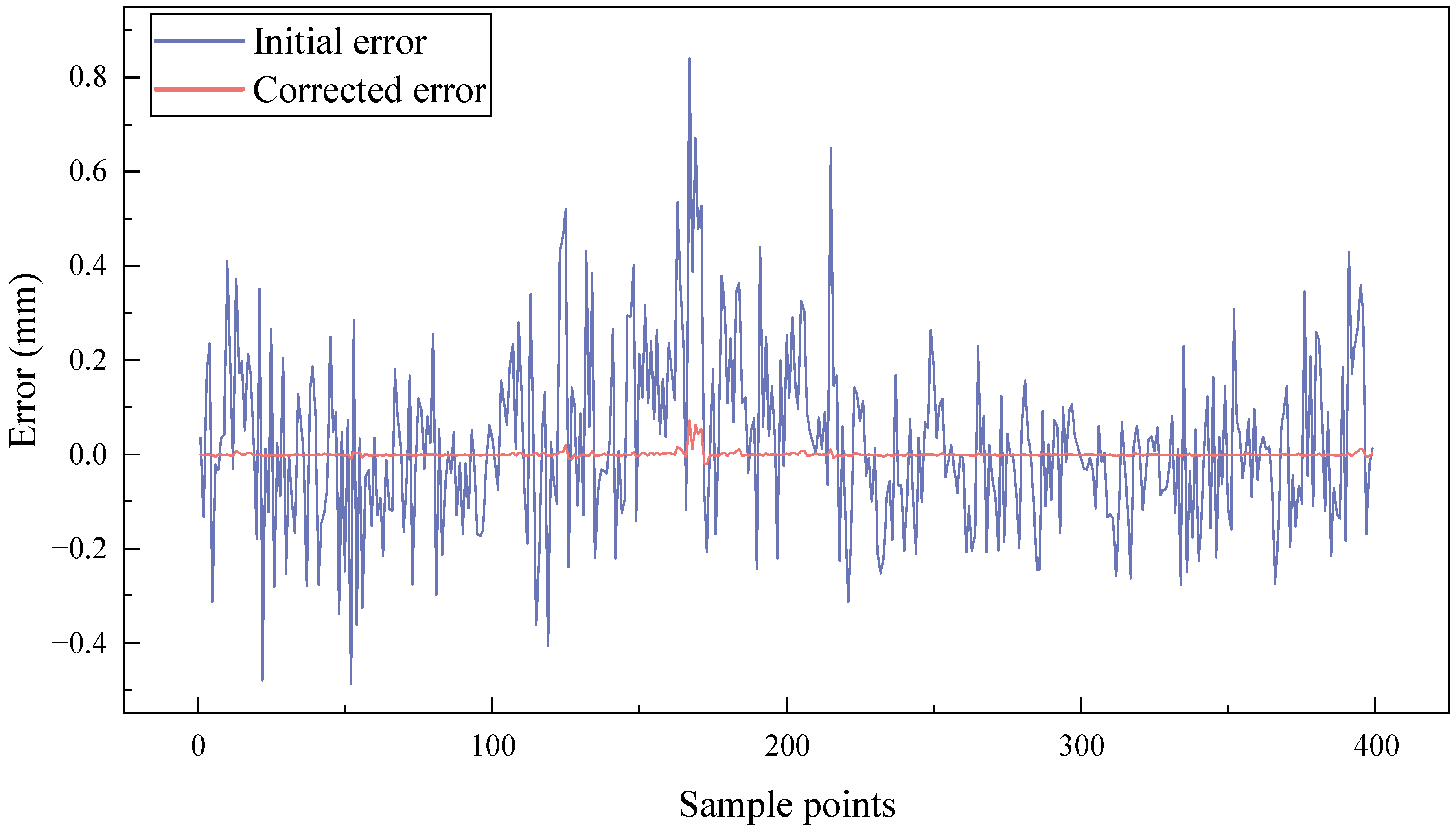

2.3.2. Error Correction Stage

2.3.3. Bridge Deformation Prediction Framework

3. Application

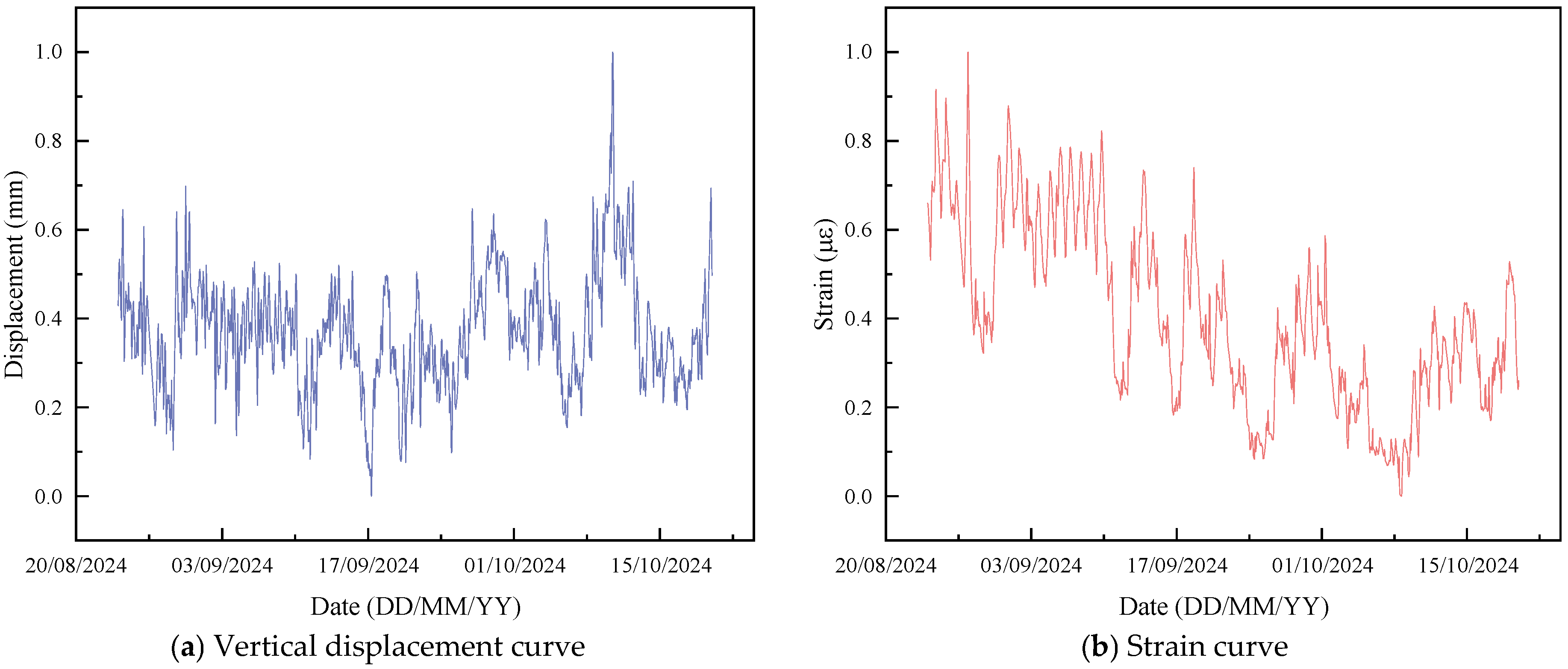

3.1. Data Source and Processing

3.2. Model Setup

3.3. Evaluation Metrics

4. Results and Discussion

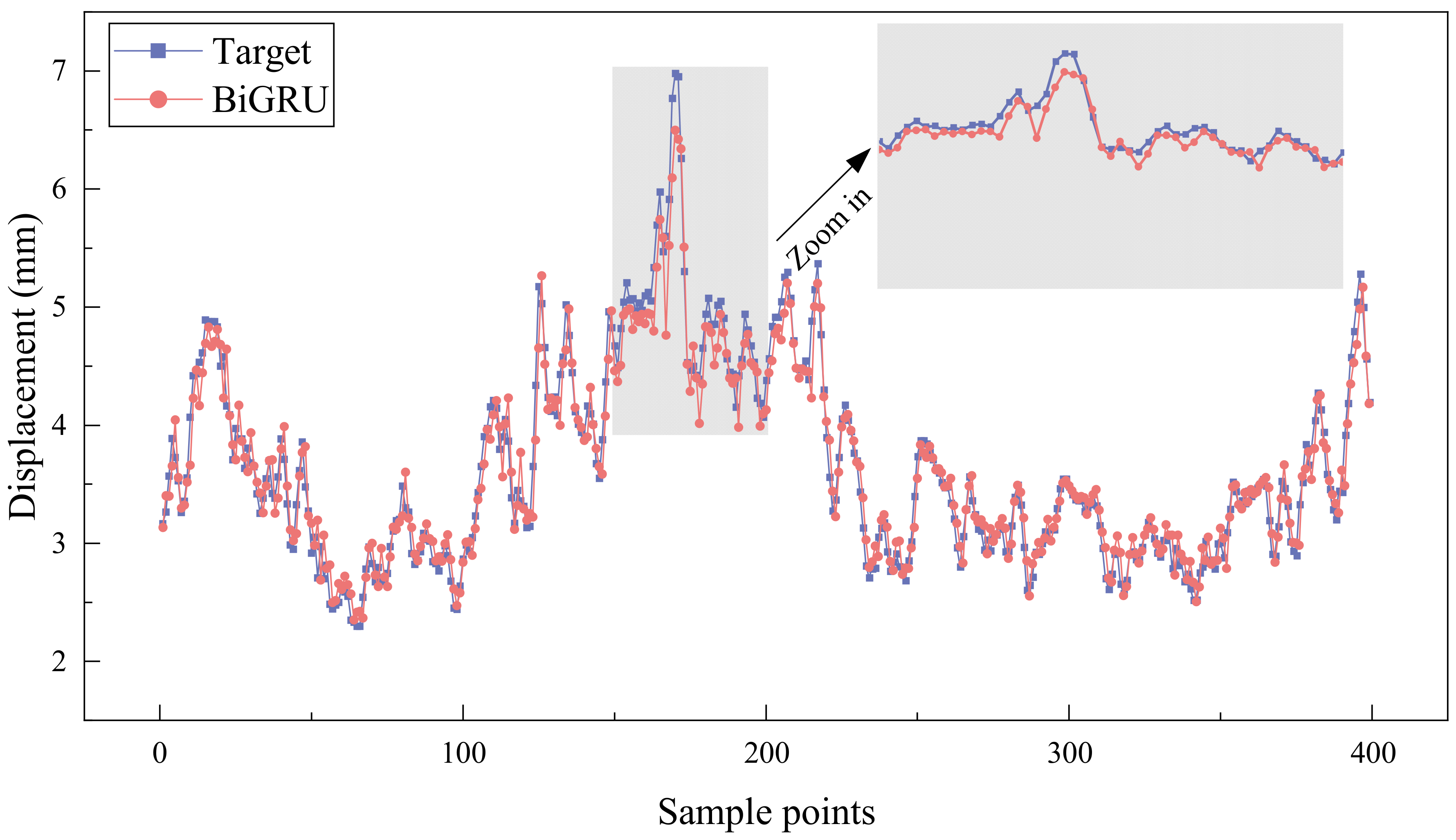

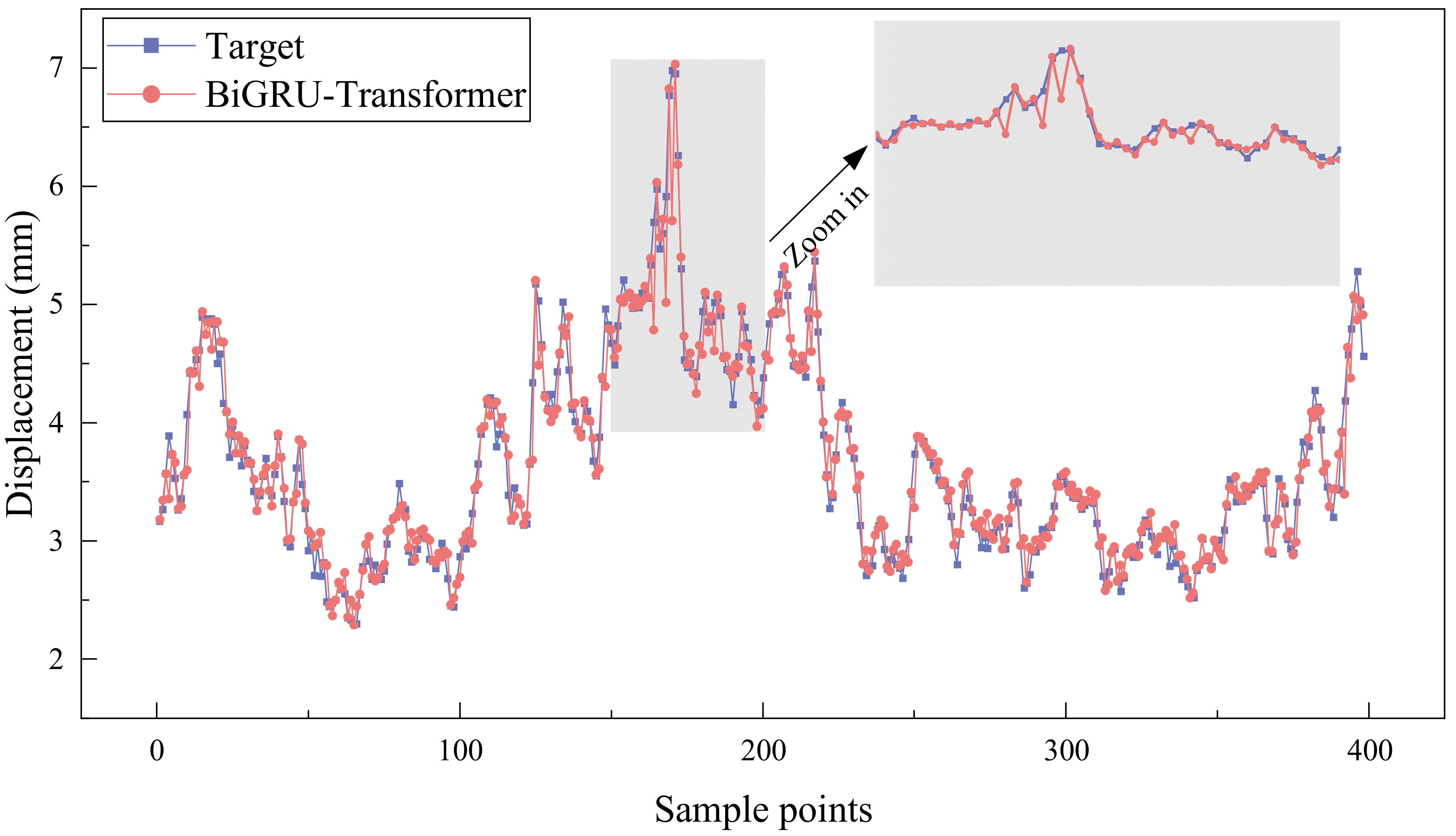

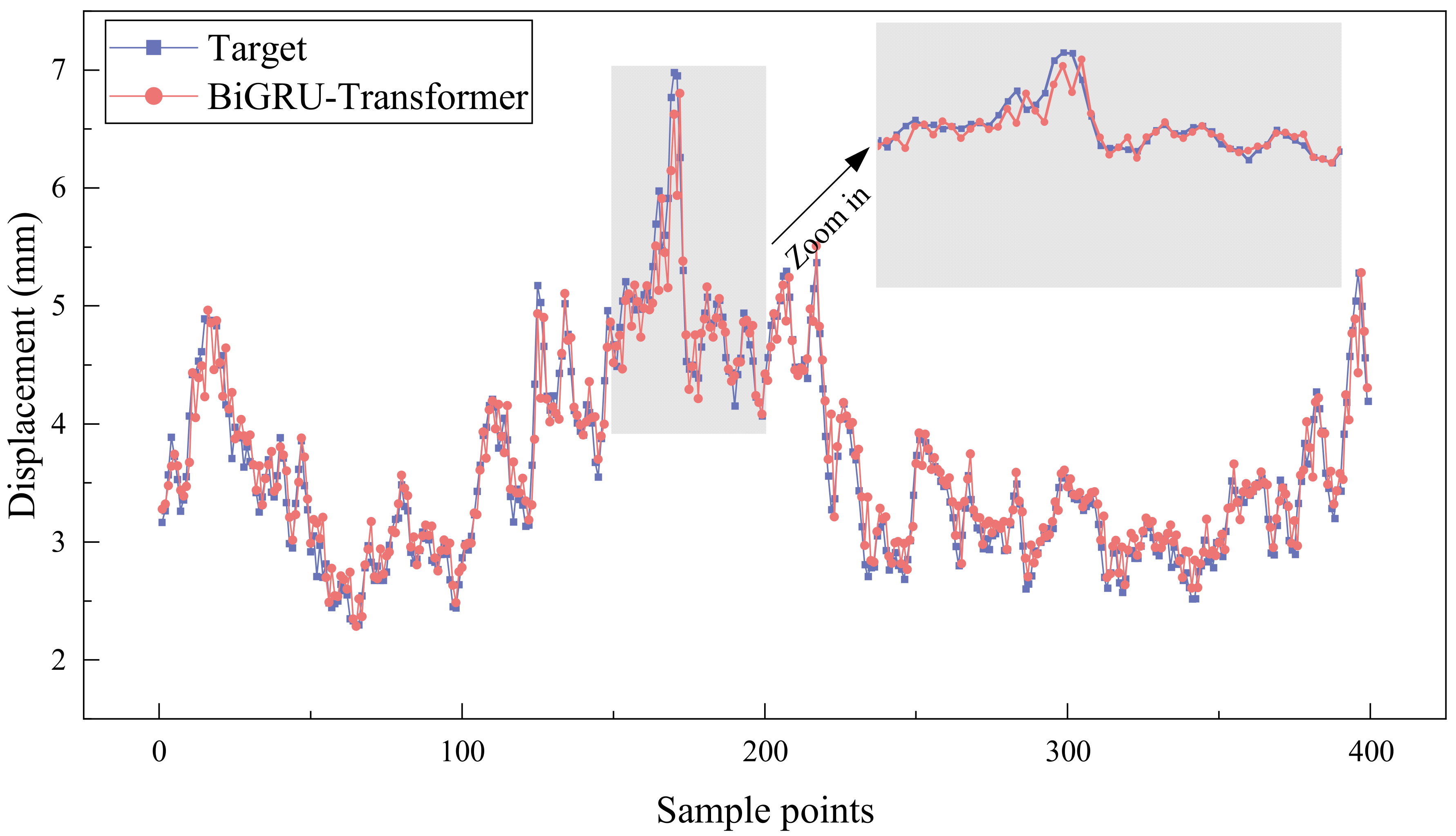

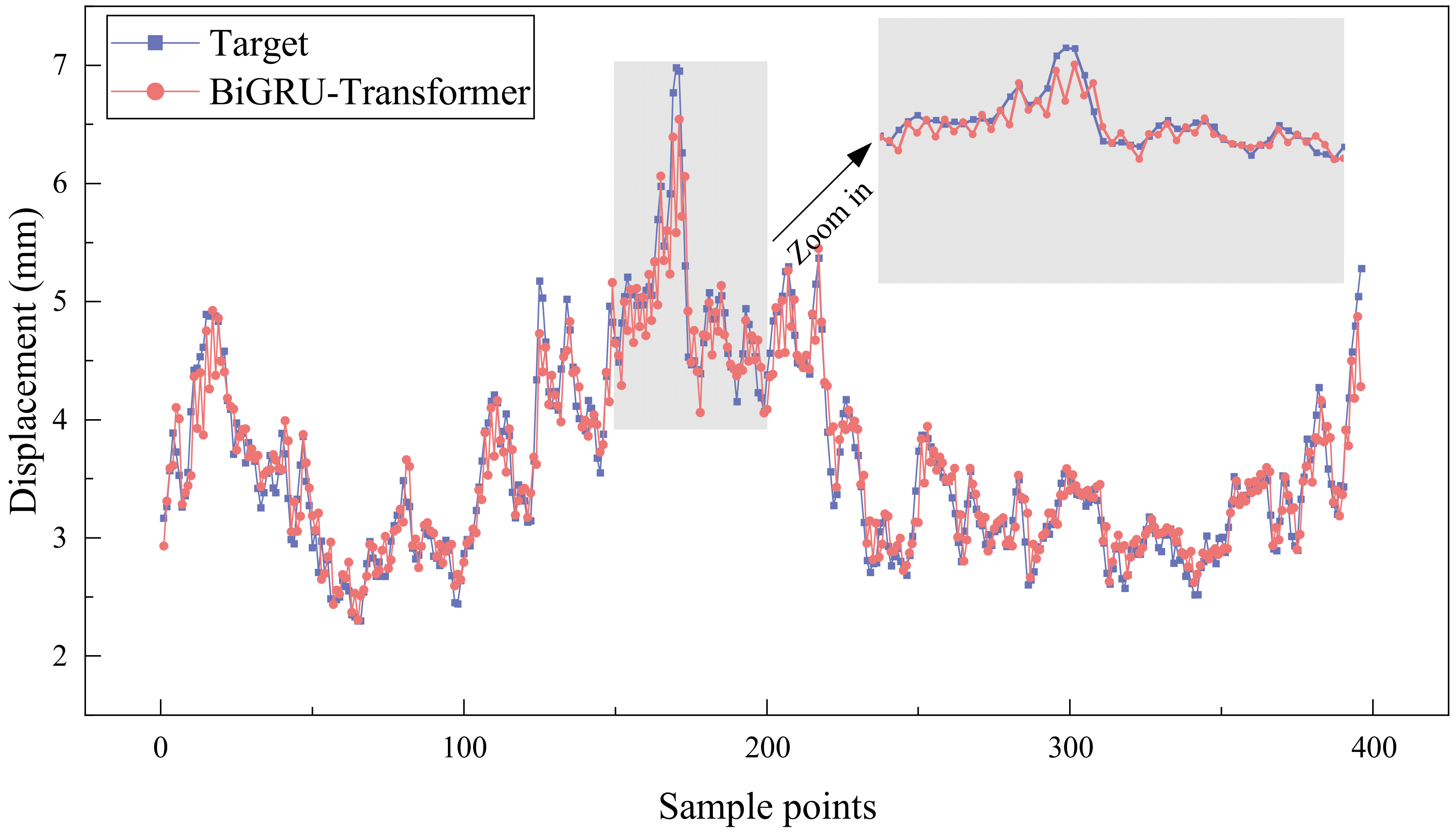

4.1. Single-Step Prediction Results

4.1.1. Bridge Deformation Prediction Results

4.1.2. Model Comparison

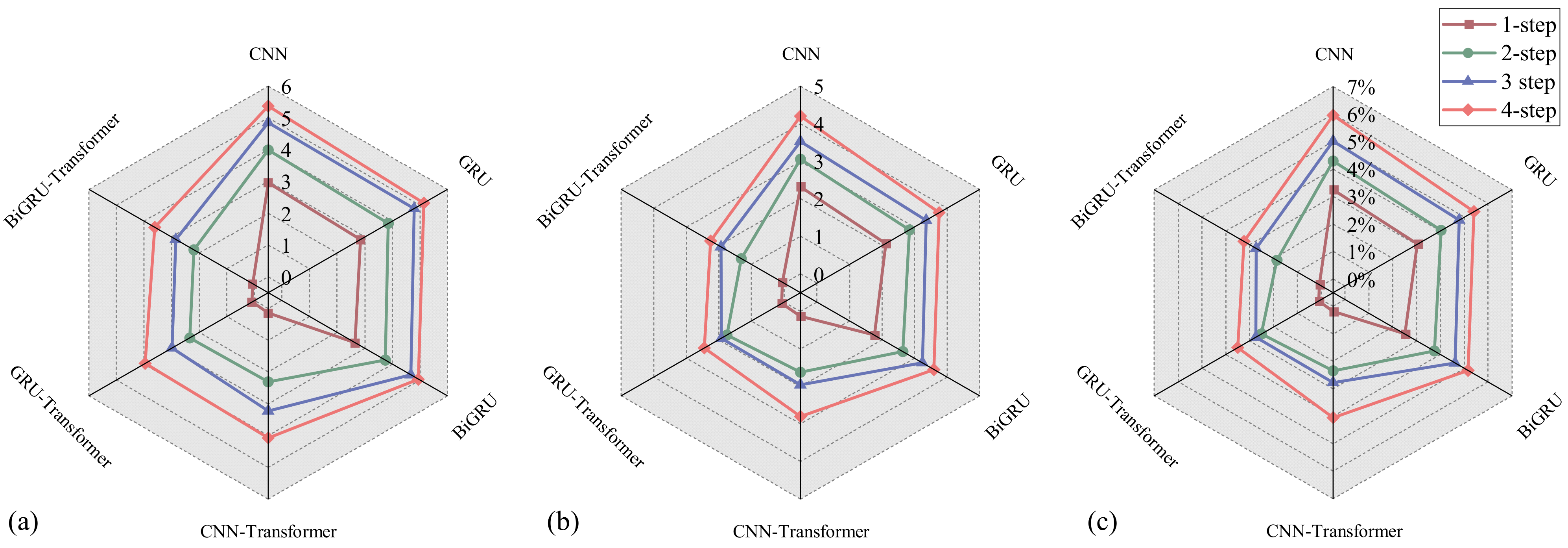

4.2. Multi-Step Prediction Results

4.3. Generalization Performance of the Prediction Method

5. Conclusions

- (1) In the bridge deformation prediction experiments, BiGRU outperformed both 1D-CNN and GRU on all evaluation metrics. For example, in the vertical displacement prediction experiment, BiGRU improved prediction accuracy by approximately 11% compared to 1D-CNN and about 2% compared to GRU. This indicates that BiGRU, by learning both forward and backward features of the sequence, is better able to capture complex patterns and long-term dependencies in the deformation data, and therefore predicts more accurately than the other single models.

- (2) Single models and hybrid models incorporating transformer-based error correction were compared for one-step prediction. The hybrid models significantly outperform the single models, with prediction accuracy improving by up to 98.59% (for BiGRU, the MAE falls from 0.142 mm to 0.002 mm after correction). Moreover, when the prediction step is set to 2, 3, and 4, the hybrid models show greater stability and higher prediction accuracy than the single models. This highlights the effectiveness of integrating error correction into the bridge deformation prediction framework.

- (3) To improve prediction performance, the BiGRU-Transformer hybrid model uses a two-stage approach on SHM data: a preliminary BiGRU prediction followed by transformer-based error correction. Compared with the other models, the BiGRU-Transformer achieves the highest prediction accuracy, with its prediction error remaining within 5%. This demonstrates the advantage of the BiGRU-Transformer model over the other models considered.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Model | Hyperparameter Name | Hyperparameter Value |
|---|---|---|
| BiGRU | Input length of BiGRU | 12 |
| BiGRU | Number of BiGRU layers | 2 |
| BiGRU | Hidden size of BiGRU | 64, 128 |
| Transformer | Input length of transformer | 5 |
| Transformer | Number of encoder layers | 3 |
| Transformer | Number of decoder layers | 2 |
| Transformer | Dimension of model | 128 |
| Transformer | Dimension of fcn | 128 |
| Transformer | Number of heads | 8 |
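The input lengths in the hyperparameter table imply a sliding-window formulation, in which a fixed number of past values is mapped to a future value. The sketch below shows one way to build such samples. It assumes a univariate series and a direct target `step` points ahead. The function name `make_windows` is hypothetical, and how the authors actually constructed their samples is not stated in this excerpt.

```python
from typing import List, Sequence, Tuple


def make_windows(
    series: Sequence[float], input_len: int, step: int = 1
) -> Tuple[List[List[float]], List[float]]:
    """Split a series into (input window, target) pairs.

    Each input holds `input_len` consecutive values; the target is the value
    `step` positions after the end of the window (step=1 is one-step-ahead).
    """
    if input_len < 1 or step < 1:
        raise ValueError("input_len and step must be positive")
    inputs: List[List[float]] = []
    targets: List[float] = []
    last_start = len(series) - input_len - step
    for start in range(last_start + 1):
        end = start + input_len
        inputs.append(list(series[start:end]))
        targets.append(series[end + step - 1])
    return inputs, targets


# Input length 12 as listed for the BiGRU; the data here are placeholders.
x, y = make_windows(list(range(20)), input_len=12, step=1)
print(len(x), x[0][0], x[0][-1], y[0])  # 8 0 11 12
```

The same helper with `input_len=5` would produce inputs of the length listed for the transformer. Under the standard multi-head attention layout, a model dimension of 128 with 8 heads corresponds to 16 dimensions per head.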

| Preliminary Prediction Model | RMSE/mm | MAE/mm | MAPE/% |
|---|---|---|---|
| 1D-CNN | 0.224 | 0.169 | 4.516 |
| GRU | 0.195 | 0.149 | 3.985 |
| BiGRU | 0.188 | 0.142 | 3.840 |
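The three column headers refer to root mean square error, mean absolute error, and mean absolute percentage error. The exact formulas used in the paper are not included in this excerpt, so the sketch below assumes the standard textbook definitions. The function names are illustrative.

```python
import math
from typing import Sequence


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean square error, in the units of the data (mm in the tables)."""
    n = len(y_true)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error, in the units of the data."""
    n = len(y_true)
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / n


def mape(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute percentage error in percent; undefined if a true value is 0."""
    n = len(y_true)
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / n


observed = [2.0, 4.0]    # placeholder values, not data from the paper
predicted = [1.0, 5.0]
print(rmse(observed, predicted), mae(observed, predicted), mape(observed, predicted))
# 1.0 1.0 37.5
```

RMSE penalizes large individual errors more heavily than MAE, while MAPE is scale-free, which is why the three are usually reported together.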

| Hybrid Prediction Model | RMSE/mm | MAE/mm | MAPE/% |
|---|---|---|---|
| 1D-CNN-Transformer | 0.015 | 0.004 | 0.094 |
| GRU-Transformer | 0.010 | 0.006 | 0.165 |
| BiGRU-Transformer | 0.007 | 0.002 | 0.054 |
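The improvement of up to 98.59% quoted in the conclusions can be checked against the single-step values in the preliminary and hybrid tables. The sketch below assumes that "improvement" means the relative reduction of an error metric, which the source does not state explicitly. The dictionary layout and function names are illustrative.

```python
from typing import Dict, Tuple

Metrics = Tuple[float, float, float]  # (RMSE in mm, MAE in mm, MAPE in %)

# Single-step values copied from the two tables, keyed by the base model.
SINGLE: Dict[str, Metrics] = {
    "1D-CNN": (0.224, 0.169, 4.516),
    "GRU": (0.195, 0.149, 3.985),
    "BiGRU": (0.188, 0.142, 3.840),
}
HYBRID: Dict[str, Metrics] = {
    "1D-CNN": (0.015, 0.004, 0.094),
    "GRU": (0.010, 0.006, 0.165),
    "BiGRU": (0.007, 0.002, 0.054),
}


def error_reduction(before: float, after: float) -> float:
    """Relative reduction of an error metric, in percent."""
    if before <= 0:
        raise ValueError("baseline error must be positive")
    return 100.0 * (before - after) / before


def reductions(base_model: str) -> Tuple[float, ...]:
    """Reduction in (RMSE, MAE, MAPE) after adding transformer error correction."""
    pairs = zip(SINGLE[base_model], HYBRID[base_model])
    return tuple(round(error_reduction(b, a), 2) for b, a in pairs)


print(reductions("BiGRU"))  # (96.28, 98.59, 98.59)
```

Under this reading, the 98.59% figure corresponds to the reduction in MAE and MAPE for BiGRU once the error correction stage is added.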

| Prediction Step | Model | RMSE/mm | MAE/mm | MAPE/% |
|---|---|---|---|---|
| 2 | 1D-CNN | 0.347 | 0.245 | 6.417 |
| 2 | GRU | 0.301 | 0.212 | 5.536 |
| 2 | BiGRU | 0.295 | 0.205 | 5.377 |
| 2 | 1D-CNN-Transformer | 0.218 | 0.145 | 3.912 |
| 2 | GRU-Transformer | 0.204 | 0.116 | 3.034 |
| 2 | BiGRU-Transformer | 0.181 | 0.100 | 2.687 |
| 3 | 1D-CNN | 0.399 | 0.287 | 7.695 |
| 3 | GRU | 0.376 | 0.268 | 7.024 |
| 3 | BiGRU | 0.371 | 0.262 | 6.907 |
| 3 | 1D-CNN-Transformer | 0.240 | 0.154 | 4.046 |
| 3 | GRU-Transformer | 0.227 | 0.154 | 4.110 |
| 3 | BiGRU-Transformer | 0.215 | 0.145 | 3.968 |
| 4 | 1D-CNN | 0.451 | 0.325 | 8.525 |
| 4 | GRU | 0.386 | 0.279 | 7.355 |
| 4 | BiGRU | 0.378 | 0.275 | 7.350 |
| 4 | 1D-CNN-Transformer | 0.288 | 0.196 | 5.303 |
| 4 | GRU-Transformer | 0.242 | 0.170 | 4.705 |
| 4 | BiGRU-Transformer | 0.248 | 0.168 | 4.515 |
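The multi-step table is easier to read when the best model per step and metric is extracted programmatically. The sketch below copies the table values and picks the lowest error in each column. The data structure and the function name `best_models` are illustrative and not part of the paper.

```python
from typing import Dict, Tuple

Metrics = Tuple[float, float, float]  # (RMSE in mm, MAE in mm, MAPE in %)
METRIC_NAMES = ("RMSE", "MAE", "MAPE")

# Values copied from the multi-step table, keyed by prediction step.
RESULTS: Dict[int, Dict[str, Metrics]] = {
    2: {
        "1D-CNN": (0.347, 0.245, 6.417),
        "GRU": (0.301, 0.212, 5.536),
        "BiGRU": (0.295, 0.205, 5.377),
        "1D-CNN-Transformer": (0.218, 0.145, 3.912),
        "GRU-Transformer": (0.204, 0.116, 3.034),
        "BiGRU-Transformer": (0.181, 0.100, 2.687),
    },
    3: {
        "1D-CNN": (0.399, 0.287, 7.695),
        "GRU": (0.376, 0.268, 7.024),
        "BiGRU": (0.371, 0.262, 6.907),
        "1D-CNN-Transformer": (0.240, 0.154, 4.046),
        "GRU-Transformer": (0.227, 0.154, 4.110),
        "BiGRU-Transformer": (0.215, 0.145, 3.968),
    },
    4: {
        "1D-CNN": (0.451, 0.325, 8.525),
        "GRU": (0.386, 0.279, 7.355),
        "BiGRU": (0.378, 0.275, 7.350),
        "1D-CNN-Transformer": (0.288, 0.196, 5.303),
        "GRU-Transformer": (0.242, 0.170, 4.705),
        "BiGRU-Transformer": (0.248, 0.168, 4.515),
    },
}


def best_models(results: Dict[int, Dict[str, Metrics]]) -> Dict[int, Dict[str, str]]:
    """For each step and metric, return the model with the lowest error."""
    best: Dict[int, Dict[str, str]] = {}
    for step, models in results.items():
        best[step] = {
            name: min(models, key=lambda m: models[m][i])
            for i, name in enumerate(METRIC_NAMES)
        }
    return best


for step, winners in best_models(RESULTS).items():
    print(step, winners)
```

On these values BiGRU-Transformer has the lowest error in every column at steps 2 and 3. At step 4 it has the lowest MAE and MAPE, while GRU-Transformer has a slightly lower RMSE (0.242 mm against 0.248 mm). Its MAPE stays below 5% at all three steps.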

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, X.; Xie, G.; Zhang, Y.; Liu, H.; Zhou, L.; Liu, W.; Gao, Y. The Application of a BiGRU Model with Transformer-Based Error Correction in Deformation Prediction for Bridge SHM. Buildings 2025, 15, 542. https://doi.org/10.3390/buildings15040542
