A Generative AI Framework for Adaptive Residential Layout Design Responding to Family Lifecycle Changes

Abstract

1. Introduction

1.1. Background

1.2. Literature Review

1.2.1. The Evolution of Architectural and Interior Design Technologies

1.2.2. Advancements of Generative Artificial Intelligence in Architectural Design

1.2.3. Strengths and Limitations of Mainstream Generative Models

1.2.4. Architectural Adaptability and Human-Centered Design

1.2.5. Family Structure Transformation and Emerging Research Gaps

1.3. Research Gaps and Objective

- Existing studies on generative AI in housing design address single or static residential layouts, often neglecting the dynamic evolution of family structures and associated spatial adaptability requirements across the life cycle. Previous frameworks, such as ControlNet and FloorDiffusion, provide strong control over geometric or semantic conditioning, yet they lack mechanisms to represent functional continuity and behavioral transitions across time. This research focuses on adaptive residential layouts that respond to functional and spatial transformations driven by family transitions, aiming to address how generative AI can systematically capture and generate layouts that reflect evolving household configurations.

- While prior research has explored user-centered design, there is limited investigation into stage-specific shifts in spatial requirements, zoning flexibility, and multifunctional adaptation resulting from family structure transitions, such as shifts from couples to child-rearing households or multigenerational caregiving. Models such as LayoutDM partially incorporate spatial reasoning but remain restricted to fixed typologies and do not integrate lifecycle variability or user-behavioral logic. This study, therefore, emphasizes mapping behavioral and spatial needs across life-cycle stages, integrating them into AI-driven layout generation to bridge this gap between architectural functionality and life-cycle-aware design.

- Conventional applications of Stable Diffusion in residential design often exhibit inconsistencies between prompts and generated outcomes, failing to align with functional requirements and the subtleties of family life cycles. To overcome these limitations, the present study introduces a composite loss function that simultaneously optimizes aesthetic coherence, spatial adaptability, and behavioral fidelity. Unlike single-objective diffusion tuning in existing works, the proposed loss formulation establishes a multi-criteria optimization process that allows generative outputs to maintain both architectural rationality and lifecycle sensitivity. Accordingly, this study proposes a framework that translates family-structure-driven requirements into AI-interpretable prompts, incorporates stage-specific floor plans as ControlNet constraints, and introduces a novel composite loss function to guide lifecycle-responsive fine-tuning. This approach enables more accurate and architecturally informed generation of layouts and interior visuals, thereby bridging the gap between evolving family needs and AI-generated design outputs, and contributing to more adaptable, life-cycle-sensitive residential design.

- To analyze family lifecycle characteristics and extract stage-specific behavioral, spatial, and functional requirements that inform adaptive housing design;

- To construct a generative design framework integrating user-behavioral logic, spatial adaptability principles, and a fine-tuned diffusion model for lifecycle-responsive layout generation;

- To evaluate the proposed framework through a comparative analysis of functional behavior, spatial alignment, and overall performance against conventional design workflows.

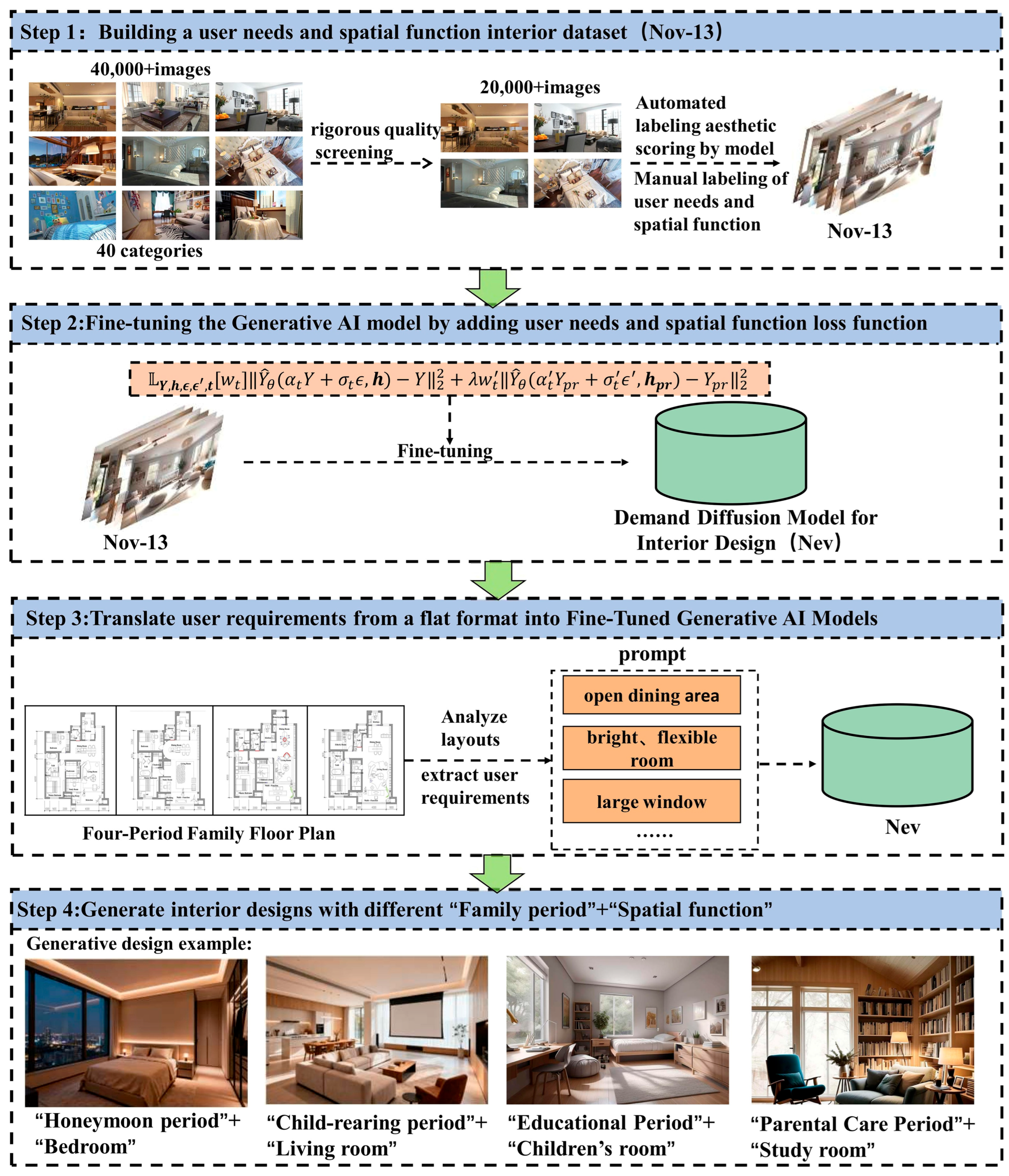

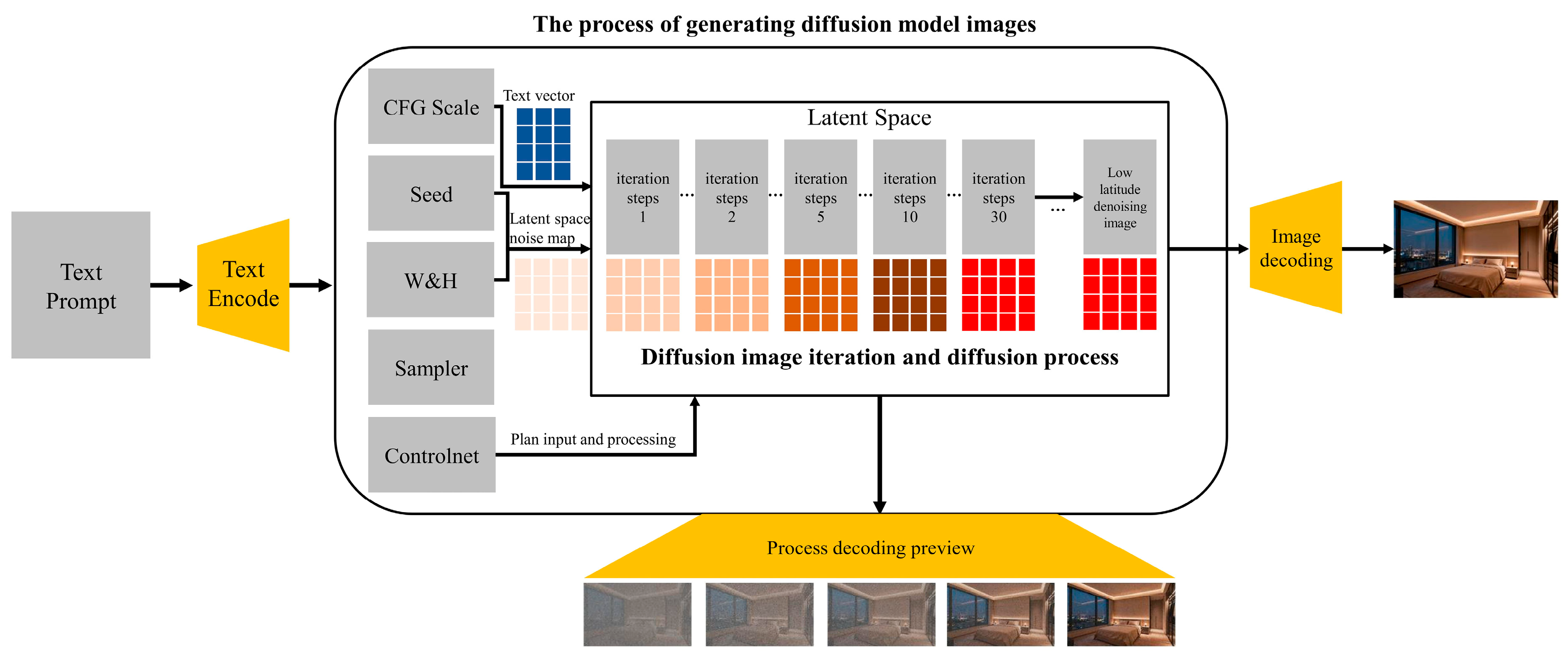

2. Methodology

2.1. Framework

2.2. Nov-13 Dataset

Dataset Transparency and Ethical Compliance

2.3. Training Loss Function Formulation

- : target (ground truth) interior image;

- : image generated by pre-trained diffusion model;

- : textual conditioning or prompt embedding;

- : Gaussian noise vectors drawn from ;

- : forward diffusion scheduling coefficients at step ;

- : time-step-dependent weights;

- : learnable parameters of the diffusion network;

- : adaptive weight for prior knowledge regularization.
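
The symbols listed above (a target image, an image generated by the pre-trained model, a prompt embedding, Gaussian noise, schedule coefficients, time-step weights, and a regularization weight) resemble the ingredients of a prior-preservation objective of the kind used in DreamBooth-style fine-tuning. The formula itself is not reproduced in this section, so the following is only a minimal sketch under that assumption. All names (`add_noise`, `mse`, `composite_loss`, `denoise`) are hypothetical, images are flattened to plain lists of floats, and the terms for aesthetic coherence, spatial adaptability, and behavioral fidelity described elsewhere in the paper are not modeled here.

```python
import random
from typing import Callable, List, Optional

Vector = List[float]


def add_noise(x0: Vector, alpha_t: float, sigma_t: float, rng: random.Random) -> Vector:
    """Forward diffusion step: scale the clean sample and add Gaussian noise."""
    return [alpha_t * v + sigma_t * rng.gauss(0.0, 1.0) for v in x0]


def mse(a: Vector, b: Vector) -> float:
    """Mean squared error between two equally sized vectors."""
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)


def composite_loss(
    denoise: Callable[[Vector, Optional[str]], Vector],
    x_target: Vector,   # ground-truth interior image (flattened)
    c_target: Optional[str],
    x_prior: Vector,    # image generated by the pre-trained model (flattened)
    c_prior: Optional[str],
    alpha_t: float,
    sigma_t: float,
    w_t: float,         # time-step-dependent weight
    lam: float,         # weight of the prior-knowledge regularization term
    rng: random.Random,
) -> float:
    """Assumed form: weighted reconstruction error plus a weighted prior term."""
    recon_target = denoise(add_noise(x_target, alpha_t, sigma_t, rng), c_target)
    recon_prior = denoise(add_noise(x_prior, alpha_t, sigma_t, rng), c_prior)
    target_term = w_t * mse(recon_target, x_target)
    prior_term = lam * w_t * mse(recon_prior, x_prior)
    return target_term + prior_term


if __name__ == "__main__":
    rng = random.Random(0)
    # Toy "denoiser" that returns its input unchanged; a real model would be a network.
    identity = lambda z, c: z
    x = [0.1, 0.5, 0.9, 0.3]
    x_pr = [0.2, 0.4, 0.6, 0.8]
    print(composite_loss(identity, x, "bedroom", x_pr, "interior", 0.9, 0.4, 1.0, 0.5, rng))
```

With `lam` set to zero the sketch reduces to an ordinary single-term reconstruction loss, which is one way to read the contrast the paper draws with single-objective tuning.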

2.4. Model Fine-Tuning

2.5. Generating Designs Using Fine-Tuned Models

2.6. Experimental Configuration and Reproducibility

2.7. Validation Method

3. Results

3.1. Family Lifecycle and Corresponding Space-User Requirements

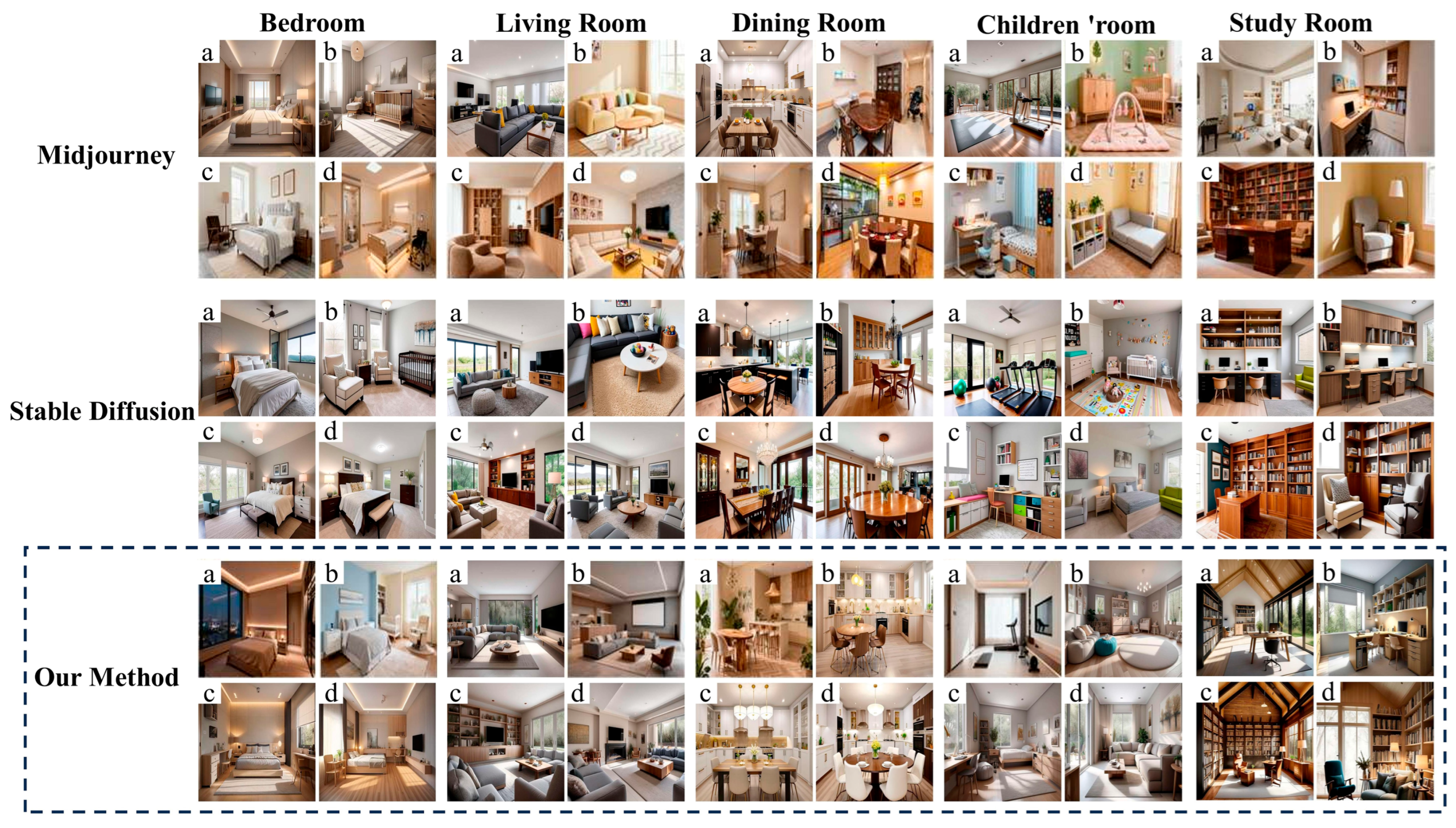

3.2. Generating Interior Visualizations for Different Spaces Using Three Approaches

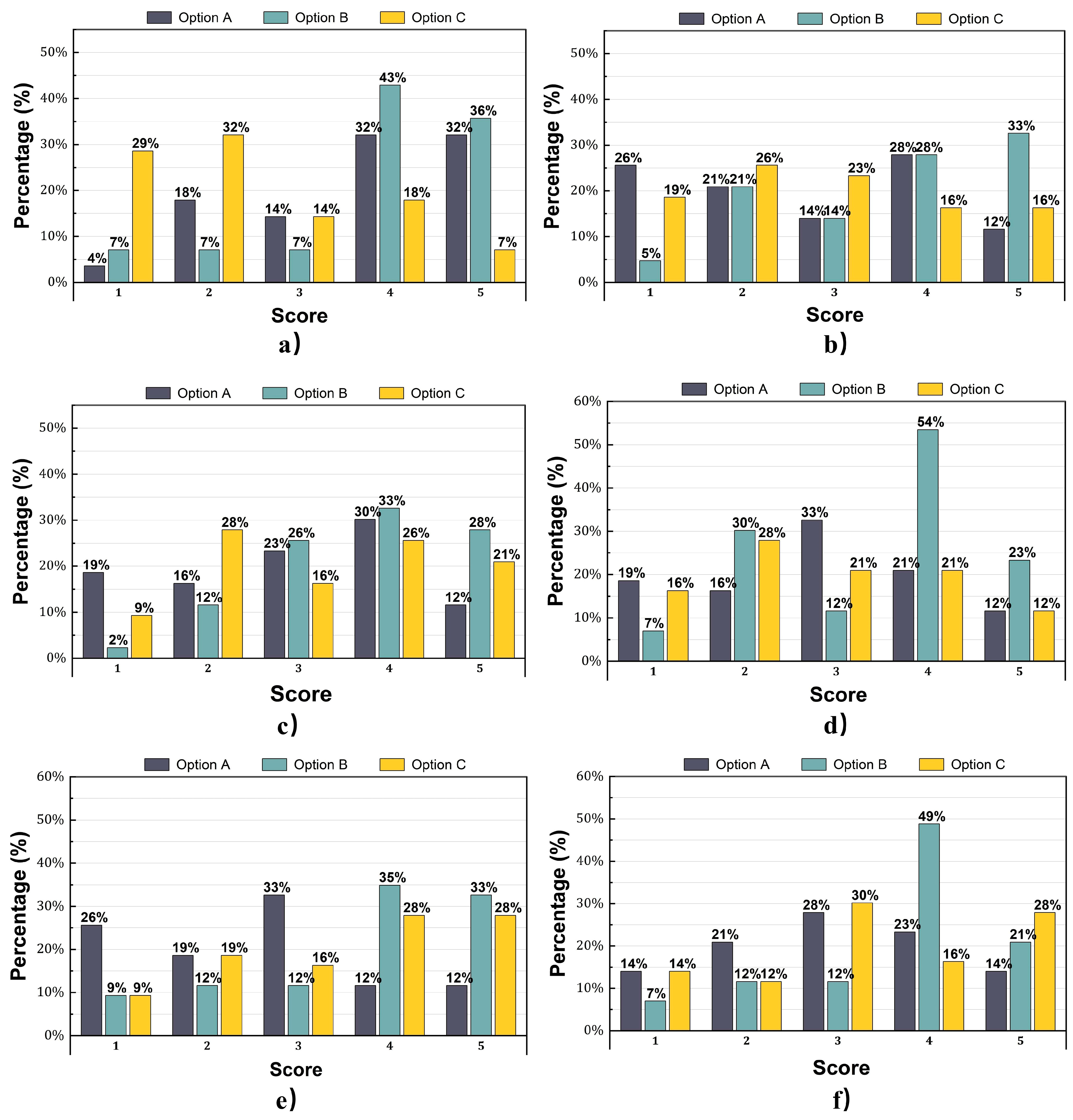

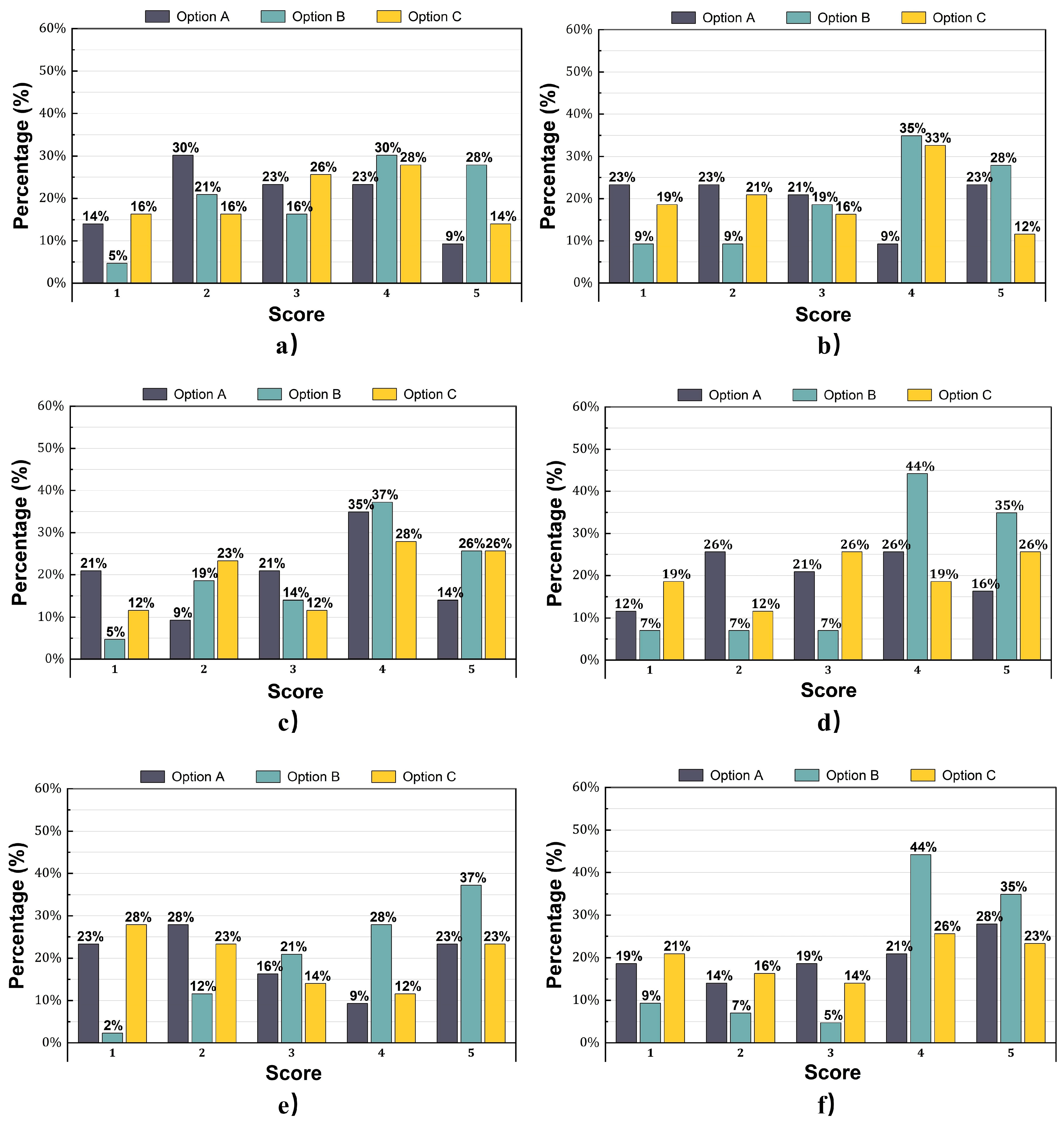

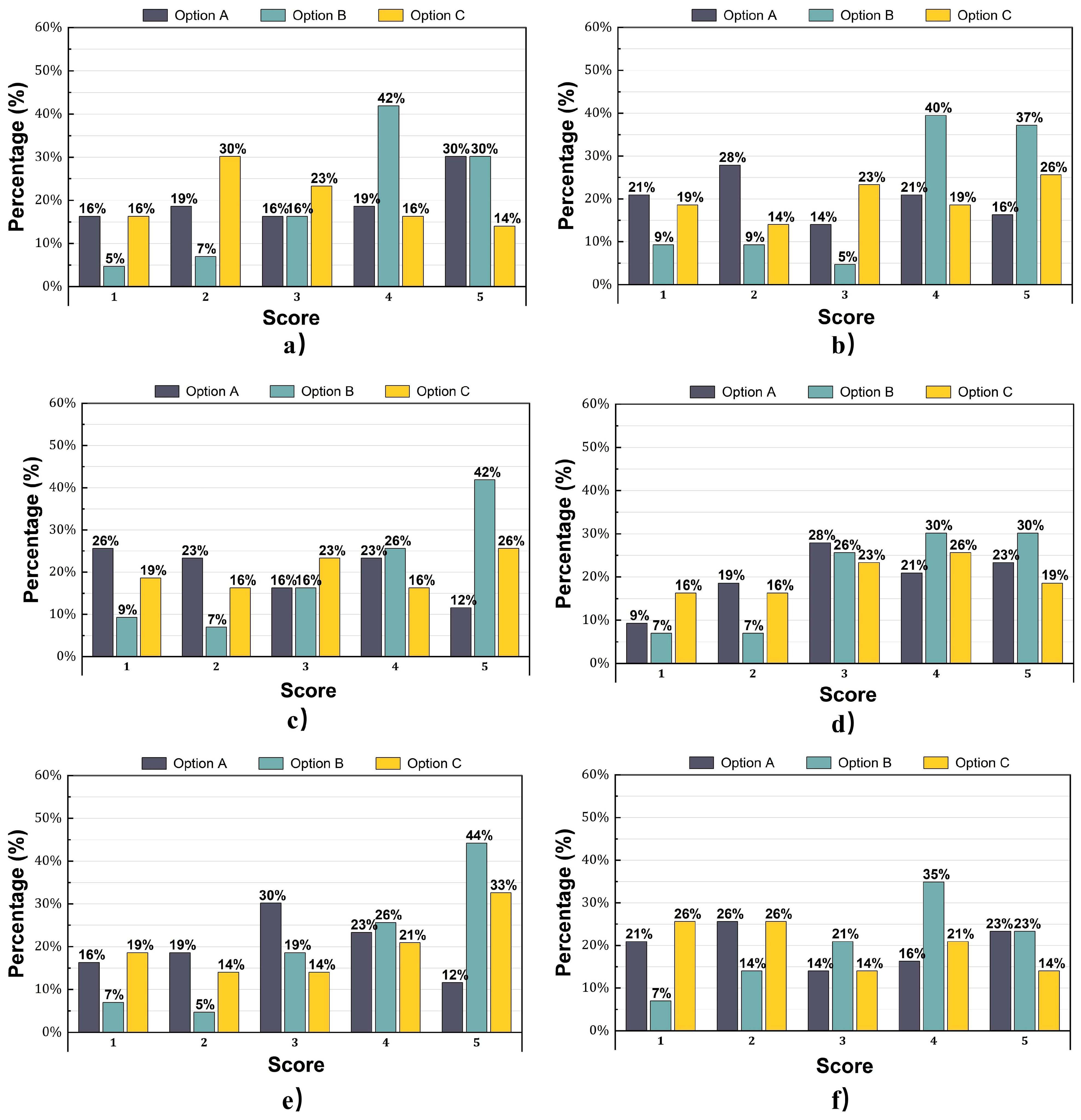

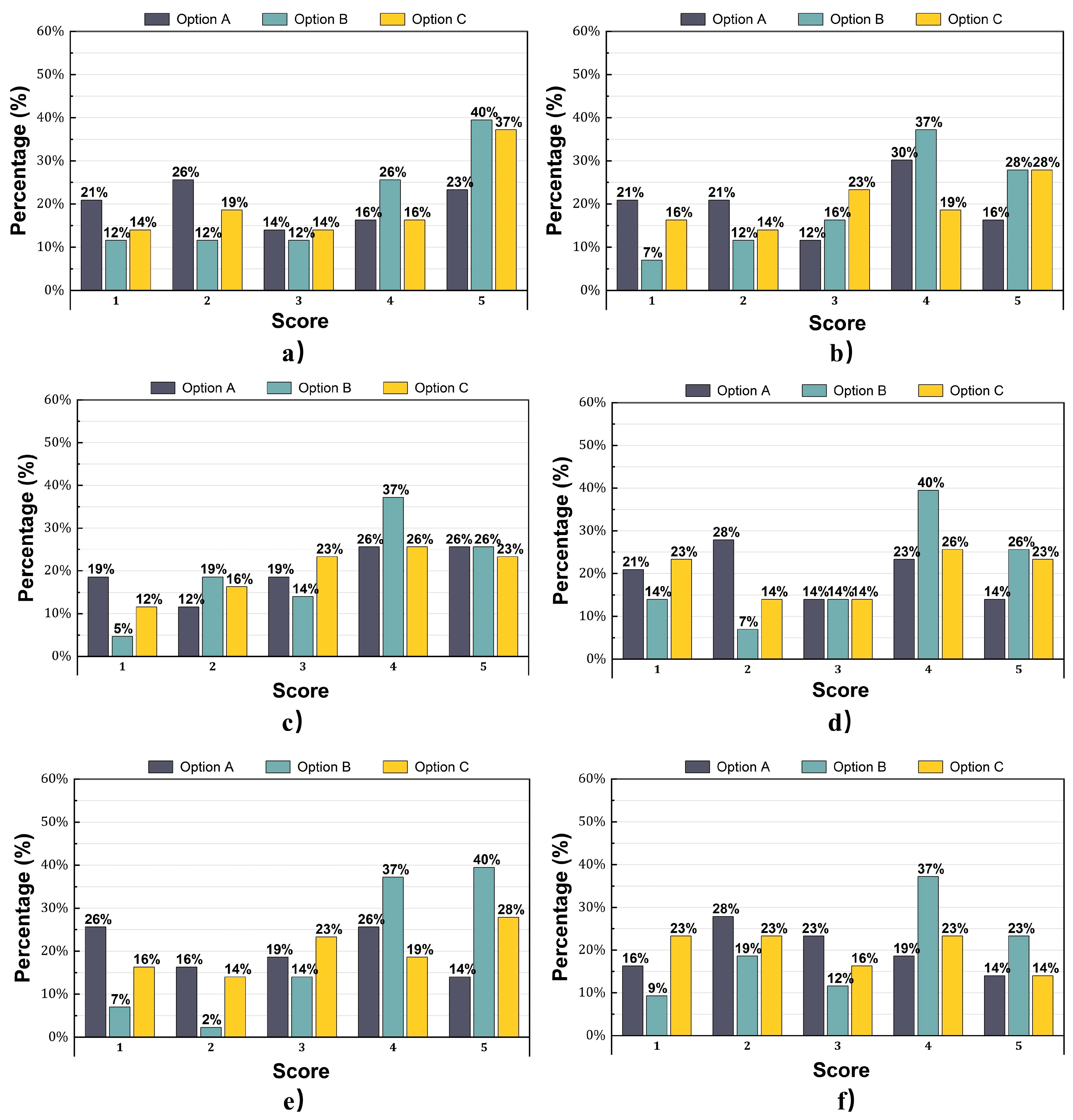

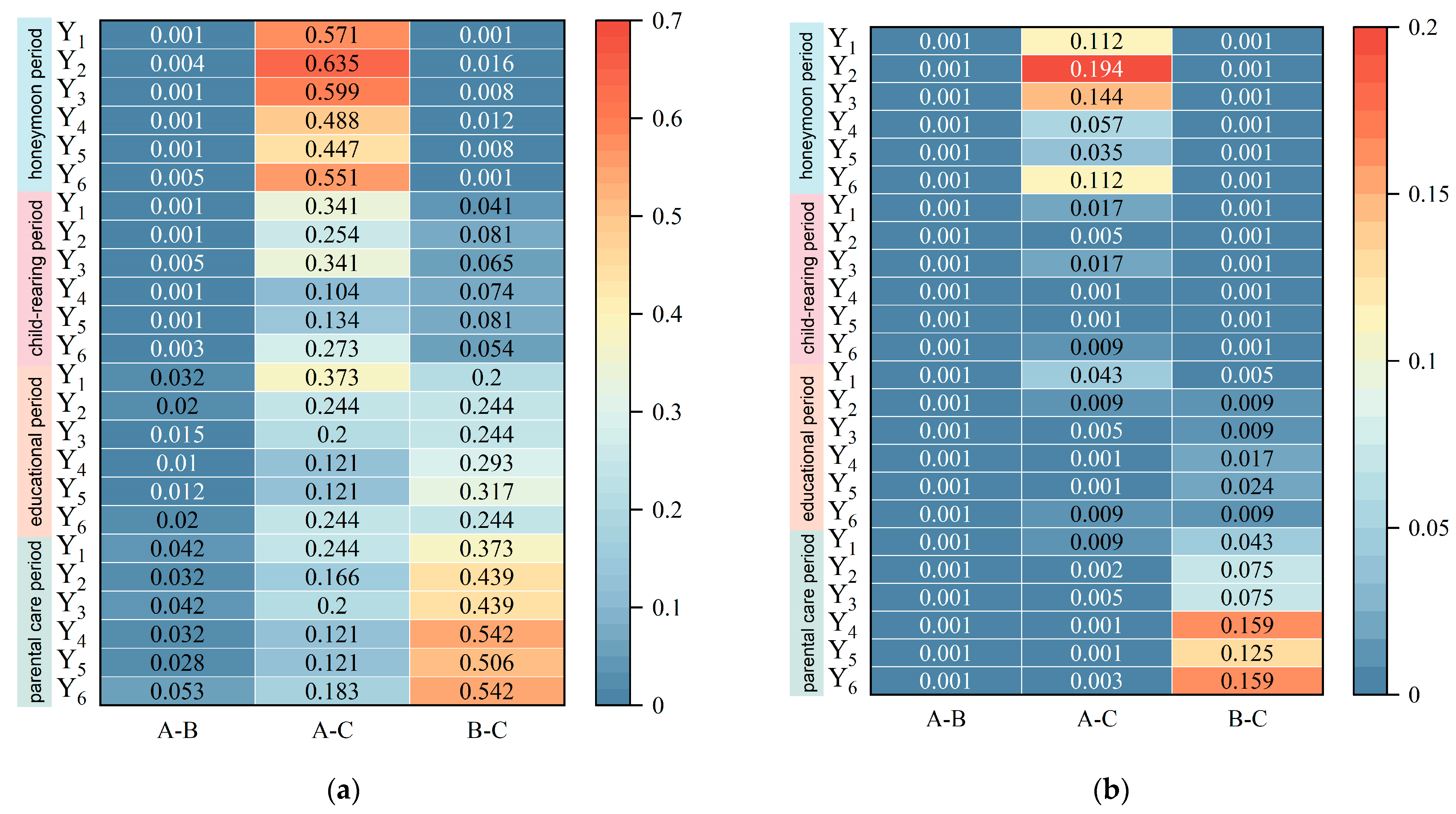

3.3. Validation and Results

- Holding a professional degree or equivalent qualification in a design-related discipline;

- Possessing at least five years of professional or academic experience;

- Having prior engagement with residential or spatial layout design projects.

4. Discussion

4.1. Performance Analysis and Lifecycle Implications

4.2. Practical Implications, Limitations, and Future Directions

4.3. Literature Comparison and Research Positioning

- It introduces Nov-13, a lifecycle-responsive interior dataset linking user behavior, spatial function, and aesthetic attributes;

- It refines diffusion-based generation via a composite loss function that balances functional, emotional, and behavioral fidelity;

- It implements a dual evaluation scheme, combining qualitative expert assessment with quantitative statistical analysis.

Together, these innovations yield a mean adaptability score of 79.07% across lifecycle stages, providing empirical support for the model’s capacity for adaptive residential generation.

Human-Centered and Neurodesign Perspectives

4.4. Limitations and Future Work

- Quantitative spatial performance evaluation, integrating metrics such as space syntax connectivity, daylight accessibility, and circulation efficiency into the generative loss function.

- Usability and perceptual studies, engaging real users through immersive or participatory design sessions to assess behavioral realism and emotional resonance.

- Cross-cultural validation, expanding the dataset to include diverse dwelling typologies and socio-spatial norms, thereby enhancing external validity and global relevance.

5. Conclusions

- The newly developed Nov-13 dataset and the composite loss function improved the model’s ability to capture user behavior, spatial adaptability, and lifecycle responsiveness. The dataset contains over 22,000 annotated images linking core residential spaces with user demand categories, enabling behavior-aware generation with high internal consistency.

- Comparative evaluation with Stable Diffusion and Midjourney showed that the proposed model achieves superior performance across quantitative and qualitative dimensions. Across four lifecycle stages and six evaluation criteria, the model achieved an average matching accuracy of 79.07%, indicating strong responsiveness and functional coherence.

- Qualitative analysis further demonstrated that Nev reliably produces layouts aligned with user intent and daily behavioral logic, underscoring its applicability to human-centered and adaptive housing design.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| Framework | Core Mechanism | Functional Adaptability | Lifecycle Sensitivity | Controllability | Limitation |

|---|---|---|---|---|---|

| ControlNet [64] | Structural condition control | Low | None | High | Focused on geometry, lacks functional reasoning |

| FloorDiffusion [22] | Floor plan diffusion with semantic layout | Medium | Low | Medium | Limited adaptability to family transitions |

| LayoutDM [65] | Diffusion for spatial organization | Medium | Low | Medium | Static typologies only |

| This Study (Nev) | Composite loss with lifecycle-driven fine-tuning | High | High | High | None |

| Serial Number | Parameter | Meaning | Parameter Value Setting |
|---|---|---|---|
| 1 | Steps | Number of denoising iterations in the latent space, which directly affects image quality and generation time. Architectural scenes are detail-rich and information-dense, so the step count is moderately increased to improve the rendering of details. | 30 |
| 2 | Sampler | Determines the denoising strategy during image generation. For architectural design applications, samplers with good convergence are preferred to ensure high-quality output. | DPM++ 2M |
| 3 | CFG Scale | Balances how closely the image matches the prompt against image quality; it should be adjusted to an appropriate range for the specific task. | 7 |
| 4 | Width and height | Sets the size of the latent-space noise map. For architectural design projects, a size that is too small often cannot capture sufficient visual information, while a size that is too large increases computational cost and reduces generation efficiency. | 768 × 512 |
| 5 | Seed | Determines the random noise drawn from the latent space before iteration. Different seeds produce different results across generation batches, even when all other parameters remain the same. | 2386705101 |
| 6 | Repeat | The number of times each image is learned. | 20~50 |
| 7 | Batch size | The number of training samples processed simultaneously in a single iteration during model optimization. | 1 is recommended for GPUs with 12 GB of memory or less; 2 for 16 GB; 4 for more than 24 GB |
| 8 | ControlNet model | Preprocesses input drafts and guides the composition and details of the generated content. Mainstream models for architectural scenes include Canny, Lineart, Depth, SEG, and Scribble. | none |
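
The parameter values in the table above can be collected into a small configuration object. The following is a minimal sketch: the class and function names (`GenerationSettings`, `recommended_batch_size`) are hypothetical and not tied to any particular toolkit, and the batch-size thresholds between the three memory sizes named in the table (and the treatment of exactly 24 GB) are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GenerationSettings:
    """Inference settings as listed in the parameter table."""
    steps: int = 30
    sampler: str = "DPM++ 2M"
    cfg_scale: float = 7.0
    width: int = 768
    height: int = 512
    seed: int = 2386705101


def recommended_batch_size(vram_gb: float) -> int:
    """Map GPU memory to a training batch size.

    The table names three anchor points (12 GB or less, 16 GB, above 24 GB);
    how values in between are handled is an assumption of this sketch.
    """
    if vram_gb >= 24:
        return 4
    if vram_gb >= 16:
        return 2
    return 1


if __name__ == "__main__":
    settings = GenerationSettings()
    print(settings)
    for vram in (8, 12, 16, 24):
        print(vram, "GB ->", recommended_batch_size(vram))
```

Fixing the seed alongside the other settings is what makes a generated image repeatable across runs, which is why it is stored in the same object here.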

| Evaluation Dimension | (I) Method | (J) Method | Mean Difference (I–J) | Standard Error | 95% CI Lower Limit | 95% CI Upper Limit |
|---|---|---|---|---|---|---|
| User Requirements Translation | Option A | Option B | −0.63 * | 0.21 | −1.05 | −0.21 |
| | Option A | Option C | 0.12 | 0.21 | −0.30 | 0.54 |
| | Option B | Option C | 0.75 * | 0.21 | 0.33 | 1.17 |
| Periodic Spatial Response | Option A | Option B | −0.61 * | 0.21 | −1.03 | −0.19 |
| | Option A | Option C | −0.10 | 0.21 | −0.52 | 0.32 |
| | Option B | Option C | 0.51 * | 0.21 | 0.09 | 0.93 |
| Style Consistency | Option A | Option B | −0.67 * | 0.21 | −1.09 | −0.25 |
| | Option A | Option C | −0.11 | 0.21 | −0.53 | 0.31 |
| | Option B | Option C | 0.56 * | 0.21 | 0.14 | 0.98 |
| Functional Rationality | Option A | Option B | −0.65 * | 0.20 | −1.05 | −0.25 |
| | Option A | Option C | −0.14 | 0.20 | −0.54 | 0.26 |
| | Option B | Option C | 0.51 * | 0.20 | 0.11 | 0.91 |
| Detail Integrity | Option A | Option B | −0.72 * | 0.21 | −1.14 | −0.30 |
| | Option A | Option C | −0.16 | 0.21 | −0.58 | 0.26 |
| | Option B | Option C | 0.56 * | 0.21 | 0.14 | 0.98 |
| Overall Design Quality | Option A | Option B | −0.58 * | 0.20 | −0.98 | −0.18 |
| | Option A | Option C | 0.12 | 0.20 | −0.28 | 0.52 |
| | Option B | Option C | 0.70 * | 0.20 | 0.30 | 1.10 |
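
The interval limits in the table above can be cross-checked from the mean difference and standard error. In the rows used below, the reported limits equal the mean difference plus or minus twice the standard error; that multiplier of 2.0 is inferred from the reported numbers and is not stated in the source. The sketch also shows the usual reading that a difference is distinguishable from zero when its interval excludes zero. Function names are hypothetical.

```python
from typing import List, Tuple


def confidence_interval(mean_diff: float, std_err: float, multiplier: float = 2.0) -> Tuple[float, float]:
    """Return (lower, upper) as mean_diff -/+ multiplier * std_err.

    multiplier=2.0 is an assumption inferred from the reported limits.
    """
    half_width = multiplier * std_err
    return mean_diff - half_width, mean_diff + half_width


def excludes_zero(lower: float, upper: float) -> bool:
    """True when the interval lies entirely above or entirely below zero."""
    return lower > 0 or upper < 0


# (comparison, mean difference, standard error) for User Requirements Translation
ROWS: List[Tuple[str, float, float]] = [
    ("Option A vs Option B", -0.63, 0.21),
    ("Option A vs Option C", 0.12, 0.21),
    ("Option B vs Option C", 0.75, 0.21),
]

if __name__ == "__main__":
    for label, diff, se in ROWS:
        lower, upper = confidence_interval(diff, se)
        print(f"{label}: [{lower:.2f}, {upper:.2f}] excludes zero: {excludes_zero(lower, upper)}")
```

The same check can be applied row by row to the other pairwise comparison tables by swapping in their mean differences and standard errors.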

| Evaluation Dimension | (I) Method | (J) Method | Mean Difference (I–J) | Standard Error | 95% CI Lower Limit | 95% CI Upper Limit |
|---|---|---|---|---|---|---|
| User Requirements Translation | Option A | Option B | −0.60 * | 0.20 | −1.00 | −0.20 |
| | Option A | Option C | −0.19 | 0.20 | −0.59 | 0.21 |
| | Option B | Option C | 0.41 * | 0.20 | 0.01 | 0.81 |
| Periodic Spatial Response | Option A | Option B | −0.58 * | 0.20 | −0.98 | −0.18 |
| | Option A | Option C | −0.23 | 0.20 | −0.63 | 0.17 |
| | Option B | Option C | 0.35 * | 0.20 | −0.05 | 0.75 |
| Style Consistency | Option A | Option B | −0.56 * | 0.20 | −0.96 | −0.16 |
| | Option A | Option C | −0.19 | 0.20 | −0.59 | 0.21 |
| | Option B | Option C | 0.37 * | 0.20 | −0.03 | 0.77 |
| Functional Rationality | Option A | Option B | −0.65 * | 0.19 | −1.03 | −0.27 |
| | Option A | Option C | −0.31 | 0.19 | −0.69 | 0.07 |
| | Option B | Option C | 0.34 * | 0.19 | −0.04 | 0.72 |
| Detail Integrity | Option A | Option B | −0.65 * | 0.20 | −1.05 | −0.25 |
| | Option A | Option C | −0.30 | 0.20 | −0.70 | 0.10 |
| | Option B | Option C | 0.35 * | 0.20 | −0.05 | 0.75 |
| Overall Design Quality | Option A | Option B | −0.58 * | 0.19 | −0.96 | −0.20 |
| | Option A | Option C | −0.21 | 0.19 | −0.59 | 0.17 |
| | Option B | Option C | 0.37 * | 0.19 | −0.01 | 0.75 |

| Evaluation Dimension | (I) Method | (J) Method | Mean Difference (I–J) | Standard Error | 95% CI Lower Limit | 95% CI Upper Limit |
|---|---|---|---|---|---|---|
| User Requirements Translation | Option A | Option B | −0.39 * | 0.18 | −0.75 | −0.03 |
| | Option A | Option C | −0.16 | 0.18 | −0.52 | 0.20 |
| | Option B | Option C | 0.23 | 0.18 | −0.13 | 0.59 |
| Periodic Spatial Response | Option A | Option B | −0.42 * | 0.18 | −0.78 | −0.06 |
| | Option A | Option C | −0.21 | 0.18 | −0.57 | 0.15 |
| | Option B | Option C | 0.21 | 0.18 | −0.15 | 0.57 |
| Style Consistency | Option A | Option B | −0.44 * | 0.18 | −0.80 | −0.08 |
| | Option A | Option C | −0.23 | 0.18 | −0.59 | 0.13 |
| | Option B | Option C | 0.21 | 0.18 | −0.15 | 0.57 |
| Functional Rationality | Option A | Option B | −0.47 * | 0.18 | −0.83 | −0.11 |
| | Option A | Option C | −0.28 | 0.18 | −0.64 | 0.08 |
| | Option B | Option C | 0.19 | 0.18 | −0.17 | 0.55 |
| Detail Integrity | Option A | Option B | −0.46 * | 0.18 | −0.82 | −0.10 |
| | Option A | Option C | −0.28 | 0.18 | −0.64 | 0.08 |
| | Option B | Option C | 0.18 | 0.18 | −0.18 | 0.54 |
| Overall Design Quality | Option A | Option B | −0.42 * | 0.18 | −0.78 | −0.06 |
| | Option A | Option C | −0.21 | 0.18 | −0.57 | 0.15 |
| | Option B | Option C | 0.21 | 0.18 | −0.15 | 0.57 |

| Evaluation Dimension | (I) Method | (J) Method | Mean Difference (I–J) | Standard Error | 95% CI Lower Limit | 95% CI Upper Limit |
|---|---|---|---|---|---|---|
| User Requirements Translation | Option A | Option B | −0.37 * | 0.18 | −0.73 | −0.01 |
| | Option A | Option C | −0.21 | 0.18 | −0.57 | 0.15 |
| | Option B | Option C | 0.16 | 0.18 | −0.20 | 0.52 |
| Periodic Spatial Response | Option A | Option B | −0.39 * | 0.18 | −0.75 | −0.03 |
| | Option A | Option C | −0.25 | 0.18 | −0.61 | 0.11 |
| | Option B | Option C | 0.14 | 0.18 | −0.22 | 0.50 |
| Style Consistency | Option A | Option B | −0.37 * | 0.18 | −0.73 | −0.01 |
| | Option A | Option C | −0.23 | 0.18 | −0.59 | 0.13 |
| | Option B | Option C | 0.14 | 0.18 | −0.22 | 0.50 |
| Functional Rationality | Option A | Option B | −0.39 * | 0.18 | −0.75 | −0.03 |
| | Option A | Option C | −0.28 | 0.18 | −0.64 | 0.08 |
| | Option B | Option C | 0.11 | 0.18 | −0.25 | 0.47 |
| Detail Integrity | Option A | Option B | −0.40 * | 0.18 | −0.76 | −0.04 |
| | Option A | Option C | −0.28 | 0.18 | −0.64 | 0.08 |
| | Option B | Option C | 0.12 | 0.18 | −0.24 | 0.48 |
| Overall Design Quality | Option A | Option B | −0.35 * | 0.18 | −0.71 | 0.01 |
| | Option A | Option C | −0.24 | 0.18 | −0.60 | 0.12 |
| | Option B | Option C | 0.11 | 0.18 | −0.25 | 0.47 |

References

1. Menninghaus, W.; Wagner, V.; Wassiliwizky, E.; Schindler, I.; Hanich, J.; Jacobsen, T.; Koelsch, S. What are aesthetic emotions? Psychol. Rev. 2019, 126, 171–195.
2. Bao, Z.; Laovisutthichai, V.; Tan, T.; Wang, Q.; Lu, W. Design for manufacture and assembly (DfMA) enablers for offsite interior design and construction. Build. Res. Inf. 2022, 50, 325–338.
3. Sinha, M.; Fukey, L.N. Sustainable Interior Designing in the 21st Century—A Review. ECS Trans. 2022, 107, 6801–6823.
4. Cao, H.; Tan, C.; Gao, Z.; Xu, Y.; Chen, G.; Heng, P.-A.; Li, S.Z. A survey on generative diffusion models. IEEE Trans. Knowl. Data Eng. 2024, 36, 2814–2830.
5. Qiu, Z.; Liu, J.; Xia, Y.; Qi, H.; Liu, P. Text Semantics to Controllable Design: A Residential Layout Generation Method Based on Stable Diffusion Model. Dev. Built Environ. 2025, 23, 100691.
6. Ramesh, A.; Dhariwal, P.; Nichol, A.; Chu, C.; Chen, M. Hierarchical text-conditional image generation with clip latents. arXiv 2022, arXiv:2204.06125.
7. Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 10684–10695.
8. Borji, A. Generated faces in the wild: Quantitative comparison of stable diffusion, midjourney and dall-e 2. arXiv 2022, arXiv:2210.00586.
9. Pérez, R.I.P. Blurring the Boundaries Between Real and Artificial in Architecture and Urban Design Through the Use of Artificial Intelligence. Ph.D. Thesis, Universidade da Coruña, A Coruña, Spain, 2017.
10. Dutta, K.; Sarthak, S. Architectural space planning using evolutionary computing approaches: A review. Artif. Intell. Rev. 2011, 36, 311–321.
11. Baltus, V.; Žebrauskas, T. Parametric design concept in architectural studies. Archit. Urban Plan. 2019, 15, 96–100.
12. Mora, R.; Bédard, C.; Rivard, H. A geometric modelling framework for conceptual structural design from early digital architectural models. Adv. Eng. Inform. 2008, 22, 254–270.
13. Zhu, S.; Yu, T.; Xu, T.; Chen, H.; Dustdar, S.; Gigan, S.; Gunduz, D.; Hossain, E.; Jin, Y.; Lin, F.; et al. Intelligent computing: The latest advances, challenges, and future. Intell. Comput. 2023, 2, 0006.
14. Brown, A. Computing, AI and architecture: What destinations are we moving to? Int. J. Arch. Comput. 2025, 23, 333–338.
15. Jang, S.; Roh, H.; Lee, G. Generative AI in architectural design: Application, data, and evaluation methods. Autom. Constr. 2025, 174, 106174.
16. Wen, W.; Hong, L.; Xueqiang, M. Application of fractals in architectural shape design. In Proceedings of the 2010 IEEE 2nd Symposium on Web Society, Beijing, China, 16–17 August 2010; pp. 185–190.
17. Sharafi, P.; Teh, L.H.; Hadi, M.N. Conceptual design optimization of rectilinear building frames: A knapsack problem approach. Eng. Optim. 2015, 47, 1303–1323.
18. Yi, H.; Kim, I. Differential evolutionary cuckoo-search-integrated tabu-adaptive pattern search (DECS-TAPS): A novel multihybrid variant of swarm intelligence and evolutionary algorithm in architectural design optimization and automation. J. Comput. Des. Eng. 2022, 9, 2103–2133.
19. Gan, W.; Zhao, Z.; Wang, Y.; Zou, Y.; Zhou, S.; Wu, Z. UDGAN: A new urban design inspiration approach driven by using generative adversarial networks. J. Comput. Des. Eng. 2024, 11, 305–324.
20. Chang, S.; Dong, W.; Jun, H. Use of electroencephalogram and long short-term memory networks to recognize design preferences of users toward architectural design alternatives. J. Comput. Des. Eng. 2020, 7, 551–562.
21. Hu, X.; Zheng, H.; Lai, D. Prediction and optimization of daylight performance of AI-generated residential floor plans. Build. Environ. 2025, 279, 113054.
22. Shim, J.; Moon, J.; Kim, H.; Hwang, E. FloorDiffusion: Diffusion model-based conditional floorplan image generation method using parameter-efficient fine-tuning and image inpainting. J. Build. Eng. 2024, 95, 110320.
23. Yu, S.; Li, J.; Zheng, H.; Ding, H. Research on Predicting Building Façade Deterioration in Winter Cities Using Diffusion Model. J. Build. Eng. 2025, 111, 113365.
24. Wang, L.; Liu, J.; Zeng, Y.; Cheng, G.; Hu, H.; Hu, J.; Huang, X. Automated building layout generation using deep learning and graph algorithms. Autom. Constr. 2023, 154, 105036.
25. Li, H.; Gu, J.; Koner, R.; Sharifzadeh, S.; Tresp, V. Do dall-e and flamingo understand each other? In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 1999–2010.
26. Park, B.H.; Hyun, K.H. Analysis of pairings of colors and materials of furnishings in interior design with a data-driven framework. J. Comput. Des. Eng. 2022, 9, 2419–2438.
27. Chen, J.; Shao, Z.; Hu, B. Generating interior design from text: A new diffusion model-based method for efficient creative design. Buildings 2023, 13, 1861.
28. Ahmed, J.; Nadeem, G.; Majeed, M.K.; Ghaffar, R.; Baig, A.K.K.; Shah, S.R.; Razzaq, R.A.; Irfan, T. The Rise of Multimodal AI: A Quick Review of GPT-4V and Gemini. Spectr. Eng. Sci. 2025, 3, 778–786.
29. Kendall, S.H. Residential Architecture as Infrastructure: Open Building in Practice; Routledge: London, UK, 2021.
30. Leupen, B.; Heijne, R.; van Zwol, J. Time-Based Architecture; 010 Publishers: Rotterdam, The Netherlands, 2005; Available online: https://books.google.co.jp/books?id=xCgIpz8FCwEC (accessed on 15 October 2025).
31. Till, J.; Schneider, T. Flexible Housing; Routledge: London, UK, 2016.
32. Brand, S. How Buildings Learn: What Happens After They’re Built; Penguin Publishing Group: London, UK, 1995; Available online: https://books.google.co.jp/books?id=zkgRgdVN2GIC (accessed on 15 December 2024).
33. Xie, X.; Ding, W. An interactive approach for generating spatial architecture layout based on graph theory. Front. Archit. Res. 2023, 12, 630–650.
34. Magdziak, M. Flexibility and adaptability of the living space to the changing needs of residents. IOP Conf. Ser. Mater. Sci. Eng. 2019, 471, 072011.
35. Gober, P. Urban housing demography. Prog. Hum. Geogr. 1992, 16, 171–189.
36. Estaji, H. A review of flexibility and adaptability in housing design. Int. J. Contemp. Archit. 2017, 4, 37–49.
37. Onatayo, D.; Onososen, A.; Oyediran, A.O.; Oyediran, H.; Arowoiya, V.; Onatayo, E. Generative AI applications in architecture, engineering, and construction: Trends, implications for practice, education & imperatives for upskilling—A review. Architecture 2024, 4, 877–902.
38. Xu, J.; Liu, X.; Wu, Y.; Tong, Y.; Li, Q.; Ding, M.; Tang, J.; Dong, Y. Imagereward: Learning and evaluating human preferences for text-to-image generation. Adv. Neural Inf. Process. Syst. 2023, 36, 15903–15935.
39. Available online: https://www.znzmo.com (accessed on 15 December 2024).
40. Available online: https://www.google.com (accessed on 15 December 2024).
41. Available online: https://www.bing.com (accessed on 15 December 2024).
42. Available online: https://creativecommons.org/licenses/by-nc/4.0/ (accessed on 3 November 2025).
43. Cohen, J. A coefficient of agreement for nominal scales. Educ. Psychol. Meas. 1960, 20, 37–46.
44. Chen, J.; Shao, Z.; Zheng, X.; Zhang, K.; Yin, J. Integrating aesthetics and efficiency: AI-driven diffusion models for visually pleasing interior design generation. Sci. Rep. 2024, 14, 3496.
45. Chen, J.; Wang, D.; Shao, Z.; Zhang, X.; Ruan, M.; Li, H.; Li, J. Using artificial intelligence to generate master-quality architectural designs from text descriptions. Buildings 2023, 13, 2285.
46. Chen, X.; Kang, S.B.; Xu, Y.-Q.; Dorsey, J.; Shum, H.-Y. Sketching reality: Realistic interpretation of architectural designs. ACM Trans. Graph. TOG 2008, 27, 11.
47. Wang, B.; Zhu, Y.; Chen, L.; Liu, J.; Sun, L.; Childs, P. A study of the evaluation metrics for generative images containing combinational creativity. AI EDAM 2023, 37, e11.
48. Arceo, A.; Touchie, M.; O’Brien, W.; Hong, T.; Malik, J.; Mayer, M.; Peters, T.; Saxe, S.; Tamas, R.; Villeneuve, H.; et al. Ten questions concerning housing sufficiency. Build. Environ. 2025, 277, 112941.
49. Faul, F.; Erdfelder, E.; Buchner, A.; Lang, A.-G. Statistical power analyses using G*Power 3.1: Tests for correlation and regression analyses. Behav. Res. Methods 2009, 41, 1149–1160.
50. Shi, M.; Seo, J.; Cha, S.H.; Xiao, B.; Chi, H.-L. Generative AI-powered architectural exterior conceptual design based on the design intent. J. Comput. Des. Eng. 2024, 11, 125–142.
51. Ståhle, L.; Wold, S. Analysis of variance (ANOVA). Chemom. Intell. Lab. Syst. 1989, 6, 259–272.
52. Shi, Z.; Jin, N.; Chen, D.; Ai, D. A comparison study of semantic segmentation networks for crack detection in construction materials. Constr. Build. Mater. 2024, 414, 134950.
53. Napierala, M.A. What Is the Bonferroni Correction? AAOS Now 2012, 40–41. Available online: https://link.gale.com/apps/doc/A288979427/HRCA?u=anon~f4b0666f&sid=googleScholar&xid=3f3f1bf7 (accessed on 15 October 2025).
54. GM, H.; Gourisaria, M.K.; Pandey, M.; Rautaray, S.S. A comprehensive survey and analysis of generative models in machine learning. Comput. Sci. Rev. 2020, 38, 100285.
55. Miao, Y.; Koenig, R.; Knecht, K. The development of optimization methods in generative urban design: A review. In Proceedings of the 11th Annual Symposium on Simulation for Architecture and Urban Design, Vienna, Austria, 25–27 May 2020; pp. 1–8. Available online: https://www.researchgate.net/publication/344460745_The_Development_of_Optimization_Methods_in_Generative_Urban_Design_A_Review (accessed on 15 October 2025).
56. Li, C.; Zhang, C.; Cho, J.; Waghwase, A.; Lee, L.-H.; Rameau, F.; Yang, Y.; Bae, S.-H.; Hong, C.S. Generative ai meets 3d: A survey on text-to-3d in aigc era. arXiv 2023, arXiv:2305.06131.
57. Zhang, C.; Zhang, C.; Zhang, M.; Kweon, I.S. Text-to-image diffusion models in generative ai: A survey. arXiv 2023, arXiv:2303.07909.
58. Sussman, A.; Hollander, J. Cognitive Architecture: Designing for How We Respond to the Built Environment; Routledge: New York, NY, USA, 2021.
59. Lavdas, A.A.; Sussman, A.; Woodworth, A.V. Routledge Handbook of Neuroscience and the Built Environment; Taylor & Francis: London, UK, 2025; Available online: https://books.google.co.jp/books?id=8fuEEQAAQBAJ (accessed on 15 October 2025).
60. Taylor, R.P. The Potential of Biophilic Fractal Designs to Promote Health and Performance: A Review of Experiments and Applications. Sustainability 2021, 13, 823.
61. Gibson, J.J. The ecological approach to the visual perception of pictures. Leonardo 1978, 11, 227–235. Available online: https://muse.jhu.edu/pub/6/article/599064/summary (accessed on 15 October 2025).
62. Valentine, C. The impact of architectural form on physiological stress: A systematic review. Front. Comput. Sci. 2024, 5, 1237531.
63. Alexander, C. A Pattern Language: Towns, Buildings, Construction; Oxford University Press: New York, NY, USA, 1977; Available online: https://books.google.co.jp/books?id=hwAHmktpk5IC (accessed on 15 October 2025).
64. Zhao, S.; Chen, D.; Chen, Y.-C.; Bao, J.; Hao, S.; Yuan, L.; Wong, K.-Y.K. Uni-controlnet: All-in-one control to text-to-image diffusion models. Adv. Neural Inf. Process. Syst. 2023, 36, 11127–11150. Available online: https://proceedings.neurips.cc/paper_files/paper/2023/file/2468f84a13ff8bb6767a67518fb596eb-Paper-Conference.pdf (accessed on 15 October 2025).
65. Inoue, N.; Kikuchi, K.; Simo-Serra, E.; Otani, M.; Yamaguchi, K. Layoutdm: Discrete diffusion model for controllable layout generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 10167–10176.

| Spatial Function | Functional and Physical Needs | Behavioral and Activity Needs | Psychological and Emotional Needs | Social and Cultural Needs | Health and Sustainable Needs | Total |

|---|---|---|---|---|---|---|

| Bedroom | 1099 | 1167 | 803 | 760 | 975 | 4804 |

| Living Room | 1590 | 1078 | 1459 | 897 | 1130 | 6154 |

| Dining Room | 734 | 679 | 504 | 421 | 703 | 3041 |

| Children’s room | 747 | 707 | 732 | 679 | 863 | 3728 |

| Study Room | 1060 | 788 | 885 | 673 | 895 | 4301 |
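
The row totals in the table above, and the dataset size of over 22,000 images stated in the conclusions, can be checked with a few lines of arithmetic. The sketch below copies the counts from the table; the variable and function names are hypothetical.

```python
from typing import Dict, List

NEED_CATEGORIES: List[str] = [
    "Functional and Physical",
    "Behavioral and Activity",
    "Psychological and Emotional",
    "Social and Cultural",
    "Health and Sustainable",
]

# Image counts per space, in the column order of NEED_CATEGORIES.
COUNTS: Dict[str, List[int]] = {
    "Bedroom": [1099, 1167, 803, 760, 975],
    "Living Room": [1590, 1078, 1459, 897, 1130],
    "Dining Room": [734, 679, 504, 421, 703],
    "Children's room": [747, 707, 732, 679, 863],
    "Study Room": [1060, 788, 885, 673, 895],
}

# Totals as reported in the last column of the table.
REPORTED_TOTALS: Dict[str, int] = {
    "Bedroom": 4804,
    "Living Room": 6154,
    "Dining Room": 3041,
    "Children's room": 3728,
    "Study Room": 4301,
}


def row_totals(counts: Dict[str, List[int]]) -> Dict[str, int]:
    """Sum the need-category counts for each space."""
    return {space: sum(values) for space, values in counts.items()}


def category_totals(counts: Dict[str, List[int]]) -> Dict[str, int]:
    """Sum the counts for each need category across all spaces."""
    columns = zip(*counts.values())
    return {name: sum(col) for name, col in zip(NEED_CATEGORIES, columns)}


if __name__ == "__main__":
    totals = row_totals(COUNTS)
    for space, total in totals.items():
        print(space, total, "matches table:", total == REPORTED_TOTALS[space])
    print("Per category:", category_totals(COUNTS))
    print("Grand total:", sum(totals.values()))
```

The per-category sums also show how evenly the five need categories are represented, which is not visible from the row totals alone.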

| Spatial Function | User Needs | Prompt |

|---|---|---|

| Bedroom | romantic, spacious, king-size bed, en-suite bathroom, walk-in closet, soft lighting, luxurious | interior design photo, a spacious and romantic master bedroom, king-size bed with high-quality linen, soft ambient lighting, walk-in closet en-suite, a serene and intimate atmosphere, modern minimalist style, large window with a view |

| Living Room | open-plan, great for entertaining, large sectional sofa, projection screen, social hub, bright and airy | Interior design photo, a large open-plan living room with great visual flow to the kitchen and dining area, modern low-profile sectional sofa, a large projection screen for movie nights, ideal for social gatherings and relaxation, bright and welcoming atmosphere |

| Dining Room | open, kitchen island/breakfast bar, stylish pendant lights, seating for 4, romantic dinners, modern and chic | Interior design photo, an open dining area seamlessly connected to the kitchen featuring a central breakfast island/bar, stylish pendant lights above the island, seating for four, creating a perfect atmosphere for romantic dinners and casual entertaining, modern and chic. |

| Children’s room | flexible multi-function room, home gym, entertainment room, guest room, neutral, adaptable design | Interior design photo, a bright and flexible multi-function room, currently set up as a home gym with yoga mats and a treadmill, with potential to be a guest room or entertainment space, clean lines, neutral colors, modern and adaptable design. |

| Study Room | open plan, integrated with living area, versatile, home office/gaming room, sleek, bright, multifunctional | Interior design photo, an open-plan home office integrated with the living area, sleek built-in shelves, a modern minimalist desk for a laptop, versatile space that can also serve as a gaming or reading nook, bright and airy, promoting connectivity and relaxation. |

| Method | User Requirements Translation | Periodic Spatial Response | Style Consistency | Functional Rationality | Detail Integrity | Overall Design Quality |

|---|---|---|---|---|---|---|

| Option A | 78.57% | 53.49% | 65.11% | 65.11% | 55.81% | 65.11% |

| Option B | 85.71% | 74.41% | 86.04% | 88.37% | 78.07% | 81.40% |

| Option C | 39.28% | 55.81% | 62.79% | 53.49% | 72.09% | 74.41% |

| Method | User Requirements Translation | Periodic Spatial Response | Style Consistency | Functional Rationality | Detail Integrity | Overall Design Quality |

|---|---|---|---|---|---|---|

| Option A | 55.81% | 53.49% | 69.76% | 62.79% | 48.83% | 67.44% |

| Option B | 74.41% | 81.40% | 76.74% | 86.04% | 86.04% | 83.72% |

| Option C | 67.44% | 60.46% | 65.11% | 69.76% | 48.83% | 62.79% |

| Method | User Requirements Translation | Periodic Spatial Response | Style Consistency | Functional Rationality | Detail Integrity | Overall Design Quality |

|---|---|---|---|---|---|---|

| Option A | 65.11% | 51.16% | 51.16% | 72.09% | 65.11% | 53.49% |

| Option B | 88.37% | 81.40% | 83.72% | 86.04% | 88.37% | 79.07% |

| Option C | 53.48% | 67.44% | 65% | 67.44% | 67.44% | 48.83% |

| Option | User Requirements Translation | Periodic Spatial Response | Style Consistency | Functional Rationality | Detail Integrity | Overall Design Quality |

|---|---|---|---|---|---|---|

| Option A | 53.49% | 58.13% | 69.76% | 51.16% | 58.14% | 55.81% |

| Option B | 76.74% | 81.40% | 76.74% | 79.07% | 90.70% | 72.09% |

| Option C | 67.44% | 69.77% | 72.09% | 62.79% | 69.77% | 53.49% |

| Reference | Year Published | Lifecycle/User Adaptability | Spatial & Functional Coherence | Generative AI Model | Generative AI Evaluation Metric Classification | Remarks |

|---|---|---|---|---|---|---|

| [13] | 2023 | NA | NA | M | L | Broad review of intelligent computing advances; not focused on lifecycle or interior specifics. |

| [15] | 2025 | M | M | M | L | Review of applications, data needs, and evaluation methods; useful taxonomy but limited lifecycle-specific solutions. |

| [18] | 2022 | NA | M | NA | L | Optimization-focused method for architectural design; not a generative-AI text-to-image study. |

| [19] | 2024 | L | M | M | M | GAN-driven urban/architectural inspiration tool; emphasizes rapid alternatives rather than household adaptation. |

| [20] | 2020 | L | M | M | M | Integrates human-preference signals (EEG) into design-selection; informs personalization but not lifecycle modeling. |

| [21] | 2025 | L | L | L | M | Links AI-generated plans to environmental performance; strong on functional evaluation. |

| [22] | 2024 | L | H | H | M | Conditional diffusion for floor plans with parameter-efficient fine-tuning improves structural fidelity. |

| [23] | 2025 | L | M | M | L | Application of diffusion for façade-deterioration prediction; not interior/lifecycle focused. |

| [24] | 2023 | NA | L | L | M | Automated layout generation using DL + graph methods; strong spatial/functional focus but limited lifecycle modeling. |

| [27] | 2023 | M | H | H | H | Directly relevant: text-to-interior diffusion method; focuses on creative efficiency and visual quality more than lifecycle adaptation. |

| [37] | 2024 | M | M | M | L | Broad review of AIGC in AEC; highlights practice/education implications but not lifecycle-specific frameworks. |

| [54] | 2020 | L | L | L | L | Presents an overview of generative AI model classifications. |

| [55] | 2020 | NA | NA | L | M | Focuses on the application of GANs in architecture and urban design. |

| [56] | 2023 | L | M | M | L | A comprehensive survey on the underlying technology and applications of text-to-3D conversion. |

| [57] | 2023 | NA | NA | L | M | A review of text-to-image diffusion models. |

| This paper | 2025 | H | H | H | H | Integrates lifecycle-responsive dataset (Nov-13), composite loss, and fine-tuning; validated both qualitatively and quantitatively. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhou, Y.; Pan, Y. A Generative AI Framework for Adaptive Residential Layout Design Responding to Family Lifecycle Changes. Buildings 2025, 15, 4155. https://doi.org/10.3390/buildings15224155
