MBAV: A Positional Encoding-Based Lightweight Network for Detecting Embedded Parts in Prefabricated Composite Slabs

Abstract

1. Introduction

2. Prefabricated Components and Dataset Preparation

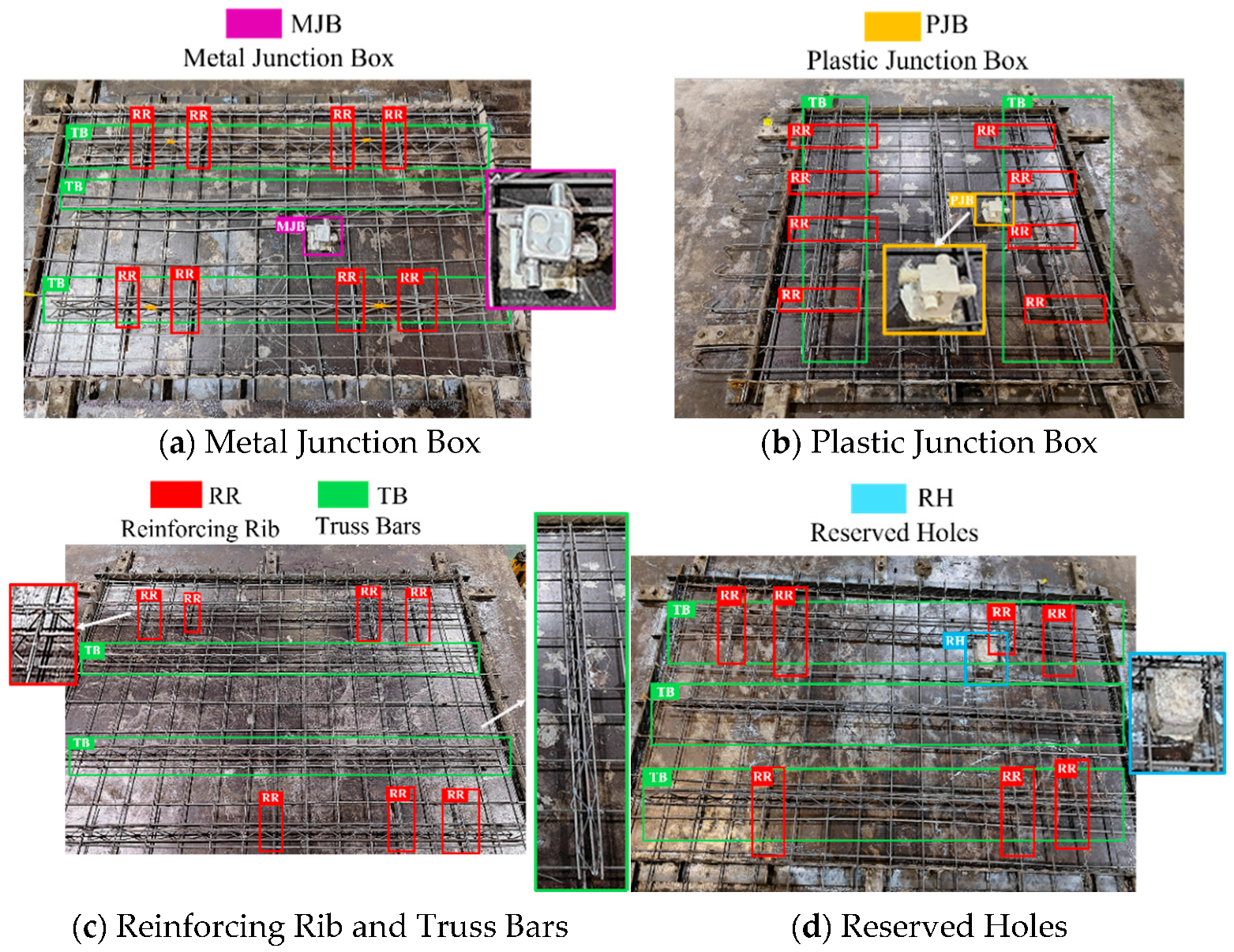

2.1. Prefabricated Slab Components

2.2. Dataset Preparation

3. Network Architecture Design for the MBAV Detection Model

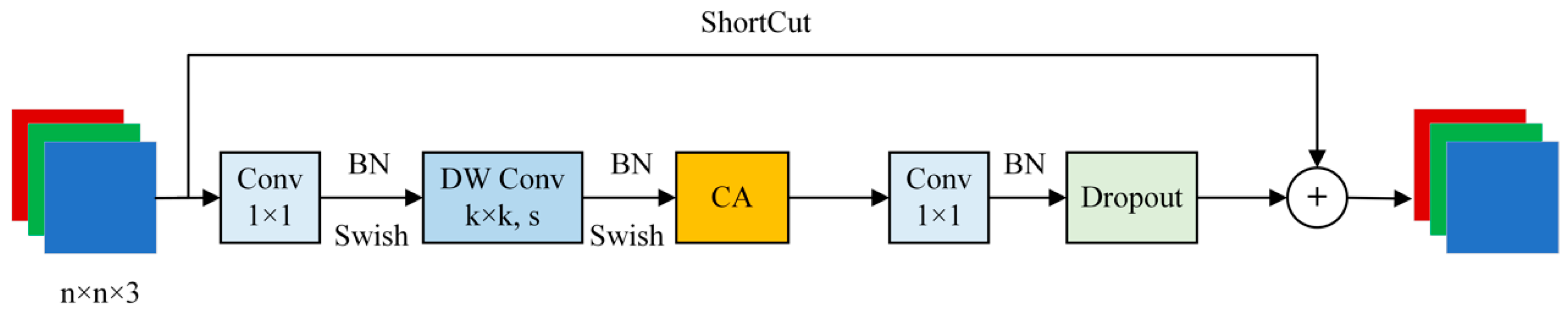

3.1. Design of the Backbone Network

3.1.1. MBCA Module

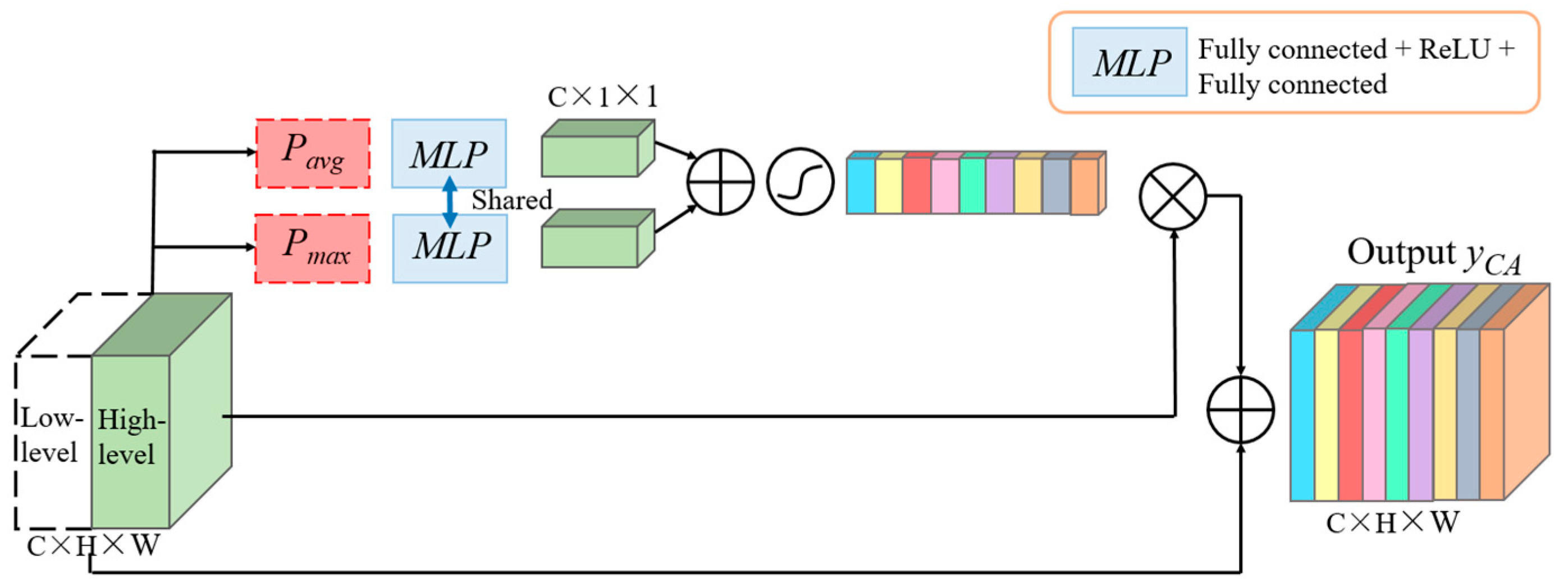

3.1.2. Coordinate Attention Mechanism

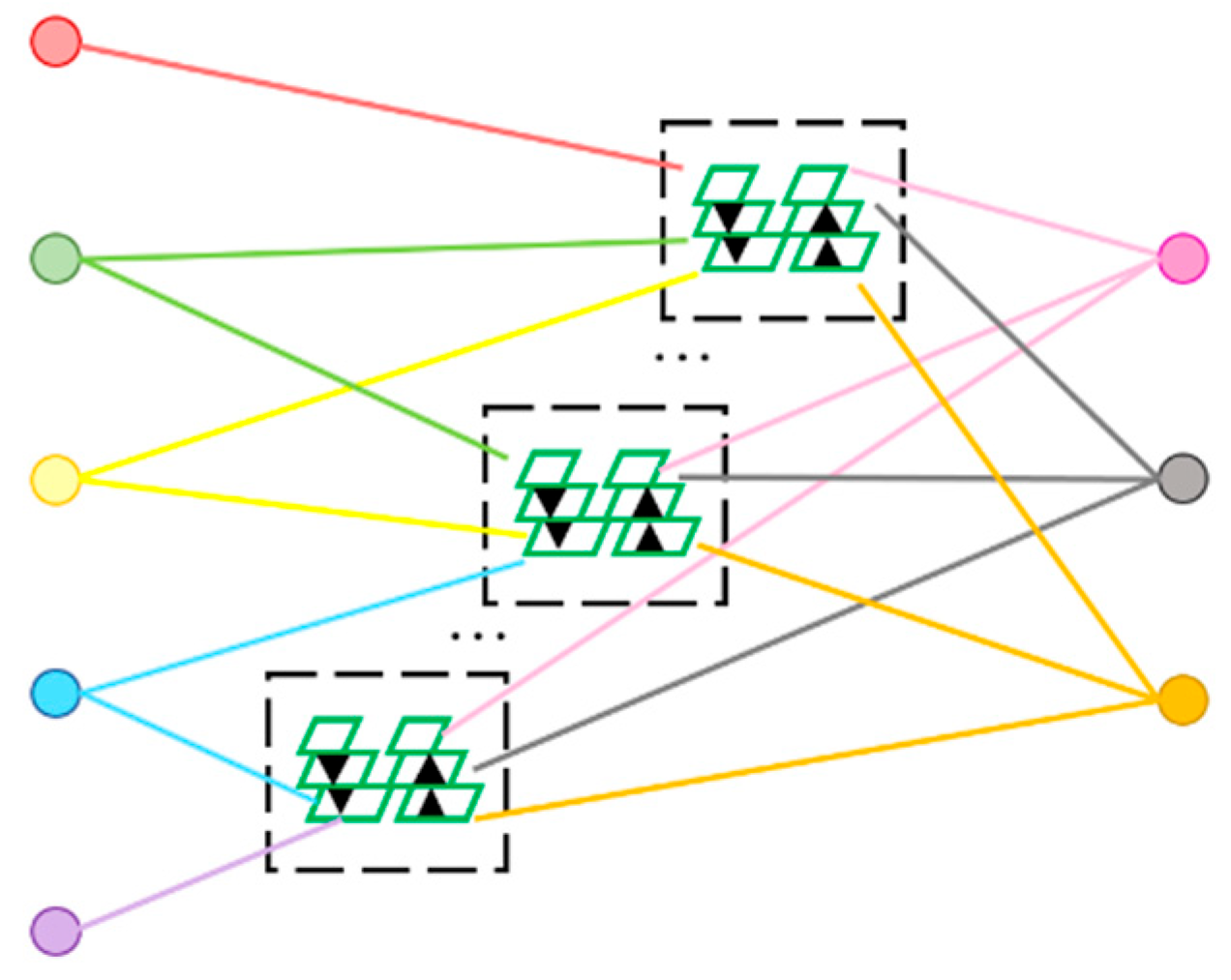

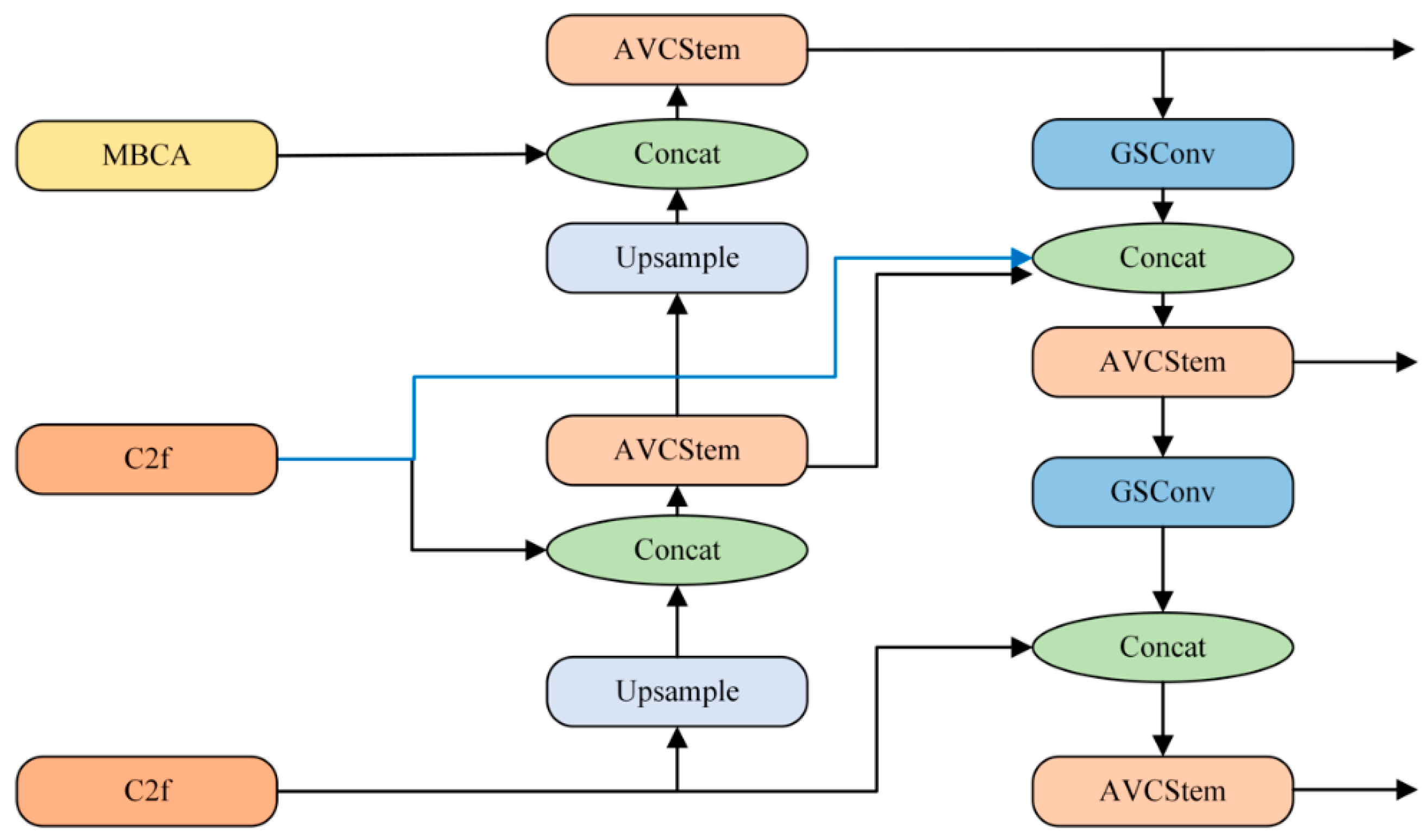
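Coordinate attention (CA), introduced by Hou et al. (CVPR 2021), splits global pooling into two 1-D pools, one along the height and one along the width. The resulting attention maps therefore keep positional information along each direction. The pure-Python sketch below implements a standard CA block on a single C × H × W feature map to show that mechanism. It is not the authors' MBCA code. Batch normalization is omitted, the weights are passed in explicitly instead of being learned, and the function names are hypothetical.

```python
import math
from typing import List

Map2D = List[List[float]]  # (channels, length) or (rows, cols)
Map3D = List[Map2D]        # (channels, height, width)


def conv1x1(weights: Map2D, features: Map2D) -> Map2D:
    """1x1 convolution over a (C_in, L) map: out[k][p] = sum_c weights[k][c] * features[c][p]."""
    length = len(features[0])
    return [[sum(w * features[c][pos] for c, w in enumerate(row)) for pos in range(length)]
            for row in weights]


def h_swish(v: float) -> float:
    """Hard-swish nonlinearity, v * ReLU6(v + 3) / 6."""
    return v * min(max(v + 3.0, 0.0), 6.0) / 6.0


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def coordinate_attention(x: Map3D, w_shared: Map2D, w_h: Map2D, w_w: Map2D) -> Map3D:
    """x: C x H x W; w_shared: (C/r) x C; w_h, w_w: C x (C/r). BatchNorm is omitted."""
    channels, height, width = len(x), len(x[0]), len(x[0][0])
    # 1) Direction-aware pooling: average each row (along W) and each column (along H).
    z_h = [[sum(x[c][i]) / width for i in range(height)] for c in range(channels)]
    z_w = [[sum(x[c][i][j] for i in range(height)) / height for j in range(width)]
           for c in range(channels)]
    # 2) Concatenate along the spatial axis -> C x (H + W), then shared 1x1 conv + h-swish.
    joint = [z_h[c] + z_w[c] for c in range(channels)]
    f = [[h_swish(v) for v in row] for row in conv1x1(w_shared, joint)]
    # 3) Split back into the height part and the width part.
    f_h = [row[:height] for row in f]
    f_w = [row[height:] for row in f]
    # 4) Separate 1x1 convs + sigmoid produce gates g_h (C x H) and g_w (C x W).
    g_h = [[sigmoid(v) for v in row] for row in conv1x1(w_h, f_h)]
    g_w = [[sigmoid(v) for v in row] for row in conv1x1(w_w, f_w)]
    # 5) Each position (i, j) is scaled by its row gate and its column gate.
    return [[[x[c][i][j] * g_h[c][i] * g_w[c][j] for j in range(width)]
             for i in range(height)] for c in range(channels)]


# Usage: 4 channels, a 3 x 5 map, reduction ratio r = 2.
C, H, W, R = 4, 3, 5, 2
x = [[[float(c + i * j) for j in range(W)] for i in range(H)] for c in range(C)]
zero_in = [[0.0] * C for _ in range(C // R)]
zero_out = [[0.0] * (C // R) for _ in range(C)]
# With all-zero weights every gate equals sigmoid(0) = 0.5, so the output is 0.25 * x.
y = coordinate_attention(x, zero_in, zero_out, zero_out)
```

Because each gate depends only on a row index or a column index, a detector can emphasize an object's horizontal and vertical extent separately. That separation is why CA is often favoured for elongated targets such as bars.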

3.2. Design of the Neck Network

3.2.1. Feature Fusion Enhancement

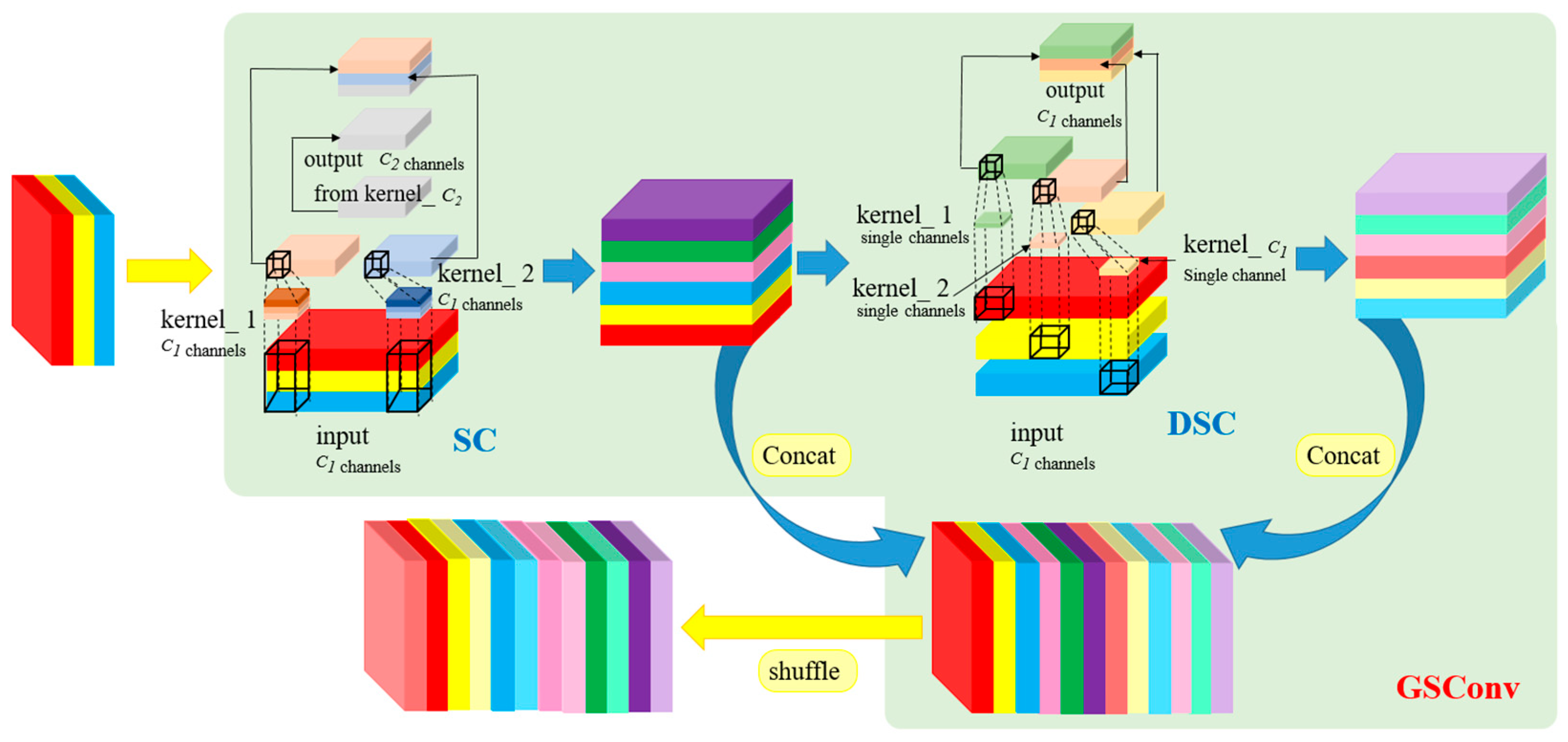

3.2.2. VoVGSCSP: A Lightweight Feature Extraction Module Based on GSConv

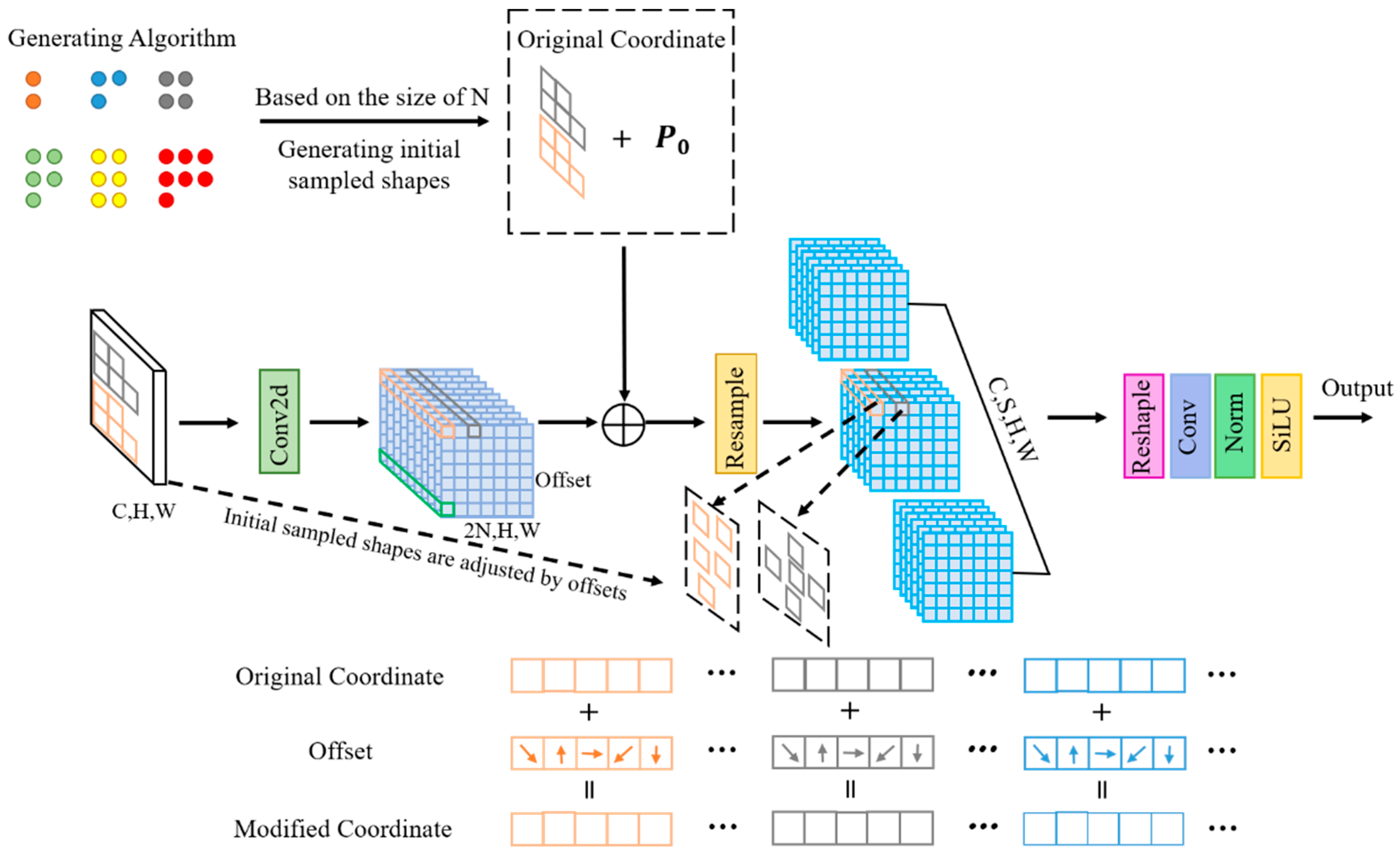
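GSConv (Li et al., 2022, "Slim-neck by GSConv") reduces the cost of a dense convolution in two steps. A standard convolution produces half of the output channels, and a depthwise convolution derives the other half from them. The two halves are then concatenated and their channels shuffled so that information from both paths mixes. The sketch below counts weights for a dense convolution and for a GSConv-style block, and shows a ShuffleNet-style two-group shuffle. It rests on several assumptions. The 5 × 5 depthwise kernel is assumed, bias and BatchNorm parameters are ignored, and the exact channel permutation in the reference implementation may differ from the interleave shown. The function names are hypothetical.

```python
from typing import List, Sequence


def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a dense k x k convolution (bias and BatchNorm ignored)."""
    return k * k * c_in * c_out


def gsconv_params(c_in: int, c_out: int, k: int, dw_k: int = 5) -> int:
    """Weights of a GSConv-style block: a dense k x k conv to c_out / 2 channels,
    then a depthwise dw_k x dw_k conv on those channels (bias and BatchNorm ignored)."""
    half = c_out // 2
    dense = k * k * c_in * half
    depthwise = dw_k * dw_k * half  # one dw_k x dw_k filter per channel
    return dense + depthwise


def channel_shuffle(channels: Sequence, groups: int = 2) -> List:
    """ShuffleNet-style shuffle: split into contiguous groups, then interleave them."""
    if len(channels) % groups:
        raise ValueError("channel count must be divisible by groups")
    per_group = len(channels) // groups
    return [channels[g * per_group + i] for i in range(per_group) for g in range(groups)]


dense_out = ["d0", "d1", "d2"]      # channels from the dense conv
depthwise_out = ["w0", "w1", "w2"]  # channels from the depthwise conv
mixed = channel_shuffle(dense_out + depthwise_out)  # ['d0', 'w0', 'd1', 'w1', 'd2', 'w2']

std = standard_conv_params(128, 128, 3)  # 147456
gs = gsconv_params(128, 128, 3)          # 75328, about 51% of the dense conv
```

Under these assumptions, a 128 → 128 channel layer with a 3 × 3 kernel needs roughly half the weights of a dense convolution. This is the kind of saving a lightweight neck relies on.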

3.2.3. AVCM: Attention-Based Fusion Module

4. Experimental Preparation

5. Experiments and Results

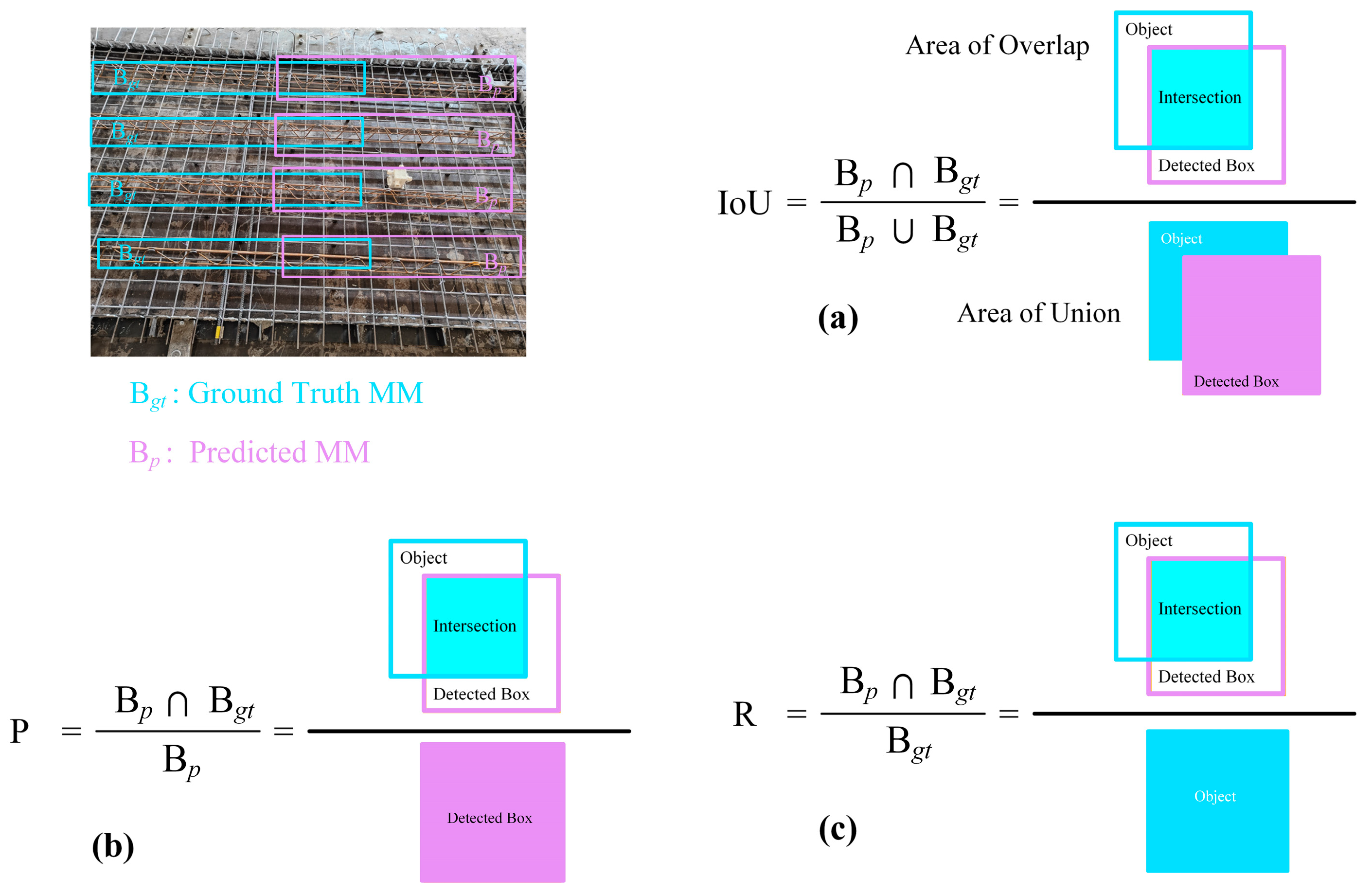

5.1. Evaluation Indicators

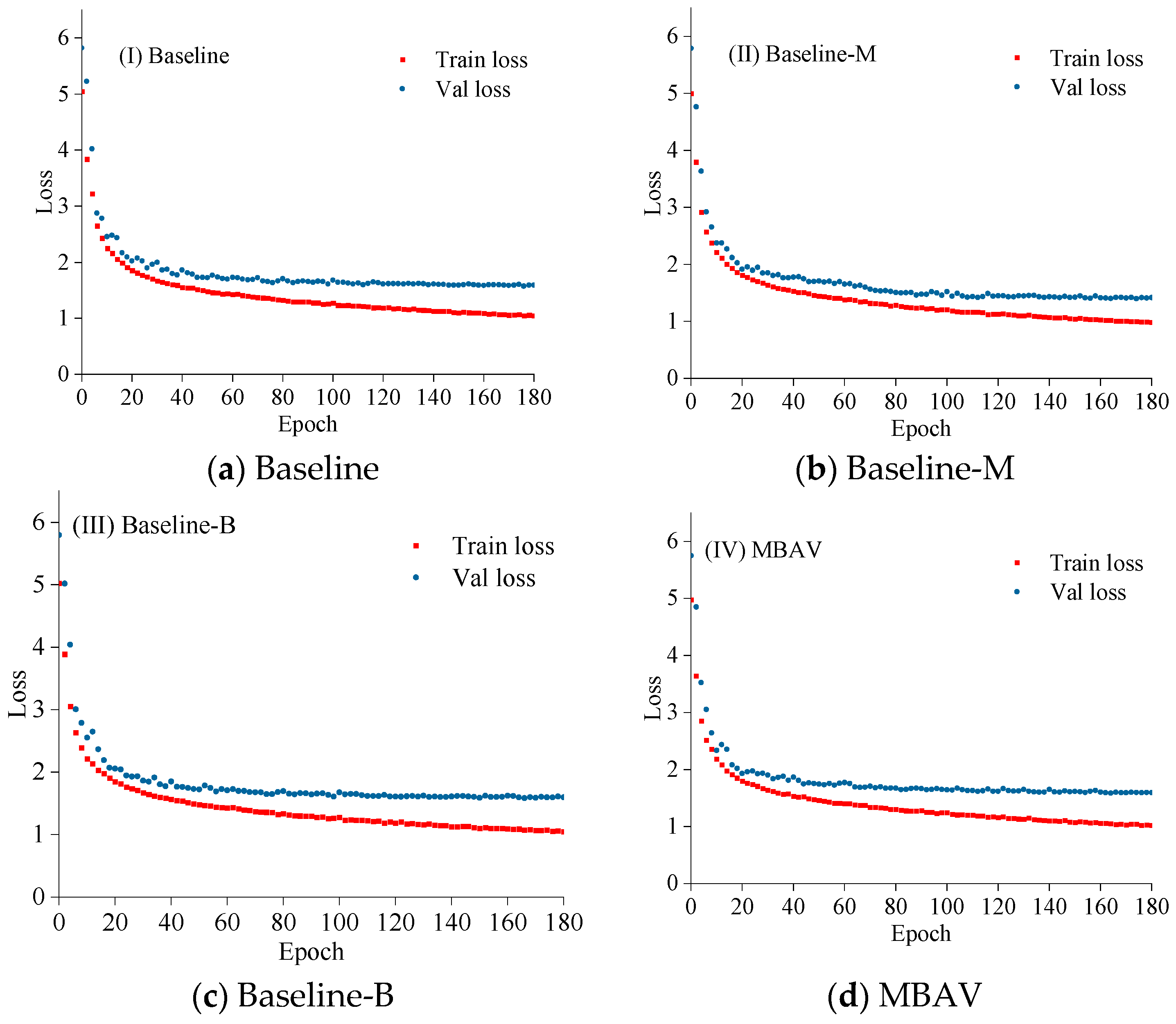

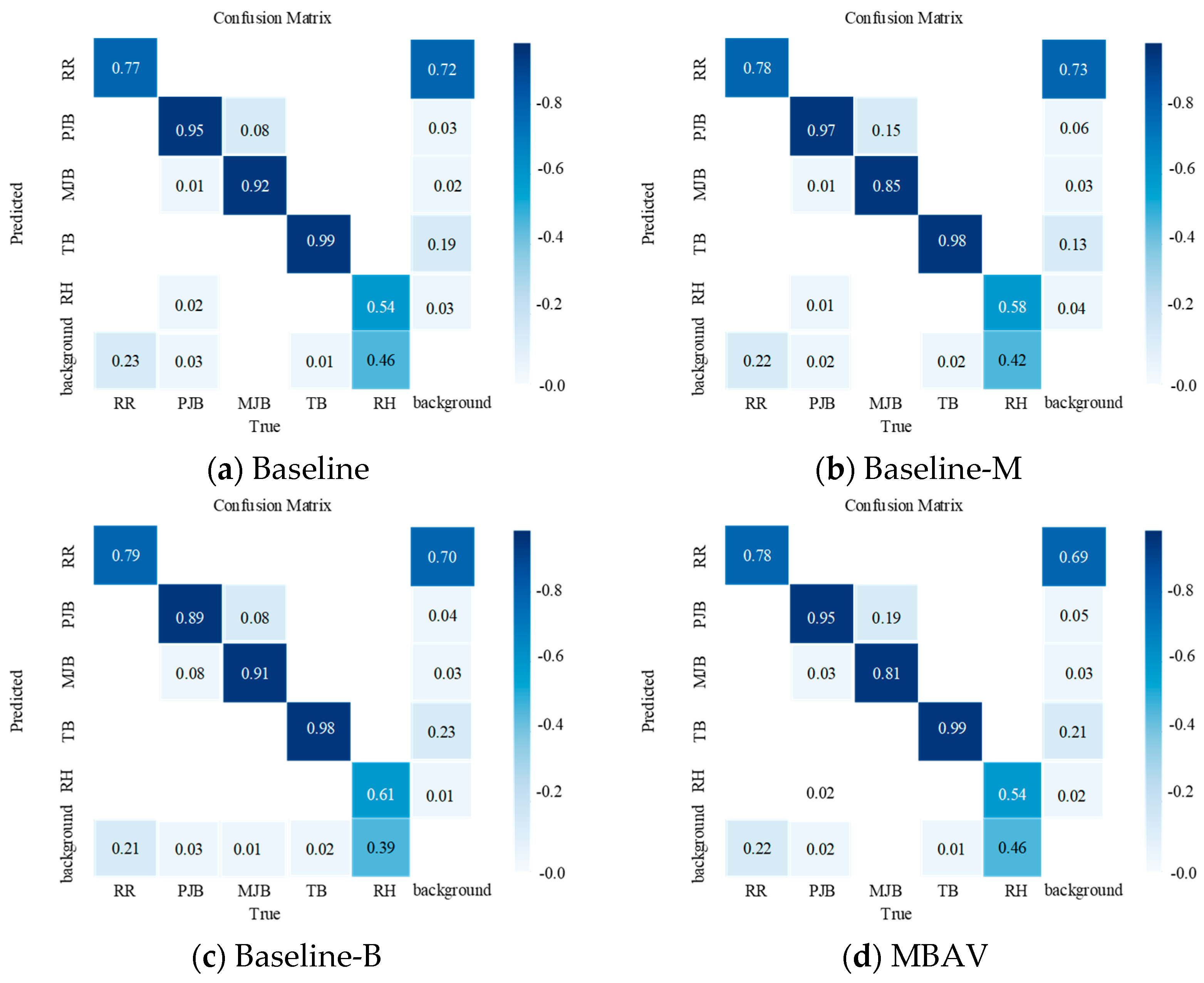

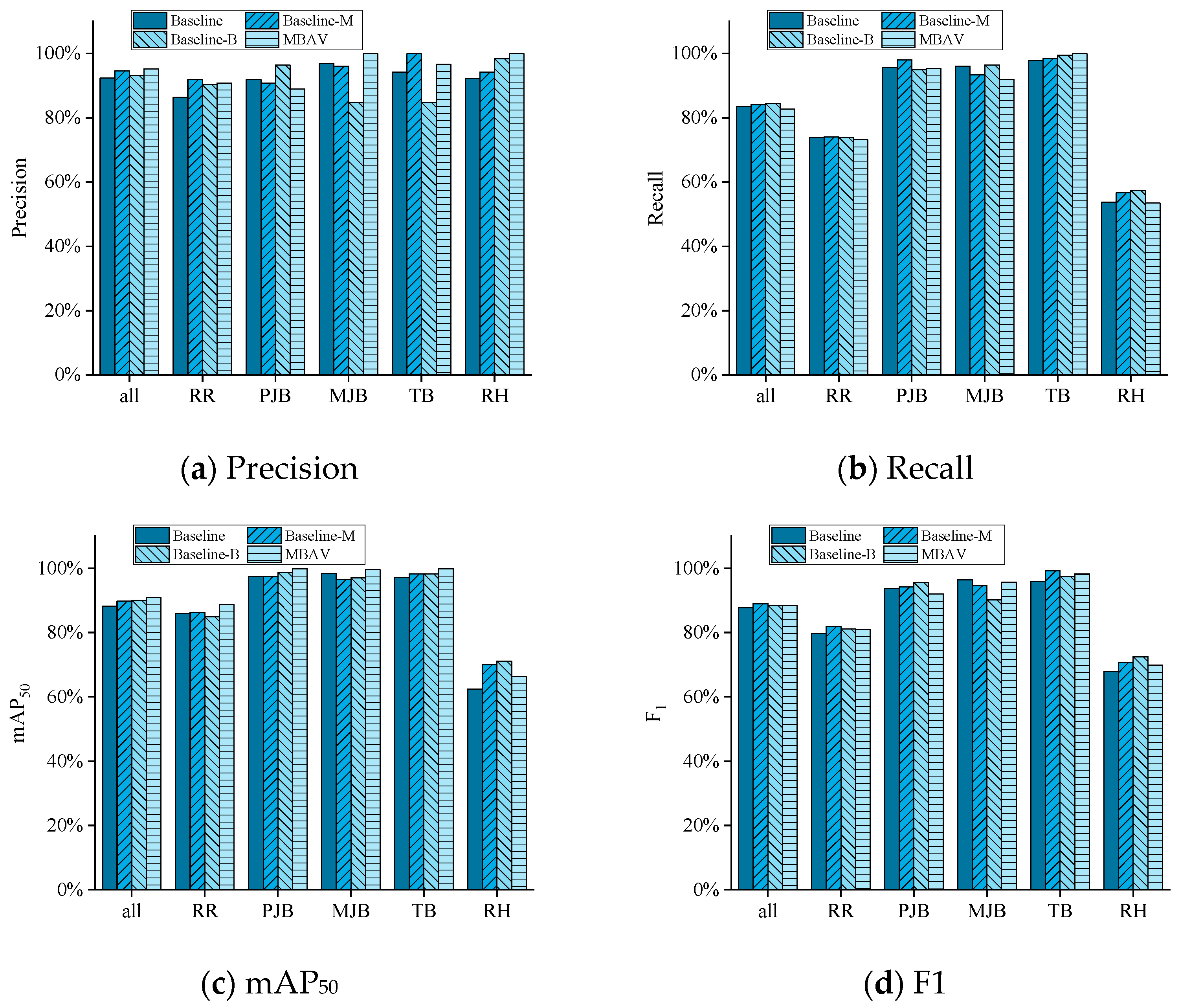
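The tables in this paper report box precision, recall, F1, and mAP50. The sketch below shows one common way to compute mAP50. Detections are matched greedily to ground-truth boxes at IoU ≥ 0.5. Average precision is then taken as the area under the precision envelope (all-point interpolation, as in PASCAL VOC 2010 and later), and mAP50 is the mean of that AP over classes. This is a generic illustration, not the authors' evaluation code. The greedy matching rule is a simplification, and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1 and y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(dets: Sequence[Tuple[float, Box]], gts: Sequence[Box],
                     iou_thr: float = 0.5) -> List[bool]:
    """Greedy matching in descending score order; each ground-truth box is used at most once."""
    is_tp = [False] * len(dets)
    used = set()
    for i in sorted(range(len(dets)), key=lambda k: dets[k][0], reverse=True):
        best_j, best_iou = -1, iou_thr
        for j, gt in enumerate(gts):
            overlap = iou(dets[i][1], gt)
            if j not in used and overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            used.add(best_j)
            is_tp[i] = True
    return is_tp


def average_precision(scores: Sequence[float], is_tp: Sequence[bool], num_gt: int) -> float:
    """All-point interpolated AP: area under the precision envelope."""
    if num_gt == 0:
        return 0.0
    tp = fp = 0
    recalls, precisions = [], []
    for i in sorted(range(len(scores)), key=lambda k: scores[k], reverse=True):
        tp, fp = (tp + 1, fp) if is_tp[i] else (tp, fp + 1)
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Envelope: each precision becomes the max precision at any later (higher-recall) point.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_ap(per_class_ap: Sequence[float]) -> float:
    """mAP50: mean of the per-class AP values computed at IoU >= 0.5."""
    return sum(per_class_ap) / len(per_class_ap)


# One class, one image: two ground-truth boxes and three scored detections.
gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
dets = [(0.9, (0, 0, 10, 10)), (0.8, (50, 50, 60, 60)), (0.7, (21, 21, 31, 31))]
flags = match_detections(dets, gts)  # [True, False, True]
ap50 = average_precision([s for s, _ in dets], flags, len(gts))  # 0.5 * 1 + 0.5 * 2/3 = 0.8333...
```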

5.2. Ablation Experiment

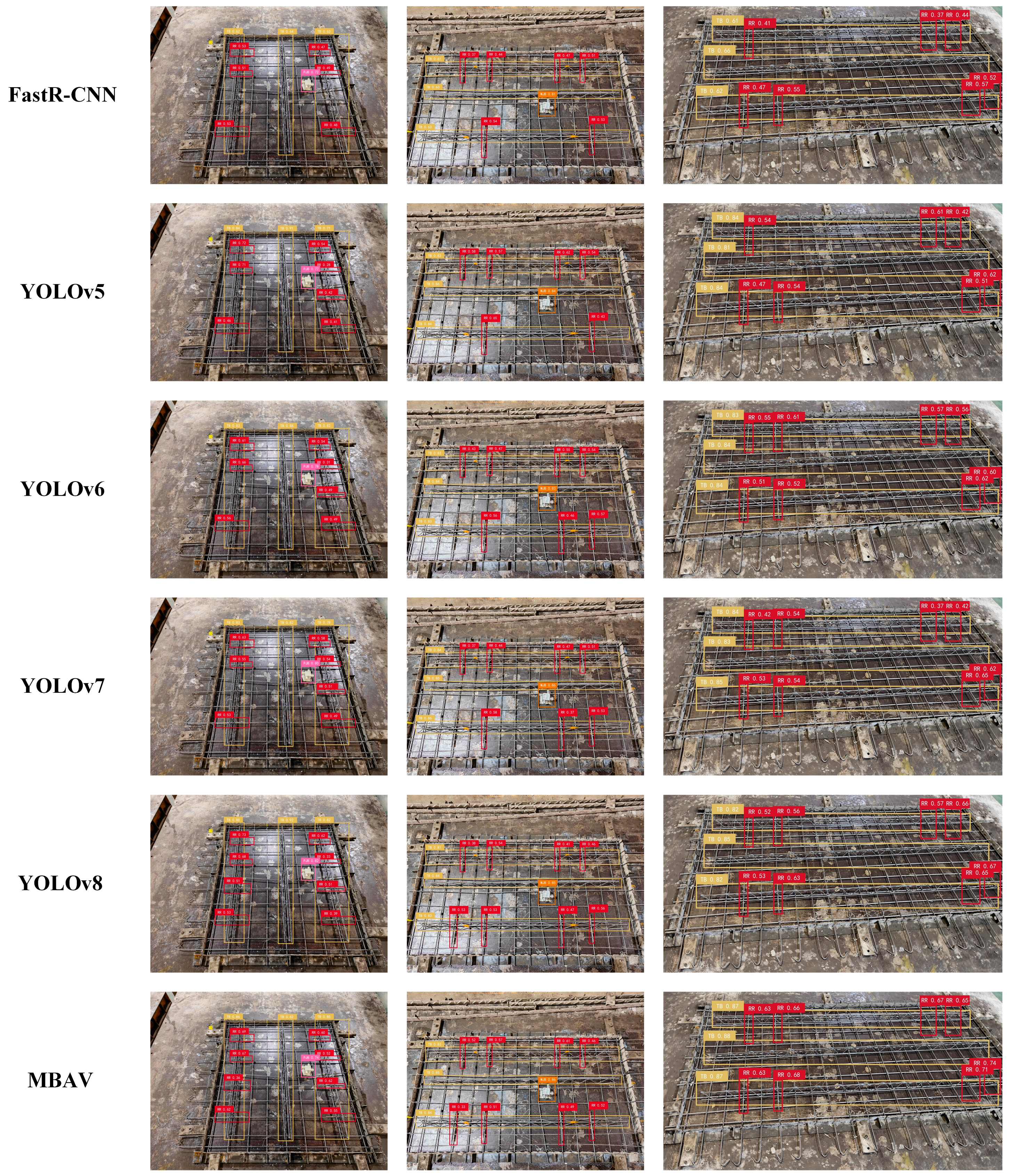

5.3. Comparison with Other Methods

6. Conclusions

- (1) A specialized dataset was built for embedded-part detection in composite slabs. It covers five typical categories (truss bars, reinforcement rebars, metal junction boxes, plastic junction boxes, and reserved holes) and contains 1535 images and 19,625 annotated instances (13,680 for training, 3945 for validation, and 2000 for testing), collected from multiple factory environments.

- (2) The proposed MBAV network integrates the MBCA module in the backbone and the AVCStem + AKConv structure with positional encoding in the neck, effectively enhancing feature extraction and fusion for small, overlapping, or low-contrast targets.

- (3) On the constructed dataset, MBAV achieves an mAP50 of 90.9%, which is 2.6 percentage points above the YOLOv8 baseline (88.3%). At the same time, it reduces the model size by 8.06% (from 6.2M to 5.7M) and the parameter count by 9.95% (from 3,006,623 to 2,707,379). Per-class mAP50 improves by 1.2–3.8 percentage points, confirming its robustness under real-world factory conditions.

- (4) The model shows strong potential for real-time, automated quality inspection of prefabricated components and offers a practical foundation for further development of intelligent quality control systems in industrialized construction.
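Conclusion (2) credits part of the neck's improvement to AKConv (Zhang et al., 2023). AKConv is a convolution whose kernel can have an arbitrary number of sampling points, each shifted by a learned offset. The rough pure-Python sketch below illustrates that idea in three steps. It builds an initial regular layout for N points (full rows plus one partial row for the remainder, a layout assumed here to follow the AKConv paper). It then bilinearly samples the feature map at those points plus their offsets. Finally, it combines the samples with a weighted sum. This is not the MBAV neck. In AKConv the offsets are predicted by a layer and the resampled features pass through a regular convolution, whereas here the offsets and weights are plain inputs and the weighted sum stands in for that convolution. The grid is anchored at the reference point rather than centred, which is also an assumption, and all names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (row, column)


def initial_sampling_grid(num_points: int) -> List[Tuple[int, int]]:
    """Initial kernel layout for any number of points: full rows of `base` points,
    followed by one partial row holding the remainder."""
    if num_points < 1:
        raise ValueError("need at least one sampling point")
    base = round(math.sqrt(num_points))
    rows, remainder = divmod(num_points, base)
    grid = [(r, c) for r in range(rows) for c in range(base)]
    grid += [(rows, c) for c in range(remainder)]
    return grid


def bilinear_sample(feature: List[List[float]], y: float, x: float) -> float:
    """Bilinear interpolation at a fractional (y, x); positions outside the map read as 0."""
    height, width = len(feature), len(feature[0])
    y0, x0 = math.floor(y), math.floor(x)
    dy, dx = y - y0, x - x0

    def at(r: int, c: int) -> float:
        return feature[r][c] if 0 <= r < height and 0 <= c < width else 0.0

    return ((1 - dy) * (1 - dx) * at(y0, x0) + (1 - dy) * dx * at(y0, x0 + 1)
            + dy * (1 - dx) * at(y0 + 1, x0) + dy * dx * at(y0 + 1, x0 + 1))


def sample_with_offsets(feature: List[List[float]], center: Point,
                        offsets: Sequence[Point], weights: Sequence[float]) -> float:
    """One output value: sum_n weights[n] * F(center + grid[n] + offsets[n])."""
    grid = initial_sampling_grid(len(weights))
    total = 0.0
    for (gy, gx), (oy, ox), w in zip(grid, offsets, weights):
        total += w * bilinear_sample(feature, center[0] + gy + oy, center[1] + gx + ox)
    return total


feature = [[10.0 * r + c for c in range(4)] for r in range(4)]  # F(r, c) = 10r + c
print(initial_sampling_grid(5))  # [(0, 0), (0, 1), (1, 0), (1, 1), (2, 0)]
print(bilinear_sample(feature, 1.5, 2.25))  # 17.25
print(sample_with_offsets(feature, (0, 0), [(0.0, 0.0)] * 4, [1.0] * 4))  # 0 + 1 + 10 + 11 = 22.0
```

Because the number of points is a free parameter, such a kernel can grow or shrink in small steps instead of jumping from 3 × 3 to 5 × 5. This flexibility fits the lightweight goal stated for the network.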

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Split | Training | Validation | Testing | Total |
|---|---|---|---|---|
| Number of images | 1070 | 305 | 160 | 1535 |

| Category | Training Labels | Validation Labels | Testing Labels |
|---|---|---|---|
| Metal junction box | 220 | 70 | 45 |
| Plastic junction box | 830 | 250 | 110 |
| Reserved holes | 400 | 115 | 95 |
| Truss bars | 4135 | 1195 | 560 |
| Reinforcement rebars | 8095 | 2315 | 1190 |
| Total | 13,680 | 3945 | 2000 |
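The split proportions and per-class label totals implied by the image and label tables can be recomputed in a few lines. The numbers below are copied from those tables, and nothing else is assumed. The result shows a roughly 70/20/10 split and a strong class imbalance: reinforcement rebars make up about 59% of all labels, while metal junction boxes make up under 2%.

```python
images = {"training": 1070, "validation": 305, "testing": 160}
labels = {  # category: (training, validation, testing)
    "Metal junction box": (220, 70, 45),
    "Plastic junction box": (830, 250, 110),
    "Reserved holes": (400, 115, 95),
    "Truss bars": (4135, 1195, 560),
    "Reinforcement rebars": (8095, 2315, 1190),
}

total_images = sum(images.values())
image_share = {split: n / total_images for split, n in images.items()}
split_totals = [sum(counts[k] for counts in labels.values()) for k in range(3)]
class_totals = {name: sum(counts) for name, counts in labels.items()}
all_labels = sum(split_totals)
class_share = {name: n / all_labels for name, n in class_totals.items()}

print(total_images, {s: round(v, 3) for s, v in image_share.items()})
# 1535 {'training': 0.697, 'validation': 0.199, 'testing': 0.104}
print(split_totals, all_labels)
# [13680, 3945, 2000] 19625
print({name: round(v, 3) for name, v in class_share.items()})
# {'Metal junction box': 0.017, 'Plastic junction box': 0.061, 'Reserved holes': 0.031, 'Truss bars': 0.3, 'Reinforcement rebars': 0.591}
```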

| Network | Adopted Module | Box Precision (%) | Box Recall (%) | Box mAP50 (%) | Params | F1 (%) | GFLOPs | Size (M) |
|---|---|---|---|---|---|---|---|---|
| Baseline | YOLOv8 | 92.3 | 83.5 | 88.3 | 3,006,623 | 87.7 | 8.1 | 6.2 |
| Baseline-M | MBCA | 94.5 | 84.1 | 89.8 | 3,038,715 | 89.0 | 8.7 | 6.3 |
| Baseline-B | Bi-AV-FPN | 93.1 | 84.4 | 90.1 | 2,675,287 | 88.5 | 7.3 | 5.6 |
| MBAV | MBCA + Bi-AV-FPN | 95.2 | 82.7 | 90.9 | 2,707,379 | 88.5 | 8.0 | 5.7 |
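The derived figures quoted in the conclusions can be recomputed from this ablation table. The sketch below performs two checks. First, it confirms that every reported F1 equals the harmonic mean 2PR/(P + R) of box precision and recall to one decimal place. Second, it computes MBAV's changes relative to the baseline. The computation shows that the 8.06% reduction corresponds to model size (6.2 → 5.7), whereas the parameter count falls by about 9.95%. All values are copied from the table, and the helper names are illustrative.

```python
# name: (box precision %, box recall %, box mAP50 %, params, F1 %, GFLOPs, size)
ablation = {
    "Baseline": (92.3, 83.5, 88.3, 3_006_623, 87.7, 8.1, 6.2),
    "Baseline-M": (94.5, 84.1, 89.8, 3_038_715, 89.0, 8.7, 6.3),
    "Baseline-B": (93.1, 84.4, 90.1, 2_675_287, 88.5, 7.3, 5.6),
    "MBAV": (95.2, 82.7, 90.9, 2_707_379, 88.5, 8.0, 5.7),
}


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def pct_change(new: float, old: float) -> float:
    """Relative change of `new` with respect to `old`, in percent."""
    return (new - old) / old * 100


for name, (p, r, _map50, _params, f1, _gflops, _size) in ablation.items():
    # Each reported F1 matches 2PR / (P + R) to one decimal place.
    assert round(f1_score(p, r), 1) == f1, name

base, mbav = ablation["Baseline"], ablation["MBAV"]
map_gain = round(mbav[2] - base[2], 1)                  # 2.6 percentage points
param_change = round(pct_change(mbav[3], base[3]), 2)   # -9.95 (% of parameters)
size_change = round(pct_change(mbav[6], base[6]), 2)    # -8.06 (% of model size)
gflops_change = round(pct_change(mbav[5], base[5]), 2)  # -1.23 (% of GFLOPs)
```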

| Model | All mAP50 | RR mAP50 | PJB mAP50 | MJB mAP50 | TB mAP50 | RH mAP50 |
|---|---|---|---|---|---|---|
| Baseline | 88.3% | 85.9% | 97.6% | 98.4% | 97.2% | 62.5% |
| Baseline-M | 89.8% | 85.0% | 98.8% | 97.1% | 98.3% | 70.7% |
| Baseline-B | 90.1% | 86.3% | 97.6% | 96.6% | 98.3% | 71.7% |
| MBAV | 90.9% | 88.0% | 99.9% | 99.6% | 99.9% | 66.3% |

RR = reinforcement rebars; PJB = plastic junction box; MJB = metal junction box; TB = truss bars; RH = reserved holes.
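Per-class gains can be read directly from this table. The short sketch below computes them using the header abbreviations (RR, PJB, MJB, TB, RH). It shows that MBAV improves every class over the baseline, by 1.2 to 3.8 percentage points. It also shows that the best reserved-hole score belongs to Baseline-B rather than MBAV. The values are copied from the table, and the function names are illustrative.

```python
classes = ["All", "RR", "PJB", "MJB", "TB", "RH"]
map50 = {  # mAP50 in %, copied from the table
    "Baseline": [88.3, 85.9, 97.6, 98.4, 97.2, 62.5],
    "Baseline-M": [89.8, 85.0, 98.8, 97.1, 98.3, 70.7],
    "Baseline-B": [90.1, 86.3, 97.6, 96.6, 98.3, 71.7],
    "MBAV": [90.9, 88.0, 99.9, 99.6, 99.9, 66.3],
}


def gains(model: str, reference: str = "Baseline") -> dict:
    """Per-class mAP50 difference (percentage points) of `model` over `reference`."""
    return {c: round(m - b, 1) for c, m, b in zip(classes, map50[model], map50[reference])}


def best_model_per_class() -> dict:
    """Name of the highest-scoring model for each class."""
    return {c: max(map50, key=lambda name: map50[name][k]) for k, c in enumerate(classes)}


print(gains("MBAV"))
# {'All': 2.6, 'RR': 2.1, 'PJB': 2.3, 'MJB': 1.2, 'TB': 2.7, 'RH': 3.8}
print(best_model_per_class()["RH"])
# Baseline-B
```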

| Network | Box Precision | Box Recall | Box mAP50 | Size (M) | F1 | GFLOPs |
|---|---|---|---|---|---|---|
| Fast R-CNN | 87% | 62% | 82% | - | 0.72 | - |
| YOLOv5 | 92% | 83% | 88% | 7.1 | 0.87 | 15.8 |
| YOLOv6 | 82% | 48% | 63% | 18.5 | 0.60 | 45.17 |
| YOLOv7 | 93% | 83% | 89% | 61.9 | 0.88 | 103.2 |
| Baseline | 92% | 84% | 88% | 6.2 | 0.88 | 12.0 |
| MBAV | 95% | 83% | 91% | 5.7 | 0.89 | 14.9 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yu, F.; Yuan, L.; Jin, Q.; Hu, D. MBAV: A Positional Encoding-Based Lightweight Network for Detecting Embedded Parts in Prefabricated Composite Slabs. Buildings 2025, 15, 2850. https://doi.org/10.3390/buildings15162850
