1. Introduction

1.1. Background

The construction sector is marked by a heavy dependence on human labor, a high incidence of occupational accidents and fatalities, and sluggish productivity growth compared with other industries over recent decades [1,2]. Amid global challenges such as climate change, an aging labor pool, and a shortage of qualified workers, conventional building practices struggle to meet rising demands for productivity, quality, safety, and environmental sustainability [3]. To counter these challenges, significant efforts have been directed toward developing on-site robotic systems capable of performing tasks independently in hazardous construction settings, thereby reducing the physical burden and risks borne by human workers. Prior research indicates that autonomous construction robotics can reduce repetitive on-site labor by 25% to 90% and exposure to dangerous activities by 72%, while also improving precision, cost-effectiveness, and adherence to project timelines [4,5,6]. The integration of AI and autonomous robotic systems therefore plays an essential role in modernizing construction methods and reshaping construction practice.

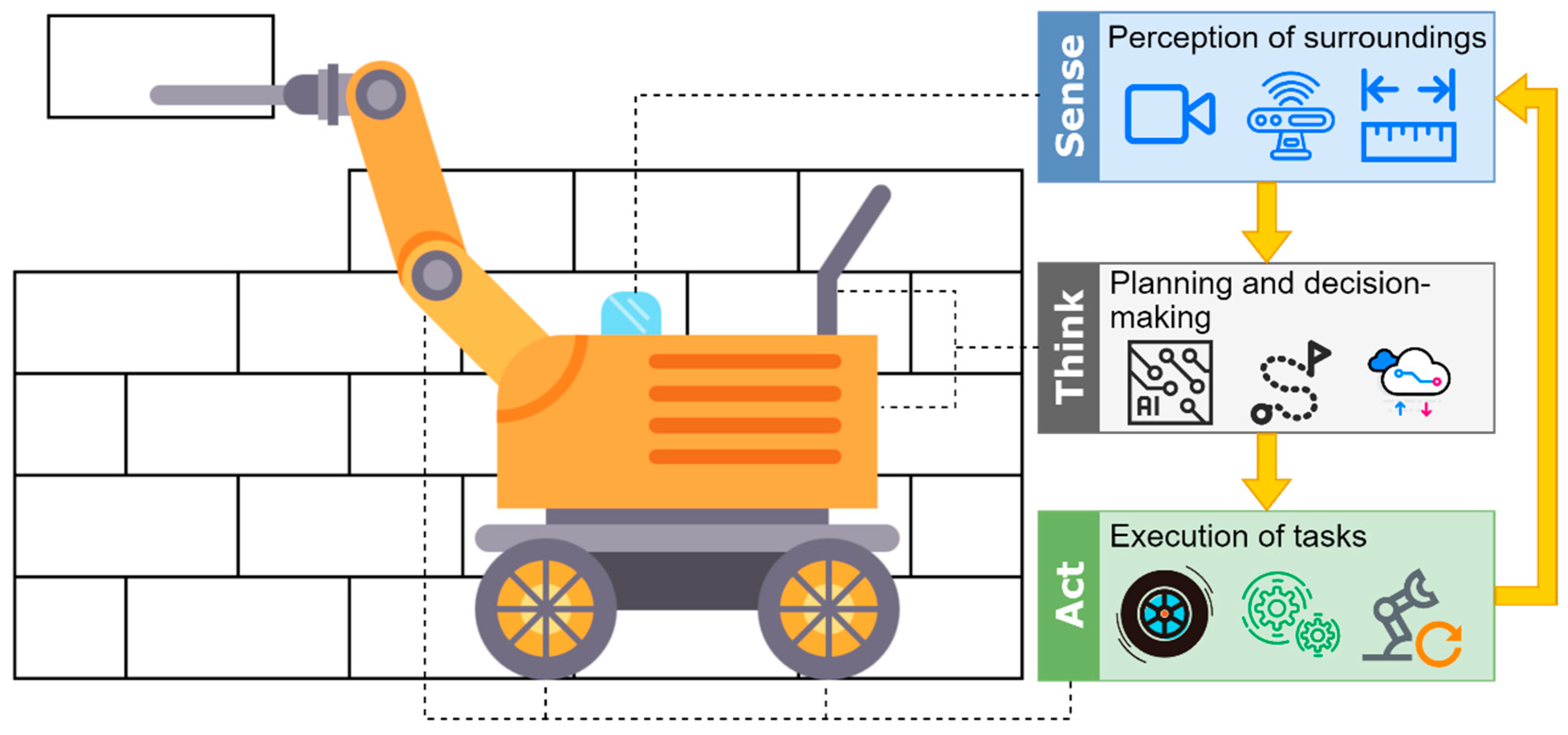

1.2. Role of the Sense–Think–Act Framework

In recent years, AI-driven on-site construction robotics has attracted considerable attention across multiple academic disciplines. Central to the functionality of these autonomous systems are three core technological components: sense, think, and act [7,8]. The “sense” component enables robots to interpret their surroundings and acquire task-relevant data (e.g., force feedback during pick-and-place tasks [9]) using visual imaging devices [10,11,12,13], light detection and ranging (LiDAR) systems [14,15], inertial measurement unit (IMU) sensors, and other measurement instruments [16,17,18,19]. These data include images, spatial coordinates, distance measurements, and other information that influences operational performance. The “think” component uses AI algorithms and data repositories to process this information and generate decisions. For instance, advanced machine learning (ML) techniques allow robots to detect and classify workers [20], machinery [21], various types of materials [22,23], and tools [24] as they work, while navigation algorithms determine efficient paths that avoid obstacles and prioritize safety [25,26,27,28]. Finally, the “act” component translates these decisions into physical outcomes through mechanical systems, including articulated limbs [29,30,31], guided tracks [32], suspension mechanisms [33,34], rotors [16,35,36,37], robotic arms [38,39,40], and heavy machinery such as cranes [41,42,43].

Figure 1 illustrates this sense–think–act (STA) framework underpinning autonomous construction robotics. For construction robotics, STA is critical to understanding how robots navigate complex sites, collaborate with human workers, and execute specialized tasks, making it an ideal lens for evaluating technological advancements.
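To make the division of labor concrete, the loop below is a minimal sketch of repeated sense–think–act cycles for a hypothetical robot moving along a straight 1D corridor toward an obstacle. The noise-free distance reading, the proportional slow-down rule, and all names and parameter values (`cruise`, `stop_margin`) are illustrative assumptions, not a method from the cited works.

```python
from dataclasses import dataclass


@dataclass
class RobotState:
    position: float  # metres along a hypothetical straight corridor
    speed: float = 0.0


def sense(state: RobotState, obstacle_at: float) -> float:
    """Sense: return the distance to the obstacle ahead (noise-free in this sketch)."""
    return obstacle_at - state.position


def think(distance: float, cruise: float = 0.5, stop_margin: float = 1.0) -> float:
    """Think: choose a speed command that shrinks with the remaining gap
    and drops to zero once the robot is within `stop_margin` of the obstacle."""
    if distance <= stop_margin:
        return 0.0
    return min(cruise, 0.5 * (distance - stop_margin))


def act(state: RobotState, speed: float, dt: float = 1.0) -> None:
    """Act: apply the speed command for one time step."""
    state.speed = speed
    state.position += speed * dt


def run(obstacle_at: float = 5.0, steps: int = 50) -> RobotState:
    """Repeat the sense-think-act cycle for a fixed number of steps."""
    state = RobotState(position=0.0)
    for _ in range(steps):
        distance = sense(state, obstacle_at)
        command = think(distance)
        act(state, command)
    return state
```

Running `run()` drives the robot toward a point `stop_margin` metres short of the obstacle (4.0 m here) without passing it; in a real system, each stage would be replaced by the sensing, reasoning, and actuation technologies reviewed in this paper.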

1.3. Gaps in Existing Literature Reviews

Despite growing interest in AI-driven construction robotics, existing literature reviews often fall short of providing a comprehensive assessment. Table 1 summarizes recent literature reviews, organized by research focus within the STA framework and ordered by publication date. These reviews tend to emphasize specific dimensions of autonomous construction robotics. For example, Yarovoi and Cho explored simultaneous localization and mapping (SLAM) algorithms, which enable real-time environmental mapping and self-positioning in unfamiliar environments, aligning with the “sense” component [44]. Liu et al. highlighted three key aspects of construction robotics, namely building information modeling (BIM), human–robot collaboration (HRC), and deep reinforcement learning, which are primarily tied to the “think” component for data processing and task planning [45]. Guaman-Rivera et al. examined material compositions and 3D-printing techniques in additive manufacturing robotics, which are pertinent to the “act” component [46]. Although Pan et al. conducted an extensive review spanning all STA components, their analysis was restricted to prefabricated and modular construction robotics [47]. While valuable, these reviews lack a holistic integration of all STA components, limiting insight into their interdependence and overall progress. Furthermore, few studies employ quantitative methods, such as bibliometric analysis, to systematically map research trends and identify collaborative networks. This fragmentation hinders a unified understanding of AI-driven construction robotics and leaves researchers and practitioners without a clear roadmap for advancing the field. Consequently, comprehensive, quantitative assessments of AI-driven autonomous construction robotics under the STA framework are lacking. To address this gap, the present study provides a comprehensive overview of recent technological advancements, offering guidance to researchers and practitioners on the design, implementation strategies, and adaptation concerns of autonomous construction robotics, thereby fostering further innovation and practical application.

1.4. Research Objectives

This study addresses the identified gap by delivering a comprehensive, STA-driven review of AI applications in on-site autonomous construction robotics. Bibliometric analysis offers a quantitative method to map research trends and evaluate their impact, illuminating future directions and supporting the advancement of AI-driven robotic systems in construction. This study employed a bibliometric approach with the following objectives: (1) construct a detailed overview of research trends reflecting the current state of AI-powered construction robotics, and (2) identify key contributors and collaborative networks (e.g., researchers, journal sources, and countries) that encourage potential collaborations and knowledge sharing. Through a rigorous content analysis based on the results of bibliometric analysis, we aim to (3) evaluate advancements across all STA components and (4) propose insights derived from the content analysis of the existing literature, steering future research and inspiring innovative AI solutions for autonomous construction robotics within the STA framework. Overall, this research aims to provide a holistic synthesis that bridges fragmented perspectives, fostering both academic understanding and practical implementation of AI-driven construction robotics to enhance safety, efficiency, and sustainability on construction sites.

This study pioneers the application of the STA framework to evaluate AI-driven construction robotics, offering a novel, integrated perspective on sensing, decision-making, and actuation. Unlike prior reviews that focus on isolated technologies, our approach reveals critical interdependencies, advancing the understanding of autonomous systems in construction. Furthermore, by combining bibliometric and content analyses, we provide a dual lens, pairing quantitative trends with qualitative depth, that is rarely found in the existing literature.

The paper is structured as follows:

Section 2 describes the review methodology;

Section 3 presents the findings of the bibliometric analysis;

Section 4 examines function-oriented research trends, addressing each technological component of the STA framework with an overview of relevant approaches and key findings;

Section 5 discusses challenges identified from the results and proposes directions for future research; and

Section 6 concludes the paper, summarizing its contributions.

2. Methodology

This study performs a comprehensive review of AI applications in on-site autonomous construction robotics by employing bibliometric and content analyses, guided by the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) framework. The PRISMA approach facilitates the extraction of valuable insights from a broad set of publications while promoting transparency and replicability in the research process. This section outlines the detailed methodology applied in this investigation.

2.1. Data Collection

Data collection was initiated using two well-established academic databases: Web of Science and Scopus. These platforms are widely acknowledged for their comprehensive coverage of peer-reviewed journals, conference proceedings, and scholarly works, as evidenced by their use in prior studies [38,44,51]. Employing both databases increases the likelihood of capturing a wide array of engineering-focused research [51], which is critical given that AI in construction robotics spans disciplines such as computer science, electrical engineering, civil engineering, and construction-specific domains.

A carefully designed search query was developed to ensure retrieval of the relevant literature, incorporating key themes, synonyms, and variations prevalent in academic and industry contexts. Informed by existing studies [31,47,49] and refined through iterative testing, the following keywords were adopted: “robot*” AND (“auto*” OR “self-*” OR “intelligent” OR “smart”) AND (“construction” OR “building” OR “AEC”) AND (“site” OR “onsite” OR “on-site” OR “field”) AND (“artificial intelligence” OR “AI” OR “machine intelligence” OR “algorithm” OR “learning”). The wildcard in “robot*” captures terms such as “robot,” “robots,” “robotic,” and “robotics,” ensuring broad coverage of robotic technologies. The first parenthesized group targets autonomous systems: “auto*” encompasses “autonomous,” “automation,” and “automated,” while “self-*” captures “self-learning,” “self-adaptive,” and “self-driving,” highlighting studies related to automation and intelligence in robotics. The second and third groups focus on on-site construction applications, aligning with the study’s scope, while the final group ensures the inclusion of AI-related concepts, such as algorithms and learning techniques, including ML, reinforcement learning (RL), deep learning (DL), and deep reinforcement learning (DRL). Only journal articles and conference papers were included to uphold academic standards. By capturing core concepts (i.e., autonomous robotics) within the targeted domain (i.e., on-site construction) and emphasizing AI-driven approaches, the query aims to balance completeness and relevance of retrieval while minimizing irrelevant results.
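To illustrate how the wildcards and Boolean logic of this query combine, the sketch below re-implements it as a local filter over titles or abstracts. It is only an approximation: Web of Science and Scopus apply their own field tags, stemming, and phrase rules, whereas this regex version matches exact words, plus prefixes for terms ending in `*`, so it will not reproduce the databases' result counts. The names `QUERY_GROUPS`, `term_to_regex`, and `matches_query` are hypothetical.

```python
import re

# Each inner list is an OR-group; the groups are combined with AND.
# Terms ending in "*" are truncation wildcards (prefix matches).
QUERY_GROUPS = [
    ["robot*"],
    ["auto*", "self-*", "intelligent", "smart"],
    ["construction", "building", "AEC"],
    ["site", "onsite", "on-site", "field"],
    ["artificial intelligence", "AI", "machine intelligence", "algorithm", "learning"],
]


def term_to_regex(term: str) -> str:
    """Translate one search term into a word-bounded regex fragment."""
    if term.endswith("*"):
        # Prefix match: the stem followed by any further word characters or hyphens.
        return r"\b" + re.escape(term[:-1]) + r"[\w-]*"
    return r"\b" + re.escape(term) + r"\b"


def matches_query(text: str, groups=QUERY_GROUPS) -> bool:
    """Return True if every OR-group has at least one term present in the text."""
    for group in groups:
        pattern = "|".join(term_to_regex(t) for t in group)
        if not re.search(pattern, text, flags=re.IGNORECASE):
            return False
    return True
```

For example, a title about autonomous harvesting robots in agriculture fails the construction group and is rejected, while a title about autonomous robotic layout on a construction site using reinforcement learning passes all five groups.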

The publication period was defined as 1 January 2015 to 31 December 2024, reflecting a decade of notable progress in AI and robotics and aligning with the emergence of Industry 4.0, BIM, and digital twin technologies, which have profoundly influenced the construction industry [52,53,54]. This timeframe balances the need for a substantial dataset with the inclusion of seminal works, avoiding outdated studies while retaining key developments that shape the field.

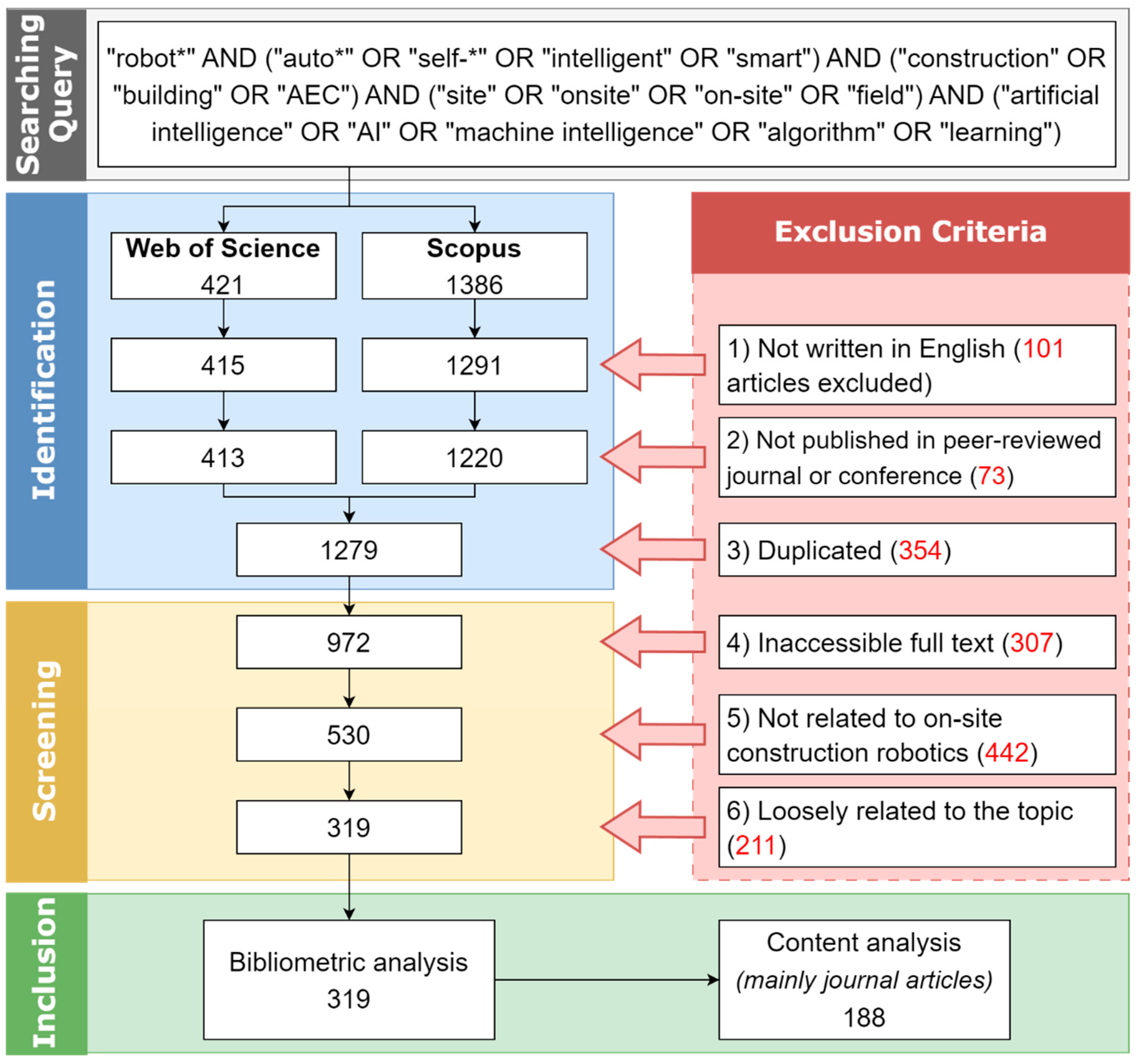

2.2. Data Selection Following PRISMA Guidelines

The initial search yielded 1807 publications. A systematic selection process aligned with PRISMA guidelines reduced this number to 319 articles for detailed review. Figure 2 depicts this process as a flow diagram together with the exclusion criteria, which ensure methodological rigor and clarity. The exclusion criteria cover non-English publications, non-peer-reviewed publications, duplicates, inaccessible full texts, and thematic irrelevance. Specifically, studies not directly addressing AI-driven autonomous construction robotics were excluded on thematic grounds, such as those focused on unrelated fields (e.g., agriculture and factory manufacturing) or only loosely related to the topic (e.g., mentioning construction robotics merely as an example), ensuring relevance to the STA framework. In the identification stage, 174 publications were excluded for being non-English, not peer-reviewed, or retracted despite the initial filters. Duplicate entries were then eliminated, leaving 1279 articles. During screening, 307 articles were removed because their full texts were unavailable. Subsequent title and abstract reviews excluded 442 studies related to factory manufacturing or unrelated fields (e.g., agriculture, logistics, and psychology) and 211 articles only loosely related to AI in construction robotics (e.g., mentioning it as an example or future prospect). Ultimately, 319 articles, comprising both conference papers and journal articles, were selected for bibliometric analysis, of which 188 journal articles were chosen for content analysis. Conference papers were included in the bibliometric analysis to capture a wide range of trends reflecting recent advancements, despite their often preliminary nature. Journal articles were prioritized in the content analysis because they undergo stricter peer review, which strengthens methodological validation and reliability. Additionally, journal articles typically offer more comprehensive details than conference papers, which is critical for in-depth content analysis.
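Because the stage counts of a PRISMA flow must chain together exactly, a quick arithmetic check helps catch transcription errors. The sketch below recomputes the flow from the numbers stated above. The duplicate count and the conference-paper count are derived, not reported in the text, and the latter assumes the included set contains only journal articles and conference papers, as the search restrictions state.

```python
# Stage counts reported in the text.
initial = 1807
removed_identification = 174   # non-English, not peer-reviewed, or retracted
after_deduplication = 1279
removed_no_full_text = 307
removed_unrelated = 442        # factory manufacturing or unrelated fields
removed_loosely_related = 211
journal_articles = 188         # subset used for content analysis

# Derived quantities (not stated explicitly in the text).
duplicates = initial - removed_identification - after_deduplication
screened_full_text = after_deduplication - removed_no_full_text
included = screened_full_text - removed_unrelated - removed_loosely_related
# Assumes the included set holds only journal articles and conference papers.
conference_papers = included - journal_articles

print(duplicates, screened_full_text, included, conference_papers)  # → 354 972 319 131
```

The recomputed total matches the 319 articles reported, which implies that 354 duplicates were removed and that 131 conference papers entered the bibliometric analysis.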

2.3. Bibliometric and Content Analysis

To explore the research landscape of AI-driven construction robotics within the STA framework, bibliometric analysis was conducted using VOSviewer version 1.6.20 and Python version 3.10.15, complemented by a content analysis of the selected journal articles. VOSviewer was employed to examine bibliometric data, revealing research trends, influential works, and citation networks. This tool generates distance-based maps from large datasets, where node proximity indicates relationship strength. Python was used to preprocess data and visualize the global distribution of research via Plotly version 6.0.0, producing an intuitive world map.
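The preprocessing behind the world map is not described in detail. One plausible sketch is shown below. It assumes each exported record carries a list of affiliation countries and that a publication counts once for every distinct country involved (full counting). The records, the function name, and the `min_count=2` threshold, which mirrors the "more than one publication" cut-off used for the map, are illustrative assumptions.

```python
from collections import Counter

# Hypothetical records: each publication lists its authors' affiliation countries
# (repeats allowed, e.g. two co-authors from the same country).
records = [
    {"id": "P1", "countries": ["China", "China", "Hong Kong"]},
    {"id": "P2", "countries": ["United States"]},
    {"id": "P3", "countries": ["China", "United States"]},
    {"id": "P4", "countries": ["Germany"]},
    {"id": "P5", "countries": ["United States", "United States"]},
]


def publications_per_country(records, min_count=2):
    """Count each publication once per distinct country (full counting),
    keeping only countries with at least `min_count` publications."""
    counts = Counter()
    for rec in records:
        counts.update(set(rec["countries"]))
    return {country: n for country, n in counts.most_common() if n >= min_count}


country_counts = publications_per_country(records)
print(country_counts)
```

The resulting dictionary could then be handed to a mapping library. For example, Plotly Express's `choropleth` function accepts country names when called with `locationmode="country names"`. Fractional counting, which splits each publication across its countries, is an equally valid design choice and yields different totals.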

For the content analysis, only journal articles were included to ensure methodological robustness and depth. These articles were systematically reviewed to identify AI techniques, robotic applications, research scope, and performance metrics for on-site construction, organized within the STA framework. Emphasis was placed on algorithm development, experimental validation, and practical deployment challenges, providing insights into technological progress and remaining gaps in the field.

3. Results of Bibliometric Analysis

This section presents a comprehensive bibliometric analysis of AI-driven robotics in construction, offering a systematic and quantitative assessment of the field’s development. It explores publication trends by year and country, research trends, key contributors, and collaborative networks, providing insights into the evolution of this research domain.

3.1. Overview of Publications by Year and Country

The temporal distribution of publications, depicted in Figure 3, shows a significant increase in research output after 2021, marking a critical shift in scholarly attention toward AI-driven robotics in construction. Despite a slight decrease in 2024 after a peak in 2023, the number of publications remains well above pre-2021 levels, indicating that although output may be stabilizing, the field remains highly active and influential. Notably, journal articles exhibit consistent growth, whereas conference papers increase less, suggesting a transition from preliminary conference-based explorations to more formalized, peer-reviewed journal publications. This increase likely reflects advancements in AI technologies, such as deep learning and reinforcement learning, which have enhanced robotic capabilities in construction. The COVID-19 pandemic further accelerated this trend by increasing the need for robotic automation that avoids human contact while sustaining productivity [55]. Regional policy initiatives and funding programs have also supported the observed growth.

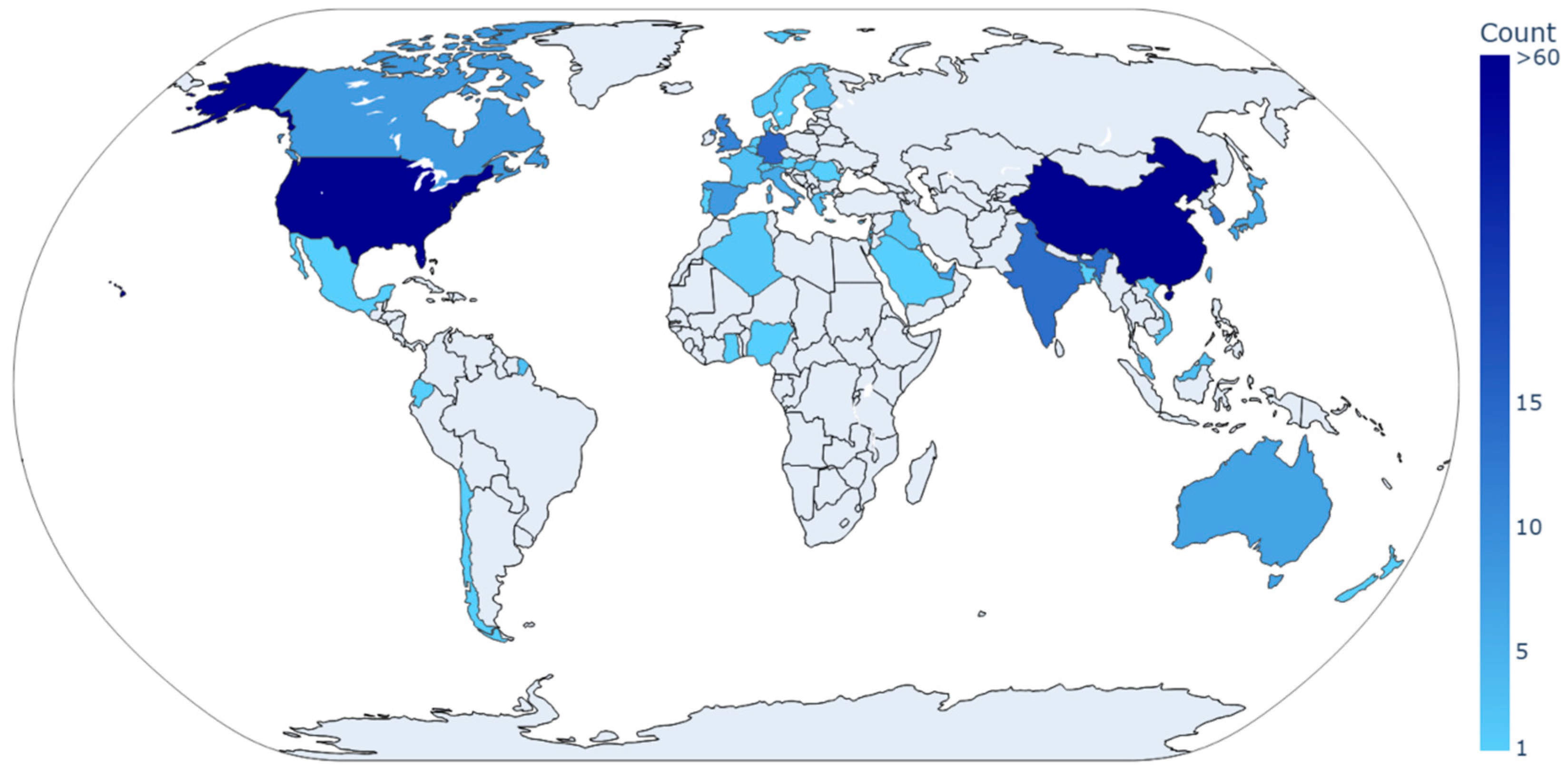

Geographically, China and the United States lead the field with 76 and 65 publications, respectively, together accounting for nearly half of the total output. This concentration underscores their dominant roles in advancing AI-driven construction robotics. Government policies promoting AI and robotics development, such as the US National Robotics Initiative and China’s “Made in China 2025” strategy, have likely contributed to this leadership by encouraging research in this area. Significant contributions also come from European and Asian countries and regions, including Germany (15 publications), India (14), South Korea (12), the United Kingdom (11), and Hong Kong (11), reflecting moderate yet impactful research activity. Emerging efforts elsewhere further indicate broadening global interest in AI-driven robotics for on-site construction automation. Figure 4 illustrates the geographical distribution of countries with more than one publication.

3.2. Citation and Publication Analysis

This section outlines key findings on highly cited articles, major publication sources, and collaborative authorship patterns in the field of AI-driven construction robotics. This analysis provides a clear summary of the research field and supports collaboration by pointing out significant studies, top publication outlets, and leading researchers who have shaped the field.

Table 2 lists the 10 most frequently cited articles among the selected publications. The most-cited work is a review article titled “Utilizing Industry 4.0 on the Construction Site: Challenges and Opportunities” by C. J. Turner et al., published in 2021, which had received 180 citations by early 2025 and offers a research plan for integrating Industry 4.0 technologies into construction [56]. The other highly cited papers have comparable citation counts, typically above 100. Notably, half of these highly cited works were published in 2021, coinciding with the sharp rise in research activity shown in Figure 3. This suggests that AI-driven robotics in construction began to draw significant attention around that time, resulting in a rapid increase in published studies.

Tracking leading publication sources is a common practice in bibliometric studies to understand research trends and to guide authors on where to submit their work for greater visibility and impact. Table 3 lists the most cited journals and conferences separately. The range of sources, such as Automation in Construction and IEEE Robotics and Automation Letters, reflects the field’s cross-disciplinary nature. Journals are the main outlets for impactful research, with Automation in Construction in the lead: it has the highest number of articles (25) and total citations (910), confirming its key role in this area. Other journals also contribute significantly, including Advanced Engineering Informatics (five articles, 200 citations) and IEEE Transactions on Industrial Informatics (11 articles, 179 citations). Notably, the latter has the highest average citations per article, with one paper cited 180 times. Although conferences produce fewer publications than journals, they are valuable for sharing new and exploratory research. For example, the “IEEE International Conference on Intelligent Robots and Systems” has six papers that together have 96 citations. These results show that researchers favor journals for well-established findings, which aligns with the growing focus on peer-reviewed journal articles seen in Figure 3.

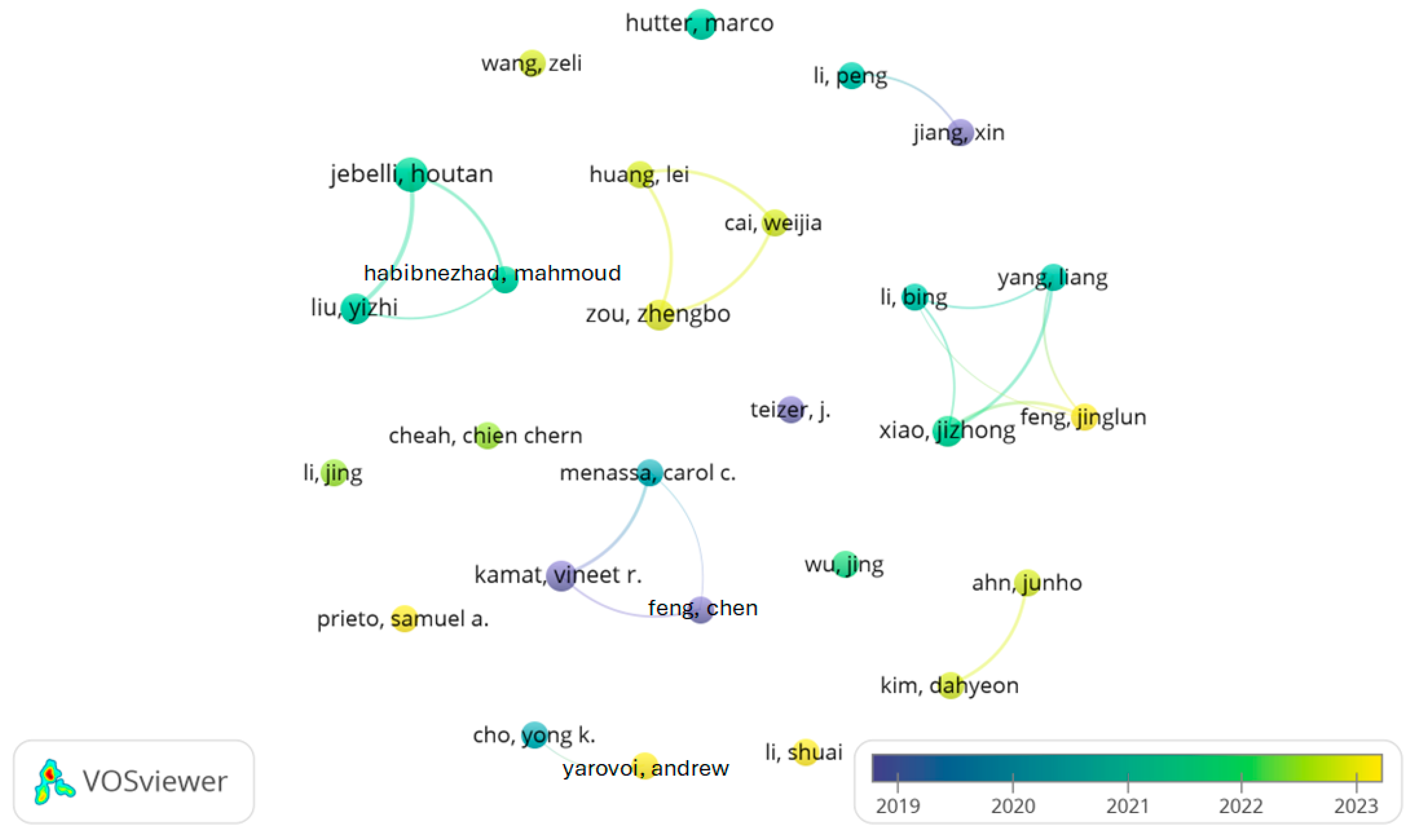

Studying co-authorship is important for understanding teamwork and identifying the scholars and institutions driving innovation. Figure 5 shows a time-overlaid co-authorship network created using VOSviewer that includes only authors with at least three publications, so 27 of the 1244 authors are displayed. Colors represent the average publication year of each author’s papers. The largest connected group, on the central right, includes researchers such as J. Xiao and L. Yang. Other major clusters are those of Liu et al. (central left), Zou et al. (central upper), and Kamat et al. (central lower). Some smaller, unconnected groups also exist, hinting at opportunities for future collaboration among researchers. Regarding publication timing, the yellow clusters represent researchers who have entered this research domain in recent years. Larger groups whose publications average between 2022 and 2023 suggest that these individuals may emerge as influential rising stars in this area. For instance, the central upper cluster led by Zou et al. bridges AI, advanced automation, and construction robotics, showcasing collaboration between computer science and construction expertise.
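The filtering and coloring described above can be approximated in a few lines. The sketch below, built on invented records, keeps authors with at least `min_docs` publications and computes each author's average publication year, which drives the overlay color. It then counts co-authored papers between retained authors as link weights using full counting, one of the counting methods VOSviewer offers. It does not reproduce VOSviewer's layout or clustering, and `author_overlay` is a hypothetical helper.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical publication records: (year, list of authors).
papers = [
    (2020, ["A. Author", "B. Author"]),
    (2022, ["A. Author", "C. Author"]),
    (2023, ["A. Author", "B. Author"]),
    (2023, ["B. Author", "C. Author"]),
    (2024, ["D. Author"]),
]


def author_overlay(papers, min_docs=3):
    """Return ({author: (n_docs, average_year)}, {(author_i, author_j): n_joint_papers})
    restricted to authors with at least `min_docs` publications."""
    years = defaultdict(list)
    for year, authors in papers:
        for a in set(authors):
            years[a].append(year)
    kept = {a: (len(ys), mean(ys)) for a, ys in years.items() if len(ys) >= min_docs}

    # Full counting: each joint paper adds 1 to the link between two retained authors.
    links = defaultdict(int)
    for _, authors in papers:
        selected = sorted(a for a in set(authors) if a in kept)
        for i in range(len(selected)):
            for j in range(i + 1, len(selected)):
                links[(selected[i], selected[j])] += 1
    return kept, dict(links)


nodes, edges = author_overlay(papers)
```

In this toy data, only "A. Author" and "B. Author" reach three publications. Their link weight of 2 reflects the two papers they co-wrote, and their average years (about 2021.7 and 2022) would set their node colors.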

3.3. Keyword Occurrence Analysis

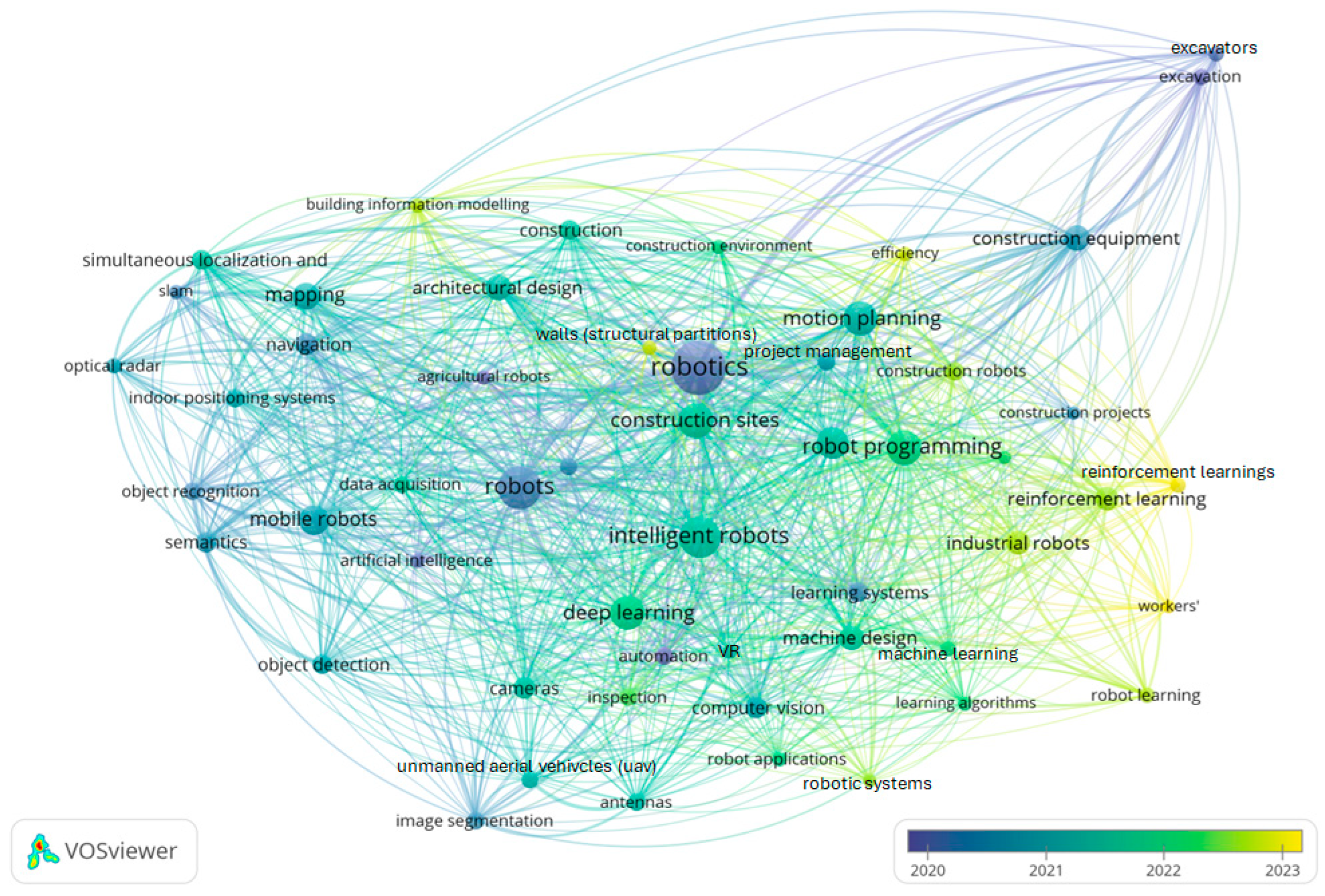

Occurrence analysis of author keywords has been widely adopted in bibliometric studies because it highlights both established and emerging research areas and reveals how research interests in the field have evolved over recent years. Figure 6 illustrates the time-overlaid author keyword occurrence map generated by VOSviewer, in which nodes represent keywords, and the width of the connecting lines and the distances between nodes indicate the strength of their relationships. At the core of the network, frequently occurring keywords, such as “robotics” and its synonyms, along with “construction sites” and “intelligent robots,” are highly connected in central positions, reflecting their foundational roles in the research topic. Research related to “motion planning,” “programming,” and “deep learning” has also remained consistently active, reflecting advancements in the control of construction robotics. At the distal end, specialized construction equipment, such as the “excavator,” is connected but relatively isolated from the central network, suggesting that applications of AI-driven robotics to excavation tasks are only loosely related to the core research.

Clusters of emerging research topics can be observed in the yellow-shaded regions, including “building information modeling,” “efficiency,” “reinforcement learning,” “workers’,” and “robot learning.” These trends indicate a shift toward adaptive and HRC-oriented approaches that leverage BIM technologies to enhance robot autonomy in dynamic construction environments. Similarly, newer research is exploring “virtual reality,” “inspection,” and “walls (structural partitions),” highlighting the increasing integration of virtual simulation and automated monitoring of specific building elements in construction project management. Connections between terms such as “robot learning” and “inspection” point to growing attention to autonomous monitoring systems, reflecting the field’s interdisciplinary evolution. Together, these newer themes suggest movement toward intelligent, safe, and digitally integrated construction solutions.
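Keyword maps of this kind start from occurrence and co-occurrence counts. The sketch below uses invented keyword lists and counts each keyword and keyword pair once per publication. It then normalizes co-occurrences with one common form of the association strength measure, s_ij = c_ij / (c_i c_j), which discounts pairs that co-occur merely because both keywords are frequent. VOSviewer's exact normalization options and its mapping and clustering steps are not reproduced, and the helper names are hypothetical.

```python
from collections import Counter
from itertools import combinations

# Hypothetical author-keyword lists, one per publication, with synonyms
# (e.g. "robots" / "robotics") assumed to be merged beforehand.
keyword_lists = [
    ["robotics", "construction sites", "deep learning"],
    ["robotics", "reinforcement learning", "building information modeling"],
    ["robotics", "construction sites", "motion planning"],
    ["excavator", "deep learning"],
]


def cooccurrence(keyword_lists):
    """Count keyword occurrences and pairwise co-occurrences, once per document."""
    occ = Counter()
    co = Counter()
    for kws in keyword_lists:
        unique = sorted(set(kws))  # sorting gives each pair a canonical order
        occ.update(unique)
        co.update(combinations(unique, 2))
    return occ, co


def association_strength(occ, co):
    """Normalize each co-occurrence count: s_ij = c_ij / (c_i * c_j)."""
    return {(i, j): c / (occ[i] * occ[j]) for (i, j), c in co.items()}


occ, co = cooccurrence(keyword_lists)
strength = association_strength(occ, co)
```

In this toy data, “construction sites” and “robotics” co-occur twice. Because “robotics” appears in three of the four lists, their normalized strength is only 1/3.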

The bibliometric analysis reveals a marked rise in publications after 2021, signaling heightened interest in AI-driven robotics as a response to construction challenges such as labor shortages and safety concerns. This surge aligns with the global push toward Industry 4.0 [56], indicating a paradigm shift toward automated, efficient practices. The concentration of research in China and the US, which together account for nearly half of all outputs, reflects their innovation dominance, while growing contributions from Europe and other parts of Asia, driven by needs such as efficiency and robot autonomy, show that the field is expanding internationally. The growing share of journal articles relative to conference papers further suggests a maturing field. These trends underscore the strategic importance of autonomous robotics in addressing modern construction challenges, with the potential to redefine construction workflows, from automated navigation to real-time site monitoring, using emerging technologies such as BIM and reinforcement learning. These patterns are not merely statistical; they indicate a broader convergence of technological priorities, with implications for international collaboration and competition in construction robotics. This analysis sets the stage for understanding how the technological advancements explored in the next section align with these global trends.

4. Function-Centric Review Based on Content Analysis

The bibliometric findings provide a quantitative foundation for understanding the research landscape, highlighting the field’s strategic evolution. To fully grasp the technological drivers behind this growth, we turn to a content analysis framed by the STA paradigm, which is a widely adopted architecture reflecting the core operational cycle of autonomous robotics [64,65]. This method ensures that research is categorized in alignment with the fundamental capabilities of robotic systems, enabling a structured and comprehensive review of advancements in perception (“sense” component), decision-making (“think” component), and actuation (“act” component), while emphasizing their interconnected roles in enabling autonomous systems. By linking the macro-level trends from the bibliometric analysis to the micro-level technological developments below, we ensure a cohesive narrative that bridges data and application.

4.1. “Sense” Component—Perception and Environment Understanding

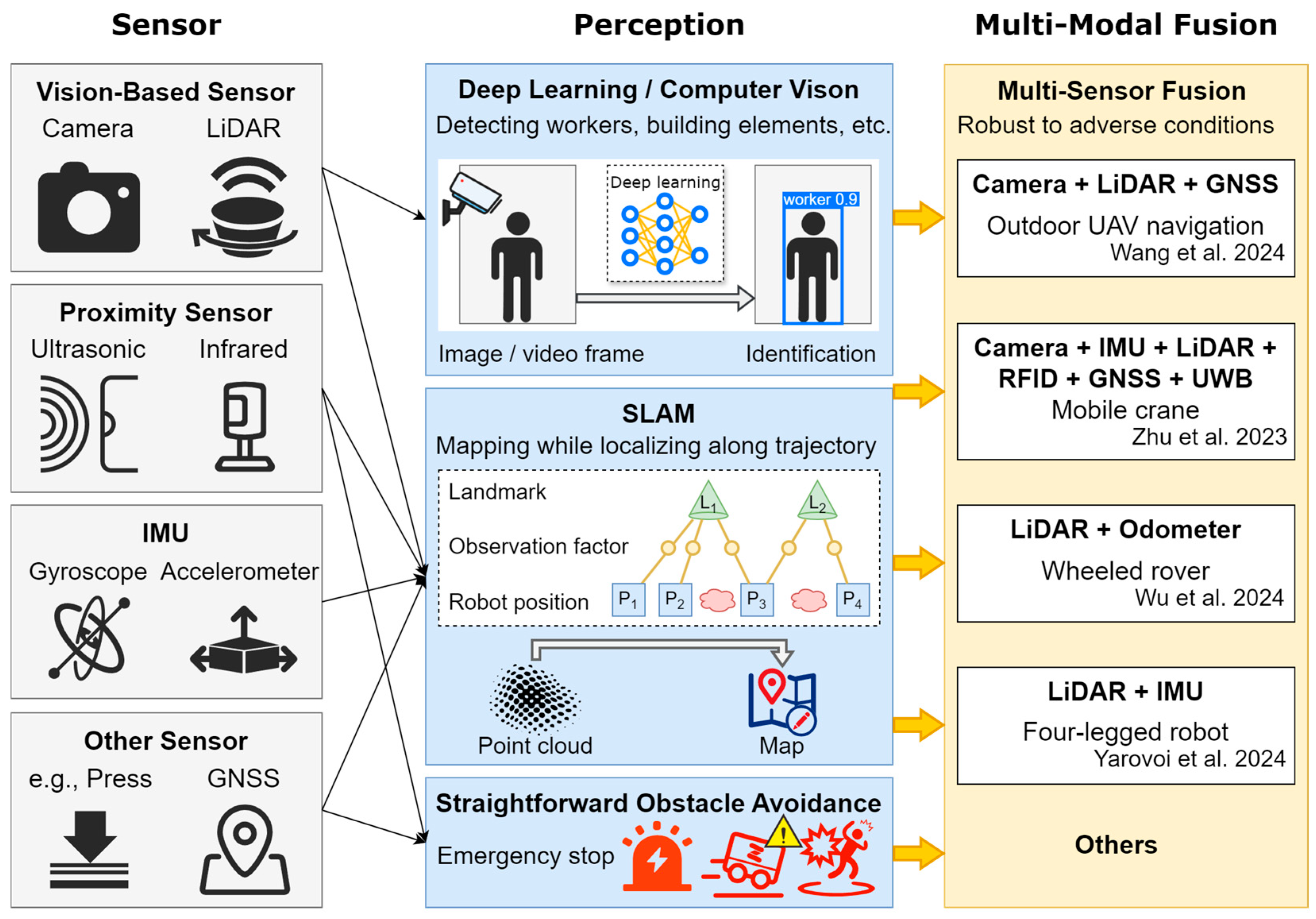

In the STA framework of autonomous construction robotics, the “sense” component relies on sensors and perception technologies, enabling robots to understand the environment and information related to task execution. This section synthesizes the capabilities of these technologies, critiques their limitations in real-world construction contexts, and assesses their maturity for practical deployment.

4.1.1. Sensing Technologies

Automated construction robots rely on a range of sensing modalities to interpret their surroundings, and various cutting-edge sensing technologies have been developed for and applied to autonomous construction robotics. Vision-based sensors are widely used for environmental perception: cameras capture visual data that support object recognition and environmental mapping. For instance, Zheng et al. investigated a video image-based system for automatic building detection and human tracking on unmanned aerial vehicles (UAVs) [16]. However, camera performance can degrade in low lighting conditions. LiDAR emits laser pulses to create precise 3D point-cloud maps and functions effectively under various lighting conditions, thus addressing some limitations of RGB cameras. You et al. jointly used RGB cameras and LiDAR to implement a non-contact building measurement method [66]. In addition, proximity sensors, such as ultrasonic and infrared sensors, which detect reflected high-frequency sound waves and infrared radiation, respectively, provide simpler feedback that aids obstacle avoidance. Yang et al. employed a proximity-based sensing method for construction worker safety monitoring [67]. Tactile sensors, such as touch and force sensors, detect physical contact and measure applied pressure when construction robots perform pick-and-place tasks [9]. Wallace et al. used an array of RGB-D cameras, force sensors, and precise odometry data to provide feedback for the multi-modal teleoperation of heterogeneous robots within a construction environment simulated in virtual reality (VR) [68]. IMU sensors and global navigation satellite systems (GNSS) are commonly employed to monitor movement and orientation and to maintain balance during mobile robot navigation [69]. Some researchers have used proximity sensors to slow or halt robotic arms when a human is detected nearby, ensuring safety in HRC [70,71].
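As an illustration of the proximity-based safety behavior described above, the sketch below converts an ultrasonic echo time into a distance, taking half the round-trip path at the speed of sound. It then maps that distance to a speed override for a robotic arm. The stop and slow-down distances are hypothetical values, not parameters from the cited studies. Safety standards such as ISO/TS 15066 define speed and separation monitoring far more rigorously, accounting for robot stopping time and human approach speed.

```python
SPEED_OF_SOUND_M_S = 343.0  # approximate value in dry air at about 20 °C


def ultrasonic_distance(echo_time_s: float, speed_of_sound: float = SPEED_OF_SOUND_M_S) -> float:
    """Convert a round-trip echo time (seconds) into a one-way distance (metres)."""
    return speed_of_sound * echo_time_s / 2.0


def speed_scale(distance_m: float, stop_dist: float = 0.5, slow_dist: float = 2.0) -> float:
    """Return a speed override in [0, 1]: a stop inside `stop_dist`,
    full speed beyond `slow_dist`, and linear scaling in between.
    Both thresholds are hypothetical illustration values."""
    if distance_m <= stop_dist:
        return 0.0
    if distance_m >= slow_dist:
        return 1.0
    return (distance_m - stop_dist) / (slow_dist - stop_dist)
```

For example, an echo returning after 10 ms corresponds to about 1.7 m, so the arm would run at roughly 80% of its nominal speed.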

4.1.2. Deep Learning-Based Computer Vision

DL-based computer vision relies on neural networks to learn features directly from images or video in an accurate and automated way. Convolutional neural networks (CNNs) are commonly trained on labeled images to detect objects such as workers, machinery, building elements, and construction waste [72]. Wang et al. employed CNNs to detect and track workers and machinery for hazard identification [73]. Region-based CNN (R-CNN) and its successors, such as Faster R-CNN and Mask R-CNN, perform localized detection by first generating region proposals; successive refinements of this framework improved speed and accuracy, and Mask R-CNN additionally predicts pixel-level instance masks. Tung et al. adopted Mask R-CNN to detect window weld seams and guide a robot manipulator during cleaning, reporting a mean average precision of 95% (less than 1 cm) [74]. Kim et al. used Mask R-CNN with a camera and LiDAR to detect floor surfaces, atypical obstacles, and people, facilitating fast search and rescue work in damaged buildings [75,76]. You Only Look Once (YOLO) is a commonly used object detection model that identifies and localizes instances of predefined classes in visual inputs; its speed enables real-time scanning of video feeds to identify hazards or navigable routes [75,77]. Additionally, some researchers have implemented end-to-end DL pipelines that integrate detection, classification, and scene understanding, streamlining the entire sense–think–act loop by providing immediate semantic data to the “think” component [78].
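Both region-proposal detectors (the R-CNN family) and single-stage detectors such as most YOLO versions typically output many overlapping candidate boxes, which are pruned with non-maximum suppression (NMS). Intersection over union (IoU) is also the overlap criterion used when matching detections to ground truth for mean average precision. The sketch below shows a generic, class-agnostic version of both. The box format, the 0.5 threshold, and the function names are assumptions, and production detectors usually apply NMS per class and on GPU.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes: List[Box], scores: List[float], iou_thresh: float = 0.5) -> List[int]:
    """Greedy NMS: keep the highest-scoring box, drop boxes that overlap it by more
    than `iou_thresh`, and repeat. Returns the indices of the kept boxes."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_thresh]
    return keep
```

For boxes (0, 0, 10, 10), (1, 1, 11, 11), and (20, 20, 30, 30) with scores 0.9, 0.8, and 0.7, the second box overlaps the first with IoU 81/119 ≈ 0.68 and is suppressed, while the distant third box is kept.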

4.1.3. Simultaneous Localization and Mapping

SLAM enables a construction robot to map its environment while simultaneously estimating its own location and trajectory within that map [44]. Visual SLAM uses camera images to build and maintain a map, matching features from frame to frame to estimate the robot’s trajectory. It is compatible with various camera types, such as monocular [79], stereo [80], and RGB-D cameras [15]. However, it is prone to scale drift, especially with monocular RGB cameras, and degrades in low lighting conditions. LiDAR-based SLAM, such as LiDAR odometry and mapping (LOAM), uses point clouds from LiDAR sensors for accurate distance measurement and obstacle detection, even in low-texture and low-lighting conditions [81]. Kim et al. developed SLAM-driven autonomous navigation for a mobile robot used in construction progress monitoring, safety hazard identification, and defect detection [82].
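LiDAR odometry estimates the robot's motion by registering consecutive scans. A minimal sketch of one registration step, assuming point correspondences are already known, is the closed-form least-squares 2D rigid alignment below. It is a 2D special case of the Kabsch/Umeyama solution, without scaling. ICP-style methods alternate this step with nearest-neighbor correspondence search, and LOAM instead matches edge and planar features in 3D. The sketch therefore illustrates the principle, not LOAM itself, and `align_2d` is a hypothetical name.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def align_2d(source: List[Point], target: List[Point]) -> Tuple[float, float, float]:
    """Least-squares rigid transform (theta, tx, ty) that maps source onto target,
    assuming source[k] corresponds to target[k]."""
    n = len(source)
    sx = sum(p[0] for p in source) / n
    sy = sum(p[1] for p in source) / n
    qx = sum(q[0] for q in target) / n
    qy = sum(q[1] for q in target) / n
    # Accumulate dot and cross products of the centred point pairs;
    # their ratio gives the optimal rotation angle.
    dot = cross = 0.0
    for (px, py), (gx, gy) in zip(source, target):
        ax, ay = px - sx, py - sy
        bx, by = gx - qx, gy - qy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # The translation moves the rotated source centroid onto the target centroid.
    tx = qx - (c * sx - s * sy)
    ty = qy - (s * sx + c * sy)
    return theta, tx, ty
```

Chaining such relative transforms between consecutive scans yields an odometry trajectory. Because small errors accumulate, full SLAM systems add mapping and loop closure to correct the resulting drift.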

4.1.4. Multi-Modal Sensor Fusion

Multi-modal sensor fusion combines data from multiple sensors (e.g., camera, LiDAR, IMU, and GNSS) to enhance perception robustness, adaptability, and accuracy compared with single-sensor sensing. Kalman filters and other Bayesian estimators, as well as deep networks, merge heterogeneous data into a consistent world model. Cameras are frequently integrated into multi-sensor systems to perceive environments. For instance, Kim et al. developed a fire rescue robot that recognizes collapsed areas and rescuers in adverse indoor conditions by fusing data from a camera and a 3D LiDAR connected in parallel: the camera detects colors and people using YOLOv4, while the LiDAR localizes the robot, builds an indoor map, and estimates distances to actual objects [75,83]. Wang et al. designed a fusion algorithm for UAVs using a fisheye camera, an RGB-D camera, a LiDAR, and GNSS, achieving seven-fold energy savings while maintaining planning success rates of 98% in simulation scenarios [27].
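The Kalman filter mentioned above can be illustrated in its simplest scalar form. The sketch assumes a robot moving along one axis. A dead-reckoned displacement from odometry or an IMU serves as the prediction, and a GNSS-like absolute fix serves as the measurement; all variances are made-up values. Real systems fuse multi-dimensional states (position, velocity, orientation), often with extended or unscented variants.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    mean: float  # position along one axis, metres
    var: float   # variance of the position estimate, m^2


def predict(est: Estimate, displacement: float, process_var: float) -> Estimate:
    """Propagate the estimate with an odometry/IMU displacement; uncertainty grows."""
    return Estimate(est.mean + displacement, est.var + process_var)


def update(est: Estimate, measurement: float, meas_var: float) -> Estimate:
    """Fuse an absolute position measurement (e.g. a GNSS fix); uncertainty shrinks."""
    gain = est.var / (est.var + meas_var)  # Kalman gain, between 0 and 1
    mean = est.mean + gain * (measurement - est.mean)
    var = (1.0 - gain) * est.var
    return Estimate(mean, var)


est = Estimate(mean=0.0, var=0.01)
est = predict(est, displacement=1.0, process_var=0.04)  # odometry reports +1.0 m
est = update(est, measurement=1.2, meas_var=0.25)        # noisy GNSS-like fix
```

The fused position (about 1.03 m) lies between the prediction (1.0 m) and the measurement (1.2 m), closer to the more certain prediction. Its variance (about 0.042 m²) is lower than that of either input.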

4.1.5. Comparative Summary and Maturity in Construction Context

In comparison, cameras, particularly RGB variants, offer high-resolution, cost-effective, and versatile solutions for tasks like object recognition, environmental mapping, and tracking, though their effectiveness diminishes under poor lighting, dust, or occlusion, which are prevalent conditions on construction sites. LiDAR provides accurate 3D mapping and performs reliably across diverse lighting scenarios; however, its high cost and limited resolution for fine details constrain its broader adoption. Proximity sensors, such as ultrasonic and infrared types, are simple and affordable, excelling in close-range obstacle detection, yet their low resolution and short range limit their utility for complex perception tasks. Tactile sensors enable precise physical interactions, such as pick-and-place operations, but their complexity and high cost restrict scalability. IMU and GNSS are vital for navigation and positioning, though GNSS falters in indoor or GPS-denied environments, often requiring supplementary techniques like SLAM.

From a practical perspective, DL architectures such as CNNs enable robots to robustly detect and track on-site objects (e.g., workers and building elements), while SLAM algorithms provide reliable localization and mapping in adverse conditions (e.g., low-lighting or GPS-denied settings). Sensor fusion methods combine diverse data streams to perceive the environment robustly, compensating for the weaknesses of any single sensor. Together, these technologies advance robotic perception on real-world, unstructured sites by improving accuracy, reliability, and adaptability.

Figure 7 illustrates the aforementioned sensing hardware and AI algorithms for the “sense” component in construction robotics.

The maturity of these technologies varies. Cameras are mature and widely adopted, leveraging affordable hardware and advanced DL (e.g., YOLO) for object detection. LiDAR is gaining traction for precision tasks like navigation, though cost remains a barrier. Proximity sensors are well-established in safety roles but lack sophistication for broader use. Tactile sensors, while promising, are experimental due to scalability issues. IMU and GNSS are standard, though GNSS limitations drive SLAM advancements. Cameras and LiDAR have neared widespread use in controlled settings, while tactile and multi-modal systems need further refinement for dynamic construction environments. Common challenges include performance degradation from dust, rain, or extreme lighting, alongside integration hurdles requiring significant computational resources and calibration. Real-world deployment is further complicated by costs, maintenance needs, and compatibility with construction workflows.

In summary, advancements in sensing technologies have enhanced robots’ ability to perceive complex construction environments. These developments are not isolated; they feed into decision-making systems that rely on accurate, high-resolution data to function effectively.

4.2. “Think” Component—Reasoning and Planning Approaches

In the “think” component of the STA framework, autonomous construction robots process sensory data to determine their next actions through reasoning, decision-making, and planning. This section synthesizes findings from multiple studies into an overview of reasoning and planning approaches, critically comparing classical planning, RL, and hybrid methods.

4.2.1. Classical Path-Planning Algorithms

Classical graph-search methods, such as Dijkstra’s algorithm and A*, use grid or graph representations to find optimal or near-optimal paths [25,86]. They typically discretize the environment into nodes (e.g., grid cells or other segments), with edges defining connectivity and traversal costs that encode distance, time, safety margins, and energy use. Dijkstra’s algorithm systematically expands nodes in order of increasing cost from the start until the goal is reached, while A* adds a heuristic (e.g., the Euclidean distance to the goal) that steers the search toward the goal and thus reduces the number of nodes explored. Ye et al. implemented a real-time “safe space” concept for construction robots that accounts for worker movements by pairing an A* method for global planning with an enhanced dynamic window approach for collision avoidance [87]. Sampling-based methods, such as rapidly exploring random trees (RRTs), randomly sample the space and incrementally grow a tree until the goal is reached. Compared with A*, they can accommodate the kinematic constraints of construction vehicles such as trailers or excavators, making them suitable for high-dimensional, complexly constrained planning problems across various construction robot platforms. RRT* refines RRT paths by rewiring the tree, steadily improving path quality as sampling continues. Yang et al. combined an RRT for global pathfinding with an actor-centric RL method for local obstacle avoidance, demonstrating how classical sampling-based global planning and adaptive local control can cooperate to find feasible paths in cluttered office buildings [26]. Meanwhile, evolutionary algorithms (e.g., genetic algorithms and particle swarm optimization) emerged to handle more complex problems by mimicking natural selection or swarm behavior. Genetic algorithms, for example, maintain a population of candidate solutions (i.e., paths) and iteratively evolve it through selection, crossover, and mutation. Zhou et al. compared six global path-planning algorithms (i.e., Dijkstra, A*, RRT, RRT*, genetic algorithms, and deep Q-learning) for truck-trailers and excavators on real-world building sites and reported that the genetic algorithms produced smoother travel paths [25].
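The difference between Dijkstra’s algorithm and A* can be sketched on a small occupancy grid. This is a minimal illustration, assuming a 4-connected grid in which each cell stores a hypothetical cost of entering it (`None` marks an obstacle). Passing a zero heuristic turns the same search into Dijkstra’s algorithm. It is not the planner used in any of the cited studies.

```python
import heapq
import math
from typing import Callable, Optional

Cell = tuple[int, int]
# Each entry is the cost of entering that cell (>= 1); None marks an obstacle.
# Higher costs can encode, e.g., a safety margin around a work zone.
Grid = list[list[Optional[float]]]


def euclidean(a: Cell, b: Cell) -> float:
    return math.hypot(a[0] - b[0], a[1] - b[1])


def zero(a: Cell, b: Cell) -> float:
    return 0.0  # with a zero heuristic, A* reduces to Dijkstra's algorithm


def a_star(grid: Grid, start: Cell, goal: Cell,
           h: Callable[[Cell, Cell], float] = euclidean):
    """Return (path, cost, expanded) on a 4-connected grid, or (None, inf, expanded)."""
    rows, cols = len(grid), len(grid[0])
    g = {start: 0.0}
    parent: dict[Cell, Cell] = {}
    frontier = [(h(start, goal), start)]  # priority queue ordered by f = g + h
    closed: set[Cell] = set()
    while frontier:
        _, cell = heapq.heappop(frontier)
        if cell in closed:
            continue  # stale queue entry superseded by a cheaper one
        closed.add(cell)
        if cell == goal:
            path = [cell]
            while path[-1] != start:
                path.append(parent[path[-1]])
            return path[::-1], g[goal], len(closed)
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] is not None:
                new_g = g[cell] + grid[nr][nc]
                if new_g < g.get((nr, nc), math.inf):
                    g[(nr, nc)] = new_g
                    parent[(nr, nc)] = cell
                    heapq.heappush(frontier, (new_g + h((nr, nc), goal), (nr, nc)))
    return None, math.inf, len(closed)


site: Grid = [
    [1, 1, 5, 1],        # cell (0, 2): hypothetical extra cost, e.g. near a work zone
    [1, None, None, 1],  # None: obstacle such as a stacked material pile
    [1, 1, 1, 1],
]
path, cost, expanded_astar = a_star(site, (0, 0), (2, 3))
_, _, expanded_dijkstra = a_star(site, (0, 0), (2, 3), h=zero)
```

Because every move costs at least 1, the Euclidean heuristic never overestimates the remaining cost, so A* returns the same optimal path as Dijkstra’s algorithm (here along the bottom row, avoiding the costly cell) while expanding no more nodes on this example.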

In conclusion, classical path-planning algorithms have been widely employed for navigation in construction environments. These methods discretize the environment into nodes and edges, identifying optimal paths based on predefined criteria like distance or safety. Their computational efficiency makes them suitable for real-time applications in static settings. However, their reliance on fixed representations limits their effectiveness in dynamic and unstructured construction sites, where obstacles and layouts frequently change.
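The sampling-based family can likewise be sketched in a few lines. The basic RRT below is written under simplifying assumptions: a point robot in a 10 m × 10 m workspace, axis-aligned rectangular obstacles, an approximate collision check by dense sampling along each edge, and illustrative values for `step`, `goal_tol`, and `goal_bias`. It grows a tree from the start until a node lands near the goal. It omits the kinematic constraints of trailers or excavators, and RRT* would additionally rewire the tree to shorten paths.

```python
import math
import random

Point = tuple[float, float]
Rect = tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)


def collides(p: Point, obstacles: list[Rect]) -> bool:
    return any(x0 <= p[0] <= x1 and y0 <= p[1] <= y1 for x0, y0, x1, y1 in obstacles)


def segment_free(a: Point, b: Point, obstacles: list[Rect], step: float = 0.05) -> bool:
    # Approximate check: test evenly spaced points along the segment a-b.
    n = max(1, int(math.dist(a, b) / step))
    return all(
        not collides((a[0] + (b[0] - a[0]) * i / n, a[1] + (b[1] - a[1]) * i / n), obstacles)
        for i in range(n + 1)
    )


def rrt(start: Point, goal: Point, obstacles: list[Rect], bounds=(0.0, 10.0),
        step=0.5, goal_tol=0.5, goal_bias=0.1, max_iters=5000, seed=0):
    """Basic RRT: return a list of points from start to goal, or None."""
    rng = random.Random(seed)
    nodes = [start]
    parent = {0: None}
    for _ in range(max_iters):
        # Occasionally sample the goal itself to bias growth toward it.
        if rng.random() < goal_bias:
            sample = goal
        else:
            sample = (rng.uniform(*bounds), rng.uniform(*bounds))
        i_near = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], sample))
        near = nodes[i_near]
        d = math.dist(near, sample)
        if d == 0:
            continue
        t = min(1.0, step / d)  # extend at most `step` toward the sample
        new = (near[0] + (sample[0] - near[0]) * t, near[1] + (sample[1] - near[1]) * t)
        if not segment_free(near, new, obstacles):
            continue
        nodes.append(new)
        parent[len(nodes) - 1] = i_near
        if math.dist(new, goal) <= goal_tol and segment_free(new, goal, obstacles):
            path, i = [goal], len(nodes) - 1
            while i is not None:  # walk back to the root
                path.append(nodes[i])
                i = parent[i]
            return path[::-1]
    return None


# Hypothetical site: a wall from y = 0 to y = 7 with an opening above it.
wall = [(4.0, 0.0, 5.0, 7.0)]
route = rrt((1.0, 1.0), (9.0, 1.0), wall)
```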

4.2.2. Reinforcement Learning and Deep Reinforcement Learning

When environments are highly dynamic or partially known, as on busy construction sites, classical path-planning approaches may become inefficient or require frequent re-planning. RL offers an adaptive alternative: it optimizes an agent’s actions through trial-and-error interactions with its environment. DRL uses neural networks for function approximation, making RL applicable to the large or continuous state and action spaces commonly encountered in unstructured construction environments. RL models the decision-making of a dynamic system as a Markov decision process (MDP), defined by a four-tuple of states observed from sensors (e.g., distance to obstacles and IMU data), actions representing robot control (e.g., velocity vectors, steering angles, and manipulator joint commands), transition probabilities that an action will lead to another state, and rewards that guide the policy toward an optimal path. Two families of methods are typically used to learn the policy. Policy-based methods learn the policy directly; in actor–critic variants, an actor network learns the policy while a critic network stabilizes training by estimating value functions [26]. Value-based methods such as the Deep Q-Network (DQN) approximate the Q-value function with a neural network (a CNN when the inputs are images), mapping each state–action pair to its expected future return, and then select the action with the highest estimated return. These methods adapt to environmental changes by updating their policies and can map high-dimensional sensor data directly to actions. Zhou et al. reported that DQN can discover feasible routes but sometimes struggles to produce the smoothest paths on real-world building sites [25]. Yi et al. presented an offline RL approach that learns diverse construction-task planning (e.g., assembling blocks into structures) and can adapt to new environments [88]. Based on an extensive literature review, Liu et al. identified DRL as one of the “three pillars” shaping current construction robotics research [45].
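To connect the MDP four-tuple to an algorithm, the following sketch applies tabular Q-learning, the table-based precursor of DQN, to a hypothetical 4 × 4 site grid. States are cells, actions are the four moves, the `step` function defines the (here deterministic) transitions and rewards, and hazard cells stand in for no-go zones. The grid, rewards, and hyperparameters are illustrative assumptions; DQN would replace the table `q` with a neural network.

```python
import random

ROWS, COLS = 4, 4
GOAL = (3, 3)
HAZARD = {(1, 1), (2, 1)}                     # hypothetical no-go cells, e.g. an open pit
ACTIONS = [(0, 1), (1, 0), (0, -1), (-1, 0)]  # right, down, left, up


def step(state, a):
    """Deterministic transition P and reward R of the toy MDP."""
    r, c = state[0] + ACTIONS[a][0], state[1] + ACTIONS[a][1]
    if not (0 <= r < ROWS and 0 <= c < COLS):
        r, c = state                # bumping into the site boundary: stay put
    if (r, c) in HAZARD:
        return (r, c), -10.0, True  # entering a hazard ends the episode
    if (r, c) == GOAL:
        return (r, c), 10.0, True
    return (r, c), -1.0, False      # small step cost favours short paths


def q_learning(episodes=2000, alpha=0.5, gamma=0.95, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(r, c): [0.0] * 4 for r in range(ROWS) for c in range(COLS)}
    for _ in range(episodes):
        s = (0, 0)
        for _ in range(100):  # cap the episode length
            # Epsilon-greedy: explore at random, otherwise act greedily.
            if rng.random() < epsilon:
                a = rng.randrange(4)
            else:
                a = max(range(4), key=lambda i: q[s][i])
            s2, reward, done = step(s, a)
            target = reward if done else reward + gamma * max(q[s2])
            q[s][a] += alpha * (target - q[s][a])  # temporal-difference update
            s = s2
            if done:
                break
    return q


def greedy_path(q, start=(0, 0), max_steps=20):
    """Follow the learned policy, always taking the highest-valued action."""
    s, path = start, [start]
    for _ in range(max_steps):
        s, _, done = step(s, max(range(4), key=lambda i: q[s][i]))
        path.append(s)
        if done:
            break
    return path


q_table = q_learning()
learned_path = greedy_path(q_table)
```

After training, the greedy policy follows a shortest route to the goal that avoids the hazard cells, learned purely from rewards rather than from an explicit map search.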

RL, especially DRL, has gained prominence as an adaptive alternative. DRL enables robots to learn policies through trial and error, making it well suited to complex tasks such as path planning and assembly in evolving environments. Despite its adaptability, DRL requires significant computational resources and extensive training data, posing challenges in resource-constrained construction contexts. Furthermore, its opaque decision-making process raises concerns in safety-critical applications.

4.2.3. Hybrid Approaches

Classical path-planning algorithms struggle with large graphs and fail to react to frequently changing environments. Although RL offers adaptability and multi-objective optimization, it requires intensive data collection and challenging hyperparameter tuning. Additionally, as a black box, its low interpretability raises safety concerns that hinder its application on real construction sites. Many researchers have therefore combined multiple methods to achieve more robust planning on complex construction sites, reducing the disadvantages of relying on any single method [25,26,60,89]. Beyond identifying objects such as workers and building elements, as mentioned in Section 4.1.2, computer vision can also interpret workers’ instructions directly (e.g., through gesture recognition) to inform a robot’s decision-making. Halder et al. developed vision-based hand gesture control for construction robotics with 99.11% validation accuracy [90]. Other sensors, such as IMUs, can also be used for gesture control of 3D-printing robotic arms [91]. Hybrid combinations of BIM, SLAM, computer vision, and CNNs have been reported to facilitate the reasoning and planning of various construction robots [92,93,94].

Hybrid approaches, which integrate classical planning with RL, seek to combine the strengths of both methods. For example, classical planners can provide high-level path guidance, while RL manages low-level obstacle avoidance and real-time adjustments. These systems offer a balance of robustness and adaptability, though their design and tuning can be complex, necessitating careful integration.
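A minimal sketch of this division of labor follows, under stated assumptions. Breadth-first search on a uniform-cost grid serves as the global planner (on such grids it returns shortest paths, like A* without a heuristic), and a hand-written rule stands in for a learned RL policy at the local level. The local layer yields whenever the next waypoint falls inside a worker’s clearance zone and, after `patience` blocked steps, asks the global layer to re-plan around the zone. The clearance radius, `patience`, and all function names are hypothetical.

```python
from collections import deque

Cell = tuple[int, int]


def clearance_zone(workers, radius=1):
    """Cells within a Manhattan radius of any worker (hypothetical safety margin)."""
    return {(w[0] + dr, w[1] + dc) for w in workers
            for dr in range(-radius, radius + 1)
            for dc in range(-radius, radius + 1)
            if abs(dr) + abs(dc) <= radius}


def bfs_path(grid, start, goal, blocked=frozenset()):
    """Global layer: shortest 4-connected path on a static grid (0 = free, 1 = wall)."""
    rows, cols = len(grid), len(grid[0])
    prev = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = prev[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (0 <= nxt[0] < rows and 0 <= nxt[1] < cols
                    and grid[nxt[0]][nxt[1]] == 0
                    and nxt not in prev and nxt not in blocked):
                prev[nxt] = cell
                queue.append(nxt)
    return None


def navigate(grid, start, goal, workers_at, patience=2, max_steps=50):
    """Follow the global plan; the local layer yields near workers and,
    after `patience` blocked steps, requests a global re-plan around them."""
    plan = bfs_path(grid, start, goal)
    pos, trace, waited = start, [start], 0
    for t in range(max_steps):
        if pos == goal or plan is None:
            break
        zone = clearance_zone(workers_at(t))
        nxt = plan[plan.index(pos) + 1]
        if nxt not in zone:
            pos, waited = nxt, 0  # local layer: proceed along the plan
        else:
            waited += 1           # local layer: yield to the worker
            if waited >= patience:
                new_plan = bfs_path(grid, pos, goal, blocked=zone)
                if new_plan is not None:
                    plan, waited = new_plan, 0
        trace.append(pos)
    return trace


site = [[0] * 5 for _ in range(5)]
# A worker standing still on the straight-line route between start and goal.
trace = navigate(site, (2, 0), (2, 4), workers_at=lambda t: [(2, 2)])
```

In a learned hybrid, the hand-written `nxt not in zone` rule would be replaced by an RL policy trained to trade waiting time against detours, while the global layer keeps the route anchored to the site map.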

4.2.4. Integration of Domain Knowledge

To facilitate on-site construction tasks, construction robots should not only sense and plan independently but also consider domain knowledge specific to the construction industry. BIM and 4D data store precise geometry, material specifications, and sequences of project tasks. Path-planning and SLAM algorithms can reference BIM data to anticipate walls and columns yet to be built or to align real-time sensor data with the planned or as-built structure. For instance, Spinner and Degani enabled the explicit injection of prior knowledge from a 4D BIM (e.g., the building’s construction schedule, inter-element dependencies, surface roughness, and common installation errors) to support high-level reasoning in robotic monitoring of construction sites [92]. Villanueva used digital design data obtained from BIM/CAD models to guide path planning for machining cross-laminated timber panels [95]. Construction tasks often require robots to operate near moving people or to collaborate with workers. Guidelines for minimum clearances and hazard avoidance can be combined with path planning to reduce collisions and downtime. Ye et al. forecast worker trajectories from statistics of existing worker behavior patterns and proposed a potential collision zone between the paths of workers and robots to improve the path planning of construction robots [87]. They reported an 8% reduction in path length while preventing collisions and avoiding repeated, unnecessary local planning. Johns et al. integrated on-site scans of irregular dry stones with geometric planning software to determine the preferred placement of each stone on the fly, in less time than it takes the excavator to physically locate, grasp, and place a stone [96].
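The idea of a potential collision zone can be illustrated by checking a timed robot path against a forecast worker position. The sketch below assumes a constant-velocity worker model and a fixed clearance radius, both simplifications chosen for illustration; Ye et al. derived their forecasts from statistics of observed worker behavior, which this code does not reproduce.

```python
import math
from typing import Optional

Point = tuple[float, float]


def predict_worker(pos: Point, vel: Point, t: float) -> Point:
    """Constant-velocity forecast of the worker position at time t (an assumption)."""
    return (pos[0] + vel[0] * t, pos[1] + vel[1] * t)


def first_conflict(robot_path: list[Point], dt: float, worker_pos: Point,
                   worker_vel: Point, clearance: float = 1.0) -> Optional[int]:
    """Return the index of the first robot waypoint that falls inside the
    potential collision zone, or None if the timed path stays clear."""
    for k, p in enumerate(robot_path):
        w = predict_worker(worker_pos, worker_vel, k * dt)
        if math.dist(p, w) < clearance:
            return k
    return None


# Robot moves 1 m per second along the x-axis; the worker walks toward that line.
direct = [(float(x), 0.0) for x in range(6)]
delayed = [(0.0, 0.0), (0.0, 0.0)] + direct  # wait two time steps before setting off

conflict_direct = first_conflict(direct, 1.0, (3.0, 3.0), (0.0, -1.0))
conflict_delayed = first_conflict(delayed, 1.0, (3.0, 3.0), (0.0, -1.0))
```

Delaying the robot by two time steps clears the conflict in this example, illustrating how a planner can trade a short wait for a collision-free path instead of triggering repeated local re-planning.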

4.2.5. Comparative Summary and Maturity in Construction Context

To facilitate comparison, we evaluate these planning approaches in terms of robustness, real-time adaptability, and computational load. Classical planning approaches, such as Dijkstra’s algorithm and A*, excel in static environments owing to their high robustness and low computational demands, but they struggle to adapt to dynamic conditions. In contrast, DRL offers high adaptability, making it well suited to evolving construction sites, although it demands significant computational resources and extensive training data, and its opaque, black-box decision-making raises safety concerns. Hybrid methods, which blend classical planning with reinforcement learning, aim to balance robustness and adaptability, yet they often require moderate to high computational effort and careful tuning. Transfer learning and domain adaptation offer viable ways to ease the data and safety limitations of DRL by enabling models to leverage knowledge from related tasks or simulated environments. For instance, pre-training DRL models in simulated construction sites can reduce real-world data needs and enhance safety by refining policies in controlled settings.

In summary, while classical planning provides a reliable baseline, DRL and hybrid approaches offer greater potential for advancing the decision-making capabilities of construction robots. Modern “think” systems for autonomous construction robots can blend multiple technologies to overcome the individual limitations of each technique for robust reasoning and planning. For instance, a robot might adopt computer vision for visual scene understanding (both environment and instructions from the worker’s gestures). Its motion control can be refined by reinforcement learning, while a classical planner ensures safe navigation under constraints extracted from BIM or other domain knowledge. By combining these approaches, autonomous construction robots can be both safe and intelligent in dynamic real-world construction sites. AI algorithms, including RL and DRL, form the “thinking” core of autonomous robotics. These systems process sensory inputs to plan tasks like optimal pathfinding or resource allocation. The sophistication of these algorithms depends heavily on robust sensing, illustrating the synergy within the STA framework.

4.3. “Act” Component—Motion and Actuation

In construction robotics, the “act” component of the STA framework encompasses a diverse array of robotic systems whose sizes and structures vary significantly with task type and features. Focusing on the “act” component, this section outlines construction robots and related AI technologies in terms of their physical actions and interactions on construction sites. This perspective emphasizes the robots’ embodiment and operations, allowing them to be differentiated by physical scale and by the interactions through which they perform specific construction tasks. The section provides a comparative evaluation of robotic platforms classified by scale and critically examines the coordination and communication constraints in multi-robot and HRC systems, with a focus on real-world integration challenges.

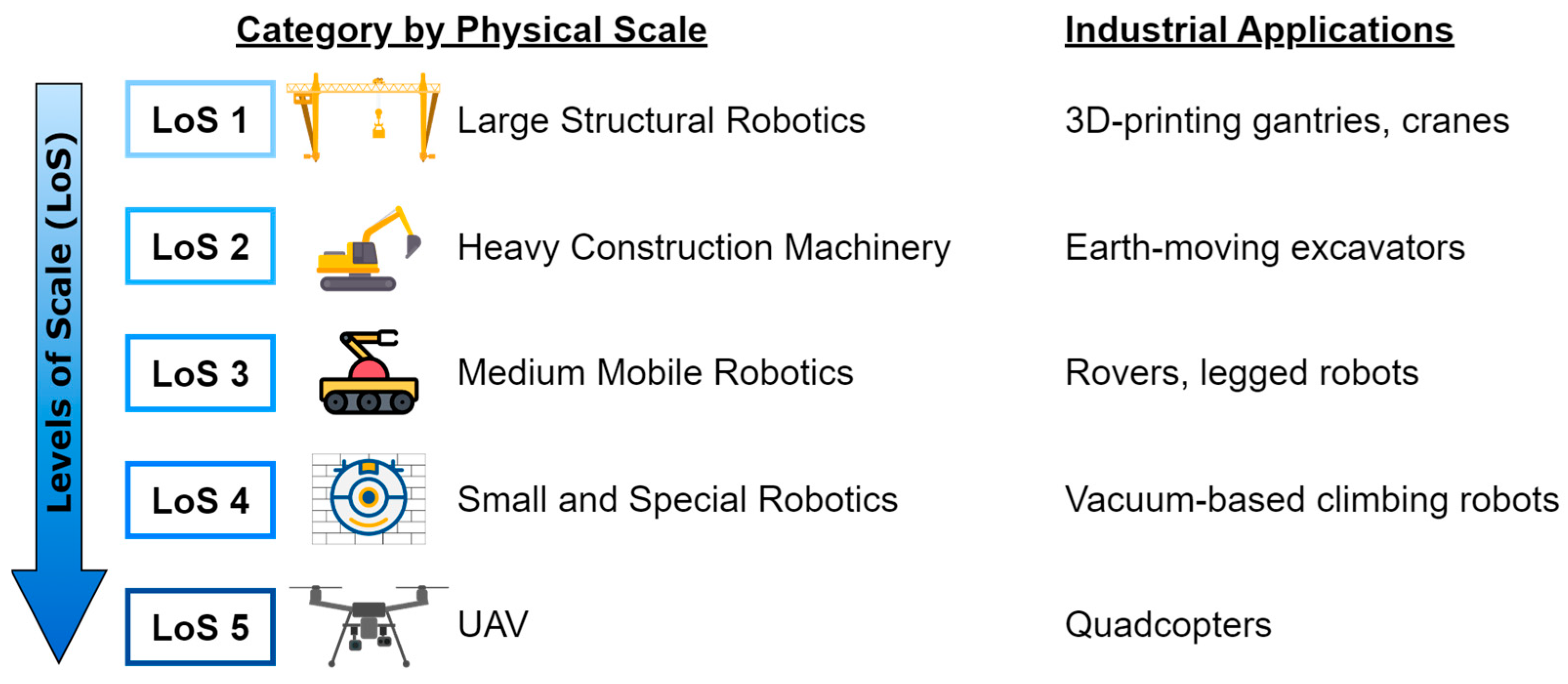

4.3.1. Level of the Scale of Robotic Platforms

Construction robotics refers to a wide spectrum of advanced machinery for automating construction-related tasks. This research systematically classifies these robots by physical scale, ranging from large structures, such as cranes, to smaller, more flexible units, such as UAVs and bio-inspired soft robots.

Figure 8 shows the five levels of scale (LoS) defined in this study. Arranged from largest to smallest, the identified categories are (a) large structural robotics, (b) heavy construction machinery, (c) medium mobile robotics, (d) small and special robotics, and (e) UAV. This section highlights their developments, industrial applications, and features.

Large structural robotics (LoS 1) uses gantry and crane systems that operate over construction sites, facilitating tasks such as heavy lifting [84], rebar tying [97], and 3D printing [46]. By automating manipulation through overhead systems with an expansive operational range, these systems minimize manual labor intensity and the risk to human workers in hazardous environments. However, setting up these large systems requires significant time and resources, and their mobility is restricted once installed (e.g., moving in a straight line along each axis [98]), making them less adaptable to dynamic site conditions. Heavy construction machinery (LoS 2) covers the automation of traditionally human-operated heavy machinery (notably, excavators [96], trucks [25], and loaders [99]) that carries out earth-moving and material transport tasks. Automating these machines enhances operational efficiency, productivity, and safety by reducing reliance on manual operation and human exposure to dangerous environments. Such automation requires effective navigation algorithms suited to unstructured terrain, with precise path planning, collision avoidance, and robust environmental perception. Additionally, the cost of retrofitting existing machinery with automation technology can be substantial [100]. Medium mobile robotics (LoS 3) typically refers to rovers and legged robots [85], which are versatile platforms engineered for diverse tasks. These systems maneuver across various terrains to conduct construction tasks with high precision and repeatability. They offer significant flexibility and adaptability, which is especially useful in dynamically changing construction environments. However, technical challenges include precise localization and mapping, power management, obstacle avoidance, and the need for robust and reliable actuation.

Unlike the aforementioned ground-based robotics (LoS 1–3), small and special robotics (LoS 4) comprises compact robots that operate directly on building surfaces, as well as other specially designed systems such as exoskeletons [101,102]. Surface-based robots use advanced adhesion mechanisms and bio-inspired technologies to climb vertical structural surfaces or reach elevated spaces and access complex areas. For instance, Minibuilders developed a grip robot whose four rollers clamp onto the upper edge of the structure, allowing it to move along the previously printed material while depositing further layers; they also developed a vacuum robot that can attach to vertical or sloped surfaces of previously printed material. However, their small size restricts the payload they can carry. UAVs (LoS 5) provide valuable aerial perspectives for surveying, site monitoring [16], construction progress assessment [103], safety inspections [104], and material localization [105]. They can be deployed quickly to cover large areas, collect high-resolution visual and sensor data, and access hazardous or difficult-to-reach locations. However, UAVs face technical challenges, including limited battery life and reduced navigation precision under adverse environmental conditions such as wind or rain [58].

In practice, robotic platforms for on-site construction vary significantly in scale, each suited to specific tasks and environments. Large structural robots, such as cranes, are indispensable for heavy lifting and material transport. Their high cost and extended deployment times reflect the complexity of their setup and operation, but they are essential for tasks such as erecting steel frameworks and 3D printing. Heavy construction machinery, including excavators and bulldozers, is widely used for earth-moving and site preparation. These machines are expensive and require moderate deployment time owing to their size and the need for skilled operators. Medium mobile robots, such as rovers and legged robots, offer versatility for tasks such as inspection and material handling; their moderate cost and relatively quick deployment make them adaptable to various construction phases. Small and special robots, such as surface-climbing robots and exoskeletons, are designed for specific tasks in confined or elevated areas. Although they can be deployed rapidly, their limited payload capacity and higher cost per unit restrict large-scale application. UAVs provide aerial perspectives for surveying and monitoring; they are cost-effective and quick to deploy but constrained by battery life and environmental factors such as wind. This comparison underscores the trade-offs between cost, deployment efficiency, and task suitability, highlighting the importance of selecting platforms based on project-specific needs.

4.3.2. Learning-Based Actuation

Imitation learning, also referred to as learning from demonstration (LfD), enables robots to learn tasks directly from human demonstrations. These methods typically rely on supervised learning from recorded expert behavior, allowing robots to mimic precise movements or tasks performed by skilled human workers. Rasines et al. automated robotic joint filling on construction sites, using teleoperated human demonstrations and imitation learning to accurately estimate motion control parameters [106]. Beyond their role in the “think” component (Section 4.2.2), RL algorithms enable construction robots to perform complex tasks without hand-crafted control instructions. They allow robots to learn optimal actions through trial and error, progressively improving construction skills such as excavation [104], assembly [105], and material placement [106]. Schmidt et al. integrated RL into autonomous shotcrete spraying robots to optimize spraying paths [18]. Notably, inverse reinforcement learning combines LfD and RL: it infers a reward function from expert demonstrations and then derives optimal task behavior through self-exploration, without requiring an explicitly defined reward function [107].
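In its simplest form, learning from demonstration is ordinary supervised regression from observed states to expert actions (behavior cloning). The sketch below fits a linear policy by least squares to a handful of hypothetical teleoperated demonstrations that map a joint gap width (mm) to a nozzle feed rate (mm/s). The feature, the action, and the numbers are assumptions for illustration and do not reproduce the method of Rasines et al.

```python
from typing import Callable


def fit_linear_policy(states: list[float], actions: list[float]) -> Callable[[float], float]:
    """Ordinary least-squares fit of action = w * state + b from demonstrations."""
    n = len(states)
    mean_s, mean_a = sum(states) / n, sum(actions) / n
    s_ss = sum((s - mean_s) ** 2 for s in states)
    s_sa = sum((s - mean_s) * (a - mean_a) for s, a in zip(states, actions))
    w = s_sa / s_ss
    b = mean_a - w * mean_s
    return lambda s: w * s + b


# Hypothetical demonstrations: wider gaps were filled with a slower nozzle feed.
gap_widths_mm = [5.0, 10.0, 15.0, 20.0]
feed_rates_mm_s = [40.0, 30.0, 20.0, 10.0]

policy = fit_linear_policy(gap_widths_mm, feed_rates_mm_s)
feed_for_new_gap = policy(12.0)  # the robot generalizes to an unseen gap width
```

Real LfD systems usually regress richer trajectories with nonlinear models, but the structure is the same: expert state–action pairs in, a policy that maps new states to actions out.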

4.3.3. Collaborations of Construction Robotics

Multi-agent collaboration involves multiple robotic systems working cooperatively on complex construction tasks, which enhances robustness to environmental uncertainties, improves productivity, and reduces the workload of individual robots. The robots coordinate their actions through inter-robot communication for task allocation, coordinated path planning, and decision-making. Duan et al. developed multi-agent RL frameworks that facilitate collaboration among multiple robots for pick-and-place tasks on construction sites, enhancing efficiency and safety [108]. Prieto et al. introduced multi-robot systems that use cooperative exploration algorithms to collect construction data effectively in complex environments [109]. Construction robots operating at remote or connectivity-constrained sites often rely on decentralized control strategies and edge computing. Petráček et al. presented a self-sustaining system that interconnects solutions for all crucial robotic tasks and uses multi-robot cooperation for the efficient homing of a robot team, enabling full autonomy in complex, unknown subterranean environments without access to GNSS [110].
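One common way to realize task allocation in multi-robot systems is a market-based auction. The greedy sequential single-item auction below is a hypothetical, centralized sketch in which bids are straight-line travel distances. It is not the multi-agent RL framework of Duan et al.; in a decentralized deployment, the same bids would be exchanged over the site network instead of being computed in one loop.

```python
import math


def greedy_auction(robots: dict[str, tuple[float, float]],
                   tasks: dict[str, tuple[float, float]]) -> dict[str, list[str]]:
    """Sequential single-item auction: in each round every robot bids its travel
    cost to each unassigned task, and the cheapest bid overall wins."""
    pos = dict(robots)                  # current (planned) position of each robot
    plan: dict[str, list[str]] = {r: [] for r in robots}
    remaining = dict(tasks)
    while remaining:
        robot, task = min(((r, t) for r in pos for t in remaining),
                          key=lambda rt: math.dist(pos[rt[0]], remaining[rt[1]]))
        plan[robot].append(task)
        pos[robot] = remaining.pop(task)  # the winner ends up at the task location
    return plan


# Hypothetical site: two robots at opposite ends, three pick-and-place tasks.
allocation = greedy_auction(
    robots={"r1": (0.0, 0.0), "r2": (10.0, 0.0)},
    tasks={"a": (1.0, 0.0), "b": (9.0, 0.0), "c": (2.0, 0.0)},
)
```

Greedy auctions are fast and easy to decentralize but are not guaranteed to be optimal; richer bids could also account for battery level or tool availability.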

Multi-robot systems enhance construction efficiency through collaborative task execution, yet they face significant coordination and communication challenges. Reliable network infrastructure is essential for real-time data sharing and task allocation among robots, but construction sites often suffer from poor connectivity due to physical obstructions and remote locations. Intermittent communication can cause task delays and reduce system robustness. Decentralized control strategies lessen reliance on central networks but demand advanced algorithms to prevent conflicts and ensure operational safety.

HRC involves humans and robots working together, combining human intelligence and adaptability with robotic precision and strength to enhance productivity and safety in construction tasks. Robots interpret human intentions and actions through sensors and advanced human-motion prediction algorithms [50,111,112,113], while safety is supported by collision avoidance algorithms and intuitive human–machine interfaces such as augmented reality [114]. Shah and Kim developed a motion-intention recognition method that applies deep learning to muscle activity data, allowing robots to predict workers’ next motions and, ultimately, improving the contextual awareness of robots for human–robot collaboration [115].

Real-world integration challenges for HRC include battery life, safety protocols, and worker acceptance. Battery limitations in mobile robots can interrupt workflows, requiring efficient power management or frequent on-site charging. Safety protocols, such as collision avoidance and emergency stop mechanisms, are critical to protect workers. Worker acceptance is another hurdle: it requires trust in robotic systems, which is built through transparent robot behavior and robust safety communication. Addressing these issues through training and intuitive interfaces is vital for effective HRC implementation.

In conclusion, while robotic platforms across various scales offer distinct advantages, their successful deployment relies on overcoming integration challenges. Actuation technologies, ranging from articulated robotic arms to diverse mobile platforms, translate decisions into physical actions. Innovations like soft robotics and reconfigurable actuators allow for greater flexibility, enabling robots to handle diverse tasks such as laying bricks or assembling modular structures. The effectiveness of these systems hinges on precise decision-making, which in turn relies on comprehensive sensory data, reinforcing the interconnectedness of the STA components.

The true potential of autonomous construction robotics lies in the integration of sensing, thinking, and acting. For instance, a robot equipped with advanced LiDAR (sense) can map a site in detail, allowing an AI system (think) to calculate the safest path through debris, which a mobile actuator (act) then executes with precision. This interplay amplifies system performance, enabling robots to tackle complex, dynamic tasks that single-component innovations cannot address alone. By framing our analysis within the STA paradigm, we highlight these synergies, offering a clearer roadmap for future development.

5. Discussion

5.1. Impacts

The adoption of the STA framework in this research sets it apart from prior reviews by providing a structured, holistic lens that uncovers these interdependencies. Additionally, the integration of bibliometric and content analyses offers a dual perspective, pairing quantitative breadth with qualitative depth. Specific insights, such as the link between publication trends and the potential of hybrid AI models, further distinguish this work, providing actionable guidance for researchers and industry leaders alike. The results of the bibliometric analysis and of the content analysis, conducted from a feature-centric perspective within the STA paradigm, show that despite growing research interest across a broad range of keywords, several aspects remain uncovered or need further development.

Our findings reveal that while individual STA components have advanced significantly, the field’s next frontier lies in their seamless integration. Sensing systems must evolve to handle the chaotic, unstructured nature of construction sites (e.g., dust, noise, and variable lighting), yet their success depends on equally robust thinking algorithms to interpret this data under uncertainty. Similarly, cutting-edge actuators can perform intricate tasks but only if guided by domain knowledge-enhanced thinking systems that account for real-world constraints like material, schedule, working sequences, or worker safety.

5.2. Challenges to Autonomous Construction Robotics

The challenges and future directions in AI-driven autonomous construction robotics are intricately tied to the STA framework, which underpins the operational capabilities of these systems. This and the next subsection synthesize the identified challenges with insights from prior literature and propose future research directions organized under the themes of hardware, software, collaboration, and implementation. By explicitly connecting these elements, we aim to contribute to the broader goal of developing robust, adaptable, and safe robotic systems for construction environments.

Dynamic and unstructured construction sites. Unlike industrial robots operating in controlled factory environments with assembly lines, construction robots face more complex environments and tasks. The unstructured nature of construction sites, including uneven terrain, partially built structures, and variable storage areas, introduces constant unpredictability into robot navigation and poses significant challenges to the “sense” and “act” components. Accurate sensing is essential for perceiving environmental features, while a reliable “act” component ensures successful task execution. Robots must not only navigate evolving geometry but also adapt to obstacles that appear or move during construction, so robust real-time mapping is essential to handle on-the-fly deviations in building geometry. Many current studies rely on vision-based sensors (e.g., cameras and LiDAR) coupled with other sensors, but environmental factors such as dust, low lighting, or bright sunlight can degrade the reliability of vision sensors. Handling such sensor uncertainties on adverse construction sites remains challenging.

Hardware constraints and over-specialization. Construction tasks range from placing large building elements to the fine placement of tiles and welding. Differences in required precision, kinematic constraints, and dynamic payloads (especially for crane-based robotics [84]) complicate the use of general-purpose construction robots. This primarily affects the “act” component, as inflexible hardware increases costs and coordination complexity. Current construction robotics platforms are often optimized for specific tasks (e.g., masonry, material transport, and steel placement), emphasizing payload, reach, or stability for a single operation. For instance, large-scale gantry printers excel at 3D-printing building shells but are ill-suited to indoor installation tasks. Overly specialized solutions populate a construction site with many distinct robotic systems, making it difficult to share roles and resources, incurring excessive costs for coordination and task allocation among robots, and restricting overall productivity gains.

Ensuring the safety of human workers, robots, and structures. Construction is a high-risk industry partly because partially built structures must support both their own weight and that of subsequently added elements. It also remains labor-intensive, necessitating close interaction between human workers and robots. Safety concerns span the “think” and “act” components, requiring predictive decision-making and precise execution: the “think” component must anticipate human movements and structural risks, while the “act” component must perform tasks safely. Although the “sense” component can detect human presence (e.g., via on-board sensors or wearable devices), anticipating human actions and preventing collisions is more complex. Any failure in sensing or motion planning can jeopardize both worker safety and the integrity of the partially built structure. Assessing safety margins when planning motion and actuation therefore remains a challenge for construction robotics.

Limited integration of construction domain knowledge. Existing robotics research often employs advanced algorithms for navigation and task allocation but lacks explicit construction knowledge, such as structural load-bearing constraints or work-sequencing dependencies. The “think” component is thus hampered by the inadequate incorporation of construction-specific knowledge. Without this information, motion-planning techniques may miss critical safety or scheduling constraints that arise in multi-phase construction projects. The lack of bidirectional links with BIM and digital twins further limits a robot’s capacity to integrate construction domain knowledge and adapt to on-site changes.

5.3. Future Directions

Future research should prioritize cross-component solutions, such as end-to-end systems that optimize sensing-to-actuation pipelines. Addressing challenges like real-time adaptability, hardware durability, and knowledge transfer will be key to scaling these technologies. Our analysis lays the groundwork for these efforts by identifying where the current strengths and gaps lie. This subsection pairs each challenge with targeted future directions, thereby clarifying how researchers and industry practitioners can address existing gaps and push the field toward robust, large-scale implementation.

Robust sensing system for complex environments. To address the challenges posed by complex construction sites, future robotic systems must go beyond degrading gracefully when sensors fail; they should also balance cost and efficiency when periodic fine-tuning is required. Such “on-the-fly” adaptation could be achieved through two complementary measures. First, multi-sensor fusion (e.g., combining LiDAR, cameras, thermal imaging, or GPS when available) enables a robot to compensate for degraded input from any single modality. Second, reinforcement learning can be layered onto classical planners to adapt motion paths in response to real-time feedback. Both techniques should be designed with computational efficiency in mind, so that partial re-planning or incremental retraining targets only the affected modules (e.g., the segment of a neural network responsible for obstacle detection under low lighting). This selective approach reduces downtime, conserves energy, and curbs the costs associated with field recalibration.
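The fusion step described above can be illustrated with a minimal sketch. It assumes, purely for illustration, that each modality reports a 2D position estimate with a known isotropic variance and a health flag set by some upstream diagnostic (e.g., a low-light detector for cameras); the names `SensorReading` and `fuse_position` are hypothetical. Inverse-variance weighting is used here as a simple stand-in for the Kalman-filter or factor-graph fusion that a deployed system would more likely employ.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass
class SensorReading:
    """One position estimate from a single modality (hypothetical structure)."""
    name: str                       # e.g. "lidar", "camera", "gps"
    position: Tuple[float, float]   # estimated (x, y) in metres, site frame
    variance: float                 # isotropic variance in m^2 (assumed known)
    healthy: bool = True            # set False by upstream diagnostics on degradation


def fuse_position(
    readings: Sequence[SensorReading],
) -> Optional[Tuple[Tuple[float, float], float]]:
    """Inverse-variance weighted fusion over the modalities that are still healthy.

    Returns ((x, y), fused_variance), or None if no usable modality remains,
    in which case the caller should fall back to a safe stop.
    """
    # Degraded modalities are excluded instead of being allowed to bias the estimate.
    usable = [r for r in readings if r.healthy and r.variance > 0]
    if not usable:
        return None
    weights = [1.0 / r.variance for r in usable]
    total = sum(weights)
    x = sum(w * r.position[0] for w, r in zip(weights, usable)) / total
    y = sum(w * r.position[1] for w, r in zip(weights, usable)) / total
    # The fused variance is never larger than that of the best single sensor.
    return (x, y), 1.0 / total
```

With a LiDAR estimate (variance 0.01 m²), a camera flagged as degraded, and a coarse GPS fix (variance 1.0 m²), the fused estimate is dominated by the LiDAR and the camera is simply excluded rather than corrupting the result; if every modality is flagged, the function returns `None`, which a planner could treat as a signal to stop safely.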

Balanced reconfigurable robotic platforms. Some researchers have proposed specialized robotic platforms, manipulators, and actuation mechanisms tailored to specific tasks to increase efficiency [31]. However, over-reliance on specialized robots leads to parallel systems that may be difficult to integrate, coordinate, or maintain on construction sites. Balancing the trade-offs between adaptability and efficiency, size and trafficability, and payload capacity and battery life is crucial for future construction robotics. Future research could develop balanced designs or quick-change tool attachments that let robots perform multiple tasks with less manual intervention. By combining standardized locomotion bases (e.g., four-legged robots or wheeled rovers) with easily swappable end-effectors (e.g., welding torches, grippers, and drilling units), a single platform can handle multiple tasks across different project phases. This multi-functionality cuts deployment, training, and maintenance costs while also simplifying scheduling, as the same robot base can transition between tasks. Additionally, shared data interfaces and power supply modules can streamline integration, enabling a scalable fleet of robots that collectively manage diverse on-site operations.

Predictive safety and human–robot collaboration. Overcoming safety challenges starts with human-intent recognition. Deep learning approaches that combine wearable-sensor data with computer vision can move beyond simple hazard detection to predict worker trajectories and tasks. This proactive approach allows the robot to reduce speed, modify its path, or pause if it anticipates a hazardous overlap. Physical safety mechanisms, such as soft materials in robotic limbs or compliant actuators, provide further protection against collisions. Collaborative autonomy protocols, such as shared decision-making loops in which humans can override or guide robot actions, help ensure that expert experience complements robotic efficiency.
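A highly simplified sketch of the “predict, then slow down or pause” behavior follows. Everything in it is an illustrative assumption: a constant-velocity model stands in for the learned trajectory predictors discussed above, and the 3 s horizon and the 1 m stop and 3 m slow-down distances are placeholder values, not recommendations. The speed-scaling rule is loosely analogous to the speed and separation monitoring used in collaborative robotics; the function names are hypothetical.

```python
import math
from typing import Tuple

Vec = Tuple[float, float]


def predict(pos: Vec, vel: Vec, t: float) -> Vec:
    """Constant-velocity extrapolation; a placeholder for a learned predictor."""
    return (pos[0] + vel[0] * t, pos[1] + vel[1] * t)


def min_predicted_separation(robot_pos: Vec, robot_vel: Vec,
                             worker_pos: Vec, worker_vel: Vec,
                             horizon: float = 3.0, dt: float = 0.1) -> float:
    """Smallest predicted robot-worker distance (m) over the horizon (s)."""
    steps = int(round(horizon / dt))
    best = math.inf
    for k in range(steps + 1):
        t = k * dt
        rx, ry = predict(robot_pos, robot_vel, t)
        wx, wy = predict(worker_pos, worker_vel, t)
        best = min(best, math.hypot(rx - wx, ry - wy))
    return best


def speed_scale(separation: float, stop_dist: float = 1.0,
                slow_dist: float = 3.0) -> float:
    """Speed multiplier in [0, 1]: pause below stop_dist, full speed above
    slow_dist, linear ramp in between (thresholds are placeholders)."""
    if separation <= stop_dist:
        return 0.0
    if separation >= slow_dist:
        return 1.0
    return (separation - stop_dist) / (slow_dist - stop_dist)
```

For a robot at (0, 0) moving at 1 m/s along x and a worker at (4, 2) walking at 1 m/s in the negative y direction, the closest predicted approach is about 1.41 m at t = 3 s, so the robot would continue at roughly 21% of its nominal speed well before the worker is actually close, rather than reacting only once the separation has already shrunk.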

Integration of construction domain knowledge. On-site construction robots usually operate in complex, high-risk environments that are heavily influenced by worker activity and machinery. Integrating construction domain knowledge into robotic systems allows them to work more effectively and safely alongside human workers. By embedding knowledge of construction tasks and procedures, robots can better recognize worker activity and predict workers’ intentions, and thus take proactive steps to facilitate their work or avoid interference. Construction domain knowledge also includes site layouts and building geometry, so a robot that integrates domain information can make more informed path-planning decisions. Domain knowledge further helps identify high-risk phases of construction: by knowing which parts of a structure can safely bear weight, and where and when risks are greatest, the robot can adjust its decision-making to maintain buffer zones and communicate warnings in real time. Future research should investigate how robots can align their tasks with 4D BIM and digital twins. Automated synchronization between physical progress and the digital model would help coordinate scheduling, material transportation, task allocation, and real-time updates to building models.
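Task dependencies of the kind encoded in a 4D BIM schedule can be represented as a directed acyclic graph, and a topological sort then yields an execution order that respects them. The sketch below uses Kahn's algorithm; the task names and dependency map are hypothetical and hand-written here, whereas in practice they would have to be extracted from the project schedule or digital twin. The helper `ready_tasks` is likewise an illustrative name, not an existing API.

```python
from collections import deque
from typing import Dict, List, Set


def _all_tasks(deps: Dict[str, Set[str]]) -> Set[str]:
    """Every task that appears either as a key or as a prerequisite."""
    return set(deps) | {p for pre in deps.values() for p in pre}


def construction_order(deps: Dict[str, Set[str]]) -> List[str]:
    """Kahn's algorithm: order tasks so every prerequisite precedes its dependents.

    deps maps each task to the set of tasks that must be finished first.
    Raises ValueError if the dependencies contain a cycle.
    """
    tasks = _all_tasks(deps)
    indegree = {t: 0 for t in tasks}
    dependents: Dict[str, List[str]] = {t: [] for t in tasks}
    for task, pre in deps.items():
        for p in pre:
            indegree[task] += 1
            dependents[p].append(task)
    # Sorting only makes the output deterministic; any valid order is acceptable.
    queue = deque(sorted(t for t in tasks if indegree[t] == 0))
    order: List[str] = []
    while queue:
        t = queue.popleft()
        order.append(t)
        for d in sorted(dependents[t]):
            indegree[d] -= 1
            if indegree[d] == 0:
                queue.append(d)
    if len(order) != len(tasks):
        raise ValueError("cyclic task dependencies")
    return order


def ready_tasks(deps: Dict[str, Set[str]], completed: Set[str]) -> List[str]:
    """Unfinished tasks whose prerequisites are all complete (safe to start)."""
    return sorted(
        t for t in _all_tasks(deps) - completed
        if deps.get(t, set()) <= completed
    )
```

A robot could call `ready_tasks` whenever progress is updated, so that it only starts work whose structural prerequisites (e.g., beams before the slab they support) are recorded as complete.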

Standardized datasets and evaluation metrics. Comprehensive datasets covering diverse construction scenarios help ensure that robotic systems trained on them generalize to real-world sites. At present, it is difficult to compare results and reproduce existing research because researchers test their methods on different platforms or use unique datasets. Many existing studies also remain largely conceptual, as they make narrow assumptions about the construction context, such as ignoring the properties of building elements or simplifying complex site environments with pre-defined worker trajectories. A standardized testing environment would enable direct comparisons, making it easier to measure progress and identify the most promising approaches. It would also facilitate collaboration and knowledge transfer between academia and industry, since teams with access to a common dataset and testing platform can build on each other’s work instead of starting from scratch.
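As one concrete example of a metric that a shared benchmark could fix in advance, absolute trajectory error (ATE), commonly reported in SLAM evaluation, is the root-mean-square of the position differences between an estimated trajectory and ground truth. The minimal sketch below assumes the two trajectories are already time-synchronized and expressed in a common frame; established benchmark tools additionally estimate a rigid-body alignment before computing the error, which is omitted here. The function name `ate_rmse` is illustrative only.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def ate_rmse(estimated: Sequence[Point], ground_truth: Sequence[Point]) -> float:
    """Root-mean-square position error between time-aligned trajectories.

    Assumes both trajectories are already synchronized and expressed in the
    same frame; the rigid alignment step used by full benchmarks is omitted.
    """
    if not estimated or len(estimated) != len(ground_truth):
        raise ValueError("trajectories must be non-empty and of equal length")
    # Squared Euclidean error at each matched timestamp.
    squared = [
        sum((e - g) ** 2 for e, g in zip(p_est, p_gt))
        for p_est, p_gt in zip(estimated, ground_truth)
    ]
    return math.sqrt(sum(squared) / len(squared))
```

Fixing details such as the alignment step, the time-association tolerance, and the units in a shared protocol is what would make numbers like this comparable across studies.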

6. Conclusions

The integration of AI into on-site construction robotics, evaluated through the STA framework, constitutes a transformative step toward overcoming persistent challenges in the construction sector. This review has traced the rapid growth and evolving focus of AI-driven construction robotics through a PRISMA-guided dual approach that combined bibliometric mapping of 319 publications with close content analysis of 188 peer-reviewed journal articles. The bibliometric analysis revealed rapid growth in research activity post-2021, reflecting a global surge in interest, with China and the US together accounting for nearly half of all publications. Key journals such as Automation in Construction and IEEE Transactions on Industrial Informatics emerged as leading outlets, while co-authorship and keyword networks highlighted growing collaboration and shifts toward topics like RL, BIM, and HRC. Through the lens of the STA framework, the content analysis has shown the following: vision and LiDAR sensors, when fused through robust SLAM and DL methods, now enable reliable perception, even under the challenging conditions of active building sites; the fusion of classical path-planning techniques with RL strategies promises greater adaptability in decision-making; and learning-based actuation, supported by multi-robot and HRC systems, is paving the way for more flexible and safe task execution on robotic platforms of various scales.

Looking forward, we argue that realizing fully resilient construction robots will depend on co-designing sensing, thinking, and actuation as a single, adaptive pipeline for complex, dynamic sites. Tight coupling with sources of construction domain knowledge such as BIM and digital twins offers a pathway for robots to anticipate structural changes, optimize task scheduling, and reduce costly delays, thereby reorienting research agendas toward multidisciplinary collaboration between robotics, civil engineering, and computer science. To foster fair comparison and accelerate breakthroughs, the community should prioritize the creation of open benchmarking protocols, diverse datasets, and unified evaluation metrics. Such benchmarks will not only guide future algorithm development but also help practitioners evaluate vendor solutions and establish performance guarantees. Equally important are the ethical and safety dimensions of HRC. We recommend that follow-up studies develop standardized frameworks for HRC ethics, covering risk assessment, operator training, and accountability, and conduct long-term field trials to validate system robustness and user acceptance in live construction environments. By integrating explainable AI techniques and transfer-learning approaches, future research can deliver transparent, trustworthy systems that meet both regulatory requirements and on-site productivity demands.

While this review offers an extensive analysis of recent advancements in AI-driven autonomous construction robotics, several limitations should be acknowledged. The bibliometric analysis may have overlooked relevant literature published outside the selected databases or beyond the defined timeframe. Furthermore, the review’s broad scope necessitates a high-level overview, so in-depth technical details of specific technologies or case studies may be missing. Nevertheless, these limitations present opportunities for future focused reviews and case studies that could complement and enrich the findings presented here.

Overall, this review has charted the current strengths and gaps in AI-enabled construction robotics and set an agenda for research that bridges algorithmic innovation with practical deployment. By focusing on end-to-end STA integration, digital twin synergy, open benchmarking, and ethical HRC protocols, the field can advance toward robotic systems that are not only technically capable but also economically viable and socially responsible in shaping the construction sites of tomorrow.

Author Contributions

Conceptualization, Z.R. and J.I.K.; methodology, Z.R. and J.I.K.; software, Z.R.; validation, J.I.K.; formal analysis, Z.R.; investigation, Z.R.; resources, J.I.K.; data curation, Z.R.; writing—original draft preparation, Z.R.; writing—review and editing, J.I.K.; visualization, Z.R.; supervision, J.I.K. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the Korea Agency for Infrastructure Technology Advancement (KAIA) grant funded by the Ministry of Land, Infrastructure and Transport (Grant RS-2025-02532980).

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Amani, M.; Akhavian, R. Intelligent ergonomic optimization in bimanual worker-robot interaction: A Reinforcement Learning approach. Autom. Constr. 2024, 168, 105741.

- Feng, C.; Xiao, Y.; Willette, A.; McGee, W.; Kamat, V.R. Vision guided autonomous robotic assembly and as-built scanning on unstructured construction sites. Autom. Constr. 2015, 59, 128–138.

- Chen, X.; Yu, Y. An unsupervised low-light image enhancement method for improving V-SLAM localization in uneven low-light construction sites. Autom. Constr. 2024, 162, 105404.

- Chea, C.P.; Bai, Y.; Pan, X.; Arashpour, M.; Xie, Y. An integrated review of automation and robotic technologies for structural prefabrication and construction. Transp. Saf. Environ. 2020, 2, 81–96.

- Wang, L.; Naito, T.; Leng, Y.; Fukuda, H.; Zhang, T. Research on Construction Performance Evaluation of Robot in Wooden Structure Building Method. Buildings 2022, 12, 1437.

- Brosque, C.; Fischer, M. Safety, quality, schedule, and cost impacts of ten construction robots. Constr. Robot. 2022, 6, 163–186.

- Fazeli, N.; Oller, M.; Wu, J.; Wu, Z.; Tenenbaum, J.B.; Rodriguez, A. See, feel, act: Hierarchical learning for complex manipulation skills with multisensory fusion. Sci. Robot. 2019, 4, eaav3123.

- Bekey, G.A. On autonomous robots. Knowl. Eng. Rev. 1998, 13, 143–146.

- Jiménez-Cano, A.E.; Sanalitro, D.; Tognon, M.; Franchi, A.; Cortés, J. Precise Cable-Suspended Pick-and-Place with an Aerial Multi-robot System: A Proof of Concept for Novel Robotics-Based Construction Techniques. J. Intell. Robot. Syst. 2022, 105, 68.

- Kang, H.; Zhang, W.; Ge, Y.; Liao, H.; Huang, B.; Wu, J.; Yan, R.-J.; Chen, I.-M. A high-accuracy hollowness inspection system with sensor fusion of ultra-wide-band radar and depth camera. Robotica 2023, 41, 1258–1274.

- Das, D.; Miura, J. Camera Motion Compensation and Person Detection in Construction Site Using Yolo-Bayes Model. In Proceedings of the 2022 26th International Conference on Pattern Recognition (ICPR), Montreal, QC, Canada, 21–25 August 2022; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2022; pp. 4566–4572.

- Bentz, W.; Qian, L.; Panagou, D. Expanding human visual field: Online learning of assistive camera views by an aerial co-robot. Auton. Robot. 2022, 46, 949–970.

- Leduc, J.-P. 3D+T motion analysis: Motion sensor network versus multiple video cameras. Opt. Photonics Inf. Process. XII 2018, 10751, 107510X.

- Yang, Z.; Li, J.; Wu, H.; Zlatanova, S.; Wang, W.; Fox, J.; Yang, B.; Tang, Y.; Zhang, R.; Gong, J.; et al. Robust and accurate visual geo-localization using prior map constructed by handheld LiDAR SLAM with camera image and terrestrial LiDAR point cloud. Int. J. Digit. Earth 2024, 17, 2416468.

- Kang, X.; Li, J.; Fan, X.; Wan, W. Real-time RGB-D simultaneous localization and mapping guided by terrestrial LiDAR point cloud for indoor 3-D reconstruction and camera pose estimation. Appl. Sci. 2019, 9, 3264.