Abstract

Monitoring worker safety during ladder operations at construction sites is challenging due to occlusion, where workers are partially or fully obscured by objects or other workers, and overlapping, which makes individual tracking difficult. Traditional object detection models, such as YOLOv8, struggle to maintain tracking continuity under these conditions. To address this, we propose an integrated framework combining YOLOv8 for initial object detection and the SAMURAI tracking algorithm for enhanced occlusion handling. The system was evaluated across four occlusion scenarios: non-occlusion, minor occlusion, major occlusion, and multiple worker overlap. The results indicate that, while YOLOv8 performs well in non-occluded conditions, the tracking accuracy declines significantly under severe occlusions. The integration of SAMURAI improves tracking stability, object identity preservation, and robustness against occlusion. In particular, SAMURAI achieved a tracking success rate of 94.8% under major occlusion and 91.2% in multiple worker overlap scenarios—substantially outperforming YOLOv8 alone in maintaining tracking continuity. This study demonstrates that the YOLOv8-SAMURAI framework provides a reliable solution for real-time safety monitoring in complex construction environments, offering a foundation for improved compliance monitoring and risk mitigation.

1. Introduction

Construction site accidents remain a persistent challenge in occupational safety, with falls consistently ranking among the primary causes of workplace incidents in the industry [1,2,3]. Ladder operations, ranging from routine maintenance to high-risk tasks, are extensively utilized across construction and industrial sites [4]. According to recent statistics from the Occupational Safety and Health Administration (OSHA), ladder-related incidents account for approximately 20% of workplace fall injuries and fatalities in the construction industry [5]. These incidents primarily stem from human error, non-compliance with safety regulations, and environmental factors such as unstable surfaces or improper ladder positioning. As ladder usage remains essential in various construction activities, ensuring compliance with safety protocols is critical for minimizing accidents [6]. Ladder safety regulations recommend the presence of an assistant worker during ladder operations to minimize risks and maintain a secure working environment.

Industrial safety regulations worldwide emphasize the importance of safety measures during ladder operations, with specific requirements varying by country. Directive 2009/104/EC of the European Union establishes minimum safety and health requirements for the use of work equipment, including ladders, mandating appropriate measures to ensure stability and safe operation [7]. The Korea Occupational Safety and Health Agency (KOSHA) also specifies measures to prevent ladder slippage and overturning in Article 24 of the Occupational Safety and Health Standards, with the two-person work system being widely recommended in practice [8]. In this system, one worker performs the main task on the ladder, while an assistant worker provides crucial support from the ground. The assistant worker’s critical roles include maintaining ladder stability with a firm two-handed grip, monitoring environmental hazards, and ensuring the primary worker’s safety throughout the operation.

Despite these regulations, ensuring consistent adherence to ladder safety protocols remains a major challenge in real-world construction sites [9,10,11]. Ladder stability and assistant support are fundamental elements in preventing workplace accidents, making proper adherence to these regulations essential [12]. However, the effectiveness of these safety measures is limited by several practical factors. First, the manual monitoring of assistant worker compliance is intermittent and subjective, making it difficult to maintain consistent safety standards across multiple work sites [13,14]. Second, the dynamic nature of construction environments, where multiple ladder operations occur simultaneously, makes comprehensive human supervision challenging and labor intensive [15,16]. These limitations highlight the need for an automated real-time monitoring system capable of providing objective and consistent assessment of safety regulation compliance [17,18].

The recent introduction of Closed-Circuit Television (CCTV) systems for safety, process, and quality management in construction sites has created new opportunities for enhanced safety monitoring [19]. Advances in computer vision and deep learning demonstrate the potential for utilizing these CCTV systems for the automated monitoring of safety regulation compliance [20,21,22]. Several studies have explored the application of deep learning-based object detection for construction safety monitoring [23,24,25,26,27,28]. In particular, You Only Look Once (YOLO), a real-time object detection algorithm, has been widely adopted in construction site safety monitoring due to its single-stage detection approach that offers both speed and accuracy [29,30,31]. Xiao et al. [32] developed a specialized image dataset for construction machinery, and Duan et al. [33] created and published the Site Object Detection Dataset (SODA), which includes 15 object classes specific to construction sites. However, occlusion scenarios in construction environments remain a significant challenge for object detection systems [34,35,36]. Specifically, detection performance can deteriorate when workers are obscured by other objects or people [37]. This issue significantly impacts the reliability of safety monitoring systems, particularly in construction environments where such occlusions and overlaps frequently occur [38].

YOLO, which is currently widely used, exhibits several limitations in occlusion scenarios due to its frame-by-frame processing nature [39]. First, single-frame detectors such as YOLO typically lack temporal continuity modeling, which limits their ability to predict occluded worker positions. Previous studies have attempted to address this limitation by incorporating temporal aggregation methods [40], though these are often computationally intensive and not optimized for real-time applications. Second, when workers are partially occluded, object detection performance significantly deteriorates, making continuous and consistent monitoring difficult [41]. Third, in situations where multiple workers overlap, the system shows limitations in effectively distinguishing and tracking individual workers [42]. These issues serve as major factors hampering the effectiveness of safety monitoring systems in dynamic and complex environments such as construction sites.

Although the YOLO architecture has evolved to version 12 with improvements in speed and detection accuracy, occlusion handling remains a persistent limitation across the YOLO series [43,44]. Even the most recent versions struggle to maintain detection robustness when objects—such as workers—are partially or fully occluded in crowded or dynamic environments like construction sites [45]. This highlights the need for additional tracking mechanisms to complement YOLO-based detection.

Several approaches have been proposed to mitigate these limitations, including optical flow-based tracking and recurrent neural networks such as LSTMs [46]. However, these methods typically involve sequential processing and high memory consumption, which significantly increase inference time—especially when deployed on edge devices without high-performance GPUs—thus limiting their practicality for real-time applications in construction environments [47,48]. To bridge this gap, recent advancements in deep learning have introduced transformer-based object tracking and graph-based tracking approaches, which aim to improve long-term object association and occlusion handling [49]. While these methods have demonstrated promising results, their high computational complexity remains a significant barrier to real-time deployment in dynamic environments such as construction sites [50]. Therefore, an efficient and robust tracking framework is required to ensure practical applicability in real-world construction scenarios.

SAM-based Unified and Robust zero-shot visual tracker with Motion-Aware Instance-Level Memory (SAMURAI), based on the Segment Anything Model 2 (SAM 2), has recently been proposed [51]. Unlike conventional object detection approaches, SAMURAI incorporates a motion-aware memory selection mechanism that performs instance tracking by considering temporal continuity. This system effectively utilizes information from previous frames, enabling continuous object tracking even in occlusion scenarios. Yang et al. [51] demonstrated significant improvements in object tracking performance in complex environments using SAMURAI, particularly showing superior performance in crowded scenes and occlusion situations. SAMURAI introduces a hybrid scoring system that refines object tracking by combining motion-aware selection and affinity-based filtering. This system allows the model to adaptively determine whether an object is persistently visible across frames, effectively reducing false positive detections caused by occlusion. Additionally, a Kalman filter-based motion modeling mechanism predicts the positions of occluded targets, such as workers, maintaining tracking consistency even when direct visual data are unavailable. SAMURAI features two key characteristics: first, it improves mask selection through motion modeling to predict object movement, and second, it enables effective tracking during occlusions through motion-aware memory selection. Notably, SAMURAI demonstrates zero-shot performance applicable to various environments without additional training or fine-tuning, showing potential for effective implementation in dynamic environments like construction sites where diverse situations occur.
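The motion-modeling idea can be illustrated with a minimal sketch. As we understand the SAMURAI paper, a constant-velocity Kalman filter tracks a box state of position, size, and their velocities, and each candidate mask is scored by a weighted sum of its IoU with the Kalman-predicted box and its mask affinity score. The sketch below is not SAMURAI's code: the noise variances, the initial covariance, and the weight `alpha_kf=0.25` are assumptions chosen for illustration, and boxes are (cx, cy, w, h) in pixels.

```python
import numpy as np


class BoxKalmanFilter:
    """Constant-velocity Kalman filter over the state [cx, cy, w, h, vx, vy, vw, vh]."""

    def __init__(self, box, process_var=1e-2, meas_var=1e-1):  # variances are assumed values
        self.x = np.concatenate([np.asarray(box, dtype=float), np.zeros(4)])
        # Low uncertainty on the initial box, high uncertainty on the unknown velocities.
        self.P = np.diag([10.0] * 4 + [1000.0] * 4)
        self.F = np.eye(8)
        self.F[:4, 4:] = np.eye(4)  # box += velocity at every frame step
        self.H = np.hstack([np.eye(4), np.zeros((4, 4))])  # only the box is observed
        self.Q = process_var * np.eye(8)
        self.R = meas_var * np.eye(4)

    def predict(self):
        """Propagate one frame ahead; usable even when the worker is fully occluded."""
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.x[:4].copy()

    def update(self, box):
        """Correct the state with the box of the mask that was finally selected."""
        innovation = np.asarray(box, dtype=float) - self.H @ self.x
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)  # Kalman gain
        self.x = self.x + K @ innovation
        self.P = (np.eye(8) - K @ self.H) @ self.P


def iou_cxcywh(a, b):
    """IoU of two boxes given as (cx, cy, w, h)."""
    ax0, ay0, ax1, ay1 = a[0] - a[2] / 2, a[1] - a[3] / 2, a[0] + a[2] / 2, a[1] + a[3] / 2
    bx0, by0, bx1, by1 = b[0] - b[2] / 2, b[1] - b[3] / 2, b[0] + b[2] / 2, b[1] + b[3] / 2
    iw = max(0.0, min(ax1, bx1) - max(ax0, bx0))
    ih = max(0.0, min(ay1, by1) - max(ay0, by0))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def select_mask(candidate_boxes, mask_scores, predicted_box, alpha_kf=0.25):
    """Pick the candidate maximising alpha * IoU(prediction, box) + (1 - alpha) * mask score."""
    scores = [alpha_kf * iou_cxcywh(predicted_box, box) + (1 - alpha_kf) * s
              for box, s in zip(candidate_boxes, mask_scores)]
    return int(np.argmax(scores))
```

In use, `predict()` is called every frame and `update()` only when a confident mask is selected, so during a full occlusion the filter keeps extrapolating the worker's box. The weighting lets a candidate consistent with the predicted motion win over a slightly higher-scoring mask elsewhere in the frame, which is the situation a distractor worker creates.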

Therefore, this study proposes a worker safety monitoring approach using the SAMURAI algorithm for occlusion scenarios in construction site ladder operations. The focus is particularly on continuously monitoring assistant workers’ compliance with safety regulations. To achieve this, the authors define various occlusion scenarios that can occur at construction sites and evaluate the tracking performance through frame-by-frame analysis. The proposed framework integrates YOLOv8 for initial object detection and SAMURAI for long-term assistant worker tracking. YOLOv8 efficiently detects ladders and workers, ensuring precise localization, while SAMURAI maintains continuous tracking by leveraging motion-aware memory selection. Unlike previous methods that rely on per-frame detection alone, this combined framework selectively applies long-term tracking to the element most critical for compliance, the assistant worker, in multi-worker environments, improving robustness under occlusion scenarios.

By combining YOLOv8 for object detection with SAMURAI for tracking, this study introduces a hybrid safety monitoring framework capable of enhancing real-time construction site monitoring under occlusion conditions. The findings of this study are expected to provide practical guidelines for developing more robust and efficient safety compliance systems in the construction industry.

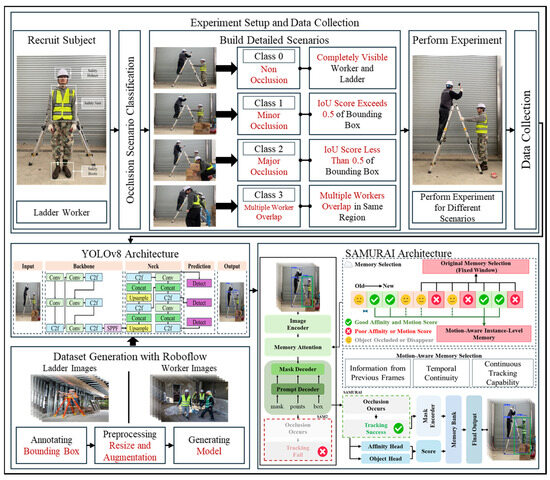

2. Methodology

This study proposes a framework to monitor ladder operations in construction sites, with a particular focus on tracking assistant workers under occlusion scenarios. The framework consists of several key components: (1) experimental setup for data collection; (2) occlusion scenario classification; and (3) implementation of detection algorithms. Two different approaches are integrated in this framework: YOLO is implemented to detect the ladder and workers, while the SAMURAI algorithm is utilized to track assistant workers under various occlusion conditions, employing its motion-aware memory selection mechanism. Figure 1 provides an overview of the research framework, illustrating the workflow from data collection through detection algorithm implementation to performance evaluation. The details of each component are described in the following sections.
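The division of labor between the two components can be sketched as a small loop. This is a hypothetical illustration rather than the authors' code: `detect` stands in for YOLOv8, `make_tracker` for a SAMURAI tracker prompted once with a box, and `select_assistant` for however the assistant worker is chosen among the initial detections, which this section does not specify.

```python
from typing import Callable, Dict, List, Optional, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1) in pixels
Detection = Tuple[str, Box, float]       # (class name, box, confidence)
Tracker = Callable[[object], Box]        # maps a later frame to the tracked box


def monitor(frames: Sequence[object],
            detect: Callable[[object], List[Detection]],
            select_assistant: Callable[[List[Detection]], Box],
            make_tracker: Callable[[object, Box], Tracker]) -> List[Dict]:
    """Detect ladders/workers on every frame; track the assistant worker from a one-time box prompt."""
    results: List[Dict] = []
    track: Optional[Tracker] = None
    for frame in frames:
        detections = detect(frame)  # YOLOv8 role: per-frame ladder and worker boxes
        if track is None:
            # Hand-off: the assistant worker's detected box seeds the tracker once.
            assistant_box = select_assistant(detections)
            track = make_tracker(frame, assistant_box)
        else:
            # SAMURAI role: memory-based tracking, independent of per-frame detections.
            assistant_box = track(frame)
        results.append({"detections": detections, "assistant": assistant_box})
    return results


# Toy usage with stand-in callables (no real models involved).
def fake_detect(frame):
    return [("ladder", (50, 0, 60, 100), 0.9), ("worker", (frame, 0, frame + 10, 20), 0.8)]


def first_worker(detections):
    return next(box for cls, box, _ in detections if cls == "worker")


def shifting_tracker(first_frame, box):
    return lambda frame: (box[0] + frame - first_frame, box[1], box[2] + frame - first_frame, box[3])


out = monitor([0, 1, 2], fake_detect, first_worker, shifting_tracker)
print([r["assistant"] for r in out])  # [(0, 0, 10, 20), (1, 0, 11, 20), (2, 0, 12, 20)]
```

The key design point the sketch shows is that detection keeps running on every frame (the blue boxes), while the assistant worker's identity (the green box) comes from the tracker after the first hand-off, so a detector that confuses two workers does not reassign the tracked identity.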

Figure 1.

Research framework. Blue boxes indicate object detection results from YOLOv8, while green boxes represent assistant worker tracking by SAMURAI.

2.1. Experimental Setup

To evaluate the performance of the proposed monitoring system in ladder safety operations, the authors designed a controlled experimental environment that simulates real construction site scenarios. The experiment setup consisted of subject recruitment, scenario design, and data collection processes.

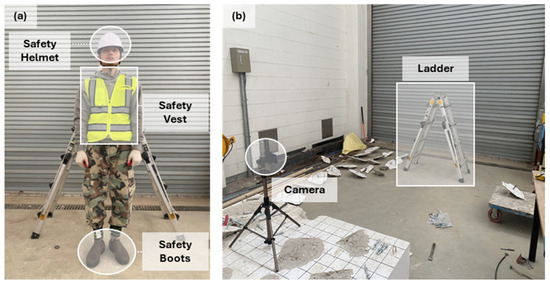

First, the authors recruited subjects to perform ladder operations, covering both the main worker’s and the assistant worker’s roles. Following construction site safety regulations, the workers wore standard Personal Protective Equipment (PPE), including a safety helmet, high-visibility safety vest, and safety boots, as shown in Figure 2a [52]. This standardized equipment ensures clear visibility and reflects real-world conditions.

Figure 2.

Experiment setup: (a) PPE and equipment configuration, and (b) experimental environment setup.

For scenario design, the authors developed three distinct occlusion situations that commonly occur during ladder operations: (1) minor occlusion, where the visibility-based Intersection over Union (IoU) score defined in Section 2.2 exceeds 0.5; (2) major occlusion, where this score is below 0.5; and (3) multiple worker overlap, where the bounding boxes of several workers intersect in the same region.

The experiments were conducted in a controlled indoor environment using a standard industrial ladder, as illustrated in Figure 2b. Video data were captured using fixed cameras recording at 24 frames per second with a resolution of 1920 × 1080 pixels. Each occlusion scenario was performed multiple times to ensure data reliability and capture various angles and positions. This systematic approach to data collection enables the comprehensive evaluation of both YOLO’s detection capabilities for the ladder and workers, and SAMURAI’s tracking performance for the assistant worker under different occlusion conditions.

2.2. Occlusion Scenario Classification

In this study, the term IoU was used in a specific context that differed from its conventional usage. Typically, IoU refers to the degree of overlap between two bounding boxes, such as between a ground truth box and a predicted detection, or between two different objects. However, in this paper, IoU was redefined as a measure of visibility: it quantified the proportion of the assistant worker’s body that remained visible within their own ground truth bounding box. In other words, it reflects how much of the worker is unobstructed in the image frame, rather than measuring overlap between objects. This visibility-based interpretation of IoU provides a practical and consistent way to classify the severity of occlusion without relying on detection results.

Based on the challenges encountered in ladder operations, the authors classified occlusion scenarios into four categories: non-occlusion, minor occlusion, major occlusion, and multiple worker overlap. These classifications were defined by quantitative measures and specific situational characteristics.

Non-occlusion represents the baseline scenario where the assistant worker and ladder are completely visible with no obstructions. In these situations, both the worker and ladder are clearly observable, providing optimal conditions for monitoring safety compliance. This class serves as a reference point for comparing tracking performance under various occlusion conditions.

Minor occlusion refers to situations where the assistant worker is partially obscured but remains largely visible and identifiable. This is quantitatively defined as cases where the IoU score exceeds 0.5, indicating that more than 50% of the worker’s bounding box remains visible. In these scenarios, while certain parts of the worker may be blocked by other workers or equipment, critical body parts such as the upper body and hands essential for ladder support remain observable. Common examples include partial obstruction by passing workers or small equipment.

Major occlusion represents situations where the assistant worker is substantially obscured from view. This is defined by an IoU score less than 0.5, indicating that more than 50% of the worker’s bounding box is hidden. These scenarios typically occur when large equipment or materials are moved between the camera and the worker, or when the worker’s position is significantly blocked by construction elements.

Multiple worker overlap scenarios occur when several workers operate in close proximity, creating complex occlusion patterns. This classification specifically addresses situations where multiple workers’ bounding boxes intersect in the same region, creating challenges for individual worker identification and tracking. These scenarios are particularly common in busy construction sites where multiple ladder operations or related activities occur simultaneously.
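The visibility-based classification can be made concrete with a short sketch. Two assumptions are ours, not the authors': visibility is approximated as the fraction of the assistant worker's ground-truth box not covered by occluder boxes (a box-level proxy for visible body pixels), and "multiple worker overlap" is flagged when at least two other workers' boxes intersect the assistant's box. A ratio of exactly 0.5, which the thresholds above leave unassigned, is treated here as major occlusion. Boxes are integer pixel coordinates (x0, y0, x1, y1) with exclusive upper bounds.

```python
import numpy as np


def visibility_ratio(gt_box, occluder_boxes):
    """Fraction of the ground-truth box not covered by any occluder box (assumed proxy)."""
    x0, y0, x1, y1 = gt_box
    covered = np.zeros((y1 - y0, x1 - x0), dtype=bool)
    for ox0, oy0, ox1, oy1 in occluder_boxes:
        # Clip the occluder to the ground-truth box, then mark those pixels as hidden.
        cx0, cy0 = max(ox0, x0), max(oy0, y0)
        cx1, cy1 = min(ox1, x1), min(oy1, y1)
        if cx0 < cx1 and cy0 < cy1:
            covered[cy0 - y0:cy1 - y0, cx0 - x0:cx1 - x0] = True
    return float(1.0 - covered.mean())


def classify_occlusion(visibility, n_overlapping_workers=0):
    """Map a visibility ratio (and an assumed overlap count rule) onto the four classes."""
    if n_overlapping_workers >= 2:  # hypothetical rule for "multiple worker overlap"
        return "multiple worker overlap"
    if visibility >= 1.0:
        return "non-occlusion"
    if visibility > 0.5:
        return "minor occlusion"
    return "major occlusion"


gt = (0, 0, 100, 200)  # a 100 x 200 pixel assistant-worker box
print(visibility_ratio(gt, [(0, 150, 100, 200)]))  # 0.75, lower quarter hidden by boxes
print(classify_occlusion(visibility_ratio(gt, [(0, 150, 100, 200)])))  # minor occlusion
```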

This classification system provides a structured framework for evaluating the SAMURAI algorithm’s performance under different occlusion conditions, enabling the systematic analysis of its tracking capabilities in various real-world scenarios.

While the scenario classification is based on static visibility thresholds, the SAMURAI tracking algorithm incorporates temporal memory and motion-aware instance matching, which allows it to respond adaptively to rapidly changing occlusion states across frames. This enables the system to maintain identity continuity even under dynamic conditions such as sudden occlusions or quick reappearances, which are common in actual construction environments.

The authors acknowledge, however, that the current classification captures static, frame-level occlusion states defined by IoU thresholds, whereas real-world occlusions often change dynamically over time. Scenarios involving progressive or intermittent occlusion could further enrich the framework and are left as future extensions.

2.3. Implementation of Detection and Tracking Algorithms

This study implemented two complementary algorithms to achieve comprehensive monitoring of ladder operations: YOLOv8 for detecting ladders and workers, and SAMURAI for tracking assistant workers under occlusion scenarios. While YOLOv8 provides robust object detection capabilities, SAMURAI addresses the specific challenge of maintaining continuous tracking during occlusion. The details of each algorithm’s implementation are described in the following sections.

2.3.1. YOLO for Ladder and Worker Detection

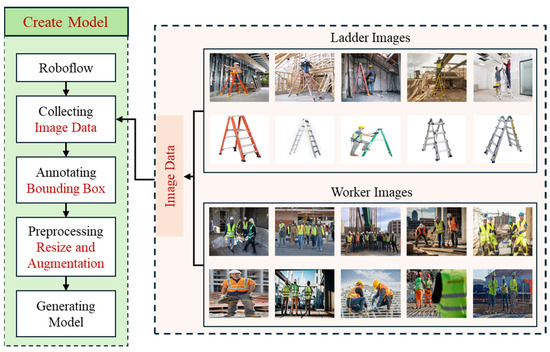

This study implemented the YOLOv8 model training process through Roboflow, focusing on ladder operation monitoring in construction sites. Roboflow is an online platform that streamlines the dataset preparation and model training workflow for computer vision tasks, including image annotation, preprocessing, augmentation, and YOLO integration. As shown in Figure 3, the dataset generation workflow consists of image data collection, bounding box annotation, preprocessing with augmentation, and model generation [53,54].

Figure 3.

Dataset preparation and processing for YOLOv8.

The training dataset comprises two categories: ladder images and worker images. The ladder dataset includes various ladder types and positions commonly used in construction environments, while the worker dataset contains diverse scenarios of construction workers performing ladder operations. Care was taken to collect images that represent realistic working conditions, including different viewing angles and lighting environments.

A total of 3200 images were used, including 1800 images of workers and 1400 images of ladders. These images were collected from multiple sources such as real construction site photos, online open datasets, and controlled image captures using smartphone and CCTV cameras. The images were standardized to a resolution of 640 × 640 pixels to ensure compatibility with YOLOv8 input requirements.

For data annotation, the authors applied bounding box labeling to precisely mark the location of ladders and workers in each image. This process was essential for training the model to accurately detect and localize objects during real-time monitoring [55]. The preprocessing phase included image resizing for dimensional consistency and various augmentation techniques. To enhance the model’s generalization capability, the authors implemented random horizontal flipping, rotation adjustments, and brightness variations during the augmentation process. The prepared dataset was divided into training, validation, and testing sets with ratios of 70%, 20%, and 10%, respectively, ensuring sufficient data for model training and performance evaluation while maintaining compatibility with the YOLOv8 architecture.
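The 70/20/10 split (performed in Roboflow in this study) corresponds to 2240, 640, and 320 of the 3200 images (1800 worker plus 1400 ladder images). A minimal local equivalent is sketched below, assuming a seeded random shuffle without stratification by class; neither the shuffling method nor the seed is specified in the text.

```python
import random


def split_dataset(items, ratios=(0.7, 0.2, 0.1), seed=0):
    """Shuffle once with a fixed seed, then cut into train/validation/test slices."""
    items = list(items)
    random.Random(seed).shuffle(items)  # seed is an arbitrary, hypothetical choice
    n = len(items)
    n_train = round(n * ratios[0])
    n_val = round(n * ratios[1])
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]


images = [f"img_{i:04d}.jpg" for i in range(3200)]  # hypothetical file names
train, val, test = split_dataset(images)
print(len(train), len(val), len(test))  # 2240 640 320
```

Fixing the seed keeps the split reproducible across runs; if class balance between worker and ladder images matters, splitting each class separately would be the natural variant.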

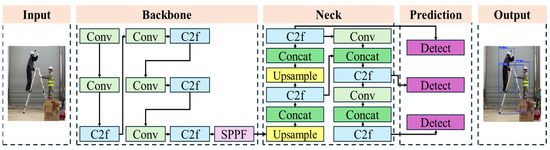

The authors implemented the YOLOv8 architecture, which consists of four main components: input processing, backbone, neck, and prediction networks, as shown in Figure 4. The backbone network employs a series of convolutional layers (Conv) and cross-stage feature fusion blocks (C2f) to extract hierarchical features from input images [56]. These blocks are designed to capture various levels of visual information, from basic patterns to complex object structures.

Figure 4.

YOLOv8 architecture.

The neck network utilizes a feature pyramid structure with C2f blocks, concatenation operations (Concat), and upsampling layers to aggregate multi-scale features [57]. This structure enhances the model’s ability to detect both ladders and workers at various scales and positions. The Spatial Pyramid Pooling-Fast (SPPF) module at the end of the backbone, which feeds the neck, further improves the model’s capability to handle objects at different scales [58].
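The SPPF idea can be illustrated without a deep-learning framework. In the Ultralytics implementation, SPPF applies one stride-1 5 × 5 max-pool three times in sequence and concatenates the input with the three outputs; chaining the pools reproduces the 5, 9, and 13 receptive fields of the older parallel SPP block at lower cost. The sketch below is a simplification: it omits the 1 × 1 convolutions before and after pooling and works on a single 2-D feature map, then checks the equivalence numerically.

```python
import numpy as np


def max_pool_same(x, k):
    """Stride-1 k x k max pooling with 'same' output size (border padded with -inf)."""
    x = np.asarray(x, dtype=float)
    p = k // 2
    padded = np.pad(x, p, mode="constant", constant_values=-np.inf)
    out = np.empty_like(x)
    h, w = x.shape
    for i in range(h):
        for j in range(w):
            out[i, j] = padded[i:i + k, j:j + k].max()
    return out


def sppf(x, k=5):
    """SPPF pooling path: x, pool(x), pool(pool(x)), pool(pool(pool(x))) stacked as channels."""
    y1 = max_pool_same(x, k)
    y2 = max_pool_same(y1, k)
    y3 = max_pool_same(y2, k)
    return np.stack([np.asarray(x, dtype=float), y1, y2, y3])


rng = np.random.default_rng(0)
feature_map = rng.standard_normal((16, 16))
out = sppf(feature_map)
print(out.shape)  # (4, 16, 16)
```

Two chained 5 × 5 pools cover a 9 × 9 neighborhood and three cover 13 × 13, which is why the sequential form can replace separate large kernels.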

The prediction layer consists of three detection heads that operate at different scales, enabling the robust detection of both large objects (ladders) and smaller details (workers) in construction site environments. During inference, the model processes each frame independently, providing bounding box coordinates and confidence scores for detected objects. These detection results serve as input for the safety monitoring system, enabling the real-time tracking of ladder operations in construction environments.
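As a worked example of the three-scale prediction layer: in the default Ultralytics configuration the three heads operate at strides 8, 16, and 32, and each anchor-free head predicts one box per grid cell. For the 640 × 640 inputs used here, that gives 80 × 80 + 40 × 40 + 20 × 20 = 8400 candidate boxes per image before non-maximum suppression. The strides are the library default, assumed rather than reported in this study.

```python
def head_grid_sizes(imgsz=640, strides=(8, 16, 32)):
    """Number of grid cells (one anchor-free prediction each) per detection head."""
    return {s: (imgsz // s) ** 2 for s in strides}


cells = head_grid_sizes()
print(cells, sum(cells.values()))  # {8: 6400, 16: 1600, 32: 400} 8400
```

The fine stride-8 grid (8 × 8 pixel cells) favors small objects, while the coarse stride-32 grid suits large ones such as a full ladder.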

The YOLOv8 model was trained for 100 epochs using the Adam optimizer with a batch size of 16 and an initial learning rate of 0.001. The model achieved a mean Average Precision (mAP@0.5) of 92.6%, with a precision of 90.3% and a recall of 88.7% on the validation set. These results confirm that the model effectively detects ladders and workers in diverse construction scenarios.
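The study trained through Roboflow; for readers who want to reproduce a comparable run locally, the reported hyperparameters map onto the Ultralytics `YOLO.train` arguments roughly as follows. This is a sketch under assumptions: the model size (`yolov8n.pt`) and the dataset file name `data.yaml` are hypothetical, and Roboflow's hosted training may apply settings not listed here.

```python
def yolov8_train_args(data_yaml="data.yaml"):
    """Reported settings expressed as Ultralytics train() keyword arguments."""
    return dict(
        data=data_yaml,    # hypothetical dataset description file
        epochs=100,
        batch=16,
        imgsz=640,         # matches the 640 x 640 preprocessing
        optimizer="Adam",
        lr0=0.001,         # initial learning rate
    )


def train(weights="yolov8n.pt", data_yaml="data.yaml"):
    """Launch training; ultralytics is imported lazily so the config can be inspected without it."""
    from ultralytics import YOLO

    model = YOLO(weights)  # model size is an assumption; the text says only "YOLOv8"
    return model.train(**yolov8_train_args(data_yaml))
```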

2.3.2. SAMURAI for Assistant Worker Tracking

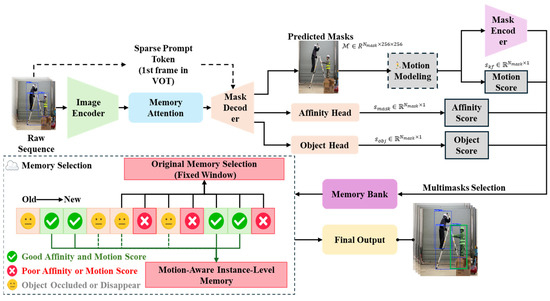

As shown in Figure 5, the base architecture consists of Image Encoder, Memory Attention, and Mask/Prompt Decoder components. The Image Encoder processes the input frames, and Memory Attention conditions the current frame’s features on stored memories to maintain context across frames. The Mask/Prompt Decoder, which accepts mask, point, and box prompts, generates the tracking outputs. The SAMURAI algorithm is employed as a zero-shot tracker and does not require training or dataset-specific fine-tuning; it operates entirely in inference mode using a pretrained model, allowing direct deployment without additional supervision. Figure 5 also contrasts two ways of selecting the frames kept in memory: the Original Memory Selection of SAM 2, which operates on a fixed window of recent frames, and SAMURAI’s Motion-Aware Instance-Level Memory, which selectively retains valuable tracking information to maintain continuous tracking during occlusions.

Figure 5.

SAMURAI architecture.

The memory selection process categorizes frames based on three criteria: Good Affinity and Motion Score (indicated by green check marks), Poor Affinity or Motion Score (indicated by red crosses), and Object Occluded or Disappear (indicated by yellow emoticons). This classification guides the selection of relevant frames for maintaining tracking continuity. The system carefully evaluates each frame’s contribution to tracking reliability, ensuring that only high-quality tracking information is retained in memory. The Motion-Aware Memory Selection implements three key features: Information from Previous Frames, Temporal Continuity, and Continuous Tracking Capability. These features work together to create a robust tracking system that can maintain object identity through various occlusion scenarios.
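The difference between fixed-window and motion-aware memory selection can be shown with a toy example. The three categories mirror the check marks, crosses, and occlusion icons in Figure 5; the threshold values and the rule of walking back from the most recent frame until `n_max` good frames are found are our assumptions about how such a selector behaves, not SAMURAI's exact settings.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class FrameScores:
    index: int
    affinity: float    # mask affinity score from the affinity head
    occurrence: float  # object-occurrence score; low or negative means occluded/absent
    motion: float      # motion score, e.g. IoU between the mask box and the Kalman prediction


def categorize(f, tau_aff=0.5, tau_obj=0.0, tau_motion=0.0):  # thresholds are assumed
    """Map a frame onto the three categories shown in Figure 5."""
    if f.occurrence <= tau_obj:
        return "occluded_or_disappeared"
    if f.affinity <= tau_aff or f.motion <= tau_motion:
        return "poor_affinity_or_motion"
    return "good"


def fixed_window_memory(history: List[FrameScores], n_max: int) -> List[int]:
    """Baseline: keep the n_max most recent frames regardless of their quality."""
    return [f.index for f in history[-n_max:]]


def motion_aware_memory(history: List[FrameScores], n_max: int) -> List[int]:
    """Walk back through history and keep only the n_max most recent 'good' frames."""
    selected = []
    for f in reversed(history):
        if categorize(f) == "good":
            selected.append(f.index)
            if len(selected) == n_max:
                break
    return sorted(selected)


# Frames 5 and 6: worker occluded; frame 4: low mask affinity.
h = [FrameScores(i, 0.9, 1.0, 0.8) for i in range(8)]
h[4].affinity = 0.2
h[5].occurrence = h[6].occurrence = -1.0
print(fixed_window_memory(h, 3), motion_aware_memory(h, 3))  # [5, 6, 7] [2, 3, 7]
```

The fixed window fills memory with the two occluded frames, whereas the selective rule skips them and the low-affinity frame, so the memory still describes the assistant worker rather than whatever occluded them.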

During operation, when an occlusion occurs, SAMURAI processes the input through Mask Encoder and maintains tracking through Memory Bank, ultimately producing accurate tracking results in the Final Output. The system’s Affinity Heads generate scores that, combined with the motion-aware memory selection, enable robust tracking even in challenging occlusion scenarios. This comprehensive approach ensures that the assistant worker can be continuously tracked throughout their activities, providing reliable monitoring of safety compliance even in complex construction site environments.

2.4. Performance Evaluation Method

The performance evaluation of the proposed system focused on SAMURAI’s tracking capabilities in occlusion scenarios through frame-by-frame analysis. The evaluation examined the system’s ability to maintain continuous tracking of assistant workers during ladder operations, particularly when occlusions occur.

The analysis was conducted through sequential frame captures from video sequences, specifically focusing on the system’s tracking performance in various occlusion scenarios. By examining frame sequences, the authors assessed the system’s ability to maintain a consistent tracking of the assistant worker during occlusion events. The frame captures provide clear evidence of how the system responds to different types of occlusions: non-occlusion, minor occlusions, major occlusions, and multiple worker overlap situations.

Tracking performance was quantified with a tracking success rate (TSR), defined as the ratio of successfully tracked frames to the total number of frames in a sequence. TSR was computed separately for each occlusion scenario to evaluate the system’s reliability under each condition. A frame was counted as successfully tracked when the system maintained continuous identification and localization of the assistant worker. The metric is defined by the following equation:

$$\mathrm{TSR} = \frac{N_{\mathrm{success}}}{N_{\mathrm{total}}} \times 100\%$$

where $N_{\mathrm{success}}$ is the number of successfully tracked frames and $N_{\mathrm{total}}$ is the total number of frames in the footage.
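Computing TSR from per-frame results is straightforward. The per-frame success test below is an assumption for illustration, since the text does not give an exact criterion: a frame counts as a success when the tracked identity matches the assistant worker and the tracked box overlaps the annotated box with an IoU of at least 0.5 (here in the conventional box-overlap sense). Boxes are (x0, y0, x1, y1); a lost track is represented as `None`.

```python
def box_iou(a, b):
    """Conventional IoU of two (x0, y0, x1, y1) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def frame_success(pred_box, gt_box, pred_id, gt_id, iou_thr=0.5):
    """Assumed criterion: correct identity and sufficient overlap with the annotation."""
    return pred_box is not None and pred_id == gt_id and box_iou(pred_box, gt_box) >= iou_thr


def tracking_success_rate(success_flags):
    """TSR in percent: successfully tracked frames over all frames in the sequence."""
    flags = list(success_flags)
    if not flags:
        raise ValueError("sequence has no frames")
    return 100.0 * sum(bool(f) for f in flags) / len(flags)


print(tracking_success_rate([True] * 9 + [False]))  # 90.0
```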

3. Result

This section presents the tracking results of the SAMURAI algorithm applied to assistant worker monitoring in ladder operations. The analysis examined the system’s performance across different occlusion scenarios, with particular emphasis on tracking continuity during occlusion events. To evaluate the effectiveness of the proposed approach, the tracking results were analyzed through frame-by-frame sequence examination and tracking success rates for each occlusion class. The performance was assessed using both visual tracking evidence and quantitative metrics to demonstrate the system’s capability to maintain consistent tracking in challenging construction site environments.

3.1. Frame Sequence Analysis

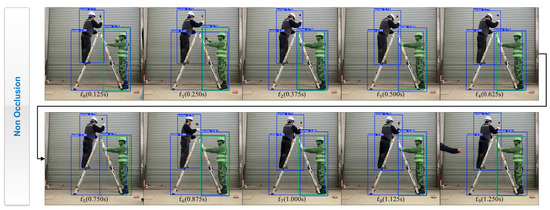

Figure 6 presents the tracking results for the non-occlusion scenario (Class 0), establishing a baseline for evaluating the system’s performance under optimal conditions. In this sequence the assistant worker and ladder are fully visible, with no occlusions, providing an ideal test case for assessing tracking stability and object localization accuracy. From t_0 (0.125 s) to t_9 (1.250 s), that is, 10 frames sampled at 0.125 s intervals (every third frame of the 24 fps recording), the system maintains consistent tracking. Bounding boxes around the assistant worker and ladder exhibit minimal positional shift across frames, indicating the robustness of the detection pipeline. The confidence scores for the assistant worker range from 0.71 to 0.82, while the ladder maintains confidence levels between 0.87 and 0.90, ensuring reliable detection continuity. The frame-to-frame fluctuation in confidence scores remains below 0.05, indicating low uncertainty in object classification.

Figure 6.

Non-occlusion tracking result.

Tracking stability is confirmed by the consistent positioning of bounding boxes, with an average IoU of 0.92 between boxes in consecutive frames (here IoU is used in its conventional box-overlap sense rather than the visibility measure of Section 2.2). No tracking drift (bounding box displacement exceeding 5 pixels) or sudden bounding box disappearance was observed during the sequence. This consistency is essential for accurate monitoring in dynamic construction environments, where false-positive or false-negative detections could compromise safety assessments. Additionally, no identity switching or loss of detection occurs throughout the sequence, confirming that the system sustains uninterrupted tracking in clutter-free settings.
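The two stability checks used here, mean IoU between consecutive boxes and a 5-pixel drift threshold, can be computed as follows. How displacement was measured is not stated; this sketch assumes the Euclidean shift of the box center, with boxes given as (x0, y0, x1, y1) in pixels.

```python
import math


def iou_xyxy(a, b):
    """Conventional IoU of two (x0, y0, x1, y1) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def center_shift(a, b):
    """Euclidean distance between box centers (assumed displacement measure)."""
    return math.hypot((a[0] + a[2]) / 2 - (b[0] + b[2]) / 2, (a[1] + a[3]) / 2 - (b[1] + b[3]) / 2)


def consecutive_stats(boxes, drift_px=5.0):
    """Mean IoU over consecutive frame pairs, and the frame indices where drift exceeds drift_px."""
    pairs = list(zip(boxes, boxes[1:]))
    mean_iou = sum(iou_xyxy(p, q) for p, q in pairs) / len(pairs)
    drift_frames = [i + 1 for i, (p, q) in enumerate(pairs) if center_shift(p, q) > drift_px]
    return mean_iou, drift_frames


track = [(0, 0, 10, 10), (1, 0, 11, 10), (1, 0, 11, 10), (20, 0, 30, 10)]
print(consecutive_stats(track)[1])  # [3]: the jump into frame 3 counts as drift
```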

As the non-occlusion sequence provides an ideal benchmark for the system, these results serve as a critical reference for subsequent occlusion scenarios, where tracking challenges arise due to partial or full obstructions. This evaluation establishes the reliability of the proposed approach in baseline conditions and lays the foundation for assessing its robustness under occlusion constraints.

Figure 7 presents the tracking performance in the minor occlusion scenario (Class 1), where the assistant worker is partially obstructed by nearby objects, such as cardboard boxes and construction materials. In this scenario, the visibility-based IoU score defined in Section 2.2 remains above 0.5, meaning that more than half of the assistant worker’s bounding box is visible throughout the sequence. The objective of this evaluation is to examine how well the SAMURAI algorithm maintains tracking stability when minor obstructions are present.

Figure 7.

Minor occlusion tracking result.

The sequence spans from t_0 (0.125 s) to t_9 (1.250 s), covering 10 frames in which both the main worker on the ladder (blue bounding box) and the assistant worker (blue bounding box highlighted in green) are consistently detected. The main worker’s confidence score ranges from 0.67 to 0.78, while the assistant worker’s confidence fluctuates between 0.59 and 0.67. Despite these variations in confidence, the system preserves object identity across frames, ensuring continuous tracking. The ladder is detected consistently with confidence scores between 0.80 and 0.81, demonstrating robust detection of static objects.

Even as the assistant worker’s lower body becomes partially obscured by the cardboard boxes, SAMURAI maintains tracking consistency without misclassification or identity switching. The motion-aware memory selection mechanism contributes to this stability by leveraging information from previous frames, allowing the system to maintain tracking despite minor occlusions. The bounding boxes exhibit minimal positional drift, further reinforcing tracking accuracy.

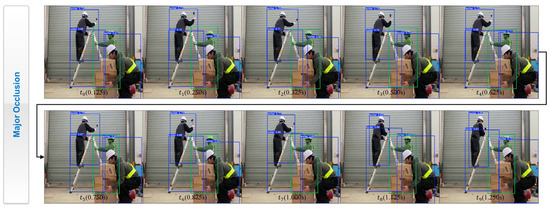

Figure 8 presents the tracking results for the major occlusion scenario, where the assistant worker is significantly occluded by another worker handling cardboard boxes in the foreground. This sequence evaluates the system’s ability to maintain tracking continuity when the assistant worker is mostly hidden from view.

Figure 8.

Major occlusion tracking result.

The sequence spans from t_0 (0.125 s) to t_9 (1.250 s), capturing the tracking performance over 10 frames. Throughout the sequence, the assistant worker experiences varying degrees of occlusion, with the foreground worker and stacked cardboard boxes obstructing visibility. The main worker on the ladder maintains stable detection with confidence scores ranging between 0.65 and 0.79. The ladder detection confidence remains within the 0.63 to 0.71 range. The assistant worker’s bounding box remains assigned correctly across frames, despite significant occlusion.

The detection and tracking outputs differ in one notable respect. YOLOv8 detects the foreground worker handling the boxes as an additional worker, with confidence scores ranging from 0.60 to 0.79, whereas SAMURAI continues to track the actual assistant worker and keeps its bounding box consistently positioned throughout the sequence.

The system maintains tracking continuity despite the progressive occlusion of the assistant worker by the foreground worker and objects. Across frames, no loss of detection occurs for the assistant worker, and bounding box positioning remains consistent.

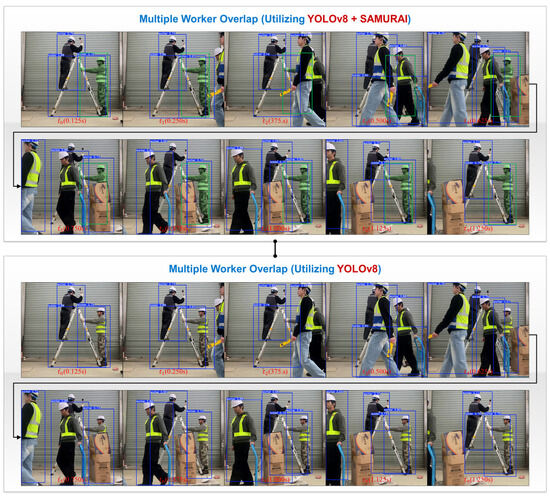

Figure 9 presents the tracking performance for the multiple worker overlap scenario, where multiple workers move through the monitored area, creating overlapping instances. This sequence evaluates how the tracking system distinguishes the assistant worker from other workers appearing within the same scene.

Figure 9.

Multiple worker overlap tracking result.

The frame sequence from t_0 (0.125 s) to t_9 (1.250 s) includes multiple workers crossing paths with the assistant worker. In the initial frames, the assistant worker (highlighted with a green bounding box) is fully visible with a detection confidence of 0.79. As the sequence progresses, additional workers enter the scene, partially occluding the assistant worker from frames t_2 to t_6.

Across the sequence, YOLOv8 detects multiple workers with varying confidence scores ranging from 0.64 to 0.88, while SAMURAI maintains continuous tracking of the assistant worker. Despite overlapping instances where workers move in front of the assistant worker, SAMURAI sustains its tracking, with confidence scores fluctuating between 0.57 and 0.80. The bounding box remains consistently positioned around the assistant worker, indicating that tracking continuity is maintained even when multiple objects interact in the scene.

Additionally, the ladder is continuously detected throughout the sequence, with confidence scores ranging from 0.60 to 0.89. Both YOLOv8 and SAMURAI identify multiple workers within the frame, with SAMURAI specifically preserving the identity of the assistant worker.

3.2. Tracking Performance Analysis

Table 1 presents the tracking success rate (TSR) for each occlusion scenario, comparing the total number of frames with the number of successfully tracked frames. The TSR was calculated as the percentage of frames in which the assistant worker was correctly detected and tracked. Each scenario comprises 7200 frames (24 FPS × 15 s per occlusion class × 20 participants), providing a comprehensive evaluation under each occlusion condition.
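The frame count and the TSR definition reduce to simple arithmetic. The sketch below uses only the numbers stated above, and the helper names are hypothetical. The back-calculated frame counts are approximate because the reported TSR values are rounded to one decimal place.

```python
FPS = 24           # recording frame rate (stated in the text)
SECONDS = 15       # recording length per occlusion class (stated in the text)
PARTICIPANTS = 20  # number of participants (stated in the text)

TOTAL_FRAMES = FPS * SECONDS * PARTICIPANTS  # 7200 frames per scenario


def tracking_success_rate(tracked: int, total: int = TOTAL_FRAMES) -> float:
    """TSR (%) = frames in which the assistant worker is tracked / total frames."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * tracked / total


def tracked_frames(tsr_percent: float, total: int = TOTAL_FRAMES) -> float:
    """Approximate number of tracked frames implied by a (rounded) TSR."""
    return tsr_percent / 100.0 * total


print(TOTAL_FRAMES)  # 7200
for name, tsr in [("non-occlusion", 99.2), ("minor occlusion", 96.1),
                  ("major occlusion", 94.8), ("multiple worker overlap", 91.2)]:
    print(name, round(tracked_frames(tsr)))
```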

Table 1.

Tracking success rate (TSR) for different occlusion scenarios.

In the non-occlusion scenario, the system achieves a TSR of 99.2%, indicating that the assistant worker was consistently detected and tracked with minimal interruptions. This result establishes the baseline performance under ideal conditions, where no significant visual obstructions occur, ensuring stable and continuous tracking.

In the minor occlusion scenario, where partial occlusion is introduced by obstacles such as stacked boxes, the TSR slightly decreases to 96.1%. Despite minor obstructions, the tracking system maintains stable performance by leveraging motion-aware memory selection.

The major occlusion scenario introduces significant challenges due to the substantial obstruction of the assistant worker by another worker and objects. As a result, the TSR drops further to 94.8%, as some frames experience temporary tracking loss. However, the system successfully reidentifies the assistant worker after occlusions, preventing the complete loss of tracking continuity. The primary causes of tracking errors in this scenario include momentary failures in object reidentification when occlusion persists for an extended duration, as well as increased uncertainty in bounding box localization.

Finally, in the multiple worker overlap scenario, where multiple workers move across the scene and create overlapping occlusions, the TSR falls to 91.2%. This is the most challenging case, as frequent worker overlaps increase the risk of identity switching. Tracking inconsistencies in this scenario are primarily due to the difficulty in distinguishing between closely positioned workers, which can lead to temporary mismatches in object identity. The results indicate that, while the system maintains reliable tracking in most frames, some inconsistencies occur due to ambiguous object associations in highly dynamic environments.

Overall, the TSR values indicate that the proposed YOLOv8-SAMURAI framework handles a range of occlusion conditions, with the TSR decreasing from 99.2% without occlusion to 91.2% with multiple worker overlap. Minor occlusions have minimal impact, whereas major occlusions and overlapping workers cause more noticeable tracking degradation.

In addition to the TSR, we evaluated the impact of detection errors by analyzing false positives (FPs) and false negatives (FNs) across the four occlusion scenarios. Based on the reported precision and recall values and assuming 7200 frames per scenario, we estimated the total number of FP and FN cases. Table 2 summarizes the results for YOLOv8 alone and for YOLOv8 + SAMURAI.

Table 2.

False positives and false negatives of YOLOv8 and YOLOv8 + SAMURAI across different occlusion scenarios.

These results indicate that YOLOv8 alone is prone to many false alarms and missed detections under complex occlusion conditions. In the multiple worker overlap case, for example, YOLOv8 generated 3672 FPs and 1728 FNs, whereas the hybrid approach with SAMURAI reduced these to 1289 and 1404, respectively (reductions of about 65% and 19%). This reduction, particularly in false positives, highlights the advantage of incorporating motion-aware memory into the tracking pipeline and supports the robustness of the proposed system in safety-critical scenarios.
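The text does not give the estimation formula explicitly. One rough reading reproduces the YOLOv8 + SAMURAI figures quoted for the multiple worker overlap case (1289 FPs and 1404 FNs from 82.1% precision and 80.5% recall). It applies each error rate directly to the 7200 frames: FP ≈ (1 - precision) × 7200 and FN ≈ (1 - recall) × 7200. This is an assumption, not necessarily the authors' procedure, and the same reading does not reproduce the YOLOv8-only counts quoted for that scenario. The function names are hypothetical.

```python
from typing import Tuple

TOTAL_FRAMES = 7200  # frames per scenario (24 FPS x 15 s x 20 participants)


def precision(tp: int, fp: int) -> float:
    """Precision = TP / (TP + FP)."""
    return tp / (tp + fp)


def recall(tp: int, fn: int) -> float:
    """Recall = TP / (TP + FN)."""
    return tp / (tp + fn)


def estimate_fp_fn(prec: float, rec: float, n: int = TOTAL_FRAMES) -> Tuple[int, int]:
    """ASSUMED rough estimate: treats n as the denominator of both rates,
    so FP ~ (1 - precision) * n and FN ~ (1 - recall) * n."""
    return round((1 - prec) * n), round((1 - rec) * n)


# YOLOv8 + SAMURAI, multiple worker overlap (precision 82.1%, recall 80.5%)
print(estimate_fp_fn(0.821, 0.805))  # (1289, 1404)
```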

4. Discussion

4.1. Comparative Analysis of Non-Occlusion and Occlusion Scenarios

The tracking performance of SAMURAI was evaluated across four occlusion scenarios: Non-Occlusion, Minor Occlusion, Major Occlusion, and Multiple Worker Overlap. The Non-Occlusion scenario served as the baseline, providing optimal conditions where the assistant worker and ladder were fully visible. By systematically comparing this baseline with occlusion scenarios, the impact of different occlusion levels on tracking stability was examined.

Overall, as the occlusion severity increases, the tracking performance degrades, with minor occlusion having minimal impact, while major occlusion and multiple worker overlap introduce greater detection instability. Despite these challenges, SAMURAI mitigates identity loss by leveraging motion-aware memory selection, whereas YOLOv8 exhibits greater fluctuations due to frame-by-frame processing.

In the non-occlusion scenario, the system maintained stable tracking with high confidence scores and minimal bounding box fluctuations. This confirms robust detection performance in unobstructed conditions, establishing a reference point for assessing occlusion effects.

For the minor occlusion scenario, where the assistant worker was partially obstructed by static objects (e.g., cardboard boxes), tracking remained stable with only slight variations in confidence scores. The bounding box remained consistently assigned to the assistant worker, demonstrating SAMURAI’s ability to handle short-term occlusions using motion-aware memory selection. However, minor fluctuations in confidence scores suggest that even partial occlusions can affect detection reliability.

The major occlusion scenario presented a more significant challenge, as the assistant worker was largely obscured by another worker in the foreground. YOLOv8 frequently detected the foreground worker instead of the assistant worker, leading to identity confusion. In contrast, SAMURAI preserved the assistant worker’s identity by leveraging previous frame information, though confidence scores dropped more noticeably. Bounding box shifts were observed as occlusion intensity increased, highlighting the challenge of tracking through obstruction.

In the multiple worker overlap scenario, the complexity increased as multiple workers moved dynamically through the scene, frequently crossing the assistant worker’s position. YOLOv8 often reassigned worker identities based on immediate visibility, causing inconsistencies. SAMURAI, on the other hand, maintained continuous tracking of the assistant worker despite the overlapping workers, although prolonged occlusion resulted in greater confidence fluctuations.

The comparative analysis highlights that tracking stability decreases progressively with increasing occlusion severity. Minor occlusion has minimal impact on detection consistency, whereas major occlusion and multiple worker overlap significantly affect identity tracking. During prolonged occlusions involving multiple overlapping workers, SAMURAI maintains object identity and tracking continuity, but occasional tracking inconsistencies can occur due to the dynamic nature of the scene. The next section examines these differences in more detail by comparing YOLOv8 alone with YOLOv8 + SAMURAI.

4.2. Comparative Analysis Between YOLO and SAMURAI

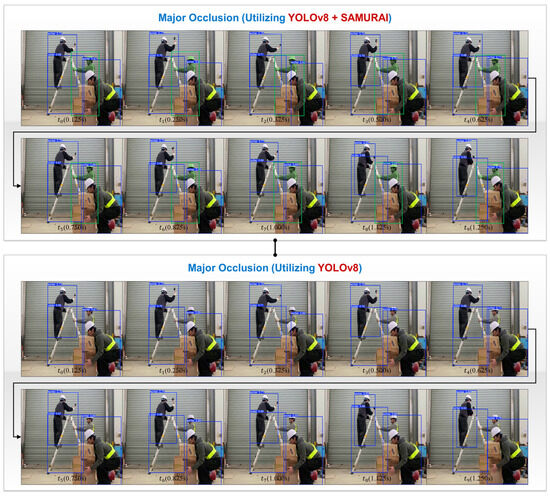

To evaluate the effectiveness of the proposed approach, a comparative analysis was conducted between two configurations: YOLOv8-only detection and YOLOv8 combined with SAMURAI tracking. Since the non-occlusion and minor occlusion scenarios exhibited minimal differences in tracking performance, the focus of this comparison was on the major occlusion and multiple worker overlap scenarios, where significant differences in tracking behavior were observed.

In Figure 10, the major occlusion scenario is analyzed. The upper sequence illustrates the results using YOLOv8 combined with SAMURAI, while the lower sequence demonstrates tracking performance using only YOLOv8. When utilizing YOLOv8 alone, the assistant worker’s detection is significantly affected by the presence of an obstructing worker handling cardboard boxes. The bounding box for the assistant worker is either missing or inaccurately assigned to the occluding worker in multiple frames, leading to tracking inconsistencies. In contrast, the YOLOv8 + SAMURAI system maintains consistent tracking of the assistant worker, preserving identity and bounding box placement despite substantial occlusion. This indicates that SAMURAI’s motion-aware memory selection enables more robust tracking by leveraging temporal continuity.

Figure 10.

Comparative tracking performance in the major occlusion scenario (YOLOv8 vs. YOLOv8 + SAMURAI).

Figure 11 illustrates the multiple worker overlap scenario, comparing the two approaches in a dynamic environment with multiple workers moving across the frame. The lower sequence, using only YOLOv8, shows significant tracking failures, including identity switches where bounding boxes are reassigned incorrectly among overlapping workers. The bounding box around the assistant worker frequently disappears or shifts to an unintended worker due to the increased complexity of overlapping instances. Conversely, the YOLOv8 + SAMURAI approach in the upper sequence maintains continuous tracking of the assistant worker, preventing identity switching despite the presence of multiple moving workers. The assistant worker’s bounding box remains stable throughout the frames, indicating that the SAMURAI framework effectively mitigates tracking ambiguities in crowded environments.

SAMURAI’s performance in the multiple worker overlap scenario demonstrates the advantages of its memory-based approach. By considering temporal relationships between frames and using motion modeling, SAMURAI distinguishes between overlapping workers even during complex interactions. This ability to maintain consistent worker identities is crucial for safety compliance monitoring, as it enables continuous tracking of the assistant worker’s position and posture throughout ladder operations.

Figure 11.

Comparative tracking performance in the multiple worker overlap scenario (YOLOv8 vs. YOLOv8 + SAMURAI).

Table 3 presents a comparative analysis of the tracking performance of YOLOv8 and YOLOv8 + SAMURAI under four different occlusion scenarios: non-occlusion, minor occlusion, major occlusion, and multiple worker overlap. The evaluation considers three key performance metrics: precision, recall, and processing speed (FPS).

Table 3.

Performance comparison of YOLOv8 and YOLOv8 + SAMURAI across different occlusion scenarios.

In the non-occlusion scenario, where no obstructions are present, YOLOv8 achieves high precision (98.5%) and recall (97.1%), demonstrating its effectiveness under ideal conditions. The addition of SAMURAI results in a slight improvement, increasing the precision to 98.7% and recall to 97.8%, suggesting that the tracking module has minimal effect when occlusions are absent.

As occlusions become more significant, YOLOv8’s performance declines across all metrics. In the minor occlusion case, where the assistant worker is partially blocked by static objects, YOLOv8’s precision and recall drop to 92.3% and 90.8%, respectively. With SAMURAI integrated, both values increase to 94.5% and 93.2%, highlighting the effectiveness of motion-aware tracking in mitigating minor occlusions.

In the major occlusion scenario, where large portions of the assistant worker are blocked by another worker, YOLOv8’s precision and recall drop significantly, to 78.2% and 74.5%. With SAMURAI, however, the system performs considerably better, achieving 89.4% precision and 87.3% recall. This result suggests that the motion-aware memory selection mechanism effectively retains object identity even when large portions of the target are obscured.

The multiple worker overlap scenario presents the greatest challenge, with multiple workers passing in front of the assistant worker, leading to frequent misidentifications. In this case, YOLOv8 alone suffers from a considerable drop in both precision (65.7%) and recall (60.3%). However, when combined with SAMURAI, the precision increases to 82.1% and recall to 80.5%, indicating that the tracking system successfully differentiates overlapping individuals and reduces identity confusion.

While SAMURAI significantly improves precision and recall in occlusion-heavy conditions, it introduces computational overhead that reduces processing speed (FPS). YOLOv8 alone processes frames at 48.5 FPS under non-occluded conditions, and its FPS decreases slightly as occlusion complexity increases, owing to increased detection challenges.

When SAMURAI is applied, the system runs at a lower FPS in every scenario. In the non-occlusion scenario, the FPS decreases from 48.5 to 46.2, a minor computational cost. In the multiple worker overlap scenario, however, where the tracking model must continuously distinguish between overlapping workers, the FPS drops to 36.5. This trade-off indicates that, while SAMURAI enhances tracking performance, it requires additional computational resources, which should be considered when deploying the system in real-time applications.
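Converting throughput into per-frame latency makes the trade-off easier to judge. The sketch uses the FPS values stated above and compares them with the 24 FPS recording rate given in Section 3.2. The dictionary labels and function name are illustrative only.

```python
SOURCE_FPS = 24.0  # recording frame rate stated in Section 3.2

measured = {
    "YOLOv8, non-occlusion": 48.5,
    "YOLOv8 + SAMURAI, non-occlusion": 46.2,
    "YOLOv8 + SAMURAI, multiple worker overlap": 36.5,
}


def latency_ms(fps: float) -> float:
    """Average per-frame processing time in milliseconds."""
    return 1000.0 / fps


for name, fps in measured.items():
    margin = fps / SOURCE_FPS  # > 1 means frames are processed faster than they arrive
    print(f"{name}: {latency_ms(fps):.1f} ms/frame, {margin:.2f}x the source rate")
```

Even the slowest reported configuration (36.5 FPS, about 27.4 ms per frame) processes frames faster than a 24 FPS stream delivers them (about 41.7 ms per frame). This margin applies only to the study's test conditions and may differ on other hardware or at other resolutions.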

The experimental results indicate that YOLOv8 performs effectively in scenarios with no occlusions or minor occlusions, maintaining high precision and recall. However, as the occlusion severity increases—particularly in the major occlusion and multiple worker overlap scenarios—the model struggles to maintain object identity and tracking accuracy.

The integration of SAMURAI significantly enhances tracking performance in these challenging conditions. By incorporating a motion-aware memory selection mechanism, SAMURAI enables the system to preserve object identity even when a worker is largely obscured or when multiple workers overlap, thereby reducing misidentifications and tracking failures.

Despite these improvements, the addition of SAMURAI introduces computational overhead, resulting in a moderate decrease in processing speed (FPS). This trade-off is compensated by substantial gains in tracking reliability and accuracy, which matter most in dynamic construction environments where occlusions are frequent. These findings indicate that the YOLOv8 + SAMURAI framework is effective for safety monitoring at construction sites.

4.3. Limitations, Contributions, and Future Applications

While the SAMURAI-based tracking system has shown promising results, certain limitations should be considered. First, computational efficiency remains a challenge. Although SAMURAI enhances tracking performance in occlusion scenarios, it introduces additional processing overhead compared with YOLO-based detection alone. The drop in processing speed (from 48.5 FPS for YOLOv8 alone without occlusion to 36.5 FPS for YOLOv8 + SAMURAI with multiple worker overlap) indicates an increased computational burden, which could limit real-time deployment in resource-constrained environments. Optimizing the memory selection mechanism and reducing redundant computations may improve processing speed without sacrificing accuracy.

Pruning and quantization, which are widely used to accelerate deep learning inference, have not yet been applied in this study. Implementing and benchmarking them within the YOLOv8 + SAMURAI framework, to reduce model complexity without significantly compromising performance, remains a valuable direction for future work. Additionally, integrating region-of-interest (ROI)-based processing or attention mechanisms could reduce the computational load by focusing resources only on relevant image regions. For deployment in edge environments, hardware-level optimizations using accelerators such as FPGAs or edge TPUs may also be considered.
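ROI-based processing could, for example, restrict detection to a window around the assistant worker's last tracked box instead of the full frame. The sketch below computes such a window with a relative margin and clamps it to the image. The margin value, frame size, and function names are hypothetical, and the study did not evaluate this approach.

```python
from typing import Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2), pixel coordinates


def expand_roi(box: Box, frame_w: int, frame_h: int, margin: float = 0.5) -> Box:
    """Grow a box by `margin` times its width/height on each side, clamped to the frame."""
    x1, y1, x2, y2 = box
    dx = int(round((x2 - x1) * margin))
    dy = int(round((y2 - y1) * margin))
    return (max(0, x1 - dx), max(0, y1 - dy),
            min(frame_w, x2 + dx), min(frame_h, y2 + dy))


def pixel_fraction(roi: Box, frame_w: int, frame_h: int) -> float:
    """Fraction of the full frame covered by the ROI."""
    x1, y1, x2, y2 = roi
    return (x2 - x1) * (y2 - y1) / (frame_w * frame_h)


# Example: a 1920 x 1080 frame and a 100 x 200 px box for the assistant worker.
roi = expand_roi((900, 400, 1000, 600), 1920, 1080)
print(roi)                                        # (850, 300, 1050, 700)
print(round(pixel_fraction(roi, 1920, 1080), 3))  # 0.039
```

The actual saving depends on how the crop is fed to the detector (for example, at what input size), so the pixel fraction is only a rough indicator of reduced computation.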

Second, SAMURAI’s reliance on motion-aware memory selection introduces potential limitations in scenarios involving abrupt, unpredictable movement. Since the system tracks objects based on temporal continuity, sudden changes in motion—such as workers quickly repositioning themselves outside the field of view—may cause temporary tracking failures. Future improvements could involve integrating optical flow-based motion estimation or reinforcement learning-based adaptive tracking strategies to address these limitations.

Third, the current approach assumes a fixed camera perspective. The system’s effectiveness has been validated under controlled CCTV-based monitoring conditions, where camera viewpoints remain stable. However, real-world construction sites often involve dynamically changing perspectives, handheld recordings, or drone-based monitoring. Future work should explore multi-view camera fusion techniques and adaptive tracking models to ensure robustness across varying camera angles.

Moreover, the current system does not support continuous identity tracking when the assistant worker fully exits the camera’s field of view. Although the SAMURAI tracker is effective at maintaining object continuity through brief occlusions within a single fixed viewpoint, it cannot preserve identity across non-overlapping views or disconnected camera feeds. Addressing this limitation will require the development of inter-camera hand-off mechanisms and multi-perspective identity association techniques, which represent important directions for expanding the system’s applicability in large or open construction sites.
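A common building block for such hand-off is appearance-based re-identification. Each camera produces an embedding vector for a tracked person, and identities are associated across cameras when the embeddings are sufficiently similar. The sketch below shows nearest-neighbour matching by cosine similarity with a hypothetical threshold. The embeddings are toy vectors, and how real embeddings would be produced (for example, by a re-identification network) is outside the scope of this study.

```python
import math
from typing import Dict, List, Optional


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity of two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na > 0 and nb > 0 else 0.0


def associate(gallery: Dict[str, List[float]], query: List[float],
              threshold: float = 0.8) -> Optional[str]:
    """Return the gallery identity most similar to `query`, or None if no
    similarity reaches the (hypothetical) threshold."""
    best_id, best_sim = None, threshold
    for identity, emb in gallery.items():
        sim = cosine(emb, query)
        if sim >= best_sim:
            best_id, best_sim = identity, sim
    return best_id


# Toy embeddings from camera 1; the query comes from camera 2.
gallery = {"assistant": [0.9, 0.1, 0.4], "other": [0.1, 0.9, 0.2]}
print(associate(gallery, [0.85, 0.15, 0.45]))  # assistant
print(associate(gallery, [0.0, 0.0, 1.0]))     # None
```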

Furthermore, the current occlusion classification framework is based on static, frame-level visibility thresholds, which may not fully capture the temporal dynamics of real-world occlusion patterns. In practice, occlusions often evolve progressively or intermittently across consecutive frames due to worker movement or environmental changes. Incorporating temporal occlusion transitions into the classification and evaluation framework would enable more realistic assessments of tracking robustness in future studies.
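One way to capture such transitions is to group per-frame visibility ratios into contiguous occlusion episodes. Evaluation could then account for how long an occlusion lasts and how it evolves, not just its state in single frames. In the sketch below, the 0.5 threshold mirrors the IoU > 0.5 criterion used for the minor occlusion class. The episode representation, function name, and visibility values are hypothetical.

```python
from itertools import groupby
from typing import List, Tuple


def occlusion_episodes(visibility: List[float], threshold: float = 0.5
                       ) -> List[Tuple[int, int]]:
    """Return (start, end) frame indices (inclusive) of runs where the
    visible fraction of the target is at or below `threshold`."""
    episodes = []
    index = 0
    for occluded, run in groupby(visibility, key=lambda v: v <= threshold):
        length = len(list(run))
        if occluded:
            episodes.append((index, index + length - 1))
        index += length
    return episodes


# Per-frame visible fraction of the assistant worker (toy values).
vis = [1.0, 0.9, 0.6, 0.4, 0.2, 0.3, 0.7, 0.95, 0.45, 0.8]
print(occlusion_episodes(vis))  # [(3, 5), (8, 8)]
```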

In addition, the experiments were conducted in a controlled indoor environment to ensure data reliability and minimize external variables. However, this setup may limit the generalizability of our findings, as real-world construction sites involve additional challenges such as variable lighting conditions, outdoor weather effects (e.g., rain, fog, or strong winds), and complex background dynamics. These environmental changes can significantly affect object visibility and tracking accuracy. Future work will aim to validate the system’s robustness in outdoor and less controlled environments, including scenarios with fluctuating illumination and adverse weather, to better reflect practical deployment conditions.

This study contributes to construction safety monitoring by integrating YOLOv8’s object detection with SAMURAI’s motion-aware memory selection, enabling the continuous tracking of assistant workers even under severe occlusions. Unlike conventional frame-by-frame detection methods, the proposed system preserves object identity through memory selection, ensuring more reliable monitoring in dynamic construction environments.

A key contribution is the structured benchmark evaluation for tracking performance across different occlusion scenarios, providing essential insights into accuracy, identity preservation, and processing speed. This benchmark serves as a foundation for future research on occlusion-resilient tracking in safety-critical applications.

In safety-critical applications such as construction monitoring, tracking errors may have direct operational implications. False positives can result in unnecessary alerts or misallocated attention, while false negatives may cause missed detections of safety violations or hazardous behavior. Even brief identity discontinuities may lead to incomplete compliance records. The proposed system’s focus on identity preservation and robust tracking under occlusion aims to minimize such risks and enhance the reliability of automated safety monitoring.

Additionally, the proposed framework is designed for seamless integration with existing CCTV infrastructure, requiring no additional sensor hardware. SAMURAI’s zero-shot learning capability further enhances its adaptability, allowing deployment in diverse construction settings without retraining.

While this study focused on SAMURAI due to its zero-shot capability and strong performance under severe occlusions, we acknowledge that many other tracking algorithms such as DeepSORT and ByteTrack are widely used in object tracking applications. A comparative evaluation between YOLO + SAMURAI and alternative tracking combinations (e.g., YOLO + DeepSORT) is planned for future work to assess trade-offs in tracking accuracy, identity preservation, and computational efficiency in construction-specific environments.

By improving tracking robustness and practical applicability, this study bridges the gap between theoretical object tracking models and real-world construction safety needs. The results highlight the importance of motion-aware tracking for enhancing worker protection and ensuring more reliable monitoring in complex, multi-worker environments.

The YOLOv8-SAMURAI-based safety monitoring system can be applied beyond ladder operations to other high-risk construction activities, such as scaffolding, crane operations, and excavation, where worker occlusion frequently occurs. By integrating with existing CCTV systems, this framework enables automated compliance monitoring, tracking worker positions, detecting safety gear usage, and identifying hazardous zone violations.

Although more recent YOLO versions such as v11 and v12 have introduced architectural enhancements, this study adopted YOLOv8 due to its stability, widespread adoption, and strong compatibility with existing datasets and annotation tools. At the time of development, YOLOv8 provided a well-documented and benchmarked framework that balanced detection accuracy and inference speed—key factors for integration into real-time construction monitoring systems. In addition, many publicly available datasets and preprocessing platforms, including Roboflow, offered full support for YOLOv8, facilitating efficient model training and deployment. Future studies may compare the proposed system’s performance using newer versions of YOLO to evaluate potential improvements in occlusion handling and detection precision.

Additionally, the system can enhance safety by monitoring interactions between workers and construction equipment, reducing collision risks and ensuring safe distances. Collected data can also serve as training material for improving safety awareness and management strategies. These applications aim to strengthen construction site safety by providing continuous and reliable monitoring in complex environments.

In addition to improving real-time tracking, future extensions of the proposed system may benefit from integration with distributed ledger technologies to enhance data traceability and accountability. In particular, construction safety monitoring systems could utilize blockchain frameworks to ensure the immutability of safety event records, maintain verifiable logs of worker behavior, and support transparent audit trails for compliance verification. Prior studies have demonstrated how such technologies can significantly improve the reliability and integrity of safety-related data, especially in decentralized or multi-party construction environments [59,60]. Integrating our system with distributed ledger platforms may therefore enhance not only the accuracy of monitoring but also the trustworthiness and long-term traceability of recorded safety data.
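The tamper evidence that makes ledgers attractive here comes from chaining record hashes. Each safety event stores the hash of the previous record, so altering any earlier record invalidates the chain. The sketch below shows only this hash-chaining idea, using Python's standard `hashlib` and `json` modules. It is not a blockchain, since it has no distribution or consensus, and the event fields and function names are hypothetical.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def record_hash(event: Dict, prev_hash: str) -> str:
    """SHA-256 over a canonical JSON encoding of the event plus the previous hash."""
    payload = json.dumps(event, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_event(chain: List[Dict], event: Dict) -> None:
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({"event": event, "prev": prev_hash,
                  "hash": record_hash(event, prev_hash)})


def verify(chain: List[Dict]) -> bool:
    """Recompute every link; any edited record breaks the chain."""
    prev_hash = GENESIS
    for rec in chain:
        if rec["prev"] != prev_hash or rec["hash"] != record_hash(rec["event"], prev_hash):
            return False
        prev_hash = rec["hash"]
    return True


log: List[Dict] = []
append_event(log, {"frame": 120, "type": "assistant_absent", "camera": "cam1"})
append_event(log, {"frame": 168, "type": "assistant_present", "camera": "cam1"})
print(verify(log))  # True
log[0]["event"]["type"] = "edited"
print(verify(log))  # False
```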

5. Conclusions

This study explored a safety monitoring approach for ladder operations in construction sites by integrating YOLOv8 for initial object detection and SAMURAI for robust tracking in occlusion scenarios. The proposed system was evaluated under four conditions: non-occlusion, minor occlusion, major occlusion, and multiple worker overlap. The results demonstrate that, while YOLOv8 performed well in clear visibility conditions, its tracking capability significantly declined under severe occlusions. In contrast, SAMURAI effectively maintained object identity and tracking continuity, even when workers were partially or fully obscured.

A comparative analysis showed that SAMURAI’s motion-aware memory selection mechanism improved tracking stability and accuracy, particularly in challenging occlusion scenarios. The system’s ability to reduce identity switching and maintain tracking consistency makes it a viable solution for real-world construction site safety monitoring. Although the integration of SAMURAI introduced a moderate reduction in processing speed, the trade-off was justified by the significant improvement in tracking reliability.

Despite its advantages, certain limitations remain, including the computational cost associated with the tracking module and the potential need for further optimization to handle extreme occlusion cases. Future research will focus on refining the system’s efficiency, expanding its application to other hazardous construction activities, and integrating it with broader site-wide safety management frameworks.

Overall, this study provides a foundation for enhancing construction site safety monitoring by addressing occlusion-related challenges in worker tracking. The proposed YOLOv8-SAMURAI framework offers a practical and scalable solution for real-time safety monitoring, contributing to accident prevention and improved compliance with safety regulations in dynamic and complex construction environments.

Author Contributions

Methodology, S.Y.; Validation, S.Y.; Writing – original draft, S.Y.; Writing – review & editing, H.K.; Visualization, S.Y.; Supervision, H.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by a grant (RS-2022-00143493) from a Digital-Based Building Construction and Safety Supervision Technology Research Program funded by the Ministry of Land, Infrastructure, and Transport of the Korean Government.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki and was approved by the Institutional Review Board of Dankook University (DKU 2020-09-027, date of approval 14 September 2020).

Informed Consent Statement

Written informed consent was obtained from all participants to publish this paper.

Data Availability Statement

All data, models, or code generated by or used in the study are available from the corresponding author upon request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Hamid, A.R.A.; Majid, M.Z.A.; Singh, B. Causes of accidents at construction sites. Malays. J. Civ. Eng. 2008, 20, 242–259.

- Williams, O.S.; Hamid, R.A.; Misnan, M.S. Accident Causal Factors on the Building Construction Sites: A Review. Int. J. Built Environ. Sustain. 2018, 5.

- Jeong, G.; Kim, H.; Lee, H.-S.; Park, M.; Hyun, H. Analysis of Safety Risk Factors of Modular Construction to Identify Accident Trends. J. Asian Archit. Build. Eng. 2022, 21, 1040–1052.

- Purohit, D.P.; Siddiqui, D.N.A.; Nandan, A.; Yadav, D.B.P. Hazard Identification and Risk Assessment in Construction Industry. Int. J. Appl. Eng. Res. 2018, 13, 7639–7667.

- Socias, C.M.; Menéndez, C.K.C.; Collins, J.W.; Simeonov, P. Occupational Ladder Fall Injuries – United States, 2011. Morb. Mortal. Wkly. Rep. 2014, 63, 341–346.

- Nævestad, T.-O.; Elvebakk, B.; Phillips, R.O. The Safety Ladder: Developing an Evidence-Based Safety Management Strategy for Small Road Transport Companies. Transp. Rev. 2018, 38, 372–393.

- Mrugalska, B.; Arezes, P.M. Safety requirements for machinery in practice. In Occupational Safety and Hygiene; CRC Press: Boca Raton, FL, USA, 2013; ISBN 978-0-429-21254-3.

- KOSHA. Available online: https://www.kosha.or.kr/english/index.do (accessed on 10 March 2025).

- Törner, M.; Pousette, A. Safety in Construction – A Comprehensive Description of the Characteristics of High Safety Standards in Construction Work, from the Combined Perspective of Supervisors and Experienced Workers. J. Saf. Res. 2009, 40, 399–409.

- Helander, M.G. Safety Hazards and Motivation for Safe Work in the Construction Industry. Int. J. Ind. Ergon. 1991, 8, 205–223.

- Chen, Z.; Wu, L.; He, H.; Jiao, Z.; Wu, L. Vision-Based Skeleton Motion Phase to Evaluate Working Behavior: Case Study of Ladder Climbing Safety. Hum.-Centric Comput. Inf. Sci. 2022, 12, 1–18.

- Park, M.; Tran, D.Q.; Bak, J.; Kulinan, A.S.; Park, S. Real-Time Monitoring Unsafe Behaviors of Portable Multi-Position Ladder Worker Using Deep Learning Based on Vision Data. J. Saf. Res. 2023, 87, 465–480.

- Han, S.; Lee, S.; Peña-Mora, F. Vision-Based Detection of Unsafe Actions of a Construction Worker: Case Study of Ladder Climbing. J. Comput. Civ. Eng. 2013, 27, 635–644.

- Lee, B.; Kim, H. Measuring Effects of Safety-Reminding Interventions against Risk Habituation. Saf. Sci. 2022, 154, 105857.

- Yang, J.; Park, M.-W.; Vela, P.A.; Golparvar-Fard, M. Construction Performance Monitoring via Still Images, Time-Lapse Photos, and Video Streams: Now, Tomorrow, and the Future. Adv. Eng. Inform. 2015, 29, 211–224.

- Hong, S.; Ham, Y.; Chun, J.; Kim, H. Productivity Measurement through IMU-Based Detailed Activity Recognition Using Machine Learning: A Case Study of Masonry Work. Sensors 2023, 23, 7635.

- Musarat, M.A.; Khan, A.M.; Alaloul, W.S.; Blas, N.; Ayub, S. Automated Monitoring Innovations for Efficient and Safe Construction Practices. Results Eng. 2024, 22, 102057.

- Teizer, J. Status Quo and Open Challenges in Vision-Based Sensing and Tracking of Temporary Resources on Infrastructure Construction Sites. Adv. Eng. Inform. 2015, 29, 225–238.

- Seo, J.; Han, S.; Lee, S.; Kim, H. Computer Vision Techniques for Construction Safety and Health Monitoring. Adv. Eng. Inform. 2015, 29, 239–251.

- Lee, B.; Hong, S.; Kim, H. Determination of Workers’ Compliance to Safety Regulations Using a Spatio-Temporal Graph Convolution Network. Adv. Eng. Inform. 2023, 56, 101942.

- Lee, B.; Kim, H. Evaluating the Effects of Safety Incentives on Worker Safety Behavior Control through Image-Based Activity Classification. Front. Public Health 2024, 12, 1430697.

- Hong, S.; Choi, B.; Ham, Y.; Jeon, J.; Kim, H. Massive-Scale Construction Dataset Synthesis through Stable Diffusion for Machine Learning Training. Adv. Eng. Inform. 2024, 62, 102866. [Google Scholar] [CrossRef]

- Lee, J.; Lee, S. Construction Site Safety Management: A Computer Vision and Deep Learning Approach. Sensors 2023, 23, 944. [Google Scholar] [CrossRef] [PubMed]

- Li, Y.; Wei, H.; Han, Z.; Huang, J.; Wang, W. Deep Learning-Based Safety Helmet Detection in Engineering Management Based on Convolutional Neural Networks. Adv. Civ. Eng. 2020, 2020, 9703560. [Google Scholar] [CrossRef]

- Han, K.; Zeng, X. Deep Learning-Based Workers Safety Helmet Wearing Detection on Construction Sites Using Multi-Scale Features. IEEE Access 2022, 10, 718–729. [Google Scholar] [CrossRef]

- Shin, Y.; Seo, S.-W.; Choongwan, K. Synthetic Video Generation Process Model for Enhancing the Activity Recognition Performance of Heavy Construction Equipment—Utilizing 3D Simulations in Unreal Engine Environment. Korean J. Constr. Eng. Manag. 2025, 26, 74–82. [Google Scholar]

- Kim, J.-M. The Quantification of the Safety Accident of Foreign Workers in the Construction Sites. Korean J. Constr. Eng. Manag. 2024, 25, 25–31. [Google Scholar] [CrossRef]

- Yoon, S.; Kim, H. Time-Series Image-Based Automated Monitoring Framework for Visible Facilities: Focusing on Installation and Retention Period. Sensors 2025, 25, 574. [Google Scholar] [CrossRef]

- Ferdous, M.; Ahsan, S.M.M. PPE Detector: A YOLO-Based Architecture to Detect Personal Protective Equipment (PPE) for Construction Sites. PeerJ Comput. Sci. 2022, 8, e999. [Google Scholar] [CrossRef]

- Kim, K.; Kim, K.; Jeong, S. Application of YOLO v5 and v8 for Recognition of Safety Risk Factors at Construction Sites. Sustainability 2023, 15, 15179. [Google Scholar] [CrossRef]

- Rane, N.; Choudhary, S.; Rane, J. YOLO and Faster R-CNN Object Detection in Architecture, Engineering and Construction (AEC): Applications, Challenges, and Future Prospects. 2023. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4624204 (accessed on 20 December 2024).

- Xiao, B.; Kang, S.-C. Development of an Image Data Set of Construction Machines for Deep Learning Object Detection. J. Comput. Civ. Eng. 2021, 35, 05020005.

- Duan, R.; Deng, H.; Tian, M.; Deng, Y.; Lin, J. SODA: A Large-Scale Open Site Object Detection Dataset for Deep Learning in Construction. Autom. Constr. 2022, 142, 104499.

- Park, M.-W.; Brilakis, I. Continuous Localization of Construction Workers via Integration of Detection and Tracking. Autom. Constr. 2016, 72, 129–142.

- Liu, Y.; Wang, P.; Li, H. An Improved YOLOv5s-Based Algorithm for Unsafe Behavior Detection of Construction Workers in Construction Scenarios. Appl. Sci. 2025, 15, 1853.

- Fang, W.; Love, P.E.D.; Luo, H.; Ding, L. Computer Vision for Behaviour-Based Safety in Construction: A Review and Future Directions. Adv. Eng. Inform. 2020, 43, 100980.

- Wang, Q.; Liu, H.; Peng, W.; Tian, C.; Li, C. A Vision-Based Approach for Detecting Occluded Objects in Construction Sites. Neural Comput. Appl. 2024, 36, 10825–10837.

- Zaidi, S.F.A.; Yang, J.; Abbas, M.S.; Hussain, R.; Lee, D.; Park, C. Vision-Based Construction Safety Monitoring Utilizing Temporal Analysis to Reduce False Alarms. Buildings 2024, 14, 1878.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Yao, C.-H.; Fang, C.; Shen, X.; Wan, Y.; Yang, M.-H. Video Object Detection via Object-Level Temporal Aggregation. In Computer Vision—ECCV 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.-M., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2020; Volume 12359, pp. 160–177. ISBN 978-3-030-58567-9.

- Farhat, W.; Rhaiem, O.B.; Faiedh, H.; Souani, C. Optimized Deep Learning for Pedestrian Safety in Autonomous Vehicles. Int. J. Transp. Sci. Technol. 2025, in press.

- Tang, S.; Andriluka, M.; Andres, B.; Schiele, B. Multiple People Tracking by Lifted Multicut and Person Re-Identification. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3701–3710.

- Alif, M.A.R.; Hussain, M. YOLOv12: A Breakdown of the Key Architectural Features. arXiv 2025, arXiv:2502.14740.

- Alif, M.A.R. YOLOv11 for Vehicle Detection: Advancements, Performance, and Applications in Intelligent Transportation Systems. arXiv 2024, arXiv:2410.22898.

- Feng, R.; Miao, Y.; Zheng, J. A YOLO-Based Intelligent Detection Algorithm for Risk Assessment of Construction Sites. J. Intell. Constr. 2024, 2, 1–18.

- Garcea, F.; Cucco, A.; Morra, L.; Lamberti, F. Object Tracking through Residual and Dense LSTMs. In Proceedings of the International Conference on Image Analysis and Recognition, Póvoa de Varzim, Portugal, 24–26 June 2020; Volume 12132, pp. 100–111.

- Cao, K.; Zhang, T.; Huang, J. Advanced Hybrid LSTM-Transformer Architecture for Real-Time Multi-Task Prediction in Engineering Systems. Sci. Rep. 2024, 14, 4890.

- Zheng, B.; Tiwari, A.; Vijaykumar, N.; Pekhimenko, G. Echo: Compiler-Based GPU Memory Footprint Reduction for LSTM RNN Training. In Proceedings of the Annual International Symposium on Computer Architecture (ISCA), Phoenix, AZ, USA, 22–26 June 2019.

- Meinhardt, T.; Kirillov, A.; Leal-Taixe, L.; Feichtenhofer, C. TrackFormer: Multi-Object Tracking with Transformers. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 8834–8844.

- Luo, W.; Xing, J.; Milan, A.; Zhang, X.; Liu, W.; Kim, T.-K. Multiple Object Tracking: A Literature Review. Artif. Intell. 2021, 293, 103448.

- Yang, C.-Y.; Huang, H.-W.; Chai, W.; Jiang, Z.; Hwang, J.-N. SAMURAI: Adapting Segment Anything Model for Zero-Shot Visual Tracking with Motion-Aware Memory. arXiv 2024, arXiv:2411.11922.

- Wong, T.K.M.; Man, S.S.; Chan, A.H.S. Critical Factors for the Use or Non-Use of Personal Protective Equipment amongst Construction Workers. Saf. Sci. 2020, 126, 104663.

- Santos, C.; Aguiar, M.; Welfer, D.; Belloni, B. A New Approach for Detecting Fundus Lesions Using Image Processing and Deep Neural Network Architecture Based on YOLO Model. Sensors 2022, 22, 6441.

- Han, S.; Won, J.; Choongwan, K. A Strategic Approach to Enhancing the Practical Applicability of Vision-based Detection and Classification Models for Construction Tools—Sensitivity Analysis of Model Performance Depending on Confidence Threshold. Korean J. Constr. Eng. Manag. 2025, 26, 102–109.

- Cui, J.; Zhang, B.; Wang, X.; Wu, J.; Liu, J.; Li, Y.; Zhi, X.; Zhang, W.; Yu, X. Impact of Annotation Quality on Model Performance of Welding Defect Detection Using Deep Learning. Weld. World 2024, 68, 855–865.

- Zhong, J.; Qian, H.; Wang, H.; Wang, W.; Zhou, Y. Improved Real-Time Object Detection Method Based on YOLOv8: A Refined Approach. J. Real-Time Image Proc. 2024, 22, 4.

- Khalili, B.; Smyth, A.W. SOD-YOLOv8—Enhancing YOLOv8 for Small Object Detection in Aerial Imagery and Traffic Scenes. Sensors 2024, 24, 6209.

- Zhou, Q.; Liu, D.; An, K. ESE-YOLOv8: A Novel Object Detection Algorithm for Safety Belt Detection during Working at Heights. Entropy 2024, 26, 591.

- Morteza, A.; Ilbeigi, M.; Schwed, J. A Blockchain Information Management Framework for Construction Safety. In Computing in Civil Engineering; ASCE Library: Reston, VA, USA, 2022; pp. 342–349.

- Liu, Z.; Wu, T.; Wang, F.; Osmani, M.; Demian, P. Blockchain Enhanced Construction Waste Information Management: A Conceptual Framework. Sustainability 2022, 14, 12145.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).