A Review of the Potential of Drone-Based Approaches for Integrated Building Envelope Assessment

Abstract

1. Introduction

- Which type of data is needed for building inspection, as the initial step for building energy retrofit design, and what are the benefits of a drone-based approach to inspecting building envelopes?

- Which sensors can be integrated with drones for effective data collection towards creating 3D point clouds and assessing building defects?

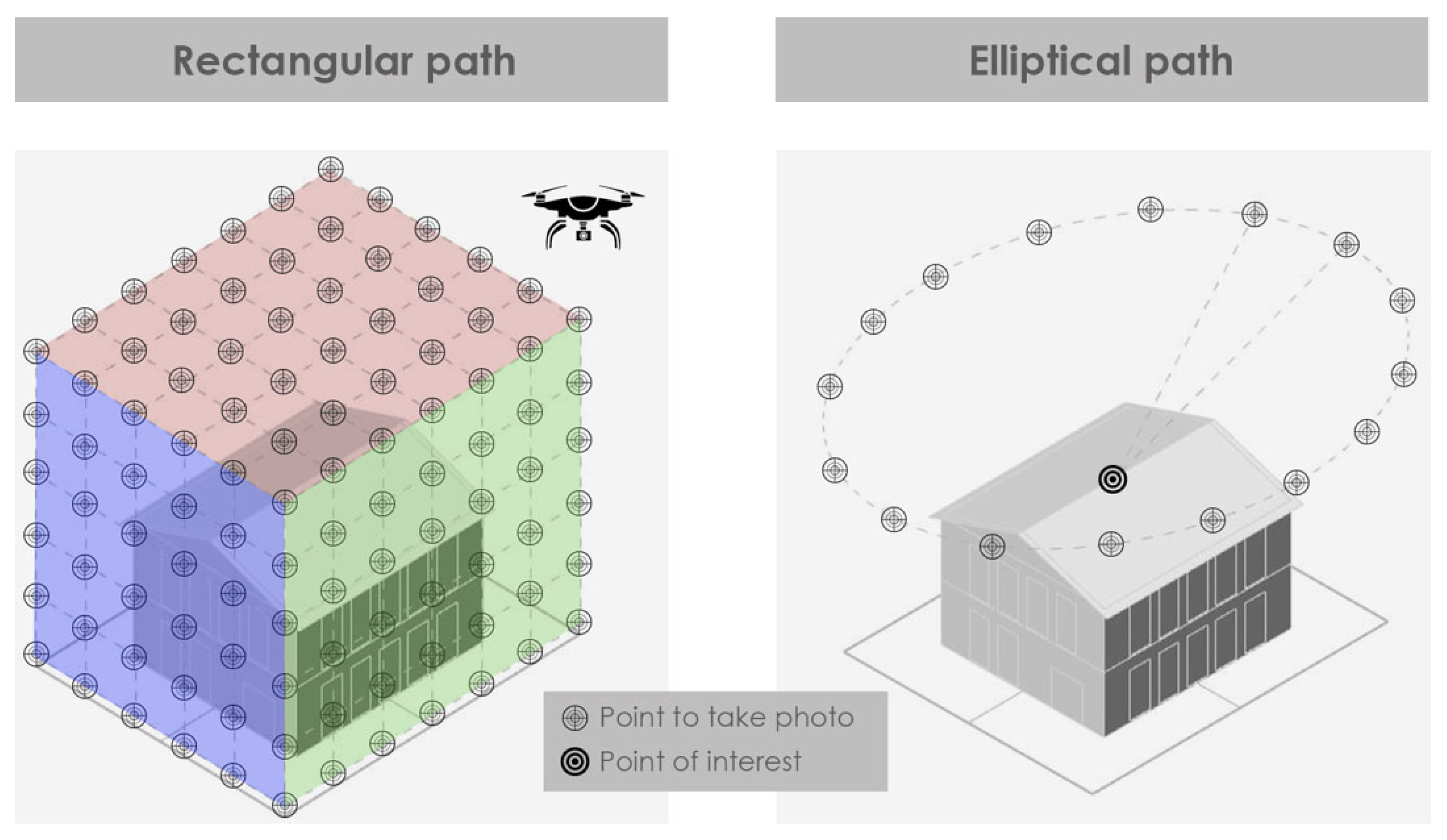

- Which flight path conditions and parameters are appropriate for the effective capture of data from an existing building?

- What are the regulations and other challenges for the operation of drones to collect data from built environments?

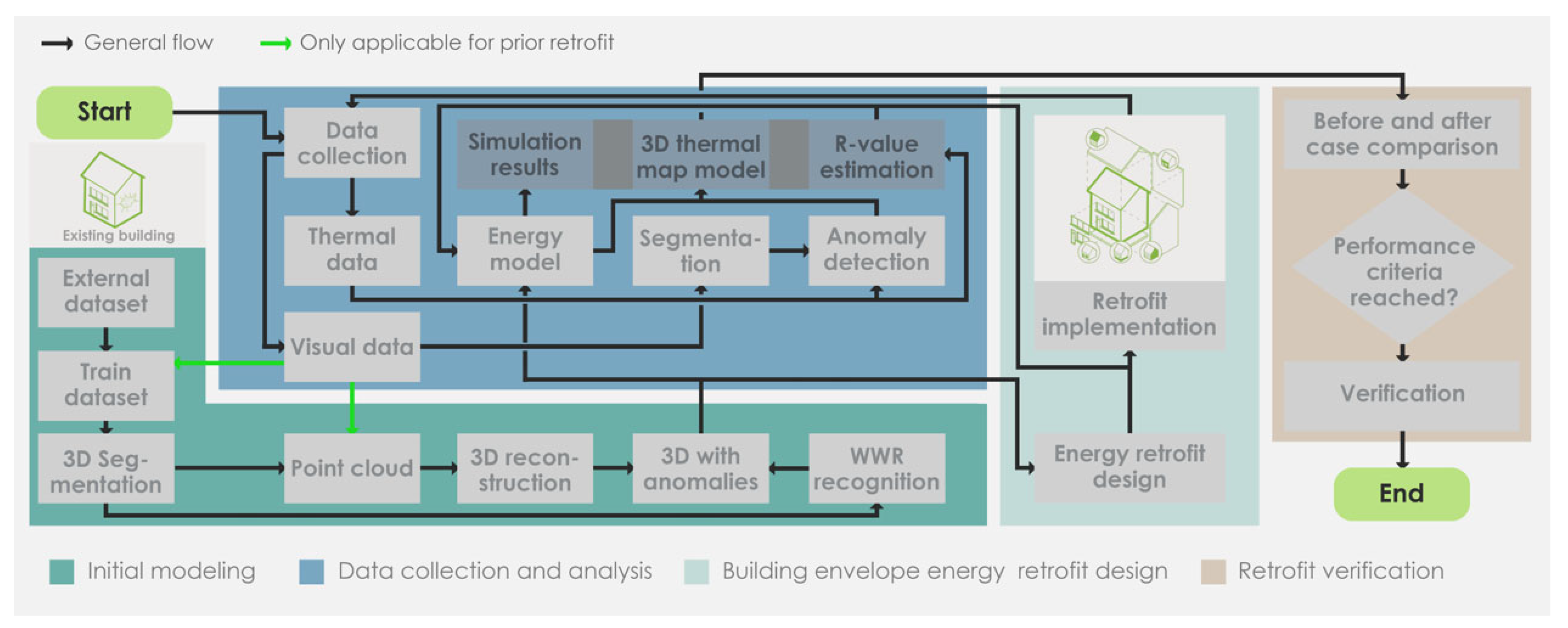

2. Building Inspection

3. Building Envelope Scanning

3.1. Laser Scanning

3.2. Close-Range Photogrammetry

3.3. Ultrasound

3.4. Through-Wall Imaging Radar

3.5. Ground-Penetrating Radar

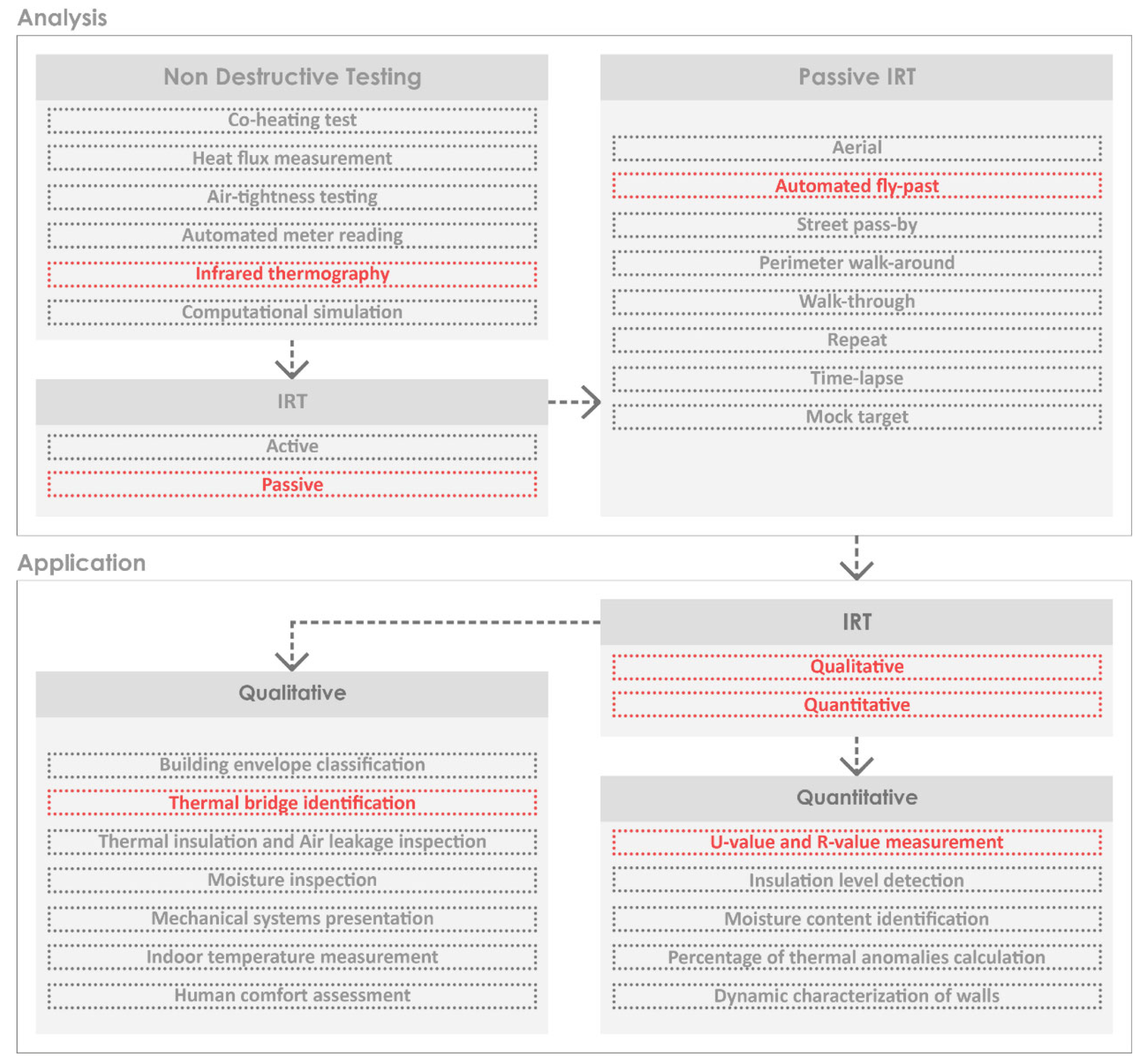

3.6. Infrared Thermography

IRT for Building Envelope Assessment

3.7. Discussion of Envelope Scanning Techniques Based on Input Categories

4. Drone-Based Technologies for Building Inspection

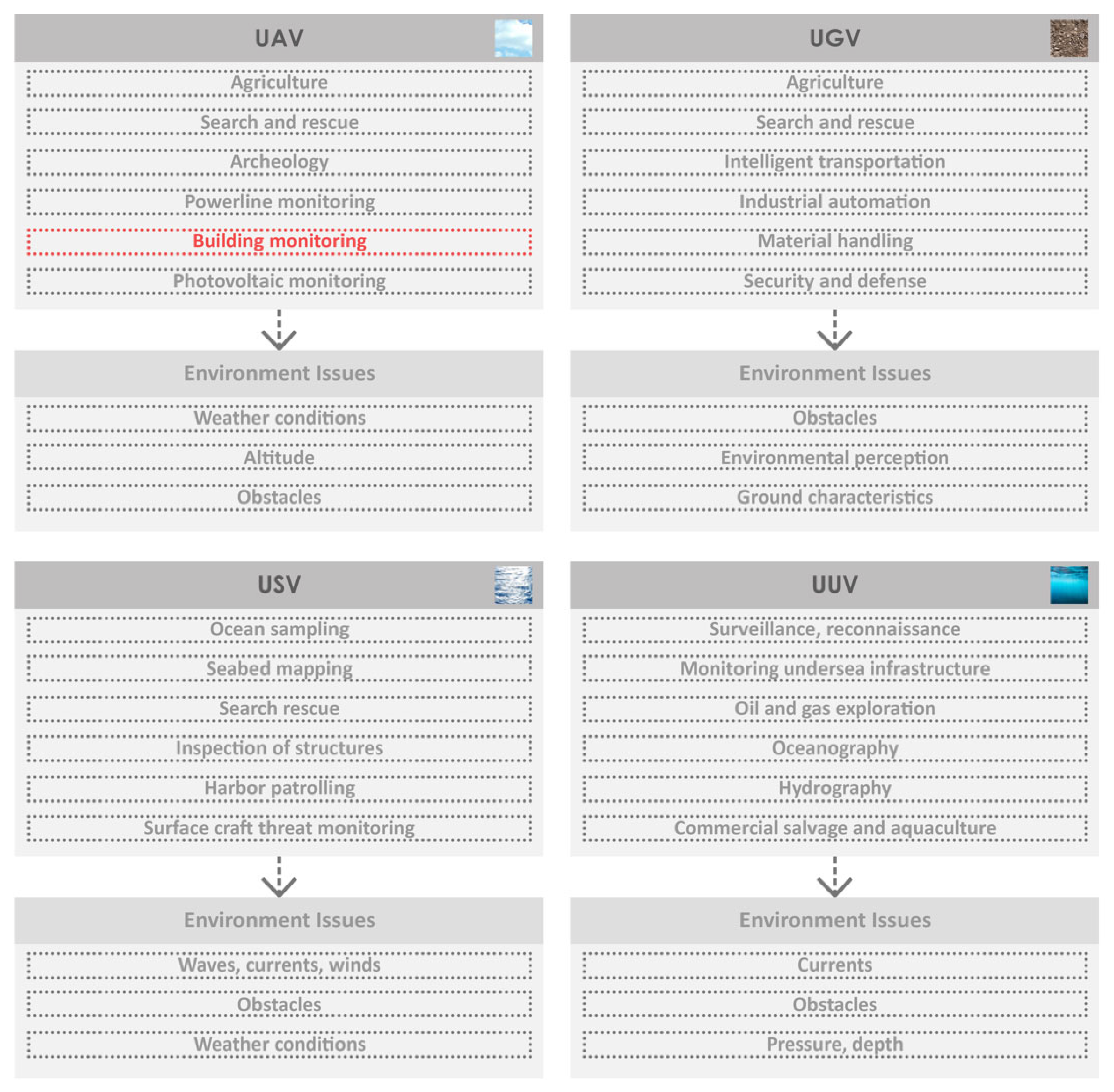

4.1. Unmanned Systems

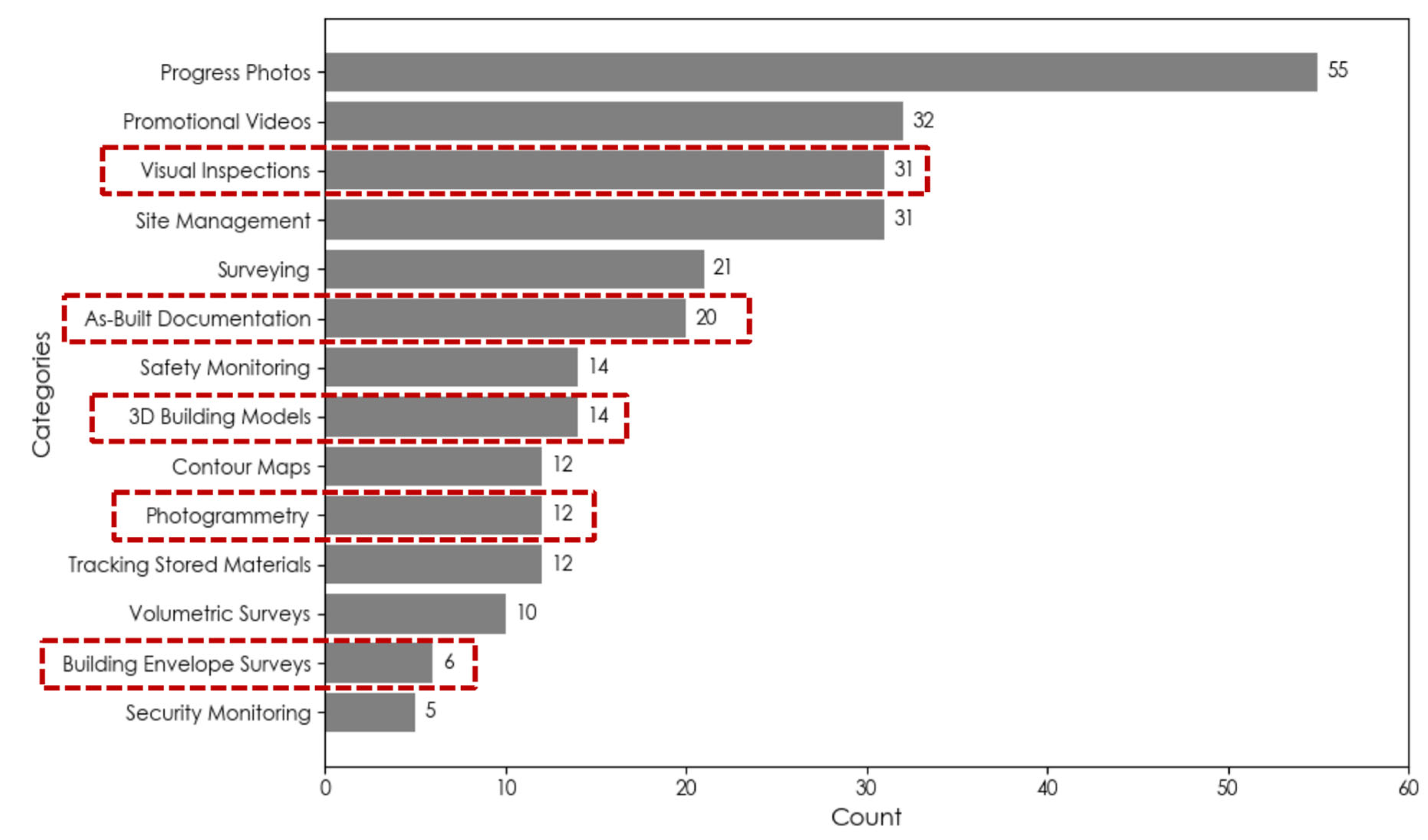

4.2. Overview of Drone Applications in Construction and Building Monitoring

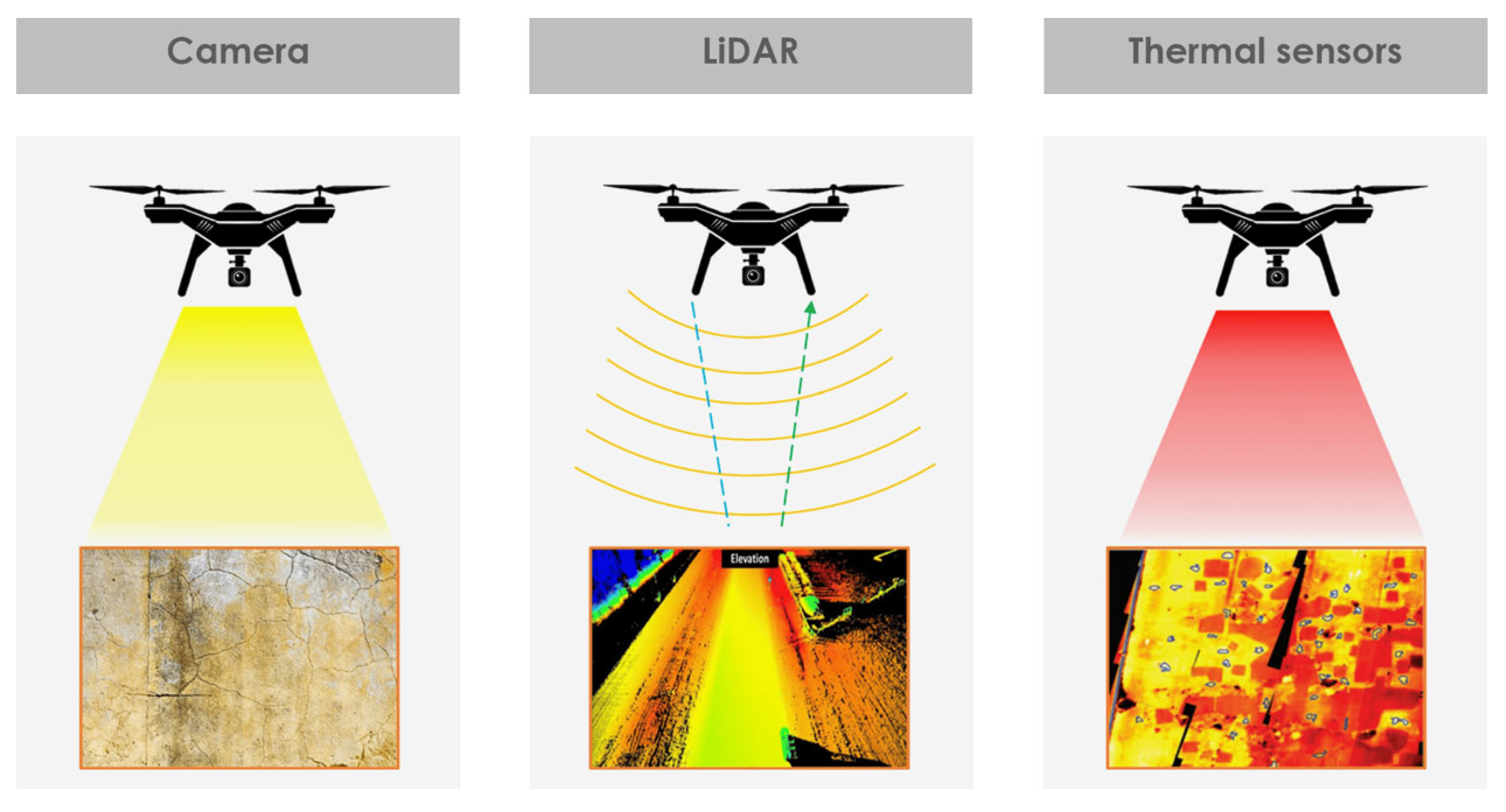

4.3. Sensors for Drones

4.3.1. High-Resolution Camera

4.3.2. Spectral Sensors

4.3.3. Thermal Sensors

4.3.4. LiDAR Sensors

4.3.5. Data Fusion Strategies

4.4. Drones for Building Inspection

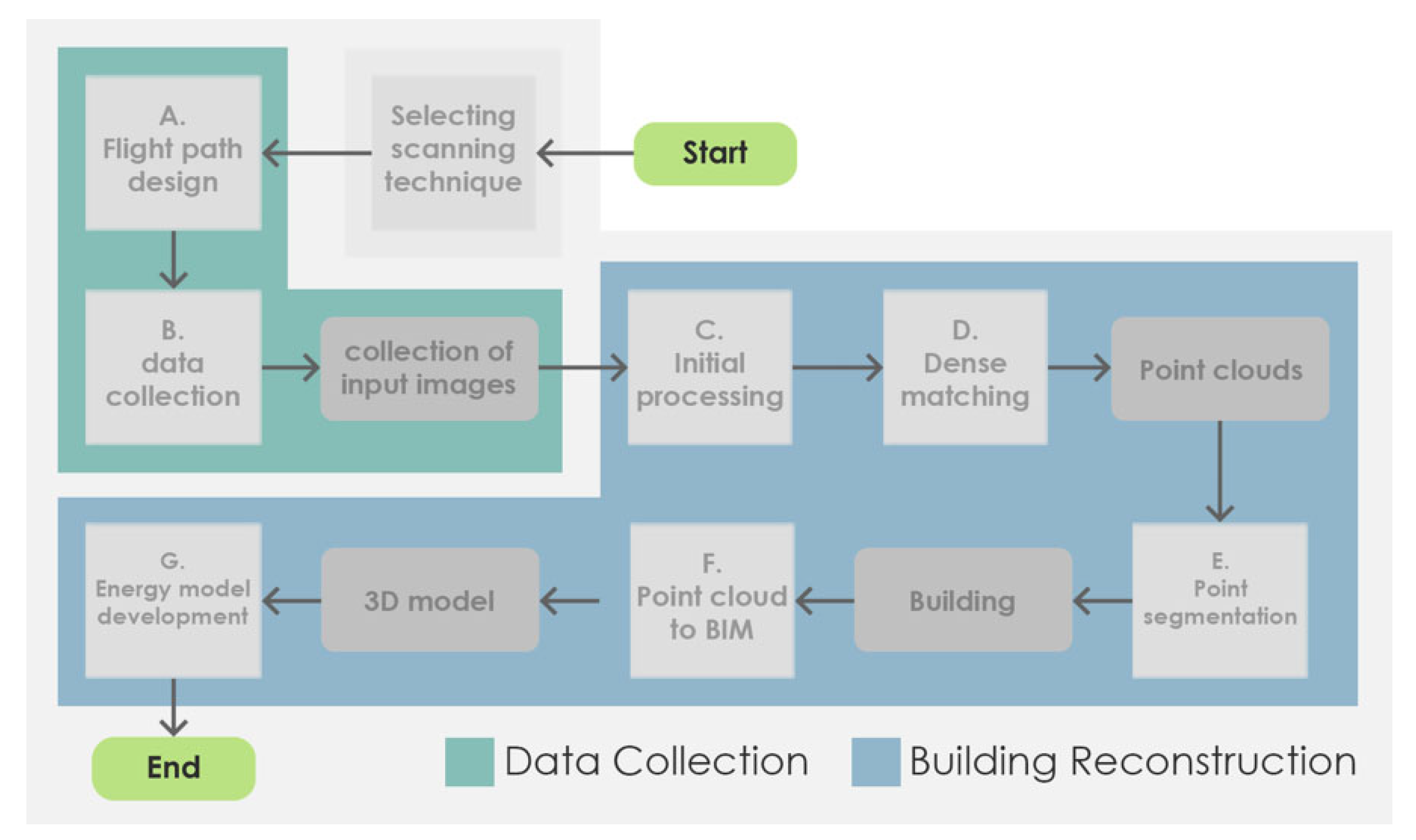

5. A Drone-Based Workflow for Integrated Building Envelope Assessment

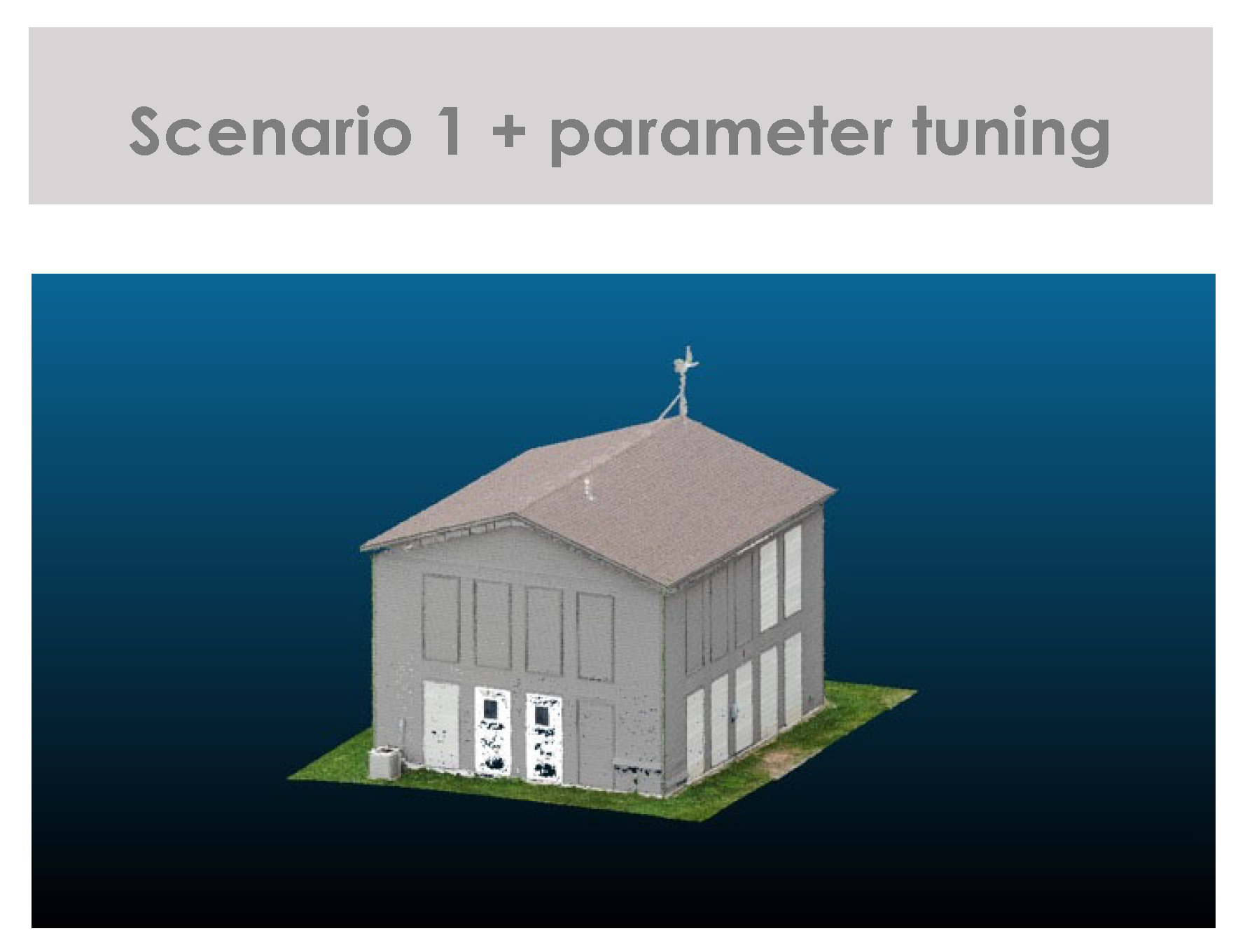

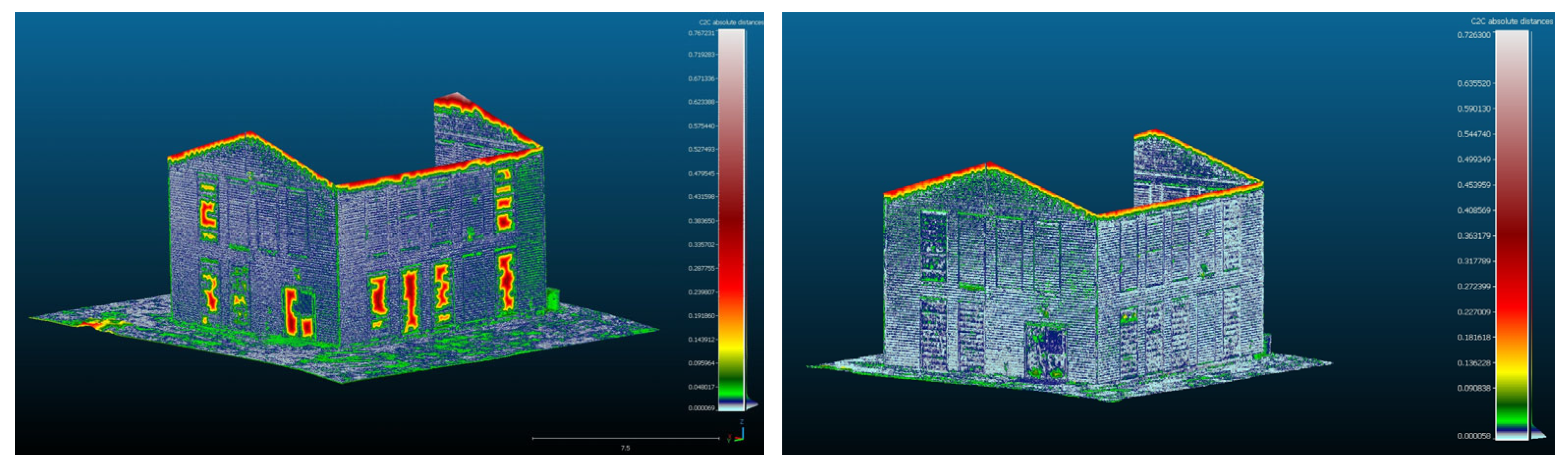

5.1. Case Study

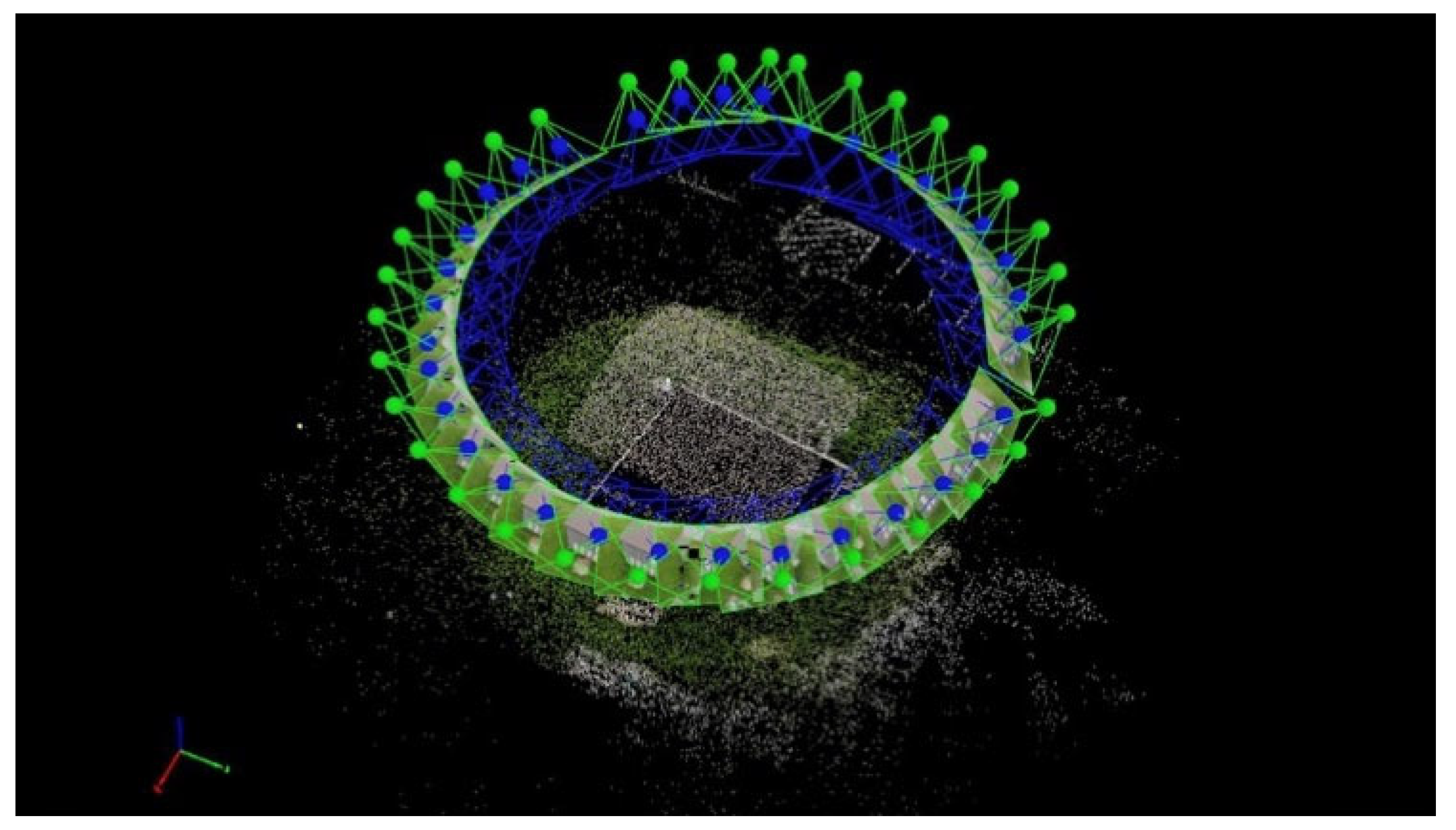

5.1.1. Flight Path Design and Data Collection

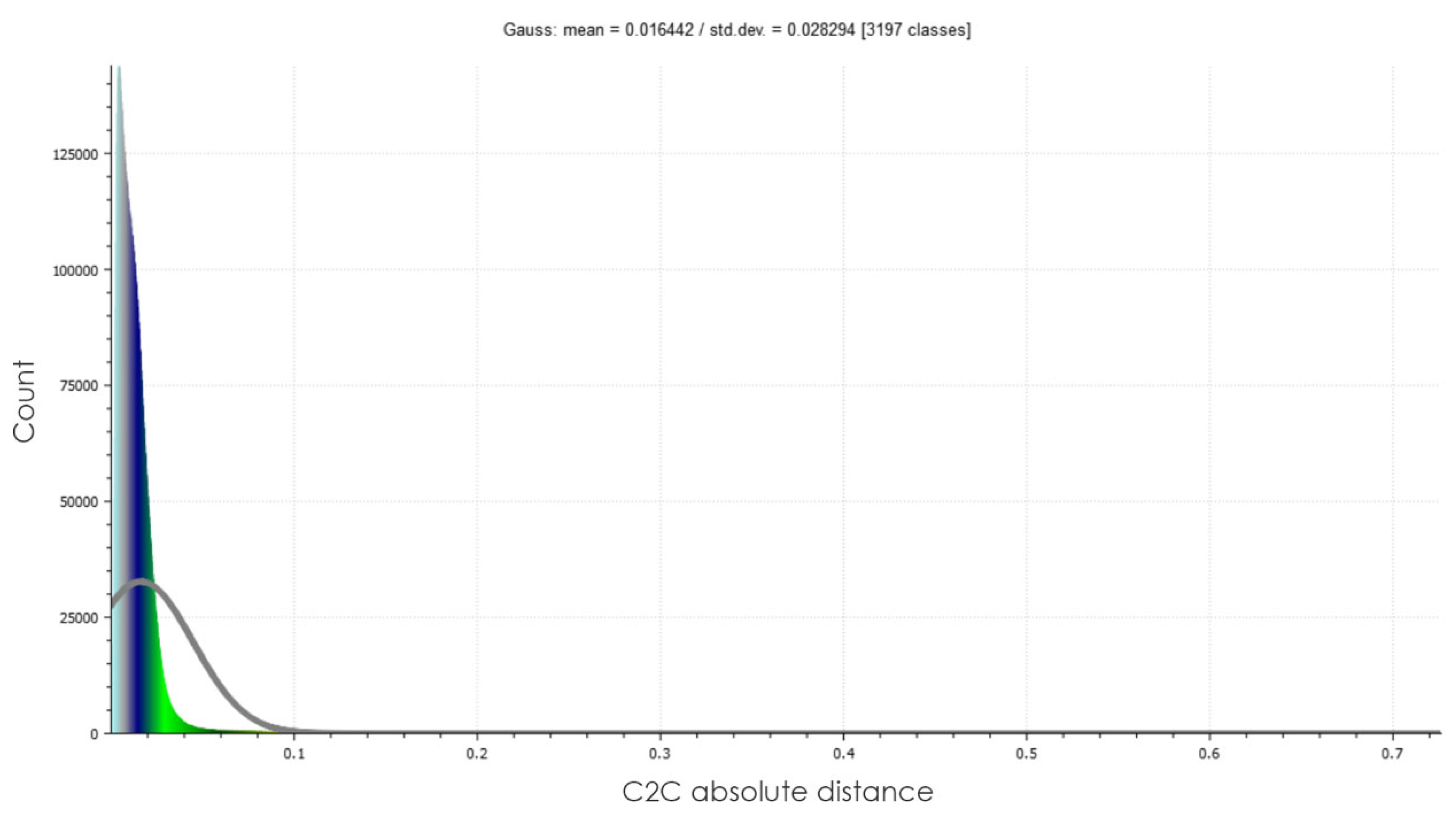

5.1.2. Post-Flight Analysis and Building Reconstruction

6. Results and Discussion

6.1. Case Study Systems

6.2. Challenges of Drone-Based Approaches

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mirzabeigi, S.; Razkenari, R.; Crovella, P. A Review of the Potential of Drone-Based Approaches for Integrated Building Envelope Assessment. Buildings 2025, 15, 2230. https://doi.org/10.3390/buildings15132230
