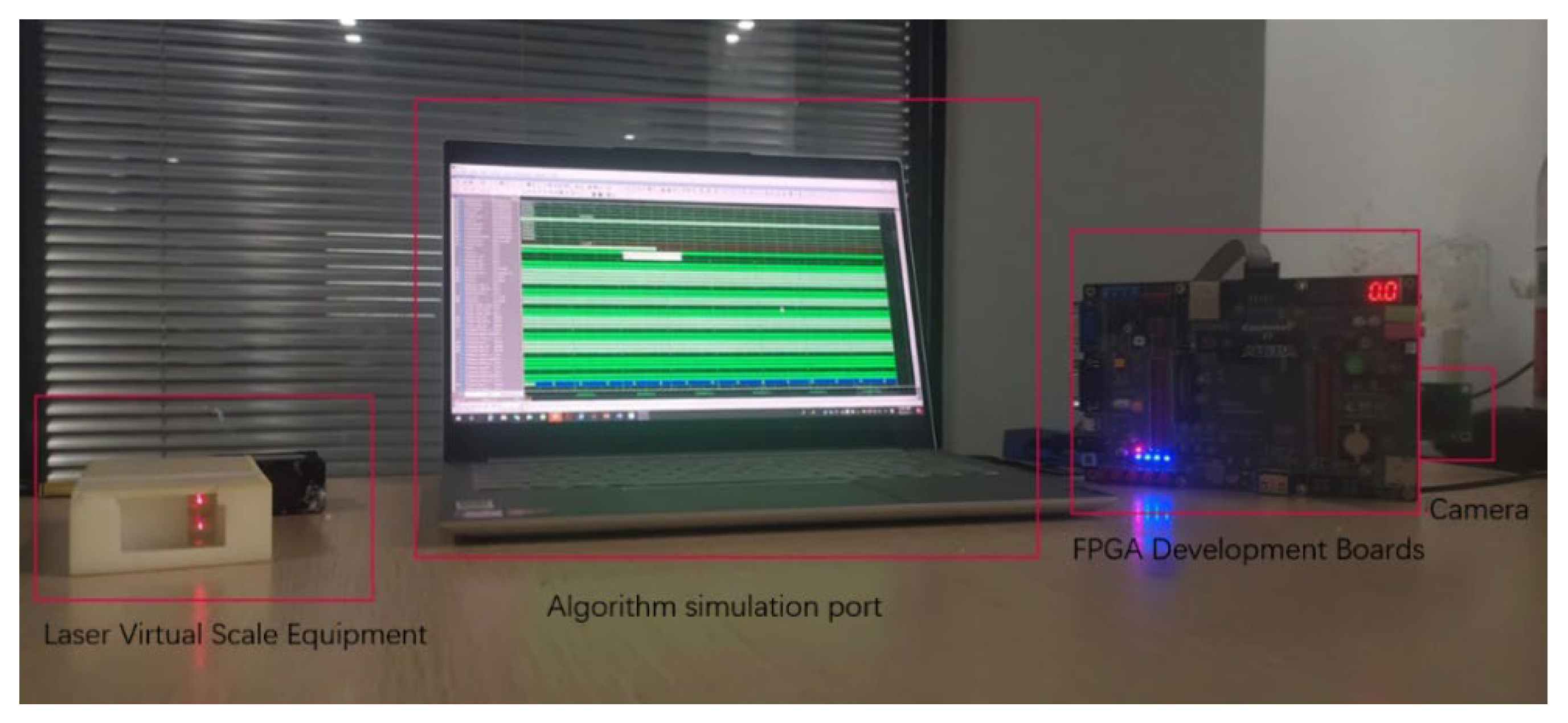

An FPGA-Based Laser Virtual Scale Method for Structural Crack Measurement

Abstract

1. Introduction

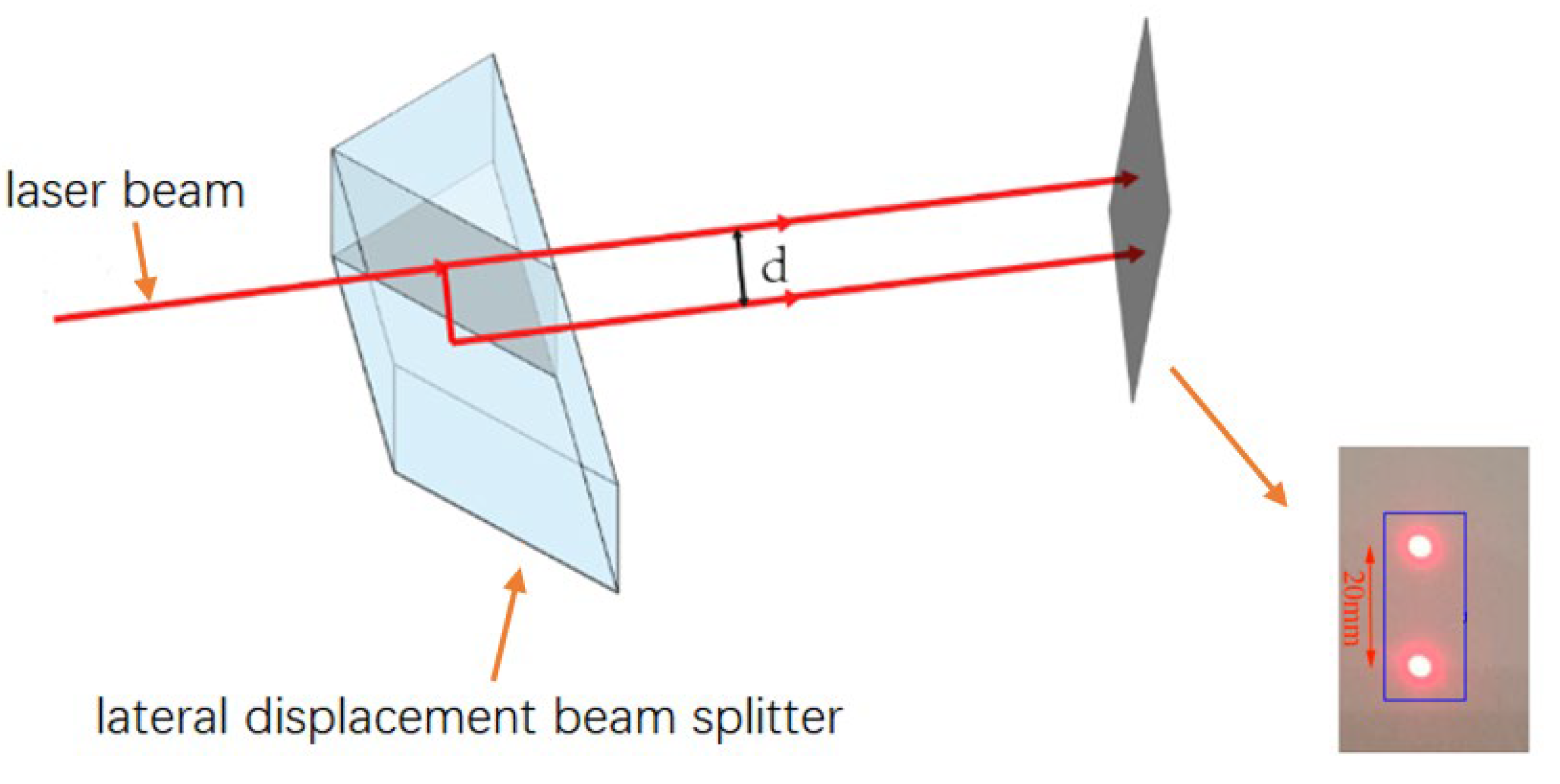

2. Laser Virtual Scale Model

3. FPGA-Based Laser Spot Image Processing

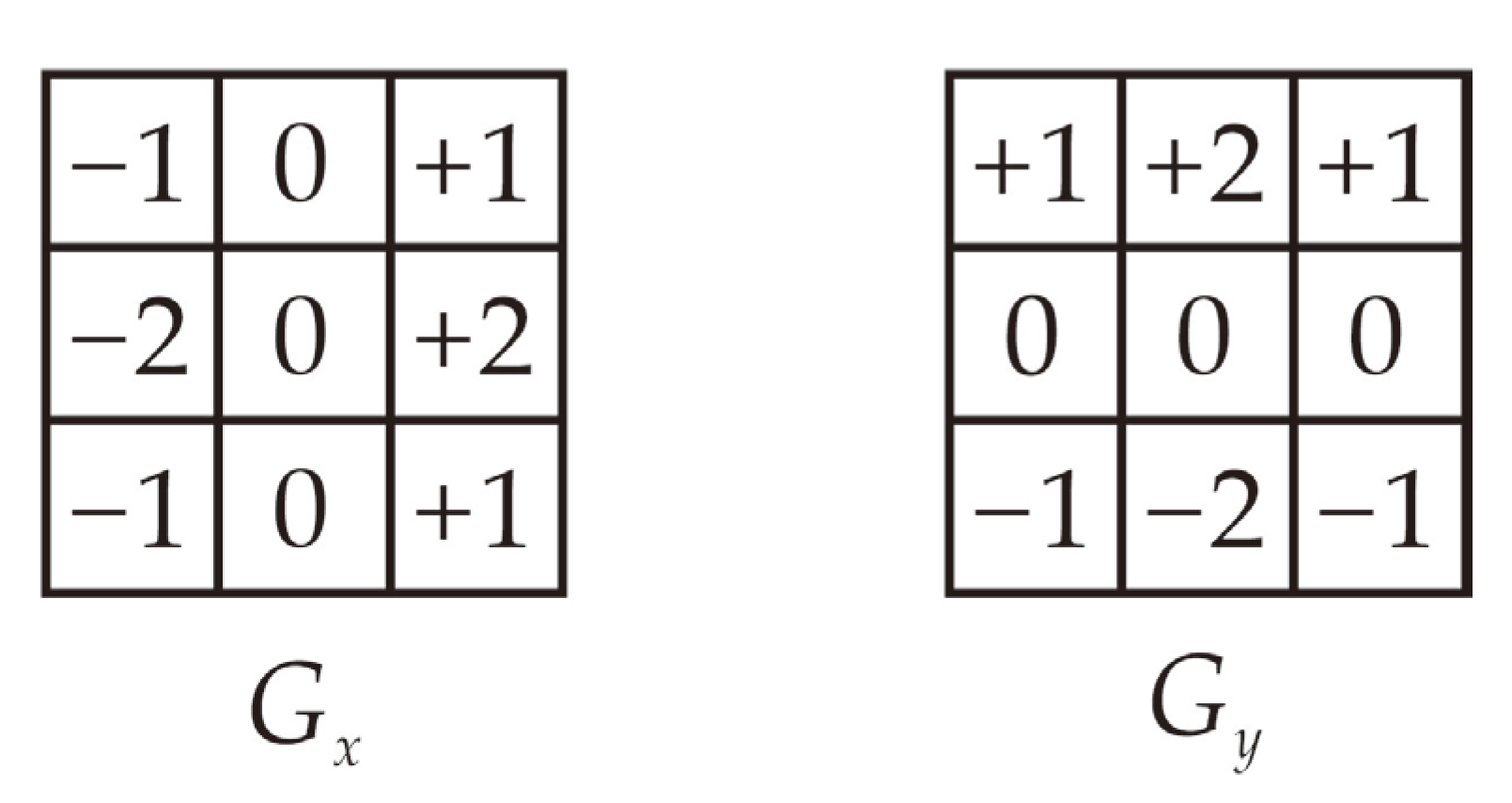

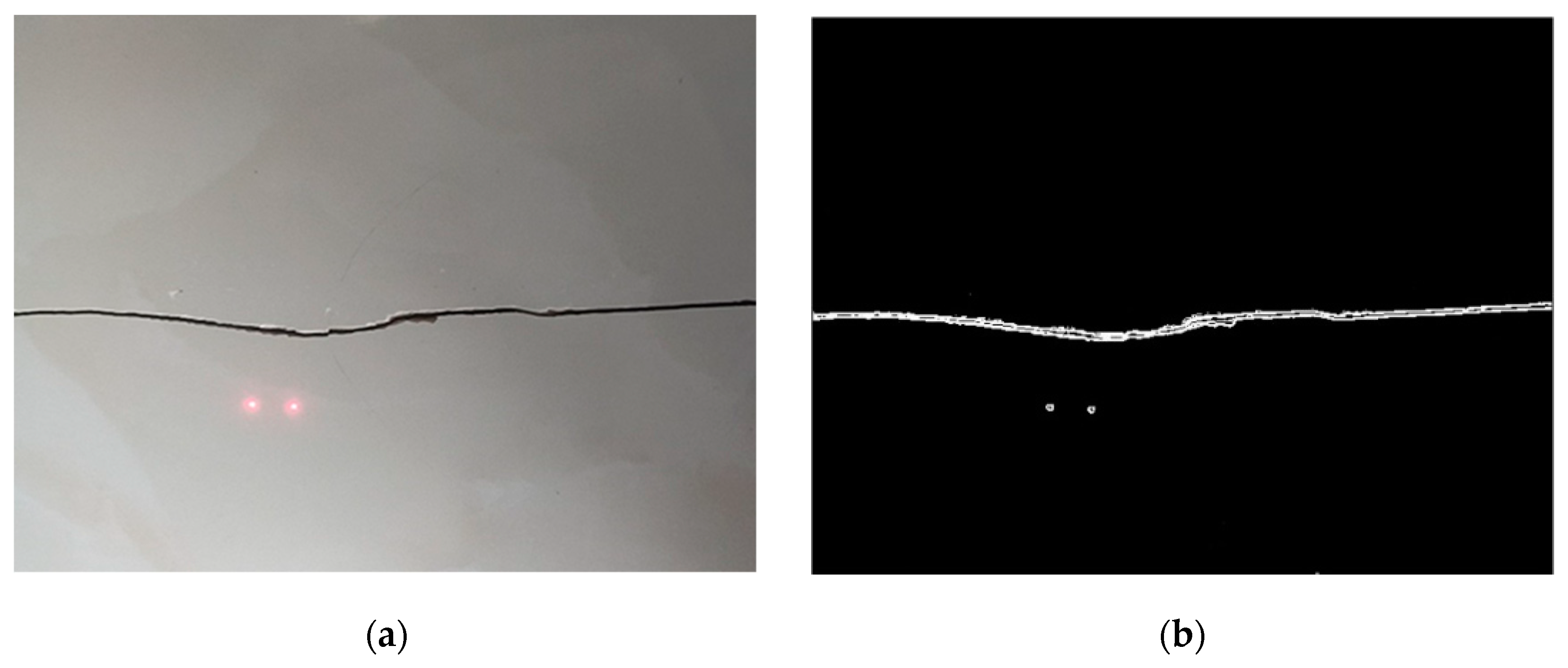

3.1. Image Processing

- (1) Preliminary de-noising

- (2) Local adaptive edge extraction

- (3) Deep de-noising

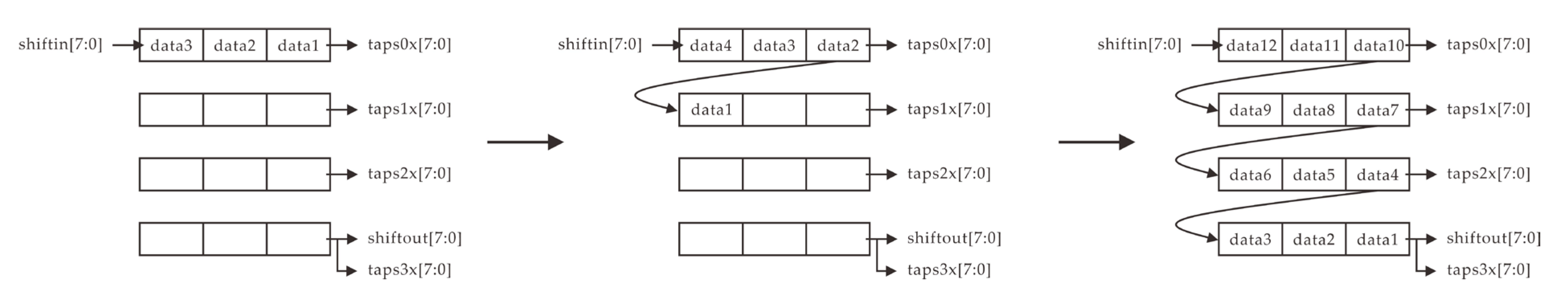

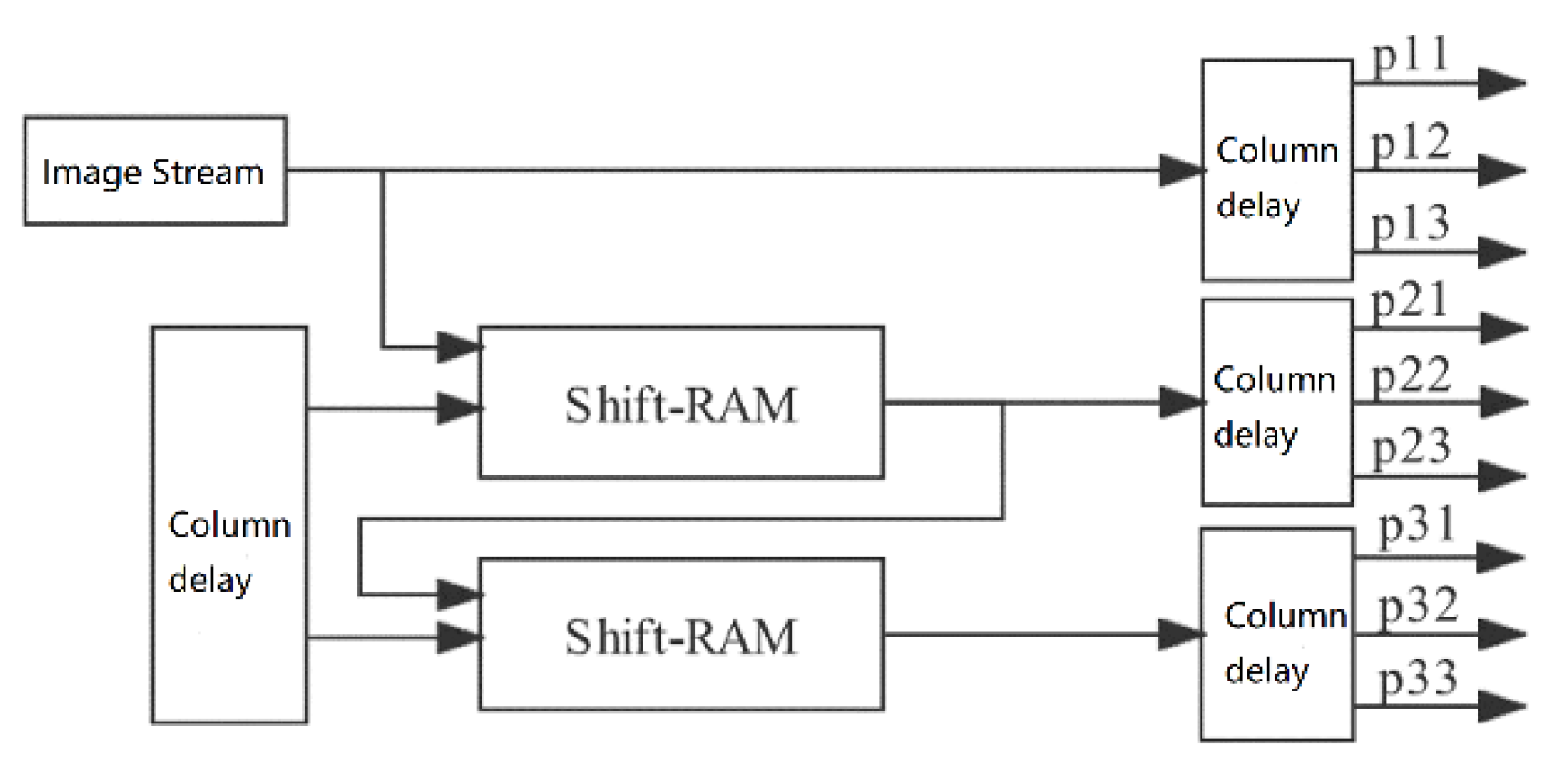

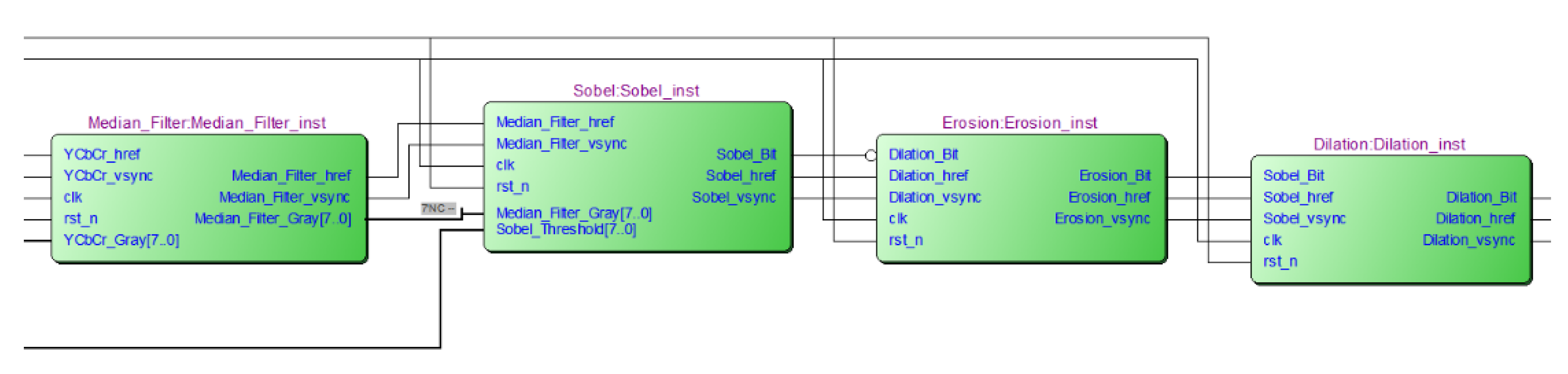

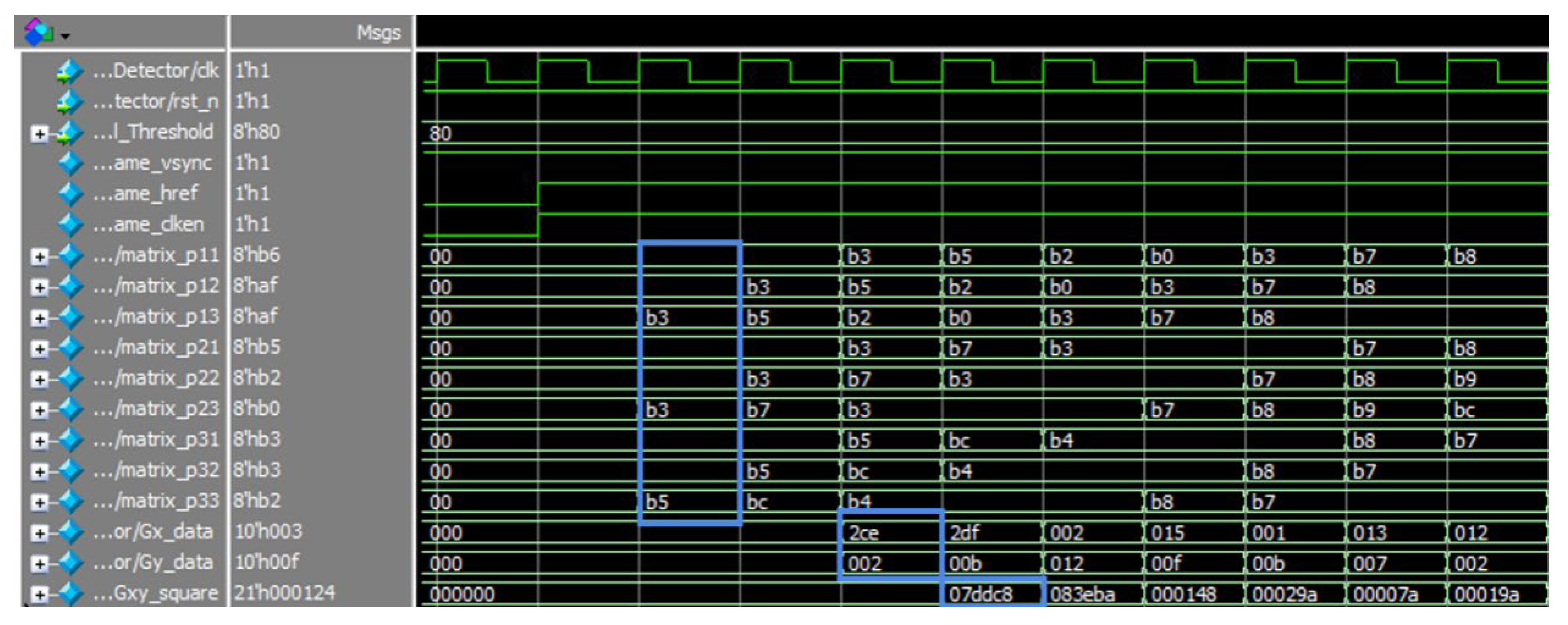

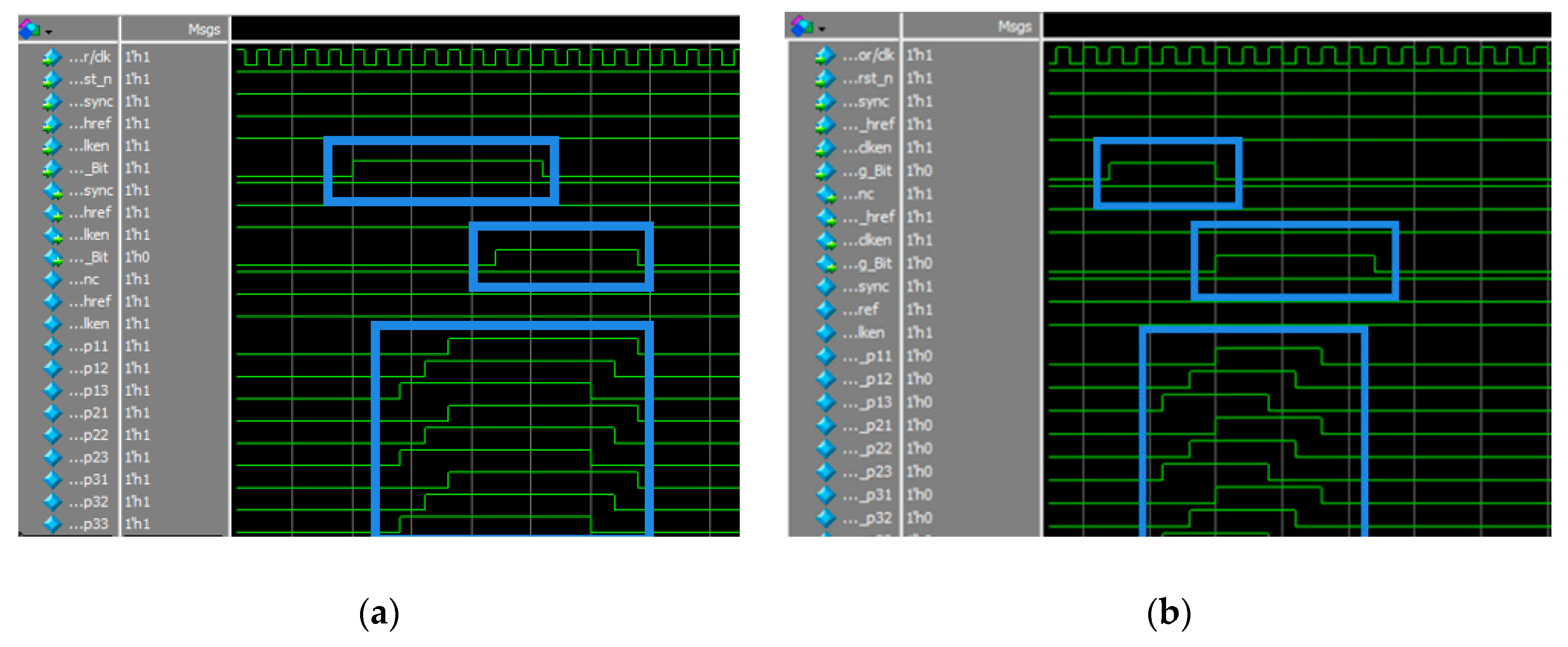

3.2. FPGA Hardware Implementation

4. FPGA-Based Spot Localization and Centroid Extraction

4.1. Spot Localization and Centroid Extraction

- (1) Scan the spot edge image pixel by pixel, row by row. When an unmarked valid pixel is reached and the markers of all eight neighboring pixels are 0, assign that pixel a new marker. Continue scanning the row to the right; if the pixel immediately to the right is also an unmarked valid pixel, assign it the same marker.

- (2) While the current row is scanned, the next row is marked as well: each valid pixel in the next row that lies in the neighborhood of a marked pixel receives the same marker number as that pixel. Scanning row by row in this way gives adjacent pixels the same marker.

- (3) During the scan, storage space is allocated to hold the coordinate data and address information of each connected domain, and running statistics are kept to compute the number of points and the centroid of each connected domain.

- (4) When the pixels in the lower neighborhood of the marked pixels have all been marked into the same connected domain, the marking of that connected domain is considered complete, and the statistical results and centroid information are output.
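
The steps above amount to connected-component labeling with running centroid statistics. The following is a minimal software sketch under stated assumptions: the edge image is a binary list of rows, connectivity is 8-neighborhood, and the labeling uses a conventional two-pass scan with a union-find table rather than the row-pipelined hardware marking described in the list. The names `label_spots`, `img`, and `find` are illustrative and do not come from the paper.

```python
from typing import Dict, List, Tuple


def label_spots(img: List[List[int]]) -> Dict[int, Tuple[int, float, float]]:
    """Label 8-connected regions; return {label: (pixel_count, cx, cy)}."""
    h, w = len(img), len(img[0])
    labels = [[0] * w for _ in range(h)]
    parent = [0]  # union-find table; index 0 is reserved for background

    def find(a: int) -> int:
        while parent[a] != a:
            parent[a] = parent[parent[a]]  # path halving
            a = parent[a]
        return a

    # Pass 1: raster scan; inherit a marker from already-visited neighbors
    # (left, upper-left, upper, upper-right) or open a new marker.
    for y in range(h):
        for x in range(w):
            if not img[y][x]:
                continue
            roots = []
            for dy, dx in ((0, -1), (-1, -1), (-1, 0), (-1, 1)):
                ny, nx = y + dy, x + dx
                if 0 <= ny and 0 <= nx < w and labels[ny][nx]:
                    roots.append(find(labels[ny][nx]))
            if not roots:
                parent.append(len(parent))
                labels[y][x] = len(parent) - 1
            else:
                root = min(roots)
                labels[y][x] = root
                for r in roots:
                    parent[r] = root  # merge markers that touch here

    # Pass 2: accumulate point count and coordinate sums per region.
    sums: Dict[int, Tuple[int, int, int]] = {}
    for y in range(h):
        for x in range(w):
            if labels[y][x]:
                r = find(labels[y][x])
                n, sx, sy = sums.get(r, (0, 0, 0))
                sums[r] = (n + 1, sx + x, sy + y)
    return {r: (n, sx / n, sy / n) for r, (n, sx, sy) in sums.items()}


# Hypothetical 4x7 test image containing two separate spots.
demo = [
    [0, 1, 1, 0, 0, 0, 0],
    [0, 1, 1, 0, 0, 1, 0],
    [0, 0, 0, 0, 1, 1, 1],
    [0, 0, 0, 0, 0, 1, 0],
]
for label, (count, cx, cy) in label_spots(demo).items():
    print(label, count, cx, cy)
```

A hardware version would typically keep only one or two row buffers and update the sums while streaming, which is closer to what steps (2) and (3) describe; the two-pass form is used here only because it is easier to check by hand.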

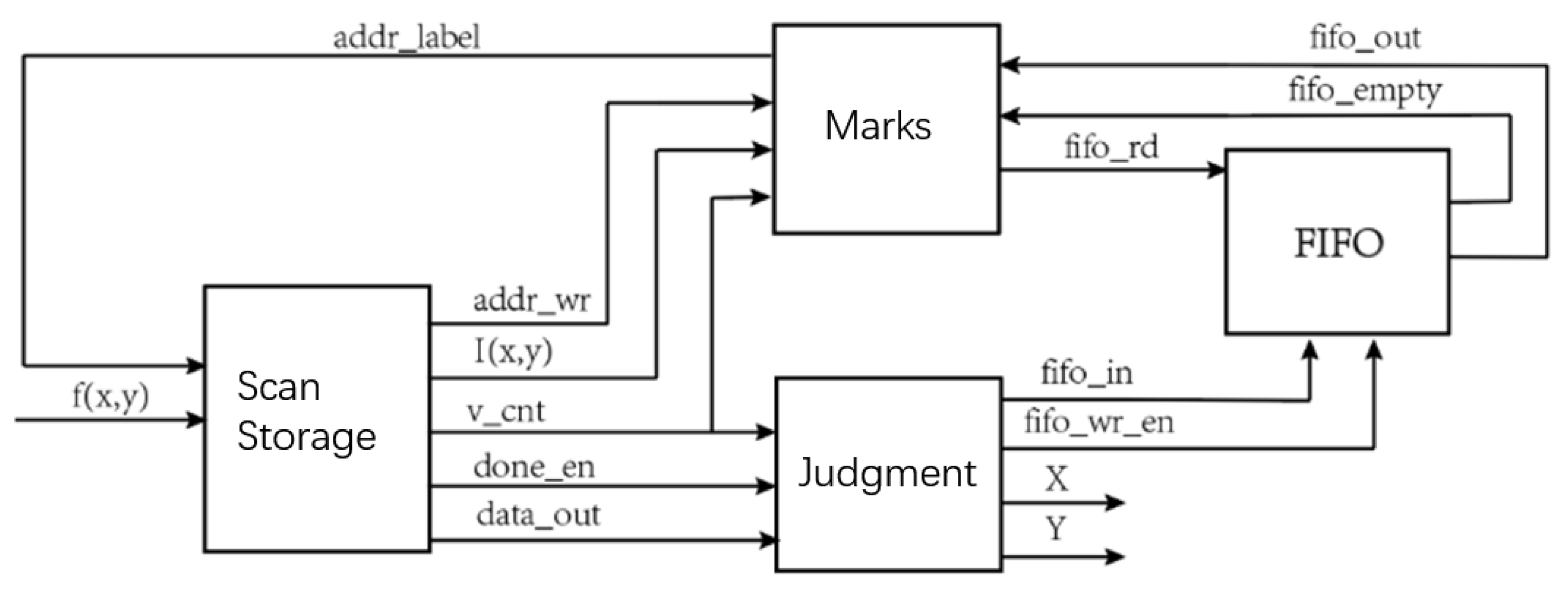

4.2. FPGA Hardware Implementation

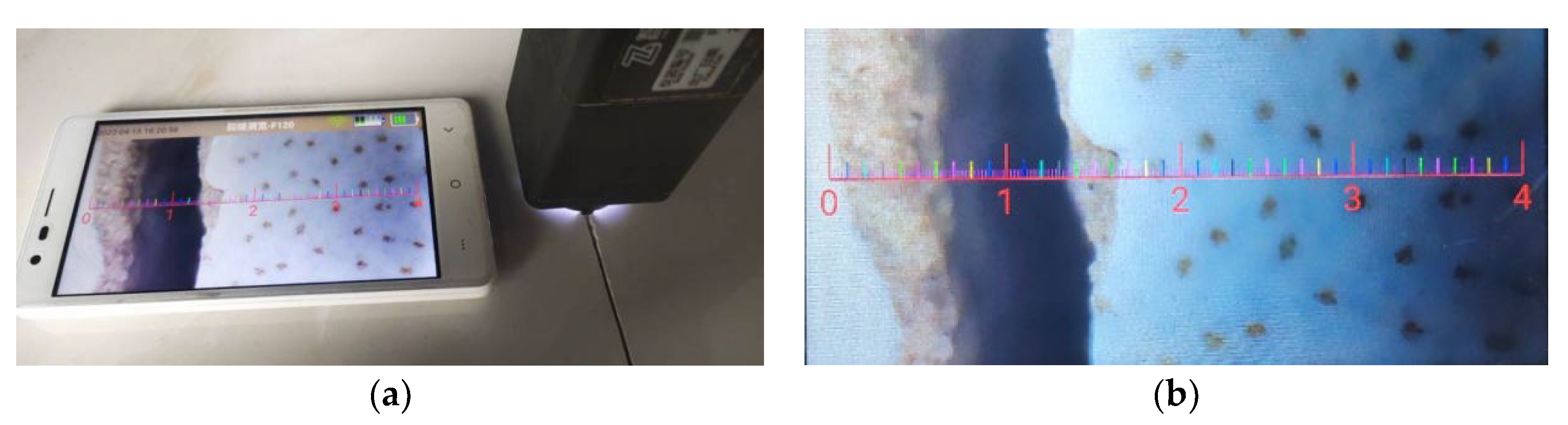

5. Experimental Verification

5.1. Accuracy Verification

5.2. Resource Consumption and Real-Time Analysis

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| | Left Coordinate | Right Coordinate | Barycenter Distance (Pixels) |
|---|---|---|---|
| FPGA simulation | (203, 335) | (241, 336) | 38.013 |
| MATLAB | (205, 336) | (242, 338) | 37.054 |
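
The barycenter distances in the table can be checked as the Euclidean distance between the left and right spot coordinates. A short sketch, assuming the coordinates are (x, y) pixel positions; the function name `centroid_distance` is illustrative.

```python
import math


def centroid_distance(left, right):
    """Euclidean distance in pixels between two (x, y) centroids."""
    return math.hypot(right[0] - left[0], right[1] - left[1])


fpga = centroid_distance((203, 335), (241, 336))
matlab = centroid_distance((205, 336), (242, 338))
print(f"FPGA simulation: {fpga:.3f} px, MATLAB: {matlab:.3f} px")
```

Both tabulated values are reproduced to three decimal places by this calculation.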

| Laser Angle | 90° | 60° | 45° |
|---|---|---|---|
| Actual width | 1.62 mm | 1.62 mm | 1.62 mm |
| Calculated width | 1.58 mm | 1.50 mm | 1.42 mm |
| Error | 2.47% | 7.4% | 12.3% |
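
The error row is consistent with the relative error of the calculated width against the actual width. A minimal check, assuming that definition; `relative_error_pct` is an illustrative name.

```python
def relative_error_pct(actual_mm: float, calculated_mm: float) -> float:
    """Relative error of the calculated width, in percent of the actual width."""
    return abs(actual_mm - calculated_mm) / actual_mm * 100.0


ACTUAL_MM = 1.62
for angle_deg, calculated_mm in ((90, 1.58), (60, 1.50), (45, 1.42)):
    err = relative_error_pct(ACTUAL_MM, calculated_mm)
    print(f"{angle_deg} deg: {err:.2f} %")
```

The results agree with the table to its printed precision, and the error grows as the laser angle moves away from 90°.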

| Logic Utilization | Used | Available | Utilization |
|---|---|---|---|
| Number of slice registers | 4275 | 54,576 | 7% |
| Number of slice LUTs | 4497 | 27,288 | 16% |
| Number of fully used LUT-FF pairs | 1652 | 7120 | 23% |
| Number of bonded IOBs | 60 | 316 | 18% |
| Number of block RAM/FIFO | 22 | 116 | 18% |
| Number of BUFG/BUFGCTRLs | 2 | 16 | 12% |
| Number of DSP48A1s | 2 | 58 | 3% |

| Platform | Image Resolution | Time Spent Per Frame | Frame Rate |
|---|---|---|---|
| FPGA | 640 × 480 | 54 ms | 18.52 fps |
| PC | 640 × 480 | 6570 ms | 0.15 fps |
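
The frame rates follow directly from the per-frame times, and their ratio gives the speedup of the FPGA over the PC. A small sketch of that arithmetic; the function names are illustrative.

```python
def frame_rate(ms_per_frame: float) -> float:
    """Frames per second for a given processing time per frame in milliseconds."""
    return 1000.0 / ms_per_frame


def speedup(slow_ms: float, fast_ms: float) -> float:
    """How many times faster the fast platform processes one frame."""
    return slow_ms / fast_ms


print(f"FPGA: {frame_rate(54):.2f} fps")
print(f"PC:   {frame_rate(6570):.2f} fps")
print(f"Speedup: {speedup(6570, 54):.1f}x")
```

With the tabulated times, the FPGA processes a 640 × 480 frame roughly 122 times faster than the PC.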

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yuan, M.; Fang, Z.; Xiao, P.; Tong, R.; Zhang, M.; Huang, Y. An FPGA-Based Laser Virtual Scale Method for Structural Crack Measurement. Buildings 2023, 13, 261. https://doi.org/10.3390/buildings13010261
