1. Introduction

We are in the midst of a digital revolution. The recent pandemic has brought to the fore a distinct set of challenges for the design and construction community in how we envision, iterate, and deliver the built environment. Given the rate of change in digital practices, there is a tension between rapid sensemaking in the here–and–now, with new language emerging as digital technologies become pervasive, and the need to focus on the uncertainties and ‘grand challenges’ that we face and the long-term consequences of buildings and infrastructure. We argue that this tension is important in considering the next generation of virtual and mixed reality in the built environment.

As digital technologies become pervasive, references to being amid a ‘fourth industrial revolution’ abound. This phrase, first coined by Klaus Schwab, founder and chairman of the World Economic Forum, describes the current era as “a fusion of technologies that is blurring the lines between the physical, digital, and biological spheres, collectively referred to as cyber-physical systems” [1]. Moreover, we are witnessing ongoing sensemaking processes through a rapidly changing digital vernacular, where terms such as ‘digitization’, ‘digitalization’ and ‘automation’, largely borrowed from the “smart” manufacturing domain [2], illustrate the pervasiveness and dominance of digital technologies across architecture, engineering, and construction practices. A convergence of material science, robotics, 3D printing, sensors, artificial intelligence and other technologies is ushering in a new industrial age that is changing nearly all facets of society, including the built environment sector. These new digital capabilities, which connect physical environments with digital ecosystems, have begun to transform design and project delivery, reengineer supply chains, increase offsite manufacturing, and change design practices to deliver not only physical but also digital assets, increasing the complexity of our otherwise traditional ways of working. The term ‘digital twin’ has emerged to describe an increased level of interaction between the operation and use of the physical environment and its digital copy, which can be updated with real-time information from sensors and scans [3]. The ease with which large swaths of indiscriminate data can be produced, and the proliferation of sources of data about how the physical environment is used, including sensors and mobile devices, have given rise to the concepts of the “Internet of Things” and “Big Data” [4]. These data are processed using big data analytics, machine learning, and artificial intelligence in an attempt to understand, derive, and create useful knowledge from them. All these concepts and initiatives raise questions about how to make sense of ever-growing, raw, and complex data sets and how to use them to make informed decisions about the future of the built environment.

At the same time, built environment practitioners are increasingly challenged to consider the long-term consequences of any intervention in the physical environment, especially in response to uncertainties arising from climate change and pandemics [5]. Moreover, the pursuit of zero-carbon and high-performance buildings has been further complicated by competing goals and priorities [6] and challenged by the view that building performance is realized over time rather than predetermined [7]. This has consequences for how digital technologies are used, demanding new and different kinds of data and processes and posing new challenges to the construction informatics research community and to practitioners. The growing availability of data and of a wide range of simulation and visualization technologies has begun to present opportunities to inform decisions about the use of existing assets and the design of new ones, though not without challenges. Data increasingly made available from sensors, users, semantically rich building information models (BIM), or mobile devices, for example, can be managed through data mining, machine learning, or artificial intelligence, which automate aspects of interrogating large datasets to detect patterns. Yet these tend to be perceived as “black box” approaches [8] because they do not automatically provide insight into the data. In such cases, we can leverage a set of technologies that primarily serve as communication platforms, engaging a broader group of stakeholders in conversations about the priorities and consequences of design options by visualizing, probing, and manipulating data more dynamically.

Virtual reality (VR) technologies have long been seen to offer the potential to engage diverse groups of built environment users in intuitive and interactive explorations of spatial and contextual data, where the technology attributes of real-time feedback, spatial and visual immersion, stereoscopy, and a wide field of view are particularly beneficial for understanding visual information [9,10,11]. In response to many environmental challenges, the design and engineering disciplines have been tasked with becoming more systematic and interdisciplinary in their approach [12], and VR techniques are seen as distinct communication platforms that are well positioned to engage users in conversations both in person and virtually. The ubiquity of commercially available mobile and digital devices offers immense opportunities for broad user groups to engage with data in various ways. For example, the ubiquity and growing processing power of mobile phones offer users a range of ways to generate information or augment settings virtually, such as through 3D scanning applications and geo-tagged photos and videos. At the same time, while VR technologies have long served as platforms for interdisciplinary teams to make decisions about the built environment, our understanding of how to adequately mobilize these technologies across disciplinary boundaries for truly sustainable design remains fragmented.

In this conceptual paper, we build on our prior research trajectory [3,13] and our review and synthesis of the wider literature to characterize the role that virtual reality could play in visualizing and realizing preferred futures and in addressing the grand challenges of our uncertain and changing world. We are sensitive to the difficulties of making preferred futures online, where work is remote from the associated places, people, and materials and is vulnerable to excluding relevant experience [14]. Our contribution is to articulate how these technologies can be used to span disciplinary boundaries and to integrate and make sense of diverse data, in order to shape the design and understanding of a more sustainable world. We explore a set of underlying assumptions and considerations, such as technology configurations, the authority of the data, and VR models and procedures that enable constructive questioning. We ground this discussion of VR technologies in examples of use scenarios, tasks, and user experiences. This approach allows us to understand how both virtual and augmented reality applications can alter user perceptions and the understanding of digital information, which can in turn affect the design and operation of built environment projects. We begin by mapping VR technology configurations across various modes of working and then describe applications of VR technologies for informing future changes in the built environment. In doing so, we argue that the use of VR remains largely discipline-specific, with projects seen as singular entities rather than as interventions within a larger and more complex system.

2. Digital Landscape and Changing Practices

The urgency of climate change and the recent pandemic have raised many questions about what we may want the future of the built environment to look like and the process through which that future can be accomplished. At a minimum, the concept of “sustainability” permeates conversations behind various initiatives to reduce carbon emissions and increase energy performance, although it is argued that responding to the climate challenge is far more complex: it is a “super-wicked” problem that defies simplistic technological solutions and to which responses often prioritize short-term goals [7,15]. In response to these initiatives, the built environment disciplines have developed a number of strategies to improve information management processes by producing more structured and purposeful digital information with a long shelf life.

This shift is profoundly influencing the way that public and private owners and operators conceive of infrastructure delivery projects, for example. It has led to a greater focus on outcomes rather than outputs, and to a broader digital context within which project data can be situated, such as ‘smart cities’. One way to inform decisions about how to design for future uses is to make sense of how we inhabit our built environment now. For example, while “big data” may be a buzzword, the term encapsulates the harvesting of real-time, user-generated data from mobile devices and sensors to provide clues for detecting traffic patterns, simulating and predicting future needs, and planning design interventions. Technologies have long allowed us to create these sorts of simulations, but the idea now is to further automate these processes by making more direct links to readily available sources of data.

At the same time, we are acutely aware that technological development and increasingly available data often outpace our understanding of how to use them adequately to achieve their much-proclaimed benefits. Before becoming useful information, much of the data available from users or sensors needs to be filtered, checked, or validated. Furthermore, data filtering and use are driven by the purposes of their primary users, further reflecting an inherent bias. Hence, data, even quantitative data, are often subjective [16] and thus open to interpretation depending on the goals of their users. Moreover, this increasing automation is well suited to data pattern recognition and routine tasks but, as Brynjolfsson and McAfee [17] point out, not to issues of perception, creative ability, and problem solving, which remain unmatched as distinctly human activities. Planning and design approaches, especially at broad urban, social, and environmental scales, involve consideration of a range of factors, uncertainties, and conflicting goals, all largely part of a decision-making process of which automation is not yet capable [18]. Hence, unlike the ‘calculative rationality’ of data processing [19] (p. 120), human judgement is driven by purposefulness and ethics and is therefore accountable. In other words, while we are witnessing major strides in sensor-driven smart technologies such as autonomous driving, the environmental context in which such technologies operate is still shaped by human judgement. Thus, human agency in shaping the built environment can and should be further promoted, with technology encouraging people to explore alternative visions and understand the consequences of possible, probable, and preferable future scenarios [20]. The primary problem, then, is not one of technology availability but of realizing the potential and value that these technologies can bring to built environment practice [3].

Therefore, we can discern a tension between short-term sensemaking of data aimed at understanding how we use the built environment and long-term, future-oriented grand challenges that require integrating processes, data, and stakeholders across scales to inform design and intervention strategies. Addressing these grand challenges has prompted calls to move away from project-bound methodologies toward those in which models span organizational and jurisdictional units [21]. Rabeneck [6] argues that any understanding of (building) performance demands a systems perspective to better articulate needs within a given context. For example, a systems approach to designing and delivering large infrastructure projects is seen as a step toward understanding system complexity by recognizing the interdependencies and consequences of various proposals [21]. In any case, it is becoming increasingly difficult to sustain traditional compartmentalized practices, and it is becoming imperative to promote conversations between the allied built environment disciplines to avoid single-issue dominance, which could lead to unintended consequences furthered by partially informed policies [5].

Despite major strides in data processing and simulation, designing well-performing buildings still demonstrates how human experiences and behaviors do not lend themselves easily to this sort of automation. For example, designing for building performance relies on anticipating and understanding the effects of occupant behavior on performance, or on devising strategies to promote pro-environmental behavior in buildings [22]. In such cases, we tend to look to technologies to engage participants and stakeholders in conversations to share knowledge that may otherwise remain elusive to designers. During operation, various digital information can be captured and visualized to inform preventative, rather than the more typical reactive, maintenance and retrofit. With the advent of building information modeling (BIM) approaches, three-dimensional models have become central to collaborative practices of sharing and reviewing design information, driving a more cyclical and iterative, rather than linear, approach to design development and production. As such, 3D models coupled with user-friendly technologies have offered clients and prospective users opportunities to participate in the design process.

In such participatory instances, visualization technologies such as virtual reality (VR) can serve as powerful communication platforms that present design as something tangible and shareable with clients and users. Virtual mockups, a term used to describe representations displayed in VR, can be seen as extensions of a design paradigm of active, mutual engagement in which designers, clients, and users work in close coordination [23]. In this sense, virtual reality can be particularly useful in revealing numerous dependencies and tradeoffs by democratizing design evaluation across different perspectives and methods. While one approach to evaluating a design may be through simulations of energy consumption, lighting levels, cost, or ease of assembly, evaluating a design for the human aspect may consider individual viewpoints, the accessibility of spaces or maintenance equipment, ergonomics, aesthetics, or indoor environmental comfort levels. The appeal of this kind of engagement is a first-person experience for users, which in turn gives designers a more empathetic lens through which to evaluate their work. Yet VR as a communication platform may not always yield desirable outcomes, and in certain instances may even misrepresent the intended “reality”. For this reason, we map the broad range of considerations for choosing appropriate types of VR experiences, to inform answers to questions such as: Why use VR instead of other technology platforms? How do we design effective VR experiences for interdisciplinary visualization tasks? Who needs to be involved in implementation to achieve the benefits and ensure a worthwhile investment?

3. Virtual Reality as an Interactive, Spatial and Social Experience

Virtual reality systems are broadly viewed as computer-generated environments that are displayed using special hardware to give users compelling, intuitively interactive, and immersive experiences. Since Jaron Lanier coined the term in the 1980s, virtual reality has matured to offer high-performance yet relatively affordable consumer market hardware components, such as head-mounted displays, providing opportunities to a broad range of built environment disciplines to simulate projects while also making connections with other technology domains, such as machine learning and robotics. These interactive visualization technologies have extended the standard design and information management applications used in the design and construction fields in that they act primarily as communication media that engage users in intuitively navigating, testing, and reviewing information.

Rooted in visual communication science, VR is primarily seen as a visualization tool [24] and as such can help present complex information more clearly than text or other media alone [25]. Because built environment practice is highly interdisciplinary and inherently spatial, it seems naturally suited to the use of VR to support interactions between users and simulated reality. Advancements in building information modeling (BIM) approaches [26], as well as data capture methods such as laser scanning, sensors, and photogrammetry, have allowed professionals to generate a wealth of information about built environment projects at spatial scales ranging from building components to urban contexts. However, applications of VR often reveal a tension between the potency of the medium in supporting users to visualize information and the elusiveness of straightforward VR solutions that consistently realize these benefits. That built environment practices are multidisciplinary yet fragmented further adds to the complexity of addressing this tension, because each discipline ends up developing its own methodologies for implementing VR, which Portman et al. [24] describe as “reinventing the wheel”, without cross-pollination of knowledge about what works, how, or when.

Because of their distinctive features, VR applications may not offer the most effective experience in all cases. There have been notable attempts at developing frameworks that systematically capture a broad range of factors to ensure both effective VR user experiences and consistent VR implementations. Vergara et al. [27] considered realism and interaction as key considerations for developing VR experiences in engineering education, but placed the specific objectives first, as the step that determines the usefulness of VR before proceeding with technical design and user evaluation. Mastrolembo Ventura et al. [28] looked more broadly at both the technological and procedural challenges of implementing VR in design reviews.

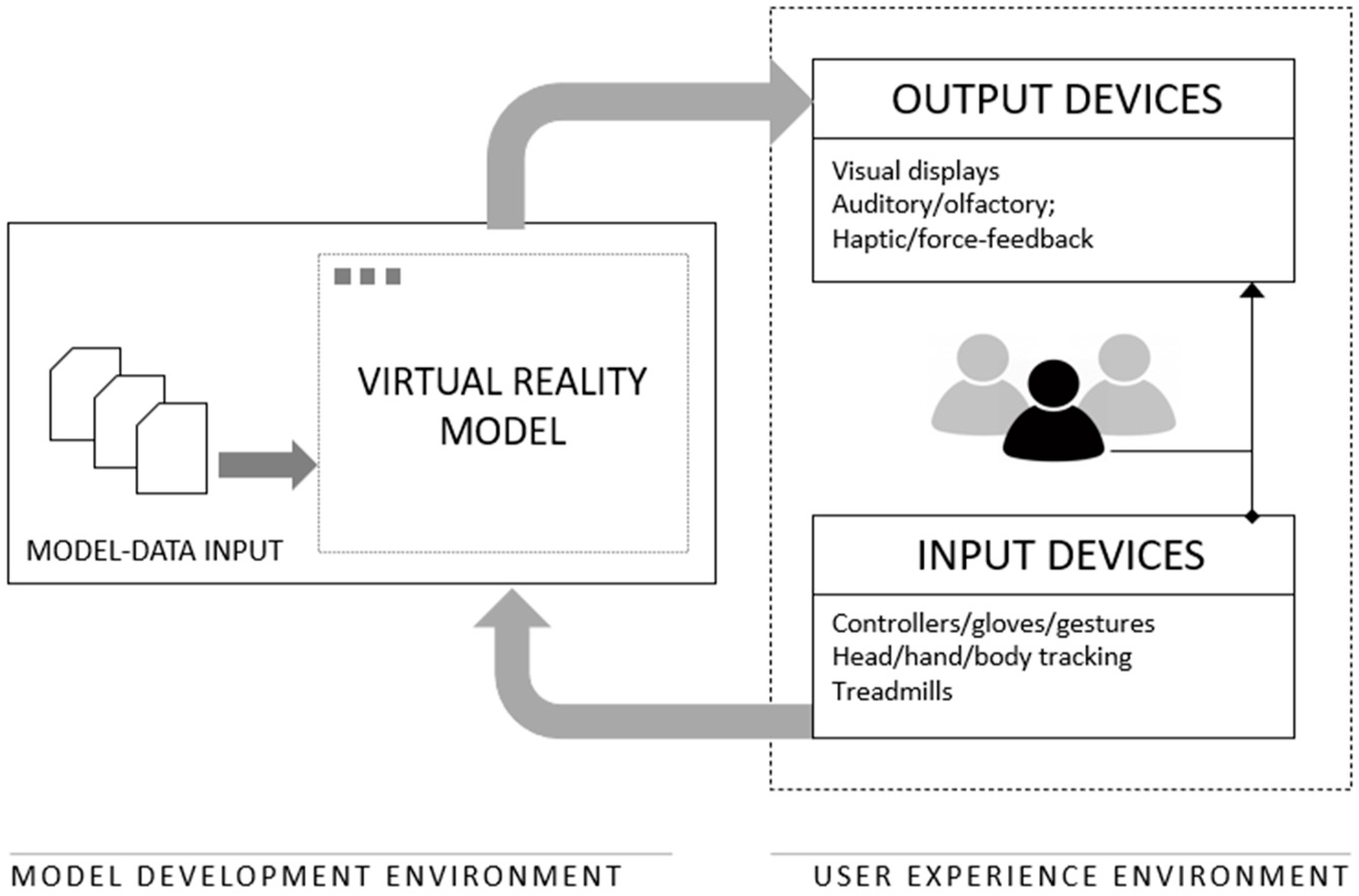

If we take a more integrative approach to VR that centers on user experience, we can discern at least three main components (Figure 1) that shape the user experience: (1) the content or representation, (2) the virtual reality medium as a configuration of input and output devices, and (3) the intended users with their goals and tasks [3]. The choice of VR configuration therefore largely depends on the context of its application and on understanding the nature of the tasks at hand, including identifying user groups, anticipating what outcomes they require, and discerning which content and perceptual characteristics of the system are best placed to support those outcomes.

3.1. Content Representation

The name virtual reality has always conveyed an aim of verisimilitude, or the “realness” of the virtual experience, although the synthetic nature of this “realness” prompted Robinette to qualify the VR moniker as a “cute little oxymoron” [29]. Of course, virtual reality cannot be naïvely conceived of as reality, as there are many ways in which it masks or distorts physical reality. Realness is most often associated with the photorealistic representation of content, although when we discuss the interactive aspects of the technology, it can also extend to experiential congruence [30]. In the context of built environment practice, we use the term representation to refer to abstractions of real objects or concepts, from the most abstract diagrams and drawings to more photorealistic images and models. The appropriateness of each depends on the purpose, the users, and the project development stage. For example, design marketing and approvals for environmental planning applications increasingly depend on providing (photo)realistic representations [24,31], although in such instances representations can also carry legal consequences, especially in cases of disparity between the simulated and the delivered “reality” [32].

What distinguishes the use of VR in built environment disciplines from its use in healthcare training or flight simulation, for example, is that VR does not only aim to create representational equivalents of real-world scenarios but often goes beyond this to simulate imagined, nonexistent, or “inaccessible” realities [24]. For example, photorealistic representations are generally appropriate for communicating the appearance and aesthetic of a design or its wayfinding and operational aspects. However, even in such cases, unless the content is captured by means of photogrammetry or scans of existing settings, simulated cityscapes and modeled urban contexts often omit people, traffic, vegetation, and myriad other aspects that are experienced in real settings.

Alternatively, in the initial design stages, abstract representations allow practitioners greater flexibility and latitude in exploring design challenges through sketches or diagrams that are fluid and easy to iterate. In other instances, abstract representations such as maps and plans can be used to represent large or complex spatial information, supporting users in tasks such as strategic planning or understanding composition and spatial relationships. In such cases, abstract representations may complement realistic and dynamic representations to support navigation and orientation within 3D models.

In all applications, whether we refer to abstract or realistic representations, certain choices are made about which salient features to include. Though these choices should be determined by the context of the application, they may inadvertently result from the creator’s professional bias in prioritizing certain aspects over others, the limitations of the technology used at any given time, or the complexity of the concepts to be represented and simulated. For example, in landscape architecture, visualization technology has been tested by the degree to which real landscapes can be validly reproduced in VR [24,33], especially aspects such as realistic vegetation, prompting efforts to represent the movement of grass in prairie landscapes realistically [34]. Questions have been raised not only about how realistic visualizations should be for given applications but also about representational accuracy and simulation validity [35], which tend to increase in complexity across spatial and temporal scales. For environmental planning, Orland et al. [33] raised the importance of showing changing environmental patterns and visualizing the consequences of land planning strategies. The integration of GIS and VR has allowed changes over time to be visualized at the landscape scale, though vegetation detail and accuracy depend on whether scenic visualizations are at the macro- or microscale.

Environmental planning brings another important issue to the forefront: the difficulty of abstracting and representing complex systems in a reliable and valid manner. Barbalios et al. [36] illustrate this with the example of teaching the consequences of different resource-sharing strategies, for which ecosystem modeling approaches are often too sophisticated owing to their complexity and the interdependence of their components. The timescale of environmental change and the lag between harmful actions and their consequences also make environmental education difficult, because the lack of immediate or first-hand experience tends to lead to uncertainty about, or dismissal of, climate change [37]. Similar challenges may affect decisions made in professional settings.

For these reasons, content considerations largely depend on the context of application, and the salient features of the representation should be aimed at those users who are most likely to have a say in decisions with longer-term consequences. The salient features of the VR technology will then be better positioned to amplify psychological proximity to imagined future scenarios and to promote communication between domain experts, policy makers, and non-experts.

3.2. Technology Configuration

As external expressions of abstract concepts, visual representations or models can be developed for aesthetic value or pragmatic objectives [38]. Still, knowledge of what constitutes effective visualization often lags behind rapid technological development [33]. Here, experiencing visualizations is inseparable from the technology media through which users view information on various displays or interact with it using input devices. As a visualization medium, virtual reality ideally provides users with the illusion of an unmediated experience in which they can focus on the content of the environment and not the VR interface itself [3]. In recent decades, researchers have studied user behavior in virtual environments to learn about human perception and cognition (e.g., [39]) and social interaction [40], suggesting a complex relationship between people and their environments. As a consequence, even the measurement and definition of successful VR environments have often been inconsistent and problematic, owing to implementations that ignore perceptual and human issues [41] and to a lack of understanding of how to choose and use VR systems to augment communication and convey ideas [38]. Therefore, we take the approach that VR systems are not a monolithic concept: users experience VR models through custom interfaces and combinations of input and output devices, which together imply a more flexible approach to designing how information is visualized and interacted with. This approach allows us to understand how virtual reality applications can change user perceptions and influence the understanding of digital information depending on system characteristics and content attributes.

Our experience of the world comprises a complex interplay of perceptual and sensory inputs in which depth perception, movement, smell, and sound all play important roles. Since Morton Heilig’s Sensorama, his 1950s attempt to provide users with the experience of a ride through an urban environment using video, movement, sound, and smell, the media tradition behind VR has promoted the aim of replicating a multisensory experience of the real world. However, for built environment applications, relatively few studies have used multisensory VR to explore perceptions and behaviors in designed spaces. For example, Tagliabue et al. [42] explored the role of smell in how users perceive thermal comfort, but found that lighting and color have far more influence. The combined audio-visual influence on human perception of the environment has also long been known [43], as sounds can evoke powerful emotions and thus enhance the perception of visual information. Studies that have explored the saliency of sound for evaluating noise pollution in urban settings [43,44] or for identifying risks on construction sites [45] illustrate how sound and visual stimuli can affect each other, but also demonstrate the complexity of realistically simulating the randomness of aural environments. Overall, the majority of observed VR applications in the built environment rely primarily on the visual, and the use of haptics, sound, and particularly smell remains largely underexplored.

Though the prevalence of single-sense applications may prompt criticism of VR for not providing a comprehensive experience, studies have offered evidence of a strong visual bias over auditory and proprioceptive information about spatial location [46] and in attention and information transfer [47]. Except in cases of visual impairment, vision has higher acuity and has therefore dominated the development of visual display configurations, which range in type, size, and number and partially or fully envelop users in virtual information. Given that visual displays largely define user experience, they also serve as a way of categorizing VR systems, based on their coverage of the field of view, into non-immersive, semi-immersive, and fully immersive systems [3]. Fully immersive displays with stereoscopic capabilities, such as head-mounted displays (HMDs) or large projected room-like systems that cover the user’s field of view, aim to afford users a sense of presence, or moments when the medium “becomes transparent to the user” [30] (p. 47), deemed one of the defining features of a VR experience. The pursuit of presence in VR is rooted in psychology and neuroscience, which have linked presence to better learning, stronger brain and body responses, and cognition [48,49,50]. Large displays, wide fields of view, and user control over virtual environments are all said to contribute to a sense of presence [51,52].

For built environment tasks, invoking a sense of presence may not always be a priority, or even feasible, when simulations have no real-world experiential analogue, as in scientific data simulation or analysis [30]. At the same time, a sense of presence has been valuable in raising emotional responses and changing attitudes when teaching about the effects of climate change and environmental issues [53,54]. In these cases, fully immersive VR configurations such as tracked head-mounted displays or room-like VR are the most effective in providing viewer-centric embodied experiences. These VR uses also tend to be aimed at individual users to promote emotional responses and motivation, but single-user VR configurations such as tracked HMDs are also well placed for applications in which users need to evaluate spatial information from an egocentric (or viewer-centric) perspective. Examples include estimating the accessibility of building equipment in confined spaces and the relative sizes of objects [13], testing the operational sequence in construction equipment training scenarios [55], understanding human behaviors in various scenarios such as fire evacuation [56], and modifying lighting levels for occupants to determine their perceived comfort levels [22]. One aspect that differentiates HMD configurations from room-like VR such as the CAVE, though, is the sense of disembodiment caused by users’ inability to see their own body in the virtual world, which can further induce discomfort or motion sickness.

However, in built environment practice, VR applications are used mainly for design reviews or construction sequence reviews, which typically involve groups of practitioners working together to discuss and evaluate project information. In such cases, fully immersive VR with tracking configured for single users is not appropriate, and semi-immersive solutions that can accommodate larger groups of users are preferred. Multiuser VR configurations, or room-like collaborative VR, may not offer fully immersive experiences but can afford a degree of both physical and social presence (Figure 2). Social presence, in which users mutually engage in both verbal and nonverbal communication, has been deemed key to establishing common ground and interpersonal trust in collaborative problem-solving [57]. Because this type of trust tends to build through social cues and gestures, research on remote collaborative settings using VR is investigating the effect of recreating facial expressions and gestures through avatars [58,59]. Even in co-located collaboration, stereoscopy, for example, can limit this type of communication, as wearing glasses tends to restrict users’ view to the display, affecting conversation and thus collaboration [13,60].

4. VR Applications: Users, Goals, and Activities

The considerations of representations, content characteristics, and technology configurations ultimately depend on understanding who the intended users are, as well as their goals and tasks, in order to create value propositions for using VR and to develop effective VR experiences. This knowledge of tasks, goals, and activities typically comes from technology usability evaluations and from ethnographic studies of how users interact with information, technology, and other participants in digitally mediated settings. For example, studies of human-computer interaction use scenario-based approaches [61] as heuristics that can help technology developers envision and understand user, information, and system requirements.

Built environment practice is characterized by a broad range of actors with varied roles and motivations working across scales and system complexities (Figure 3). The drive toward lifecycle systems-thinking approaches requires the involvement of domain experts and professionals such as planners, designers, engineers, builders, and operators, as well as the users, citizens, and occupants affected by design decisions. For these reasons, built environment design is rarely a solo activity; instead, it involves users and professionals in collaborative processes that rely on a shared understanding of the intent of a given design. Indeed, post-occupancy evaluation studies reveal that users tend to evaluate the built environment differently from designers [62]. Given that these participants often have varying backgrounds and expertise, enabling them to visualize design information in intuitive and unambiguous ways is central to achieving consensus around design projects.

4.1. Participatory Practices

Built environment projects with inherent system or design complexity, such as healthcare facilities, data centers, or infrastructure projects, tend to benefit from engaging a broad group of users who share their tacit knowledge and experience to inform the design process. The long tradition of participatory planning and design underlies the desire to engage citizens in decision-making processes and to encourage bottom-up strategies in which the needs of all affected social and user groups are taken into account when evaluating projects. Early lessons in participatory design using physical mockups, rendered images, and maps have prompted studies that explore how different VR configurations can shape dialogue around virtual mockups. Experience-based or evidence-based design approaches in healthcare are used to engage nurses and end users in evaluating various aspects of projects, such as the layout of patient and operating rooms, access requirements, patient safety, and wellbeing [63,64,65]. Large-display VR configurations have also demonstrated advantages over small screens, monitors, or paper for user-centered design reviews, as participants can perceive and understand the designed spaces more intuitively [13,66,67].

In environmental planning applications, VR for participatory planning is seen as well suited to scenarios in which participants are asked to act or make behavior-related decisions [68]. In such cases, fully immersive single-user VR configurations may be used to create a stronger sense of presence and evoke emotional responses. For example, head-mounted displays such as the Oculus Rift, paired with Esri’s ArcGIS CityEngine, a powerful tool used in urban planning and design, have been used to engage citizens and communities in evaluating neighborhood walkability and street noise levels [69], as well as urban resource allocation, disaster planning, and environmental protection [70,71]. Information derived from the behavior of built environment users, whether gathered through participatory practices or big data initiatives, can also influence large project and infrastructure investment decisions, because operational outcomes such as reduced congestion and increased capacity may be achieved either by changing user behavior or by building new infrastructure. In this sense, Portman et al. [24] distinguish VR applications for environmental planning from those in design and construction as being process-oriented rather than outcome-oriented, operating at much greater spatial and temporal scales and emphasizing human–environment interactions. For this reason, cross-fertilization of practices and considerations between disciplines is critical for achieving a truly integrative approach to the environmental agenda, yet it remains stubbornly in “short supply” [24].

4.2. Interaction

What makes VR a powerful and engaging platform is its ability to encourage users to interact with information in novel ways. Interaction is one of the defining characteristics of the VR experience [72] and is typically achieved using input devices or gestures. VR offers opportunities to think about interactive interfaces beyond the mouse and keyboard used for basic functions such as pointing or selecting, toward interfaces that can be programmed into a variety of tools with specific functions and mapped onto controllers. Examples from the gaming industry demonstrate rapid developments in interactive capabilities with tracked controllers, which can either appear as virtual “hands” or assume the appearance of any tool, such as a paintbrush, a hammer, or a bow and arrow. Google’s Daydream Labs (Daydream Labs: Lessons Learned from VR Prototyping, Google I/O 2016) has experimented with creating whimsical tools that did not always correspond to real-life experiences and observed that people are good at using proxy objects to manipulate the world and discover new ways of interacting with it.

In built environment practice, fewer studies have focused on the nature of VR interaction beyond the basic capabilities of navigating and walking through a space. In a study in which we observed how infrastructure teams used a large three-screen collaborative VR system for design reviews [13], the basic navigation functions of moving forward and backward or turning left and right quickly revealed team members’ need to do more with the displayed information. The simplicity of the interaction setup allowed teams to focus quickly on the displayed information, but in doing so, they then wanted the ability to look up and down to observe overhead systems, to measure distances between components, or to annotate models to create an audit trail. A number of plugins for standard design authoring tools, such as Enscape and Fuzor, have begun to offer functions that allow users to dynamically query or change the appearance or properties of model components in VR, filter views, and simulate various environmental scenarios and conditions, such as daylighting effects for different locations and seasons. In addition, many commercial startups offer increasingly sophisticated, custom-designed interactive solutions for scenarios such as construction site planning or 4D simulations, in which controllers can be scripted as tools for markup, measurement, placing and cloning objects and equipment, creating screenshots, advancing construction timelines, or changing the viewing scale of the site (Figure 4). However, as these possibilities for interaction increase, so may the time users need to spend familiarizing themselves with VR systems [13]. There is a fine line between an adequate VR configuration, whose interaction capabilities support users in completing tasks without drawing attention to the mediating technology, and a complex configuration in which capabilities grow at the expense of an intuitive experience.

4.3. Experiential Realism

Directly linked to these interaction-related considerations is the question of the extent to which VR experiences need to correspond to those of the real world. One notable drawback of more immersive VR systems is the potential for users to experience discomfort or motion sickness. For this reason, strategies to reduce this discomfort include modes of movement that have no real-world equivalents, such as teleportation, flying, or moving through obstacles. However, while teleportation or flying can alleviate motion sickness, for many tasks that evaluate access or walkability these modes can alter the perception of virtual objects, leading users to overlook obstacles such as stairs that are impossible to climb [73]. Depending on the application or control settings, movement speed in VR can also introduce challenges in transitions from confined spaces to vast areas. For example, the same movement speed can be perceived as too fast in a small room and too slow on a long street. Studies comparing perceptions of actual and virtual environments have largely confirmed the tendency for users to underestimate distances in VR [74] and to perceive walking speeds as slower in VR [75,76], largely due to perceptual-motor coupling issues. Further, Johnson et al. [38] added that replicating the act of traversing distances in VR also requires considering a dedicated purpose for the movement, as purpose further shapes movement behavior and the perception of appropriate speeds.

Another aspect of the viewing experience that has been shown to reduce motion sickness is the ability to switch between first- and third-person views. The first-person, or egocentric, view allows users to experience VR from the perspective of their own “body”, which mimics the real world. Unlike HMD applications, screen-projected systems require users to relate the virtual environment to their own body. In a third-person, or exocentric, view, users experience environments from the perspective of another object, such as an avatar. In settings with larger user groups, avatars can indicate where the viewer is heading next, reducing the “erratic driver” effect, particularly for less experienced VR users. Moreover, the ability to switch dynamically between these two modes can offer both a respite from motion sickness and additional reference points for evaluation.

4.4. The Purpose of the VR Experience

As the integration of VR applications with design authoring tools expands and improves, so does the tendency to bring models indiscriminately into virtual environments. Depending on model size and detail, practical challenges may include system latency due to graphics-intensive processes that render every component in the model. Equally important, the highly interactive and explorative nature of virtual reality, combined with its inherent lack of narrative structure, can introduce additional complexity that does not always yield an effective user experience. Free model navigation may lead users to wander into unfinished or irrelevant parts of models and divert discussion from salient tasks [77]. Strategies used to structure narratives and focus attention therefore include predetermined viewpoints that guide users through models, sectioning models into discrete scenes that can differ in visual appearance, and tailoring interactivity to constrain navigation to the sections relevant to a given review. Kumar [78], for example, explored the value of developing task-based scenarios for healthcare design review, in which nurses were guided through tasks ranging from moving medical equipment in an operating theater to evaluating room layouts, and found that these approaches can help nurses with less practical experience recreate and share tacit knowledge, but not necessarily those with more experience.

While recent studies have begun to address the need for VR implementation protocols for design reviews [28], crafting the narrative structure of the VR experience itself requires an understanding of user tasks, behavior, and perception in order to determine which elements are critical to include in models to maintain control over the experience.

5. Vision of VR for Interdisciplinary Integration

Building on our review and synthesis of the existing work on virtual reality and its applications, we approach digital technologies with both the assumption and the expectation that they can facilitate better understanding of the complex interactions between many layers of built environment design and inform future interventions, all while meeting an ever-growing number of performance and environmental targets. In this section we set out our vision for VR for interdisciplinary integration.

Design decisions made in early project stages often have broad-ranging impacts on people over extended periods of time and can transform their experiences in ways that often transcend the boundaries of the original design reasoning [61]. Built environment design must absorb and manage many interdependencies and uncertainties; it therefore requires a diversity of disciplines and skills, as no single designer, user, or discipline knows enough to specify requirements and evaluate solutions from all possible perspectives. For this reason, most VR implementation efforts have focused on supporting design activities and design reviews.

Design has been categorized as a ‘wicked problem’ not only because it allows for multiple possible solutions but also because the information available to guide the design process is often incomplete or inaccurate. As Carroll [61] illustrates from the information systems design perspective, designers often pursue partial solutions to partial problems, which are then reformulated and recombined in search of more sophisticated solutions. Even the way design problems are defined can send designers in search of inappropriate kinds of solutions (e.g., designing faster cars vs. offering transportation alternatives). Designers often begin their process with a brief that defines a general scope of project goals and functional needs, but aspects such as form, flow, user behavior, the influence of contextual factors, and performance often cannot be specified a priori; rather, they are discovered during an iterative process of work and conversation with clients and users [65]. Design is thus seen as a social activity in which participatory activities, stakeholder engagement, and co-design processes are used to integrate different values and approaches to defining challenges and solving problems [7]. However, because design activities and design reasoning are by definition guided largely by an incomplete and uncertain view of the world, it is also argued that designers work within the constraints of bounded rationality [79]. As such, designers often use heuristics to deal with open-ended problems that may be underspecified or overconstrained, creating solutions that are satisfactory rather than optimal.

For this reason, the integration of disciplines becomes a critical “intersection” for sourcing novel ideas [80] and for learning, but also a source of many challenges, particularly with the influx of novel technologies. New technologies and ever-increasing amounts of data may give the false impression of gradually gaining control over future predictions and realizing improved built environment performance. However, despite calls for discipline integration, fragmentation is still very much present, not only between the engineering, architecture, and construction fields [81] but also across allied environmental disciplines, while the adoption of new tools has yet to realize significant innovation and productivity improvements [80]. Traditional linear work processes thus remain well embedded in current practices, despite the development of information-sharing initiatives such as BIM and integrated project delivery. Furthermore, the “silo” mentality is sustained not only by professional institutions [7] but also by discipline-specific tools and technologies, where attempts to bridge boundaries are often met with a lack of interoperability and differing methods of work. Disciplines with vested interests in developing sustainable built environment approaches, such as environmental planning and design, landscape architecture, architecture, construction, and engineering, all use discipline-specific tools to generate, analyze, or simulate project information. Such tools are based on different epistemologies in which information needs and visual representations vary greatly, which introduces interoperability problems when sharing information across disciplines. For example, BIM tools for parametric and object-oriented modeling used in architecture may not work for landscape architecture practitioners because they lack object libraries relevant to their tasks and practices. As a result, any sustainability metrics pursued may likewise remain confined within the dominant scopes and contexts of each discipline.

For this reason, conducting design conversations through approaches such as evidence-based or participatory design and design reviews remains unsurpassed in its potential for reaching more creative solutions. Effective design for sustainability requires an interdisciplinary approach to designing for interdependencies and thus communication across stakeholder groups, while considering new environmental and social goals over long time horizons [82]. Models as artifacts do not hold this kind of knowledge a priori; it is constructed through social interactions and conversations. For example, Whyte [83] found that even explicit BIM models can reveal gaps in collaborative understanding. The realization that artifacts alone do not guarantee a collaborative understanding has thus led some scholars to argue for the value of realtime [84] and co-located interaction [60] for problem-solving and decision-making tasks. Communication technologies that are discipline-agnostic, such as VR, offer flexibility for bringing disparate information into an intuitive environment that users can view and interact with while having conversations and “messy talk” [80], and thus can be ideal canvases for drafting different narratives. VR allows designers to view the design or a situation from another user’s perspective to account for multiple and different needs. Though multiple perspectives may lead to conflicting views, evidence suggests, for example, that insufficient perspective-taking limits our ability to prioritize human wellbeing over purely financial measures [82,85].

Yet this integrating vision of VR for promoting conversations across disciplines is challenged by the reality of VR use in the built environment, which tends to be largely discipline-specific and has produced inconsistent results. A review of the literature on VR use across built environment disciplines further reveals the complexity of interdisciplinary collaboration, due in part to the different spatial and temporal scales at which these disciplines primarily operate (Table 1). For example, the environmental design and landscape architecture disciplines often operate at much larger spatial and temporal scales, as their primary goal is to design for change. In contrast, architectural and engineering design disciplines generally operate at smaller site or component levels, where the primary goal is to design for stasis. These differences then unfold into practical challenges for VR use, where the dynamic realism requirements of simulating movement, growth, and change over time are much more difficult to achieve than the static requirements of representing building components. The custom nature of such use scenarios, coupled with the range of possible VR configurations and modes of interaction with such diverse types of information at different scales, can quickly increase the complexity of the system, further straining VR deployment and its sustained use.

This brings us back to the tension between the short-term sensemaking of VR technologies for discipline-based practices, which are project-bound and often divorced from broader environmental considerations, and the grand challenges of transcending disciplinary, spatial, and temporal boundaries to explore and understand long-term consequences. While the call for including and integrating diverse and complementary perspectives to achieve sustainable solutions at larger scales is uncontested, the potential of VR to truly offer novel ways of visualizing a more sustainable world will hinge on these points of intersection and will largely depend on overcoming some of the practical barriers inherent in discipline-based methodologies. In articulating our vision for VR, we take a user-centered approach as the starting position for developing use scenarios that can serve as platforms for interdisciplinary integration. We discern three facets of VR experience development: the users, the information content, and the system configuration.

The user aspect drives the purpose of using VR; hence, this aspect seeks to understand who the users are, their characteristics, and their modes of working. Studies of human-computer interaction (HCI) consider a range of user characteristics, such as domain-related knowledge, cognitive and perceptual abilities, cultural and international diversity, and user experience [61]. Determining the extent of domain expertise and the possible divergence of perspectives is particularly important for user groups dealing with potentially contentious issues [60] (e.g., infrastructure routing, or land development and biodiversity constraints). In such cases, visualization approaches need to support tasks such as negotiation, valuation, and decision making. Additionally, as collaboration may be distributed across co-located, hybrid, or remote modes of working, VR choices may also be driven by the goal of supporting a sense of social presence for achieving shared understanding and resolving disputes.

Informed by the users’ characteristics and goals, the content aspect considers the questions of what information is relevant and what representation format is appropriate. Because VR applications are dominated by architectural design and construction practice, VR representations most often consist of realistic 3D models for navigation, exploration, and review tasks. Issues of realism requirements and effectiveness have also been broadly recognized in the context of engaging interdisciplinary teams. While eliciting emotional or behavioral responses from end users or securing approvals from clients or policy makers often requires highly realistic representations, abstract representations have been seen to support more creative solutions due to their interpretive flexibility [3,80]. Furthermore, integrating disciplines may require broader contextualization of information at greater scales or integration with additional data and types of information (Figure 5), such as temporal or procedural elements that create more dynamic scenarios (e.g., visualizing growth or deterioration over time, comparing or anticipating future performance, affecting or simulating behaviors). Such scenarios then require users to be able to interact with and modify information in novel and more complex ways beyond simple navigation and walkthroughs.

Lastly, the (VR) system aspect represents the configuration of input and output devices selected to accommodate the users’ requirements for viewing and interacting with information. The primary goal of the medium here is to render itself invisible to the user and focus attention on the information. This is often a challenging task, as it requires knowledge of perceptual, cognitive, and technological considerations. VR experiences are dominated by the visual sense; thus, display configuration is the primary consideration when choosing between screen- or projection-based and HMD solutions. While an HMD by default provides a user-tracked experience with stereoscopic viewing tailored to first-person viewing of and interaction with a virtual environment, these attributes may not be desirable in collaborative settings, as they may inhibit social interactions, interfere with dialogue, and affect collaboration [60]. The inherent challenge in configuring VR for interdisciplinary collaboration is the tension between offering users rich interactions and the growing complexity of interaction devices and user interfaces that detract and distract from the intended user experience.

6. Conclusions

The twin urgencies of climate change and the COVID-19 pandemic have raised many questions about how we choose to shape the future of our built environment and what processes we may employ to realize this future. The proliferation of digital data and technology has begun to offer opportunities to better understand the interactions between built and unbuilt environments at multiple scales. In turn, this convergence presents built environment professionals with a tension between the short-term sensemaking of existing data and digital processes and the grand challenge of long-term planning and future interventions. In response to climate change and many other environmental challenges, the design and engineering disciplines have been tasked with becoming more systematic and interdisciplinary in their approaches to critical intersections of mutual learning, perspective-taking, and sourcing novel ideas. Answering the question of why to use VR instead of other technology platforms thus appears straightforward. As a visualization and communication technology, VR offers a powerful discipline-agnostic platform for integrating diverse knowledge and promoting human agency by democratizing the process of imagining, debating, and shaping proposed interventions, both in person and virtually.

However, the efficacy of VR is not constant but rather highly contextual and tailored to user needs, which then inform choices regarding information content and system configurations. Hence, answering the question of how to design effective VR experiences for interdisciplinary visualization tasks becomes less straightforward. As collaboration becomes distributed across remote, hybrid, and in-person modes of working, VR configurations may offer varying degrees of support for critical conversations across disciplinary boundaries. Problem solving, negotiation, and decision making, especially in cases of competing priorities, benefit most from settings that allow a strong social presence to be established. In such instances, collaborative VR is preferred to single-user VR approaches, as users mutually engage in both verbal and nonverbal communication, a key aspect in establishing common ground and interpersonal trust. Conversely, eliciting tacit knowledge and emotional responses will benefit more from engaging users in tailored first-person experiences using HMDs. Therefore, we take the approach that VR systems are not a monolithic concept but a flexible set of configuration characteristics driven by user goals, tasks, and information and interaction needs for given use scenarios.

Finally, while VR offers powerful and novel ways to engage diverse disciplines in shared conversations, its use remains largely confined within individual disciplines. The most challenging question remains which mechanisms can support VR implementation so that these benefits are realized. Fragmented practices are perpetuated not only by traditional and institutionalized modes of working but also by discipline-specific tools and technologies designed to handle data at different scales, levels of detail, and data needs. Integrating such diverse data sets requires not only overcoming issues of interoperability but also crafting new narratives around salient spatial, temporal, and procedural features, aimed at the users most likely to have a say in decisions with longer-term consequences.