Abstract

Excretion in daily life is a high-frequency, physically demanding, and risky activity for the elderly and the disabled. Therefore, we proposed an auxiliary lavatory robot (ALR) with autonomous movement and self-cleaning capability. When the nursing staff assists a user in transferring from a standing or lying state to sitting on the ALR, the ALR can follow the user according to their position and posture. Over the whole transfer process, the ALR always provides the user with the best transfer position and posture, which effectively reduces the workload and physical load. However, confusion and occlusion between the lower limbs of the nursing staff and the user affect the recognition of the user’s posture. First, in this paper, a method combining object segmentation and shape constraints was proposed to extract the contours of the lower limbs of the user and the nursing staff. Then, based on the position constraint and dynamic characteristics of the user’s leg and back contours, a dynamic posture recognition approach based on a two-level joint probabilistic data association (JPDA) algorithm was proposed. Finally, a leg target recognition experiment, a path-tracking experiment, and an auxiliary excretion transfer experiment were carried out to verify the effectiveness and robustness of the proposed algorithm. The experimental results showed that the proposed method improved the safety and convenience of the user and reduced the workload and physical load of the nursing staff. The ALR, integrated with the proposed method, is broadly applicable to the elderly and the disabled with weak motion capability in hospitals, pension centers, and homes.

1. Introduction

For the elderly and people with weak motion capability [1], excretion is a high-frequency, high-risk, and physically demanding activity in daily life [2] that must be carried out with the help of the nursing staff. Long-term and repeated assistance with excretion increases the physical burden and workload of the nursing staff [3] and reduces their work efficiency [4]. Therefore, given the increasing number of people with weak motion capability and the shortage of social resources [5], developing an intelligent robot that can safely assist with excretion would significantly improve the quality of life of the elderly and the disabled [6] and the utilization rate of social resources [7].

Nursing robots that assist the elderly and the disabled [8] are required to help users perform tasks safely and flexibly in the home or nursing environment based on an understanding of the user’s intentions [9]. Nursing robots become essential to auxiliary excretion behavior by assisting in getting up or transporting objects [10]. According to their work patterns, nursing robots provide different solutions for auxiliary excretion and can be divided into mobile nursing robots [11] and intelligent nursing beds [12]. The mobile nursing robot [13,14] is mainly used to assist the nursing staff in completing tedious work. It is worth mentioning that mobile nursing robots can carry out non-contact transportation tasks [15]. For example, the RI-MAN nursing robot can complete the task of patient care and transportation [16]. It analyzes the user’s behavioral intentions and assists in getting up for excretion by smoothly picking up the person with weak motion capability. The Omni-directional power-assist-modular mobile robot for the total nursing service system developed by the Korea Institute of Science and Technology can move quickly in confined areas such as hospital wards [17]. This robot can therefore move a heavy nursing cart and aid the sit-to-stand motion of patients and elders in a narrow environment. In addition, the mobile robotic assistant developed by the Robotics Institute, Carnegie Mellon University, can assist elderly individuals with mild cognitive and physical impairments, support nurses in their daily activities, and provide reminders and guidance for elderly residents [18]. These robots can reduce the burden on the nursing staff and take care of the patients. However, none of these robots has an excretory device, so the nursing staff must still assist patients with excretion.
The intelligent nursing bed is mainly used in homes and pension institutions [19], and it usually provides functions such as physiological parameter monitoring, auxiliary excretion, human–computer interaction, long-range calling, and an emergency alarm system [20]. Shandong Jianzhu University developed a robotic nursing bed that can raise the back, curl the legs, roll the patient over, and interact by voice, according to the paralyzed patient’s requirements [21]. The same university also developed a multifunctional nursing bed that monitors the patient’s condition in real time [22]. This system works with a network video server, which can monitor the indoor situation in the nursing center. However, neither of these intelligent beds has an excretion function. At the South China University of Technology, a multifunctional nursing bed with physiological monitoring, key-press voice interaction, and an excretion function has been developed [23]. The excretion device is fixed on the intelligent bed and can only be cleaned manually. In addition, some research institutions have also developed intelligent beds with multiple functions [24]. Nevertheless, these intelligent beds cannot clean up the user’s excretion in time without the assistance of the nursing staff [25]. If the excretion is not cleaned for a long time, the user’s health will be adversely affected. Meanwhile, the living area and toilet area largely overlap, which also harms the user’s psychological well-being [26]. Moreover, the functions of the nursing robots mentioned above are relatively narrow and cannot meet the relatively complex and comprehensive needs of patients, and a device fixed on the bed cannot meet the patients’ excretion needs in different positions. Therefore, it is urgent to develop an auxiliary lavatory robot with an autonomous movement function that can be conveniently cleaned at any time after use.

In human–robot interaction for assisted excretion, the physical burden on the nursing staff must be reduced as much as possible to avoid secondary injury to the user caused by the exhaustion of the nursing staff [27]. Therefore, the robot needs to follow the dynamic posture of the user so that it provides the best transfer posture throughout the assisting process. In this process, a dynamic environment, including confusion between the limbs of the nursing staff and the user and people moving around, affects the recognition of the user’s dynamic posture. An attitude sensor can be used to measure the joint angle [28], and the human motion posture can be calculated from this angle by a data fusion algorithm [29]. Although this method is flexible and accurate in attitude measurement [30], it is tedious for the user to wear the sensors repeatedly. For people with weak motion capability, frequently changing clothes fitted with attitude sensors is physically taxing, so the method is unsuitable for widespread use in assisting the elderly and the disabled [31]. A camera can accurately measure the dynamic position of multiple targets in a narrow space, and it has been widely used in the field of human–computer interaction [32]. However, when two people are close together, the confusion and occlusion of their limbs can easily lead to false recognition and missed recognition. In addition, the camera needs to be selected and installed in a fixed position in advance, so the user can move out of its effective detection range. Thus, for different beds or different nursing needs, it is cumbersome to measure posture with a camera [33]. When two people stand close together, a single camera can easily misrecognize human skeleton data, which reduces the robustness of the system [34].
Furthermore, because camera-based pose recognition depends on the lighting of the scene, the camera will not work correctly if the environment lacks adequate illumination. Laser sensors have high measurement accuracy and a large detection range [35], but because they measure environmental information in a single plane, it is still challenging to handle limb confusion, occlusion, and surrounding moving people [36]. When a laser sensor is used to measure human posture, limb occlusion between the nursing staff and the user in the transfer process is likely to cause limb confusion, missed recognition of limbs, and target misrecognition [37]. Meanwhile, the transfer process involves dynamic environmental interference from the surrounding people, the bed, and the mechanical structure, so a single laser sensor cannot adapt to this complex scene [38]. The traditional joint probabilistic data association (JPDA) algorithm [39] determines the effective fused measurement value by calculating the association probability between the measured values and multiple targets. This method does not need prior information about targets and clutter and can track multiple targets effectively in a cluttered environment [40]. Therefore, it is often used in research on point-track association. This method can accurately track the contour information of multiple legs. However, it still cannot directly distinguish the legs of the user from those of the nursing staff, and incorrect recognition of the leg contour would lead to incorrect robot tracking [41].

To sum up, an auxiliary excretion mode is needed that can serve various user groups with different conditions. It should overcome the limitations of traditional auxiliary excretion in terms of the robot and detection system, the auxiliary strategy, and the algorithm, so as to improve safety and portability. Therefore, this paper proposes an auxiliary lavatory robot (ALR) with autonomous movement capability and a convenient cleaning method. The ALR provides a novel auxiliary excretion approach, which can cooperate with the nursing staff to assist people with weak motion capability in completing excretion behavior safely. The main contributions of this paper are as follows:

- (1) The ALR with remote awakening, autonomous movement, and security monitoring functions was developed. It provides a new solution for auxiliary excretion in a narrow indoor space to assist the elderly and the disabled.

- (2) A two-level joint probabilistic data association (JPDA) algorithm based on a multi-constraint contour was proposed. Against the complex background of dynamic environmental interference and limb occlusion by the nursing staff, this method can accurately recognize the leg contour and the user’s dynamic posture. It provides a highly robust solution to limb confusion, large-area trunk occlusion, and complex environmental interference.

- (3) The robot, integrated with our proposed algorithm, provided a comfortable and convenient approach for auxiliary excretion. The whole system lightened the psychological burden and improved safety during human–robot interaction. This approach can be applied to similar scenarios of helping the elderly and the disabled.

The rest of this paper is organized as follows: Section 2 introduces the ALR and its main components. Section 3 details the two-level JPDA based on the multi-constraint contour. In Section 4, the leg target recognition experiment, the path-tracking experiment in a complex background, and the transfer experiment are conducted, and the experimental results are compared. Finally, the conclusions are given in Section 5.

2. Materials and Methods

In this section, the ALR and the posture recognition system are introduced, and the system block diagram is then described in detail.

2.1. Auxiliary Lavatory Robot

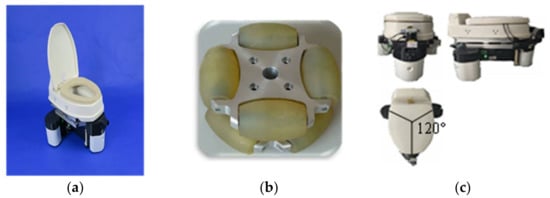

To address the user’s need for assistance with excretion in daily life, our laboratory developed the ALR, shown in Figure 1, for people with motor impairments, such as patients with stroke or foot drop, the elderly, and patients recovering from surgery. The robot motion mechanism is mainly composed of three omni-directional wheels, and its structure is shown in Figure 1b. Equipped with these wheels, the ALR can turn in narrow spaces with zero turning radius. A remote controller can set the direction and velocity of the ALR, and the ALR can also navigate autonomously according to the actual situation of the patient. To prevent the user from sliding off the ALR, six pressure sensors are integrated to detect the user’s transfer situation. The ALR also has a remote wake-up function, so a user with a mobility impairment does not have to switch on the ALR at close range.

Figure 1.

The mechanical structure of the ALR: (a) ALR; (b) Omni-directional wheels; (c) physical picture of the ALR.

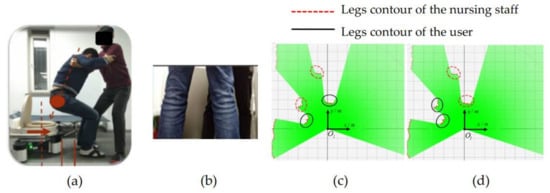

Accurate recognition of the dynamic posture of the user is a prerequisite for assisting excretion in the transfer process. In the transfer process, the legs of the nursing staff and the user have various standing patterns. For example, overlapping and occlusion of the trunk occur when the legs cross each other, which directly leads to missed recognition and misrecognition of the posture of the legs. When the positional relationship between the user and the nursing staff in the transfer process is as depicted in Figure 2a, their legs are occluded, as seen in Figure 2b. Although the position distribution of the four legs can be recognized using lidar and the traditional recognition algorithm, the position and posture of the legs of the user and the nursing staff cannot be accurately inferred from the relative position coordinates alone. This results in misidentification, as shown in Figure 2c,d.

Figure 2.

Leg detection and target recognition. (a) Standing patterns in the transfer process of assisting excretion. (b) Leg occlusion in real scene. (c) Misrecognition of standing patterns. (d) Distribution of real standing positions.

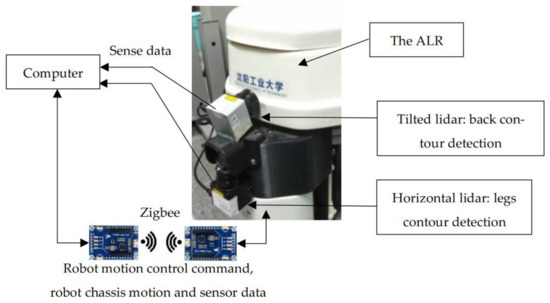

2.2. Posture Recognition System

The accurate recognition of the dynamic posture of the user is the prerequisite for the transfer task. Therefore, this paper proposed the use of two HOKUYO URG-04LX 2D lidars with an inclination angle of 60° to identify the contours of the human legs and back and then obtain the user’s dynamic posture through data fusion. The lidar has a scan range of 240° and an angular resolution of 0.35°, which gives it good position recognition capability. The dynamic intention recognition system is shown in Figure 3, and its workflow is as follows: First, the two lidar sensors collect the measured data, and the ALR transmits these data directly to the computer. Then, using the algorithm proposed in this paper, the computer calculates the posture of the user. Meanwhile, the computer generates the motion instruction for the robot according to this posture and transmits it back to the ALR through Zigbee communication. Finally, the ALR controls the chassis movement according to this command. The computer can acquire the robot motion state and sensor data through the RS232 communication interface. Moreover, the system adopts the Zigbee point-to-point communication mode, with the bit rate set to 115,200 bit/s.

Figure 3.

The dynamic intention recognition system.

Combining the horizontal lidar with tracking algorithms makes it possible to track and recognize multiple leg contours accurately and to filter out dynamic contour interference effectively. However, this traditional method cannot resolve the confusion between the legs of the user and the nursing staff. The user’s back and legs are subject to position constraints in the transfer process. Therefore, the user’s pose-recognition constraint condition was established based on the relative position relationship between the user’s back contour and the legs’ circle-like contour. Based on this constraint condition, the suspected data points were filtered to recognize the pose of the user’s legs accurately.
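As a rough illustration of how a back-to-leg position constraint could filter candidate legs, the sketch below picks, among the detected leg centers, the pair whose midpoint lies closest to the back contour center. The function name `select_user_legs`, the assumption that the back center has already been projected onto the horizontal scan plane, and the `max_leg_gap` bound are all hypothetical; this is a static simplification of the constraint, not the two-level JPDA itself.

```python
import itertools
import math

def select_user_legs(leg_centers, back_center, max_leg_gap=0.6):
    """Return the indices of the two leg centers that best match the back center.

    leg_centers: list of (x, y) circle centers from the horizontal lidar, in metres.
    back_center: (x, y) back contour center projected onto the same plane (assumed).
    max_leg_gap: hypothetical upper bound on the distance between one person's legs.
    """
    best_pair, best_cost = None, math.inf
    for i, j in itertools.combinations(range(len(leg_centers)), 2):
        (xi, yi), (xj, yj) = leg_centers[i], leg_centers[j]
        if math.hypot(xi - xj, yi - yj) > max_leg_gap:
            continue  # too far apart to belong to one person
        mid = ((xi + xj) / 2.0, (yi + yj) / 2.0)
        cost = math.hypot(mid[0] - back_center[0], mid[1] - back_center[1])
        if cost < best_cost:
            best_pair, best_cost = (i, j), cost
    return best_pair

# Four legs: indices 0 and 1 stand farther from the lidar, 2 and 3 closer to it.
legs = [(-0.15, 1.0), (0.15, 1.0), (-0.10, 0.6), (0.20, 0.6)]
print(select_user_legs(legs, back_center=(0.0, 1.05)))
```

A purely geometric rule like this can fail when both people stand in line with the lidar, which is one reason a probabilistic association over time is preferable.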

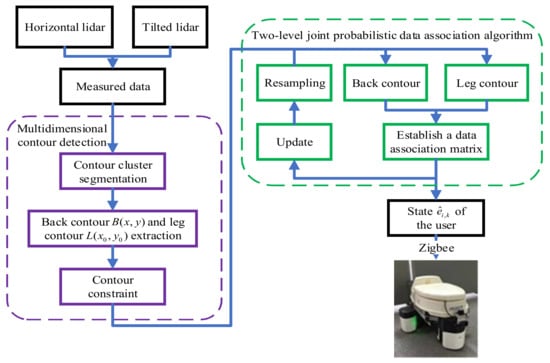

2.3. System Block Diagram

In this paper, the dynamic posture recognition system based on dual lidar was used to recognize the leg contour and the back contour of the human body. In the meantime, the two-level JPDA based on the multi-constraint contour was used to accurately recognize the contour and dynamic posture of both legs of the user in a complex background. The system block diagram is shown in Figure 4.

Figure 4.

The diagram for the two-level JPDA based on the multi-constraint contour.

Figure 4 illustrates the system running process. First, the data points are collected by the horizontal and tilted lidars, and the distance between data points is compared with the set threshold: points whose absolute distance is below the threshold are assigned to the same object contour cluster, and the others are assigned to different clusters. Based on the contour data, the leg and back contour features are extracted, and the leg contour centers and back contour centers of the user and the nursing staff are calculated under the contour constraint. Then, using the two-level JPDA based on the multi-constraint contour proposed in this paper, the system state, which includes the user’s standing posture and the distance between the user and the robot, is obtained. Finally, the state value is used to control the movement of the ALR.

3. Two-Level JPDA Based on Multi-Constraint Contour

3.1. Multidimensional Contour Recognition Algorithm

The lidar is installed at the front of the mobile chassis of the robot, and the human body contour points it collects are saved in polar coordinates. Consequently, multi-dimensional contours must be established according to the number of collected link data. However, the contour recognition error grows because of contour deviations caused by the circle-like shape of the human body and by wrinkles in the clothing on the legs. Therefore, with the back contour length and position constraints set, a circle-like contour-recognition method with a radian range was developed to outline the human legs accurately.
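Because the contour points are stored in polar form, a conversion to Cartesian coordinates is a natural first step before any distance-based processing. The sketch below shows one way to do it; the symmetric start angle of -120° (consistent with a 240° scan range), the axis convention, and the treatment of zero ranges as invalid returns are assumptions, not details taken from the system described here.

```python
import math

def polar_scan_to_cartesian(ranges, start_angle_deg=-120.0, step_deg=0.35):
    """Convert one lidar scan from polar (range, beam angle) to Cartesian (x, y).

    Assumed convention: beam 0 is at start_angle_deg, angles grow
    counter-clockwise, and 0 degrees points straight ahead along +y.
    """
    points = []
    for k, r in enumerate(ranges):
        if r <= 0.0:  # skip invalid returns (assumed to be reported as 0)
            continue
        theta = math.radians(start_angle_deg + k * step_deg)
        points.append((-r * math.sin(theta), r * math.cos(theta)))
    return points

# A single beam pointing straight ahead at 1 m maps to roughly (0, 1).
print(polar_scan_to_cartesian([1.0], start_angle_deg=0.0))
```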

The multi-dimensional contour recognition algorithm includes the following four steps:

- (1) Contour cluster segmentation

When the absolute value of the distance between data points recorded by the horizontal lidar is less than the set threshold, the data points are defined as belonging to the same object contour cluster. When it is greater than the set threshold, the data points are defined as not belonging to the same object contour cluster. This rule is given in Equation (1).
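The thresholding rule above can be sketched as a single pass over the ordered scan points. In this sketch the distance is taken as the Euclidean distance between consecutive Cartesian points, and the 0.05 m threshold is a hypothetical value; both are assumptions, since the exact distance definition and threshold are not reproduced here.

```python
import math

def segment_clusters(points, threshold=0.05):
    """Split an ordered list of (x, y) scan points into contour clusters.

    Consecutive points closer than `threshold` (metres, hypothetical value)
    are placed in the same cluster; a larger gap starts a new cluster.
    """
    clusters = []
    for p in points:
        if clusters and math.dist(clusters[-1][-1], p) < threshold:
            clusters[-1].append(p)
        else:
            clusters.append([p])
    return clusters

scan = [(0.00, 1.0), (0.02, 1.0), (0.04, 1.0), (0.50, 1.0), (0.52, 1.0)]
print([len(c) for c in segment_clusters(scan)])
```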

- (2) Leg contour extraction

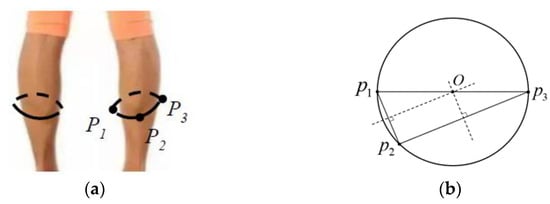

The leg contour features are calculated from the contour data. The center of the circle is the intersection of the perpendicular bisectors of the line segments connecting three points on the circular arc. Three points are taken on the circle, and two line segments are formed by connecting them. As shown in Figure 5, the coordinates of the center of the circle are as follows:

Figure 5.

The feature extraction of the legs. (a) Circle-like contour recognition of legs. (b) Circle-like feature calculation.

With the slopes of the two segments known, the two perpendicular lines can be expressed as:

The circle radius is
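For illustration, the center and radius of the circle through three contour points can be computed as in the sketch below. It uses the closed-form circumcenter expression, which is algebraically equivalent to intersecting the two perpendicular bisectors but avoids infinite slopes for vertical segments; the function name and point notation are assumptions and do not reproduce the equations of this section.

```python
import math

def circle_from_three_points(p1, p2, p3):
    """Return (cx, cy, r) of the circle through three non-collinear points."""
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    # d is proportional to the signed triangle area; zero means collinear points.
    d = 2.0 * (x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2))
    if abs(d) < 1e-12:
        raise ValueError("points are collinear; no unique circle")
    s1, s2, s3 = x1**2 + y1**2, x2**2 + y2**2, x3**2 + y3**2
    cx = (s1 * (y2 - y3) + s2 * (y3 - y1) + s3 * (y1 - y2)) / d
    cy = (s1 * (x3 - x2) + s2 * (x1 - x3) + s3 * (x2 - x1)) / d
    # The radius is the distance from the center to any of the three points.
    return cx, cy, math.hypot(x1 - cx, y1 - cy)

# Three points on the unit circle centered at the origin.
print(circle_from_three_points((1.0, 0.0), (0.0, 1.0), (-1.0, 0.0)))
```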

- (3) Contour constraint

The contour of human legs is only approximately circular because of wrinkles in clothing and other factors, and the diameter of a leg is usually between 0.08 m and 0.2 m. Therefore, contour shape constraints and length constraints are set separately to filter out interfering contours. Among them, the circle-like shape constraints are:

A margin is set in the process of leg contour detection, as shown in Figure 6. The distance between the center point of the data in a cluster and the line segment is calculated; when this distance satisfies Equation (7), the cluster object is taken as a leg contour. The length constraint is:

Figure 6.

The detection of the quasi circle.
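One possible way to combine a length constraint and a circle-like shape constraint is sketched below. The 0.08 m to 0.2 m diameter range comes from the text; fitting the circle through the first, middle, and last points of a cluster and the 0.01 m `margin` on the radial residual are hypothetical choices that stand in for the constraints of this section, which may differ.

```python
import math

def fit_circle(p1, p2, p3):
    """Circle (cx, cy, r) through three points, or None if they are collinear."""
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    d = 2.0 * (x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2))
    if abs(d) < 1e-12:
        return None
    s1, s2, s3 = x1**2 + y1**2, x2**2 + y2**2, x3**2 + y3**2
    cx = (s1 * (y2 - y3) + s2 * (y3 - y1) + s3 * (y1 - y2)) / d
    cy = (s1 * (x3 - x2) + s2 * (x1 - x3) + s3 * (x2 - x1)) / d
    return cx, cy, math.hypot(x1 - cx, y1 - cy)

def is_leg_contour(cluster, min_diameter=0.08, max_diameter=0.20, margin=0.01):
    """Check the length and circle-like shape constraints for one cluster.

    The diameter range is from the text; `margin` (metres) is hypothetical.
    """
    if len(cluster) < 3:
        return False
    circle = fit_circle(cluster[0], cluster[len(cluster) // 2], cluster[-1])
    if circle is None:
        return False
    cx, cy, r = circle
    if not (min_diameter <= 2.0 * r <= max_diameter):
        return False  # length constraint violated
    # Shape constraint: every point must lie within `margin` of the fitted circle.
    return all(abs(math.hypot(x - cx, y - cy) - r) <= margin for x, y in cluster)

# An arc of radius 0.06 m (diameter 0.12 m) centered at (0, 1).
arc = [(0.06 * math.cos(math.radians(a)), 1.0 + 0.06 * math.sin(math.radians(a)))
       for a in range(200, 341, 20)]
print(is_leg_contour(arc))
```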

The coordinate system is established at the lower lidar, and the coordinates of each detected circle-like object are expressed in it, with the objects indexed by positive integers up to the number of detected circle-like objects. In the process of leg contour recognition, leg contours are misrecognized because of the complex environment, which includes the nursing staff, people moving around, and the mechanical structure and decorations of the intelligent bed. According to previous experiments, more than four objects with circle-like contours may be detected. In the analysis of the standing pattern, the legs of the user and the nursing staff are close to each other, so their contour positions are constrained: the four circle-like objects are close to one another in Euclidean distance. Therefore, the Euclidean distance between all circle-like objects is calculated as:

Equation (8) is evaluated once for each pair of detected circle-like objects, and a vector is built from the resulting distances. The vector is sorted in ascending order using bubble sort, and the three smallest distances are taken. The four circles corresponding to these three distances are the circle-like contours of the legs of the user and the nursing staff detected by the lidar.
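The selection by smallest pairwise distances can be sketched as follows. Python's built-in `sorted` is used here in place of bubble sort, which gives the same ascending order; the function name and the example coordinates are hypothetical.

```python
import itertools
import math

def closest_leg_candidates(centers, n_distances=3):
    """Return indices of the circles involved in the n smallest pairwise distances."""
    pairs = sorted(
        itertools.combinations(range(len(centers)), 2),
        key=lambda ij: math.dist(centers[ij[0]], centers[ij[1]]),
    )
    selected = set()
    for i, j in pairs[:n_distances]:
        selected.update((i, j))
    return sorted(selected)

# Four legs standing close together plus one distant circle-like object.
centers = [(0.0, 1.0), (0.2, 1.0), (0.1, 1.3), (0.3, 1.3), (2.0, 3.0)]
print(closest_leg_candidates(centers))
```

Note that three distances do not always involve four distinct circles (for example, three mutually close objects), so a practical implementation would need to handle that case explicitly.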

- (4) Back contour detection

The method in step (1) is used to segment the contour cluster of the tilted lidar’s detection data, and the coordinate of the center point of each cluster is calculated. Because the back contour is closest to the lidar, the coordinates of the back center point are calculated directly from the nearest contour cluster data using (9).
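A minimal sketch of the nearest-cluster rule is given below. It assumes that the "center point" of a cluster is its centroid and that clusters are expressed in the tilted lidar's own frame with the sensor at the origin; Equation (9) itself is not reproduced, so both are assumptions.

```python
import math

def back_center(clusters):
    """Return the centroid of the cluster closest to the lidar origin, or None."""
    best, best_range = None, math.inf
    for cluster in clusters:
        if not cluster:
            continue
        cx = sum(x for x, _ in cluster) / len(cluster)
        cy = sum(y for _, y in cluster) / len(cluster)
        rng = math.hypot(cx, cy)  # distance from the lidar to the centroid
        if rng < best_range:
            best, best_range = (cx, cy), rng
    return best

clusters = [[(0.0, 2.0), (0.2, 2.0)], [(-0.1, 0.8), (0.1, 0.8)]]
print(back_center(clusters))
```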

The multidimensional contour recognition algorithm is shown in Algorithm 1.

| Algorithm 1: Multidimensional contour recognition. | |

| Require: | |

| Data of horizontal lidar and tilted lidar: | |

| 1: | while do |

| 2: | for do |

| 3: | |

| 4: | |

| 5: | end for |

| 6: | for do |

| 7: | |

| 8: | |

| 9: | |

| 10: | if then |

| 11: | |

| 12: | for do |

| 13: | |

| 14: | |

| 15: | end for |

| 16: | |

| 17: | |

| 18: | end if |

| 19: | end for |

| 20: | for do |

| 21: | |

| 22: | |

| 23: | for do |

| 24: | if then |

| 25: | |

| 26: | end if |

| 27: | end for |

| 28: | end for |

| 29: | end while |

| 30: | return |

3.2. Two-Level JPDA

In this paper, a two-level JPDA based on the multi-constraint contour was proposed to accurately recognize the contour of the legs and their dynamic postures against a complex background of dynamic environmental interference and limb occlusion by the nursing staff. Given the mutual dynamic dependence and position constraint between the contours of the legs and the back, a JPDA with a two-level architecture was developed. It can effectively distinguish the positions of the user, the nursing staff, and surrounding moving people under multi-source dynamic interference, thus improving the robustness of the system. The block diagram of the algorithm is shown in Figure 4. The two-level JPDA calculates the observation sets corresponding to the sensors and targets at each level and fuses them to reduce the variance of the fused observation values. This avoids tracking errors due to leg confusion between the user and the nursing staff caused by bottom-level data association errors. Used as the input signal of the transfer control system, the dynamic posture improves the robustness of the system.

is set as the bottom-level grouping target, and is the bottom-level grouping measurement. is the target state at time . is the observed value at time . is a set of all of the continuous measurements observed before time ; is a value without the observed data. Joint-related events are the assigned value of target and measured value at time, . is all of the associated events. The bottom-level allocation probability calculation formula is given in (10).
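To make the idea of an association (allocation) probability concrete, the sketch below enumerates the feasible joint events of a standard single-level JPDA for a handful of targets and measurements and returns the marginal probability that each measurement belongs to each target. It is a textbook-style simplification under stated assumptions (isotropic Gaussian likelihood, fixed detection probability, uniform clutter density, no gating), with hypothetical parameter values; it is not the bottom-level formula referred to above, nor the two-level scheme.

```python
import itertools
import math

def gaussian_likelihood(z, z_pred, sigma):
    """Isotropic 2-D Gaussian density of measurement z around prediction z_pred."""
    d2 = (z[0] - z_pred[0]) ** 2 + (z[1] - z_pred[1]) ** 2
    return math.exp(-0.5 * d2 / sigma**2) / (2.0 * math.pi * sigma**2)

def jpda_association_probabilities(predictions, measurements, sigma=0.05,
                                   p_detect=0.9, clutter_density=1.0):
    """Marginal association probabilities beta[t][j].

    Column 0 means "target t was not detected"; column j + 1 means
    "measurement j originated from target t". All parameters are hypothetical.
    """
    n_t, n_m = len(predictions), len(measurements)
    beta = [[0.0] * (n_m + 1) for _ in range(n_t)]
    total = 0.0
    # A joint event assigns each target a measurement index, or -1 for a miss.
    for event in itertools.product(range(-1, n_m), repeat=n_t):
        used = [j for j in event if j >= 0]
        if len(used) != len(set(used)):
            continue  # infeasible: one measurement claimed by two targets
        weight = clutter_density ** (n_m - len(used))  # unassigned = clutter
        for t, j in enumerate(event):
            if j < 0:
                weight *= 1.0 - p_detect
            else:
                weight *= p_detect * gaussian_likelihood(
                    measurements[j], predictions[t], sigma)
        total += weight
        for t, j in enumerate(event):
            beta[t][j + 1] += weight
    return [[b / total for b in row] for row in beta]

# Two predicted leg positions and two measurements close to them.
beta = jpda_association_probabilities(
    [(0.0, 0.0), (0.3, 0.0)], [(0.01, 0.0), (0.29, 0.01)])
for row in beta:
    print([round(b, 3) for b in row])
```

Exhaustive enumeration grows quickly with the number of targets and measurements, so it is only practical for small scenes such as the four legs considered here.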

Set as the top-level grouping target and is the top-level grouping measurement. The top-level grouping targets and the grouping measurement correspond to the bottom-level grouping targets and the grouping measurement, respectively. The top-level association event is the distribution value of the grouping target and measurement; and are the grouping targets and grouping measurement, respectively; is all of the top-level joint association events. By calculating the allocation probability value in the data association matrix, the position of the user is recognized. Similar to the bottom-level allocation probability calculation process, the top-level allocation probability formula is given as:

Based on the Bayesian theorem, the joint-related event is calculated as follows:

The top-level allocation probability is:

The number of the system recognition contour is ; the target estimated value obtained after probability correlation is ; the corresponding measurement is . A contour vector is established at time , and any combination of the contour is set as tuples , then, the basic probability assignment function of the estimated value of the combined contour at time and the basic probability assignment function with measurement are:

The tuple weight value is:

is the conflict function. When the conflict between the contours in a tuple is greater, it reflects that the correlation between the two groups of dynamic contours is worse. If it is smaller, it proves that the repulsion between the two groups of contours is less to obtain the weight coefficient of the tuple. According to the weight coefficient, the system status is updated as:

The bottom-level allocation probability is placed into Equation (12), and the preceding paragraph of the formula is calculated as follows:

In the formula, is the top-level probability distribution event for the bottom-level allocation probability event known at time , where the constant in the Equation is expressed by . represents the probability that the observed value is when the likelihood functions and are known at time k. This is the relationship between the allocation probability of the bottom level and the allocation probability of the top level when the system obtains the observation value. Equations (15) and (16) are placed into Equation (12) to obtain:

The top-level probability allocation matrix, calculated by Equation (17), is used to recognize and track the grouping targets. According to the correspondence between the top layer and the bottom layer in the distribution matrix, and comparing the unconstrained contour detection method with the algorithm proposed in this paper, it was found that the recognition result of the algorithm proposed in this paper was better than that of the unconstrained contour detection method. The two-level JPDA based on the multi-constraint contour is shown in Algorithm 2.

| Algorithm 2: Two-level JPDA. | |

| Require: | |

| Bottom-level parameter: | |

| Top-level parameter: | |

| 1: | while do |

| 2: | |

| 3: | |

| 4: | |

| 5: | |

| 6: | if then |

| 7: | return |

| 8: | end if |

| 9: | else |

| 10: | for do |

| 11: | |

| 12: | end for |

| 13: | end else |

| 14: | |

| 15: | end while |

4. Experiment and Analysis

The effectiveness of the proposed method was investigated in comprehensive experiments. The details of these steps are presented as follows:

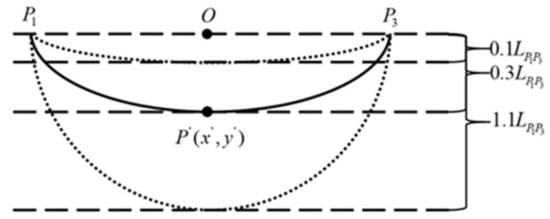

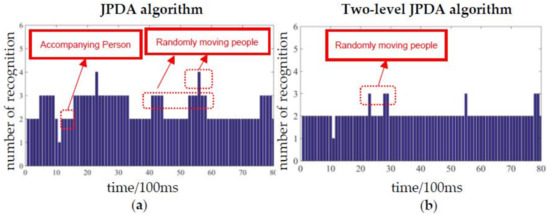

Twenty testers (10 females and 10 males), aged 22–30 years, took part in the leg target recognition experiment, the path-tracking experiment, and the auxiliary excretion transfer experiment. To simulate a disabled lower limb, each tester’s leg was fitted with a knee supporter that limited the allowed bending angle. By setting different restriction angles on the knee supporter, different levels of disability could be simulated. Three simulated disability levels (SDLs) were used, as shown in Figure 7, labelled SDLs1, SDLs2, and SDLs3. All of the testers agreed to the experimental protocol and permitted the publication of their photographs for scientific purposes.

Figure 7.

The three simulated disability levels of the testers. (a) Simulated disability level 1. (b) Simulated disability level 2. (c) Simulated disability level 3.

First, the number of recognition targets in the environment with dynamic interference was calculated to evaluate the proposed method’s effectiveness in the transfer process. Additionally, the path-tracking experiment and the robot-following experiment were conducted. Their reliability analysis was implemented to check the feasibility of the proposed two-level JPDA based on multi-constraint contour under the complex indoor background. Finally, a comprehensive experiment was conducted in an intelligent house integrated with multiple heterogeneous robots to evaluate the effectiveness of the method proposed in this paper.

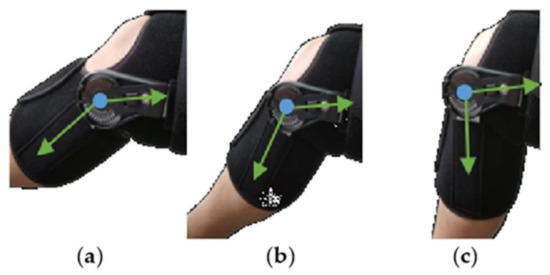

4.1. Leg Target Recognition Experiment

First, we verified the JPDA and the two-level JPDA algorithm in a scene with randomly moving people around the user. As shown in Figure 8a, because there were randomly moving people in the recognition range, the recognition targets fluctuated between two and three, and four recognition targets were reached at some time by using the traditional JPDA. Nevertheless, as shown in Figure 8b, if using the two-level JPDA based on the multi-constraint contour proposed in this paper, the recognition targets were two at most times, representing the user and the nursing staff. When people moving around appeared in the detection range of the recognition system, although the recognition targets became three in a short time, the algorithm filtered the dynamic interference in the subsequent calculation process. Thus, it could accurately track the targets of the user and the nursing staff. The traditional JPDA uses the rule of leg proximity decision to recognize the human body position, so it cannot accurately recognize the user’s leg contour. In contrast, the method proposed in this paper can effectively recognize the limbs of the user and the nursing staff and avoid leg confusion. This method can establish the dynamic position constraint between the limbs and back during the movement process and select the contours of legs that best match the dynamic back position through the joint matrix method, which causes the different recognition results. The experimental results show that the method proposed in this paper can accurately track the position of the target legs in the case of dynamic interference. Therefore, under dynamic interference in the surrounding environment and occlusion of the leg between the user and the nursing staff, using the proposed method to recognize the leg contour was better than the traditional JPDA.

Figure 8.

The comparison of the target recognition results. (a) JPDA algorithm. (b) Two-level JPDA algorithm.

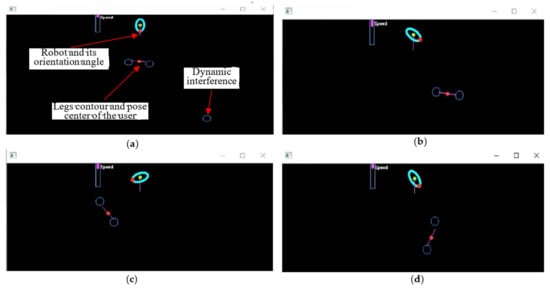

The recognition of the target leg contour during crossed-leg movement is shown in Figure 9. Visual Studio was used in this paper to identify the contour of the user’s legs and to build an interactive interface, as shown in Figure 10. The traditional JPDA can accurately track the contours of four legs. However, it identifies the legs by the principle of leg proximity, which leads to confusion between the legs of the tester and the nursing staff. In contrast, the method proposed in this paper establishes a dynamic relationship between the legs and the back by building a data association matrix, which effectively solves the leg-identification problem. In Figure 10, the blue circles represent the robot, and the red dot represents the robot’s orientation angle. In addition, two circles represent the positions of the user’s left and right legs, and the red dot on the line connecting them represents the center of gravity of both feet. The system proposed in this paper can directly filter out the data of the nursing staff and the dynamic interference data and accurately recognize the posture of the user’s legs when the user and the nursing staff are standing with their legs crossed and moving dynamically. At the same time, to provide the best transfer posture, the orientation angle of the robot is always aligned with the center of the user’s standing position. As shown in Figure 10a, when walking people or other similar contours suddenly appear in the system’s detection range, an unfiltered contour may cause the robot to follow the wrong target. Thus, the method proposed in this paper filters out the dynamic interference directly in the next calculation frame.
In addition, as shown in Figure 10b–d, when the nursing staff assists the user in moving right, left, and sideways, the system can always accurately recognize the dynamic posture of the user and ensure that the robot orientation angle is aligned with the user’s center of gravity. The results show that the whole system can accurately track the dynamic position of the user’s legs against the complex background of limb confusion, trunk occlusion, and dynamic environmental interference.
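As a rough illustration of the leg-to-back matching idea described above, the following Python sketch picks, from a set of candidate leg centers, the pair whose midpoint lies closest to a tracked back position. It is a simplified nearest-match gate and not the two-level JPDA itself, which weighs joint association probabilities. The function name, the gate thresholds, and the coordinates are hypothetical and chosen only for illustration.

```python
import math
from itertools import combinations


def select_user_legs(leg_candidates, back_xy, max_leg_gap=0.6, max_back_offset=0.5):
    """Pick the leg pair whose midpoint best matches the tracked back position.

    leg_candidates: list of (x, y) leg-contour centers in metres (assumed frame).
    back_xy: (x, y) of the user's back contour in the same frame.
    Returns the best (leg_a, leg_b) pair, or None if no pair passes both gates.
    """
    best_pair, best_cost = None, math.inf
    for a, b in combinations(leg_candidates, 2):
        gap = math.dist(a, b)
        if gap > max_leg_gap:  # too far apart to be one person's legs
            continue
        mid = ((a[0] + b[0]) / 2, (a[1] + b[1]) / 2)
        offset = math.dist(mid, back_xy)
        if offset > max_back_offset:  # pair is not located under this back contour
            continue
        if offset < best_cost:
            best_pair, best_cost = (a, b), offset
    return best_pair


# Hypothetical scene: two user legs, two nursing-staff legs, one passer-by leg.
candidates = [(0.0, 1.0), (0.3, 1.0), (0.15, 1.3), (0.45, 1.3), (2.0, 2.0)]
user_legs = select_user_legs(candidates, back_xy=(0.15, 0.9))
```

With these placeholder coordinates, the pair `(0.0, 1.0)` and `(0.3, 1.0)` is selected, while the nearby nursing-staff legs and the distant passer-by are rejected. A proximity-only rule has no back reference to break such ties, which is the failure mode attributed to the traditional JPDA above.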

Figure 9.

The legs move across each other.

Figure 10.

Identification of the user’s dynamic pose under multiple motion modes using Visual Studio. (a) Dynamic personnel interference. (b) Movement to the right. (c) Movement to the left. (d) Movement to the side.

4.2. Path-Tracking Experiment

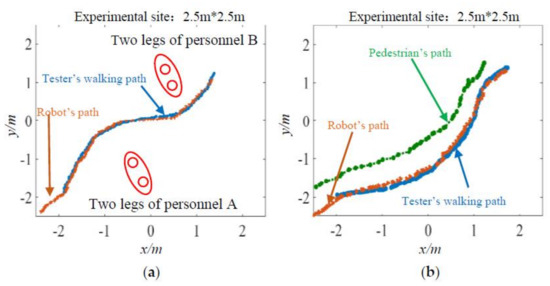

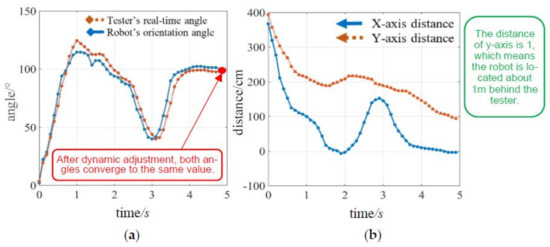

A path-tracking experiment in a complex indoor environment was conducted to verify the effectiveness of the method proposed in this paper. We tested the two-level JPDA algorithm as the tester passed stationary personnel and walked alongside an accompanying pedestrian, and we recorded the angle and the relative position between the robot and the tester. In Figure 11a, the path records the whole process of the tester bypassing two standing personnel, during which the robot accurately tracks the position and posture of the target tester. In Figure 11b, a pedestrian walks side-by-side with the tester. The robot filters out the contour of the pedestrian’s legs and follows the tester’s motion with the optimal transfer position as the target. The angle and the relative position of the robot and the tester in the path-tracking experiment are shown in Figure 12. The robot can track an irregular, complex path by recognizing the pose of the tester’s legs, and it follows at a variable speed determined by the control distance. The comparison between the real-time angle of the tester’s legs and the robot’s orientation angle during the tester’s walking is shown in Figure 12a. At 5 s, the tester and the robot have the same orientation angle, which satisfies the optimal transfer point. In Figure 12b, after the adaptive adjustment of the robot, the x-axis relative distance between the tester and the robot finally stabilized at 0 cm, and the y-axis relative distance finally stabilized at 100 cm. This indicates that the robot and the tester are collinear in the x-axis direction at this moment and that the distance satisfies the transfer point. The whole process of the path-tracking experiment is recorded in Figure 13. The experiment showed that the proposed transfer method enables the robot to follow smoothly based on the dynamic pose of the tester in a complex daily environment and finally converge to the optimal transfer point.
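The convergence behavior reported for Figure 12b (x-axis offset settling at 0 cm, y-axis distance settling at 100 cm) can be sketched with a simple proportional follower. This is only an illustrative assumption: the controller used on the ALR is not specified in this section, and the function names, gain, and step limit below are hypothetical.

```python
def follow_step(rel_x_cm, rel_y_cm, target_y_cm=100.0, gain=0.5, max_step_cm=10.0):
    """One proportional update of the tester's position relative to the robot.

    rel_x_cm, rel_y_cm: tester position minus robot position along each axis.
    The robot moves faster when the error is large (speed varies with distance)
    and is limited to max_step_cm per update.
    Returns the new (rel_x_cm, rel_y_cm) after the robot has moved.
    """
    def clamp(value):
        return max(-max_step_cm, min(max_step_cm, value))

    move_x = clamp(gain * rel_x_cm)                  # close the lateral offset
    move_y = clamp(gain * (rel_y_cm - target_y_cm))  # hold the control distance
    return rel_x_cm - move_x, rel_y_cm - move_y


def simulate(rel_x_cm, rel_y_cm, steps=50):
    """Apply follow_step repeatedly for a stationary tester."""
    for _ in range(steps):
        rel_x_cm, rel_y_cm = follow_step(rel_x_cm, rel_y_cm)
    return rel_x_cm, rel_y_cm


# Hypothetical start: tester 40 cm to the side and 180 cm ahead of the robot.
final_x, final_y = simulate(40.0, 180.0)
```

Starting from this placeholder offset, the relative position approaches (0 cm, 100 cm), mirroring the steady state described above. A real follower would additionally need to handle a moving tester, orientation alignment, and sensor noise.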

Figure 11.

The trajectories of the robot and the tester in the path-tracking experiment. (a) Path-tracking experiment when passing through two standing personnel. (b) Path-tracking experiment when there are pedestrians.

Figure 12.

The orientation angle and the relative position of the robot and the tester in the path-tracking experiment. (a) The blue data are the robot’s orientation angle and the red data are the tester’s real-time angle. (b) The data are the x-axis distance and y-axis distance between the robot and the tester.

Figure 13.

The path-tracking experiment for a complex path in an indoor environment.

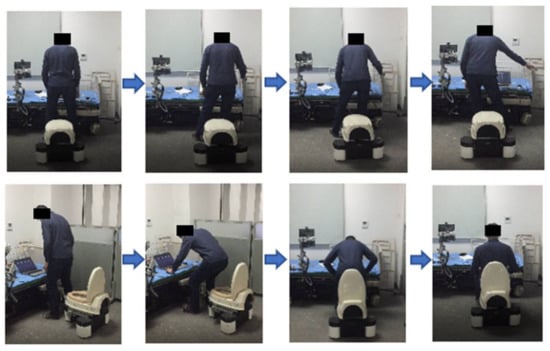

4.3. Auxiliary Excretion Transfer Experiment

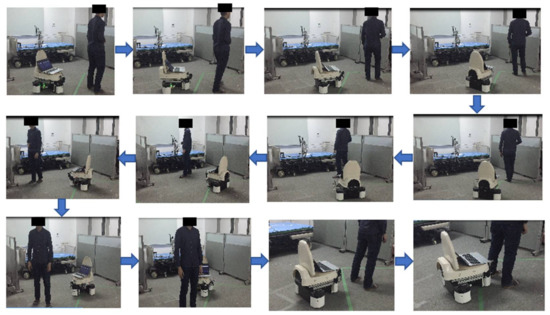

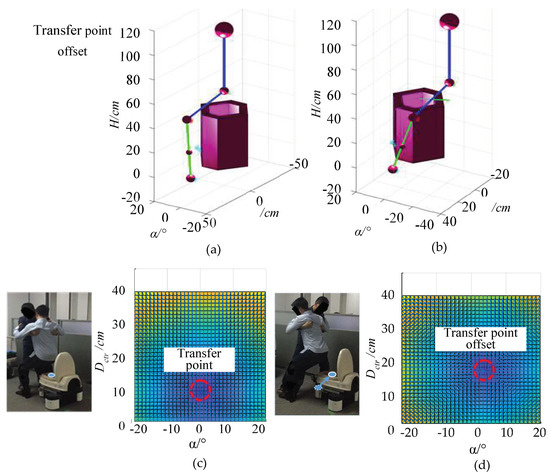

The robot-following experiment based on the analysis of the tester’s optimal position and posture is shown in Figure 14. During the transfer process, the best transfer point of the lower leg shifts as the leg offset angle changes. The robot adjusts its position to the right to ensure that the tester is seated in the optimal position. When the tester moves to the left, to the right, and obliquely backward, the robot follows with the goal of providing the optimal transfer point. The tester remains in a fixed position, ready to move into the seat, until they are seated safely and stably. The simulation and experimental results for the transfer point when getting up and sitting down are shown in Figure 15. As shown in Figure 15a,b, the y-axis is the control distance, the x-axis is the leg tilt angle, and the z-axis is the user height. Specifically, the control distance is the distance between the robot and the tester’s feet when the tester stands, and the leg tilt angle is the angle between the tester’s legs and the perpendicular to the ground. In addition, the red dots in the figure are simplified key points of the human skeleton, and the lines connecting them represent the posture in which the human body is half squatting and ready to sit down. The ALR, represented by the hollow cone, moves laterally as the human posture changes. When the control distance and the leg tilt angle take different values, the transfer point coordinates are calculated by the algorithm proposed in this paper. Moreover, when the tester’s legs are upright, the tester can sit directly in the center of the robot, and the best transfer point is shown in Figure 15c. During the transfer process, the best transfer point of the tester’s leg is offset as the leg tilt angle changes. As shown in Figure 15d, the robot adjusts its position to the right to ensure that the tester sits in the best position. In the process of identifying the transfer points, the error of the y-axis was 0.56 cm ± 0.45 cm, and the error of the x-axis was 1.34 cm ± 0.86 cm. The x-axis error was larger than the y-axis error because of the error introduced by the calculation of the leg contour, but both errors were within an acceptable range. The correctness, feasibility, and rationality of the proposed method were supported by simulation and experiment in multiple cases.
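Per-axis errors of the form "mean ± standard deviation", as quoted above, can be computed as in the following minimal Python sketch. It assumes absolute errors and the sample standard deviation; the paper does not state which convention was used. The function name and the numbers in the usage example are hypothetical placeholders, not measurements from the experiment.

```python
from statistics import mean, stdev


def summarize_axis_error(measured_cm, reference_cm):
    """Return (mean, sample standard deviation) of absolute errors in cm.

    measured_cm: transfer-point coordinates identified by the system.
    reference_cm: corresponding ground-truth coordinates on the same axis.
    Requires at least two paired values (stdev needs two or more samples).
    """
    errors = [abs(m - r) for m, r in zip(measured_cm, reference_cm)]
    return mean(errors), stdev(errors)


# Placeholder values for illustration only (not experimental data).
avg, spread = summarize_axis_error([11.0, 18.0, 33.0], [10.0, 20.0, 30.0])
print(f"{avg:.2f} cm ± {spread:.2f} cm")
```

Applying the same function separately to the x-axis and y-axis coordinates gives one "mean ± standard deviation" figure per axis.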

Figure 14.

The robot-following experiment based on the analysis of the transfer position and posture.

Figure 15.

The simulation and experiment of auxiliary excretion transfer. (a) Simulation of auxiliary transfer. (b) Simulation of auxiliary transfer after position adjustment. (c) Transfer experiment and its transfer point. (d) Transfer experiment and the adjustment of the transfer point.

To investigate how users felt when using the system in the intelligent house we developed, we collected subjective evaluations. During the comprehensive experiment, comfort was evaluated through a questionnaire completed by 20 testers, which verified the effectiveness and comfort of the proposed method [42]. Comfort was rated on a scale of one to six, where a higher score means greater comfort. From high to low, the scores mean: quite comfortable, generally comfortable, a little comfortable, a little uncomfortable, generally uncomfortable, and quite uncomfortable. Testers used both the auxiliary excretion method proposed in this paper and the traditional auxiliary excretion methods and scored each of them. A total of 85% of the testers (17 of 20) considered the method proposed in this paper more comfortable, while the remaining 15% (3 of 20) thought that it showed no apparent improvement in comfort. From this survey, we found that most testers felt more comfortable after the adjustment than before. The reasons for this are summarized as follows:

- (1) Compared to traditional auxiliary lavatory robots, the ALR integrates more functions. First, the ALR has a remote wake-up function, so the user can control it by remote control, which saves the user’s physical strength. Second, the ALR provides the best transfer posture, which improves the safety of the transfer. Third, the ALR can navigate autonomously and clean the excrement in time, reducing the user’s psychological burden. These functions of the ALR provide a new solution for daily auxiliary excretion.

- (2) Compared with traditional human posture recognition methods that use a camera or a wearable attitude sensor, the interactive method provided in this paper does not require the user to wear any equipment in advance. Therefore, it is very convenient for the elderly and people with weak motion capability.

- (3) The method proposed in this paper resolves the recognition problems caused by limb confusion and by occlusion from surrounding moving people. Because the ALR can accurately identify and track the user’s leg posture, the burden on the nursing staff and the probability of transfer failure are reduced, and the robustness of the human–computer interaction process is improved.

We compared the strategies, methods, and algorithms proposed in this paper with the traditional auxiliary excretion methods, as shown in Table 1. In summary, we have made innovations in the auxiliary excretion strategy, method, and algorithm. In terms of strategy, we developed the ALR with remote wake-up, autonomous movement, and safety monitoring functions. It provides a new solution for assisting the elderly and the disabled with excretion in a narrow indoor space. The robot, integrated with our proposed algorithm, provides a comfortable and convenient approach to auxiliary excretion. The whole system lightened the psychological burden and improved safety during human–robot interaction. In terms of method, we proposed a non-contact monitoring method based on the combination of dual laser radars, which effectively improves the convenience and robustness of the auxiliary process. In terms of algorithm, we proposed a two-level JPDA based on a multi-constraint contour, which provides a highly robust solution to limb confusion, large-area trunk occlusion, and complex environmental interference.

Table 1.

A comparison between the proposed method and the traditional method.

5. Conclusions

To address the inconvenience of excretion for the elderly, an auxiliary lavatory robot (ALR) with autonomous movement capability and a comparatively convenient cleaning method was developed. The ALR can adjust its direction according to the user’s posture and provide the best transfer point. To solve the problem of misidentification caused by limb confusion and large-area trunk occlusion between the user and the nursing staff during the transfer process, a method combining object segmentation and shape constraint was proposed. The method can extract the contours of the lower limbs of the user and the nursing staff. Meanwhile, given the mutual dynamic dependence and position constraint of multi-limb contours during transfer, a dynamic posture recognition method based on a two-level joint probabilistic data association (JPDA) algorithm was proposed in this paper. This method allows the ALR to recognize the user’s pose under limb confusion and large-area trunk occlusion.

A path-tracking experiment was carried out in a complex indoor background, and the robot could realize the irregular complex path-tracking based on autonomous movement. Furthermore, a robot-following experiment based on the analysis of transfer position and posture was conducted, and the robot could adjust its position to ensure that the user was seated in the optimal position. According to the statistics of the transfer experiment, the error of the y-axis was 0.56 cm ± 0.45 cm, and the error of the x-axis was 1.34 cm ± 0.86 cm. The experimental results verified the correctness, feasibility, and effectiveness of the proposed method in the transfer process. By applying the ALR developed in this paper and its algorithm to an intelligent house, the safety and convenience of the user were improved, and the workload and physical load of the nursing staff were reduced. Thus, the ALR and the method proposed in this paper can be applied to hospitals, pension centers, intelligent families, and other scenes to assist the elderly and the disabled.

Author Contributions

Conceptualization, D.Z.; Methodology, D.Z., S.W. and Y.H.; Software, D.Z. and Z.Z.; Validation, D.Z., Z.Z. and J.Y.; Data collection and data processing, D.Z. and Z.Z.; Writing—review and editing, D.Z., Z.Z. and J.Y.; Funding acquisition, D.Z. and J.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in part by the “Special Fund for Guiding Local Scientific and Technological Development by the Central Government” under Grant No. 2021JH6/10500216; in part by the “Natural Science Foundation of Liaoning Province” under Grant No. 2021-BS-152; and in part by the “General Project of Liaoning Provincial Department of Education” under Grant No. LJKZ0124.

Institutional Review Board Statement

The study was approved by the School of Electrical Engineering, Shenyang University of Technology.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Not applicable.

Acknowledgments

We thank all of the entities who financed part of this research as well as all the users who wanted to participate and contribute to the development of this work.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

References

- Bakhshiev, A.; Smirnova, E.; Musienko, P. Methodological bases of exobalancer design for rehabilitation of people with limited mobility and impaired balance maintenance. J. Afr. Earth Sci. 2015, 12, 201–213.

- Zerah, L.; Bihan, K.; Kohler, S.; Mariani, L. Iatrogenesis and neurological manifestations in the elderly. Rev. Neurol. 2020, 176, 710–723.

- Gallego, L.; Losada, A.; Vara, C.; Olazarán, J.; Muñiz, R.; Pillemer, K. Psychosocial predictors of anxiety in nursing home staff. Clin. Gerontol. 2017, 41, 282–292.

- Lee, J.; Gang, M. The moderating effect of communication behavior on nurses’ care burden associated with behavioral and psychological symptoms of dementia. Issues Ment. Health Nurs. 2019, 41, 132–137.

- Lebet, R.; Hasbani, N.; Sisko, M.; Agus, M.; Curley, M. Nurses’ perceptions of workload burden in pediatric critical care. Am. J. Crit. Care 2021, 30, 27–35.

- Zhao, Z.; Li, X.; Lu, C.; Zhang, M.; Wang, Y. Compliant manipulation method for a nursing robot based on physical structure of human limb. Intell. Serv. Robot. Syst 2020, 100, 973–986.

- Mcdonnell, S.; Timmins, F. Subjective burden experienced by nurses when caring for delirious patients. J. Clin. Nurs. 2012, 21, 2488–2498.

- Vichitkraivin, P.; Naenna, T. Factors of healthcare robot adoption by medical staff in Thai government hospitals. Health Technol. 2021, 11, 139–151.

- Liang, J.; Wu, J.; Huang, H.; Xu, W.; Xi, F. Soft sensitive skin for safety control of a nursing robot using proximity and tactile sensors. IEEE Sens. J. 2020, 20, 3822–3830.

- Abubakar, S.; Das, S.; Robinson, C.; Saadatzi, M.; Logsdon, M.; Mitchell, H.; Chlebowy, D.; Popa, D. ARNA, a Service Robot for Nursing Assistance: System Overview and User Acceptability. In Proceedings of the 2020 IEEE 16th International Conference on Automation Science and Engineering, Hong Kong, China, 20–21 August 2020.

- Grewal, H.; Matthews, A.; Tea, R.; Contractor, V.; George, K. Sip-and-Puff Autonomous Wheelchair for Individuals with Severe Disabilities. In Proceedings of the 2018 9th IEEE Annual Ubiquitous Computing, Electronics & Mobile Communication Conference, New York, NY, USA, 8–10 November 2018.

- Ignacio, G.; Mario, M.; Teresita, M. Smart medical beds in patient-care environments of the twenty-first century: A state-of-art survey. BMC Med. Inf. Decis. Mak. 2018, 18, 63.

- Wang, Y.; Wang, S. Development of an excretion care support robot with human cooperative characteristics. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Milan, Italy, 5 November 2015.

- Wang, Y.; Wang, S.; Jiang, Y.; Tan, R. Control of an excretion care support robot using digital acceleration control algorithm: Path tracking problem in an indoor environment. J. Syst. Des. Dyn. 2013, 7, 472–487.

- Moudpoklang, J.; Pedchote, C. Line tracking control of royal Thai air force nursing mobile robot using visual feedback. In IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2021; Volume 1137, p. 10.

- Luo, Z. Systematic innovation of health robotics for aging society—Health renaissance century is coming. Sci. Technol. Rev. 2015, 33, 45–53.

- Bae, G.; Kim, S.; Choi, D.; Cho, C.; Lee, S.; Kang, S. Omni-directional Power-Assist-Modular(PAM) Mobile Robot for Total Nursing Service System. In Proceedings of the 2017 14th International Conference on Ubiquitous Robots and Ambient Intelligence, Jeju, Korea, 28 June–1 July 2017.

- Pineau, J.; Montemerlo, M.; Pollack, M.; Roy, N.; Thrun, S. Towards robotic assistants in nursing homes: Challenges and results. Rob. Auton. Syst. 2003, 42, 271–281.

- Amalia, N.; Hatta, A.; Koentjoro, S.; Nasution, A.; Puspita, I. Fiber Sensor for Simultaneous Measurement of Heart and Breath Rate on SmartBed. In Proceedings of the 2020 IEEE 8th International Conference on Photonics, Kota Bharu, Malaysia, 12 May–30 June 2020.

- Cai, H.; Toft, E.; Hejlesen, O.; Hansen, J.; Oestergaard, C.; Dinesen, B. Health professionals’ user experience of the intelligent bed in patients’ homes. Int. J. Technol. Assess. Health Care 2015, 31, 256–263.

- Gao, H.; Lu, S.; Li, W. The Design of CAN and TCP/IP-based Robotic Multifunctional Nursing Bed. In Proceedings of the 2010 8th World Congress on Intelligent Control and Automation, Jinan, China, 7–9 July 2010.

- Lin, T.; Lu, S.; Wei, Z. A Robotic Nursing Bed Design and its Control System. In Proceedings of the 2009 IEEE International Conference on Robotics and Biomimetics, Guilin, China, 19–23 December 2009.

- Zhang, T.; Xie, C.; Lin, L. Research on Tele-monitoring System and Control System of Intelligent Nursing Bed Based on Network. In Proceedings of the 2006 6th World Congress on Intelligent Control and Automation, Dalian, China, 21–23 June 2006.

- Guo, S.; Zhao, X.; Matsuo, K.; Liu, J.; Mukai, T. Unconstrained detection of the respiratory motions of chest and abdomen in different lying positions using a flexible tactile sensor array. IEEE Sens. J. 2019, 19, 10067–10076.

- Sun, Q.; Cheng, W. Mechanism design and debugging of an intelligent rehabilitation nursing bed. Int. J. Eng. Sci. 2015, 3, 19–21.

- Zhang, H.; Sun, H. Study on the parameters of cleaning water jet for nursing bed hips. In IOP Conference Series: Earth and Environmental Science; IOP Publishing: Bristol, UK, 2021; Volume 632, p. 052030.

- Chang, H.; Huang, T.; Wong, M.; Ho, L.; Teng, C. How robots help nurses focus on professional task engagement and reduce nurses’ turnover intention. J. Nurs. Scholarsh. 2021, 53, 237–245.

- Zhao, J.; Shen, L.; Yang, C.; Zhang, Z.; Zhao, J. 3D Shape Reconstruction of Human Spine Based on the Attitude Sensor. In Proceedings of the 2019 IEEE 8th Joint International Information Technology and Artificial Intelligence Conference, Chongqing, China, 24–26 May 2019.

- Joukov, V.; Bonnet, V.; Karg, M.; Venture, G.; Kulic, D. Rhythmic extended Kalman filter for gait rehabilitation motion estimation and segmentation. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 407–418.

- Zhu, G.; Wang, Y. Research on human body motion attitude capture and recognition based on multi-sensors. Rev. Tecn. Fac. Ingr. 2017, 32, 775–784.

- Shi, C.; Zhang, L.; Hu, W.; Liu, S. Attitude-sensor-aided in-process registration of multi-view surface measurement. Measurement 2011, 44, 663–673.

- Mehralian, M.; Soryani, M. EKFPnP: Extended Kalman filter for camera pose estimation in a sequence of images. IET Image Process. 2020, 14, 3774–3780.

- Rohe, D.P.; Witt, B.L.; Schoenherr, T.F. Predicting 3d motions from single-camera optical test data. Exp. Tech. 2020, 45, 313–327.

- Li, G.; Liu, Z.; Cai, L.; Yan, J. Standing-posture recognition in human–robot collaboration based on deep learning and the Dempster–Shafer Evidence Theory. Sensors 2020, 20, 1158.

- Peng, J.; Xu, W.; Liang, B.; Wu, A. Pose measurement and motion estimation of space noncooperative targets based on laser radar and stereovision fusion. IEEE Sens. J. 2018, 19, 3008–3019.

- Laurijssen, D.; Truijen, S.; Saeys, W.; Daems, W.; Steckel, J. Six-DOF Pose Estimation Using Dual-axis Rotating Laser Sweeps Using a Probabilistic Framework. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation, Lloret de Mar, Spain, 29 November–2 December 2021.

- Fan, Y.; Zhao, B.; Guolu, M.A. Coordinate measurement system of hidden parts based on optical target and rangefinder. Laser Technol. 2014, 38, 723–728.

- Li, Q.; Huang, X.; Li, S. A laser scanning posture optimization method to reduce the measurement uncertainty of large complex surface parts. Meas. Sci. Technol. 2019, 30, 105203.

- Chen, J.; Yu, S.; Chen, X.; Zhao, Y.; Wang, S. Epipolar multitarget velocity probability data association algorithm based on the movement characteristics of blasting fragments. Math. Probl. Eng. 2021, 2021, 9994573.

- Li, Q.; Song, L.; Zhang, Y. Multiple extended target tracking by truncated JPDA in a clutter environment. IET Signal Process. 2021, 15, 207–219.

- Matsubara, S.; Honda, A.; Ji, Y.; Umeda, K. Three-dimensional Human Tracking of a Mobile Robot by Fusion of Tracking Results of Two Cameras. In Proceedings of the 2020 21st International Conference on Research and Education in Mechatronics, Cracow, Poland, 9–11 December 2020.

- Wich, M.; Kramer, T. Enhanced Human-computer Interaction for Business Applications on Mobile Devices: A Design-oriented Development of a Usability Evaluation Questionnaire. In Proceedings of the 2015 48th Hawaii International Conference on System Sciences, Kauai, HI, USA, 5–8 January 2015.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).