This section details the IBPOA, beginning with related operators of the POA in Section 3.1. We then develop an ITF leveraging B&B theory [19] for real-to-binary encoding conversion, integrated with a greedy repair operator (GRO) for constraint handling. Section 3.4 establishes the theoretical foundations for the ITF and analyzes the implementation properties, which represent advances beyond the conventional methodologies. An analysis of the computational complexity of the IBPOA is presented in Section 3.6.

3.2. An Improved Transfer Function (ITF)

Section 3.1 outlined the core mechanisms of the POA. Due to its real encoding scheme, the POA is not directly applicable to discrete optimization problems such as the 0-1 KP. The sigmoid function serves as the classical transfer function for mapping continuous spaces to binary spaces and is widely adopted in PSO and its variants for discretization. The binary counterparts of the real-valued vectors are obtained by applying the transfer function together with a uniformly distributed random number rand.

The sigmoid function maps the continuous-space solution into the interval $[0, 1]$. A random number rand is then used to determine the binary assignment: if rand falls below the sigmoid value of a component, the corresponding binary variable is assigned 1; otherwise, it is assigned 0. To further enhance the search capability of the algorithm, we propose an improved transfer function tailored to the characteristics of the 0-1 KP.
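The classical sigmoid discretization described above can be sketched in a few lines. This is a minimal illustration rather than the authors' implementation: the function names `sigmoid` and `binarize` are hypothetical, and the strict comparison `rand < sigmoid(x)` is an assumption about the direction of the test.

```python
import math
import random


def sigmoid(x: float) -> float:
    """Logistic function, evaluated in a form that avoids overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def binarize(position, rng=random):
    """Map a real-valued vector to a 0/1 list.

    Component j becomes 1 when a fresh uniform draw from [0, 1) falls below
    sigmoid(position[j]); otherwise it becomes 0 (assumed comparison direction).
    """
    return [1 if rng.random() < sigmoid(x) else 0 for x in position]


if __name__ == "__main__":
    rng = random.Random(0)  # seeded generator so the run is repeatable
    print(binarize([-50.0, 0.0, 50.0], rng))
```

Strongly negative components are almost always mapped to 0 and strongly positive ones to 1, while components near zero are decided essentially by a coin flip, which is the behavior the improved transfer function sets out to refine.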

Very recently, Yang [19] adapted the improved B&B method proposed by Dey et al. [47] to solving the 0-1 KP. For a given instance, the method first applies a greedy strategy: items are packed in non-increasing order of their profit density (i.e., the ratio of profit to weight) until the first item exceeds the knapsack’s remaining capacity. This yields the break item b and the break solution, in which every item preceding b is packed and b and all subsequent items are not. Next, the Dantzig upper bound [48] is computed via linear relaxation: it equals the profit of the break solution plus the residual capacity multiplied by the profit density of b, rounded down, where the residual capacity r is the capacity left unused by the break solution. Finally, the B&B reduction phase searches for feasible solutions with objective values no less than that of the break solution.
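The greedy phase and the Dantzig bound described above can be sketched as follows. This is an illustrative sketch under stated assumptions, not the procedure of [19]: the function name `break_item_and_bound` is hypothetical, items are assumed to be pre-sorted by non-increasing profit density, profits, weights, and capacity are assumed to be positive integers, and the break item is reported with a 0-based index.

```python
def break_item_and_bound(profits, weights, capacity):
    """Greedy packing up to the break item, plus the Dantzig upper bound.

    Assumes items are already sorted by non-increasing profit/weight and that
    all data are positive integers (assumptions of this sketch).
    Returns (b, break_solution, residual, bound); b is None if every item fits.
    """
    n = len(profits)
    residual = capacity          # capacity still unused
    packed_profit = 0            # profit of the items packed so far
    break_solution = [0] * n
    for j in range(n):
        if weights[j] > residual:
            # Item j is the break item: fill the residual capacity with a
            # fraction of it (linear relaxation) and round down.
            bound = packed_profit + (residual * profits[j]) // weights[j]
            return j, break_solution, residual, bound
        break_solution[j] = 1
        residual -= weights[j]
        packed_profit += profits[j]
    # All items fit, so the greedy solution is optimal and equals the bound.
    return None, break_solution, residual, packed_profit


if __name__ == "__main__":
    b, x, r, u = break_item_and_bound([60, 100, 120], [10, 20, 30], 50)
    print(b, x, r, u)  # 2 [1, 1, 0] 20 240
```

In the small example, the first two items are packed, the third is the break item, 20 units of capacity remain, and the bound is 160 + 20 · 120 / 30 = 240.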

From the literature [19], we have the following definition and theorem.

Definition 2 ([19]). The set of items with a higher profit density than the break item b can be divided into m disjoint subsets, one for each value of ω. Each subset has its own number of items and is defined by Formula (14), where r is the residual capacity. If no item satisfies Formula (14) for a given ω, the corresponding subset is empty.

Definition 2 first constructs a hyperplane based on the coordinates of the origin and the break item (i.e., the numerical values of the item’s profit and weight). Subsequently, the definition assigns a value ω to each item according to its distance from the hyperplane, with ω decreasing as the distance increases. Correspondingly, the set composed of items whose profit density is less than that of the break item satisfies Formula (15).

Depending on the value of ω, we have the following theorem.

Theorem 1 ([19]). For each ω, consider the items of the corresponding subset that are not packed into the optimal solution, and count them. These counts constitute a vector, and the theorem bounds each of its entries.

Theorem 1 reveals that only a limited number of items in each subset, determined by its ω, are excluded from the optimal solution. In other words, all of the items in the first subset are selected in the optimal solution, at most one item in the second subset is excluded, and so on. The smaller an item’s ω-value in the higher-density set, the higher the item’s likelihood of optimal inclusion.

Conversely, only a limited number of items in each subset of the lower-density set, again determined by its ω, are included in the optimal solution. Specifically, no items in its first subset are selected, up to one item in its second subset may be chosen, and so on. Smaller ω values for items in the lower-density set correlate with reduced selection probabilities. To streamline the subsequent discussion, we associate an ω-value with each decision variable, which is computed as follows.

Building on the formula above, we propose an improved transfer function (ITF). The ITF partitions the set of items into two subsets based on the profit density of the break item b. For each item, the ITF assigns a distance-weighted value ω using the item’s distance to the hyperplane formed by the break item and the origin. For items with a profit density exceeding that of the break item, the ITF increases the probability of setting the corresponding binary variable to 1; symmetrically, for items with a profit density below that of the break item, it increases the probability of setting the variable to 0. The ITF is formally defined in Formula (17).

Building on Formula (13), the ITF in Formula (17) partitions the item set N into two subsets based on the profit density of the break item b. The value ω for the j-th item is determined via Formula (14) for the higher-density subset and Formula (15) for the lower-density subset, and it affects the resulting probability of selection. To illustrate the refined transfer function, consider the items in the higher-density subset. Any item in its first group is guaranteed inclusion in the optimal solution, and the ITF accordingly ensures that the corresponding binary variable equals 1 for all such items. As ω increases, the probability of selection decreases monotonically, reflecting the item’s proximity to the hyperplane. Consequently, the items closer to the hyperplane exhibit a diminishing selection certainty, leading the algorithm to prioritize searching for these variables. Conversely, for the items in the first group of the lower-density subset, the ITF forces the corresponding binary variable to 0. Here, smaller ω values correspond to stronger guarantees of exclusion from the optimal solution.

Therefore, the ITF prioritizes exploring decision variables near the hyperplane defined by the origin and the break item b. By reducing the number of variables definitively included in or excluded from the optimal solution, the algorithm maintains its search capability while accelerating the convergence.

3.4. The Theoretical Analysis of the ITF

In previous studies, researchers evaluating novel meta-heuristic algorithms typically conducted simulations on benchmark instances, employing computational results to demonstrate the effectiveness of the improvements. However, since meta-heuristic algorithms are stochastic, the results of individual simulation runs are not unique, rendering computational comparisons alone insufficient to robustly justify algorithmic enhancements. Unlike conventional approaches, the ITF is derived from the theoretical framework of B&B, providing stronger analytical guarantees.

Given an instance of the 0-1 KP composed of a set N of n items, the discretization strategies in previous research have first mapped the individual encoding from the continuous search space to the probability space via the sigmoid function and acquired the corresponding probability vector. Then, a binary encoding is generated by comparing each value in the probability vector with a randomly sampled number. The space mapping relationship is therefore continuous search space → probability space → binary space.

From Formula (17), the ITF narrows the probability mapping space for the j-th decision variable to one of two subintervals of $[0, 1]$, depending on the ω-value of that variable. This contrasts with traditional TFs, which use the full interval $[0, 1]$. We refer to the resulting space as the reduced probability mapping space of the ITF. To quantify this reduction, we employ the Lebesgue measure for theoretical comparison. The measure of the probability mapping space for the traditional transfer function is 1, whereas the measure per dimension for the ITF is the length of the narrowed interval. Thus, the total measure of the reduced space is the product of these per-dimension measures.

Clearly, the measure of the reduced space is less than that of the original space. A reduced probability mapping space implies that the improved algorithm can eliminate unproductive search regions, thereby enhancing the search capability. Notably, the design of the ITF based on Theorem 1 guarantees that the optimal solutions remain within the search space. Furthermore, unlike parameter-dependent reduction methods, the ITF requires no additional parameter tuning, improving its generalization capability.
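The measure comparison can be written out under one assumption that is not taken from the source: suppose the ITF restricts the probability of the j-th variable to an interval $I_j \subseteq [0, 1]$ of length $\ell_j$ (both symbols are hypothetical and introduced only for this sketch). Writing $\lambda$ for the Lebesgue measure, the argument then reads:

```latex
\lambda\bigl([0,1]^n\bigr) = \prod_{j=1}^{n} 1 = 1,
\qquad
\lambda\Bigl(\prod_{j=1}^{n} I_j\Bigr) = \prod_{j=1}^{n} \ell_j \le 1 .
```

Since every $\ell_j$ lies in $[0, 1]$, the product is strictly smaller than 1 as soon as at least one interval is genuinely narrowed, that is, as soon as $\ell_j < 1$ for some j.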

3.5. The Greedy Repair Operator (GRO)

Although the ITF significantly improves the search efficiency, solutions generated during iterations for constrained optimization problems often suffer from two common issues: constraint violations or suboptimal solutions that fail to saturate the constraint bounds. To address this challenge, meta-heuristic algorithms typically incorporate constraint-handling mechanisms. The current mainstream approaches include penalty function methods and repair strategies [20, 49, 50]. For the 0-1 KP, this study adopts a widely used greedy repair operator (GRO) to refine the solutions generated during iterations.

Given an individual processed by the ITF, the GRO operates in two phases: (1) repairing infeasibility by removing items in ascending order of profit density and (2) improving the feasible solution by tentatively adding items in descending order of profit density. We assume that the items are indexed in non-increasing order of profit density. The GRO procedure is formalized in Algorithm 1.

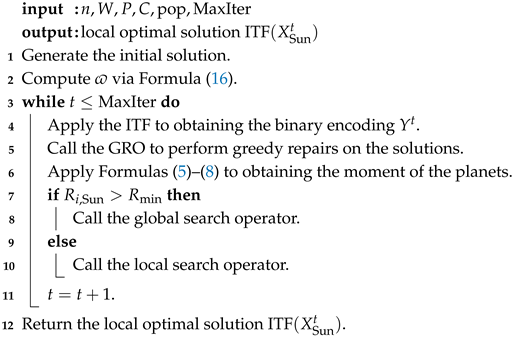

Algorithm 1: Greedy repair operator (GRO)

Algorithm 1 first repairs infeasible solutions through Steps 2–7. Subsequently, Steps 8–12 refine the solution via greedy optimization. Because each phase scans the items once, the operations maintain a time complexity that is linear in the number of items n. Compared to the original POA, the IBPOA introduces only two additions: the ITF and the GRO. Crucially, both components exhibit a lower time complexity than that of the core POA framework, preserving the algorithm’s computational efficiency.

Notably, since the GRO operates directly on the binary encoding, it may induce encoding–decoding disruption. This phenomenon stems from the algorithm’s dual-space operation (i.e., continuous and binary encodings): if the GRO modifies the binary encoding without synchronously updating the continuous encoding, the search direction diverges from the actual optimization trajectory in the solution space. To mitigate this, the GRO adjusts the continuous values of the variables altered in the binary encoding (via Lines 6 and 12). Specifically, when a binary variable changes from 1 to 0, the corresponding continuous value is repositioned to 20% of its original distance from its reference value, where the 0.2 scaling factor is an empirical value determined through experimentation. Conversely, when a binary variable changes from 0 to 1, the continuous value is similarly repositioned to 20% of its original distance from the respective reference value.
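The two phases of a greedy repair operator of this kind can be sketched as below. This is a generic illustration rather than a transcription of Algorithm 1: the function name `greedy_repair` is hypothetical, items are assumed to be indexed by non-increasing profit density, and the synchronization of the continuous encoding (the 0.2 repositioning) is deliberately left out.

```python
def greedy_repair(bits, weights, capacity):
    """Return a feasible, greedily improved copy of a 0/1 knapsack solution.

    Assumes index 0 holds the item with the highest profit density and the
    last index the lowest (an assumption of this sketch).
    """
    x = list(bits)
    load = sum(w for w, b in zip(weights, x) if b)

    # Phase 1: while overloaded, drop packed items starting from the
    # lowest profit density (highest index).
    for j in range(len(x) - 1, -1, -1):
        if load <= capacity:
            break
        if x[j]:
            x[j] = 0
            load -= weights[j]

    # Phase 2: try to add unpacked items starting from the highest
    # profit density, keeping only those that still fit.
    for j in range(len(x)):
        if not x[j] and load + weights[j] <= capacity:
            x[j] = 1
            load += weights[j]
    return x


if __name__ == "__main__":
    w = [10, 20, 30]
    print(greedy_repair([1, 1, 1], w, 50))  # [1, 1, 0]
    print(greedy_repair([0, 0, 1], w, 50))  # [1, 0, 1]
```

Each phase is a single pass over the items, so the sketch runs in time linear in the number of items once the density ordering is available.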

3.6. A Complexity Analysis of the IBPOA

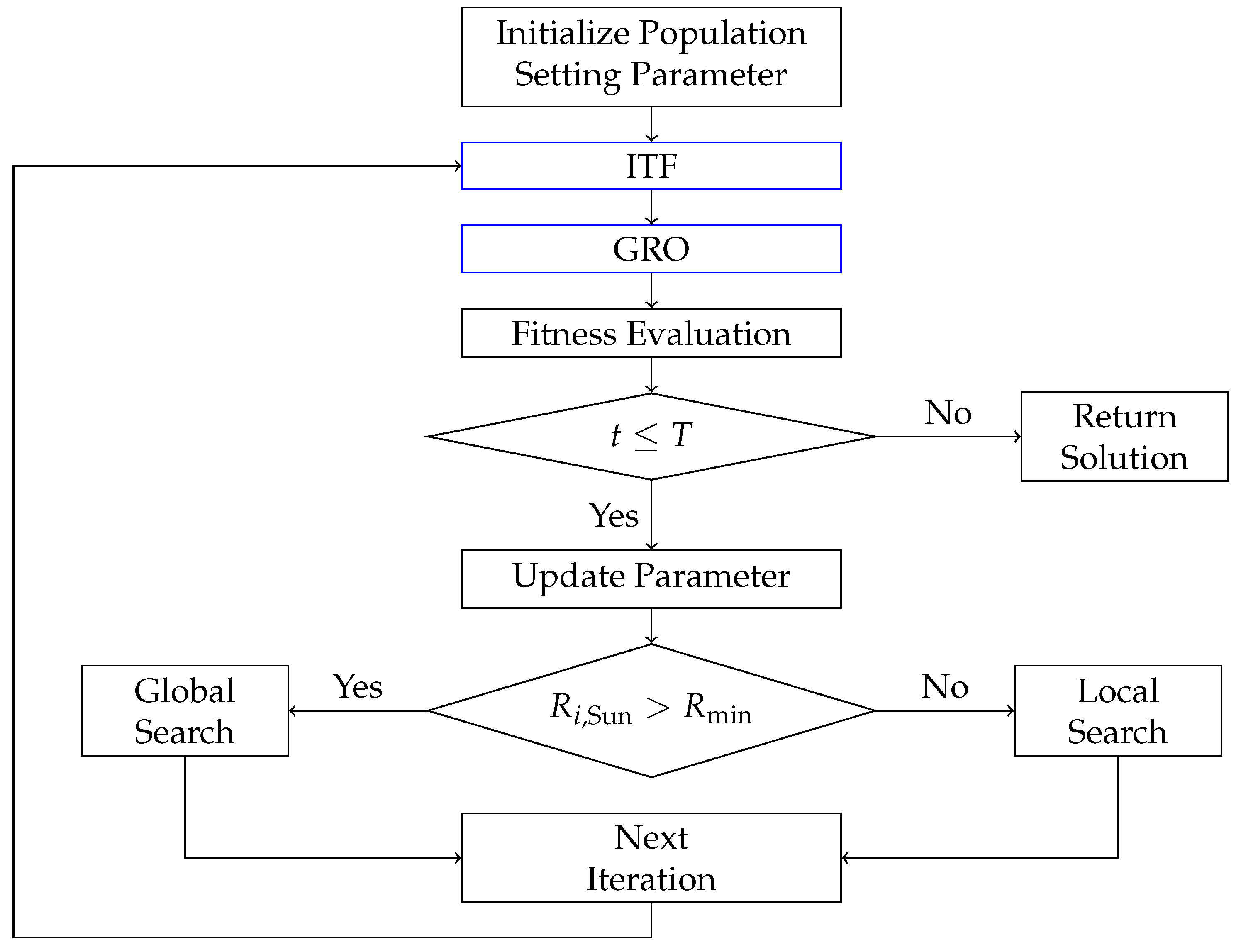

Building on the POA framework, the IBPOA introduces the ITF and a greedy repair operator (GRO) to boost the search performance further. The workflow is illustrated in Figure 2, where black boxes denote the original POA operations and blue boxes highlight IBPOA-specific additions.

The computational complexity of the IBPOA is analyzed in this subsection. Pseudo-code appears in Algorithm 2, with the population size pop and maximum iterations MaxIter.

In the IBPOA, the analysis proceeds line by line through Algorithm 2. Outside the loop, it accounts for generating the initial solution (Algorithm 2, Line 1) and for the preparatory computation in Algorithm 2, Line 2. Within the loop, it accounts for the operations in Algorithm 2, Lines 4 and 5. For the planetary moment computations (Algorithm 2, Line 6), Formulas (5) to (7) all share one time complexity, while Formula (8) has its own. The global search operator and the local search operator have the same time complexity. Combining these terms gives the overall time complexity of the IBPOA.