1. Introduction

Automated detection of physical violence has become a significant technical challenge in multimedia content analysis, where surveillance systems and digital platforms process large amounts of images daily [1]. Manual processing of such content faces scalability issues and high operational costs, driving the development of automated methods based on deep learning [2].

Current violence detection systems struggle with data heterogeneity. Traditional training methods treat all samples equally, ignoring differences in classification difficulty that can hinder learning efficiency [3]. This homogeneous strategy leads to computational inefficiency, particularly in large-scale settings with limited resources [4]. Convolutional neural networks (CNNs), specifically DenseNet121, have demonstrated strong performance in image classification tasks due to their dense connectivity, which enhances feature reuse [5]. However, practical deployment of these models requires careful consideration of computational efficiency for real-time applications [6].

Recent research has examined various methods for violence detection, including multimodal fusion [7], attention mechanisms [8], and lightweight architectures [9]. Alejo et al. [10] used confidence-based active learning to categorize data into Safe, Border, and Average subsets, showing improvements in training efficiency. However, confidence-based sample selection has not yet been applied systematically to optimize computational resources in violence detection.

Confidence-based active learning iteratively constructs models by identifying representative samples [11]. This approach uses model uncertainty to guide the selection of training data, lowering computational requirements without sacrificing performance [12]. However, its application to violence detection in images remains underexplored, especially regarding sample selection based on predictive confidence levels [13].

This work proposes a confidence-based selection method to optimize the training of DenseNet121 for automated violence detection. The methodology divides the dataset into three specialized, possibly overlapping subsets: Border, defined symmetrically around the decision boundary to capture maximally uncertain samples; Safe, representing high-confidence predictions at both extremes; and Average, encompassing moderately certain cases. This design treats uncertainty symmetrically at the decision boundary while acknowledging functional asymmetries in sample utility across the confidence spectrum. Each subset targets a distinct level of classification complexity, enabling a data-centric strategy that balances efficiency and performance.

The key contributions of this approach include (1) a confidence-based selection procedure for computational resource optimization in violence detection, (2) the empirical validation of stratified training strategies based on classification complexity, (3) demonstration that strategic data selection can outperform volume-driven approaches, and (4) a comparative analysis of precision–efficiency trade-offs across specialized subsets.

2. Related Work

Automated violence detection with deep learning is an interdisciplinary research area that combines computer vision, spatiotemporal processing, and multimodal fusion. Current methods mainly fall into three categories: (1) unimodal systems using visual features, (2) audiovisual architectures for detecting aggression, and (3) optimization techniques for edge computing. The literature shows a clear shift from unimodal models toward hybrid, efficient, and adaptable solutions.

Table 1 synthesizes key studies in automated violence classification, organizing them by author, architecture, methodology, dataset, and performance metrics. It evaluates prior techniques for addressing classification complexity, including architectural optimizations and data processing strategies [6,14], to contextualize our confidence-based methodology within the current scientific landscape. The review progresses from broad surveys such as [15] to specialized implementations [9], culminating in works that explicitly use confidence thresholds [11].

2.1. Evolution of Methodologies

The field of automated violence detection has seen significant methodological progress, driven by the need to improve both accuracy and computational efficiency. Early methods relied primarily on unimodal visual analysis; however, difficulties with ambiguous situations and real-world variability led to innovations in multimodal fusion, temporal modeling, and lightweight architectures. This subsection critically reviews key milestones in this development, from benchmarking studies to advanced hybrid systems, illustrating how architectural innovations and optimization techniques have addressed the core challenges of violence classification. Tracing this progression shows how confidence-based sample selection has emerged as a natural way to enable resource-efficient training without losing performance. The following studies illustrate the main approaches:

Benchmarking Studies: The survey in [15] established a comparative framework for analyzing CNNs, RNNs, and transformers on benchmark datasets (Hockey Fights, RWF-2000, Violent Flows), reporting accuracies above 90% in optimized configurations. Their systematic review highlights trends toward attention mechanisms and lightweight architectures.

Multimodal Fusion: Ye et al. [7] integrated MFCC audio features with C3D visual processing using Dempster–Shafer theory, achieving 97% accuracy in school environments. This demonstrates how auditory signals complement visual data in violence scenarios with identifiable acoustic components.

Temporal Modeling: Mumtaz et al. [8] combined CNNs, Bi-LSTMs, and multiscale attention with statistical control charts for risk monitoring, attaining 89–91% accuracy across datasets. Their work shows that selective attention improves robustness in complex scenes.

Computational Optimization: Wang et al. [6] implemented EfficientNet with bidirectional motion attention and TSM modules, achieving perfect accuracy on Movie Fights and above 90% on other datasets with only 1.21 GFLOPs. This supports the feasibility of efficient real-time models.

Edge Deployment: Khan et al. [14] designed an industrial surveillance pipeline combining person detection (CNN) with action classification (3D-CNN), reducing latency by processing only regions of interest. Their cascaded architecture outperforms baselines while optimizing resources.

Transformer Architectures: Rendón-Segador et al. [9] proposed CrimeNet, a transformer model with adaptive sliding windows that improved inter-dataset robustness by 15% while maintaining 99% AUC across 11 public datasets.

Confidence-Based Learning and Efficiency: Abundez et al. [11] pioneered confidence-based active learning, categorizing images into Safe, Border, and Average subsets to optimize DenseNet121 and EfficientNet training. Their method increased the AUC from 0.44 to 0.81–0.91 on the AIRTLab, RLVS, and SCVD datasets, demonstrating that curating ambiguous examples enhances generalization without requiring the expansion of training data.

2.2. Spatiotemporal and Edge-Capable Models

Recent advances in violence detection focus on models that balance temporal reasoning with computational efficiency, allowing deployment in resource-limited edge environments. This subsection examines architectures that combine spatiotemporal feature extraction (such as 3D CNNs and ConvLSTMs) with lightweight design principles, tackling the challenges of capturing dynamic violent behaviors while maintaining real-time performance. From hybrid networks with dense connectivity to compact systems optimized for embedded devices, these methods show how innovative algorithms can expand the practical use of violence detection systems beyond cloud-dependent setups.

ViolenceNet [1]: A 3D DenseNet121 with bidirectional ConvLSTM and multi-head attention achieved 95–100% intra-dataset and 70–81% cross-dataset accuracy, capturing long-term temporal dynamics.

Compact Architectures: Huillcen Baca et al. [17] combined DenseNet121/MobileNetV2 with BiLSTM, reaching 98.2–100% accuracy on Hockey Fight and Movie Fight with only 3.5M parameters.

Edge Deployment: Azzakhnini et al. [18] developed LAVID, an autonomous camera using DenseNet121 and DSCNN-BiLSTM (0.57M parameters), achieving 96.6% accuracy on Violent Flows and demonstrating that advanced analysis on edge devices is viable.

2.3. Trends and Gaps

Table 1 highlights the prevalence of DenseNet121 in violence detection, owing to its balance of accuracy and efficiency. It has been validated for real-time detection [6], in active learning frameworks [11], and for computational efficiency in systematic reviews [15]. Overall, the recent literature shows a shift from computationally heavy models toward hybrid, efficient, and adaptable solutions. The rise of multimodal fusion and active learning points toward surveillance systems with greater precision, efficiency, and cross-domain transferability.

Despite significant progress in violence detection models, several critical limitations persist. One of the most pressing is cross-dataset generalization: while many models report intra-dataset accuracies above 95%, their performance often drops markedly, to between 60% and 75%, when evaluated on different datasets (e.g., when transferring from Hockey Fights to RWF-2000). This discrepancy highlights the challenge of domain adaptation and the need for more robust generalization techniques.

Another unresolved issue involves dynamic thresholding. Most current approaches rely on static confidence thresholds, which fail to account for context-dependent variability and can therefore discriminate poorly in ambiguous scenes. Adaptive thresholding mechanisms that respond to scene complexity could improve decision-making under uncertainty. Multimodal systems face a related limitation: although audio–visual fusion has achieved high classification performance (up to 97% accuracy in some cases), such systems typically underperform when audio cues are absent, as in silent violent acts. Finally, evaluation consistency remains a concern: the use of disparate metrics (such as AUC, classification accuracy, and computational cost) across studies complicates direct comparisons between models.

This landscape positions our work at the intersection of confidence-based efficiency and temporal robustness, offering a pathway to address generalization gaps through dynamic sample selection.

3. Methods

The methodology employed in this study was structured as a five-phase protocol to ensure the reliability and reproducibility of results. First, a data preparation and balancing phase addressed class imbalance and ensured representative sampling across the dataset. Next, the base model was trained using DenseNet121, selected for its proven performance in visual classification tasks. In the third phase, the trained model generated predictions on the data, capturing both the predicted labels and their associated confidence scores; these outputs served as the basis for the subsequent data partitioning. The fourth phase segmented the dataset by confidence into three specialized subsets (Safe, Average, and Border). Finally, the optimized models were trained and evaluated.

3.1. Datasets and Preprocessing

To conduct this study, we used four publicly available datasets, which are summarized in Table 2. The datasets were not used directly; instead, we built an experimental framework around three curated datasets, DS1, DS2, and a held-out test set, each designed to address specific evaluation needs while maintaining class balance.

DS1 served as our baseline dataset, comprising exclusively AIRTLab content with 6000 selected images (3000 violent and 3000 non-violent scenes). This homogeneous composition allowed for controlled initial model training and validation.

For enhanced diversity, DS2 combined material from all four source datasets (AIRTLab, RLVS, Pexels, and SCVD), totaling 5600 images (2800 per class). This composite dataset intentionally incorporates the varied visual characteristics of surveillance footage (SCVD), real-world YouTube content (RLVS), and controlled stock imagery (Pexels), creating a more challenging training environment.

The test set (4400 images: 2200 violent/2200 non-violent) was built by stratified sampling across all sources, ensuring proportional representation of each dataset's characteristics while remaining completely disjoint from the training sets: no image appears in more than one of DS1, DS2, and the test set. This design ensures that the test set contains no overlapping or visually similar images from DS1 or DS2 and includes diverse visual contexts from AIRTLab, RLVS, SCVD, and Pexels. Therefore, although this study does not perform cross-dataset testing, the construction of the test set approximates a cross-domain evaluation scenario. This approach aligns with recommendations in the recent literature, which emphasize testing in unseen environments to assess the generalization capability of physical violence detection systems [15].
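The text does not specify how the stratified sampling was implemented. The following is a minimal sketch, assuming each candidate image is described by a hypothetical `(image_id, source, label)` tuple and that each class quota is split across sources in proportion to the images each source still has available once training IDs are excluded:

```python
import random
from collections import defaultdict


def stratified_sample(pool, n_per_class, exclude=frozenset(), seed=0):
    """Select n_per_class items per label, splitting each label's quota across
    sources in proportion to how many eligible items each source holds.

    pool    -- iterable of (image_id, source, label) tuples (hypothetical format)
    exclude -- ids already used for training; never selected, which keeps the
               test set disjoint from the training sets
    """
    rng = random.Random(seed)
    groups = defaultdict(list)  # (label, source) -> eligible image ids
    for image_id, source, label in pool:
        if image_id not in exclude:
            groups[(label, source)].append(image_id)

    selected = []
    for label in sorted({lab for lab, _ in groups}):
        sources = sorted(src for lab, src in groups if lab == label)
        sizes = {src: len(groups[(label, src)]) for src in sources}
        total = sum(sizes.values())
        if total < n_per_class:
            raise ValueError(f"only {total} eligible items for label {label!r}")
        # Proportional quotas, rounded with the largest-remainder method so
        # that they sum exactly to n_per_class.
        exact = {src: n_per_class * sizes[src] / total for src in sources}
        quota = {src: int(exact[src]) for src in sources}
        leftover = n_per_class - sum(quota.values())
        for src in sorted(sources, key=lambda s: exact[s] - quota[s], reverse=True)[:leftover]:
            quota[src] += 1
        for src in sources:
            selected.extend(rng.sample(groups[(label, src)], quota[src]))
    return selected
```

Excluding training IDs at selection time is what enforces the mutual exclusivity described above. Proportional allocation is one reasonable reading of "proportional representation", not necessarily the exact rule used for this test set.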

The purpose of combining these sources is to cover a broad spectrum of scenes. Violent scenes include physical confrontations (hand-to-hand combat, pushing), organized urban conflicts (riots, vandalism), domestic violence scenarios, and weapon-involved aggression (from SCVD). Non-violent counterparts consist of peaceful social interactions (conversations, group activities), sports and recreational events, family gatherings, workplace activities, and ambiguous but non-aggressive behaviors (arguing without physical contact).

This intentional diversity ensures models learn discriminative features beyond superficial visual patterns, forcing them to recognize contextual violence indicators while avoiding overfitting to specific scene types. The inclusion of CCTV footage (SCVD) alongside consumer-grade video (RLVS) and studio-quality images (Pexels) further enhances real-world applicability across different capture conditions.

To ensure compatibility with the DenseNet121 architecture and improve model robustness, a structured preprocessing pipeline was implemented. First, all input frames were resized to 224 × 224 pixels to meet the input requirements of DenseNet121 and allow consistent processing across the dataset. Next, normalization was applied by scaling pixel values to the [0, 1] range, which promotes faster convergence during training and enhances numerical stability. During training, data augmentation techniques were used to increase variability and reduce overfitting. These included random rotations up to ±15°, horizontal scaling within ±10%, and brightness adjustments of up to ±20%. These transformations mimicked real-world variations in camera angles, distances, and lighting conditions, helping the model generalize better.
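The normalization and augmentation steps can be illustrated with a framework-agnostic NumPy sketch. It assumes frames are already resized to 224 × 224 (resizing is omitted), and it interprets "brightness adjustments of up to ±20%" as a multiplicative gain and "horizontal scaling within ±10%" as a stretch of the x axis. These are assumptions, and the actual pipeline presumably relied on TensorFlow/Keras utilities that are not shown here.

```python
import numpy as np


def normalize(frame_uint8):
    """Scale 8-bit pixel values to the [0, 1] range."""
    return frame_uint8.astype(np.float32) / 255.0


def augment(img, rng, max_rot_deg=15.0, max_hscale=0.10, max_bright=0.20):
    """Randomly rotate (up to +/-15 deg), stretch horizontally (up to +/-10%),
    and change brightness (up to +/-20%) of an (H, W) or (H, W, C) image in
    [0, 1]. Uses nearest-neighbour sampling; uncovered pixels become 0."""
    h, w = img.shape[:2]
    theta = np.deg2rad(rng.uniform(-max_rot_deg, max_rot_deg))
    sx = 1.0 + rng.uniform(-max_hscale, max_hscale)    # horizontal scale factor
    gain = 1.0 + rng.uniform(-max_bright, max_bright)  # brightness multiplier

    # Inverse mapping: for each output pixel, locate its source pixel by undoing
    # the horizontal stretch and then the rotation about the image centre.
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    yy, xx = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    dx, dy = (xx - cx) / sx, yy - cy
    src_x = cx + np.cos(theta) * dx + np.sin(theta) * dy
    src_y = cy - np.sin(theta) * dx + np.cos(theta) * dy

    xi, yi = np.rint(src_x).astype(int), np.rint(src_y).astype(int)
    inside = (xi >= 0) & (xi < w) & (yi >= 0) & (yi < h)
    out = np.zeros_like(img)
    out[inside] = img[yi[inside], xi[inside]]
    return np.clip(out * gain, 0.0, 1.0)
```

Augmentation is applied only during training; with all three ranges set to zero, `augment` returns the input unchanged, which is a convenient sanity check.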

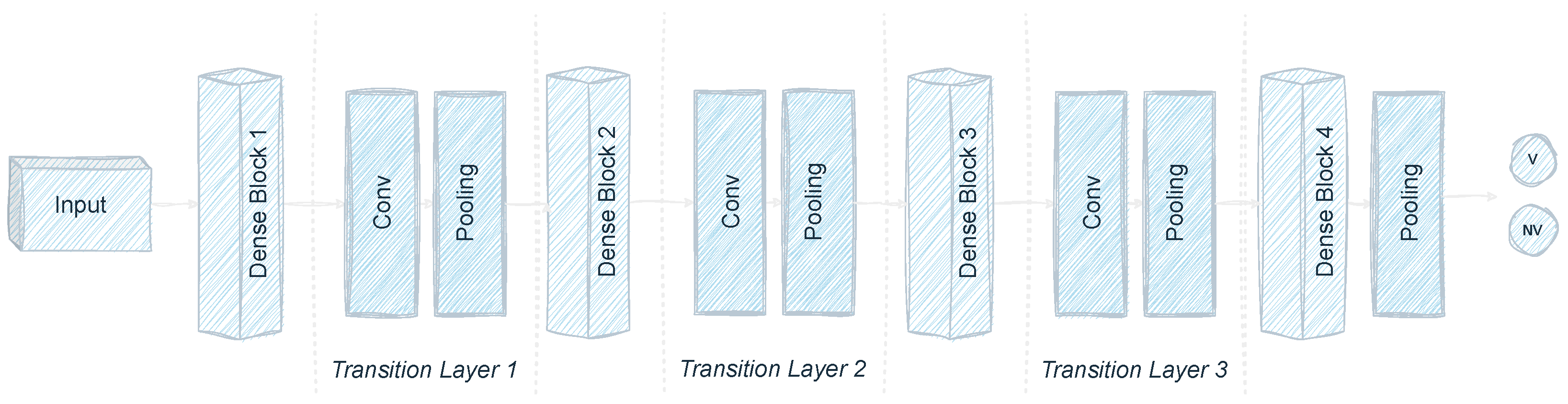

3.2. DenseNet121 Architecture and Model Configuration

The DenseNet121 architecture was chosen as our base model because of its proven effectiveness in complex image classification tasks and its ability to capture subtle visual patterns through dense inter-layer connections (Figure 1). Unlike traditional CNNs, DenseNet121 feeds every layer within a dense block with the feature maps of all preceding layers in that block, resulting in improved feature reuse (via concatenated feature maps), fewer parameters (20% fewer than ResNet variants), better gradient flow during backpropagation, and natural feature diversification through multi-scale feature aggregation.

The model implementation included several key customizations specific to the violence detection task. A transfer learning approach was used, starting with ImageNet pre-trained weights to utilize learned visual features. The original classification head of DenseNet121 was removed and replaced with a custom dense layer that has two output neurons, representing violence and non-violence classes. A softmax activation function was applied to generate normalized class probabilities.
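To make the replacement head concrete, the following NumPy sketch computes its forward pass on DenseNet121's final feature map, which is 7 × 7 × 1024 for a 224 × 224 input. Global average pooling before the dense layer is an assumption (the layer-by-layer details are in Table 3). The weights `W` and `b` stand in for trained parameters, and dropout at rate 0.3 is included as described in the training configuration.

```python
import numpy as np

FEATURE_MAP_SHAPE = (7, 7, 1024)  # DenseNet121 output for a 224x224 input
NUM_CLASSES = 2                   # violence, non-violence
DROPOUT_RATE = 0.3


def softmax(logits):
    """Row-wise softmax; subtracting the row max keeps exp() numerically stable."""
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def head_forward(feature_maps, W, b, training=False, rng=None):
    """Global average pooling -> (train-time only) dropout -> dense(2) -> softmax."""
    pooled = feature_maps.mean(axis=(1, 2))              # (batch, 1024)
    if training:
        keep = rng.random(pooled.shape) >= DROPOUT_RATE  # inverted dropout mask
        pooled = pooled * keep / (1.0 - DROPOUT_RATE)
    return softmax(pooled @ W + b)                       # (batch, 2) class probabilities
```

At inference time the head is deterministic and each row of the output sums to 1, which is what allows the class probabilities to be read directly as confidence scores later in the method.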

The training used the Adam optimizer with an initial learning rate of 0.001, chosen for its adaptive gradient properties. To improve convergence, the learning rate was reduced automatically whenever validation performance stagnated. The loss function was categorical cross-entropy, suitable for the two-class probabilistic output. Additionally, a dropout layer with a rate of 0.3 was placed before the final classification layer to reduce overfitting and improve generalization. The complete layer-by-layer architecture, including all dimensional transformations introduced during customization, is presented in Table 3.
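The loss and the stagnation-based learning-rate reduction can be sketched in plain Python as follows. The `factor`, `patience`, and `min_lr` values are hypothetical, since the text only states the initial rate of 0.001. Keras provides a comparable `tf.keras.callbacks.ReduceLROnPlateau` callback, but this version is illustrative only and omits refinements such as cooldown periods and minimum-improvement deltas.

```python
import math


def categorical_cross_entropy(y_true, y_pred, eps=1e-7):
    """Mean cross-entropy between one-hot labels and predicted class probabilities."""
    total = 0.0
    for t_row, p_row in zip(y_true, y_pred):
        # Clip probabilities away from 0 and 1 so log() stays finite.
        total -= sum(t * math.log(min(max(p, eps), 1.0 - eps)) for t, p in zip(t_row, p_row))
    return total / len(y_true)


class PlateauScheduler:
    """Reduce the learning rate by `factor` after `patience` consecutive epochs
    without improvement in validation loss (factor/patience/min_lr hypothetical)."""

    def __init__(self, lr=1e-3, factor=0.5, patience=3, min_lr=1e-6):
        self.lr, self.factor, self.patience, self.min_lr = lr, factor, patience, min_lr
        self.best = math.inf
        self.wait = 0

    def step(self, val_loss):
        """Record one epoch's validation loss and return the learning rate to use next."""
        if val_loss < self.best:
            self.best, self.wait = val_loss, 0
        else:
            self.wait += 1
            if self.wait >= self.patience:
                self.lr = max(self.lr * self.factor, self.min_lr)
                self.wait = 0
        return self.lr
```

Consider the validation losses 0.9, 0.8, 0.8, 0.81, 0.82, 0.79. The rate stays at 0.001 for the first four epochs, drops to 0.0005 after the third epoch without improvement, and remains there when the loss improves again.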

All experiments were conducted on a workstation equipped with an NVIDIA RTX 4060 GPU (8 GB VRAM) and 48 GB of system RAM, running Windows 11 Pro. This hardware configuration provided sufficient computational resources for training multiple deep learning models efficiently.

The software stack was built around Python 3.10.16, with core deep learning functionality provided by TensorFlow 2.10.0 and Keras 2.10.0. GPU acceleration was enabled through CUDA 11.2 and cuDNN 8.1, ensuring compatibility and optimized performance. For numerical operations, NumPy 1.26.4 and SciPy 1.15.1 were utilized, while TensorBoard 2.10.1 and Matplotlib 3.10.0 facilitated real-time visualization and analysis of results.

This configuration enabled efficient training and evaluation of multiple model variants, with the GPU’s tensor cores delivering up to 4× speedup over CPU-only execution for batch sizes of up to 64 images. Moreover, the standardized software environment contributed to the reproducibility and consistency of results across experimental runs.

3.3. Model Performance Metrics

To evaluate the effectiveness of our proposal, we employed both confusion matrix analysis (Table 4) and standard classification metrics, including precision, recall, F1-Score, and g-mean. These metrics provide complementary perspectives on model performance across both violent and non-violent classes.

From the confusion matrix (Table 4), four key performance metrics were calculated. Precision (Equation (1)) indicates how accurately violence is identified, measuring the proportion of correctly predicted violent cases among all cases predicted as violent. Recall (Equation (2)) assesses the model's ability to detect violent events, reflecting coverage within the positive class. The F1-Score (Equation (3)) offers a balanced evaluation by combining precision and recall into their harmonic mean, which is especially useful in imbalanced classification settings. Lastly, the geometric mean (g-mean, Equation (4)) summarizes overall performance by rewarding balanced accuracy on both classes, ensuring that neither class is disproportionately favored.
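For reference, the standard forms of these four metrics are given below, with violence as the positive class and TP, FP, TN, and FN read from the confusion matrix. We assume the paper follows these common definitions, in particular g-mean as the geometric mean of recall (sensitivity) and specificity.

```latex
\begin{align}
\text{Precision} &= \frac{TP}{TP + FP} \tag{1}\\
\text{Recall} &= \frac{TP}{TP + FN} \tag{2}\\
F_1 &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} \tag{3}\\
g\text{-mean} &= \sqrt{\frac{TP}{TP + FN} \cdot \frac{TN}{TN + FP}} \tag{4}
\end{align}
```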

These metrics collectively address the key requirements of violence detection systems: high true positive rates (minimizing missed violence) while maintaining low false positives (avoiding unnecessary alerts). The g-mean proves especially relevant for security applications where both over-reporting and under-reporting carry significant consequences.
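A minimal sketch that computes the four metrics from confusion-matrix counts, assuming the standard definitions with violence as the positive class (the function name is illustrative):

```python
import math


def classification_metrics(tp, fp, tn, fn):
    """Precision, recall, F1-Score, and g-mean with violence as the positive class."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)            # sensitivity / true positive rate
    specificity = tn / (tn + fp)       # true negative rate
    f1 = 2 * precision * recall / (precision + recall)
    g_mean = math.sqrt(recall * specificity)
    return {"precision": precision, "recall": recall, "f1": f1, "g_mean": g_mean}
```

Consider the single confusion matrix in Section 4.1 with 1600 true positives, 32 false positives, 2168 true negatives, and 600 false negatives. It yields precision ≈ 0.980, recall ≈ 0.727, F1-Score ≈ 0.835, and g-mean ≈ 0.847. The g-mean falls well below the precision because of the missed violent cases. The sketch does not guard against empty denominators, which would need handling for degenerate models.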

3.4. Approach for Sample Selection: Safe, Average, and Border Subsets

This subsection details our methodology for selecting relevant samples based on the prediction confidence scores produced by the neural network. Inspired by active learning principles, we adapt these concepts to deep learning for violence detection, introducing three specialized data subsets: Safe, Average, and Border.

The core idea is to identify specialized subsets of the training data based on prediction confidence levels, reflecting different degrees of model certainty. Rather than forming a strict partition, these subsets are defined by overlapping confidence intervals, so that the utility of samples in each reliability regime can be assessed independently.

A confidence threshold is used to define the specialized datasets. The Safe subset includes samples with high-confidence predictions at either extreme of the probability range, that is, predictions close to 0 or to 1. These represent clear-cut cases in which the model is confident in its prediction, corresponding to easily classifiable examples. Note that the probabilities always lie within [0, 1].

The Border subset, in contrast, captures the samples with maximum uncertainty, whose predicted probabilities fall in a narrow band defined symmetrically around the decision boundary.

These lie near the decision boundary, where the model assigns nearly equal probabilities to both classes, making them the most ambiguous and potentially informative for learning.

Finally, the Average subset comprises samples of intermediate confidence on either side of the decision boundary. This group includes moderately certain predictions, excluding only the most extreme high-confidence cases.

Owing to the choice of threshold, these subsets may overlap; for instance, a sample near the threshold may belong to both Safe and Border. However, each subset is used independently for retraining, so the overlap does not affect the evaluation.
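The selection mechanics can be sketched as interval tests on each sample's violent-class probability. This is a sketch under explicit assumptions. The Border interval uses the 0.4 and 0.6 boundaries cited from prior work in the threshold discussion, which is our reading of which subset they bound. The Safe and Average bounds are hypothetical placeholders, not the paper's threshold. The class-balancing rule (random undersampling) is also an assumption, since the text only states that balance is maintained.

```python
import random
from collections import defaultdict

# Each subset is a union of closed intervals on p = P(violent | x).
# "Border" uses the 0.4/0.6 boundaries cited in the text; the "Safe" and
# "Average" bounds are HYPOTHETICAL placeholders for illustration only.
SUBSET_INTERVALS = {
    "Safe": [(0.0, 0.1), (0.9, 1.0)],
    "Border": [(0.4, 0.6)],
    "Average": [(0.1, 0.4), (0.6, 0.9)],
}


def select_subsets(probs, intervals=SUBSET_INTERVALS):
    """Map each subset name to the indices whose probability falls in any of its
    intervals. Subsets are built independently, so an index may appear in several."""
    return {
        name: [i for i, p in enumerate(probs) if any(lo <= p <= hi for lo, hi in spans)]
        for name, spans in intervals.items()
    }


def balance_by_class(indices, labels, seed=0):
    """Randomly undersample the larger class so both classes have equal counts
    (an assumed balancing rule)."""
    by_class = defaultdict(list)
    for i in indices:
        by_class[labels[i]].append(i)
    if len(by_class) < 2:
        return []  # a one-class subset cannot be balanced
    n = min(len(members) for members in by_class.values())
    rng = random.Random(seed)
    return sorted(i for members in by_class.values() for i in rng.sample(members, n))
```

With these placeholder bounds, a sample with p = 0.4 lands in both Border and Average, which illustrates the overlap described above. Substituting the actual threshold changes only `SUBSET_INTERVALS`.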

The threshold was determined empirically by analyzing the distribution of confidence scores from the initial model trained on DS1 (6000 balanced images). After experimentation, we selected a value that yielded meaningful, well-populated subsets while maintaining class balance. This choice aligns with prior work on confidence-based sampling [10,11], where thresholds in this range have been shown to capture high- and low-confidence predictions effectively in binary classification tasks, in particular the use of 0.4 and 0.6 as the boundaries of the Border region.
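Steps 6 to 8 of Algorithm 1 compute C = max(P, axis=1) and its mean and standard deviation. The NumPy sketch below performs that analysis and adds two illustrative extras that are not taken from the paper: a histogram of C, and a per-class count of samples inside a candidate probability band. These offer one way to check whether a threshold produces well-populated, class-balanced subsets.

```python
import numpy as np


def confidence_summary(P, bins=10):
    """P: (n, 2) softmax outputs. Summarizes the maximum-class confidence
    C = max(P, axis=1), which lies in [0.5, 1] for two classes."""
    C = P.max(axis=1)
    counts, edges = np.histogram(C, bins=bins, range=(0.5, 1.0))
    return {"mean": float(C.mean()), "std": float(C.std()), "counts": counts, "edges": edges}


def band_population(p_violent, labels, lo, hi):
    """Per-class number of samples whose violent-class probability lies in [lo, hi];
    useful for checking that a candidate band is well populated and class balanced."""
    in_band = (p_violent >= lo) & (p_violent <= hi)
    return {int(c): int(np.sum(in_band & (labels == c))) for c in np.unique(labels)}
```

For two classes, C never falls below 0.5, so the histogram range starts there. `np.std` defaults to the population standard deviation, and the text does not say which variant was used.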

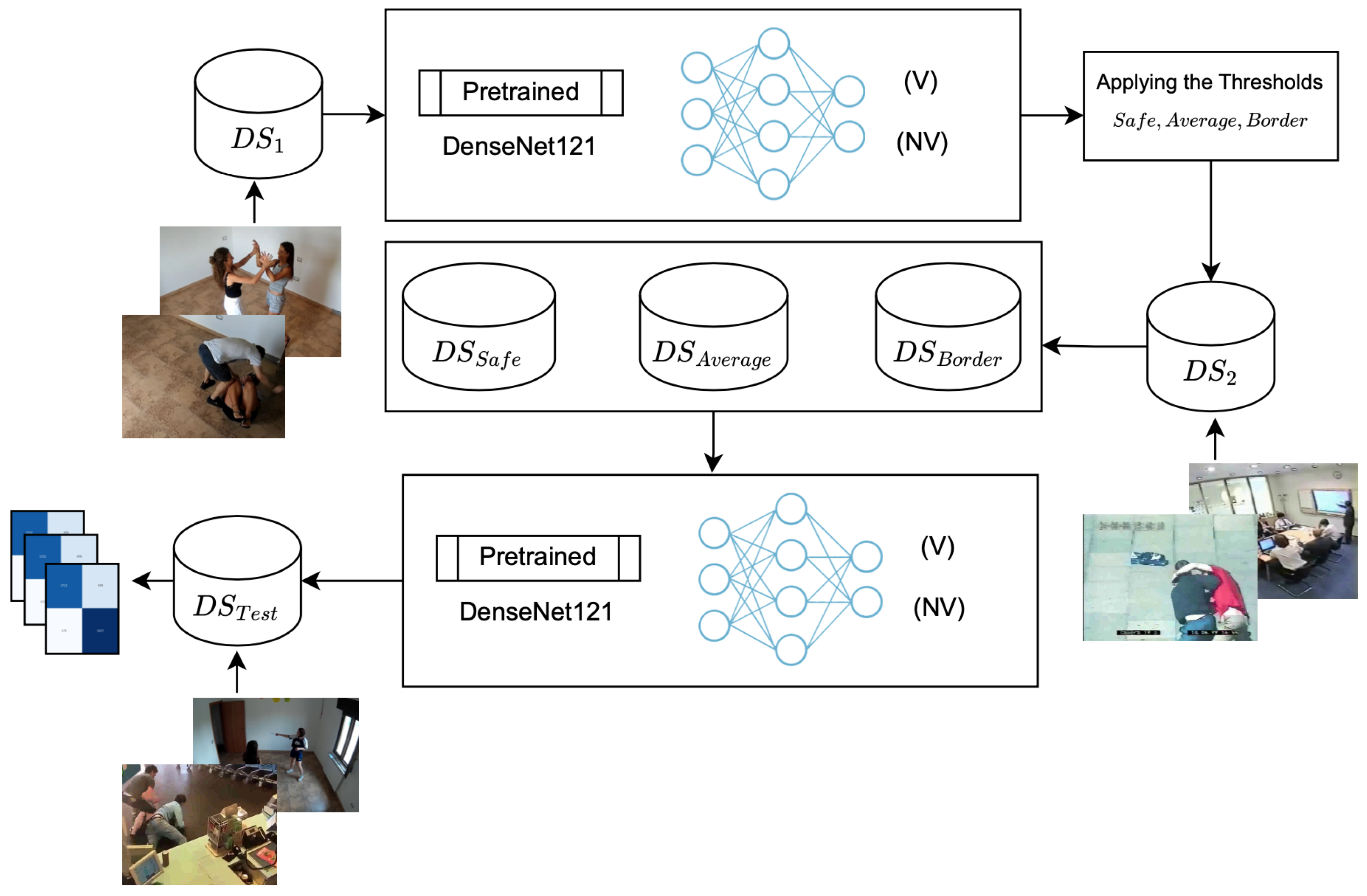

The methodology unfolds in three systematic phases, visualized in Figure 2. The initial phase establishes the confidence threshold by evaluating the base model on DS1, our curated dataset of 6000 balanced images. This calibration step adapts the threshold values to the specific characteristics of violence detection in visual data.

Phase two applies these thresholds to DS2 (5600 images) for subset identification, generating the Safe, Average, and Border subsets, each contained in DS2. All subsets maintain class balance while focusing on distinct confidence regions.

In the third phase, the initial model is retrained separately on each subset obtained in the previous phase, as well as on DS2 (the entire dataset). This compares the classifier's effectiveness when trained on partial data (the Safe, Border, and Average subsets) with its effectiveness when trained on the full dataset (DS2). The test set is then used to assess the impact of training on Safe, Border, and Average samples. The datasets used (DS1, DS2, and the test set) are mutually exclusive: no image appears in more than one of them. The complete procedure of the proposed method is detailed in Algorithm 1.

| Algorithm 1: Confidence Segmentation for Violence Detection Optimization |
|---|
| Input: base model (DenseNet121), initial dataset (6000 images), test dataset (4400 images), confidence threshold |
| Output: specialized models and comparative analysis |
| 1: // Phase 0: experimental reproducibility; for each random seed do |
| 2: // Phase 1: base model training with balanced dataset |
| 3: Train the base model on DS1 = {3000 V/3000 NV} with an 80/20 stratified split |
| 4: Augment: rotation, flipping, brightness; dropout = 0.3; early stopping (10 epochs) |
| 5: // Phase 2: confidence analysis on test dataset |
| 6: P ← base model.predict() // probabilistic predictions in [0, 1] |
| 7: C ← max(P, axis=1) // maximum confidence extraction |
| 8: Calculate mean(C) and std(C) |
| 9: // Phase 3: segmentation, specialization, and evaluation |
| 10: for each type ∈ {Safe, Border, Average} do |
| 11: if the sample's confidence satisfies the Safe condition, add it to Safe |
| 12: if the sample's confidence satisfies the Border condition, add it to Border |
| 13: if the sample's confidence satisfies the Average condition, add it to Average |
| 14: Organize each subset by class |
| 15: for each subset S do |
| 16: M_S ← clone(base model) |
| 17: Train M_S on S with the same augmentations, dropout = 0.3, early stopping |
| 18: Evaluate M_S on the test set = {2200 V/2200 NV} |
| 19: end |
| 20: for each model M_S do |
| 21: Compute precision, recall, F1-Score, g-mean |
| 22: Analyze confusion matrix |
| 23: end |
| 24: return specialized models and comparative report |

The training protocol was designed to optimize the effectiveness of each specialized subset and to ensure a fair and consistent comparison of experimental results. For each subset (Safe, Border, and Average), as well as for the entire dataset DS2, a dedicated DenseNet121 model was trained. All models shared the same base architecture but were fine-tuned separately on their respective confidence segments.

The standardized protocol included several components: initialization with the pre-trained weights of the base model to leverage prior knowledge, a stratified 80%/20% training/validation split, and early stopping based on validation loss to prevent overfitting. The same data augmentation techniques (random rotations, horizontal scaling, and brightness adjustments) and dropout regularization at a rate of 0.3 were applied throughout. Training was monitored using precision, recall, F1-Score, and g-mean.

To ensure experimental reliability, each setup was run six times independently, using different random seeds for weight initialization and data splitting. This method allowed for evaluating the performance and the consistency of each training approach.
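The repeated-run protocol can be sketched as a small driver that runs every configuration once per seed and reports each metric as mean and standard deviation. `train_and_evaluate` is a hypothetical callable standing in for the full train/evaluate cycle. The sample standard deviation is an assumption, since the text does not say which variant Table 5 reports.

```python
import statistics


def run_repeated(train_and_evaluate, configs, seeds=range(6)):
    """Train/evaluate every configuration once per seed and summarize each metric
    as (mean, sample standard deviation) across runs.

    train_and_evaluate(config, seed) -> {metric_name: value}  (hypothetical callable)
    """
    report = {}
    for config in configs:
        runs = [train_and_evaluate(config, seed) for seed in seeds]
        report[config] = {}
        for metric in runs[0]:
            values = [run[metric] for run in runs]
            report[config][metric] = (statistics.mean(values), statistics.stdev(values))
    return report


def format_cell(mean, std):
    """Render a result in the 'mean (std)' style used in Table 5."""
    return f"{mean:.2f} ({std:.2f})"
```

The seed should control both weight initialization and the data split, as the text describes, so that the spread across runs reflects both sources of randomness.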

4. Results

This section presents experimental findings on confidence-threshold-based sample selection (Safe, Border, and Average subsets) for physical violence detection using DenseNet121, a deep convolutional network with dense connections known for high accuracy in image classification. The results demonstrate how strategic sample selection impacts two key criteria: classifier performance metrics and training set size reduction.

Also, to evaluate the generalizability of our confidence-based subset selection strategy, we conducted experiments on other architectures: MobileNetV2, a lightweight and efficient CNN designed for mobile and edge devices, prioritizing speed and low memory usage; and Vision Transformer (ViT), a Transformer-based architecture that models global image structure through self-attention, representing a different inductive bias from CNNs.

For each model, we applied the same confidence thresholds to extract the Safe, Border, and Average subsets from DS2. Each subset was used independently to retrain the model. This allows us to assess how the effect of sample selection varies across models with different capacities, inductive biases, and computational demands.

Table 5 compares the effectiveness of each subset against the full-dataset baseline (DS2) using four performance metrics: precision, recall, F1-Score, and g-mean. The number of training samples and epochs is also reported for each configuration. All values represent the mean over six independent training runs, with the standard deviation in parentheses. The standard deviation across runs is consistently low, particularly for g-mean, which indicates high reproducibility and suggests that the observed trends are stable rather than artifacts of random initialization.

4.1. Performance Evaluation on DenseNet121

We begin our evaluation using DenseNet121 as the reference architecture to assess the impact of confidence-based subset selection on model performance and training efficiency. This CNN-based model was selected due to its proven effectiveness in image classification tasks and its widespread use in prior work on violence detection. The Safe, Border, and Average subsets were independently used to retrain the model, allowing for a controlled analysis of how sample relevance, as defined by prediction confidence, affects learning dynamics.

The full dataset (DS2) achieved near-perfect classification (all metrics approximately 0.99) with 5600 images and convergence in 20 epochs, confirming DenseNet121's inherent capability for violence detection. This result serves as the empirical upper bound for subset comparisons.

The Safe subset exhibited high precision (0.97) but significantly reduced recall (0.73), yielding moderate F1-Score (0.84) and g-mean (0.89) values. While sample reduction was modest (12% smaller than DS2), its 34-epoch convergence suggests efficient learning for unambiguous cases. The precision–recall trade-off indicates a bias toward minimizing false alarms at the cost of missed detections.

The Border subset showed high computational efficiency, achieving 97.2% sample reduction, but had lower overall performance, with an F1-Score of 0.82. Its balanced precision (0.79) and recall (0.83) indicate consistent difficulty in classifying ambiguous samples near the decision boundary. Although it needed 35 epochs, the small data volume makes this approach suitable for resource-limited situations.

Finally, the Average subset achieved the best balance among the subsets, with strong metrics (F1-Score = 0.88, g-mean = 0.93) and an 80% data reduction. The 44-epoch training duration, though longer than other subsets, remains practical given the significantly reduced computational load. This subset’s performance indicates that moderately challenging samples provide optimal information density for efficient model training.

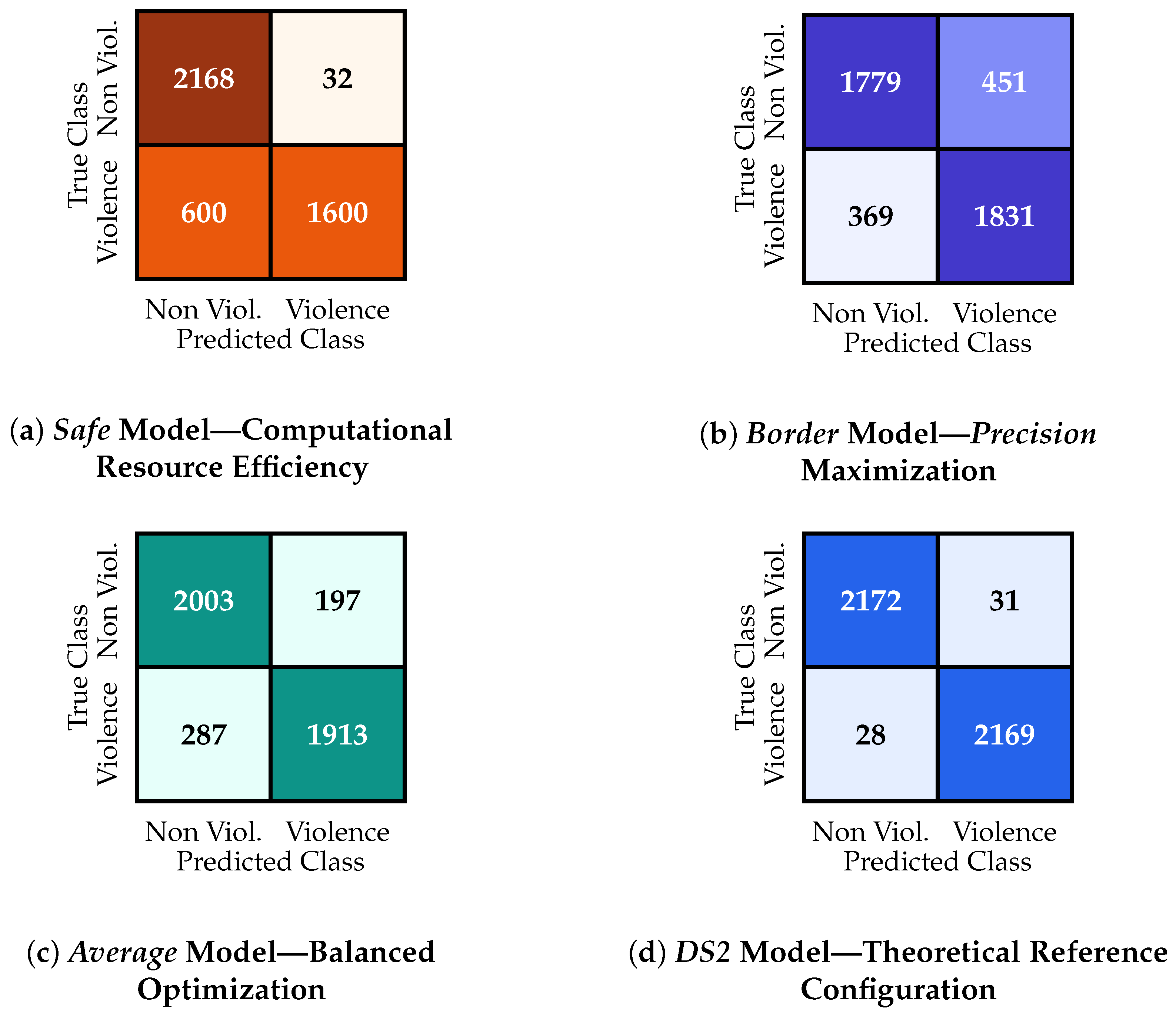

To examine these results in more detail, the confusion matrices for each scenario are shown in Figure 3. This figure compares the classifier's performance across configurations and highlights the distinct classification behavior that underlies the findings for each subset.

Starting with the Border subset, the model produces 1568 true negatives and 1833 true positives, along with 632 false positives and 367 false negatives. This error pattern favors recall over precision: the higher number of false positives is offset by comparatively few false negatives, which is an expected trade-off in confidence-based segmentation.

In contrast, the Safe subset displays highly specific behavior, with 2168 true negatives and 1600 true positives. It yields very few false positives (32), but at the cost of a substantial increase in false negatives (600), which reduces sensitivity in detecting violent events (the positive class).

The Average subset shows the most balanced performance, with 1815 true negatives and 1858 true positives and errors spread proportionally (385 false positives and 342 false negatives). This symmetry in classification errors suggests that the subset is suitable for general-purpose uses, especially those requiring a trade-off between precision and recall, such as real-time physical violence detection systems.

The complete dataset (DS2) acts as the benchmark setup, producing nearly optimal results with 2172 true negatives and 2169 true positives, along with minimal error rates (31 false positives and 28 false negatives). These findings verify the technical strength of the DenseNet121 architecture and confirm the reliability of the evaluation set.

Overall, the observed patterns confirm that the Average subset provides the best balance between false positives and false negatives, making it a strong option for practical use, especially given its substantial reduction in dataset size. The Safe subset, although highly precise for the positive class, misses many violent cases and offers limited data reduction. Conversely, the Border subset maximizes computational efficiency at the cost of overall classification performance. These distinctions indicate that confidence-based sample selection is a promising approach for enhancing neural model training by aligning dataset characteristics with task-specific goals.

In addition to performance, we evaluated the computational efficiency of each subset. As shown in Table 5, training on smaller subsets significantly reduces the total time despite requiring more epochs. For instance, the Average subset achieves an F1-Score of 0.89 in just 7.71 min, compared to 9.76 min for the full dataset (DS2), representing a reduction of about 21% in training time. Even more striking, the Border subset completes training in only 1.74 min (82% reduction), making it highly suitable for rapid prototyping or resource-constrained environments.

4.2. Cross-Architecture Evaluation

To assess the generalizability of our confidence-based subset selection strategy, we extended the evaluation to multiple deep learning architectures with different inductive biases and computational profiles. This section presents the performance and efficiency results for MobileNetV2 and ViT, enabling a comparative analysis with the reference DenseNet121 model.

MobileNetV2 demonstrated exceptional computational efficiency while maintaining competitive performance. As shown in Table 5, the model achieves an F1-Score of 0.77 on the Border subset (104 samples, 2% of DS2) in just 0.08 min of total training time, the fastest among all models. It also reaches an F1-Score of 0.79 on the Average subset (3490 samples) in 3 min, despite requiring 36 epochs. Finally, it achieves a strong baseline performance (F1 = 0.98) on the full dataset (DS2), confirming its suitability for this task.

The extremely low per-epoch training time (0.004 min for Border, 0.08 min for Average) supports MobileNetV2’s suitability for edge deployment and real-time applications. Notably, on the Safe subset MobileNetV2 underperforms relative to DenseNet121 (F1 = 0.81), suggesting that high-confidence samples may not transfer as effectively to this lightweight architecture, which is an interesting direction for future analysis.

As shown in Table 5, ViT achieves moderate performance on the full dataset (F1 = 0.88) but lags behind DenseNet121 (F1 = 0.99). This gap is consistent with the well-documented sample inefficiency of Transformers in low-data regimes [23]. Vision Transformers rely heavily on large-scale pretraining and global attention mechanisms, and they struggle to generalize when training data is limited, which is a common constraint in real-world violence detection scenarios.

Nevertheless, the subset-based models retain reasonable performance while using far less data. The Average subset achieves F1 = 0.74 with only 47% of the training data, and the Border subset reaches F1 = 0.73 using just 13% of the samples. Importantly, training time is reduced by up to 97% (e.g., Border: 0.66 min vs. 25.5 min), highlighting that even for architectures with lower absolute performance, confidence-based selection significantly improves efficiency.

This highlights a key strength of our methodology: it does not depend on achieving peak model accuracy but instead enables faster, data-efficient training across diverse architectural families, including those less suited to the task.

In this sense, these findings indicate that our confidence-based selection method is not limited to CNNs but can be applied to diverse architectures to improve training efficiency. While performance varies by model, the relative gains from subset selection remain consistent, supporting the robustness of our approach. We note that the ViT and MobileNetV2 results serve only as a cross-architecture check, and further work is needed to ensure a fair comparison, since our main goal is to evaluate the well-established case of a proven architecture such as DenseNet121.

Training efficiency was also assessed for MobileNetV2 and ViT. For MobileNetV2, although the specialized subsets require more epochs to converge, they yield exceptional time savings owing to the model’s extremely low per-epoch cost. The Border subset reduces the total training time from 11.30 min (full dataset) to just 0.08 min, a 99.3% reduction, while achieving an F1-Score of 0.77. Similarly, the Average subset completes training in only 3.03 min (73% faster than full training) with an F1-Score of 0.79. These results show that confidence-based selection enables fast training cycles, making MobileNetV2 a highly suitable candidate for real-time, edge-based, or resource-constrained applications.

On the other hand, despite ViT’s higher computational cost per epoch, the specialized subsets lead to dramatic time savings for this architecture as well. The Border subset reduces the total training time from 25.5 min (full dataset) to only 0.66 min (97% reduction) while maintaining an F1-Score of 0.73. Similarly, the Average subset trains in 18.2 min (29% faster than full training), showing that confidence-based selection is effective even for architectures with higher baseline costs. This further reinforces the practical value of our approach: it delivers substantial efficiency gains across diverse deployment scenarios.
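The percentage reductions quoted in this section follow from the ratio of subset to full-dataset training time, 1 - t_subset / t_full. The minimal Python sketch below recomputes them from the total training times stated in the text, taking 25.5 min as the full-dataset ViT time from the preceding paragraph; the dictionary layout and function names are illustrative only.

```python
# Total training times in minutes, as stated in the text for each architecture.
TRAINING_MINUTES = {
    "DenseNet121": {"DS2": 9.76, "Average": 7.71, "Border": 1.74},
    "MobileNetV2": {"DS2": 11.30, "Average": 3.03, "Border": 0.08},
    "ViT": {"DS2": 25.5, "Average": 18.2, "Border": 0.66},
}


def time_reduction(full_minutes: float, subset_minutes: float) -> float:
    """Relative reduction in total training time, as a percentage."""
    return 100.0 * (1.0 - subset_minutes / full_minutes)


def reduction_table(times=TRAINING_MINUTES):
    """Percentage reduction of every subset versus the full dataset (DS2)."""
    return {
        model: {
            subset: round(time_reduction(row["DS2"], minutes), 1)
            for subset, minutes in row.items()
            if subset != "DS2"
        }
        for model, row in times.items()
    }


if __name__ == "__main__":
    for model, row in reduction_table().items():
        print(model, row)
```

Rounded to one decimal, this gives 21.0% and 82.2% for DenseNet121 (Average, Border), 73.2% and 99.3% for MobileNetV2, and 28.6% and 97.4% for ViT, close to the rounded figures reported in the text.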

It can be observed from the cross-model comparison that while absolute performance and computational demands vary significantly across architectures, the relative benefits of confidence-based subset selection remain consistent. MobileNetV2 achieves the fastest training times (as low as 0.08 min) for the Border subset, making it ideal for real-time or edge-based deployment. DenseNet121 delivers the highest accuracy, particularly on the full dataset, at the cost of higher training time. ViT, though computationally heavier and less accurate in this low-data regime, still benefits substantially from subset-based training, reducing the total time by up to 97%.

This spectrum of behaviors supports the robustness and adaptability of our approach. Irrespective of whether the primary objective is computational efficiency, predictive accuracy, or architectural preference, confidence-based subset selection consistently enhances training efficiency. Thus, the proposed method can be applied across different model architectures, enabling researchers and practitioners to address specific operational requirements while retaining the benefits of strategic data utilization.

Finally, the results in Table 5 reveal an interesting interplay between structural symmetry and functional asymmetry in sample utility. The Border subset, although symmetrically defined around the decision boundary, exhibits asymmetric practical utility: it enables ultra-fast training (e.g., 0.08 min with MobileNetV2) but yields only moderate accuracy. Conversely, the Average subset, despite being defined on an asymmetric confidence band, achieves the most balanced trade-off between performance and efficiency across all architectures.

4.3. From Active Learning to Training Efficiency: Evolution of Confidence-Based Selection

Our approach is inspired by the confidence-based sample selection strategies proposed in active learning, particularly the work of Alejo et al. [10]. We also built on the ideas of Abundez et al. [11], which used a threshold-based criterion during training. However, we extend these frameworks in both scope and application to better suit deep learning in visual recognition tasks.

Abundez et al. [11] introduced a threshold-based method to identify ambiguous samples using a single threshold parameter, primarily aimed at reducing labeling effort through active querying. In contrast, Alejo et al. [10] proposed a three-way partitioning into Safe, Border, and Average subsets, but applied it to tabular data using traditional machine learning models such as Multi-Layer Perceptrons (with one hidden layer). Their method further relied on Gaussian kernel functions to smooth and analyze prediction differences across model ensembles, increasing computational complexity.

Our work differs in several key aspects. First, we operate in the domain of image classification, specifically physical violence detection, where raw pixel data demands deep feature extraction. We leverage the DenseNet121 architecture to generate reliable confidence scores directly from the softmax output, eliminating the need for ensemble-based uncertainty estimation or auxiliary smoothing functions. This results in a simpler, faster, and more scalable segmentation process.
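The softmax-based segmentation described above can be sketched in a few lines. The following Python example is a hypothetical illustration, not the exact procedure used in this study: it assumes a binary softmax output with the violence class in column 1, defines Border as a symmetric band around the decision boundary (p = 0.5), Safe as predictions whose top-class probability reaches a threshold, and Average as the remainder. The thresholds border_delta and safe_threshold are placeholder values, and this simplified Average band is symmetric, unlike the asymmetric band used in the study.

```python
import numpy as np


def partition_by_confidence(probs, border_delta=0.1, safe_threshold=0.9):
    """Split sample indices into Safe, Average, and Border subsets.

    probs: array-like of shape (n_samples, 2) holding softmax outputs,
        with column 1 assumed to be the violence class.
    border_delta, safe_threshold: hypothetical thresholds chosen for
        illustration only; they are not the values used in the study.
    """
    probs = np.asarray(probs, dtype=float)
    if probs.ndim != 2 or probs.shape[1] != 2:
        raise ValueError("expected an (n_samples, 2) array of softmax outputs")
    if 0.5 + border_delta >= safe_threshold:
        raise ValueError("Border and Safe bands must not overlap")

    p_violence = probs[:, 1]
    confidence = probs.max(axis=1)  # probability of the predicted class

    # Border: symmetric band around the decision boundary p = 0.5.
    border = np.abs(p_violence - 0.5) <= border_delta
    # Safe: high-confidence predictions for either class.
    safe = confidence >= safe_threshold
    # Average: moderate confidence, i.e. neither Border nor Safe.
    average = ~border & ~safe

    return {
        "Safe": np.flatnonzero(safe),
        "Average": np.flatnonzero(average),
        "Border": np.flatnonzero(border),
    }


if __name__ == "__main__":
    example = [[0.05, 0.95], [0.45, 0.55], [0.30, 0.70], [0.92, 0.08], [0.52, 0.48]]
    for name, idx in partition_by_confidence(example).items():
        print(name, idx.tolist())
```

With the placeholder thresholds, the five example vectors in the main block are assigned as Safe [0, 3], Average [2], and Border [1, 4]. Training on a subset then amounts to indexing the original dataset with the returned indices.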

Second, our objective diverges from active learning: rather than selecting samples for labeling, we investigate how specialized subsets defined by confidence thresholds impact model performance and training efficiency when used in isolation. In particular, we show that the Average subset achieves 89% of the F1-Score of the full dataset using only 20% of the training samples, highlighting its potential for efficient model training.

Thus, by adapting and simplifying prior confidence-based frameworks for modern deep learning pipelines, our approach enables a practical and effective strategy for data-centric model optimization.

Notably, the cross-architecture evaluation shows that the benefits of our method extend beyond a single model. Despite significant differences in architecture, inductive bias, and computational demands, from lightweight models such as MobileNetV2 to attention-based ViT, the relative gains in training efficiency and data utilization are consistently observed. This supports the generalization capability of our confidence-based selection strategy, positioning it as a flexible and scalable tool for improving deep learning pipelines.

5. Conclusions

This work presents an empirical study focused on assessing the effectiveness of selecting representative samples to enhance the training process of the DenseNet121 neural model for classifying physical violence in images. The approach relies on the model’s output confidence scores to divide the data into three subsets: Safe (high confidence), Average (moderate confidence), and Border (low confidence), which indicate different levels of prediction certainty. The Border subset is defined symmetrically around the decision boundary, reflecting a balanced treatment of uncertainty. In contrast, the Safe regions exhibit asymmetries in sample distribution and impact, as high-confidence predictions for violence and non-violence may not contribute equally to model efficiency.

Experimental results, obtained using the public datasets AIRTLab, RLVS, Pexels, and SCVD, demonstrate that confidence-based sample selection has great potential for reducing dataset size and improving computational efficiency. However, this efficiency gain often comes at the cost of decreased classification performance, especially in the Border subset. In contrast, the Safe subset does not provide a significant reduction in dataset size, and the classifier’s performance is still negatively impacted. Notably, the Average subset achieves a substantial reduction in dataset size while maintaining competitive classification performance, making it the most promising configuration and revealing a functional asymmetry between data volume and performance.

These findings support the hypothesis that training on moderate-confidence (Average) samples can yield more efficient learning without substantially sacrificing classification quality. Therefore, the proposed approach provides a practical strategy for enhancing the training process of DenseNet121 in binary violence classification tasks.

Additionally, the cross-architecture evaluation indicates that the benefits of confidence-based sample selection extend beyond DenseNet121. Despite differences in model design and computational demands, architectures such as MobileNetV2 and ViT exhibit consistent efficiency gains when trained on specialized subsets, particularly Average and Border. This suggests that our approach generalizes well and supports its use as a flexible, architecture-agnostic strategy for efficient deep learning in other settings.

Building on the findings of this study, several promising research directions emerge. One main path involves optimizing confidence thresholds using adaptive methods, such as meta-learning or reinforcement learning, to go beyond fixed empirical values and enable flexible, data-driven segmentation strategies. Another key direction is extending the proposed methodology to other neural architectures, including transformer-based models and multimodal systems that combine visual and auditory information. This would allow for a more thorough assessment of the framework’s applicability and robustness across different learning paradigms. Additionally, emphasis should be placed on real-world validation, especially through deployment in edge-computing environments for surveillance purposes. Such scenarios would provide valuable insights into the trade-offs among latency, computational cost, and detection accuracy under operational limits.

This work provides both a methodological foundation for confidence-based sample selection and empirical evidence of its effectiveness in violence detection tasks, particularly when utilizing the Average confidence regime, which strikes a balance between dataset reduction and classification performance. Thus, it demonstrates how controlled asymmetries in data selection can enhance learning efficiency.