1. Introduction

Marine plastic pollution is progressively infiltrating subsurface ecosystems, posing significant ecological and biosafety risks. Recent studies indicate that 68% of seabed plastic debris causes over 1 million marine organism mortalities annually, while microplastics (MPs) derived from its degradation have breached the human placental barrier [1]. Global plastic production has grown exponentially, surging from 1.5 million tons in the 1950s to nearly 199 million tons in 2025, with approximately 4.8–12.7 million tons of plastic waste entering the marine environment each year [2]. Conventional plastics gradually fragment in the marine environment into MPs and nanoplastics (NPs) with particle sizes smaller than 5 mm, which are extremely persistent in the environment and have high bioaccumulation potential [3].

Surface floating debris detection has advanced considerably through deep learning. The APM-YOLOv7 algorithm suppresses water-surface ripple interference through adaptive Canny edge detection (A Canny), whose dual thresholds are empirically set to 0.075 (low) and 0.175 (high), a high-to-low ratio of 7:3, to compute edge points more accurately [4]. The model introduces the PConv-ELAN module, inspired by FasterNet, which integrates lightweight partial convolution (PConv) into the YOLOv7 architecture by replacing the 3 × 3 convolutional layers in the original ELAN module. PConv significantly reduces the number of parameters by avoiding redundant computation while maintaining feature extraction capability. Together with the multi-scale gated attention (MGA) mechanism, the algorithm achieves an mAP@0.5 of 89.5% on a self-built surface litter dataset, and the recall for small trash targets improves by 11.82% [5]. Lightweight design is another important trend in water-surface detection. An improved YOLOv5 developed by a team from Shanghai Ocean University uses EfficientNetV2-S as the backbone network, combined with depthwise separable convolution, to compress the number of model parameters to 12% of the original structure. The algorithm achieves an mAP@0.5 of 84.9% on the Orca marine life dataset with an inference time of 5.9 ms/frame, which lays the foundation for deployment on mobile monitoring platforms [6]. For small target detection, an improved YOLOv5 algorithm integrates the CBAM module and the BiFPN architecture to enhance the model’s ability to perceive targets in low-contrast, noisy, and blurred images, boosting the recall precision to 89.84% on the WSODD dataset [7].

However, these advanced algorithms, optimized for surface environments, suffer significantly degraded detection performance in underwater environments. The core challenge stems from the complexity and degradation effects of underwater imaging. Absorption and scattering of light in the water column severely attenuate the visible bands: the red band (600–700 nm) fails completely at a depth of 5 m, and the attenuation coefficient of the blue band (450–495 nm) is as high as 0.2 m⁻¹. This selective attenuation destroys the color balance and natural symmetry of the spectral response, reducing image contrast and lowering the signal-to-noise ratio by more than 40%. At the same time, suspended particles and dissolved organic matter in the water column cause forward scattering, which blurs target edges and erases details, a clear symmetry-breaking in spatial structures [8]. This optical distortion makes it difficult for traditional detection algorithms based on RGB information to extract target features accurately, especially in turbid or deep water.

Biological attachment and texture confusion further increase the difficulty of identification. The surface of plastic trash that remains on the seafloor for a long time is gradually covered by algae and microbial films, forming a biological coating. This process changes the optical properties of the plastic surface, such as its reflectivity, masks its geometry, and increases the complexity of its texture. According to J-EDI (an underwater litter image dataset), the texture similarity between biofilm-covered plastic fragments and seabed corals and seaweeds exceeds 75%, which significantly reduces the accuracy of classifiers based on texture analysis. In addition, bio-attachment accelerates the embrittlement and fragmentation of plastics, producing more small target fragments with sizes below 50 pixels, which are difficult to distinguish in sonar or optical images.

The composition of marine litter is also highly uneven: plastic packaging accounts for as much as 41.7%, while bio-attached litter (e.g., shellfish-attached plastic fragments) accounts for only 2.3%. This long-tailed distribution causes the model to over-focus on the major categories and ignore rare but ecologically risky ones [9]. The problem is particularly acute in underwater scenarios with limited training data: the model may accurately detect common plastic bottles and bags but miss plastic debris covered in sea squirts or discarded fishing nets, which are sources of entanglement and microplastic release that pose the greatest threat to marine life. The degradation of imaging quality further exacerbates the difficulty of recognizing rare and complex forms of litter.
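To put the quoted blue-band attenuation coefficient (0.2 m⁻¹) in perspective, the short sketch below evaluates how much light would remain after a given path length. It assumes a simple Beer–Lambert exponential decay, which is a standard simplification and not a model stated in this paper; the function name `transmitted_fraction` is hypothetical.

```python
import math

def transmitted_fraction(attenuation_per_m: float, path_m: float) -> float:
    """Fraction of light left after path_m metres, assuming Beer-Lambert decay."""
    return math.exp(-attenuation_per_m * path_m)

BLUE_ATTENUATION = 0.2  # m^-1, the blue-band value quoted in the text

for depth in (1, 5, 10):
    fraction = transmitted_fraction(BLUE_ATTENUATION, depth)
    print(f"{depth:>2} m path: {fraction:.3f} of the blue light remains")
```

Under this assumed model, roughly 37% of the blue light survives a 5 m path and about 14% survives a 10 m path, which illustrates why contrast and signal-to-noise ratio fall quickly with distance.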

Notwithstanding these endeavors, a critical appraisal of the literature reveals that prevailing approaches often address the symptoms of underwater degradation in isolation rather than the root cause in a holistic manner. This leads to three persistent and interconnected research gaps that fundamentally limit deployment in real-world scenarios: (1) Static enhancement strategies are difficult to adapt to dynamic underwater degradation, which breaks the natural symmetry of terrestrial vision models; (2) Higher-order semantic associations of dense targets are insufficiently modeled, ignoring the structural symmetry present in man-made objects; and (3) It is profoundly challenging to combine both lightweight design and high accuracy under complex degradation conditions while retaining symmetry in computational graphs for efficient inference.

Degradation effects in underwater environments, such as insufficient lighting, color distortion, contrast degradation, and edge blurring, lead to serious degradation of image quality, which fundamentally restricts the performance of target detection models relying on raw image information underwater [10]. These effects break the inherent symmetry assumptions standard in computer vision models trained on terrestrial imagery. Traditional preprocessing methods (e.g., histogram equalization, color correction) are difficult to adapt to complex underwater degradation patterns, while mainstream detection algorithms (including Faster R-CNN [11,12] and the YOLO series [13,14,15,16,17]) are significantly less robust under low illumination, blurring, and dense occlusion scenes. In order to break through this technical bottleneck, this paper proposes a high-precision underwater garbage detection method based on the YOLOv13 framework, IFEM-YOLOv13, whose core innovation lies in the construction of an end-to-end degradation-aware learning system that explicitly models and compensates for symmetry-breaking phenomena [18].

The main contributions of this paper include:

(1) Proposing a feature quality reconstruction module (IFEM) that can be embedded into a deep learning framework, establishing a physically inspired feature recovery pathway to effectively compensate for underwater optical degradation effects through a learnable sharpening filter, pixel-level filter, and disparity enhancement co-optimization, designed with symmetry principles to ensure balanced enhancement across color channels and spatial domains.

(2) Designing a multi-scale attention fusion mechanism and integrating a channel-space dual-attention guided feature optimization strategy to significantly improve the feature discrimination ability of small and biologically attached targets, leveraging scale symmetry in natural and artificial objects.

(3) Developing a degradation-aware Focal loss function to strengthen the model’s learning ability for low-frequency key categories (e.g., microplastic pollutants) through dynamic gradient remapping and solving the problem of uneven underwater sample distribution, incorporating symmetry-aware regularization to prevent model bias toward majority classes.

The IFEM acts as an intelligent “visual corrector” for the underwater detection model, and constructs physically accurate feature enhancement links at the input side: a learnable sharpening filter to recover the edge structure information, a pixel-level filter to improve the local contrast, and spatially varying gamma enhancement to realize detail enhancement, all designed with symmetry constraints to maintain natural image statistics. At the same time, the improved Focal loss establishes a decision optimization mechanism at the output side, and the two collaborate to form a complete enhancement system from feature extraction to decision inference that respects the underlying symmetry properties of visual scenes.

The rest of the paper is structured as follows: Section 2 summarizes and analyzes the related work, Section 3 describes the proposed method in detail, Section 4 presents the experimental results, and Section 5 summarizes the experimental results and proposes future research.

2. Related Works

Underwater target detection, as a core technology for marine monitoring and ecological protection, has long faced challenges such as light attenuation, motion blur, dense distribution of small targets, and data scarcity [19]. Traditional methods rely on sonar or manual visual analysis, which are inefficient and generalize poorly [20]. In recent years, deep learning has significantly improved the detection accuracy and real-time performance of underwater target detection through end-to-end feature learning. The single-stage detectors represented by the YOLO series have become the mainstream solution thanks to their excellent speed-accuracy balance, but the complexity of underwater environments requires the model to be further optimized in terms of lightweighting, feature enhancement, and adaptive sensing [21].

For the underwater trash detection task, the combination of lightweight network design and image enhancement strategies has become a research focus. SLD-Net, proposed by Zhou Huaping et al. [22], fuses a two-stage detection framework with the lightweight backbone network MobileNetV2 and mitigates underwater image degradation through a Multi-Domain Image Enhancement (MDIE) module that combines color conversion and detail denoising submodules. These submodules implicitly leverage principles of symmetry by applying uniform transformation rules across spatial and chromatic domains to recover natural image statistics. Meanwhile, a two-way FPN structure is introduced to enhance feature extraction for small targets, achieving 94.5% mAP at a real-time 65 FPS on the J-EDI dataset with a model parameter count of only 5.4 M [23]. This work demonstrates that the synergistic optimization of preprocessing enhancement and a lightweight backbone network significantly improves the robustness of trash detection. Similarly, Li He et al. [24] improved YOLOv5s for water surface floating object detection by designing Mosaic-based small target data augmentation (STDA) and a coordinate attention mechanism (MCA), enhancing small target sensing by adding shallow feature maps with bilinear-interpolation upsampling, and applying channel pruning to compress the model to 4.31 MB, reaching 92.01% accuracy with 33 FPS real-time detection on edge devices.

Image enhancement provides preprocessing support for underwater detection. Cheng et al. [25] design a lightweight generative adversarial infrared enhancement network, which reduces the number of parameters by 75% and the edge inference time by 32.07% through multilevel feature fusion. Zhao et al. [26] propose the Swift Water network, which builds a U-Net architecture on the SwiftFormer encoder and introduces an HSV color space hinting module (LPB), improving PSNR by 4.2 dB on the UIEB dataset with only one third of the parameters of mainstream models, while adhering to color symmetry principles through consistent transformation across the hue, saturation, and value channels. These works highlight the effectiveness of lightweight design with targeted enhancement for underwater small target detection.

In dense biological detection scenarios, dynamic routing and feature fusion mechanisms show unique value. Jiang Yu et al. [27] proposed a feature enhancement and adaptive routing framework for fish detection in complex underwater environments: a learnable feature enhancement module mitigates illumination and blurring problems, and an adaptive target routing strategy dynamically switches between simple and complex detection branches (based on YOLOv7) according to the complexity of the scene. Its multi-scale feature fusion module (MFFR) integrates the features of the two branches, while the routing module assigns detection paths based on an a priori threshold (τ = 0.497), achieving 73.3% mAP on the self-constructed DUFish dataset, a 3% improvement over the baseline model. This work demonstrates that a dynamic adaptation mechanism can effectively balance detection speed and accuracy in complex scenes, but it does not address the problem of modeling higher-order semantic associations.

As the YOLO series continues to evolve, higher-order feature modeling becomes the key to breaking through the performance bottleneck. The Nankai University team [

28] proposed YOLO-MS, introduced a hierarchical feature fusion module (MS-Block) based on the Kernel-Size study, and enlarged the receptive field through progressive heterogeneous convolutional kernels to improve the AP of YOLOv8 from 37% to 40%. Lei et al. [

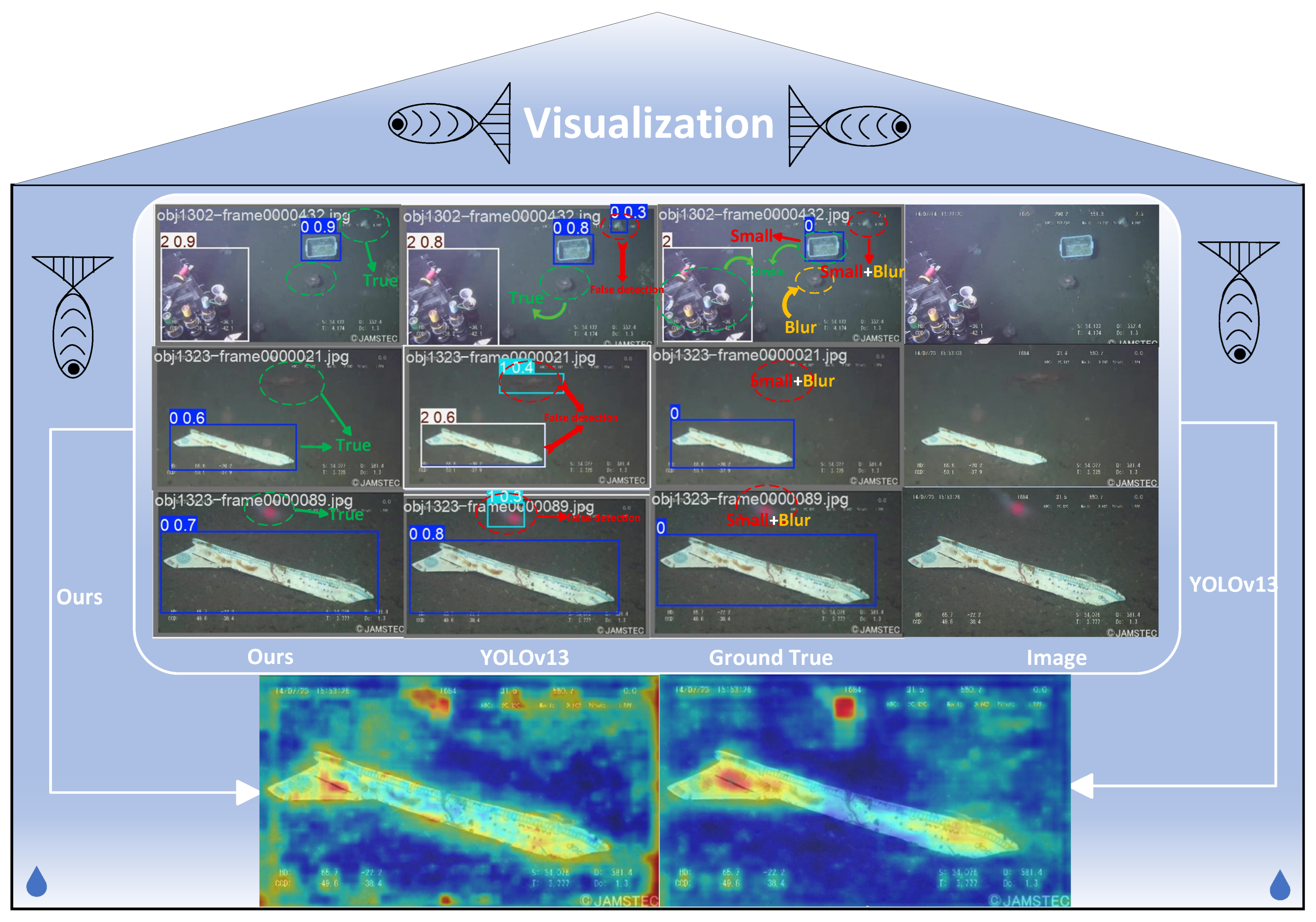

29] proposed the HyperACE mechanism for YOLOv13 to propose the Hypergraph Self-Adaptive Correlation Enhancement (HyperACE). Adaptive Correlation Enhancement (HyperACE) mechanism, which dynamically captures the many-to-many higher-order correlations across space and scale through a learnable hyperedge building block, replacing the traditional local convolution and binary attention mechanism; its Full Process Aggregation-Distribution (FullPAD) paradigm injects correlation-enhanced features into the backbone network, necks, and detection heads to realize the global information synergy. The model outperforms YOLOv12 on MS COCO with lower computation (1.5–3.0% mAP enhancement), but its adaptability in underwater scenarios has not been explored. Summarizing the existing work, current underwater detection methods still have three limitations: (1) static enhancement strategies are difficult to adapt to dynamic underwater degradation that breaks visual symmetry; (2) higher-order semantic associations of dense targets are insufficiently modeled, ignoring the structural symmetry present in man-made objects; and (3) it is difficult to combine both lightweight and high accuracy while maintaining computational symmetry for efficient inference. This paper accordingly improves YOLOv13 by fusing dynamic enhancement and hypergraph perception to fit the unique needs of underwater biology and trash detection.

To directly bridge these gaps, we propose IFEM-YOLOv13. Our framework is designed as an integrated solution: the Intelligent Feature Enhancement Module (IFEM) addresses the first limitation through learnable, adaptive compensation that restores imaging symmetry; the HyperACE engine tackles the second by explicitly modeling cross-layer and cross-scale feature correlations while preserving relational symmetry; and the entire system’s design philosophy, culminating in only 2.5 M parameters and 217 FPS, squarely addresses the third challenge of achieving high accuracy without sacrificing efficiency, maintaining an elegant symmetry between performance and computational complexity.

3. Methods

This study builds on the YOLOv13 architecture, whose core innovations are the Hypergraph Adaptive Correlation Enhancement (HyperACE) mechanism and the Full-Process Aggregation-Distribution (FullPAD) paradigm, which significantly enhance the model’s high-order semantic perception of multi-scale objects.

The HyperACE mechanism maps pixels from multi-scale feature maps to hypergraph vertices. It dynamically constructs hyperedges via a learnable participation matrix (rather than relying on manual thresholds) to capture many-to-many high-order associations between objects. The module incorporates parallel high-order (C3AH module) and low-order (DS-C3k module) branches, which aggregate global semantics and local details through hypergraph convolution and depthwise separable convolution, respectively. Finally, a shortcut branch preserves the original information, achieving complementary feature enhancement.
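The idea of a soft participation matrix followed by hypergraph convolution can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the HyperACE implementation: it assumes participation is a softmax over dot-product similarity between vertex features and a set of hyperedge prototypes, and that messages flow from vertices to hyperedges and back with a residual shortcut. The names `participation`, `hypergraph_conv`, and `prototypes` are hypothetical.

```python
import math

def softmax(row):
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def participation(vertices, prototypes):
    """Soft vertex-to-hyperedge membership; each row sums to 1 (assumed form)."""
    return [softmax([dot(v, p) for p in prototypes]) for v in vertices]

def hypergraph_conv(vertices, prototypes):
    """Vertices -> hyperedges -> vertices message passing with a shortcut."""
    member = participation(vertices, prototypes)      # N x M matrix
    n, m, dim = len(vertices), len(prototypes), len(vertices[0])
    edges = []
    for e in range(m):
        mass = sum(member[v][e] for v in range(n))    # always > 0 after softmax
        edges.append([sum(member[v][e] * vertices[v][c] for v in range(n)) / mass
                      for c in range(dim)])
    # Each vertex gathers the hyperedge features it participates in,
    # then adds them to its own features (the shortcut branch).
    return [[vertices[v][c] + sum(member[v][e] * edges[e][c] for e in range(m))
             for c in range(dim)] for v in range(n)]

vertices = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]   # three toy pixel features
prototypes = [[1.0, 0.0], [0.0, 1.0]]             # hypothetical learnable prototypes
enhanced = hypergraph_conv(vertices, prototypes)
```

Because every vertex participates in every hyperedge to some degree, a single hyperedge can relate many vertices at once, which is the many-to-many property that pairwise attention lacks.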

The FullPAD paradigm distributes the correlation-enhanced features from HyperACE through three tunnels to the backbone network, neck network, and detection head. First, the features are rescaled to each stage’s resolution and their channel counts are aligned by convolution. They are then fused with the original features through gates with learnable weights, so that the enhanced features permeate the entire pipeline, promoting gradient propagation and cross-scale information collaboration.
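The resize-then-gate step can be illustrated on a single-channel feature map. The sketch below is an assumption-laden simplification: it uses nearest-neighbour resizing, omits the channel-aligning convolution, and models the gate as one learnable scalar. The helper names `resize_nearest` and `gated_fusion` are hypothetical.

```python
def resize_nearest(fmap, out_rows, out_cols):
    """Nearest-neighbour resize of a 2D grid to the target resolution."""
    in_rows, in_cols = len(fmap), len(fmap[0])
    return [[fmap[r * in_rows // out_rows][c * in_cols // out_cols]
             for c in range(out_cols)] for r in range(out_rows)]

def gated_fusion(original, enhanced, gate):
    """original + gate * resized(enhanced); gate stands in for a learnable weight."""
    rows, cols = len(original), len(original[0])
    aligned = resize_nearest(enhanced, rows, cols)
    return [[original[r][c] + gate * aligned[r][c] for c in range(cols)]
            for r in range(rows)]

original = [[1.0] * 4 for _ in range(4)]   # stage feature map (4 x 4)
enhanced = [[10.0, 20.0], [30.0, 40.0]]    # correlation-enhanced map (2 x 2)
fused = gated_fusion(original, enhanced, gate=0.5)
```

With the gate at zero the stage sees only its original features, so training can start from the unmodified pipeline and learn how much enhanced information to admit at each stage.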

3.1. IFEM-YOLOv13

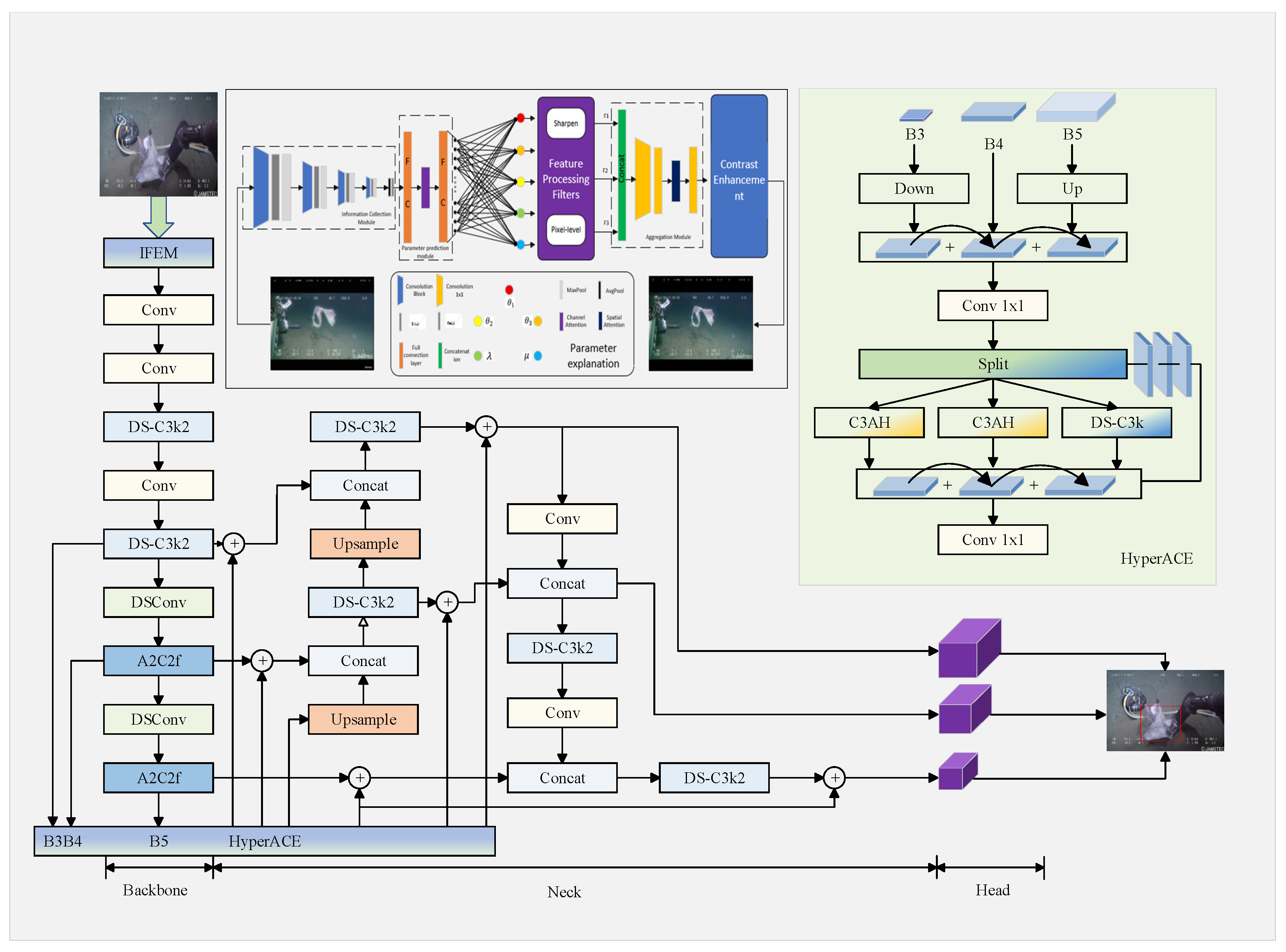

The overall network architecture of IFEM-YOLOv13 is shown in Figure 1. IFEM-YOLOv13 reconfigures the traditional serial computation paradigm of YOLO by implementing a closed-loop information flow through the FullPAD framework, which establishes a symmetrical bidirectional pathway for feature reuse and gradient propagation. The input image first undergoes degradation compensation in the Intelligent Feature Enhancement Module (IFEM), which jointly learns the sharpening intensity λ, pixel modulation coefficient μ, and scattering simulation parameter σ to achieve source-level restoration of underwater optical degradation while preserving the intrinsic symmetry of natural color and structural distributions. The corrected image is then fed into the improved backbone network, which extracts hierarchical features through a multi-scale feature pyramid extraction layer. The enhanced features are then fused across scales by a high-resolution feature pyramid network (HR-FPN), which combines dilated convolutions and sub-pixel upsampling to preserve both the receptive field and the fragmented texture information of small objects. At this stage, the HyperACE hypergraph engine dynamically constructs a cross-layer feature correlation model that captures higher-order symmetries in spatial and semantic relationships. Finally, the multi-scale detection head outputs the detection results, applying symmetry-aware prediction to handle variably shaped underwater objects.

The innovation of our framework lies in three synergistic mechanisms: (1) the IFEM constructs a physically inspired degradation compensation front end that restores visual symmetry disrupted by underwater conditions; (2) the HyperACE engine establishes a cross-layer feature correlation map with built-in relational symmetry to enhance target context modeling capabilities; (3) multi-scale residual connections form feature back-propagation pathways that ensure computational symmetry during information flow. This closed-loop architecture effectively addresses feature degradation issues in traditional serial pipelines by introducing symmetry-preserving learning throughout the network, providing a system-level solution for underwater debris detection that balances enhancement, detection, and reconstruction within a unified symmetrical framework.

3.2. Feature Quality Reconstruction Module

Underwater images exhibit significant variability in detection difficulty due to environmental dynamics (light attenuation, turbidity changes) and variations in target density [

30]. Existing target detection algorithms face dual challenges: on one hand, they struggle to robustly extract high-level semantic features from degraded images; on the other hand, they fail to effectively model the distribution patterns of targets in complex underwater scenes [

31]. Traditional image processing methods (such as contrast enhancement and Gaussian denoising) can locally improve image quality but cannot adaptively balance the mutual constraints of multiple degradation factors due to the unlearnable constraints between parameters, and often break the natural symmetry of color and structural information. Therefore, we propose the lightweight adaptive feature quality reconstruction module (IFEM) introduced by Yu Jiang et al. [

27]. By jointly learning parameters such as sharpening intensity

λ, color compensation μ, and scattering simulation parameter σ through a convolutional attention mechanism, the feature reconstruction process is dynamically optimized to suppress adverse noise while preserving the edge structure of biofouling targets, providing degradation-invariant discriminative features for downstream target detection [

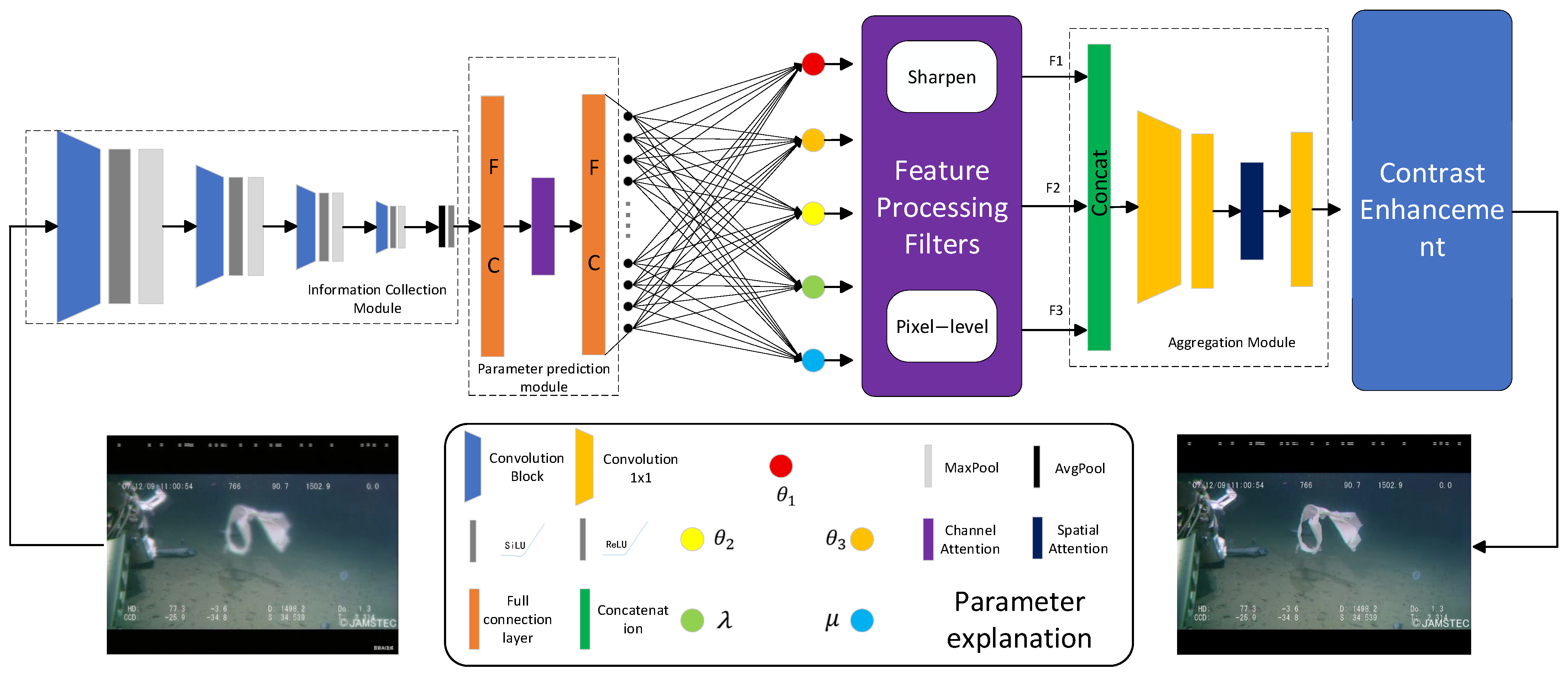

32]. As shown in

Figure 2, the module includes an information acquisition module, a parameter prediction module, a feature processing filter, an aggregation module, and a difference enhancement module, all designed with symmetry-aware learning constraints.

Information Collection Module: The information collection module employs a cascaded design combining convolution and max pooling to progressively transform the raw image into high-dimensional features while preserving spatial symmetry through structured downsampling. After the original image F1 is input, it first passes through a convolution layer that expands the 2D spatial information into a multi-channel space. A max pooling layer then compresses redundant background information while retaining key feature responses in a symmetry-preserving manner. This cascade progressively converts the spatial dependencies of the lower layers into semantic dependencies of the higher layers, providing structurally complete, information-dense feature maps for subsequent feature sampling. At each step of the cascade, the convolution and pooling operations are applied to the i-th feature map to produce the next one. At the end of the information collection module, a spatial attention mechanism models the importance of each spatial location of the feature map, focusing on key areas and ignoring irrelevant background while maintaining translational symmetry in the attention weighting: attention weights are predicted over the spatial positions of the feature map and applied to it by pixel-wise multiplication.
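The pooling and spatial-attention steps described above can be sketched on a toy feature map. This is an illustrative simplification, not the module's actual design: the convolution stage is omitted, and the attention weight at each location is assumed to be a sigmoid of the channel-averaged response. The function names `max_pool_2x2` and `spatial_attention` are hypothetical.

```python
import math

def max_pool_2x2(channel):
    """Halve height and width, keeping the strongest response in each 2x2 cell."""
    rows, cols = len(channel) // 2, len(channel[0]) // 2
    return [[max(channel[2 * r][2 * c], channel[2 * r][2 * c + 1],
                 channel[2 * r + 1][2 * c], channel[2 * r + 1][2 * c + 1])
             for c in range(cols)] for r in range(rows)]

def spatial_attention(features):
    """Weight every location by a sigmoid of its mean response across channels."""
    n = len(features)
    rows, cols = len(features[0]), len(features[0][0])
    weights = [[1.0 / (1.0 + math.exp(-sum(ch[r][c] for ch in features) / n))
                for c in range(cols)] for r in range(rows)]
    weighted = [[[ch[r][c] * weights[r][c] for c in range(cols)]
                 for r in range(rows)] for ch in features]
    return weighted, weights

channel = [[0.0, 1.0, 0.0, 0.0],
           [2.0, 0.0, 0.0, 0.0],
           [0.0, 0.0, 0.0, 0.0],
           [0.0, 0.0, 0.0, 4.0]]
pooled = max_pool_2x2(channel)                  # 4 x 4 -> 2 x 2
weighted, weights = spatial_attention([pooled])
```

Locations with strong responses receive weights close to 1, while empty background stays at the neutral weight of 0.5, so the pixel-wise product emphasizes informative regions.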

Parameter Prediction Module: As a key component affecting image reconstruction quality, this module predicts the five parameters that regulate the filters’ image processing. Its main architecture is based on fully connected layers. Specifically, the compressed feature map is first fed into a multi-layer perceptron for dimension reduction and feature transformation. A channel attention mechanism (CHANNEL) then dynamically recalibrates the importance of each feature channel by modeling inter-channel dependencies, which enhances the discriminative power for degradation-specific parameter prediction while enforcing symmetry in channel-wise adaptation. The refined features are passed through a final output layer that produces a 5-dimensional vector, whose entries are mapped directly to the five target parameters. Of these outputs, one part adjusts the RGB channel information of the image in a symmetry-preserving way, while two further parameters serve as the core parameters of the filters.
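The prediction path (MLP, channel recalibration, 5-dimensional output) can be sketched as follows. This is a minimal sketch under assumptions: the layer sizes, the sigmoid gating used for channel attention, and the final sigmoid that squashes outputs into (0, 1) are all illustrative choices, and `predict_parameters` and the weight arguments are hypothetical names.

```python
import math

def relu(vector):
    return [max(0.0, x) for x in vector]

def sigmoid(vector):
    return [1.0 / (1.0 + math.exp(-x)) for x in vector]

def linear(weights, bias, x):
    """Fully connected layer: one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]

def predict_parameters(pooled, w1, b1, w_att, b_att, w_out, b_out):
    hidden = relu(linear(w1, b1, pooled))             # MLP: reduce and transform
    gates = sigmoid(linear(w_att, b_att, hidden))     # channel attention weights
    recalibrated = [h * g for h, g in zip(hidden, gates)]
    return sigmoid(linear(w_out, b_out, recalibrated))  # 5 values in (0, 1)

# Toy shapes: 3 pooled features -> 4 hidden channels -> 5 parameters.
pooled = [0.2, 0.5, 0.1]
w1 = [[0.5, -0.2, 0.1]] * 4
b1 = [0.0] * 4
w_att = [[0.3] * 4] * 4
b_att = [0.0] * 4
w_out = [[0.4, -0.1, 0.2, 0.0]] * 5
b_out = [0.0] * 5
params = predict_parameters(pooled, w1, b1, w_att, b_att, w_out, b_out)
```

In practice each output would be rescaled to the valid range of its filter parameter; the (0, 1) range here is only a convenient assumption.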

Feature processing filter: The feature processing filter includes a pixel-level filter and a sharpening filter. The sharpening filter enhances the high-frequency components of an image (such as edges, textures, and other details) to improve edge clarity, which is particularly important in underwater image restoration because optical attenuation and scattering degrade images, breaking the structural symmetry of objects and making target detection difficult. The parameter λ in this filter lets the model adaptively adjust the sharpening intensity according to the degradation level of the input image: for severely degraded images, the model can learn to apply high-intensity sharpening (larger λ values); for mildly degraded images, it applies low-intensity sharpening (smaller λ values). For detection tasks involving different categories such as fish schools and plastic debris, sharpening significantly enhances the contrast between target and background boundaries, effectively mitigating the difficulties in contour recognition caused by target overlap and blurring.
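A common way to realize a sharpening filter with an adjustable intensity is unsharp masking, where a scaled copy of the high-frequency residual is added back to the image. The sketch below assumes that form, output = I + λ · (I − Gau(I)); this is an illustrative assumption rather than the paper's stated formula, and the helper names `gaussian_kernel`, `blur`, and `sharpen` are hypothetical.

```python
import math

def gaussian_kernel(size=3, sigma=1.0):
    half = size // 2
    kernel = [[math.exp(-(x * x + y * y) / (2 * sigma * sigma))
               for x in range(-half, half + 1)] for y in range(-half, half + 1)]
    total = sum(map(sum, kernel))
    return [[v / total for v in row] for row in kernel]

def blur(image, kernel):
    rows, cols, half = len(image), len(image[0]), len(kernel) // 2
    def pixel(r, c):  # replicate border pixels outside the image
        return image[min(max(r, 0), rows - 1)][min(max(c, 0), cols - 1)]
    return [[sum(kernel[i][j] * pixel(r + i - half, c + j - half)
                 for i in range(len(kernel)) for j in range(len(kernel)))
             for c in range(cols)] for r in range(rows)]

def sharpen(image, lam):
    """Unsharp masking: add lam times the high-frequency residual to the image."""
    blurred = blur(image, gaussian_kernel())
    return [[p + lam * (p - b) for p, b in zip(row, brow)]
            for row, brow in zip(image, blurred)]

step_edge = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
sharpened = sharpen(step_edge, lam=1.5)
```

On the step edge, the dark side of the boundary is pushed below 0 and the bright side above 1, which is the boundary contrast gain the paragraph describes; a larger λ widens that gap, and λ = 0 leaves the image untouched.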

Pixel-level filter: The core function of the pixel-level filter is to correct image pixel values and improve the image brightness distribution in a targeted manner while maintaining illumination symmetry. In underwater imaging scenarios, non-uniform lighting is a typical challenge that results in uneven image brightness. This filter enhances local contrast through difference-of-Gaussians operations to mitigate uneven lighting. The parameter μ serves as an adaptive adjustment factor, dynamically adjusting the intensity of contrast enhancement based on the characteristics of the input image while preserving the natural symmetry of the luminance distribution. Specifically, for low-contrast images, the model can learn to use a larger μ value; for images with sufficient contrast, a smaller μ value is used to fine-tune or suppress the enhancement.
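One way to picture a difference-of-Gaussians contrast boost is to subtract a coarse blur from a fine blur and add the result back, scaled by μ. The sketch below assumes exactly that form, with two fixed blur widths; the formula, the blur widths, and the names `gaussian_blur` and `pixel_level_filter` are illustrative assumptions, not the paper's definition.

```python
import math

def gaussian_blur(image, sigma, size=5):
    half = size // 2
    kernel = [[math.exp(-(x * x + y * y) / (2 * sigma * sigma))
               for x in range(-half, half + 1)] for y in range(-half, half + 1)]
    total = sum(map(sum, kernel))
    rows, cols = len(image), len(image[0])
    def pixel(r, c):  # replicate border pixels outside the image
        return image[min(max(r, 0), rows - 1)][min(max(c, 0), cols - 1)]
    return [[sum(kernel[i][j] * pixel(r + i - half, c + j - half)
                 for i in range(size) for j in range(size)) / total
             for c in range(cols)] for r in range(rows)]

def pixel_level_filter(image, mu, sigma_fine=1.0, sigma_coarse=2.0):
    """Add mu times a difference-of-Gaussians band to boost local contrast."""
    fine = gaussian_blur(image, sigma_fine)
    coarse = gaussian_blur(image, sigma_coarse)
    return [[p + mu * (f - c) for p, f, c in zip(row, frow, crow)]
            for row, frow, crow in zip(image, fine, coarse)]

# A single bright spot on a dark background.
spot = [[0.0] * 5 for _ in range(5)]
spot[2][2] = 1.0
boosted = pixel_level_filter(spot, mu=2.0)
```

The bright spot is amplified relative to its surroundings, and setting μ to 0 returns the input unchanged, matching the role of μ as an intensity dial for contrast enhancement.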

Aggregation Module: The aggregation module fuses the output features of the pixel-level filter and the sharpening filter (F2, F3) with the original image (F1) in a symmetry-preserving manner that balances the contributions of the different enhancement pathways. The module first concatenates F2, F3, and the original image along the channel dimension to generate a multi-channel feature tensor. It then applies a convolution to the concatenated features to perform a nonlinear transformation and channel normalization, ultimately outputting a fused feature map with the target number of channels while maintaining feature symmetry across spatial dimensions.

Contrast Enhancement Module: This module (Figure 3) addresses the difficulty of adapting traditional fixed Gaussian blurring to diverse image degradation levels. It introduces a learnable Gaussian kernel to enhance high-frequency details and adaptively adjust the intensity of image quality enhancement.

The module adopts a dual-branch architecture with symmetrical processing pathways. Branch 1 predicts the parameters of a spatially invariant Gaussian blur; Branch 2 predicts the underwater scattering spatial enhancement coefficient through a convolutional neural network (CNN).

In the learnable blur implementation, we directly optimize the kernel weight matrix rather than the parameters of a Gaussian function. This avoids the formal constraints of the Gaussian function and gives the model the flexibility to learn non-Gaussian blur kernels while maintaining approximate symmetry in spatial filtering operations, which is important for simulating complex underwater scattering effects more accurately.
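The difference between a parameterized Gaussian kernel and a freely learned kernel can be made concrete. The sketch below assumes the free kernel is stored as a grid of unconstrained logits and normalized with a softmax so its weights stay positive and sum to 1; that normalization is an illustrative choice, not something the paper specifies. Under this assumption a Gaussian is just one special setting of the logits. The names `kernel_from_logits` and `gaussian_logits` are hypothetical.

```python
import math

def kernel_from_logits(logits):
    """Turn unconstrained weights into a positive kernel that sums to 1."""
    peak = max(v for row in logits for v in row)
    exps = [[math.exp(v - peak) for v in row] for row in logits]
    total = sum(map(sum, exps))
    return [[e / total for e in row] for row in exps]

def gaussian_logits(size, sigma):
    """Logits that reproduce an isotropic Gaussian kernel after normalization."""
    half = size // 2
    return [[-(x * x + y * y) / (2 * sigma * sigma)
             for x in range(-half, half + 1)] for y in range(-half, half + 1)]

# Start from a Gaussian, then let one weight drift as training might.
logits = gaussian_logits(3, sigma=1.0)
gaussian = kernel_from_logits(logits)
logits[1][2] += 1.0                       # free update, no Gaussian constraint
learned = kernel_from_logits(logits)
```

After the update the kernel is no longer symmetric left to right, a shape no single Gaussian width can express, yet it remains a valid blur kernel. Initializing from Gaussian logits is one way to keep the learned kernel approximately symmetric early in training.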

3.3. Loss Function Design

In the marine debris detection task, the category distribution of the J-EDI dataset exhibits a significant long-tail characteristic (Table 1). Plastic samples account for as much as 55.4%, while biologically significant samples (BIO) account for only 23.0% and ROV equipment samples for 21.6% [33]. The traditional cross-entropy loss function exacerbates category bias during training, particularly for difficult-to-classify samples such as small-sized microplastics and biologically attached debris, resulting in low learning efficiency [34]. As a result, the model overfits to dominant categories, and its recognition of low-frequency but ecologically high-risk categories degrades. To enhance the model’s ability to focus on difficult-to-classify samples (e.g., small targets and samples that are similar across categories), this paper introduces an improved Focal loss function:

$$\mathcal{L}_{\mathrm{FL}} = -\alpha_t \, (1 - p_t)^{\gamma} \log(p_t), \qquad \alpha_t = \frac{N}{N_t}$$

The inverse number of samples weighting scheme ($\alpha_t = N / N_t$) is chosen for its direct proportionality to class imbalance severity, effectively increasing the gradient contribution of tail classes. Compared to alternative strategies (such as inverse class frequency weighting, which may overcompensate extreme minorities, or the effective number of samples, which accounts for data overlap), this approach provides stable rebalancing without over-amplifying noise from very rare categories, which is particularly suitable for the moderate yet significant imbalance in J-EDI.

Here, $\alpha_t$ is the category balancing factor, $N$ is the total number of samples, and $N_t$ is the number of samples in category t. This factor assigns higher weights to low-frequency categories (such as BIO), alleviating the gradient imbalance caused by the long-tail distribution. When applied to the biological category, $\alpha_t \approx 4.35$, which is significantly higher than the 1.80 for the plastic category. $\gamma$ is the focus modulation factor, and the loss contribution of easily distinguishable samples is dynamically reduced through Formula (10). $p_t$ represents the model’s predicted probability of the true category t of the sample.

This design forms an adaptive learning mechanism: when the model predicts a low confidence level for difficult samples (such as small targets or occluded targets), the loss value is significantly amplified, forcing the network to strengthen feature learning for such samples and pushing the decision boundary to expand into sparse sample regions.
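The interaction between the category balancing factor and the focusing term can be checked numerically. The sketch below computes the balancing factors from the class shares quoted for J-EDI and evaluates a class-weighted focal loss; it assumes the standard focal-loss form and uses γ = 2.0 purely as a common default, which is an assumption rather than this paper's setting. The names `CLASS_SHARE`, `ALPHA`, and `focal_loss` are hypothetical.

```python
import math

# Class shares quoted in the text for the J-EDI dataset.
CLASS_SHARE = {"plastic": 0.554, "bio": 0.230, "rov": 0.216}

# Balancing factor alpha_t = N / N_t, i.e. the inverse of each class share.
ALPHA = {name: 1.0 / share for name, share in CLASS_SHARE.items()}

def focal_loss(p_true: float, category: str, gamma: float = 2.0) -> float:
    """Class-weighted focal loss for one sample.

    p_true is the predicted probability of the sample's true category;
    gamma = 2.0 is an assumed default, not a value taken from the paper.
    """
    return -ALPHA[category] * (1.0 - p_true) ** gamma * math.log(p_true)

for name, alpha in ALPHA.items():
    print(f"alpha[{name}] = {alpha:.2f}")

easy = focal_loss(0.9, "bio")   # confident, correct prediction
hard = focal_loss(0.3, "bio")   # low-confidence prediction on a hard sample
print(f"easy sample loss: {easy:.4f}, hard sample loss: {hard:.4f}")
```

The hard sample contributes a loss hundreds of times larger than the easy one, and for the same predicted probability a BIO sample is weighted more heavily than a plastic sample, which is the combined effect the adaptive learning mechanism relies on.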

6. Conclusions

This paper proposes a new object detection framework to address the unique challenges posed by image quality degradation caused by adverse factors in complex underwater environments, including low visibility and blurring, which disrupt the natural symmetry of visual information. Extensive experimental results on the J-EDI dataset demonstrate that our proposed detection framework achieves outstanding detection performance compared to several mainstream advanced detection algorithms, while effectively restoring perceptual and structural symmetry. This detection framework holds promise for deployment in underwater detection systems, promoting sustainable practices in ecological conservation, resource management, and marine engineering construction.

We believe the outstanding performance of the proposed detection framework can be attributed to several key factors:

(1) Traditional methods rely on fixed parameters that cannot be learned, making it difficult to balance the mutual constraints of multiple degradation factors (such as light attenuation and turbidity changes) that break environmental symmetry. The feature quality reconstruction module IFEM achieves adaptive optimization by dynamically learning key parameters (such as sharpening intensity λ and color compensation μ) through a convolutional attention mechanism, effectively restoring chromatic and spatial symmetry. IFEM suppresses scattering noise while preserving critical structural information and natural symmetry, providing degradation-robust discriminative features for downstream object detection.

(2) Traditional cross-entropy loss tends to cause the model to overemphasize dominant categories while neglecting rare categories with high ecological risk. The improved Focal loss effectively addresses this issue through a dual-mechanism dynamic adjustment, enhancing the model’s ability to learn features of low-frequency key categories while maintaining symmetry in category attention. Its sample reweighting mechanism dynamically adjusts the loss contribution of difficult samples, effectively addressing core challenges in underwater environments such as large target size differences and imbalanced sample distributions while preserving symmetry in gradient updates.

Although this framework performs well in typical underwater degraded scenarios (insufficient lighting, color distortion, reduced contrast, and blurred edges) by restoring visual symmetry, its current limitations should be acknowledged. First, the modeling of photon scattering effects in extremely turbid water remains incomplete, particularly regarding the complex symmetry-breaking patterns in high-turbidity conditions. Second, target separation in dense fish school occlusion scenarios needs improvement; it is primarily constrained by the scarcity of high-quality dense sample data that captures the intricate spatial symmetry of schooling behavior. Third, the proposed Intelligent Feature Enhancement Module (IFEM) is primarily designed and optimized to compensate for optical degradation effects, such as light attenuation, scattering, and color distortion, with a focus on restoring imaging symmetry. Its applicability and robustness to non-optical distortions, such as motion blur, severe occlusion, or geometric deformations that break different types of symmetry, were not explicitly validated and remain an open question. This represents a key area for future work to enhance the general applicability of the framework in more diverse and challenging underwater environments.

To address these limitations and further advance research in marine engineering, it may be necessary to construct an enhanced dataset that integrates complex degradation patterns, such as turbid water bodies, chemical pollution color bias, and bioluminescence interference, to establish realistic underwater scenarios with known symmetry properties, and to couple the detection framework with marine pollutant dispersion models that respect physical symmetry principles to achieve real-time prediction of plastic debris migration paths. Future work will also include porting and evaluating the model on low-power embedded platforms to comprehensively assess its performance and latency while maintaining computational symmetry in real-world streaming applications.