1. Introduction

The rapid expansion of the high-speed railway network has significantly enhanced national transportation efficiency and economic development [1]. Ensuring the safe and stable operation of high-speed railways is therefore critical, with particular attention paid to the catenary system, which supplies continuous electrical power to trains. Structurally, the catenary exhibits a highly regular, near-symmetric geometry that underpins stable current collection. In real-world operations, however, catenary components are exposed to harsh environments and are vulnerable to the intrusion of foreign objects such as bird nests, plastic bags, and kites, which disrupt this regularity. Viewed through a symmetry lens, such intrusions constitute localized asymmetries embedded in otherwise repetitive line patterns; this view both explains the detection challenge and offers a useful prior for vision-based methods. If left unaddressed, these intrusions can cause severe failures, including pantograph-contact loss, arc discharges, and dielectric breakdowns, ultimately leading to power outages, train delays, and potential safety hazards for passengers and railway assets.

Traditional inspection methods for catenary systems primarily rely on manual patrols or human review of surveillance footage [2]. However, these approaches are labor-intensive, costly, and prone to missed detections, particularly under adverse weather conditions or in large-scale deployment scenarios. With the continuous growth of high-speed railway infrastructure, there is an urgent demand for intelligent, automated, and real-time foreign object detection systems [3]. Driven by advances in deep learning [4] and computer vision [5], image-based object detection techniques [6,7,8,9,10] have emerged as promising solutions for railway inspection tasks. Among them, the YOLO (You Only Look Once) family of detectors [8,9,10,11,12,13,14,15,16,17,18,19] has demonstrated favorable trade-offs between detection accuracy and inference speed, making it well suited to real-time applications.

Earlier research [20] applied the YOLOv5 [15] and Faster R-CNN [21] frameworks to railway catenary inspection, focusing primarily on detecting relatively large and visually distinct foreign objects such as plastic bags or large debris. Traditional two-stage detectors, although achieving high accuracy, often suffer from slow inference, while earlier one-stage detectors struggled with small object detection and background interference. Recent developments, such as YOLOv8 [8] and YOLOv12 [10], have introduced architectural refinements, including lightweight modules and attention mechanisms, that improve detection performance on moderately challenging targets. Attention-based methods, including coordinate attention [22] and self-attention-based backbones [23], have proven effective in enhancing feature expressiveness, particularly for occluded or ambiguous targets. Beyond attention, recent studies emphasize the importance of structured contextual priors in improving recognition accuracy. For example, leveraging habitat information has been shown to enhance fine-grained bird identification [24]. This idea parallels the use of symmetry priors in railway nest detection, where the contextual regularities of the catenary can help separate true anomalies from background patterns.

However, bird nest detection presents unique challenges: nests are often small, visually similar to the background textures of the catenary, irregular in shape, and partially occluded. In addition, the scarcity of high-quality annotated datasets further hinders model generalization. Existing YOLO-based solutions, though effective for larger foreign objects, still struggle with small-scale, weakly textured targets like bird nests. Similar challenges have been noted in wildlife and animal monitoring tasks, where small targets in natural environments require robust detection under cluttered and imbalanced conditions [25].

To address these challenges, this paper proposes a modified YOLOv12 network named the Symmetry-Aware Railway Nest Detection Framework (RNDF), which incorporates several targeted innovations. First, the original A2C2f modules are replaced with newly designed A2C2f_HRAMi (Hierarchical Reciprocal Attention Mixer with Improvements) [26] modules, which enhance fine-grained feature extraction through residual–attention fusion. This better models the repetitive, near-symmetric geometry of catenary wires and increases the contrast with localized asymmetric targets (nests). Second, a Spatial–Channel Synergistic Attention (SCSA) [27] module is integrated at the end of the backbone to jointly strengthen spatial and channel feature representations. It consolidates symmetric background regularities while surfacing asymmetric anomalies, improving sensitivity to small and occluded targets. Third, the Focaler-GIoU loss function [28] is adopted to stabilize bounding box regression and alleviate sample imbalance during training, which particularly benefits the localization of small objects along slender, symmetric wire boundaries. Additionally, to support training, a dedicated bird nest detection dataset, named the High-Speed Railway Catenary Nest Dataset (HRC-Nest), is constructed. It comprises over 800 real-world images and over 1000 synthetically generated samples with high-quality annotations; the synthetic samples preserve the global regularity of the catenary, and nests are annotated as localized departures from symmetry.

The contributions of this paper are fivefold: (1) a lightweight and attention-enhanced, symmetry-aware detection framework, RNDF, is designed specifically for small object detection in railway catenary systems; (2) the A2C2f_HRAMi module is introduced to enhance hierarchical feature extraction and to model repetitive symmetric patterns together with their deviations; (3) the SCSA module is incorporated to synergistically capture spatial and channel contextual information, reinforcing the symmetric structure while highlighting localized asymmetries; (4) the Focaler-GIoU loss function is adopted to improve the localization accuracy for small and occluded targets, particularly around symmetric wire structures where minor offsets mimic symmetry breaks; and (5) a dedicated bird nest detection dataset, HRC-Nest, is constructed to facilitate model training and evaluation under complex environmental conditions, explicitly capturing catenary symmetry and nest-induced asymmetry.

The remainder of this paper is organized as follows. Section 2 presents the materials and methods, including the dataset construction, network architecture, and loss function design. Section 3 details the experimental results and visual analyses. Section 4 discusses the results, limitations, and potential future work. Finally, Section 5 concludes this paper.

2. Materials and Methods

2.1. Dataset Construction

Detecting bird nests in high-speed railway catenary systems is a complex and data-scarce computer vision task, where the performance of deep learning models heavily depends on the availability of high-quality and diverse training data. To validate the effectiveness of the proposed detection framework, a dedicated dataset named the High-Speed Railway Catenary Nest Dataset (HRC-Nest) is constructed from both real-world imagery and synthetically generated samples. The dataset is designed to improve model robustness to small objects, occlusion, and cluttered backgrounds. From a symmetry perspective, the dataset captures the repetitive, near-symmetric layout of contact and messenger wires, while nests are annotated as small, localized departures from these regular patterns, enabling symmetry-aware training and evaluation.

2.1.1. Real Image Collection and Processing

Over 800 real-world images of bird nests on railway catenary systems are collected, sourced from onboard monitoring footage and inspection images captured by railway personnel. All images are carefully filtered and cropped to retain frames where bird nests are clearly visible and structurally complete. Manual annotations are performed using the open-source tool LabelImg (version 1.8.1, Tzutalin, GitHub, USA), and the annotations are saved in both YOLO and COCO-compatible formats to support flexible training scenarios.
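For readers unfamiliar with the two export formats, the sketch below converts one corner-format box (x_min, y_min, x_max, y_max, in pixels) into a YOLO label line and a COCO-style `bbox` entry. It is a minimal illustration, not the authors' export script; the function names are hypothetical, and mapping the single "nest" class to index 0 is an assumption.

```python
"""Sketch: convert a pixel-space corner box into YOLO and COCO box formats."""


def to_yolo_line(box, img_w, img_h, class_id=0):
    """YOLO label line: 'class x_center y_center width height', normalized to [0, 1].

    class_id=0 for the single 'nest' class is an assumption for illustration.
    """
    x_min, y_min, x_max, y_max = box
    x_c = (x_min + x_max) / 2 / img_w
    y_c = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


def to_coco_bbox(box):
    """COCO 'bbox' field: [x_min, y_min, width, height] in absolute pixels."""
    x_min, y_min, x_max, y_max = box
    return [x_min, y_min, x_max - x_min, y_max - y_min]


if __name__ == "__main__":
    nest = (100, 200, 164, 248)  # hypothetical nest box in a 640 x 480 frame
    print(to_yolo_line(nest, 640, 480))  # -> 0 0.206250 0.466667 0.100000 0.100000
    print(to_coco_bbox(nest))            # -> [100, 200, 64, 48]
```

The YOLO line is resolution-independent, while the COCO entry stays in pixels, which is why keeping both lets the same annotations feed different training pipelines.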

2.1.2. Synthetic Image Generation

Given the inherently infrequent occurrence of bird nests in real-world scenarios, collecting a sufficient number of samples poses significant challenges. Moreover, the distribution of bird nest instances is highly influenced by geographic and seasonal factors, resulting in data imbalance and bias. To mitigate these issues and improve the model’s ability to detect such small and rare targets, an augmented dataset is constructed. It comprises over 1000 synthetically generated bird nest images created using advanced image generation techniques.

To construct synthetic samples, background catenary images without foreign objects are first extracted. Candidate regions for nest placement are manually specified, and a large-scale generative model is accessed via an API to synthesize nest structures guided by semantic prompts, such as appearance, construction style, material distribution, and hanging position. During synthesis, the global catenary geometry and wire parallelism are preserved to maintain scene symmetry. The generated nests are then composited as localized symmetry-breaking artifacts with a controllable size, position, and occlusion. This process produces style-consistent synthetic nests that are automatically integrated into background images to form realistic and context-aware scenes. As a result, the approach effectively avoids the visual artifacts and boundary inconsistencies that are common in traditional cut-and-paste augmentation.
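The controllable size and position described above can be illustrated by sampling a nest box inside a manually specified candidate region for a target area fraction. This is a hypothetical sketch under stated assumptions: `sample_nest_box`, the `area_fraction` and `aspect_ratio` knobs, and the uniform placement rule are illustrative choices, and neither the generative synthesis nor the occlusion control is modeled.

```python
"""Sketch: sample a nest placement box inside a candidate region (hypothetical helper)."""
import math
import random


def sample_nest_box(region, img_w, img_h, area_fraction, aspect_ratio=1.0, rng=None):
    """Return (x_min, y_min, x_max, y_max) for a nest placed uniformly inside `region`.

    region:        (x_min, y_min, x_max, y_max) candidate area, in pixels
    area_fraction: nest box area as a fraction of the full image area
    aspect_ratio:  box width / height
    """
    rng = rng or random.Random()
    area = area_fraction * img_w * img_h
    w = math.sqrt(area * aspect_ratio)
    h = area / w
    rx0, ry0, rx1, ry1 = region
    if w > rx1 - rx0 or h > ry1 - ry0:
        raise ValueError("requested nest size does not fit in the candidate region")
    # Uniform top-left corner so the whole box stays inside the region.
    x0 = rng.uniform(rx0, rx1 - w)
    y0 = rng.uniform(ry0, ry1 - h)
    return (x0, y0, x0 + w, y0 + h)


if __name__ == "__main__":
    # A 0.3% box, i.e. within the "small" range used for HRC-Nest (< 0.5% of the image).
    box = sample_nest_box((100, 100, 400, 300), 1280, 720, 0.003, rng=random.Random(0))
    print(box)
```

Because the box size is derived from an image-relative area fraction, the same knob directly controls which size bucket (small, medium, large) a synthetic nest falls into.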

Although synthetic samples may not capture the full complexity of naturally collected data, they significantly enrich the dataset by introducing valuable diversity in background variation, scale distribution, and occlusion patterns. This augmentation is particularly beneficial for improving robustness under small object and cluttered conditions, where real data are scarce. Nevertheless, synthetic samples can also introduce biases relative to real-world distributions, such as simplified textures or limited environmental variability. To address this issue, synthetic nests are composited onto authentic catenary backgrounds and combined with real samples during training. Empirical observations with different mixing ratios confirm that incorporating a sufficient proportion of real data substantially reduces the bias of synthetic samples while preserving their benefits, thereby improving overall generalization. These observations also provide indirect evidence of the synthetic images' realism, since their inclusion consistently yields measurable performance gains when combined with real data. The final dataset therefore integrates all available real and synthetic images, maximizing realism while preserving diversity and ensuring sufficient coverage of complex scenarios.

2.1.3. Dataset Split and Statistics

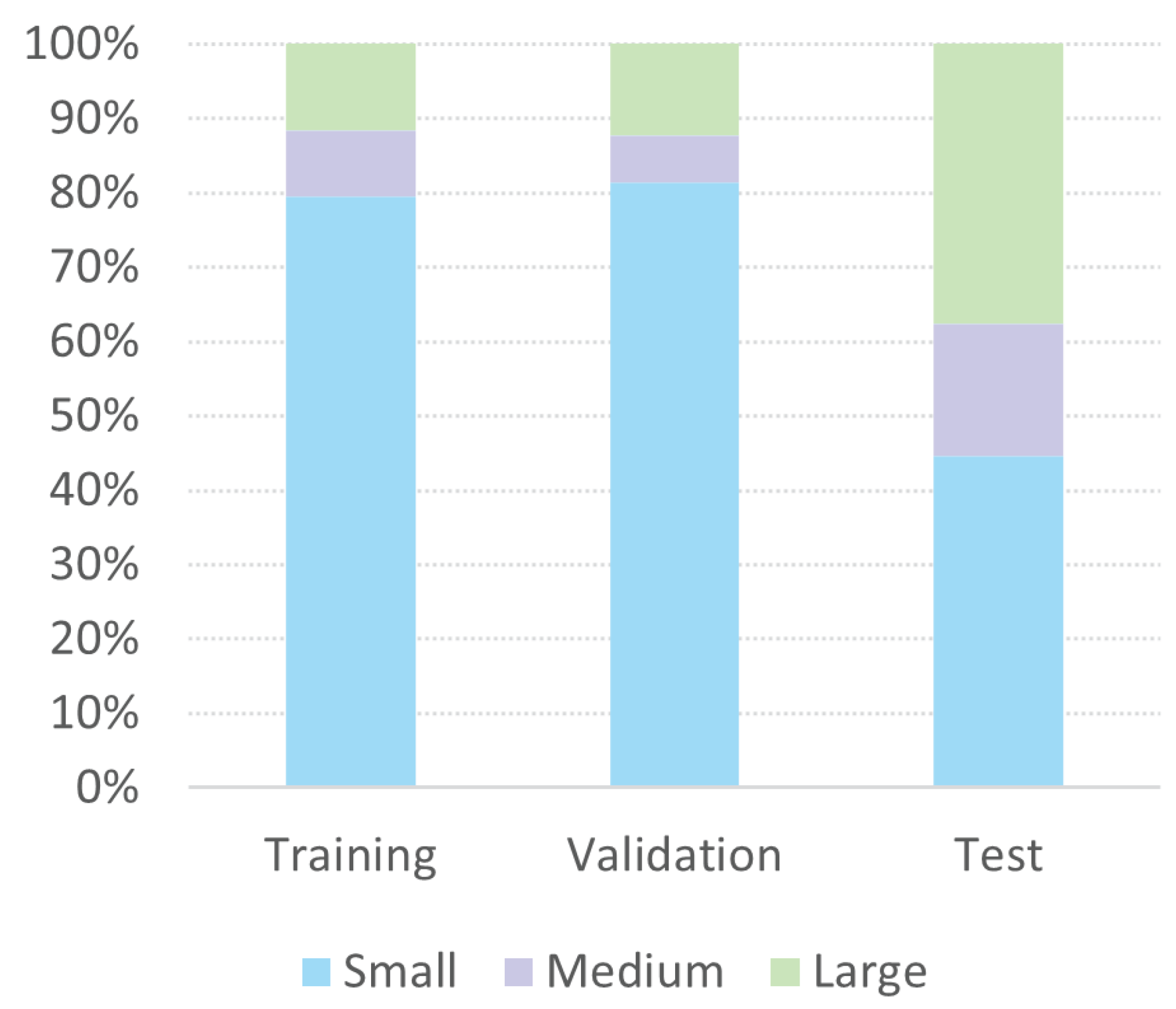

To ensure rigorous training and fair evaluation, the dataset is partitioned as follows: 15% of the real images are used as the test set, while the remaining 85% are split into training and validation sets at a 9:1 ratio.
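The partition rule can be sketched as follows. One detail is an assumption: the text only states that 15% of the real images form the test set, so the sketch places all synthetic images in the training/validation pool, which keeps the test set purely real. The function name, seed handling, and the round counts in the usage example (800 real, 1000 synthetic) are hypothetical; the exact subset sizes are those in Table 1.

```python
"""Sketch: 15% real-only test set, then a 9:1 train/validation split of the rest."""
import random


def split_dataset(real, synthetic, test_frac=0.15, val_ratio=0.1, seed=0):
    """Return (train, val, test) lists of image IDs.

    Assumption: synthetic images never enter the test set.
    """
    rng = random.Random(seed)
    real = list(real)
    rng.shuffle(real)
    n_test = round(len(real) * test_frac)
    test = real[:n_test]

    pool = real[n_test:] + list(synthetic)  # remaining 85% of real + all synthetic
    rng.shuffle(pool)
    n_val = round(len(pool) * val_ratio)  # 9:1 -> 10% validation
    return pool[n_val:], pool[:n_val], test


if __name__ == "__main__":
    real_ids = [f"real_{i}" for i in range(800)]
    synth_ids = [f"syn_{i}" for i in range(1000)]
    train, val, test = split_dataset(real_ids, synth_ids)
    print(len(train), len(val), len(test))  # -> 1512 168 120
```

Keeping the test set real-only means the reported metrics are not inflated by the generator's own style.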

Table 1 summarizes the detailed composition of each subset, clearly distinguishing between synthetic images, real positive samples, and real negative samples. Overall, the dataset maintains a real-to-synthetic ratio of approximately 4:5, effectively balancing realism with dataset diversity and generalizability.

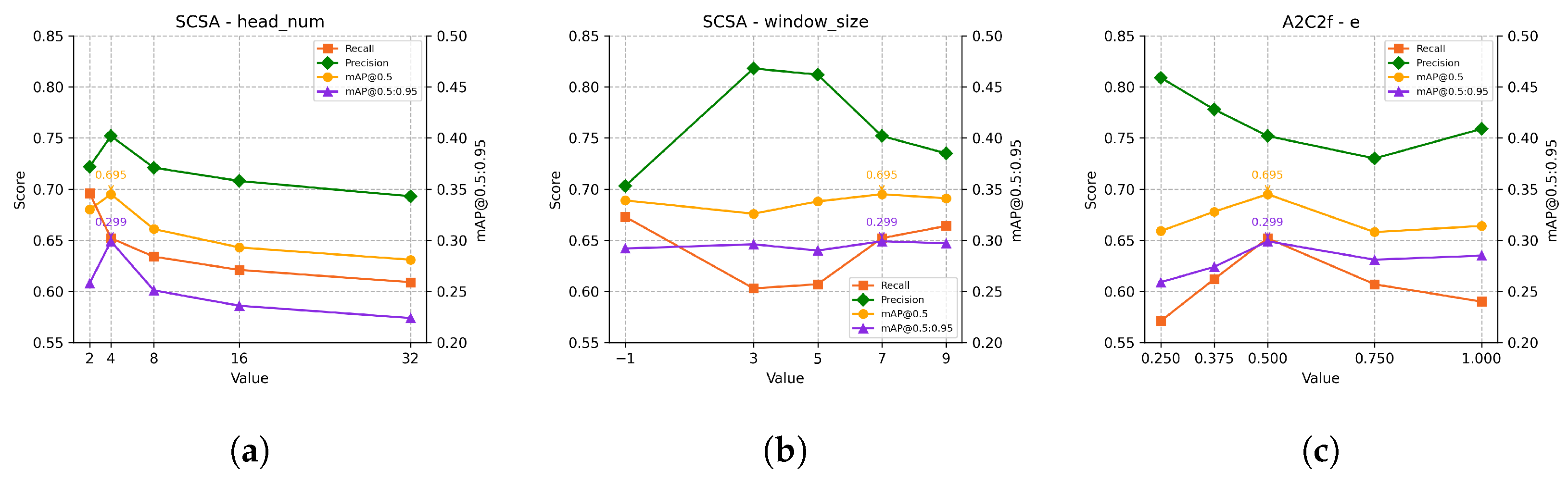

Table 2 and Figure 1 illustrate the distribution of object sizes across the training, validation, and test subsets. Small objects, defined as those whose ground truth box area is less than 0.5% of the image area, dominate the dataset. They comprise 1734 instances (approximately 84.3%) in the training set, 193 instances in the validation set, and 50 instances in the test set. Conversely, medium-sized objects (0.5–1% of the image area) and large objects (greater than 1%) are relatively uncommon. Large targets appear primarily in the test set to evaluate the model's adaptability. This distribution reflects the real-world scale of bird nests in catenary scenes and indicates that the dataset represents a typical small object detection scenario. The high proportion of small targets also motivates the introduction of attention modules and regression enhancement strategies into the proposed model.
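The size buckets can be expressed as a small helper. Unlike the COCO convention, which uses absolute pixel areas (small below 32 × 32 pixels, large above 96 × 96 pixels), HRC-Nest defines sizes relative to the image area; at a 640 × 640 input, the 0.5% boundary corresponds to a square box of roughly 45 × 45 pixels. The helper name is hypothetical, and assigning a box at exactly 1% to "medium" is an assumption.

```python
"""Sketch: relative-area size buckets as defined for HRC-Nest."""


def size_category(box_w, box_h, img_w, img_h):
    """Classify a box by its area relative to the image area.

    small: < 0.5%, medium: 0.5-1%, large: > 1%.
    Treating exactly 1% as 'medium' is an assumption; the text does not specify it.
    """
    frac = (box_w * box_h) / (img_w * img_h)
    if frac < 0.005:
        return "small"
    if frac <= 0.01:
        return "medium"
    return "large"


if __name__ == "__main__":
    for side in (40, 50, 70):  # square boxes in a 640 x 640 image
        print(side, size_category(side, side, 640, 640))
```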

2.2. The Overall Network Architecture

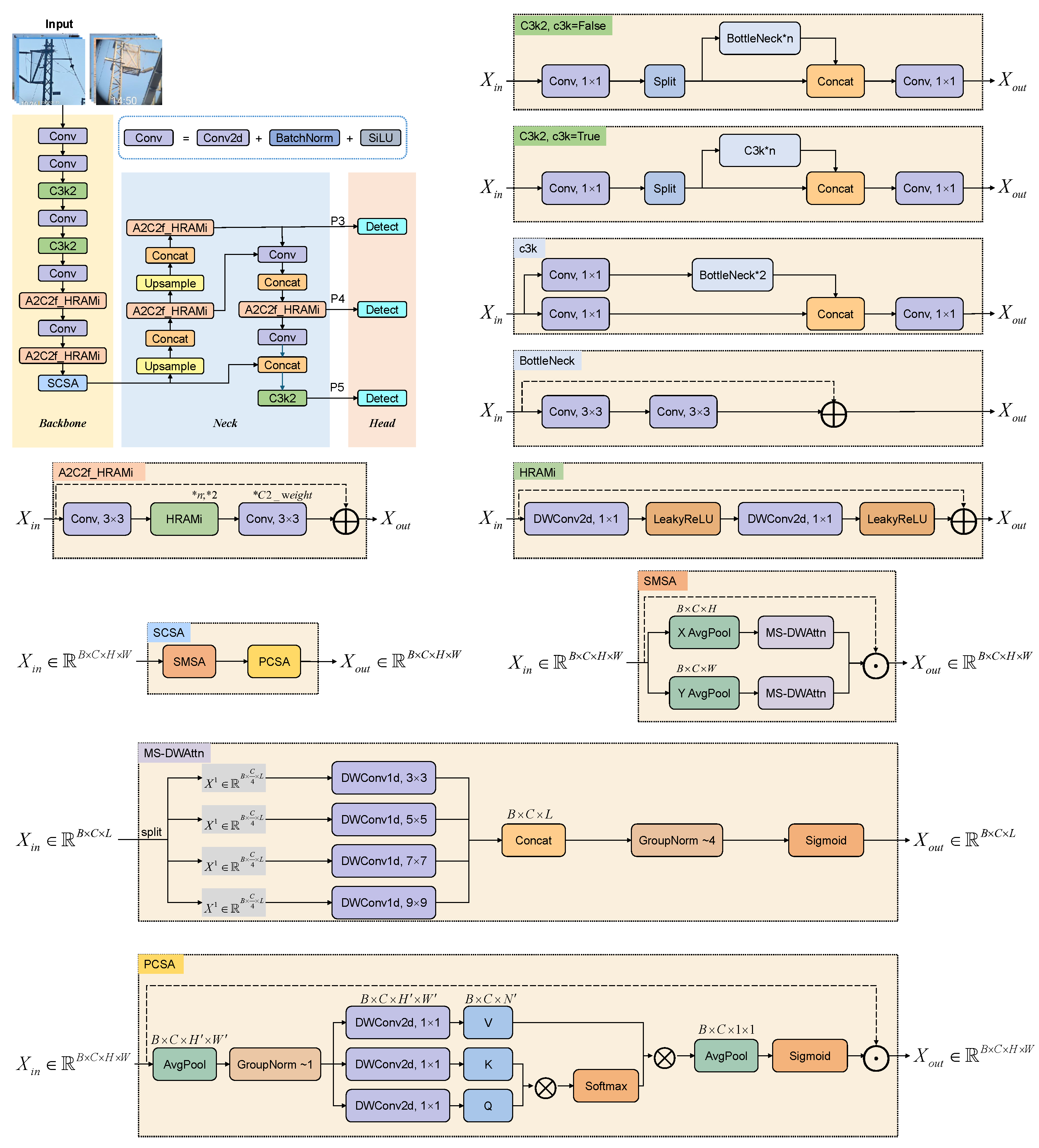

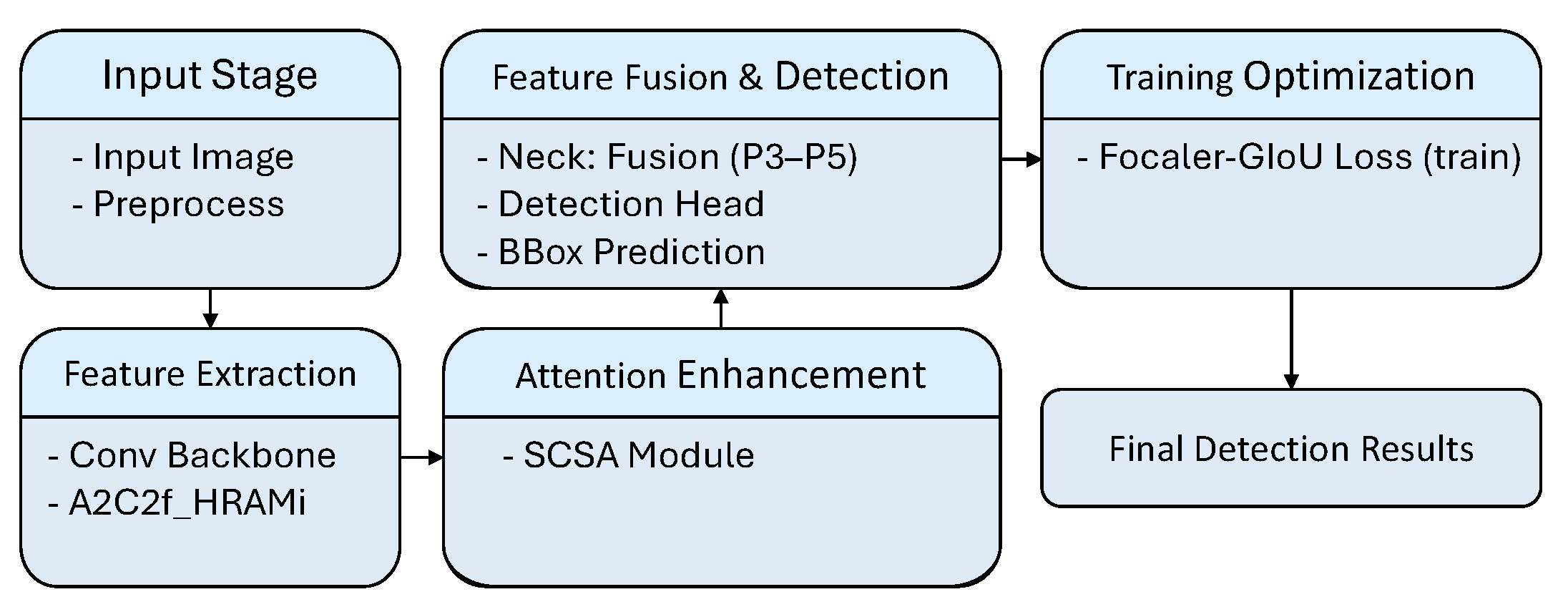

The improved network architecture is illustrated in Figure 2 and follows the conventional backbone–neck–head structure typical of YOLO detectors. Compared with the vanilla YOLOv12, the backbone is modified in two ways. First, all original A2C2f modules are replaced with newly designed A2C2f_HRAMi modules. Second, an SCSA block is integrated at the final stage. The neck and detection head are inherited from YOLOv12-Turbo, which uses the R-ELAN enhanced feature aggregation strategy with multi-scale fusion and lightweight detection heads. Together, these modifications reinforce multi-semantic feature representation while maintaining real-time detection capability.

The detailed workflow of the proposed Railway Nest Detection Framework is shown in Figure 3. Starting from an input image, the network first performs basic preprocessing and then passes the image through the backbone, where multi-scale features are extracted by convolutional layers and A2C2f_HRAMi modules. The SCSA module then refines the spatial and channel representations. Next, the processed features are fused in the neck using upsampling and concatenation operations (P3, P4, and P5 feature maps) and passed to the detection head. During training, bounding box regression is optimized using the Focaler-GIoU loss, while during inference, the final detection results are produced after non-maximum suppression.
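To make the P3, P4, and P5 naming concrete, the arithmetic below assumes the standard YOLO output strides of 8, 16, and 32 (an assumption, since the strides are not stated here) at the 640 × 640 input used in Section 3.5, and an anchor-free head with one prediction per grid location. It also shows how many grid cells a nest at the 0.5% small-object boundary spans on each level, which illustrates why the finest level matters for small targets.

```python
"""Sketch: feature-map sizes and small-object coverage for P3-P5 (assumed strides)."""
import math


def head_grid_sizes(input_size=640, strides=(8, 16, 32)):
    """Map level name -> (stride, grid side length) for a square input."""
    return {f"P{3 + i}": (s, input_size // s) for i, s in enumerate(strides)}


def cells_spanned(box_side, stride):
    """Approximate number of grid cells a square box covers along one axis."""
    return box_side / stride


if __name__ == "__main__":
    grids = head_grid_sizes()
    total = sum(side * side for _, side in grids.values())
    print(grids, "total locations:", total)  # assumed: one prediction per location

    nest_side = math.sqrt(0.005 * 640 * 640)  # box at the 0.5% small-object boundary
    for level, (stride, _) in grids.items():
        print(level, round(cells_spanned(nest_side, stride), 2))
```

Under these assumptions, a boundary-sized nest (about 45 pixels wide) spans roughly 5.7 cells on P3 but only about 1.4 cells on P5, so most of its usable spatial evidence lives in the high-resolution level.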

2.3. The A2C2f_HRAMi Module

The original A2C2f module in YOLOv12 was designed to model hierarchical spatial information efficiently through area attention and efficient aggregation. However, for small, low-texture targets such as bird nests, its modeling capacity remains limited. To address this, the A2C2f_HRAMi (Hierarchical Reciprocal Attention Mixer with Improvements) module is proposed, which introduces two key innovations. First, hierarchical reciprocal attention (HRAMi) simultaneously captures cross-scale, cross-dimension feature interactions using progressive attention fusion. Second, residual mixing blends intermediate attention outputs through residual connections, stabilizing training and mitigating vanishing gradients in deeper layers. Formally, the core operation of the HRAMi module is defined as follows:

$$Y = \mathcal{F}\left(\mathcal{A}_s(X),\ \mathcal{A}_c(X)\right)$$

In this formulation, $X$ represents the input feature map and $Y$ the output feature map, while $\mathcal{A}_s(\cdot)$ and $\mathcal{A}_c(\cdot)$ denote lightweight spatial attention and channel attention operations, respectively. Moreover, $\mathcal{F}(\cdot,\cdot)$ indicates residual fusion with learned weights.

The fusion operator $\mathcal{F}$ is implemented as follows: the outputs of $\mathcal{A}_s$ and $\mathcal{A}_c$ are first concatenated along the channel dimension, forming a unified feature map. This concatenated feature is then processed sequentially by two depthwise separable convolution layers, each followed by a non-linear activation function (such as LeakyReLU):

$$\mathcal{F}\left(\mathcal{A}_s(X), \mathcal{A}_c(X)\right) = \delta\left(\mathrm{DWConv}\left(\delta\left(\mathrm{DWConv}\left(\left[\mathcal{A}_s(X) \,\|\, \mathcal{A}_c(X)\right]\right)\right)\right)\right)$$

where $\mathrm{DWConv}(\cdot)$ denotes a depthwise separable convolution, $\delta(\cdot)$ represents the activation function, and $[\cdot \,\|\, \cdot]$ indicates channel-wise concatenation. This structure enables efficient fusion of the spatial and channel attention features at multiple scales, reinforcing both pixel-level detail and semantic context.

These residual, cross-layer connections help maintain stable gradient flow even under severe occlusion or blurry inputs, enhancing the fine-grained localization capability for small objects. By strengthening cross-scale feature interactions, A2C2f_HRAMi improves the modeling of repetitive, near-symmetric wire patterns, which in turn sharpens the contrast with localized asymmetric targets such as nests.
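The efficiency argument for using depthwise separable convolutions in the fusion operator can be checked with simple parameter arithmetic. The sketch assumes a 3 × 3 kernel and a hypothetical 128-channel output from each attention branch (so 256 channels after concatenation, projected back to 128); these values are illustrative rather than taken from the RNDF configuration, and biases are ignored.

```python
"""Sketch: parameter count of a standard vs. depthwise separable convolution."""


def standard_conv_params(k, c_in, c_out):
    """k x k convolution mapping c_in -> c_out channels (no bias)."""
    return k * k * c_in * c_out


def dw_separable_params(k, c_in, c_out):
    """Depthwise k x k conv (one filter per input channel) + pointwise 1 x 1 conv (no bias)."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


if __name__ == "__main__":
    # Hypothetical: concatenation of two 128-channel attention outputs, fused back to 128.
    k, c_in, c_out = 3, 256, 128
    std = standard_conv_params(k, c_in, c_out)
    sep = dw_separable_params(k, c_in, c_out)
    print(std, sep, round(std / sep, 1))  # -> 294912 35072 8.4
```

In this configuration the separable layer needs roughly 8× fewer weights, which is what keeps the two-layer fusion lightweight.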

2.4. The SCSA Module

Attention mechanisms traditionally process spatial and channel cues sequentially, leading to suboptimal information utilization. Such sequential processing often fails to exploit the mutual reinforcement between the spatial and channel dimensions, limiting the model's ability to focus on salient features. To address this limitation, a Spatial and Channel Synergistic Attention (SCSA) module is integrated after the backbone to enhance both the capture of local details and the understanding of global context. The SCSA module comprises two key components. The first, Shareable Multi-Semantic Spatial Attention (SMSA), extracts diverse spatial semantic patterns through depth-shared lightweight convolutions. The second, Progressive Channel-Wise Self-Attention (PCSA), mitigates multi-semantic disparities among channels by using progressive compression and single-head self-attention. Formally, the SMSA output is defined as

$$X_s = \mathrm{SMSA}(X) = X \odot \sigma\left(\mathrm{GN}\left(\mathrm{DWConv}_H(X)\right)\right) \odot \sigma\left(\mathrm{GN}\left(\mathrm{DWConv}_W(X)\right)\right)$$

Subsequently, the PCSA refinement is computed by

$$X_c = \mathrm{PCSA}(X_s) = X_s \odot \sigma\left(\mathrm{Pool}\left(\mathrm{Softmax}\left(\frac{QK^{\top}}{\sqrt{C}}\right)V\right)\right)$$

where $Q$, $K$, and $V$ represent the projected queries, keys, and values derived from $X_s$, respectively; $C$ denotes the channel dimension; $\sigma(\cdot)$ denotes the sigmoid activation; $\mathrm{GN}(\cdot)$ denotes Group Normalization over grouped sub-features; $\mathrm{DWConv}_H$ and $\mathrm{DWConv}_W$ denote the depth-shared lightweight convolutions applied along the height and width directions; $\mathrm{Pool}(\cdot)$ denotes spatial pooling; and $\odot$ denotes element-wise multiplication with broadcasting. Thus, the final SCSA output can be summarized as

$$\mathrm{SCSA}(X) = \mathrm{PCSA}\left(\mathrm{SMSA}(X)\right)$$


This dual-stage attention design significantly enhances the robustness of the model, particularly under conditions involving severe background clutter and occlusions. Jointly enhancing spatial and channel cues also helps consolidate symmetric background regularities while surfacing asymmetric anomalies, aligning SCSA with the symmetry-aware objective.
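The PCSA step relies on single-head self-attention computed across channels. The pure-Python sketch below shows that core computation on a toy tensor: a channel-by-channel similarity matrix scaled by the square root of C, a row-wise softmax, average pooling, and a sigmoid gate applied back to the features. It is a simplification under stated assumptions: Q, K, and V use identity projections instead of learned ones, the input is treated as already compressed, and Group Normalization and the SMSA branch are omitted. The function names are hypothetical.

```python
"""Sketch: single-head channel self-attention with a sigmoid gate (simplified PCSA-style step)."""
import math


def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def channel_attention_gate(x):
    """x: C channels, each a list of N (compressed) spatial values.

    Simplifying assumption: Q = K = V = x (identity projections).
    Returns (gated features, per-channel gates, C x C attention weights).
    """
    c, n = len(x), len(x[0])
    q = k = v = x
    # Channel-to-channel similarity, scaled by sqrt(C).
    scores = [[sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(c) for j in range(c)]
              for i in range(c)]
    weights = [softmax(row) for row in scores]
    # Each output channel is a weighted mix of the value channels.
    attn = [[sum(weights[i][j] * v[j][p] for j in range(c)) for p in range(n)] for i in range(c)]
    # Average pooling over positions, then a sigmoid gate per channel.
    gates = [sigmoid(sum(row) / n) for row in attn]
    gated = [[g * val for val in ch] for g, ch in zip(gates, x)]
    return gated, gates, weights


if __name__ == "__main__":
    toy = [[0.2, 0.8, 0.5], [1.0, -0.3, 0.4], [0.0, 0.1, -0.6]]  # C = 3, N = 3
    gated, gates, weights = channel_attention_gate(toy)
    print([round(g, 3) for g in gates])
```

The gate is per channel, so a channel that agrees with strongly responding channels is kept while weakly supported channels are damped; learned projections in a real module would decide what "agreement" means.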

2.5. The Focaler-GIoU Loss

Localization accuracy remains crucial for effectively detecting small foreign objects. However, commonly used loss functions often insufficiently penalize difficult-to-regress bounding boxes, particularly in heavily occluded or visually complex scenarios. To overcome this issue, the Focaler-GIoU loss is adopted, which extends the Generalized Intersection over Union (GIoU) loss with a focal-style weighting. Specifically, the Focaler-GIoU loss is defined as follows:

$$\mathcal{L}_{\text{Focaler-GIoU}} = \left(1 - \mathrm{GIoU}\right)^{\gamma}$$

where $\gamma$ is a focusing parameter, typically set to 2.0, and GIoU measures the geometric overlap between the predicted and ground truth boxes, accommodating both overlapping and non-overlapping cases. This formulation dynamically adjusts the gradient contribution according to prediction quality: hard samples (with poor GIoU values) receive amplified gradients, while easy samples (with good GIoU values) have their gradients suppressed.

Consequently, bounding box regression becomes more stable, significantly improving the localization performance for small, challenging targets such as bird nests. In practice, this improves the localization along slender, symmetric wire boundaries where slight offsets can mimic symmetry breaks, thereby stabilizing symmetry-aware detection.
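The loss behavior described above can be reproduced numerically. The sketch computes GIoU for axis-aligned boxes and applies the focal-style form (1 − GIoU)^γ with γ = 2.0. Treating that form as the exact loss is an assumption based on the description in this section; the published Focaler-IoU family, for instance, remaps IoU through a linear interval, so implementations may differ. Function names are hypothetical.

```python
"""Sketch: GIoU and a focal-style GIoU loss (assumed form (1 - GIoU) ** gamma)."""


def area(b):
    return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])


def giou(a, b):
    """Generalized IoU for boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = area(a) + area(b) - inter
    iou = inter / union
    # Smallest axis-aligned box enclosing both inputs.
    enclose = (max(a[2], b[2]) - min(a[0], b[0])) * (max(a[3], b[3]) - min(a[1], b[1]))
    return iou - (enclose - union) / enclose


def focal_giou_loss(pred, target, gamma=2.0):
    return (1.0 - giou(pred, target)) ** gamma


def loss_slope(g, gamma=2.0):
    """d/dg of (1 - g) ** gamma; a plain 1 - GIoU loss has slope -1 everywhere."""
    return -gamma * (1.0 - g) ** (gamma - 1)


if __name__ == "__main__":
    target = (0.0, 0.0, 2.0, 2.0)
    print(giou((1.0, 1.0, 3.0, 3.0), target))  # partial overlap
    print(giou((3.0, 0.0, 4.0, 1.0), target))  # no overlap: GIoU < 0
    print(loss_slope(0.2), loss_slope(0.8))    # hard vs. easy sample
```

With γ = 2, the slope magnitude is 2(1 − GIoU): it exceeds the plain loss's constant slope of 1 when GIoU < 0.5 (hard samples) and falls below it when GIoU > 0.5 (easy samples), matching the gradient behavior described in the text.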

2.6. The Training Setup

All experiments are conducted on the self-constructed bird nest detection dataset tailored to high-speed railway catenary systems. The models are built upon the YOLOv12 architecture and are trained for 50 epochs using stochastic gradient descent (SGD) with an initial learning rate of 0.01. A linear learning rate decay schedule is adopted, along with a warm-up phase during the first 3 epochs to accelerate the convergence and stabilize the early training dynamics.
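The schedule described above can be sketched as a function of (possibly fractional) training progress in epochs. The final learning-rate ratio is not stated in the text, so `final_ratio=0.01` is a hypothetical value, and the warm-up is modeled as a simple linear ramp from zero; YOLO training pipelines commonly ramp per iteration and may differ in detail.

```python
"""Sketch: linear warm-up followed by linear learning-rate decay (hypothetical final ratio)."""


def learning_rate(epoch, total_epochs=50, lr0=0.01, warmup_epochs=3, final_ratio=0.01):
    """Learning rate at training progress `epoch` (float allowed, 0 <= epoch <= total_epochs)."""
    # Linear decay from lr0 at epoch 0 to lr0 * final_ratio at the last epoch.
    decay = (1.0 - epoch / total_epochs) * (1.0 - final_ratio) + final_ratio
    lr = lr0 * decay
    # Linear warm-up: scale the scheduled value from 0 up to 1 over the first epochs.
    if epoch < warmup_epochs:
        lr *= epoch / warmup_epochs
    return lr


if __name__ == "__main__":
    for e in (0, 1.5, 3, 25, 50):
        print(e, round(learning_rate(e), 6))
```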

Baseline. The YOLOv12-S variant is selected as the baseline model because of its balance of speed and accuracy. All proposed modifications are implemented on top of this baseline's structural framework and scaling strategy. To ensure fair comparisons, no additional data augmentation methods or optimization tricks are introduced beyond those commonly used in the original YOLOv12 training pipeline. This design choice helps ensure that performance improvements can be attributed to the architectural and loss function enhancements proposed in this study rather than to differences in the training recipe.

2.7. The Inference Procedure

The inference workflow of the proposed RNDF model is summarized in Algorithm 1.

| Algorithm 1. The inference procedure of the proposed Railway Nest Detection Framework (RNDF). |
| --- |
| Input: I, input image |
| 1: Preprocess input image I (resize, normalization) |
| 2: Extract backbone features from I: |
| 3: Apply convolutions |
| 4: Pass through stacked A2C2f_HRAMi modules |
| 5: Refine feature maps using the SCSA module |
| 6: Fuse multi-scale features through the neck (P3, P4, P5) |
| 7: Generate bounding box predictions and objectness scores via the detection head |
| 8: Apply non-maximum suppression (NMS) to filter overlapping boxes |
| 9: Return the final detection results B |
| Output: B, final detected bounding boxes |
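Step 8 of Algorithm 1 can be illustrated with a minimal greedy NMS for a single class. The IoU threshold of 0.5 is a hypothetical value, since the threshold used at inference is not stated, and production detectors typically rely on vectorized library routines rather than this pure-Python loop.

```python
"""Sketch: greedy single-class non-maximum suppression (hypothetical IoU threshold)."""


def iou(a, b):
    """IoU for boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Return indices of kept boxes, ordered by descending score."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Drop every remaining box that overlaps the kept box too strongly.
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    scores = [0.9, 0.8, 0.7]
    print(nms(boxes, scores))  # -> [0, 2]
```

The two overlapping boxes have an IoU of about 0.68, so the lower-scoring one is suppressed, while the distant third box survives.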

3. Results

3.1. Ablation Studies

To evaluate the individual and combined contributions of the SCSA module, the A2C2f_HRAMi module, and the Focaler-GIoU loss function, a series of ablation experiments are conducted without loading pre-trained weights. Each variant is trained for 50 epochs on the constructed bird nest detection dataset. The ablation results are summarized in Table 3. To ensure reliability, each configuration is repeated ten times with different random seeds. Following deployment-oriented practice, the reported results correspond to the best-performing trained model among these runs, as real-world applications typically select the most accurate model for deployment.

The results reveal several key observations. The SCSA module alone improves recall (from 0.575 to 0.654) and mAP@0.5 (from 0.656 to 0.683), demonstrating its effectiveness in enhancing spatial–channel feature sensitivity and increasing the detection of positive samples; however, a slight drop in precision (from 0.737 to 0.724) indicates a minor trade-off between improved recall and classification confidence when the module is applied in isolation. Meanwhile, the Focaler-GIoU loss yields a notable improvement in mAP@0.5:0.95 (from 0.237 to 0.277), reflecting more accurate bounding box regression, particularly for small or difficult-to-localize targets. In addition, the A2C2f_HRAMi module contributes a steady improvement in feature robustness, shown by increases in both precision (up to 0.817) and mAP@0.5 (0.672), while also modestly enhancing localization quality. Viewed through a symmetry-aware lens, SCSA and A2C2f_HRAMi help encode the near-symmetric wire regularities, whereas Focaler-GIoU sharpens the localization of symmetry-breaking anomalies such as nests.

Moreover, combinations of the modules further confirm their complementary nature. The configuration using both SCSA and Focaler-GIoU (ID 6) achieves the highest recall (0.688), highlighting its sensitivity to difficult positive instances. The combination of A2C2f_HRAMi and Focaler-GIoU (ID 7) strikes a strong balance between detection sensitivity and regression accuracy, achieving a recall of 0.671 and an mAP@0.5:0.95 of 0.276. The combination of SCSA and A2C2f_HRAMi yields a high precision (0.766), although its recall (0.589) is moderately lower, suggesting a trade-off that prioritizes detection correctness over exhaustive retrieval. Ultimately, the full integration of all modules, denoted as the Railway Nest Detection Framework (RNDF), achieves the best overall performance, with mAP@0.5 reaching 0.695 and mAP@0.5:0.95 reaching 0.299. These results indicate a synergistic effect of hierarchical attention, spatial–channel synergy, and focal-based regression optimization, supporting the robustness of the proposed framework under complex small object detection conditions.

3.2. Comparative Experiments

The proposed Railway Nest Detection Framework (RNDF) is compared against several mainstream object detectors, including YOLOv5 [15], YOLOv11 [9], YOLOv12 [10] (baseline), DEIM [29], and RT-DETR [30]. All comparison models are trained from scratch under consistent settings without using pre-trained weights. The comparative results are presented in Table 4.

As shown in Table 4, the RNDF outperforms all compared detectors across all major evaluation metrics. In terms of mAP@0.5, the RNDF achieves the highest score of 0.695, exceeding YOLOv5 (0.480), YOLOv11 (0.609), YOLOv12 (0.656), DEIM (0.619), and RT-DETR (0.586) by margins ranging from 0.039 (YOLOv12) to 0.215 (YOLOv5). Similarly, the RNDF attains the highest mAP@0.5:0.95 of 0.299, demonstrating superior fine-grained localization compared with DEIM (0.261) and RT-DETR (0.246).

In terms of precision and recall, the RNDF also leads with 0.752 and 0.652, respectively, showing stronger detection reliability and target retrieval than all baseline models. Notably, although DEIM and RT-DETR are regarded as strong performers in small object detection, these results indicate that the RNDF surpasses both models on this dataset even in small-object-sensitive metrics such as recall and mAP@0.5:0.95.

In conclusion, these results indicate that the proposed architectural improvements, including hierarchical attention fusion and focal-based regression optimization, enhance both localization precision and robustness in challenging scenarios involving small, occluded, and cluttered targets. Moreover, the RNDF achieves these advantages without relying on pre-trained weights, suggesting strong adaptability to data-scarce domains and to real-world deployments where lightweight training and reliable detection are critical. This superiority aligns with a symmetry-aware view: by learning the regular, near-symmetric catenary context and elevating responses to departures from that regularity, the RNDF improves both detection reliability and fine-grained localization.

3.3. Practical Threshold Validation

To further connect the experimental results with real-world operational requirements, the RNDF is additionally evaluated on 15 independent video sequences containing bird nest anomalies. For practical interpretation, two frame-level metrics are reported: the miss-detection rate, defined as the number of nest-containing frames in which the detector fails to detect a nest divided by the total number of nest-containing frames, and the false alarm rate, defined as the number of frames incorrectly flagged as containing nests divided by the total number of frames predicted to contain nests.

Across the 15 test videos, the RNDF achieves an average miss-detection rate of only 3.68%, well below the practical threshold of 5% typically required in safety-critical railway inspection tasks. The false alarm rate is 10.94%, which is also below the practical tolerance level of 15%. Although false alarms mean that some normal frames are incorrectly flagged as nests, such cases are less critical in practice, since all alarms are subsequently verified by human inspectors who can efficiently filter out spurious detections before field maintenance is scheduled. This operating mode makes minimizing missed detections the foremost priority, and the results demonstrate that the RNDF meets this requirement while keeping false alarms within acceptable limits. The detailed results are summarized in Table 5.
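The two frame-level metrics defined above can be computed from per-frame ground truth and prediction flags, as in the sketch below. By this definition, the false alarm rate equals one minus frame-level precision. The sketch makes two assumptions not spelled out in the text: a frame counts as detected if any nest is predicted in it (box placement is not checked), and the reported average is a mean of per-video rates rather than a pooled rate. Function names are hypothetical.

```python
"""Sketch: frame-level miss-detection and false alarm rates for inspection videos."""


def frame_rates(gt, pred):
    """gt, pred: per-frame booleans (True = nest present / nest predicted).

    miss rate        = missed nest frames / all nest frames
    false alarm rate = wrongly flagged frames / all flagged frames
    """
    nest_frames = sum(gt)
    flagged_frames = sum(pred)
    missed = sum(1 for g, p in zip(gt, pred) if g and not p)
    false_alarms = sum(1 for g, p in zip(gt, pred) if p and not g)
    miss_rate = missed / nest_frames if nest_frames else 0.0
    false_alarm_rate = false_alarms / flagged_frames if flagged_frames else 0.0
    return miss_rate, false_alarm_rate


def average_over_videos(videos):
    """Mean of per-video rates; `videos` is a list of (gt, pred) pairs (assumed averaging)."""
    rates = [frame_rates(gt, pred) for gt, pred in videos]
    n = len(rates)
    return sum(r[0] for r in rates) / n, sum(r[1] for r in rates) / n


if __name__ == "__main__":
    gt = [True, True, True, True, False, False]
    pred = [True, True, True, False, True, True]
    print(frame_rates(gt, pred))  # -> (0.25, 0.4)
```

Note the asymmetric denominators: the miss rate is normalized by real nest frames, whereas the false alarm rate is normalized by flagged frames, so the two numbers are not complementary.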

3.4. Precision–Recall Curve Analysis

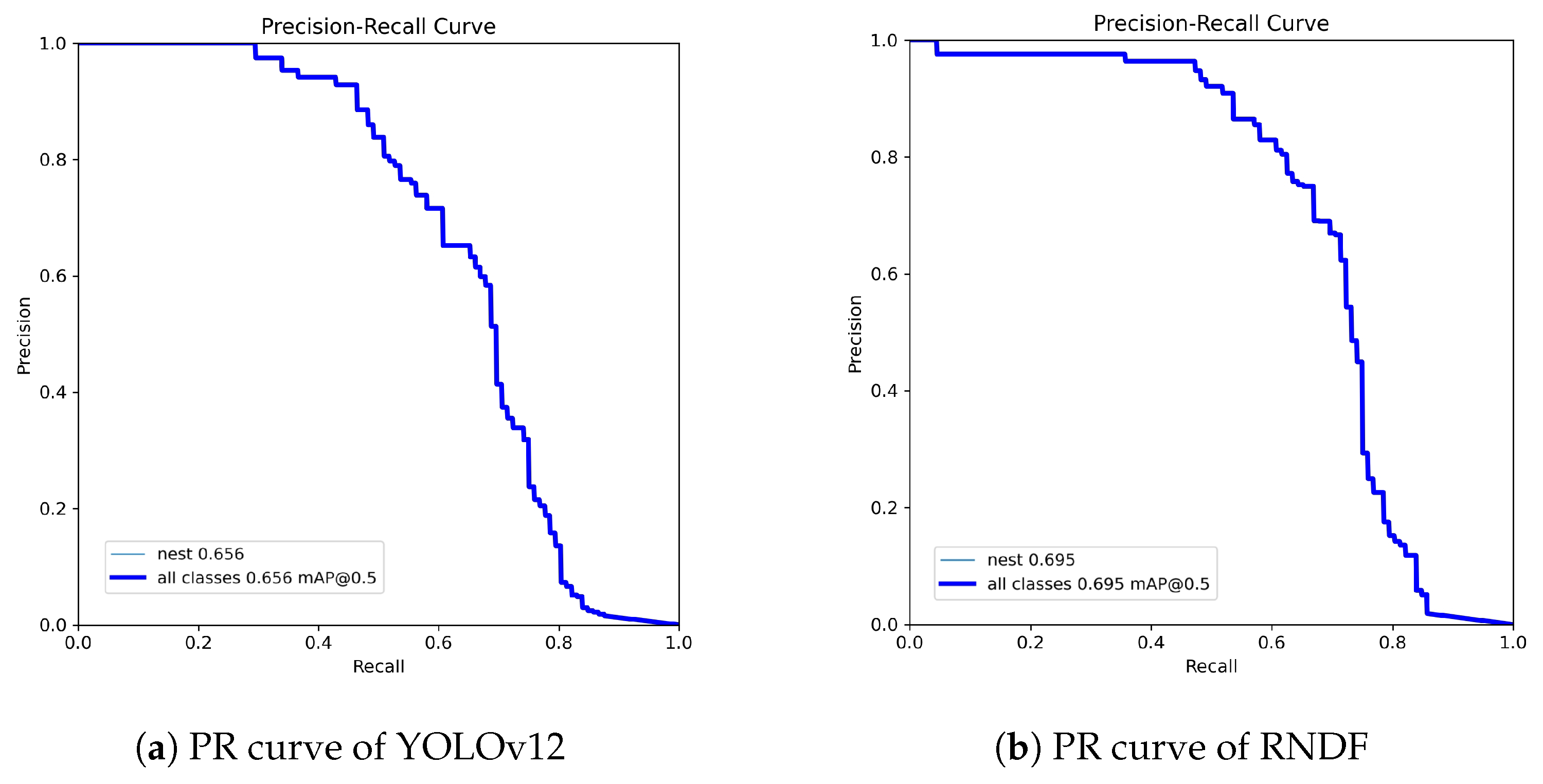

To further evaluate detection performance, precision–recall (PR) curves are plotted for the baseline YOLOv12 and the proposed RNDF models on the test set. Figure 4 presents the PR curves for comparison.

As shown in Figure 4, the RNDF achieves higher precision across a wide range of recall levels, particularly in high-recall regions, indicating a better balance between precision and recall. This trend is consistent with the quantitative results reported earlier, in which the RNDF outperforms the baseline model with substantial improvements in both mAP@0.5 and mAP@0.5:0.95. The elevated PR curve further indicates that the architectural enhancements, namely the A2C2f_HRAMi module, the SCSA module, and the Focaler-GIoU loss, collectively improve detection sensitivity and localization stability under challenging conditions. From a symmetry-aware perspective, the PR gains reflect better encoding of repetitive, symmetric line structures and heightened responses to the localized deviations introduced by nests.
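For reference, the sketch below computes average precision as the area under the monotone precision envelope of a PR curve (all-point interpolation) and lists the ten IoU thresholds averaged in mAP@0.5:0.95. This is a generic illustration rather than the evaluator used in the paper: COCO-style tooling uses 101-point interpolation instead, so values can differ slightly. With a single "nest" class, mAP equals that class's AP.

```python
"""Sketch: all-point interpolated AP and the IoU thresholds used by mAP@0.5:0.95."""


def average_precision(recalls, precisions):
    """AP from PR points sorted by increasing recall."""
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum the rectangles under the envelope at each change in recall.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


# mAP@0.5:0.95 averages AP over IoU thresholds 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def map_50_95(ap_at_threshold):
    """ap_at_threshold: callable returning AP at a given IoU threshold."""
    return sum(ap_at_threshold(t) for t in IOU_THRESHOLDS) / len(IOU_THRESHOLDS)


if __name__ == "__main__":
    print(average_precision([0.5, 1.0], [1.0, 0.5]))  # -> 0.75
    print(IOU_THRESHOLDS)
```

Because mAP@0.5:0.95 also averages over strict thresholds such as 0.9 and 0.95, it rewards tight boxes much more than mAP@0.5 does, which is why regression-focused changes like Focaler-GIoU show up most clearly in that metric.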

3.5. The Efficiency Analysis

To further validate the real-time feasibility of the proposed RNDF, its efficiency is systematically compared against representative baselines. The comparison covers three key aspects: parameter size (Params), computational complexity (GFLOPs), and inference speed (latency and FPS), all measured at an input resolution of 640 × 640. The detailed results are presented in Table 6, which provides a holistic view of the trade-off between accuracy and efficiency, a trade-off that is particularly important for safety-critical railway monitoring tasks.

Table 6 summarizes the efficiency comparison between the RNDF and representative baseline detectors, including YOLO series models, RT-DETR, and DEIM. The RNDF achieves a favorable trade-off between computational cost and inference speed, ranking among the most efficient models while maintaining competitive accuracy. Notably, although the RNDF introduces slightly more parameters (2.79 M vs. 2.55 M) and more computation (9.6 vs. 6.2 GFLOPs) than the original YOLOv12 because of the additional attention modules, its inference latency is substantially lower (6.91 ms vs. 11.48 ms). This seemingly counterintuitive outcome is attributed to the reduced overall network depth (289 vs. 497 layers) and the use of GPU-friendly attention mechanisms, which enhance parallelism and reduce memory access overhead. Consequently, the RNDF delivers faster inference in practice despite its higher theoretical FLOPs, supporting its real-time feasibility for deployment in safety-critical railway monitoring applications.
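The relative changes quoted above follow from simple arithmetic on the reported figures. The sketch assumes FPS is the reciprocal of per-image latency; if the FPS values in Table 6 include preprocessing or NMS time, or are measured with batching, they will not match this conversion exactly. Function names are hypothetical.

```python
"""Sketch: relative efficiency changes of RNDF vs. YOLOv12 from the reported figures."""


def fps_from_latency(latency_ms):
    """Frames per second, assuming FPS = 1000 / per-image latency in milliseconds."""
    return 1000.0 / latency_ms


def relative_change(new, old):
    """Signed relative change of `new` with respect to `old`."""
    return (new - old) / old


if __name__ == "__main__":
    rndf = {"params_m": 2.79, "gflops": 9.6, "latency_ms": 6.91}
    yolov12 = {"params_m": 2.55, "gflops": 6.2, "latency_ms": 11.48}
    for key in rndf:
        print(key, f"{relative_change(rndf[key], yolov12[key]):+.1%}")
    print("FPS:", round(fps_from_latency(6.91), 1), "vs.", round(fps_from_latency(11.48), 1))
```

The numbers make the trade-off explicit: roughly 9% more parameters and 55% more GFLOPs, yet about 40% lower latency (about 145 vs. 87 FPS under the reciprocal assumption), which is why FLOPs alone are a poor proxy for real-time speed here.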

3.6. Visualization Results

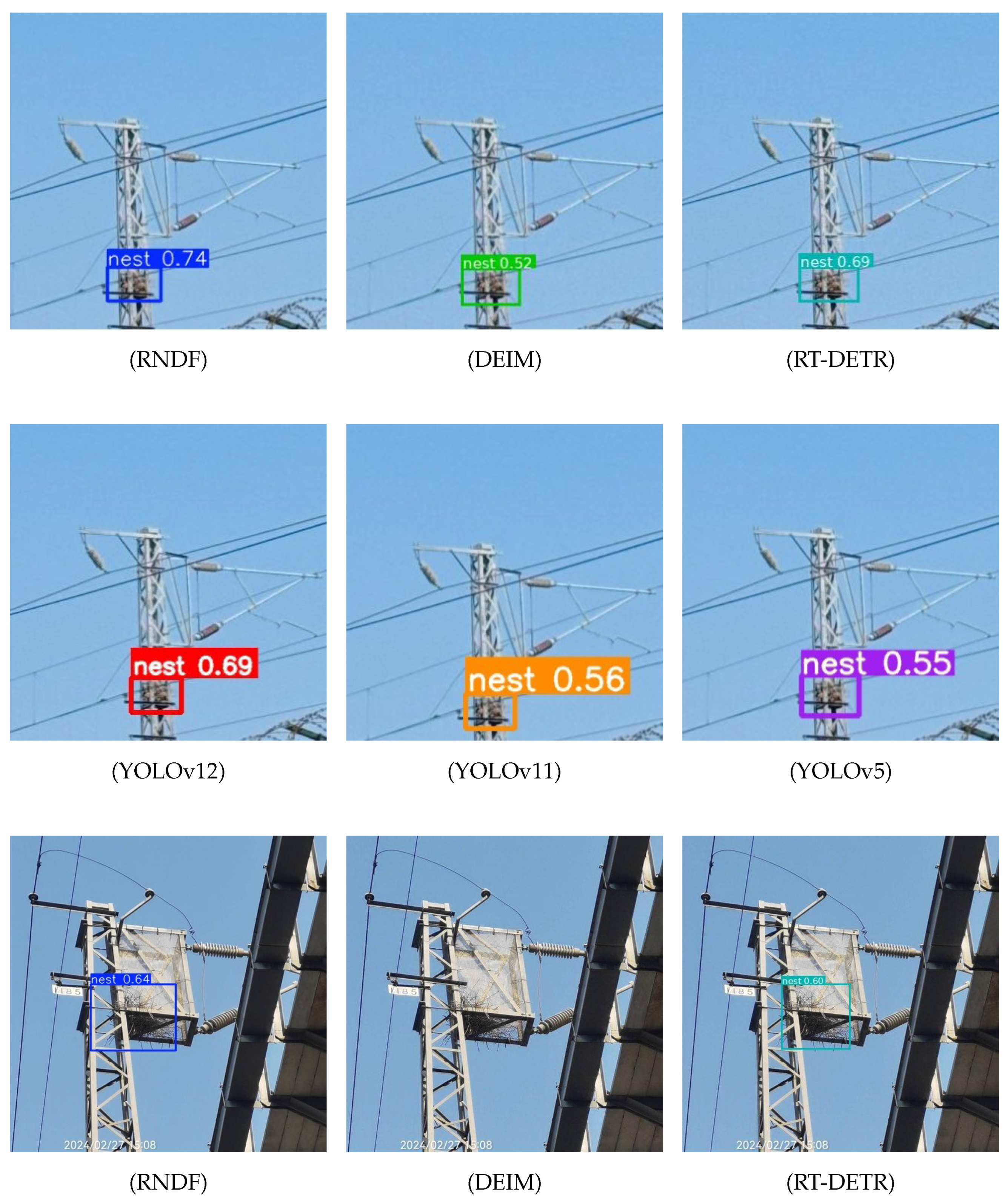

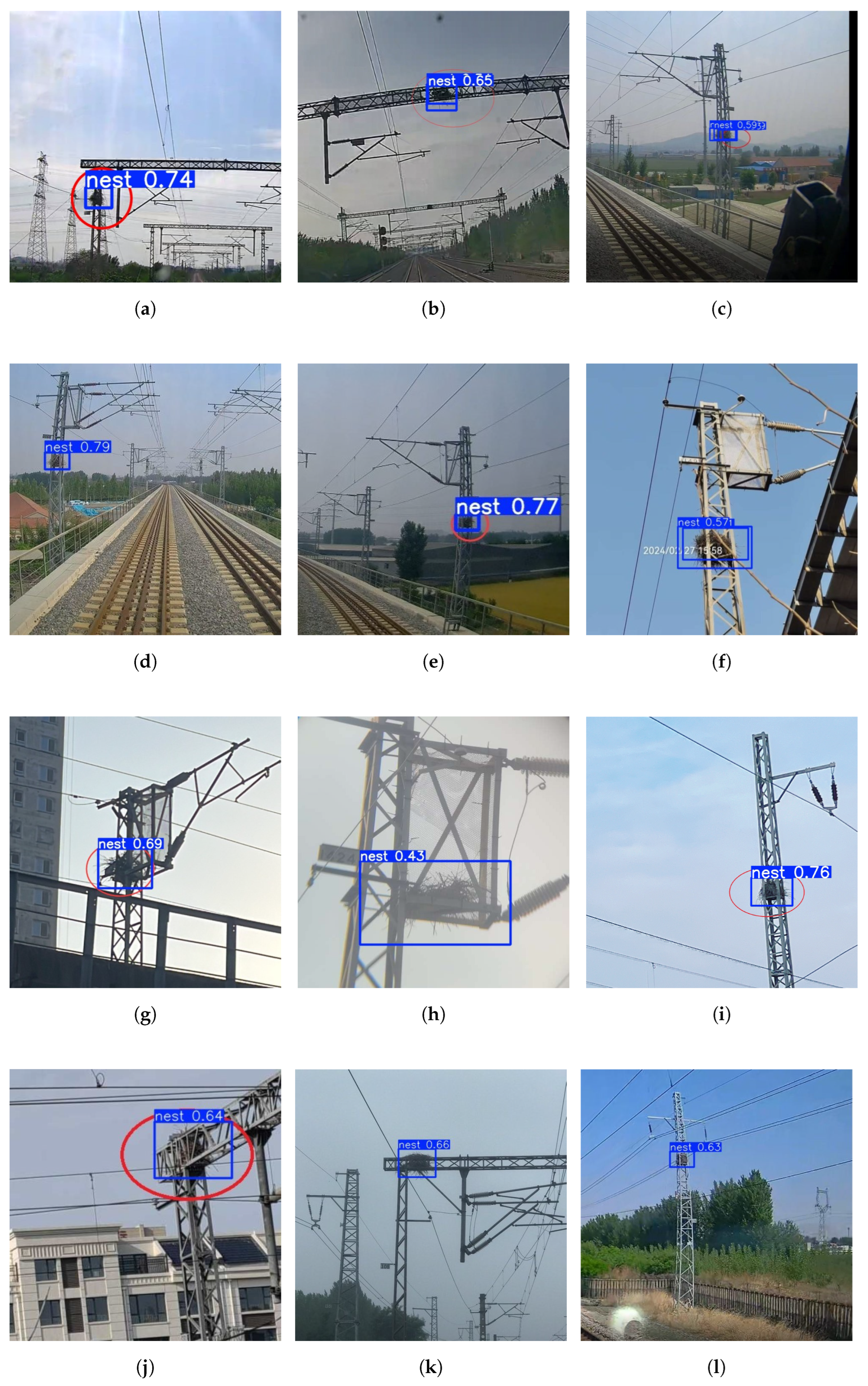

Qualitative visualization results are provided to compare the detection performance between the proposed RNDF and a range of mainstream detectors. For clarity, bounding boxes corresponding to different models are displayed in distinct colors: RNDF (blue, RGB: 0, 0, 255), YOLOv12 (red, RGB: 255, 0, 0), YOLOv11 (orange, RGB: 255, 140, 0), YOLOv5 (purple, RGB: 160, 32, 240), DEIM (green, RGB: 0, 200, 0), and RT-DETR (cyan, RGB: 0, 180, 180). Each bounding box also explicitly includes the predicted class label (nest) together with its confidence score to ensure interpretability. As shown in

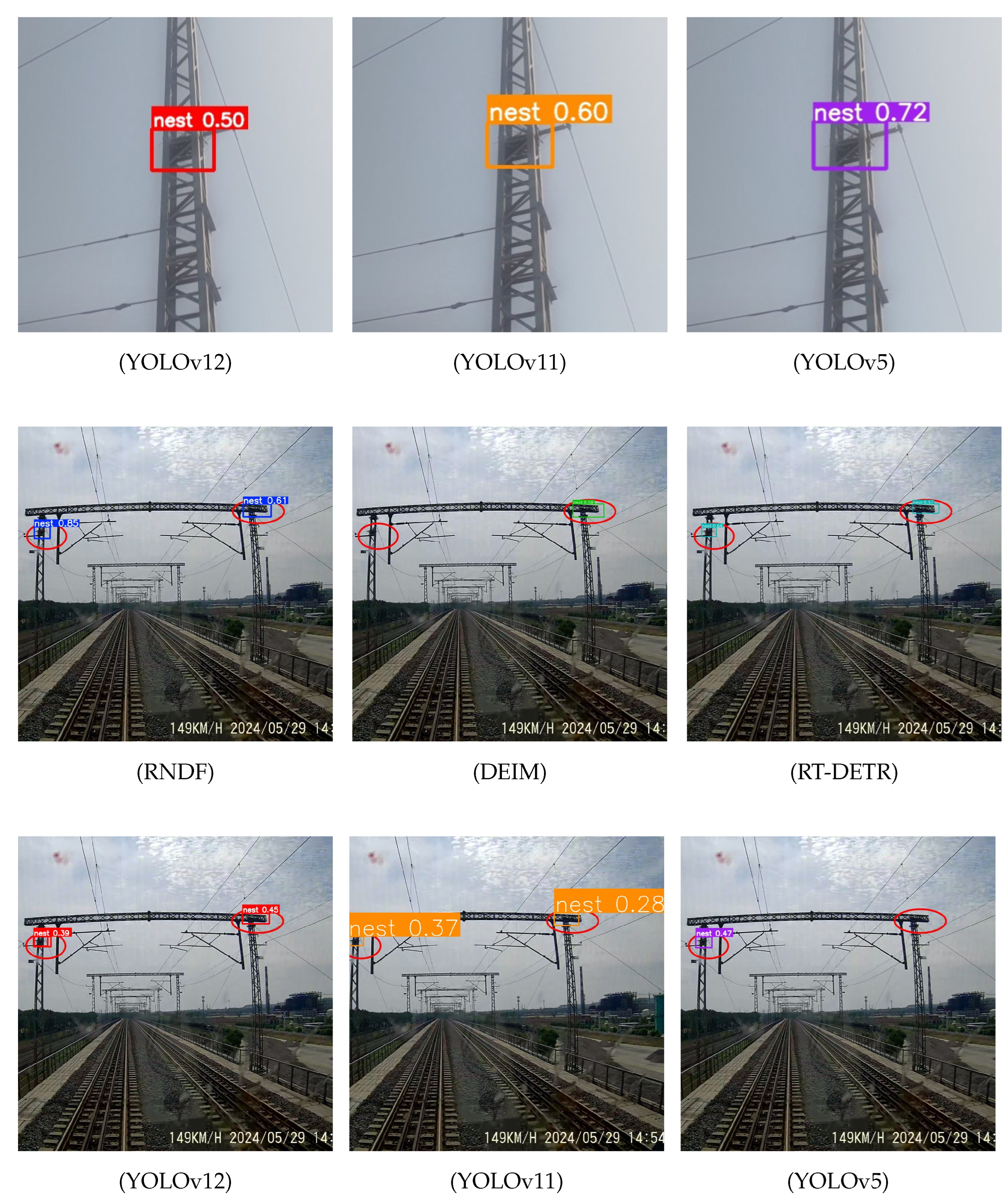

Figure 5, which includes multiple detection examples under complex environmental conditions such as fog, occlusion, and varied lighting, the RNDF consistently outperforms competing models—including YOLOv12, YOLOv5, YOLOv11, DEIM, and RT-DETR—by producing higher-confidence predictions and more accurate localization. In these scenes, the catenary forms highly regular, near-symmetric line patterns, while nests manifest as localized asymmetries; the RNDF exploits this symmetry prior to separate the regular structure from anomalies better. In addition,

Figure 6 showcases a diverse set of real-world detection cases where the RNDF successfully identifies bird nests that are completely missed by other methods.

As Figure 5 illustrates, the gains over YOLOv12 and the other state-of-the-art detectors are most evident in scenarios involving small targets, partial occlusion, and low visibility, where those methods often produce false negatives or imprecise bounding boxes.

The superiority of the RNDF is further demonstrated in Figure 6, which collects a diverse set of real-world detection examples. Each image corresponds to a case in which the competing methods fail to detect the bird nest entirely, whereas the RNDF identifies it with high confidence. Visually, the nests appear as localized asymmetries against the symmetric catenary lines, and the RNDF highlights these departures more reliably than the competing detectors. These qualitative results indicate that the proposed improvements enable robust and reliable bird nest detection in complex high-speed railway catenary environments.

Overall, these visual comparisons indicate that the RNDF improves detection completeness, accuracy, and robustness relative to existing mainstream detectors. The architectural enhancements are tailored to the challenges of small object detection under complex real-world conditions in high-speed railway catenary environments, and the results demonstrate the model's reliability in handling small, occluded, and visually ambiguous targets, yielding more accurate and interpretable detections in practical deployment scenarios.
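The color scheme listed at the start of this subsection can be kept in a single lookup table so that every comparison figure uses the same mapping. The sketch below is an assumption about how such plots might be produced, not the authors' plotting code; the helper names `box_label` and `to_bgr` and the label format are hypothetical. Because OpenCV stores images in BGR order by default, RGB tuples need to be reversed before being passed to its drawing functions.

```python
from typing import Dict, Tuple

RGB = Tuple[int, int, int]

# Box colors used in the qualitative comparison (values as listed in the text).
MODEL_COLORS: Dict[str, RGB] = {
    "RNDF": (0, 0, 255),
    "YOLOv12": (255, 0, 0),
    "YOLOv11": (255, 140, 0),
    "YOLOv5": (160, 32, 240),
    "DEIM": (0, 200, 0),
    "RT-DETR": (0, 180, 180),
}

def box_label(class_name: str, confidence: float) -> str:
    """Text drawn next to a box, e.g. 'nest 0.87' (hypothetical format)."""
    return f"{class_name} {confidence:.2f}"

def to_bgr(rgb: RGB) -> RGB:
    """Reverse the channel order for libraries that expect BGR (such as OpenCV)."""
    return (rgb[2], rgb[1], rgb[0])

for model, color in MODEL_COLORS.items():
    print(model, color, "-> BGR", to_bgr(color), "|", box_label("nest", 0.9))
```

Keeping the mapping in one place also makes it easy to check that no two models share a color.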

3.7. Parameter Analysis

To further investigate the sensitivity of the proposed RNDF to critical architectural and training hyperparameters, a series of controlled experiments is conducted by varying one parameter at a time while keeping the others fixed. The results are visualized in Figure 7 and Figure 8, reflecting how different configurations affect the detection performance, especially in terms of mAP@0.5 and mAP@0.5:0.95.
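The one-factor-at-a-time protocol described above can be sketched as a small generator that derives each run from a reference configuration by changing exactly one key. The reference values are the final settings reported later in this section; the candidate lists contain only the values named in the text (the smallest tested compression ratio e is omitted because its value is not stated here). All names in the code are illustrative.

```python
from typing import Any, Dict, Iterator, List, Tuple

# Reference configuration (the final settings reported in this study).
BASE_CONFIG: Dict[str, Any] = {
    "head_num": 4, "window_size": 7, "e": 0.5, "epochs": 50, "batch": 64,
}

# Candidate values named in the text for each parameter.
SEARCH_SPACE: Dict[str, List[Any]] = {
    "head_num": [2, 4, 8],
    "window_size": [3, 5, 7],
    "e": [0.5, 1.0],
    "epochs": [50, 75, 100],
    "batch": [16, 32, 64],
}

def one_factor_at_a_time(
    base: Dict[str, Any], space: Dict[str, List[Any]]
) -> Iterator[Tuple[str, Any, Dict[str, Any]]]:
    """Yield (parameter, value, config) triples that differ from `base` in one key."""
    for name, values in space.items():
        for value in values:
            if value == base[name]:
                continue  # the reference configuration is trained once, separately
            config = dict(base)
            config[name] = value
            yield name, value, config

runs = list(one_factor_at_a_time(BASE_CONFIG, SEARCH_SPACE))
for name, value, _ in runs:
    print(f"vary {name} -> {value}")
```

Holding all other keys at the reference values is what allows each panel of Figures 7 and 8 to be read as the effect of a single parameter; interactions between parameters are not explored by this design.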

3.7.1. SCSA Parameters

The effect of two core parameters in the SCSA module, head_num and window_size, is analyzed in Figure 7a,b. Setting head_num = 4 yields the best performance, achieving the highest mAP@0.5 (0.695) and mAP@0.5:0.95 (0.299). In contrast, both head_num = 2 and head_num = 8 degrade performance, with mAP@0.5:0.95 falling to 0.258 and 0.251, respectively. This suggests that too few attention heads limit feature diversity, whereas too many dilute the effective representation. Similarly, a window_size of 7 provides the best trade-off, with mAP@0.5:0.95 reaching 0.299. Smaller windows (e.g., 3 and 5) yield slightly lower results, indicating that, within the tested range, a larger window better balances global and local spatial dependencies. These findings confirm that both attention granularity and receptive field size are crucial to effective spatial–channel interaction. Based on these results, head_num = 4 and window_size = 7 are selected for the final model, as this configuration consistently delivers the best balance across all key metrics.
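In standard multi-head attention, the channel dimension is divided evenly among the heads, so head_num fixes both the number of independent attention patterns and the number of channels each head sees. The sketch below assumes that SCSA follows this convention and uses a hypothetical channel count of 256; it illustrates the trade-off discussed above and is not taken from the SCSA implementation.

```python
from typing import List, Sequence

def split_heads(channels: Sequence[float], head_num: int) -> List[List[float]]:
    """Split a channel vector into `head_num` equal, contiguous groups (one per head)."""
    if head_num <= 0 or len(channels) % head_num != 0:
        raise ValueError("channel count must be a positive multiple of head_num")
    head_dim = len(channels) // head_num
    return [list(channels[i * head_dim:(i + 1) * head_dim]) for i in range(head_num)]

C = 256  # hypothetical channel count at the SCSA input (not stated in the text)
for head_num in (2, 4, 8):  # the head counts compared in Figure 7a
    heads = split_heads([0.0] * C, head_num)
    print(f"head_num={head_num}: {len(heads)} heads x {len(heads[0])} channels")
```

With 2 heads each head attends over 128 channels, while with 8 heads each sees only 32. One plausible reading of the results is that four heads of 64 channels strike the balance between pattern diversity and per-head capacity, although the experiments above do not isolate this mechanism.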

3.7.2. A2C2f_HRAMi Parameters

Figure 7c presents the influence of the channel compression ratio e in the A2C2f_HRAMi module. The default value e = 0.5 yields the best overall performance, achieving the highest mAP@0.5 (0.695) and mAP@0.5:0.95 (0.299). When e is set too small, the precision increases to 0.809, but the recall and mAP scores drop significantly, indicating underfitting due to excessive compression. Conversely, when e increases to 1.0, the recall decreases to 0.590 and mAP@0.5 drops to 0.664, suggesting that an excessive channel width leads to over-parameterization without a performance gain. These results confirm that a balanced compression ratio is essential for maintaining sufficient feature diversity and effective residual attention learning. Therefore, e = 0.5 is selected as the final configuration of the RNDF.
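In Ultralytics-style C2f and A2C2f blocks, the hidden width is computed as int(c2 * e), with e = 0.5 as the default. Assuming A2C2f_HRAMi keeps this convention, the sketch below shows how e scales the width and the weights of a simplified pair of 1x1 projections. The block width of 256 is hypothetical, and biases, normalization layers, and the inner attention blocks are ignored.

```python
def hidden_channels(c_out: int, e: float) -> int:
    """Hidden width under the int(c2 * e) convention (assumed for A2C2f_HRAMi)."""
    return int(c_out * e)

def projection_weights(c_in: int, c_out: int, e: float) -> int:
    """Weights of a bias-free entry (c_in -> hidden) and exit (hidden -> c_out) 1x1 conv pair."""
    c_hidden = hidden_channels(c_out, e)
    return c_in * c_hidden + c_hidden * c_out

C = 256  # hypothetical input/output width of one block
for e in (0.5, 1.0):
    print(f"e={e}: hidden={hidden_channels(C, e)}, "
          f"projection weights={projection_weights(C, C, e)}")
```

Doubling e from 0.5 to 1.0 doubles these projection weights, and any hidden-to-hidden convolution inside the block grows quadratically with the hidden width. This is consistent with the over-parameterization interpretation given above.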

Figure 7.

The effect of architectural parameters on the detection performance of the RNDF. (a) The variation in the head number in the SCSA module: four heads yield the best trade-off, achieving the highest mAP scores, while too few or too many heads degrade the performance. (b) The window size in the SCSA module: a size of 7 provides the optimal balance between local and global spatial dependencies. (c) The channel compression ratio e in the A2C2f_HRAMi module: the default value of 0.5 achieves the best overall performance, whereas overly small or large ratios lead to underfitting or over-parameterization.

3.7.3. Training Configuration

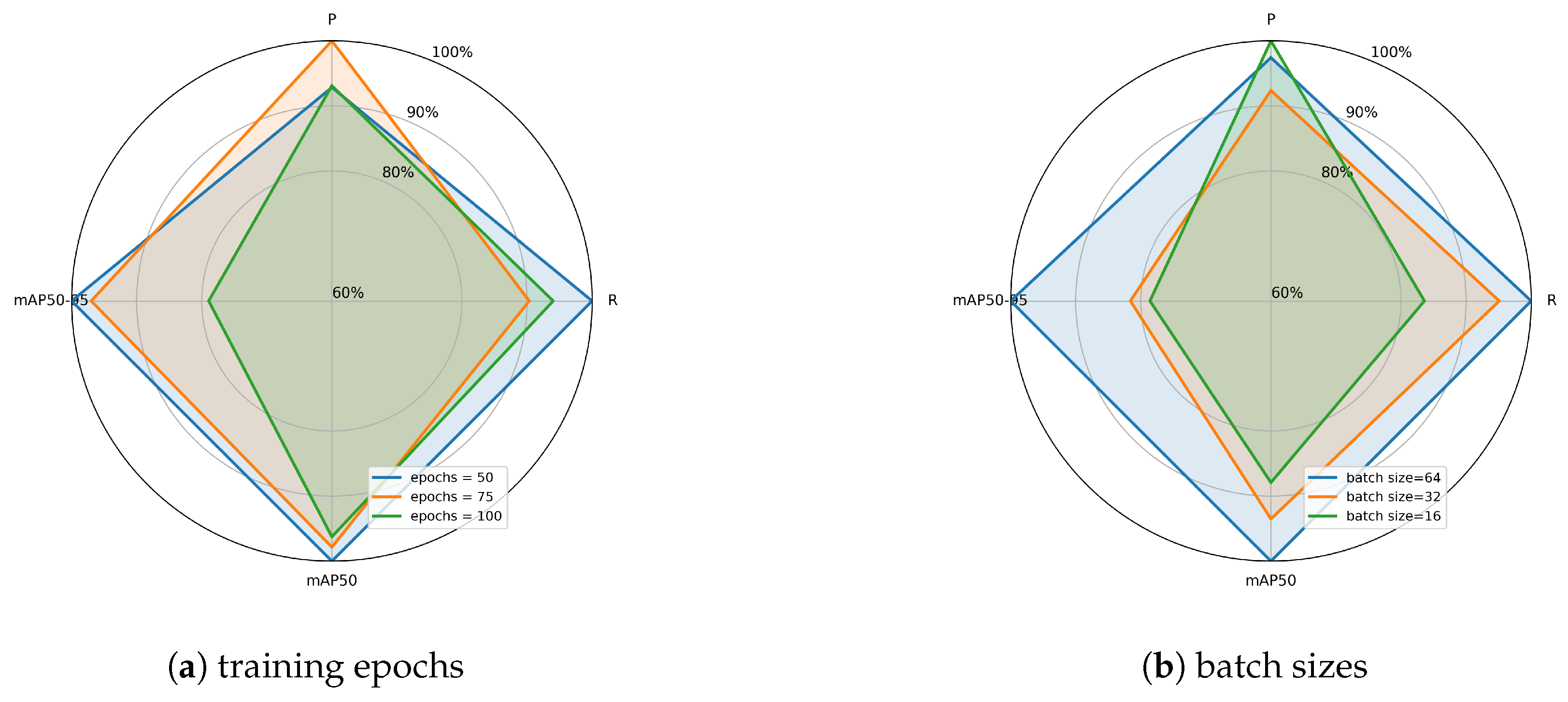

The effects of the training epochs and batch sizes on the detection performance are further evaluated and visualized in Figure 8. In this radar plot, P denotes precision and R denotes recall, while mAP@0.5 and mAP@0.5:0.95 represent the detection accuracy at a single IoU threshold and averaged across multiple thresholds, respectively. In Figure 8a, the results under different epoch counts (50, 75, and 100) are compared. Increasing the number of training epochs to 75 improves the precision (0.810) but reduces the recall (0.589). At the same time, the detection accuracy declines, with mAP@0.5 falling to 0.680 and mAP@0.5:0.95 to 0.290. Extending the training further to 100 epochs slightly increases the recall (0.613) but causes a decline in fine-grained localization accuracy, with mAP@0.5 dropping to 0.669 and mAP@0.5:0.95 falling to 0.236. This suggests that prolonged training may lead to overfitting, particularly on relatively small-scale datasets with limited diversity.

Figure 8.

The effect of different training epochs and batch sizes on the detection performance, measured using precision (P), recall (R), mAP@0.5, and mAP@0.5:0.95. (a) Varying the number of training epochs (50, 75, 100) shows that 50 epochs achieve a balanced outcome, while 75 epochs reduce the recall and 100 epochs lead to overfitting with degraded mAP@0.5:0.95. (b) Varying the batch size (64, 32, 16) indicates that a larger batch size of 64 provides the most stable and well-balanced performance, whereas smaller batches cause unstable convergence and a sharp drop in the recall and fine-grained localization accuracy.

In contrast, training for 50 epochs achieves the highest mAP@0.5 (0.695) among the tested schedules and preserves the best fine-grained localization accuracy, with mAP@0.5:0.95 of 0.299. This setting is therefore adopted to ensure both training efficiency and generalization.
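Choosing the training length amounts to selecting the setting with the best validation score, with ties resolved toward the cheaper schedule. The sketch below applies this rule to the mAP values reported for 50, 75, and 100 epochs; the function name `select_setting` is illustrative.

```python
from typing import Dict

# Scores reported in this section for each training length (epochs -> metric).
MAP50 = {50: 0.695, 75: 0.680, 100: 0.669}
MAP50_95 = {50: 0.299, 75: 0.290, 100: 0.236}

def select_setting(scores: Dict[int, float]) -> int:
    """Return the key with the highest score; on ties, prefer the smallest key."""
    # max() returns the first maximal element it meets, so iterating the keys in
    # ascending order breaks ties in favor of the shorter (cheaper) schedule.
    return max(sorted(scores), key=scores.__getitem__)

best = {
    metric: select_setting(scores)
    for metric, scores in (("mAP@0.5", MAP50), ("mAP@0.5:0.95", MAP50_95))
}
print(best)
```

Both metrics point to 50 epochs. The tie-breaking rule only matters when two schedules score identically.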

In Figure 8b, different batch sizes (64, 32, and 16) are evaluated. The results show that the largest batch size, 64, achieves the most stable and well-balanced performance across all metrics, particularly excelling in recall and in mAP@0.5:0.95, which reflects fine-grained localization accuracy. In contrast, smaller batches lead to less stable convergence: a batch size of 32 shows moderate drops in accuracy, while a batch size of 16 exhibits a sharp decline in both recall and mAP@0.5:0.95. This suggests that consistent batch-level statistics play a critical role in robust learning and detection consistency.
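The link between batch size and the stability of batch-level statistics can be illustrated with a toy simulation. Assuming the detector uses batch normalization (as YOLO-style backbones typically do), each training step normalizes with a mean estimated from the current mini-batch, and the noise in that estimate shrinks roughly as 1/sqrt(batch size) for independent samples. The sketch ignores the spatial dimensions that real convolutional batch normalization also averages over, so it overstates the effect and is only a qualitative illustration.

```python
import random
import statistics

def batch_mean_spread(batch_size: int, trials: int = 2000, seed: int = 0) -> float:
    """Empirical std. dev. of the mini-batch mean of N(0, 1) activations."""
    rng = random.Random(seed)
    means = [
        statistics.fmean(rng.gauss(0.0, 1.0) for _ in range(batch_size))
        for _ in range(trials)
    ]
    return statistics.stdev(means)

spread = {b: batch_mean_spread(b) for b in (16, 32, 64)}
for b, s in spread.items():
    # For i.i.d. samples the theoretical value is 1 / sqrt(batch_size).
    print(f"batch={b:>2}: spread={s:.3f} (theory {1 / b ** 0.5:.3f})")
```

Going from a batch of 16 to 64 halves the fluctuation of the estimated mean, which is one plausible contributor to the steadier convergence observed with the larger batch.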

3.7.4. Summary

In summary, the parameter analysis confirms the necessity of properly tuning both architectural hyperparameters, such as the multi-head attention granularity and the channel compression ratio, and training-related parameters. The best-performing configuration in this study uses head_num = 4, window_size = 7, e = 0.5, 50 training epochs, and a batch size of 64; these choices guide the final design of the RNDF for robust railway catenary nest detection. The final hyperparameter settings are summarized in Table 7.

4. Discussion

This section discusses the experimental results presented in Section 3, providing deeper insights into the performance characteristics of the proposed Railway Nest Detection Framework. In addition, the limitations of the proposed approach and potential future research directions are analyzed. From a symmetry-aware perspective, the findings are interpreted by treating the catenary’s near-symmetric regularity as a contextual prior and nests as localized asymmetries, thereby linking the method’s design choices to the observed gains.

4.1. An Analysis of the Experimental Results

The results of the ablation study in Section 3.1 clearly demonstrate the effectiveness of each proposed component. Replacing the baseline A2C2f modules with A2C2f_HRAMi modules enhances hierarchical feature extraction, leading to significant improvements in both precision and localization robustness. The integration of the SCSA module notably boosts recall, indicating improved sensitivity to small, occluded, and visually ambiguous bird nest targets. In addition, the Focaler-GIoU loss function further stabilizes bounding box regression and improves detection accuracy, particularly for hard-to-localize small objects. The comparative experiments confirm that the RNDF consistently outperforms mainstream detectors, including YOLOv5, YOLOv11, YOLOv12, DEIM, and RT-DETR: it achieves the highest mAP@0.5 and mAP@0.5:0.95 scores while maintaining competitive precision and recall. Furthermore, the PR curve analysis shows that the RNDF maintains higher precision than the baseline YOLOv12 across a broader range of recall values, suggesting better reliability and prediction consistency across confidence levels. These quantitative findings are supported by the qualitative visualization results, in which the RNDF exhibits stronger attention localization, fewer false positives, and more stable detection performance across complex backgrounds. Viewed through the symmetry-aware lens, components that better encode regular, near-symmetric line structures and amplify departures from this regularity would be expected to yield larger gains in recall and mAP@0.5:0.95 under clutter and occlusion, which is consistent with the observed results.

4.2. Comparison with Existing Methods

Compared with DETR variants and earlier YOLO models, the proposed RNDF introduces several key advantages that contribute to its superior detection performance. First, it is lightweight and efficient; the architectural modifications maintain a low computational overhead and inference latency, making the model well suited to real-time deployment on resource-constrained platforms such as railway monitoring systems. Second, the RNDF enhances small object detection through hierarchical residual–attention fusion and synergistic spatial–channel enhancement, effectively addressing common limitations of YOLO-style detectors in detecting small and occluded targets under complex backgrounds. Third, the Focaler-GIoU loss formulation alleviates the sample imbalance during training and improves the bounding box localization accuracy, particularly for weakly textured or low-overlap objects. Distinct from the generic baselines, the RNDF explicitly benefits from the symmetric layout of catenary scenes by using this regularity as a contextual prior for anomaly (asymmetry) localization.
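To make the Focaler-GIoU formulation concrete, the sketch below follows the Focaler-IoU formulation as we understand it. The IoU is first remapped linearly over an interval [d, u]: it becomes 0 below d, 1 above u, and (IoU - d) / (u - d) in between. The loss is then L_GIoU + IoU - IoU_focaler, where L_GIoU = 1 - GIoU. The interval bounds used by the RNDF are not given in this section, so d = 0.0 and u = 0.95 are assumptions. The function names are illustrative, and boxes are assumed to have positive area.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1 and y2 > y1

def area(b: Box) -> float:
    return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])

def iou_and_giou(p: Box, g: Box) -> Tuple[float, float]:
    """Return (IoU, GIoU) for two axis-aligned boxes."""
    iw = max(0.0, min(p[2], g[2]) - max(p[0], g[0]))
    ih = max(0.0, min(p[3], g[3]) - max(p[1], g[1]))
    inter = iw * ih
    union = area(p) + area(g) - inter
    iou = inter / union
    # Smallest axis-aligned box enclosing both boxes.
    hull = area((min(p[0], g[0]), min(p[1], g[1]), max(p[2], g[2]), max(p[3], g[3])))
    return iou, iou - (hull - union) / hull

def focaler_iou(iou: float, d: float = 0.0, u: float = 0.95) -> float:
    """Linear interval mapping: 0 below d, 1 above u, linear in between."""
    return min(1.0, max(0.0, (iou - d) / (u - d)))

def focaler_giou_loss(p: Box, g: Box, d: float = 0.0, u: float = 0.95) -> float:
    """L_Focaler-GIoU = L_GIoU + IoU - IoU_focaler, with L_GIoU = 1 - GIoU."""
    iou, giou = iou_and_giou(p, g)
    return (1.0 - giou) + iou - focaler_iou(iou, d, u)

print(focaler_giou_loss((0, 0, 2, 2), (1, 0, 3, 2)))  # half-overlapping boxes
```

Adjusting d and u changes which IoU range receives the strongest gradient signal. This is the mechanism through which the loss can emphasize harder or easier samples.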

In particular, compared with the other models in the comparative experiments (YOLOv5, YOLOv11, YOLOv12 as the baseline, DEIM, and RT-DETR), the RNDF achieves the highest scores across all major evaluation metrics: precision (0.752), recall (0.652), mAP@0.5 (0.695), and mAP@0.5:0.95 (0.299). Although RT-DETR and DEIM are often regarded as strong baselines for small object detection, the results in this study indicate that the RNDF outperforms both, with improved localization robustness and more consistent detection performance across a wide range of target scales.

4.3. Limitations

Despite its promising results, the proposed RNDF has several limitations that warrant consideration. First, the current dataset and detection framework are specifically designed for bird nest detection, and the generalization capability to other types of foreign objects—such as plastic bags or kites—has not yet been fully explored. Second, although the use of synthetic images greatly enhances the dataset diversity and helps mitigate sample scarcity, distributional discrepancies between synthetic and real-world images may still introduce subtle biases that could affect model generalization. Furthermore, the training and evaluation images primarily represent typical catenary environments under normal weather conditions, and as a result, the detection robustness of the proposed model under extreme scenarios—such as heavy rain, snow, or low-light conditions—has not been thoroughly validated. Moreover, the symmetry prior may weaken in atypical spans (e.g., curves, non-standard fixtures) or under strong perspective distortion, reducing the contrast between regular patterns and anomalies and potentially diminishing the benefit of symmetry-aware cues.

4.4. Future Work

To further improve the practical applicability of the proposed RNDF, several directions are suggested for future research. One direction is the extension from single-class to multi-class detection. In this setting, the model would be adapted to identify a broader range of foreign objects beyond bird nests, such as kites, plastic bags, and other potential hazards in catenary systems. This extension would enhance the system’s versatility in real-world deployment.

Another direction is the exploration of domain adaptation techniques, including style transfer, unsupervised domain adaptation, and adversarial training. These methods could help bridge the distribution gap between synthetic and real-world datasets, thereby improving model generalization.

Deployment optimization also remains an important topic. Model compression strategies such as pruning, quantization, and knowledge distillation may be employed to reduce the computational overhead and enable implementation on edge devices with limited resources.

In addition, incorporating multimodal data sources—such as infrared images or LiDAR data—has the potential to significantly enhance the detection robustness, particularly under adverse environmental conditions such as rain, snow, or nighttime operation.

Finally, future work will investigate explicit symmetry modeling. This may include wire-layout estimation, symmetry consistency regularization, symmetry-aware data augmentation, and group-equivariant backbone designs. These approaches will be studied with careful evaluations in non-standard or curved catenary sections to reduce the risk of overfitting to symmetry priors and to ensure robust generalization across diverse infrastructures.
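One simple form that symmetry-aware augmentation and consistency regularization could take is mirror consistency. The detector is run on an image and on its horizontally flipped copy, the boxes from the flipped copy are mapped back, and any disagreement is penalized. The sketch below is a hypothetical illustration of this idea and is not part of the RNDF. It enforces flip equivariance of the detector rather than modeling the catenary layout itself, and it assumes predictions from the two views have already been matched one-to-one.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels

def hflip_box(b: Box, width: float) -> Box:
    """Mirror a box about the vertical image axis (x -> width - x)."""
    x1, y1, x2, y2 = b
    return (width - x2, y1, width - x1, y2)

def flip_consistency_l1(boxes: List[Box], boxes_on_flipped: List[Box], width: float) -> float:
    """Mean L1 gap between predictions and mapped-back predictions on the mirrored image.

    Assumes the two lists are matched one-to-one (matching is out of scope here).
    """
    if not boxes:
        return 0.0
    total = 0.0
    for b, bf in zip(boxes, boxes_on_flipped):
        back = hflip_box(bf, width)  # undo the flip so both boxes share one frame
        total += sum(abs(a - c) for a, c in zip(b, back))
    return total / len(boxes)

pred = [(10.0, 5.0, 30.0, 25.0)]
pred_flipped = [(71.0, 5.0, 91.0, 25.0)]  # slightly inconsistent prediction on the mirror
print(flip_consistency_l1(pred, pred_flipped, width=100.0))
```

As noted above, such a term would need evaluation on curved or non-standard spans, where mirror symmetry holds only approximately.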

5. Conclusions

This paper presents the RNDF, an enhanced YOLOv12-based, symmetry-aware detection framework specifically designed for intelligent bird nest detection in high-speed railway overhead catenary systems. To address key challenges related to small object size, occlusion, and background complexity, the proposed model integrates several targeted innovations: the A2C2f_HRAMi module for hierarchical residual–attention fusion, the SCSA module for synergistic spatial–channel feature enhancement, and the Focaler-GIoU loss function for more stable bounding box regression. The framework also explicitly exploits the near-symmetric regularity of catenary layouts, treating nests as localized asymmetries. To support training and evaluation, a dedicated bird nest detection dataset, HRC-Nest, is constructed by combining real-world and synthetically generated images; the global catenary symmetry is preserved and the nests are annotated as departures from this regularity, enabling robust learning and a comprehensive performance assessment. Extensive experimental results demonstrate that the RNDF achieves significant improvements over the baseline YOLOv12 and other mainstream detectors in precision, recall, mAP@0.5, and mAP@0.5:0.95, all without relying on pre-trained weights. Qualitative visualization analyses further confirm the superior localization capability, attention focus, and overall robustness of the proposed model, particularly for small and weakly textured targets embedded in complex backgrounds, where it highlights departures from symmetric line structures. Despite these promising results, several limitations remain, including the current focus on a single object class, the potential domain gap introduced by synthetic data, and the reliance on a symmetry prior that may weaken in atypical spans or under strong perspective distortion.
Future research directions include multi-class foreign object detection, domain adaptation techniques, lightweight deployment optimization, and the integration of multimodal data to enhance the model’s performance and adaptability further, together with explicit symmetry modeling (e.g., wire-layout estimation and symmetry consistency regularization) and symmetry-aware data augmentation. Overall, the proposed symmetry-aware RNDF offers a reliable, efficient, and practical solution for improving the safety and operational reliability of high-speed railway systems through intelligent visual inspections and foreign object detection.