Resolving Linguistic Asymmetry: Forging Symmetric Multilingual Embeddings Through Asymmetric Contrastive and Curriculum Learning

Abstract

1. Introduction

- We provide a detailed conceptualization of the multilingual embedding problem through the lens of symmetry and asymmetry, highlighting the limitations of symmetry-assuming approaches.

- We propose a novel dual-asymmetric learning framework that synergistically combines an asymmetric contrastive learning objective with Dynamic Curriculum Learning (DCL) to generate stronger, symmetrically aligned multilingual sentence embeddings.

- Through extensive experiments on a suite of cross-lingual benchmarks, we demonstrate that our “asymmetric-to-symmetric” methodology outperforms all reported baselines on PAWS-X, XNLI, and MARC and remains competitive on STS and MLDoc, validating the efficacy of our approach.

- Our analysis offers critical insights into the interplay between symmetry and asymmetry in LLMs, showing that embracing methodological asymmetry is a powerful principle for achieving functional symmetry in complex, real-world data.

2. Related Work

2.1. Symmetry in Multilingual Representations

2.2. Asymmetric Learning Paradigms

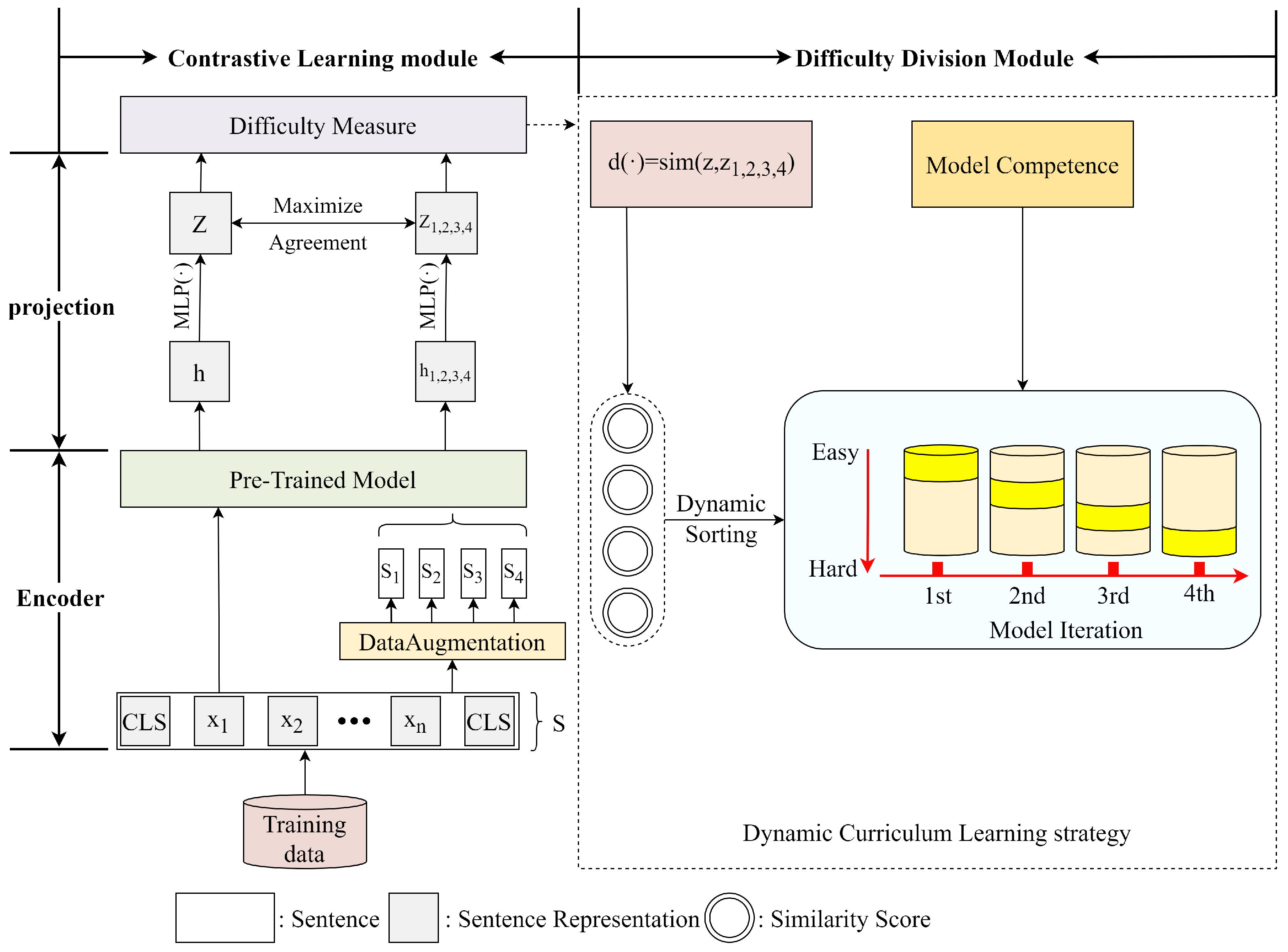

3. Methodology: A Dual-Asymmetric Framework

3.1. Defining the Target: A Symmetric Embedding Space

- Asymmetric Training Focus: By separating the contrastive optimization into a dedicated projection head, we allow the Encoder to maintain its general language understanding capabilities while the projection head specifically targets cross-lingual alignment.

- Symmetric Space Forging: The projection head learns to map language-specific representations from the Encoder into a shared space where translationally equivalent sentences lie close together, helping to resolve linguistic asymmetries.

- Training Stability: This separation is intended to keep the contrastive objective from distorting the Encoder’s general linguistic knowledge, so that the final embeddings retain both language-specific nuances and cross-lingual symmetry.

3.2. Asymmetric Objective: Contrastive Learning for Semantic Alignment

3.3. Asymmetric Pacing: DCL

3.4. Data Augmentation as a Source of Asymmetry

- Random Punctuation: Introduces noise that mimics stylistic variations.

- Random Insertion/Deletion: Alters sentence structure and length, creating asymmetric views.

- Synonym Replacement: Replaces words with their synonyms, breaking lexical symmetry while preserving semantic symmetry.
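The augmentation families listed above can be sketched in the spirit of EDA [35]. This is a minimal illustration, not the authors' implementation. The toy `SYNONYMS` table, the punctuation set, the probabilities, and all function names are hypothetical; a real pipeline would draw synonyms from a thesaurus. Each function returns a new token list, so the original sentence and its augmented view form an asymmetric pair.

```python
import random

# Hypothetical toy synonym table; a real pipeline would query a thesaurus (e.g. WordNet).
SYNONYMS = {
    "quick": ["fast", "rapid"],
    "happy": ["glad", "cheerful"],
    "big": ["large", "huge"],
}
PUNCTUATION = [".", ",", "!", "?", ";", ":"]


def random_punctuation(tokens, rng, p=0.3):
    """Insert a random punctuation mark before each token with probability p."""
    out = []
    for tok in tokens:
        if rng.random() < p:
            out.append(rng.choice(PUNCTUATION))
        out.append(tok)
    return out


def random_insertion(tokens, rng, n=1):
    """Insert n synonyms of randomly chosen words at random positions."""
    out = list(tokens)
    candidates = [t for t in tokens if t in SYNONYMS]
    for _ in range(n):
        if not candidates:
            break
        word = rng.choice(candidates)
        out.insert(rng.randint(0, len(out)), rng.choice(SYNONYMS[word]))
    return out


def random_deletion(tokens, rng, p=0.2):
    """Drop each token with probability p, always keeping at least one token."""
    if not tokens:
        return []
    kept = [t for t in tokens if rng.random() >= p]
    return kept if kept else [rng.choice(tokens)]


def synonym_replacement(tokens, rng, n=1):
    """Replace up to n words that have an entry in SYNONYMS with a random synonym."""
    out = list(tokens)
    positions = [i for i, t in enumerate(out) if t in SYNONYMS]
    rng.shuffle(positions)
    for i in positions[:n]:
        out[i] = rng.choice(SYNONYMS[out[i]])
    return out


rng = random.Random(0)
sentence = "the quick dog is happy".split()
print(synonym_replacement(sentence, rng, n=2))
```

Synonym replacement changes surface tokens but keeps length and meaning ("lexical asymmetry, semantic symmetry"). Insertion and deletion also change sentence length.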

4. Experimental Setup

4.1. Evaluation Benchmarks

- Semantic Textual Similarity (STS): We use the multilingual STS benchmark from [2,13]. This task directly measures the semantic symmetry of embeddings by correlating the cosine similarity of sentence pairs with human-annotated scores (from 0 to 5). We evaluate on seven cross-lingual language pairs: English–Arabic (EN-AR), English–German (EN-DE), English–Turkish (EN-TR), English–Spanish (EN-ES), English–French (EN-FR), English–Italian (EN-IT), and English–Dutch (EN-NL).

- Cross-lingual Natural Language Inference (XNLI): The XNLI dataset [36] tests the model’s ability to understand logical relationships (entailment, contradiction, neutral) across 15 languages. This task probes a deeper level of semantic understanding beyond mere similarity.

- Cross-lingual Paraphrase Identification (PAWS-X): PAWS-X [37] requires the model to distinguish between subtle paraphrases and non-paraphrases across seven languages. It is a challenging test of robustness against lexical and syntactic variations—key forms of linguistic asymmetry.

- Multilingual Document Classification (MLDoc): MLDoc [38] is a topic classification task with news documents in eight languages. It evaluates the model’s capacity to capture topic-level semantic symmetry in longer texts.

- Multilingual Amazon Review Corpus (MARC): The MARC dataset [39] involves sentiment classification of product reviews in six languages, testing the model’s ability to align sentiment expressions, which are often culturally and linguistically asymmetric.

- Question-Answer Matching (QAM): From XGLUE [40], this task requires matching questions to answer passages across English, German, and French, evaluating fine-grained cross-lingual relevance matching.
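The STS protocol in the first bullet can be sketched as follows: take the cosine similarity of each sentence pair and correlate it with the 0 to 5 gold scores. This sketch makes three assumptions. It uses Spearman's rank correlation (the metric named in the ablation table's STS column). It multiplies scores by 100 to match the scale used in the tables. It handles tied values with average ranks. The function names are hypothetical.

```python
import numpy as np


def rankdata_average(x):
    """Rank values 1..n, giving tied values the average of their ranks."""
    x = np.asarray(x, dtype=float)
    order = np.argsort(x, kind="mergesort")
    sorted_x = x[order]
    ranks = np.empty(len(x), dtype=float)
    i = 0
    while i < len(x):
        j = i
        while j + 1 < len(x) and sorted_x[j + 1] == sorted_x[i]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2.0 + 1.0  # average of 1-based ranks i+1..j+1
        i = j + 1
    return ranks


def spearman(a, b):
    """Spearman's rho: Pearson correlation between the two rank vectors."""
    return float(np.corrcoef(rankdata_average(a), rankdata_average(b))[0, 1])


def sts_score(src_emb, tgt_emb, gold):
    """Cosine similarity per sentence pair, correlated with gold scores (x100)."""
    src = src_emb / np.linalg.norm(src_emb, axis=1, keepdims=True)
    tgt = tgt_emb / np.linalg.norm(tgt_emb, axis=1, keepdims=True)
    cos = np.sum(src * tgt, axis=1)  # row-wise cosine similarity
    return 100.0 * spearman(cos, gold)
```

In practice `src_emb[i]` and `tgt_emb[i]` would be the encoder's embeddings of the English and non-English sides of the i-th STS pair.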

4.2. Baseline Systems

- LASER [4]: A BiLSTM sequence-to-sequence model trained on parallel corpora. It learns embeddings optimized for translation, a direct way to enforce semantic symmetry.

- mUSE [14]: A dual-encoder Transformer model trained with a combination of translation pairs and SNLI data, representing a strong supervised approach to learning symmetric representations.

- LaBSE [10]: A state-of-the-art model that uses a contrastive objective on translation pairs. It is our most direct competitor, representing an approach that uses an asymmetric objective but lacks the asymmetric pacing of a curriculum.

- Xpara & MSE-AMR [2]: Recent competitive models that leverage parallel corpora or abstract meaning representations to improve cross-lingual alignment. They represent alternative strategies for bridging linguistic asymmetries.

- SOTA Embedders: We also include several recent, top-performing sentence embedding models that are widely regarded as the state of the art on public benchmarks: paraphrase-multilingual-mpnet-base-v2 (MPNet-SBERT) [41], multilingual-e5-large (E5-large) [42], and BGE-m3 [43]. These models typically rely on massive pre-training and advanced contrastive learning objectives.

4.3. Implementation Details and Hyperparameters

- Architecture: We add a 2-layer MLP projection head with a ReLU activation on top of the [CLS] token representation from the encoder to produce the embeddings for the contrastive loss. The sentence embeddings used for downstream tasks are the [CLS] representations before the projection head.
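The architecture described above can be sketched as a NumPy forward pass. This is a minimal sketch, not the authors' code. The layer widths (768, 768, 256), the random initialisation, and the helper `split_outputs` are hypothetical. It shows the key design point: the raw [CLS] vector is what downstream tasks consume, while only the projected vector enters the contrastive loss.

```python
import numpy as np


class ProjectionHead:
    """2-layer MLP with ReLU applied to the encoder's [CLS] vector.

    Layer sizes are illustrative assumptions, not values from the paper.
    """

    def __init__(self, d_model=768, d_hidden=768, d_out=256, seed=0):
        rng = np.random.default_rng(seed)
        self.w1 = rng.normal(0.0, 0.02, size=(d_model, d_hidden))
        self.b1 = np.zeros(d_hidden)
        self.w2 = rng.normal(0.0, 0.02, size=(d_hidden, d_out))
        self.b2 = np.zeros(d_out)

    def __call__(self, cls_vectors):
        hidden = np.maximum(0.0, cls_vectors @ self.w1 + self.b1)  # ReLU
        return hidden @ self.w2 + self.b2


def split_outputs(last_hidden_state, head):
    """Return (downstream embedding, contrastive embedding) for a batch.

    last_hidden_state has shape (batch, seq_len, d_model); position 0 is [CLS].
    """
    cls = last_hidden_state[:, 0, :]  # sentence embedding used for downstream tasks
    z = head(cls)                     # used only inside the contrastive loss
    return cls, z
```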

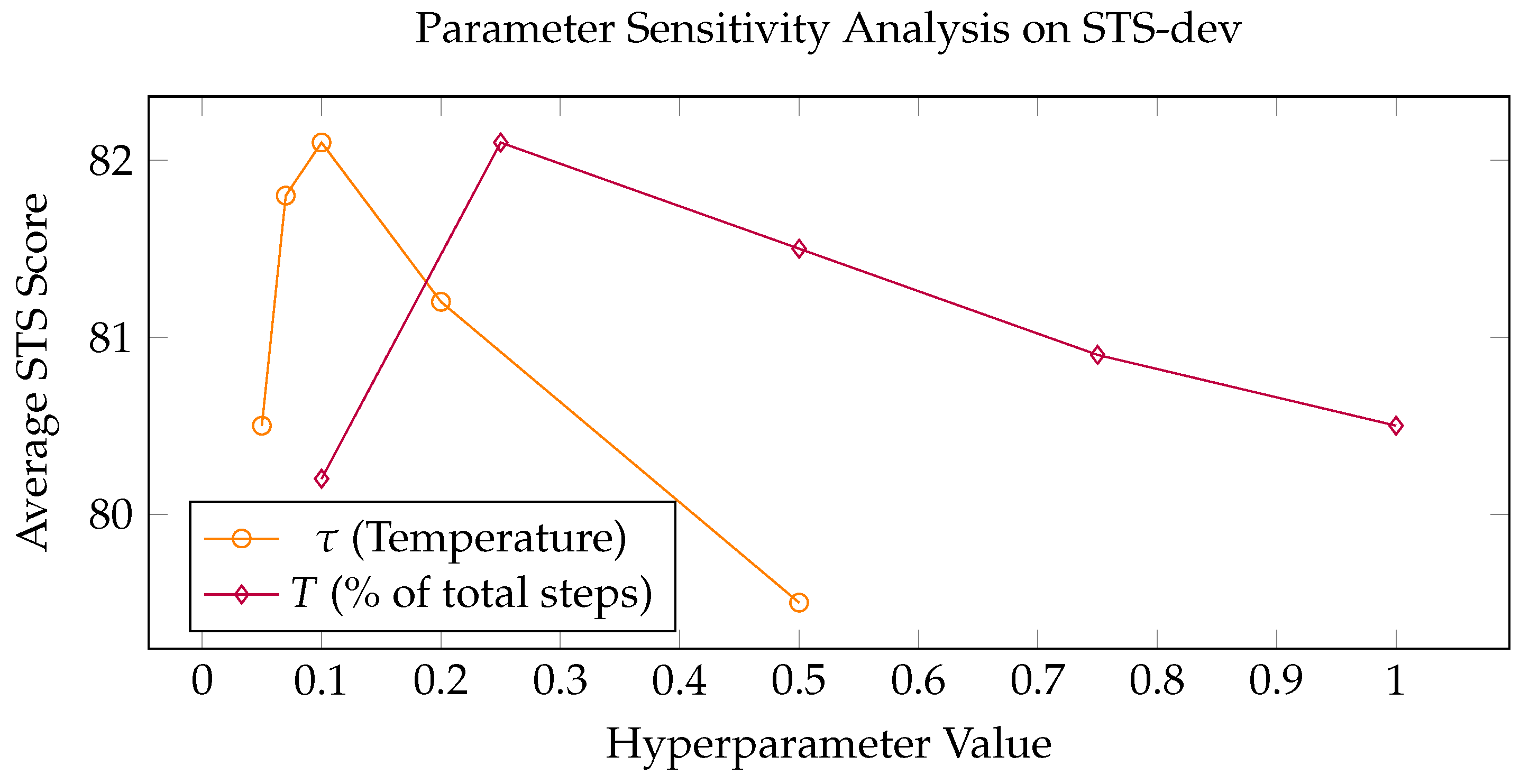

- Training Objective: The contrastive loss is calculated using cosine similarity with a temperature parameter set to 0.1.

- Optimizer & Learning Rate: We use the AdamW optimizer with a learning rate of 3 × 10⁻⁵ and a linear learning rate warmup for the first 10% of training steps, followed by a linear decay.

- DCL: The core hyperparameter for our asymmetric pacing strategy is the curriculum length, i.e., the total number of warm-up steps $T$. Based on preliminary experiments, we set $T$ to cover the first 25% of the total training epochs. The difficulty score is calculated as described in Section 3. For simplicity, the weighting function in our main experiments is a binary mask: a sample $x$ is used at step $t$ if its difficulty satisfies $d(x) \le c(t)$, where the competence pacer $c(t) = \min(1, t/T)$ grows linearly from 0 to 1 over $T$ steps.

- Data Augmentation: We report results for four variants of our model, corresponding to the four augmentation strategies described in Section 3.4: (1) Random Punctuation, (2) Random Insertion, (3) Random Deletion, and (4) Synonym Replacement. This allows us to analyze how different sources of induced asymmetry affect final performance.

- General Hyperparameters: We use a batch size of 64 and train for 5 epochs on the combined parallel corpora used by LaBSE. The dropout rate is set to 0.3. All experiments are conducted on a single NVIDIA A100 GPU. The best-performing checkpoint on the STS development set is used for final evaluation across all test sets.
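The training-objective bullet (cosine similarity with a temperature of 0.1) corresponds to an InfoNCE-style contrastive loss. This sketch makes assumptions the paper does not state: in-batch negatives, a single source-to-target direction, and a plain NumPy forward computation with no gradients. It also omits the dynamic hard-negative selection discussed in Section 5.5. `info_nce_loss` is a hypothetical name.

```python
import numpy as np


def info_nce_loss(src, tgt, temperature=0.1):
    """In-batch contrastive loss over cosine similarities.

    src[i] and tgt[i] form a positive (translation) pair; every tgt[j] with
    j != i acts as a negative for src[i]. Only the src -> tgt direction is used.
    """
    src = src / np.linalg.norm(src, axis=1, keepdims=True)
    tgt = tgt / np.linalg.norm(tgt, axis=1, keepdims=True)
    logits = (src @ tgt.T) / temperature                  # (B, B) scaled cosine similarities
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_probs)))           # cross-entropy, targets on the diagonal
```

A lower temperature such as 0.1 sharpens the softmax. Small differences in cosine similarity between the positive and the closest negatives then produce large differences in loss.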
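The optimizer and DCL bullets describe two step-indexed schedules. The first is a linear warmup over the first 10% of steps to a peak learning rate of 3e-5, followed by linear decay. The second is a binary curriculum mask that keeps a sample only when its difficulty does not exceed the competence c(t) = min(1, t/T). This pure-Python sketch assumes difficulty scores are already normalised to [0, 1]; the scoring function itself is defined in Section 3 and is not reproduced here. All function names are hypothetical.

```python
def linear_warmup_decay_lr(step, total_steps, peak_lr=3e-5, warmup_frac=0.10):
    """Linear warmup over the first warmup_frac of steps, then linear decay to 0."""
    warmup_steps = max(1, int(warmup_frac * total_steps))
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    return peak_lr * max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))


def competence(step, curriculum_steps):
    """Linear competence pacer c(t) = min(1, t / T)."""
    return min(1.0, step / curriculum_steps)


def curriculum_mask(difficulties, step, curriculum_steps):
    """Binary mask: keep sample x iff d(x) <= c(t); difficulties assumed in [0, 1]."""
    c = competence(step, curriculum_steps)
    return [d <= c for d in difficulties]


total_steps = 800
T = int(0.25 * total_steps)  # curriculum covers the first 25% of training
print(curriculum_mask([0.0, 0.3, 0.6, 1.0], step=100, curriculum_steps=T))
```

After step T the mask admits every sample, so the curriculum only shapes the early part of training.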

5. Results and Analysis

5.1. Performance on STS

5.2. Performance on Cross-Lingual Classification Tasks

- On XNLI, DACL achieves the highest accuracy among the compared models (57.86, versus 56.86 for LaBSE), indicating a stronger ability to capture the nuanced logical relationships required for inference. This suggests that our asymmetric curriculum helps the model build a more hierarchical and less superficial understanding of semantics.

- The most dramatic improvement is on PAWS-X, where our model surpasses the closest baseline (MSE-AMR, 59.07) by more than 11 points (70.52). This task is specifically designed with adversarial examples that introduce syntactic asymmetry to trick models. The success of DACL here strongly supports our core thesis: by training the model to be robust to induced asymmetries (via augmentation) and guiding it through an asymmetric curriculum, we make it markedly better at handling inherent linguistic asymmetries.

- On MARC, DACL achieves the best accuracy among the compared models (50.12), and on MLDoc it is second only to LaBSE (79.74 vs. 82.29). This indicates that the learned symmetric representations transfer reasonably well from the sentence level to longer texts for topic and sentiment classification.

5.3. Analysis of Performance Profile: Generalization vs. Specialization

5.4. Ablation Study

- Consistent Positive Impact: DCL provides a performance boost across all evaluated tasks, confirming that our asymmetric pacing strategy is a robust and generally beneficial enhancement.

- Strength in Handling Asymmetry: The most dramatic improvement is on PAWS-X, with an absolute accuracy gain of +10.51 points. This task is specifically designed with adversarial examples that introduce syntactic asymmetry. The success here provides strong evidence for our core thesis: by guiding the model through a curriculum of structural difficulty, we equip it to handle complex linguistic variations.

- Enhancing Deeper Semantics: DCL also yields a substantial gain of +2.83 points on XNLI. This suggests that the curriculum helps the model move beyond surface-level similarity to build a more hierarchical and nuanced understanding of logical relationships, which is crucial for inference.

- Defining the Limits of Applicability: The performance gains on MLDoc (+0.23) and MARC (+0.58) are more modest, and this contrast is informative in its own right. These tasks, focused on document-level topic or sentiment classification, likely rely more on the presence of specific keywords than on the fine-grained sentence structure that DCL is designed to untangle. While DCL still yields a slightly more stable representation, its impact is less pronounced in these scenarios.

5.5. Analysis of Data Augmentation Asymmetry

- Asymmetric Contrastive Objective: Unlike previous contrastive methods that use static negative sampling, our approach dynamically identifies the most informative negative examples based on the current embedding space. This allows DACL to focus on the most challenging distinctions, particularly for languages with significant structural differences (e.g., English vs. Turkish, where Turkish has SOV word order and English has SVO). In Table 1, DACL improves EN-TR from 72.19 (LaBSE) to 76.58, a gain of 4.39 points. The per-pair gains over LaBSE are larger for other pairs (e.g., +16.76 on EN-ES), and EN-TR remains DACL's lowest-scoring pair, which underlines how difficult structurally divergent pairs are. The asymmetric contrastive objective is intended to target such challenging cross-lingual mappings by progressively refining the embedding space to handle structural divergence.

- Dynamic Curriculum Learning: The performance gap is most pronounced on PAWS-X (+11.52 points over LaBSE), a task designed with adversarial examples that introduce syntactic asymmetry. The curriculum learning component enables the model to first master basic semantic equivalences before tackling more complex, structurally divergent pairs. We hypothesize that, without this progressive learning strategy, the model is more likely to become trapped in local optima where it either overfits to surface-level features or fails to converge on meaningful representations. The curriculum acts as a “scaffold” that guides the model through the asymmetric linguistic landscape, allowing it to build increasingly sophisticated cross-lingual understanding.
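The first bullet says negatives are chosen dynamically "based on the current embedding space", but the paper does not give the exact selection rule. One common approach, assumed here purely for illustration, is to take, for each source sentence, the most similar non-matching targets within the batch. The function name and the top-k rule are hypothetical.

```python
import numpy as np


def hardest_in_batch_negatives(src, tgt, k=1):
    """For each src[i], return indices of the k targets j != i with the highest cosine similarity.

    Assumes src[i] and tgt[i] are the positive (translation) pair and k <= batch_size - 1.
    """
    src = src / np.linalg.norm(src, axis=1, keepdims=True)
    tgt = tgt / np.linalg.norm(tgt, axis=1, keepdims=True)
    sims = src @ tgt.T
    np.fill_diagonal(sims, -np.inf)                    # exclude the positive pair
    order = np.argsort(-sims, axis=1, kind="stable")   # descending similarity
    return order[:, :k]
```

Because the similarities are recomputed from the current embeddings at every step, the selected negatives change as training reshapes the space. This is what distinguishes such mining from a fixed, precomputed negative set.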

5.6. Efficiency Considerations

5.7. Probing the Asymmetric-to-Symmetric Process

5.7.1. Parameter Sensitivity

5.7.2. Visualization of the Symmetric Embedding Space

5.7.3. Robustness to Linguistic Distance

- Asymmetric abstraction: The contrastive learning objective forces the model to extract language-invariant semantic features by treating translation pairs as positive examples. This creates an intermediate representation space that is less tied to any specific language’s surface features.

- Curriculum-guided bridging: Dynamic curriculum learning progressively exposes the model to increasingly divergent language pairs. Starting with easier, structurally similar pairs (e.g., English–French), the model learns basic semantic alignment. As training progresses, it encounters harder pairs (e.g., English–Chinese), but by then it has a foundation of abstract semantic understanding to build upon.

5.8. Validating the Core Contribution

- Limited Standalone Benefit of DCL: When applied to a traditional symmetric model (Model 3 vs. Model 1), DCL provides a modest gain of +1.67 points. This shows that DCL is helpful on its own, but its impact is limited.

- Amplified Benefit and True Synergy: However, when DCL is applied to our asymmetric contrastive framework (Model 4 vs. Model 2), it yields a much larger gain of +10.51 points. This gain is over six times larger than DCL’s standalone contribution, indicating that the two components work together in a synergistic, mutually reinforcing manner.
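The "over six times" comparison follows directly from the PAWS-X accuracies in the ablation table:

```latex
\Delta_{3\,\text{vs.}\,1} = 58.12 - 56.45 = 1.67, \qquad
\Delta_{4\,\text{vs.}\,2} = 70.52 - 60.01 = 10.51, \qquad
\frac{\Delta_{4\,\text{vs.}\,2}}{\Delta_{3\,\text{vs.}\,1}} = \frac{10.51}{1.67} \approx 6.29
```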

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Kvapilíková, I.; Artetxe, M.; Labaka, G.; Agirre, E.; Bojar, O. Unsupervised Multilingual Sentence Embeddings for Parallel Corpus Mining. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics: Student Research Workshop, Online, 5–10 July 2020; pp. 255–262.

2. Cai, D.; Li, X.; Ho, J.C.S.; Bing, L.; Lam, W. Retrofitting Multilingual Sentence Embeddings with Abstract Meaning Representation. In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing, Abu Dhabi, United Arab Emirates, 7–11 December 2022; pp. 6456–6472.

3. Zong, T.; Zhang, L. An ensemble distillation framework for sentence embeddings with multilingual round-trip translation. Proc. AAAI Conf. Artif. Intell. 2023, 37, 14074–14082.

4. Artetxe, M.; Schwenk, H. Massively multilingual sentence embeddings for zero-shot cross-lingual transfer and beyond. Trans. Assoc. Comput. Linguist. 2019, 7, 597–610.

5. Zhu, S.; Mi, C.; Zhang, L. Inducing Bilingual Word Representations for Non-isomorphic Spaces by an Unsupervised Way. In Proceedings of the International Conference on Knowledge Science, Engineering and Management, Tokyo, Japan, 14–16 August 2021; Springer: Cham, Switzerland, 2021; pp. 458–466.

6. Zhu, S.; Mi, C.; Li, T.; Zhang, F.; Zhang, Z.; Sun, Y. Improving bilingual word embeddings mapping with monolingual context information. Mach. Transl. 2021, 35, 503–518.

7. Pires, T.; Schlinger, E.; Garrette, D. How Multilingual is Multilingual BERT? In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; Association for Computational Linguistics: Stroudsburg, PA, USA, 2019.

8. Conneau, A.; Khandelwal, K.; Goyal, N.; Chaudhary, V.; Wenzek, G.; Guzmán, F.; Grave, É.; Ott, M.; Zettlemoyer, L.; Stoyanov, V. Unsupervised Cross-lingual Representation Learning at Scale. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 8440–8451.

9. Mullov, C. Unsupervised Transfer Learning in Multilingual Neural Machine Translation with Cross-Lingual Word Embeddings. Ph.D. Thesis, Karlsruhe Institute of Technology, Karlsruhe, Germany, 2020.

10. Feng, F.; Yang, Y.; Cer, D.; Arivazhagan, N.; Wang, W. Language-agnostic BERT Sentence Embedding. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Dublin, Ireland, 22–27 May 2022; pp. 878–891.

11. Zhu, S.; Xu, S.; Sun, H.; Pan, L.; Cui, M.; Du, J.; Jin, R.; Branco, A.; Xiong, D. Multilingual Large Language Models: A Systematic Survey. arXiv 2024, arXiv:2411.11072.

12. Chanchani, S.; Huang, R. Composition-contrastive Learning for Sentence Embeddings. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Toronto, ON, Canada, 9–14 July 2023; pp. 15836–15848.

13. Reimers, N.; Gurevych, I. Making Monolingual Sentence Embeddings Multilingual using Knowledge Distillation. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020.

14. Chidambaram, M.; Yang, Y.; Cer, D.; Yuan, S.; Sung, Y.; Strope, B.; Kurzweil, R. Learning Cross-Lingual Sentence Representations via a Multi-task Dual-Encoder Model. In Proceedings of the 4th Workshop on Representation Learning for NLP (RepL4NLP-2019), Florence, Italy, 2 August 2019; pp. 250–259.

15. Zhang, T.; Ye, W.; Yang, B.; Zhang, L.; Ren, X.; Liu, D.; Sun, J.; Zhang, S.; Zhang, H.; Zhao, W. Frequency-aware contrastive learning for neural machine translation. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 12–17 October 2022; Volume 36, pp. 11712–11720.

16. Pan, X.; Wang, M.; Wu, L.; Li, L. Contrastive Learning for Many-to-many Multilingual Neural Machine Translation. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Online, 1–6 August 2021; pp. 244–258.

17. Zhu, S.; Mi, C.; Shi, X. An explainable evaluation of unsupervised transfer learning for parallel sentences mining. In Proceedings of the Web and Big Data: 5th International Joint Conference, APWeb-WAIM 2021, Guangzhou, China, 23–25 August 2021; Proceedings, Part I; Springer: Berlin/Heidelberg, Germany, 2021; pp. 273–281.

18. Platanios, E.A.; Stretcu, O.; Neubig, G.; Poczos, B.; Mitchell, T. Competence-based Curriculum Learning for Neural Machine Translation. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; Association for Computational Linguistics, 2019.

19. Zhu, S.; Li, X.; Yang, Y.; Wang, L.; Mi, C. Learning Bilingual Lexicon for Low-Resource Language Pairs. In Proceedings of the Natural Language Processing and Chinese Computing: 6th CCF International Conference, NLPCC 2017, Dalian, China, 8–12 November 2017; Springer: Berlin/Heidelberg, Germany, 2018; pp. 760–770.

20. Zhu, S.; Mi, C.; Li, T.; Yang, Y.; Xu, C. Unsupervised parallel sentences of machine translation for Asian language pairs. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2023, 22, 1–14.

21. Chowdhury, S.; Baili, N.; Vannah, B. Ensemble Fine-tuned mBERT for Translation Quality Estimation. In Proceedings of the Sixth Conference on Machine Translation, Punta Cana, Dominican Republic, 10–11 November 2021; pp. 897–903.

22. Xu, Y.; Hu, L.; Zhao, J.; Qiu, Z.; Xu, K.; Ye, Y.; Gu, H. A survey on multilingual large language models: Corpora, alignment, and bias. Front. Comput. Sci. 2025, 19, 1911362.

23. Wu, D.; Lei, Y.; Yates, A.; Monz, C. Representational Isomorphism and Alignment of Multilingual Large Language Models. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2024, Miami, FL, USA, 12–16 November 2024; Al-Onaizan, Y., Bansal, M., Chen, Y.N., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2024; pp. 14074–14085.

24. Wang, Q.; Zhang, W.; Lei, T.; Cao, Y.; Peng, D.; Wang, X. CLSEP: Contrastive learning of sentence embedding with prompt. Knowl.-Based Syst. 2023, 266, 110381.

25. Zhang, M.; Mosbach, M.; Adelani, D.; Hedderich, M.; Klakow, D. MCSE: Multimodal Contrastive Learning of Sentence Embeddings. In Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Seattle, WA, USA, 10–15 July 2022; Association for Computational Linguistics: Stroudsburg, PA, USA, 2022; pp. 5959–5969.

26. Liu, C.; Fu, Y.; Xu, C.; Yang, S.; Li, J.; Wang, C.; Zhang, L. Learning a few-shot embedding model with contrastive learning. Proc. AAAI Conf. Artif. Intell. 2021, 35, 8635–8643.

27. Wang, Y.; Zhang, H.; Liu, Z.; Yang, L.; Yu, P.S. ContrastVAE: Contrastive variational autoencoder for sequential recommendation. In Proceedings of the 31st ACM International Conference on Information & Knowledge Management, Atlanta, GA, USA, 17–21 October 2022; pp. 2056–2066.

28. Gan, Z.; Chen, Y.C.; Li, L.; Zhu, C.; Cheng, Y.; Liu, J. Large-scale adversarial training for vision-and-language representation learning. Adv. Neural Inf. Process. Syst. 2020, 33, 6616–6628.

29. Zhu, S.; Cui, M.; Xiong, D. Towards robust in-context learning for machine translation with large language models. In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), Torino, Italy, 20–25 May 2024; pp. 16619–16629.

30. Chen, G.; Hou, L.; Chen, Y.; Dai, W.; Shang, L.; Jiang, X.; Liu, Q.; Pan, J.; Wang, W. mCLIP: Multilingual CLIP via cross-lingual transfer. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Toronto, ON, Canada, 9–14 July 2023; pp. 13028–13043.

31. Bengio, Y.; Louradour, J.; Collobert, R.; Weston, J. Curriculum learning. In Proceedings of the 26th Annual International Conference on Machine Learning, Montreal, QC, Canada, 14–18 June 2009; pp. 41–48.

32. Wang, Y.; Hou, H.; Sun, S.; Wu, N.; Jian, W.; Yang, Z.; Wang, P. Dynamic Mask Curriculum Learning for Non-Autoregressive Neural Machine Translation. In Proceedings of the China Conference on Machine Translation, Lhasa, China, 6–10 August 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 72–81.

33. Zhu, S.; Pan, L.; Li, B.; Xiong, D. LANDeRMT: Dectecting and Routing Language-Aware Neurons for Selectively Finetuning LLMs to Machine Translation. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Bangkok, Thailand, 11–16 August 2024; pp. 12135–12148.

34. Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A simple framework for contrastive learning of visual representations. In Proceedings of the International Conference on Machine Learning, PMLR, Vienna, Austria, 13–18 July 2020; pp. 1597–1607.

35. Wei, J.; Zou, K. EDA: Easy Data Augmentation Techniques for Boosting Performance on Text Classification Tasks. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 6382–6388.

36. Conneau, A.; Rinott, R.; Lample, G.; Williams, A.; Bowman, S.; Schwenk, H.; Stoyanov, V. XNLI: Evaluating Cross-lingual Sentence Representations. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; Association for Computational Linguistics: Stroudsburg, PA, USA, 2018; p. 2475.

37. Yang, Y.; Zhang, Y.; Tar, C.; Baldridge, J. PAWS-X: A Cross-lingual Adversarial Dataset for Paraphrase Identification. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 3687–3692.

38. Schwenk, H.; Li, X. A Corpus for Multilingual Document Classification in Eight Languages. In Proceedings of the Eleventh International Conference on Language Resources and Evaluation (LREC 2018), Miyazaki, Japan, 7–12 May 2018.

39. Keung, P.; Lu, Y.; Szarvas, G.; Smith, N.A. The Multilingual Amazon Reviews Corpus. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020.

40. Liang, Y.; Duan, N.; Gong, Y.; Wu, N.; Guo, F.; Qi, W.; Gong, M.; Shou, L.; Jiang, D.; Cao, G.; et al. XGLUE: A New Benchmark Dataset for Cross-lingual Pre-training, Understanding and Generation. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 6008–6018.

41. Song, K.; Tan, X.; Qin, T.; Lu, J.; Liu, T.Y. MPNet: Masked and permuted pre-training for language understanding. Adv. Neural Inf. Process. Syst. 2020, 33, 16857–16867.

42. Wang, L.; Yang, N.; Huang, X.; Jiao, B.; Yang, L.; Jiang, D.; Majumder, R.; Wei, F. Text embeddings by weakly-supervised contrastive pre-training. arXiv 2022, arXiv:2212.03533.

43. Chen, J.; Xiao, S.; Zhang, P.; Luo, K.; Lian, D.; Liu, Z. BGE M3-Embedding: Multi-lingual, multi-functionality, multi-granularity text embeddings through self-knowledge distillation. arXiv 2024, arXiv:2402.03216.

| Model | EN-AR | EN-DE | EN-TR | EN-ES | EN-FR | EN-IT | EN-NL | Avg. (Δ) |
|---|---|---|---|---|---|---|---|---|
| mBERT | 18.83 | 33.91 | 16.33 | 21.56 | 33.10 | 34.35 | 35.46 | 27.55 |
| XLM-R | 15.82 | 21.53 | 12.16 | 10.71 | 16.73 | 22.92 | 23.87 | 17.63 |
| LASER | 66.71 | 64.38 | 72.16 | 58.16 | 69.33 | 70.98 | 68.78 | 67.25 |
| mUSE | 79.31 | 82.26 | 75.53 | 79.71 | 82.73 | 84.66 | 84.18 | 81.32 |
| LaBSE | 74.67 | 73.96 | 72.19 | 65.83 | 77.06 | 78.13 | 75.65 | 73.81 |
| Xpara | 81.96 | 83.85 | 80.37 | 84.16 | 83.28 | 85.78 | 83.79 | 83.36 |
| MSE-AMR | 81.73 | 85.62 | 79.50 | 84.76 | 85.22 | 87.33 | 85.58 | 84.25 |
| DACL | 80.43 | 84.41 | 76.58 | 82.59 | 84.52 | 86.72 | 86.38 | 83.09 |
| *SOTA Baselines* | | | | | | | | |
| MPNet-SBERT | 83.10 | 84.50 | 78.50 | 85.10 | 84.90 | 86.20 | 85.80 | 84.01 |
| E5-large | 84.20 | 85.80 | 79.80 | 86.30 | 85.90 | 87.50 | 86.10 | 85.09 |
| BGE-m3 | 85.40 | 86.70 | 79.90 | 87.10 | 86.80 | 87.80 | 87.20 | 85.84 |

| Model | XNLI | PAWS-X | MLDoc | MARC |
|---|---|---|---|---|
| mBERT | 44.58 | 57.05 | 77.90 | 38.43 |
| XLM-R | 46.96 | 56.06 | 77.86 | 50.03 |
| LASER | 49.38 | 58.61 | 74.63 | 47.97 |
| mUSE | 48.96 | 57.34 | 77.20 | 46.37 |
| LaBSE | 56.86 | 59.00 | 82.29 | 48.85 |
| Xpara | 53.33 | 58.06 | 62.42 | 48.79 |
| MSE-AMR | 53.33 | 59.07 | 62.42 | 48.31 |
| DACL (Ours) | 57.86 | 70.52 | 79.74 | 50.12 |

| Model | STS (Spearman ρ) | PAWS-X (Acc. %) | XNLI (Acc. %) | MLDoc (Acc. %) | MARC (Acc. %) |
|---|---|---|---|---|---|
| Contrastive Only | 82.01 | 60.01 | 55.03 | 79.51 | 49.54 |
| DACL (with DCL) | 83.09 | 70.52 | 57.86 | 79.74 | 50.12 |
| Δ (Gain from DCL) | +1.08 | +10.51 | +2.83 | +0.23 | +0.58 |

| Model | Close (Avg.) | Distant (Avg.) | Perf. Drop |
|---|---|---|---|
| LaBSE | 60.15 | 54.32 | 5.83 |
| DACL (Ours) | 61.23 | 57.91 | 3.32 |

| Model Configuration | PAWS-X Acc. (%) | Gain (Δ) |
|---|---|---|
| 1. Symmetric Baseline (No Asymmetry, No DCL) | 56.45 | – |
| 2. Asymmetric Contrastive Only | 60.01 | +3.56 (vs. 1: Effect of Asymmetry) |
| 3. Symmetric Baseline + DCL | 58.12 | +1.67 (vs. 1: Effect of DCL alone) |
| 4. Full DACL (Asymmetric Contrastive + DCL) | 70.52 | +10.51 (vs. 2: Effect of DCL with Asymmetry) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Meng, L.; Li, Y.; Wei, W.; Yang, C. Resolving Linguistic Asymmetry: Forging Symmetric Multilingual Embeddings Through Asymmetric Contrastive and Curriculum Learning. Symmetry 2025, 17, 1386. https://doi.org/10.3390/sym17091386
