Abstract

Energy-aware scheduling plays a critical role in modern computing and manufacturing systems, where energy consumption often increases with job execution order or resource usage intensity. This study investigates a scheduling problem in which a sequence of heterogeneous jobs—classified as either heavy or light—must be assigned to multiple identical machines with monotonically increasing slot costs. While the machines are structurally symmetric, the fixed job order and cost asymmetry introduce significant challenges for optimal job allocation. We formulate the problem as an integer linear program and simplify the objective by isolating the cumulative cost of heavy jobs, thereby reducing the search for optimality to a position-based assignment problem. To address this challenge, we propose a structured assignment model termed monotonic machine assignment, which enforces index-based job distribution rules and restores a form of functional symmetry across machines. We prove that any feasible assignment can be transformed into a monotonic one without increasing the total energy cost, ensuring that the global optimum lies within this reduced search space. Building on this framework, we first present a general dynamic programming algorithm with complexity . More importantly, by introducing a structural correction scheme based on misaligned assignments, we design an iterative refinement algorithm that achieves global optimality in only time, offering significant scalability for large instances. Our results contribute both structural insight and practical methods for optimal, position-sensitive, energy-aware scheduling, with potential applications in embedded systems, pipelined computation, and real-time operations.

1. Introduction

Sustainability has become a critical design consideration in modern information and communication technologies (ICTs), as the energy footprint of digital infrastructure continues to grow rapidly. With the increasing deployment of cloud data centers, edge computing devices, and embedded systems, ICT systems are estimated to contribute significantly to global energy consumption and carbon emissions [1,2]. This concern has led to widespread efforts in energy-aware computing, where task scheduling has emerged as a core mechanism for reducing energy usage without sacrificing system performance. However, the growing complexity of computing and industrial systems has introduced a variety of scheduling challenges, stemming from hardware limitations and diverse job characteristics [3,4]. In embedded real-time platforms, energy-aware control loops often involve bursts of computation mixed with lightweight sensing or communication jobs [5]. In cloud computing clusters, tasks of varying computational intensity and deadline sensitivity arrive in a fixed order determined by upstream systems. In both cases, hardware units such as CPU cores, memory banks, or pipeline stages exhibit performance deterioration over time, resulting in increasing per-job energy costs with position or usage [6,7]. Meanwhile, due to strict timing or dependency constraints, jobs must often be processed in their arrival sequence, and their energy profiles are far from uniform. These asymmetries—across jobs, time, and resource cost—necessitate new scheduling models that go beyond traditional speed-scaling or homogeneous job assumptions.

Energy-aware scheduling plays a pivotal role in the design of modern computational and manufacturing systems, particularly amid rising energy costs and growing environmental concerns [8]. In applications such as cloud data centers, embedded control systems, industrial production pipelines, and autonomous devices, energy consumption typically increases with processing time, job position, or accumulated system workload [5,9,10]. This pattern is especially pronounced in contexts involving hardware degradation over time, such as battery-powered platforms and mission-critical real-time systems [11]. Consequently, there is a pressing need for scheduling strategies that minimize total energy consumption or operational costs under time-sensitive and resource-constrained environments.

Over the past decades, extensive research has focused on energy-efficient scheduling. A foundational model is the speed-scaling framework introduced by Yao et al. [9], where processor speeds are dynamically adjusted to balance energy consumption and execution time. This model has been extended to incorporate various energy constraints, as summarized in the survey by Albers [12]. However, these models often assume homogeneous jobs and do not account for cost escalation due to job position or system degradation.

An alternative line of research focuses on deteriorating jobs, where processing times or costs increase with job position, as introduced by Mosheiov [13]. Subsequent studies have explored time-dependent processing costs and interval scheduling problems, introducing complexities such as job release times and position-based penalties [14,15,16,17]. While these studies offer rich insights into temporal degradation, many assume jobs can be reordered to minimize costs, making them unsuitable for streaming or pipelined environments where job arrival order is fixed. Several recent studies have explored learning-based approaches for energy-aware scheduling. For example, Wang et al. [18] proposed a deep reinforcement learning (DRL) scheduler for fog and edge computing systems, targeting trade-offs between system load and response time. A recent survey by Hou et al. [19] reviewed DRL-based strategies for energy-efficient task scheduling in cloud systems. Furthermore, López et al. [20] introduced an intelligent energy-pairing scheduler for heterogeneous HPC clusters, and metaheuristic methods have also been applied to multi-robot swarm scheduling problems [21]. These studies reflect a growing interest in adaptive scheduling methods, which complement our structural and provably optimal approach. While such methods offer flexibility and empirical effectiveness, they typically yield approximate solutions without structural optimality guarantees. By contrast, our work focuses on deriving exact or provably near-optimal schedules under a formally defined position-dependent cost model.

More recently, researchers have begun exploring energy-aware scheduling in heterogeneous and constrained systems. For instance, in embedded and cyber–physical systems, Mahmood et al. [22] and Khaitan and McCalley [23] investigated scheduling in cyber–physical and embedded real-time environments, emphasizing the impact of system heterogeneity and energy constraints. In high-performance computing (HPC) contexts, Kocot et al. [8] reviewed energy-aware scheduling techniques in modern HPC platforms, highlighting mechanisms such as DVFS, job allocation heuristics, and power capping. Mei et al. [5] developed a deadline-aware scheduler for DVFS-enabled heterogeneous machines. Similarly, Esmaili and Pedram [24] applied deep reinforcement learning to minimize energy in cluster systems, and Zhang and Chen [25] surveyed scheduling methods in mixed-criticality systems, stressing the importance of balancing energy consumption and job reliability under strict timing constraints. Meanwhile, algorithmic studies have tackled time-dependent and cost-aware scheduling problems, offering complexity classifications and algorithmic solutions under various structural assumptions [26,27,28,29]. In particular, Im et al. [27] and Chen et al. [28] analyzed models with temporally evolving cost functions, while additional frameworks such as bipartite matching with decomposable weights [30,31], interval-constrained job assignments [32,33], and weighted matching under cost constraints [34] have enriched the algorithmic toolbox for cost-sensitive scheduling.

Despite these advancements, many existing models fall short in capturing key characteristics of real-world systems. First, most assume flexible job orderings or job independence, whereas many practical applications impose strict sequential constraints due to job arrival patterns or dependency relations, such as fixed workflow pipelines in cloud platforms [35,36]. Second, job heterogeneity is often overlooked, despite substantial differences in energy profiles (e.g., light vs. heavy jobs) [5,8,37,38]. Third, models with variable slot costs frequently assume piecewise or non-monotonic functions, failing to represent realistic scenarios with strictly increasing cost structures [39]. Collectively, these limitations restrict the applicability of prior approaches in energy-sensitive, real-time scheduling environments.

In this paper, we address these challenges by investigating a scheduling model with the following four key features: (i) each of the m identical machines processes exactly n sequentially arriving jobs; (ii) the job sequence is fixed and must be scheduled in order without rearrangement; (iii) each job is categorized as either a light job (with zero cost) or a heavy job (with positive cost); and (iv) each machine’s slot cost is a fixed monotonically increasing sequence, independent of the job type. To minimize the total energy cost incurred by assigning heavy jobs to higher-cost slots, we formally define this scheduling problem as an integer linear program (ILP) and normalize it to a simplified version where only the placement of heavy jobs affects the objective.

To illustrate the real-world relevance of this model, consider a battery-powered drone control system where lightweight sensing tasks and heavy navigation computations arrive in a fixed order and must be assigned to a limited set of processors. Such systems often exhibit energy asymmetry: heavy tasks consume more power, and later slots incur higher energy due to thermal or resource degradation—conditions that naturally align with our model assumptions.

Our contributions are summarized as follows. First, we formalize an energy-aware scheduling problem in which a fixed sequence of heterogeneous jobs must be assigned to identical machines with strictly increasing slot costs. By reformulating the objective function through cost simplification, we reduce the problem to a position-based assignment model focused solely on heavy jobs and provide an equivalent integer linear programming (ILP) formulation under positional constraints. Second, we introduce the concept of monotonic machine assignment, a structured scheduling framework that guarantees both feasibility and optimality preservation. We prove that any feasible assignment can be transformed into a monotonic one without increasing the total energy cost, and characterize the reduced solution space via a vector of heavy job counts per machine. This transformation effectively restores a form of functional symmetry in job allocation under asymmetric cost constraints. Third, building on this structure, we develop two algorithms for computing optimal monotonic schedules. The first is a dynamic programming algorithm with time complexity . More importantly, we propose a misalignment elimination algorithm that incrementally refines job distributions and provably converges to the global optimum in only time, offering significant scalability for large-scale scheduling applications. Collectively, our work delivers both theoretical insight and provably optimal algorithms for position-sensitive, energy-aware scheduling, with practical relevance to streaming computation, embedded systems, and real-time industrial platforms.

The remainder of this paper is structured as follows. Section 2 presents the formal problem definition, introduces the integer linear programming (ILP) formulation, and performs a cost simplification that reduces the objective to the placement of heavy jobs. Section 3 introduces the concept of monotonic machine assignments, establishes key structural properties, and develops a dynamic programming algorithm to compute the optimal schedule within this restricted space. Section 4 proposes an iterative misalignment elimination algorithm that incrementally refines any feasible schedule and guarantees convergence to the global optimum in polynomial time. Finally, Section 5 concludes this paper with a summary of the findings and a discussion of potential applications in energy-aware and real-time systems.

2. Problem Formulation

This section formalizes the energy-aware scheduling problem under consideration. The model captures practical systems such as cloud platforms, embedded processors, and production lines where later execution incurs higher energy cost due to thermal degradation, increased resistance, or growing workload intensity. We begin by presenting the system model and formulation, followed by a normalization step that simplifies subsequent algorithmic analysis. Table 1 summarizes the main notations used throughout this paper for clarity and ease of reference.

Table 1.

Summary of notations.

2.1. Model and ILP Formulation

We consider a scheduling system consisting of a set of m identical machines, denoted by . Each machine has n sequential execution slots, allowing it to process up to n jobs. A total of jobs, indexed by , arrive in a fixed and predetermined order. Each job belongs to one of two types, indicated by :

- Heavy job: , incurs higher energy cost;

- Light job: , incurs lower energy cost.

Each machine’s n slots are indexed in order, and their associated energy weights increase monotonically:

where denotes the energy weight of the k-th slot. Assigning job to slot on machine incurs an energy cost:

where are fixed positive coefficients reflecting the per-unit energy consumption of heavy and light jobs, respectively. We introduce the following binary variable:

The objective is to minimize the total energy cost:

subject to the following constraints:

- (C1) Job Assignment: Each job is assigned to exactly one machine and one slot:

- (C2) Slot Occupation: Each slot is occupied by exactly one job:

- (C3) Order Preservation on Each Machine: For any two jobs and ( with ), if both jobs are assigned to the same machine , then must be scheduled earlier than :

This formulation defines an integer linear program (ILP), where the decision variables are binary, and all constraints and the objective are linear. The problem is NP-hard, as it generalizes classic job assignment with precedence constraints and cost-based objectives.

2.2. Cost Normalization

We now show that the objective can be simplified via normalization. Observe that the total cost under any assignment is

with

Expanding the objective,

The first term is constant across all feasible assignments, since all slots are occupied exactly once. Specifically,

Therefore, for optimization purposes, we can equivalently minimize

This allows us to normalize the coefficients without loss of generality by setting

Under this normalization, the simplified total energy cost becomes

which will be the target function in our subsequent algorithmic design.

In summary, we formalized an energy-aware scheduling problem over identical machines with monotonically increasing slot costs and heterogeneous job types. The formulation incorporates assignment, exclusivity, and order-preserving constraints, and admits a normalized objective that focuses solely on the placement of heavy jobs. This structural insight simplifies the optimization landscape and guides the design of efficient algorithms, which we develop in the following sections.

3. Monotonic Machine Assignment

This section introduces a structured assignment model, referred to as the monotonic machine assignment, to facilitate the theoretical analysis of the energy-aware scheduling problem. We prove that any feasible assignment can be transformed into a monotonic one without increasing the total energy cost, and we further present a dynamic programming algorithm to compute an optimal assignment under this structure.

3.1. Definition

A monotonic machine assignment is defined as a job-to-machine allocation that satisfies the following three properties:

- Job Number Monotonicity: Let denote the number of jobs assigned to machine after the first j jobs have been scheduled. Then, for all and all , we requireThat is, machines with smaller indices must have at least as many jobs as those with larger indices at any moment.

- Light Job Monotonicity: Let be the machine to which job is assigned. If job is a light job (), then it must be assigned to the machine with the smallest index i such that . Formally, for all with , we require

- Heavy Job Monotonicity: For any two heavy jobs and , if , we require their machine indices to be in non-decreasing order:That is, heavy jobs must be assigned to machines in a non-decreasing order of machine indices with respect to their arrival order.

To illustrate these properties, we provide a simple numerical example. Suppose there are machines, each with slots, and thus 9 jobs in total. Let the arrival sequence have heavy jobs at positions 2, 5, and 8 (i.e., jobs , , and are heavy, and others are light). Under a monotonic assignment, the following hold:

- Machine handles jobs 1–3: job (light) in slot 1, job (heavy) in slot 2, and job (light) in slot 3.

- Machine handles jobs 4–6: job (light) in slot 1, job (heavy) in slot 2, and job (light) in slot 3.

- Machine handles jobs 7–9: job (light) in slot 1, job (heavy) in slot 2, and job (light) in slot 3.

This simple example shows how job number monotonicity ensures machines fill in order, and heavy job monotonicity places each heavy job in the earliest available slot on its machine while preserving non-decreasing machine indices. Light jobs occupy the remaining slots by light job monotonicity. Such a distribution concretely illustrates the abstract rules before proceeding to the formal algorithm.

Theorem 1.

For any feasible job assignment , there exists a monotonic machine assignment that satisfies the above three monotonicity properties such that the total energy cost under is no greater than that under . That is,

Proof.

We show that any feasible job assignment can be transformed into a monotonic machine assignment without increasing the total energy cost, by applying the following three steps:

Step 1: Enforcing Job Number Monotonicity. For each job , , if there exists a pair of adjacent machines such that , we select the earliest such violation. Then we have and swap the next job assigned to machine (starting from ) with a job on machine . Because all machines have the same slot cost structure, and only the indices of the machines are affected—not the relative positions of the slots within each machine—the total cost remains unchanged. We repeat this process until for all and all , we have . This ensures job number monotonicity.

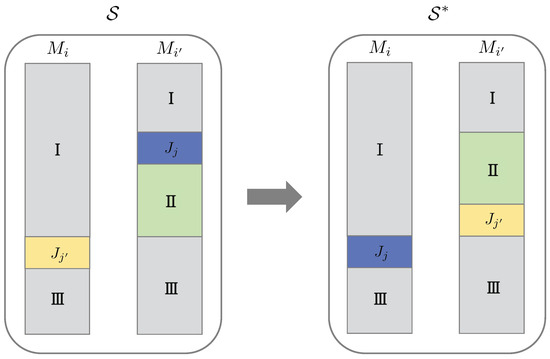

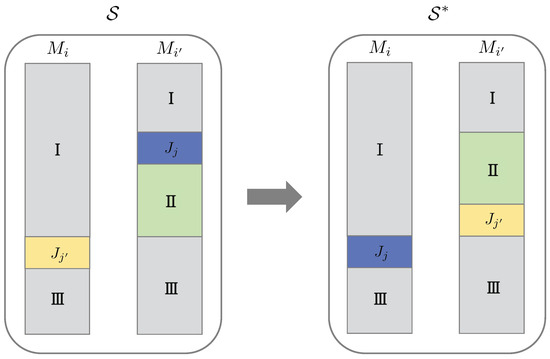

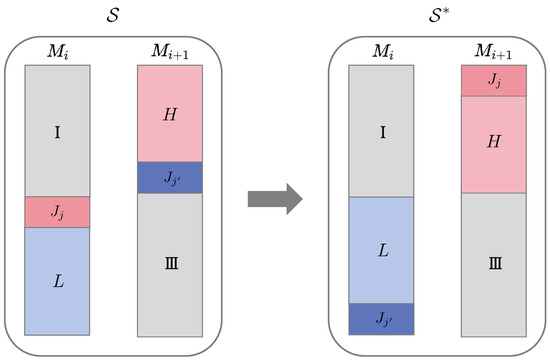

Step 2: Enforcing Light Job Monotonicity. Suppose that is a light job that is not assigned to the available machine with the smallest index, but is instead scheduled on a later machine with . Let be the earliest subsequent job () that is assigned to machine . We divide the job sequence into three consecutive phases: Phase I includes jobs scheduled before ; Phase II consists of jobs from to ; and Phase III includes jobs scheduled after , as shown on the left side of Figure 1. We construct a new schedule by swapping the assignments of and , reassigning to machine and to machine , as shown on the right side of Figure 1. Since the original schedule satisfies job number monotonicity, the slot position of in does not increase. Moreover, because is a light job with negligible cost, this reassignment does not increase the total energy cost. The updated schedule continues to maintain job number monotonicity. Therefore, this correction process can be applied iteratively until light job monotonicity is fully achieved.

Figure 1.

Correction of light job monotonicity by reassigning a light job to the smallest-indexed available machine.

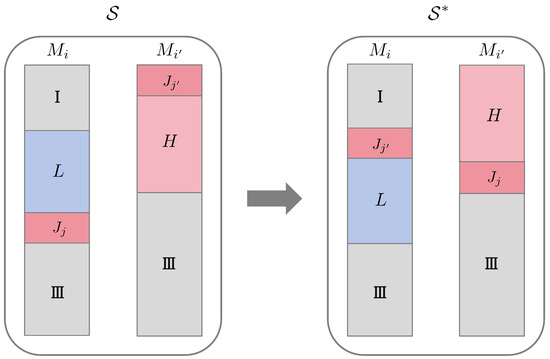

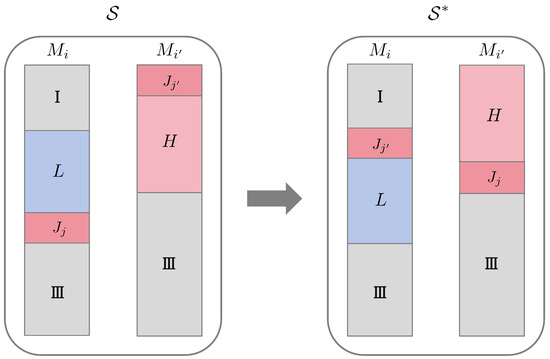

Step 3: Enforcing Heavy Job Monotonicity. Assume that the first violation of heavy job monotonicity occurs when a heavy job is assigned to machine , despite the existence of an earlier heavy job () that was previously assigned to a later machine with . In other words, is scheduled to a machine with a smaller index than a preceding heavy job, violating the required non-decreasing order of machine indices for heavy job assignments. We focus on this first violation, as depicted on the left side of Figure 2. Since machine was still available at the time was scheduled (i.e., ), light job monotonicity ensures that no light jobs could have been assigned to before . Therefore, all jobs on scheduled before must be heavy jobs, labeled as set in the figure. Let be the earliest such heavy job on .

Figure 2.

Swap-based correction of heavy job monotonicity to ensure non-decreasing machine indices for earlier jobs.

Meanwhile, because represents the first violation of heavy job monotonicity, all jobs scheduled to between and must be light jobs. These are labeled as set in Figure 2. The job sequence is thus divided into three phases: Phase I consists of all jobs prior to ; Phase II spans from to , and includes both heavy jobs on (set ) and light jobs on (set ); and Phase III contains all jobs after . To restore monotonicity, we construct a new schedule by swapping the assignments of and , reassigning to machine and to machine , as illustrated on the right side of Figure 2. Since has only received light jobs between and , and because light jobs incur negligible energy cost, this swap does not affect the overall cost contribution of the light jobs. Furthermore, the slot index of in is no greater than in the original schedule , and the number of heavy jobs assigned to remains unchanged. As the slot cost function is fixed and strictly increasing, the total energy cost does not increase.

Importantly, the reassignment does not violate light job monotonicity, and the updated schedule continues to satisfy job number monotonicity. Therefore, this correction procedure can be applied iteratively until all violations of heavy job monotonicity are eliminated.

Termination and Feasibility: Each of the above transformations resolves one violation without introducing new ones, and there is a finite number of jobs. Therefore, the process terminates in finite steps. The resulting schedule is feasible, satisfies all three monotonicity conditions, and achieves

3.2. Constructing Monotonic Assignments via Heavy Job Distribution

A monotonic machine assignment can be completely specified by a vector of heavy job quotas , where denotes the number of heavy jobs assigned to machine , and the total number of heavy jobs satisfies

with H denoting the total number of heavy jobs in the system.

Given a fixed job arrival sequence and a heavy job distribution vector, the monotonic machine assignment can be constructed by assigning each job dynamically based on its type, as outlined in Algorithm 1.

Assignment Rules.

- Heavy jobs: Assign each heavy job to the first available machine (smallest index) such that and ;

- Light jobs: Assign each light job to the first machine (smallest index) with an available slot, i.e., .

Here, is the number of heavy jobs remaining to be assigned to machine , and tracks the number of total jobs (heavy or light) assigned to thus far.

| Algorithm 1 Monotonic assignment construction from heavy quotas. |

|

Feasibility Condition: Not all heavy job vectors satisfying correspond to feasible schedules. To ensure feasibility, each machine must always have sufficient capacity to accommodate its remaining heavy job quota at every stage of the sequential assignment. That is, the following constraint must be satisfied throughout the process:

We define as the number of heavy jobs in the i-th block of the job sequence , given by

Then, a heavy job allocation vector is feasible if and only if

3.3. Dynamic Programming for Optimal Monotonic Assignment

We present a dynamic programming algorithm to compute an optimal monotonic machine assignment, minimizing total energy cost under a fixed job arrival order and slot-based cost structure.

DP State Definition: Let DP[i][h] denote the minimum total cost of assigning the first i machines with exactly h heavy jobs scheduled in total.

Transition Function: Let represent the minimum cost of assigning exactly k heavy jobs and light jobs to machine , assuming that heavy jobs have already been scheduled across the first machines. Then, the recurrence is

with the base cases

The final solution is given by

Feasibility Condition: If assigning k heavy jobs to machine is infeasible—e.g., there are insufficient remaining heavy jobs or too few available slots due to prior light jobs—then is set to and excluded from the transition.

Time Complexity: The total number of DP states is

Each state enumerates up to n values of k, and the corresponding values are retrieved in time via preprocessing. Hence, the total time complexity of the dynamic programming procedure is

Preprocessing of Transition Costs: Each value is computed by selecting the appropriate subsequence of jobs to be assigned to machine , and calculating the total slot cost of the top-k heavy jobs within that segment. This preprocessing step is efficiently performed using a prefix sum or scan-based technique, as detailed in Algorithm 2. For any fixed machine index i and heavy job prefix count , the full cost vector can be computed in time. Since there are at most distinct values of (i.e., the number of ways to distribute heavy jobs across the first machines), and m choices of i, the total number of pairs is . Therefore, the total time required to compute all values for all machines and heavy job prefix states is

Thus, the overall time complexity of the proposed algorithm—including both dynamic programming and preprocessing of all values—is

| Algorithm 2 ComputeAllGi: Preprocessing for machine . |

|

4. Misalignment Elimination Algorithm

In this section, we develop an iterative refinement algorithm that transforms an initial feasible assignment into an optimal monotonic one. Building on the structural properties of the assignment, we identify local inconsistencies—referred to as misaligned assignments—and propose a targeted adjustment strategy to eliminate them. The algorithm operates by incrementally resolving these misalignments, one at a time, while ensuring that feasibility is preserved and the total cost does not increase. Through repeated application of this refinement step, the algorithm progressively improves the assignment structure until all machines are properly aligned. We provide both the algorithmic procedure and a formal proof of its correctness and computational efficiency.

4.1. Misaligned Assignment

Definition 1 (Misaligned Assignment).

Given a monotonic machine assignment , we denote a misaligned assignment on adjacent machines and if the slot index of the last heavy job on is greater than or equal to that of the first light job on . This implies a sub-optimal assignment of heavy and light jobs across adjacent machines, which may degrade scheduling efficiency. Formally, let the following hold:

- denotes the slot index of the last heavy job assigned to (with if no heavy job exists);

- denotes the slot index of the first light job assigned to (with if no light job exists).

- Then machines and are said to be misaligned if

- For notational simplicity, we may omit the superscript when the assignment context is clear.

Consider two consecutive machines and that exhibit a misaligned assignment. Let denote the last heavy job assigned to , and denote the first light job assigned to , as illustrated on the left side of Figure 3. To eliminate this misalignment, we construct a new assignment by swapping these two jobs: is reassigned to , and is reassigned to , as shown on the right side of the Figure 3. Since the slot index of job on machine is no smaller than that of job on machine (i.e., ), the reassignment does not increase the total energy cost. Furthermore, the reassignment updates the number of heavy jobs as follows:

Figure 3.

Elimination of a misaligned assignment by exchanging a heavy job with a light job between adjacent machines.

- Machine : ;

- Machine : .

The swap affects only the slot configuration of machines and . Specifically, the following properties hold in the updated assignment :

- ;

- ;

- ;

- .

These local updates imply that the misalignment condition may no longer hold in the updated assignment , thereby resolving the inconsistency between machines and . However, this swap may introduce new misalignments involving adjacent machine pairs, specifically or . Nevertheless, each swap strictly improves the structural alignment by moving a heavy job forward along the machine sequence. Since there are at most heavy jobs in total, and each job can traverse at most m machines (from to in the worst case), the total number of swaps is bounded by .

4.2. Efficient Computation of Slot Positions

Let and denote the cumulative number of light and heavy jobs, respectively, among the first j jobs in the input sequence:

Let be the number of heavy jobs assigned to machine , and let denote the total number of heavy jobs assigned to machines through .

(1) Computation of : The total number of heavy jobs assigned to the first i machines is . The last heavy job assigned to machine corresponds to the -th heavy job in the global sequence. Let j be the smallest index such that

The number of light jobs among the first j jobs is , and the number of light jobs assigned to machines through is . Let

denote the number of light jobs already assigned to machine before job . Then, the slot position of the last heavy job on is

(2) Computation of : The number of light jobs assigned to machines through is . Hence, the first light job assigned to machine corresponds to the -th light job in the global sequence. Let j be the smallest index such that

The number of heavy jobs among the first j jobs is , and the number of heavy jobs already assigned to machines through is y. Let

denote the number of heavy jobs already assigned to machine before job . Then, the slot position of this first light job on is

Both prefix arrays can be preprocessed in time. By recording the first occurrence of each prefix value, the queries for and can be answered in constant time using hash maps or direct indexing. Consequently, both slot positions can be computed in time per query.

4.3. Misalignment Elimination Algorithm

We now present the complete iterative refinement procedure in Algorithm 3, which incrementally eliminates misaligned assignments until none remain, ultimately yielding an optimal monotonic assignment. The algorithm begins with the initial heavy job distribution , computed directly from the input job sequence. It then maintains a dynamic misalignment set C, which stores the indices of all machines currently involved in misaligned assignments, based on the slot positions and .

This reassignment strategy preserves feasibility at every step and ensures that the total assignment cost is non-increasing. Importantly, due to the monotonic behavior of and during each swap, the misalignment set C is only locally affected. Specifically, machine pairs that do not involve indices i or remain unaffected. This locality property guarantees that each iteration modifies only a small portion of the assignment and simplifies the update of C.

| Algorithm 3 Misalignment elimination algorithm. |

|

Moreover, the slot positions and can be maintained and updated in constant time per iteration, i.e., , as previously discussed. Since each iteration performs a single resolution step by relocating one heavy job between adjacent machines, and each heavy job can be moved across at most m machines, the total number of iterations is bounded by . As each iteration requires constant time, the overall time complexity of the algorithm is . The memory usage is .

We summarize the correctness and computational complexity of the proposed method in the following theorem.

Theorem 2.

The misalignment elimination algorithm computes an optimal monotonic assignment in time.

Proof.

This proof is primarily devoted to establishing the optimality of the output produced by the misalignment elimination algorithm. The time complexity of the method has already been analyzed earlier and is not the focus here. We denote by the assignment produced by the misalignment elimination algorithm when starting from the initial assignment .

We first prove that the algorithm always converges to a unique assignment. Assume, for the sake of contradiction, that the algorithm terminates at two distinct monotonic assignment vectors, denoted by and , such that . Without loss of generality, let i be the smallest index such that . Then there exists an intermediate assignment , which occurs during the execution of the algorithm that leads to , such that the partial sum up to index i satisfies

and yet contains a misalignment at index i, that is,

By the monotonicity of the assignment process, we have

which implies , contradicting the assumption that is misalignment-free. Therefore, the algorithm must converge to a unique final assignment.

Next, we show that the algorithm’s final assignment is optimal. Let denote the optimal initial assignment, i.e., the one that minimizes total cost among all monotonic assignments. Then is the corresponding optimal misalignment-free assignment produced by the algorithm . Let the assignment generated from the natural initial input be .

We prove by induction on m that . That is, we show that the final assignments match:

Base case: When , the result holds trivially since all heavy jobs must be assigned to the single machine.

Inductive step: Assume the claim holds for . For the case of m machines, we compare the first coordinate of the final assignment.

Suppose . Consider the modified input

which preserves the total number of heavy jobs. By the monotonicity of , we obtain

Now, by the induction hypothesis, we know that

so the entire assignment equals , contradicting the assumption .

Now suppose . Because the algorithm moves heavy jobs forward to eliminate misalignments, once the assignment becomes misalignment-free, any further movement of heavy jobs would violate the alignment constraint and increase the total cost due to the monotonic increase in slot costs. Thus, no additional improvement is possible beyond the optimal misalignment-free configuration.

Applying this logic repeatedly, we obtain

where the last equality follows from the induction hypothesis. This contradicts the optimality of the corresponding optimal misalignment-free assignment produced by the algorithm .

Therefore, we must have . By the induction hypothesis again, the assignments on the remaining machines also match. Thus, the entire final assignment produced by the algorithm matches the optimal one:

□

4.4. Experimental Evaluation

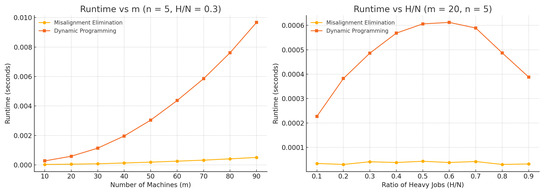

To evaluate the computational efficiency of the proposed misalignment elimination algorithm, a comprehensive runtime comparison was conducted against a baseline dynamic programming approach. The comparison focuses on two dimensions: the number of machines m, and the ratio of heavy jobs , under realistic scheduling configurations.

Implementation Details: All algorithms were implemented in C++17 and compiled with g++ using the -O2 optimization flag. Experiments were executed on a Linux machine with an Intel i7-12700H CPU and 32 GB RAM. High-precision timing was measured using std::chrono. For each data point, 100 random instances were generated with fixed parameters, and the average runtime was recorded to reduce variance.

Each test case consists of jobs, with exactly heavy jobs, randomly mixed with light jobs. The binary job sequence is randomly shuffled using std::shuffle to ensure unbiased sampling.

Scaling with Machine Number: The number of machines was varied while keeping the slot number fixed at and the heavy job ratio constant at . As illustrated in the left panel of Figure 4, the runtime of the dynamic programming method increases rapidly with m, exhibiting a clear quadratic trend. This is consistent with its theoretical complexity of , where both the number of machines and the slot count contribute multiplicatively to the computational cost. Since n is fixed in this experiment, the observed runtime growth reflects the term in the complexity, confirming that the method becomes substantially more expensive as the number of machines increases.

Figure 4.

Comparison of misalignment elimination and dynamic programming runtime under varying machine numbers and heavy job ratios.

In contrast, the misalignment elimination method achieves significantly faster runtime and better scalability. By targeting only local conflicts and incrementally refining job assignments, it avoids the combinatorial overhead of global enumeration. The observed runtime follows a near-quadratic pattern, consistent with its theoretical bound of , yet with substantially smaller constant factors. This efficiency enables it to handle large-scale instances with minimal delay, demonstrating clear practical advantages over dynamic programming.

Scaling with Heavy Job Ratio: With machine and slot counts fixed at and , the heavy job ratio was varied. The right panel of Figure 4 shows that dynamic programming runtime increases with , peaking around due to a denser and more complex state space. Slight reductions beyond this point are attributed to more uniform job types reducing branching complexity.

Meanwhile, misalignment elimination remains consistently fast across all , showing only minor variation. This robustness arises from its conflict-driven logic, which depends primarily on local slot-level constraints rather than the global distribution of job types.

Across both scaling dimensions, misalignment elimination demonstrates significantly better runtime performance than dynamic programming. It achieves reliable low-latency execution consistent with the bound, making it more suitable for large-scale or real-time scheduling scenarios where computational efficiency is critical.

5. Conclusions

This paper investigates an energy-aware scheduling problem in which a fixed sequence of heterogeneous jobs must be assigned to multiple identical machines with monotonically increasing slot costs. A key observation is that the contribution of light jobs remains invariant across all feasible assignments. This enables the problem to be reformulated as minimizing the cumulative cost associated with heavy job placements. To address the cost asymmetry under structural constraints, we propose the concept of monotonic machine assignment, which imposes index-based rules to guide job placement and restore regularity. This structure-aware reformulation narrows the solution space without sacrificing optimality, thereby facilitating efficient algorithmic design.

Based on this framework, we develop two optimal algorithms: a dynamic programming method with time complexity and a more scalable misalignment elimination algorithm that achieves global optimality in time. Although the machines are structurally symmetric, inherent asymmetry in job costs and arrival order can lead to imbalanced naive assignments. In contrast, optimal solutions restore regularity through structured job allocation. Beyond algorithmic efficiency, the monotonic assignment model offers structural insight into how order-based constraints can be exploited to reduce complexity in asymmetric scheduling environments. This contributes to the broader literature on structure-aware optimization and highlights the role of latent regularities in enabling efficient scheduling under combinatorial constraints. Moreover, the simplicity and modularity of the proposed algorithms make them suitable for deployment in decentralized controllers, energy-sensitive embedded processors, and edge computing environments with real-time constraints.

While our algorithms are designed under a fixed job sequence and deterministic slot costs, this structural assumption reflects many real-world systems such as embedded controllers, pipelined compute chains, and energy-aware edge processors. In such environments, jobs typically arrive in a fixed order due to sensing or control dependencies, and energy or delay costs increase with position as a result of thermal buildup or battery degradation. Compared to heuristic or learning-based scheduling strategies, our model-driven approach provides structural transparency and provable optimality, without relying on extensive parameter tuning or large training datasets. Future research may explore online extensions or hybrid models that integrate structure-aware optimization with adaptive learning mechanisms. In addition, further directions include handling dynamically arriving jobs, accommodating heterogeneous machine architectures, and formulating multi-objective strategies that balance trade-offs between energy efficiency, latency, and fairness. It would also be valuable to investigate learning-based scheduling frameworks that adapt to workload patterns over time, as well as stochastic models that incorporate uncertainty in job characteristics and energy estimation.

Author Contributions

L.Z.: Conceptualization; Methodology; Validation; Formal Analysis; Resources; Writing—Original Draft; Writing—review and editing; Visualization; and Funding Acquisition. H.F.: Conceptualization; Methodology; Validation; Formal Analysis; Writing—Original Draft; Writing—Review and Editing; Visualization; and Supervision. M.S.: Validation; Formal Analysis; Writing—Review and Editing; and Visualization. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Natural Science Foundation of China (72201009).

Data Availability Statement

Data is contained within the article.

Conflicts of Interest

Author Mu Su was employed by the Chinese Academy of Science and Technology for Development. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Gelenbe, E.; Caseau, Y. The impact of information technology on energy consumption and carbon emissions. Ubiquity 2015, 2015, 1–15. [Google Scholar] [CrossRef]

- Freitag, C.; Berners-Lee, M.; Widdicks, K.; Knowles, B.; Blair, G.; Friday, A. The climate impact of ICT: A review of estimates, trends and regulations. arXiv 2021, arXiv:2102.02622. [Google Scholar]

- Venu, G.; Vijayanand, K. Task scheduling in cloud computing: A survey. Int. J. Res. Appl. Sci. Eng. Technol. (IJRASET) 2020, 8, 2258–2266. [Google Scholar] [CrossRef]

- Ding, L.; Guan, Z.; Luo, D.; Rauf, M.; Fang, W. An adaptive search algorithm for multiplicity dynamic flexible job shop scheduling with new order arrivals. Symmetry 2024, 16, 641. [Google Scholar] [CrossRef]

- Mei, X.; Wang, Q.; Chu, X.; Liu, H.; Leung, Y.W.; Li, Z. Energy-aware task scheduling with deadline constraint in DVFS-enabled heterogeneous clusters. arXiv 2021, arXiv:2104.00486. [Google Scholar]

- Sharifi, M.; Taghipour, S. Optimal production and maintenance scheduling for a degrading multi-failure modes single-machine production environment. Appl. Soft Comput. 2021, 106, 107312. [Google Scholar] [CrossRef]

- Chen, N.; Chen, Y.; Yi, W.; Pei, Z. A novel DQN-based hybrid algorithm for integrated scheduling and machine maintenance in dynamic flexible job shops. IET Collab. Intell. Manuf. 2025, 7, e70028. [Google Scholar] [CrossRef]

- Kocot, B.; Czarnul, P.; Proficz, J. Energy-aware scheduling for high-performance computing systems: A survey. Energies 2023, 16, 890. [Google Scholar] [CrossRef]

- Yao, F.; Demers, A.; Shenker, S. A scheduling model for reduced CPU energy. In Proceedings of the IEEE 36th Annual Foundations of Computer Science, Milwaukee, WI, USA, 23–25 October 1995; pp. 374–382. [Google Scholar]

- Ben Alla, S.; Ben Alla, H.; Touhafi, A.; Ezzati, A. An efficient energy-aware tasks scheduling with deadline-constrained in cloud computing. Computers 2019, 8, 46. [Google Scholar] [CrossRef]

- Jiang, F.; Xia, Y.; Yan, L.; Liu, W.; Zhang, X.; Li, H.; Peng, J. Battery-aware workflow scheduling for portable heterogeneous computing. IEEE Trans. Sustain. Comput. 2024, 9, 677–694. [Google Scholar] [CrossRef]

- Albers, S. Energy-efficient algorithms. Commun. ACM 2010, 53, 86–96. [Google Scholar] [CrossRef]

- Mosheiov, G. Scheduling jobs under simple linear deterioration. Comput. Oper. Res. 1994, 21, 653–659. [Google Scholar] [CrossRef]

- Boddy, M.; Dean, T.L. Solving Time-Dependent Planning Problems; Brown University, Department of Computer Science: Providence, RI, USA, 1989. [Google Scholar]

- Ho, K.I.; Leung, J.Y.; Wei, W. Complexity of scheduling tasks with time-dependent execution times. Inf. Process. Lett. 1993, 48, 315–320. [Google Scholar] [CrossRef]

- Etgar, R.; Shtub, A.; LeBlanc, L.J. Scheduling projects to maximize net present value—The case of time-dependent, contingent cash flows. Eur. J. Oper. Res. 1997, 96, 90–96. [Google Scholar] [CrossRef]

- Ng, C.T.; Cheng, T.E.; Bachman, A.; Janiak, A. Three scheduling problems with deteriorating jobs to minimize the total completion time. Inf. Process. Lett. 2002, 81, 327–333. [Google Scholar] [CrossRef]

- Wang, Z.; Goudarzi, M.; Gong, M.; Buyya, R. Deep reinforcement learning-based scheduling for optimizing system load and response time in edge and fog computing environments. arXiv 2023, arXiv:2309.07407. [Google Scholar] [CrossRef]

- Hou, H.; Agos Jawaddi, S.N.; Ismail, A. Energy efficient task scheduling based on deep reinforcement learning in cloud environment: A specialized review. Future Gener. Comput. Syst. 2024, 151, 214–231. [Google Scholar] [CrossRef]

- López, M.; Stafford, E.; Bosque, J.L. Intelligent energy pairing scheduler (InEPS) for heterogeneous HPC clusters. J. Supercomput. 2025, 81, 427. [Google Scholar] [CrossRef]

- Mokhtari, M.; Mohamadi, P.H.A.; Aernouts, M.; Singh, R.K.; Vanderborght, B.; Weyn, M.; Famaey, J. Energy-aware multi-robot task scheduling using meta-heuristic optimization methods for ambiently-powered robot swarms. Robot. Auton. Syst. 2025, 186, 104898. [Google Scholar] [CrossRef]

- Mahmood, A.; Khan, S.A.; Albalooshi, F.; Awwad, N. Energy-aware real-time task scheduling in multiprocessor systems using a hybrid genetic algorithm. Electronics 2017, 6, 40. [Google Scholar] [CrossRef]

- Khaitan, S.K.; McCalley, J.D. Design techniques and applications of cyberphysical systems: A survey. IEEE Syst. J. 2015, 9, 350–365. [Google Scholar] [CrossRef]

- Esmaili, A.; Pedram, M. Energy-aware scheduling of jobs in heterogeneous cluster systems using deep reinforcement learning. In Proceedings of the 2020 21st International Symposium on Quality Electronic Design (ISQED), Santa Clara, CA, USA, 25–26 March 2020; pp. 426–431. [Google Scholar]

- Zhang, Y.W.; Chen, R.K. A survey of energy-aware scheduling in mixed-criticality systems. J. Syst. Archit. 2022, 127, 102524. [Google Scholar] [CrossRef]

- Kulkarni, J.; Munagala, K. Algorithms for cost-aware scheduling. In Proceedings of the International Workshop on Approximation and Online Algorithms, Ljubljana, Slovenia, 13–14 September 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 201–214. [Google Scholar]

- Im, S.; Kulkarni, J.; Munagala, K. Competitive algorithms from competitive equilibria: Non-clairvoyant scheduling under polyhedral constraints. J. ACM 2017, 65, 1–33. [Google Scholar] [CrossRef]

- Chen, L.; Megow, N.; Rischke, R.; Stougie, L.; Verschae, J. Optimal algorithms and a PTAS for cost-aware scheduling. In Proceedings of the International Symposium on Mathematical Foundations of Computer Science, Milan, Italy, 24–28 August 2015; Springer: Berlin/Heidelberg, Germany, 2015; pp. 211–222. [Google Scholar]

- Epstein, L.; Levin, A.; Marchetti-Spaccamela, A.; Megow, N.; Mestre, J.; Skutella, M.; Stougie, L. Universal sequencing on a single machine. In Proceedings of the Integer Programming and Combinatorial Optimization, Lausanne, Switzerland, 9–11 June 2010; Springer: Berlin/Heidelberg, Germany, 2010; pp. 230–243. [Google Scholar]

- Burkard, R.E.; Rudolf, R.; Woeginger, G.J. Three-dimensional axial assignment problems with decomposable cost coefficients. Discret. Appl. Math. 1996, 65, 123–139. [Google Scholar] [CrossRef]

- Caseau, Y.; Laburthe, F. Solving various weighted matching problems with constraints. In Proceedings of the Principles and Practice of Constraint Programming-CP97, Linz, Austria, 29 October–1 November 1997; Smolka, G., Ed.; Springer: Berlin/Heidelberg, Germany, 1997; pp. 17–31. [Google Scholar]

- Chuzhoy, J.; Guha, S.; Khanna, S.; Naor, J.S. Machine minimization for scheduling jobs with interval constraints. In Proceedings of the 45th annual IEEE Symposium on Foundations of Computer Science, Rome, Italy, 17–19 October 2004; pp. 81–90. [Google Scholar]

- Im, S.; Li, S.; Moseley, B.; Torng, E. A dynamic programming framework for non-preemptive scheduling problems on multiple machines. In Proceedings of the Twenty-sixth Annual ACM-SIAM Symposium on Discrete Algorithms. SIAM, San Diego, CA, USA, 4–6 January 2015; pp. 1070–1086. [Google Scholar]

- Bansal, N.; Pruhs, K. The geometry of scheduling. SIAM J. Comput. 2014, 43, 1684–1698. [Google Scholar] [CrossRef]

- Wang, X.; Cao, J.; Buyya, R. Adaptive cloud bundle provisioning and multi-workflow scheduling via coalition reinforcement learning. IEEE Trans. Comput. 2022, 72, 1041–1054. [Google Scholar] [CrossRef]

- Chandrasiri, S.; Meedeniya, D. Energy-efficient dynamic workflow scheduling in cloud environments using deep learning. Sensors 2025, 25, 1428. [Google Scholar] [CrossRef]

- Hussain, M.; Wei, L.F.; Lakhan, A.; Wali, S.; Ali, S.; Hussain, A. Energy and performance-efficient task scheduling in heterogeneous virtualized cloud computing. Sustain. Comput. Inform. Syst. 2021, 30, 100517. [Google Scholar] [CrossRef]

- Grami, M. An energy-aware scheduling of dynamic workflows using big data similarity statistical analysis in cloud computing. J. Supercomput. 2022, 78, 4261–4289. [Google Scholar] [CrossRef]

- Brännvall, R.; Stark, T.; Gustafsson, J.; Eriksson, M.; Summers, J. Cost optimization for the edge-cloud continuum by energy-aware workload placement. In Proceedings of the Companion Proceedings of the 14th ACM International Conference on Future Energy Systems, New York, NY, USA, 20–23 June 2023; e-Energy ’23 Companion. pp. 79–84. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).